Abstract

The coronavirus (COVID‐19) pandemic is affecting the environment and conservation research in fundamental ways. Many conservation social scientists are now administering survey questionnaires online, but they must do so while ensuring rigor in data collection. Further, they must address a suite of unique challenges, such as the increasing use of mobile devices by participants and avoiding bots or other survey fraud. We reviewed recent literature on online survey methods to examine the state of the field related to online data collection and dissemination. We illustrate the review with examples of key methodological decisions made during a recent national study of people who feed wild birds, in which survey respondents were recruited through an online panel and a sample generated via a project participant list. Conducting surveys online affords new opportunities for participant recruitment, design, and pilot testing. For instance, online survey panels can provide quick access to large and diverse samples of people. Based on the literature review and our own experiences, we suggest that to ensure high‐quality online surveys one should account for potential sampling and nonresponse error, design survey instruments for use on multiple devices, test the instrument, and use multiple protocols to identify data quality problems. We also suggest that research funders, journal editors, and policy makers can all play a role in ensuring high‐quality survey data are used to inform effective conservation programs and policies.

Keywords: conservation social science, COVID‐19 pandemic, human dimensions, methods, questionnaires, web‐based surveys

Short abstract

Article impact statement: Online social surveys can provide reliable data to inform conservation through robust sampling and recruitment, testing, and data cleaning.


Summary

The coronavirus (COVID‐19) pandemic is affecting the environment and conservation research in fundamental ways. Many conservation social scientists are now administering surveys online, but they must do so while ensuring rigor in data collection. In addition, they must address a set of unique challenges, such as the increasing use of mobile devices by participants and the avoidance of bots and other survey fraud. We reviewed recent literature on online survey methods to examine the state of the field related to data collection and dissemination. We illustrate this review with examples of important methodological decisions made during a national study of people who feed wild birds, in which survey respondents were recruited through an online panel and a sample was generated from a list of project participants. Conducting surveys online offers new opportunities for participant recruitment, design, and pilot testing. For example, online survey panels can provide rapid access to large and diverse samples of people. Based on the literature review and our own experiences, we suggest that to ensure high‐quality online surveys one should account for potential sampling and nonresponse error, design survey instruments for use on different devices, test the instrument, and use multiple protocols to identify data quality problems. We also suggest that research funders, journal editors, and policy makers can play a role in ensuring that high‐quality survey data are used to guide conservation programs and policies.

INTRODUCTION

The global coronavirus (COVID‐19) pandemic could lead to lasting change in conservation research (Corlett et al., 2020). For example, opportunities for in‐person collection of social data could be drastically restricted. The pandemic will not be the last social upheaval to affect collection of social data. Effectively adapting conservation social science research to such challenges is critical.

Social science data are essential for designing and implementing effective conservation policy and practice (Bennett et al., 2017). Surveys are a common method for collecting such data. Surveys involve designing a set of questions (i.e., a questionnaire or survey instrument) and collecting and analyzing people's responses to them (Lavrakas, 2008). Questionnaires have long been the most common tool for conducting social surveys (Young, 2016). Given sufficient sample size and appropriate sampling and survey design, surveys allow researchers to generalize from a sample to a population with known statistical confidence (Stern et al., 2014). Surveys are also useful for collecting descriptive data from large numbers of people. Data gathered from people help ensure that conservation programs are acceptable to the public (Bennett, 2016) and effect behavior change where intended (Reddy et al., 2017). Surveys can be administered in a variety of modes, including in person, by mail, by phone, and online. Unfortunately, survey response rates have been declining for decades (Groves, 2011; Stedman et al., 2019). On top of this long‐term trend, social distancing measures necessitated by COVID‐19 have forced many conservation social scientists to experiment with new modes. As some conservation social scientists move their data collection online, they must ensure rigor in this mode.

Online surveys have unique challenges researchers must address, including increased use of mobile devices for surveys, bots, and other survey fraud (Nayak & Narayan, 2019). Yet articles that chart a course for rigorous online conservation survey research are either lacking or predate substantial developments in online survey platform technologies and growing concerns about online security and survey fraud (e.g., Vaske, 2011). When social distancing measures were put in place in early 2020, we were in the midst of planning two surveys of people at conservation and natural areas, asking about beach recreation behaviors around birds and about boating experiences. As we moved our surveys online, we found no recent best practice resources tailored to our field, so we reviewed the survey methods literature from multiple other social science fields. Furthermore, A.D. and C.W. hosted web meetings on transitions to remote research in the face of the pandemic for the Society for Conservation Biology's Social Science Working Group, in which scientists from across the globe expressed interest in clear guidance for this transition. Thus, we sought to address these needs through a review of recent literature on best practices in online survey methods and a case study of a survey of people who feed birds. We devised guidelines for conducting online surveys to ensure collection of high‐quality survey data.

ONLINE SURVEYS FOR CONSERVATION SOCIAL SCIENCE

In‐person surveys have been considered the most appropriate method for studies of households and individuals, although telephone and mail modes allow lower cost per response (Stern et al., 2014). In comparison with other survey modes, in‐person surveys increase response rates among certain populations (e.g., low‐income or vulnerable populations) (Jackson‐Smith et al., 2016; Weiss & Bailar, 2002) and reach populations in situ (e.g., visitors to protected areas) (Davis et al., 2012). However, response rates for in‐person surveys are declining, in part due to respondents’ decreasing willingness to speak with interviewers (Groves & Couper, 1998). Declining response rates raise concern about nonresponse bias regardless of survey mode (Harter et al., 2016), especially when particular subpopulations are underrepresented, including rural populations (Coon et al., 2019), populations with low education levels and incomes (Goyder et al., 2002), and women (Jacobson et al., 2007).

Relative to other modes of social data collection, online surveys offer an efficient option for remote data collection and increase accessibility for some populations. Technological advances make online surveys convenient (e.g., respondents can complete a survey when they like), interactive (e.g., images, videos, or drag‐and‐drop options), accessible (e.g., increased contrast or font), streamlined (e.g., personalized survey items based on previous responses), and available on multiple devices (e.g., desktop computers, mobile phones). In particular, online panels (in which survey participants are recruited, incentivized, and retained by for‐profit companies) provide a novel way to reach target populations and collect data within a short time frame. Online data collection can also reduce data entry errors because researchers do not need to manually enter responses into a spreadsheet.

Survey methodologists in the conservation field argue that online surveys can produce “inaccurate, unreliable, and biased data” (Duda & Nobile, 2010, p. 55). Problems of survey access, recruitment, and low‐quality responses can produce these undesirable outcomes. Depending on the target population, uneven internet access can reduce or bias the response rate (UNESCO & ITU, 2019). Furthermore, nonrandom recruitment methods, such as advertisement on social media, can result in sampling error (Hill & Shaw, 2013), and the responses themselves can be populated by computer bots (Buchanan & Scofield, 2018). We raise these concerns to make the case that online survey development and implementation must be done with a high level of preparation, care, and documentation. Numerous social science methodologists, while acknowledging the downsides of online social surveys, have lauded their value. Witte (2009, p. 287) wrote that web‐based survey research has “reached a level of maturity such that it can be considered an essential part of the sociological tool kit.” Business and marketing researchers Evans and Mathur (2005, p. 195) argue that “if conducted properly, online surveys have significant advantages over other formats.” We agree, and aim to support conservation social scientists in minimizing potential pitfalls of this data collection mode while maximizing its utility.

ONLINE SURVEY DESIGN AND IMPLEMENTATION

We focus on the factors we consider most important in designing and implementing online surveys; this is not a comprehensive guide. For researchers new to surveys, we recommend books by Dillman et al. (2014) and Engel (2015). Conservation researchers without formal training in the social sciences commonly conduct surveys (Martin, 2020), and it is especially important for this group to be aware of the considerable preparation an online survey requires. We suggest these researchers partner with a trained social scientist. Still, even the most seasoned social scientist can benefit from staying current with survey research best practices.

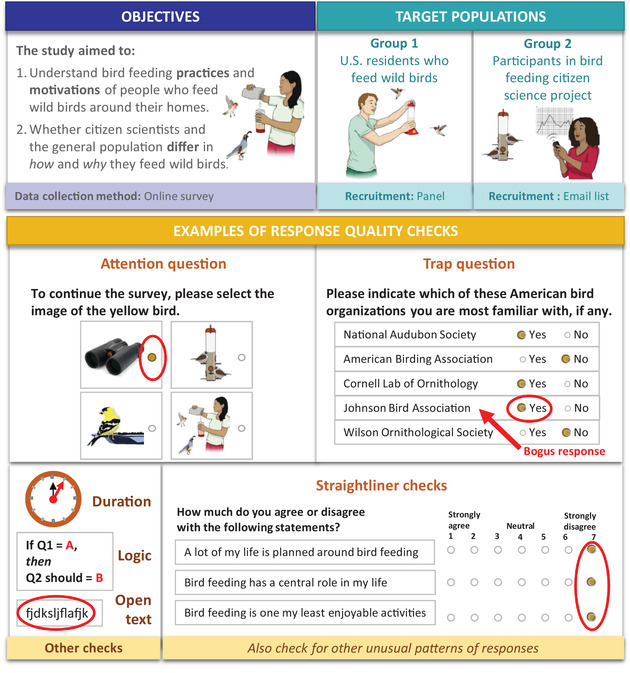

We drew examples from a recent study conducted by A.D. and V.M. and their colleagues (Figure 1). They surveyed people who feed wild birds and aimed to determine the different types of foods people offer to birds and their motivations for doing so. Data were collected through a national‐level online survey of a commercial online panel and a random sample of participants in a citizen science project that records birds at backyard feeders.

FIGURE 1.

Design of a survey study of people in the United States who feed wild birds. Attention questions and trap questions check whether respondents are reading carefully and help detect bots; straightliner checks identify a lack of variation in responses where variation is expected; and other checks identify unacceptably short time spent on the survey, illogical responses (a form of inattention), and nonsense text (sometimes entered to satisfy forced responses). More information on response checks is provided in Appendix S1.

Strategic planning

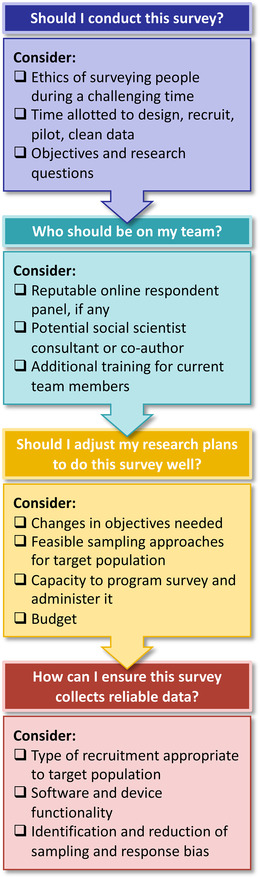

Researchers collecting primary data from people online need to consider how their objectives align with opportunities afforded by the online venue and plan strategically (see Figure 2 for questions to address). Researchers also need to consider the ethics of involving human participants (Brittain et al., 2020; Ibbett & Brittain, 2020). Most universities require protocol approval from a review board or ethics committee. In the bird feeder study, a mail survey was an option, but an online survey was chosen to collect a large volume of data at a national scale in a short period and on a limited budget. The team had the expertise to design and program the online survey themselves (i.e., researchers were well‐versed in online survey best practices and had practical experience implementing online surveys).

FIGURE 2.

Questions researchers should ask themselves to strategically plan for an online survey

Online sampling and recruiting

Two types of survey error are of particular concern when sampling and recruiting online: sampling error and nonresponse error. Sampling error occurs when results from a sample differ from those that would have been obtained from the whole population. Nonresponse error occurs when some people in the sample do not respond to or do not complete the survey and these nonresponders differ from the responders (Engel, 2015). Probability‐based sampling (randomly sampling from the population), taking larger (but not excessive) samples, and following up with alternative survey modes are some of the best ways to reduce sampling error (Fricker, 2017).
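To make the "larger but not excessive" advice concrete: under simple random sampling, the margin of error for an estimated proportion shrinks only with the square root of the sample size. The sketch below is a textbook calculation, not a method from this study; it assumes a simple random sample, a 95% confidence level (z = 1.96), and the conservative p = 0.5, and the function name is hypothetical. It says nothing about nonresponse error, which no sample‐size formula can correct.

```python
import math


def margin_of_error(n, population_size=None, p=0.5, z=1.96):
    """Approximate margin of error for a proportion under simple random sampling.

    n: number of completed responses
    population_size: size N of the sampled population; if given, the finite
        population correction sqrt((N - n) / (N - 1)) is applied
    p: assumed proportion (0.5 yields the largest, most conservative value)
    z: critical value (1.96 corresponds to roughly 95% confidence)
    """
    if n <= 0:
        raise ValueError("n must be positive")
    standard_error = math.sqrt(p * (1 - p) / n)
    if population_size is not None:
        if n > population_size:
            raise ValueError("n cannot exceed the population size")
        if population_size > 1:
            # Sampling a large share of a small population reduces uncertainty.
            standard_error *= math.sqrt((population_size - n) / (population_size - 1))
    return z * standard_error
```

For example, `margin_of_error(400)` is about 0.049 and `margin_of_error(1600)` is about 0.0245: quadrupling the sample only halves the margin of error (roughly ±4.9 to ±2.5 percentage points), which is why very large samples bring diminishing returns.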

In online survey research, email recruitment to a predetermined random sample helps minimize sampling and nonresponse error if the population is known and email addresses are available (e.g., a survey of a conservation society sent to the member list vs. a survey sent to a listserv with unknown membership). Nonresponse error is still a concern in this recruitment approach, and researchers must be clear about how they calculate response rates. When an email is sent to an address, it may go into a spam filter, be deleted without being read, or be forwarded to another person. Numerous methods publications (e.g., Dillman et al., 2014; Fricker, 2017) offer best practices to minimize this source of error, including making strategic repeated contacts (through multiple modes such as phone and mail), sending individualized rather than mass emails, avoiding attachments, choosing email subject wording carefully, avoiding words commonly used by advertisers, and offering incentives. Researchers should also consider nonresponse bias checks, which can be accomplished using follow‐up questionnaires for nonresponders, comparing respondent demographics to the target population, and comparing responses to key survey variables between early‐wave and late‐wave respondents (characteristics of late responders may differ from early responders) (Aerny‐Perreten et al., 2015). To recruit the citizen science group in the bird feeding study, we used a list of participants (n = 9960) in the United States who submitted data in the previous year to Project FeederWatch (www.feederwatch.org). We drew a random sample of 2900 participants from this list and invited them via email to complete our online questionnaire about their bird feeding experiences. We received 1270 fully completed responses (43.8% response rate).
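The recruitment steps in this paragraph (drawing a random sample from a known list, computing a response rate, and comparing early‐ and late‐wave respondents) can be sketched in a few lines. This is an illustration under stated assumptions, not the procedure the study team used: participant IDs, the seed, and all function names are hypothetical; the response rate treats bounced addresses as ineligible, which is only one possible convention; and the early/late comparison uses a standard two‐proportion z statistic on a single binary key variable.

```python
import math
import random


def draw_invitation_sample(participant_ids, k, seed):
    """Draw a simple random sample of k participants without replacement.

    Recording the seed makes the draw reproducible and easy to document.
    """
    rng = random.Random(seed)
    return rng.sample(list(participant_ids), k)


def response_rate(completed, invited, undeliverable=0):
    """Completed responses divided by eligible invitations.

    Subtracting undeliverable addresses (e.g., bounced emails) is one
    convention; whichever definition is used should be reported.
    """
    eligible = invited - undeliverable
    if eligible <= 0:
        raise ValueError("no eligible invitations")
    return completed / eligible


def early_late_z(early_yes, early_n, late_yes, late_n):
    """Two-proportion z statistic comparing early- and late-wave respondents
    on a binary key variable; a large |z| hints at nonresponse bias."""
    p_early = early_yes / early_n
    p_late = late_yes / late_n
    pooled = (early_yes + late_yes) / (early_n + late_n)
    se = math.sqrt(pooled * (1 - pooled) * (1 / early_n + 1 / late_n))
    if se == 0:
        return 0.0  # both waves answered identically (all yes or all no)
    return (p_early - p_late) / se
```

With the figures reported above, `response_rate(1270, 2900)` is about 0.438 (43.8%). A call such as `draw_invitation_sample(range(9960), 2900, seed=2020)` shows the shape of the draw; the seed here is arbitrary.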

If email recruitment from a random sample is infeasible, researchers may consider recruiting via a convenience sample (Battaglia, 2008) on listservs (e.g., email lists for birding groups) or social media. We have noticed a large number of these solicitations since the pandemic lockdown measures began in early 2020. Although these recruitment options offer the opportunity for rapid data collection, researchers must acknowledge that convenience samples cannot be used to make statistical inferences about a population. When a researcher does not know the key characteristics of a population (total population size, age, education, race, gender, etc.) on a listserv or social media, they cannot define their sample in relation to the population or determine the extent of the sampling error. It is also difficult to test for nonresponse error in this form of recruitment because respondents on listservs and social media self‐select participation. These problems apply to direct email recruitment too, but researchers can minimize nonresponse through repeated email contact, which is difficult to track through listserv or social media samples. The trend in online recruitment without a random sample is not unique to 2020; Zack et al. (2019) documented increasing reliance on nonprobability samples in the social sciences before the pandemic.

Our first suggestion for online recruitment without a predrawn sample is to use quota sampling. Quotas allow researchers to select relevant variables (e.g., age, ethnicity, education) and match their distribution in the sample to that in the study population (Newing et al., 2011). Although quota sampling is not always as effective as probability sampling (Yang & Banamah, 2014), it can reduce sampling error when the quota variables are tailored to the study (Terhanian et al., 2016). Second, we suggest restricting listserv and social media recruitment to survey pretesting (e.g., to validate theoretical constructs and reduce measurement error [see “Pretesting and pilot testing”]), developing hypotheses, and identifying and describing issues of importance without statistical inference.
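Quota sampling reduces to two mechanical steps: converting population shares into respondent targets and closing each quota cell once its target is reached. The sketch below assumes a single quota variable with hypothetical cell labels and shares (in practice these might come from census data); largest‐remainder rounding is our choice for making the targets sum to the desired sample size. Panel vendors typically enforce quotas in their own systems, so this only illustrates the logic.

```python
import math


def quota_targets(shares, total_n):
    """Turn population shares per quota cell into whole-number targets.

    Largest-remainder rounding keeps the targets summing to total_n.
    """
    if abs(sum(shares.values()) - 1.0) > 1e-6:
        raise ValueError("population shares must sum to 1")
    raw = {cell: share * total_n for cell, share in shares.items()}
    targets = {cell: math.floor(value) for cell, value in raw.items()}
    shortfall = total_n - sum(targets.values())
    # Give any leftover slots to the cells with the largest fractional parts.
    by_remainder = sorted(raw, key=lambda cell: raw[cell] - targets[cell], reverse=True)
    for cell in by_remainder[:shortfall]:
        targets[cell] += 1
    return targets


class QuotaTracker:
    """Accepts a respondent only while their quota cell is still open."""

    def __init__(self, targets):
        self.targets = dict(targets)
        self.counts = {cell: 0 for cell in targets}

    def try_accept(self, cell):
        if cell not in self.targets:
            return False  # respondent falls outside every defined cell
        if self.counts[cell] >= self.targets[cell]:
            return False  # quota already full, so screen the respondent out
        self.counts[cell] += 1
        return True

    def open_cells(self):
        return [cell for cell in self.targets if self.counts[cell] < self.targets[cell]]
```

For instance, hypothetical age shares of 0.25, 0.25, and 0.5 with a target of 10 respondents yield targets of 3, 2, and 5. Reporting which cells remain open at the end of fielding is one way to document quotas that could not be filled.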

Online panels

If funding is sufficient, online survey panels offer “a sample database of potential respondents who declare that they will cooperate for future data collection if selected” (IOS, 2012), although there are many considerations when choosing a panel vendor. Key terms related to these panels are described in Appendix S1. Survey panels are being used increasingly to provide quick access to large and diverse samples of people (Callegaro et al., 2014). Logistical considerations for online panel vendor choice include cost, populations available, geographic coverage, and how data quality is addressed (Baker et al., 2010).

As with other types of recruitment, sampling and nonresponse errors are important considerations for data collected through online panels. A panel respondent sample may be drawn as a probability sample (random sample of the general population) or a nonprobability sample (e.g., members recruited through online advertisements rather than direct solicitation) (Baker et al., 2010). For probability‐based panels with low response rates, researchers can weight responses to reflect key population demographics (i.e., poststratification adjustment) (Hays et al., 2015). For nonprobability sample online panels, researchers can use quotas to minimize sampling error. However, the composition of some panels may be skewed toward particular demographics. For instance, the demographic composition of MTurk (Amazon's online panel) respondents differs significantly from that of the U.S. population, making some quotas impossible to fill (Shapiro et al., 2013). However, when MTurk workers are used for experimental research, they are at least as reliable as the traditional undergraduate samples used for psychological experiments (Buhrmester et al., 2016). Numerous response metrics can be reported for online panels. Callegaro and DiSogra (2008) recommend reporting, at minimum, the recruitment rate (the proportion of all invited people who agreed to serve on the panel) and the specific survey's completion rate (the proportion of those who started, qualified for, and then completed the survey). Reporting multiple measures of nonresponse can also improve survey transparency, including noncontact (e.g., wrong email address or spam filter), refusal (after reading the email), and inability to respond (e.g., internet connectivity issues).
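Poststratification in its simplest cell‐based form gives each respondent a weight equal to their cell's population share divided by that cell's share of the sample, so underrepresented groups count for more. The sketch below assumes a single weighting variable with hypothetical cell labels and known population shares that sum to 1; applied work often rakes across several variables and trims extreme weights, which this sketch does not do.

```python
from collections import Counter


def poststratification_weights(sample_cells, population_shares):
    """Cell-based poststratification: weight = population share / sample share.

    sample_cells: one cell label per respondent (e.g., an age group)
    population_shares: dict mapping each cell to its share of the population
    Returns one weight per respondent; the weights average to 1 when the
    population shares sum to 1.
    """
    n = len(sample_cells)
    counts = Counter(sample_cells)
    empty = set(population_shares) - set(counts)
    if empty:
        raise ValueError(f"no respondents in cells: {sorted(empty)}")
    unknown = set(counts) - set(population_shares)
    if unknown:
        raise ValueError(f"no population share for cells: {sorted(unknown)}")
    cell_weight = {cell: population_shares[cell] / (counts[cell] / n) for cell in counts}
    return [cell_weight[cell] for cell in sample_cells]
```

If three of four respondents fall in cell "a" but the population is split evenly between "a" and "b", each "a" respondent receives a weight of about 0.67 and the lone "b" respondent a weight of 2.0. Empty cells raise an error because no weight can recover a group that was never sampled.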

We used an online panel to recruit a sample of members of the U.S. public who feed birds. Quotas were set for age, race, and education level, with target distributions taken from previous joint USFWS and U.S. Census Bureau (2018) surveys. In contrast, we did not use quotas for the sample of citizen scientists; instead, we selected a random sample of 2900 email addresses from the 9960 people who participated in the project in the previous year. Online panel respondents (who represented the general population who feed wild birds) differed demographically from the citizen scientists, who included more White, highly educated, and older respondents. Some quotas were difficult to fully meet in the online panel (e.g., non‐White people with low levels of education, who may be underrepresented in online survey panels due to structural inequalities that limit their access to online and technological resources). We detected large differences between the two groups in their motivations to feed birds. These differences emphasized the value of using both an online panel and citizen scientist contacts to reach the broader bird feeding population. Reporting response metrics was challenging for multiple reasons, including the use of multiple subcontracted panel vendors, a screening question (whether the respondent fed birds at their home) that disqualified the majority of panel members, and automatic termination protocols triggered when an invalid response was detected (e.g., very short response time). We recommend researchers work closely with the online panel provider to discuss and plan for these metrics before data collection begins.

Design and mobile devices

Online survey tools afford unique functionality to improve questionnaire design, including appearance, display logic, and randomization. The appearance of surveys (e.g., fonts, colors, logos) can be customized to an organization's branding, which can increase credibility. Visual design matters because enhancing questionnaires with visual elements can increase response rates (Deutskens et al., 2004), although researchers need to be aware of introducing bias (e.g., prompting respondents to think of responses they would not otherwise have given). Contrast, color scheme, and font size can also be configured to increase accessibility for visually impaired respondents. Display logic options can help tailor questionnaires to different subgroups: skip logic skips items based on the answer to a filter question, and display logic shows a question only when predetermined criteria are met. Subsets of questions can be randomized or displayed to only a preset number or proportion of respondents. Randomization can also be used to reduce question‐order effects by presenting lists of statements in random order (Couper et al., 2004). An order effect occurs when a person's answer to a survey question is influenced by an earlier question (Dillman et al., 2014). Finally, online questionnaires can require or request responses to questions, reducing the problem of partial survey completion (although respondents may drop out if frustrated by this feature). Some institutional ethics bodies consider requiring responses unethical.
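Survey platforms configure these features through their own interfaces, but the underlying logic is simple. The sketch below is hypothetical and does not reproduce any platform's API: each question may carry a display condition that inspects earlier answers (skip and display logic), a placeholder pulls an earlier answer into a follow‐up question (piped text), and a list of statements is shuffled with a per‐respondent seed so that each person's order is random but reproducible. The question wording and IDs are invented for illustration.

```python
import random
from string import Template

# Hypothetical items; "show_if" encodes display/skip logic as a test on earlier answers.
QUESTIONS = [
    {"id": "feeds_birds",
     "text": "Did you feed wild birds at your home in the last 12 months?"},
    {"id": "main_food",
     "text": "What food do you offer birds most often?",
     "show_if": lambda answers: answers.get("feeds_birds") == "yes"},
    {"id": "why_food",
     "text": "Why do you offer $main_food?",  # piped text from the previous answer
     "show_if": lambda answers: "main_food" in answers},
]


def next_question(answers):
    """Return the next question to display, or None when no items remain."""
    for question in QUESTIONS:
        if question["id"] in answers:
            continue  # already answered
        show_if = question.get("show_if")
        if show_if is not None and not show_if(answers):
            continue  # condition not met, so the item is skipped
        # safe_substitute leaves unknown placeholders intact instead of failing
        return Template(question["text"]).safe_substitute(answers)
    return None


def statement_order(respondent_id, statements):
    """Shuffle statements with a per-respondent seed (random but reproducible order)."""
    rng = random.Random(respondent_id)
    order = list(statements)
    rng.shuffle(order)
    return order
```

A respondent who answers "no" to the filter question sees nothing further (`next_question({"feeds_birds": "no"})` returns `None`), whereas one who answers "yes" and names a food sees that food piped into the follow‐up question.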

Researchers need to consider how questionnaires look on different devices. Questionnaires should be designed with mobile devices in mind because respondents are very likely to access the survey this way (Link et al., 2014). Up to 53% of questionnaires are completed on mobile devices (Qualtrics, 2020). User interface problems can be particularly off‐putting (e.g., the questionnaire is not mobile friendly or requires too much scrolling) (Dillman et al., 2014). Respondents may abandon the survey or give biased responses if the questionnaire is not designed for their device (de Bruijne & Wijnant, 2014). For example, a response scale (e.g., strongly agree to strongly disagree) may appear horizontally and in full on a computer, whereas on a smartphone it appears as a dropdown list. Dropdown lists may create response bias because respondents may endorse visible response options more frequently than options that require scrolling to view (Couper et al., 2004). One of the best ways to avoid functionality issues is to test the questionnaire on a variety of devices and internet browsers before finalizing it. Most reputable online survey platforms provide valuable support and advice for survey researchers.

We anticipated approximately 60% of respondents from both sample groups would use mobile devices to complete the questionnaire (based on information from the online panel provider and experience with the citizen science project). We created a questionnaire that was visually appealing and engaging by using a clean style and occasional colorful illustrations of feeder birds. It was also tailored to mobile devices. For instance, Likert scale items started with the most likely responses so respondents would need to scroll down less frequently on mobile devices. We also used functions such as piped text, which pulls previous answers into follow‐up questions, skip logic to ensure respondents were not burdened with irrelevant questions, and randomized lists of statements to minimize order effects.

Pretesting and pilot testing

Pretesting and pilot testing are essential steps prior to fielding a survey, regardless of whether the survey is conducted online or via another mode. Pretesting refers specifically to refining the questionnaire, whereas piloting assesses the feasibility of the entire survey process, from sampling and recruitment strategies through data collection and analysis (Ruel et al., 2016). A survey pretest is generally conducted with a small sample of individuals and can help answer questions about questionnaire organization and content with the aim of minimizing potential measurement error (Ruel et al., 2016). For example, were the instructions confusing or misleading? Does each section of the questionnaire flow smoothly from one to the next? Are the questions concise, unbiased, and measuring what they were intended to measure? Pretesting can assess the cognitive process of answering questions in order to increase question validity (Willis, 2004) and can be conducted either in person or online (Geisen & Murphy, 2020). Following pretesting, the survey (including the questionnaire and distribution and analysis protocols) should be piloted with a cross section of the target population and these data analyzed to ensure the proposed implementation and analysis plan is appropriate and efficient (Geisen & Murphy, 2020). When pilot testing is complete, necessary revisions to the survey protocol can be made, including changes to sampling and recruitment methods, the questionnaire and administration procedures, or the analytical approach.

Researchers can, at minimum, pretest the survey instrument among key informants to assess content validity, and ideally, pilot the survey online. There are a number of online vendors that offer options for piloting, including MTurk (Edgar et al., 2016). If researchers are using an online respondent panel vendor, the survey can be piloted through the vendor with a subsample of respondents before sending to the full sample. When planned and conducted well, pretests and pilot surveys can ensure the full‐scale study maintains high validity, reliability, and efficiency.

In our bird feeding study, we twice recruited convenience samples of people who feed wild birds and follow two bird‐feeding Facebook pages run by the Cornell Lab of Ornithology (n₁ = 44, n₂ = 450) to thoroughly pretest our online survey. Our main concern was that people of different ages would be able to understand the questions and provide relevant responses, so we included checks for feedback throughout the questionnaire. We used quota sampling for these test groups, with the key variable being age group, along with screening questions to ensure respondents were over 18 years old, lived in the United States, and had fed wild birds in the last 12 months. Due to the broad reach of these Facebook groups, we were able to gain respondents from all states except Wyoming and Hawaii in the testing phase. However, if we had concerns about geographic reach, we could have included other quota variables, such as state or census region.

Avoiding poor‐quality data

Although data quality can be improved through pretesting the instrument and piloting the survey, there are other data quality concerns unique to online research that should also be addressed. Respondents may satisfice, meaning they expend minimal effort on responses, due to online distractions, survey design issues, or desire for incentive payments (Anduiza & Galais, 2016). Survey bots or automatic form fillers may also complete surveys, leading to falsified data. Bots are becoming an increasing problem in online survey research, and although their aim is usually to receive payment for completed responses, they have been found responding to unpaid survey opportunities (presumably to refine their algorithms) (Prince et al., 2012). It is important to discuss bot‐detection mechanisms with the online panel vendor if applicable, and what recourse is available if suspicious responses are found (e.g., recruiting additional respondents at no additional cost to the researcher). There are a number of data quality checks that can be incorporated into questionnaire design and survey administration to address satisficing and bots (Appendix S2). For instance, Buchanan and Scofield (2018) recommend use of multiple methods, including monitoring response time, reverse scaling of response options for different questions, and attention‐check questions (although attention or so‐called trap questions could lower the level of trust between respondent and researcher). We suggest also checking for straightlining, in which the respondent selects the same response for blocks of questions in a row.
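Several of these checks can be scripted once responses are exported. The sketch below shows straightlining, speeding, and instructed‐response (attention) checks; the function names are hypothetical, and the thresholds (at least five items in a grid, and completion faster than one‐third of the median duration) are our assumptions for illustration, not standards from the literature cited here. Appropriate cutoffs depend on questionnaire length and should be set and documented before data are inspected.

```python
from statistics import median


def is_straightliner(grid_responses, min_items=5):
    """Flag a block of items answered with the identical option throughout.

    Only meaningful for blocks where variation is expected (e.g., grids that
    mix positively and negatively worded items). min_items is an assumption.
    """
    return len(grid_responses) >= min_items and len(set(grid_responses)) == 1


def speeders(durations, fraction=1 / 3):
    """Return respondent IDs whose completion time falls below a fraction of
    the median duration (seconds). The one-third cutoff is an assumption."""
    cutoff = fraction * median(durations.values())
    return {rid for rid, seconds in durations.items() if seconds < cutoff}


def failed_attention_check(answer, expected):
    """True if an instructed-response item (e.g., 'select Disagree') was missed."""
    return answer != expected
```

For example, with durations of 600, 620, and 100 seconds, the median is 600 seconds and only the 100‐second respondent falls below the 200‐second cutoff. Each check produces a flag rather than an automatic exclusion.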

Once the data have been screened, researchers must then decide whether particular respondents should be dropped from the final data set and replaced to meet quotas or statistical power requirements. We recommend keeping detailed records of what is removed and why and working closely on these protocols with online panel vendors, if applicable. In addition to inattention and other checks, researchers must decide what to do with incomplete responses, which is a challenge across most survey modes. Researchers can decide whether to drop the respondent, treat individual items as missing, or impute responses (i.e., fill in the missing responses using statistical methods). For more information on missing data and imputation, see Little and Rubin (2020). We employed our own data quality checks in our bird feeding study, in addition to the online panel vendor's protocols; checks included duration, straightlining, attention and trap questions, logic checks, and open‐ended response checks (Figure 1). Responses failing these checks were flagged. Respondents with two or more flags were reviewed individually for irregularities and excluded from the final data set if deemed unreliable.
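The flag‐counting rule described above (respondents with two or more failed checks are reviewed individually) and the advice to keep detailed exclusion records can be sketched as follows. The data structures and function names are hypothetical; the final keep‐or‐drop decision stays a human judgment, and the sketch only assembles the review list, records exclusions, and reports how much of a response is missing so that drop, missing‐item, or imputation decisions can be documented consistently.

```python
def review_queue(flags, threshold=2):
    """Return respondent IDs with at least `threshold` failed checks.

    flags: dict mapping respondent ID -> set of failed check names
    """
    return sorted(rid for rid, failed in flags.items() if len(failed) >= threshold)


def log_exclusion(log, rid, failed_checks, reason):
    """Append a record of which respondent was removed and why."""
    log.append({
        "respondent": rid,
        "failed_checks": sorted(failed_checks),
        "reason": reason,
    })


def missing_share(responses):
    """Fraction of items left unanswered (recorded as None) by one respondent."""
    if not responses:
        return 0.0
    return sum(value is None for value in responses.values()) / len(responses)
```

Keeping the log alongside the cleaned data set makes it straightforward to report how many responses were removed at each step.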

Reporting data collection methods

Accurate and thorough reporting of data collection methods is crucial to maintaining the legitimacy of survey data; however, this reporting is often lacking in conservation social science (Martin, 2020). Although requirements vary across journals, we recommend using general guidelines from the American Psychological Association (Journal Article Reporting Standards; www.apastyle.org/jars), the American Association for Public Opinion Research (AAPOR) (https://www.aapor.org/Standards‐Ethics/Best‐Practices.aspx), and best practices for conservation social science research offered by Teel et al. (2018). Thorough reporting of online panel methods, to the extent possible, will advance progress toward a uniform standard of high‐quality online panel data reporting, similar to that which exists for other survey modes.

CALL TO ACTION

Overcoming challenges to collecting survey data online without compromising quality is essential to ensure the accuracy and legitimacy of these data and to the future of conservation social science. The responsibility for data quality lies with researchers and larger institutions producing and consuming the data, including funders and policy makers. We recommend the following tangible actions researchers, funders, vendors, reviewers, editors, and science consumers can take to further high‐quality online data collection and dissemination.

Researchers and their institutions

Researchers must consider whether an online survey is appropriate before adopting this method and mode of data collection. The practices recommended above will help ensure quality data, but it is essential that trained social scientists be engaged in survey research. Research institutions can further encourage best practices in online survey research by issuing protocol guidelines that mirror institutional review board or ethics committee requirements.

Online panel vendors

Vendors can best support high‐quality science by being transparent about recruitment and response rates and by reporting multiple measures of these rates. They can also lead innovation in checks for bots and inattentive respondents. Vendors that develop lists covering populations commonly studied by conservation social scientists (e.g., private landowners, wildlife recreationists) will be particularly valuable to the field.
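To illustrate what reporting response rates in more than one way can look like, the sketch below computes stage‐specific rates for a recruited online panel and combines them into a cumulative rate by multiplication. It loosely follows the multistage logic discussed by Callegaro and Disogra (2008), but the stage definitions, field names, and counts are simplified, hypothetical assumptions, not their exact formulas or an AAPOR standard definition.

```python
from dataclasses import dataclass


@dataclass
class PanelCounts:
    """Hypothetical counts at successive stages of a recruited online panel."""
    invited_to_panel: int    # people invited to join the panel
    joined_panel: int        # people who agreed to join
    profiled: int            # joiners who completed the panel's profile survey
    invited_to_study: int    # panel members invited to this particular survey
    completed_study: int     # invitees who completed this survey


def response_rates(c: PanelCounts) -> dict[str, float]:
    """Return stage-specific rates and their product as a cumulative rate."""
    recruitment = c.joined_panel / c.invited_to_panel
    profile = c.profiled / c.joined_panel
    completion = c.completed_study / c.invited_to_study
    return {
        "recruitment_rate": recruitment,
        "profile_rate": profile,
        "completion_rate": completion,
        # Multiplying stage rates approximates the share of the originally
        # invited population that ends up as a completed response.
        "cumulative_response_rate": recruitment * profile * completion,
    }


if __name__ == "__main__":
    counts = PanelCounts(10_000, 2_000, 1_500, 1_000, 600)
    for name, value in response_rates(counts).items():
        print(f"{name}: {value:.3f}")
```

With these hypothetical counts, a 60% completion rate corresponds to a cumulative rate of only 9%, which is why a completion rate reported alone can give a misleading impression of how much of the originally invited population is represented.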

Journal editors and reviewers

Although online survey research is being published more frequently in a range of journals, few journals offer submission guidelines that specifically address best practices for implementing or reporting it. Journals that publish conservation social science research can contribute by developing reporting guidance for authors, reviewers, and editors that addresses the study's sampling framework, the online survey and panel vendors used, and the tests applied to assess data quality and response bias. Such guidance would complement adoption of the AAPOR Survey Disclosure Checklist for general survey reporting (AAPOR, 2009).

Science funders

Different types of funders, including foundations, agencies, and research institutions, can support high‐quality online surveys through thoughtful policies and adequate budget lines. Using an online panel vendor with sufficient quality controls can be costly (though less so than many mail or phone surveys), but the results will likely be more generalizable than data from email lists or social media. Many funding agencies do not allow incentive payments or make paying participants difficult, which hinders efforts to improve response rates. In proposal review, funders should scrutinize survey methods and support only researchers with clear plans for ensuring high‐quality survey data. Funders should also consider the depth of survey research expertise on a proposal team to ensure they invest in high‐quality research; including survey experts on grant proposal review panels is another way to achieve rigorous reviews.

Users of online survey results

There is high demand for survey data from multiple user groups, including policy makers and conservationists. It is the responsibility of these users to be informed about data collection methods. Conservation organizations and natural resource agencies may need social science practitioners to help with the interpretation of results. Social science researchers also need to assist end users of their data to understand the importance of rigorous methods through clear, evidence‐based communication.

CONCLUSION

Social distancing measures resulting from the COVID‐19 pandemic have hastened the growing use of online surveys by conservation social scientists. Yet this moment is not the only time when conservation social scientists have been required to pivot to new data collection methods. Depending on the study population, online surveys may offer an alternative approach to continue with research plans when events preclude in‐person methods, but conservation social scientists must fully prepare for the unique challenges and opportunities of online surveys. Attention to factors that influence data quality is vital, particularly when this information is used to inform conservation policies and programs. All stakeholders in social survey research must be invested in ensuring conservation social science continues to innovate and strive for robust and reliable findings.

Supporting information


ACKNOWLEDGMENTS

We thank participants of web meetings on transitions to remote research through the Society for Conservation Biology Social Science Working Group, which initially inspired this work. We also thank the Cornell Lab of Ornithology for its support of the study used as an example in this article. V.M. and A.D. acknowledge the contribution of their collaborators in the study, D. Bonter and R. Mady. We also appreciate the time of our survey research participants in the case study. Illustrations are by M. Bishop and E. Regnier. We thank the three anonymous reviewers, handling editor, and regional editor for providing feedback that improved this manuscript. This work was partially funded by USDA National Institute of Food and Agriculture McIntire‐Stennis Award 1015330.

Wardropper CB, Dayer AA, Goebel MS, Martin VY. Conducting conservation social science surveys online. Conservation Biology 2021;35:1650–1658. 10.1111/cobi.13747.


LITERATURE CITED

- American Association for Public Opinion Research (AAPOR). (2009). Survey disclosure checklist. https://www.aapor.org/Standards‐Ethics/AAPOR‐Code‐of‐Ethics/Survey‐Disclosure‐Checklist.aspx.

- Aerny‐Perreten, N., Domínguez‐Berjón, M. F., Esteban‐Vasallo, M. D., & García‐Riolobos, C. (2015). Participation and factors associated with late or non‐response to an online survey in primary care. Journal of Evaluation in Clinical Practice, 21, 688–693.

- Anduiza, E., & Galais, C. (2016). Answering without reading: IMCs and strong satisficing in online surveys. International Journal of Public Opinion Research, 29, 497–519.

- Baker, R., Blumberg, S. J., Brick, J. M., Couper, M. P., Courtright, M., Dennis, J. M., Dillman, D., Frankel, M. R., Garland, P., Groves, R. M., Kennedy, C., Krosnick, J., Lavrakas, P. J., Lee, S., Link, M., Piekarski, L., Rao, K., & Thomas, R. K. (2010). Research synthesis: AAPOR report on online panels. Public Opinion Quarterly, 74, 711–781.

- Battaglia, M. (2008). Convenience sampling. In Lavrakas P. J. (Ed.), Encyclopedia of survey research methods (pp. 148–149). Sage Publications.

- Bennett, N. J. (2016). Using perceptions as evidence to improve conservation and environmental management. Conservation Biology, 30, 582–592.

- Bennett, N. J., Roth, R., Klain, S. C., Chan, K., Christie, P., Clark, D. A., Cullman, G., Curran, D., Durbin, T. J., Epstein, G., Greenberg, A., Nelson, M. P., Sandlos, J., Stedman, R., Teel, T. L., Thomas, R., Veríssimo, D., & Wyborn, C. (2017). Conservation social science: Understanding and integrating human dimensions to improve conservation. Biological Conservation, 205, 93–108.

- Brittain, S., Ibbett, H., Lange, E., Dorward, L., Hoyte, S., Marino, A., Milner‐Gulland, E. J., Newth, J., Rakotonarivo, S., Veríssimo, D., & Lewis, J. (2020). Ethical considerations when conservation research involves people. Conservation Biology, 34, 925–933.

- Buchanan, E. M., & Scofield, J. E. (2018). Methods to detect low quality data and its implication for psychological research. Behavior Research Methods, 50, 2586–2596.

- Buhrmester, M., Kwang, T., & Gosling, S. D. (2016). Amazon's Mechanical Turk: A new source of inexpensive, yet high‐quality data? In Kazdin A. E. (Ed.), Methodological issues and strategies in clinical research (4th ed., pp. 133–139). American Psychological Association.

- Callegaro, M., Baker, R., Bethlehem, J., Goritz, A., Krosnick, J., & Lavrakas, P. (2014). Online panel research: History, concepts, applications, and a look at the future. In Callegaro M., Baker R., Bethlehem J., Goritz A., Krosnick J., & Lavrakas P. (Eds.), Online panel research (pp. 1–21). John Wiley & Sons.

- Callegaro, M., & Disogra, C. (2008). Computing response metrics for online panels. Public Opinion Quarterly, 72, 1008–1032.

- Coon, J. J., van Riper, C. J., Morton, L. W., & Miller, J. R. (2019). Evaluating nonresponse bias in survey research conducted in the rural Midwest. Society & Natural Resources, 33, 968–986.

- Corlett, R. T., Primack, R. B., Devictor, V., Maas, B., Goswami, V. R., Bates, A. E., Koh, L. P., Regan, T. J., Loyola, R., Pakeman, R. J., Cumming, G. S., Pidgeon, A., Johns, D., & Roth, R. (2020). Impacts of the coronavirus pandemic on biodiversity conservation. Biological Conservation, 246, 108571.

- Couper, M. P., Tourangeau, R., Conrad, F. G., & Crawford, S. D. (2004). What they see is what we get: Response options for web surveys. Social Science Computer Review, 22, 111–127.

- Davis, S. K., Thompson, J. L., & Schweizer, S. E. (2012). Innovations in on‐site survey administration: Using an iPad interface at National Wildlife Refuges and National Parks. Human Dimensions of Wildlife, 17, 282–294.

- De Bruijne, M., & Wijnant, A. (2014). Mobile response in web panels. Social Science Computer Review, 32, 728–742.

- Deutskens, E., De Ruyter, K., Wetzels, M., & Oosterveld, P. (2004). Response rate and response quality of internet‐based surveys: An experimental study. Marketing Letters, 15, 21–36.

- Dillman, D. A., Smyth, J. D., & Christian, L. M. (2014). Internet, phone, mail, and mixed‐mode surveys: The tailored design method. John Wiley & Sons.

- Duda, M. D., & Nobile, J. L. (2010). The fallacy of online surveys: No data are better than bad data. Human Dimensions of Wildlife, 15, 55–64.

- Edgar, J., Murphy, J., & Keating, M. (2016). Comparing traditional and crowdsourcing methods for pretesting survey questions. Sage Open. 10.1177/2158244016671770.

- Engel, U. (Ed.). (2015). Survey measurements: Techniques, data quality and sources of error. Campus Verlag.

- Evans, J. R., & Mathur, A. (2005). The value of online surveys. Internet Research, 15, 195–219.

- Fricker, R. D. (2017). Sampling methods for online surveys. In Fielding N., Lee R., & Blank G. (Eds.), The SAGE handbook of online research methods (pp. 162–183). SAGE Publications.

- Geisen, E., & Murphy, J. (2020). A compendium of web and mobile survey pretesting methods. In Beatty A. W. P., Collins D., Kaye L., Padilla J. L., & Willis G. (Eds.), Advances in questionnaire design, development, evaluation and testing (pp. 287–314). John Wiley & Sons.

- Goyder, J., Warriner, K., & Miller, S. (2002). Evaluating socio‐economic status (SES) bias in survey nonresponse. Journal of Official Statistics, 18, 1–12.

- Groves, R. M. (2011). Three eras of survey research. Public Opinion Quarterly, 75, 861–871.

- Groves, R. M., & Couper, M. P. (1998). Nonresponse in household interview surveys. John Wiley & Sons.

- Harter, R., Battaglia, M. P., Buskirk, T. D., Dillman, D. A., English, N., Fahimi, M., Frankel, M. R., Kennel, T., McMichael, J. P., McPhee, C. B., Montaquila, J., Yancey, T., & Zukerberg, A. L. (2016). Address‐based sampling. https://www.aapor.org/Education‐Resources/Reports/Address‐based‐Sampling.aspx.

- Hays, R. D., Liu, H., & Kapteyn, A. (2015). Use of Internet panels to conduct surveys. Behavior Research Methods, 47, 685–690.

- Hill, B. M., & Shaw, A. (2013). The Wikipedia gender gap revisited: Characterizing survey response bias with propensity score estimation. PLoS One, 8, e65782.

- Ibbett, H., & Brittain, S. (2020). Conservation publications and their provisions to protect research participants. Conservation Biology, 34, 80–92.

- International Organization for Standardization (ISO). (2012). ISO 20252 market, opinion and social research: Vocabulary and service requirements (2nd ed.). ISO.

- Jackson‐Smith, D., Flint, C. G., Dolan, M., Trentelman, C. K., Holyoak, G., Thomas, B., & Ma, G. (2016). Effectiveness of the drop‐off/pick‐up survey methodology in different neighborhood types. Journal of Rural Social Sciences, 31, 35–66.

- Jacobson, C. A., Brown, T. L., & Scheufele, D. A. (2007). Gender‐biased data in survey research regarding wildlife. Society & Natural Resources, 20, 373–377.

- Lavrakas, P. J. (2008). Introduction. In Lavrakas P. J. (Ed.), Encyclopedia of survey research methods (pp. xxxv–xxxl). Sage Publications.

- Link, M. W., Murphy, J., Schober, M. F., Buskirk, T. D., Childs, J. H., & Tesfaye, C. L. (2014). Mobile technologies for conducting, augmenting, and potentially replacing surveys. https://www.aapor.org/Education‐Resources/Reports/Mobile‐Technologies‐for‐Conducting,‐Augmenting‐and.aspx.

- Little, R. J. A., & Rubin, D. B. (2020). Statistical analysis with missing data (3rd ed.). John Wiley & Sons.

- Martin, V. Y. (2020). Four common problems in environmental social research undertaken by natural scientists. BioScience, 70, 13–16.

- Nayak, M., & Narayan, K. A. (2019). Strengths and weakness of online surveys. IOSR Journal of Humanities and Social Science, 24, 31–38.

- Newing, H., Puri, R. K., & Watson, C. W. (2011). Conducting research in conservation: A social science perspective. Routledge.

- Prince, K. R., Litovsky, A. R., & Friedman‐Wheeler, D. G. (2012). Internet‐mediated research: Beware of bots. The Behavior Therapist, 35, 85–88.

- Qualtrics. (2020). Survey methodology and compliance best practices. https://www.qualtrics.com/support/survey‐platform/survey‐module/survey‐checker/survey‐methodology‐compliance‐best‐practices/#MobileCompatibility.

- Reddy, S. M. W., Montambault, J., Masuda, Y. J., Keenan, E., Butler, W., Fisher, J. R. B., Asah, S. T., & Gneezy, A. (2017). Advancing conservation by understanding and influencing human behavior. Conservation Letters, 10, 248–256.

- Ruel, E., Wagner, W. E., III, & Gillespie, B. J. (2016). Pretesting and pilot testing. In Ruel E., Wagner W. E. III, & Gillespie B. J. (Eds.), The practice of survey research: Theory and applications (pp. 101–119). Sage Publications.

- Shapiro, D. N., Chandler, J., & Mueller, P. A. (2013). Using Mechanical Turk to study clinical populations. Clinical Psychological Science, 1, 213–220.

- Stedman, R. C., Connelly, N. A., Heberlein, T. A., Decker, D. J., & Allred, S. B. (2019). The end of the (research) world as we know it? Understanding and coping with declining response rates to mail surveys. Society & Natural Resources, 32, 1139–1154.

- Stern, M. J., Bilgen, I., & Dillman, D. A. (2014). The state of survey methodology: Challenges, dilemmas, and new frontiers in the era of the tailored design. Field Methods, 26, 284–301.

- Teel, T. L., Anderson, C. B., Burgman, M. A., Cinner, J., Clark, D., Estévez, R. A., Jones, J. P. G., McClanahan, T. R., Reed, M. S., Sandbrook, C., & St. John, F. A. V. (2018). Publishing social science research in Conservation Biology to move beyond biology. Conservation Biology, 32, 6–8.

- Terhanian, G., Bremer, J., Olmsted, J., & Guo, J. (2016). A process for developing an optimal model for reducing bias in nonprobability samples. Journal of Advertising Research, 56, 14–24.

- UN Educational, Scientific and Cultural Organization (UNESCO) and International Telecommunication Union (ITU). (2019). The state of broadband: Broadband as a foundation for sustainable development. https://www.itu.int/dms_pub/itu‐s/opb/pol/S‐POL‐BROADBAND.20‐2019‐PDF‐E.pdf.

- U.S. Fish and Wildlife Service (USFWS) and U.S. Census Bureau. (2018). 2016 national survey of fishing, hunting, and wildlife‐associated recreation. https://www.census.gov/library/publications/2018/demo/fhw‐16‐nat.html.

- Vaske, J. J. (2011). Advantages and disadvantages of internet surveys: Introduction to the special issue. Human Dimensions of Wildlife, 16, 149–153.

- Weiss, C., & Bailar, B. A. (2002). High response rates for low‐income population in‐person surveys. In Ver Ploeg M., Moffitt R. A., & Citro C. F. (Eds.), Studies of welfare populations: Data collection and research issues (pp. 86–104). National Academies Press.

- Willis, G. B. (2004). Cognitive interviewing: A tool for improving questionnaire design. Sage Publications.

- Witte, J. (2009). Web surveys in the social sciences: An overview. Sociological Methods & Research, 37, 283–290.

- Yang, K., & Banamah, A. (2014). Quota sampling as an alternative to probability sampling? An experimental study. Sociological Research Online, 19, 56–66.

- Young, T. (2016). Questionnaires and surveys. In Hua Z. (Ed.), Research methods in intercultural communication: A practical guide (pp. 163–180). Wiley.

- Zack, E. S., Kennedy, J., & Long, J. S. (2019). Can nonprobability samples be used for social science research? A cautionary tale. Survey Research Methods, 13, 215–227.
