Abstract

Using a new method for examining parental explanations in a laboratory setting, the prompted explanation task, this study examines how characteristics of parental explanations about biology relate to children's knowledge. Parents (N = 148; M age = 38; 84% female, 16% male; 67% having completed college) of children ages 7–10 (M age = 8.92; 47% female, 53% male; 58% White, 9.5% Black, 9.5% Asian) provided answers to eight how and why questions about biology. Parents used a number of different approaches to address the questions, including providing more mechanistic responses to how questions and more teleological responses to why questions. The characteristics of parental explanations—most notably, how frequently parents provided correct responses—predicted children's performance on measures of verbal intelligence and biological knowledge. Additional exploratory analyses and implications for children's learning are discussed.

Abbreviation

- PET: prompted explanation task.

In learning about the world, children rely heavily on guidance from others (Harris & Koenig, 2006; Mills, 2013). In some cases, this guidance comes from the use of explanations: a child asks a question, and an adult provides a response. This simple dance between question and explanation plays a powerful role in children's development (Kurkul & Corriveau, 2018; Ronfard et al., 2018). For instance, parents can use explanations in ways that encourage children to stay more engaged with and therefore learn more from museum exhibits (Callanan et al., 2017; Willard et al., 2019). Notably, though, not all explanations are equally effective, and regular experience with certain kinds of explanations may impact learning. For example, children who grow accustomed to hearing explanations that contain mechanisms may be more likely to notice when an explanation does not answer their questions. This, in turn, may help them learn more effectively (Danovitch & Mills, 2018; Kurkul & Corriveau, 2018).

Parental explanations are particularly important for domains in which children struggle to learn something without scaffolding from others. Biology is one such domain. Children are curious about animals and other aspects of biology (e.g., Inagaki & Hatano, 2006; Lobue et al., 2013), but they have many questions that are not easily answered through observation (e.g., understanding the process by which fish breathe underwater; understanding what happens to food after it is eaten). In these contexts, testimony from adults is crucial (Crowley et al., 2001; Geerdts et al., 2016; Inagaki & Hatano, 2006; Jipson & Callanan, 2003). In some cases, parents spontaneously provide explanations to guide their children's knowledge; in other cases, parents respond to specific how and why questions, like “how do fish breathe underwater?” and “why do dogs pant?” (Kurkul & Corriveau, 2018). Regardless of what leads parents to provide explanations, though, it is clear that explanations from adults are critical for building children's knowledge about biology.

In order to explore how the characteristics of parents' explanations influence children's learning, it is first necessary to measure how parents respond to their children's questions. A challenge in this research is that there are several approaches to collecting data, each with its own strengths and weaknesses. Both museum and naturalistic settings can provide fertile opportunities for examining parental explanation within the context of parent–child conversation. These data can be rich in detail, but the topics discussed and the particular questions children ask vary considerably, which makes it difficult to compare explanations across dyads. In addition, although some explanatory characteristics are easy to detect in transcripts of parent–child conversation (e.g., whether a parent is using an analogy), others, such as whether a parent is providing a correct response, can be difficult to assess (see Callanan & Oakes, 1992; Kurkul & Corriveau, 2018). Daily diaries in which parents record their children's questions and their own answers can give researchers a good sense of the topics discussed, but the reflective nature of writing a diary entry may introduce bias into accounts of the day's conversations (Callanan & Oakes, 1992). In laboratory settings, picture books or question cards can be used to prompt parent–child dyads to converse about specific topics (see Luce et al., 2013). This approach can help focus the conversation on one particular topic, but children vary in their level of engagement, and distractions can lead the conversation away from the experimental task.

All these approaches to examining parent–child conversation are enormously valuable tools in understanding development, but they are not always appropriate or viable options. There may be times when researchers want to understand nuances in how parents respond to one specific question or set of questions, yet it can be challenging to develop a task that elicits the same question from all children. Researchers may also want to understand the link between parental explanations and child characteristics but lack access to museum or naturalistic settings to collect relevant data. In these situations, it is helpful to have a method that can be used in other settings, including situations where the parent is available while the child is not.

To address the limitations of existing methods and explore our questions of interest, we developed a new tool for developmental psychologists interested in understanding parental explanations: the Prompted Explanation Task (PET). Our goal was simple: to create a brief, controlled task that could be conducted in laboratory settings and would provide insight into parental explanations. In the PET, a computer prompts parents to answer questions, one at a time, that they imagine are coming from their child; their responses are recorded and later transcribed and coded. All parents respond verbally to the same set of questions while the experimenter is in a separate room. Because every parent answers the same questions, it is straightforward to compare how characteristics of parental explanations vary between parents, and we can more easily measure how those characteristics relate to children's performance on different tasks. In addition, because the child is not present to redirect the conversation, the PET allows us to home in on the process by which parents formulate answers to children's questions. At the same time, by having parents provide spoken rather than written responses, the task more closely mimics the spontaneous nature of responding to children's questions.

Past research has found that computer‐based prompts can encourage college students to provide explanations and that these explanations are tuned to the supposed target of the prompt (i.e., child or adult; see Vlach & Noll, 2016). To our knowledge, though, no research has used such a technique to examine qualities of parental explanations. We believe there is significant value in developing other effective approaches to examining parental explanations in laboratory settings, given the challenges in conducting this kind of research.

For this particular study, we used the PET to examine how parents respond to how and why questions about biological processes in order to understand more about the precise explanatory characteristics that may help build children's knowledge. Our central prediction was that the characteristics of a parent's explanations on the PET would correlate with measures of their child's domain‐specific knowledge (i.e., performance on a biology test) as well as domain‐general verbal knowledge. If parental explanations matter for learning, then we should see signs that parental explanatory qualities relate to indicators of children's broader knowledge and understanding. For instance, if parents regularly respond to their children's questions in a particular domain with mechanistic explanations, children are likely to acquire more mechanistic knowledge in that domain, as well as across domains more generally (Kelemen, 2019). Over time, children whose parents more regularly provide mechanistic explanations may show greater knowledge, on average, than children whose parents rarely do so. Of course, factors such as parental education could influence children's knowledge, so we examine whether parental explanatory characteristics matter above and beyond parental education.
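Asking whether explanatory characteristics matter "above and beyond" parental education is usually operationalized as a hierarchical regression: fit the child outcome on education alone, add the explanation measure, and ask how much additional variance (ΔR²) it explains. The sketch below illustrates that logic with ordinary least squares on simulated data. It is an assumption about how such a comparison can be set up, not the study's analysis code; the variable names, education coding, and effect sizes are all hypothetical.

```python
import numpy as np

def r_squared(predictors: np.ndarray, outcome: np.ndarray) -> float:
    """R^2 of an ordinary least-squares fit of outcome on predictors plus an intercept."""
    X = np.column_stack([np.ones(len(outcome)), predictors])
    beta, *_ = np.linalg.lstsq(X, outcome, rcond=None)
    residuals = outcome - X @ beta
    ss_total = ((outcome - outcome.mean()) ** 2).sum()
    return 1.0 - (residuals @ residuals) / ss_total

def delta_r_squared(education, explanation_score, child_score) -> float:
    """Extra variance in child_score explained by explanation_score once education is in the model."""
    step1 = r_squared(education.reshape(-1, 1), child_score)
    step2 = r_squared(np.column_stack([education, explanation_score]), child_score)
    return step2 - step1

# Simulated data only; names and effect sizes are hypothetical.
rng = np.random.default_rng(0)
education = rng.integers(1, 6, size=148).astype(float)  # hypothetical ordinal education level, 1-5
correct = rng.integers(0, 9, size=148).astype(float)    # hypothetical count of correct PET answers, 0-8
biology = 0.5 * education + 0.8 * correct + rng.normal(0.0, 1.0, size=148)
print(f"Delta R^2 for correct responses beyond education: {delta_r_squared(education, correct, biology):.3f}")
```

Because adding a predictor to an OLS model can never lower R², ΔR² is always non-negative; in practice its significance would be judged with an F test for the R² change.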

We identified three explanatory characteristics of particular interest. The first was the type of explanation parents provide. In some cases, parents may provide mechanistic explanations, focusing on how something happens (e.g., “fish breathe underwater using their gills, which filter oxygen into their body”). In other cases, parents may provide teleological explanations, focusing on purpose or function (e.g., “this body part is for hunting”). The type of explanation parents provide may depend in part on the kind of question being asked: a question about how something happens more clearly signals an interest in causal, mechanistic information, whereas a question about why something happens may be more ambiguous. In self‐report diary studies, parents often report giving mechanistic responses to children's how questions and, to a lesser extent, to why questions (Callanan & Oakes, 1992; Kelemen et al., 2005). Findings are mixed regarding how frequently parents produce teleological explanations in conversations with their children, although past research indicates that, in response to why questions, children (and adults under cognitive load) frequently prefer teleological explanations over mechanistic ones (Kelemen, 1999; Kelemen & Rosset, 2009). Notably, a parent can provide both mechanistic and teleological information within the same response. Using the PET, we examine whether parents tailor the type of explanation to the type of question, producing more mechanistic explanations in response to how questions and more teleological explanations in response to why questions. We also examine whether the frequency with which parents produce mechanistic explanations relates to children's domain‐specific and domain‐general knowledge.

A second explanatory characteristic is the accuracy of the content of the explanations that parents provide. When a child asks a question like “how do fish breathe underwater?”, a parent could provide a response that contains accurate information, like pointing out that fish use their gills. But a parent could also provide inaccurate information instead of, or in addition to, the accurate information, such as stating the wrong part of the fish's body (e.g., “they use their fins”) or providing incorrect information about the process (e.g., stating that gills pull carbon dioxide into the fish's body instead of oxygen). In theory, the quality of the information that parents provide to address their children's questions should have a significant impact on what children take away from their conversations. By designing a method to examine parental explanations that allows us to independently assess the accuracy and inaccuracy of the content provided, we can gain insight into how accuracy relates to other kinds of explanatory characteristics, as well as whether parents who provide more correct explanations have children who show more sophisticated knowledge in that particular domain as well as more generally.

A third explanatory characteristic is a broader category: what are the other features of the explanations parents provide? Past research using naturalistic settings and daily diaries has identified a number of techniques that parents sometimes use that can be beneficial for immediate learning. Parents may make connections to other knowledge or to personal experience to help enrich their children's understanding (Callanan et al., 2017; Crowley et al., 2001; Fender & Crowley, 2007; Valle & Callanan, 2006). They may also use scaffolded questions to help the child reason through the answer on their own (Callanan et al., 2017; Tenenbaum & Leaper, 2003; Vandermaas‐Peeler et al., 2016). It is possible that parents who use these techniques frequently help children obtain a greater long‐term understanding of related domains. Another technique parents may use is to demonstrate how to handle ignorance: Parents may suggest other means of finding out the correct answer (e.g., “Let's Google it!”), either with or without reference to admitting some uncertainty (e.g., “I'm not sure, but we could ask your dad”). Although to our knowledge no studies have examined these kinds of statements in relation to children's science learning, we suspect that references to how to handle ignorance may have implications for how children think and learn about science.

In developing the PET measure, we were interested in examining variability in the characteristics of parental explanations in the domain of biology, particularly with respect to two questions. First, we examined whether parents would provide different types of responses to how or why questions. Second, we explored how the features of a parent's explanations relate to their child's domain‐general and domain‐specific knowledge. Together, the results from this novel method for collecting parent explanation data elucidate how parents answer children's scientific questions and how the nature of their answers relates to children's outcomes.

METHOD

Participants

Participants were 148 parent–child dyads from the Dallas/Fort Worth and Louisville areas. Data were collected in laboratory spaces at the University of Texas at Dallas and the University of Louisville, respectively. Ten additional dyads were excluded from this study: Four were excluded because they were unable to understand and follow the directions or unable to read and respond in English by themselves; three were excluded because complete recordings of the parent participants' responses could not be transcribed; and two were excluded because the adult did not indicate that they were the child's primary caregiver. Children were between 7 and 10 years of age (M = 8.92, SD = 1.12; 70 females, 78 males). According to parent reports, 75% of children were non‐Hispanic and 12% were Hispanic (parents of the other 14% chose not to respond). Approximately 58% of the children were Caucasian American, 9.5% Asian American, 9.5% African American, and 7% belonged to two or more groups; parents of the other 14% chose not to respond.

Parents were between 22 and 60 years of age (M = 37.96, SD = 6.85; 125 females, 23 males). Parents ranged in education from having completed high school to having completed a graduate degree, with 67% reporting completing college or beyond (see Online Supplementary Materials for additional information). Combined annual household income ranged from “Less than $15,000” to “More than $175,000,” though both income and education were highly skewed toward the upper ends of the scales.

Procedure

Children completed a number of measures as a part of a battery for a larger project supported by the National Science Foundation (Danovitch et al., 2021). Of particular interest for this study, children completed a biological knowledge measure (N = 148) and the Kaufman Brief Intelligence Test—Second Edition (KBIT‐2; N = 71). The biological knowledge measure (see Online Supplementary Materials) consisted of 15 items drawn from the life science sections of the 2007 and 2011 editions of Trends in International Mathematics and Science Study (TIMSS), a broad measure of fourth‐grade students' understanding of math and science. Questions were chosen to encompass a range of topics related to animal life, including survival, lifecycles, and adaptations. They also encompassed a range of difficulties based on the reported percentages of accurate responses by American 4th graders. Thirteen questions were multiple choice and two questions involved brief open‐ended responses (i.e., identifying living and non‐living objects in a picture). Scores ranged from 0 to 15. For the KBIT‐2, children completed the crystallized verbal scale, yielding a standardized score that reflects both vocabulary and general semantic knowledge.

Each parent was led into a private testing space where they sat at a table with a laptop computer. Parents were told that they would see “a series of eight questions, with one question shown on the screen at a time.” Parents were then presented with a training question, “Your child asks, ‘Can I have cookies for dinner every day this week?’,” to which they responded. The experimenter reminded them to read each question out loud, to think about how they would really, truly respond to their child asking that question, and then to say their response out loud. The recorder was then activated, and the experimenter left the room. Parents pressed the spacebar to advance through the slideshow at their own pace as they were presented with four how and four why questions about animal characteristics and behaviors. The questions posed to participants were as follows:

How do fish breathe underwater?

Why do dogs pant?

How do bats find their food in the dark?

Why do some birds fly south for the winter?

How do tadpoles turn into frogs?

Why do polar bears have white fur?

How do bees make honey?

Why are some birds' eggs white and others are different colors?

Most parent participants completed the PET in <10 min. Afterward, they completed other measures, including a demographic questionnaire about themselves and their child.

Coding

General

Before coding began, responses to the PET were transcribed and measured for length of utterance (i.e., the number of words used to answer the question, excluding the initial repetition of the question, partially spoken words, and verbal pauses). We also coded for whether the parents attempted an answer, which we defined as saying something directed at answering the question beyond an indication of ignorance and/or a reference to searching for additional information.
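The length rule above (count words, but skip an initial reading of the question, partially spoken words, and verbal pauses) can be made concrete with a small function. This is a sketch under stated assumptions, not the study's transcription procedure: the list of pause tokens and the convention that partial words end in a hyphen (e.g., "pol-") are hypothetical, and em dashes, which appear in the quoted transcripts at self-interruptions, are simply treated as word breaks.

```python
# Hypothetical set of verbal-pause tokens; the study does not list the ones it excluded.
PAUSES = {"um", "umm", "uh", "uhh", "er", "erm", "hmm"}
# Punctuation stripped from token edges before counting (straight and curly double quotes).
EDGE_PUNCT = ".,?!;:\"()\u201c\u201d"

def utterance_length(response: str, question: str) -> int:
    """Count the words in a transcribed response, excluding an initial reading of
    the question, verbal pauses, and partially spoken words. Assumes (hypothetically)
    that partial words are transcribed with a trailing hyphen, e.g. "pol-"."""
    text = response.strip()
    # Drop the question if the parent read it aloud first (case-insensitive match).
    if text.lower().startswith(question.lower()):
        text = text[len(question):]
    count = 0
    # Treat em dashes (self-interruptions in the transcripts) as word breaks.
    for token in text.replace("\u2014", " ").split():
        word = token.strip(EDGE_PUNCT).lower()
        if not word or word.endswith("-") or word in PAUSES:
            continue
        count += 1
    return count

q = "Why do polar bears have white fur?"
print(utterance_length(q + " Um, because that's what God gave them.", q))  # 6
```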

For all of the codes besides length, we coded for the presence or absence of each characteristic within each response.
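Because every code except length is recorded as present or absent within a single response, a parent's score on a code is the number of items on which it appeared: 0–4 for the how questions, 0–4 for the why questions, and 0–8 in total. The mechanistic and teleological codes are recorded as two separate flags, so the four explanation types (mechanistic, teleological, neither, both) follow directly from them. The sketch below shows that bookkeeping; the record layout and field names are hypothetical illustrations, not the study's data format.

```python
from dataclasses import dataclass

@dataclass
class CodedResponse:
    """Hypothetical record of one coded PET response (field names are illustrative)."""
    question_type: str  # "how" or "why"
    mechanistic: bool
    teleological: bool
    correct: bool

def explanation_type(r: CodedResponse) -> str:
    """Map the two presence/absence flags onto the four explanation types."""
    if r.mechanistic and r.teleological:
        return "both"
    if r.mechanistic:
        return "mechanistic"
    if r.teleological:
        return "teleological"
    return "neither"

def tally(responses: list[CodedResponse], code: str) -> dict[str, int]:
    """Count the items on which `code` was present, split by question type."""
    counts = {"how": 0, "why": 0}
    for r in responses:
        if getattr(r, code):
            counts[r.question_type] += 1
    counts["total"] = counts["how"] + counts["why"]
    return counts

# One hypothetical parent's eight coded responses (four how, four why).
parent = [
    CodedResponse("how", True, False, True),
    CodedResponse("how", True, False, False),
    CodedResponse("how", True, True, True),
    CodedResponse("how", False, False, False),
    CodedResponse("why", False, True, True),
    CodedResponse("why", True, True, False),
    CodedResponse("why", False, True, True),
    CodedResponse("why", False, False, False),
]
print(tally(parent, "mechanistic"))  # {'how': 3, 'why': 1, 'total': 4}
```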

Explanation types

We classified explanations as mechanistic, teleological, neither, or both. A “mechanistic” explanation specified the underlying process of a causal event, and an explanation was considered mechanistic irrespective of scientific accuracy. For example, in response to “How do bats find their food in the dark?”, two parents answered:

They have a special vision, uh that is made to, for them to see in the dark.

Well, bats have echolocation and so they make these small clicking noises that are too high pitched really for the human ear to hear and those high‐pitched clicking noises bounce off of objects which they're able to hear back and know that there's an object there, so they're able to move through space by making those clicking noises and getting that feedback and knowing when not to run into things.

Both of these examples provide mechanisms, even though the first one is factually inaccurate. Notably, mechanistic explanations can contain both accurate and inaccurate information, and they can include supernatural explanations alongside naturalistic ones. The key criterion for classifying an explanation as mechanistic was whether it attempted to describe the process by which a phenomenon occurred (e.g., a feature or process that helps bats find food in the dark). An explanation could also be considered mechanistic if it used a scientific term for a specific process, even if the process itself was not detailed. For instance, a response to how a tadpole changes into a frog could state “through metamorphosis” and still be considered mechanistic, even though the specific characteristics of metamorphosis were not described. The reason for this is a practical one: A scientific label for a process does provide some mechanistic information, particularly if a child has encountered that label in other contexts.

Teleological explanations provided a function or purpose for the behavior or phenomenon. For example, for the question “Why do some birds fly south for the winter?”, teleological responses included:

God gave them instincts to—to know when it's time to fly south and then again when it's time to fly north. So they fly south during the winter time so that they'll be able to survive and find food during those cold months.

Because they're looking for their family.

The first explanation would be coded as containing both mechanistic and teleological characteristics, because a process—instincts—as well as goals—to survive and find food—are provided. The second explanation is strictly teleological.

Content accuracy

Content accuracy included codes for the presence of any accurate information, the presence of any inaccurate information, and an overall correct response. Science reference materials were used as needed to check the accuracy or inaccuracy of information provided in each response. Based on these references, we developed a coding scheme for the minimum amount of information that would count as a correct answer for each item. After reviewing a subset of the data, we looked up some additional responses, determined that they were correct, and expanded our coding manual on what would count as correct for some items. The coding scheme is described in the Online Supplementary Materials.

We coded for whether each response contained a correct answer. For instance, the following response, given to the question of why dogs pant, was considered a correct answer:

Panting is the way that they let off their heat so they have heat energy in them and they need to get rid of it and they don't sweat like we do but it—that's kind of their sweating is when they're panting and the liquid comes off their tongue.

This answer was considered correct, given that it addressed that panting serves the purpose of helping a dog cool down or release heat from its body. Importantly, the criteria for coding an overall correct response were more stringent than the criteria for coding for any accurate information. It was possible to provide accurate information without providing an answer that would be coded as a correct response, as exemplified by the following parent's response:

They get what they need from the flower, go back to the hive, and manufacture the honey.

This parent provides topic‐relevant accurate information by stating that bees collect something from flowers that is used to make honey. However, the response does not specify what is collected from the flowers, so it was not coded as a correct response.
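The relation between the accuracy codes can be summarized as nested criteria: "any accurate information" needs only one accurate element, whereas a "correct response" needs every element of an item's minimum answer. The toy sketch below makes that logic explicit. It takes as input the elements a human coder has already identified, so it does not replace human judgment; the rubric entries and element labels are hypothetical (the study's actual criteria are in its Online Supplementary Materials), and treating "correct" as independent of any inaccurate content is also an assumption.

```python
# Hypothetical minimum-information rubric: elements a response must contain to be coded "correct".
MINIMUM_FOR_CORRECT = {
    "how_bees_make_honey": {"collect nectar from flowers", "turn nectar into honey"},
    "why_dogs_pant": {"release body heat"},
}

def code_accuracy(item: str, accurate_elements: set, inaccurate_elements: set) -> dict:
    """Derive the three accuracy codes from elements a human coder identified."""
    accurate = set(accurate_elements)
    return {
        "any_accurate": bool(accurate),
        "any_inaccurate": bool(inaccurate_elements),
        # Assumption: correct if every required element is present, even alongside errors.
        "correct": MINIMUM_FOR_CORRECT[item] <= accurate,
    }

# The bee response quoted above: accurate but incomplete, since it never says what is collected.
print(code_accuracy("how_bees_make_honey",
                    {"collect something from flowers", "make honey in the hive"}, set()))
```

Because every rubric entry is non-empty, a response coded correct is necessarily also coded as containing accurate information, which mirrors the stricter standard for correct responses described above.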

Sometimes, parents provided inaccurate vocabulary or mechanisms. For instance, in response to how fish breathe underwater, one parent said:

My answer would be that they have scales that allow them to breathe.

This would be coded as providing inaccurate information, given that gills, not scales, help fish breathe.

Characteristics

In addition to the codes described earlier, we also coded for six other characteristics of parental responses. The code “supernatural responses” denoted an explanation that attributed a mechanism or cause to a source that cannot be studied scientifically. “Dogmatic language” encompassed responses that emphasized the state of affairs as unavoidable, unalterable, or “just the way things are.”

The following responses demonstrate that supernatural language could motivate either dogmatic or non‐dogmatic responses, which captures some of the nuance present when a religious approach was taken to supplying explanations:

Why do polar bears have white fur? Because that's what God gave them.

Why do some birds fly south in the winter? Well birds are created so—such that umm they know what they need to do and that is what is so amazing about creation and that birds know that it is time to fly south when winter is coming because in the south, they can find warmer climates and so they are uhh flying south umm to get away from the cold in the winter.

Just as supernatural responses did not subsume dogmatic ones, dogmatic responses were sometimes given without using supernatural language, as in the following quote:

Why are some birds' eggs white and others are different colors? Probably the same reason why some people are different colors. Just like you and me are different colors than dad. That's just how it is.

Bridges to other knowledge or experiences were attempts by the parent to make an explanation understandable in terms of the child's background or common knowledge. In the preceding explanation, the parent compares the variety in bird egg colors to the variety of skin tones in their family, thereby creating a connection uniquely available to the child as a function of their particular life experiences. In the following excerpt, the parent uses a bridge to other knowledge (i.e., that all people have different features), as well as a connection to the child's experiences (i.e., purchasing both white and brown eggs at the store):

I may have to google it to find out why the colors are different because even in our, ya know, the eggs we buy from the store, some are white and some are brown just like when we go and we meet friends at school and friends outside. We all look different, we have different features, and different colors. It's the same thing with birds.

The code “information search” was used when a parent referred to the possibility of gathering information from another source, such as the Internet, a book, and/or another person. For example, here the parent suggests consulting both a text‐based source and a person to supplement their explanation:

Do you remember we went to that farm um that showed us how bees make honey and um how they build the hives and um pollinate and uh it was really neat to listen to, but we probably um should read some more about it um and then also your friend ____ and her family they uh raise bees um so we could go to their house and ask too.

A response was coded as containing a “knowledge limitation” if the parent participant gave any indication that they were not completely confident in their answer. This code was used when the limitation was minor, such as an explanation prefaced by “I think,” as well as when the parent's answer consisted exclusively of the response, “I don't know.”

Finally, we noted whether the parent provided any “scaffolding questions,” which we defined as guiding questions meant to encourage deliberation or discussion of a specific possibility. An excellent example of scaffolding is this parent participant's attempt to have the child consider a hypothetical counterfactual scenario with respect to the adaptive trait in question:

Why do you think they have white fur? If they were living—uh, where do they live? If they were living somewhere else, would they have the same color fur?

Reliability

In order to establish intercoder reliability of the 148 transcripts used in this study, two raters first independently coded 14 transcripts (i.e., 10% of the total sample). The raters were blind to all hypotheses.

Each explanation was coded for explanation type, accuracy, and characteristics, as described earlier. The raters discussed their codes for these 14 transcripts, made some final updates to the coding scheme, and resolved disagreements through discussion. Next, the two raters coded a different set of 31 transcripts (21% of the total sample), and interrater reliability was calculated based on this second set. For each code (e.g., information search, mechanistic, teleological, correct answer), we calculated Cohen's kappa. Kappa values generally ranged from .56 (for whether any inaccurate information was provided) to .94 (for whether there were references to information search). Two primary codes for parental explanation characteristics were used in the regression models: mechanistic explanations and correct responses. Kappa values for these codes were substantial (.79 for mechanistic explanations, .73 for correct responses).
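Cohen's kappa corrects raw percent agreement for the agreement two raters would reach by chance given how often each of them used each category: κ = (p_observed − p_chance) / (1 − p_chance). The sketch below computes it for one code from two raters' presence/absence judgments. It assumes the unit of analysis is the individual response, which the text implies but does not state; for real analyses, `sklearn.metrics.cohen_kappa_score` computes the same statistic.

```python
import math
from collections import Counter

def cohens_kappa(rater_a, rater_b) -> float:
    """Cohen's kappa for two raters' codes on the same items:
    (observed agreement - chance agreement) / (1 - chance agreement)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must code the same, non-empty set of items")
    n = len(rater_a)
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the two raters' marginal proportions, summed over categories.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_chance = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n ** 2
    if p_chance == 1:
        return math.nan  # both raters used one identical category throughout: kappa undefined
    return (p_observed - p_chance) / (1 - p_chance)

# Hypothetical presence (1) / absence (0) codes for one characteristic on ten responses.
a = [1, 1, 0, 0, 1, 0, 1, 1, 0, 1]
b = [1, 0, 0, 0, 1, 0, 1, 1, 1, 1]
print(round(cohens_kappa(a, b), 3))  # 0.583
```

Here the raters agree on 8 of 10 responses (80%), but because both marked the code present 60% of the time, chance agreement is already 52%, so kappa is only about .58. This is why kappa is a stricter standard than percent agreement.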

As an additional check of the reliability of the data, a third coder was trained in the coding scheme and coded a separate set of 15 transcripts (i.e., 10% of the sample) that were not part of the initial 45 transcripts. Kappas were as high as or higher than those reported earlier (e.g., .88 for mechanistic explanations, .82 for correct responses). All kappas are reported in the Online Supplementary Materials.

RESULTS

Overview

Because the PET is a new approach to examining parental explanations, our analyses focus on first checking for possible age and gender differences as well as reviewing descriptive statistics for different explanatory characteristics. Next, we address the questions raised in the Introduction regarding whether parental responses vary between how and why questions, and whether characteristics of parental explanations relate to separate measures of children's knowledge. Finally, to help capture some of the variability in how parents responded to the questions, we describe some exploratory analyses that may help inform future research.

Preliminary analyses and descriptive statistics

Preliminary analyses showed no significant main or interaction effects of child age, child gender, or parent gender on explanatory characteristics, so subsequent analyses collapse across these factors. Descriptive statistics are provided in Table 1.

TABLE 1.

Descriptive statistics for parental explanations in response to why and how questions as well as overall

| Category | Explanatory code | How: M (SD) | How: Range | Why: M (SD) | Why: Range | Paired t | Total: M (SD) | Total: Range |
|---|---|---|---|---|---|---|---|---|
| General | Length | 41.88 (32.01) | 3.25–183.50 | 41.67 (32.30) | 3.50–229.75 | 0.21 | 41.78 (31.58) | 3.38–205.13 |
| | Attempted | 3.47 (0.79) | 1–4 | 3.47 (0.80) | 0–4 | 0.00 | 6.93 (1.33) | 2–8 |
| Type | Mechanistic | 3.20 (0.90) | 1–4 | 0.53 (0.79) | 0–4 | 30.48** | 3.74 (1.32) | 1–8 |
| | Teleological | 0.10 (0.43) | 0–4 | 2.58 (0.89) | 0–4 | −30.81** | 2.68 (1.00) | 0–8 |
| Accuracy | Any accurate | 2.78 (1.10) | 0–4 | 3.09 (0.98) | 0–4 | −3.40** | 5.87 (1.75) | 1–8 |
| | Any inaccurate | 1.51 (1.03) | 0–4 | 0.85 (0.83) | 0–3 | 6.99** | 2.37 (1.49) | 0–7 |
| | Correct answer | 1.71 (1.21) | 0–4 | 2.08 (1.11) | 0–4 | −3.95** | 3.79 (2.01) | 0–8 |
| Characteristics | Supernatural | 0.11 (0.44) | 0–3 | 0.11 (0.44) | 0–3 | 0.28 | 0.22 (0.83) | 0–6 |
| | Dogmatic | 0.16 (0.45) | 0–2 | 0.13 (0.36) | 0–2 | 0.78 | 0.29 (0.62) | 0–3 |
| | Bridging | 1.11 (1.16) | 0–4 | 0.89 (0.98) | 0–4 | 2.48* | 2.01 (1.84) | 0–8 |
| | Info search | 1.12 (1.22) | 0–4 | 0.84 (0.93) | 0–4 | 3.34** | 1.97 (1.93) | 0–8 |
| | Limits | 1.58 (1.24) | 0–4 | 1.78 (1.11) | 0–4 | −2.07* | 3.37 (2.04) | 0–8 |
| | Question | 0.07 (0.37) | 0–3 | 0.21 (0.69) | 0–4 | −3.68** | 0.28 (1.01) | 0–7 |

Note: The paired t‐test compares the mean frequency of each explanatory code in response to how questions with its mean frequency in response to why questions. Length is the mean number of words per response; all other codes are counts of the items on which the code was present (0–4 for each question type, 0–8 in total).

**p < .01.

*p < .05.
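The paired t‐test in Table 1 treats each parent as their own comparison: for every parent, the how count of a code is differenced against the why count, and the mean difference is divided by its standard error. The sketch below computes that statistic from scratch on made-up counts (the data are hypothetical). With the differences taken as how minus why, a positive t means a code appeared more often for how questions, which matches the signs in Table 1 (e.g., positive for mechanistic, negative for teleological). `scipy.stats.ttest_rel` returns the same statistic along with a p value.

```python
import math
from statistics import mean, stdev

def paired_t(how_counts, why_counts) -> float:
    """Paired-samples t statistic for how-minus-why differences (df = n - 1)."""
    if len(how_counts) != len(why_counts) or len(how_counts) < 2:
        raise ValueError("need two equal-length lists covering at least two parents")
    diffs = [h - w for h, w in zip(how_counts, why_counts)]
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("t is undefined when every difference is identical")
    return mean(diffs) / (sd / math.sqrt(len(diffs)))

# Hypothetical per-parent mechanistic counts (0-4) for how and why items.
how = [3, 4, 3, 2]
why = [1, 0, 1, 1]
print(round(paired_t(how, why), 2))  # 3.58
```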

Before describing more targeted analyses, we want to highlight a few findings regarding how parents provided explanations in this task. Recall that our explanatory coding scheme tracks whether or not a certain explanatory type or characteristic is present in each response. This scheme allows us to be sensitive to the fact that explanations can vary in complexity, and parents sometimes provide a number of different characteristics within the same response for various reasons, like when trying to explain something more clearly or when dealing with uncertainty.

First, responses to each prompted question contained an average of approximately 42 words, but there was enormous variability (per‐parent means ranged from 3.38 to 205.13 words; see Table 1). For some items, parents responded simply with “I don't know,” whereas other parents elaborated on the limits of their knowledge and pledged to search for additional information. However, for the vast majority of items (M = 6.93 of 8), parents attempted to answer the question with their own explanation. A few parents were extremely verbose, which positively skewed the distribution of explanation lengths. To address this issue, we performed a loglinear transformation on length; the remaining analyses use this transformed length measure.
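To make the skew correction concrete, here is a minimal Python sketch. It assumes a natural‐log transform of each parent's mean response length (the text says only "loglinear transformation," so the exact form is an assumption), and the ten lengths are hypothetical values, not study data, chosen to include one very verbose parent.

```python
import math
import statistics

def skewness(values):
    """Population (Fisher-Pearson) skewness: m3 / m2**1.5."""
    mean = statistics.fmean(values)
    m2 = statistics.fmean((v - mean) ** 2 for v in values)
    m3 = statistics.fmean((v - mean) ** 3 for v in values)
    return m3 / m2 ** 1.5

# Hypothetical mean response lengths (words) for ten parents; one is very verbose.
lengths = [3.4, 10, 20, 30, 35, 40, 45, 50, 60, 205]

# Assumption: natural log. Any other base only rescales the values,
# which leaves skewness unchanged.
log_lengths = [math.log(x) for x in lengths]

print(f"raw skewness: {skewness(lengths):.2f}")
print(f"log skewness: {skewness(log_lengths):.2f}")
```

Because skewness is scale‐invariant, the choice of log base does not matter here; what matters is that the log compresses the long right tail created by the few verbose parents.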

Second, for content accuracy, parents frequently provided some accurate information (M = 5.87 of 8 items). Sometimes this accurate information was in the context of providing a correct response (3.79 of 8 items), but other times, it appeared that parents were attempting to provide whatever accurate information they were able to give, even if their answer was not entirely complete. Parents also sometimes provided inaccurate information (2.37 of 8 items). Note that throughout the rest of the paper, we will use the term “correct response” to refer to when parents provided an answer that was coded as correct, and “accurate information” to refer to when a parent provided an accurate piece of information in response to an item, even if it was not the correct answer to the question.

Third, there was considerable variability in how frequently different explanatory characteristics were used. Bridging language, references to searching for information, and references to limits to knowledge were fairly common (each appeared, on average, in 1.97 or more of the 8 responses). In contrast, other explanatory characteristics like supernatural language, dogmatic language, and scaffolding language were very rare (each appeared, on average, in 0.29 or fewer of the 8 responses). Because they were so infrequent, these rare codes are not analyzed or discussed further.

Are there differences in parent responses based on question type?

This set of confirmatory analyses examines differences in how parents responded to how and why questions. The descriptive statistics and results for paired t‐tests are reported in Table 1. Note that there were no differences in the length of responses as a function of question type.
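Each paired t‐test in Table 1 compares, within parents, the count of a given code across the four how items with the count across the four why items. A minimal sketch of that statistic follows; the five parents' counts are hypothetical, and with the full sample of 148 parents the test would have 147 degrees of freedom.

```python
import math
import statistics

def paired_t(first, second):
    """Paired t statistic: mean within-person difference divided by its standard error."""
    if len(first) != len(second):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(first, second)]
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))  # sample SD (n - 1 denominator)
    return statistics.fmean(diffs) / se, len(diffs) - 1   # (t, degrees of freedom)

# Hypothetical counts (0-4) of mechanistic explanations for five parents.
how_counts = [3, 4, 3, 2, 4]
why_counts = [1, 0, 1, 1, 0]

t, df = paired_t(how_counts, why_counts)
print(f"t({df}) = {t:.2f}")  # t(4) = 4.33
```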

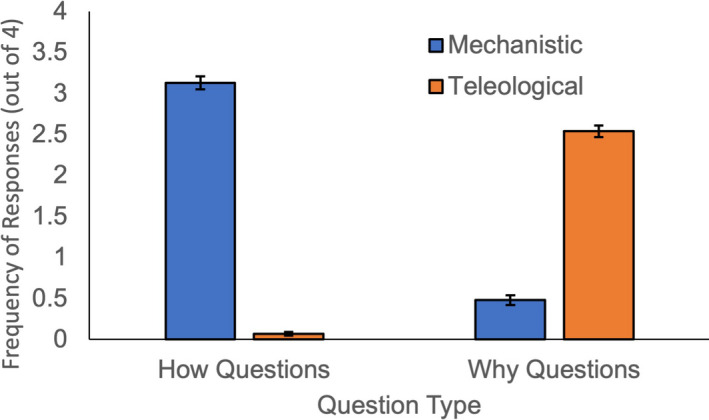

We anticipated that parents would provide mechanistic responses more frequently in response to how questions and teleological responses more frequently in response to why questions. To investigate this, we conducted a repeated measures ANOVA on the number of times each type of explanation (mechanistic, teleological) was provided for each item type (how, why). A main effect of explanation type indicated that, overall, parents provided mechanistic explanations more frequently than teleological ones, F(1, 147) = 91.71, p < .001, partial eta squared = .38. Importantly, though, there was an interaction between explanation type and item type, with mechanistic explanations being offered more frequently in response to how questions and teleological explanations being offered more frequently in response to why questions, F(1, 147) = 1692.61, p < .001, partial eta squared = .92. See Figure 1. This finding offers strong support for the idea that parents are sensitive to the type of question being asked, in the direction we hypothesized based on past research.

FIGURE 1.

Frequency with which mechanistic and teleological explanations were observed in responses to How and Why questions
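The partial eta squared values reported for the repeated measures ANOVA can be recovered from each F statistic and its degrees of freedom using the standard identity partial η² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies it to the two reported effects as a consistency check; it is an illustration, not the authors' analysis code.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# F values reported for the explanation-type ANOVA, each with df = (1, 147).
main_effect = partial_eta_squared(91.71, 1, 147)
interaction = partial_eta_squared(1692.61, 1, 147)
print(f"{main_effect:.2f}, {interaction:.2f}")  # 0.38, 0.92
```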

Next, we examined differences in content accuracy in response to how and why questions (see Table 1). We present these data with some caution since the how and why questions were not necessarily designed to be equally difficult. Nonetheless, our exploratory analyses found that parents provided accurate information and correct responses more frequently in response to why questions, and inaccurate information more frequently in response to how questions.

We also saw differences in the characteristics of responses to how and why questions. Parents made bridges to other knowledge and experiences and referenced information searches more often for how questions than why questions. In contrast, although parents referenced knowledge limits more often for why than how questions, this did not translate into more frequent references to information searches or bridges to other knowledge and experiences. Together, these data support that parents tune their explanations to the kind of question being asked.

How do parent responses relate to child domain‐specific biological and domain‐general verbal knowledge?

The PET was conducted with parents while children were completing a battery of measures for other studies related to children's science learning. Because of this, we can examine the relation between how parents responded in the PET and how their children performed on measures of domain‐general verbal intelligence and domain‐specific biological knowledge.

More specifically, as noted in the introduction, we had identified three broader explanatory characteristics of interest: type (mechanistic, teleological), accuracy (any accurate information, any inaccurate information, correct response), and other features (dogmatic language, bridges, information search, and reference to knowledge limits). Each explanation was coded for whether or not each characteristic was provided, making it easier to capture the variability in explanations. See Table 2 for correlations. Inspection of this table reveals no statistically significant correlations between explanatory features such as bridges to other knowledge or expressions of knowledge limitations and children's verbal intelligence or biological knowledge. We will return to this later in the results.

TABLE 2.

Correlations between explanatory characteristics, Verbal IQ (from the KBIT‐2), biological knowledge, child age, and parental education

| | 1. | 2. | 3. | 4. | 5. | 6. | 7. | 8. | 9. | 10. | 11. | 12. | 13. | 14. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. Verbal IQ | — | | | | | | | | | | | | | |
| 2. Bio knowledge | .59** | — | | | | | | | | | | | | |
| 3. Child age | .00 | .35** | — | | | | | | | | | | | |
| 4. Parental education | .17 | .15+ | .01 | — | | | | | | | | | | |
| 5. Length transformed | .24* | .20* | −.18* | .30** | — | | | | | | | | | |
| 6. Attempted | .25* | .13 | .02 | −.08 | .29** | — | | | | | | | | |
| 7. Mechanistic | .30* | .20* | −.15 | .13 | .50** | .65** | — | | | | | | | |
| 8. Teleological | .22+ | .17* | .02 | .05 | .34** | .43** | .46** | — | | | | | | |
| 9. Any accurate | .28* | .21 | −.06 | .03 | .56** | .71** | .65** | .52** | — | | | | | |
| 10. Any inaccurate | −.17 | .00 | .16 | −.01 | −.13 | .29** | −.03 | −.03 | −.20** | — | | | | |
| 11. Correct answer | .33** | .26** | −.10 | .05 | .53** | .45** | .57** | .2** | .73** | −.44** | — | | | |
| 12. Dogmatic | .04 | −.05 | −.04 | −.21** | −.02 | .25** | .04 | −.10 | .01 | .21** | −.15+ | — | | |
| 13. Bridging | .06 | .03 | −.24** | .13 | .59** | .37** | .40** | .20** | .42** | .09 | .31** | .13 | — | |
| 14. Info search | .02 | .10 | −.11 | .12 | .27** | −.43** | −.25** | −.12 | −.12 | −.35** | .05 | −.22** | −.09 | — |
| 15. Limits | −.04 | .00 | .07 | .10 | .20** | −.19* | −.12 | .06 | −.06 | −.12 | .07 | −.17* | −.19* | .51** |

Sample sizes for all explanation‐focused cells and age are 148. The sample sizes for cells related to the KBIT‐2 are 71, and to parental education are 144.

**p < .01;

*p < .05;

+p < .10.
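Because the KBIT‐2 cells rest on 71 children while the explanation‐only cells rest on 148 parents, the same correlation carries different evidential weight across Table 2. A Pearson r is tested against zero with t = r√(n − 2) / √(1 − r²) on n − 2 degrees of freedom; the sketch below illustrates this with example inputs and is not a reanalysis of the table.

```python
import math

def r_to_t(r, n):
    """t statistic for testing a Pearson correlation r against zero (df = n - 2)."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)

# The same r = .20 yields a smaller t with n = 71 (KBIT-2 cells)
# than with n = 148 (explanation-only cells).
for n in (71, 148):
    print(n, round(r_to_t(0.20, n), 2))
```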

Because several explanatory characteristics correlated with children's verbal intelligence scores, we planned to focus our analyses on the two factors most clearly motivated by past research (mechanism and accuracy) as well as two additional factors that might contribute to child outcomes (explanation length and parental education). With respect to the frequency of mechanistic responses provided by parents, past research suggests that mechanistic explanation plays an important role in learning (Kelemen, 2019; see also Lockhart et al., 2019). We predicted that parents who provide mechanistic explanations more frequently might have children who know more in general and who specifically have a better understanding of biological phenomena. Regarding the frequency of correct responses, prior research has found that providing an overall correct response to a child's question may be as important for children's learning as providing a mechanistic response (Eberbach & Crowley, 2017). Although these two dimensions are related, they are critically distinct: A parent can provide a mechanistic explanation that is incorrect or not suited to the question at hand. With regard to explanation length, we investigated whether parents who simply talk more and provide longer explanations expose children to information in a way that helps them build their semantic knowledge. Finally, we examined whether the characteristics of parental explanations would matter above and beyond the effects of parental education. (See Online Supplementary Materials for analyses focused on parental education.)

To examine how the number of mechanistic explanations, the number of overall correct responses, response length, and parental education related to children's verbal intelligence scores, we conducted a backward stepwise regression. For all regressions, preliminary analyses were conducted to ensure no violations of the assumptions of normality, linearity, and multicollinearity. All four factors were included in the initial model, and factors were then removed one at a time if they were not significant predictors (see Kominsky et al., 2016 for discussion of this approach). The regression first removed the length of parent responses, β = −.02, p = .88, then the number of mechanistic explanations, β = .12, p = .43, and then parental education, β = .14, p = .23. The number of overall correct responses was the only remaining, and only significant, predictor, β = .33, p = .005.
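The backward stepwise logic can be sketched as a loop that refits the model, drops the predictor with the largest p‐value, and stops once every remaining predictor is significant. In this minimal sketch the model‐fitting step is passed in as a function, the `alpha = 0.05` removal threshold is an assumption (the text does not state the exact criterion), and `stub_fit` is a hypothetical stand‐in that simply returns the p‐values reported above rather than refitting a regression.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# A fitting function receives the current predictor names and returns a
# p-value for each one (e.g., from refitting an OLS model on those predictors).
FitFn = Callable[[Sequence[str]], Dict[str, float]]

def backward_eliminate(predictors: Sequence[str], fit: FitFn,
                       alpha: float = 0.05) -> Tuple[List[str], List[str]]:
    """Repeatedly drop the predictor with the largest p-value until all are < alpha."""
    remaining = list(predictors)
    removed: List[str] = []
    while remaining:
        pvals = fit(remaining)  # refit on the current predictor set
        worst = max(remaining, key=lambda name: pvals[name])
        if pvals[worst] < alpha:
            break  # every remaining predictor is significant
        remaining.remove(worst)
        removed.append(worst)
    return remaining, removed

# Hypothetical stub: fixed p-values echoing those reported for verbal IQ.
# A real refit would produce different p-values at every step.
REPORTED_P = {"length": 0.88, "mechanistic": 0.43, "education": 0.23, "correct": 0.005}

def stub_fit(current: Sequence[str]) -> Dict[str, float]:
    return {name: REPORTED_P[name] for name in current}

kept, dropped = backward_eliminate(["length", "mechanistic", "education", "correct"], stub_fit)
print(kept, dropped)  # ['correct'] ['length', 'mechanistic', 'education']
```

Injecting the fit function keeps the elimination logic separate from any particular regression library; in practice it would wrap an ordinary least squares fit on the current predictor set.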

Next, we examined performance on the biological knowledge measure in relation to the four variables mentioned earlier. We anticipated that children's age would also correlate with performance, given that the measure is not age standardized. Mirroring the approach taken above, we conducted a backward stepwise regression, with factors removed if they were not significant predictors. The regression identified the number of correct responses and age as significant predictors, with parental education showing a marginal trend. The analysis first removed the number of mechanistic explanations, β = .07, p = .46, and then the length of the explanations, β = .115, p = .22. The number of correct responses and age were the remaining significant predictors, β = .30, p < .001, and β = .38, p < .001, respectively. Parental education also remained in the model, although its coefficient did not reach significance, β = .13, p = .08.

In sum, for both the domain‐general and the domain‐specific tests of children's knowledge, the frequency of correct parent explanations in the PET predicted children's performance. Notably, the number of overall correct responses mattered above and beyond the effects of parental education and explanation length, suggesting that parents who provide correct explanations for biological phenomena have children who know more in general as well as specifically about biology.

Exploratory analyses

The previous analyses support that the correctness of parental explanations relates most strongly to children's verbal intelligence and biological knowledge. This raised two additional questions. First, how do we make sense of the lack of relation between other explanatory features of parental explanations and child outcomes? Second, does the number of correct responses matter more for some kinds of questions than others?

To explore the first question, we refer to Table 2 to describe patterns of explanatory features that parents provided somewhat frequently (i.e., bridges to other knowledge, information search, and reference to knowledge limits). Although none of these explanatory features correlated directly with children's verbal intelligence or biological knowledge, they did correlate with other aspects of parental explanations. For instance, the more frequently parents provided bridges to other knowledge and experiences, the more likely they were to have provided responses that contained mechanistic explanations, teleological explanations, some accurate content, and the correct response, and the less likely they were to reference knowledge limits. This pattern suggests that bridges are more likely to be used in the context of a correct response than an incorrect one. Interestingly, how frequently parents provided bridges to other knowledge negatively correlated with children's age. This finding is consistent with research finding that parents tend to provide fewer scaffolding questions to older children than to preschoolers (Haden et al., 2014).

Another explanatory feature we were interested in is the fact that parents sometimes referenced searching for information if they did not know the answer. Demonstrating to children the importance of seeking out additional information when they do not know an answer might make an important contribution to children's learning. Overall, we found that the more frequently parents referred to looking things up, the more likely they were to acknowledge the limits to their knowledge, the less likely they were to provide a mechanistic explanation, and the less likely they were to provide inaccurate information. Interestingly, although 101 parents (68%) referenced searching for additional information at least once, 47 of the 148 parents (32%) never did so. Compared to parents who referenced searching for information at least once, those parents who never referenced searching for information were more likely to provide inaccurate information and less likely to provide a correct response, ts > 3.7, ps < .01. This finding suggests that parents may sometimes reference searching for additional information as a substitute for providing inaccurate information. More broadly, these findings also support that parents who provide inaccurate information to their children may not provide tools for their child to be able to verify their answers—a practice that may be problematic for long‐term learning.

To address the second question—does the number of correct parental explanations matter more for some kinds of questions than others—we explored whether there were meaningful differences in how many parents provided correct responses to certain questions. More specifically, we were interested in whether parents' answers to more or less difficult questions might differentially relate to the child measures.

We first reviewed performance across the eight questions. As shown in Table 3, the proportion of parents who provided a correct response to each question ranged from 0.15 to 0.80; Cronbach's alpha for the eight items was .70. Clearly, some questions were much easier than others.
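Cronbach's alpha summarizes how consistently the eight correct/incorrect items hang together: α = k/(k − 1) × (1 − Σ item variances / variance of total scores). A minimal sketch, assuming items scored 1 (correct) or 0 (not correct) and using hypothetical responses from four parents on three items:

```python
import statistics

def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents-by-items matrix of item scores."""
    k = len(rows[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Population variances; sample variances would give the same ratio.
    item_vars = [statistics.pvariance([row[j] for row in rows]) for j in range(k)]
    total_var = statistics.pvariance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical data: four parents x three items, 1 = correct response.
responses = [
    [1, 1, 1],
    [1, 1, 0],
    [0, 1, 0],
    [0, 0, 0],
]
print(cronbach_alpha(responses))  # 0.75
```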

TABLE 3.

Means, standard deviations, and factor analysis (principal component analysis with varimax rotation) of correct responses to science questions

| Items | M (SD) | Component 1 | Component 2 |
|---|---|---|---|
| Why do some birds fly south for the winter? | 0.80 (0.40) | **0.58** | 0.14 |
| How do fish breathe underwater? | 0.74 (0.44) | **0.65** | |
| Why do polar bears have white fur? | 0.67 (0.47) | **0.53** | |
| Why do dogs pant? | 0.47 (0.50) | **0.64** | 0.33 |
| How do bats find their food in the dark? | 0.42 (0.50) | **0.57** | **0.46** |
| How do tadpoles turn into frogs? | 0.39 (0.49) | 0.33 | **0.63** |
| How do bees make honey? | 0.17 (0.38) | | **0.77** |
| Why are some birds' eggs white and others are different colors? | 0.15 (0.36) | 0.16 | **0.62** |

Factor loadings over 0.40 appear in bold.

We then turned to factor analysis to see whether the items grouped into distinct factors. The eight questions were factor analyzed using principal component analysis with varimax (orthogonal) rotation. The analysis yielded one factor explaining 32.74% of the variance (eigenvalue of 2.62), on which the five easiest questions had loadings above 0.40. A second factor explaining 12.53% of the variance was possible, but with an eigenvalue of 1.003, it was right at the conventional cutoff (eigenvalue greater than 1) for retaining a factor. The four hardest items loaded on this second factor. One item (how bats find their food in the dark) loaded on both factors.
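Two numbers in this paragraph follow from standard principal component conventions: with standardized items, total variance equals the number of items, so a component's share of variance is its eigenvalue divided by 8, and the usual Kaiser criterion retains components with eigenvalues above 1. The sketch below applies both to the reported eigenvalues; the small differences from the reported percentages (32.75 vs. 32.74, 12.54 vs. 12.53) are consistent with the eigenvalues having been rounded for reporting.

```python
def percent_variance(eigenvalue, n_items):
    """Percent of total variance for one component of a PCA on a correlation matrix.

    With standardized items, total variance equals the number of items.
    """
    return 100 * eigenvalue / n_items

def kaiser_retain(eigenvalues, cutoff=1.0):
    """Kaiser criterion: keep components whose eigenvalue exceeds the cutoff."""
    return [ev for ev in eigenvalues if ev > cutoff]

reported = [2.62, 1.003]  # eigenvalues reported for the eight PET items
for ev in reported:
    print(f"eigenvalue {ev}: {percent_variance(ev, 8):.2f}% of variance")
print(kaiser_retain(reported))  # [2.62, 1.003]
```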

To examine whether the number of correct parent responses to the harder or the easier questions was more predictive of children's performance on the outcome measures, we split the items at the median difficulty. We then computed correlations among parents' performance on the four easiest questions, parents' performance on the four hardest questions, children's KBIT‐2 scores, and children's biological knowledge scores. Correctness on each set of questions significantly correlated with children's performance on the biological knowledge measure and the KBIT‐2, rs > .19, ps < .05 (see full table in the Online Supplementary Materials).
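The median split can be sketched directly from the proportions in Table 3: items above the median proportion correct form the easier half, the rest the harder half, and each parent then receives a count of correct answers within each half. The item keys and the example parent below are hypothetical; items tied at the median would fall into the harder half under this particular rule.

```python
import statistics

# Proportion of parents answering each item correctly, from Table 3.
# The short item keys are hypothetical labels for the eight questions.
P_CORRECT = {
    "birds_fly_south": 0.80,
    "fish_breathe": 0.74,
    "polar_bear_fur": 0.67,
    "dogs_pant": 0.47,
    "bats_find_food": 0.42,
    "tadpoles_to_frogs": 0.39,
    "bees_make_honey": 0.17,
    "egg_colors": 0.15,
}

def split_by_difficulty(p_correct):
    """Median split: items above the median proportion correct are 'easy'."""
    cutoff = statistics.median(p_correct.values())
    easy = [item for item, p in p_correct.items() if p > cutoff]
    hard = [item for item, p in p_correct.items() if p <= cutoff]  # ties go to 'hard'
    return easy, hard

def subscores(parent_answers, easy, hard):
    """Count one parent's correct answers (1 = correct, 0 = not) in each half."""
    return (sum(parent_answers[item] for item in easy),
            sum(parent_answers[item] for item in hard))

easy_items, hard_items = split_by_difficulty(P_CORRECT)

# Hypothetical parent: correct on three easy items and one hard item.
parent = {item: 0 for item in P_CORRECT}
parent.update(birds_fly_south=1, fish_breathe=1, polar_bear_fur=1, tadpoles_to_frogs=1)
print(easy_items)
print(subscores(parent, easy_items, hard_items))  # (3, 1)
```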

Next, we used multiple regression to examine whether the number of correct responses on hard questions or on easy questions better predicted children's performance on the biological knowledge test, or whether difficulty made no difference. The overall model was significant, but when both predictors were entered simultaneously, only performance on the four hardest questions significantly predicted children's biological knowledge scores (β = .22, p = .03). For KBIT‐2 performance, the overall model was also significant, but when both predictors were entered simultaneously, only performance on the four easiest questions significantly predicted children's scores (β = .29, p = .03). For both analyses, the variance inflation factor was acceptable (< 1.32), indicating that collinearity between the two predictors was low.
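With exactly two predictors, each one's variance inflation factor reduces to VIF = 1/(1 − r²), where r is the correlation between the two predictors. Inverting that formula shows what the reported bound implies: a VIF below 1.32 means the easy‐item and hard‐item subscores correlated at less than about .49. A minimal sketch of the arithmetic:

```python
import math

def vif_two_predictors(r):
    """VIF for either predictor in a two-predictor model: 1 / (1 - r**2)."""
    return 1 / (1 - r ** 2)

def r_implied_by_vif(vif):
    """Invert the formula: the predictor correlation that yields a given VIF."""
    return math.sqrt(1 - 1 / vif)

print(f"{r_implied_by_vif(1.32):.2f}")  # 0.49
```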

In sum, these findings suggest that parents who are particularly knowledgeable about biology and answer the more challenging questions correctly have children who are also more knowledgeable about biology. A different pattern is seen with verbal intelligence, for which parents who have difficulty answering the easier questions correctly have children who score lower on the verbal intelligence measure.

DISCUSSION

Explanations play an important role in learning, particularly in response to questions that cannot be easily answered through direct observation. Here, we employed a new method—the PET—to examine how parents respond to questions about biology. We were interested in two questions: First, whether parents would provide different types of responses to how or why questions, and second, whether features of a parent's explanations relate to their child's domain‐general and domain‐specific knowledge.

First, we found that parents adjusted characteristics of their explanations depending on whether they were responding to a how question or a why question: How questions evoked more mechanistic explanations; why questions evoked more teleological ones. Moreover, parental explanations for the two types of questions varied in other ways, with parents bridging to other knowledge and referencing information searches more often for how than why questions, and referencing knowledge limits more often for why than how questions. With this particular set of items, how questions may have seemed more concrete than why questions, making it easier to make connections to other knowledge or imagine being able to search for the answer. That said, additional research is needed to see whether this pattern of responses holds true for parent responses to how and why questions in other domains.

Second, we found that how frequently parents provided correct responses (regardless of whether the responses were mechanistic or teleological) to the questions predicted children's performance on a test of verbal intelligence as well as their performance on a test of biological knowledge, above and beyond any effects of parental education. Although other aspects of parental explanatory characteristics sometimes correlated with child outcomes, it was how often parents provided correct answers in the PET that was most important. Moreover, exploratory analyses found that how frequently parents provided correct responses to the easiest four questions predicted children's verbal intelligence, whereas how frequently parents provided correct responses to the hardest four questions predicted children's biological knowledge.

There are a number of potential explanations for the relations between how frequently parents provided correct responses in the PET and children's domain‐specific and domain‐general knowledge. One explanation is that when parents frequently provide correct responses to their children's questions in a particular domain, their children acquire richer, more coherent knowledge in that domain. This possibility is consistent with observational research finding relations between how much parents know about a scientific topic, how they talk about that topic with their child, and how much the child learns from that specific experience (Eberbach & Crowley, 2017). In theory, over time, as a child regularly engages in parent–child conversation with a parent offering correct explanations, the child is likely to develop more sophisticated knowledge in that domain and more generally. This may help explain why children whose parents provided more correct responses to the hardest questions in the PET performed better on the biological knowledge test. At the same time, our findings suggest a parent who does not know much specifically about biology can still have a child with a high verbal intelligence score. Indeed, we found that the number of correct responses to the hardest questions was not as strong a predictor of a child's verbal intelligence as the number of correct responses to the easiest questions. It is possible, then, that accuracy on the PET taps into two different aspects of how parents answer children's questions. Responses to the most challenging questions may relate more to how much parents know about biology, which could then be shared with children. In contrast, responses to the easiest questions may relate more to how parents approach answering children's questions more generally.

A second possible explanation is that the PET is tapping into overall parental intelligence, and parents who are smarter have children who are smarter. Although this is probably true to some extent, there are several reasons to believe that these findings are not solely based on relations between parent and child intelligence, independent of explanation quality. If the PET were simply a measure of intelligence, we would expect that smarter parents would provide more correct answers on both the hardest and the easiest items, and that accuracy on both types of items or total accuracy would predict both children's domain‐specific biological knowledge and domain‐general intelligence. Instead, we see the specificity described earlier.

In addition, parental education—which is sometimes used as a rough proxy for general intelligence (e.g., Steinmayr et al., 2010)—did not significantly predict either children's domain‐general or domain‐specific knowledge above the effects of explanation correctness. This supports that our findings are not simply tapping into the effect of parental education on child characteristics. Of course, educational attainment is not the same construct as intelligence: Past research suggests that the correlation between education and intelligence ranges from 0.5 to 0.65 (see Plomin & Von Stumm, 2018; Rietveld et al., 2014). Our perspective is that it is likely that both parental intelligence and the quality of parental explanations play significant roles in children's overall learning and knowledge. It will be crucial for future research to investigate the characteristics of parental explanations along with parental verbal intelligence and other factors to better understand the connection between these factors and child outcomes. Still, our prediction is that it may be possible for a parent who does not have a high IQ or who has not achieved a high level of education to provide the kind of explanations that support children's learning overall and specifically in the domain of biology.
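Squaring the reported range makes the gap between the two constructs concrete, since r² gives the proportion of variance two measures share:

$$
r = .50 \;\Rightarrow\; r^2 = .25, \qquad r = .65 \;\Rightarrow\; r^2 \approx .42
$$

In other words, education and intelligence would share only about 25% to 42% of their variance, leaving most of it unshared.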

Initially, we were surprised by the finding that the frequency with which parents provided mechanistic explanations was not the strongest predictor of children's domain‐general verbal knowledge or domain‐specific biological knowledge. But in reviewing parent responses, our sense is that although explanations that provide mechanisms can provide great benefits for learning (Kelemen, 2019), the benefits may be diminished if the mechanisms are wrong or not appropriate for the question. In response to how questions (e.g., “How do bats find their food in the dark?”), it may be more effective to answer children's questions by referencing how to handle ignorance than to provide incorrect mechanistic information that leaves a child trying to construct an understanding based on that incorrect information. In response to why questions (e.g., “Why do dogs pant?”), it may be more effective to provide a teleological response that addresses a child's question than a mechanistic one that goes into more detail than a child is interested in at that point in time. Likewise, bridges to other knowledge can be helpful for scaffolding children's learning, but they are likely not universally helpful. Here, parents were more likely to provide bridges for younger children than for older ones, suggesting that they may have been tuning their responses to what they thought their children would need (even though their child was not in the room).

More broadly, we are excited by the PET as an approach to gather information about parental explanations in a laboratory setting. A challenge with some methods of examining parental explanations is that it is difficult to tell if parents are providing accurate or inaccurate information. Here, because the PET used a controlled set of questions with known scientifically accepted answers, we could assess each explanation for whether it included a correct answer, some accurate information, and/or some inaccurate information. We found that parents provided explanations that varied drastically in how much accurate and inaccurate information they contained, as well as how correct they were overall. Our research supports anthropological findings that parents sometimes provide inaccurate information in response to children's questions (see Sak, 2015) and demonstrates that it is possible to capture this variability in a laboratory‐based task.

Although sitting in a laboratory and knowing that they were being recorded might have influenced parents' responses, parents' frequent references to their own ignorance suggest that their responses were not driven purely by task demands. In fact, this project documents a common response to questions that, to our knowledge, has rarely been studied in research on parental explanations: reference to searching for additional information. In everyday life, parents are unlikely to know the answer to every question a child asks, and how they handle that ignorance may set the stage for how children approach their own ignorance in the future. In this study, we found signs of different strategies for handling ignorance: Although about two‐thirds of parents referenced information searches at least once, the other one‐third never did so. Those parents who never referenced searches were more likely to provide inaccurate information than parents who did so at least once. Indeed, more broadly, we found that parents sometimes indicated uncertainty and demonstrated how to handle that, and other times they provided inaccurate information and did not indicate the possibility that their answers might be incorrect. Cumulatively and across development, these approaches may have differential effects on children's learning. Children may adopt their parents' epistemic stance (Ronfard et al., 2018) such that children who observe parents dealing with their ignorance or uncertainty by consulting other people or searching the internet might be more likely to do so themselves. Future research might examine the extent to which parents who talk about information searches in response to children's questions, either in the PET or in everyday conversation, follow through with the search behaviors they describe. Perhaps watching parents actually engage in searches has a stronger effect on children's ways of conceptualizing information and their subsequent learning.

Given the substantial variability present in parental responses and the links between explanatory characteristics and child knowledge, we believe that the PET taps into meaningful differences in how parents generally respond to their children's questions in a particular domain. An important direction for future research is to examine how parental responses on the PET relate to responses provided in direct parent–child interaction across different settings. For instance, we noticed that scaffolding questions were rare in this study, but they are commonly observed in research examining parent–child interaction in science museums (Haden et al., 2014). That said, parents have been found to provide fewer scaffolding questions to older children, like those in our sample, than to preschool‐age children (Haden et al., 2014). More broadly, it is important to note that how frequently parents use any explanatory technique may depend to some degree on the context. Certain kinds of techniques may be more common in museum settings, which have a heavy emphasis on child learning, than in everyday home interactions. Some trends in explanation may be more context‐dependent, such as how much a parent uses questions to scaffold a child's learning, while others may be more universal across settings, such as sharing correct information when it is known or demonstrating how to handle ignorance. Of course, children are also involved in the learning process, and the questions that they choose to ask may in turn shape how parents respond to their questions (and even what parents choose to learn to help support their children's curiosity).

Taken together, our analyses of parent responses to the PET suggest that parents respond differently to how and why questions, and that the quality of parent responses to questions about biological phenomena links to children's general knowledge and their knowledge about biology. These findings suggest that parents' capacity to provide correct answers to children's questions, and perhaps how they handle questions that they cannot fully answer, could have broad effects on children's learning.

In addition, these findings demonstrate that the PET is an effective method for examining parental explanations. In other words, this project is a “proof of concept”: parental explanations can be successfully elicited outside of direct parent‐child interaction in a way that can relate meaningfully to child outcomes. Importantly, the PET is not intended to replace other methods of examining parental explanations but to complement them. It provides a straightforward and efficient means of measuring the accuracy and content of parental explanations and gaining information about how parents respond to specific types of questions. Thus, the PET could be particularly useful for examining parental responses to questions that might be hard to capture in naturalistic conversation without extensive sampling or for directly comparing how parents respond to the same set of questions. In addition, as researchers continue to explore online options for collecting data, the PET method could be modified for parents to complete in a home setting, potentially allowing access to populations that might not be able to participate in laboratory‐ or museum‐based research.

Based on our initial findings, we offer a few recommendations for using the PET in future research. First, we recommend that researchers collect demographic information and, when possible, include other parent measures that can help with interpreting the characteristics of parents' explanations, such as measures of intelligence. Second, although the PET can be flexibly modified to incorporate questions from a broad range of domains (e.g., moral reasoning, mathematical thinking), we recommend choosing questions that are expected to cluster in meaningful ways, such as by difficulty or along other dimensions. Third, researchers adopting the PET should keep in mind that coding schemes vary in granularity and should consider what level of coding detail makes sense for their project (Chorney et al., 2015). This project used a micro‐level coding approach, coding each response for the presence or absence of specific characteristics. Another option would be a macro‐level coding approach that examines responses across an entire experimental session; this kind of approach can allow researchers to detect broader patterns (e.g., different approaches to handling uncertainty). However these specific recommendations are taken into account, we believe that using a structured task like the PET to analyze how parents respond to children's questions can provide researchers with valuable insights into how parental explanations may shape children's knowledge and understanding.

Supporting information

Supplementary Material

ACKNOWLEDGMENTS

This work was supported by the National Science Foundation grant DRL‐1551795 awarded to Candice Mills and grant DRL‐1551862 awarded to Judith Danovitch. The authors thank the members of the UTD Think Lab and the UL KID Lab for their support with data collection and transcription.

Mills, C. M., Danovitch, J. H., Mugambi, V. N., Sands, K. R., & Pattisapu Fox, C. (2022). “Why do dogs pant?”: Characteristics of parental explanations about science predict children's knowledge. Child Development, 93, 326–340. 10.1111/cdev.13681

REFERENCES

- Callanan, M. A., Castañeda, C. L., Luce, M. R., & Martin, J. L. (2017). Family science talk in museums: Predicting children's engagement from variations in talk and activity. Child Development, 88(5), 1492–1504. 10.1111/cdev.12886

- Callanan, M. A., & Oakes, L. M. (1992). Preschoolers' questions and parents' explanations: Causal thinking in everyday activity. Cognitive Development, 7, 213–233. 10.1016/0885-2014(92)90012-g

- Chorney, J. M., McMurtry, C. M., Chambers, C. T., & Bakeman, R. (2015). Developing and modifying behavioral coding schemes in pediatric psychology: A practical guide. Journal of Pediatric Psychology, 40(1), 154–164. 10.1093/jpepsy/jsu099

- Crowley, K., Callanan, M. A., Jipson, J. L., Galco, J., Topping, K., & Shrager, J. (2001). Shared scientific thinking in everyday parent‐child activity. Science Education, 85(6), 712–732. 10.1002/sce.1035

- Danovitch, J. H., & Mills, C. M. (2018). Understanding when and how explanation promotes exploration. In M. Saylor & P. Ganea (Eds.), Active learning from infancy to childhood (pp. 95–112). Springer. 10.1007/978-3-319-77182-3_6

- Danovitch, J. H., Mills, C. M., Sands, K. R., & Williams, A. J. (2021). Mind the gap: How incomplete explanations influence children's interest and learning behaviors. Cognitive Psychology, 130, 101421. 10.1016/j.cogpsych.2021.101421

- Eberbach, C., & Crowley, K. (2017). From seeing to observing: How parents and children learn to see science in a botanical garden. Journal of the Learning Sciences, 26(4), 608–642. 10.1080/10508406.2017.1308867

- Fender, J. G., & Crowley, K. (2007). How parent explanation changes what children learn from everyday scientific thinking. Journal of Applied Developmental Psychology, 28(3), 189–210. 10.1016/j.appdev.2007.02.007

- Geerdts, M., Van De Walle, G., & LoBue, V. (2016). Using animals to teach children biology: Exploring the use of biological explanations in children's anthropomorphic storybooks. Early Education and Development, 27(8), 1237–1249. 10.1080/10409289.2016.1174052

- Haden, C. A., Jant, E. A., Hoffman, P. C., Marcus, M., Geddes, J. R., & Gaskins, S. (2014). Supporting family conversations and children's STEM learning in a children's museum. Early Childhood Research Quarterly, 29, 333–344. 10.1016/j.ecresq.2014.04.004

- Harris, P. L., & Koenig, M. A. (2006). Trust in testimony: How children learn about science and religion. Child Development, 77(3), 505–524. 10.1111/j.1467-8624.2006.00886.x

- Inagaki, K., & Hatano, G. (2006). Young children's conception of the biological world. Current Directions in Psychological Science, 15(4), 177–181. 10.1111/j.1467-8721.2006.00431.x

- Jipson, J. L., & Callanan, M. A. (2003). Mother‐child conversation and children's understanding of biological and nonbiological changes in size. Child Development, 74(2), 629–644. 10.1111/1467-8624.7402020

- Kelemen, D. (1999). Why are rocks pointy? Children's preference for teleological explanations of the natural world. Developmental Psychology, 35(6), 1440–1452. 10.1037/0012-1649.35.6.1440

- Kelemen, D. (2019). The magic of mechanism: Explanation‐based instruction on counterintuitive concepts in early childhood. Perspectives on Psychological Science, 14(4), 510–522. 10.1177/1745691619827011

- Kelemen, D., Casier, K., Callanan, M. A., & Pérez‐Granados, D. R. (2005). Why things happen: Teleological explanation in parent‐child conversations. Developmental Psychology, 41(1), 251–264. 10.1037/0012-1649.41.1.251

- Kelemen, D., & Rosset, E. (2009). The human function compunction: Teleological explanation in adults. Cognition, 111(1), 138–143. 10.1016/j.cognition.2009.01.001

- Kominsky, J. F., Langthorne, P., & Keil, F. C. (2016). The better part of not knowing: Virtuous ignorance. Developmental Psychology, 52(1), 31–45. 10.1037/dev0000065

- Kurkul, K. E., & Corriveau, K. H. (2018). Question, explanation, follow‐up: A mechanism for learning from others? Child Development, 89(1), 280–294. 10.1111/cdev.12726

- LoBue, V., Bloom Pickard, M., Sherman, K., Axford, C., & DeLoache, J. S. (2013). Young children's interest in live animals. British Journal of Developmental Psychology, 31(1), 57–69. 10.1111/j.2044-835X.2012.02078.x

- Lockhart, K. L., Chuey, A., Kerr, S., & Keil, F. C. (2019). The privileged status of knowing mechanistic information: An early epistemic bias. Child Development, 90(5), 1772–1788. 10.1111/cdev.13246

- Luce, M. R., Callanan, M. A., & Smilovic, S. (2013). Links between parents' epistemological stance and children's evidence talk. Developmental Psychology, 49(3), 454–461. 10.1037/a0031249

- Mills, C. M. (2013). Knowing when to doubt: Developing a critical stance when learning from others. Developmental Psychology, 49(3), 404–418. 10.1037/a0029500

- Plomin, R., & von Stumm, S. (2018). The new genetics of intelligence. Nature Reviews Genetics, 19(3), 148–159. 10.1038/nrg.2017.104

- Rietveld, C. A., Esko, T., Davies, G., Pers, T. H., Turley, P., Benyamin, B., Chabris, C. F., Emilsson, V., Johnson, A. D., Lee, J. J., Leeuw, C. D., Marioni, R. E., Medland, S. E., Miller, M. B., Rostapshova, O., van der Lee, S. J., Vinkhuyzen, A. A. E., Amin, N., Conley, D., … Koellinger, P. D. (2014). Common genetic variants associated with cognitive performance identified using the proxy‐phenotype method. Proceedings of the National Academy of Sciences of the United States of America, 111(38), 13790–13794. 10.1073/pnas.1404623111

- Ronfard, S., Bartz, D. T., Cheng, L., Chen, X., & Harris, P. L. (2018). Children's developing ideas about knowledge and its acquisition. Advances in Child Development and Behavior, 54, 123–151.

- Ronfard, S., Zambrana, I. M., Hermansen, T. K., & Kelemen, D. (2018). Question‐asking in childhood: A review of the literature and a framework for understanding its development. Developmental Review, 49, 101–120. 10.1016/j.dr.2018.05.002

- Sak, R. (2015). Young children's difficult questions and adults' answers. Anthropologist, 22(2), 293–300. 10.1080/09720073.2015.11891880

- Steinmayr, R., Dinger, F. C., & Spinath, B. (2010). Parents' education and children's achievement: The role of personality. European Journal of Personality, 24, 535–550. 10.1002/per.755

- Tenenbaum, H. R., & Leaper, C. (2003). Parent‐child conversations about science: The socialization of gender inequities? Developmental Psychology, 39(1), 34–47. 10.1037/0012-1649.39.1.34

- Valle, A., & Callanan, M. (2006). Similarity comparisons and relational analogies in parent‐child conversations about science topics. Merrill‐Palmer Quarterly, 52(1), 96–124. 10.1353/mpq.2006.0009

- Vandermaas‐Peeler, M., Massey, K., & Kendall, A. (2016). Parent guidance of young children's scientific and mathematical reasoning in a science museum. Early Childhood Education Journal, 44(3), 217–224. 10.1007/s10643-015-0714-5

- Vlach, H. A., & Noll, N. (2016). Talking to children about science is harder than we think: Characteristics and metacognitive judgments of explanations provided to children and adults. Metacognition and Learning, 11(3), 317–338. 10.1007/s11409-016-9153-y

- Willard, A. K., Busch, J. T. A., Cullum, K. A., Letourneau, S. M., Sobel, D. M., Callanan, M., & Legare, C. H. (2019). Explain this, explore that: A study of parent–child interaction in a children's museum. Child Development, 90(5), e598–e617. 10.1111/cdev.13232
