Abstract

Even though hypomimia is a hallmark of Parkinson’s disease (PD), objective and easily interpretable tools to capture the disruption of spontaneous and deliberate facial movements are lacking. This study aimed to develop a fully automatic video-based hypomimia assessment tool and to estimate the prevalence and characteristics of hypomimia in de-novo PD patients in relation to clinical and dopamine transporter imaging markers. For this cross-sectional study, video samples of spontaneous speech were collected from 91 de-novo, drug-naïve PD participants and 75 age- and sex-matched healthy controls. Twelve facial markers covering the forehead, nose root, eyebrows, eyes, lateral canthal areas, cheeks, mouth, and jaw were used to quantitatively describe facial dynamics. All patients were evaluated using the Movement Disorder Society-Unified PD Rating Scale and dopamine transporter single-photon emission computed tomography. The newly developed automated facial analysis tool discriminated between PD and controls with an area under the curve of 0.87. The prevalence of hypomimia in the de-novo PD cohort was 57%, mainly associated with dysfunction of mouth and jaw movements and decreased variability in forehead and nose root wrinkles (p < 0.001). The strongest correlations were found between reduced lower lip movement and nigro-putaminal dopaminergic loss (r = 0.32, p = 0.002) and limb bradykinesia/rigidity scores (r = −0.37, p < 0.001). Hypomimia represents a frequent, early marker of motor impairment in PD that can be robustly assessed via automatic video-based analysis. Our results support an association between striatal dopaminergic deficit and hypomimia in PD.

Subject terms: Diagnostic markers, Parkinson's disease

Introduction

Terms characterizing the reduction of facial movements, such as “masked face”, were part of the earliest descriptions of Parkinson’s disease (PD)1,2. Current research defines facial bradykinesia, also known as hypomimia, as a loss of spontaneous facial movements and emotional facial expressions, decreased amplitude of deliberately posed facial expressions, reduced blinking frequency, and jaw bradykinesia3. It has been estimated that up to 92% of all PD patients develop hypomimia in the course of the disease, making it the most common orofacial manifestation of PD, followed by speech impairment, dysphagia, and drooling4. Hypomimia is also considered one of the earliest motor manifestations of PD4, developing up to 10 years before clinical diagnosis and preceding the onset of limb bradykinesia, rigidity, gait abnormalities, or resting tremor5. However, all these findings4,5 are based on a simple, crude, routine evaluation of facial changes using a subjective 4-point rating scale. Thus, although the loss of facial expressivity is a well-recognized and common manifestation of PD, it remains understudied owing to the lack of accessible objective tools for its precise evaluation6,7. Moreover, objective evaluation of hypomimia has never been performed in de-novo, drug-naïve PD patients, and the potential sensitivity of specific facial markers in early PD remains unknown.

This study aims to develop a fully automatic approach, based on state-of-the-art computer vision techniques, that provides a robust, objective, easy-to-administer, and easy-to-interpret tool for hypomimia assessment. Using this approach, we aim to estimate the prevalence and determine the quantitative characteristics of hypomimia in a large cohort of de-novo, drug-naïve PD patients. An additional aim is to explore potential relationships between facial markers and clinical and neuroimaging data to provide greater insight into the pathophysiology of hypomimia in PD.

Results

Participants

A total of 97 PD participants were examined, of whom six were subsequently excluded: one because the diagnosis was revised to corticobasal degeneration, and five because of moderate depression according to the BDI II. As a result, 91 de-novo, treatment-naïve PD patients were included in this study: 54 (59%) males and 37 (41%) females with a mean age of 61.0 (SD 12.3, range 34–81) years, a PD motor symptom duration of 2.0 (SD 1.7) years, and a mean MDS-UPDRS part III score of 29.8 (SD 12.2) (Table 1). As a healthy control group, 75 sex- and age-matched participants were recruited: 45 (60%) males and 30 (40%) females with a mean age of 60.8 (SD 8.8, range 45–86) years. All PD and control participants were Caucasian and native Czech speakers.

Table 1.

The list of demographical and clinical information describing PD participants.

| Clinical characteristics | PD (n = 91; men n = 54) |
|---|---|
| General | |
| Age (years) | 61.0 (12.3, 34–81) |
| Symptom duration (years) | 2.0 (1.7, 0.3–11.3) |
| Motor manifestations | |
| MDS-UPDRS III | 29.8 (12.2, 6–63) |
| Bradykinesia/rigidity | 19.9 (9.3, 3–46) |
| PIGD | 1.9 (1.7, 0–7) |
| Non-motor manifestations | |
| MoCA | 25.0 (3.9, 17–30) |
| BDI II | 7.8 (4.7, 0–19) |
| Brain imaging (DAT-SPECT) | |
| Caudate binding ratio | 3.0 (0.6, 1.3–4.3) |
| Putamen binding ratio | 1.5 (0.4, 0.9–2.3) |

Values are listed in the format mean (standard deviation, range).

PD Parkinson’s disease, MDS-UPDRS Movement Disorder Society-Unified Parkinson’s Disease Rating Scale, PIGD postural instability/gait difficulty, MoCA Montreal Cognitive Assessment, BDI II Beck Depression Inventory II, DAT-SPECT dopamine transporter single-photon emission computed tomography.

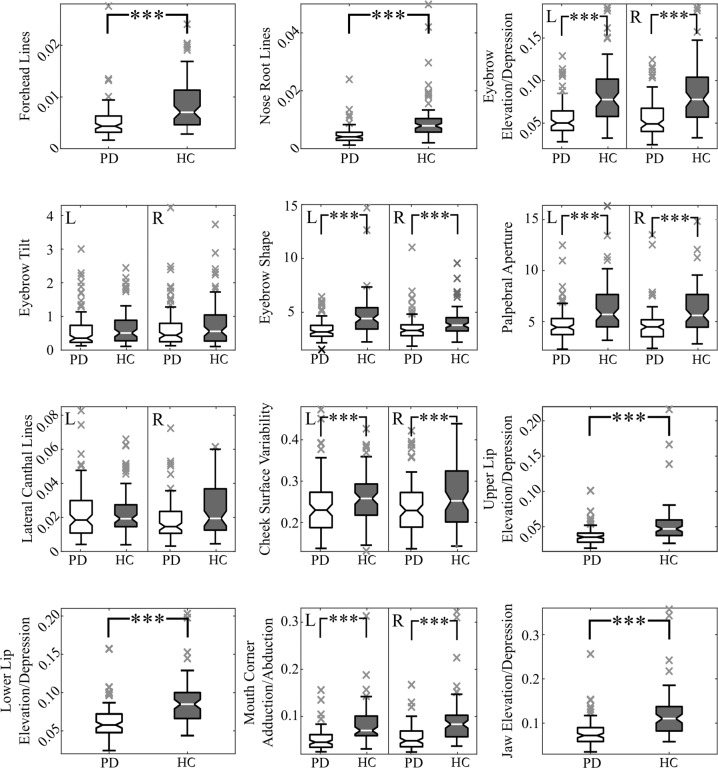

Between-group differences

We found significant differences in the forehead, nose root, eyebrows (eyebrow elevation/depression and eyebrow shape), eyes, cheeks, mouth (upper lip elevation/depression, lower lip elevation/depression, and mouth corner adduction/abduction), and jaw (all p < 0.001) (Fig. 1); for markers with laterality, both the left and right sides were significant. Regarding the perceptual assessment, we also found a significant difference (p < 0.001) between the mean rating of the PD group, 1.3 (SD 1.0, range 0–3), and that of the control group, 0.3 (SD 0.5, range 0–2).

Fig. 1. Depiction of between-group differences for each facial marker.

Markers with left and right variants are presented in the same subplot, denoted L for the left side and R for the right side. In the figure, centerlines denote feature medians, box bounds represent the 25th and 75th percentiles, whiskers denote the non-outlier data range, and crosses denote outlier values. Statistically significant differences after Bonferroni adjustment are denoted by asterisks: ***p < 0.001. PD = Parkinson’s disease; HC = healthy controls.

Comparison of automatic analysis and perceptual assessment

Analysis of the relationship between the computerized approach (in which hypomimia is reflected by decreased facial movement) and the perceptual severity assessment (in which hypomimia is reflected by an increased perceptual score) in the merged PD and control datasets revealed significant negative correlations for markers describing the forehead (r = −0.47, p < 0.001), nose root (r = −0.50, p < 0.001), eyebrows (eyebrow elevation/depression: r = −0.54, p < 0.001; eyebrow shape: r = −0.44, p < 0.001), eyes (r = −0.38, p < 0.001), lateral canthal areas (r = −0.26, p < 0.001), mouth (upper lip elevation/depression: r = −0.51, p < 0.001; lower lip elevation/depression: r = −0.47, p < 0.001; mouth corner adduction/abduction: r = −0.53, p < 0.001), and jaw (r = −0.49, p < 0.001) (Supplementary Table 1).

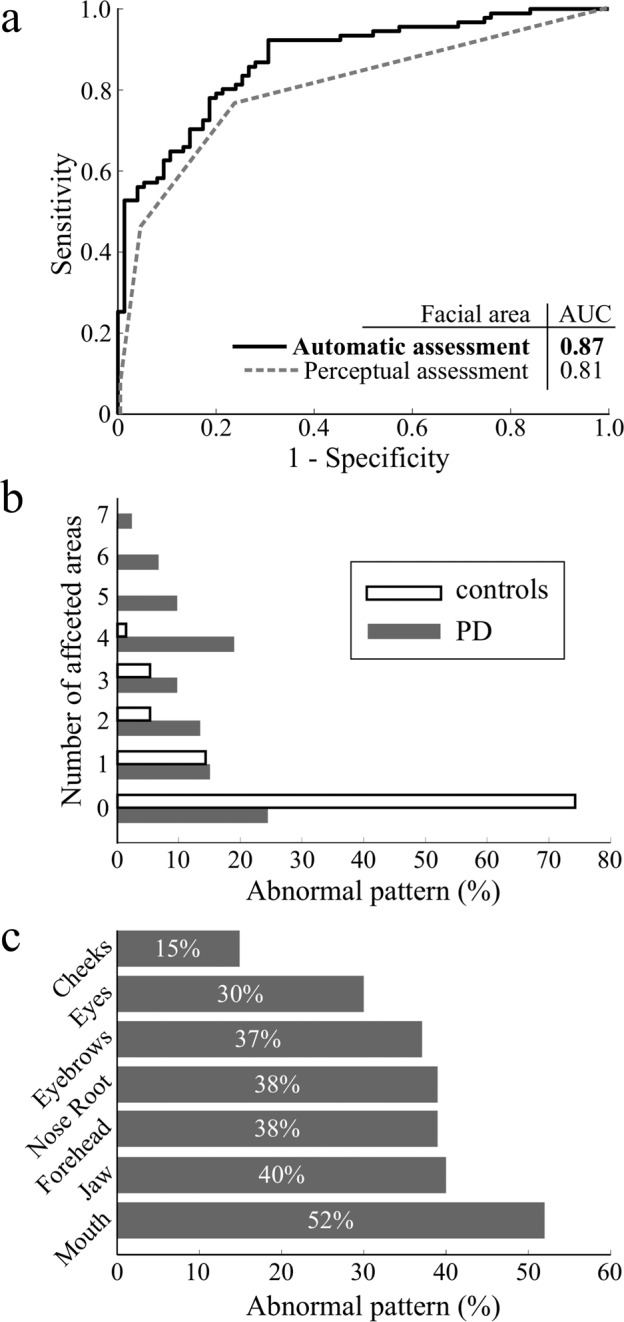

Hypomimia diagnostic sensitivity

Differences in facial expressivity patterns between PD and control participants yielded an overall AUC of 0.87 with an accuracy of 78.3% (sensitivity of 79.1% and specificity of 77.8%) (Fig. 2A); the perceptual assessment reached an AUC of 0.81 with an accuracy of 75.9% (sensitivity of 71.6% and specificity of 80.0%). The highest overall AUC was achieved by a combination of five markers: forehead lines (AUC = 0.81), eyebrow elevation/depression (AUC = 0.78), cheek surface variability (AUC = 0.64), mouth corner adduction/abduction (AUC = 0.81), and jaw elevation/depression (AUC = 0.80) (Supplementary Table 2).

Fig. 2. Results of hypomimia sensitivity analysis.

(A) Receiver operating characteristic curves for PD versus controls. The solid line represents the automatic assessment with the best overall AUC, based on the five facial areas with the best discrimination scores. The dashed line represents the receiver operating characteristic curve based on the 4-point perceptual assessment. (B) Proportions of participants manifesting abnormal patterns in different numbers of affected areas. (C) Proportions of disrupted facial areas in PD.

Based on the receiver operating characteristic curve, the prevalence of hypomimia in de-novo PD, as evaluated by automatic video-based assessment, was estimated at 57% while maintaining a false-positive rate under 5%. In comparison, the perceptual assessment rated 45% of PD and 4% of control participants as mildly or moderately/severely hypomimic. Regarding agreement between automated and perceptual assessment in the PD subset, both methods yielded the same result in 50 (55%) participants, with 26 (29%) classified as hypomimic and 24 (26%) as non-hypomimic. The computerized method detected hypomimia in 26 (29%) PD patients rated non-hypomimic by perceptual assessment, whereas hypomimia in 15 (16%) PD participants was captured perceptually but not by the automated system. Among healthy controls, the human rater and the computerized method agreed on a non-hypomimic rating in 69 (92%) participants.
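
To make the operating-point logic concrete, the sketch below selects, from per-participant hypomimia scores, the most sensitive cutoff whose false-positive rate among controls stays under 5%, and reports the share of PD patients above it. It assumes a single continuous score per participant (for example, a cross-validated classifier output) in which higher values mean more hypomimia; the function name and the toy scores are illustrative, not the authors' implementation.

```python
from typing import Sequence, Tuple


def prevalence_at_fpr(pd_scores: Sequence[float],
                      hc_scores: Sequence[float],
                      max_fpr: float = 0.05) -> Tuple[float, float]:
    """Pick the most sensitive cutoff whose control false-positive rate stays
    strictly below max_fpr; return (cutoff, share of PD patients above it).

    Convention (assumed): higher score = more hypomimic, and a participant is
    flagged as hypomimic when score > cutoff.
    """
    best = (float("inf"), 0.0)
    # Scan every observed score from the highest to the lowest candidate cutoff.
    for cutoff in sorted(set(pd_scores) | set(hc_scores), reverse=True):
        fpr = sum(s > cutoff for s in hc_scores) / len(hc_scores)
        if fpr >= max_fpr:
            break  # FPR never decreases as the cutoff is lowered further
        tpr = sum(s > cutoff for s in pd_scores) / len(pd_scores)
        best = (cutoff, tpr)  # TPR never decreases either, so keep the latest
    return best


# Illustrative toy scores (not study data).
pd_demo = [0.9, 0.8, 0.7, 0.2]
hc_demo = [0.1] * 19 + [0.75]
print(prevalence_at_fpr(pd_demo, hc_demo))  # (0.75, 0.5)
```

Because both rates can only grow as the cutoff drops, the scan can stop at the first cutoff that violates the false-positive constraint.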

To estimate the number of affected facial areas, we computed a cutoff for each facial marker, whereby values above the 95th percentile of the control group were considered abnormal. Using these cutoffs, we estimated that three or more facial areas were affected in 43 PD patients (47%) but in only 5 healthy controls (7%) (Fig. 2B). The most commonly disrupted facial regions were the mouth (52%), jaw (40%), forehead (38%), and nose root (38%) (Fig. 2C).
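
A minimal sketch of the per-marker cutoff rule, assuming marker values are already aggregated per participant and that larger values count as abnormal, exactly as the rule is worded here. Since the markers are described as decreasing with severity, a real pipeline might first need to negate them; that orientation step is an assumption not covered below. Marker and area names are hypothetical.

```python
import statistics
from typing import Dict, List, Set


def control_cutoffs(hc: Dict[str, List[float]]) -> Dict[str, float]:
    """95th percentile of every marker in the healthy-control group."""
    # quantiles(n=20) returns the 5th, 10th, ..., 95th percentiles, so index 18
    # is the 95th; "inclusive" treats the sample min/max as the 0th/100th.
    return {m: statistics.quantiles(v, n=20, method="inclusive")[18]
            for m, v in hc.items()}


def affected_areas(subject: Dict[str, float], cutoffs: Dict[str, float],
                   area_of: Dict[str, str]) -> Set[str]:
    """Facial areas with at least one marker above its control cutoff."""
    return {area_of[m] for m, v in subject.items() if v > cutoffs[m]}


# Toy example: two markers, 20 controls with values 1..20 for each.
hc = {"jaw_elev": [float(i) for i in range(1, 21)],
      "forehead_lines": [float(i) for i in range(1, 21)]}
area_of = {"jaw_elev": "jaw", "forehead_lines": "forehead"}
cut = control_cutoffs(hc)              # both cutoffs are 19.05
patient = {"jaw_elev": 19.5, "forehead_lines": 10.0}
areas = affected_areas(patient, cut, area_of)
print(areas, len(areas) >= 3)          # {'jaw'} False
```

Working at the area level (a set of areas) means that an area with several markers, such as the mouth, is counted once regardless of how many of its markers exceed their cutoffs.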

Relationship between facial features and clinical and imaging parameters

We found significant correlations between the MDS-UPDRS bradykinesia/rigidity composite score and automatic video-based facial markers describing eyebrow elevation/depression (r = −0.25, p = 0.019), upper lip elevation/depression (r = −0.24, p = 0.026), lower lip elevation/depression (r = −0.37, p < 0.001), mouth corner adduction/abduction (r = −0.34, p = 0.001), and jaw elevation/depression (r = −0.31, p = 0.004). In addition, significant correlations were found between the total MDS-UPDRS III score and facial markers describing eyebrow elevation/depression (r = −0.22, p = 0.042), upper lip elevation/depression (r = −0.24, p = 0.025), lower lip elevation/depression (r = −0.35, p < 0.001), mouth corner adduction/abduction (r = −0.32, p = 0.003), and jaw elevation/depression (r = −0.28, p = 0.008). The analysis did not reveal any significant correlation between automatic hypomimia assessment and the postural instability/gait difficulty score (Supplementary Table 3).

Analysis of the relationships between facial markers and DAT-SPECT markers for the more affected side (see Supplementary Table 4 for the less affected side and the mean of both sides) revealed significant correlations between caudate binding ratio and lower lip elevation/depression (r = 0.23, p = 0.032) and lateral canthal lines (r = −0.27, p = 0.011), as well as between putamen binding ratio and upper lip elevation/depression (r = 0.23, p = 0.028), lower lip elevation/depression (r = 0.32, p = 0.002), mouth corner adduction/abduction (r = 0.29, p = 0.005), and jaw elevation/depression (r = 0.28, p = 0.008). No other relationships between facial dynamic markers and clinical manifestations (Supplementary Table 3) or brain imaging markers (Supplementary Table 4) were found.

Discussion

This is the first study to demonstrate a fully automated, objective approach to assessing facial expressivity in a large drug-naïve de-novo PD cohort using natural, unconstrained monologue video recordings. Using the proposed technology, which detects hypomimia patterns in both the upper and lower face, we were able to distinguish PD patients from controls with 78% accuracy. Objective analysis of hypomimia may therefore provide a novel biomarker in PD and other α-synuclein-related diseases. Facial analysis is also easily interpretable, inexpensive, and non-invasive, and video recordings can be made even at patients’ homes, facilitating future scaling to larger populations.

The results of our study confirmed hypomimia as a common manifestation in de-novo PD3,4,6, in accordance with previous research reporting impaired facial expression in 26–37% of de-novo PD patients4,7. The combination of hypomimia and hypokinetic dysarthria, assessed using MDS-UPDRS III items 3.1 and 3.2, has been reported to distinguish newly diagnosed PD from controls with AUC = 0.805. Our results based solely on perceptual hypomimia assessment revealed mild to moderate/severe hypomimia in 45% of PD participants, with AUC = 0.81, confirming the high discriminative potential of facial expressivity assessment. In comparison to clinical assessment, our instrumental analysis found hypomimia in 57% of PD patients, yielding slightly higher discrimination between PD and controls (AUC = 0.87).

The automatic assessment captured differences in nearly all predefined regions of interest, regardless of whether the markers were based on surface or geometric properties. On closer inspection, the first and second most common manifestations were decreased movement variability in the mouth and jaw, representing diminished movement of the lower face. Because most lower-face movements during speech are performed voluntarily, we may assume that in a freely spoken monologue these two aspects reflect a large portion of the deficit in voluntary facial movements8. The third and fourth most common deficiencies were decreased variability in forehead and nose root wrinkles, which primarily reflect reduced spontaneous expressivity during the monologue9. A reduction in blinking rate and eyelid movements in general, represented by reduced palpebral aperture variability, a decreased amount of eyebrow elevation/depression, and diminished cheek muscle activity were also observed. Our findings thus suggest that hypomimia manifestations are homogeneously distributed across the entire face and that both voluntary and spontaneous movements are affected. We did not find lateral asymmetry in expressivity across the investigated facial areas, although a more detailed investigation of laterality in facial expressivity is warranted10,11.

In accordance with previously published results4,7, our findings confirmed the association between the severity of overall motor impairment and hypomimia of the lower and upper face. Regarding bradykinesia and rigidity, previous neurophysiological studies of voluntary facial dynamics reported reduced velocity and amplitude of movements and increased tone during repetitive syllable production resulting from bradykinesia of the facial muscles3,12. Similarly, previous literature reported an association between the rigidity of labial muscles, decrement of lip movements, and electromyographic activity of the orbicularis oris and mentalis muscles13. Moreover, a recent neuroimaging study showed that PD patients with hypomimia display significantly lower DAT-SPECT specific binding ratios in the putamen and caudate, supporting the hypothesis that hypomimia shares a common pathophysiology with bradykinesia13. Indeed, we found a relationship between the bradykinesia/rigidity composite sub-score and lower-face hypomimia, represented by diminished movement of the lips and mouth corners as well as diminished jaw movement, whereas our results did not confirm any relationship between hypomimia and the postural instability/gait difficulty sub-score. This is further supported by the DAT-SPECT analysis, which revealed significant correlations between putamen binding ratio and markers describing mouth and jaw movements, as well as between caudate binding ratio and lower lip movement. Together, these findings suggest that hypomimia is mainly associated with striatal dopaminergic deficit related to appendicular involvement. We did not find a relationship between hypomimia and the extent of depression or cognitive deficits, which is in line with previous studies14,15.

A strength of this study is that we assessed hypomimia via a freely spoken monologue, which represents the most natural and readily available source of facial movements. Assessment of a freely spoken monologue holds the greatest potential as the most convenient hypomimia measurement, as it could easily be implemented in video communication systems and therefore scaled to a large population16. In addition, the freely spoken monologue extends the assessment of deliberate facial movements to include spontaneous facial expressions as a constituent of nonverbal communication, and therefore provides a comprehensive picture of the impact of hypomimia. However, this comes at the expense of fully separating voluntary and spontaneous events. Even though the lower face is likely more affected by voluntary speech movements and the upper face by spontaneous facial expressions8, we cannot entirely exclude events such as spontaneous smiling or deliberate eyebrow raising. Therefore, a detailed analysis of different movement types is a matter for future research based on specialized mimic tasks. Nevertheless, pilot studies aimed at objective assessment of deliberate facial movement support the effect of PD on reduced speed and peak amplitude of intentional facial grimacing16–29. On the other hand, static assessment of facial shape has provided only limited results, supporting dynamic objective assessment as the superior approach30. A detailed list of previously published literature is available in Supplementary Table 5.

One potential limitation of our method is that the marker evaluating variability of lateral canthal lines did not capture a decrease in facial expressivity. This is surprising because lateral canthal lines are one of the main signs of the Duchenne smile, which involves the cheek raiser muscle31. In PD, the Duchenne smile is usually reduced, and smiling is produced only by the lower face muscles, giving an impression of insincerity32. The non-significant lateral canthal lines marker might result from two issues: occasional obstruction by hair or the temples of the participant’s glasses, and/or reduced visibility of the lateral canthal areas due to their orientation perpendicular to the image plane. This assumption is supported by the significant impoverishment of activity in the cheek area, which also reflects orbicularis oculi activation during a Duchenne smile33. A second limitation is that our database was composed only of native Czech speakers of Slavic origin, and the method was not tested on participants with different ethnic backgrounds. Nonetheless, facial landmark tracking is based on a model trained on the 300 Faces-in-the-Wild database34, which contains representatives of different ethnicities; therefore, face tracking should yield similar results regardless of ethnic background. Moreover, the facial markers were based on relative changes in facial movement, which reduces the effects of different ethnic attributes. Third, patients with moderate depression were excluded to avoid a potential negative effect of depression on facial expression35 that might have led to overestimation of hypomimia prevalence in PD. On the other hand, we believe that the presence of depression would not affect the reliability of the algorithm. Future research is warranted to explore the effect of depression on facial expression in PD.
Finally, to obtain as natural a setup as possible, the participant’s head position was not fixed during the freely spoken monologue; therefore, the measurements might be affected to some degree by head movements or lighting. To minimize this effect, participants were always positioned facing the camera, which was placed in a fixed position with respect to the lighting. The Euclidean markers were normalized by the distance between the inner eye corners, the surface markers were estimated from grayscale images with intensity levels normalized between zero and one, and the markers with left and right variants were averaged for further analysis. Video frame sequences with obscured or out-of-frame landmark positions were excluded if they were longer than one second. From a clinical point of view, we analyzed de-novo, drug-naïve PD patients without levodopa-induced dyskinesias, which could otherwise substantially affect head position.
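
The normalization and dropout-handling steps described above can be sketched as follows. The grayscale weights (ITU-R BT.601), the division by 255, and the treatment of gaps shorter than one second are assumptions; the text only states that intensities were scaled to [0, 1] and that longer dropouts were excluded. Function names are hypothetical.

```python
from typing import List, Sequence, Tuple

FPS = 25  # frame rate reported in the Methods


def to_unit_gray(rgb: Sequence[Sequence[Tuple[int, int, int]]]) -> List[List[float]]:
    """24-bit RGB patch -> grayscale in [0, 1] (BT.601 weights, /255; assumed)."""
    return [[(0.299 * r + 0.587 * g + 0.114 * b) / 255.0 for r, g, b in row]
            for row in rgb]


def frames_to_keep(valid: Sequence[bool], fps: int = FPS,
                   max_gap_s: float = 1.0) -> List[int]:
    """Indices of frames kept after discarding long landmark dropouts.

    A run of invalid frames (obscured or out-of-frame landmarks) is dropped
    only if it lasts longer than max_gap_s. Shorter runs are kept here
    because the text does not say how they were handled (e.g. interpolation).
    """
    max_run = round(max_gap_s * fps)
    keep: List[int] = []
    i = 0
    while i < len(valid):
        if valid[i]:
            keep.append(i)
            i += 1
            continue
        j = i
        while j < len(valid) and not valid[j]:
            j += 1  # j ends one past the invalid run
        if j - i <= max_run:
            keep.extend(range(i, j))
        i = j
    return keep


def average_sides(left: float, right: float) -> float:
    """Left/right marker variants are averaged for further analysis."""
    return (left + right) / 2.0


mask = [True] * 3 + [False] * 10 + [True] * 2 + [False] * 30 + [True]
kept = frames_to_keep(mask)
print(len(kept), kept[-1])  # 16 45
```

In the toy mask, the 10-frame dropout (0.4 s) is retained while the 30-frame dropout (1.2 s) is discarded.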

In conclusion, we introduced an automatic video-based assessment of hypomimia that reliably captures distortion of various facial movement patterns, supporting the addition of automated facial assessment to the batteries of motor biomarkers currently used in clinical trials. Our results support an association between striatal dopaminergic deficit and hypomimia in PD. The presented method enables sensitive and clinically relevant measurement of facial dynamics and opens the door to more intensive research on the longitudinal evolution of hypomimia, its relationship with other disease symptoms, and its responsiveness to therapy. It may also provide a better understanding of the mechanisms underlying disrupted facial expressivity in PD and may ultimately lead to more efficient personalized hypomimia therapy or more accurate prediction of disease prognosis.

Methods

Standard protocol approvals, registrations, and patient consents

All participants provided written informed consent prior to their inclusion. Additionally, the person portrayed in Supplementary Video 1 provided written permission to publish the recording. The study received approval from the ethics committee of General University Hospital in Prague, Czechia, and has been performed in accordance with the ethical principles laid down by the Declaration of Helsinki.

Study design and participants

From 2015 to 2020, we recruited de-novo, drug-naïve PD patients at the Department of Neurology, Charles University, and General University Hospital in Prague, Czechia, for this cross-sectional study. Each patient was diagnosed according to the Movement Disorder Society clinical diagnostic criteria for PD36 and underwent a clinical assessment including (i) a structured interview gathering information about personal and medical history, history of drug substance intake, and current medication use; (ii) semi-quantitative testing of PD motor symptoms with the Movement Disorder Society-Unified Parkinson’s Disease Rating Scale, Part III37 (MDS-UPDRS III); (iii) cognitive testing with the Montreal Cognitive Assessment38 (MoCA); and (iv) evaluation of depressive symptoms with the Beck Depression Inventory II39 (BDI II). Two sub-scores were calculated from the MDS-UPDRS III: the bradykinesia/rigidity composite score, defined as the sum of items 3.3–3.8 and 3.14, and the postural instability/gait difficulty (PIGD) sub-score, defined as the sum of items 3.9–3.13. Disease duration was estimated from the self-reported onset of the first motor symptoms. In addition to the PD cohort, we examined an age- and sex-matched control group of healthy participants. A neurologist experienced in movement disorders (P.D.) established the PD diagnosis in all patients and performed all clinical evaluations.
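
The two MDS-UPDRS III sub-scores are plain sums over item groups. The sketch below assumes item scores stored in a dictionary keyed with a hypothetical 'item:side' format (for items with body-part sub-scores, such as 3.3 rigidity); matching the base item number exactly, rather than by string prefix, avoids counting item 3.1 (speech) when summing items 3.10–3.13.

```python
from typing import Dict, FrozenSet

# Item groupings taken from the Methods text (MDS-UPDRS Part III).
BRADY_RIGIDITY = frozenset({"3.3", "3.4", "3.5", "3.6", "3.7", "3.8", "3.14"})
PIGD = frozenset({"3.9", "3.10", "3.11", "3.12", "3.13"})


def subscore(items: Dict[str, int], group: FrozenSet[str]) -> int:
    """Sum all (sub-)item scores whose base item number is in `group`.

    Keys use a hypothetical 'item:side' format, e.g. '3.4:R' or '3.3:neck';
    the base number is compared exactly, so '3.1' is never mistaken for '3.10'.
    """
    total = 0
    for key, score in items.items():
        if not 0 <= score <= 4:
            raise ValueError(f"item {key} outside the 0-4 range: {score}")
        if key.split(":")[0] in group:
            total += score
    return total


exam = {"3.1": 1, "3.2": 2, "3.3:neck": 1, "3.3:RUE": 2, "3.4:R": 1,
        "3.4:L": 2, "3.10": 1, "3.12": 2, "3.14": 1}
print(subscore(exam, BRADY_RIGIDITY), subscore(exam, PIGD))  # 7 3
```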

The exclusion criteria were (i) history of therapy with antiparkinsonian medication; (ii) history of significant neurological disorders (except PD in the patient group); (iii) history of other clinical conditions affecting facial movements, such as facial paralysis, hemifacial spasm, or stroke; (iv) presence of moderate or severe depression, defined as BDI II ≥ 20; and (v) current or past involvement in any speech or hypomimia therapy.

Dopamine transporter imaging

In PD patients, dopamine transporter single-photon emission computed tomography (DAT-SPECT) was performed with the [123I]-2β-carbomethoxy-3β-(4-iodophenyl)-N-(3-fluoropropyl)nortropane tracer (DaTscan®, GE Healthcare) according to the European Association of Nuclear Medicine procedure guidelines40, using common acquisition and reconstruction parameters described in detail previously41. Automated semi-quantitative analysis was performed using the BasGan V2 software42, and specific binding ratios in the caudate nuclei and putamina relative to background binding were calculated. Because neurodegeneration of the substantia nigra is frequently asymmetric in early PD, binding ratios for the left and right sides were calculated separately, and the lower of the two hemispheric values was used in subsequent analyses43.
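
The side-selection rule amounts to taking the minimum of the two hemispheric ratios. In the sketch, the ratio formula (target minus background, divided by background) is a commonly used definition and is assumed here; it does not reproduce BasGan's own processing, and the uptake values are made up.

```python
def specific_binding_ratio(target: float, background: float) -> float:
    """(target - background) / background, a common SBR definition (assumed)."""
    if background <= 0:
        raise ValueError("background uptake must be positive")
    return (target - background) / background


def more_affected_side(left_sbr: float, right_sbr: float) -> float:
    """The lower hemispheric ratio marks the side with greater dopaminergic loss."""
    return min(left_sbr, right_sbr)


# Toy uptake values (arbitrary units, not study data).
putamen_left = specific_binding_ratio(2.5, 1.0)   # 1.5
putamen_right = specific_binding_ratio(3.0, 1.0)  # 2.0
print(more_affected_side(putamen_left, putamen_right))  # 1.5
```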

Facial expressivity examination

Facial expressivity was examined in a room with common indoor lighting and a fixed position with respect to artificial and natural light sources, using video recordings obtained with a digital camera (Panasonic Handycam HDR-CX410, Osaka, Japan) placed approximately one meter in front of the subject’s face. Recordings had a resolution of 1440 × 1080 pixels (HD) and a frame rate of 25 frames per second (24-bit RGB). Each recording contained a one-minute freely spoken monologue on a given topic. The monologue was part of a comprehensive speech examination protocol performed by a speech specialist (M.N., T.T., or J.R.) during a single session.

Computerized analysis of facial expressivity

During the computerized analysis, a state-of-the-art convolutional neural network localized 68 facial landmarks in each video frame44,45. Using the detected landmarks, twelve hypomimia markers describing the dynamics of eight facial regions were estimated (Supplementary Video 1). Owing to the different nature of the individual markers, two basic approaches were adopted: (i) Euclidean distances between facial landmarks and (ii) surface descriptions of predefined areas of interest. Where left and right variants of a marker exist, both were estimated.
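
Most Euclidean markers defined in this section follow one recipe: per frame, the distance from a landmark to the nose tip divided by the distance between the medial eye corners, then the standard deviation over frames. A minimal sketch follows; the landmark indices follow the widely used 68-point 300-W annotation layout and are an assumption, as is the use of the population rather than sample standard deviation.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

# 0-based indices in the common 68-point (iBUG 300-W) layout; this mapping
# is an assumption, the paper does not list the landmark indices it used.
NOSE_TIP = 30
MEDIAL_EYE_CORNERS = (39, 42)
CHIN = 8
UPPER_LIP = 51
LOWER_LIP = 57
MOUTH_CORNERS = {"R": 48, "L": 54}
EYEBROW_CENTRE = {"R": 19, "L": 24}


def dist(a: Point, b: Point) -> float:
    return math.hypot(a[0] - b[0], a[1] - b[1])


def distance_marker(frames: Sequence[Sequence[Point]], idx: int) -> float:
    """SD over frames of |landmark idx - nose tip|, normalized per frame by the
    distance between the medial eye corners."""
    series: List[float] = []
    for lm in frames:
        scale = dist(lm[MEDIAL_EYE_CORNERS[0]], lm[MEDIAL_EYE_CORNERS[1]])
        series.append(dist(lm[idx], lm[NOSE_TIP]) / scale)
    return statistics.pstdev(series)  # population SD (an assumption)


def toy_frame(chin_y: float) -> List[Point]:
    pts: List[Point] = [(0.0, 0.0)] * 68
    pts[39], pts[42] = (0.0, 0.0), (2.0, 0.0)  # medial corners 2 units apart
    pts[NOSE_TIP] = (1.0, 1.0)
    pts[CHIN] = (1.0, chin_y)
    return pts


# Chin 2 then 4 units below the nose tip -> normalized 1.0 and 2.0.
print(distance_marker([toy_frame(3.0), toy_frame(5.0)], CHIN))  # 0.5
```

Normalizing each frame by the inter-canthal distance makes the marker insensitive to the subject's distance from the camera, since both distances scale together.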

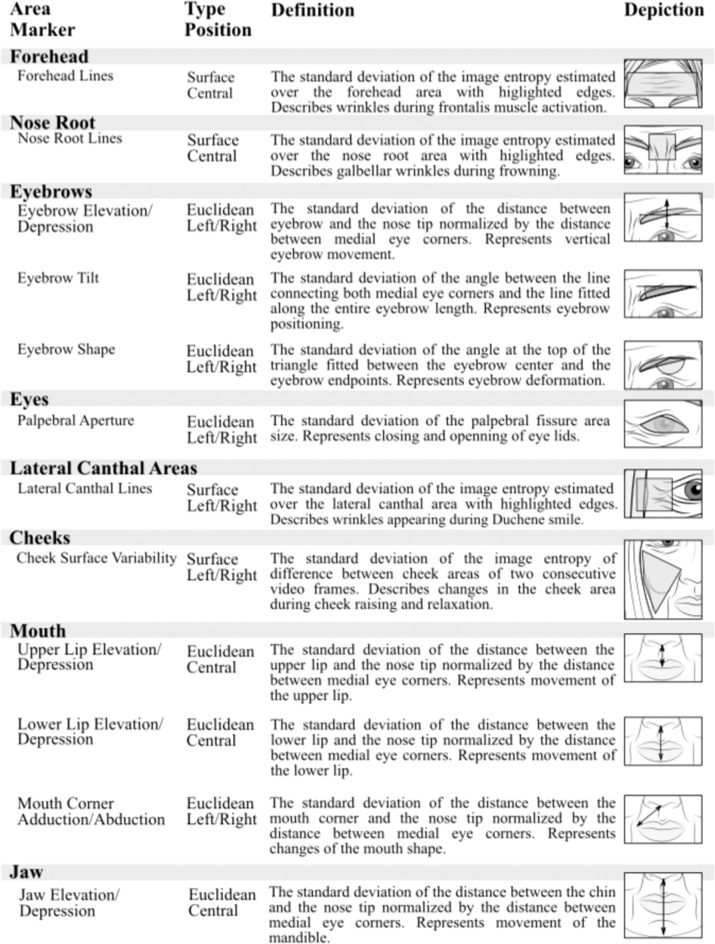

Based on previous literature, regions of interest covering the entire face were selected3. The analyzed areas and the definitions of all facial markers are illustrated in Fig. 3. The eight areas were the forehead, nose root, eyebrows, eyes, lateral canthal areas, cheeks, mouth, and jaw. The forehead area was described by one surface marker centered along the vertical axis. The forehead lines, defined as the standard deviation of the image entropy estimated over the forehead area with highlighted edges, represented wrinkles created by frontalis muscle activation. The nose root area was described by one surface marker centered along the vertical axis. The nose root lines, defined as the standard deviation of the image entropy estimated over the nose root area with highlighted edges, represented glabellar wrinkles created during frowning. The eyebrow area was described by three Euclidean markers with left/right variants. The eyebrow elevation/depression, defined as the standard deviation of the distance between the eyebrow and the nose tip normalized by the distance between the medial eye corners, represented vertical eyebrow movement. The eyebrow tilt, defined as the standard deviation of the angle between the line connecting both medial eye corners and the line fitted along the entire eyebrow length, represented eyebrow positioning. The eyebrow shape, defined as the standard deviation of the apex angle of the triangle fitted between the eyebrow center and the eyebrow endpoints, represented eyebrow deformation. The eye area was described by one Euclidean marker with left/right variants. The palpebral aperture, defined as the standard deviation of the palpebral fissure area, represented eye closing and opening. The lateral canthal areas were described by one surface marker with left/right variants.
The lateral canthal lines, defined as the standard deviation of the image entropy estimated over the lateral canthal areas with highlighted edges, captured the wrinkles of the Duchenne smile; their absence indicates smiling produced by the lower face alone, without involvement of the eyes and the corresponding cheek raise. The cheek area was described by one surface marker with left/right variants. The cheek surface variability, defined as the standard deviation of the image entropy of the difference between the cheek areas of two consecutive video frames, represented changes in the cheek area during raising and relaxation of the cheeks. The mouth area was described by three Euclidean markers, two centered along the vertical axis and one with left/right variants. The upper lip elevation/depression, defined as the standard deviation of the distance between the upper lip and the nose tip normalized by the distance between the medial eye corners, represented movement of the upper lip. The lower lip elevation/depression, defined as the standard deviation of the distance between the lower lip and the nose tip normalized by the distance between the medial eye corners, represented movement of the lower lip. The mouth corner adduction/abduction, defined as the standard deviation of the distance between the mouth corner and the nose tip normalized by the distance between the medial eye corners, represented changes in mouth shape. Finally, the jaw area was described by one Euclidean marker centered along the vertical axis. The jaw elevation/depression, defined as the standard deviation of the distance between the chin and the nose tip normalized by the distance between the medial eye corners, represented movement of the mandible.
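
The angle- and area-based markers (eyebrow tilt, eyebrow shape, and palpebral aperture) can be computed per frame as sketched below, before taking the standard deviation over frames. Choices the text leaves open are assumptions here: a least-squares line fit for the eyebrow, the middle eyebrow landmark as the triangle apex, the shoelace formula for the eye contour, and scaling the area by the squared medial-canthal distance.

```python
import math
import statistics
from typing import Sequence, Tuple

Point = Tuple[float, float]


def fitted_line_angle(points: Sequence[Point]) -> float:
    """Angle (degrees) of the least-squares line through the eyebrow points."""
    mx = statistics.fmean(p[0] for p in points)
    my = statistics.fmean(p[1] for p in points)
    sxx = sum((p[0] - mx) ** 2 for p in points)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points)
    return math.degrees(math.atan2(sxy, sxx))  # atan(slope), since sxx >= 0


def eyebrow_tilt(brow: Sequence[Point], corner_r: Point, corner_l: Point) -> float:
    """Angle between the fitted eyebrow line and the medial-eye-corner line."""
    eye_line = math.degrees(math.atan2(corner_l[1] - corner_r[1],
                                       corner_l[0] - corner_r[0]))
    return fitted_line_angle(brow) - eye_line


def eyebrow_shape(brow: Sequence[Point]) -> float:
    """Apex angle (degrees) at the middle eyebrow point between both endpoints."""
    a, c, b = brow[0], brow[len(brow) // 2], brow[-1]
    v1 = (a[0] - c[0], a[1] - c[1])
    v2 = (b[0] - c[0], b[1] - c[1])
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    return math.degrees(math.atan2(abs(cross), dot))  # 180 = straight brow


def polygon_area(pts: Sequence[Point]) -> float:
    """Shoelace area of the closed eye contour (palpebral fissure)."""
    n = len(pts)
    s = sum(pts[i][0] * pts[(i + 1) % n][1] - pts[(i + 1) % n][0] * pts[i][1]
            for i in range(n))
    return abs(s) / 2.0


def palpebral_aperture(contours: Sequence[Sequence[Point]],
                       scales: Sequence[float]) -> float:
    """SD over frames of eye area / (medial-corner distance)^2 (scaling assumed)."""
    return statistics.pstdev([polygon_area(c) / s ** 2
                              for c, s in zip(contours, scales)])


print(eyebrow_shape([(0, 0), (1, 1), (2, 0)]))  # 90.0
```

Using `atan2` with the absolute cross product keeps the apex angle in [0°, 180°] regardless of whether the image y axis points up or down.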

Fig. 3. Illustration of analyzed facial areas.

Detailed description of assessed markers of facial dynamics. All markers are expected to decrease in parallel with increasing severity of facial bradykinesia.

The landmark detection was performed in the Anaconda Individual Edition of Python 3.6 (Anaconda Inc., Austin, TX, USA). The subsequent dynamic marker assessment was conducted in MATLAB® (Mathworks, Natick, MA, USA). A comprehensive description of the computerized hypomimia marker methodology can be found in Supplementary Information 1.
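
The entropy-based surface markers (forehead, nose root, and lateral canthal lines, plus cheek surface variability) can be illustrated in a few lines of pure Python as a stand-in for the MATLAB implementation. The sketch uses a 256-bin histogram for Shannon entropy (similar in spirit to MATLAB's entropy function), a forward-difference gradient magnitude as a hypothetical substitute for the unspecified edge-highlighting step, and absolute differences between consecutive cheek patches; patch extraction from the landmarks is omitted.

```python
import math
import statistics
from typing import List, Sequence

Image = List[List[float]]  # grayscale patch with intensities in [0, 1]


def entropy(img: Image, bins: int = 256) -> float:
    """Shannon entropy (bits) of the patch's intensity histogram."""
    counts = [0] * bins
    for row in img:
        for v in row:
            counts[min(int(v * bins), bins - 1)] += 1  # v == 1.0 -> last bin
    n = sum(counts)
    return -sum(c / n * math.log2(c / n) for c in counts if c)


def highlight_edges(img: Image) -> Image:
    """Forward-difference gradient magnitude, clipped to [0, 1] (hypothetical
    stand-in; the actual edge-highlighting step is not specified here)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h - 1):
        for x in range(w - 1):
            gx = img[y][x + 1] - img[y][x]
            gy = img[y + 1][x] - img[y][x]
            out[y][x] = min(1.0, math.hypot(gx, gy))
    return out


def wrinkle_marker(patches: Sequence[Image]) -> float:
    """Forehead, nose-root or lateral-canthal lines: SD of edge entropy over frames."""
    return statistics.pstdev([entropy(highlight_edges(p)) for p in patches])


def cheek_variability(patches: Sequence[Image]) -> float:
    """SD over consecutive frame pairs of the entropy of the absolute difference."""
    values = []
    for prev, cur in zip(patches, patches[1:]):
        diff = [[abs(a - b) for a, b in zip(r0, r1)] for r0, r1 in zip(prev, cur)]
        values.append(entropy(diff))
    return statistics.pstdev(values)


flat = [[0.0, 0.0], [0.0, 0.0]]
stripes = [[0.0, 1.0], [0.0, 1.0]]
print(entropy(stripes), cheek_variability([flat, flat, stripes]))  # 1.0 0.5
```

A motionless cheek gives a zero-entropy difference image, so variability over time, rather than the entropy level itself, is what tracks cheek raising and relaxation.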

Perceptual analysis of facial expressivity

A speech-language pathologist (H.R.) trained in facial expressivity evaluation rated the facial expressivity in each video recording on a scale from 0 (normal facial expressivity) through 1 (slight hypomimia) and 2 (mild hypomimia) to 3 (moderate/severe hypomimia). The rating criteria were anchored in MDS-UPDRS III item 3.2 (Facial Expression); the only exception was the merged moderate/severe score, as these two categories differ only in the amount of time the mouth is at rest with parted lips, which cannot be differentiated during a monologue. Perceptual evaluation was performed on randomized, blinded video recordings of monologues from both the PD and control groups, and the rater could replay each recording as needed.

Statistical analysis

The one-sample Kolmogorov–Smirnov test was used to evaluate the normality of distributions. Group differences were assessed using analysis of variance for normally distributed data and the Kruskal–Wallis test for non-normally distributed data or data with possible outliers.
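
For two groups, the Kruskal–Wallis test reduces to a rank statistic with a chi-square reference distribution with one degree of freedom. The pure-Python sketch below computes the tie-corrected H and its p-value; in practice a library routine such as scipy.stats.kruskal (with scipy.stats.kstest and scipy.stats.f_oneway for the normality check and the ANOVA branch) would typically be used. The function names here are illustrative.

```python
import math
from typing import Dict, List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_wallis_2(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Tie-corrected Kruskal-Wallis H for two groups and its chi-square(1) p-value."""
    pooled = list(a) + list(b)
    n = len(pooled)
    ranks = average_ranks(pooled)
    r_a, r_b = sum(ranks[:len(a)]), sum(ranks[len(a):])
    h = 12.0 / (n * (n + 1)) * (r_a ** 2 / len(a) + r_b ** 2 / len(b)) - 3 * (n + 1)
    ties: Dict[float, int] = {}
    for v in pooled:
        ties[v] = ties.get(v, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in ties.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical")
    h = max(h / correction, 0.0)  # guard against tiny negative rounding error
    p = math.erfc(math.sqrt(h / 2))  # survival function of chi-square, 1 df
    return h, p


h, p = kruskal_wallis_2([1, 2, 3], [4, 5, 6])
print(round(h, 4), round(p, 4))  # 3.8571 0.0495
```

The p-value uses the identity that a chi-square variable with one degree of freedom is a squared standard normal, so its upper tail equals `erfc(sqrt(h / 2))`.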

We corrected for multiple comparisons using the Bonferroni adjustment for 19 comparisons (twelve markers, seven of which had separate left and right variants). The adjusted significance level was therefore set to p < 0.0026 (i.e., 0.05/19). Pearson's partial correlation analysis, controlling for age, was used to test for associations between the automatic video-based facial markers and the perceptual, clinical, and neuroimaging scales; markers with left- and right-side variants were represented by their average value.
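The Bonferroni threshold and the age-adjusted partial correlation can be written out explicitly. This is an illustrative sketch under stated assumptions: the partial correlation is computed by residualizing both variables on age with ordinary least squares, the p-value uses a t-test with n - 3 degrees of freedom (one covariate), and all helper names are hypothetical.

```python
import numpy as np
from scipy import stats

N_MARKERS = 12
N_BILATERAL = 7                      # markers with left/right variants
N_TESTS = N_MARKERS + N_BILATERAL    # 19 comparisons
ALPHA_ADJ = 0.05 / N_TESTS           # ~0.00263, reported as p < 0.0026


def bilateral_average(left, right):
    """Combine left- and right-side variants of a marker into one value."""
    return (np.asarray(left, dtype=float) + np.asarray(right, dtype=float)) / 2


def partial_corr(x, y, covar):
    """Pearson partial correlation of x and y controlling for one covariate:
    correlate the residuals of x and y after linear regression on it."""
    x, y, covar = (np.asarray(v, dtype=float) for v in (x, y, covar))
    design = np.column_stack([np.ones_like(covar), covar])
    res_x = x - design @ np.linalg.lstsq(design, x, rcond=None)[0]
    res_y = y - design @ np.linalg.lstsq(design, y, rcond=None)[0]
    return float(np.corrcoef(res_x, res_y)[0, 1])


def partial_corr_pvalue(r, n, n_covars=1):
    """Two-sided p-value: t = r * sqrt(df / (1 - r^2)), df = n - 2 - n_covars."""
    df = n - 2 - n_covars
    t = r * np.sqrt(df / (1.0 - r ** 2))
    return float(2.0 * stats.t.sf(abs(t), df))
```

Residualizing on age removes any shared linear age trend, so two markers that both change with age are not reported as correlated for that reason alone.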

To estimate the ability of the markers of facial dynamics to distinguish between the PD and control groups, we performed binary logistic regression with leave-one-subject-out cross-validation. Several classification scenarios were evaluated, including classifiers based on single facial markers and on the combination of markers yielding the best accuracy; this subset of markers was identified using a grid-search approach. Accuracy, sensitivity, and specificity were computed to reflect the predictive value of the dynamic facial markers. Overall diagnostic accuracy was reported as the area under the receiver operating characteristic curve (AUC).
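The classification procedure can be sketched with scikit-learn. Assumptions in this sketch: each subject contributes one recording (so leave-one-out equals leave-one-subject-out), features are standardized within each training fold, the classifier is scikit-learn's default L2-regularized logistic regression, and a 0.5 probability threshold defines the predicted class. Function names are hypothetical.

```python
from itertools import combinations

import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import LeaveOneOut, cross_val_predict
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def loso_evaluate(X, y, threshold=0.5):
    """Leave-one-out evaluation of a logistic-regression classifier.

    y: 1 = PD, 0 = control. Returns accuracy, sensitivity, specificity, AUC.
    """
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    # Scaling is fitted inside each training fold to avoid leakage.
    model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
    proba = cross_val_predict(model, X, y, cv=LeaveOneOut(),
                              method="predict_proba")[:, 1]
    pred = (proba >= threshold).astype(int)
    return {
        "accuracy": float(np.mean(pred == y)),
        "sensitivity": float(np.mean(pred[y == 1] == 1)),
        "specificity": float(np.mean(pred[y == 0] == 0)),
        "auc": float(roc_auc_score(y, proba)),
    }


def best_subset(X, y, names, max_size=None):
    """Exhaustive grid search over marker subsets, ranked by LOO accuracy."""
    X = np.asarray(X, dtype=float)
    n_features = X.shape[1]
    best_names, best_scores = None, None
    for k in range(1, (max_size or n_features) + 1):
        for cols in combinations(range(n_features), k):
            scores = loso_evaluate(X[:, list(cols)], y)
            if (best_scores is None
                    or scores["accuracy"] > best_scores["accuracy"]):
                best_names = [names[c] for c in cols]
                best_scores = scores
    return best_names, best_scores
```

With twelve markers an exhaustive search covers 2^12 - 1 = 4,095 subsets. Because this sketch uses the same cross-validated accuracy both to select and to report the best subset, that figure is optimistic unless the search is nested inside an outer cross-validation loop.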

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Supplementary information

Written consent with publication of video material

Acknowledgements

This study was supported by the Ministry of Health of the Czech Republic, grant no. NV19-04-00120, and by the National Institute for Neurological Research (Programme EXCELES), project ID no. LX22NPO5107 (funded by the European Union – Next Generation EU). The funders had no role in study design, data collection, analysis, decision to publish, or manuscript preparation. Access to CESNET storage facilities provided by the project “e-INFRA CZ” under the programme “Projects of Large Research, Development, and Innovations Infrastructures” (LM2018140) is greatly appreciated.

Author contributions

M.N. was responsible for conception, organization, and execution of the research project; data analysis; design of the statistical analysis; and writing of the manuscript. T.T. was responsible for conception, organization, and execution of the research project; securing funding; and review and critique of the manuscript. H.R. was responsible for execution of the research project and review and critique of the manuscript. E.R. was responsible for execution of the research project and review and critique of the manuscript. P.D. was responsible for conception, organization, and execution of the research project; securing funding; and review and critique of the manuscript. J.R. was responsible for conception, organization, and execution of the research project; securing funding; and review and critique of the manuscript.

Data availability

Individual participant data that underlie the findings of this study are available upon reasonable request from the corresponding author. The data are not publicly available because they contain information that could compromise the privacy of study participants.

Code availability

The facial landmark detection was performed using a publicly available Python implementation of 2D Face Alignment Network44.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

The online version contains supplementary material available at 10.1038/s41746-022-00642-5.

References

- 1. Gowers WR. A manual of diseases of the nervous system. London: J. & A. Churchill; 1886–1888.

- 2. Zhang ZX, Dong ZH, Roman GC. Early descriptions of Parkinson disease in ancient China. Arch. Neurol. 2006;63:782–784. doi: 10.1001/archneur.63.5.782.

- 3. Bologna M, et al. Facial bradykinesia. J. Neurol. Neurosurg. Psychiatry. 2012;84:1–5. doi: 10.1136/jnnp-2012-303993.

- 4. Fereshtehnejad SM, Skogar O, Lokk J. Evolution of orofacial symptoms and disease progression in idiopathic Parkinson’s disease: longitudinal data from The Jonkoping Parkinson Registry. Parkinsons Dis. 2017;2017:7802819. doi: 10.1155/2017/7802819.

- 5. Postuma RB, Lang AE, Gagnon JF. How does parkinsonism start? Prodromal parkinsonism motor changes in idiopathic REM sleep behaviour disorder. Brain. 2012;135:1860–1870. doi: 10.1093/brain/aws093.

- 6. Ricciardi L, De Angelis A, Marsili L. Hypomimia in Parkinson’s disease: an axial sign responsive to levodopa. Eur. J. Neurol. 2020;27:2422–2429. doi: 10.1111/ene.14452.

- 7. Gasca-Salas C, Urso D. Association between hypomimia and mild cognitive impairment in de novo Parkinson’s Disease patients. Can. J. Neurol. Sci. 2020;47:855–857. doi: 10.1017/cjn.2020.93.

- 8. McGettigan C, Scott SK. Voluntary and involuntary processes affect the production of verbal and non-verbal signals by the human voice. Behav. Brain Sci. 2014;37:564–565. doi: 10.1017/S0140525X13004123.

- 9. Graf HP, et al. Visual prosody: facial movements accompanying speech. In: Proceedings of the Fifth IEEE International Conference on Automatic Face and Gesture Recognition. IEEE; 2002.

- 10. Ricciardi L, et al. Emotional facedness in Parkinson’s disease. J. Neural Transm. (Vienna) 2018;125:1819–1827. doi: 10.1007/s00702-018-1945-6.

- 11. Ratajska AM, et al. Laterality of motor symptom onset and facial expressivity in Parkinson disease using face digitization. Laterality. 2021;5:1–14. doi: 10.1080/1357650X.2021.1946077.

- 12. Caligiuri MP. Labial kinematics during speech in patients with parkinsonian rigidity. Brain. 1987;110:1033–1044. doi: 10.1093/brain/110.4.1033.

- 13. Hunker CJ, Abbs JH, Barlow SM. The relationship between parkinsonian rigidity and hypokinesia in the orofacial system: a quantitative analysis. Neurology. 1982;32:749–754. doi: 10.1212/WNL.32.7.749.

- 14. Pasquini J, Pavese N. Striatal dopaminergic denervation and hypomimia in Parkinson’s disease. Eur. J. Neurol. 2021;28:e2–e3. doi: 10.1111/ene.14483.

- 15. Ricciardi L, et al. Reduced facial expressiveness in Parkinson’s disease: a pure motor disorder? J. Neurol. Sci. 2015;358:125–130. doi: 10.1016/j.jns.2015.08.1516.

- 16. Abrami A, et al. Automated computer vision assessment of hypomimia in Parkinson Disease: Proof-of-Principle Pilot Study. J. Med. Internet Res. 2021;23:e21037. doi: 10.2196/21037.

- 17. Bowers D, et al. Faces of emotion in Parkinsons disease: Micro-expressivity and bradykinesia during voluntary facial expressions. J. Int. Neuropsychol. Soc. 2006;12:765–773. doi: 10.1017/S135561770606111X.

- 18. Wu P, et al. Objectifying facial expressivity assessment of Parkinson’s patients: preliminary study. Comput. Math. Methods Med. 2014;2014:427826. doi: 10.1155/2014/427826.

- 19. Vinokurov N, Arkadir D, Linetsky E, Bergman H, Weinshall D. Quantifying hypomimia in Parkinson patients using a depth camera. In: Serino S, Matic A, Giakoumis D, Lopez G, Cipresso P, editors. Pervasive computing paradigms for mental health. MindCare 2015. Communications in Computer and Information Science, vol. 604. Cham: Springer; 2015. doi: 10.1007/978-3-319-32270-4_7.

- 20. Bandini A, et al. Analysis of facial expressions in parkinson’s disease through video-based automatic methods. J. Neurosci. Methods. 2017;281:7–20. doi: 10.1016/j.jneumeth.2017.02.006.

- 21. Ali MR, et al. Facial expressions can detect Parkinson’s disease: preliminary evidence from videos collected online. NPJ Digit. Med. 2021;4:129. doi: 10.1038/s41746-021-00502-8. [Retracted]

- 22. Joshi A, Tickle-Degnen L, Gunnery S, Ellis T, Betke M. Predicting active facial expressivity in people with Parkinson’s Disease. Paper presented at: 9th ACM International Conference on PErvasive Technologies Related to Assistive Environments (PETRA ’16), June 29, Corfu, Greece. 2016. doi: 10.1145/2910674.2910686.

- 23. Joshi A, et al. Context-sensitive prediction of facial expression using multimodal hierarchical Bayesian neural networks. Paper presented at: 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), May 15, Xi’an, China. 2018.

- 24. Grammatikopoulou A, Grammalidis N, Bostantjopoulou A, Katsarou Z. Detecting hypomimia symptoms by selfie photo analysis: for early Parkinson disease detection. Paper presented at: 12th ACM International Conference on PErvasive Technologies Related to Assistive Environments (PETRA ’19), June, Rhodes. 2019. doi: 10.1145/3316782.3322756.

- 25. Skibińska J, Burget R. Parkinson’s Disease Detection based on Changes of Emotions during Speech. Paper presented at: 12th International Congress on Ultra Modern Telecommunications and Control Systems and Workshops (ICUMT), October 15, 2020. doi: 10.1109/ICUMT51630.2020.9222446.

- 26. Su G, et al. Detection of hypomimia in patients with Parkinson’s disease via smile videos. Ann. Transl. Med. 2021;9:1307. doi: 10.21037/atm-21-3457.

- 27. Su G, et al. Hypomimia recognition in Parkinson’s Disease with semantic features. ACM Trans. Multimed. Comput. Commun. 2021;2:383–391.

- 28. Jakubowski J, Potulska-Chromik A, Bialek K, Njoszewska M, Kostera Pruszcyk A. A study on the possible diagnosis of Parkinson’s disease on the basis of facial image analysis. Electronics. 2021;10:2832. doi: 10.3390/electronics10222832.

- 29. Gomez-Gomez LF, Morales A, Orozco JR, Daza R, Fierrez J. Improving Parkinson Detection Using Dynamic Features From Evoked Expressions in Video. Paper presented at: IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). 2021. doi: 10.1109/CVPRW53098.2021.00172.

- 30. Yang L, et al. Changes in facial expressions in patients with Parkinson’s disease during the phonation test and their correlation with disease severity. Comput. Speech Lang. 2022;72:101286. doi: 10.1016/j.csl.2021.101286.

- 31. Rajnoha M, et al. Towards Identification of Hypomimia in Parkinson’s Disease Based on Face Recognition Methods. In: 2018 10th International Congress on Ultra Modern Telecommunications and Control Systems and Workshops (ICUMT 2018): Emerging Technologies For Connected Society. New York: IEEE; 2018. p. 1–4. doi: 10.1109/ICUMT.2018.8631249.

- 32. Smith MC, Smith MK, Ellgring H. Spontaneous and posed facial expression in Parkinson’s disease. J. Int. Neuropsychol. Soc. 1996;2:383–391. doi: 10.1017/S1355617700001454.

- 33. Ekman P, Davidson RJ, Friesen WV. The Duchenne smile: emotional expression and brain physiology II. J. Pers. Soc. Psychol. 1990;58:342–353. doi: 10.1037/0022-3514.58.2.342.

- 34. Sagonas C, Antonakos E, Tzimiropoulos G, Zafeiriou S, Pantic M. 300 faces in-the-wild challenge: database and results. Image Vis. Comput. 2016;47:3–18. doi: 10.1016/j.imavis.2016.01.002.

- 35. Katsikitis M, Pilowsky I. A controlled quantitative study of facial expression in Parkinson’s disease and depression. J. Nerv. Ment. Dis. 1991;179:683–688. doi: 10.1097/00005053-199111000-00006.

- 36. Postuma RB, et al. MDS clinical diagnostic criteria for Parkinson’s disease. Mov. Disord. 2015;30:1591–1601. doi: 10.1002/mds.26424.

- 37. Goetz CG, et al. Movement Disorder Society‐sponsored revision of the Unified Parkinson’s Disease Rating Scale (MDS‐UPDRS): scale presentation and clinimetric testing results. Mov. Disord. 2008;23:2129–2170. doi: 10.1002/mds.22340.

- 38. Nasreddine ZS, et al. The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. J. Am. Geriatr. Soc. 2005;53:695–699. doi: 10.1111/j.1532-5415.2005.53221.x.

- 39. Beck AT, Steer RA, Brown GK. Manual for the Beck Depression Inventory-II. San Antonio, TX: Psychological Corporation; 1996. doi: 10.1007/978-1-4419-1005-9_441.

- 40. Darcourt J, et al. EANM procedure guidelines for brain neurotransmission SPECT using (123)I-labelled dopamine transporter ligands, version 2. Eur. J. Nucl. Med. Mol. Imaging. 2010;37:443–450. doi: 10.1007/s00259-009-1267-x.

- 41. Dusek P, et al. Relations of non-motor symptoms and dopamine transporter binding in REM sleep behavior disorder. Sci. Rep. 2019;9:15463. doi: 10.1038/s41598-019-51710-y.

- 42. Calvini P, et al. The basal ganglia matching tools package for striatal uptake semi-quantification: description and validation. Eur. J. Nucl. Med. Mol. Imaging. 2007;34:1240–1253. doi: 10.1007/s00259-006-0357-2.

- 43. Moccia M, et al. Dopamine transporter availability in motor subtypes of de novo drug-naïve Parkinson’s disease. J. Neurol. 2014;261:2112–2118. doi: 10.1007/s00415-014-7459-8.

- 44. Bulat A, Tzimiropoulos G. How far are we from solving the 2D & 3D face alignment problem? (and a dataset of 230,000 3D facial landmarks). In: Proceedings of the IEEE International Conference on Computer Vision; 2017. p. 1021–1030. doi: 10.1109/ICCV.2017.116.

- 45. Gross R, Matthews I, Cohn J, Kanade T, Baker S. Multi-PIE. Proc. Int. Conf. Autom. Face Gesture Recognit. 2010;28:807–813. doi: 10.1016/j.imavis.2009.08.002.
