Abstract

PURPOSE

Structured data elements within electronic health records are health-related information that can be entered and stored in an organized manner and extracted at later time points. Structured data also enable tracking of outcomes for cancer survivors. We sought to increase structured data capture within oncology practices at multiple sites sharing the same electronic health record.

METHODS

Applying engineering approaches and the Plan-Do-Study-Act cycle, we launched dual quality improvement initiatives to ensure that a malignant diagnosis and its stage were captured as structured data. The intervention used Close Visit Validation (CVV), which requires providers to satisfy certain criteria before closing ambulatory encounters. CVV can be used to track open clinical encounters and chart delinquencies and to encourage optimal clinical workflows. We added two cancer-specific criteria required for closing encounters in oncology clinics: (1) the presence of at least one malignant diagnosis on the Problem List and (2) staging of all malignant diagnoses on the Problem List when appropriate.

RESULTS

Six months before the CVV implementation, the percentage of encounters with a malignant diagnosis on the Problem List at the time of the encounter was 65%, whereas the percentage of encounters with a staged diagnosis was 32%. Three months after cancer-specific CVV implementation, the percentages were 85% and 75%, respectively. Rates had increased to 90% and 88% more than 2 years after implementation.

CONCLUSION

Oncologist performance improved after the implementation of cancer-specific CVV criteria, with persistently high percentages of relevant malignant diagnoses and cancer stage structured data capture 2 years after the intervention.

INTRODUCTION

Structured data within electronic health records (EHRs) are health-related information entered and stored in an organized manner for extraction at later time points.1 Structured data are captured using defined language linked to a coding system that ultimately allows easier data collection and aggregation for research, management, and audit purposes.1,2 Structured data stand in sharp contrast to unstructured data in the EHR. Unstructured data include free or dictated text from office notes,3 digital files (eg, radiology images or scanned records), and video files produced during medical procedures (eg, endoscopy).4 Unstructured data require additional processing to convert them into discrete data elements for further analysis,3,4 which can be accomplished using natural language processing5 or manual extraction by medical abstractors.6 Structured data have myriad uses, including tracking the receipt of care, improving the capacity to detect redundant and unnecessary care, and identifying specific populations.7 In oncology, where clinicians must exchange large amounts of information across multidisciplinary teams and systems,8 structured data can improve care coordination and workflows,9 build registries to support clinical decision-making and research,3 and facilitate administrative tasks.3,10 To support clinical action, structured data must be available in real time at the point of care.11

CONTEXT

Key Objective

To present a successful strategy to improve structured data capture in oncology by conducting quality improvement initiatives.

Knowledge Generated

We discuss the importance of structured data capture in oncology, providing a detailed overview of collaborative informatics initiatives implemented in a National Cancer Institute–designated academic cancer center.

Relevance

By repurposing and optimizing clinical workflows, it is possible to support provider compliance with cancer care quality metrics. These quality improvement initiatives raise important insights into clinical workflow integration to increase structured data capture with the least system and provider burden.

However, capturing data in a structured format in real time and at the point of care can be challenging in the care of patients with cancer.11,12 Challenges with structured data capture may arise on the EHR vendor side if the vendor does not offer a user-friendly interface (eg, an information display format that interferes with visualization)13 or if the EHR does not offer the data field required for a specific data element.10 Health care organizations may inadvertently add to the problem if training is not readily available to support clinician users (eg, training to use a relevant EHR form).14 From clinicians' perspective, entering structured data may not be perceived as valuable, especially if the same information can be entered elsewhere, usually as free text, with a more detailed or visually appealing description.14 For example, Problem List completeness is frequently an issue in chronic disease, where a more comprehensive description of patient status may be found in the clinical notes (free text).14,15 Finally, structured data capture may require clinicians to take additional steps in different sections of the EHR to complete a task during each encounter.11

Strategies such as the minimal Common Oncology Data Elements (mCODE) initiative are intended to standardize core oncology data elements in the EHR environment to facilitate interoperability between different EHR systems for purposes of clinical care, research, or cancer surveillance.16,17 Through stakeholder consensus, a set of data elements used in clinical practice16 has been defined as standard. TNM stage is an example of information used for clinical decisions, treatment planning, and outcomes reporting. However, TNM stage is often reported in unstructured form.11,18 Capturing TNM stage as structured data in real time facilitates patient support services, such as health insurance prior authorization, clinical decision support and best practice advisories, and clinical research screening, in addition to supporting business operations.19,20

The University of Wisconsin Carbone Cancer Center (UWCCC) Survivorship Program is tasked with improving outcomes for UWCCC cancer survivors, enabled by EHR-based cancer registries informed by structured data.3,7,21 Thus, the program engages in initiatives to increase structured data capture within oncology practices at multiple sites sharing the same EHR instance. The program applied human factors engineering approaches7,22 to iteratively redesign and repurpose pre-existing EHR functionality to work within clinician workflows. In 2019, the program launched dual quality improvement (QI) initiatives to ensure that (1) a malignant diagnosis and (2) cancer stage were captured as structured data. These dual QI initiatives and their impact are presented here.

METHODS

Setting and Participants

This study was conducted at the UWCCC, which provides care to roughly 33,000 unique patients (4,600-4,900 new cases) annually. The UWCCC is a National Cancer Institute–designated comprehensive cancer center and National Comprehensive Cancer Network member and is accredited by the Commission on Cancer (CoC). UWCCC is part of UW Health, the integrated health system of the University of Wisconsin-Madison. The EHR vendor is Epic (Epic Systems; Verona, WI), used at the UWCCC since 2008, with structured TNM data capture available through Beacon since December 2011. See Table 1 for definitions of Epic-specific terms. This study was exempted from University of Wisconsin Institutional Review Board review and adhered to the Revised Standards for Quality Improvement Reporting Excellence (SQUIRE 2.0) reporting guidelines.23

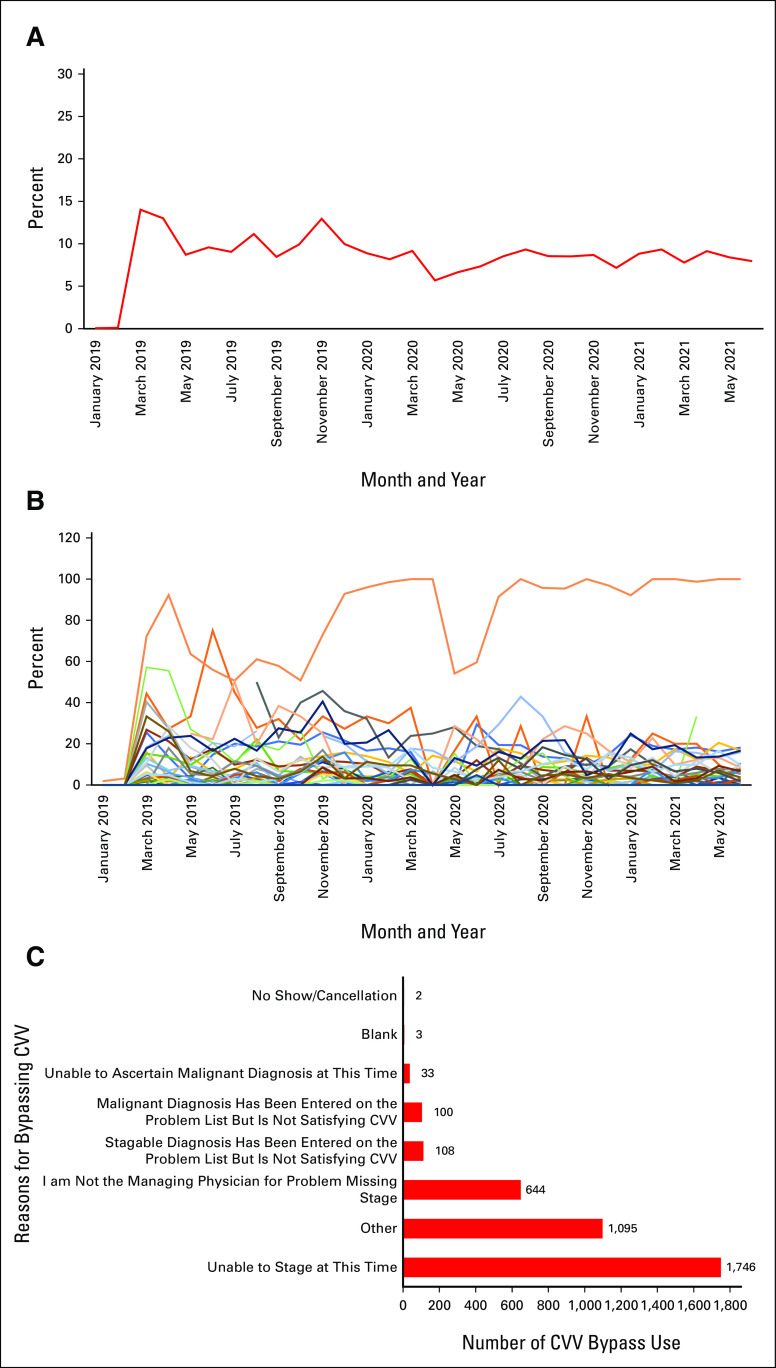

TABLE 1.

Definitions of Epic-Specific Terms

Because UWCCC is an academic cancer center, its patients with cancer are seen across a broad range of clinical departments and divisions (eg, surgical, medical, and radiation oncology) and clinic locations. The program initially focused on the Hematology, Medical Oncology, and Palliative Care (HOPC) division within the UW Department of Medicine because nearly all patients seen by HOPC providers had malignant diagnoses.

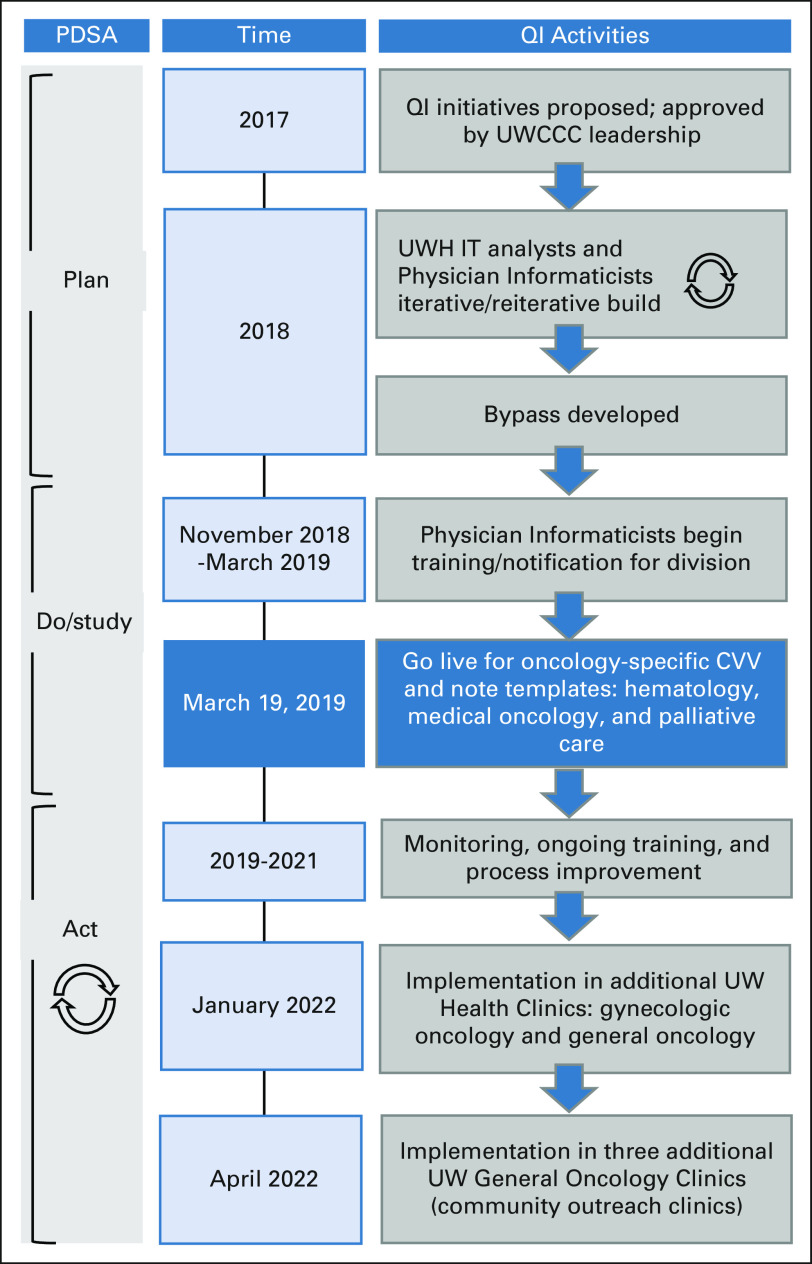

Intervention Development: Cancer-Specific Close Visit Validation

The program's oncology Physician Informaticists suggested that encounter-based, noninterruptive reminders would best fit provider workflows. Best Practice Advisories (BPAs) and in-basket messages were considered before the team settled on Close Visit Validation (CVV) functionality, which requires providers to satisfy certain criteria (typically, level of service and a completed and signed progress note) before an ambulatory encounter can be closed.24,25 CVV can enforce desired workflows, such as the closure of open clinical encounters. Care must be taken to ensure that CVV does not unnecessarily add to workload and thereby contribute to provider burnout.26,27 The program identified CVV as an opportunity to drive cancer-specific documentation with a parsimonious EHR build that did not require providers to learn separate workflows while generating structured data that could be leveraged in multiple workflows and contexts, such as notes and registries. We followed the Plan-Do-Study-Act cycle28 to apply the intervention and learn from the process and then used that knowledge to improve CVV implementation across different clinics (Fig 1).

FIG 1.

Study schema and intervention timeline. Timeline for cancer-specific CVV QI initiatives. CVV, Close Visit Validation; PDSA, Plan-Do-Study-Act; QI, quality improvement; UW, University of Wisconsin; UWCCC, University of Wisconsin Carbone Cancer Center; UWH IT, UW Health Information Technology.

For cancer-specific CVV, we determined that two conditions should be added to the standard CVV criteria (eg, level of service and progress note) for closing encounters logged within the prespecified HOPC division clinics. The additional conditions were (1) the presence of at least one malignant diagnosis on the Problem List, as defined by a Systematized Nomenclature of Medicine (SNOMED)29 grouper, and (2) TNM staging structured data, entered using the Beacon staging functionality, for every malignant diagnosis on the Problem List. In general, cancer diagnosis and stage directly affect treatment selection and follow-up planning, and oncology providers are more likely to have all the information required to determine the final diagnosis and stage as part of their clinical care. Requiring these cancer-specific data from treating physicians and advanced practice providers (APPs) was deemed appropriate because (1) UW Health governance states that only a provider can enter a diagnosis on the EHR Problem List and (2) American Joint Committee on Cancer and CoC guidelines state that treating physicians must sign off on cancer staging data.30
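The two cancer-specific conditions can be expressed as a simple validation rule layered on the standard CVV checks. The Python sketch below is only an illustration under stated assumptions: the Encounter and Problem data model, the concept labels, and the MALIGNANT_GROUPER set are hypothetical stand-ins (the real grouper is a SNOMED-based value set, and the rule itself is configured in Epic rather than written as code).

```python
from dataclasses import dataclass, field


@dataclass
class Problem:
    concept: str          # hypothetical concept label, not a real SNOMED code
    staged: bool = False  # structured TNM data entered (or problem marked unstageable)


@dataclass
class Encounter:
    level_of_service: bool  # standard CVV criterion
    note_signed: bool       # standard CVV criterion
    problem_list: list[Problem] = field(default_factory=list)


# Hypothetical stand-in for the SNOMED grouper of malignant diagnoses.
MALIGNANT_GROUPER = {"breast_carcinoma", "lung_adenocarcinoma", "colon_adenocarcinoma"}


def cvv_failures(enc: Encounter) -> list[str]:
    """Return the unmet criteria; an empty list means the encounter can be closed."""
    failures = []
    if not enc.level_of_service:
        failures.append("level of service missing")
    if not enc.note_signed:
        failures.append("progress note not signed")
    # Criterion 1: at least one Problem List entry falls in the malignancy grouper.
    malignant = [p for p in enc.problem_list if p.concept in MALIGNANT_GROUPER]
    if not malignant:
        failures.append("no malignant diagnosis on Problem List")
    # Criterion 2: every malignant diagnosis on the Problem List carries stage data.
    unstaged = [p.concept for p in malignant if not p.staged]
    if unstaged:
        failures.append("unstaged: " + ", ".join(unstaged))
    return failures


enc = Encounter(True, True, [Problem("breast_carcinoma", staged=True), Problem("hypertension")])
print(cvv_failures(enc))  # []
```

Nonmalignant Problem List entries (here, "hypertension") neither satisfy nor block the rule; only entries inside the grouper are checked for stage data.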

Testing of the Intervention: Need for Bypass

Early testing revealed the need for bypasses because not every patient seen would have a known or confirmed malignant diagnosis (eg, tissue results may still be pending) or because the staging workup might not yet be complete. A SmartList and subsequently a SmartForm were developed to indicate that an encounter was for malignant, nonmalignant, palliative care, or prediagnosis reasons, with the latter three bypassing the cancer-specific CVV requirements. Bypassing the CVV rules, however, satisfied the criteria for a single encounter only; the intention was to encourage providers to satisfy the criteria permanently by adding a malignant diagnosis to the EHR Problem List and cancer stage data in Beacon. The Data Supplement contains screenshots of the cancer-specific CVV logic and bypass reasons.
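The key property of the bypass is that it is scoped to one encounter, whereas the Problem List and Beacon stage data persist across encounters. The sketch below illustrates that distinction, assuming only the four encounter categories named in the text; the actual SmartForm options and bypass reasons (shown in the Data Supplement) may differ, and the function name is hypothetical.

```python
from enum import Enum
from typing import Optional


class EncounterType(Enum):
    """Encounter categories named in the text; the real SmartForm options may differ."""
    MALIGNANT = "malignant"
    NONMALIGNANT = "nonmalignant"
    PALLIATIVE_CARE = "palliative care"
    PREDIAGNOSIS = "prediagnosis"


# The latter three categories bypass the cancer-specific criteria.
BYPASS_TYPES = {EncounterType.NONMALIGNANT, EncounterType.PALLIATIVE_CARE,
                EncounterType.PREDIAGNOSIS}


def cancer_criteria_met(encounter_type: Optional[EncounterType],
                        has_malignant_dx: bool,
                        all_malignant_staged: bool) -> bool:
    """Decide whether the cancer-specific CVV criteria are satisfied.

    encounter_type is recorded per encounter, so a bypass never carries over:
    the next encounter starts with encounter_type=None and is evaluated again
    against the patient's persistent Problem List and staging data.
    """
    if encounter_type in BYPASS_TYPES:
        return True  # satisfied for this encounter only
    return has_malignant_dx and all_malignant_staged


# Visit 1: prediagnosis bypass lets the encounter close.
print(cancer_criteria_met(EncounterType.PREDIAGNOSIS, False, False))  # True
# Visit 2: no bypass selected and no diagnosis yet, so closure is blocked.
print(cancer_criteria_met(None, False, False))  # False
# Visit 3: diagnosis added and staged, so the criteria are met permanently.
print(cancer_criteria_met(None, True, True))  # True
```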

Deployment of the Intervention

Faculty providers (physicians and APPs) were informed and trained by oncology Physician Informaticists (H.E., A.J.T.) beginning in November 2018 via e-mail communications, faculty meetings, and 1:1 sessions. Three outpatient medical oncology clinics (limited to hematology, medical oncology, and palliative care providers) went live with cancer-specific CVV on March 19, 2019. In the expansion phase of the project, gynecologic oncology and one general oncology clinic went live in January 2022. Three additional general oncology clinics (community outreach clinics) are planned to go live in late April 2022. Figure 1 shows the event timeline.

Data Collection (variables, source, and extraction)

To demonstrate the impact of cancer-specific CVV, the following variables were extracted from the EHR: the number of encounters in three medical oncology clinics from January 1, 2018, to June 30, 2021; the number of encounters with ≥ 1 malignant diagnosis on the Problem List at the time of the encounter; and the number of staged malignant diagnoses at the time of the encounter. Focusing on the time of the encounter avoided the inflated staging rates (eg, 115%) seen by Cecchini et al25 after their intervention. Cecchini et al divided the number of cases staged in the EHR by the number of cases in the tumor registry over the same time period. With this metric, they captured retroactive staging in the first months after the intervention (eg, providers had more cases staged than they had seen in encounters).25 We also extracted the bypass reasons selected, encounter provider, encounter clinic, encounter date, and days until encounter closure. This analysis was limited to providers who primarily treat solid tumors because TNM staging is not applicable to hematologic malignancies or benign hematologic conditions. These three clinics included two subspecialist clinics (seeing only adult patients with solid tumors) and one general oncology clinic (seeing primarily adult patients with solid tumors but also some patients with benign and malignant hematologic diagnoses).
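The difference between the two denominators can be made concrete with a small calculation. The sketch below contrasts a time-of-encounter rate (was the patient already staged on the visit date?) with a registry-ratio metric of the kind described for Cecchini et al; the function names and the patient and date values are made up for illustration and are not study data.

```python
from datetime import date
from typing import Optional


def staged_at_encounter_rate(encounters: list[tuple[str, date]],
                             staged_on: dict[str, Optional[date]]) -> float:
    """Fraction of encounters whose patient already had structured stage data on the visit date."""
    hits = 0
    for patient_id, visit_date in encounters:
        stage_date = staged_on.get(patient_id)
        if stage_date is not None and stage_date <= visit_date:
            hits += 1
    return hits / len(encounters)


def registry_ratio(staged_on: dict[str, Optional[date]], registry_cases: int,
                   start: date, end: date) -> float:
    """Cases staged in the EHR during [start, end] divided by registry cases for the same period.

    Retroactive staging of older cases adds to the numerator only, so the ratio can exceed 1.0.
    """
    staged_in_window = sum(1 for d in staged_on.values()
                           if d is not None and start <= d <= end)
    return staged_in_window / registry_cases


# Illustrative (made-up) data: patients D and E are older cases staged retroactively.
encounters = [("A", date(2019, 4, 1)), ("B", date(2019, 4, 2)),
              ("C", date(2019, 4, 3)), ("A", date(2019, 5, 1))]
staged_on = {"A": date(2019, 4, 10), "B": date(2019, 3, 1), "C": None,
             "D": date(2019, 4, 20), "E": date(2019, 4, 21)}
print(staged_at_encounter_rate(encounters, staged_on))                     # 0.5
print(registry_ratio(staged_on, 2, date(2019, 4, 1), date(2019, 4, 30)))  # 1.5
```

Patient A's first visit counts as unstaged because staging was entered 9 days later, which is exactly what a time-of-encounter metric is meant to capture; the registry ratio instead rises above 100% because retroactive staging of D and E inflates the numerator.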

Analysis Plan

Our expectations were that the implementation of cancer-specific CVV would increase (1) the percentage of encounters with at least one malignant diagnosis on the Problem List and (2) the percentage of encounters with staged diagnoses. UW leadership set the following a priori as metrics of success on the basis of 2018 UWCCC data and review of staging goals from other institutions19,20:

More than 80% of clinic encounters would have a malignant diagnosis on the Problem List.

More than 80% of clinic encounters would have malignant diagnoses associated with structured staging data.

More than 80% of encounters would be closed by the provider within 3 days, in line with UW Health standards (note: division encounters were not closed by providers before CVV went live).
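Each success metric above is a strict "more than 80%" comparison of a numerator against a denominator. The sketch below shows one way to evaluate these targets; the function names are hypothetical, and treating an encounter with no closure date as not closed within 3 days (while keeping it in the denominator) is an assumption rather than a rule stated in the text.

```python
from datetime import date
from typing import Optional


def meets_target(numerator: int, denominator: int, threshold_pct: int = 80) -> bool:
    """True if numerator/denominator is strictly greater than threshold_pct percent.

    Integer arithmetic avoids floating-point edge cases at exactly 80%.
    """
    return numerator * 100 > threshold_pct * denominator


def closed_within(visits: list[tuple[date, Optional[date]]], days: int = 3) -> tuple[int, int]:
    """Count encounters closed within `days` of the visit date.

    Assumption: an encounter with no closure date (None) counts as not closed
    within the window but stays in the denominator.
    """
    within = sum(1 for visit, closed in visits
                 if closed is not None and (closed - visit).days <= days)
    return within, len(visits)


visits = [(date(2021, 6, 1), date(2021, 6, 2)),
          (date(2021, 6, 1), date(2021, 6, 8)),
          (date(2021, 6, 2), None)]
num, den = closed_within(visits)
print(num, den)                   # 1 3
print(meets_target(num, den))     # False
print(meets_target(80, 100))      # False: exactly 80% is not "more than 80%"
```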

RESULTS

Encounter and Provider Characteristics

Between January 1, 2018, and June 30, 2021, the three selected clinics had 61,224 encounters involving 6,927 unique patients and 35 unique providers (28 attending physicians and 7 APPs; 17 men and 18 women). Among these encounters, 3,721 (6.1%), all before CVV implementation, had no closure date; therefore, we could not calculate the time to chart closure for these encounters. Before CVV implementation in March 2019, encounters were not closed by providers; instead, they were closed by the billing team after the encounter date, once billing-related tasks were complete.

Impact of Cancer-Specific CVV Criteria

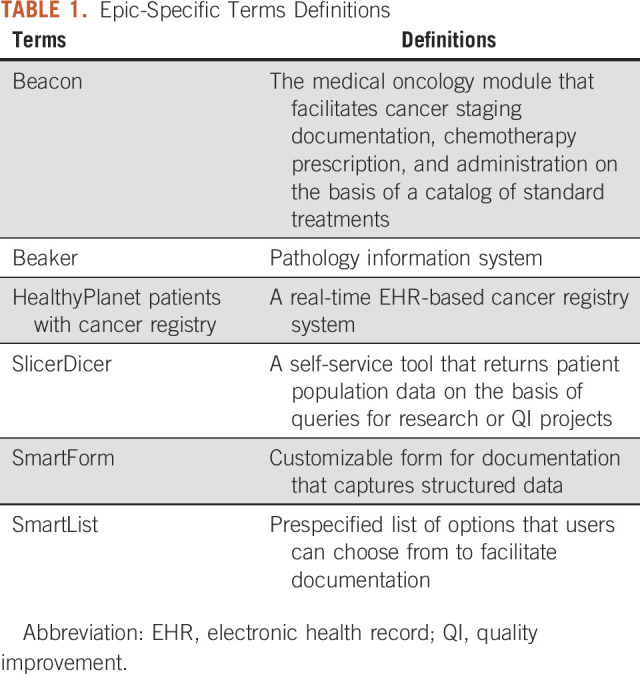

In May 2018, 6 months before CVV training, the percentage of encounters with a malignant diagnosis present on the Problem List at the time of the encounter was 65%, whereas the percentage of encounters with a staged diagnosis at the time of the encounter was 32%. The average time to close an encounter before March 19, 2019, is not interpretable because, as noted above, encounters were closed by the billing team, often months after the office visit.

In June 2019, 3 months after CVV went live, 85% and 75% of encounters had a malignant diagnosis present on the Problem List and a staged diagnosis at the time of the encounter, respectively. By June 2021, more than 2 years after CVV went live, the percentages were 90% and 88%, respectively. The average time to close an encounter for the provider group was 4.8 days (range, 0-17.9) in June 2019 and decreased to 3.03 days (range, 0-36) by June 2021. Figure 2 shows the overall change from January 2018 to June 2021.
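The run charts in Figure 2 plot monthly percentages, and the time-to-close averages exclude encounters without a closure date. The sketch below shows one plausible way to derive both from encounter-level records; the Visit record layout and function names are hypothetical, and the example values are made up rather than taken from the study.

```python
from collections import defaultdict
from datetime import date
from statistics import mean
from typing import NamedTuple, Optional


class Visit(NamedTuple):
    visit_date: date
    has_malignant_dx: bool     # malignant diagnosis on Problem List at visit time
    has_stage: bool            # structured stage present at visit time
    closed_on: Optional[date]  # None if the encounter was never closed


def monthly_series(visits: list[Visit]) -> dict[tuple[int, int], tuple[float, float]]:
    """Percent of encounters per calendar month meeting each criterion (run-chart points)."""
    by_month: dict[tuple[int, int], list[Visit]] = defaultdict(list)
    for v in visits:
        by_month[(v.visit_date.year, v.visit_date.month)].append(v)
    series = {}
    for month, group in sorted(by_month.items()):
        n = len(group)
        series[month] = (round(100 * sum(v.has_malignant_dx for v in group) / n, 1),
                         round(100 * sum(v.has_stage for v in group) / n, 1))
    return series


def mean_days_to_close(visits: list[Visit]) -> Optional[float]:
    """Average days from visit to closure, skipping encounters with no closure date."""
    days = [(v.closed_on - v.visit_date).days for v in visits if v.closed_on is not None]
    return mean(days) if days else None


visits = [Visit(date(2019, 6, 3), True, True, date(2019, 6, 5)),
          Visit(date(2019, 6, 10), True, False, date(2019, 6, 17)),
          Visit(date(2019, 7, 1), False, False, None),
          Visit(date(2019, 7, 2), True, True, date(2019, 7, 2))]
print(monthly_series(visits))      # {(2019, 6): (100.0, 50.0), (2019, 7): (50.0, 50.0)}
print(mean_days_to_close(visits))  # 3
```

Per-provider curves (Fig 2B and 2D) would follow the same pattern with the provider added to the grouping key.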

FIG 2.

Run charts with the percentage of encounters with malignant diagnosis in the Problem List and cancer stage report. (A) Percentage of encounters with malignant diagnosis documented in the Problem List. The blue line represents the percentage of encounters with malignant diagnosis documented in the Problem List over time. The green vertical line represents provider training in November 2018. The red vertical line represents the date that CVV went live in March 2019. (B) Percentage of encounters with malignant diagnosis documented in the Problem List per provider. Colored lines represent the percentage of encounters with malignant diagnosis documented in the Problem List over time per provider. The green vertical line represents provider training in November 2018. The red vertical line represents the date that CVV went live in March 2019. (C) Percentage of encounters with stage documented. The orange line represents the percentage of encounters with the malignant diagnosis stage documented over time. The green vertical line represents provider training in November 2018. The red vertical line represents the date that CVV went live in March 2019. (D) Percentage of encounters with stage documented per provider. Colored lines represent the percentage of encounters with malignant diagnosis stage documented over time per provider. The green vertical line represents provider training in November 2018. The red vertical line represents the date that CVV went live in March 2019. CVV, Close Visit Validation.

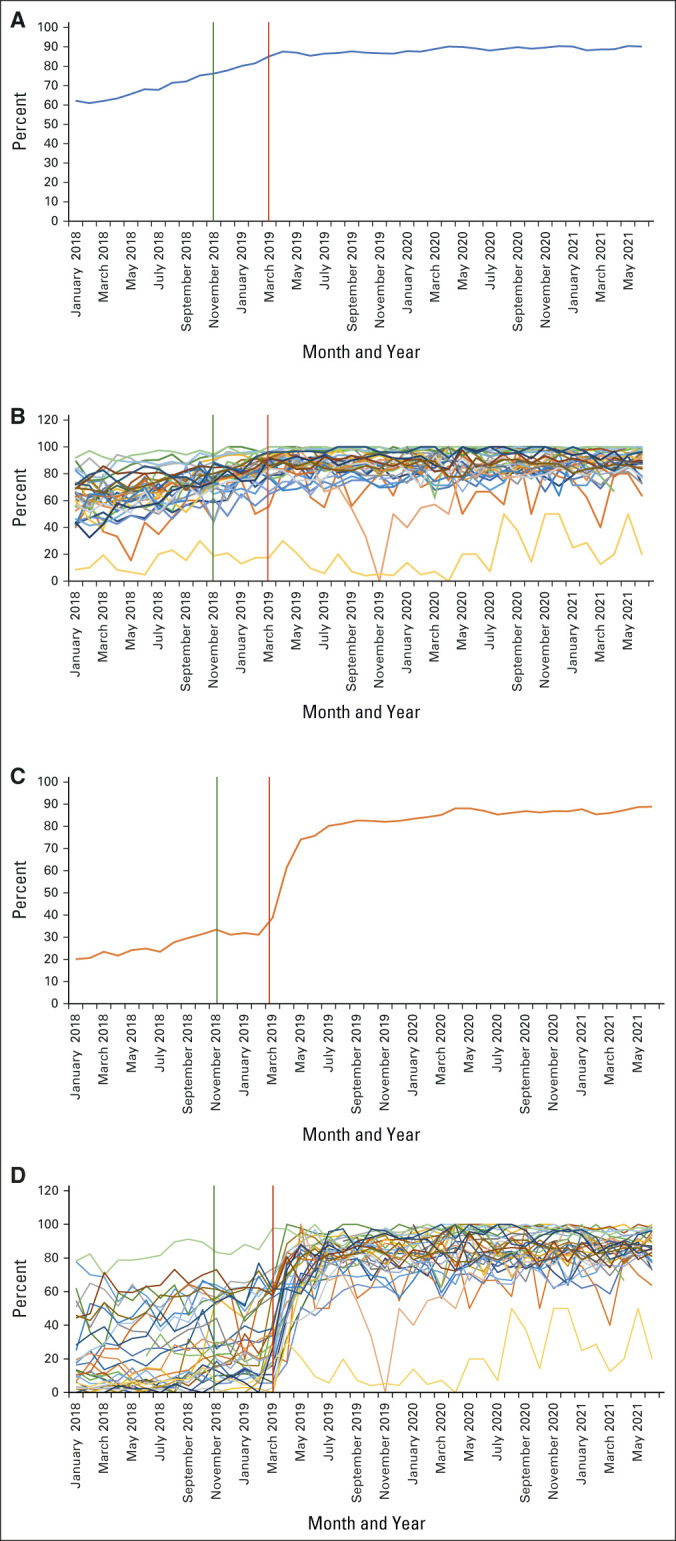

Bypass Usage

The cancer-specific criteria were bypassed in 3,728 encounters (8.57% of the 43,509 visits that occurred after January 2019). In three encounters, providers chose more than one bypass option, and each option was counted in its respective category. The top bypass reasons were “unable to stage at this time” (n = 1,746), “other” without additional explanation (n = 1,095), and “I am not the managing physician for problem missing stage” (n = 644). Figure 3 shows bypass utilization for the group and by provider, as well as the reasons for bypassing.
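Because an encounter with several selected options is counted once in the bypass percentage but once per option in the reason tallies, the category totals can exceed the number of bypassed encounters. The sketch below illustrates this counting rule and checks the reported percentage (3,728 of 43,509); the function name and the list-of-reasons input format are assumptions for illustration.

```python
from collections import Counter


def bypass_summary(reasons_per_encounter: list[list[str]],
                   total_encounters: int) -> tuple[float, Counter]:
    """Return (percent of encounters bypassed, tally of bypass reasons).

    An encounter with several selected reasons counts once in the percentage
    but once per reason in the tally.
    """
    n_bypassed = sum(1 for reasons in reasons_per_encounter if reasons)
    tally = Counter(reason for reasons in reasons_per_encounter for reason in reasons)
    return round(100 * n_bypassed / total_encounters, 2), tally


# Arithmetic check against the reported totals.
print(round(100 * 3728 / 43509, 2))  # 8.57

pct, tally = bypass_summary([["unable to stage at this time", "other"], ["other"], []], 3)
print(pct, sum(tally.values()))      # 66.67 3
```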

FIG 3.

Percentage of encounters with CVV bypass and bypass reasons. (A) Percentage of encounters with CVV bypass use. The red line represents the percentage of encounters with bypassed CVV since January 2019. (B) Percentage of encounters with CVV bypass use per provider. Colored lines represent the percentage of encounters with bypassed CVV per provider since January 2019. (C) Close visit validation bypass reasons. Red bars represent reasons for bypassing CVV (n) since January 2019. CVV, Close Visit Validation.

DISCUSSION

Our study presents two successful QI initiatives to increase structured data capture in real time and at the point of care at a National Cancer Institute–designated academic cancer center. Cancer diagnosis and stage are crucial for defining treatment and prognosis, improving care coordination, driving cancer registries and integrated EHR clinical decision support tools, supporting clinical trials, and facilitating communication among providers; they are also used to show compliance with patient-centered cancer care quality metrics.11,16,21,25 By creating workflows that support capturing diagnosis and stage as structured data, we were able to change providers' workflows and achieve lasting improvement. After cancer-specific CVV implementation in March 2019, the percentage of encounters with malignant diagnoses and associated structured staging data steadily increased for medical oncology providers across three practice locations. Moreover, we have not observed a return toward baseline: rates close to 90% for both metrics have been maintained for more than 2 years after implementation. The average time to close an encounter also improved, decreasing to approximately 3 days by June 2021.

The study design was informed by oncology Physician Informaticists using human factors engineering approaches, as recommended by the National Academy of Medicine (formerly the Institute of Medicine [IOM]), to improve health care delivery by iteratively designing, redesigning, and repurposing pre-existing CVV functionality.31 Using this approach, we considered the work system elements involved in documenting cancer diagnosis and stage; technology was used to facilitate providers' work and to give clinicians a way to proceed if the patient could not be diagnosed or staged at the time of the encounter.7 We avoided using an interruptive BPA or repeated reminders outside the EHR to minimize provider workload. By repurposing and optimizing clinical workflows, it was possible to support provider compliance with cancer care quality metrics.7,9 From research and administrative standpoints, these QI initiatives provide data for both the HealthyPlanet registry of patients with cancer and SlicerDicer, which rely on Problem List and TNM staging data.27 From a clinician's perspective, diagnosis and stage remain recorded, preventing duplicate work in the future. Our initiatives also allowed us to understand the reasons for CVV bypass, revealing that not all medical oncology encounters occur for an established malignant diagnosis and that staging is not always possible. These QI initiatives were supported by the go-live of cancer-specific note templates that leverage the structured diagnosis and stage data, thus directly benefiting the providers who enter the structured data. In addition, we have created a process to pull available discrete data elements directly from pathology synoptic reports (Beaker) into the TNM staging forms in Beacon to facilitate staging for treating providers.

Other academic centers have conducted initiatives to increase cancer staging documentation. Such initiatives generally rely on reminders that are either synchronous or asynchronous to the encounter, that are delivered either within the EHR (eg, in-basket messages or CVV) or through external platforms (eg, e-mail), and that act either as hard stops requiring action or as soft stops or other prompts encouraging the desired behavior. The team at Yale created a BPA that fired at the beginning of an encounter and required cancer staging, or a reason for not staging, before the provider could proceed; this increased staging to 60% 9-12 months after the intervention.25 By contrast, cancer-specific CVV fires at encounter closure and does not interrupt provider workflow. Stanford used soft-stop CVV criteria to achieve a 70% staging rate; however, this was paired with financial incentives (a tactic not available to all cancer centers).19 UC San Diego used periodic reports of group and individual staging performance, BPAs, and automatic in-basket messages for nonstaged encounters to achieve a staging rate of 70%.32 The MGH Cancer Center modestly improved cancer staging performance for new (but not follow-up) patients by sending providers up to three peer comparison e-mails.18 By contrast, our intervention was effective for both new and follow-up patients. Finally, we are aware of workflows in which tumor registrars enter stage data and route it to providers for signature along with a deficiency message20; however, this approach may not be feasible because of limited registrar resources or medical-legal considerations.

Our study has several limitations. First, it lacked a formal control arm; however, staging rates for patients seen outside the division (such as in gynecologic oncology) do not show a similarly marked change during the study timeframe. Second, the SNOMED grouper used to define malignant diagnoses includes diagnoses such as squamous cell carcinoma and basal cell carcinoma. Medical oncology does not typically follow these diagnoses, but because of their high prevalence in the general population, they may be present alongside another diagnosis in our population. These diagnoses create work for clinicians, who must either mark them as unstageable or bypass the staging criteria. Third, we have no established mechanism to evaluate TNM completeness because the system accepts any stage data (eg, entering cT1 alone suffices, although cT1 cN0 cM0 would be more complete). Fourth, changes over time do not generate a new staging request; for example, a surgery establishing a pathologic stage will not trigger restaging for a patient with existing structured clinical staging. Fifth, we targeted the HOPC division; thus, the outcomes do not reflect the behavior of other cancer clinicians, such as those in radiation and surgical oncology. Sixth, we did not formally survey provider experience; however, our division's KLAS survey results show stable provider satisfaction with the EHR between 2018 and 2020.
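The third limitation turns on the difference between accepting any stage data and requiring a complete TNM triplet. The sketch below contrasts the two rules for illustration only: the dictionary representation of a stage and both function names are hypothetical, and the stricter completeness rule was not part of the intervention described here.

```python
from typing import Optional

TNM_KEYS = ("T", "N", "M")


def any_stage_data(stage: dict[str, Optional[str]]) -> bool:
    """Acceptance rule as described in the text: any entered TNM element satisfies the criterion."""
    return any(stage.get(k) for k in TNM_KEYS)


def tnm_complete(stage: dict[str, Optional[str]]) -> bool:
    """Stricter hypothetical rule: T, N, and M categories must all be present."""
    return all(stage.get(k) for k in TNM_KEYS)


partial = {"T": "cT1", "N": None, "M": None}
full = {"T": "cT1", "N": "cN0", "M": "cM0"}
print(any_stage_data(partial), tnm_complete(partial))  # True False
print(any_stage_data(full), tnm_complete(full))        # True True
```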

In conclusion, oncologist behavior and workflow were sustainably influenced, with structured data capture rates reaching 90% for malignant diagnoses and 88% for cancer stage. The intervention was applicable across different locations and practices. Future research will address limitations such as the completeness of cancer staging and how to capture changes in stage as structured data, and will assess which methods (eg, synchronous and encouraging v asynchronous and forcing) achieve the best results with the least system and provider burden. Finally, national quality metrics measuring cancer centers' capture of structured data would likely drive rapid and broad workflow change.

ACKNOWLEDGMENT

The authors would like to thank the following: participating providers of the University of Wisconsin HOPC division, the UW Health Physician Informatics Team, and our Epic Beacon TS Shane Joynes. We would also like to thank the staff of the University of Wisconsin Carbone Cancer Center (UWCCC) Biostatistics Shared Resource for their valuable contributions to this research. Shared research services at the UWCCC were supported by Cancer Center Support Grant P30 CA014520.

PRIOR PRESENTATION

Presented in part at Epic's Experts Group Meeting (XGM), April 29 - May 6, 2021, Verona, WI.

SUPPORT

Supported by NCI Cancer Center Support Grant P30 CA014520 and by grant UL1TR000427 to UW ICTR from NIH/NCATS.

AUTHOR CONTRIBUTIONS

Conception and design: Hamid Emamekhoo, Michael D. Lavitschke, Daniel Mulkerin, Mary E. Sesto, Amye J. Tevaarwerk

Administrative support: Daniel Mulkerin

Collection and assembly of data: Hamid Emamekhoo, Cibele B. Carroll, Chelsea Stietz, Amye J. Tevaarwerk

Data analysis and interpretation: Hamid Emamekhoo, Cibele B. Carroll, Jeffrey B. Pier, Daniel Mulkerin, Mary E. Sesto, Amye J. Tevaarwerk

Manuscript writing: All authors

Final approval of manuscript: All authors

Accountable for all aspects of the work: All authors

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated unless otherwise noted. Relationships are self-held unless noted. I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO's conflict of interest policy, please refer to www.asco.org/rwc or ascopubs.org/cci/author-center.

Open Payments is a public database containing information reported by companies about payments made to US-licensed physicians (Open Payments).

Hamid Emamekhoo

Consulting or Advisory Role: Exelixis, Cardinal Health, Seattle Genetics

Amye J. Tevaarwerk

Other Relationship: Epic Systems

No other potential conflicts of interest were reported.

REFERENCES

- 1. Joukes E, Abu-Hanna A, Cornet R, et al. Time spent on dedicated patient care and documentation tasks before and after the introduction of a structured and standardized electronic health record. Appl Clin Inform. 2018;9:46-53.

- 2. Häyrinen K, Saranto K. The core data elements of electronic health record in Finland. Stud Health Technol Inform. 2005;116:131-136.

- 3. Ehrenstein V, Kharrazi H, Lehmann H, et al. Obtaining data from electronic health records. In: Gliklich RE, Leavy MB, Dreyer NA, editors. Tools and Technologies for Registry Interoperability, Registries for Evaluating Patient Outcomes: A User's Guide. ed 3, Addendum 2. Rockville, MD: Agency for Healthcare Research and Quality (US); 2019.

- 4. Kong HJ. Managing unstructured big data in Healthcare system. Healthc Inform Res. 2019;25:1-2.

- 5. Abedian S, Sholle ET, Adekkanattu PM, et al. Automated extraction of tumor staging and diagnosis information from surgical pathology reports. JCO Clin Cancer Inform. 2021;5:1054-1061.

- 6. Kreimeyer K, Foster M, Pandey A, et al. Natural language processing systems for capturing and standardizing unstructured clinical information: A systematic review. J Biomed Inform. 2017;73:14-29.

- 7. Tevaarwerk AJ, Klemp JR, van Londen GJ, et al. Moving beyond static survivorship care plans: A systems engineering approach to population health management for cancer survivors. Cancer. 2018;124:4292-4300.

- 8. Henkel M, Stieltjes B. Structured data acquisition in oncology. Oncology. 2020;98:423-429.

- 9. Morken CM, Tevaarwerk AJ, Swiecichowski AK, et al. Survivor and clinician assessment of survivorship care plans for hematopoietic stem cell transplantation patients: An engineering, primary care, and oncology collaborative for survivorship health. Biol Blood Marrow Transplant. 2019;25:1240-1246.

- 10. Schorer AE, Moldwin R, Koskimaki J, et al. Chasm between cancer quality measures and electronic health record data quality. JCO Clin Cancer Inform. 2022;6:e2100128. doi: 10.1200/CCI.21.00128.

- 11. Patt D, Stella P, Bosserman L. Clinical challenges and opportunities with current electronic health records: Practicing oncologists' perspective. JCO Oncol Pract. 2018;14:577-579.

- 12. Pasalic D, Reddy JP, Edwards T, et al. Implementing an electronic data capture system to improve clinical workflow in a large academic radiation oncology practice. JCO Clin Cancer Inform. 2018;2:1-12.

- 13. Armijo D, McDonnell C, Werner K. Electronic Health Record Usability: Interface Design Considerations. AHRQ Publication No. 09(10)-0091-2-EF. Rockville, MD: Agency for Healthcare Research and Quality; 2009.

- 14. Poulos J, Zhu L, Shah AD. Data gaps in electronic health record (EHR) systems: An audit of problem list completeness during the COVID-19 pandemic. Int J Med Inform. 2021;150:104452. doi: 10.1016/j.ijmedinf.2021.104452.

- 15. Daskivich TJ, Abedi G, Kaplan SH, et al. Electronic health record problem lists: Accurate enough for risk adjustment? Am J Manag Care. 2018;24:e24-e29.

- 16. Goel AK, Campbell WS, Moldwin R. Structured data capture for oncology. JCO Clin Cancer Inform. 2021;5:194-201.

- 17. Osterman TJ, Terry M, Miller RS. Improving cancer data interoperability: The promise of the minimal Common Oncology Data Elements (mCODE) initiative. JCO Clin Cancer Inform. 2020;4:993-1001.

- 18. Sinaiko AD, Barnett ML, Gaye M, et al. Association of peer comparison emails with electronic health record documentation of cancer stage by oncologists. JAMA Netw Open. 2020;3:e2015935. doi: 10.1001/jamanetworkopen.2020.15935.

- 19. Staging Wars: A New Hope. Oncology Advisory Council. Presented at Epic's Experts Group Meeting (XGM), Verona, WI, April 30-May 4, 2018.

- 20. Cancer Staging: Process, Improvements and Synoptic Staging. Oncology Advisory Council. Presented at Epic's Experts Group Meeting (XGM), Verona, WI, May 6-10, 2019.

- 21. Cha L, Tevaarwerk AJ, Smith EM, et al. Reported concerns and acceptance of information or referrals among breast cancer survivors seen for care planning visits: Results from the University of Wisconsin Carbone Cancer Center Survivorship Program. J Cancer Educ. doi: 10.1007/s13187-021-02015-0. Epub ahead of print on April 26, 2021.

- 22. Li J, Carayon P. Health Care 4.0: A vision for smart and connected health care. IISE Trans Healthc Syst Eng. 2021;11:171-180.

- 23. Ogrinc G, Davies L, Goodman D, et al. SQUIRE 2.0 (Standards for QUality Improvement Reporting Excellence): Revised publication guidelines from a detailed consensus process. Am J Med Qual. 2015;30:543-549.

- 24. Ramirez M, Chen K, Follett RW, et al. Impact of a “chart closure” hard stop alert on prescribing for elevated blood pressures among patients with diabetes: Quasi-experimental study. JMIR Med Inform. 2020;8:e16421. doi: 10.2196/16421.

- 25. Cecchini M, Framski K, Lazette P, et al. Electronic intervention to improve structured cancer stage data capture. JCO Oncol Pract. 2016;12:e949-e956.

- 26. Murphy DR, Satterly T, Giardina TD, et al. Practicing clinicians' recommendations to reduce burden from the electronic health record inbox: A mixed-methods study. J Gen Intern Med. 2019;34:1825-1832.

- 27. Gajra A, Bapat B, Jeune-Smith Y, et al. Frequency and causes of burnout in US community oncologists in the era of electronic health records. JCO Oncol Pract. 2020;16:e357-e365.

- 28. Agency for Healthcare Research and Quality (AHRQ). Health Literacy Universal Precautions Toolkit. ed 2. https://www.ahrq.gov/health-literacy/improve/precautions/tool2b.html

- 29. Willett DL, Kannan V, Chu L, et al. SNOMED CT concept hierarchies for sharing definitions of clinical conditions using electronic health record data. Appl Clin Inform. 2018;9:667-682.

- 30. Amin MB, Greene FL, Edge SB, et al. The Eighth Edition AJCC Cancer Staging Manual: Continuing to build a bridge from a population-based to a more “personalized” approach to cancer staging. CA Cancer J Clin. 2017;67:93-99.

- 31. Reid PP, Compton WD, Grossman JH, et al, editors. Building a Better Delivery System: A New Engineering/Health Care Partnership. Washington, DC: National Academies Press (US); 2005.

- 32. Lee JH, Mohamed T, Ramsey C, et al. A hospital-wide intervention to improve compliance with TNM cancer staging documentation. J Natl Compr Canc Netw. 2021;20:351-360.e1.