Abstract

Published choice experiments linked to various aspects of the COVID-19 pandemic are analysed in a rapid review. The aim is to (i) document the diversity of topics as well as their temporal and geographical patterns of emergence, (ii) compare various elements of design quality across different sectors of applied economics, and (iii) identify potential signs of convergent validity across findings of comparable experiments. Of the N = 43 choice experiments published during the first two years of the pandemic, the majority concern health applications (n = 30), followed by transport-related applications (n = 10). Nearly 100,000 people across the world responded to pandemic-related discrete choice surveys. Within health applications, the dominant theme up until June 2020 was lockdown relaxation and tracing measures, after which the focus shifted abruptly to vaccine preference. Geographical origins of the health surveys were not diverse: nearly 50% of all health surveys were conducted in only three countries, namely the USA, China and the Netherlands. Health applications exhibited stronger pre-testing and larger sample sizes compared to transport applications. Limited signs of convergent validity were identifiable. Within some applications, issues of temporal instability as well as hypothetical bias attributable to social desirability, protest response or policy consequentiality seemed likely to have affected the findings. Nevertheless, very few of the experiments implemented measures of hypothetical bias mitigation and those were limited to health studies. Our main conclusion is that swift administration of pandemic-related choice experiments has overall resulted in certain degrees of compromise in study quality, but this has been more so the case in relation to transport topics than health topics.

Keywords: Discrete choice experiments, Stated choice experiments, Survey design, COVID-19, pandemic

1. Introduction

COVID-19 has had a profound impact on the lives of everyone around the world since early 2020. Due to the rarity of global pandemics, researchers have been keen to analyse the behavioural impact of COVID-19 using choice experiments and choice modelling in various domains. COVID-19 has significant consequences for public health and has strained hospitals and people working in health care. To slow infection rates, many governments have imposed restrictions and lockdowns to reduce mobility. It is, therefore, no surprise that choice modellers have mostly analysed health-related and travel-related choice behaviour. What is surprising is the speed with which choice experiments were designed and implemented, with the first surveys containing choice experiments sent out mere weeks after the start of the pandemic.

In this review, we summarise choice experiments related to COVID-19 that have appeared in the literature, with the aim of documenting the diversity of topics and their emergence, comparing experimental design quality across different applied economics areas, and identifying whether similar choice experiments exhibit convergent validity.

2. Methods

The focus of the analysis is on peer-reviewed discrete choice experiments published in the scholarly literature that are related to any aspect of the COVID-19 pandemic. In order to obtain this set of references, we modified the previously established search query of Haghani et al. (2021a), formulated to source and track the overall literature of discrete choice modelling. One advantage of such a query string is that it can be modified to produce specific subsets of interest within the literature. We did so, in this case, by combining the query string with a set of terms that characterise pandemic-related studies. Specifically, we combined the said query, using the Boolean operator AND, with the string (“Coronavirus” OR “COVID-19” OR “SARS-COV-2”). Details of this search query are given in the Appendix.
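The query combination described above can be illustrated with a minimal Python sketch. The pandemic terms are taken from the text; `BASE_QUERY` is a hypothetical placeholder and not the actual query of Haghani et al. (2021a), which is documented in the Appendix. The function names are likewise illustrative.

```python
# Hypothetical stand-in for the base choice-modelling query; the real
# query string is the one documented in the paper's Appendix.
BASE_QUERY = '"discrete choice experiment" OR "stated choice"'

# Pandemic-related terms as listed in the text.
PANDEMIC_TERMS = ["Coronavirus", "COVID-19", "SARS-COV-2"]


def or_group(terms):
    """Quote each term, join the terms with OR, and wrap them in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def combine_with_and(base_query, terms):
    """Restrict a base query to records that also match one of the terms."""
    # The base query is parenthesised so that its own OR operators
    # cannot bind across the AND.
    return f"({base_query}) AND {or_group(terms)}"


query = combine_with_and(BASE_QUERY, PANDEMIC_TERMS)
print(query)
```

Parenthesising both sides keeps the Boolean precedence explicit, which matters when the base query itself contains OR operators.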

The search was conducted at the end of 2021 in the Web of Science. An initial set of slightly more than 100 peer-reviewed articles detected by this search was screened to retain those that specifically report on a discrete choice experiment. Studies that used non-experimental choice data, i.e., revealed choice, were excluded. The screening was carried out by the first author based on the main inclusion criterion of identifying studies disseminated as peer-reviewed journal articles and reporting on a discrete choice experiment in a context related to the COVID-19 pandemic. After screening, a total of N = 43 articles met the inclusion criteria. Consistent with Haghani et al. (2021b) and Haghani et al. (2021c), the articles were further classified into the subdomains of health, transport, environmental and marketing/consumer studies. This determination was made based on the nature and topic of the choice experiment reported in the paper. This processing identified n = 30 experiments related to health, n = 10 experiments related to transport, and n = 2 and n = 1 experiments related to marketing and environmental topics, respectively.

The text of each paper in the dataset was fully examined and the following information was extracted for each: (1) the country or countries where the experiment was conducted, (2) the topic of the choice experiment, (3) the list of attributes, (4) the period of time during which the experiment was undertaken, (5) the sample size, and whether the sample was representative of a generic population or a specific population within that country, (6) whether any pilot study or focus group interview was conducted during the design process, (7) the type of design and number of choice sets, (8) whether the experiment design implemented any measure of testing internal validity, (9) whether the experiment took any explicit measure to mitigate potential hypothetical bias, and (10) the key findings. Information on item (10) was subsequently used as the basis for assessing convergent validity of studies that were conducted on the same or comparable topics. Since only a few studies were conducted in the contexts of marketing or the environment, most of our comparisons are between health and transport-related experiments.

3. Results

3.1. Geographical distribution of choice experiments

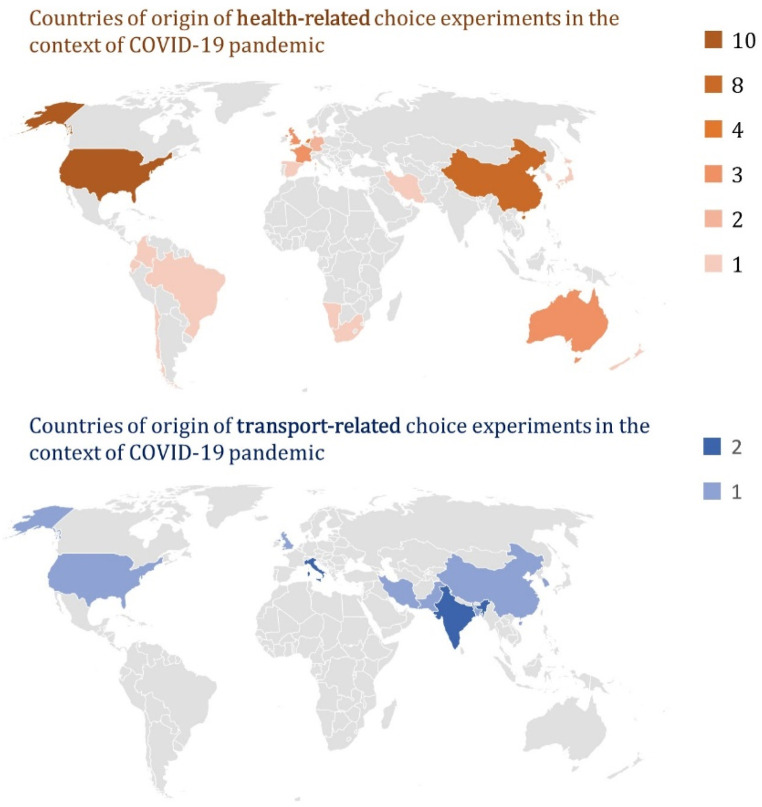

Pandemic-related choice experiments were reported in a total of 25 countries around the world. The distribution of the origin of these experiments in health and transport is visualised in Fig. 1. The geographical distribution differs markedly between health and transport. Sixty percent of transport choice experiments were conducted in Asian countries, whereas the USA, Australia and European countries had a more noticeable representation in health experiments. In transport, only India and Italy reported more than one choice experiment (n = 2 each) and the remaining countries (Iran, USA, South Korea, China, UK, Pakistan, Bangladesh) reported one experiment each, making a total of nine countries. In comparison, twenty countries were involved in health-related experiments. Nearly fifty percent of health-related experiments, however, were concentrated in three countries, the USA (n = 10), China (n = 8) and the Netherlands (n = 4), followed by Australia, France and the UK with n = 3 reported experiments each. It should also be noted that one single experiment, reported by Hess et al. (2022), was administered in 18 countries. If we exclude that study, the percentage of experiments conducted in the top three countries would be nearly 60% as opposed to 50%. The vast majority of health experiments targeted the population of only a single country. Other than the experiment of Hess et al. (2022), only one experiment in health, that of Liu et al. (2021a), extended across more than one country (USA and China). Transport experiments were likewise mostly limited to the population of a single country, except for the survey of Zannat et al. (2021), which drew samples from both Bangladesh and Pakistan. Both marketing-related experiments were conducted in the USA (Grashuis et al., 2020; Park and Lehto, 2021), whereas the sample for the single reported environmental study was drawn from the populations of Canada, Norway and Scotland.

Fig. 1.

The number of health-related and transport-related discrete choice surveys in the context of COVID-19 conducted across the world during the first two years of the pandemic. The temporal month-by-month emergence of these experiments has been visualised and is accessible via the online supplementary material of this article.

The vast majority of the reported surveys drew samples that were representative of generic populations as opposed to specific cohorts. The exceptions are a few studies that targeted specific segments of the population: Huang et al. (2021) approached clinicians, Li et al. (2021b) and Ceccato et al. (2021) approached university students, Luevano et al. (2021) approached health-care workers, Manca et al. (2021) approached frequent flyers, and Park and Lehto (2021) approached hotel guests.

3.2. Common themes in health-related choice experiments

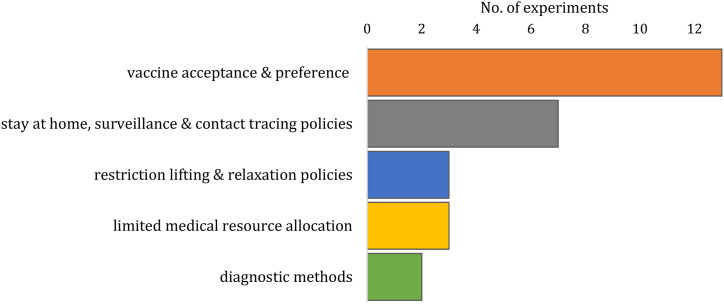

Examination of the health-related experiments revealed that each could be assigned to one of five major themes. These are, in order of frequency, preferences for (1) vaccine attributes (Borriello et al., 2021; Craig, 2021; Dong et al., 2020; Eshun-Wilson et al., 2021b; Hess et al., 2022; Huang et al., 2021; Kreps et al., 2020; Leng et al., 2021; Li et al., 2021b; Liu et al., 2021a; Luevano et al., 2021; McPhedran and Toombs, 2021; Schwarzinger et al., 2021), (2) non-pharmaceutical preventative measures such as stay-at-home, social distancing, surveillance and contact tracing policies (Degeling et al., 2020; Eshun-Wilson et al., 2021a; Genie et al., 2020; Jonker et al., 2020; Li et al., 2021a; Mouter et al., 2021; Rad et al., 2021), (3) restriction lifting/relaxation policies and exit strategies (Chorus et al., 2020; Krauth et al., 2021; Reed et al., 2020), (4) allocation of limited medical resources and the associated dilemmas (e.g., ICU capacities, vaccines) (Gijsbers et al., 2021; Luyten et al., 2021; Michailidou, 2021) and (5) diagnostic and testing methods (Katare et al., 2022; Liu et al., 2021b). Fig. 2 shows the frequency of each of these themes within our dataset of references. Studies on preferences for and uptake of vaccines constituted the dominant theme in health, followed by public preferences for non-pharmaceutical preventative policies, i.e., stay-at-home, surveillance and contact tracing policies.

Fig. 2.

Frequency of major common themes in health-related discrete choice experiments in the context of the COVID-19 pandemic.

3.3. Common themes in transport-related choice experiments

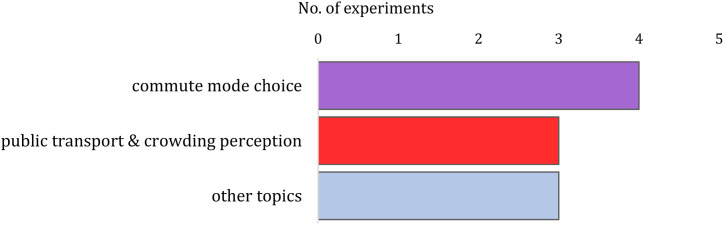

Within the transport domain, only two recurring themes were identifiable. These are preferences for commute mode choice during the pandemic (Ceccato et al., 2021; Luan et al., 2021; Scorrano and Danielis, 2021; Xu et al., 2021) and attitudes towards public transport use and passenger crowding perception in public transport vehicles (Aaditya and Rahul, 2021; Aghabayk et al., 2021; Park and Lehto, 2021). Three individual studies were also detected that did not fit either of the two major categories: the experimental survey of Cherry et al. (2021) on willingness to pay for travel time saving and reliability, the survey of Manca et al. (2021) on attitude towards air travel, and the survey of Zannat et al. (2021) on shopping trip behaviour. Fig. 3 visualises these relative frequencies.

Fig. 3.

Frequency of major common themes in transport-related discrete choice experiments in the context of the COVID-19 pandemic.

3.4. Topics of choice experiments in marketing and environmental sciences

Two pandemic-related choice experiments were attributable to topics that are typically studied in the marketing and consumer choice domain: the survey of Grashuis et al. (2020) on grocery shopping preferences and that of Park and Lehto (2021) on hotel choice during the pandemic. Both surveys were conducted in the USA and in the early stages of the pandemic (i.e., May and March 2020, respectively).

Only one study was attributable to the environmental domain, namely the work of Hynes et al. (2021). The topic of the survey, per se, is not related to the pandemic; in fact, the survey was first conducted during the years prior to the pandemic. However, the authors repeated the survey during the early stages of the pandemic in order to test potential effects of COVID-19 and the temporal stability of preferences and willingness to pay for environmental benefits.

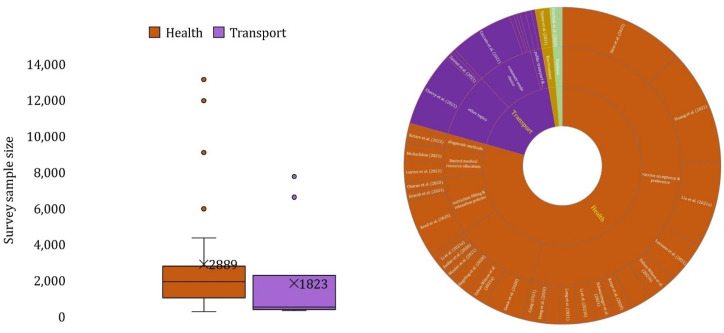

Readers can see the list of all qualified studies along with their major category and the theme within their category in Fig. 4. Two supplementary videos of this article also demonstrate the temporal sequence and emergence of these choice experiments within the health and transport domains and across various geographical regions. The supplementary videos provide the general theme of each survey, its time and location of implementation, its sample size, and its key finding(s).

Fig. 4.

Pandemic-related discrete choice experiments across various divisions of applied economics.

3.5. Temporal trends in pandemic-related choice experiments

Fig. 5 shows a timeline of all health- and transport-related choice experiments based on the month in which the data collection started. The colour-coding is consistent with that of Figs. 2, 3 and 4 and indicates the theme of each experiment. This analysis shows that, in the health domain, during the early stages of the pandemic, i.e., the first half of 2020, issues surrounding non-pharmaceutical preventative measures as well as restriction relaxation constituted the dominant foci of the experiments. Of the nine experiments conducted before June 2020, seven were related to these two themes. This is understandable: in the absence of pharmaceutical solutions to the pandemic during that period, the focus of policy makers around the world was on implementing policies such as lockdowns, travel restrictions, physical distancing and contact tracing, as well as on planning exit strategies from such measures. With the prospect of COVID-19 vaccines becoming a reality, however, health economists shifted their focus towards issues related to vaccine preference and uptake from June 2020 onwards. An exception to this trend is the experiment of Borriello et al. (2021), which investigated preferences of Australians for vaccine attributes as early as March 2020. The longest duration of data collection is reported in Hess et al. (2022), whose data collection on the topic of vaccine uptake, conducted across 18 nations, ran between July 2020 and March 2021. Towards the end of 2020 and early 2021, vaccines became the dominant topic in choice experiments. Health-related choice experiments conducted since November 2020 are exclusively on the topic of vaccines.

Fig. 5.

Temporal sequence of discrete choice surveys conducted on health and transport-related topics in the context of the COVID-19 pandemic.

Unlike health studies, choice experiments in the transport sector do not exhibit a distinct temporal pattern.

3.6. Comparison of sample sizes across health and transport-related choice experiments

Stark contrasts were observable between the health and transport domains in terms of the sample sizes of their choice experiments. The average and median sample sizes of health-related experiments are 2 889 and 1 915, respectively, whereas the corresponding figures for transport experiments are 1 823 and 509. Pandemic-related choice experiments in the domain of health have overall been conducted using noticeably larger samples. This difference is visualised in the form of a box-and-whisker plot in Fig. 6. It may be explained by differences in available budget in the two disciplines.
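The gap between the mean and the median reported for transport (1 823 versus 509) is the kind of pattern that arises when a few large surveys sit alongside many smaller ones. The following minimal Python sketch illustrates that effect; the sample sizes in `illustrative_sizes` are made up for illustration and are not the values of the reviewed studies.

```python
from statistics import mean, median

# Hypothetical sample sizes, NOT taken from the reviewed studies:
# five modest surveys and one very large one.
illustrative_sizes = [200, 350, 500, 520, 900, 9000]

average_size = mean(illustrative_sizes)
median_size = median(illustrative_sizes)

# A single large survey pulls the mean well above the median,
# which is why both statistics are worth reporting side by side.
print(f"mean = {average_size:.0f}, median = {median_size:.0f}")
```

Reporting both statistics, as the text does, guards against one or two very large surveys dominating the comparison between domains.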

Fig. 6.

Distribution of sample sizes of choice experiments conducted in the health and transport domains (on the left) and visualisation of individual studies (replica of Fig. 4) proportional to their sample sizes (on the right).

3.7. Comparison of piloting and use of focus-groups across health and transport-related choice experiments

In addition to larger sample sizes, piloting and the use of focus groups in experiment design were far more prevalent in health-related surveys. Studies in health contexts conducted as early as March 2020 reported on conducting focus-group interviews during their design process (Rad et al., 2021). Other examples are the survey of Leng et al. (2021) (July 2020) and that of Dong et al. (2020). Seven experiments in total reported on conducting a pilot study, all of them in the health domain (i.e., 23% of the health studies reported on piloting their survey, compared with 0% in transport). Li et al. (2021a) reported on piloting the design with faculty members, graduate and undergraduate students, survey design specialists and members of the public. Mouter et al. (2021) collected a pilot sample of 80 respondents and also interviewed six experts (for a survey conducted in May 2020). Jonker et al. (2020) reported on two rounds of pilot testing (with 238 and 260 respondents) while their main survey was conducted in April 2020. Huang et al. (2021) pre-tested the survey on a pilot sample of 20 participants (main survey conducted in March 2021). The survey of Eshun-Wilson et al. (2021b) was piloted with a sample of 100 anonymous participants. Eshun-Wilson et al. (2021b) and Liu et al. (2021a) also reported on recruiting a pilot sample, though the size of the sample was not reported. Even a number of health-related studies that skipped the piloting phase acknowledged that explicitly. For example, Reed et al. (2020), who conducted their survey in May 2020, mentioned in their paper that they prioritised expediency at the cost of some of the standard procedures of choice experiment design, and that they did not pilot the survey and rather relied on informal feedback from a sample of colleagues and friends. Similarly, Li et al. (2021a), who conducted their experiment in August 2020, acknowledged that they did not seek any focus group feedback due to time restrictions. The elements of pre-testing, focus groups and piloting, however, appear to be missing from most transport surveys. The more rigorous pre-testing and piloting of health-related studies may be driven by the various guidelines and standard procedures that exist for conducting a choice experiment in health.

3.8. Comparison of design methods across health and transport choice experiments

Of the 30 choice experiments in health, half used an experimental design that was optimised for efficiency; orthogonality was also a frequently used criterion (n = 10). A similar finding is observed in the transport context, where five out of the ten studies reported the use of an efficient experimental design, while the others mostly reported orthogonality as the main design criterion. It is noteworthy that, in three choice experiments in transport, the attribute levels are pivoted around individual reference levels (Cherry et al., 2021; Manca et al., 2021; Scorrano and Danielis, 2021). Four studies do not provide information about how the choice sets were created, two amongst health studies (Katare et al., 2022; Michailidou, 2021) and two amongst transport studies (Aaditya and Rahul, 2021; Scorrano and Danielis, 2021).
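To make the idea of pivoting concrete, the following minimal Python sketch derives attribute levels as relative shifts around a respondent's own reference value. The function name, the percentage shifts and the 30-minute reference trip are illustrative assumptions and are not taken from any of the cited studies.

```python
def pivot_levels(reference, relative_changes=(-0.2, 0.0, 0.2)):
    """Return attribute levels as relative shifts around a reference value.

    `reference` is the value the respondent reported for a recent trip;
    `relative_changes` are hypothetical shifts (here -20%, 0% and +20%).
    """
    return [round(reference * (1 + change), 2) for change in relative_changes]


# Hypothetical respondent who reported a 30-minute commute.
travel_time_levels = pivot_levels(30)
print(travel_time_levels)
```

Because each respondent sees levels anchored to their own experience, the alternatives presented stay within a range they are likely to find realistic.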

3.9. Comparison of internal validity measures across health and transport choice experiments

Two of the surveyed studies in total reported on implementing measures of internal validity. Both studies were in health contexts. In the experiment of Jonker et al. (2020), one out of the fifteen choice sets presented to participants was a duplicate as a test of internal validity. In the experiment of Dong et al. (2020), a trap question, a choice set that includes an alternative with dominant attributes, was presented as a test for internal validity.
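The two kinds of check described above, a repeated choice set and a choice set containing a dominant alternative, can be sketched in Python as follows. This is a minimal illustration under assumed data structures; the attribute names, values and function names are hypothetical and do not reproduce the procedures of Jonker et al. (2020) or Dong et al. (2020).

```python
def dominates(a, b, higher_is_better):
    """True if `a` is at least as good as `b` on every attribute
    and strictly better on at least one."""
    strictly_better = False
    for attribute, higher in higher_is_better.items():
        sign = 1 if higher else -1  # flip attributes where lower is better
        if sign * a[attribute] < sign * b[attribute]:
            return False
        if sign * a[attribute] > sign * b[attribute]:
            strictly_better = True
    return strictly_better


def passes_repeat_test(choices, first_index, repeat_index):
    """True if the respondent chose the same alternative in a choice set
    and in its duplicate."""
    return choices[first_index] == choices[repeat_index]


def passes_dominance_test(alternatives, chosen, higher_is_better):
    """True if the respondent chose the dominant alternative, or if the
    choice set contains no dominant alternative at all."""
    for i, candidate in enumerate(alternatives):
        others = [alt for j, alt in enumerate(alternatives) if j != i]
        if all(dominates(candidate, other, higher_is_better) for other in others):
            return chosen == i
    return True


# Hypothetical attributes: higher effectiveness is better, lower risk is better.
DIRECTIONS = {"effectiveness": True, "side_effect_risk": False}
trap_set = [
    {"effectiveness": 90, "side_effect_risk": 1},  # dominant alternative
    {"effectiveness": 70, "side_effect_risk": 5},
]
# Hypothetical responses; the set at index 3 duplicates the set at index 1.
choices = [0, 1, 0, 1]

print(passes_repeat_test(choices, 1, 3))
print(passes_dominance_test(trap_set, 0, DIRECTIONS))
```

Respondents who fail such checks are usually flagged for sensitivity analysis rather than dropped automatically; how to treat them is a design decision for the analyst.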

3.10. Signs of convergent validity in pandemic-related choice experiments

The multitude of choice experiments that were conducted on the same topic during the pandemic presents a unique opportunity to investigate the convergent validity of these experiments, that is, whether comparable experiments conducted independently have produced consistent findings. In addition, given that experiments on the topic of vaccines in particular were spread over various stages of the pandemic and over various geographical regions, there may be opportunities for investigating the temporal stability and population validity of choice experiments within this specific context.

The context of vaccine preference is the one in which choice experiments show the highest degree of consistency in design and attribute definitions, and are therefore most comparable. The most common point of convergence across these studies has been the importance for uptake of vaccine effectiveness, as reflected in the relative magnitude of the coefficient estimated for this attribute (Craig, 2021; Dong et al., 2020; Hess et al., 2022; Leng et al., 2021; Li et al., 2021b; Liu et al., 2021a; McPhedran and Toombs, 2021), and of vaccine safety (side effects) (Borriello et al., 2021; Craig, 2021; Hess et al., 2022; Huang et al., 2021; Leng et al., 2021; Li et al., 2021b; Liu et al., 2021a). Two studies also found the country of manufacture of the vaccine to be a significant attribute (Li et al., 2021b; Schwarzinger et al., 2021). An association between the likelihood of acceptance and respondents’ education level was independently found by three studies (Craig, 2021; Leng et al., 2021; Schwarzinger et al., 2021). A wide range of predicted uptake was reported by these studies: 86% (Australia, March 2020) (Borriello et al., 2021), 29% (France, June 2020) (Schwarzinger et al., 2021), 85% (China, June 2020) (Leng et al., 2021), 69% (USA, Nov 2020).

We next consider the three studies whose experiments were in the context of lockdown strategies (Chorus et al., 2020; Krauth et al., 2021; Reed et al., 2020). While the designs of the three surveys are not entirely consistent in terms of the attributes and framing of questions, they were experiments in a comparable context, all conducted around the same time (during early stages of the pandemic, in March and April 2020) and in culturally comparable countries (Germany, the Netherlands and the USA). The identification of preference heterogeneity for lockdown policies in the form of multiple classes of people was a common finding of Chorus et al. (2020) and Reed et al. (2020), although the former survey suggested three classes and the latter suggested four classes. Another common observation was the moderating effect of individual characteristics on preferences (Krauth et al., 2021; Reed et al., 2020). People's willingness to make individual/societal financial sacrifices in favour of saving lives, as a dominant preference, was another repeated observation (Chorus et al., 2020; Reed et al., 2020).

In the context of contact tracing apps, two surveys estimated the uptake, both in the Netherlands and both during early stages of the pandemic (Jonker et al., 2020; Mouter et al., 2021). The difference between their predicted uptake, however, is stark (50%–65% versus 24%–78%). A plausible explanation for this discrepancy is that the study of Jonker et al. (2020) took place in April, just before the peak of infections was reached (i.e., when individuals did not yet know how high the peak of the infection rate would be or when it would end), whereas the study of Mouter et al. (2021) took place in May (i.e., when individuals knew that the peak, which occurred sometime in April 2020, was over).

We did not detect any notable sign of convergent validity across studies concerning resource allocation. This is not, per se, a sign of invalidity of their findings, but rather stems from differences of their designs and attribute definitions. The same holds for the surveys conducted in transport contexts.

3.11. Hypothetical bias mitigation in pandemic-related choice experiments

The potential presence of hypothetical bias and its effect on findings and predictions of discrete choice experiments have been documented in previous work (Haghani et al., 2021b). In Haghani et al. (2021c), ten major factors were discussed that could potentially engender such bias in choice experiments. Their role varies across contexts and applications of choice experiments. In other words, in certain applications of choice experiments the potential role of some sources of hypothetical bias is more prominent than others. For example, “lack of familiarity and contextual tangibility” has been listed as a potential factor. But for surveys that were conducted in the middle of the pandemic and in relation to issues such as lockdown and social distancing, this has probably not been a major source of bias. People around the world all lived through this pandemic and the disease prevention restrictions, and the language around these policies was dominating the news. People were therefore familiar with these policies, and would not have found the context of such surveys intangible. We believe that, of these ten factors, three are most likely to be a major source of any potential hypothetical bias in pandemic-related choice experiments: (1) lack of (perceived) policy (societal) consequentiality, (2) strategic behaviour, protest response and deceit, and (3) warm glow and social desirability. These three factors are discussed below.

During the time when these surveys were conducted, governments around the world were making and revising policies dynamically, depending on the state of disease spread and on the medical knowledge that was emerging about its nature. With time being a pressing issue in relation to these policies, it is plausible that respondents of some of the pandemic-related choice surveys had doubts about whether the results obtained from their responses would make it into actual policy and whether their expressed preferences would translate into decision-making at the highest levels of governance. Among the studies that published pandemic-related choice surveys, only one addressed this issue. Li et al. (2021a) carried out their choice experiment to study people's willingness to follow stay-at-home orders in the USA. They report on implementing a policy consequentiality script as well as a perceived consequentiality questionnaire, two mitigation measures that specifically target the abovementioned source of hypothetical bias.

With respect to surveys on vaccine preference and uptake, respondents would likely have understood that the survey mainly served to predict people's willingness to be vaccinated, while pharmaceutical companies were developing and trialling the vaccines. The majority of vaccine surveys were conducted in 2020, while pharmaceutical companies only made vaccines accessible to governments from early 2021. Therefore, it is plausible that people did not rule out the potential impact of their responses on policies surrounding vaccine rollouts and mandates. This could potentially be a reason for people with some degree of vaccine hesitancy to, for example, protest in their responses against any hypothetical mandate. Craig (2021) reported on administering an honesty oath script to respondents prior to the actual vaccine preference survey as a way of mitigating potential hypothetical bias that could stem from this source.

The hypothetical choice questions put to respondents in certain pandemic-related surveys pose certain social dilemmas to them (Chorus et al., 2020; Gijsbers et al., 2021; Luyten et al., 2021; Michailidou, 2021). In such circumstances it is plausible to assume that respondents may respond in ways that depict a more socially desirable picture of themselves. They may have personal desires, for example, for lockdowns or social distancing restrictions to be lifted, but may mask that preference during the survey in favour of options that they perceive as more socially acceptable, such as those that indicate that they are willing to make financial sacrifices in order to save lives (Chorus et al., 2020). The same applies to experiments that pose trade-offs in terms of the allocation of vital but limited medical resources, such as vaccine or ICU bed prioritisation (Gijsbers et al., 2021; Michailidou, 2021). The survey of Michailidou (2021), for example, found that participants’ responses often violated optimal allocation of resources to benefit female patients with respect to hospital bed allocation, and that respondents were less likely to allocate resources to higher income groups, while also showing no signs of racial bias. The nature of such questioning makes it possible that the findings are affected by hypothetical bias caused by the warm glow (or social desirability) phenomenon. In order to mitigate this effect, Michailidou (2021) implemented the method of indirect questioning (or third-person response), which is one of the solutions for hypothetical bias caused by the social desirability effect (see Fig. 3 in Haghani et al. (2021c)). They contrasted that with responses to direct questioning and found a mismatch, which is consistent with the abovementioned theory about hypothetical bias. They explain this discrepancy in the following words: “This mismatch between choices and beliefs might be due to participants overestimating the extent to which minorities experience discrimination or, due to participants showing less discrimination because of social desirability bias, yet projecting their racial biases when asked about the choices of others” (p. 5).

Transport-related surveys, on the other hand, did not report on implementing distinct measures of hypothetical bias mitigation. Cherry et al. (2021) mentioned that they asked additional opinion and attitudinal questions following the main survey to identify strategic bias. Zannat et al. (2021) also reported on combining their hypothetical choice response data with (self-reported, survey-based) revealed preference data. In addition, three studies implemented pivot designs, which essentially aim to create familiarity and reduce potential hypothetical bias (Cherry et al., 2021; Manca et al., 2021; Scorrano and Danielis, 2021).

4. Concluding remarks

The COVID-19 pandemic created many unprecedented problems that required policy makers to know about preferences of people in novel contexts that had not been studied prior to the pandemic. These include problems related to the preferences of people for accepting or adhering to a range of pharmaceutical and non-pharmaceutical disease control measures, as well as problems related to their mobility and travel behaviour. These areas, i.e., health and transport, are two domains where choice modellers are typically active and present (Haghani et al., 2021a). As a result, and prompted by these urgent societal needs imposed by the pandemic, choice modellers mobilised their efforts to address these problems and conducted more than forty experiments during the first two years of the pandemic. Some of these experiments began merely weeks after the official declaration of the global pandemic.

It is understandable that the urgency of some of the problems created by the pandemic might have justified researchers fast-tracking the design and execution of their studies. The pressing nature of many of these unprecedented problems and the urgent need to obtain knowledge that can guide policy making have, in many cases, been reflected in elements of design quality in pandemic-related choice experiments. In designing a typical choice experiment, researchers often have adequate time to conduct focus-group interviews, run pre-tests and pilot experiments, and use the resulting feedback to enhance the quality and rigour of the main experiment, a practice which is assumed to eventually be reflected in more accurate estimates and predictions. This process may often take several months or even years. In the context of COVID-19 experiments, however, the luxury of time was not present, and many studies had to compromise on these fronts, although to varying degrees.⁵

After carefully analysing these experiments, it became evident that experiments in the health domain applied more elements of quality control and hypothetical bias mitigation than their transport counterparts. Although health-related experiments were, on average, conducted earlier than transport surveys, they reported elements of pre-testing and piloting far more often.⁶ Moreover, health experiments collected substantially larger samples and, to a less noticeable degree, paid closer attention to pre-empting or mitigating hypothetical bias in their design.
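As a rough illustration of the sample-size contrast, the sketch below compares the median sample sizes of the health and transport experiments. The figures are transcribed from the "sample size" column of Appendix A as listed there (one entry per table row, including the planned sample of the Genie et al. (2020) protocol); the variable names are illustrative, and the median is used here only as a convenient summary, not as the measure underlying the review's own analysis.

```python
from statistics import median

# Sample sizes transcribed from Appendix A, in table order (one entry per row).
health = [
    2136, 1009, 1153, 2008, 1236, 2428, 2895, 4000, 243, 13128,
    11951, 900, 1505, 1020, 1971, 1888, 731, 1941, 428, 9077,
    4346, 2060, 1501, 1842, 990, 617, 5953, 1942,
]
transport = [410, 590, 6598, 7743, 623, 428, 388, 315, 318, 815]

# The median is less sensitive than the mean to a few very large surveys.
health_median = median(health)
transport_median = median(transport)
ratio = health_median / transport_median

print(f"health median:    {health_median}")
print(f"transport median: {transport_median}")
print(f"ratio:            {ratio:.2f}")
```

With the values as listed, the median health sample is between three and four times the median transport sample. Two transport entries (Ceccato et al., 2021; Cherry et al., 2021) are far larger than the rest, which is why a mean would be a less informative summary in this case.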

We argue that the abovementioned observation could reflect a broader problem, whereby choice modellers in the health sector follow more unified guidelines in their designs than choice modellers in transport. If future studies confirm that this is the case (i.e., that it is not specific to pandemic-related studies per se), then it would be advisable for transport researchers to adopt existing guidelines that are common in health, or to develop guidelines tailored to the choice problems typically studied in transport. A recent study of the effectiveness of hypothetical bias mitigation methods observed that health economists pay significantly more attention to hypothetical bias mitigation than transport researchers (Haghani et al., 2021c). We therefore cannot rule out the possibility that the observations of the current study also reflect a broader difference in experimental design culture across these two sectors, a problem that warrants attention. A tentative conclusion is that transport researchers need to pay more attention to the pre-testing phase and to qualitative research in their choice experiment design.

What was further noticeable in the contrast between health and transport-related experiments was the relative popularity of pivot designs in transport and the absence of this method in health. Transport researchers have essentially developed and adopted this method as a means of creating contextual familiarity for survey respondents and thereby reducing hypothetical bias. A previous study also noted the unique popularity of this technique in transport, compared to all other areas of applied economics (Haghani et al., 2021c). That observation was also reflected in the pandemic-related choice experiments reviewed here, where three out of ten transport surveys adopted this technique, whereas, in line with the broader trend, the method was absent in health. We do not, however, necessarily see this as a reason to encourage choice modellers in health to adopt the pivoting method. The nature of choice surveys in transport and travel behaviour is such that individual respondents are often familiar with different choice sets, different attributes or different attribute levels. Therefore, for a typical commuter to be able to relate to the survey, it is often useful to pivot the attributes around their experienced levels. This is not often the case in health-related contexts. In surveys of vaccine preference or restriction policies, for example, there is little variation in what individual respondents may be experiencing in terms of options (e.g., policies, vaccines) or characteristics of those options. Hence, the absence of this method in health, in our assessment, stems mainly from fundamental differences in the contexts of choice that health and transport researchers typically investigate.
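The pivoting idea described above can be sketched in a few lines. The example is a minimal illustration and not the procedure of any of the reviewed studies: the function name `pivot_levels`, the attribute names and the percentage offsets are all hypothetical. It generates attribute levels for a choice task as percentage shifts around the values that a respondent reports for a recent trip.

```python
from typing import Dict, List


def pivot_levels(
    reference: Dict[str, float],
    pct_offsets: Dict[str, List[int]],
) -> Dict[str, List[float]]:
    """Build attribute levels as percentage shifts around a respondent's
    self-reported reference values (hypothetical helper for illustration)."""
    levels: Dict[str, List[float]] = {}
    for attribute, offsets in pct_offsets.items():
        base = reference[attribute]
        # Each level is the experienced value shifted by pct percent.
        levels[attribute] = [round(base * (100 + pct) / 100, 2) for pct in offsets]
    return levels


# Hypothetical respondent: a 30-minute commute with a fare of 4 currency units.
commuter = {"travel_time_min": 30.0, "fare": 4.0}
# Hypothetical design: levels at -20%/0%/+20% for time, -25%/0%/+25% for fare.
offsets = {"travel_time_min": [-20, 0, 20], "fare": [-25, 0, 25]}

levels = pivot_levels(commuter, offsets)
print(levels)
```

Because each respondent supplies their own reference values, two commuters with different trips see different absolute levels but the same relative design. This is what makes the alternatives feel familiar in transport surveys, and it is less applicable where respondents share essentially the same options, as in the vaccine or restriction-policy surveys discussed above.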

The concurrent and independent investigation of similar choice problems in the context of COVID-19 across the world also offered a unique opportunity to investigate the question of convergent validity in choice experiments. While no two choice experiments on any topic were conducted identically, and despite differences in design, we were able to detect noteworthy signs of convergent validity, i.e., consistency in the findings of independent studies using independent samples and surveys. This could be a promising indication, and further evidence in support of the assumption that what choice experiments capture could be reliable reflections of people's preferences and reasonable proxies for true behaviour. There were even signs of convergent validity across experiments conducted in different countries and using samples from populations with major cultural differences. At the same time, we should note that the issue of temporal stability was also flagged in the context of COVID-19 experiments. We observed that the timing of surveys often made a difference to their predictions. We suggest that this issue, i.e., the temporal stability of the findings of choice experiments, is one that warrants further attention from future studies.

An important dimension that was not particularly analysed in this review was the type of survey instrument used by studies. A large number of studies (particularly in health) (Craig, 2021; Eshun-Wilson et al., 2021b; Genie et al., 2020; Gijsbers et al., 2021; Katare et al., 2022; Li et al., 2021a) reported on administering their surveys through major survey platforms such as Qualtrics, sampling from the panel of respondents of those companies. Web-based survey tools often provide flexibility to randomize question order and cost-effectively select targeted populations. This, however, contrasts with the use of social media platforms such as Facebook, LinkedIn, Twitter, WhatsApp or Instagram for participant recruitment, particularly in some transport studies (Aaditya and Rahul, 2021; Xu et al., 2021; Zannat et al., 2021). It is unclear how the use of unofficial recruitment platforms such as social media might have impacted on the perceived consequentiality of the surveys (on respondents’ part), and thereby, validity of the results. Given the topical nature of COVID-19, it is possible that some respondents might have simply engaged with such surveys on social media out of curiosity and merely to explore what is being questioned. Whether or to what extent the use of these different participant recruitment instruments could impact on the accuracy of results is unclear, but the disparity between health and transport studies in this area is also striking (in line with other elements of survey quality documented by this work). This constitutes another issue that could be taken into consideration by future discrete-choice experiments that will perhaps keep emerging on COVID-19 related topics (Buchanan et al., 2021; van den Broek-Altenburg et al., 2021).

CRediT authorship contribution statement

Milad Haghani: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Visualization, Funding acquisition, Writing – original draft. Michiel C.J. Bliemer: Investigation, Visualization, Writing – review & editing. Esther W. de Bekker-Grob: Conceptualization, Writing – review & editing.

Declaration of competing interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

Acknowledgments

This research was funded by Australian Research Council grant DE210100440. The authors are very grateful for the constructive feedback received from two anonymous referees on an earlier version of this work.

Footnotes

1. This is not a unique feature of this particular study. Rather, some of the transport-related experiments are also repeats of previously designed choice surveys that were readministered post pandemic, while observed preference changes were attributed to the pandemic. This includes the studies of Cherry et al. (2021) as well as Cho and Park (2021) and Aghabayk et al. (2021).

2. However, one may also argue that contextual tangibility might not have been an issue with respect to topics such as restrictions and similar measures, while people were living through them. But for issues such as vaccine preference, especially for early studies that were conducted long before the introduction of any COVID-19 vaccine, the issue of familiarity may still have mattered when it comes to the validity of preferences. It is possible that, prior to the introduction of vaccines, people were exposed to a great deal of misinformation and speculation about how they are made and whether they can really be safe and effective, which might have shaped different preferences (e.g., uptake intention) compared to the time when information was available based on vaccine clinical trials and government approval procedures. However, one should note that this dimension of hypothetical bias has always been pertinent to the use of choice experiments in relation to any novel product unavailable in the market at the time of the experiment.

3. In fact, Eshun-Wilson et al. (2021b) established as part of their choice experiments that a vaccine mandate had a negative effect on uptake.

4. Interestingly, Michailidou (2021) did not explicitly acknowledge this as a bias mitigation strategy, or even the issue of potential hypothetical bias, in their paper. They refer to this as eliciting “choices” versus “beliefs”.

5. One may debate whether these accelerated streams of research were justified, or whether it would perhaps have been wiser to conduct these experiments using the established regular procedure but at a slower pace. It is, however, understandable that the research community needed to produce answers for urgent decision-making on matters such as vaccine roll-out or restriction lifting policies. So, in some cases, acceleration of research might have been a necessity.

6. One could argue that the issue of pre-testing and/or piloting is more important in abstract, unfamiliar or novel contexts of choice compared to established and/or tangible choice-making situations with which both the researcher and the respondent have a higher degree of familiarity. From that lens, issues such as “travel time” or “travel fare” may constitute more tangible contexts compared to “treatment options” or “diagnostic methods”. That could partly explain why piloting is more common in health, including in COVID-19 related experimental contexts.

Supplementary data to this article can be found online at https://doi.org/10.1016/j.jocm.2022.100371.

Appendix A—Studies of discrete choice experiments in the context of COVID-19 pandemic

| reference | topic | population | country | time of survey | sample size | design | variables | summary of findings |
|---|---|---|---|---|---|---|---|---|
| health | | | | | | | | |
| Borriello et al. (2021) | vaccine attribute preference & WTP | generic | Australia | Mar 2020 | 2,136 | efficient, 8 sets | | |
| Chorus et al. (2020) | preferences for lockdown relaxation policies | generic | Netherlands | Apr 2020 | 1,009 | efficient, 9 sets | | |
| Craig (2021) | willingness to be vaccinated | generic | US | Nov 2020 | 1,153 | efficient, 8 sets | | |
| Degeling et al. (2020) | preference for covid surveillance technology | generic | Australia | Feb 2020 | 2,008 | efficient, 12 sets | | |
| Dong et al. (2020) | vaccine preference | generic | China | Jun 2020 | 1,236 | efficient, 10 sets | | |
| Eshun-Wilson et al. (2021a) | preference for social distancing policy measures | generic | US | Jun 2020 | 2,428 | near orthogonal, 6 sets | | |
| Eshun-Wilson et al. (2021b) | preferences for vaccine distribution strategies | generic | US | Mar 2021 | 2,895 | near orthogonal, 10 sets | | |
| Genie et al. (2020) | preferences for pandemic response | generic | UK | Aug 2020 | 4,000 | efficient, 8 sets | | n.a. |
| Gijsbers et al. (2021) | preference for ICU priority | generic | Netherlands | Oct 2020 | 243 | orthogonal efficient, 9 sets | | |
| Hess et al. (2022) | vaccine uptake | generic | 18 countries | Aug 2020 | 13,128 | efficient, 6 sets | | |
| Huang et al. (2021) | vaccine preference | clinicians | China | Mar 2021 | 11,951 | fractional factorial, 16 sets | | |
| Jonker et al. (2020) | contact tracing app uptake | generic | Netherlands | Apr 2020 | 900 | near orthogonal, 13 sets | | |
| Katare et al. (2022) | preferences for diagnostic testing features | generic | US | Jul 2020 | 1,505 | not reported, 9 sets | | |
| Krauth et al. (2021) | preferences for lockdown exit strategies | generic | Germany | Apr 2020 | 1,020 | efficient, 16 sets | | |
| Kreps et al. (2020) | vaccine acceptance | generic | US | Jul 2020 | 1,971 | orthogonal, 5 sets | | |
| Leng et al. (2021) | vaccine preference | generic | China | Jul 2020 | 1,888 | efficient partial profiles, 8 sets | | |
| Li et al. (2021a) | willingness to follow stay-at-home orders | generic | US | Aug 2020 | 731 | efficient, 6 sets | | |
| Li et al. (2021b) | vaccine preference | university students | Hong Kong | Jan 2021 | 1,941 | orthogonal, 18 sets | | |
| Liu et al. (2021b), Liu et al. (2020), Liu et al. (2021c) | preferences for AI diagnosis of covid | generic | China | Aug 2020 | 428 | orthogonal, 6 sets | | |
| Liu et al. (2021a) | vaccine hesitancy | generic | China & US | Feb 2021 | 9,077 | random | | |
| Luevano et al. (2021) | vaccine preference | healthcare workers | France | Dec 2020 | 4,346 | efficient, 8 sets | | |
| Luyten et al. (2021) | preference for vaccine prioritisation | generic | Belgium | Oct 2020 | 2,060 | Bayesian efficient partial profiles, 10 sets | | |
| McPhedran and Toombs (2021) | vaccine acceptance | generic | UK | Aug 2020 | 1,501 | orthogonal efficient, 6 sets | | |
| Michailidou (2021) | dilemmas in allocation of medical resources to covid patients | generic | US | May 2020 | 1,842 | not reported, 2 sets | | |
| Mouter et al. (2021) | uptake for contact tracing app | generic | Netherlands | May 2020 | 990 | Bayesian efficient, 8 sets | | |
| Rad et al. (2021) | willingness to isolate post diagnosis | generic | Iran | Mar 2020 | 617 | orthogonal, 14 sets | | |
| Reed et al. (2020) | preference for restriction lifting | generic | US | May 2020 | 5,953 | orthogonal, 10 sets | | |
| Schwarzinger et al. (2021) | vaccine acceptance | generic | France | Jun 2020 | 1,942 | efficient, 8 sets | | |
| transport | | | | | | | | |
| Aaditya and Rahul (2021) | willingness to use public transport | generic | India | Jun 2020 | 410 | not reported, 8 sets | | |
| Aghabayk et al. (2021) | crowding perception | generic | Iran | Nov 2020 | 590 | efficient, 6 sets | | |
| Ceccato et al. (2021) | mode choice | university students & employees | Italy | Aug 2020 | 6,598 | efficient, 15 sets | | |
| Cherry et al. (2021) | value of travel time saving & reliability | generic | US | Mar 2020 | 7,743 | orthogonal, pivots, 8 sets | | |
| Cho and Park (2021) | behaviour change of crowding impedance on public transit | generic | South Korea | Nov 2020 | 623 | orthogonal efficient, 9 sets | | |
| Luan et al. (2021) | mode choice | generic | China | Jun 2020 | 428 | orthogonal efficient, 3 sets | | |
| Manca et al. (2021) | attitude change to air travel | air travellers | UK | Jun 2020 | 388 | efficient, pivots, 3 sets | | |
| Scorrano and Danielis (2021) | mode choice | generic | Italy | Jun 2020 | 315 | not reported, pivots, 6 sets | | |
| Xu et al. (2021) | mode choice | generic | Pakistan | Feb 2021 | 318 | orthogonal, 9 sets | | |
| Zannat et al. (2021) | shopping trip behaviour | generic | Bangladesh & India | May 2020 & Apr 2020 | 815 | efficient, 2 sets | | |
| business | | | | | | | | |
| Grashuis et al. (2020) | preference for grocery shopping | generic | USA | May 2020 | 900 | efficient, 6 sets | | |
| Park and Lehto (2021) | hotel choice | hotel guests | USA | Mar 2020 | 422 | orthogonal, 2 sets | | |
| environment | | | | | | | | |
| Hynes et al. (2021) | stability of environmental preferences | generic | Canada, Scotland, Norway | May 2020 | 1,508 | Bayesian efficient | | |

Appendix B—The search query string

(TS=("choice modelling*" OR "choice modeling*" OR "discrete choice model*" OR "model* of discrete choice" OR "random utility choice model*" OR "discrete choice method*" OR "discrete choice analysis*" OR "discrete choice analyses*" OR "discrete choice theory" OR "discrete choice experiment*" OR "stated choice experiment*" OR "stated choice survey" OR "stated choice method*" OR "discrete choice survey" OR "hypothetical choice experiment*" OR "hypothetical choice survey*" OR "econometric choice model*" OR "model* of econometric choice" OR "econometric choice method" OR "econometric choice analysis*" OR "econometric choice theory*" OR ("conjoint analysis" AND "choice") OR "choice-based conjoint" OR ("stated preference*" AND "choice*") OR ("revealed preference*" AND "choice*") OR "stated choice data" OR "revealed choice data" OR "stated choice observation*" OR "revealed choice observation*"

OR (("multinomial logit" OR "random parameter logit" OR "random coefficient logit" OR "mixed logit" OR "error components* logit" OR "latent class logit" OR "nested logit" OR "ordered logit" OR "multinomial probit" OR "mixed probit" OR "random parameter probit" OR "random utility theory" OR "random regret minimization*" OR "random utility maximization*" OR "random regret logit" OR "random utility logit") AND ("choice*" OR "preference*"))

OR "choice survey design" OR (("choice survey*" AND "design*") AND ("efficient" OR "orthogonal" OR "D-efficient" OR "D-optimal" OR "D-optimum" OR "E-efficient" OR "E-optimal" OR "E-optimum"))))

AND

(TI=("Covid-19" OR "Coronavirus" OR "SARS-COV-2") OR AK=("Covid-19" OR "Coronavirus" OR "SARS-COV-2"))
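The overall structure of the query string (a block of method-related terms combined with a block of pandemic-related terms) can be made explicit with a short script. The sketch below is only an illustration: the helper `any_of` is hypothetical, the list of method terms is a small subset of the full query above, and the field tags TS, TI and AK are copied as written in the query.

```python
from typing import Iterable


def any_of(terms: Iterable[str]) -> str:
    """Quote each term and join the terms with OR (hypothetical helper)."""
    return " OR ".join(f'"{term}"' for term in terms)


# A small subset of the method-related terms, for illustration only.
method_terms = [
    "discrete choice experiment*",
    "stated choice experiment*",
    "choice-based conjoint",
]
# The pandemic-related terms, as listed in the query.
covid_terms = ["Covid-19", "Coronavirus", "SARS-COV-2"]

# Method terms are searched under the TS tag; pandemic terms under TI or AK.
topic_clause = f"TS=({any_of(method_terms)})"
covid_clause = f"(TI=({any_of(covid_terms)}) OR AK=({any_of(covid_terms)}))"

query = f"({topic_clause}) AND {covid_clause}"
print(query)
```

Keeping the two blocks as separate lists makes it easier to extend or audit the term lists without disturbing the balance of parentheses in the final string.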

References

- Aaditya B., Rahul T.M. Psychological impacts of COVID-19 pandemic on the mode choice behaviour: a hybrid choice modelling approach. Transport Pol. 2021;108:47–58. doi: 10.1016/j.tranpol.2021.05.003.

- Aghabayk K., Esmailpour J., Shiwakoti N. Effects of COVID-19 on rail passengers' crowding perceptions. Transport. Res. Pol. Pract. 2021;154:186–202. doi: 10.1016/j.tra.2021.10.011.

- Borriello A., Master D., Pellegrini A., Rose J.M. Preferences for a COVID-19 vaccine in Australia. Vaccine. 2021;39(3):473–479. doi: 10.1016/j.vaccine.2020.12.032.

- Buchanan J., Roope L.S., Morrell L., Pouwels K.B., Robotham J.V., Abel L., Crook D.W., Peto T., Butler C.C., Walker A.S. Preferences for medical consultations from online providers: evidence from a discrete choice experiment in the United Kingdom. Appl. Health Econ. Health Pol. 2021;19(4):521–535. doi: 10.1007/s40258-021-00642-8.

- Ceccato R., Rossi R., Gastaldi M. Travel demand prediction during COVID-19 pandemic: educational and working trips at the University of Padova. Sustainability. 2021;13(12).

- Cherry T., Fowler M., Goldhammer C., Kweun J.Y., Sherman T., Soroush A. Quantifying the impact of the COVID-19 pandemic on passenger vehicle drivers' willingness to pay for travel time savings and reliability. Transportation Research Record. 2021.

- Cho S.H., Park H.C. Exploring the behaviour change of crowding impedance on public transit due to COVID-19 pandemic: before and after comparison. Trans. Lett. Int. J. Trans. Res. 2021;13(5–6):367–374.

- Chorus C., Sandorf E.D., Mouter N. Diabolical dilemmas of COVID-19: an empirical study into Dutch society's trade-offs between health impacts and other effects of the lockdown. PLoS One. 2020;15(9). doi: 10.1371/journal.pone.0238683.

- Craig B.M. United States COVID-19 vaccination preferences (CVP): 2020 hindsight. Patient Center. Outcome. Res. 2021;14(3):309–318. doi: 10.1007/s40271-021-00508-0.

- Degeling C., Chen G., Gilbert G.L., Brookes V., Thai T., Wilson A., Johnson J. Changes in public preferences for technologically enhanced surveillance following the COVID-19 pandemic: a discrete choice experiment. BMJ Open. 2020;10(11). doi: 10.1136/bmjopen-2020-041592.

- Dong D., Xu R.H., Wong E.L.Y., Hung C.T., Feng D., Feng Z.C., Yeoh E.K., Wong S.Y.S. Public preference for COVID-19 vaccines in China: a discrete choice experiment. Health Expect. 2020;23(6):1543–1578. doi: 10.1111/hex.13140.

- Eshun-Wilson I., Mody A., McKay V., Hlatshwayo M., Bradley C., Thompson V., Glidden D.V., Geng E.H. Public preferences for social distancing policy measures to mitigate the spread of COVID-19 in Missouri. JAMA Netw. Open. 2021a;4(7). doi: 10.1001/jamanetworkopen.2021.16113.

- Eshun-Wilson I., Mody A., Tram K.H., Bradley C., Sheve A., Fox B., Thompson V., Geng E.H. Preferences for COVID-19 vaccine distribution strategies in the US: a discrete choice survey. PLoS One. 2021b;16(8). doi: 10.1371/journal.pone.0256394.

- Genie M.G., Loria-Rebolledo L.E., Paranjothy S., Powell D., Ryan M., Sakowsky R.A., Watson V. Understanding public preferences and trade-offs for government responses during a pandemic: a protocol for a discrete choice experiment in the UK. BMJ Open. 2020;10(11). doi: 10.1136/bmjopen-2020-043477.

- Gijsbers M., Keizer I.E., Schouten S.E., Trompert J.L., Groothuis-Oudshoorn C.G.M., van Til J.A. Public preferences in priority setting when admitting patients to the ICU during the COVID-19 crisis: a pilot study. Patient Center. Outcome. Res. 2021;14(3):331–338. doi: 10.1007/s40271-021-00504-4.

- Grashuis J., Skevas T., Segovia M.S. Grocery shopping preferences during the COVID-19 pandemic. Sustainability. 2020;12(13).

- Haghani M., Bliemer M.C., Hensher D.A. The landscape of econometric discrete choice modelling research. J. Choice Model. 2021a;40.

- Haghani M., Bliemer M.C., Rose J.M., Oppewal H., Lancsar E. Hypothetical bias in stated choice experiments: Part I. Macro-scale analysis of literature and integrative synthesis of empirical evidence from applied economics, experimental psychology and neuroimaging. J. Choice Model. 2021b;41.

- Haghani M., Bliemer M.C., Rose J.M., Oppewal H., Lancsar E. Hypothetical bias in stated choice experiments: Part II. Conceptualisation of external validity, sources and explanations of bias and effectiveness of mitigation methods. J. Choice Model. 2021c;41.

- Hess S., Lancsar E., Mariel P., Meyerhoff J., Song F., van den Broek-Altenburg E., Alaba O.A., Amaris G., Arellana J., Basso L.J., Benson J., Bravo-Moncayo L., Chanel O., Choi S., Crastes dit Sourd R., Cybis H.B., Dorner Z., Falco P., Garzón-Pérez L., Glass K., Guzman L.A., Huang Z., Huynh E., Kim B., Konstantinus A., Konstantinus I., Larranaga A.M., Longo A., Loo B.P.Y., Moyo H.T., Oehlmann M., O'Neill V., de Dios Ortúzar J., Sanz Sánchez M.J., Sarmiento O.L., Tucker S., Wang Y., Wang Y., Webb E., Zhang J., Zuidgeest M. The path towards herd immunity: predicting COVID-19 vaccination uptake through results from a stated choice study across six continents. Social Science & Medicine. 2022.

- Huang W.F., Shao X.P., Wagner A.L., Chen Y., Guan B.C., Boulton M.L., Li B.Z., Hu L.J., Lu Y.H. COVID-19 vaccine coverage, concerns, and preferences among Chinese ICU clinicians: a nationwide online survey. Expet Rev. Vaccine. 2021;20(10):1361–1367. doi: 10.1080/14760584.2021.1971523.

- Hynes S., Armstrong C.W., Xuan B.B., Ankamah-Yeboah I., Simpson K., Tinch R., Ressurreicao A. Have environmental preferences and willingness to pay remained stable before and during the global Covid-19 shock? Ecol. Econ. 2021;189. doi: 10.1016/j.ecolecon.2021.107142.

- Jonker M., de Bekker-Grob E., Veldwijk J., Goossens L., Bour S., Rutten-Van Molken M. COVID-19 contact tracing apps: predicted uptake in The Netherlands based on a discrete choice experiment. JMIR Health. 2020;8(10). doi: 10.2196/20741.

- Katare B., Zhao S.L., Cuffey J., Marshall M.I., Valdivia C. Preferences toward COVID-19 diagnostic testing features: results from a national cross-sectional survey. Am. J. Health Promot. 2022;36(1):185–189. doi: 10.1177/08901171211034093.

- Krauth C., Oedingen C., Bartling T., Dreier M., Spura A., de Bock F., von Ruden U., Betsch C., Korn L., Robra B.P. Public preferences for exit strategies from COVID-19 lockdown in Germany-A discrete choice experiment. Int. J. Publ. Health. 2021;66. doi: 10.3389/ijph.2021.591027.

- Kreps S., Prasad S., Brownstein J.S., Hswen Y., Garibaldi B.T., Zhang B.B., Kriner D.L. Factors associated with US adults' likelihood of accepting COVID-19 vaccination. JAMA Netw. Open. 2020;3(10). doi: 10.1001/jamanetworkopen.2020.25594.

- Leng A.L., Maitland E., Wang S.Y., Nicholas S., Liu R.G., Wang J. Individual preferences for COVID-19 vaccination in China. Vaccine. 2021;39(2):247–254. doi: 10.1016/j.vaccine.2020.12.009.

- Li L.Q., Long D.D., Rad M.R., Sloggy M.R. Stay-at-home orders and the willingness to stay home during the COVID-19 pandemic: a stated-preference discrete choice experiment. PLoS One. 2021a;16(7). doi: 10.1371/journal.pone.0253910.

- Li X., Chong M.Y., Chan C.Y., Chan V.W.S., Tong X.N. COVID-19 vaccine preferences among university students in Hong Kong: a discrete choice experiment. BMC Res. Notes. 2021b;14(1). doi: 10.1186/s13104-021-05841-z.

- Liu T., Tsang W., Huang F., Ming W.K. Should I choose artificial intelligence or clinicians' diagnosis? A discrete choice experiment of patients' preference under COVID-19 pandemic in China. Value Health. 2020;23:S759.

- Liu T.R., He Z.L., Huang J., Yan N., Chen Q., Huang F.Q., Zhang Y.J., Akinwunmi O.M., Akinwunmi B.O., Zhang C.J.P., Wu Y.B., Ming W.K. A comparison of vaccine hesitancy of COVID-19 vaccination in China and the United States. Vaccines. 2021a;9(6). doi: 10.3390/vaccines9060649.

- Liu T.R., Tsang W.H., Huang F.Q., Lau O.Y., Chen Y.H., Sheng J., Guo Y.W., Akinwunmi B., Zhang C.J.P., Ming W.K. Patients' preferences for artificial intelligence applications versus clinicians in disease diagnosis during the SARS-CoV-2 pandemic in China: discrete choice experiment. J. Med. Internet Res. 2021b;23(2). doi: 10.2196/22841.

- Liu T.R., Tsang W.H., Xie Y.F., Tian K., Huang F.Q., Chen Y.H., Lau O.Y., Feng G.R., Du J.H., Chu B.J., Shi T.Y., Zhao J.J., Cai Y.M., Hu X.Y., Akinwunmi B., Huang J., Zhang C.J.P., Ming W.K. Preferences for artificial intelligence clinicians before and during the COVID-19 pandemic: discrete choice experiment and propensity score matching study. J. Med. Internet Res. 2021c;23(3). doi: 10.2196/26997.

- Luan S.L., Yang Q.F., Jiang Z.T., Wang W. Exploring the impact of COVID-19 on individual's travel mode choice in China. Transport Pol. 2021;106:271–280. doi: 10.1016/j.tranpol.2021.04.011.

- Luevano C.D., Sicsic J., Pellissier G., Chyderiotis S., Arwidson P., Olivier C., Gagneux-Brunon A., Botelho-Nevers E., Bouvet E., Mueller J. Quantifying healthcare and welfare sector workers' preferences around COVID-19 vaccination: a cross-sectional, single-profile discrete-choice experiment in France. BMJ Open. 2021;11(10). doi: 10.1136/bmjopen-2021-055148.

- Luyten J., Tubeuf S., Kessels R. Rationing of a scarce life-saving resource: public preferences for prioritizing COVID-19 vaccination. Health Econ. 2021. doi: 10.1002/hec.4450.

- Manca F., Sivakumar A., Pawlak J., Brodzinski N.J. Will we fly again? Modeling air travel demand in light of COVID-19 through a London case study. Transportation Research Record. 2021.

- McPhedran R., Toombs B. Efficacy or delivery? An online Discrete Choice Experiment to explore preferences for COVID-19 vaccines in the UK. Econ. Lett. 2021;200. doi: 10.1016/j.econlet.2021.109747.

- Michailidou G. Biases in COVID-19 medical resource dilemmas. Front. Psychol. 2021;12. doi: 10.3389/fpsyg.2021.687069.

- Mouter N., Collewet M., de Wit G.A., Rotteveel A., Lambooij M.S., Kessels R. Societal effects are a major factor for the uptake of the coronavirus disease 2019 (COVID-19) digital contact tracing app in The Netherlands. Value Health. 2021;24(5):658–667. doi: 10.1016/j.jval.2021.01.001.

- Park S., Lehto X. Understanding the opaque priority of safety measures and hotel customer choices after the COVID-19 pandemic: an application of discrete choice analysis. J. Trav. Tourism Market. 2021;38(7):653–665.

- Rad E.H., Hajizadeh M., Yazdi-Feyzabadi V., Delavari S., Mohtasham-Amiri Z. How much money should be paid for a patient to isolate during the COVID-19 outbreak? A discrete choice experiment in Iran. Appl. Health Econ. Health Pol. 2021;19(5):709–719. doi: 10.1007/s40258-021-00671-3.

- Reed S., Gonzalez J.M., Johnson F.R. Willingness to accept trade-offs among COVID-19 cases, social-distancing restrictions, and economic impact: a nationwide US study. Value Health. 2020;23(11):1438–1443. doi: 10.1016/j.jval.2020.07.003.

- Schwarzinger M., Watson V., Arwidson P., Alla F., Luchini S. COVID-19 vaccine hesitancy in a representative working-age population in France: a survey experiment based on vaccine characteristics. Lancet Public Health. 2021;6(4):E210–E221. doi: 10.1016/S2468-2667(21)00012-8.

- Scorrano M., Danielis R. Active mobility in an Italian city: mode choice determinants and attitudes before and during the Covid-19 emergency. Res. Transport. Econ. 2021;86.

- van den Broek-Altenburg E.M., Atherly A.J., Hess S., Benson J. The effect of unobserved preferences and race on vaccination hesitancy for COVID-19 vaccines: implications for health disparities. J. Manage. Care Special. Pharmacy. 2021;27(9-a Suppl.):S2–S11. doi: 10.18553/jmcp.2021.27.9-a.s2.

- Xu A.F., Chen J.M., Liu Z.H. Exploring the effects of carpooling on travelers' behavior during the COVID-19 pandemic: a case study of metropolitan city. Sustainability. 2021;13(20).

- Zannat K.E., Bhaduri E., Goswami A.K., Choudhury C.F. The tale of two countries: modeling the effects of COVID-19 on shopping behavior in Bangladesh and India. Trans. Lett. Int. J. Trans. Res. 2021;13(5–6):421–433.
