Abstract

Background

Automated conversational agents, or chatbots, have a role in reinforcing evidence-based guidance delivered through other media and offer an accessible, individually tailored channel for public engagement. In early-to-mid 2021, young adults and minority populations disproportionately affected by COVID-19 in the United States were more likely to be hesitant toward COVID-19 vaccines, citing concerns regarding vaccine safety and effectiveness. Successful chatbot communication requires purposive understanding of user needs.

Objective

We aimed to review the acceptability of messages to be delivered by a chatbot named VIRA from Johns Hopkins University. The study investigated which message styles were preferred by young, urban-dwelling Americans as well as public health workers, since we anticipated that the chatbot would be used by the latter as a job aid.

Methods

We conducted 4 web-based focus groups with 20 racially and ethnically diverse young adults aged 18-28 years and public health workers aged 25-61 years living in or near eastern-US cities. We tested 6 message styles, asking participants to select a preferred response style for a chatbot answering common questions about COVID-19 vaccines. We transcribed, coded, and categorized emerging themes within the discussions of message content, style, and framing.

Results

Participants preferred messages that began with an empathetic reflection of a user concern and concluded with a straightforward, fact-supported response. Most participants disapproved of moralistic or reasoning-based appeals to get vaccinated, although public health workers felt that such strong statements appealing to communal responsibility were warranted. Responses tested with humor and testimonials did not appeal to the participants.

Conclusions

To foster credibility, chatbots targeting young people with vaccine-related messaging should aim to build rapport with users by deploying empathic, reflective statements, followed by direct and comprehensive responses to user queries. Further studies are needed to inform the appropriate use of user-customized testimonials and humor in the context of chatbot communication.

Keywords: vaccine hesitancy, COVID-19, chatbots, AI, artificial intelligence, natural language processing, social media, vaccine communication, digital health, misinformation, infodemic, infodemiology, conversational agent, public health, user need, vaccination, health communication, online health information

Introduction

Vaccine hesitancy has emerged as a public health threat as trust in immunization systems has been strained across much of the world [1,2]. Global measles outbreaks occurring in the face of waning vaccine uptake propelled vaccine hesitancy onto the World Health Organization’s list of top global health concerns [3,4]. The COVID-19 pandemic has brought hesitancy into sharp focus, including in the United States, which experienced one of the highest COVID-19 mortality rates among high-income nations [5].

A survey of more than 5 million Americans conducted via Facebook found that adults aged 18-34 years had the highest rates of vaccine hesitancy in May 2021 [6]. Moreover, despite disproportionately high COVID-19 mortality rates within communities of color [7], younger adults and Black, American Indian or Alaska Native, and multiracial groups continued to be the most hesitant, citing concerns regarding vaccine development, safety, and effectiveness [8-11]. As of May 2022, 3 in 10 Americans eligible for a COVID-19 vaccine had yet to get fully vaccinated, fueling continued disease spread and hindering pandemic recovery efforts [12].

To combat hesitancy, agency and advocacy leaders drew on decades of communication science research on building vaccine demand. This guidance included proactive planning to understand target populations, audience segmentation, tailored messaging, selection of appropriate channels, and commercial marketing approaches to ensure vaccines could be delivered via convenient and accessible services [13-16]. Program planning would include continual analysis of the information landscape for competing messages, including misinformation and disinformation [15,17]. To build trust and engage young audiences, who are often complacent about their individual risk of disease, vaccine communication should be 2-way (listening and telling in equal measure) and delivered both in person and on the web [13]. In urban communities, initiatives began by acknowledging the historical injustices and ongoing inequities that drive distrust, deploying community-based health educators to listen to concerns and provide support in person [18].

As a means of providing tailored messaging, social listening, and 2-way dialogue, automated conversational agents, or chatbots, were cited early in the pandemic as a promising tool to offer COVID-19 health guidance on demand [19,20]. Chatbots have provided support on a range of health issues including chronic disease, addiction, reproductive health, depression, and anxiety, with promising adaptations of evidence-based interventions such as cognitive behavioral therapy [21-25]. This approach may appeal especially to young adults, since a substantial proportion of millennials (born between 1981 and 1996) are more trusting of web-based information and better equipped to use health technology than earlier generations [26,27]. Since the start of the pandemic, chatbots have been designed to provide COVID-19 health guidance in experimental settings [28], with some available globally via messaging platforms such as WhatsApp and Telegram [29,30]. Given their engaging, dialogue-based design, chatbots have a role in reinforcing public health guidance disseminated via other interventions such as social media campaigns. However, there is limited evidence on the design and framing of vaccine-related messages delivered over digital platforms (eg, social media), and very little is known about how such messaging should be delivered by educational chatbots in public health contexts [31].

Formative research has enabled the production of tailored content to optimize the delivery of messages [32]. An overarching factor in engaging and persuading audiences is credibility and trust, which can be defined as a combination of integrity, dependability, and competence [33,34]. Because audiences continually assess it, credibility can be lost through the delivery of a muddled or apparently dishonest message. Perhaps most central to vaccine communication in the context of hesitancy is the use of empathy, or a sense of one speaker understanding the experience of another. Empathic and reflective statements are critical components of motivational interviewing, one of the few evidence-based means of softening vaccine hesitancy [35,36].

Seeking to review the acceptability of messages to be delivered by a chatbot, we engaged with potential users to identify which styles were preferred by young, urban-dwelling Americans. We also studied message reception among public health workers, anticipating that they would use the chatbot as a job aid. This formative research supported the development of a COVID-19 vaccine chatbot, VIRA, which was launched in 2021 by the International Vaccine Access Center at the Johns Hopkins Bloomberg School of Public Health [37]. IBM Research developed and managed the chatbot’s back end, which used Key Point Analysis, a commercially available technology that performs “extractive summarization”: it processes large numbers of comments, opinions, and statements and reveals the most important points and their relative prevalence. VIRA was initially programmed to respond to 100 Key Points, with up to 4 styles of response to each identified concern. Key Points, or distinct vaccine concerns, were identified in several ways: a Twitter analysis, a review of audience questions in Zoom-based public forums hosted by our affiliated academic centers, and a synthesis of web pages with frequently asked questions [38-40]. To draft responses, we considered previous evidence that emphasizing social and physical consequences in an emotional format elicits broad influence [14], as well as evidence that trust is established through perceptions of care and concern [41,42]. VIRA’s response database initially consisted of factual-only responses (responses containing data-driven information), empathy-factual responses (factual responses beginning with an empathetic phrase that validated the user’s query), principled responses (responses that appeal to a user’s conscience, often referencing community well-being [43]), rational arguments (responses containing a logical argument), testimonials (responses featuring a quote from a reputable expert), and humorous responses. All responses sought to minimize technical language and length (under 280 characters). In this analysis, we investigated the appropriateness and tailoring of these responses.
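To make the structure of the response database concrete, the following is a minimal sketch of how Key Points, response styles, and the length target described above might be represented. It is not VIRA's back end, which IBM Research built on Key Point Analysis; the class names `Style` and `KeyPoint`, the method `add_response`, and the choice to enforce the limits by raising errors are all hypothetical. Note that Python's `len()` counts Unicode code points, which may differ from how a given messaging platform counts characters.

```python
from dataclasses import dataclass, field
from enum import Enum


class Style(Enum):
    # The 6 response styles named in the text
    FACTUAL = "factual-only"
    EMPATHY_FACTUAL = "empathy-factual"
    PRINCIPLED = "principled"
    RATIONAL = "rational argument"
    TESTIMONIAL = "testimonial"
    HUMOROUS = "humorous"


MAX_CHARS = 280   # responses were kept under 280 characters
MAX_STYLES = 4    # up to 4 response styles per Key Point


@dataclass
class KeyPoint:
    """Hypothetical record: one user concern and its styled responses."""
    concern: str
    responses: dict[Style, str] = field(default_factory=dict)

    def add_response(self, style: Style, text: str) -> None:
        # Reject drafts that are not under the character limit
        if len(text) >= MAX_CHARS:
            raise ValueError(
                f"{style.value} response has {len(text)} characters; "
                f"it must be under {MAX_CHARS}"
            )
        # Allow replacing an existing style, but not adding a 5th one
        if style not in self.responses and len(self.responses) >= MAX_STYLES:
            raise ValueError(f"a Key Point holds at most {MAX_STYLES} styles")
        self.responses[style] = text


# Usage with the humorous message from Table 1
kp = KeyPoint("Should I get the vaccine if I've had COVID-19?")
kp.add_response(
    Style.HUMOROUS,
    "Spoiler: People who have COVID-19 should still get vaccinated, "
    "but only AFTER you get well!",
)
```

Keeping the style as an enum key means each concern can hold at most one response per style, which mirrors the "up to 4 styles of response to each identified concern" design.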

Methods

Recruitment

Through 4 semistructured focus group discussions (FGDs), we assessed the appropriateness of different styles of responses to common COVID-19 vaccine questions. We chose focus groups because they generate participant discussions that illustrate group norms and diversity in the sampled population within a short period of time [44]. We recruited 2 participant groups in the United States: (1) urban-dwelling individuals aged 18-28 years and (2) public health workers, defined as individuals contracted or employed by health departments to encourage the uptake of COVID-19 vaccines. To identify health workers, we used snowball sampling through professional contacts in urban health departments of the United States. We also posted ads on Craigslist and Twitter, targeting both health workers and young people in Baltimore, Charlotte, New York City, Philadelphia, and Washington, D.C. We aimed to achieve maximum variability in race and ethnicity in both participant groups to explore attitudes toward chatbots providing health information. Since the chatbot was intended to support people along the continuum of vaccine hesitancy up to vaccine refusal, and our study aimed to encourage productive group discussions among individuals with some openness to change around vaccination, we excluded people who stated in a scaled response that they would “definitely NOT choose to get a COVID-19 vaccine by August 2021” [45,46].

Ethics Approval

The Johns Hopkins Bloomberg School of Public Health Institutional Review Board approved this study (approval 15714).

Data Collection

After giving individual informed consent, participants completed a demographic questionnaire using Qualtrics software (SAP America). Each participant then joined a web-based FGD via Zoom (licensed account; Zoom Video Communications Inc); 1 FGD was composed exclusively of health workers and the other 3 of young participants [47]. Discussions were facilitated by a doctoral-level researcher, a master’s-level faculty member, and a trained graduate student. Each FGD lasted 1 hour and had a maximum of 8 participants. Facilitators introduced VIRA, a chatbot developed by Johns Hopkins University that provided answers to common COVID-19 vaccine questions. Participants viewed 7 cards, each containing a question related to COVID-19 vaccination and 3 or 4 proposed responses written in different message styles. Participants were asked to select their preferred response, and conversation between participants was encouraged to further explore preferences. Table 1 displays sample messages (see Multimedia Appendix 1 for all message content).

Table 1.

Sample questions and tested response styles.

| Question or comment | Response type | Response |
|---|---|---|
| I’m not sure if the vaccine is safe, so I want to see how it affects others before I get vaccinated. | Factual-only response | All vaccines go through clinical trials to test safety and effectiveness. For the COVID-19 vaccines, the FDA^a set up rigorous standards for vaccine developers to meet and thousands of people worldwide participated in clinical trials before the vaccines became available to the public! |
| | Empathy-factual response | This is an important question for many people! Once a vaccine is authorized for use, monitoring continues with systems in place to track problems or side-effects that were not detected during clinical trials. You can feel safe knowing these systems have got your back! |
| | Principled response | It’s very natural to have concerns. Yet, if some people choose to wait, we will not beat this pandemic any time soon. If you are willing to get vaccinated, you can do so knowing that millions have been safely vaccinated and you are helping our path to normalcy. |
| I’m worried about vaccine side effects and adverse reactions. | Rational argument | The likelihood of experiencing a severe side-effect is very small—less than 5 out of 1,000 people! You’ll probably just have some manageable side-effects that resolve in a few days. |
| I’m young and healthy, so why do I need to get vaccinated? | Testimonial | A Harvard physician said, “while the vast majority of young adults who get COVID-19 are not going to require hospitalization, those who do have a really high risk for adverse outcomes.” A vaccine can prevent severe illness, even if you’re young and healthy. |
| Should I get the vaccine if I’ve had COVID-19? | Humorous response | Spoiler: People who have COVID-19 should still get vaccinated, but only AFTER you get well! |

^a FDA: U.S. Food and Drug Administration.

Data Analysis

Recorded FGDs were transcribed using Temi software [48], with investigators reviewing each transcript for quality assurance. Dedoose software (version 9.0.46; SocioCultural Research Consultants) [49] was used for data management, such as coding, code report exports, and the reassembly process. We used a grounded theory approach to develop a conceptual framework of how people respond to the various styles of COVID-19 vaccine messaging that could inform the subsequent production of chatbot responses [50].

Through our inductive qualitative analysis process, we identified the emerging themes with which to code our data [51]. First, to develop our initial codebook, 2 researchers independently reviewed 2 rich transcripts, coded them line by line, and produced open codes. The study team then convened to review and condense these open codes into broader themes and subthemes. Once the codebook was finalized, 2 researchers coded each transcript, and a third resolved any coding discrepancies. Although some coding redundancy was observed, no new codes were identified, indicating saturation of the themes outlined in the codebook [52]. Once coding was complete, the team arrayed the data into matrices to identify thematic patterns related to the code “chatbot credibility,” which, as seen in memos produced during coding and reassembly, was discussed throughout the FGDs. To understand participants’ overall preference for certain message styles, we also counted the preferences for each message type reviewed during the FGDs.
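Arraying coded excerpts into matrices amounts, in its simplest form, to counting how often pairs of codes are applied to the same excerpt. The sketch below illustrates that idea only; it is not the team's Dedoose workflow, the function names `cooccurrence` and `partners` are hypothetical, and the 5 excerpts in the usage example are invented toy data, not study data.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(excerpts: list[set[str]]) -> Counter:
    """Count how often each unordered pair of codes is applied to the same excerpt."""
    counts: Counter = Counter()
    for codes in excerpts:
        # Sorting makes (a, b) and (b, a) the same key
        for a, b in combinations(sorted(codes), 2):
            counts[(a, b)] += 1
    return counts


def partners(counts: Counter, code: str) -> list[tuple[str, int]]:
    """Rank the codes that co-occur with `code`, most frequent first."""
    ranked: Counter = Counter()
    for (a, b), n in counts.items():
        if a == code:
            ranked[b] += n
        elif b == code:
            ranked[a] += n
    return ranked.most_common()


# Toy example (hypothetical excerpts, each a set of applied codes)
excerpts = [
    {"credibility", "comprehensiveness"},
    {"credibility", "comprehensiveness", "transparency"},
    {"credibility", "transparency"},
    {"credibility", "comprehensiveness"},
    {"humor", "rapport"},
]
counts = cooccurrence(excerpts)
print(partners(counts, "credibility"))
```

Ranking a single code's partners in this way is one route to statements such as which codes most often overlapped with "credibility."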

Results

Participant Characteristics

Between June and October 2021, several months after COVID-19 vaccines became widely available in the United States, we held 4 web-based focus groups with a total of 7 individuals aged 25-61 years working in public health or vaccine outreach roles and 13 people aged 18-28 years (see Table 2 for participant demographics). Of the 20 participants, most (80%, n=16) were women, with a mean age of 28.5 years. The median self-reported household income was US $56,000; for younger participants, this likely included parental income. Most (90%, n=18) participants said they were vaccinated against COVID-19.

Table 2.

Participant demographics.

| Characteristic | Participants |
|---|---|
| Self-identified gender (N=20), n (%) | |
| Female | 16 (80) |
| Male | 4 (20) |
| Highest level of education (N=20), n (%) | |
| High school graduate | 4 (20) |
| Some college, no degree | 3 (15) |
| Bachelor’s degree or higher | 13 (65) |
| Self-identified race or ethnicity (N=20), n (%) | |
| American Indian or Alaska Native | 1 (5) |
| Asian or Pacific Islander | 4 (20) |
| Black or African American | 5 (25) |
| Hispanic or Latino | 3 (15) |
| White | 4 (20) |
| More than 1 race or ethnicity | 3 (15) |
| Age group (years; N=20), n (%) | |
| 18-29 | 15 (75) |
| 30-49 | 3 (15) |
| 50-69 | 2 (10) |
| Self-reported annual household income (2021; US $; n=19), median (range) | 56,000 (25,000-200,000) |
| Vaccinated (N=20), n (%) | 18 (90) |

Thematic Findings

Analysis of FGDs identified themes describing the message preferences of young adults and public health workers in urban American communities. In the following quotes, we describe participants as either public health workers (“advocates”) or young people.

Credibility

The credibility of a chatbot message was the predominant theme influencing response selection. Both young participants and advocates said messages achieved credibility through (1) message directness and (2) the establishment of rapport between the user and chatbot through conversational syntax and empathetic, reflective statements. Of the 26 total responses, 6 (23%) consisted of an empathy-factual composite style, beginning with a user-centered, reflective message such as “it sounds like you have concerns” or “this is an important question for a lot of people.” Empathetic responses used casual, nontechnical language to answer questions using evidence in what participants said was a transparent, contextualized response. A young woman described the style as, “kind of sticking to the facts in a colloquial/conversational manner—doesn’t feel like I’m reading a newspaper or a research paper” (Participant 09).

Another young woman liked the conversational tone and “extensive” detail provided in a factual-style message about side effects, which stated that the vaccine’s side effects “should resolve within one or two days of vaccination.” She said, “It covered it pretty extensively. It sets me up for what I can expect. And then I would feel more secure knowing...the chatbot, it’s giving me [a] correct answer” (Participant 19).

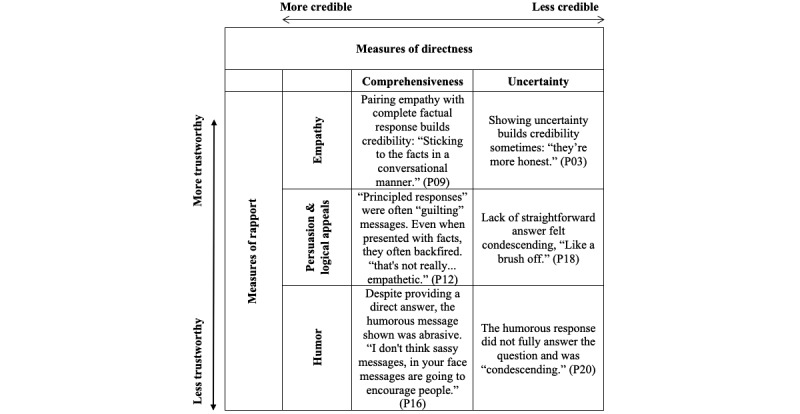

Figure 1 illustrates how messages achieved credibility through rapport-building and directness and how, in participants’ view, messages lost credibility when responses did not answer a question directly, which felt “like a brush off.” As seen in the textual excerpts in Figure 1, trustworthiness eroded when messages compromised rapport, either by incorporating humor or by deploying guilt-laden arguments.

Figure 1.

Message attributes supporting and hindering credibility with young focus group participants. Textual excerpts coded with both directness- and rapport-related variables (ie, each cell shows a textual passage double coded with a directness-related variable and a rapport-related variable). P: participant.

Both groups of participants regarded scientific messages as credible, saying they trusted the messages because they came from a Johns Hopkins University chatbot. In a typical response about how the brand affected message reception, a young man said: “I felt this [was] trustworthy, [be]cause I knew it came from like Johns Hopkins, which has a strong reputation” (Participant 07).

Although advocates and young participants both preferred empathetic statements prefacing a full, factual response, some felt such messages seemed inauthentic. The phrase “having doubts is normal,” in the words of a male advocate, “makes [the chatbot] more humane, more human-like, and more accepting” (Participant 08). Meanwhile, participants felt the phrase “I hear you” was marginally reassuring, but the statement “I wondered about that too” seemed “weird and fake [from a chatbot]” to one young woman (Participant 05).

Responsibility

Principled responses sought to persuade users to get vaccinated by appealing to an individual’s responsibility for the well-being of a larger community and by using reasoning instead of evidence (see example in Table 1). For instance, instead of stating that vaccines are safe because “All vaccines go through clinical trials,” as in a factual response, a principled response would say, “If some people choose to wait, we will not beat this pandemic any time soon.” Young participants saw this as evasive as well as “condescending” and “aggressive.” Several participants described these messages as judgmental or shaming; as one young participant put it, “You’re telling them that you put your families, your community at risk too. You’re making them feel guilty...I feel like that’s not really...empathetic” (Participant 12).

However, the style appealed to 4 young participants of color. One participant, a young man, felt such direct messaging was warranted:

I like [principled response] D because at this point in time, I think we need more aggressive messaging. Like, guilt people, shake people, let’s be serious...don’t put your friends and family at risk. Just get the shot.

Participant 10

Meanwhile, advocates aged >30 years often preferred such messages, sometimes wanting messaging to be “stronger” and “louder” to combat misinformation around vaccine myths. As one advocate said, “I think that message should be really pushed out a little louder. [The vaccine] prevents you from getting deathly ill” (Participant 16).

Advocates preferred principled responses that emphasized shared responsibility to prevent COVID-19 spread. As one young advocate said, these responses “made me think about the risks I posed not just [for] myself, but the people around me” (Participant 01). Moreover, advocates shared concerns about their family members and described feeling surrounded by people who “weren’t doing their part.” As one advocate said, “We’ve been getting hit hard, especially in the Black and Indigenous, Latinx, API communities, and this thing isn’t going anywhere anytime soon” (Participant 18).

An advocate recalled seeing community members previously hospitalized with COVID-19 “still not wearing your mask...it just made me more weary” (Participant 17). Such fatigue with community members not taking precautions to protect themselves and one another was linked with participant preference for principled messaging that was direct and insistent on communal responsibilities.

Resistance to Logical Appeals

Participants rarely preferred the rational arguments shown. We tested the following message in response to the question, “Are COVID vaccines worse than the disease itself?”

The trouble with that logic is that it’s difficult to predict who will survive an infection without becoming a COVID-19 long hauler. Almost 30% of people who’ve survived COVID-19 still experience long-term side-effects!

One participant commented that the tone of the response sounded “judgmental.” Similarly, a young woman said “it was like the most convincing argument as to why you would want to get the vaccine because like it shows how many people get long-term side effects, but I did agree that the tone...was a little condescending” (Participant 20).

Humor

Almost unanimously, participants disapproved of the humorous response shown and said it mocked people for asking questions. Both young people and advocates said it was “pushy,” “saucy,” and “condescending.” Moreover, participants said it did not fully answer the question or provide context to support statements.

Balancing Comprehensiveness and Uncertainty

After “comprehensiveness,” the code “credibility” was most likely to overlap with “transparency,” indicating the importance of directly answering a question without seeming to withhold information. Although both advocates and young people wanted responses to be direct and comprehensive, they disagreed about whether to explicitly highlight scientific uncertainty. For instance, one message said “scientists aren’t totally sure” whether vaccines stop transmission. Advocates said acknowledging ongoing studies was appropriate, since “we’re still learning about it,” in the words of one advocate (Participant 18). However, young participants found phrases acknowledging uncertainty unsettling and unhelpful for promoting vaccination; one young participant said “she [the chatbot] seemed super uncertain” and “it almost makes it seem like people should wait to see more studies [to get the vaccine]” (Participant 19).

Authority as Elitism

Young participants considered the use of a testimonial-style quote attributed to a Harvard physician to be elitist. One male advocate responded by saying, “Why do I care? It’s throw[ing] that he just has a title at my face” (Participant 08). Advocates aged >30 years agreed that the testimonial was not helpful, citing the politicization of doctors and science and suggesting the chatbot display testimonials from frontline health workers, such as emergency medical technicians.

Relative Message Preference

To triangulate and strengthen our qualitative analysis, we tallied the votes participants cast for their preferred message on each of the 7 cards shown. FGD participants voted for a preferred message a total of 84 times (some did not select a response for every card). Participants voted for empathetic-factual messages 40 (48%) times, about two-thirds more often than for factual-only messages, which, at 24 (29%) votes, were the second most preferred style. However, whereas young participants most often (51%, 31/61) selected the empathetic-factual messages presented on message cards, public health workers most often (38%, 8/21) selected a principled response; young people rarely (8%, 5/61) selected this style, with the few exceptions described above. Participants infrequently (8%, 7/84) preferred rational arguments and never selected the testimonial or humorous messages, although just 1 example of each was shown on the cards. Although these counts are descriptive and cannot support tests of statistical significance given the qualitative study design and small sample size, the clear preference for empathetic-framed responses among young participants is notable.
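The percentages in the preceding paragraph follow directly from the reported vote counts. The short check below recomputes them, rounding to the nearest whole percentage; the helper name `pct` and the dictionary labels are our own, and the counts are exactly those stated above.

```python
def pct(n: int, d: int) -> int:
    """Share of votes as a percentage, rounded to the nearest whole number."""
    return round(100 * n / d)


# (votes for style, total votes in group, percentage stated in the text)
reported = {
    "empathy-factual, all votes": (40, 84, 48),
    "factual-only, all votes": (24, 84, 29),
    "rational argument, all votes": (7, 84, 8),
    "empathy-factual, young participants": (31, 61, 51),
    "principled, public health workers": (8, 21, 38),
    "principled, young participants": (5, 61, 8),
}

for label, (n, d, stated) in reported.items():
    print(f"{label}: {n}/{d} = {pct(n, d)}% (stated {stated}%)")

# Relative preference: empathy-factual (40) vs factual-only (24) votes
print(f"empathy-factual received {40 / 24 - 1:.0%} more votes")
```

The last line shows that 40 votes versus 24 is roughly 67% more, that is, about two-thirds more.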

Discussion

Principal Findings

In this formative study of preferences for messages delivered by a COVID-19 chatbot, participants from urban American communities favored messages that were empathetic, direct, and comprehensive in answering questions related to COVID-19 vaccination. Messages achieved credibility through a combination of empathy and straightforward, evidence-based responses. User-centered reflective statements and conversational language that minimized the use of technical jargon fostered rapport between the chatbot and user.

Public health messages often contain statistics and rational statements, a strategy shown to effectively counter vaccine misinformation [53]. However, among our participants, most of whom were already vaccinated, this strategy alone was not as successful as messages that also included empathetic statements. Research among Black Americans with chronic conditions during COVID-19 likewise found that participants preferred chatbots to be “personable and empathetic” [54]. Other empathy-simulating chatbots have received similar positive feedback from users [55-57]. Empathetic statements validated people’s search for knowledge and implicitly acknowledged the loneliness of the pandemic experience [58].

Participants in our study preferred straightforward, comprehensive responses similar to answers from an informed and respectful human interlocutor. The chatbot needed to answer user questions completely, or else it could be perceived as evasive and potentially untrustworthy. Such expectations of politeness and respect align with observations of technological anthropomorphizing, building on studies showing that individuals’ interactions with computers are “fundamentally social” and that people naturally characterize the computer as a social actor [59-61].

For most young study participants, principled responses—messages appealing to concerns for family and community—counteracted the chatbot’s attempts to simulate empathy. In contrast, a meta-analysis of 60 studies found that younger audiences were the group most influenced by messages highlighting social consequences, showing the complexity of parsing message tactics in vaccine science when layered on top of a pandemic context [62]. However, for public health workers and several young people of color, the strong appeal to solidarity resonated with pandemic fatigue and frustration with individuals remaining unvaccinated in the face of widespread community distress; this finding is reinforced by the meta-analysis, which showed that so-called high-involvement audiences prefer data and strong messages that are somewhat fearful [62]. Built with audience-tested messages, the chatbot could offer a framework to support communication between health workers and community members that would integrate facilitated empathy.

Similarly, “rational arguments” eroded rapport between participants and the chatbot. Social media–related studies have used similar framing to “inoculate” audiences against misinformation, but this approach was not well-received in our study in the context of a chatbot [63,64].

The testimonial message shown to participants in the study was unappealing because the spokesperson was viewed as elitist. Future testimonials used in chatbot messages could be matched to participant demographics [65].

Although the humorous message shown to participants in this study performed poorly, humor is increasingly used to reach social media audiences otherwise disengaged from a public health topic and promote widespread sharing [66-68]. Friendly, self-deprecating humor as seen in popular voice assistant bots may be a better choice for one-to-one anonymous conversations with a chatbot than meme-style humor [69].

Limitations

Although this study revealed COVID-19 vaccine message preferences for chatbot conversations with young, urban community members in the United States, it has several limitations. First, our participants were mostly college educated. This may reflect our reliance on Twitter ads for recruitment, since Twitter users tend to be better educated and more left-leaning [70]. In addition, our chatbot’s institutional branding, widely known to promote pandemic mitigation measures, likely dissuaded some vaccine skeptics. Although most participants were vaccinated, they raised a range of concerns regarding COVID-19 vaccines, and we regard hesitancy as reflecting a wide spectrum of concerns and views, including among those who decide to get vaccinated. The uncertainties of the pandemic also challenged our study’s feasibility. Because we needed to collect data rapidly to iteratively redesign a tool already in use by public health departments, we held only 4 focus groups, limiting the transferability of the findings to similar populations in other geographic areas or other communities. However, given the observed permeability between the vaccinated and unvaccinated segments of the US population, we believe the results are relevant to efforts to counteract vaccine hesitancy [6]. The pandemic was a highly dynamic environment in which to study a tool to counter vaccine hesitancy: the spread of the well-publicized, highly contagious Delta variant coincided with recruitment and increased vaccine uptake, and in the subsequent months, residual concerns among vaccinated members of the public have surfaced, as has reluctance to get booster doses [71]. Further, most participants identified with or worked in communities with high rates of vaccine hesitancy and referenced the concerns of community members.

Moreover, stated preferences may not predict web-based behavior, and participants’ appraisal of messages in the FGDs may diverge from their assessments in the context of a dialogue-based chatbot [72]. Additionally, because of the constraints of the hour-long discussions, we were unable to show multiple varieties of humor- and testimonial-style messages, which may have yielded different findings. Other determinants of message acceptance, including the influence of gain- or loss-framing on chatbot message preference, could be explored further, and ultimately, the chatbot’s impact on actual behavior should be evaluated [62,73].

Conclusions

This study focused on establishing credible messaging for a chatbot to be used by young Americans and public health workers during the peak of the COVID-19 pandemic. We found that for young audiences, message credibility was optimized with empathetic statements and comprehensive, direct, and evidence-rich content. Pandemic-weary advocates and some young people from communities disproportionately affected by COVID-19 tended to prefer stronger, responsibility-focused messaging. Although credibility is essential to persuading users of a position, persuasion was not the target of this study. Additional randomized controlled studies are needed to determine whether a chatbot could persuade users to change their perceptions of the safety and effectiveness of vaccines and to get vaccinated.

Acknowledgments

This research and the development of the chatbot VIRA [37] were supported by the Johns Hopkins COVID-19 Training Initiative with funding from Bloomberg Philanthropies and in-kind support from IBM Research.

Abbreviations

- FGD: focus group discussion

Footnotes

Conflicts of Interest: NB-Z received research grants from Johnson & Johnson and Merck for unrelated work outside the scope of this paper. All authors declare no other conflicts of interest.

References

- 1. Ozawa S, Stack ML. Public trust and vaccine acceptance--international perspectives. Hum Vaccin Immunother. 2013 Aug;9(8):1774–8. doi: 10.4161/hv.24961. http://europepmc.org/abstract/MED/23733039

- 2. Gross L. A broken trust: lessons from the vaccine--autism wars. PLoS Biol. 2009 May 26;7(5):e1000114. doi: 10.1371/journal.pbio.1000114. https://dx.plos.org/10.1371/journal.pbio.1000114

- 3. Worldwide measles deaths climb 50% from 2016 to 2019 claiming over 207 500 lives in 2019. World Health Organization. 2020 Nov 12. [2022-05-16]. https://tinyurl.com/2jbc6he5

- 4. Ten threats to global health in 2019. World Health Organization. [2022-05-23]. https://www.who.int/news-room/spotlight/ten-threats-to-global-health-in-2019

- 5. Mortality analyses. Johns Hopkins Coronavirus Resource Center. [2022-05-16]. https://coronavirus.jhu.edu/data/mortality

- 6. King WC, Rubinstein M, Reinhart A, Mejia R. Time trends, factors associated with, and reasons for COVID-19 vaccine hesitancy: a massive online survey of US adults from January-May 2021. PLoS One. 2021 Dec 21;16(12):e0260731. doi: 10.1371/journal.pone.0260731. https://dx.plos.org/10.1371/journal.pone.0260731

- 7. Siegel M, Critchfield-Jain I, Boykin M, Owens A. Actual racial/ethnic disparities in COVID-19 mortality for the non-Hispanic Black compared to non-Hispanic White population in 35 US states and their association with structural racism. J Racial Ethn Health Disparities. 2022 Jun;9(3):886–898. doi: 10.1007/s40615-021-01028-1. http://europepmc.org/abstract/MED/33905110

- 8. Callaghan T, Moghtaderi A, Lueck JA, Hotez P, Strych U, Dor A, Fowler EF, Motta M. Correlates and disparities of intention to vaccinate against COVID-19. Soc Sci Med. 2021 Mar;272:113638. doi: 10.1016/j.socscimed.2020.113638. http://europepmc.org/abstract/MED/33414032

- 9. Fisher KA, Bloomstone SJ, Walder J, Crawford S, Fouayzi H, Mazor KM. Attitudes toward a potential SARS-CoV-2 vaccine: a survey of U.S. adults. Ann Intern Med. 2020 Dec 15;173(12):964–973. doi: 10.7326/M20-3569. https://www.acpjournals.org/doi/abs/10.7326/M20-3569?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed

- 10. Ruiz JB, Bell RA. Predictors of intention to vaccinate against COVID-19: results of a nationwide survey. Vaccine. 2021 Feb 12;39(7):1080–1086. doi: 10.1016/j.vaccine.2021.01.010. http://europepmc.org/abstract/MED/33461833

- 11. Khubchandani J, Macias Y. COVID-19 vaccination hesitancy in Hispanics and African-Americans: a review and recommendations for practice. Brain Behav Immun Health. 2021 Aug;15:100277. doi: 10.1016/j.bbih.2021.100277. https://linkinghub.elsevier.com/retrieve/pii/S2666-3546(21)00080-6

- 12. COVID data tracker. Centers for Disease Control and Prevention. [2022-02-27]. https://covid.cdc.gov/covid-data-tracker

- 13. Goldstein S, MacDonald NE, Guirguis S, SAGE Working Group on Vaccine Hesitancy. Health communication and vaccine hesitancy. Vaccine. 2015 Aug 14;33(34):4212–4. doi: 10.1016/j.vaccine.2015.04.042. https://linkinghub.elsevier.com/retrieve/pii/S0264-410X(15)00506-X

- 14. Nowak GJ, Gellin BG, MacDonald NE, Butler R, SAGE Working Group on Vaccine Hesitancy. Addressing vaccine hesitancy: the potential value of commercial and social marketing principles and practices. Vaccine. 2015 Aug 14;33(34):4204–11. doi: 10.1016/j.vaccine.2015.04.039. https://linkinghub.elsevier.com/retrieve/pii/S0264-410X(15)00503-4

- 15. Evans WD, French J. Demand creation for COVID-19 vaccination: overcoming vaccine hesitancy through social marketing. Vaccines (Basel). 2021 Apr 01;9(4):319. doi: 10.3390/vaccines9040319. https://www.mdpi.com/resolver?pii=vaccines9040319

- 16. Butler R, MacDonald NE, SAGE Working Group on Vaccine Hesitancy. Diagnosing the determinants of vaccine hesitancy in specific subgroups: the guide to Tailoring Immunization Programmes (TIP). Vaccine. 2015 Aug 14;33(34):4176–9. doi: 10.1016/j.vaccine.2015.04.038. https://linkinghub.elsevier.com/retrieve/pii/S0264-410X(15)00502-2

- 17. Vaccine misinformation management field guide. United Nations Children's Fund (UNICEF). 2020 Dec. [2021-02-02]. https://vaccinemisinformation.guide

- 18. Strully KW, Harrison TM, Pardo TA, Carleo-Evangelist J. Strategies to address COVID-19 vaccine hesitancy and mitigate health disparities in minority populations. Front Public Health. 2021 Apr 23;9:645268. doi: 10.3389/fpubh.2021.645268.

- 19. Miner AS, Laranjo L, Kocaballi AB. Chatbots in the fight against the COVID-19 pandemic. NPJ Digit Med. 2020 May 04;3:65. doi: 10.1038/s41746-020-0280-0.

- 20. Amiri P, Karahanna E. Chatbot use cases in the Covid-19 public health response. J Am Med Inform Assoc. 2022 Apr 13;29(5):1000–1010. doi: 10.1093/jamia/ocac014. http://europepmc.org/abstract/MED/35137107

- 21. Bonnevie E, Lloyd TD, Rosenberg SD, Williams K, Goldbarg J, Smyser J. Layla’s Got You: developing a tailored contraception chatbot for Black and Hispanic young women. Health Educ J. 2021;80(4):413–424. doi: 10.1177/0017896920981122.

- 22. Kowatsch T, Schachner T, Harperink S, Barata F, Dittler U, Xiao G, Stanger C, V Wangenheim F, Fleisch E, Oswald H, Möller A. Conversational agents as mediating social actors in chronic disease management involving health care professionals, patients, and family members: multisite single-arm feasibility study. J Med Internet Res. 2021 Feb 17;23(2):e25060. doi: 10.2196/25060. https://www.jmir.org/2021/2/e25060/

- 23. Pereira J, Díaz Ó. Using health chatbots for behavior change: a mapping study. J Med Syst. 2019 Apr 04;43(5):135. doi: 10.1007/s10916-019-1237-1.

- 24. Wysa. [2021-02-16]. https://www.wysa.io/

- 25. Copper-Ind C. Aetna partners with well-being app to provide mental healthcare during pandemic. International Investment. 2020 May 19. [2021-02-16]. https://www.internationalinvestment.net/news/4015424/aetna-partners-app-provide-mental-healthcare-pandemic

- 26. Alkire (née Nasr) L, O'Connor GE, Myrden S, Köcher S. Patient experience in the digital age: an investigation into the effect of generational cohorts. Journal of Retailing and Consumer Services. 2020 Nov;57:102221. doi: 10.1016/j.jretconser.2020.102221.

- 27. Yasgur BS. Millennials flock to telehealth, online research. WebMD. 2021 Apr 02. [2022-03-30]. https://www.webmd.com/lung/news/20210402/millennials-flock-to-telehealth-online-research

- 28. Altay S, Hacquin AS, Chevallier C, Mercier H. Information delivered by a chatbot has a positive impact on COVID-19 vaccines attitudes and intentions. J Exp Psychol Appl. Preprint posted on October 28, 2021. doi: 10.1037/xap0000400.

- 29. WHO Health Alert brings COVID-19 facts to billions via WhatsApp. World Health Organization. 2021 Apr 26. [2021-07-26]. https://www.who.int/news-room/feature-stories/detail/who-health-alert-brings-covid-19-facts-to-billions-via-whatsapp

- 30. Almalki M, Azeez F. Health chatbots for fighting COVID-19: a scoping review. Acta Inform Med. 2020 Dec;28(4):241–247. doi: 10.5455/aim.2020.28.241-247. http://europepmc.org/abstract/MED/33627924

- 31. Limaye RJ, Holroyd TA, Blunt M, Jamison AF, Sauer M, Weeks R, Wahl B, Christenson K, Smith C, Minchin J, Gellin B. Social media strategies to affect vaccine acceptance: a systematic literature review. Expert Rev Vaccines. 2021 Aug;20(8):959–973. doi: 10.1080/14760584.2021.1949292.

- 32. Noar SM. A 10-year retrospective of research in health mass media campaigns: where do we go from here? J Health Commun. 2006;11(1):21–42. doi: 10.1080/10810730500461059.

- 33. National Academies of Sciences, Engineering, and Medicine. Communicating Science Effectively: A Research Agenda. Washington, DC: The National Academies Press; 2017.

- 34. Renn O, Levine D. Credibility and trust in risk communication. In: Kasperson RE, Stallen PJM, editors. Communicating Risks to the Public. Dordrecht, Netherlands: Springer; 2010. pp. 175–217.

- 35. Cole JW, Chen AMH, McGuire K, Berman S, Gardner J, Teegala Y. Motivational interviewing and vaccine acceptance in children: The MOTIVE study. Vaccine. 2022 Mar 15;40(12):1846–1854. doi: 10.1016/j.vaccine.2022.01.058.

- 36. Gagneur A, Lemaître T, Gosselin V, Farrands A, Carrier N, Petit G, Valiquette L, De Wals P. A postpartum vaccination promotion intervention using motivational interviewing techniques improves short-term vaccine coverage: PromoVac study. BMC Public Health. 2018 Jun 28;18(1):811. doi: 10.1186/s12889-018-5724-y. https://bmcpublichealth.biomedcentral.com/articles/10.1186/s12889-018-5724-y

- 37. Vira, the Vax Chatbot. [2022-03-29]. https://vaxchat.org/

- 38. About COVID-19 materials for tribes. Center for American Indian Health. [2022-03-05]. https://caih.jhu.edu/home/about-covid-19-materials-for-tribes

- 39. Answers to all your questions about getting vaccinated for Covid-19. The New York Times. 2021 Oct 18. [2022-05-10]. https://www.nytimes.com/interactive/2021/well/covid-vaccine-questions.html

- 40. Twitter API. Twitter. [2022-05-10]. https://developer.twitter.com/en/docs/twitter-api

- 41. Chen X, Hay JL, Waters EA, Kiviniemi MT, Biddle C, Schofield E, Li Y, Kaphingst K, Orom H. Health literacy and use and trust in health information. J Health Commun. 2018;23(8):724–734. doi: 10.1080/10810730.2018.1511658. http://europepmc.org/abstract/MED/30160641

- 42. Peters RG, Covello VT, McCallum DB. The determinants of trust and credibility in environmental risk communication: an empirical study. Risk Anal. 1997 Feb;17(1):43–54. doi: 10.1111/j.1539-6924.1997.tb00842.x.

- 43. Bilu Y, Gera A, Hershcovich D, Sznajder B, Lahav D, Moshkowich G, Malet A, Gavron A, Slonim N. Argument invention from first principles. Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics; July 28 to August 2, 2019; Florence, Italy. Association for Computational Linguistics; 2019 Jul. pp. 1013–1026.

- 44. Mack N, Woodsong C, MacQueen K, Guest G, Namey E. Qualitative Research Methods: A Data Collector's Field Guide. Research Triangle Park, NC: Family Health International; 2005.

- 45. Report of the SAGE Working Group on vaccine hesitancy. World Health Organization. 2014 Nov 12. [2022-06-24]. https://tinyurl.com/2p8uk64j

- 46. MacDonald NE, SAGE Working Group on Vaccine Hesitancy. Vaccine hesitancy: definition, scope and determinants. Vaccine. 2015 Aug 14;33(34):4161–4. doi: 10.1016/j.vaccine.2015.04.036. https://linkinghub.elsevier.com/retrieve/pii/S0264-410X(15)00500-9

- 47. Video conferencing, cloud phone, webinars, chat, virtual events. Zoom. [2022-03-29]. https://zoom.us/

- 48. Temi. [2022-03-27]. https://www.temi.com/

- 49. User guide. Dedoose. [2022-02-28]. https://www.dedoose.com/userguide/appendix

- 50. Corbin J, Strauss A. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. 4th ed. Thousand Oaks, CA: SAGE Publications; 2008.

- 51. Charmaz K. Constructing Grounded Theory. 2nd ed. Thousand Oaks, CA: SAGE Publications; 2014.

- 52. Hennink MM, Kaiser BN, Weber MB. What influences saturation? estimating sample sizes in focus group research. Qual Health Res. 2019 Aug;29(10):1483–1496. doi: 10.1177/1049732318821692. http://europepmc.org/abstract/MED/30628545

- 53. Vraga EK, Kim SC, Cook J. Testing logic-based and humor-based corrections for science, health, and political misinformation on social media. J Broadcast Electron Media. 2019 Sep 20;63(3):393–414. doi: 10.1080/08838151.2019.1653102.

- 54. Kim J, Muhic J, Park S, Robert L. Trustworthy conversational agent design for African Americans with chronic conditions during COVID-19. Realizing AI in Healthcare: Challenges Appearing in the Wild; 2021 CHI Conference on Human Factors in Computing Systems (CHI 2021); May 8-13, 2021; Online Virtual Conference (originally Yokohama, Japan). 2020.

- 55. Ta V, Griffith C, Boatfield C, Wang X, Civitello M, Bader H, DeCero E, Loggarakis A. User experiences of social support from companion chatbots in everyday contexts: thematic analysis. J Med Internet Res. 2020 Mar 06;22(3):e16235. doi: 10.2196/16235. https://www.jmir.org/2020/3/e16235/

- 56. Inkster B, Sarda S, Subramanian V. An empathy-driven, conversational artificial intelligence agent (Wysa) for digital mental well-being: real-world data evaluation mixed-methods study. JMIR mHealth uHealth. 2018 Nov 23;6(11):e12106. doi: 10.2196/12106. https://mhealth.jmir.org/2018/11/e12106/

- 57. Fitzpatrick KK, Darcy A, Vierhile M. Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): a randomized controlled trial. JMIR Ment Health. 2017 Jun 06;4(2):e19. doi: 10.2196/mental.7785. https://mental.jmir.org/2017/2/e19/

- 58. Czeisler MÉ, Lane RI, Petrosky E, Wiley JF, Christensen A, Njai R, Weaver MD, Robbins R, Facer-Childs ER, Barger LK, Czeisler CA, Howard ME, Rajaratnam SM. Mental health, substance use, and suicidal ideation during the COVID-19 pandemic - United States, June 24-30, 2020. MMWR Morb Mortal Wkly Rep. 2020 Aug 14;69(32):1049–1057. doi: 10.15585/mmwr.mm6932a1.

- 59. Nass C, Steuer J, Siminoff ER. Computers are social actors. CHI '94: Conference Companion on Human Factors in Computing Systems; April 24-28, 1994; Boston, MA. 1994 Apr 28. pp. 72–78.

- 60. Nass C, Moon Y. Machines and mindlessness: social responses to computers. J Soc Issues. 2000 Jan;56(1):81–103. doi: 10.1111/0022-4537.00153.

- 61. Christoforakos L, Feicht N, Hinkofer S, Löscher A, Schlegl SF, Diefenbach S. Connect With Me. Exploring Influencing Factors in a Human-Technology Relationship Based on Regular Chatbot Use. Front Digit Health. 2021;3:689999. doi: 10.3389/fdgth.2021.689999. https://europepmc.org/backend/ptpmcrender.fcgi?accid=PMC8636701&blobtype=pdf

- 62. Keller PA, Lehmann DR. Designing effective health communications: a meta-analysis. Journal of Public Policy & Marketing. 2008 Sep 01;27(2):117–130. doi: 10.1509/jppm.27.2.117.

- 63. Bode L, Vraga EK. In related news, that was wrong: the correction of misinformation through related stories functionality in social media. J Commun. 2015 Jun 23;65(4):619–638. doi: 10.1111/jcom.12166.

- 64. Cook J, Lewandowsky S, Ecker UKH. Neutralizing misinformation through inoculation: exposing misleading argumentation techniques reduces their influence. PLoS One. 2017 May 5;12(5):e0175799. doi: 10.1371/journal.pone.0175799. https://dx.plos.org/10.1371/journal.pone.0175799

- 65. Ramirez A, Despres C, Chalela P, Weis J, Sukumaran P, Munoz E, McAlister A. Pilot study of peer modeling with psychological inoculation to promote coronavirus vaccination. Health Educ Res. 2022 Mar 23;37(1):1–6. doi: 10.1093/her/cyab042.

- 66. Public Health Messaging Can Be Funny Too! American College Health Association. [2022-06-28]. https://www.youtube.com/watch?v=C_dgwhWhwGQ

- 67. Chen S, Forster S, Yang J, Yu F, Jiao L, Gates J, Wang Z, Liu H, Chen Q, Geldsetzer P, Wu P, Wang C, McMahon S, Bärnighausen T, Adam M. Animated, video entertainment-education to improve vaccine confidence globally during the COVID-19 pandemic: an online randomized controlled experiment with 24,000 participants. Trials. 2022 Feb 19;23(1):161. doi: 10.1186/s13063-022-06067-5. https://trialsjournal.biomedcentral.com/articles/10.1186/s13063-022-06067-5

- 68. Vaala S, Ritter MB, Palakshappa D. Experimental effects of tweets encouraging social distancing: effects of source, emotional appeal, and political ideology on emotion, threat, and efficacy. J Public Health Manag Pract. 2022 Apr;28(2):E586–E594. doi: 10.1097/PHH.0000000000001427.

- 69. The AI companion who cares. Replika. [2021-07-26]. https://replika.ai

- 70. Wojcik S, Hughes A. Sizing up Twitter users. Pew Research Center. 2019 Apr 24. [2022-05-10]. https://www.pewresearch.org/internet/2019/04/24/sizing-up-twitter-users/

- 71. KFF COVID-19 vaccine monitor. Kaiser Family Foundation. [2022-05-22]. https://www.kff.org/coronavirus-covid-19/dashboard/kff-covid-19-vaccine-monitor-dashboard/

- 72. Loft LH, Pedersen EA, Jacobsen SU, Søborg B, Bigaard J. Using Facebook to increase coverage of HPV vaccination among Danish girls: an assessment of a Danish social media campaign. Vaccine. 2020 Jun 26;38(31):4901–4908. doi: 10.1016/j.vaccine.2020.04.032.

- 73. Nan X. Communicating to young adults about HPV vaccination: consideration of message framing, motivation, and gender. Health Commun. 2012;27(1):10–8. doi: 10.1080/10410236.2011.567447.

Supplementary Materials

Full range of messages shown to focus group participants.