Abstract

Ultrasensitive multimodal physicochemical sensing for autonomous robotic decision-making has numerous applications in agriculture, security, environmental protection, and public health. Previously reported robotic sensing technologies have primarily focused on monitoring physical parameters such as pressure and temperature. Integrating chemical sensors for autonomous dry-phase analyte detection on a robotic platform is extremely challenging and substantially underdeveloped. Here, we introduce an artificial intelligence-powered multimodal robotic sensing system (M-Bot) with an all-printed mass-producible soft electronic skin–based human-machine interface. A scalable inkjet printing technology with custom-developed nanomaterial inks was used to manufacture flexible physicochemical sensor arrays for electrophysiology recording, tactile perception, and robotic sensing of a wide range of hazardous materials including nitroaromatic explosives, pesticides, and nerve agents, as well as infectious pathogens such as SARS-CoV-2. The M-Bot decodes the surface electromyography signals collected from the human body through machine learning algorithms for remote robotic control and can perform in-situ threat compound detection in extreme or contaminated environments with user-interactive tactile and threat alarm feedback. The printed electronic-skin-based robotic sensing technology can be further generalized and applied to other remote sensing platforms. This versatility was validated on an intelligent multimodal robotic boat platform that can efficiently track the source of trace amounts of hazardous compounds through autonomous and intelligent decision-making algorithms. This fully-printed human-machine interactive multimodal sensing technology could play a crucial role in designing future intelligent robotic systems and can be easily reconfigured toward numerous new practical wearable and robotic applications.

One-Sentence Summary:

Electronic skin printed with nanomaterial inks enables machine learning–driven autonomous robotic physicochemical sensing.

INTRODUCTION

The development of advanced autonomous robotic systems that mimic and surpass human sensing capabilities is critical for environmental and agricultural protection as well as public health and security surveillance (1–4). In particular, robotic tactile perception allows for successful task implementation while avoiding harm to the device, user, and environment (4–6). Additionally, autonomous trace-level threat detection prevents human exposure to toxic chemicals when operating in extreme and hazardous environments (7, 8). Such field-deployable, on-the-spot detection tools can be applied for the rapid identification of minute concentrations of nitroaromatic explosives that pose a health and security threat if they are unchecked (9–11). In fact, there are numerous toxic compounds that need to be tightly regulated in health and agriculture, such as organophosphates (OPs): pesticides or chemical warfare nerve agents that can cause neurological disorders, infertility, and even rapid death (12, 13). Such tools can be extended to monitor pathogenic biohazards such as the SARS-CoV-2 virus without direct human exposure, which could play a crucial role in combating infectious diseases, especially as the current COVID-19 pandemic remains uncontrolled around the world (14–16). These strong demands for autonomous sensitive hazard detection have motivated the development of a controllable human-machine interactive robotic system with both physical and chemical sensing capabilities for task performance and point-of-use analysis.

Due to its high flexibility and conformability, electronic skin (e-skin) presents itself as the ideal interface between electronics and the human/robot bodies. In the literature, e-skin has demonstrated a wide range of physical and chemical sensing applications ranging from consumer electronics, digital medicine, and smart implants to environmental surveillance (17–31). Despite such promise, several challenges exist for e-skin–based multifunctional robotic systems. Because most rapid detection approaches for hazardous compounds require manual solution-based sample preparation steps, integrating chemical sensors for autonomous remote dry-phase analyte detection onto an e-skin-based robotic sensing platform is extremely challenging and substantially underdeveloped, hindering e-skin’s capabilities for robotic interaction and cognition of the external world (7, 32). A robotic manipulator would require tactile, chemical, and temperature feedback to handle arbitrary objects, collect target samples, and carry out accurate chemical analysis in extreme environments (33). Another problem for e-skin interfaces is that preparing high-performance sensors generally requires manual drop-casting modifications of nanomaterials, which can lead to large sensor variations (34). Currently, there is a lack of scalable low-cost manufacturing approaches to prepare thin, ultra-flexible, multifunctional robotic physicochemical sensor patches. In addition, there is a strong need for an efficient human-machine interface that can reliably extract physiological features (35) as well as accurately control the robot and receive real-time user-interactive feedback.

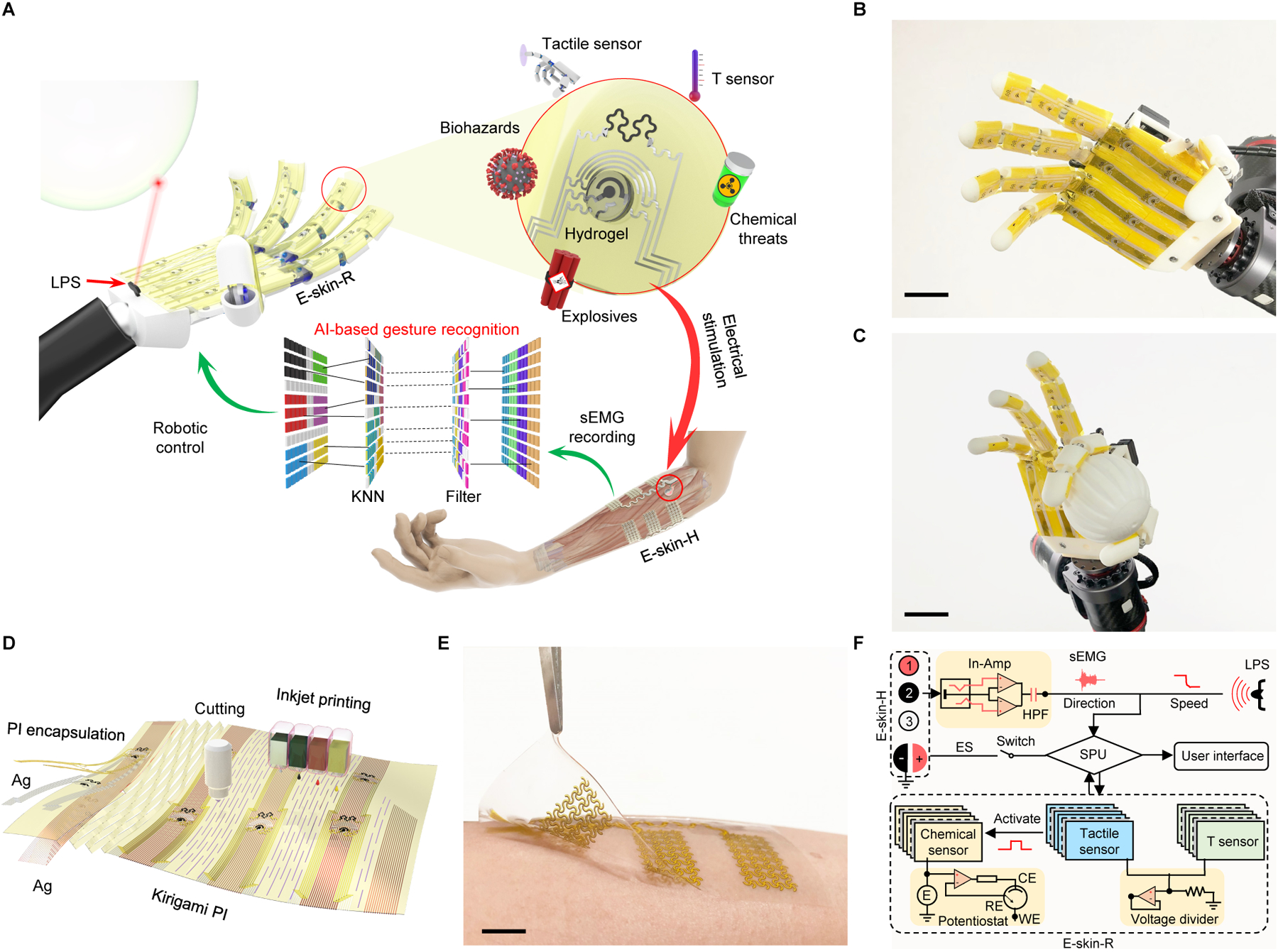

To address these challenges, we introduce here an artificial intelligence-powered human-machine interactive multimodal sensing robotic system (M-Bot) (Fig. 1A). The M-Bot is composed of two fully inkjet-printed stretchable e-skin patches, namely e-skin-R and e-skin-H, that interface conformally with the robot and human skin respectively. The e-skins with powerful physicochemical sensing capabilities are mass-producible, reconfigurable, and can be entirely prepared using a high-speed, low-cost, scalable inkjet-printing technology with a series of custom-developed nanomaterial inks. Upon collecting physiological data, the machine learning model can decode the surface electromyography (sEMG) signals from muscular contractions (recorded by e-skin-H) for robotic hand control. Simultaneously, e-skin-R can perform proximity sensing, tactile and temperature perceptual mapping, alongside real-time hydrogel-assisted electrochemical on-site sampling and analysis of both solution-phase and dry-phase threat compounds including explosives (such as 2,4,6-trinitrotoluene (TNT)), pesticides (such as OPs), and biohazards (such as SARS-CoV-2 virus). Upon detection, real-time haptic and threat alarm feedback communications were achieved via electrical stimulation of the human body with e-skin-H. The threat sensing capabilities of the M-Bot could pave the way for automated chemical sensing, facilitating machine-mediated decisions for a wide range of practical robotic assistance applications.

Fig. 1. Artificial intelligence (AI)-powered multimodal sensing robotic system (M-Bot) based on a fully-printed soft human-machine interface.

(A) Schematic of the M-Bot that contains a pair of fully-printed soft electronic skins (e-skins): e-skin-H (interfacing with the human skin) and e-skin-R (interfacing with the robotic skin) for AI-powered robotic control and multimodal physicochemical sensing with user-interactive feedback. LPS, laser proximity sensor; sEMG, surface electromyography; T, temperature; KNN, K-nearest neighbors algorithm. (B and C) Photographs of the robotic skin-interfaced e-skin-R consisting of arrays of printed multimodal sensors. Scale bars, 3 cm. (D) Schematic illustration of rapid, scalable, and cost-effective prototyping of the kirigami soft e-skin-R using inkjet printing and automatic cutting. PI, polyimide. (E) Photograph of the human skin–interfaced soft e-skin-H with arrays of sEMG and feedback stimulation electrodes. Scale bar, 1 cm. (F) Schematic signal-flow diagram of the M-Bot. In-Amp, instrumentation amplifier; HPF, high-pass filter; E, applied voltage; ES, electrical stimulation; SPU, signal processing unit. WE, CE, and RE represent working, counter, and reference electrodes of the printed chemical sensor, respectively.

RESULTS

Design of the human-machine interactive e-skins

E-skin-R comprises high-performance printed nanoengineered multimodal physicochemical sensor arrays that can be placed on the palm and fingers of the robotic hand (Fig. 1B and C). The entire sensor patch can be rapidly manufactured at large scale and low cost via drop-on-demand inkjet printing (Fig. 1D, fig. S1, and movie S1). Kirigami structures engraved on e-skin-R provide high stretchability without conductivity changes under 100% strain, which is crucial for any robotic hand with high degrees of freedom in movement. E-skin-H consists of four sEMG electrode arrays (channels), alongside a pair of electrical stimulation electrodes, which can be fabricated similarly with inkjet printing followed by transfer printing onto a stretchable polydimethylsiloxane (PDMS) substrate (Fig. 1E). With assistance from artificial intelligence (AI), multimodal physicochemical sensing, and electrical stimulation-based feedback control, e-skin-R and e-skin-H form a closed-loop human-machine interactive robotic sensing system (Fig. 1F).

Fabrication and characterization of the fully inkjet-printed multimodal sensor arrays

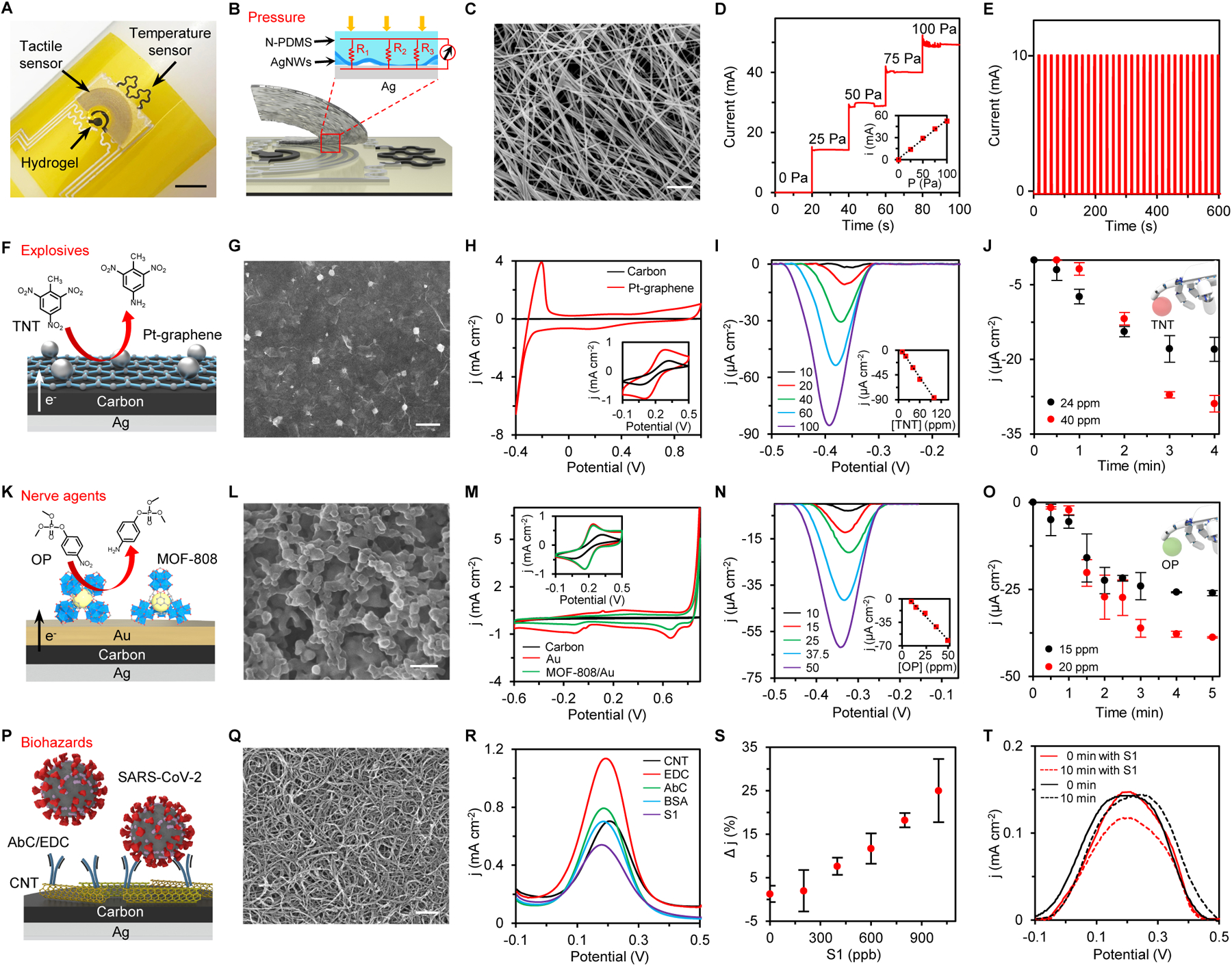

The multimodal physicochemical sensor arrays on e-skin-R were fabricated via serial printing of silver (interconnects and reference electrode), carbon (counter electrode and temperature sensor), polyimide (PI) (encapsulation), and target-selective nanoengineered sensing films (tactile sensor and biochemical sensing electrodes) (Fig. 2A). Customized nanomaterial inks were developed to meet the viscosity, density, and surface tension requirements for inkjet printing and to achieve the desired analytical performance (figs. S2 and S3 and table S1). The chemical sensors were coated with a soft gelatin hydrogel that was loaded with an electrolyte or redox probe to facilitate target analyte sampling and analysis in situ (Supplementary Methods and fig. S4). The inkjet-printed carbon electrodes (IPCEs) showed reproducible electroanalytical performance and rapid response for on-site detection of dry-phase analytes (redox probe Fe3+/Fe2+ was used in the hydrogel as an example) (fig. S5). The detection area or resolution can be enhanced by increasing either hydrogel size (fig. S6) or electrode density (fig. S7).

Fig. 2. Characterization of the fully inkjet-printed multimodal sensor arrays on the e-skin-R.

(A) Photograph of a multimodal flexible sensor array printed with custom nanomaterial inks that consists of a temperature sensor, a tactile sensor, and an electrochemical sensor coated with a soft analyte-sampling hydrogel film. Scale bar, 5 mm. (B and C) Schematic (B) and scanning electron microscopy (SEM) image (C) of the printed AgNWs/N-PDMS tactile sensor. Scale bar, 1 μm. (D and E) Response of a tactile sensor under varied pressure loads (D) and repetitive pressure loading (E). (F and G) Schematic (F) and SEM image (G) of the printed Pt-graphene electrode for TNT detection. Scale bar, 4 μm. (H) Cyclic voltammograms (CVs) of an IPCE and a printed Pt-graphene electrode in 0.5 M H2SO4 and in 5 mM K3Fe(CN)6 (inset). j, current density. (I) nDPV voltammograms and the calibration plots (inset) of TNT detection using a Pt-graphene electrode. (J) Dynamics of robotic fingertip detection of dry-phase TNT using a Pt-graphene sensor. (K and L) Schematic (K) and SEM image (L) of the printed MOF-808/Au electrode for OP detection. Scale bar, 100 nm. (M) CVs of an IPCE, a Au electrode, and a MOF-808/Au electrode in McIlvaine buffer and in 5 mM K3Fe(CN)6 (inset). (N) nDPV voltammograms of the OP detection. Inset, the calibration plots. (O) Robotic fingertip detection of dry-phase OP using a MOF-808/Au sensor. (P and Q) Schematic (P) and SEM image (Q) of the printed CNT electrode for SARS-CoV-2 detection. Scale bar, 250 nm. (R) DPV voltammograms of a printed CNT electrode in 5 mM K3Fe(CN)6 after each surface immobilization step. EDC, 1-ethyl-3-(3-dimethylaminopropyl)carbodiimide; AbC, capture antibody; BSA, bovine serum albumin. (S) Calibration plots of the CNT-based sensor for S1 detection. Δj, percentage DPV peak current changes after target incubation. (T) Response of a CNT sensor in the presence and absence of dry-phase S1. All error bars represent the s.d. from 3 sensors.

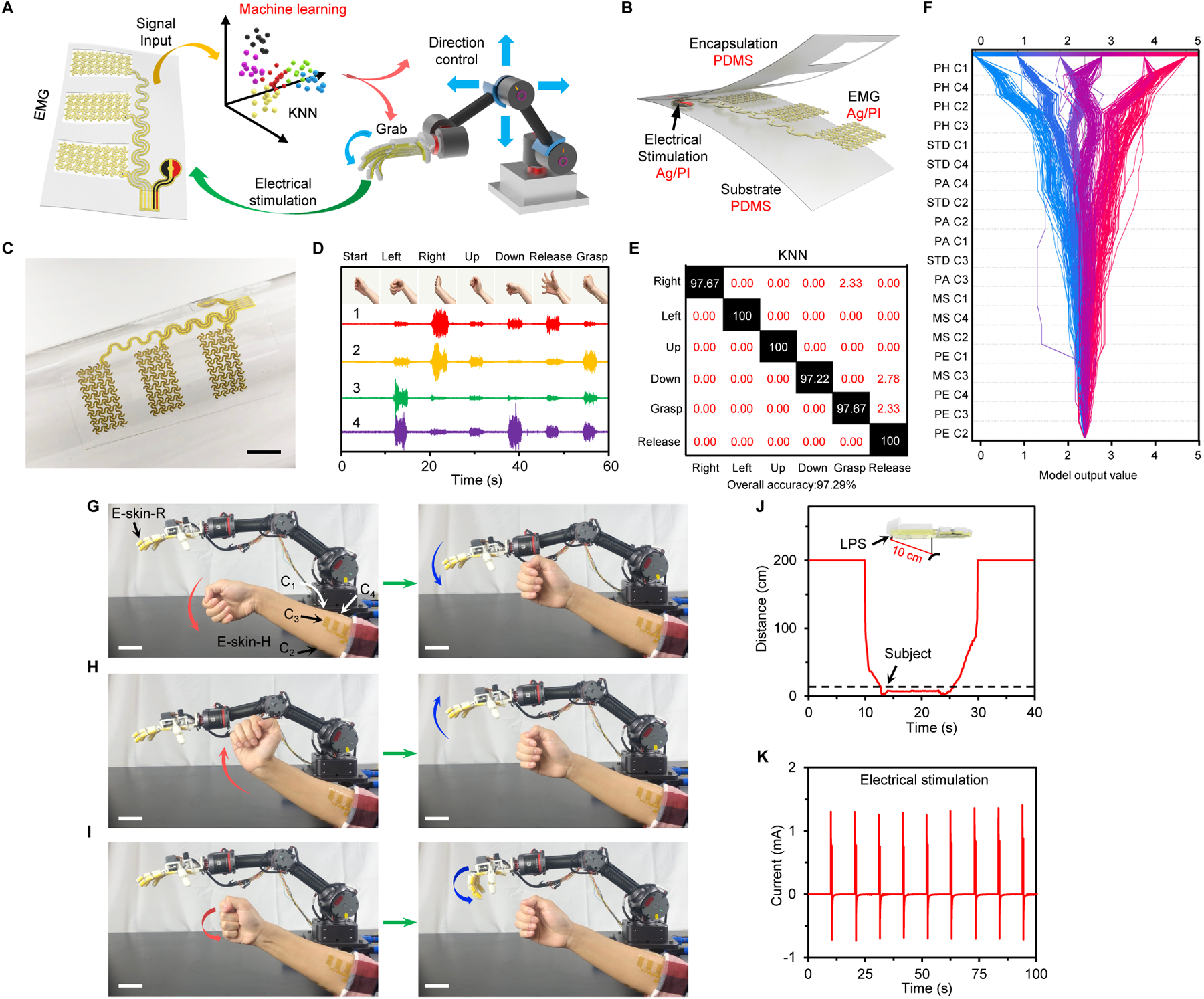

Fig. 3. Evaluation of the e-skin-H for AI-assisted human-machine interaction.

(A) Schematic of machine learning-enabled human gesture recognition and robotic control. (B and C) Schematic (B) and photograph (C) of a PDMS encapsulated soft e-skin-H with sEMG and electrical stimulation electrodes for closed-loop human interactive robotic control. Scale bar, 1 cm. (D) sEMG data collected by the four-channel e-skin-H from 6 human gestures. (E) Classification confusion matrix using a KNN model based on real-time experimental data. White text values, percentages of correct predictions; red text values, percentages of incorrect predictions. (F) A SHAP decision plot explaining how a KNN model arrives at each final classification for every datapoint using all 5 features. Each decision line tracks the features contributions to every individual classification; each final classification is represented as serialized integers (that map to a hand movement). Dotted lines represent misclassified points. (G–I) Time-lapse images of the AI-assisted human-interactive robotic control using the M-Bot. Scale bars, 5 cm. (J) Response of the LPS when the robot approaches and leaves an object. (K) Current applied on a participant’s arm during the feedback stimulation.

To effectively manipulate objects and to avoid harming either the e-skin or the object, real-time tactile feedback was enabled by incorporating a piezoresistive pressure sensor based on a printed Ag nanowires (AgNWs)/nanotextured-PDMS (N-PDMS) sensing film (Fig. 2B and C). Such tactile sensation provides the robot with the haptic capability to grasp and handle samples. The geometry changes of the AgNWs/N-PDMS in response to a pressure load change the sensor’s conductance (Fig. 2D and fig. S8). The pressure sensor displayed stable performance under repetitive pressure loading (Fig. 2E and fig. S8).

To demonstrate the feasibility of using the printed biosensors for hazardous chemical detection, a standard chemical explosive (TNT), an OP nerve agent simulant (paraoxon-methyl), and a biohazard pathogenic protein from the SARS-CoV-2 virus were chosen. The detection of TNT was achieved using a Pt-nanoparticle decorated graphene electrode, which was prepared by droplet inkjet-printing of aqueous graphene oxide (GO), Pt ions, and propylene glycol and subsequently subjected to thermal reduction. The Pt-graphene showed superior electrocatalytic performance compared to classic carbon and graphene electrodes (Fig. 2F–H and fig. S9). The reduction of p–NO2 to p–NH2 catalyzed by the Pt-graphene can be detected via negative differential pulse voltammetry (nDPV) (9, 36). The obtained reduction peak amplitude in the nDPV voltammograms showed a linear relationship with the target TNT concentrations with a sensitivity of 0.95 μA cm−2 ppm−1 and a detection limit of 10.0 ppm (Fig. 2I). It should be noted that a custom voltammogram analysis with an automatic peak extraction strategy was used by the robot to analyze the original nDPV curves as illustrated in fig. S10. When integrated onto a robotic hand, the hydrogel-coated Pt-graphene sensor could sample the dry-phase TNT efficiently and provide a stable current response within 3 minutes (Fig. 2J); the TNT sensor can be regenerated in situ through repetitive nDPV scans to deplete the sampled analyte molecules toward continuous robotic sensing (fig. S11). For OP analysis, Pt-graphene and carbon have low electrochemical activity, whereas a Zr-based metal-organic framework (MOF-808) was reported to have strong interaction with OPs (37, 38). Thus, the printed MOF-808 modified gold nanoparticle electrode (MOF-808/Au) was selected to achieve efficient non-enzymatic OP reduction at a relatively low voltage (Fig. 2K–M and fig. S12).
In this way, the catalyzed reduction of paraoxon-methyl can be monitored via nDPV using the MOF-808/Au sensors with a sensitivity of 1.4 μA cm−2 ppm−1 and a detection limit of 4.9 ppm (Fig. 2N). In addition to high sensitivity, these printed sensors could also perform high-concentration threat analysis (fig. S13). Similar to TNT detection, a 3-to-4-minute sampling time was found to be sufficient for stable robotic dry-phase OP analysis (Fig. 2O). The Pt-graphene TNT sensors and MOF-808/Au OP sensors showed high selectivity over other nitro compounds (figs. S14 and S15). Owing to the excellent stability of the catalytic performance of Pt-graphene and MOF-808/Au, the printed sensors can perform continuous TNT and OP analysis (fig. S16).
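The peak-to-concentration step described above can be illustrated with a minimal sketch. The actual peak extraction strategy used by the robot is shown only in fig. S10 and is not reproduced here; the version below assumes a straight baseline drawn between the two ends of the scan window and a calibration line through the origin. The function names `peak_height` and `concentration_ppm` are hypothetical, and the only value taken from the text is the TNT sensitivity of 0.95 μA cm−2 ppm−1.

```python
def peak_height(potentials, currents):
    """Largest deviation of the curve from a straight baseline joining its
    first and last points (an assumed baseline, not the method of fig. S10)."""
    if len(potentials) < 2 or potentials[0] == potentials[-1]:
        raise ValueError("need at least two points spanning a potential range")
    e0, j0 = potentials[0], currents[0]
    slope = (currents[-1] - j0) / (potentials[-1] - e0)
    best = 0.0
    for e, j in zip(potentials, currents):
        baseline = j0 + slope * (e - e0)
        best = max(best, abs(j - baseline))
    return best


def concentration_ppm(height_uA_cm2, sensitivity=0.95):
    """Convert a peak height (uA cm^-2) to ppm, assuming a zero intercept.
    Default sensitivity is the TNT value quoted in the text."""
    return height_uA_cm2 / sensitivity


# Synthetic nDPV scan: sloped background plus one reduction peak.
potentials = [0.0, -0.1, -0.2, -0.3, -0.4]
currents = [-1.0, -1.2, -20.4, -1.6, -1.8]
height = peak_height(potentials, currents)
estimate = concentration_ppm(height)
```

A real voltammogram would likely need smoothing and a peak window chosen around the expected reduction potential before this step; those details are left out of the sketch.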

Label-free SARS-CoV-2 virus detection was demonstrated using a printed multiwalled carbon nanotube (CNT) electrode that was functionalized with antibodies specific to SARS-CoV-2 spike 1 protein (S1) (Fig. 2P,Q). The CNT layer possessed a high electroactive surface area for sensitive electrochemical sensing while providing rich carboxylic acid functional groups for amine-containing affinity probe immobilization to achieve versatile biohazard sensing (39–41). The successful surface modification of the S1 sensor was confirmed after each surface immobilization step (Fig. 2R and figs. S17 and S18). Parts-per-billion (ppb) level S1 sensing was performed based on the signal change of the electroactive redox probe (Fe3+/Fe2+) caused by the blockage of the electrode surface due to the S1 protein binding (Fig. 2S). The response variations of such S1 sensors can be further reduced in the future with an automatic surface modification process. The SARS-CoV-2 S1 sensor shows high selectivity over other viral proteins as illustrated in fig. S19. On-the-spot robotic S1 protein detection was successfully demonstrated using a collection and detection hydrogel containing the redox probe on the sensor that touched a surface containing a dry blot of the S1 protein (Fig. 2T). Although non-specific adsorption could potentially reduce the selectivity of the hydrogel detection process (fig. S20), the semi-quantitative data conveniently and automatically obtained on site by the sensor could still provide users with rapid, real-time feedback and alerts on the presence of a biohazard.
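The S1 readout is reported as Δj, the percentage change in DPV peak current after target incubation. A minimal sketch of that calculation follows; the sign convention (a positive value means the peak dropped because bound protein blocks the electrode) and the function name `percent_peak_change` are assumptions made for illustration.

```python
def percent_peak_change(j_before, j_after):
    """Percentage drop in DPV peak current density after target incubation.
    Positive values mean the redox-probe signal decreased (assumed convention)."""
    if j_before == 0:
        raise ValueError("baseline peak current must be nonzero")
    return 100.0 * (j_before - j_after) / j_before


# Hypothetical peak current densities before and after incubation.
delta_j = percent_peak_change(20.0, 15.0)
```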

To ensure accurate hazard detection in extreme operational environments, a printed carbon-based temperature sensor was designed for on-site temperature sensing and chemical sensor calibration during operation (fig. S21). All printed sensors maintained similar performance under and after repetitive mechanical bending tests, indicating their high mechanical stability (fig. S22). The freshly prepared hydrogels can be stored at 4°C in a moist chamber for over one week and maintain similar sensing performance (fig. S23). To minimize the influence of the shearing and normal forces on the sensor performance, the AgNWs/N-PDMS pressure sensor was designed to form a protection microwell for each hydrogel-coated biosensor and to facilitate reliable chemical analyte sample collection (figs. S24 and S25); moreover, the tactile feedback from the AgNWs/N-PDMS pressure sensor could ensure stable electrochemical sensing performance (contact pressure was maintained between 0 and 500 Pa during operation).

Evaluation of e-skin-H for AI-assisted human-machine interaction

E-skin-H acts as a human-machine interface for autonomous robotic control and object manipulation (Fig. 3A). In particular, e-skin-H records neuromuscular activity, which provides an intuitive interface to perform hand gesture recognition, through its inkjet-printed PDMS-encapsulated four-channel three-electrode sEMG arrays (Fig. 3B,C and fig. S26A–C). Analysis of the interfacial contact with the skin shows that e-skin-H, owing to its serpentine structure, is highly stretchable and mechanically compliant during physical activities, which provides reliable sEMG recordings (fig. S26D–I).

Upon signal acquisition, various machine learning algorithms were evaluated for accurate gesture recognition including linear regression, random forest, artificial neural networks (ANN), support vector machines (SVM; kernels: radial, sigmoid, linear, and polynomial), as well as k-nearest neighbors (KNN). Each algorithm was shown to extract motor intention from sEMG signals, acting as a bridge between conscious thought and prosthetic actuation. Out of all the machine learning algorithms, the KNN model provided the highest prediction accuracy for all six hand gestures with an overall mean accuracy across 5000 randomly selected training data of 97.29±1.11% based on real-time experimental results collected from a human participant (Fig. 3D,E and fig. S27). The next best model was the random forest classifier, which was found to have a similar average classification accuracy except with a higher variance. The KNN model was able to provide high-accuracy recognition of gestures with different angles when applying the e-skin-H to other body parts such as the neck, lower limb, and upper back—each time achieving an accuracy of greater than 90% (figs. S28 and S29).
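To make the classification step concrete, the following is a minimal k-nearest neighbors sketch in plain Python. It is not the trained model from this work: the value of k, the Euclidean distance metric, the two-dimensional toy feature vectors, and the gesture labels are all assumptions chosen for illustration.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Label a query vector by majority vote among its k nearest
    training vectors (Euclidean distance)."""
    ranked = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Toy training set: two well-separated clusters with hypothetical labels.
train_X = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
           (5.0, 5.0), (5.0, 6.0), (6.0, 5.0)]
train_y = ["fist", "fist", "fist", "open", "open", "open"]
gesture = knn_predict(train_X, train_y, (0.2, 0.1))
```

In practice each training vector would hold the features extracted from all sEMG channels for one gesture, and k would be tuned on held-out data.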

For each gesture, five features were extracted from the associated peak in the root mean squared (RMS) filtered sEMG data (fig. S30): peak height (PH), peak standard deviation (STD), maximum slope (MS), peak average (PA), and peak energy (PE) (Supplementary Methods). The relevance of each feature and channel in the prediction method was further evaluated using Shapley additive explanation (SHAP) values (42). Through the SHAP values as well as the KNN accuracy, it was determined that PH was the most important feature for accurate gesture classification (Fig. 3F and fig. S31). When considered alongside PH, STD and PA both increased the classification accuracy, with STD being the most beneficial (figs. S31 and S32). In terms of channels, it was found that three EMG channels were sufficient to provide a high gesture accuracy of 96.31±1.25%. Adding a fourth channel was beneficial but not statistically significant (tables S2 and S3).
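The five features can be sketched as follows. The exact definitions are given in the Supplementary Methods, which are not reproduced here, so the formulas below are assumptions: PH is taken as the maximum of the RMS envelope, STD as its population standard deviation, MS as the largest sample-to-sample rise scaled by the sampling rate, PA as the mean, and PE as the sum of squared samples. The window length and function names are also hypothetical.

```python
import math
import statistics


def rms_filter(signal, window):
    """Moving root-mean-square envelope over a sliding window."""
    return [
        math.sqrt(sum(v * v for v in signal[i:i + window]) / window)
        for i in range(len(signal) - window + 1)
    ]


def peak_features(envelope, fs):
    """Five per-peak features from an RMS envelope sampled at fs Hz
    (assumed definitions, see the lead-in)."""
    slopes = [(b - a) * fs for a, b in zip(envelope, envelope[1:])]
    return {
        "PH": max(envelope),                 # peak height
        "STD": statistics.pstdev(envelope),  # peak standard deviation
        "MS": max(slopes),                   # maximum slope
        "PA": statistics.fmean(envelope),    # peak average
        "PE": sum(v * v for v in envelope),  # peak energy
    }


# Toy envelope segment around one gesture peak.
features = peak_features([0.0, 1.0, 2.0, 1.0, 0.0], fs=1.0)
```

Concatenating these five values across the sEMG channels gives one feature vector per gesture, which is the kind of input a KNN classifier would consume.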

With the KNN algorithm, the robot can imitate the user’s gesture within milliseconds for automatic object manipulation. The data acquisition and signal processing time delay to determine a gesture was around 200 milliseconds, well below the required time for optimal real-time robotic control (43). This was achieved using a sampling rate of 534 Hz and analyzing the data in batches of 100 points. The M-Bot’s time delay was substantially reduced by training the KNN model on only half of any sEMG signal for gesture recognition. By reducing the amount of data needed to determine a gesture, the machine learning model was able to predict the movement almost immediately after the gesture was complete.
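The quoted delay is consistent with the time needed to fill one batch: 100 samples at 534 Hz take about 187 ms. The short calculation below makes this explicit; attributing the remainder of the roughly 200 ms to processing overhead is an interpretation, not a figure reported in the text.

```python
def batch_duration_ms(batch_size, sampling_rate_hz):
    """Time in milliseconds needed to acquire one batch of samples."""
    return 1000.0 * batch_size / sampling_rate_hz


# Values from the text: batches of 100 points sampled at 534 Hz.
acquisition_ms = batch_duration_ms(100, 534)
```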

The AI-powered e-skin-H–enabled gesture recognition provides a framework for online multi-directional robotic control with high-accuracy remote object manipulation (as illustrated in Fig. 3G–I and movie S2). To facilitate safe robotic handling and to protect e-skin-R from uncontrolled collisions, a laser proximity sensor was integrated into the robotic hand to reduce the actuation speed as the hand approaches a barrier (<10 cm) (Fig. 3J and fig. S33). After object contact, recognition, and positive threat detection, tactile and alarm feedback can be activated to inform the user of any potential danger using a pulsed current load between the two stimulation electrodes (Fig. 3K).
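The proximity-based slowdown can be sketched as a simple speed limiter. Only the 10 cm threshold comes from the text; the linear ramp, the minimum speed fraction, and the function name `actuation_speed` are assumptions, and the actual control law of the robotic hand may differ.

```python
def actuation_speed(distance_cm, full_speed=1.0,
                    threshold_cm=10.0, min_fraction=0.1):
    """Scale actuation speed down linearly once the laser proximity
    sensor reports an obstacle closer than threshold_cm."""
    if distance_cm >= threshold_cm:
        return full_speed
    fraction = max(min_fraction, max(distance_cm, 0.0) / threshold_cm)
    return full_speed * fraction


# Hypothetical readings: far away, halfway inside the threshold, at contact.
speeds = [actuation_speed(d) for d in (20.0, 5.0, 0.0)]
```

Keeping a nonzero minimum speed lets the hand complete the approach and make contact rather than stalling just short of the object.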

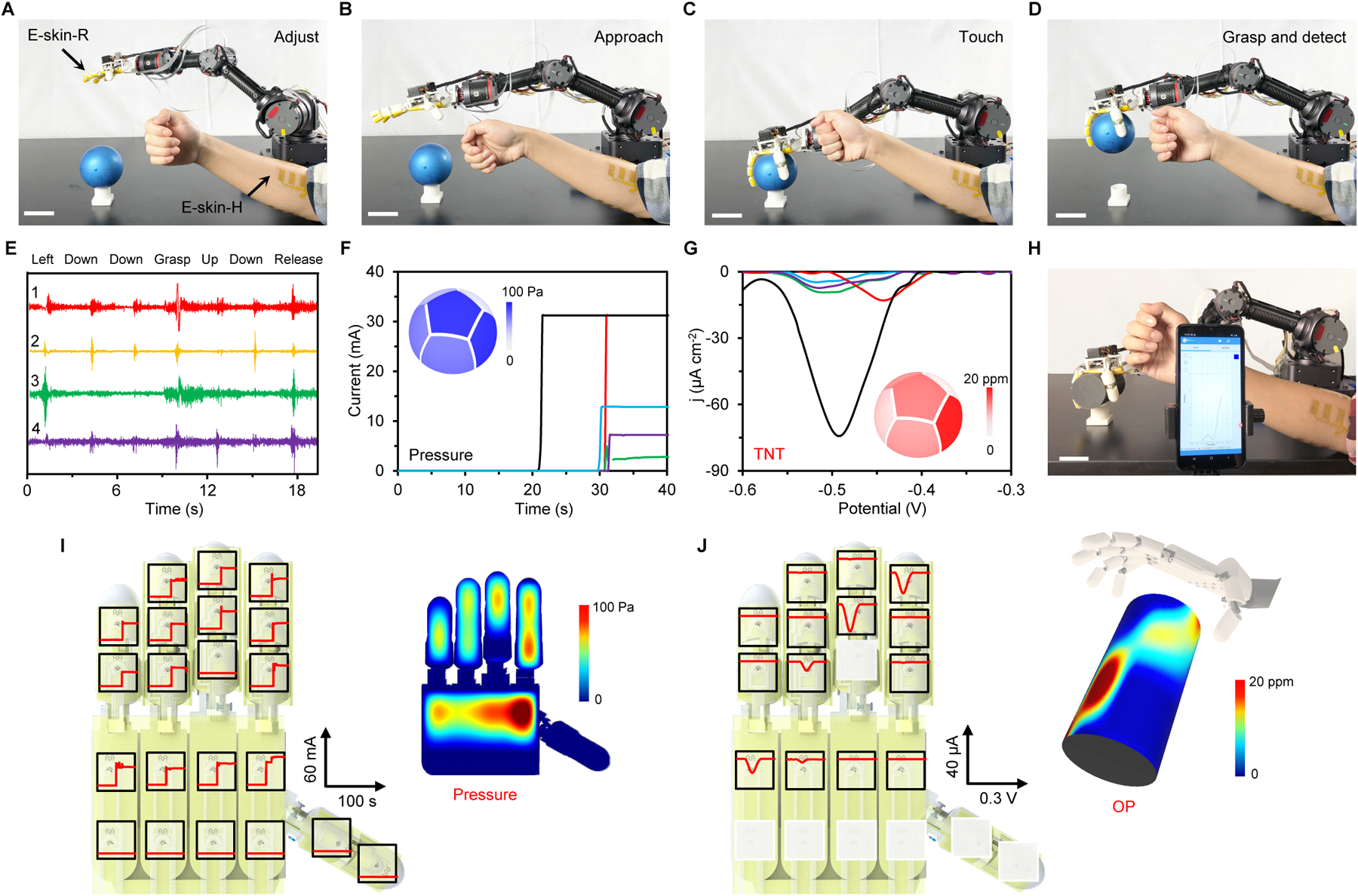

Evaluation of the M-Bot in human-interactive robotic physicochemical sensing

With delicate and precise control, the human-machine interactive M-Bot was successfully evaluated for fingertip point-of-use robotic TNT detection (fig. S34 and movie S3). The multimodal sensor data could be captured in real time using a portable multichannel potentiostat, wirelessly transmitted to a mobile phone, and displayed in a custom app (fig. S34 and movie S3). The M-Bot was also able to perform object grasping and multi-spot tactile and chemical sensing (Fig. 4A–D and movie S4). Multiplexed physicochemical data were simultaneously recorded and automatically processed without signal interferences (fig. S35). In an example demonstration, 7 AI-assisted gesture-controlled steps were used in sequence to control the robotic hand as it approached, grasped, and released a spherical object (Fig. 4E, fig. S36, and movie S4); in parallel, 5 sensor arrays were activated, displaying multiplexed tactile readings and surface TNT levels (Fig. 4F and G).

Fig. 4. Evaluation of the M-Bot in human-interactive robotic physicochemical sensing.

(A–D) Time-lapse images of the human-interactive robotic control for object grasping and on-site TNT detection. Scale bars, 5 cm. (E–G) The sEMG data collected in real time, which allow the robotic hand to approach and grasp a spherical object (E) and the corresponding tactile (F) and TNT (G) sensor responses. Insets in F and G, colored mapping of pressure and TNT distributions on the object. (H–J) Photograph of the robotic OP sensing on a cylindrical object (H) and the corresponding responses of the tactile sensors (I) and OP sensors (J) on an e-skin-R. Insets in I and J represent the color mappings of pressure and OP distributions on the object. Scale bar, 5 cm.

The use of the M-Bot for multiplexed physicochemical robotic sensing was further evaluated on an OP-contaminated cylindrical surface (Fig. 4H). During the experiment, 14 sensor arrays on e-skin-R were activated. The tactile and OP sensor responses from each sensor, along with the corresponding color mapping of their distributions across the three-dimensional (3D) surface, are displayed in Fig. 4I and Fig. 4J, respectively (detailed data demonstrated in figs. S37 and S38). We anticipate that by further increasing the number and density of the multimodal sensor arrays, more accurate and informative data can be obtained from arbitrary objects and surfaces.

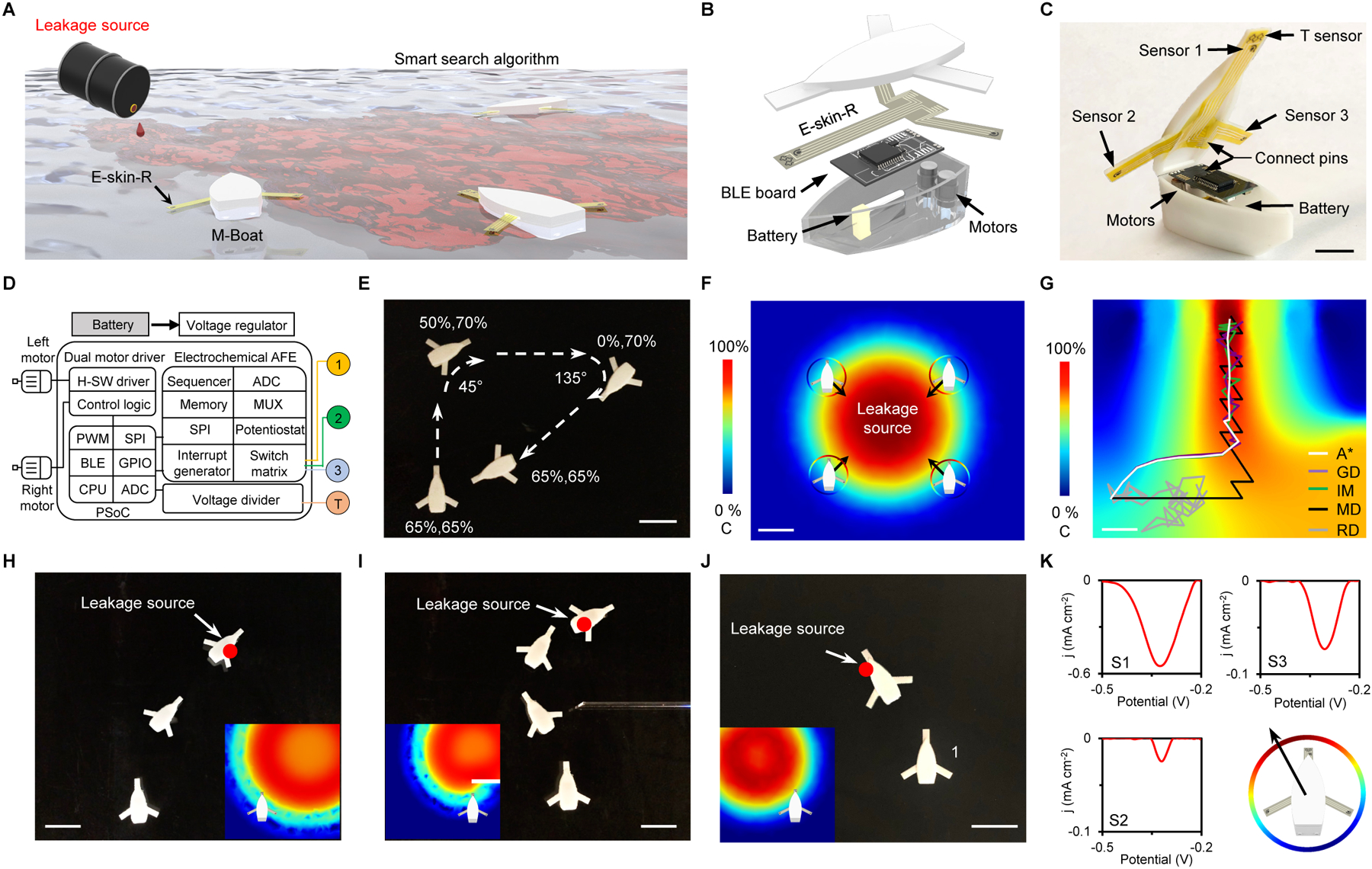

Evaluation of an e-skin-R enabled multimodal sensing robotic boat (M-Boat) for autonomous source tracking

The multimodal robotic sensing platform was further generalized onto an autonomous robotic boat capable of tracking pollutants, explosives, chemical threats, and biological hazards for risk prevention and mitigation, which is an important topic in civil security (7, 44). In this regard, our printed multimodal e-skin-R technology was adapted onto an M-Boat for real-time hazard detection and to autonomously locate the source of water-based chemical leakages (Fig. 5A). 3D printed from simple computer-aided designs, the M-Boat contains an inkjet-printed multimodal sensor array with one temperature and three chemical sensors, two electrical motors (for boat propulsion and steering), and a printed circuit board for data collection, signal processing, and motor control (Fig. 5B–D and fig. S39). The propulsion of the M-Boat can be precisely controlled by adjusting the individual duty cycle of pulsed voltages supplied to each motor (Fig. 5E, fig. S40, and movie S5). For source detection, an A* search algorithm (45) was implemented for autonomous decision-making while searching for the maximum concentration of the chemical leakage (Fig. 5F,G, fig. S41, and Supplementary Methods). At each decision point, the sensors can detect small traces of the chemical leak in three equidistant locations around the boat. With this input, the algorithm calculates the optimal direction to travel using the gradient vector, indicating the direction of the highest concentration, and a heuristic estimate of the diffusion based on an interpolated map from previous points. By utilizing the heuristic map in parallel with the gradient, the algorithm takes advantage of both the past and present results to precisely predict the spatial location of the source. The performance of the M-Boat was evaluated through simulations as well as experimentally in water tanks containing various chemical gradients induced by a low pH corrosive fluid (Fig. 5H,I, fig. S42, and movie S6) and OP leakage (Fig. 5J and K). 
In the water tank, the M-Boat performed real-time detection of the surrounding analyte concentrations, automatically adjusting its trajectory based on the A* algorithm, to successfully identify the leakage source. The M-Boat was also able to perform continuous hazard analysis and autonomous leakage tracking in seawater (fig. S43 and movie S6). The surrounding pH and ionic strength of a real-world sample matrix (e.g., lake water or seawater) did not show substantial influence on the sensor performance (fig. S44). When necessary, more real-time calibration mechanisms for precise hazard analysis can be introduced by incorporating more related biosensors (e.g., pH and conductivity sensors) into the e-skin-R. With more advanced robotic control and sensing designs, the M-Boat platform could serve as an important basis for intelligent path planning and decision-making of autonomous vehicles.
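The gradient part of the decision step can be illustrated with a small sketch. This is not the A* implementation described in the Supplementary Methods and it omits the interpolated heuristic map entirely; it only shows how three concentration readings taken at equidistant points around the boat yield a local gradient vector and a heading. The sampling angles (90°, 210°, 330°), the sampling radius, and the function names are assumptions.

```python
import math

# Assumed sampling directions around the boat, 120 degrees apart.
SAMPLE_ANGLES_DEG = (90.0, 210.0, 330.0)


def gradient_from_three(readings, radius=1.0):
    """Least-squares plane fit through three readings taken on a circle.
    For equally spaced angles this reduces to a weighted sum of the readings."""
    gx = gy = 0.0
    for c, deg in zip(readings, SAMPLE_ANGLES_DEG):
        a = math.radians(deg)
        gx += c * math.cos(a)
        gy += c * math.sin(a)
    scale = 2.0 / (3.0 * radius)
    return gx * scale, gy * scale


def heading_deg(readings, radius=1.0):
    """Direction of steepest concentration increase, in degrees [0, 360)."""
    gx, gy = gradient_from_three(readings, radius)
    return math.degrees(math.atan2(gy, gx)) % 360.0


# Synthetic field c(x, y) = 5 + 3*y sampled at the three assumed points.
readings = [5.0 + 3.0 * math.sin(math.radians(a)) for a in SAMPLE_ANGLES_DEG]
target_heading = heading_deg(readings)
```

A purely gradient-following boat can stall on noisy or flat regions of the plume, which is presumably why the reported algorithm combines the gradient with a map interpolated from earlier measurements.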

Fig. 5. Evaluation of the e-skin-R in an autonomous multimodal sensing robotic boat (M-Boat).

(A) Schematic of the intelligent M-Boat integrated with a printed multimodal e-skin-R sensor array that can perform temperature and multiplexed chemical sensing for autonomous source tracking. (B and C) Schematic (B) and photograph (C) illustrating the assembly of the M-Boat components. (D) System block diagram of the M-Boat for autonomous propulsion, sensing, and signal processing. ADC, analog-to-digital converter; AFE, analog front-end; BLE, Bluetooth low energy; CPU, central processing unit; GPIO, general-purpose input/output; H-SW, H-bridge software; MUX, multiplexer; PWM, pulse width modulation; SPI, serial peripheral interface. (E) Wireless control of the M-Boat. (F) Simulated distributions of a hazardous chemical (OP) leak and the algorithm used by the M-Boat for autonomous source tracking. C, concentration. (G) Simulation comparison of different search algorithms used by the M-Boat for intelligent source tracking. GD, gradient descent; IM, interpolated map; MD, max direction; RD, random direction. (H and I) Time-lapse images showing example demonstrations of the M-Boat enabled autonomous source tracking. Insets, simulated target (proton from corrosive acid) distributions. Scale bars, 5 cm. (J and K) Time-lapse images (J) and the nDPV voltammograms collected at location 1 (K) for autonomous decision-making and chemical threat (OP) source tracking using an M-Boat. Insets, simulated OP distribution. Scale bar, 5 cm.

DISCUSSION

Here we have described a human-machine interactive e-skin–based robotic system (M-Bot) with multimodal physicochemical sensing capabilities. The mass-producible flexible sensor arrays allow for high-performance on-site monitoring of temperature, tactile pressure, and various hazardous chemicals (in both dry-phase and liquid-phase) such as explosives, OPs, and pathogenic proteins. The integration of such multimodal sensors onto a robotic e-skin platform provides autonomous systems with interactive cognitive capabilities and substantially broadens the range of tasks robots can perform, such as combating infectious diseases like COVID-19.

Existing robotic sensing technologies are largely limited to monitoring physical parameters such as temperature and pressure. To achieve high-performance chemical sensing, nanomaterials are commonly used via manual drop-casting methods, which could lead to large sensor variations. Moreover, most electrochemical sensing strategies require detection in aqueous solutions, making them impractical for dry-phase robotic analysis. Currently, there are no reported scalable low-cost manufacturing approaches to prepare robotic physicochemical sensors. In this work, we proposed a scalable solution to fabricate flexible, multifunctional, multimodal sensor arrays prepared entirely by high-speed inkjet printing. Custom-developed functional nanomaterial inks are designed and optimized to achieve highly sensitive and selective sensors for the specific hazardous target analytes. The hydrogel-coated printed nanobiosensors allow for efficient dry-phase chemical sampling and rapid on-site hazard analysis on a robotic platform.

Manufactured using the same approach, e-skin-H ensured stable contact with soft human skin for reliable recording of neuromuscular activity to facilitate remote robotic sensing and control. To minimize the amount of data collected and analyzed for human-robot interaction, artificial intelligence and smart algorithms were applied to decode incoming information and efficiently predict and control robotic movement. An in-depth analysis of each sEMG channel’s individual contribution to the machine learning model was presented, allowing future researchers to optimize the number of electrodes needed for robotic control. Using SHAP analysis, we further untangled the hidden overlapping information among each channel’s features and identified which features carry the most non-overlapping information for gesture prediction. For the M-Bot, machine learning gesture prediction via e-skin-H was further coupled with user-interactive tactile and threat alarm feedback that allows seamless human-machine interaction for the remote deployment of robotic technology in extreme or contaminated environments. To obtain such real-time results, the robotic platform’s data acquisition, signal processing, feature extraction, and gesture prediction of the sEMG signals were performed on a millisecond time scale after the gesture was complete. The M-Boat similarly employed an A* algorithm for autonomous source detection, minimizing the boat’s path, and consequently the time and energy, needed to find potentially hazardous chemical leaks. In these applications, the systems demonstrated real-time autonomous movement, all within a low-cost mass-producible system, lowering the barrier for real-time robotic perception.

This human-machine interactive robotic sensing technology represents an attractive approach to developing advanced flexible and soft e-skins that can reliably collect vital data from the human body and the surrounding environment. Full system integration to achieve high-speed, wireless, and simultaneous multi-channel physicochemical sensing is strongly desired for future field deployment and evaluation. Moreover, we envision that, by integrating higher-density arrays and new types of multimodal sensors, this technology could substantially enhance the perceptual capabilities of future intelligent robots and pave the way to numerous new practical wearable and robotic applications.

MATERIALS AND METHODS

Materials

Graphite flake was purchased from Alfa Aesar. Sodium nitrate, potassium permanganate (KMnO4), hydrogen peroxide, potassium hexacyanoferrate(III), citric acid, chloroplatinic acid, polydimethylsiloxane (PDMS), zirconium(IV) chloride, aniline, gelatin, paraoxon-methyl, 2,4,6-trinitrotoluene (TNT) solution, 4-nitrophenol, 2-nitrophenyl octyl ether, 2-nitroethanol, 2-nitropropane, 4-nitrotoluene, 2,4-dinitrotoluene, poly(pyromellitic dianhydride-co-4,4’-oxydianiline) amic acid (PAA) solution (12.8 wt%), 1-methyl-2-pyrrolidinone (NMP), N-hydroxysulfosuccinimide sodium salt (Sulfo-NHS), N-(3-dimethylaminopropyl)-N′-ethyl carbodiimide hydrochloride (EDC), 2-(N-morpholino) ethanesulfonic acid hydrate (MES), bovine serum albumin (BSA), human immunoglobulin G (IgG), and lysozyme were purchased from Sigma-Aldrich. Sodium chloride, sulfuric acid, hydrochloric acid, disodium phosphate, 1,3,5-benzenetricarboxylic acid (H3BTC), formic acid, N,N′-dimethylformamide (DMF), potassium ferricyanide, propylene glycol, isopropyl alcohol (IPA), and phosphate-buffered saline (PBS) were purchased from Fisher Scientific. His-tagged SARS-CoV-2 Spike S1 protein (PNA002), anti-Spike-RBD human mAb (IgG) (S1-IgG, AHA013), SARS-CoV S1 (40150-V08B1), SARS-CoV Nucleocapsid Protein (NP, 40143-V08B), and SARS-CoV-2 NP (40588-V08B) were purchased from Sanyou Bio. Silver nanowire (AgNW) suspension (20 mg ml−1 in IPA) was purchased from ACS Material, LLC. Silver ink (25 wt%) and carbon ink (5 wt%) were purchased from NovaCentrix. Gold ink (10 wt%) was purchased from C-INK Co., Ltd. Carboxyl-functionalized multiwalled carbon nanotube (CNT) ink (2 mg ml−1, Nink-1000) was purchased from NanoLab, Inc. PI film (12.5 μm) was purchased from DuPont.

Preparation and characterizations of print inks

To prepare the Pt-graphene ink, graphene oxide (GO) was first prepared following a modified Hummers’ method (46). First, 1 g of graphite flake was mixed with 23 ml of H2SO4 for more than 24 hours, and then 100 mg of NaNO3 was added. Subsequently, 3 g of KMnO4 was added while the mixture was kept below 5°C in an ice bath. After stirring at 40°C for another 30 minutes, 46 ml of H2O was added while the solution temperature was slowly increased to 80°C. Finally, 140 ml of H2O and 10 ml of H2O2 were introduced into the mixture to complete the reaction. The GO was washed with 1 M HCl and filtered. After drying under vacuum at 60°C, a GO suspension (2 mg ml−1) was prepared, followed by the addition of 5 mM chloroplatinic acid under sonication. Last, the suspension was mixed with propylene glycol (80:20, v:v) to form the Pt-graphene ink.

The MOF-808 was synthesized solvothermally. Briefly, H3BTC (0.236 mM) and ZrCl4 (1 mM) were mixed with 15.6 ml of the DMF and formic acid (1:0.56, v:v) solvent and sonicated for 20 minutes. Then, the mixture was transferred to a 25-ml Teflon-lined autoclave and kept at 120°C for 12 hours. After the reaction, the autoclave was naturally cooled to room temperature. The product was washed with DMF and methanol and then dried under vacuum at 60°C. Last, a MOF-808 suspension in DI water was prepared and mixed with propylene glycol (80:20, v:v) to form the MOF-808 ink.

The AgNWs ink was prepared by diluting the silver nanowire suspension with IPA to 2 mg ml−1 and sonicating it for 10 minutes. The CNT ink was prepared by mixing the commercial CNT ink (2 mg ml−1) with propylene glycol (80:20, v:v). PAA ink was prepared by diluting the commercial PAA solution with NMP to 3 wt%. Commercial silver and carbon inks were used as received.

The dynamic viscosity (η), density (ρ) and surface tension (γ) for all inks were characterized before printing. Dynamic viscosity was characterized with an Anton Paar MCR302 rheometer. Surface tension was measured with a Ramé-Hart contact angle goniometer using the equation

$$\gamma = \frac{\Delta\rho \, g \, R_0^{2}}{\beta} \qquad (1)$$

Here, Δρ is the density difference between air and inks, g is the gravitational acceleration, R0 is the radius of curvature at the drop apex, and β is the shape factor.
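As a worked illustration of this measurement, the short sketch below evaluates the standard pendant-drop relation, γ = Δρ g R0² / β, which is assumed here because it matches the symbols defined above. The function name and the numerical inputs are hypothetical and are not values measured in this work.

```python
G = 9.81  # gravitational acceleration, m s^-2


def pendant_drop_surface_tension(delta_rho, r0, beta, g=G):
    """Return surface tension (N m^-1) from gamma = delta_rho * g * r0**2 / beta.

    delta_rho: density difference between the ink and air (kg m^-3)
    r0: radius of curvature at the drop apex (m)
    beta: dimensionless shape factor
    """
    if beta <= 0:
        raise ValueError("shape factor must be positive")
    return delta_rho * g * r0 ** 2 / beta


# Hypothetical inputs for illustration only (not data from this study).
gamma = pendant_drop_surface_tension(delta_rho=1000.0, r0=1.5e-3, beta=0.5)
print(f"surface tension = {gamma * 1e3:.1f} mN/m")
```

In practice the goniometer software fits the drop profile to obtain R0 and β, so this function only covers the final arithmetic step.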

Fabrication and assembly of the soft inkjet-printed e-skin-R

The fabrication process of the inkjet-printed e-skin-R is illustrated in fig. S1. The PI substrate was cut with kirigami structures by automatic precision cutting (Silhouette Cameo 3). A 2-min O2 plasma surface treatment was performed with Plasma Etch PE-25 (10 – 20 cm3 min−1 O2, 100 W, 150 – 200 mTorr) to enhance the surface hydrophilicity of the PI substrate. The multimodal sensor arrays on e-skin-R were fabricated via serial printing of silver (interconnects and reference electrode), carbon (counter electrode and temperature sensor), PI (encapsulation), and target-selective nanoengineered sensing layers (e.g., AgNWs, Pt-graphene, Au, MOF-808) using an inkjet printer (DMP-2850, Fujifilm). The ink composition, characterizations, and thermal annealing conditions are shown in table S1. Thirty layers of AgNWs were printed on a nanotextured PDMS substrate (cured on 1000-mesh sandpaper) to form the piezoresistive tactile sensors. During printing, the plate temperature was set to 40°C to ensure rapid evaporation of the IPA solvent. The AgNWs/N-PDMS films were cut into a semicircular shape and mounted on the e-skin.

To prepare the biohazard S1 protein sensor (fig. S17), a CNT film was first printed on the IPCE. The carboxylic groups of the multiwalled CNTs were activated to NHS esters by drop-casting 10 μl of EDC (400 mM) and NHS (100 mM) in MES buffer (25 mM, pH 5) for 35 minutes. Next, 5 μl of 250 μg ml−1 anti-Spike-RBD antibody in PBS was dropped on the modified electrode and incubated for 2 hours. Then, 10 μl of 1% BSA in PBS was dropped and incubated for 1 hour to deactivate residual NHS esters. The modified sensors were stored in the refrigerator until use.

To assemble the robotic e-skin, the pins of the finger printed e-skin were connected with the bottom printed silver connections of palm part through a z-axis conductive tape (3M), and then e-skin-R was set on a robotic hand printed with a 3D printer (Mars Pro, Elegoo Inc.).

Characterizations of the multimodal robotic sensing performance of e-skin-R

The printed biosensors were characterized with cyclic voltammetry (scan rate, 50 mV s−1 unless otherwise noted), DPV, and amperometric i-t measurements using an electrochemical workstation (CHI 660E). McIlvaine buffer solutions (pH 6.0) were used to prepare the analyte solutions. A commercial Ag/AgCl reference electrode (CHI111) was used for characterizing the printed sensing electrodes in solution, whereas printed Ag solid-state electrodes were used for hydrogel-based sensor characterization (there was a ~0.1-V difference between these two types of reference electrodes in McIlvaine buffer). To quantify the electrochemical performance and the electrochemical surface areas, the printed electrodes were tested in 5 mM K3Fe(CN)6 and 1 M KCl at a scan rate of 5 mV s−1 from −0.1 to 0.5 V.

For the TNT and OP sensors, the nDPV measurement conditions were a scan range of −0.15 to −0.5 V, an incremental potential of 0.004 V, a pulse amplitude of 0.05 V, a pulse width of 0.05 s, and a pulse period of 0.5 s. The reduction peaks of the nDPV curves were extracted using a custom-developed iterative baseline correction algorithm. To prepare the electrolyte-loaded hydrogel for analyte sampling and sensing, 0.250 g of gelatin powder, 0.075 g of KCl, 0.071 g of citric acid, and 0.179 g of disodium phosphate were mixed in 10 ml of DI water and stirred at 80°C for 15 min. The hydrogel was stored and aged overnight. The gelatin electrolyte-loaded hydrogel was coated on the printed biosensors for dry blot detection.
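To make the nDPV settings concrete, the sketch below generates the staircase of base potentials implied by the stated scan range and increment and estimates the scan time. It assumes that one pulse period elapses per potential increment, which is the usual convention but is not stated in the text; the function name is hypothetical.

```python
def dpv_staircase(e_start, e_end, increment, pulse_period):
    """Return (list of base potentials in V, approximate scan duration in s)."""
    if increment <= 0 or pulse_period <= 0:
        raise ValueError("increment and pulse_period must be positive")
    direction = -1.0 if e_end < e_start else 1.0
    # Number of whole increments that fit inside the scan window.
    n_steps = int(abs(e_end - e_start) / increment + 1e-9)
    potentials = [round(e_start + direction * i * increment, 6)
                  for i in range(n_steps + 1)]
    # Assumption: each increment takes one full pulse period.
    return potentials, n_steps * pulse_period


potentials, duration = dpv_staircase(-0.15, -0.5, 0.004, 0.5)
print(len(potentials), "potential points, about", duration, "s per scan")
```

Under this assumption, a single scan over the stated window takes well under a minute, which is relevant when several sensors are read in sequence through a multiplexer.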

For S1 protein detection, the modified electrode was incubated with 10 μl of S1 protein in PBS for 10 minutes, and the DPV measurements ranged from −0.1 to 0.5 V. The electrochemical signal of the sensor before and after antigen binding was measured in 5 mM K3Fe(CN)6. The difference between the peak current densities (Δj) was taken as the sensor readout. A sampling hydrogel pad was prepared to demonstrate the feasibility of SARS-CoV-2 virus dry blot detection. To perform one-step detection, 10 μl of gelatin hydrogel (7.5 wt% gelatin, 10 mM K3Fe(CN)6, 0.2 M phosphate buffer pH 7.0) was placed onto a dry S1 protein blot (from 10 μl of a 1 μg ml−1 SARS-CoV-2 S1 protein droplet). Such an amount of S1 protein could potentially be found in a COVID-19 patient’s saliva droplet (47). For the dry-phase sensing selectivity study, the dry protein blots were created with the same amount of interference proteins (10 μl, 1 μg ml−1). The electrochemical signal of the gel was recorded immediately and 10 minutes after the biosensor was brought into contact with the gel.

The temperature sensor characterization was performed on a ceramic hot plate (Thermo Fisher Scientific), and an amperometric method (with an applied voltage of 2 V) was used to detect the temperature response. The piezoresistive tactile sensor characterization was also applied with a constant voltage of 2 V to record the current response under various pressure loads.

The scanning electron microscopy (SEM) images of the electrodes were obtained with a field-emission SEM (FEI Nova 600 NanoLab). Energy-dispersive x-ray spectroscopy (EDS) maps were obtained with an EDS spectrometer (Bruker Quantax EDS).

Fabrication and assembly of e-skin-H

The fabrication process of e-skin-H is illustrated in fig. S26. A 2-min O2 plasma surface treatment was performed with Plasma Etch PE-25 to enhance the surface hydrophilicity of the PI substrate. Silver interconnects were printed with the DMP-2850 printer. The PI substrate outside the printed patterns was removed by laser cutting with a 50-W CO2 laser cutter (Universal Laser System). The optimized laser cutter parameters were power 10%, speed 80%, and PPI 1,000 in vector mode. After being cleaned with ethanol and dried, the remaining patterns were transferred onto a 70-μm-thick PDMS substrate and then encapsulated with another layer of PDMS film (with the sEMG and electrical stimulation electrodes exposed). An adhesive electrode gel (Parker Laboratories, Inc.) was spread onto the electrodes before placement on human participants.

Evaluation of the human-machine interactive multimodal sensing robot

To evaluate the performance of the M-Bot, the 3D-printed robotic hand interfaced with e-skin-R was assembled onto a 5-axis robotic arm (Innfos Ltd.). The e-skin-H was then placed around a human participant’s forearm after the skin was cleaned with alcohol swabs. The sEMG data were acquired with four channels (three sEMG electrodes in each channel) through an open-source hardware shield (Olimex). The signals were sampled as integers between 0 and 1023 by a 10-bit analog-to-digital converter (ADC) and then transferred through a serial communication (COM) port. Each channel was then scaled back into voltages between 0 and 5 V. While the robotic arm control was performed in real time, data processing was performed asynchronously from signal acquisition. During processing, the data were first passed through a high-pass filter with a cutoff frequency of 100 Hz. The points were subsequently downsampled using a root mean square (RMS) filter (batch size: 400 points; step size: 10 points). The peaks detected after processing were used as features for the machine learning model. Overlapping peaks from each channel (peaks within a half peak-width of each other) were categorized as a single group. If multiple peaks were detected in a single channel’s set, then the first peak was used. The KNN training model was built using 60 samples for each of the 6 gestures. The training and testing datasets were divided 2:1, respectively, and were randomly selected with equal representation of each gesture. After the model was developed, it was further evaluated for accuracy using new data from each gesture. The LPS (TOF10120) was operated through customized interactive control software written in Python (Python 3.8). For dry blot threat detection, TNT and OP threat coatings were created by spraying analyte vapor onto the selected objects in a fume hood. Multimodal sensing data during robotic sensing operations were collected through a portable electrochemical workstation (Palmsens4) with a multiplexer.
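The ADC scaling and RMS downsampling steps described above can be sketched as follows. This is a minimal illustration rather than the authors' code: the function names are hypothetical, and the 100-Hz high-pass filter is omitted because the sampling rate is not given in the text.

```python
import math


def counts_to_volts(counts, n_bits=10, v_ref=5.0):
    """Scale raw ADC integers (0 to 2**n_bits - 1) to volts (0 to v_ref)."""
    full_scale = 2 ** n_bits - 1  # 1023 for a 10-bit converter
    return [c * v_ref / full_scale for c in counts]


def sliding_rms(signal, window=400, step=10):
    """Root mean square over windows of `window` points advanced by `step` points."""
    out = []
    for start in range(0, len(signal) - window + 1, step):
        chunk = signal[start:start + window]
        out.append(math.sqrt(sum(x * x for x in chunk) / window))
    return out


# Synthetic trace alternating between the ADC limits (illustration only).
raw = [0, 1023] * 500
volts = counts_to_volts(raw)
envelope = sliding_rms(volts)  # batch size 400, step size 10, as in the text
print(len(volts), "samples reduced to", len(envelope), "RMS points")
```

With a step of 10 points, the RMS stage reduces the data rate by roughly a factor of ten while smoothing the trace into an envelope on which peaks can be detected.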

The validation and evaluation of the M-Bot were performed using human participants in compliance with all the ethical regulations under protocols (ID 19–0895) that were approved by the Institutional Review Board (IRB) at the California Institute of Technology. Three participants were recruited from the California Institute of Technology’s campus and the neighboring communities through advertisement. All participants gave written informed consent before study participation.

Machine learning data analysis

For each gesture, all five features were extracted from the associated peak in the RMS filtered EMG data: height, average area, standard deviation, average energy (intensity), and maximum slope. The features extracted were calculated in reference against their baselines, which were determined via a binary search of the previous data in 50-ms intervals.
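The five features can be illustrated with the sketch below. The text names the features but does not give their formulas, so every definition here (trapezoidal area divided by duration, population standard deviation, mean squared amplitude, largest forward difference) is an assumption, as are the function and key names.

```python
import statistics


def peak_features(segment, baseline, dt):
    """Compute five illustrative features for one RMS-filtered peak segment.

    segment: RMS samples spanning the peak
    baseline: resting signal level subtracted from every sample
    dt: spacing between samples in seconds
    The definitions below are assumptions, not the authors' formulas.
    """
    if len(segment) < 2:
        raise ValueError("segment needs at least two samples")
    rel = [x - baseline for x in segment]  # baseline-referenced samples
    duration = (len(rel) - 1) * dt
    # Trapezoidal-rule area under the baseline-referenced peak.
    area = sum((a + b) / 2 * dt for a, b in zip(rel, rel[1:]))
    return {
        "height": max(rel),
        "average_area": area / duration,
        "std": statistics.pstdev(rel),
        "average_energy": sum(x * x for x in rel) / len(rel),
        "max_slope": max((b - a) / dt for a, b in zip(rel, rel[1:])),
    }


# Hypothetical peak segment, for illustration only.
print(peak_features([1.0, 2.0, 4.0, 2.0, 1.0], baseline=1.0, dt=0.5))
```

One such feature dictionary per channel, concatenated across the four sEMG channels, would give the feature vector passed to the classifier.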

After feature extraction, SHAP values were used to evaluate the performance enhancement of each feature extracted and EMG channel utilized. In addition to SHAP values, the average testing accuracy across 5000 training sessions was taken for each permutation of features and EMG channels, which supplemented the SHAP values in providing further insight into which channels and features contained non-overlapping beneficial information for gesture determination. For each of the 5000 trials, the testing points represented 33% of the dataset, with each gesture in the test set being proportionally represented in the full dataset. For the arm EMG dataset, this amounted to 387 movements split across 6 gestures; of those points, the KNN model was fit using 257 training points and scored on the remaining 128 testing points (testing and training were proportionally stratified across all 6 gestures).
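The repeated stratified evaluation can be sketched in plain Python as below. This is a minimal illustration under stated assumptions: the number of neighbors (k = 3), the Euclidean distance metric, the synthetic two-class data, and the gesture labels are all hypothetical, and only 20 trials are run instead of 5000 to keep the example quick.

```python
import random
from collections import Counter, defaultdict


def stratified_split(labels, test_fraction, rng):
    """Return (train_idx, test_idx) with each class represented proportionally."""
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    train, test = [], []
    for idx in by_class.values():
        rng.shuffle(idx)
        n_test = round(len(idx) * test_fraction)
        test.extend(idx[:n_test])
        train.extend(idx[n_test:])
    return train, test


def knn_predict(train_x, train_y, x, k=3):
    """Classify x by majority vote among its k nearest training points."""
    ranked = sorted(range(len(train_x)),
                    key=lambda i: sum((a - b) ** 2 for a, b in zip(train_x[i], x)))
    votes = Counter(train_y[i] for i in ranked[:k])
    return votes.most_common(1)[0][0]


def mean_accuracy(xs, ys, trials=20, test_fraction=1 / 3, k=3, seed=0):
    """Average test accuracy over repeated random stratified splits."""
    rng = random.Random(seed)
    scores = []
    for _ in range(trials):
        train, test = stratified_split(ys, test_fraction, rng)
        tx, ty = [xs[i] for i in train], [ys[i] for i in train]
        hits = sum(knn_predict(tx, ty, xs[i], k) == ys[i] for i in test)
        scores.append(hits / len(test))
    return sum(scores) / len(scores)


# Synthetic, well-separated two-class data (illustration only).
xs = [(i % 5 * 0.1, 0.0) for i in range(30)] + [(10 + i % 5 * 0.1, 0.0) for i in range(30)]
ys = ["fist"] * 30 + ["open"] * 30
print(mean_accuracy(xs, ys))
```

Repeating the same loop for each subset of features and channels gives the per-permutation accuracies that complement the SHAP values.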

Evaluation of the multimodal sensing robotic boat

To evaluate the performance of the M-Boat, the e-skin-R was assembled onto a 3D-printed boat with a four-layer printed circuit board (PCB), as shown in Fig. 5, B and C. On the PCB, a Bluetooth Low Energy (BLE) module (CYBLE-222014–01, Cypress Semiconductor) was employed for controlling the electrochemical front end through a Serial Peripheral Interface (SPI). This module was also used to control the motor driver through general-purpose input/output (GPIO) pins and pulse width modulation (PWM) and to transmit data over BLE. An electrochemical front end (AD5941, Analog Devices) was set up via SPI to perform multiplexed electrochemical measurements with the sensor arrays and to send the acquired data to the BLE module for signal processing and BLE transmission. A BLE dongle (CY5677, Cypress Semiconductor) was used to establish a BLE connection with the M-Boat and to securely receive the sensor data via BLE indications. An A* algorithm was used to analyze the sensor data and compute the M-Boat’s next movement path with the optimal motor speed. The calculated motor speed information was sent back to the BLE module in real time for the pulse width modulated control of two motors (Q4SL2BQ280001) through a dual DC motor driver (TB6612FNG, Toshiba). The entire system was powered by a 3.7-V Li-ion battery (40 mAh).
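For readers unfamiliar with A*, the sketch below shows a generic grid-based version of the search. It is not the M-Boat implementation: the grid representation, the unit step cost, the Manhattan heuristic, and the function name are assumptions chosen for illustration, and the text does not specify how sensor readings are mapped to costs or goals.

```python
import heapq


def a_star(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) and 1 (blocked) cells, or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry superseded by a cheaper route
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None


# Hypothetical tank map with a barrier across the middle row.
tank = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
print(a_star(tank, (0, 0), (2, 0)))
```

In a source-tracking setting, the goal cell would be updated as new concentration readings arrive, and the path would be replanned from the boat's current position.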

For the OP chemical threat tracking experiment, a natural diffusion gradient was generated by adding 10 drops of 20 μl of 0.1 M OP into a tank of 0.1 M NaCl solution. The seawater studies were performed in seawater samples collected from the Pacific Ocean in Los Angeles. The M-Boat was placed in the tank after 30 minutes. For the corrosive acidic threat tracking experiment, pH sensors were prepared on e-skin-R instead. Briefly, a polyaniline pH-sensitive film was electropolymerized on the IPCE in a solution containing 0.1 M aniline and 0.1 M HCl using CV from −0.2 to 1 V for 25 cycles at a scan rate of 50 mV s−1. Then, 100 μl of H2SO4 (2 M) was dropped into the middle of the water tank as the leakage source. Lastly, the M-Boat was placed in the tank after 45 min (with barriers) or 30 min (without barriers).

Statistical analysis

All quantitative values were presented as means ± standard deviation of the mean. For all sensor evaluation plots, the error bars were calculated based on standard deviation from 3 sensors. For the hydrogel stability study, the error bars were calculated based on standard deviation from three hydrogels. For bending tests of the sEMG electrodes, the error bars were calculated based on standard deviation from three independent measurements. For the machine learning analysis of the sEMG data, the model was trained on the same data across 5000 trials of randomly splitting the points between training and testing data. The accuracy profile of this training was then fit to a skewed normal distribution, where the mean was extracted.
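As a small worked example of the reporting convention, the sketch below computes a mean and standard deviation from three replicate readings. The text does not say whether the sample or population standard deviation was used; the sample form (n − 1 denominator) is assumed here, and the readings are hypothetical.

```python
import statistics


def mean_sd(values):
    """Return (mean, sample standard deviation) for replicate measurements."""
    if len(values) < 2:
        raise ValueError("need at least two replicates")
    return statistics.mean(values), statistics.stdev(values)


# Hypothetical readings from three sensors (illustration only).
m, sd = mean_sd([1.0, 2.0, 3.0])
print(f"{m:.2f} ± {sd:.2f}")
```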

Supplementary Material

Movie S3. M-Bot: Human-machine interactive robotic fingertip point of sensing.

Movie S4. M-Bot: Human-machine interactive robotic object manipulation and on-site hazard analysis.

Movie S5. M-Boat: Wireless motion control.

Movie S6. M-Boat: Autonomous source tracking.

Fig. S1. Schematic and fabrication procedure of e-skin-R.

Fig. S2. Characterization of drop-on-demand inkjet printing with custom-designed inks.

Fig. S3. Characterizations of inkjet-printed nanostructured patterns for M-Bot.

Fig. S4. Simulation of the hydrogel-based solid-phase hazard analyte sampling and sensing.

Fig. S5. Characterizations of the IPCEs.

Fig. S6. Evaluation of the M-Bot chemical sensing using hydrogels with enlarged size.

Fig. S7. Evaluation of the M-Bot chemical sensing with increased sensor density.

Fig. S8. Characterization of the printed AgNWs/N-PDMS tactile sensors.

Fig. S9. Characterization of printed Pt/graphene electrodes.

Fig. S10. The algorithm used for automatic baseline correction of the DPV voltammograms.

Fig. S11. In situ regeneration of the hydrogel-loaded Pt-graphene TNT sensors.

Fig. S12. Characterization of the printed MOF-808/Au electrodes.

Fig. S13. Evaluation of the MOF-808/Au electrode for high-concentration OP analysis.

Fig. S14. The selectivity of Pt-graphene and MOF-808/Au electrodes over OP and TNT.

Fig. S15. The selectivity of TNT and OP sensors over other nitro compounds.

Fig. S16. The continuous TNT and OP sensing with the printed TNT and OP sensors.

Fig. S17. Schematic of the modification procedure of the printed CNT electrode for SARS-CoV-2 S1 sensing.

Fig. S18. Evaluation of the stability of antibody immobilization.

Fig. S19. The selectivity of the SARS-CoV-2 S1 sensor over other viral proteins.

Fig. S20. The selectivity of the SARS-CoV-2 S1 sensor for dry-phase protein detection.

Fig. S21. Characterization of printed carbon-based temperature sensor toward real-time temperature sensing and calibration.

Fig. S22. Performance of the printed sensor arrays under mechanical deformation.

Fig. S23. Stability of the hydrogel.

Fig. S24. Influence of the contact pressure on the electrochemical sensor performance.

Fig. S25. Interference of the contact pressure on SARS-CoV-2 S1 sensor performance.

Fig. S26. Fabrication and characterization of the soft and stretchable e-skin-H.

Fig. S27. Gesture classification confusion matrix obtained with different machine learning algorithms.

Fig. S28. Evaluation of the e-skin-H on different body parts for AI-assisted human-machine interaction.

Fig. S29. Evaluation of the e-skin-H from gestures with different degrees.

Fig. S30. sEMG and root mean square (RMS) data process for robotic hand control.

Fig. S31. SHAP decision plots based on the arm sEMG dataset with respect to a KNN classification model.

Fig. S32. A SHAP heatmap that clusters each point’s SHAP value by its respective gesture classification.

Fig. S33. Evaluation of the laser proximity sensor in robotic operation.

Fig. S34. Human-interactive robotic control for object touching and on-site TNT detection.

Fig. S35. Multiplexed simultaneous physicochemical sensor analysis using the printed sensor array.

Fig. S36. Human-machine interactive robotic operation for object grasping.

Fig. S37. The tactile responses of the e-skin-R on a cylindrical object.

Fig. S38. The OP sensing responses of the e-skin-R on a cylindrical object.

Fig. S39. Schematic illustration and component list of the electronic system.

Fig. S40. Characterization of the operation of the M-Boat.

Fig. S41. Simulation comparison of the searching algorithms for M-Boat autonomous source locating.

Fig. S42. Evaluation of the M-Boat for autonomous source tracking.

Fig. S43. Evaluation of the M-Boat in seawater.

Fig. S44. Influence of ionic strength and pH on sensor performance.

Table S1. Ink composition and characterizations.

Table S2. Accuracy breakdown per feature with and without one channel removed.

Table S3. Accuracy breakdown based on five features with one and two channels.

Movie S1. Inkjet-printed soft e-skin for human-machine interaction and multimodal sensing.

Movie S2. Machine learning-assisted human-interactive robotic control.

Acknowledgments:

We gratefully acknowledge critical support and infrastructure provided for this work by the Kavli Nanoscience Institute at Caltech. J.T. was supported by the National Science Scholarship from the Agency of Science Technology and Research (A*STAR) Singapore.

Funding:

National Institutes of Health grant R01HL155815 (WG)

Office of Naval Research grant N00014-21-1-2483 (WG)

Translational Research Institute for Space Health grant NASA NNX16AO69A (WG)

Tobacco-Related Disease Research Program grant R01RG3746 (WG)

Carver Mead New Adventures Fund at California Institute of Technology (WG)

Footnotes

Competing interests: Authors declare that they have no competing interests.

Data and materials availability:

All data are available in the main text or the supplementary materials. The code for this study is available at https://github.com/Samwich1998/Robotic-Arm (sEMG-based robotic arm control) and https://github.com/Samwich1998/Boat-Search-Algorithm (M-Boat search algorithm).

References and Notes

1. Yang G-Z, Bellingham J, Dupont PE, Fischer P, Floridi L, Full R, Jacobstein N, Kumar V, McNutt M, Merrifield R, Nelson BJ, Scassellati B, Taddeo M, Taylor R, Veloso M, Wang ZL, Wood R, The grand challenges of Science Robotics. Sci. Robot. 3, eaar7650 (2018).

2. Sundaram S, Kellnhofer P, Li Y, Zhu J-Y, Torralba A, Matusik W, Learning the signatures of the human grasp using a scalable tactile glove. Nature 569, 698–702 (2019).

3. Cianchetti M, Laschi C, Menciassi A, Dario P, Biomedical applications of soft robotics. Nat. Rev. Mater. 3, 143–153 (2018).

4. Chortos A, Liu J, Bao Z, Pursuing prosthetic electronic skin. Nat. Mater. 15, 937–950 (2016).

5. Boutry CM, Negre M, Jorda M, Vardoulis O, Chortos A, Khatib O, Bao Z, A hierarchically patterned, bioinspired e-skin able to detect the direction of applied pressure for robotics. Sci. Robot. 3, eaau6914 (2018).

6. Yin J, Hinchet R, Shea H, Majidi C, Wearable soft technologies for haptic sensing and feedback. Adv. Funct. Mater. 31, 2007428 (2020).

7. Ishida H, Wada Y, Matsukura H, Chemical sensing in robotic applications: A review. IEEE Sens. J. 12, 3163–3173 (2012).

8. Trevelyan J, Hamel WR, Kang S-C, in Springer Handbook of Robotics, Siciliano B, Khatib O, Eds. (Springer International Publishing, Cham, 2016), pp. 1521–1548.

9. Wang J, Electrochemical sensing of explosives. Electroanalysis 19, 415–423 (2007).

10. Singh S, Sensors—An effective approach for the detection of explosives. J. Hazard. Mater. 144, 15–28 (2007).

11. Fainberg A, Explosives detection for aviation security. Science 255, 1531–1537 (1992).

12. Vucinic S, Antonijevic B, Tsatsakis AM, Vassilopoulou L, Docea AO, Nosyrev AE, Izotov BN, Thiermann H, Drakoulis N, Brkic D, Environmental exposure to organophosphorus nerve agents. Environ. Toxicol. Pharmacol. 56, 163–171 (2017).

13. Bajgar J, Organophosphates/nerve agent poisoning: Mechanism of action, diagnosis, prophylaxis, and treatment. Adv. Clin. Chem. 38, 151–216 (2004).

14. Gao A, Murphy RR, Chen W, Dagnino G, Fischer P, Gutierrez MG, Kundrat D, Nelson BJ, Shamsudhin N, Su H, Xia J, Zemmar A, Zhang D, Wang C, Yang G-Z, Progress in robotics for combating infectious diseases. Sci. Robot. 6, eabf1462 (2021).

15. Shen Y, Guo D, Long F, Mateos LA, Ding H, Xiu Z, Hellman RB, King A, Chen S, Zhang C, Tan H, Robots under COVID-19 pandemic: A comprehensive survey. IEEE Access 9, 1590–1615 (2021).

16. Yang G-Z, Nelson BJ, Murphy RR, Choset H, Christensen H, Collins SH, Dario P, Goldberg K, Ikuta K, Jacobstein N, Kragic D, Taylor RH, McNutt M, Combating COVID-19—The role of robotics in managing public health and infectious diseases. Sci. Robot. 5, eabb5589 (2020).

17. Ray TR, Choi J, Bandodkar AJ, Krishnan S, Gutruf P, Tian L, Ghaffari R, Rogers JA, Bio-integrated wearable systems: A comprehensive review. Chem. Rev. 119, 5461–5533 (2019).

18. Kim DH, Lu N, Ma R, Kim YS, Kim RH, Wang S, Wu J, Won SM, Tao H, Islam A, Yu KJ, Kim TI, Chowdhury R, Ying M, Xu L, Li M, Chung HJ, Keum H, McCormick M, Liu P, Zhang YW, Omenetto FG, Huang Y, Coleman T, Rogers JA, Epidermal electronics. Science 333, 838–43 (2011).

19. Gao W, Emaminejad S, Nyein HYY, Challa S, Chen K, Peck A, Fahad HM, Ota H, Shiraki H, Kiriya D, Lien D-H, Brooks GA, Davis RW, Javey A, Fully integrated wearable sensor arrays for multiplexed in situ perspiration analysis. Nature 529, 509–514 (2016).

20. Yang Y, Song Y, Bo X, Min J, Pak OS, Zhu L, Wang M, Tu J, Kogan A, Zhang H, Hsiai TK, Li Z, Gao W, A laser-engraved wearable sensor for sensitive detection of uric acid and tyrosine in sweat. Nat. Biotechnol. 38, 217–224 (2020).

21. Shih B, Shah D, Li J, Thuruthel TG, Park Y-L, Iida F, Bao Z, Kramer-Bottiglio R, Tolley MT, Electronic skins and machine learning for intelligent soft robots. Sci. Robot. 5, eaaz9239 (2020).

22. Yu Y, Nassar J, Xu C, Min J, Yang Y, Dai A, Doshi R, Huang A, Song Y, Gehlhar R, Ames AD, Gao W, Biofuel-powered soft electronic skin with multiplexed and wireless sensing for human-machine interfaces. Sci. Robot. 5, eaaz7946 (2020).

23. Someya T, Bao Z, Malliaras GG, The rise of plastic bioelectronics. Nature 540, 379–385 (2016).

24. Wang C, Li X, Hu H, Zhang L, Huang Z, Lin M, Zhang Z, Yin Z, Huang B, Gong H, Bhaskaran S, Gu Y, Makihata M, Guo Y, Lei Y, Chen Y, Wang C, Li Y, Zhang T, Chen Z, Pisano AP, Zhang L, Zhou Q, Xu S, Monitoring of the central blood pressure waveform via a conformal ultrasonic device. Nat. Biomed. Eng. 2, 687–695 (2018).

25. Kim J, Campbell AS, de Ávila BE-F, Wang J, Wearable biosensors for healthcare monitoring. Nat. Biotechnol. 37, 389–406 (2019).

26. Choi S, Han SI, Jung D, Hwang HJ, Lim C, Bae S, Park OK, Tschabrunn CM, Lee M, Bae SY, Yu JW, Ryu JH, Lee S-W, Park K, Kang PM, Lee WB, Nezafat R, Hyeon T, Kim D-H, Highly conductive, stretchable and biocompatible Ag–Au core–sheath nanowire composite for wearable and implantable bioelectronics. Nat. Nanotech. 13, 1048–1056 (2018).

27. Sim K, Ershad F, Zhang Y, Yang P, Shim H, Rao Z, Lu Y, Thukral A, Elgalad A, Xi Y, Tian B, Taylor DA, Yu C, An epicardial bioelectronic patch made from soft rubbery materials and capable of spatiotemporal mapping of electrophysiological activity. Nat. Electron. 3, 775–784 (2020).

28. Almuslem AS, Shaikh SF, Hussain MM, Flexible and stretchable electronics for harsh-environmental applications. Adv. Mater. Technol. 4, 1900145 (2019).

29. Kwon Y-T, Kim Y-S, Kwon S, Mahmood M, Lim H-R, Park S-W, Kang S-O, Choi JJ, Herbert R, Jang YC, Choa Y-H, Yeo W-H, All-printed nanomembrane wireless bioelectronics using a biocompatible solderable graphene for multimodal human-machine interfaces. Nat. Commun. 11, 3450 (2020).

30. Bandodkar AJ, Lee SP, Huang I, Li W, Wang S, Su C-J, Jeang WJ, Hang T, Mehta S, Nyberg N, Gutruf P, Choi J, Koo J, Reeder JT, Tseng R, Ghaffari R, Rogers JA, Sweat-activated biocompatible batteries for epidermal electronic and microfluidic systems. Nat. Electron. 3, 554–562 (2020).

31. Zhou Z, Chen K, Li X, Zhang S, Wu Y, Zhou Y, Meng K, Sun C, He Q, Fan W, Fan E, Lin Z, Tan X, Deng W, Yang J, Chen J, Sign-to-speech translation using machine-learning-assisted stretchable sensor arrays. Nat. Electron. 3, 571–578 (2020).

32. Ciui B, Martin A, Mishra RK, Nakagawa T, Dawkins TJ, Lyu M, Cristea C, Sandulescu R, Wang J, Chemical Sensing at the Robot Fingertips: Toward Automated Taste Discrimination in Food Samples. ACS Sens. 3, 2375–2384 (2018).

33. Amit M, Mishra RK, Hoang Q, Galan AM, Wang J, Ng TN, Point-of-use robotic sensors for simultaneous pressure detection and chemical analysis. Mater. Horiz. 6, 604–611 (2019).

34. Kaliyaraj Selva Kumar A, Zhang Y, Li D, Compton RG, A mini-review: How reliable is the drop casting technique? Electrochem. Commun. 121, 106867 (2020).

35. Moin A, Zhou A, Rahimi A, Menon A, Benatti S, Alexandrov G, Tamakloe S, Ting J, Yamamoto N, Khan Y, Burghardt F, Benini L, Arias AC, Rabaey JM, A wearable biosensing system with in-sensor adaptive machine learning for hand gesture recognition. Nat. Electron. 4, 54–63 (2021).

36. Guo S, Wen D, Zhai Y, Dong S, Wang E, Platinum nanoparticle ensemble-on-graphene hybrid nanosheet: one-pot, rapid synthesis, and used as new electrode material for electrochemical sensing. ACS Nano 4, 3959–3968 (2010).

37. Troya D, Reaction mechanism of nerve-agent decomposition with Zr-based metal organic frameworks. J. Phys. Chem. C 120, 29312–29323 (2016).

38. de Koning MC, van Grol M, Breijaert T, Degradation of paraoxon and the chemical warfare agents VX, tabun, and soman by the metal–organic frameworks UiO-66-NH2, MOF-808, NU-1000, and PCN-777. Inorg. Chem. 56, 11804–11809 (2017).

39. Wang J, Carbon-nanotube based electrochemical biosensors: A review. Electroanalysis 17, 7–14 (2005).

40. Schroeder V, Savagatrup S, He M, Lin S, Swager TM, Carbon nanotube chemical sensors. Chem. Rev. 119, 599–663 (2019).

41. Torrente-Rodríguez RM, Lukas H, Tu J, Min J, Yang Y, Xu C, Rossiter HB, Gao W, SARS-CoV-2 RapidPlex: A graphene-based multiplexed telemedicine platform for rapid and low-cost COVID-19 diagnosis and monitoring. Matter 3, 1981–1998 (2020).

42. Lundberg SM, Lee S-I, A unified approach to interpreting model predictions. Advances in Neural Information Processing Systems 2017, 4765–4774 (2017).

- 43.Hudgins B, Parker P, Scott RN, A new strategy for multifunction myoelectric control. IEEE Trans. Biomed. Eng 40, 82–94 (1993). [DOI] [PubMed] [Google Scholar]

- 44.Russell RA, Thiel D, Deveza R, Mackay-Sim A, A robotic system to locate hazardous chemical leaks. IEEE Int. Conf. Robot. Autom 1, 556–561 (1995). [Google Scholar]

- 45.Liu X, Gong D, A comparative study of A-star algorithms for search and rescue in perfect maze. International Conference on Electric Information and Control Engineering 2011, 24–27 (2011). [Google Scholar]

- 46.Hummers WS, Offeman RE, Preparation of graphitic oxide. J. Am. Chem. Soc 80, 1339–1339 (1958). [Google Scholar]

- 47.Yoon JG, Yoon J, Song JY, Yoon S-Y, Lim CS, Seong H, Noh JY, Cheong HJ, Kim WJ, Clinical Significance of a High SARS-CoV-2 Viral Load in the Saliva. J. Korean Med. Sci 35, e195 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

Supplementary Materials

Fig. S1. Schematic and fabrication procedure of e-skin-R.

Fig. S2. Characterization of drop-on-demand inkjet printing with custom-designed inks.

Fig. S3. Characterizations of inkjet-printed nanostructured patterns for M-Bot.

Fig. S4. Simulation of the hydrogel-based solid-phase hazard analyte sampling and sensing.

Fig. S5. Characterizations of the IPCEs.

Fig. S6. Evaluation of the M-Bot chemical sensing using hydrogels with enlarged size.

Fig. S7. Evaluation of the M-Bot chemical sensing with increased sensor density.

Fig. S8. Characterization of the printed AgNWs/N-PDMS tactile sensors.

Fig. S9. Characterization of printed Pt/graphene electrodes.

Fig. S10. The algorithm used for automatic baseline correction of the DPV voltammograms.

Fig. S11. In situ regeneration of the hydrogel-loaded Pt-graphene TNT sensors.

Fig. S12. Characterization of the printed MOF-808/Au electrodes.

Fig. S13. Evaluation of the MOF-808/Au electrode for high-concentration OP analysis.

Fig. S14. The selectivity of Pt-graphene and MOF-808/Au electrodes over OP and TNT.

Fig. S15. The selectivity of TNT and OP sensors over other nitro compounds.

Fig. S16. The continuous TNT and OP sensing with the printed TNT and OP sensors.

Fig. S17. Schematic of the modification procedure of the printed CNT electrode for SARS-CoV-2 S1 sensing.

Fig. S18. Evaluation of the stability of antibody immobilization.

Fig. S19. The selectivity of the SARS-CoV-2 S1 sensor over other viral proteins.

Fig. S20. The selectivity of the SARS-CoV-2 S1 sensor for dry-phase protein detection.

Fig. S21. Characterization of printed carbon-based temperature sensor toward real-time temperature sensing and calibration.

Fig. S22. Performance of the printed sensor arrays under mechanical deformation.

Fig. S23. Stability of the hydrogel.

Fig. S24. Influence of the contact pressure on the electrochemical sensor performance.

Fig. S25. Interference of the contact pressure on SARS-CoV-2 S1 sensor performance.

Fig. S26. Fabrication and characterization of the soft and stretchable e-skin-H.

Fig. S27. Gesture classification confusion matrix obtained with different machine learning algorithms.

Fig. S28. Evaluation of the e-skin-H on different body parts for AI-assisted human-machine interaction.

Fig. S29. Evaluation of the e-skin-H from gestures with different degrees.

Fig. S30. sEMG and root mean square (RMS) data process for robotic hand control.

Fig. S31. SHAP decision plots based on the arm sEMG dataset with respect to a KNN classification model.

Fig. S32. A SHAP heatmap that clusters each point’s SHAP value by its respective gesture classification.

Fig. S33. Evaluation of the laser proximity sensor in robotic operation.

Fig. S34. Human-interactive robotic control for object touching and on-site TNT detection.

Fig. S35. Multiplexed simultaneous physicochemical sensor analysis using the printed sensor array.

Fig. S36. Human-machine interactive robotic operation for object grasping.

Fig. S37. The tactile responses of the e-skin-R on a cylindrical object.

Fig. S38. The OP sensing responses of the e-skin-R on a cylindrical object.

Fig. S39. Schematic illustration and component list of the electronic system.

Fig. S40. Characterization of the operation of the M-Boat.

Fig. S41. Simulation comparison of the searching algorithms for M-Boat autonomous source locating.

Fig. S42. Evaluation of the M-Boat for autonomous source tracking.

Fig. S43. Evaluation of the M-Boat in seawater.

Fig. S44. Influence of ionic strength and pH on sensor performance.

Table S1. Ink composition and characterizations.

Table S2. Accuracy breakdown per feature with and without one channel removed.

Table S3. Accuracy breakdown based on five features with one and two channels.

Movie S1. Inkjet-printed soft e-skin for human-machine interaction and multimodal sensing.

Movie S2. Machine learning-assisted human-interactive robotic control.

Movie S3. M-Bot: Human-machine interactive robotic fingertip point of sensing.

Movie S4. M-Bot: Human-machine interactive robotic object manipulation and on-site hazard analysis.

Movie S5. M-Boat: Wireless motion control.

Movie S6. M-Boat: Autonomous source tracking.

Data Availability Statement

All data are available in the main text or the supplementary materials. The code for this study is available at https://github.com/Samwich1998/Robotic-Arm (sEMG-based robotic arm control) and https://github.com/Samwich1998/Boat-Search-Algorithm (M-Boat search algorithm).