Abstract

Wearable technology is an emerging method for the early detection of coronavirus disease 2019 (COVID-19) infection. This scoping review explored the types, mechanisms, and accuracy of wearable technology for the early detection of COVID-19. This review was conducted according to the five-step framework of Arksey and O’Malley. Studies published between December 31, 2019 and December 15, 2021 were obtained from 10 electronic databases, namely, PubMed, Embase, Cochrane, CINAHL, PsycINFO, ProQuest, Scopus, Web of Science, IEEE Xplore, and Taylor & Francis Online. Grey literature, reference lists, and key journals were also searched. All types of articles describing wearable technology for the detection of COVID-19 infection were included. Two reviewers independently screened the articles against the eligibility criteria and extracted the data using a data charting form. A total of 40 articles were included in this review. The existing literature describes 22 types of wearable technology used to detect COVID-19 infection early, which are categorized as smartwatches or fitness trackers (67%), medical devices (27%), or others (6%). Based on deviations in physiological characteristics, anomaly detection models that can detect COVID-19 infection early were built using artificial intelligence or statistical analysis techniques. Reported area-under-the-curve values ranged from 75% to 94.4%, and sensitivity and specificity values ranged from 36.5% to 100% and 73% to 95.3%, respectively. Further research is necessary to validate the effectiveness and clinical dependability of wearable technology before healthcare policymakers can mandate its use for remote surveillance.

Keywords: Artificial intelligence, COVID-19, Early detection, Wearable technology

1. Introduction

Scientists have begun to unravel the complexities of the coronavirus disease 2019 (COVID-19) virus, but the end of the pandemic is nowhere in sight as highly resistant variants continue to emerge (Fontanet et al., 2021). Asymptomatic infections remain a challenge for disease control (Gao et al., 2021; Neamah, 2020), and the effectiveness of vaccines has evidently waned in the face of the most recent Omicron variant (Callaway, 2021). Studies have shown numerous cases of reinfection (Ren et al., 2022), and technological advances assisting in COVID-19 recovery remain inchoate (Islam et al., 2020a; Islam et al., 2020b). The recent discovery of the COVID-19 treatment pill cannot be regarded as a panacea, as its efficacy is limited to the early stages of infection. The mutagenic potential of the virus may also give rise to highly resistant variants (Singh et al., 2021). With the omnipresence of COVID-19, developing a detection system that can identify the infection before symptom onset or among asymptomatic carriers is imperative to stop the domino effect of the disease (Hashmi and Asif, 2020).

Contact tracing, symptom screening, and routine testing are current COVID-19 public health surveillance methods (World Health Organization, 2020). Manual contact tracing is labor intensive, and the efficacy of digital contact tracing depends on substantial user uptake, which is difficult to achieve (Shahroz et al., 2021). Older age groups may also struggle with its navigation (Grekousis and Liu, 2021). Meanwhile, the daily symptom screening mandated by workplaces and schools is subject to respondents’ truthful reporting (Ruffini et al., 2021) and may not be reliable due to asymptomatic and pre-symptomatic presentations (Callahan et al., 2020). Moreover, the wide variety of atypical symptoms makes it difficult to distinguish a COVID-19 infection (Baj et al., 2020).

To address this ambiguity, routine reverse transcription polymerase chain reaction (RT-PCR) testing is mandated in high-risk settings such as healthcare institutions (Hellewell et al., 2021). However, RT-PCR testing is unviable in some countries owing to its long turnaround time and the need for trained personnel to perform nasal swabs safely (Peeling et al., 2021). Antigen rapid tests (ARTs) provide inexpensive self-test kits with a faster turnaround time but are less accurate (Peeling et al., 2021). RT-PCR tests and ARTs are also invasive and cause discomfort for users (Kinloch et al., 2020). Hence, other detection approaches such as wearable devices are needed to remedy the aforementioned shortcomings. Besides noticeable COVID-19 symptoms, an infection can be identified through changes in physiological characteristics, such as heart rate variability (Hasty et al., 2021), oxygen saturation (Teo, 2020), respiration rate (Natarajan et al., 2020), and arrhythmia (Öztürk et al., 2020). With such knowledge, detection methods should ideally be able to establish a baseline pattern unique to an individual and identify deviations related to an infection (Radin et al., 2021). This process can be facilitated through continuous monitoring and automation, which can be realized with wearable devices (Metcalf et al., 2016; Natarajan et al., 2020; Yetisen et al., 2018).

Wearable technology refers to electronic devices that are worn on various parts of the body or built into clothing or accessories. It leverages the miniaturization of sensors and the integration of network connectivity and predictive analytics to capture, transmit, and analyze biometric information automatically (Yetisen et al., 2018). With its ability to generate real-time measurements continuously, wearable technology requires minimal involvement from users and healthcare professionals, thereby minimizing viral transmission (Metcalf et al., 2016). Unlike other types of surveillance methods, wearable technology can tailor reliable predictions for an individual by gathering multiple physiological characteristics unobtrusively (Metcalf et al., 2016). The burgeoning use of wearable technology can be attributed to its multifunctional and versatile application (Wright and Keith, 2014). Although wearable technology is predominantly used for fitness tracking, the healthcare sector has witnessed its proliferation owing to its medical applications (Bonato, 2010; Cheung et al., 2019; Iqbal et al., 2021; Yetisen et al., 2018). Before the pandemic, wearable technology was used for the detection of other illnesses such as neurological disorders and cardiovascular and respiratory diseases (Lu et al., 2020).

A recent review recommended the use of telehealth systems and technology during the pandemic to prevent COVID-19 infection, and wearable technology shows potential primarily as a screening and surveillance tool capable of disrupting the ripple effect of COVID-19 (Ullah et al., 2021). Types, mechanisms, and accuracy are relevant aspects to consider when examining the feasibility of deploying wearable technology for the early detection of COVID-19 in real-world settings (Radin et al., 2021). Hardware components, including their form factor and placement, can influence the accuracy of the gathered physiological measurements and users’ comfort (Davies et al., 2020; Park et al., 2019), which may affect the adoption of wearable technology (Li et al., 2016). The mechanism used in the technology may also influence detection accuracy and the credibility of its results (Radin et al., 2021). Furthermore, poor or overoptimistic accuracy results may undermine the applicability of wearable technology in real-world settings (Radin et al., 2021).

Reviews specific to the early detection of COVID-19 via remote monitoring using wearable technology are limited due to the novelty of the topic. The available reviews (Islam et al., 2020a; Islam et al., 2020b; Santos et al., 2021; Vindrola-Padros et al., 2021) reported the use of wearable technology to support COVID-19-infected patients and monitor their deterioration. Other reviews (Channa et al., 2021; De Fazio et al., 2021; Ding et al., 2021; Islam et al., 2020a; Mirjalali et al., 2021) covered wearable technology under development for the early detection of COVID-19 that has not been tested on real-life subjects. The review by Anglemyer et al. (2020) focused on the digital contact tracing aspect of wearable technology, while Channa et al. (2021) examined the potential application of wearable technology in the COVID-19 pandemic in general. However, such reviews demonstrate methodological gaps. Specifically, their search strategies were not comprehensive: one was limited to only three databases (Channa et al., 2021), and another did not include grey literature (Anglemyer et al., 2020).

Given the novelty of this topic, the available evidence is complex and diverse. Hence, a scoping review methodology is suitable to map the available evidence and identify the existing gaps in knowledge for subsequent systematic reviews (Munn et al., 2018). As more research on this topic is envisaged, the findings of this review can elucidate and provide insights on the available evidence for ensuing reviews (Munn et al., 2018). This scoping review aims to map out the (1) types of wearable technology for the early detection of COVID-19 infection, (2) mechanisms, and (3) detection accuracy.

2. Methods

This scoping review was performed in accordance with the five-step framework of Arksey and O'Malley (2005). As this work is a scoping review, the quality of included papers was not appraised critically (Arksey and O'Malley, 2005). Instead, a broad overview of the use of wearable technology for the early detection of a COVID-19 infection is presented. The results of the search are presented according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses Extension for Scoping Reviews (PRISMA-ScR) checklist (Table S1) (Tricco et al., 2018). This protocol is registered in the Open Science Framework registries (https://osf.io/2v6qc).

2.1. Identifying the research questions

Based on the population, concept, context (PCC) mnemonic recommended for scoping reviews (Peters et al., 2020), the population of this review was the general worldwide population affected by the COVID-19 pandemic. The details of the eligibility criteria are presented in Table S2. The concept of interest was the types, mechanisms, and accuracy of currently available wearable technology for the early detection of COVID-19 infection in the context of the COVID-19 pandemic. In accordance with the PCC framework, the specific review questions are as follows:

(1) What types of currently available wearable technology are used to detect COVID-19 infection early?

(2) How do the mechanisms of wearable technology enable the early detection of COVID-19 infection?

(3) How accurate is wearable technology in detecting COVID-19 infection?

2.2. Identifying relevant studies

A three-step search strategy recommended by the Joanna Briggs Institute (JBI) was utilized for a comprehensive search (Peters et al., 2017). First, a preliminary search of PubMed clinical queries and the Cochrane Database of Systematic Reviews was conducted using search terms such as “wearable technology” and “COVID-19,” but no scoping reviews on this topic were identified. The gaps in similar reviews were evaluated, as outlined in the Introduction. The text words and index terms used in the titles and abstracts of the retrieved papers were also analyzed.

Second, the identified keywords and index terms were refined and used across all the databases and grey literature sources. The Peer Review of Electronic Search Strategies checklist was used to guide the electronic literature search strategy (McGowan et al., 2016). An experienced science librarian was engaged to check the search strategy (Table S3), which included all the identified keywords and index terms, adapted to each database or information source. The final search terms used were “wearable technology,” “wearable electronic devices,” “wearable sensors,” “COVID-19,” and “SARS-CoV-2.” No language restrictions were imposed. Studies published between December 31, 2019, when COVID-19 was first discovered, and December 15, 2021 were included. Published and unpublished studies were searched across 10 electronic databases, namely, PubMed, Embase, Cochrane, CINAHL, PsycINFO, ProQuest, Scopus, Web of Science, IEEE Xplore, and Taylor & Francis Online. Sources of the unpublished studies or grey literature included ProQuest Dissertation & Theses, ClinicalTrials.gov, and Google Scholar.

Third, the reference lists of all the included articles were screened to obtain additional studies. A manual search was also performed to find key journals related to wearable technology and COVID-19 (i.e., Sensors, Nature Medicine, Frontiers in Digital Health, The Lancet, The Lancet Digital Health, and The Lancet Infectious Diseases).

2.3. Study selection

After the database search, all the identified records were uploaded to EndNote X20 (The EndNote Team, 2020), and the duplicate articles were removed. Articles were included if they (1) described a type of currently available wearable technology that will be or was used in experiments on real-life subjects who developed COVID-19 or are COVID-19 positive, and/or (2) described its mechanism, and/or (3) described its accuracy in the early detection of COVID-19 infection, and (4) reported only one primary study together with its study design. Articles were excluded if they (1) described only the potential application of wearable technology for the early detection of COVID-19 infection, or wearable technology that will not be or was not used in experiments on real-life subjects who developed COVID-19 or are COVID-19 positive (e.g., simulated prototypes or devices tested only on healthy individuals), or did not describe its (2) mechanisms or (3) accuracy for COVID-19 early detection; (4) were not about the early detection of COVID-19 (e.g., point-of-care diagnosis of COVID-19 or monitoring of COVID-19 patients); or (5) described more than one primary study (e.g., reviews) or did not describe the primary study design (e.g., news, perspectives, and editorials). A pilot test was performed by the two reviewers on 10 articles to refine the eligibility criteria. Both reviewers independently screened the titles and abstracts, followed by the full texts, against the eligibility criteria. Disagreements between the reviewers at each stage of the selection process were resolved by reaching a consensus.

2.4. Charting the data

The data were charted on a data collection form jointly developed by both reviewers, adapted from the JBI methodology guidance for scoping reviews (Peters et al., 2020). The data items were selected based on the review questions and categorized as study details (e.g., authors’ names, year of publication, study design, name of study, clinical trial number, and aim of study), population (study population, sample size, and geographical region of study), wearable technology type (name, placement, form factor, FDA status, type of sensor, and type of physiological measurements gathered), wearable technology mechanism (name of all mechanisms, best mechanism, type of best mechanism, ability to distinguish COVID-19 from similar diseases, data used, and data preparation techniques), and wearable technology accuracy (reference test used, accuracy measures, and values). The two independent reviewers pilot tested the form on five randomly selected articles, and amendments were made before its use. The two reviewers independently charted the data using Microsoft Excel before making comparisons. Disagreements were resolved through discussions. Finally, the data were presented as visual representations, tables, and narrative syntheses.

3. Results

3.1. Study selection and characteristics

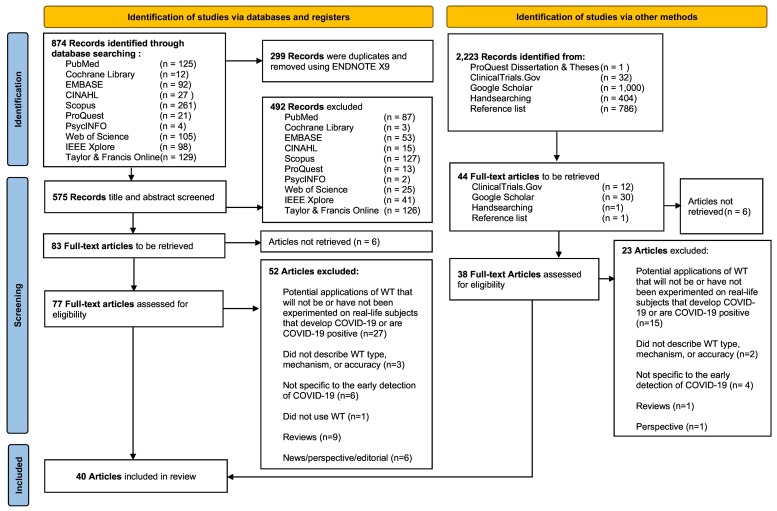

A total of 874 articles were identified from the 10 electronic databases. Thereafter, 299 duplicate articles were removed using EndNote X20 (The EndNote Team, 2020). The titles and abstracts of the remaining 575 articles were screened against the eligibility criteria, and the full texts of 77 articles were retrieved and assessed against the eligibility criteria. An additional 38 full-text articles were retrieved from the grey literature sources, reference lists, and key journals. Subsequently, 75 articles were excluded (Table S4); thus, 40 articles from the electronic databases and other sources were included (Table S5 and Fig. S1). The selection process is presented as a PRISMA flow diagram in Fig. 1.

Fig. 1.

PRISMA 2020 flow diagram of study retrieval and selection process.

Note: ECG = electrocardiography and PPG = Photoplethysmography.
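The counts reported for the selection process can be cross-checked with simple arithmetic. The short Python sketch below uses only the numbers stated in Section 3.1; the variable names are illustrative and not part of the review.

```python
# Counts as reported in Section 3.1; variable names are illustrative only.
identified = 874                      # records from the 10 electronic databases
removed_before_screening = 299        # records removed using the reference manager
screened = identified - removed_before_screening

full_text_from_databases = 77         # full texts retrieved after title/abstract screening
full_text_from_other_sources = 38     # grey literature, reference lists, key journals
full_text_assessed = full_text_from_databases + full_text_from_other_sources

excluded_full_text = 75               # articles excluded at the full-text stage
included = full_text_assessed - excluded_full_text

print(screened, full_text_assessed, included)
```

Under these figures, the 575 screened records and the 40 included articles are consistent with the reported totals.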

Table 1 summarizes the characteristics of the selected articles. Most of the included articles were developmental papers (n = 16) and clinical trials (n = 11). The study designs were predominantly observational (n = 34), specifically, cohort studies (n = 24). The studies mainly involved participants from the United States (n = 26). Among the articles that reported on the wearable technology mechanism (n = 18), the majority had a sample size of less than 1000 individuals (n = 12).

Table 1.

Characteristics of the 40 included articles.

| No. | Author (year) | Country/Region | Study Design | Population (Sample Size) | Wearable Technology |
|---|---|---|---|---|---|
| 1. | Alavi et al. (2021) | United States/America | Prospective cohort study | COVID-19 +ve and -ve individuals and untested individuals (N = 3316) | Fitbit, Apple Watch |
| 2. | Bogu and Snyder (2021) | United States/America | Retrospective cohort study | COVID-19 +ve and -ve individuals and untested individuals and healthy individuals (N = 106) | Fitbit |
| 3. | Brakenhoff et al. (2021) | The Netherlands/Europe | Clinical trial registered protocol | Residents of the Netherlands (N = 20,000) | Ava |
| 4. | Choi (2021) | United States/America | Prospective cohort study | Students at the University (N = 2,494) | Fitbit, TempTraq |
| 5. | Choi (2021) | United States/America | Prospective cohort study | Healthcare workers at Michigan Medicine (N = 226) | Fitbit |
| 6. | Chung et al. (2020) | China/Asia | Prospective cohort study | Healthcare professionals and college students in quarantine (N = 287) | HEARThermo |
| 7. | Cislo et al. (2021) | United States/America | Prospective cohort study | College students at the University of Michigan (N = 2158) | Fitbit |
| 8. | Cleary et al. (2021) | United States/America | Prospective cohort study | COVID-19 +ve and -ve medical interns (N = 105) | Fitbit, Apple Watch |
| 9. | Clingan et al. (2021) | United States/America | Observational study protocol | Healthcare workers at Michigan Medicine (N = 226) | Fitbit, TempTraq |
| 10. | ClinOne (2021) | United States/America | Clinical trial registered protocol | All adult subjects seeking a COVID-19 test (N = 2352) | BioSticker (BioIntelliSense) |
| 11. | D’Haese et al. (2021) | United States/America | Pilot study | Front-line healthcare workers (N = 867) | Oura |
| 12. | Evidation Health (2020) | United States/America | Clinical trial registered protocol | Adult participants (ages 18+) (N = 847) | Empatica E4 |
| 13. | Gadaleta et al. (2021) | United States/America | Prospective cohort study | Participants with self-reported result for a COVID-19 swab test (N = 1131) | Fitbit, Apple Watch |
| 14. | Gielen et al. (2021) | United States/America | Case report | Biostrap users who have tested positive for SARS-CoV-2 (N = 2) | Biostrap |
| 15. | Hassantabar et al. (2021) | Italy/Europe | Retrospective cohort study | Healthy individuals, COVID-19 positive individuals (N = 87) | Empatica E4 |
| 16. | Hirten et al. (2021) | United States/America | Prospective observational study | COVID-19 +ve and -ve health care workers (N = 297) | Apple Watch |
| 17. | Hung (2020) | China/Asia | Clinical trial registered protocol | Asymptomatic subjects with close COVID-19 contact (N = 200-1000) | Everion |
| 18. | Imperial College London (2020) | United Kingdom/Europe | Clinical trial registered protocol | Returning traveler from airport with mild symptoms of COVID-19 (N = 200) | SensiumVitals |
| 19. | Iqbal et al. (2021) | United Kingdom/Europe | Pilot study | Individuals arriving to London with mild suspected COVID-19 symptoms (N = 14) | SensiumVitals |
| 20. | Jayaraman (2020) | United States/America | Clinical trial registered protocol | Individuals who may have experienced COVID-19 like symptoms (N = 100) | ADAM sensor |
| 21. | King's College London (2020) | United Kingdom/Europe | Clinical trial registered protocol | Healthy healthcare workers who work in high-risk COVID-19 areas (N = 60) | Empatica E4 |
| 22. | Liu et al. (2021) | Not reported/Europe | Retrospective cohort study | Participants with multiple sclerosis with COVID-19 symptoms (N = 87) | Fitbit |
| 23. | Lonini et al. (2020) | United States/America | Pilot study | Inpatient and home-quarantining COVID +ve individuals and healthy individuals (N = 29) | Soft-wearable |
| 24. | Miller et al. (2020) | Australia/Oceania | Retrospective cohort study | Individuals with COVID-19 +ve (N = 271) | WHOOP |
| 25. | Mishra et al. (2020) | United States/North America | Retrospective cohort study | Participants with COVID-19 diagnosis (N = 125) | Fitbit |
| 26. | Natarajan et al. (2020) | United States/America | Retrospective cohort study | Subjects diagnosed with COVID-19 (N = 2,745) | Fitbit |
| 27. | Nestor et al. (2021) | United States/America | Retrospective cohort study | Influenza individuals and COVID-19 +ve individuals (N = 32,198) | Fitbit |
| 28. | Polsky and Moraveji (2021) | United States/America | Case reports | Patients with COVID-19 (N = 3) | Health Tags (Spire Health) |
| 29. | Quer et al. (2021) | United States/America | Prospective cohort study | COVID-19 +ve and -ve individuals and untested individuals (N = 30,529) | Fitbit, Apple Watch |
| 30. | Sarwar and Agu (2021) | United States/America | Retrospective cohort study | COVID-19 positive individuals (N = 20) | Fitbit |
| 31. | Scripps Translational Science Institute (2020) | United States/America | Clinical trial registered protocol | United States residents with any connected wearable devices (N = 100,000) | Fitbit, Apple Watch, Garmin Vivosmart 4, Oura |
| 32. | Shapiro et al. (2020) | United States/America | Retrospective cohort study | Participants with self-reported diagnosed COVID-19 cases, non-COVID-19 flu cases and pre-COVID-19 flu (N = 1,352) | Fitbit |
| 33. | Skibinska et al. (2021) | United States/North America | Retrospective cohort study | COVID-19 cases, healthy controls, influenza cases (N = 68) | Fitbit |
| 34. | Smarr et al. (2020) | United States, United Kingdom, Finland, Austria, Canada, Germany, Honduras, Italy, The Netherlands, Norway, and Sweden/America and Europe | Retrospective cohort study | Subjects that reported COVID-19 infections (N = 50) | Oura |
| 35. | The Christie NHS Foundation Trust (2020) | United Kingdom/Europe | Clinical trial registered protocol | Patients with solid tumour or haematological malignancy diagnosis who present with symptoms suspicious for COVID-19 and whom the admitting clinician deems appropriate for outpatient management (N = 30) | Patient Status Engine (Lifetouch and Lifetemp) |
| 36. | Wendel et al. (2021) | United States/America | Cross-sectional study | Medical professionals in 1A and 1B vaccination phases at the UCHealth University of Colorado Hospital (N = 290) | BioButton (BioIntelliSense) |
| 37. | Wong et al. (2020) | China/Asia | Clinical trial registered protocol | Asymptomatic subjects with close COVID-19 contact under mandatory quarantine (N = 200-1000) | Everion |
| 38. | Xu (2020) | United States/America | Clinical trial registered protocol | Healthy adults and adults exposed to or diagnosed with COVID-19 (N = 324) | ANNE One (ANNE Limb and ANNE Chest) |
| 39. | Zargaran et al. (2020) | United Kingdom/Europe | Prospective observational trial | Healthcare workers in high-risk areas for COVID-19 (N = 60) | Empatica E4 |
| 40. | Zhu et al. (2020) | China, Italy, Spain, Germany and France/Asia and Europe | Retrospective cohort study | Huami device users who wore Huami device from July 1, 2017, to April 8, 2020 (N = 1.3 million) | Huami/Amazfit |

Note. RCT = randomised controlled trial, +ve = positive, -ve = negative.

3.2. Review question 1: What types of currently available wearable technology are used to detect COVID-19 infection early?

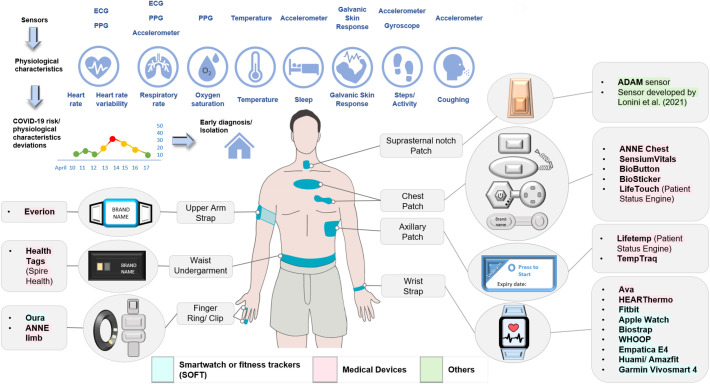

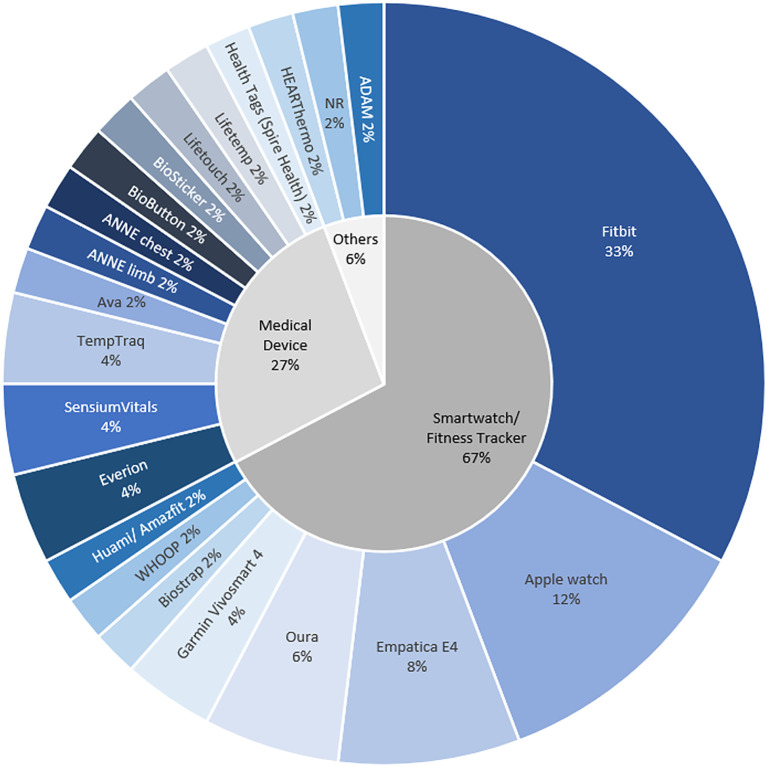

A total of 22 types of wearable technology were identified and categorized as smartwatches or fitness trackers (SOFTs), medical devices, or others (Fig. 2). SOFTs with FDA clearance were not classified as medical devices, as only their mobile application features received clearance. Technology that was neither a SOFT nor a medical device was classified as “others.” Among the different types of wearable technology, SOFTs were the most frequently used (67%; Fig. 3). SOFTs and “others” were predominantly placed on the wrist (90%) and suprasternal notch (100%), respectively, whereas a variety of placement positions (chest, axillary, upper arm, waist, and wrist; Table S6) were described for the medical devices. The medical devices and “others” were mainly in patch form (58%), while the SOFTs were in strap form (90%). The embedded sensors in wearable technology enable the remote monitoring of physiological changes that serve as potential indicators of a COVID-19 infection (Fig. 2). On average, the “others” (mean = 6.5) gathered the most types of physiological characteristics, followed by the SOFTs (mean = 6.1) and medical devices (mean = 4.1; Fig. S2 and Table S7).

Fig. 2.

Types of wearable technology used for early detection of COVID-19.

Fig. 3.

Frequency of wearable technology types among the 40 included articles.

3.3. Review question 2: How do the mechanisms of wearable technology enable the early detection of COVID-19 infection?

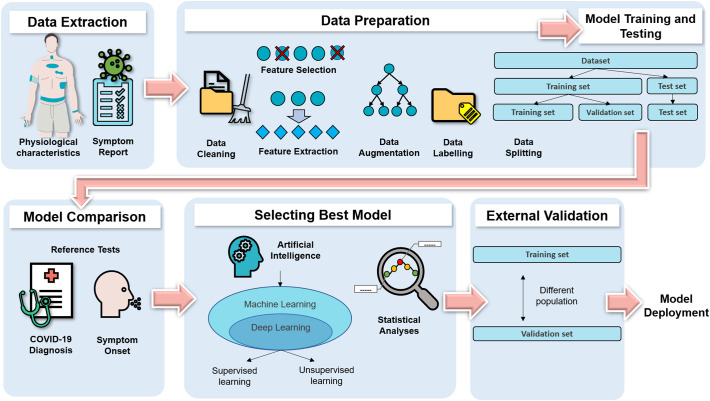

The mechanisms reported in 18 articles focused on anomaly detection (Table 2). The process for developing the anomaly detection models (ADMs) included data extraction, data preparation, and model training, testing, and comparison (Fig. 4).

Table 2.

Components of wearable technology mechanism (n = 18).

| Author (year) | Data Extraction: WT | Data Extraction: Data Input | Data Preparation: Feature Selection or Extraction | Data Preparation: Data Augmentation | Data Preparation: Internal Validation | Data Preparation: Data Labelling/Data Segmentation | Model Comparisons | Best Model (Type) | External Validation | Distinguish COVID-19 from another ILI |
|---|---|---|---|---|---|---|---|---|---|---|
| Alavi et al. (2021) | Fitbit, Apple Watch | Overnight RHR | NR | NR | NR | NA | RHRAD, CuSum, NightSignal, Isolation Forest | NightSignal (Deterministic Finite State Machine) | NR | No |
| Bogu and Snyder (2021) | Fitbit | RHR | NR | Seven time-series data augmentation techniques | Time series cross-validation | 7 days before and 21 days after symptom onset were considered as infectious | NA | Long Short-Term Memory Networks-based autoencoder (LAAD) (Unsupervised deep learning) | NR | No |
| Cleary et al. (2021) | Fitbit, Apple Watch | RHR, sleep and steps | NR | NR | NR | 0–7 days after symptom onset as test periods | RHRmetric, SLEEPmetric, STEPmetric, SENSORmetric | SENSORmetric (Statistical analysis) | NR | No |
| D’Haese et al. (2021) | Oura | Overnight HR, HRV, RR, activity, sleep and symptom report | NR | NR | k-fold cross-validation | Label symptoms suspicious of viral-like symptoms | NA | Markov network and Association Rule Mining Algorithm (Supervised deep learning and unsupervised machine learning) | NR | No |
| Gadaleta et al. (2021) | Fitbit, Apple Watch | RHR, sleep, activity and symptom report | Feature Extraction | NR | k-fold cross-validation | NR | NA | CatBoost (Supervised machine learning) | NR | No |
| Hassantabar et al. (2021) | Empatica E4 | Galvanic skin response, temperature, inter-beat interval, oxygen saturation and symptom report | NR | Synthetic data generation with the TUTOR framework | Unspecified | NR | Naïve Bayes, Random Forest, AdaBoost, Decision Tree, SVM, k-NN, deep neural network model with grow-and-prune synthesis | Deep neural network model with grow-and-prune synthesis (Supervised deep learning) | NR | No |
| Hirten et al. (2021) | Apple Watch | HRV | NR | NR | Bootstrapping | Defined being symptomatic as the first day of a reported symptom | NA | Mixed-effect Cosinor model (Statistical analysis) | NR | No |
| Liu et al. (2021) | Fitbit | HR | NR | NR | Leave-one-subject-out cross-validation | NR | CNN, MLPs, LSTM, CAE, contrastive CAE | Contrastive CAE (Unsupervised deep learning) | NR | No |
| Lonini et al. (2020) | NR | RR intervals, steps, RR and frequency spectrum of cough signals | Feature Selection | NR | Leave-one-subject-out nested cross-validation | Labelled snapshots as COVID-19 positive and negative | NA | Logistic Regression (Supervised machine learning) | NR | No |
| Miller et al. (2020) | WHOOP | Overnight RHR, HRV and RR | NR | NR | Unspecified | Meeting or exceeding threshold was equivalent to classifying healthy or infected days as COVID-19 positive | NA | Gradient boosted classifier (Supervised machine learning) | NR | No |
| Mishra et al. (2020) | Fitbit | HR and steps | Feature Extraction | NR | NR | Dates of symptom onset and diagnosis to define sick periods | RHR-Diff, HROS-AD, CuSum | CuSum (Statistical analysis) | NR | No |
| Natarajan et al. (2020) | Fitbit | RR, HR, and HRV | NR | NR | k-fold cross-validation | Data from 2nd to 6th day of symptom onset labelled as sick | NA | CNN (Supervised deep learning) | NR | No |
| Nestor et al. (2021) | Fitbit | Night-time RR, RHR, HRV and symptom report | Feature Extraction | NR | Time series cross-validation | Days between self-reported symptom onset and self-reported recovery labelled as positive | XGBoost, XGBoost and GRU-D | XGBoost and GRU-D (Supervised machine and deep learning) | Prospective evaluation | Yes |
| Quer et al. (2021) | Fitbit, Apple Watch | RHR, sleep, activity and symptom report | NR | NR | Bootstrapping | First date of symptoms to seven days after symptoms considered infectious | RHRMetric, SleepMetric, ActivityMetric, SymptomMetric, SensorMetric, OverallMetric | OverallMetric (Statistical analysis) | NR | No |
| Sarwar and Agu (2021) | Fitbit | RHR and sleep | Feature Selection and Extraction | Synthetic Minority Over-sampling Technique (SMOTE) | k-fold cross-validation | 14 days after the symptom onset was considered as the infectious period | Naïve Bayes, Random Forest, AdaBoost, Logistic Regression, SVM, Gradient Boosting Classifier, LSTM Autoencoder | Gradient Boosting Classifier (Supervised machine learning) | NR | No |
| Skibinska et al. (2021) | Fitbit | HR and steps | Feature Extraction | Synthetic data generation with the TUTOR framework | k-fold stratified cross-validation | NR | XGBoost, k-NN, SVM, Logistic Regression, Decision Tree, Random Forest | k-NN (Supervised machine learning) | NR | Yes |
| Smarr et al. (2020) | Oura | Dermal temperature | NR | NR | NR | “Symptom window” as each individual’s window of reported symptoms | NA | Minimum and maximum temperature threshold (Statistical analysis) | NR | No |
| Zhu et al. (2020) | Huami/Amazfit | RHR and sleep | NR | NR | NR | NR | NA | CDNet – CatNN and DenNN (Supervised deep learning) | NR | No |

Note. CAE = conventional convolutional auto-encoder, CNN = convolutional neural network, HR = heart rate, HROS-AD = heart rate over steps anomaly detection, HRV = heart rate variability, ILI = influenza-like illness, k-NN = k-nearest neighbour, LSTM = Long Short-Term Memory Networks, MLPs = Multilayer Perceptrons, NA = not applicable, NR = not reported, RHR = resting heart rate, RHRAD = resting heart rate anomaly detection, RHR-Diff = resting heart rate difference, RR = respiratory rate, SVM = support vector machines, WT = wearable technology.

Fig. 4.

Wearable technology mechanism: Anomaly detection model developmental process.

3.3.1. Data extraction

As the wearable technology data were obtained mainly from SOFTs (n = 17) and, in most articles, from only one device (n = 14), the ADMs were generally not device-agnostic (Table 2). Some of the ADMs also combined self-reported symptoms with data collected from the wearable technology. Hirten et al. (2021), Natarajan et al. (2020), Smarr et al. (2020), and Sarwar and Agu (2021) used only wearable technology data from COVID-19 positive individuals to develop their ADMs.

3.3.2. Data preparation and model training, testing, and comparison

Subsequently, the raw data obtained underwent data cleaning. Instead of using automated feature extraction or feature selection techniques, most of the features were manually selected (n = 12). The basis of selection was either knowledge from another study or not stated. On average, only two physiological characteristics were used to develop the ADM (Table 2), among which heart rate variation was the most common (46.3%; Fig. S3). Data augmentation was rarely employed to increase the amount of the available data (n = 4). Internal validation was frequently used to split the dataset into training and validation sets (n = 13). Conversely, only Nestor et al. (2021) conducted external validation by testing the ADM on a new dataset independent of the dataset used for the internal validation. The performance of the ADMs was evaluated and compared based on their COVID-19 detection accuracy.

3.3.3. Best performing models

The majority of the best-performing ADMs utilized artificial intelligence (n = 13), and few employed statistical analysis (Table 2). Artificial intelligence was categorized as machine learning; its subset, deep learning; and others (Fig. 4). For supervised machine learning, the datasets were generally labeled as infectious and healthy periods to train the algorithms to identify periods of COVID-19 infection. However, the definition of the infectious period varied between the articles. Instead of relying on labeled data, unsupervised machine learning segmented the data into infectious and noninfectious periods. Autoencoders were trained to reconstruct the wearable technology data from noninfectious periods; hence, a high reconstruction error on data from infectious periods was used to indicate a COVID-19 infection. Statistical analysis determined deviations from baseline values through statistical calculations, with large variations from the baseline being indicative of an infection. Apart from discerning COVID-19 infection, attempts to distinguish COVID-19 infection from differential diagnoses, such as vaccination side effects (Alavi et al., 2021) and influenza-like illnesses, were scarce (n = 3).
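The statistical approach described above, in which large deviations from an individual's baseline are flagged, can be illustrated with a minimal sketch. The z-score rule, the threshold of three standard deviations, the function name, and the heart rate values are all illustrative assumptions and are not taken from any of the reviewed articles.

```python
from statistics import mean, stdev


def flag_deviations(baseline, observations, z_threshold=3.0):
    """Return the indices of observations that deviate strongly from baseline.

    baseline: resting heart rate readings (bpm) from a presumed healthy period.
    observations: later readings to be screened.
    z_threshold: hypothetical cut-off, in baseline standard deviations.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample SD; requires at least two readings
    if sigma == 0:
        raise ValueError("baseline has no variation; z-scores are undefined")
    return [
        i for i, value in enumerate(observations)
        if abs(value - mu) / sigma > z_threshold
    ]


# Made-up daily resting heart rates (bpm), for illustration only.
baseline_rhr = [58, 60, 59, 61, 60, 59, 58, 60, 61, 59]
new_rhr = [60, 59, 66, 68, 61]
flagged = flag_deviations(baseline_rhr, new_rhr)
print(flagged)
```

An autoencoder-based ADM follows the same final step in principle, with the reconstruction error taking the place of the z-score; the choice of threshold governs the balance between missed infections and false alerts.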

3.4. Review question 3: How accurate is wearable technology in detecting COVID-19 infection?

A lack of validation standards was observed among the wearable technology used for the early detection of COVID-19. As mentioned previously, the samples used across the articles to develop and test the ADMs had differing characteristics. Moreover, FDA clearances were not specific to COVID-19 detection. The accuracy results reported across 15 articles revealed inconsistent reference tests, such as self-reported symptom onset, COVID-19 diagnosis, or both (Table 3). The accuracy measures were also heterogeneous. The area-under-the-curve (AUC) values ranged from 75% to 94.4%, and the specificity values were generally higher than the sensitivity values. Furthermore, a third of the reported sensitivity values were close to or less than 50%.

Table 3.

Accuracy of wearable technology for COVID-19 detection (n = 15).

| Author (year) | Reference test | Highest accuracy |
|---|---|---|
| Alavi et al. (2021) | COVID-19 diagnosis for asymptomatic cases and self-reported symptoms for symptomatic individuals | Accuracy: 87.7%; Sensitivity: 80%; Specificity: 87.7%; True negative: 87,124; False positive: 12,186 |
| Bogu and Snyder (2021) | Self-reported symptoms | Precision: 0.91 (SD 0.13, 95% CI 0.854-0.967); Recall: 0.36 (SD 0.295, 95% CI 0.232-0.487); F-beta score: 0.79 (SD 0.295, 95% CI 0.232-0.487) |
| Cleary et al. (2021) | COVID-19 diagnosis | AUC: 75% (95% CI: 62-89%) |
| D’Haese et al. (2021) | Self-reported symptoms | AUC: 89%; Accuracy: 82%; Sensitivity: 79%; Specificity: 83%; Precision: 34%; NPV: 97% |
| Gadaleta et al. (2021) | COVID-19 diagnosis | AUC: 83% (IQR: 81-85%) |
| Hassantabar et al. (2021) | COVID-19 diagnosis | Accuracy: 98.1%; False positive rate: 0.8%; F1 score: 98.2% |
| Liu et al. (2021) | Self-reported symptoms recognised as symptoms of COVID-19 | AUC-ROC: 94.4%; UAR: 95.3%; Sensitivity: 100.0%; Specificity: 90.6%; MCC: 0.310 |
| Lonini et al. (2020) | COVID-19 diagnosis | AUC: 94% (95% CI: 92-46%) |
| Miller et al. (2020) | COVID-19 diagnosis | Sensitivity: 36.5%; Specificity: 95.3%; PPV: 73.8%; NPV: 80.6% |
| Natarajan et al. (2020) | COVID-19 diagnosis | AUC ± SD: 77% ± 1.8; Sensitivity ± SD: 51.3% ± 3.4; Specificity: 90%; FPR ± SD: 9.4% ± 1.1 |
| Nestor et al. (2021) | COVID-19 diagnosis | Sensitivity: 50% (95% CI: 0-74%); Specificity: 79% (95% CI: 53-98%) |
| Quer et al. (2021) | COVID-19 diagnosis | AUC: 80% (95% CI: 73-86%); Sensitivity: 72% (95% CI: 59-83%); Specificity: 73% (95% CI: 68-78%); PPV: 35% (95% CI: 29-41%); NPV: 93% (95% CI: 90-96%) |
| Sarwar and Agu (2021) | COVID-19 diagnosis | AUC-ROC ± SD: 78% ± 2; Accuracy ± SD: 71% ± 2; Sensitivity ± SD: 69% ± 2; Specificity ± SD: 74% ± 3; F1-beta: 72% |
| Skibinska et al. (2021) | COVID-19 diagnosis | Accuracy: 78%; Sensitivity: 77%; Specificity: 80%; MCC: 60% |
| Zhu et al. (2020) | NA | Average Pearson’s correlation: 0.68 |

Note. AUC = area under the curve, AUC-ROC = area under the receiver operating characteristic curve, MCC = Matthews correlation coefficient, UAR = unweighted average recall, NPV = negative predictive value, PPV = positive predictive value, SD = standard deviation, IQR = interquartile range, CI = confidence interval, FPR = false positive rate.

4. Discussion

4.1. Summary of results

In this scoping review, 22 types of wearable technology were identified from 40 articles and categorized as SOFTs, medical devices, and others. The medical devices had diverse placement positions on different parts of the body, while the “other” devices gathered the most physiological characteristics. Nonetheless, the SOFTs were the most frequently used. The mechanisms reported in 18 articles focused on anomaly detection, and the best performing models utilized artificial intelligence and statistical analysis. Generally, the ADMs were not device-agnostic and could not distinguish a COVID-19 infection from its differential diagnoses. Furthermore, shortcomings in data preparation were identified, such as manual feature selection, few selected features, and infrequent use of data augmentation and external validation methods. The accuracy results reported in 15 articles revealed inconsistent reference tests and heterogeneous accuracy measures. Thus, a lack of validation standards for the early detection of COVID-19 was observed among the devices. The use of wearable technology for the early detection of COVID-19 is nascent, as is evident from the predominance of developmental papers and ongoing trials. The study characteristics revealed the underrepresentation of geographic regions beyond the United States and small sample sizes.

4.2. Wearable form and physiological characteristics

This review found that beyond the wrist, the placement of medical devices on other parts of the body can enhance measurement accuracy for certain physiological characteristics. Wrist placements are affected by motion artefacts and ambient light interference (Kamišalić et al., 2018). Likewise, skin temperature monitoring at the axilla and chest is less affected by the ambient environment and can better reflect the core temperature than the wrist (Kamišalić et al., 2018; Tamura et al., 2018). Central locations such as the waist and chest are also ideal for accelerometer and gyroscope sensors to encapsulate whole-body movement (Bayoumy et al., 2021; Yang and Hsu, 2010). Electrocardiography (ECG) sensors are the gold standard for measuring cardiac arrhythmias and are typically placed on the chest (Zeagler, 2017). ECG and photoplethysmography (PPG) were used to detect abnormalities in heart rhythm, respiratory rate, and oxygen saturation (Bayoumy et al., 2021; Charlton et al., 2018; Kamišalić et al., 2018).

Owing to its unique placement at the suprasternal notch, the “other” wearable technology could gather additional physiological characteristics, especially cough sounds. Its anatomical proximity to the throat enables mechano-acoustic sensing for cough detection (Lee et al., 2020). Nevertheless, cough patterns are not useful for early detection prior to symptom onset and are hence hardly used. Furthermore, its conspicuous location may be socially undesirable (Casson et al., 2010).

Besides body placement, the form factor can also affect the accuracy of sensor measurements. Sensors perform optimally when in direct contact with the skin. However, increased precision comes at the expense of comfort. Adhesive patches, which were the predominant forms of the medical devices and “others,” conform to the skin and demonstrate flexibility. However, this form factor is highly susceptible to skin irritation, especially since continuous monitoring requires prolonged wear (McAdams et al., 2011). Skin irritation is considerably reduced with the clothing form factor, but loose-fitting clothing is subject to motion artefacts caused by poor contact with the skin, and tight-fitting clothing can be uncomfortable (McAdams et al., 2011). Likewise, sensor accuracies are compromised in the strap form, which is the predominant form factor in SOFTs, owing to poor contact with the skin. Nevertheless, the strap form is more appealing than the other form factors owing to its comfort and convenience (Bayoumy et al., 2021; Sartor et al., 2018).

Coinciding with its burgeoning use, the predominance of SOFTs over the other types of wearable technology can be explained by high user acceptance (Massoomi and Handberg, 2019; McAdams et al., 2011). As continuous monitoring requires prolonged use, relatively accurate devices that users are likely to wear frequently are effective (Radin et al., 2021). Moreover, the ubiquity and accessibility of SOFTs are useful for large-scale deployment (Mishra et al., 2020).

4.3. Anomaly detection models

As COVID-19 is transmissible before the onset of symptoms (Tindale et al., 2020), incorporating symptom reports into ADMs would be futile for early detection. Viral infections are known to trigger inflammatory responses in the early stages, and COVID-19 is no exception (García, 2020). Several studies documented abnormalities in heart rate variability, resting heart rate, and heart rate as inflammatory responses to a COVID-19 infection, which can explain their frequent use in ADMs (Hasty et al., 2021; Park et al., 2017; Whelton et al., 2014). However, Yanamala et al. (2021) demonstrated that features such as body temperature and oxygen saturation are superior indicators. Hassantabar et al. (2021) also showed that galvanic skin response, albeit unconventional, is a useful feature. Thus, given the novelty of the virus, more physiological characteristics should be incorporated and explored. Feature selection and feature extraction techniques can then be employed to identify the most relevant features and prevent overfitting (Zebari et al., 2020). Similar artificial intelligence techniques have been used on chest radiography imaging for COVID-19 diagnosis (Al-Rakhami et al., 2021; Asraf et al., 2020; Islam et al., 2021; Saha et al., 2021) and for forecasting the growth of the pandemic (Rahman et al., 2021). However, early detection using radiography imaging remains challenging (Jemioło et al., 2022) and poses radiation exposure risks (Zhou et al., 2021). Moreover, the long-term forecasts were largely inaccurate (Rahman et al., 2021).

Overfitting occurs when the ADM corresponds too closely to the training data and is unable to perform on unseen data (Ying, 2019). Selecting samples representative of the population to which the ADM will be applied can prevent overfitting (Faes et al., 2020). However, given the underrepresentation of geographic regions outside the United States and samples that did not reflect the general population, this aspect requires further improvement. The complete spectrum of COVID-19 presentations, especially its differential diagnoses such as influenza-like diseases and vaccination side effects, is often overlooked, resulting in spectrum bias (Faes et al., 2020). Spectrum bias perpetuates the inability to distinguish COVID-19 from illnesses with similar presentations. Moreover, sample sizes were predominantly small, which increased the effect of overfitting, and data augmentation techniques were rarely used to counteract this problem (Ying, 2019).

Validation methods can also mitigate the risk of overfitting (Retel Helmrich et al., 2019) and are necessary to evaluate the reproducibility and generalizability of ADMs (Ramspek et al., 2021). Internal validation can ascertain reproducibility by testing on wearable technology data gathered from individuals with characteristics similar to those of the training population. External validation can ascertain reproducibility and generalizability by testing wearable technology data gathered from a separate population with different characteristics (Ramspek et al., 2021). Given a sufficiently large dataset, the external performance of an ADM can be estimated by internal validation alone (Ramspek et al., 2021), but sample sizes were primarily small. Coinciding with the findings of Ramspek et al. (2021), internal validation was conducted frequently, but externally validated ADMs were rare. As most ADMs involve machine learning, their “black box nature” reinforces the need for external validation (Faes et al., 2020). Ramspek et al. (2021) cautioned against the use of an ADM without external validation, as poor performance can lead to adverse outcomes, such as false reassurance to infectious individuals.
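The distinction between internal and external validation can be made concrete with a minimal sketch of k-fold splitting. The function name and the sample counts are hypothetical; the sketch does not shuffle or group records by participant, which a real study would need to consider, and it is not the procedure of any reviewed article.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs for k-fold internal validation.

    Every sample is used for validation exactly once, and the model is
    always validated on samples it did not see during training.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


# Internal validation: all folds come from the same (development) dataset.
folds = list(k_fold_indices(n_samples=10, k=5))

# External validation would instead evaluate the final model on a separate
# dataset that never appears in any of the folds above.
```

Because every fold is drawn from the same source population, good cross-validated performance supports reproducibility but says little about how the model behaves on a population with different characteristics.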

Overall, the reported ADM performance may be overoptimistic due to overfitting, and despite being reproducible, the ADMs lacked generalizability. As a result, the ADMs may not perform well in real-world settings (Ramspek et al., 2021). Moreover, the lack of device-agnostic ADMs limits the types of compatible wearable technology, thereby potentially hampering its scalability (Gadaleta et al., 2021).

4.4. Accuracy

Based on the AUC values, wearable technology may seem promising for the early detection of COVID-19 infection. However, the fair-to-excellent performance of such technology (Li and He, 2018) may be overestimated owing to inconsistencies in the employed reference tests. Using self-reported symptom onset to determine a COVID-19 infection is unreliable since asymptomatic individuals will not report any symptoms (Sah et al., 2021). Furthermore, the performance of ADMs may be inflated due to overfitting, as discussed previously.
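For readers less familiar with the AUC, one standard interpretation is the probability that a randomly chosen infected case receives a higher model score than a randomly chosen healthy case. The sketch below computes the AUC directly from that definition; the function name and the scores are made up for illustration and do not come from any reviewed article.

```python
def auc_from_scores(pos_scores, neg_scores):
    """AUC as the share of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a correct pair.
    """
    if not pos_scores or not neg_scores:
        raise ValueError("both score lists must be non-empty")
    correct = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                correct += 1.0
            elif p == n:
                correct += 0.5
    return correct / (len(pos_scores) * len(neg_scores))


# Hypothetical anomaly scores for infected and healthy periods.
infected = [0.9, 0.7, 0.4]
healthy = [0.8, 0.3, 0.2, 0.1]
auc = auc_from_scores(infected, healthy)
print(round(auc, 3))
```

The AUC summarizes ranking quality across all thresholds, so a high AUC does not by itself guarantee acceptable sensitivity at the threshold actually deployed.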

In the context of COVID-19 detection, sensitivity evaluates how well an ADM can correctly identify a COVID-19 positive individual, while specificity evaluates how well an ADM can correctly identify a healthy individual (Kumleben et al., 2020). Low sensitivity values may lead to the identification of COVID-19 positive individuals as healthy, resulting in the infectious individuals interacting with others. Alternatively, low specificity values may lead to healthy individuals being incorrectly identified as COVID-19 positive, resulting in unnecessary self-isolation (Kumleben et al., 2020). An optimal tradeoff between sensitivity and specificity is ideal, but given the severe repercussions, sensitivity should be prioritized; however, the contrary was observed (Chubak et al., 2012).
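The measures discussed above follow directly from the four cells of a 2 × 2 confusion matrix. The sketch below shows the standard formulas; the function name and the first set of counts are hypothetical, while the second calculation uses the true negative and false positive counts listed for Alavi et al. (2021) in Table 3.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Compute common accuracy measures from 2x2 confusion-matrix counts."""
    def ratio(numerator, denominator):
        # Return NaN rather than raising when a measure is undefined.
        return numerator / denominator if denominator else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),   # infected correctly flagged
        "specificity": ratio(tn, tn + fp),   # healthy correctly cleared
        "ppv": ratio(tp, tp + fp),           # flagged who are truly infected
        "npv": ratio(tn, tn + fn),           # cleared who are truly healthy
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
    }


# Hypothetical counts, chosen only to illustrate a low-sensitivity,
# high-specificity model.
metrics = diagnostic_metrics(tp=40, fp=30, tn=270, fn=60)

# Specificity from the counts reported for Alavi et al. (2021) in Table 3.
alavi_specificity = 87124 / (87124 + 12186)
print(metrics, round(100 * alavi_specificity, 1))
```

With the hypothetical counts, 60 of 100 infected individuals are missed despite a specificity of 90%, which is the pattern of concern described above. The Alavi et al. counts reproduce the tabulated specificity of 87.7%.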

Conducting a diagnostic test accuracy systematic review is challenging, as the accuracy measures presented were disparate and often lacked true positive, false positive, true negative, and false negative values (Campbell et al., 2015). Moreover, almost none of the studies adopted a cross-sectional study design (Campbell et al., 2015). As in Cosoli et al. (2020), the accuracy results were difficult to compare owing to the lack of uniform validation standards. This lack of standardization was evident in the sample characteristics, reference tests, and reported accuracy measures (Cosoli et al., 2020). Thus, due to inadequate validation studies and questionable accuracy results, obtaining regulatory agency approval for wearable technology for the early detection of COVID-19 infection would be difficult. Therefore, such technology is not yet clinically dependable (Radin et al., 2021).

4.5. Strengths and limitations

To the best of our knowledge, this is the first scoping review to focus on the actual application of wearable technology for the early detection of COVID-19 through remote monitoring. The search for published and unpublished literature was systematic and extensive. However, owing to the nature of scoping reviews, the quality of the included articles was not appraised (Peters et al., 2017). In addition, the lack of a Chinese database may have limited the search results, as COVID-19 was first discovered in China and technological solutions could have been implemented early in the country. Considering the predominantly small sample sizes and study settings favoring the United States, the universal applicability of the results is unclear. Furthermore, information on the sensor type of some of the wearable technology could not be retrieved from the website of the manufacturers.

4.6. Recommendations for future research

Validation studies with reliable reference tests are necessary to set validation standards and assess the accuracy of wearable technology for the early detection of COVID-19. To evaluate diagnostic accuracy across different studies, relevant accuracy measures should be reported. Large samples of individuals from geographical locations outside the United States, with varying COVID-19 presentations and differential diagnoses, should be examined to increase the likelihood of successful deployment in real-world settings. Different types of wearable technology should also be employed to build device-agnostic ADMs. Future research can also conduct external validation to assess the generalizability of ADMs (Table S8).

4.7. Implications for practice and policymaking

Once the performance of wearable technology is proven to be accurate and reliable, healthcare policymakers can mandate the use of low-cost SOFTs for remote surveillance of incoming and returning travelers, with the easing of border restrictions. This mandate can be extended to susceptible populations such as unvaccinated individuals and healthcare workers. Employers can also consider requiring employees to wear low-cost SOFTs to adjust work arrangements for those with detected COVID-19 infection and minimize the frequency of swab testing. On a small scale, commercial SOFTs can incorporate the ADM in existing devices through software updates. This approach will enable existing SOFT users to monitor their health by providing real-time detection alerts.

5. Conclusion

The omnipresence of COVID-19 necessitates an early detection system to facilitate early diagnosis and self-isolation. This scoping review examined the use of wearable technology as a solution and highlighted types, mechanisms, and detection accuracy. The review findings revealed that SOFTs were the preferred type of wearable technology owing to their comfort and ubiquity. Given the shortcomings in the development of ADMs, the reported performance was questionable. Moreover, owing to the lack of validation standards, comparing the accuracy results was difficult. Conducting a diagnostic accuracy systematic review was also challenging. Overall, wearable technology is not yet clinically reliable for the early detection of COVID-19 infection in real-world settings.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Authors' contributions

SHRC, YL and STL conceptualized and designed the study. SHRC and YJXN conducted the systematic literature search, guided by a senior librarian, and performed the title and abstract screening and data extraction. SHRC and YJXN appraised the quality of the systematic review. SHRC, YL and STL conducted data management, data analysis, and synthesis of data. SHRC, YL and STL wrote the article, and all authors read and approved the final version of the article.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.ypmed.2022.107170.

References

- Alavi A., Bogu G.K., Wang M., Rangan E.S., Brooks A.W., Wang Q., Higgs E., Celli A., Mishra T., et al. Real-time alerting system for COVID-19 using wearable data. MedRxiv. 2021 doi: 10.1101/2021.06.13.21258795. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Al-Rakhami M.S., Islam M.M., Islam M.Z., Asraf A., Sodhro A.H., Ding W. Diagnosis of COVID-19 from X-rays using combined CNN-RNN architecture with transfer learning. MedRxiv. 2021 doi: 10.1101/2020.08.24.20181339. [DOI] [Google Scholar]

- Anglemyer A., Moore T.H., Parker L., Chambers T., Grady A., Chiu K., Parry M., Wilczynska M., Flemyng E., et al. Digital contact tracing technologies in epidemics: a rapid review. Cochrane Database Syst. Rev. 2020;8 doi: 10.1002/14651858.CD013699. Cd013699. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arksey H., O'Malley L. Scoping studies: towards a methodological framework. Int. J. Soc. Res. Methodol. 2005;8(1):19–32. [Google Scholar]

- Asraf A., Islam M.Z., Haque M.R., Islam M.M. Deep learning applications to combat novel coronavirus (COVID-19) pandemic. SN Comput. Sci. 2020;1:363. doi: 10.1007/s42979-020-00383-w. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baj J., Karakuła-Juchnowicz H., Teresiński G., Buszewicz G., Ciesielka M., Sitarz E., Forma A., Karakuła K., Flieger W., et al. COVID-19: specific and non-specific clinical manifestations and symptoms: the current state of knowledge. J. Clin. Med. 2020;9(6):1753. doi: 10.3390/jcm9061753. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bayoumy K., Gaber M., Elshafeey A., Mhaimeed O., Dineen E.H., Marvel F.A., Martin S.S., Muse E.D., Turakhia M.P., et al. Smart wearable devices in cardiovascular care: where we are and how to move forward. Nat. Rev. Cardiol. 2021;18(8):581–599. doi: 10.1038/s41569-021-00522-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bonato P. Advances in wearable technology and its medical applications. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2010:2021–2024. doi: 10.1109/IEMBS.2010.5628037. [DOI] [PubMed] [Google Scholar]

- Callahan A., Steinberg E., Fries J.A., Gombar S., Patel B., Corbin C.K., Shah N.H. Estimating the efficacy of symptom-based screening for COVID-19. NPJ Digit. Med. 2020;3:95. doi: 10.1038/s41746-020-0300-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Callaway E. Heavily mutated Omicron variant puts scientists on alert. Nature. 2021;25 doi: 10.1038/d41586-021-03552-w. [DOI] [PubMed] [Google Scholar]

- Campbell J.M., Klugar M., Ding S., Carmody D.P., Hakonsen S.J., Jadotte Y.T., White S., Munn Z. Diagnostic test accuracy: methods for systematic review and meta-analysis. Int. J. Evid. Based Healthc. 2015;13(3):154–162. doi: 10.1097/XEB.0000000000000061. [DOI] [PubMed] [Google Scholar]

- Casson A.J., Logesparan L., Rodriguez-Villegas E. An introduction to future truly wearable medical devices—from application to ASIC. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2010:3430–3431. doi: 10.1109/IEMBS.2010.5627898. [DOI] [PubMed] [Google Scholar]

- Channa A., Popescu N., Skibinska J., Burget R. The rise of wearable devices during the COVID-19 pandemic: a systematic review. Sensors (Basel) 2021;21(17):5787. doi: 10.3390/s21175787. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Charlton P.H., Birrenkott D.A., Bonnici T., Pimentel M.A.F., Johnson A.E.W., Alastruey J., Tarassenko L., Watkinson P.J., Beale R., et al. Breathing rate estimation from the electrocardiogram and photoplethysmogram: a review. IEEE Rev. Biomed. Eng. 2018;11:2–20. doi: 10.1109/RBME.2017.2763681. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cheung M.L., Chau K.Y., Lam M.H.S., Tse G., Ho K.Y., Flint S.W., Broom D.R., Tso E.K.H., Lee K.Y. Examining consumers' adoption of wearable healthcare technology: the role of health attributes. Int. J. Environ. Res. Public Health. 2019;16(3) doi: 10.3390/ijerph16132257. 2257. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chubak J., Pocobelli G., Weiss N.S. Tradeoffs between accuracy measures for electronic health care data algorithms. J. Clin. Epidemiol. 2012;65(3) doi: 10.1016/j.jclinepi.2011.09.002. 343-349.e2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cosoli G., Spinsante S., Scalise L. Wrist-worn and chest-strap wearable devices: systematic review on accuracy and metrological characteristics. Measurement. 2020;159:107789. [Google Scholar]

- Davies H.J., Williams I., Peters N.S., Mandic D.P. In-ear spo(2): a tool for wearable, unobtrusive monitoring of core blood oxygen saturation. Sensors (Basel) 2020;20(17):4879. doi: 10.3390/s20174879. [DOI] [PMC free article] [PubMed] [Google Scholar]

- De Fazio R., Giannoccaro N.I., Carrasco M., Velazquez R., Visconti P. Wearable devices and IoT applications for symptom detection, infection tracking, and diffusion containment of the COVID-19 pandemic: a survey. Front. Inf. Technol. Electron. Eng. 2021;22(11):1413–1442. [Google Scholar]

- Ding X., Clifton D., Ji N., Lovell N.H., Bonato P., Chen W., Yu X., Xue Z., Xiang T., et al. Wearable sensing and telehealth technology with potential applications in the coronavirus pandemic. IEEE Rev. Biomed. Eng. 2021;14:48–70. doi: 10.1109/RBME.2020.2992838. [DOI] [PubMed] [Google Scholar]

- Faes L., Liu X., Wagner S.K., Fu D.J., Balaskas K., Sim D.A., Bachmann L.M., Keane P.A., Denniston A.K. A clinician's guide to artificial intelligence: how to critically appraise machine learning studies. Transl. Vis. Sci. Technol. 2020;9(2):7. doi: 10.1167/tvst.9.2.7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fontanet A., Autran B., Lina B., Kieny M.P., Karim S.S.A., Sridhar D. SARS-CoV-2 variants and ending the COVID-19 pandemic. Lancet. 2021;397(10278):952–954. doi: 10.1016/S0140-6736(21)00370-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gadaleta M., Radin J.M., Baca-Motes K., Ramos E., Kheterpal V., Topol E.J., Steinhubl S.R., Quer G. Passive detection of COVID-19 with wearable sensors and explainable machine learning algorithms. NPJ Digit. Med. 2021;4(1):166. doi: 10.1038/s41746-021-00533-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gao Z., Xu Y., Sun C., Wang X., Guo Y., Qiu S., Ma K. A systematic review of asymptomatic infections with COVID-19. J. Microbiol. Immunol. Infect. 2021;54(1):12–16. doi: 10.1016/j.jmii.2020.05.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- García L.F. Immune response, inflammation, and the clinical spectrum of COVID-19. Front. Immunol. 2020;11:1441. doi: 10.3389/fimmu.2020.01441. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grekousis G., Liu Y. Digital contact tracing, community uptake, and proximity awareness technology to fight COVID-19: a systematic review. Sustain. Cities Soc. 2021;71:102995. doi: 10.1016/j.scs.2021.102995. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hashmi H.A.S., Asif H.M. Early detection and assessment of COVID-19. Front. Med. 2020;7:311. doi: 10.3389/fmed.2020.00311. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hassantabar S., Stefano N., Ghanakota V., Ferrari A., Nicola G.N., Bruno R., Marino I.R., Hamidouche K., Jha N.K. CovidDeep: SARS-CoV-2/COVID-19 test based on wearable medical sensors and efficient neural networks. IEEE Trans. Consum. Electron. 2021;67(4):244–256. [Google Scholar]

- Hasty F., García G., Dávila H., Wittels S.H., Hendricks S., Chong S. Heart rate variability as a possible predictive marker for acute inflammatory response in COVID-19 patients. Mil. Med. 2021;186(1-2):e34–e38. doi: 10.1093/milmed/usaa405. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hellewell J., Russell T.W., Beale R., Kelly G., Houlihan C., Nastouli E., Kucharski A.J. Estimating the effectiveness of routine asymptomatic PCR testing at different frequencies for the detection of SARS-CoV-2 infections. BMC Med. 2021;19:106. doi: 10.1186/s12916-021-01982-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hirten R.P., Danieletto M., Tomalin L., Choi K.H., Zweig M., Golden E., Kaur S., Helmus D., Biello A., et al. Use of physiological data from a wearable device to identify SARS-CoV-2 infection and symptoms and predict COVID-19 diagnosis: observational study. J. Med. Internet Res. 2021;23(2) doi: 10.2196/26107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Iqbal S.M., Mahgoub I., Du E., Leavitt M.A., Asghar W. Advances in healthcare wearable devices. NPJ Flex. Electron. 2021;5:1–14. [Google Scholar]

- Islam M.M., Mahmud S., Muhammad L.J., Islam M.R., Nooruddin S., Ayon S.I. Wearable technology to assist the patients infected with novel coronavirus (COVID-19) SN Comput. Sci. 2020;1(6):320. doi: 10.1007/s42979-020-00335-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Islam M.M., Ullah S.M.A., Mahmud S., Raju S. Breathing aid devices to support novel coronavirus (COVID-19) infected patients. SN Comput. Sci. 2020;1(5):274. doi: 10.1007/s42979-020-00300-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Islam M.M., Karray F., Alhajj R., Zeng J. A review on deep learning techniques for the diagnosis of novel coronavirus (COVID-19) Ieee Access. 2021;9:30551–30572. doi: 10.1109/ACCESS.2021.3058537. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jemioło P., Storman D., Orzechowski P. Artificial intelligence for COVID-19 detection in medical imaging-diagnostic measures and wasting-A Systematic umbrella review. J. Clin. Med. 2022;11(7):2054. doi: 10.3390/jcm11072054. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kamišalić A., Fister I., Jr., Turkanović M., Karakatič S. Sensors and functionalities of non-invasive wrist-wearable devices: a review. Sensors (Basel) 2018;18(6):1714. doi: 10.3390/s18061714. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kinloch N.N., Shahid A., Ritchie G., Dong W., Lawson T., Montaner J.S.G., Romney M.G., Stefanovic A., Matic N., et al. Evaluation of nasopharyngeal swab collection techniques for nucleic acid recovery and participant experience: recommendations for COVID-19 diagnostics. Open Forum Infect. Dis. 2020;7(11) doi: 10.1093/ofid/ofaa488. ofaa488. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kumleben N., Bhopal R., Czypionka T., Gruer L., Kock R., Stebbing J., Stigler F.L. Test, test, test for COVID-19 antibodies: the importance of sensitivity, specificity and predictive powers. Public Health. 2020;185:88–90. doi: 10.1016/j.puhe.2020.06.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lee K., Ni X., Lee J.Y., Arafa H., Pe D.J., Xu S., Avila R., Irie M., Lee J.H., et al. Mechano-acoustic sensing of physiological processes and body motions via a soft wireless device placed at the suprasternal notch. Nat. Biomed. Eng. 2020;4(2):148–158. doi: 10.1038/s41551-019-0480-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Li F., He H. Assessing the accuracy of diagnostic tests. Shanghai Arch. Psychiatry. 2018;30(3):207–212. doi: 10.11919/j.issn.1002-0829.218052. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Li H., Wu J., Gao Y., Shi Y. Examining individuals' adoption of healthcare wearable devices: an empirical study from privacy calculus perspective. Int. J. Med. Inform. 2016;88:8–17. doi: 10.1016/j.ijmedinf.2015.12.010. [DOI] [PubMed] [Google Scholar]

- Lu L., Zhang J., Xie Y., Gao F., Xu S., Wu X., Ye Z. Wearable health devices in health care: narrative systematic review. JMIR Mhealth Uhealth. 2020;8(11). doi: 10.2196/18907.

- Massoomi M.R., Handberg E.M. Increasing and evolving role of smart devices in modern medicine. Eur. Cardiol. Rev. 2019;14(3):181–186. doi: 10.15420/ecr.2019.02.

- McAdams E., Krupaviciute A., Géhin C., Grenier E., Massot B., Dittmar A., Rubel P., Fayn J. Wearable sensor systems: the challenges. 2011 Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2011:3648–3651.

- McGowan J., Sampson M., Salzwedel D.M., Cogo E., Foerster V., Lefebvre C. PRESS peer review of electronic search strategies: 2015 guideline statement. J. Clin. Epidemiol. 2016;75:40–46. doi: 10.1016/j.jclinepi.2016.01.021.

- Metcalf D., Milliard S.T., Gomez M., Schwartz M. Wearables and the internet of things for health: wearable, interconnected devices promise more efficient and comprehensive health care. IEEE Pulse. 2016;7(5):35–39. doi: 10.1109/MPUL.2016.2592260.

- Mirjalali S., Peng S., Fang Z., Wang C.H., Wu S. Wearable sensors for remote health monitoring: potential applications for early diagnosis of Covid-19. Adv. Mater. Technol. 2021;7(1):2100545. doi: 10.1002/admt.202100545.

- Mishra T., Wang M., Metwally A.A., Bogu G.K., Brooks A.W., Bahmani A., Alavi A., Celli A., Higgs E., et al. Pre-symptomatic detection of COVID-19 from smartwatch data. Nat. Biomed. Eng. 2020;4(12):1208–1220. doi: 10.1038/s41551-020-00640-6.

- Munn Z., Peters M.D.J., Stern C., Tufanaru C., McArthur A., Aromataris E. Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Med. Res. Methodol. 2018;18(1):143. doi: 10.1186/s12874-018-0611-x.

- Natarajan A., Su H.W., Heneghan C. Assessment of physiological signs associated with COVID-19 measured using wearable devices. NPJ Digit. Med. 2020;3(1):156. doi: 10.1038/s41746-020-00363-7.

- Neamah S.R. Comparison between symptoms of COVID-19 and other respiratory diseases. Eur. J. Med. Technol. 2020;13(3):em2014.

- Nestor B., Hunter J., Kainkaryam R., Drysdale E., Inglis J.B., Shapiro A., Nagaraj S., Ghassemi M., Foschini L., et al. Dear watch, should I get a COVID-19 test? Designing deployable machine learning for wearables. MedRxiv. 2021. doi: 10.1101/2021.05.11.21257052.

- Öztürk F., Karaduman M., Çoldur R., İncecik Ş., Güneş Y., Tuncer M. Interpretation of arrhythmogenic effects of COVID-19 disease through ECG. Aging Male. 2020;23(5):1362–1365. doi: 10.1080/13685538.2020.1769058.

- Park W.C., Seo I., Kim S.H., Lee Y.J., Ahn S.V. Association between resting heart rate and inflammatory markers (white blood cell count and high-sensitivity C-reactive protein) in healthy Korean people. Korean J. Fam. Med. 2017;38(1):8–13. doi: 10.4082/kjfm.2017.38.1.8.

- Park H., Pei J., Shi M., Xu Q., Fan J. Designing wearable computing devices for improved comfort and user acceptance. Ergonomics. 2019;62(11):1474–1484. doi: 10.1080/00140139.2019.1657184.

- Peeling R.W., Olliaro P.L., Boeras D.I., Fongwen N. Scaling up COVID-19 rapid antigen tests: promises and challenges. Lancet Infect. Dis. 2021;21(9):e290–e295. doi: 10.1016/S1473-3099(21)00048-7.

- Peters M., Godfrey C., McInerney P. Chapter 11: scoping reviews. JBI Rev. Man. Adelaide. 2017:1–24.

- Peters M.D.J., Marnie C., Tricco A.C., Pollock D., Munn Z., Alexander L., McInerney P., Godfrey C.M., Khalil H. Updated methodological guidance for the conduct of scoping reviews. JBI Evid. Synth. 2020;18(10):2119–2126. doi: 10.11124/JBIES-20-00167.

- Radin J.M., Quer G., Jalili M., Hamideh D., Steinhubl S.R. The hopes and hazards of using personal health technologies in the diagnosis and prognosis of infections. Lancet Digital Health. 2021;3(7):e455–e461. doi: 10.1016/S2589-7500(21)00064-9.

- Rahman M.M., Islam M.M., Manik M.M.H., Islam M.R., Al-Rakhami M.S. Machine learning approaches for tackling novel coronavirus (COVID-19) pandemic. SN Comput. Sci. 2021;2(5):384. doi: 10.1007/s42979-021-00774-7.

- Ramspek C.L., Jager K.J., Dekker F.W., Zoccali C., van Diepen M. External validation of prognostic models: what, why, how, when and where? Clin. Kidney J. 2021;14(1):49–58. doi: 10.1093/ckj/sfaa188.

- Ren X., Zhou J., Guo J., Hao C., Zheng M., Zhang R., Huang Q., Yao X., Li R., et al. Reinfection in patients with COVID-19: a systematic review. Glob. Health Res. Policy. 2022;7(1):12. doi: 10.1186/s41256-022-00245-3.

- Retel Helmrich I.R., van Klaveren D., Steyerberg E.W. Research note: prognostic model research: overfitting, validation and application. J. Physiother. 2019;65(4):243–245. doi: 10.1016/j.jphys.2019.08.009.

- Ruffini K., Sojourner A., Wozniak A. Who's in and who's out under workplace COVID symptom screening? J. Policy Anal. Manage. 2021;40(2):614–641. doi: 10.1002/pam.22288.

- Sah P., Fitzpatrick M.C., Zimmer C.F., Abdollahi E., Juden-Kelly L., Moghadas S.M., Singer B.H., Galvani A.P. Asymptomatic SARS-CoV-2 infection: a systematic review and meta-analysis. Proc. Natl. Acad. Sci. U. S. A. 2021;118(34). doi: 10.1073/pnas.2109229118.

- Saha P., Sadi M.S., Islam M.M. EMCNet: Automated COVID-19 diagnosis from X-ray images using convolutional neural network and ensemble of machine learning classifiers. Inform. Med. Unlocked. 2021;22:100505. doi: 10.1016/j.imu.2020.100505.

- Santos M.D., Roman C., Pimentel M.A.F., Vollam S., Areia C., Young L., Watkinson P., Tarassenko L. A real-time wearable system for monitoring vital signs of COVID-19 patients in a hospital setting. Front. Digit. Health. 2021;3:630273. doi: 10.3389/fdgth.2021.630273.

- Sartor F., Gelissen J., van Dinther R., Roovers D., Papini G.B., Coppola G. Wrist-worn optical and chest strap heart rate comparison in a heterogeneous sample of healthy individuals and in coronary artery disease patients. BMC Sports Sci. Med. Rehabilitation. 2018;10(1):10. doi: 10.1186/s13102-018-0098-0.

- Sarwar A., Agu E. Passive COVID-19 assessment using machine learning on physiological and activity data from low end wearables. 2021 IEEE Int. Conf. Digit. Health ICDH. 2021;2021:80–90.

- Shahroz M., Ahmad F., Younis M.S., Ahmad N., Boulos M.N.K., Vinuesa R., Qadir J. COVID-19 digital contact tracing applications and techniques: a review post initial deployments. J. Transp. Eng. 2021;5:100072.

- Singh A.K., Singh A., Singh R., Misra A. Molnupiravir in COVID-19: a systematic review of literature. Diabetes Metab. Syndr. 2021;15(6):102329. doi: 10.1016/j.dsx.2021.102329.

- Smarr B.L., Aschbacher K., Fisher S.M., Chowdhary A., Dilchert S., Puldon K., Rao A., Hecht F.M., Mason A.E. Feasibility of continuous fever monitoring using wearable devices. Sci. Rep. 2020;10(1):21640. doi: 10.1038/s41598-020-78355-6.

- Tamura T., Huang M., Togawa T. Current developments in wearable thermometers. Adv. Biomed. Eng. 2018;7:88–99.

- Teo J. Early detection of silent hypoxia in COVID-19 pneumonia using smartphone pulse oximetry. J. Med. Syst. 2020;44(8):134. doi: 10.1007/s10916-020-01587-6.

- The EndNote Team. EndNote version 20. Clarivate; Philadelphia, PA: 2020.

- Tindale L.C., Stockdale J.E., Coombe M., Garlock E.S., Lau W.Y.V., Saraswat M., Zhang L., Chen D., Wallinga J., et al. Evidence for transmission of COVID-19 prior to symptom onset. Elife. 2020;9. doi: 10.7554/eLife.57149.

- Tricco A.C., Lillie E., Zarin W., O'Brien K.K., Colquhoun H., Levac D., Moher D., Peters M.D.J., Horsley T., et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann. Intern. Med. 2018;169(7):467–473. doi: 10.7326/M18-0850.

- Ullah S.M.A., Islam M.M., Mahmud S., Nooruddin S., Raju S., Haque M.R. Scalable telehealth services to combat novel coronavirus (COVID-19) pandemic. SN Comput. Sci. 2021;2(1):18. doi: 10.1007/s42979-020-00401-x.

- Vindrola-Padros C., Singh K.E., Sidhu M.S., Georghiou T., Sherlaw-Johnson C., Tomini S.M., Inada-Kim M., Kirkham K., Streetly A., et al. Remote home monitoring (virtual wards) for confirmed or suspected COVID-19 patients: a rapid systematic review. EClinicalMedicine. 2021;37:100965. doi: 10.1016/j.eclinm.2021.100965.

- Whelton S.P., Narla V., Blaha M.J., Nasir K., Blumenthal R.S., Jenny N.S., Al-Mallah M.H., Michos E.D. Association between resting heart rate and inflammatory biomarkers (high-sensitivity C-reactive protein, interleukin-6, and fibrinogen) (from the Multi-Ethnic Study of Atherosclerosis). Am. J. Cardiol. 2014;113(4):644–649. doi: 10.1016/j.amjcard.2013.11.009.

- World Health Organization. Public health surveillance for COVID-19: interim guidance, 7 August 2020. World Health Organization; 2020. Retrieved from https://apps.who.int/iris/handle/10665/333752.

- Wright R., Keith L. Wearable technology: if the tech fits, wear it. J. Electron. Resour. Med. Libr. 2014;11(4):204–226.

- Yanamala N., Krishna N.H., Hathaway Q.A., Radhakrishnan A., Sunkara S., Patel H., Farjo P., Patel B., Sengupta P.P. A vital sign-based prediction algorithm for differentiating COVID-19 versus seasonal influenza in hospitalized patients. NPJ Digit. Med. 2021;4(1):95. doi: 10.1038/s41746-021-00467-8.

- Yang C.C., Hsu Y.L. A review of accelerometry-based wearable motion detectors for physical activity monitoring. Sensors (Basel) 2010;10(8):7772–7788. doi: 10.3390/s100807772.

- Yetisen A.K., Martinez-Hurtado J.L., Ünal B., Khademhosseini A., Butt H. Wearables in medicine. Adv. Mater. 2018;30(33). doi: 10.1002/adma.201706910.

- Ying X. An overview of overfitting and its solutions. J. Phys. Conf. Ser. 2019;1168(2).

- Zeagler C. Where to wear it: functional, technical, and social considerations in on-body location for wearable technology: 20 years of designing for wearability. Proc. 2017 ACM Int. Symp. Wearable Comput. 2017:150–157.

- Zebari R., Abdulazeez A., Zeebaree D., Zebari D., Saeed J. A comprehensive review of dimensionality reduction techniques for feature selection and feature extraction. J. Appl. Sci. Technol. Trends. 2020;1(2):56–70.

- Zhou Y., Zheng Y., Wen Y., Dai X., Liu W., Gong Q., Huang C., Lv F., Wu J. Radiation dose levels in chest computed tomography scans of coronavirus disease 2019 pneumonia: a survey of 2119 patients in Chongqing, Southwest China. Medicine (Baltimore) 2021;100. doi: 10.1097/MD.0000000000026692.
