Abstract

The point spread function of a fixed fluorophore with its dipole axis colinear to the optical axis appears donut‐shaped when seen through a microscope, and its light distribution in the pupil plane is radially polarized. Yet other techniques, such as photolithography, report that this same light distribution in the pupil plane appears as a solid spot. How can this same distribution lead to a spot in one case but a donut in the other? Here, we show how the tube lens of the system plays a critical role in determining this shape. Using a vectorial treatment of image formation, we simulate the relative contributions of both longitudinal and radial components to the image of a dipole emitter and thus show how the donut (typically reported for z‐polarized single molecule fluorescence microscopy) transforms into a solid spot (as commonly reported for photolithography) as the numerical aperture of the tube lens increases. We find that the transition point occurs around 0.7 NA, which is significantly higher than used for most microscopy systems and lower than for common photolithography systems, thus resolving the seeming paradox of dipole shape.

Keywords: image formation theory, Jones matrix calculus, microscopy, vectorial diffraction

Vectorial diffraction simulations show that the point spread function of a z‐polarized emitter changes from a donut to a solid spot once the NA of the image‐forming lens exceeds about 0.7, which can impact novel microscopy techniques such as oblique plane microscopy in single molecule studies.

1. INTRODUCTION

As a standard, optics textbooks introduce the point spread function of a microscope as the spot‐like Airy disk (Hecht & Zajac, 2002). However, a point source emitter can actually be imaged as many different shapes, the properties of which have been increasingly exploited, particularly for advanced microscopy. For single molecule localization imaging, an emitter's position along the optical axis can be encoded into its shape through the use of a phase mask (e.g., double helix [Pavani et al., 2009] or tetrapod phase masks [Shechtman et al., 2015]) or an asymmetric lens (Huang et al., 2008). Less well‐known is that alternative shapes of single emitters are also possible in microscopes without the use of phase masks or asymmetric lenses; in fact, a simple fixed fluorophore can even appear donut‐shaped. Fluorophores are the unit emitters in fluorescence microscopy and their emission can be modeled as the far‐field of an oscillating linear electric dipole (Novotny et al., 2007). If the dipole axis of such a fluorophore is aligned with the optical axis, the field observed in the image plane of a microscope is a donut (Dickson et al., 1998), while in the pupil plane its emitted electric field is radially polarized. Because this distinct donut shape is lost or deformed as the dipole and optical axis lose alignment, several studies have exploited this property for determining molecular orientation (Beausang et al., 2013; Böhmer & Enderlein, 2003; Forkey et al., 2003; Lippert et al., 2017) or improving superresolution microscopy (Backlund et al., 2012; Mortensen et al., 2010). Intriguingly, this very same radially polarized pupil plane distribution of light is used in, for example, photolithography (Quabis et al., 2000; Zhan, 2009) with a completely different outcome. 
There, a radially polarized vector beam illuminates the pupil plane, creating a field equivalent to that of the dipole emitter (but typically of higher intensity due to application needs), which in turn generates a very tight focal spot in the sample plane. These sharp spots are important for applications as diverse as high‐resolution lithography (Lin et al., 2015; Shoham et al., 2006), optical data storage (Zhan, 2009), laser cutting (Niziev & Nesterov, 1999), or for trapping and manipulating nanoscale particles (Zhan, 2009).

This raises the question: if the fields are equivalent at the pupil plane, why are they different at the image plane? The obvious answer is that something must differ between the pupil plane and the image plane in those two situations, and looking at Figure 1, the culprit appears to be the tube lens (or the “image‐forming” lens for non‐microscopy applications). Indeed, a closer look at the setups used for microscopy versus those used for photolithography, for example, reveals that the NAs of their image‐forming lenses are strikingly different. Commonly used tube lenses have NAs well below 0.1, whereas the focusing lenses in lithography systems have NAs closer to 1. What may not be obvious, however, is why the difference in NA has such a significant impact on the image shape. In the following, we first qualitatively explore the basis for this difference in shape, and then use vectorial diffraction simulations to quantitatively investigate the transition from one form to another as the NA of the tube lens is gradually increased.

FIGURE 1.

Optical planes of a microscope. The electric far‐field emitted by a dipole is captured by the objective lens and refracted to the pupil plane. The field distribution is, for the case depicted here of a dipole aligned co‐linear to the optical axis, purely radially polarized; this distribution is akin to the field distribution of a vector beam. In the image plane, two alternatives are commonly seen: (1) the point emitter is imaged with a dip in the intensity at the center (a “donut”) as known from single molecule microscopy, or (2) the emitter appears as a tight spot, as used in applications such as direct laser writing with radially polarized beams in photolithography, machining, or optical data storage.

An intuitive explanation for this phenomenon may be found by splitting up points in the pupil plane into pairs. Let us have a closer look at the relevant part of the microscope as depicted in Figure 2a, encompassing the pupil plane and the image‐forming lens. Each pair of points in the pupil plane is related by inversion symmetry through the center of the pupil. The red arrows visualize the electric field directions of one such point pair. As the field in the pupil is radially polarized, the arrows point in opposite directions. Equivalently, one could state that the points share the same linear polarization vector but with an effective relative phase shift of $\pi$ between them ($e^{i\pi} = -1$).

FIGURE 2.

Longitudinal and radial field contributions to dipole image formation. (a) The field in the pupil plane (gray disk) is radially polarized. Two points are highlighted as red arrows to exemplify the pair‐wise anti‐parallel polarization vectors. After refraction by the lens, the polarization vector of the corresponding plane waves can be split into polarization components in longitudinal and radial directions (blue arrows). (b) The longitudinal components are parallel and in phase with each other and thus interfere constructively into a tight spot, whereas (c) the anti‐parallel radial components cancel each other out in the image center due to destructive interference. (d) The actual observed distribution is formed by summation of both components. The plots in (b–d) show the normalized intensity from simulated imaging of a dipole using a 0.95 NA objective together with a 0.55 NA tube lens

After refraction by the lens, any point on the pupil will produce a corresponding plane wave, and the polarization vector of this plane wave can be divided into a longitudinal component and a radial component with the relative weighting dependent on the angle between the propagation direction and the optical axis. Geometrically, one can see that the point pair components in the longitudinal direction result in constructive interference (Figure 2b), whereas the radial components will interfere destructively and cancel each other out in the image center (Figure 2c). The observed image of a dipole is therefore the sum of these two interference patterns (Figure 2d); in lenses with high numerical aperture the longitudinal components are dominant and the focus appears as a spot, whereas in low NA lenses (like conventional microscope tube lenses) the radial term dominates, and the “donut” appears. It is hence entirely expected to find both cases reported in the literature, as both the donut and the tight focus are possible manifestations of the same phenomenon.
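The pair‐wise argument can be checked numerically with a minimal sketch. It assumes a radially polarized pupil point at azimuth `phi` whose ray is refracted to an image‐space angle `theta_img`, so that its polarization vector splits into a radial part proportional to cos(theta_img) and a longitudinal part proportional to sin(theta_img). The function names and the sign convention of the longitudinal component are illustrative assumptions, not taken from the simulation code of this study.

```python
import numpy as np

def focused_polarization(phi, theta_img):
    """Polarization vector (x, y, z) behind the lens for a radially polarized
    pupil point at azimuth phi, refracted to the image-space angle theta_img.
    The sign of the z component is an assumed convention."""
    c, s = np.cos(theta_img), np.sin(theta_img)
    return np.array([c * np.cos(phi), c * np.sin(phi), s])

def pair_sum_at_center(na_tube, phi=0.0):
    """Field sum of two inversion-symmetric pupil points at the image center,
    where both plane waves arrive with the same phase (lens in air assumed)."""
    theta_img = np.arcsin(na_tube)
    return (focused_polarization(phi, theta_img)
            + focused_polarization(phi + np.pi, theta_img))

for na_tube in (0.05, 0.55, 0.95):
    radial_x, radial_y, longitudinal = pair_sum_at_center(na_tube)
    print(f"NA {na_tube:.2f}: radial sum = {abs(radial_x):.1e}, "
          f"longitudinal sum = {longitudinal:.2f}")
```

The radial parts cancel at the center for every NA, while the longitudinal sum grows in proportion to the NA of the image‐forming lens. Away from the center the two waves acquire different phases, so the radial parts no longer cancel and form the ring of the donut.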

While several groups have contributed to an increased understanding of image formation in high‐NA vector beam focusing (Brown, 2011; Lan & Tien, 2008; Quabis et al., 2000; Sheppard & Choudhury, 2004; Sheppard & Török, 1997; Youngworth & Brown, 2000), none has yet answered a key question: where exactly does the donut turn into a spot? This transition point can be accurately determined by calculating the image of a dipole but will require several steps as discussed below.

2. METHODS

First, the electric far field emitted by the dipole needs to be expressed, which can be done elegantly (Kim et al., 2018) by concatenation of vector products between a wave vector $\mathbf{k}$ and the emission dipole moment $\boldsymbol{\mu}$. See Figure 1 for an overview of the used variables. Here, we consider the optical axis to be along the $z$ direction. Expressed in Cartesian coordinates, the unit wave vector $\mathbf{k}$ is a function of the polar angle $\theta$ (measured from the optical axis) and the azimuthal angle $\phi$ (measured around it), and the field in object space before the objective is

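$$\mathbf{E}_{\mathrm{obj}}(\theta,\phi) \propto (\mathbf{k}\times\boldsymbol{\mu})\times\mathbf{k}, \qquad \mathbf{k} = (\sin\theta\cos\phi,\ \sin\theta\sin\phi,\ \cos\theta)^{T} \tag{1}$$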

The portion of this field up to $\alpha$ (the half‐opening angle of the objective) is captured and refracted by the microscope objective. Refraction can be modeled via symbolic ray tracing with vectorial Jones matrices (Kim et al., 2018). Two matrices are important for lens refraction. The first is the rotation matrix $\mathbf{R}$, which changes the Cartesian coordinate system to the coordinate system spanned by the meridional and sagittal unit vectors of the currently traced ray. The second important matrix, $\mathbf{L}$, models the ray refraction caused by a lens and redirects all meridional vector components. This ray refraction matrix is also a rotation matrix (any ray coming from the focus of the lens is parallel to the optical axis after the lens, which is achieved via rotation of the ray by its polar angle $\theta$, that is, the angle it makes with the optical axis):

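$$\mathbf{R}(\phi)=\begin{pmatrix}\cos\phi & \sin\phi & 0\\ -\sin\phi & \cos\phi & 0\\ 0 & 0 & 1\end{pmatrix},\qquad \mathbf{L}(\theta)=\begin{pmatrix}\cos\theta & 0 & \sin\theta\\ 0 & 1 & 0\\ -\sin\theta & 0 & \cos\theta\end{pmatrix} \tag{2}$$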

Note that the ray refraction matrix also contains an apodization factor of either $\sqrt{n_1/(n_2\cos\theta)}$ in aplanatic collimation, or its inverse in aplanatic focusing (Kim et al., 2018) (where $n_1$ and $n_2$ are the refractive indices before and after the lens). Further, for clockwise ray refraction, the polar angle $\theta$ in $\mathbf{L}$ needs to be used with a negative sign (Kim et al., 2018). Depending on the type of condition the lens is made to obey (Abbe, Herschel, Lagrange, Helmholtz), different forms of apodization should be used (Gu, 2000); objective lenses for microscopy are designed to obey the Abbe sine condition (Gu, 2000). The pupil plane electric field is thus a multiplication of the object‐space electric field with a rotation matrix, the objective lens refraction matrix and a following inverse rotation matrix to restore the Cartesian coordinates:

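$$\mathbf{E}_{\mathrm{pupil}} = \mathbf{R}^{-1}\,\mathbf{L}_{\mathrm{obj}}\,\mathbf{R}\,\mathbf{E}_{\mathrm{obj}} \tag{3}$$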

Following these operations, the $z$‐component of the electric field in the pupil plane vanishes. This is also intuitively correct since the refracted electric field is invariant along the axial direction and thus possesses only electric field components in the $xy$ plane. All p‐polarized electric field components (i.e., the ones in the meridional plane) before the objective are hence converted into radial components, whereas all s‐polarized electric field components of the dipole far field become azimuthal polarization components. For the special case of a dipole with its axis along the optical axis, the electric field in the pupil plane contains only radial components ($\mathbf{E}_{\mathrm{obj}}$ has no azimuthal components, so neither does $\mathbf{E}_{\mathrm{pupil}}$).

Let us continue with the special case. Seen from the perspective of the objective lens, the dipole's electric field is p‐polarized regardless of emission direction and therefore, after refraction by the objective lens, the electric field in the back focal plane is purely radial. Further, the strength of the dipole emission in the pupil plane is proportional to $\sin\theta$. This is contained within Equation (1): the magnitude of the cross product is the sine of the angle between the two vectors and in our special case, the dipole moment is along the optical axis, that is, $\boldsymbol{\mu} = (0, 0, 1)^{T}$. As the consecutive cross product with $\mathbf{k}$ is a cross product with an orthogonal unit vector, it does not change the magnitude. Further, for a dipole with its moment on the optical axis the angle enclosed with the wavevector $\mathbf{k}$ is just the polar angle $\theta$ of the spherical coordinate system. Therefore, the amplitude distribution in the Fourier plane is $\sin\theta$ times an additional apodization factor $1/\sqrt{\cos\theta}$ due to aplanatic collimation of the objective lens. Taken together, the pupil plane electric field is radially polarized and has a vanishing field strength in the pupil center. If, as implicitly assumed, the dipole is placed in the focus of the objective on the optical axis, the electric field's phase in the pupil plane is constant over the entire pupil and thus is equivalent to a radially polarized vector beam.
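These properties of the pupil field can be verified with a short numerical sketch of the matrix chain described above (rotation into the meridional frame, lens refraction, rotation back). The explicit matrix entries, the sign convention of the collimating rotation, the air‐objective apodization of 1/sqrt(cos(theta)) and all function names are assumptions made for illustration; they are not copied from the simulation code of this study.

```python
import numpy as np

def rotation_to_meridional(phi):
    """Rotate Cartesian x/y axes into the meridional/sagittal frame of a ray
    with azimuth phi (assumed convention)."""
    c, s = np.cos(phi), np.sin(phi)
    return np.array([[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]])

def collimating_lens(theta):
    """Rotate the meridional components by the polar angle theta so that the
    refracted ray is parallel to the optical axis; includes the apodization
    factor 1/sqrt(cos(theta)) of an aplanatic objective in air (assumed)."""
    c, s = np.cos(theta), np.sin(theta)
    rotation = np.array([[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]])
    return rotation / np.sqrt(c)

def pupil_field(theta, phi, dipole):
    """Pupil-plane field for one emission direction (theta, phi)."""
    k = np.array([np.sin(theta) * np.cos(phi),
                  np.sin(theta) * np.sin(phi),
                  np.cos(theta)])
    e_obj = np.cross(np.cross(k, dipole), k)   # far field of the dipole
    r = rotation_to_meridional(phi)
    return r.T @ collimating_lens(theta) @ r @ e_obj

theta, phi = 0.6, 1.1                          # arbitrary test direction in radians
e_pupil = pupil_field(theta, phi, np.array([0.0, 0.0, 1.0]))
azimuthal_part = -np.sin(phi) * e_pupil[0] + np.cos(phi) * e_pupil[1]
print("z component:      ", e_pupil[2])
print("azimuthal part:   ", azimuthal_part)
print("field magnitude:  ", np.linalg.norm(e_pupil))
print("sin/sqrt(cos):    ", np.sin(theta) / np.sqrt(np.cos(theta)))
```

For a dipole along the optical axis, the z component and the azimuthal part vanish to numerical precision, and the magnitude follows sin(theta)/sqrt(cos(theta)), in line with the argument in the text.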

Calculation of the image of such a dipole is sometimes simplified in single‐molecule microscopy as the image‐forming tube lens can be regarded as a paraxial lens (Backer & Moerner, 2014) (an example for a fully vectorial treatment of the imaging system is given in Böhmer and Enderlein (2003)). Under the paraxial approximation, it is sufficient to take the Fourier transform of the dipole electric field distribution in the pupil plane. As the amplitude distribution in the pupil is an even function, while the direction of the electric field is equivalent to an odd phase function, this makes the overall pupil function odd. A property of the Fourier transform is that odd functions remain odd, so there must be a zero‐crossing at the image plane origin. Addition of the squares of the resulting electric fields in the $x$ and $y$ directions then yields the overall intensity distribution in the shape of a donut (Böhmer & Enderlein, 2003).
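The paraxial argument can be illustrated with a minimal FFT sketch. The grid size, the pupil radius in pixels and the variable names are arbitrary assumptions; the pupil amplitude sin(theta)/sqrt(cos(theta)) for an objective in air follows the description above.

```python
import numpy as np

n = 256          # grid size in pixels (assumed)
radius = 32      # pupil radius in pixels (assumed)
na_obj = 0.95    # objective NA in air

# Pupil coordinates centered on pixel (n // 2, n // 2)
y, x = np.indices((n, n)) - n // 2
r = np.hypot(x, y) / radius                  # normalized pupil radius
phi = np.arctan2(y, x)
inside = r <= 1.0

# Even amplitude: sin(theta) / sqrt(cos(theta)), with sin(theta) = r * NA
sin_theta = np.where(inside, r * na_obj, 0.0)
amplitude = sin_theta / (1.0 - sin_theta ** 2) ** 0.25

# Radial polarization makes each Cartesian component an odd function
pupil_x = amplitude * np.cos(phi)
pupil_y = amplitude * np.sin(phi)

def to_image(pupil):
    """Paraxial image field as the centered 2D Fourier transform of the pupil."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(pupil)))

intensity = np.abs(to_image(pupil_x)) ** 2 + np.abs(to_image(pupil_y)) ** 2
center = intensity[n // 2, n // 2]
print("center / maximum intensity:", center / intensity.max())
```

Because both Cartesian pupil components are odd, their Fourier transforms vanish at the origin, and the summed intensity shows a ring around a dark center. No longitudinal component appears in this approximation, which is why it cannot produce the tight spot.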

In contrast, the tight spot in vector beam focusing is the result of the omitted non‐paraxial part and hence requires use of a non‐paraxial diffraction integral. The Debye integral is such an integral and synthesizes the field distribution in the focal region from a plane wave spectrum (Leutenegger et al., 2006). Importantly, it requires the plane wave spectrum after the tube lens, so we need to propagate the field in the pupil once more:

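$$\mathbf{E}_{\mathrm{img}} = \mathbf{R}^{-1}\,\mathbf{L}_{\mathrm{tube}}\,\mathbf{R}\,\mathbf{E}_{\mathrm{pupil}} \tag{4}$$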

The tube lens refraction matrix $\mathbf{L}_{\mathrm{tube}}$ now incorporates the apodization factor for focusing (i.e., the inverse of the collimation factor given with Equation (2)) and $\theta'$ in $\mathbf{L}_{\mathrm{tube}}$ is the polar angle in image space. As both lenses, objective and tube lens, can be assumed to obey the Abbe sine condition, we can link the polar angles of object space and image space, $\theta$ and $\theta'$, through the system magnification $M = n\sin\theta/(n'\sin\theta')$. Here, the refractive index of the immersion medium in object space is $n$, while the refractive index in image space is $n'$. For air objectives, the refractive indices can be omitted. The Debye integral is now performed over the plane wave spectrum in image space $\mathbf{E}_{\mathrm{img}}$:

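$$\mathbf{E}(x,y,z) = -\frac{i f}{\lambda}\int_{0}^{\alpha'}\int_{0}^{2\pi}\mathbf{E}_{\mathrm{img}}(\theta',\phi)\,e^{i(k_x x+k_y y+k_z z)}\,\sin\theta'\,d\phi\,d\theta' \tag{5}$$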

Here, $i$ is the imaginary unit and the pre‐factor uses the focal length $f$ of the lens and the wavelength $\lambda$. The half‐opening angle of the image‐forming lens, $\alpha'$, is related to the numerical aperture of the objective via $n'\sin\alpha' = \mathrm{NA}_{\mathrm{obj}}/M$. Following the approach by Leutenegger et al. (2006), a significantly faster computation of the integral in Equation (5) can be obtained by changing the integration variables through a coordinate transform from $(\theta', \phi)$ to $(k_x, k_y)$, so that the integration runs over the support region of the pupil plane:

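$$\mathbf{E}(x,y,z) = -\frac{i f}{\lambda k'^{2}}\iint_{k_x^{2}+k_y^{2}\le (k'\sin\alpha')^{2}}\frac{\mathbf{E}_{\mathrm{img}}(k_x,k_y)}{\cos\theta'}\,e^{i(k_x x+k_y y+k_z z)}\,dk_x\,dk_y \tag{6}$$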

The factor $k'$ is the wavevector magnitude in image space. The final image intensity is obtained by multiplication of the electric field in image space with its conjugate transpose, that is, $I = \mathbf{E}^{\dagger}\mathbf{E}$.
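For the special case of a dipole along the optical axis, the chain of steps above can be sketched as a direct numerical evaluation of the Debye integral along one radial line of the image plane. This is an illustrative sketch under stated assumptions and not the published simulation code: both lenses are taken to be in air, the integral is evaluated by a plain midpoint rule rather than the faster Fourier‐based scheme, constant pre‐factors are dropped, and the function name `dipole_image_profile` and its parameters are hypothetical.

```python
import numpy as np

def dipole_image_profile(na_obj, na_tube, k_rho, n_theta=300, n_phi=64):
    """Return (I_longitudinal, I_radial) along the x axis of the image plane.

    k_rho: 1D array of radial positions multiplied by the image-space
    wavenumber (dimensionless). Both lenses are assumed to be in air.
    """
    alpha_img = np.arcsin(na_tube)                    # half-opening angle of the tube lens
    d_theta = alpha_img / n_theta
    theta_img = (np.arange(n_theta) + 0.5) * d_theta  # midpoint rule in theta'
    d_phi = 2.0 * np.pi / n_phi
    cos_phi = np.cos((np.arange(n_phi) + 0.5) * d_phi)[None, :]

    # Abbe sine condition in air: sin(theta) / sin(theta') = NA_obj / NA_tube
    theta_obj = np.arcsin(np.sin(theta_img) * na_obj / na_tube)
    # z-dipole pupil amplitude with collimation apodization, followed by the
    # focusing apodization of the tube lens
    amp = (np.sin(theta_obj) / np.sqrt(np.cos(theta_obj))
           * np.sqrt(np.cos(theta_img)))

    sin_t = np.sin(theta_img)[:, None]
    cos_t = np.cos(theta_img)[:, None]
    weight = amp[:, None] * sin_t * d_theta * d_phi   # A sin(theta') dtheta' dphi

    e_long = np.empty(len(k_rho), dtype=complex)
    e_rad = np.empty(len(k_rho), dtype=complex)
    for i, kr in enumerate(k_rho):
        # Phase of each plane wave at the image point (rho, 0, 0)
        phase = np.exp(-1j * kr * sin_t * cos_phi)
        e_long[i] = np.sum(weight * sin_t * phase)            # z component
        e_rad[i] = np.sum(weight * cos_t * cos_phi * phase)   # x (radial) component
    return np.abs(e_long) ** 2, np.abs(e_rad) ** 2

k_rho = np.linspace(0.0, 10.0, 101)
i_long, i_rad = dipole_image_profile(0.95, 0.55, k_rho)
total = i_long + i_rad
print("radial/longitudinal intensity at center:", i_rad[0] / i_long[0])
print("center is the global maximum:", np.argmax(total) == 0)
```

In this sketch the radial intensity vanishes at the image center while the longitudinal intensity peaks there, so the shape of the summed profile depends on the relative weight of the two terms, which is set by the NA of the image‐forming lens.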

3. RESULTS

The resulting intensity distribution for a range of tube lens numerical apertures is visualized in Figure 3. There, the pixel size is over a field of view spanning in all spatial dimensions and the simulated wavelength was . Note how the relative sizes of the donut or spot change with the ratio of the numerical aperture between objective and tube lens. The light‐capturing lens in Figure 3 was modeled in all cases with 0.95 numerical aperture and thus did not influence the formed dipole images. Clearly, the controlling factor between the donut and the spot is the ratio of the longitudinal and radial field contributions, which are balanced by the image‐forming lens. Yet to answer the question about where exactly the turning point between the donut and the spot lies, one needs to first define “turning point.” Three answers can easily be given, as indicated by the colored boxes in Figure 3. One could equate the (1) maximal intensity values of radial and longitudinal images or, similarly, (2) their total energy contribution. As an alternative, (3) a zero‐crossing of the second derivative of the total image intensity on the optical axis can be taken. In the latter, note that the direction is arbitrary due to symmetry. The turning point in this case would be equal to a stationary point of the intensity distribution.

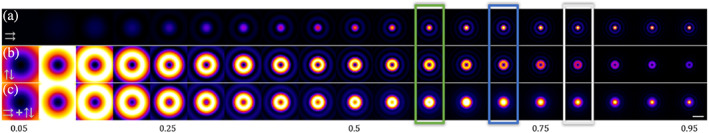

FIGURE 3.

The image of a dipole emitter (colinear to the optical axis), recorded by an objective lens of 0.95 NA, as formed by a tube lens with NAs in the range from 0.05 to 0.95. Shown are the intensities produced by (a) the longitudinal, (b) radial, and (c) all field components. Intensities are normalized separately for each tube lens NA. The colored boxes highlight “turning points”: At NA (green box), the maximum intensity of the longitudinal and radial components are equal; at NA (blue box), the central intensity of the total intensity has a stationary point; and at NA (white box), the total energy contributions of the longitudinal and radial components are equal. The scale bar is and the wavelength used in the simulation was

The ratio of maxima yields a turning point at a tube lens NA just above 0.6, which agrees with reported results in cylindrical vector beam focusing (Biss et al., 2003). In the case of dipole imaging, however, the overall intensity distribution still possesses a local minimum, as the peripheral region of the “spot” adds incoherently to the donut, and thus does not inform about our sought‐after donut‐to‐spot turning point. Taking the ratio of total intensity contributions results in a turning point around 0.8 NA. Interestingly, this definition makes the “turning point” more dependent on the objective NA. At a higher objective NA, the periphery of the pupil receives a relatively higher field strength compared to the pupil center, which contributes mostly to the longitudinal “spot” portion of the overall image. We see that this effect is present also in the other metrics, albeit to a lesser extent. Using a sign change of the local curvature of the image center as a metric (i.e., when the center no longer has a donut “hole”) yields a turning point near a tube lens NA of 0.7, which is in‐between the other two metrics. While this definition is closest to an intuitive understanding of “turning point” between a donut and a spot, it lacks, however, the direct connection to the field components that the other two definitions maintain.

Thus far the “turning point” has been defined as the NA of the tube lens where the donut changes to a spot, but as shown in Figure 4 it also depends to a smaller degree on the NA of the objective lens; adding this dimension, our “turning point” becomes a “turning line.” This small effect is due to the polar‐angle dependence of the field strength of dipole emission. With higher NA, the amplitude distribution in the Fourier plane becomes steeper when moving from the middle to the periphery, and consequently more energy is funneled into the longitudinal field component. Note that immersion objectives with numerical apertures >1, in particular those that can capture evanescent field components, are not included in the present analysis. From this analysis, we can deduce that, as long as the back‐aperture of the tube lens is filled, the magnification of the whole system does not play a significant role, even though it can differ by orders of magnitude between high‐resolution microscopy and lithography.
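The dependence of the curvature‐based turning point on the objective NA can be explored with a compact sketch. It rests on assumptions that are not part of the published analysis: both lenses are in air, and the total intensity near the image center is expanded to second order in the radial coordinate (small‐argument expansion of the Bessel functions that result from the azimuthal integration). Under these assumptions, the center is a minimum (donut) when b² > 2·a0·a2 and a maximum (spot) otherwise, where a0, a2 and b are the angular integrals defined in the code. The function names are hypothetical.

```python
import numpy as np

def curvature_indicator(na_obj, na_tube, n=4000):
    """Positive: intensity minimum at the image center (donut).
    Negative: intensity maximum at the image center (spot)."""
    alpha_img = np.arcsin(na_tube)
    d_theta = alpha_img / n
    t = (np.arange(n) + 0.5) * d_theta                 # image-space polar angle
    theta_obj = np.arcsin(np.sin(t) * na_obj / na_tube)  # sine condition in air
    amp = np.sin(theta_obj) / np.sqrt(np.cos(theta_obj)) * np.sqrt(np.cos(t))
    a0 = np.sum(amp * np.sin(t) ** 2) * d_theta        # longitudinal field on axis
    a2 = np.sum(amp * np.sin(t) ** 4) * d_theta        # its quadratic fall-off
    b = np.sum(amp * np.cos(t) * np.sin(t) ** 2) * d_theta  # radial field slope
    return b ** 2 - 2.0 * a0 * a2

def turning_na(na_obj, lo=0.05, hi=0.99, tol=1e-4):
    """Bisection for the tube lens NA at which the central curvature changes sign."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if curvature_indicator(na_obj, mid) > 0.0:
            lo = mid        # still a donut: turning point lies at higher NA
        else:
            hi = mid        # already a spot: turning point lies at lower NA
    return 0.5 * (lo + hi)

for na_obj in (0.5, 0.7, 0.95):
    print(f"objective NA {na_obj:.2f}: turning tube lens NA = {turning_na(na_obj):.3f}")
```

Scanning the objective NA in this way traces a line rather than a single point. The sketch covers only the stationary‐point definition of the turning point; the two definitions based on maximum intensity and total energy require the full intensity distributions.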

FIGURE 4.

The “turning point” between donut and spot‐like images can be defined in various ways: (a) equality between the maximum intensity of longitudinal and radial components, (b) stationary point of the image center, or (c) equality of total energy contributions of longitudinal and radial components. Plotting the turning point for a range of objective NAs results in a “turning line”; the color of these lines matches the colors of the corresponding turning point boxes in Figure 3. Higher objective NAs result in equality of longitudinal and radial field components at smaller tube lens NAs. This is caused by the higher field strength at the periphery of the pupil when higher NA objective lenses are used. Note that in (b) negative values are saturated to better visualize the turning line

4. DISCUSSION AND CONCLUSION

Conventional microscopes operate with tube lens NAs below 0.1, so z‐polarized dipoles are always imaged as donuts. In single molecule localization microscopes, this donut shape can negatively impact the achievable localization precision due to algorithmic assumptions of standard, point‐like light distributions. So far, this effect has been counteracted by modifying the pupil plane (Aguet et al., 2009; Lew & Moerner, 2014). In general, pupil‐plane polarization and phase masks can be utilized to enable constructive interference (as opposed to destructive) at the central image point; this has been successfully applied in the contexts of z‐polarized confocal imaging (Huse et al., 2001) and single‐molecule (widefield) microscopy (Lew & Moerner, 2014). Both approaches, however, require access to the pupil plane, which in turn requires additional optical relays that cause a lower overall transmission efficiency of the system. As detectors' pixel sizes continue to shrink (the latest smartphone cameras reach ever smaller pixel sizes), it might become feasible to use higher NA tube lenses to both achieve Nyquist sampling and simultaneously exploit the intrinsic spot shape due to the dipole's longitudinal field. Care must be taken, however, as the image is sensed not in air, but inside a (typically silicon) sensor, whose index of refraction is about 3.5 and which usually has dielectric coatings. In previous analyses on cylindrical vector beam focusing (Biss et al., 2003), it was shown that strong refraction at a silicon surface severely diminishes the longitudinal field component inside the high‐index material, leaving mostly the lateral component, and thus the donut shape, behind. A pixelated display, in contrast to a continuous silicon slab, would impose different boundary conditions on the field formation though, which would further modify this result. As such an analysis requires a finite element method for simulation of the focal region, it cannot be answered here using our approach.

Outside of single molecule localization microscopy, another field where this donut transition, as well as the above‐mentioned camera miniaturization, can have significant future impacts is neuroscience. Here, studies are often conducted by strapping tiny microscopes to the heads of animals during stimulation experiments; the equipment therefore must have minimal size and weight so as to not perturb the animals too much. A smaller imaging chip also comes with the possibility of using a higher NA GRIN (GRadient‐INdex) lens for imaging; here a single lens serves as both objective and image forming lens. For instance, the recently published “miniscope 3D” (Yanny et al., 2020) uses a GRIN lens with 0.55 NA as the sole optical element. This is notably close to the turning point of a donut as shown above and could have strong implications for the interpretation of imaging results. Future applications of this technology may therefore be well‐advised to take vectorial effects into stronger consideration.

Further, with the advent of various microscopy techniques that use high numerical aperture lenses in their light path “downstream” of the detection objective apart from the tube lens, awareness of effects due to the vectorial character of light is becoming much more important in microscopy. For example, the single objective light sheet technique oblique plane microscopy (OPM; Dunsby, 2008) actually uses two additional objectives in its light path. The secondary objective is used to construct a so‐called “perfect imaging” relay to observe an aberration‐free tilted image plane using a tertiary objective. The latest OPM techniques employ a refractive index boundary between the secondary and tertiary objectives (Yang et al., 2019) to increase the overall collection efficiency, but this leads to asymmetric Fresnel transmission coefficients of the refractive index boundary. These are expected to lead to different strengths of the image‐forming ray components depending on their polarization and can thus impact image formation.

Taken together, our results provide valuable knowledge of vectorial point spread function shapes in high NA situations, which are becoming increasingly relevant in the latest microscopy systems.

CONFLICT OF INTEREST

The authors declare that there are no conflicts of interest.

ACKNOWLEDGMENTS

We thank Jeongmin Kim, James D. Manton, and Ida S. Opstad for helpful discussions and the reviewers of this manuscript for valuable feedback.

Ströhl, F. , Bruggeman, E. , Rowlands, C. J. , Wolfson, D. L. , & Ahluwalia, B. S. (2022). Quantification of the NA dependent change of shape in the image formation of a z‐polarized fluorescent molecule using vectorial diffraction simulations. Microscopy Research and Technique, 85(5), 2016–2022. 10.1002/jemt.24060

Funding information: This project has received funding from the European Union's Horizon 2020 research and innovation programme under the Marie Skłodowska‐Curie, Grant/Award Number: 836355; the Norwegian Research Council, Grant/Award Number: 314546; the UiT Aurora Outstanding program, and the UiT Open Access fund.

Review Editor: Alberto Diaspro

DATA AVAILABILITY STATEMENT

The data/software that support the findings of this study are openly available in DataverseNO at https://doi.org/10.18710/Z37SBD, reference number Z37SBD.

REFERENCES

- Aguet, F., Geissbühler, S., Märki, I., Lasser, T., & Unser, M. (2009). Super‐resolution orientation estimation and localization of fluorescent dipoles using 3‐D steerable filters. Optics Express, 17(8), 6829–6848.

- Backer, A. S., & Moerner, W. E. (2014). Extending single‐molecule microscopy using optical Fourier processing. The Journal of Physical Chemistry B, 118, 8313–8329.

- Backlund, M. P., Lew, M. D., Backer, A. S., Sahl, S. J., Grover, G., Agrawal, A., Piestun, R., & Moerner, W. E. (2012). Simultaneous, accurate measurement of the 3D position and orientation of single molecules. Proceedings of the National Academy of Sciences of the United States of America, 109, 19087–19092.

- Beausang, J. F., Shroder, D. Y., Nelson, P. C., & Goldman, Y. E. (2013). Tilting and wobble of myosin V by high‐speed single‐molecule polarized fluorescence microscopy. Biophysical Journal, 104, 1263–1273.

- Biss, D. P., Youngworth, K. S., & Brown, T. G. (2003). Longitudinal field imaging. In: Three‐Dimensional and Multidimensional Microscopy: Image Acquisition and Processing X, Biomedical Optics, San Jose, CA, United States.

- Böhmer, M., & Enderlein, J. (2003). Orientation imaging of single molecules by wide‐field epifluorescence microscopy. Journal of the Optical Society of America B, 20, 554–559.

- Brown, T. G. (2011). Unconventional polarization states: Beam propagation, focusing, and imaging. Progress in Optics, 56, 81–129.

- Dickson, R. M., Norris, D. J., & Moerner, W. E. (1998). Simultaneous imaging of individual molecules aligned both parallel and perpendicular to the optic axis. Physical Review Letters, 81, 5322.

- Dunsby, C. (2008). Optically sectioned imaging by oblique plane microscopy. Optics Express, 16, 20306–20316.

- Forkey, J. N., Quinlan, M. E., Shaw, M. A., Corrie, J. E. T., & Goldman, Y. E. (2003). Three‐dimensional structural dynamics of myosin V by single‐molecule fluorescence polarization. Nature, 422, 399–404.

- Gu, M. (2000). Advanced optical imaging theory (Vol. 75). Springer Science & Business Media.

- Hecht, E., & Zajac, A. (2002). Optics (Vol. 5). Addison Wesley.

- Huang, B., Wang, W., Bates, M., & Zhuang, X. (2008). Three‐dimensional super‐resolution imaging by stochastic optical reconstruction microscopy. Science, 319, 810–813.

- Huse, N., Schönle, A., & Hell, S. W. (2001). Z‐polarized confocal microscopy. Journal of Biomedical Optics, 6, 273–276.

- Kim, J., Wang, Y., & Zhang, X. (2018). Calculation of vectorial diffraction in optical systems. Journal of the Optical Society of America A, 35, 526–535.

- Lan, T.‐H., & Tien, C.‐H. (2008). Study on focusing mechanism of radial polarization with immersion objective. Japanese Journal of Applied Physics, 47, 5806.

- Leutenegger, M., Rao, R., Leitgeb, R. A., & Lasser, T. (2006). Fast focus field calculations. Optics Express, 14, 11277–11291.

- Lew, M. D., & Moerner, W. E. (2014). Azimuthal polarization filtering for accurate, precise, and robust single‐molecule localization microscopy. Nano Letters, 14, 6407–6413.

- Lin, L., Zheng, M.‐L., Dong, X.‐Z., Duan, X.‐M., & Zhao, Z.‐S. (2015). The effect of radial polarization in multiphoton lithography. In: AOPC 2015: Advances in Laser Technology and Applications, Applied Optics and Photonics China (AOPC2015), Beijing, China.

- Lippert, L. G., Dadosh, T., Hadden, J. A., Karnawat, V., Diroll, B. T., Murray, C. B., Holzbaur, E. L. F., Schulten, K., Reck‐Peterson, S. L., & Goldman, Y. E. (2017). Angular measurements of the dynein ring reveal a stepping mechanism dependent on a flexible stalk. Proceedings of the National Academy of Sciences of the United States of America, 114, E4564–E4573.

- Mortensen, K. I., Churchman, L. S., Spudich, J. A., & Flyvbjerg, H. (2010). Optimized localization analysis for single‐molecule tracking and super‐resolution microscopy. Nature Methods, 7, 377.

- Niziev, V. G., & Nesterov, A. V. (1999). Influence of beam polarization on laser cutting efficiency. Journal of Physics D: Applied Physics, 32, 1455.

- Novotny, L., Hecht, B., & Keller, O. (2007). Books‐principles of nano‐optics. Physics Today, 60, 61.

- Pavani, S. R. P., Thompson, M. A., Biteen, J. S., Lord, S. J., Liu, N., Twieg, R. J., Piestun, R., & Moerner, W. E. (2009). Three‐dimensional, single‐molecule fluorescence imaging beyond the diffraction limit by using a double‐helix point spread function. Proceedings of the National Academy of Sciences of the United States of America, 106, 2995–2999.

- Quabis, S., Dorn, R., Eberler, M., Glöckl, O., & Leuchs, G. (2000). Focusing light to a tighter spot. Optics Communications, 179, 1–7.

- Shechtman, Y., Weiss, L. E., Backer, A. S., Sahl, S. J., & Moerner, W. E. (2015). Precise three‐dimensional scan‐free multiple‐particle tracking over large axial ranges with tetrapod point spread functions. Nano Letters, 15, 4194–4199.

- Sheppard, C. J. R., & Choudhury, A. (2004). Annular pupils, radial polarization, and superresolution. Applied Optics, 43, 4322–4327.

- Sheppard, C. J. R., & Török, P. (1997). An electromagnetic theory of imaging in fluorescence microscopy, and imaging in polarization fluorescence microscopy. Bioimaging, 5, 205–218.

- Shoham, A., Vander, R., & Lipson, S. G. (2006). Production of radially and azimuthally polarized polychromatic beams. Optics Letters, 31, 3405–3407.

- Yang, B., Chen, X., Wang, Y., Feng, S., Pessino, V., Stuurman, N., Cho, N. H., Cheng, K. W., Lord, S. J., Xu, L., Xie, D., Mullins, R. D., Leonetti, M. D., & Huang, B. (2019). Epi‐illumination SPIM for volumetric imaging with high spatial‐temporal resolution. Nature Methods, 16, 501–504.

- Yanny, K., Antipa, N., Liberti, W., Dehaeck, S., Monakhova, K., Liu, F. L., Shen, K., Ng, R., & Waller, L. (2020). Miniscope3D: Optimized single‐shot miniature 3D fluorescence microscopy. Light: Science & Applications, 9, 1–13.

- Youngworth, K. S., & Brown, T. G. (2000). Focusing of high numerical aperture cylindrical‐vector beams. Optics Express, 7, 77–87.

- Zhan, Q. (2009). Cylindrical vector beams: From mathematical concepts to applications. Advances in Optics and Photonics, 1, 1–57.
