Abstract

Health and medical contexts have emerged as an important area of inquiry for researchers at the intersection of user experience and technical communication. In addressing this intersection, this article advocates for and extends patient experience design, or PXD (Melonçon, 2017), as an important framework for user experience research within health and medicine. Specifically, this article presents several PXD insights from a task-based usability study that examined an online intervention program for people with voice problems. We respond to Melonçon’s (2017) call to build PXD as a framework for user experience and technical communication research by describing ways traditional usability methods can provide PXD insights and asking the following question: What insights can emerge from combining traditional usability methods and PXD research? In addressing this question, we outline two primary methodological and practical considerations we found central to conducting PXD research: (1) engaging patients as participants, and (2) leveraging multidisciplinary collaboration.

Keywords: Usability, user experience, patient experience design, rhetoric of health and medicine, collaboration

Introduction

In recent years, technical communication (TC) researchers have increasingly recognized the importance of usability and user experience (UX) methods and theories for health and medical contexts, technologies, and experiences (Arduser, 2018; Bivens, 2019; Breuch et al., 2016; Melonçon, 2017; Rose et al., 2017). As part of this growing area within TC, scholars have called for and developed specific methodologies designed to address the unique needs and desires of patient users (Arduser, 2018; Breuch et al., 2016; Gouge, 2017; Rose et al., 2017; Melonçon, 2017; St. Amant, 2017). Among these, patient experience design (PXD) has emerged as a promising methodology. PXD, as Melonçon (2017) described, “is a participatory methodologies approach centered on contextual inquiry to understand the relationship between information (or technology) and human activities in health care” (p. 20). In other words, PXD is a framework grounded in “patient-centered values, user experience, and technical communication” that TC researchers can apply to the research process by linking it with appropriate methods (Melonçon, 2017, p. 20).

Since its conception in 2017, PXD has proven a useful methodology for research at the intersections of TC, usability and user experience, and health and medicine (Melonçon, 2017; Bivens, 2019; Rose et al., 2017). However, researchers have also argued that implementing PXD with traditional usability methods, such as think-aloud protocols or card-sorting, can present challenges because such methods can be too structured to capture and respond to patient experience needs and contexts (Melonçon, 2017; St. Amant, 2017). In particular, researchers have noted potential limitations of these methods, including their “one-dimensional” and “narrow” nature (Melonçon, 2017, p. 24) as well as their “firm and hard metrics” that delimit more emergent and open engagement with users (Rose et al., 2017, p. 227). As Melonçon (2017) summarized, “standard usability methods (e.g., think aloud protocols, card sorting) are not sufficiently developed to work in health care contexts” (p. 21).

We agree that traditional usability methods come with important limitations; however, we found through the present study that traditional usability methods can be calibrated for productive and insightful PXD research. In this article, we aim to enrich the intersection of user experience (UX) and technical communication (TC) by expanding Melonçon’s (2017) patient experience design (PXD) methodology and exploring the risks and rewards of using traditional task-based usability methods to conduct PXD research. Specifically, this article aims to answer: What insights can emerge from combining traditional usability methods and PXD research? To address this question, we report on a recent study that examined the usability of a patient-facing intervention program through the methodological lens of PXD.

Our goal in reporting on this recent study for this special issue is to highlight successes and challenges we experienced in bringing together traditional usability and PXD. In doing so, we present our recent study as a case that enriches the value and role of PXD in the toolkit of methodologies that inform usability and UX research within TC. Our experience and findings affirm two key insights that future usability, UX, and PXD research within healthcare contexts should consider: (1) the value of recruiting patients (rather than, say, proxy users) as participants, and (2) the benefits and challenges of multidisciplinary collaboration for PXD-guided usability studies. These insights build on prior work incorporating patient participants (Breuch et al., 2016; Rose et al., 2017) and/or leveraging multidisciplinary collaborations for UX projects (Cardinal et al., 2020; Melonçon, 2017; Renguette, 2016; Rose et al., 2017). We offer concrete practices identified through the process of conducting our usability study, and in doing so, aim to support future research at the intersections of health and medicine, UX, and TC.

In what follows, we first situate PXD in usability and UX research both within and beyond the field of TC, and articulate the value of PXD for usability and UX research in contexts of health and medicine. Then, we discuss our study, highlighting key challenges, successes, and insights from our research, and point to future directions for PXD work by offering potential solutions to some of the challenges we identified throughout our research.

Critiques of Traditional Usability and the Emergence of PXD in Technical Communication

In alignment with this special issue’s focus on the intersection of TC, usability, and UX, our study brings together traditional usability methods with UX in medical contexts, specifically by using PXD alongside task-based usability assessments. We hypothesized that task-based usability methods, in conjunction with a PXD methodology, could enable us to simultaneously capture important usability and patient experience data.

Our study is informed by and contributes to usability in TC’s expanded focus from discrete tasks to user experience (Potts & Salvo, 2017). Traditional usability methods are rooted in document and software design that focus on standards, performance, and satisfaction metrics (Nielsen, 1993). Specifically, methods such as usability testing and heuristic evaluations have often been used in technical communication as ways to improve usability of documents and interfaces (Breuch et al., 2001; Hackos & Redish, 1998; Johnson, 1998; Redish, 2010; Schriver, 1997). However, some scholars have argued that traditional usability methods do not go far enough to understand user experiences (Howard, 2015; Johnson et al., 2007; Mirel, 2004). Similar to the critiques PXD scholars have made regarding traditional usability methods (Melonçon, 2017; Rose et al., 2017; St. Amant, 2017), Mirel (2004) and Albers (2004) have argued that usability testing is too limited for complex, non-linear information systems.

At the same time, scholars have highlighted the movement of TC as a discipline away from usability testing and toward UX (Barnum, 2019; Redish, 2010). Potts and Salvo (2017) advocated for “experience architecture” that is not limited “to one aspect of a product” but rather a user’s entire experience (p. 6). Additionally, several TC scholars recently have drawn attention to issues of social justice in methods such as usability, warning that researchers must ensure the representativeness of participants and should involve members of marginalized populations, especially those affected by the resulting design in usability and UX design (Rose, 2016; Walton & Jones, 2013). These scholars ultimately suggested that traditional usability methods such as testing and heuristic evaluations are insufficient for studying the broader landscape of UX.

Accordingly, as TC has moved from usability testing toward UX, resistance to the very word “user” has emerged because UX researchers generally do not consider their research participants exclusively as “users” who exist in relationship to the products they use but, rather, as people with their own contexts, histories, and social worlds. In UX scholarship in TC, “participant” (Potts, 2013), “people” (Redish, 2007), and “humans” (Zachry & Spyridakis, 2016) have been suggested as alternatives. In fact, Zachry and Spyridakis (2016) strongly advocated for “human-centered design” as the term of choice both for the interdisciplinary field of usability and for TC scholars. Similar arguments have been made regarding PXD. We also embrace the term “human-centered design” instead of “user-centered design” to acknowledge the broader contexts involving patient experiences. We have chosen the term “participant” in this study to refer to the people who participated in our usability study and deliberately use “patient” as a modifier to strategically recognize and mark the moments when a participant occupied, acknowledged, and spoke about their experiences as a patient. Indeed, participants in our study drew from multiple identities and contexts, including the identities of technology user, parent, spouse, student, and patient. This move of pairing participant with patient aligns with Simmons and Zoetewey’s (2012) claim that usability studies should “allow for multiple identities in multiple contexts,” and, in doing so, make “visible the varied user groups who might use the site and their differing agendas” (p. 274). It also underscores Melonçon’s (2017) argument that the term “patient” in PXD “unapologetically keeps the focus on the embodied user and the way the patient needs to use the object” (p. 23).

As we considered the movement to broaden understandings of usability and UX, we embraced PXD as an articulation of “human-centered design” that addresses contextual patient experiences in conjunction with usability as a part of product design. Specifically, Melonçon (2017) introduced PXD as a usability and UX methodology that actively considers the unique concerns that accompany health and medical contexts, including health literacy, patients’ lived, embodied experiences, and patients’ health outcomes. Following Melonçon, rhetoric and TC scholars consequently have applied PXD in a variety of ways — as a lens to analyze health texts and artifacts (Bivens, 2019), as a starting point to develop additional heuristics or frameworks designed to more precisely study usability in health and medical settings (St. Amant, 2017; Bloom-Pojar & DeVasto, 2019), and as a methodological grounding to conduct contextualized usability and usability-related studies with potential or actual users (Melonçon, 2017).

This emerging body of PXD scholarship, along with existing TC participatory research with healthcare users (see Rose et al., 2017; Rose & Racadio, 2017; Arduser, 2018) supports the need for usability and UX studies that attend to deeply contextualized patient experiences. As Bivens (2019) wrote, “in healthcare settings and during medical encounters, complexities exist that are specific to these particular healthcare communication contexts” (p. 17). However, engaging with patient experiences can be complicated methodologically due to recruitment challenges, access, project timelines, and other constraints. As health and medical products and contexts continue to grow as an important area of usability and UX research within TC, there is an increased need to include actual, specific patient groups as research participants. Studies with specific patient participants have been conducted (for example, Breuch et al., 2016; Renguette, 2016); however, TC scholars also have commonly recruited or engaged proxy participants as healthcare users, such as college students who are exposed to their health center’s messaging campaigns (Melonçon, 2017), or people who want information on how to enroll in health insurance through the Affordable Care Act (Rose, 2017).

Relatedly, research within medicine, medical informatics, and medical internet research frequently involves patients in usability testing or UX analyses, particularly when evaluating digital health interventions. This work aligns with participatory design and PXD in that usability testing often takes place mid-development of a digital tool and incorporates both usability testing and elements of user-experience data collection as part of enacting a user-centered design (for example, see Beatty et al., 2018; Hong et al., 2014; Mayberry et al., 2016). However, the goal often driving these studies is to develop a tool that encourages patient compliance with a digital intervention (for example, see Baldwin et al., 2017), a goal that has been compellingly critiqued within and beyond rhetoric and TC (see Gouge, 2017). While incorporating participatory design principles and patients as participants are steps toward PXD, usability research in medical disciplines could benefit from PXD’s attunement to the highly contextualized and complex nature of patient experience.

Indeed, PXD recognizes the importance of engaging patients as usability and UX study participants while prioritizing patient experience above or in addition to compliance. In our study, working with participants who were recruited because they were patients with voice concerns infused our findings with insights from the lived experience of a typical program user. This was enabled by our multidisciplinary collaboration between a usability research team and a medical research team. Based on this collaborative experience, we advance PXD as a methodology and also complicate claims that “standard usability methods (e.g., think-aloud protocols, card sorting) are not sufficiently developed to work in health care contexts” (Melonçon, 2017, p. 21; see also, Gouge, 2017). We observed that think-aloud protocols and open-ended, post-task questions included in traditional usability tests elicited valuable patient experience data. Thus, we argue that integrating the methodology of PXD with task-based usability is both possible and productive.

In the remainder of this article, we detail our study, discuss how traditional usability metrics can be conducive to collecting and responding to patient experience data, and describe how attentiveness to patient experience as well as multidisciplinary collaboration can produce both deeper understandings of health and medical tools/materials and actionable findings to optimize intervention usability and positive patient impacts.

Methods: Study Background and Design

We write as a collaborative team of researchers from two disciplines — a usability team of technical communication researchers and a voice team from the medical school — who partnered to examine an online health intervention program for patients experiencing voice problems. The program was designed by the voice team as part of an ongoing randomized clinical trial to determine whether a staggered, progressive interactive intervention program could help patients experience better health outcomes for persistent voice problems (i.e., dysphonia). The program teaches skills for increasing patients’ perceived control of their voice problem and responses to related challenges; to do so, the program uses modules that include expert discussion, illustrative examples, and self-directed exercises for skills development (Nguyen-Feng et al., 2020). Initially, the program was housed on a custom-built website, but unexpected bugs and other challenges were frequent, leading to mixed patient feedback.

Based on this preliminary feedback, the voice team transitioned the program to a different platform—REDCap—a web-based electronic data capture platform used widely in the biomedical research community to deliver surveys, compile data, and track participants in clinical and translational research (Harris et al., 2009). REDCap was selected because it provided the ability to maintain and directly update content and monitor participants while ensuring HIPAA compliance. At the same time, however, REDCap offers limited flexibility and adaptability in terms of its features, navigation, and design; therefore, the voice team wanted to test the usability of the REDCap version of the program for patient participants. The online program we tested included a module built using survey functionality in REDCap.

Over the course of a year, the usability and voice teams collaborated to design and conduct our study, analyze the data, and identify revisions for the program.¹ This study included (1) heuristic evaluations and (2) task-based usability tests. We selected this combination of heuristic analysis and moderated usability testing because it is often recommended as a best practice for usability research, combining the strengths of two robust research methods (Barnum, 2011). Using Nielsen’s (1994) “10 usability heuristics for user interface design,” usability team members conducted two independent heuristic evaluations to identify potential issues that could be addressed before usability testing sessions began and to provide direction for test design and task/scenario generation. The summary of both heuristic evaluations indicated that the following heuristics could be improved: (1) user control and freedom, (2) consistency and standards, and (3) help and documentation. These heuristic evaluation results then guided usability test development. This article focuses exclusively on the study’s usability test.

For the usability testing, we articulated the following research questions:

1. How well are participants able to navigate into, through, and out of the program’s modules?

2. How well are participants able to use features of multiple choice to answer questions within the program?

3. How do participants use help and documentation in the program’s FAQ?

4. What observations from the usability test addressed patient experience with the program?

Importantly, the fourth research question was not formally included at study initiation. However, participant insights extended beyond typical online usability concerns, including the impact on quality of life and contexts of use (for instance, participants often questioned how the program intersected with their clinical care). Therefore, early in testing we incorporated the fourth research question to explicitly frame and identify PXD-related findings.

To investigate our research questions, we conducted usability tests that included: a short background questionnaire; a task-based think-aloud protocol featuring five distinct tasks including logging in and out of the program, completing a module within the program, and identifying the help page; a post-task user satisfaction rating (see Appendix A); and a product reaction card sort with a debriefing interview (see Appendix B). A moderator facilitated each test using a think-aloud protocol in which participants were encouraged to verbalize their thoughts as they completed tasks in the usability test.

Metrics such as time-on-task and user satisfaction ratings were gathered to determine baseline data. Time-on-task measured task completion in minutes and seconds. Our goal with this metric was to determine a baseline completion time for tasks. User satisfaction ratings measured ease-of-use on a scale of 1–5 with 1 being “very easy” and 5 being “very difficult.” Our goals for user satisfaction were to have no post-task rating higher than a 3 for any individual participant, and an overall average rating of 2 for each task across all participants.
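The two satisfaction goals described above can be checked mechanically once ratings are tabulated. The following is a minimal sketch rather than the procedure used in the study: the ratings shown are invented for illustration, the names `check_satisfaction` and `format_time` are hypothetical, and the average goal is assumed to mean "at or below 2."

```python
from statistics import mean

# Hypothetical post-task ratings (1 = "very easy", 5 = "very difficult").
# One rating per participant; these values are illustrative, not study data.
ratings = {
    "task_1": [1, 1, 2, 1, 1, 2, 1],
    "task_3": [2, 4, 2, 3, 2, 3, 2],
}

MAX_INDIVIDUAL = 3  # goal: no single post-task rating higher than 3
TARGET_MEAN = 2.0   # assumption: the goal is an average at or below 2


def check_satisfaction(task_ratings):
    """Return (mean rating, individual goal met, average goal met)."""
    avg = mean(task_ratings)
    return avg, max(task_ratings) <= MAX_INDIVIDUAL, avg <= TARGET_MEAN


def format_time(seconds):
    """Express time-on-task in minutes and seconds, e.g. 95 -> '1:35'."""
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes}:{secs:02d}"


for task, values in ratings.items():
    avg, individual_ok, average_ok = check_satisfaction(values)
    print(task, round(avg, 2), individual_ok, average_ok)
```

Keeping the two checks separate matters because a task can satisfy the average goal while a single participant still rates it above 3.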

The product reaction card sort included a set of 63 positive and negative words from which participants were asked to choose five that best described their experience. This list was derived from a desirability matrix (Benedek & Miner, 2002) comprising 118 words that reflects a ratio of 60% positive and 40% negative or neutral words (Barnum, 2011). The matrix can be used in full or abbreviated as part of a usability test to gather quick descriptive feedback about participant impressions (Moran, 2016).

Debriefing interviews included five open-ended questions asking participants to describe their initial and overall impressions of the program, what they liked least and best, and what they would change if they could. In combination with the think-aloud protocol, the debriefing interview allowed for insights into participants’ healthcare-related contexts of use and engagement with the program and its content.

After each usability test, we calculated task completion rates by measuring the percentage of test participants who were able to complete each task without assistance or errors. We also counted issues and rated them for severity; a high severity issue prevented task completion, and a low severity issue did not impact task completion but negatively impacted efficiency. Additionally, we ranked issues based on frequency, with low-impact but high-frequency issues overall being rated at a higher severity level. Our a priori goal for each participant was five or fewer low severity issues, five or fewer moderate issues, and up to one high severity issue.
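The per-participant issue goals lend themselves to a simple tally. The sketch below is illustrative only: `meets_issue_goals` is a hypothetical helper, the severity labels are assumed to be recorded as plain strings, and the frequency-based escalation of severity described above is not modeled because its thresholds are not stated.

```python
from collections import Counter

# A priori ceilings per participant, as stated in the study design.
GOALS = {"low": 5, "moderate": 5, "high": 1}


def meets_issue_goals(severities):
    """Return True if one participant's logged issues stay within every ceiling.

    `severities` is a list of labels ("low", "moderate", "high"),
    one entry per issue observed for that participant.
    """
    counts = Counter(severities)
    return all(counts[level] <= limit for level, limit in GOALS.items())


# Hypothetical issue log for a single session (not study data).
session_issues = ["low", "low", "moderate", "high"]
print(meets_issue_goals(session_issues))
```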

With this study design established, the voice team began recruiting participants from local otolaryngology clinics. Inclusion criteria included: diagnosis of a voice disorder, Voice Handicap Index-10² score of 11 or greater (considered an abnormal score³); age 18–80 years old; English literacy; and ability to complete informed consent. Persons who wished to participate completed intake and informed consent forms digitally or via mail.

Ten total participants were recruited and seven completed usability testing. Initially, we invited all participants to complete usability tests in the Usability Lab at the University of Minnesota, where high-quality usability data, including audio recording, screen-captures, and video recording (if permitted by the participant), could be collected,⁴ and where a two-room setup and a one-way mirror provided a convenient research environment. Notably, this decision to conduct testing in the Usability Lab created several barriers to recruitment, scheduling, and test completion. These included limited availability of the Usability Lab, lack of participant familiarity with the Usability Lab location, and unrelated campus events that limited parking availability. These factors, however seemingly mundane, directly impacted our ability to study patient experience and explore PXD as a methodology. Due to these recruitment barriers, we moved the usability testing to a building adjacent to the otolaryngology clinic, which allowed for more flexibility in scheduling, fewer personnel, and reserved on-site parking (see also Rose, 2016, for discussion of considerations related to participants from vulnerable populations). Unlike the Usability Lab, this new location was not equipped with a one-way mirror; therefore, we conducted these tests using adjacent meeting rooms. Data loggers observed the usability tests via video conferencing software but were only able to observe the computer screen and audio during testing sessions. Two usability testing sessions were conducted at this new location.

Following the completion of seven participant testing sessions, we conducted an initial data analysis and noticed clear insights regarding program navigation challenges and patient experience. At this point, we collaboratively agreed that our sample size had brought us to a point of adequate “information power” or “an aspect of internal validity, influencing the potential of the available empirical data to provide access to new knowledge by means of analysis and theoretical interpretations” (Malterud et al., 2015, p. 7). Information power involves continuous evaluation of study design factors such as the study aim, specificity, use of theory, quality of dialogue, and analysis (Malterud et al., 2015, p. 4); Malterud et al. suggest that “[i]n this way, recruitment can be brought to an end when the sample holds sufficient information power” (p. 7). After seven participants completed usability tests, we began to see recurring patterns within results, and valuable methodological insights in terms of usability and PXD, leading us to decide to close recruitment at that juncture.

Results: Analyzing Usability & Patient Experience

We conducted a traditional usability assessment focusing on metrics of user performance (including task completion, time-on-task, issue rates and severity, user satisfaction) measured against specific performance goals necessary to satisfy usability requirements. As we conducted tests and analyzed results, it became increasingly clear that patient perspectives and experiences related to and extended beyond strict usability metrics. Additionally, over the course of our testing sessions, we recognized that fully understanding and analyzing our usability test findings required attunement to patient experience insights. For example, often as participants completed usability tasks, they paused to tell stories or comment on their own experiences as patients. This resulted in increased time-on-task that had less to do with usability and more to do with how the program itself related to our participants as patients. Therefore, in this results section, we first briefly report on the usability testing results, which address our first three research questions. We then focus on our fourth research question and discuss at length our patient experience-related results in an effort to outline methodological insights our study offers PXD research.

Usability Test Results

Participants.

Participants were aged 30 to 71 years old. All attended some college and five completed graduate school. Five participants identified as female, and one each as male and gender non-conforming. Self-reported race was majority white (n=5), with one each identifying as Black and Asian. All participants described themselves as being very familiar with digital technology, computers, and mobile devices, and most had previously sought information online about a medical condition.

Task Completion.

Participants completed the majority of tasks successfully. Task 1 (login, stop) had 100% task completion rate; Task 2 (open program), Task 4 (exit and log back in), and Task 5 (get help) had completion rates of 86%, and Task 3 (review vocal health tips, navigate back) had a task completion rate of 71%. See Appendix A for full task descriptions. Post-task ratings showed that participants rated Task 2 (open program), Task 3 (review vocal health tips, navigate back), and Task 4 (exit and log back in) as more difficult than the other tasks, with average ratings between 2.0 and 2.6 on a scale of 1–5 (1 being “very easy” and 5 being “very difficult”). These ratings were close to our goals for post-task ratings; however, individual highest post-task ratings exceeded goals (above a 3) on four out of five tasks.
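With seven participants, each completion rate corresponds to a whole number of successful participants. The short sketch below shows that arithmetic; the counts of 6 and 5 are inferred from the reported percentages rather than stated in the results, and `completion_rate` is a hypothetical helper name.

```python
PARTICIPANTS = 7  # number of participants who completed usability testing


def completion_rate(completed, total=PARTICIPANTS):
    """Percentage of participants completing a task, rounded to a whole number."""
    return round(100 * completed / total)


# Every completion rate that is possible with seven participants.
possible_rates = {n: completion_rate(n) for n in range(PARTICIPANTS + 1)}
print(possible_rates[7], possible_rates[6], possible_rates[5])
```

Under this reading, a rate of 86% reflects one participant who did not complete the task unassisted, and 71% reflects two.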

Non-critical and Critical Issues.

Non-critical issues were generally low-impact and did not prevent participants from completing tasks. These issues included items such as complaints about text-heavy pages (n=4), uncertainty about the FAQ page for getting help (n=3), curiosity about content of psychological questions in the program (n=5), and issues with text-wrapping and reading difficulty due to browser window size (n=1).

Critical issues were high-impact, either in terms of severity of impact on the task, or in terms of frequency. Critical issues in this study were generally associated with navigation, such as how to enter the program, advance through the module, exit and re-enter, and locate specific aspects. Critical issues included: confusion about the “submit” button on the landing page and its function for entering the program (n=4), lack of clarity about how to enter or advance to the first page of the program (n=2), error message associated with clicking the back button (n=2), confusion about exiting and re-entering the program (n=6), and inability to locate vocal health tips (n=1).

Product Reaction Cards and Debriefing Interviews.

When asked to select words to describe their experience (Product Reaction Cards), 89% of the selected words were positive, including “organized,” “convenient,” “easy to use,” “relevant,” and “useful.” Only one participant selected negative words, which included “complex,” “confusing,” “difficult,” and “overwhelming.” Debriefing interviews revealed that participants generally had positive first impressions of the program, despite any navigation issues. When asked about what participants liked least about the program, three commented that the pages were wordy, one expressed preference for “prettier colors,” and two responded that they did not like that they had to type responses into the program. When asked what they liked best, six participants responded that they appreciated the educational aspect of vocal health tips, and four responded that they liked the simple visual design.

PXD Results

In addition to usability-related feedback, participants volunteered patient-related experiences, stories, and insights throughout our study’s think-aloud protocols and interviews. That is, participants contextualized usability of the program within their own embodied health and medical experiences, and thus study participants were not just users but also patients with health-related perspectives that meaningfully influenced how they navigated, engaged, and understood the program. Specifically, participants offered their thoughts, attitudes, and experiences as voice patients interacting with an online intervention program related to their specific medical issue. Patient experience data emerged in our usability test in three primary ways: (1) participants mapped the program and its usability onto their existing healthcare identities and contexts; (2) participants blended the program’s structure (features and navigation) and content; and (3) participants questioned how their healthcare providers would read and respond to the patient data being collected.

Mapping usability onto healthcare identities and contexts.

Often, patient experiences were shared by participants even before the moderator introduced the first task. We intentionally did not direct participants to occupy a particular identity or perspective; however, participants did question whose or what experiences were relevant to our study. For example, in two sessions, participants directly asked about the role they were to occupy during the usability testing. One participant asked for clarification: “So this is like, a patient being a patient?” A second participant asked if she would be testing her voice during this usability session or if she was just navigating the program. These two participants were inquiring about the extent to which they should participate in our usability study as patients—that is, as individuals with certain diagnoses and related healthcare experiences and knowledge. In response, the moderator encouraged participants to share any insights or reactions that emerged as they completed our tasks.

Additionally, when participants thought aloud as they completed our study’s tasks, they often imagined how this program might fit into their care with the otolaryngology clinic. Participants commented not only on the navigation and features of the program (“Ok, and now I’m supposed to navigate back to page 4, let’s see, page 4…”; “Ok, I’m supposed to log out, completely close my browser, ok, done.”), but also envisioned how the program would fit into their existing care routines (“I remember answering these questions that you had at the clinic”). In response to a post-task question, one participant explained that the “Voice Tips” section would be a useful complement to her on-site medical care: “And I told this to [my speech pathologist], like I haven’t done the stuff she gave me, but if I had something like this [program], I would definitely do it more, because it’s just like easy.” This participant drew from her healthcare experiences and relationships to imagine how her healthcare provider would respond to the patient information she would hypothetically provide when completing the program’s survey. Further, four participants told us that they would be more likely to use the program on their phones rather than their computers, suggesting that participants were not just focusing on the interface in front of them but were imagining the program within their daily routines. These findings highlight how PXD research that deploys usability methods such as think-aloud protocols and open-ended interviews can foreground the specific “life experiences that may impact the reception and use” of health-related tools and materials (Melonçon, 2017, p. 23). In our study, traditional usability methods allowed participants to oscillate between their different identities and consequently map their user experiences onto past and potential experiences as voice patients.

Blending structure and content.

In both think-aloud protocols and interviews, participants further commented on the program’s content even though the usability tasks and interview questions isolated navigation. For example, one task asked participants to find and review a voice-related tip and then navigate back to the homepage. While our goal was to test the program’s features and navigation (could the participants find and move through the tips pages?), we found that all seven participants read and commented on the tips content. For example, one participant, reading through the tips, commented, “Very good point, right there. Hard to find a quiet restaurant!” then spoke back to another tip, saying: “This is key for me, at school: voice amplifier.” In post-task questions, six participants expressed appreciation for the information and intent behind the educational content of the program. When these participants spoke to the usability of the program, they were focused on the content as well as the structure.

Notably, the time that participants took to read these tips pages could cause misleading time-on-task results. As our examples show, time-on-task did not necessarily represent how efficiently participants could click through pages but sometimes represented instead how closely participants read the program’s content. This potentially misleading metric does not indicate that traditional usability methods are unfit for the task of engaging and responding to patient experience. Rather, it suggests that the freedom of the think-aloud protocols can pick up important patient experience data and that researchers should consider how to analyze their data so that patient experience is not lost. Contextualizing the quantitative results of measures like time-on-task with the qualitative data captured through a think-aloud protocol, as we have done here, is one such strategy for data analysis that utilizes PXD-attuned traditional usability methods.
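For researchers who keep session data in a structured form, one way to operationalize this kind of contextualization is sketched below. It is a minimal illustration rather than the procedure used in our study: the `TaskObservation` record, the comment codes, the above-median cutoff, and all values are hypothetical placeholders. The sketch flags sessions in which a long time-on-task coincides with think-aloud remarks coded mostly as content-related, so that those times can be read as possible engagement with content rather than as navigation difficulty.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskObservation:
    """One participant's record for one task (hypothetical structure)."""
    participant: str          # anonymized label such as "P1"
    seconds_on_task: float    # time-on-task taken from the session recording
    content_comments: int     # think-aloud remarks coded as about content
    navigation_comments: int  # think-aloud remarks coded as about navigation


def flag_reading_time(observations):
    """Return participants whose above-median time-on-task co-occurs with
    more content-coded than navigation-coded think-aloud remarks."""
    cutoff = median(o.seconds_on_task for o in observations)
    return [
        o.participant
        for o in observations
        if o.seconds_on_task > cutoff
        and o.content_comments > o.navigation_comments
    ]


# Placeholder values for illustration only; these are NOT data from the study.
example = [
    TaskObservation("P1", 95.0, 4, 1),
    TaskObservation("P2", 40.0, 0, 2),
    TaskObservation("P3", 120.0, 1, 5),
    TaskObservation("P4", 60.0, 2, 0),
]

print(flag_reading_time(example))
```

A flag of this kind is only a prompt to return to the transcript; the interpretation still depends on reading what the participant actually said during the task.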

Questioning how healthcare providers would read and respond.

Finally, participants enmeshed the program into their patient-provider relationships. Four participants considered and posed questions about who would read and respond to the patient information that the program requested. One task asked participants to move through a survey including Likert-scale questions about their voice. Our goal here was to test the program’s features (could the participants easily respond to the Likert questions?) and navigation (could they move through the pages of the survey?). Yet, five participants commented on the questions’ content and future readership. These five participants also noted that the survey questions caught them off guard when the questions moved from straightforward assessments of their voices (“I have control over how I think about the voice problem”) to more holistic questions about their wellbeing and world outlook (“In the last few days, how often have you felt that things were going your way?”). Three participants expressed interest in who would read and respond to the information patients submitted in answering these questions. One participant wondered, “I filled it out for a reason, not just for research, but will someone actually look at it?” The same participant elaborated, “I just want someone who will read these results and use them not just to help the community, but will help me personally.”

Participants’ questions about how their data would be used and about the healthcare providers behind the program remind us that UX encompasses not only whether end-users can effectively and comfortably navigate the program but also end-users’ goals, concerns, and contexts of use. Participants’ strong positive reaction to the educational content of the program seemed to outweigh frustrations with the navigation aspects we were studying. Likewise, while participants had no trouble completing the Likert-style questions, five commented on the shift from voice-specific to mental health-related questions, and three requested more information about how their answers would be read and used. These findings indicate a stronger focus on the content of the program and their imagined context of use, attributable to participants drawing on their experience as patients during usability testing. That is, ease of use did not equal usefulness, which is situated and contextual (see Mirel, 2004; Simmons & Zoetewey, 2012). The close collaboration between the usability and voice teams enabled us to translate patient experience insights into recommendations for changing the program both to alleviate navigation and feature issues and to better support patients’ contexts of use. These insights and recommendations also improve our understanding of what PXD looks like in practice, especially in collaboration across medical and TC disciplines.

Discussion: Methodological Insights about Usability and PXD

We began this multidisciplinary study by using traditional usability methods to examine which features of an online program, such as navigation tools, question formats, and help pages, were working effectively for the intended patient population (patients with voice-related diagnoses) and which features needed additional improvement. Through our study, we observed that navigation was a critical and sometimes high-impact issue, and we identified specific areas within the program that could benefit from clearer navigation. Usability test results did not reveal issues with features such as multiple-choice questions; however, some participants were surprised by features such as open-ended questions in the program that invited participants to type responses. Regarding help and documentation, results identified low-impact issues regarding the FAQ page and suggestions for clarifying help options.

Additionally, we found that participants engaged our usability tasks and analyzed the usability of the voice program through their patient perspectives, experiences, and needs. As a result, participants shared both patient-related usability feedback and stories and experiences about their lives as patients that were not directly related to the usability tasks we asked them to complete. While this might seem unsurprising given that we recruited participants specifically because they were patients at local voice clinics, it is notable because it suggests that task-based usability methods are conducive to soliciting patient experience feedback and insight, in contrast to disciplinary concerns about the limitations of traditional usability methods. We observed that patient experience findings were surfacing in our study not despite our traditional usability methods but because of those methods.

Importantly, our findings support Melonçon’s grounding claim that a “participatory methodological approach centered on contextual inquiry” like PXD is needed “to understand the relationship between information (or technology) and human activities in health care” (2017, p. 20). Building on Melonçon and others who have theorized PXD (e.g., St. Amant, 2017; Bivens, 2019), our findings also affirm the claim that traditional usability methods can be productively expanded to embrace PXD research. We found task-based usability methods successful in examining patient experiences when two other commitments were also enacted: 1) engaging patients as participants, and 2) embracing multidisciplinary collaboration.

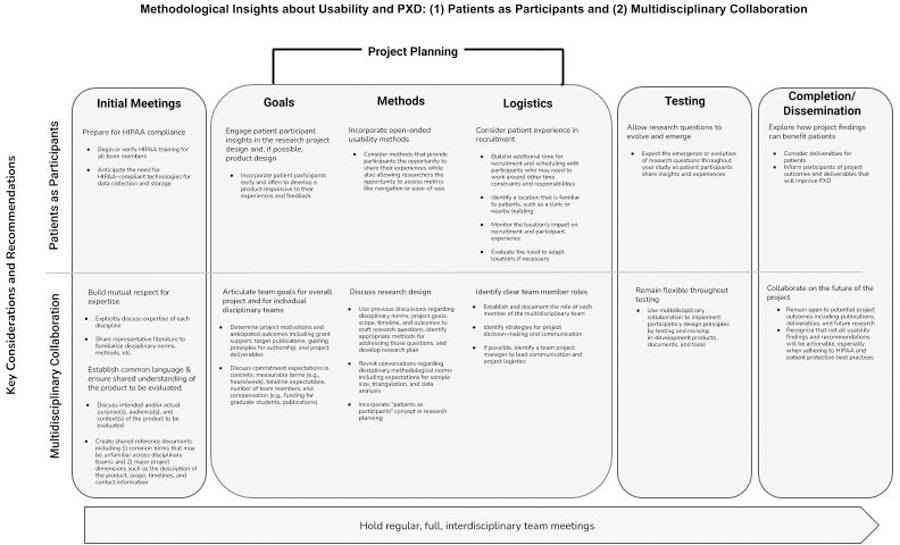

In the final sections of this article, we offer considerations and specific, actionable recommendations for future researchers interested in integrating PXD and traditional usability methods (summarized in Figure 1 and discussed in the following subsections). In doing so, we advance ongoing conversations in usability and UX regarding health contexts and inter- or multidisciplinary collaborations. These recommendations are informed by the challenges and successes we encountered throughout this study that enabled us to capture patient experience data through a more traditional usability research design.

Figure 1.

Methodological insights, key considerations, and recommendations for conducting research at the intersection of usability and patient experience design: engaging patients as participants and incorporating multidisciplinary collaboration.

Insight 1: Patients as Participants

As discussed earlier, recruitment of patients for whom the voice intervention program was designed was key to our study. These participants provided feedback during testing sessions unique to the lived experiences, concerns, and perspectives of patients, specifically patients with voice-related problems, which enabled us to understand how participants, as voice patients, were using both the program and the information they encountered within it. These findings support prior scholarship in human-centered design that has shown that usability metrics are not, on their own, human-centered (see Mirel, 2004; Potts & Salvo, 2017) and build on prior TC research that has demonstrated the value in recruiting specific patients, not proxy participants (see Renguette, 2016; Breuch et al., 2016). While we agree that traditional usability metrics can problematically narrow researchers’ focus on a product and its features, our findings also suggest that traditional usability methods, when used to engage participants who bring deep contextual knowledge to the product, can yield insights related to the “complexities and nuances of the context in which the patient (and others) would use the information” (Melonçon, 2017, p. 21). In other words, think-aloud protocols with task-based scenarios as well as open-ended interview methods, combined with PXD, can generate rich, contextualized feedback regarding a healthcare product, document, or tool. Although we began this study with research questions tied closely to product-focused, narrower usability issues—navigation, features, and FAQ documentation—our data led us to make observations and recommendations informed by the contexts within which participants, as patients, made sense of the program as a whole. However, working with patients as participants comes with challenges and considerations particularly with recruitment, data privacy, and testing logistics, which we have summarized in Figure 1 and describe below.

Prepare for HIPAA Compliance.

In anticipation of recruiting patient participants, all team members completed HIPAA training, and our consent processes, usability test recordings, and data storage plans were designed to adhere to HIPAA guidelines. Additionally, REDCap was selected for hosting the program because it is a HIPAA-compliant tool provided by our institution; yet, as we noted earlier, REDCap also offers limited design flexibility, which somewhat constrained our revision recommendations. Thus, PXD work not only calls for methods that focus on patients’ contexts but can also necessitate HIPAA compliance to protect patients’ privacy, a research consideration that may be less familiar to researchers in usability and UX. Our study necessitated an understanding of both participants’ patient contexts and the research protocols, norms, and goals that each research team brought to the table.

Engage Patient Participant Insights in the Research Project & Product Design.

In alignment with other UX and PXD research (Melonçon, 2017; Arduser, 2018), we recommend capturing and integrating patient experience insights throughout product design as well as research design to best operationalize such feedback. In our case, the program we tested was developed by incorporating perspectives collected through patient interviews and focus groups prior to usability testing, which likely contributed to participants’ interest in the content even when that was not the stated focus of the usability tasks.⁵ However, we did not include patients in planning our usability testing, and based on the challenges we encountered despite our understanding of patient perspectives from a clinical standpoint, we recommend incorporating participant input into usability testing design and logistical planning. This may also carry the additional benefit of better situating the usability study for patients in terms of how it would or would not relate to their clinical care.

Incorporate Open-Ended Usability Methods.

As we have argued throughout this article, a central insight of our study is that task-based traditional usability methods are conducive to eliciting patient experience data when calibrated to PXD’s commitment to engaging patient experience beyond usability dimensions like navigation or ease-of-use. Open-ended usability methods such as think-aloud protocols and debriefing interviews created opportunities for participants to share their patient experiences while simultaneously assessing traditional usability metrics like navigation. Although our prompts asked participants to perform specific, delimited tasks using the program, participants rarely spoke about that “simple singular task,” but continually considered the patient contexts in which they would engage the program (Potts & Salvo, 2017, p. 5). Participants brought their knowledge of voice-related conditions, their relationships with healthcare providers, and the enfolding of medical care with their daily lives to bear on the “simple singular tasks” we asked them to complete. Our findings support Arduser’s (2018) claim that new usability and UX approaches do not necessarily need to replace traditional usability methods, nor do traditional usability methods necessarily fail to capture or address the “complexities and nuances” of patient experiences (Melonçon, 2017, p. 22). Our findings also support research across TC, medicine, and social scientific disciplines that has emphasized the value of storytelling and patient narratives for illuminating patients’ lived experiences within and beyond the context of usability and user experiences (see Garrison-Joyner & Caravella, 2020; Houston et al., 2011). Methods that enable participants to share their stories and contextualize their experiences as both users and patients (in our case, task-based scenarios with think-aloud protocols) are especially valuable for PXD research.

Consider Patient Experience During Recruitment.

Although location and scheduling are recruitment considerations for all on-site research studies, our testing location presented unique challenges for our patient participants regarding the identity or role we were asking them to embody for our study. That is, participants were recruited through their otolaryngology clinics, yet participated in our study at the unfamiliar Usability Lab, which prompted many participants to question what perspective (user, patient, or both) we were asking them to occupy during the tests. Future PXD research should carefully consider location and scheduling as potential barriers to recruitment. Although usability labs have been prized as controlled settings for conducting usability tests (see Redish, 2010), PXD research, even if it includes traditional usability methods, may need to be adapted to clinical settings or other locations that are more familiar and conducive to patients as participants. This kind of logistical agility can further respond to PXD’s attention to patients’ unique contexts of use, offsetting potential data collection constraints presented by test locations outside of usability labs.

Allow Research Questions to Evolve & Emerge.

Our initial research questions were guided by traditional usability dimensions and the results of our heuristic evaluations. However, recognizing that our initial research questions did not fully engage all data we were collecting, we articulated an additional question: What observations from the usability test addressed patient experience with the program? Though broad, this question guided our reflections on the intersections of traditional usability and patient experience. Theorizing UX and Experience Architecture, Sullivan (2017) urged researchers to “[encounter] users’ experiences without controlling them,” which “opens [researchers] to seeing/hearing beyond what we expect,” “broaden[s] our knowledge of user experiences” and “enriches our abilities to understand user, use, and experience in ways that positively impact our capacities to design, develop, and refine the sorts of products, environments, and interactions that support users and their needs” (p. 18). Following Sullivan, we sought to open “space for research that proceeds along a path that is more open to the voice of another” (2017, p. 20). By expanding our research questions, our study accounted for both patient experience and usability of the program’s features, navigation structure, and documentation. PXD research should consider patient experience when articulating initial research questions, revisit research questions throughout data collection and analysis, and remain open to the emergence of additional research questions that can help illuminate patient experience-related findings.

Explore How Project Findings Can Benefit Patients.

Findings from our study demonstrated that patient experience was central to improving the usability of the program, and further, that patient experience contextualized the program in ways that exceeded the program’s initial scope. For instance, participants asked about the program’s availability and accessibility beyond the usability testing sessions including the possibility of a mobile-friendly version of the program. These findings suggest that additional or alternative deliverables may be useful to patients using this program. Further, such findings confirm the importance of using PXD research to benefit, inform, or otherwise intervene in patient experiences. As Melonçon argued, PXD can be used to better support patients’ ability to “help themselves and bring about better health outcomes” (2017, p. 25). In other words, PXD work should be open to the possibility for (additional) deliverables specifically for patients. PXD research that is open and flexible regarding project deliverables and products may be best-suited to value and support patient experience throughout the design and evaluation processes.

Insight 2: Multidisciplinary Collaboration

Our study also highlights how engaging patient participants and multidisciplinary collaboration build on and mutually inform one another. Collaborations with clients, users, industry partners, and extra-disciplinary researchers have been central to TC research, particularly for usability and UX studies (see Cardinal et al., 2020; Melonçon, 2017; Renguette, 2016; Rose et al., 2017) and research in health contexts (see Graham et al., 2017; Kuehl et al., 2020; Opel et al., 2018). As we considered the past, present, and future of UX research for this special issue, we realized that core to our study and its engagement with patient participants was multidisciplinary collaboration; thus, we aim to extend prior collaborative research by further elucidating the value of multidisciplinary collaborations as well as the actionable considerations these collaborations require for PXD research.

While PXD research can be conducted without multidisciplinary collaboration (see Bivens, 2019, for one example), we found it central to our study’s ability to recruit and engage representative patient participants and to operationalize patient feedback into actionable recommendations. Equally important, our multidisciplinary collaboration enabled us to implement participatory design principles and collaboratively actualize results from our study in revisions to the program. Our collaboration enabled the usability team’s patient experience data and related usability recommendations to guide the voice team’s revision of the program. To fully interpret and apply PXD insights, we recommend such cross-disciplinary partnerships; this way, usability researchers remain mindful of the medical contexts and constraints shaping their projects, and data analysis is informed not just by the usability findings but also by contextual knowledge of the product being tested and medical expertise. We identified several insights, challenges, and recommendations that may be helpful to future collaborative PXD research, summarized in Figure 1 and detailed below.

Build Mutual Respect for Expertise.

Prior to this study, the usability and voice teams had never met. In initial meetings, we established a mutual respect for complementary areas of expertise by sharing information and literature to begin to develop a common language and share methodological and publication norms. These practices maximized the disciplinary expertise each team brought and unified our individual and collaborative goals for this study, while enabling us to work productively together across disciplinary boundaries.

Establish Common Language and Ensure Shared Understanding.

We recommend creating shared documentation that defines key project terms and disciplinary concepts and acronyms, and outlines project goals, scope, and timeline. In our team, these documents developed organically over time, but it may be more efficient to create shared living documents at the outset. We included a summary of the background and goals of the program being evaluated, and often revisited this summary, particularly when the team membership evolved across semesters, graduations, and employment changes.

Articulate Team Goals for Overall Project and for Individual Disciplinary Teams.

At the project planning stage, we discussed specific team and individual goals so we could transparently establish a mutually beneficial plan for our collaboration. In our case, since all team members were in academia, goals related to funding and publications were well-aligned. We discussed specific publication goals, philosophy regarding authorship, and deliverables. We also discussed timeline, number and roles of team members, and compensation. We acknowledged that compensation might not be only monetary, but could include publications and student roles in the project. A list of team members, their roles, and potentially their individual goals may be helpful particularly if team members change over time. Our study did not have a project manager, but this would also be helpful for communication and project logistics.

Discuss Research Design.

During the project planning phase we also discussed research design, and these discussions built on the foundation created in our initial meetings. We worked together to refine the project scope, timeline, and products. The research plan necessitated careful consideration of disciplinary methodological norms, including sample size, data collection, and data analysis. We also discussed potential implications of having patients as participants when considering research design. For example, the voice team, being familiar with this patient population, emphasized the importance of open-ended data collection, which was complemented by the usability team’s familiarity with traditional usability measures.

Remain Flexible Throughout Testing.

We found it very valuable to continue our multidisciplinary collaboration throughout testing; in fact, we made several adjustments during the testing process, and having full team participation was important for those changes. In the project planning stage, we had faculty-only meetings, but as the study team grew and testing began, we expanded meetings to all team members (including undergraduate and graduate students and research professionals) to help the study progress smoothly. We recommend holding regular, full team meetings, with minutes, to share project progress and updates, address emerging challenges, and maintain study momentum. Discussions at these team meetings may also be a valuable educational opportunity. These ongoing multidisciplinary conversations allowed for iterative revisiting of both the immediate and broader project goals.

Collaborate on the Future of the Project.

Our regular, full team meetings allowed for smooth collaboration and co-creation of our planned products (e.g., publications). We also needed to consider the extent to which findings generated by patient participants might be actionable, given constraints of cost and HIPAA-compliant data handling for a patient-facing program collecting private health information. Finally, we began considering how this collaboration could be continued beyond this single project, and explicitly discussed the importance of advocating for the PXD lens in studies focused on patients as participants. We hope that the recommendations offered here will help facilitate other successful PXD collaborations.

Conclusion

As the intersections between TC and UX continue to grow, health and medical contexts will continue to be an important area of inquiry for TC researchers, and a key area where the expertise of TC can be actualized (Melonçon, 2017). Indeed, PXD operationalizes TC’s expertise in UX and interest in healthcare settings and further contributes to recent commitments to human-centered and participatory design. In developing this study, we recognized its unique positioning at this nexus of TC, UX, and healthcare, and its potential to both meaningfully use and build PXD. Specifically, we sought to maximize the potential for gaining insights from our patient participants who were not simply users in health care settings but participants who offered embodied, experiential insight regarding the program we aimed to optimize. Therefore, we turned to PXD as a methodology for engaging the diverse stakeholders, genres, knowledge, and experiences embedded in health and medical contexts (Melonçon, 2017, p. 25). We also aimed to respond to Melonçon’s (2017) call for further building PXD. Consequently, this article both detailed our own experience using PXD with task-based usability methods and explored the methodological and practical considerations we found central to conducting such work. We found that PXD foregrounds the unique considerations, contexts of use, and concerns that people may have as they engage with a product through their patient identities and experiences. Finally, we extended both past PXD research and collaborative research by affirming two intersecting insights that enabled our study — patients as participants and multidisciplinary collaboration — and offered practical recommendations for engaging these intersections in future research.

Task-based usability methods were useful in answering our research questions that engaged both usability dimensions (navigation and features) and patient experience. As Barnum (2011) has shown, usability’s seemingly narrow and specific focus areas (features, navigation, documentation, but also design, layout, typography, etc.) have always been bound up in questions of “how to create a product that works for people” (p. 196). Because technical communicators have a long history as user advocates, they are well positioned to extend this role into usability, UX, and healthcare settings by acting as patient advocates during design and testing of new interventions. At a time when usability and UX are expanding into longer-term, broader, and more deeply contextualized engagement with users, their contexts, and their ecologies of use, we also show that task-based usability methods have an important role to play in PXD and its focus on contextual inquiry, specifically when usability studies are conducted with specialized groups of participants.

That said, these insights and our accompanying recommendations come with limitations. In particular, we have emphasized throughout this article the value of traditional usability methods for PXD research. Our study was small in scope; thus, additional and expanded studies that examine the intersection between PXD and usability methods are needed to further refine the insights presented here. Additionally, we did not measure dimensions such as readability, comprehension, or memorability that are often key to health-related documents, tools, and product designs. Future research using task-based usability methods should consider and address these limitations to further develop PXD. We also recognize that task-based methods represent only one type of usability method and may not be appropriate for all PXD research. Future studies that examine additional usability methods are necessary to more fully understand the role usability methods can or should play in PXD research. Finally, our study illustrated the value, in our experience, of multidisciplinary collaboration for usability, UX, and PXD research. As collaborative research is increasingly valuable, particularly in the contexts of health and medicine, additional studies that examine, reflect, and report on the challenges and successes of this kind of multidisciplinary research can best support future usability and UX research.

Ultimately, our experience demonstrates that the bridging of past and future usability and UX methods is valuable for meaningful engagement of patients and their embodied experiences. Future PXD research should consider the strategic adaptation of traditional usability methods, alongside the innovative development of new usability and user experience methods. As the intersection of healthcare and TC continues to grow, PXD research too will become increasingly important for designing and creating documents, tools, and interface designs that are not only usable, but also attuned to patients’ particular needs, expectations, and desires. We hope our methodological insights regarding patient participants and multidisciplinary collaboration will catalyze future PXD developments.

Appendix A. Usability Test Task Scenarios and Post-Task User Satisfaction Rating

Note: This appendix includes the moderator instructions and five task scenarios we asked participants to complete in our usability tests.

Participants will be asked to complete five tasks. The moderator will ask the participant to read the task aloud before starting, and then to “think aloud” as much as possible while completing the task. A post-task question will follow each task.

Scenario and Task 1: Log in, stop

You have opened an email to start the Voice Education Program. You want to complete the first program. From your email, go to the VOICE site and log in. Say ‘done’ when you are finished.

Scenario and Task 2: Open program

You need to describe your voice and the effects your voice has on your life. Complete the program’s survey to describe your voice. Stop when you get to the beginning of page 4. Say ‘done’ when you are finished.

Scenario and Task 3: Review vocal health tips, navigate back to program

You want to review some vocal health tips, then continue finishing the program’s survey. Explore a voice-related tip category in the links. When you have explored a tip, then navigate back to page 4 of the check-in survey. Say ‘done’ when you are finished.

Scenario and Task 4: Exit the system and log back in

You would like to take a break and come back to finish later. Exit the system completely, close your browser, and then log back in.

Scenario and Task 5: Getting help

You are having issues with the website. You decide to look for help options. Say ‘done’ when you are finished.

Post-Task Rating

After each task, we asked participants the following question:

Please rate how easy or difficult this task was to complete on a scale of 1 to 5, with 1 being very easy and 5 being very difficult.

Appendix B. Please circle five words that best describe your experience with the module.

| | | |
|---|---|---|
| advanced | engaging | patronizing |
| annoying | entertaining | personal |
| approachable | essential | poor quality |
| attractive | exceptional | powerful |
| busy | exciting | predictable |
| clean | familiar | professional |
| collaborative | fast | relevant |
| complex | flexible | reliable |
| comprehensive | friendly | rigid |
| confusing | helpful | satisfying |
| consistent | high quality | simplistic |
| convenient | impersonal | straight forward |
| creative | inconsistent | stressful |
| cutting edge | ineffective | time-consuming |
| dated | innovative | trustworthy |
| desirable | inspiring | unattractive |
| difficult | intimidating | unconventional |
| dull | inviting | undesirable |
| easy to use | irrelevant | unpredictable |
| effective | organized | unrefined |
| efficient | overwhelming | useful |

Debriefing Interview Questions

After participants completed all tasks, the moderator conducted a debriefing interview that included the following questions:

What was your first impression of the program?

Did that impression change as you used it, and if so, why?

What did you like best about the pages where you did the test tasks, and what did you like least?

If anything, what would you change about the pages where you did the test tasks?

Do you have any additional comments about your experience?

Footnotes

This study was approved as a part of clinical trial #1507S75003 by the University of Minnesota Institutional Review Board.

The Voice Handicap Index-10 is a widely used 10-item questionnaire that subjectively measures voice-related quality of life (Rosen et al., 2004).

See Arffa et al., 2012.

Testing sessions were recorded via a usability lab computer directly to a HIPAA-compliant folder approved for storing identifiable health data.

Importantly, the participants of the usability tests had not seen the program prior to testing. In suggesting that patient feedback may have contributed to participants’ interest in the program content, we mean to say that prior feedback from the patient perspective may have improved the program and its content for patients in general.

Contributor Information

Molly M. Kessler, Department of Writing Studies, University of Minnesota Twin Cities, Minneapolis, MN, USA

Lee-Ann K. Breuch, Department of Writing Studies, University of Minnesota Twin Cities, Minneapolis, MN, USA

Danielle M. Stambler, Department of Writing Studies, University of Minnesota Twin Cities, Minneapolis, MN, USA

Kari L. Campeau, Department of English, University of Colorado Denver, Denver, CO, USA

Olivia J. Riggins, Department of Writing Studies, University of Minnesota Twin Cities, Minneapolis, MN, USA

Erin Feddema, Department of Otolaryngology, University of Minnesota Twin Cities, Minneapolis, MN, USA

Sarah I. Doornink, Department of Otolaryngology, University of Minnesota Twin Cities, Minneapolis, MN, USA

Stephanie Misono, Department of Otolaryngology, University of Minnesota Twin Cities, Minneapolis, MN, USA

References

- Albers MJ (2004). Communication of complex information: User goals and information needs for dynamic web information. Lawrence Erlbaum Associates.

- Arduser L (2018). Impatient patients: A DIY usability approach in diabetes wearable technologies. Communication Design Quarterly Review, 5(4), 31–39.

- Arffa RE, Krishna P, Gartner-Schmidt J, & Rosen CA (2012). Normative values for the voice handicap index-10. Journal of Voice, 26(4), 462–465.

- Baldwin JL, Singh H, Sittig DF, & Giardina TD (2017). Patient portals and health apps: Pitfalls, promises, and what one might learn from the other. Healthcare, 5(3), 81–85. doi: 10.1016/j.hjdsi.2016.08.004

- Barnum C (2011). Usability testing essentials: Ready, set … test! Morgan Kaufmann.

- Barnum C (2019). Invited essay: The state of UX research. Journal of Usability Studies, 15(1), 1–7.

- Beatty AL, Magnusson SL, Fortney JC, Sayre GG, & Whooley MA (2018). VA FitHeart, a mobile app for cardiac rehabilitation: Usability study. JMIR Human Factors, 5(1), e3.

- Bivens K (2019). Reducing harm by designing discourse and digital tools for opioid users’ contexts: The Chicago Recovery Alliance’s community-based context of use and PwrdBy’s technology-based context of use. Communication Design Quarterly Review, 7(2), 17–27.

- Bloom-Pojar R & DeVasto D (2019). Visualizing translation spaces for cross-cultural health communication. Present Tense, 7(3).

- Breuch L, Bakke A, Thomas-Pollei K, Mackey L, & Weinert C (2016). Toward audience involvement: Extending audiences of written physician notes in a hospital setting. Written Communication, 33(4), 418–451.

- Breuch L, Zachry M, & Spinuzzi C (2001). Usability instruction in technical communication programs: New directions in curriculum development. Journal of Business and Technical Communication, 15(2), 223–240.

- Cardinal A, Gonzales L, & Rose EJ (2020, October). Language as participation: Multilingual user experience design. Proceedings of the 38th ACM International Conference on Design of Communication (pp. 1–7).

- Garrison-Joyner V, & Caravella E (2020). Lapses in literacy: Cultural accessibility in graphic health communication. Technical Communication Quarterly, 29(3), iii–xxv.

- Gouge CC (2017). Improving patient discharge communication. Journal of Technical Writing and Communication, 47(4), 419–439.

- Graham SS, Harley A, Kessler MM, Roberts L, DeVasto D, Card DJ, … & Kim SY (2017). Catalyzing transdisciplinarity: A systems ethnography of cancer–obesity comorbidity and risk coincidence. Qualitative Health Research, 27(6), 877–892.

- Hackos J, & Redish J (1998). User and task analysis for interface design. John Wiley & Sons.

- Harris P, Taylor R, Thielke R, Payne J, Gonzalez N, & Conde J (2009). Research electronic data capture (REDCap)—A metadata-driven methodology and workflow process for providing translational research informatics support. Journal of Biomedical Informatics, 42(2), 377–381.

- Hong Y, Goldberg D, Dahlke DV, Ory MG, Cargill JS, Coughlin R, … Peres SC (2014). Testing usability and acceptability of a web application to promote physical activity (iCanFit) among older adults. JMIR Human Factors, 1(1).

- Howard TW (2015). Accommodationist and constructivist approaches to academic-industry partnerships in a usability and user-experience facility. In Bridgeford T & St. Amant K (Eds.), Academy-industry relationships and partnerships: Perspectives for technical communicators (pp. 157–177). Routledge.

- Johnson RR (1998). User-centered technology: A rhetorical theory for computers and other mundane artifacts. State University of New York Press.

- Johnson R, Salvo M, & Zoetewey M (2007). User-centered technology in participatory culture: Two decades “beyond a narrow conception of usability testing.” IEEE Transactions on Professional Communication, 50(4), 320–332.

- Kuehl RA, Anderson J, Drury SAM, Holman A, Hunt C, & Leighter JL (2020). Creating a multidisciplinary dialogue about community-based participatory research partnerships of health and medicine. Rhetoric of Health & Medicine, 3(1), 93–132.

- Malterud K, Siersma VD, & Guassora AD (2015). Sample size in qualitative interview studies: Guided by information power. Qualitative Health Research, 1–8. doi: 10.1177/1049732315617444

- Mayberry LS, Berg CA, Harper KJ, & Osborn CY (2016). The design, usability, and feasibility of a family-focused diabetes self-care support mHealth intervention for diverse, low-income adults with type 2 diabetes. Journal of Diabetes Research, 1–13. doi: 10.1155/2016/7586385

- Melonçon L (2017). Patient experience design: Expanding usability methodologies for healthcare. Communication Design Quarterly Review, 5(2), 19–28.

- Mirel B (2004). Interaction design for complex problem-solving: Developing useful and usable software. Boston, MA: Elsevier.

- Nguyen-Feng VN, Frazier PA, Stockness A, Narayanan A, Merians AN, & Misono S (2020). Web-based perceived present control intervention for voice disorders: A pilot study. Journal of Voice, 34(2), 300.e1–300.e9.

- Nielsen J (1993). Usability engineering. Morgan Kaufmann.

- Nielsen J (1994). 10 usability heuristics for user interface design. Retrieved from https://www.nngroup.com/articles/ten-usability-heuristics/

- Opel DS, Abbott C, & Hart-Davidson W (2018). Toward an encounter team model of clinical project management: A needs analysis of a family health center. Technical Communication, 65(2), 181–193.

- Potts L (2013). Social media in disaster response: How experience architects can build for participation. Routledge.

- Potts L & Salvo MJ (Eds.) (2017). Rhetoric and experience architecture. Parlor Press.

- Redish G (2007). Expanding usability testing to evaluate complex systems. Journal of Usability Studies, 2(3), 102–111.

- Redish JC (2010). Technical communication and usability: Intertwined strands and mutual influences. IEEE Transactions on Professional Communication, 53(3), 191–201.

- Renguette C (2016). Technical communication, academic research, and patient education: A multidisciplinary collaboration. Technical Communication, 63(4), 365–374.

- Rose E & Racadio R (2017). Testing in translation: Conducting usability studies with transnational users. Rhetoric, Professional Communication, and Globalization, 1, 5–26.

- Rose EJ (2016). Design as advocacy: Using a human-centered approach to investigate the needs of vulnerable populations. Journal of Technical Writing and Communication, 46(4), 427–445.

- Rose E, Racadio R, Wong K, Nguyen S, Kim J, & Zahler A (2017). Community-based user experience: Evaluating the usability of health insurance information with immigrant patients. IEEE Transactions on Professional Communication, 60(2), 214–231.

- Rosen CA, Lee AS, Osborne J, Zullo T, & Murry T (2004). Development and validation of the voice handicap index‐10. The Laryngoscope, 114(9), 1549–1556.

- Schriver KA (1997). Dynamics in document design. Wiley Computer Pub.

- Simmons W, & Zoetewey M (2012). Productive usability: Fostering civic engagement and creating more useful online spaces for public deliberation. Technical Communication Quarterly, 21(3), 251–276.

- St. Amant K (2017). The cultural context of care in international communication design: A heuristic for addressing usability in international health and medical communication. Communication Design Quarterly Review, 5(2), 62–70.

- Sullivan P (2017). Beckon, encounter, experience: The danger of control and the promise of encounters in the study of user experience. In Potts L, & Salvo MJ (Eds.) Rhetoric and experience architecture (pp. 41–57). Parlor Press.

- Walton R, & Jones N (2013). Navigating increasingly cross-cultural, cross-disciplinary, and cross-organizational contexts to support social justice. Communication Design Quarterly Review, 1(4), 31–35.

- Zachry M, & Spyridakis J (2016). Human-centered design and the field of technical communication. Journal of Technical Writing and Communication, 46(4), 392–401.