Abstract

Introduction

To address the need for remote assessments of cognitive decline and dementia, we developed and administered electronic versions of the Clinical Dementia Rating (CDR®) and the Financial Capacity Instrument‐Short Form (FCI‐SF) (F‐CAP®), called the eCDR and eFCI, respectively.

Methods

The CDR and FCI‐SF were adapted for remote, unsupervised, online use based on item response analysis of the standard instruments. Participants completed the eCDR and eFCI first in clinic, and then at home within 2 weeks.

Results

Of the 243 enrolled participants, 179 (73%) were cognitively unimpaired (CU), 50 (21%) had mild cognitive impairment (MCI) or dementia, and 14 (6%) had an unknown diagnosis. Of all enrolled participants, 85% and 84% successfully completed the eCDR and eFCI, respectively, at home.

Discussion

These results show initial feasibility in developing and administering online instruments to remotely assess and monitor cognitive decline along the CU to MCI/very mild dementia continuum. Validation is an important next step.

Keywords: aging research, Alzheimer's disease, Brain Health Registry, internet, remote online instruments

1. INTRODUCTION

The development of psychometrically validated assessments to identify older adults at risk for cognitive decline and dementia due to Alzheimer's disease (AD) is a critical need for clinical researchers. However, many assessments for clinical research, treatment trials, and clinical practice are conducted in person at a research clinic, which can be time consuming and expensive. 1 , 2 In addition to the increase in older adults using the internet for a wide variety of activities, 3 , 4 internet‐based patient communication, including cognitive assessments, is increasing in health‐care settings. There are currently over 40 different computerized neuropsychological tests, although most have not been clinically validated for unsupervised, at‐home use. 4 , 5 , 6 , 7 The COVID‐19 pandemic underscores a pressing need for the development and validation of inexpensive, scalable online methods to remotely assess and longitudinally monitor individuals, which may help to facilitate AD clinical trials and accelerate the development of new treatments more efficiently.

Measures of cognition and activities of daily living have been demonstrated to be highly valuable for clinical AD research, especially for detecting early changes that predict future decline and disease progression. 8 One well‐validated measure of cognitive and functional decline is the Clinical Dementia Rating (CDR®), 9 , 10 , 11 which is widely used to characterize progression of AD and related dementias. Semi‐structured interviews with a participant and a study partner are used to calculate cognitive and functional status scores across six domains, as well as a composite or global CDR score. Another well‐validated measure is the Financial Capacity Instrument‐Short Form (FCI‐SF) (F‐CAP®), 12 , 13 , 14 , 15 , 16 which is used to detect decline in everyday financial skills in older adults. The FCI‐SF is a performance‐based assessment of four financial domains including monetary calculation skills, financial conceptual knowledge, understanding/using a checkbook and register, and understanding/using a bank statement. Items are scored and summed to calculate four component performance scores and a total score in addition to timing indexes of four tasks.

Although both the CDR and FCI‐SF are well validated in clinical settings, there are limitations that hinder their accessibility and scalability. Both require administration in a supervised setting with an experienced and certified rater, and therefore have high burden for administrators 2 and participants. 1 We developed online versions of both instruments, called the electronic CDR (eCDR) and the electronic Financial Capacity Instrument‐Short Form (eFCI) and assessed the feasibility of implementing these newly developed instruments through the Brain Health Registry (BHR) internet‐based platform. 17 , 18 The eCDR and eFCI, if validated, have the potential to facilitate clinical AD research and health care, by allowing remote assessment of cognition and function in an inexpensive, efficient, and scalable way.

2. METHODS

2.1. Description of the traditional instruments: CDR and FCI‐SF

2.1.1. Clinical Dementia Rating (CDR)

The CDR, developed at Washington University in St. Louis (WU), is used to detect the presence of dementia and when present, to determine the extent of cognitive and functional decline. 9 , 10 Using their best judgment, a clinician obtains the information necessary to assign a CDR score by conducting semi‐structured interviews with a participant and their study partner (typically, a spouse or adult child). Impairment is scored based on decline from a previously obtained level of function and is rated in six categories: Memory, Orientation, Judgment and Problem Solving, Community Affairs, Home and Hobbies, and Personal Care. Each category is rated independently across five levels of impairment: none (0), very mild (0.5), mild (1), moderate (2), and severe (3); an exception is the Personal Care domain, which is rated as 0, 1, 2, or 3. Scores in each category are synthesized to yield a global CDR score on an ordinal 5‐point scale, on which CDR 0 indicates normal cognition and CDR 0.5, 1, 2, and 3 designate very mild, mild, moderate, and severe dementia, respectively.
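The rating levels described above can be written down as a small validation sketch. It only checks that a set of box scores is well formed (six domains, with Personal Care lacking the 0.5 level). It does not compute the global CDR score, because the published scoring rules are not reproduced in this text. The function and variable names are illustrative assumptions, not part of any CDR software.

```python
# Allowed box-score levels per CDR domain, as described in the text above.
STANDARD_LEVELS = (0.0, 0.5, 1.0, 2.0, 3.0)
PERSONAL_CARE_LEVELS = (0.0, 1.0, 2.0, 3.0)  # no 0.5 ("very mild") level

ALLOWED_LEVELS = {
    "Memory": STANDARD_LEVELS,
    "Orientation": STANDARD_LEVELS,
    "Judgment and Problem Solving": STANDARD_LEVELS,
    "Community Affairs": STANDARD_LEVELS,
    "Home and Hobbies": STANDARD_LEVELS,
    "Personal Care": PERSONAL_CARE_LEVELS,
}


def validate_box_scores(scores):
    """Return a list of problems; an empty list means the ratings are well formed.

    `scores` maps a domain name to its rating (hypothetical input format).
    """
    problems = []
    for domain, allowed in ALLOWED_LEVELS.items():
        if domain not in scores:
            problems.append(f"missing rating for {domain}")
        elif scores[domain] not in allowed:
            problems.append(f"{domain}: {scores[domain]} is not one of {allowed}")
    return problems
```

For example, a record that rates Personal Care as 0.5 would be flagged, whereas the same value is acceptable in the other five domains.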

RESEARCH IN CONTEXT

Systematic Review: A PubMed search was conducted to review: (1) currently available computerized neuropsychological tests and remotely administered cognitive and functional assessments in older adults; (2) internet and technology use by older adults; (3) evidence for use of cognitive and functional measures in AD research; and (4) content, psychometric properties, and validity of the Clinical Dementia Rating (CDR) and Financial Capacity Instrument‐Short Form (FCI‐SF).

Interpretation: Our findings demonstrate the feasibility of implementing the electronic versions of the CDR and FCI (eCDR and eFCI) in a cohort of older adults with unimpaired cognition, mild cognitive impairment (MCI), and very mild dementia.

Future Directions: Ongoing analyses are now validating the eCDR and eFCI by: (1) comparing performance on e‐instruments to their in‐clinic counterparts, (2) estimating associations between e‐instrument performance and in‐clinic neuropsychological tests, and (3) assessing the sensitivity and specificity of the e‐instruments to detect MCI. Future studies will validate the instruments in more diverse populations.

Attributes of the CDR include multidimensional assessment of cognition, behavior, and function and linkage to validated diagnostic criteria for dementia due to AD. 19 , 20 The CDR is scored independently of neuropsychological test (NPT) performance, so NPT data can remain as independent variables in longitudinal studies. Because the instrument assesses decline from a person's previous level of function, it also lessens the influence of age, 21 education, linguistic, and sociocultural confounders. 22 The CDR has high inter‐rater reliability in multi‐center trials, 10 , 23 , 24 , 25 strong content and criterion validity, 26 and internal consistency and internal responsiveness. 27 There are well established, standard training and certification protocols 10 to stably administer the CDR and assess cognitive impairment. 28 Because the CDR captures clinically meaningful change, 29 it has been proposed as the primary outcome measure in many AD clinical trials.

2.1.2. Financial Capacity Instrument‐Short Form (FCI‐SF)

The FCI‐SF, developed at the University of Alabama at Birmingham (UAB), is a 15‐minute measure that uses performance and time‐to‐completion variables to detect decline in everyday financial cognition in older adults. 14 The measure uses financial concepts, coins/currency, documents, and other financially related stimuli relevant to the monetary system and financial practice of the US population. The FCI‐SF, derived from the FCI‐Long Form (FCI‐LF), comprises 37 performance items that evaluate four financial domains: monetary calculation, conceptual knowledge, using a checkbook/register, and using a bank statement. 13 The FCI‐SF also includes six processing speed indexes, measuring the time‐to‐completion of four specific FCI tasks. Total performance scores range from 0 to 74 points and the six time‐to‐completion indices include a maximum composite time of 670 seconds. The FCI‐SF also includes four unscored yes/no questions concerning a participant's prior experience with specific financial tasks. The FCI‐SF requires a trained rater and has a detailed and well‐operationalized administration and scoring manual. Age‐ and education‐adjusted older adult norms for the FCI‐SF have been published. 12 The FCI‐SF has been associated with dementia progression and has shown sensitivity to amyloid beta (Aβ) status in cognitively unimpaired (CU) older adults. 14 The FCI‐SF has also been shown to have excellent internal reliability, interrater reliability, and concurrent validity with the FCI‐LF. 12

2.2. Statistical analysis of existing data: CDR and FCI‐SF

To inform item selection for the electronic versions of the CDR and FCI‐SF, item response theory (IRT) 30 analysis of existing CDR and FCI‐SF clinical data was performed for this study. The IRT analysis approach was used to identify specific items that were most informative in estimating the underlying degree of cognitive/functional impairment; these items were kept in the electronic instrument. IRT analysis was also used to identify specific items that had less sensitivity, poor psychometric properties, and that contributed the least information in estimating the underlying degree of cognitive/functional impairment; these items were eliminated from the electronic instrument. Ordinary least squares linear regression analysis also was used to measure associations between individual items and overall instrument score.
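To make the idea of an "informative" item concrete, the sketch below computes item information under a two‐parameter logistic (2PL) model. This is an assumption for illustration only: the text does not state which IRT model was fitted, and polytomous items would call for a different model (for example, a graded response model). The parameter values in the usage note are hypothetical.

```python
import math


def prob_endorse(theta, a, b):
    """2PL probability of endorsing an item at latent impairment level theta.

    a: discrimination, b: difficulty (location) of the item.
    """
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta, a, b):
    """Fisher information of a 2PL item at theta: a^2 * P * (1 - P)."""
    p = prob_endorse(theta, a, b)
    return a * a * p * (1.0 - p)


def rank_items(items, theta):
    """Sort hypothetical (name, a, b) items from most to least informative at theta."""
    return sorted(items, key=lambda it: item_information(theta, it[1], it[2]), reverse=True)
```

Under these assumptions, an item with high discrimination located near the impairment level of interest (for example, `a = 2.0`, `b = 0.0` evaluated at `theta = 0.0`) carries far more information than a weakly discriminating item (`a = 0.5`), which is the kind of contrast used to decide which items to keep.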

IRT analysis for the CDR included item‐level data from WU's Memory and Aging Project. 31 The WU dataset contained >3000 records with 65 items measuring the six subdomains of the CDR. IRT analysis of the CDR indicated that the majority of the items in the CDR discriminated well at mild and very mild levels of cognitive impairment. 32 Of the original 65 items, 53 informative items that had demonstrated high discriminative power were kept in the final statistical model for estimation of overall and domain specific scores. 31 , 32

IRT analysis for the FCI‐SF used item‐level data from the Mayo Clinic Study of Aging (n = 1472) 33 and the Cognitive Observations in Seniors (COINS) study at UAB (n = 144). 34 Statistical IRT and differential item functioning (DIF) analysis of the FCI‐SF indicated that the majority of the items in the FCI‐SF discriminated well between individuals with varying levels of financial capacity and required either a higher or lower level of financial capacity to be answered correctly. 35 All FCI‐SF items, including interrelated tasks, were retained in the eFCI.

2.3. Development of electronic instruments

2.3.1. Overall approach to adapt traditional instruments into online instruments

To adapt the CDR and the FCI‐SF for online administration, each item was reviewed to first determine whether it could be reasonably translated into an online format. The overall approaches used to adapt the CDR and the FCI‐SF for online use are described in Table 1. Items with discrete response options (e.g., yes/no) were adapted verbatim in a simple survey format (Table 1, Row 1). Items with open‐ended responses were altered to have multiple‐choice options (Table 1, Row 2) or dropdown menus. To preserve the integrity of online data collection for these instruments, multiple choice and drop‐down menu options were generated that contained a list of the most frequent responses. Investigators used their extensive experience with the CDR and the FCI‐SF to identify and develop a list of the most frequent responses to open‐ended questions. For items likely to show practice effects, such as the short memory test within the CDR and the items requiring specific calculations in the FCI‐SF, alternative versions of items were developed. All potential items were evaluated by the study team, including clinicians familiar with the in‐clinic administration of the instruments, to determine item content and wording, as well as the importance of clinical judgment. The instruments were adapted for online use via Qualtrics software (https://www.qualtrics.com), a survey software tool for data collection. The eCDR can be administered on smartphones, tablets, and computers, whereas the eFCI is optimally administered on tablets or computers due to screen size requirements for the tasks.

TABLE 1.

Approaches used to adapt the traditional CDR and FCI‐SF for online use

| Test | Type of response/test materials | Adaptation | Example figure(s) |

|---|---|---|---|

| CDR | Discrete response options (e.g., yes/no) | Simple survey format |

|

| CDR | Open‐ended response field for written text | Multiple choice answer options or dropdown menus |

|

| FCI‐SF | Open‐ended response field for written text | Multiple choice answer options |

|

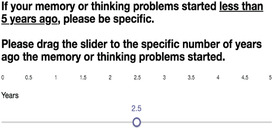

| CDR | Open‐ended response field for numerical response | “Slider” question type for numerical questions |

|

| CDR | Verbal reminders with extra details frequently provided by assessors during in‐clinic assessments | Extra details were included as supplemental text |

|

| FCI‐SF | Physical stimuli provided to participant (examples: check book, blank checks and check register) | Images of physical stimuli were displayed on device screen |

|

| FCI‐SF | Physical stimuli provided to participant to make out a check | Images of a check with clearly highlighted fields to identify different parts of the check that were specific to the question being asked |

|

| FCI‐SF | Verbal instructions to have scratch paper, a pencil, and a calculator readily available | Written instructions were displayed on device screen |

|

| FCI‐SF | Verbal reminders follow along with the administrator as they read the question aloud | Written reminders to scroll down to read and answer the question were displayed on device screen |

|

Abbreviations: CDR, Clinical Dementia Rating; eCDR, electronic Clinical Dementia Rating; eFCI, electronic Financial Capacity Instrument; FCI‐SF, Financial Capacity Instrument – Short Form.

2.3.2. eCDR development

The eCDR was developed collaboratively between the University of California San Francisco (UCSF) and WU. Additional methods were used to address challenges specific to adapting the CDR instrument. Some CDR items, such as the autobiographical recall question, 36 could not be reasonably translated into an online format due to their open‐ended nature (10% of CDR questions); these were either eliminated from the eCDR (Table 2) or altered to have a wide range of answer options. For example, the “slider” Qualtrics response type was used for numerical questions (Table 1, Row 4). Another challenge was administering and scoring the adapted eCDR without the ability to ask follow‐up questions and without the judgment of an experienced assessor. To address this, extra details that assessors most frequently provided during past in‐clinic assessments were included as supplemental text in the eCDR. For example, because the CDR was intended to assess decline from a person's previous level of function, reminders for the study partner were incorporated throughout the eCDR to endorse an answer only if the participant's observed behavior was consistent, was a change from the participant's longstanding behavior, was due to memory and thinking problems, and interfered with the participant's daily function (Table 1, Row 5). A related challenge in adapting the CDR for online use was replicating the clinician's judgment used to assign a CDR score based on a participant's or study partner's responses. To address this, a standard, automated scoring algorithm was developed based on the IRT model to remotely score the eCDR without clinician judgment. 31 , 32 Li et al. 32 described further details on the IRT analysis and the development of the automated scoring algorithm for the eCDR. The algorithm generates multiple outcome measures: categorical global and box scores (like the CDR), and novel, continuous global and box scores. 
The eCDR scoring algorithm has more granular scoring than the CDR, giving it the potential for higher sensitivity to identify subtle cognitive and functional changes in largely CU individuals with a CDR = 0. Last, the CDR relies heavily on the accuracy of study partner responses, and the accuracy of study partner responses is judged by the assessor based on the dyad relationship. To address this, the eCDR asks both the participant and the study partner questions such as how long they have known each other, whether they currently live together, and how many hours per week they spend together. The participant portion of the eCDR was expected to take a total of 15 minutes, and the study partner portion of the eCDR was expected to take a total of 20 minutes. Pilot data and user feedback from internal alpha testing were used to make minor changes to optimize the eCDR.

TABLE 2.

Items eliminated from the CDR to adapt for online use in eCDR

| Type | Question |
|---|---|
| SP | Is he/she taken to social functions outside a family home? If no, why not? |
| SP | If in nursing home, does he/she participate well in social functions (thinking)? |
| SP | If in nursing home, what can he/she no longer do well (H and H)? |
| SP | Ability to perform household tasks: Please describe (Blessed) |
| SP | Tell me about some recent event in his/her life within 1 week (autobiographical) |
| SP | Tell me about some recent event in his/her life within 1 month (autobiographical) |
| P | A few moments ago within 1 week (autobiographical) |
| P | A few moments ago within 1 month (autobiographical) |
| P | What is the name of this place? |
| P | Upon arriving in a strange city, how would you locate a friend you wanted to see? |

Abbreviations: CDR, Clinical Dementia Rating; eCDR, electronic Clinical Dementia Rating; P, participant; SP, study partner.

2.3.3. eFCI development

The eFCI was developed in collaboration between UCSF and UAB and in consultation with co‐author Dr. Marson, the creator of the FCI‐SF. Additional methods were used to address the challenges specific to adapting the FCI‐SF for online use. An alternative version of the FCI‐SF was created for development of the eFCI: 100% of the alternative FCI‐SF items were adaptable for online use. The eFCI captured timing information for each item and was expected to take a total of 20 minutes to complete. As with the CDR, the FCI‐SF requires a trained rater for in‐clinic administration. To address this, we used multiple‐choice answers or drop‐down menus that contained the most frequent responses and intentional foil responses, as well as additional text within the electronic instrument instructions, to create an eFCI that could be scored automatically without a rater. We also included instructions for participants at the beginning and throughout the eFCI to have scratch paper, a pencil, and a calculator readily available for use (Table 1, Row 8). Another challenge was adapting the physical stimuli, such as a checkbook and bank statement, used in the FCI‐SF. We created images of the physical stimuli used in clinic that display on the participant's device screen, including a bank statement, checkbook, check register, and a blank check with clearly highlighted fields to identify different parts of the check specific to the question being asked (Table 1, Row 7). An automatic eFCI scoring algorithm was developed. Pilot data and user feedback from internal alpha testing were used to make minor changes to optimize the eFCI.

2.4. Feasibility of implementing electronic instruments

2.4.1. Participants

Participants and study partners were recruited into the Electronic Validation of Online Methods to Predict and Monitor Cognitive Decline (eVAL) study from two sources. First, we referred current BHR participants to the study using automated e‐mail invitations 17 with the following inclusion criteria: agreed to be contacted about future studies, 55 years of age and older, residing within 50 miles of the UCSF site. 17 Second, participants were recruited from existing clinical cohorts from three Alzheimer's Disease Research Center (ADRC) sites: UAB, Mayo Clinic (Mayo), and WU. All participants signed consent during their in‐clinic visit, and all activities were performed under institutional review board approval at the local site.

2.4.2. Electronic instrument implementation

In‐clinic visits occurred annually during which a trained study staff member administered the CDR, FCI‐SF, eCDR, and eFCI in a supervised testing session. Participants were assisted at the beginning of the electronic instrument (e‐instrument) and had the option to get help from the study staff member as needed. Within 2 weeks of the supervised visit, participants returned home, completed registration on the BHR platform by following instructions provided in the invitation e‐mail and completed the eCDR and eFCI in an unsupervised setting at home without a study staff member. The supervised in‐clinic e‐instrument version was taken before the unsupervised at‐home e‐instrument version in all cases, which may represent a confound. This is a limitation that will be addressed in the Discussion section.

The eCDR and eFCI included instructions emphasizing that the participant should complete the instrument “entirely on his/her own, without any assistance.” After the baseline in‐clinic visit, participants were asked to complete the eCDR and eFCI every 6 months at home in an unsupervised setting. Study partners were also asked to complete the eCDR in an unsupervised setting through the BHR platform on the same timeline. Using the BHR platform, participants and study partners registered by creating a username and password, agreed to an online informed consent, and completed the electronic instruments. Participants were also asked to self‐report “How confident are you with computers?”, with the option to endorse “Not confident at all,” “I usually need help,” “It depends on the task,” or “Confident.” Automatic e‐mails were sent to participants and study partners on 1, 3, 5, 11, and 15 days after their at‐home visit due date and phone calls were made to participants and study partners on approximately 8 and 18 days after their at‐home visit due date to remind them of their at‐home and in‐clinic visits and study tasks.
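The reminder cadence described above can be expressed as a small scheduling sketch. The day offsets come from the text; the function name, the event labels, and the example due date are illustrative assumptions, and the phone‐call offsets are described in the text only as approximate.

```python
from datetime import date, timedelta

# Days after the at-home visit due date on which reminders were sent.
EMAIL_OFFSETS_DAYS = (1, 3, 5, 11, 15)
PHONE_OFFSETS_DAYS = (8, 18)  # "approximately" 8 and 18 days in the text


def reminder_schedule(due_date):
    """Return a date-ordered list of (date, channel) reminders for one due date."""
    events = [(due_date + timedelta(days=d), "email") for d in EMAIL_OFFSETS_DAYS]
    events += [(due_date + timedelta(days=d), "phone") for d in PHONE_OFFSETS_DAYS]
    return sorted(events)


if __name__ == "__main__":
    # Hypothetical due date, used only to show the output format.
    for when, channel in reminder_schedule(date(2021, 3, 1)):
        print(when.isoformat(), channel)
```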

2.4.3. Clinical diagnoses

The Uniform Data Set, Version 3 (UDS) 37 was administered to all participants. Clinical diagnoses of CU, mild cognitive impairment (MCI), or mild dementia were obtained from the UDS section D1.

2.5. Statistical analysis

To assess the reliability between the unsupervised at‐home eCDR and the supervised in‐clinic eCDR, McNemar's Chi‐square test was performed on a 2 × 2 table of at‐home and in‐clinic eCDR results of 0 or 0.5 (n = 189). Because one of the cell counts was small (<5), continuity correction was applied. We also calculated the mean and median total time it took both participants and study partners to complete their portion of the eCDR (n = 207 pairs). For the eCDR, we removed outliers based on the 1.5× interquartile range (IQR) rule. This was necessary because the initial total time included the sum of any pauses and re‐starts taken resulting in highly right‐skewed values.
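The two computations in this paragraph can be sketched with the standard library alone. The sketch assumes the usual continuity‐corrected McNemar statistic, (|b − c| − 1)² / (b + c), referred to a chi‐square distribution with one degree of freedom, where b and c are the two discordant cell counts of the 2 × 2 table. It also assumes one particular quartile convention for the 1.5 × IQR rule (the default of Python's `statistics.quantiles`); the text does not say which convention was used. The counts and times in the usage note are hypothetical, not study data.

```python
import math
import statistics


def chi2_df1_p_value(chi2):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2.0))


def mcnemar_corrected(b, c):
    """Continuity-corrected McNemar test from the two discordant counts b and c.

    Returns (chi2, p_value). No correction is applied when b == c.
    """
    if b + c == 0:
        raise ValueError("McNemar's test needs at least one discordant pair")
    chi2 = max(abs(b - c) - 1, 0) ** 2 / (b + c)
    return chi2, chi2_df1_p_value(chi2)


def drop_iqr_outliers(values, k=1.5):
    """Keep values within [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]
```

As a usage illustration, `mcnemar_corrected(6, 3)` evaluates a table with hypothetical discordant counts of 6 and 3, and `drop_iqr_outliers([10, 11, 12, 13, 14, 15, 16, 200])` drops the single very long completion time, which is the kind of value produced when pauses and re‐starts are summed into the total.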

A Pearson correlation coefficient was calculated to assess the reliability between the unsupervised at‐home eFCI and the supervised in‐clinic eFCI (n = 209). We also calculated the mean total time to complete the eFCI (n = 209).
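For reference, the Pearson coefficient and the t statistic commonly used to test it against zero can be sketched as follows. This is a generic textbook formulation, not the study's analysis code, and the function names are illustrative assumptions.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (n - 2 degrees of freedom) for testing r against zero."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))
```

Here `x` would hold the in‐clinic eFCI scores and `y` the at‐home scores for the same participants. Given a coefficient and the sample size (n = 209 in this analysis), `r_to_t` gives the statistic from which a P‐value is derived.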

3. RESULTS

3.1. Feasibility of electronic instrument implementation

Between January 17, 2020 and January 5, 2022, there were 243 participants enrolled across all sites. Enrollment and task completion rates are shown in Table 3. Out of all 3192 participants invited to enroll in the study, 250 (176 invited from BHR and 74 invited from ADRCs) declined consent or withdrew from the study. Most common specified reasons for declining consent or withdrawing from the study are included in Table 4. Seventy‐seven participants were determined ineligible and excluded.

TABLE 3.

Completion of electronic instruments

| Variable | UCSF | ADRC | Total |
|---|---|---|---|
| Invited | 2915 | 277 | 3192 |
| Enrolled | 140 (5%) | 103 (37%) | 243 (8%) |
| Completed P eCDR in‐clinic | 136 (97%) | 100 (97%) | 236 (97%) |
| Completed P eCDR at home | 135 (96%) | 72 (70%) | 207 (85%) |
| Completed SP eCDR at home | 124 (89%) | 68 (66%) | 192 (79%) |
| Completed eFCI in‐clinic | 136 (97%) | 101 (98%) | 237 (98%) |
| Completed eFCI at‐home | 134 (96%) | 71 (69%) | 205 (84%) |

Notes: Enrolled percentages indicate number enrolled out of invited participants; completed percentages indicate number that completed task out of number enrolled. Data presented as number (%) as of January 5, 2022.

Abbreviations: ADRC, Alzheimer's Disease Research Center; eCDR, electronic Clinical Dementia Rating; eFCI, electronic Financial Capacity Instrument; P, participant; SP, study partner; UCSF, University of California San Francisco.
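The completion percentages follow directly from the rule in the table notes (completed divided by enrolled). The sketch below recomputes them from the counts in Table 3; the dictionary layout and function name are illustrative assumptions.

```python
# Counts taken from Table 3.
ENROLLED = {"UCSF": 140, "ADRC": 103}

COMPLETED = {
    "P eCDR in-clinic": {"UCSF": 136, "ADRC": 100},
    "P eCDR at home": {"UCSF": 135, "ADRC": 72},
    "SP eCDR at home": {"UCSF": 124, "ADRC": 68},
    "eFCI in-clinic": {"UCSF": 136, "ADRC": 101},
    "eFCI at home": {"UCSF": 134, "ADRC": 71},
}


def completion_rates(completed, enrolled):
    """Percent of enrolled participants completing each task, per site and overall."""
    total_enrolled = sum(enrolled.values())
    rates = {}
    for task, by_site in completed.items():
        row = {site: round(100 * n / enrolled[site]) for site, n in by_site.items()}
        row["Total"] = round(100 * sum(by_site.values()) / total_enrolled)
        rates[task] = row
    return rates


if __name__ == "__main__":
    for task, row in completion_rates(COMPLETED, ENROLLED).items():
        print(task, row)
```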

TABLE 4.

Summary of declined reasons and exclusion reasons

| Declined or exclusion reason | Total |
|---|---|
| Reason for declining consent | 250 a |
| Participant does not specify reason | 120 (48%) |
| Overall not interested | 70 (28%) |
| Too much time | 18 (7%) |
| Inability to use a computer | 16 (6%) |
| Unable to come into clinic | 13 (5%) |
| No available study partner | 11 (4%) |
| Study partner doesn't want the participant to be involved | 10 (4%) |
| Medical reason | 9 (4%) |
| COVID‐19 related concerns | 7 (3%) |
| Not a good time for participant | 7 (3%) |
| Not willing to complete assessments | 3 (1%) |
| Involved in other studies | 3 (1%) |
| Death | 2 (1%) |
| Lack of access to a computer or internet at home | 1 (0.4%) |
| Reason for exclusion | 77 a |
| No available study partner | 22 (29%) |
| Inability to complete computer tasks at home | 17 (22%) |
| Medical reason | 10 (13%) |
| Does not specify reason | 10 (13%) |
| Unable to come into clinic | 7 (9%) |
| Participates in other research activities | 5 (7%) |
| Study partner doesn't want the participant to be involved | 4 (5%) |
| Lost to follow up | 1 (1%) |
| COVID‐19 related concern | 1 (1%) |
| Participant not able to complete assessments | 1 (1%) |
| Study Partner doesn't speak English | 1 (1%) |

Note: Data presented as number (%) as of January 5, 2022.

a Unique count of participants, as more than one reason can be endorsed.

3.2. Characteristics of enrolled participants

Table 5 summarizes the demographic profile, clinical diagnoses, and computer confidence of participants. Of all enrolled participants, 179 (73%) were CU, 49 (20%) had MCI, 1 (1%) had dementia, and 14 (6%) had an unknown diagnosis.

TABLE 5.

Characteristics of participants

| Characteristic | Enrolled at UCSF (N = 140) | Enrolled at ADRCs (N = 103) | Total enrolled at all sites (N = 243) |
|---|---|---|---|
| Demographics | | | |
| Age years, median (IQR) | 73 (67–79) | 74 (67–78) | 74 (67–79) |
| Female (%) | 82 (59%) | 43 (42%) | 125 (51%) |
| Education | | | |
| High school | 2 (1%) | 8 (8%) | 10 (4%) |
| Some college | 8 (6%) | 9 (9%) | 17 (7%) |
| Two‐year degree | 5 (4%) | 5 (5%) | 10 (4%) |
| Four‐year degree | 40 (29%) | 31 (30%) | 71 (29%) |
| Master's degree | 55 (39%) | 30 (29%) | 85 (35%) |
| Doctoral degree | 12 (8%) | 2 (2%) | 14 (6%) |
| Professional degree | 18 (13%) | 6 (6%) | 24 (10%) |
| Unknown | 0 (0%) | 12 (11%) | 12 (5%) |
| Race | | | |
| White (%) | 115 (82%) | 90 (87%) | 205 (84%) |
| Black or African American (%) | 1 (1%) | 11 (11%) | 12 (5%) |
| Asian (%) | 13 (9%) | 0 (0%) | 13 (5%) |
| More than one race (%) | 10 (7%) | 0 (0%) | 10 (4%) |
| American Indian or Alaskan Native (%) | 0 (0%) | 0 (0%) | 0 (0%) |
| Native Hawaiian or Other Pacific Islander (%) | 0 (0%) | 0 (0%) | 0 (0%) |
| Decline to State (%) | 1 (1%) | 1 (1%) | 2 (1%) |
| Unknown (%) | 0 (0%) | 1 (1%) | 1 (1%) |
| Ethnicity | | | |
| Hispanic or Latino (%) | 8 (6%) | 0 (0%) | 8 (3%) |
| Not Hispanic or Latino (%) | 132 (94%) | 98 (95%) | 230 (95%) |
| Declined to state (%) | 0 (0%) | 3 (3%) | 3 (1%) |
| Unknown (%) | 0 (0%) | 2 (2%) | 2 (1%) |
| Diagnosis | | | |
| Cognitively unimpaired (%) | 110 (79%) | 69 (67%) | 179 (73%) |
| Mild cognitive impairment (%) | 30 (21%) | 19 (18%) | 49 (20%) |
| Dementia (%) | 0 (0%) | 1 (1%) | 1 (1%) |
| Unknown (%) | 0 (0%) | 14 (14%) | 14 (6%) |
| Computer confidence | | | |
| Usually needs help (%) | 1 (1%) | 2 (2%) | 3 (1%) |
| Depends on task (%) | 42 (30%) | 30 (29%) | 72 (30%) |
| Confident (%) | 94 (67%) | 45 (44%) | 139 (57%) |
| Unknown (%) | 3 (2%) | 26 (25%) | 29 (12%) |

Abbreviations: ADRC, Alzheimer's Disease Research Center; IQR, interquartile range; UCSF, University of California San Francisco.

Note: Data presented as median (IQR) or number (%) as of January 5, 2022.

3.3. Reliability between in‐clinic and at‐home administration and completion times

The reliability between the supervised in‐clinic eCDR and unsupervised at‐home eCDR was estimated with McNemar's Chi‐squared test, which yielded a test statistic of 0.364 with one degree of freedom and an associated P‐value of .547 (with continuity correction). This indicates no significant difference between eCDR scores obtained in clinic and those obtained at home, which is consistent with test–retest reliability across administration settings. The mean total time to complete the participant portion of the eCDR was 12 minutes and 24 seconds (median: 12 minutes and 20 seconds). The mean total time to complete the study partner portion of the eCDR was 16 minutes and 28 seconds (median: 16 minutes and 3 seconds).

The Pearson correlation coefficient between the supervised in‐clinic eFCI and the unsupervised at‐home eFCI was 0.731 (P < .001). This supports a high correlation between the eFCI completed in clinic and at home. The mean total time to complete the eFCI was 13 minutes and 38 seconds (±4 minutes and 39 seconds).

4. DISCUSSION

The development of online unsupervised cognitive assessments can improve the accessibility of existing instruments used to identify, diagnose, and monitor individuals at risk for cognitive decline. We adapted the traditional in‐clinic CDR and FCI‐SF by developing the online unsupervised eCDR and eFCI instruments and automated scoring algorithms for each instrument. Our preliminary results demonstrated feasibility (the ability of a participant to complete all aspects of the electronic instrument) of our approach. We are now validating the e‐instruments against their traditional counterparts and against clinical diagnosis of CU or MCI/very mild dementia, and we are exploring the relationship between e‐instrument performance and other important outcomes, such as clinical diagnosis and in‐clinic neuropsychological test performance. If validated, these novel e‐instruments would help facilitate screening and assessment of older adults for AD clinical trials, AD clinical research, and clinical care. Further, they could be used for population‐based screening for early detection of cognitive impairment, and for epidemiological studies.

A significant component of e‐instrument adaptation was developing an algorithm‐based instrument that would not require a clinician to score responses using clinical judgment. To do so, we included items that were identified by clinicians as having significant influence on clinician judgment and score assignment. In addition, to best mimic in‐clinic administration, we incorporated in the e‐instruments specific examples and content for answer choices, as well as extra details and prompts that clinicians most frequently used, such as instructions to have scratch paper, a pencil, and a calculator readily available and reminders to scroll down to view the entire question in the eFCI (Table 1, Rows 8 and 9). Additionally, we used multiple‐choice answers rather than open‐ended response fields. However, presenting a list of multiple‐choice answers may have inherently prompted participants, diminished the use of a participant's abstract thinking to craft a response, and possibly changed the construct of the question. To mitigate this in the development of the eCDR and the eFCI, multiple‐choice options and dropdown menus were created to present and capture a wide range of answer responses. Adaptations to existing scoring algorithms were made to incorporate the addition of multiple‐choice answers.

We have implemented the eCDR and eFCI using the BHR platform and have collected data from participants across four clinical sites (UCSF, WU, UAB, and Mayo Clinic). This experience supports previous studies that have demonstrated the feasibility and benefit of leveraging unsupervised, online measures as a scalable approach to assess cognitive and functional decline and to improve the efficiency of screening and recruitment for clinical trials. 38 , 39 , 40

While our results demonstrate that development and implementation of these new online measures are feasible, we acknowledge limitations in our study. First, selection biases may limit the generalizability of our results. Our sample includes only individuals who have access to the internet, are computer literate, and can successfully complete tasks online. In addition, most of the sample self‐identified as White and had high educational attainment. A crucial next step is to assess feasibility and validity of the online instruments in diverse populations. We are currently using culturally tailored participant communications and digital advertising to increase the enrollment of traditionally underrepresented populations (URPs) in the study. These include recruitment strategies that adapt best practices generated from other projects, rooted in community‐engaged research methods, focused on enrolling diverse populations in BHR. If validated in diverse populations, the electronic instruments have the potential to expand accessibility to these valuable measures of cognitive and functional status, particularly for individuals who do not have access to a research clinic due to where they live or other logistical constraints that are known to unduly burden URPs. 41 , 42 , 43 , 44 , 45 , 46 , 47 , 48 , 49 , 50 , 51 , 52 , 53 As digital technology and internet use among older adults, including URPs, continue to grow, online assessment approaches show promise as a feasible, valid approach. 17 , 18 , 54 , 55 , 56 , 57 , 58 , 59 , 60 However, lack of access to technology remains a concern for making these assessments available to those with lower socioeconomic status. Further, because 30% of participants expressed that their confidence using a computer “depends on the task,” it will be important to explore the contribution of computer confidence to e‐instrument performance in future analyses. The current sample includes 73% CU individuals and 20% with MCI. The current study focuses on the potential of the e‐instruments to detect/screen for early stages of cognitive impairment. It will be important to address whether the e‐instruments are feasible and valid in a sample with a broader range of cognitive impairment in future studies, and to identify the level of cognitive impairment at which participants are unable to reliably complete e‐instruments.

Finally, in our study, we did not counterbalance the order of e‐instrument administration (in clinic vs. at home), due to current clinical protocols at the sites. Therefore, we could not address the degree to which the initial, supervised administration influenced the subsequent, unsupervised e‐instrument performance. Further, although we have included clear instructions for participants to complete the eCDR and eFCI without any assistance, it is possible that the study partner or someone else may have helped the participant during unsupervised visits.

The goal of this article is to describe the methods used to develop the eCDR and eFCI, and to present evidence for feasibility of their remote administration in a cohort of older adults. Ongoing analyses are now validating the eCDR and eFCI as novel measures to detect and monitor cognitive and functional decline. Future analyses will: (1) compare performance on e‐instruments to their in‐clinic counterparts, (2) estimate associations between e‐instrument performance and both baseline and longitudinal change in in‐clinic neuropsychological tests from the UDS, and (3) assess the sensitivity and specificity of the e‐instruments to detect MCI. We also plan to investigate the association between e‐instrument performance and AD biomarkers, including amyloid and tau. If validated, the eCDR and eFCI will have many high‐impact applications in the Alzheimer's disease and related dementias and public health fields. They can be used to efficiently screen participants for preclinical and prodromal AD clinical trials and observational studies, and to identify suitable candidates for further in‐clinic assessment and treatment of cognitive impairment. This approach can ultimately have practical clinical applications in health care, epidemiology, and public health settings, to identify individuals at risk for AD and cognitive decline.
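
As an illustration of planned analysis (3), sensitivity and specificity of a binary e‐instrument screen against a reference clinical diagnosis can be computed from the counts of a 2 × 2 table. The following is a minimal sketch and not the study's analysis code: the function name `sensitivity_specificity`, the assumption that e‐instrument scores have already been dichotomized at some cutoff, and the toy data are all hypothetical.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(
    flagged: Sequence[bool], has_mci: Sequence[bool]
) -> Tuple[float, float]:
    """Return (sensitivity, specificity) of a binary screen.

    flagged: True if the (hypothetical) e-instrument cutoff flags the participant.
    has_mci: True if the reference clinical diagnosis is MCI.
    """
    if len(flagged) != len(has_mci):
        raise ValueError("inputs must have the same length")

    pairs = list(zip(flagged, has_mci))
    tp = sum(1 for f, d in pairs if f and d)          # flagged, impaired
    fn = sum(1 for f, d in pairs if not f and d)      # missed, impaired
    tn = sum(1 for f, d in pairs if not f and not d)  # not flagged, unimpaired
    fp = sum(1 for f, d in pairs if f and not d)      # flagged, unimpaired

    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one impaired and one unimpaired participant")

    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical toy data for six participants (not study results).
flagged = [True, True, False, False, True, False]
has_mci = [True, True, True, False, False, False]

sens, spec = sensitivity_specificity(flagged, has_mci)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

In practice, both quantities depend on the cutoff used to dichotomize the e‐instrument score, so a validation analysis would typically report them across a range of cutoffs.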

CONFLICTS OF INTEREST

Taylor Howell, Shilpa Gummadi, Chau Bui, Jessica Santhakumar, Kristen Knight, Carol Chambless, Adam Gerstenecker, Roy Martin, Connie Mayo, Krista L. Moulder, Maria Carroll, and Yan Li have no interests to declare. Rachel L. Nosheny received support for her work from the NIH; received funding for academic travel from the Mild Cognitive Impairment (MCI) 2020 Symposium; and served on the International Society to Advance Alzheimer's Research and Treatment (ISTAART) Subjective Cognitive Decline (SCD) Professional Interest Area (PIA) “Dyadic Patterns of Subjective Report” workgroup. R. Scott Mackin has received research support from the National Institute of Mental Health and Johnson and Johnson. Nikki H. Stricker has received research support outside the scope of this work from the NIA, Alzheimer's Association, and Biogen; a Mayo Clinic invention disclosure has been submitted for remote cognitive assessment tools outside the scope of this work (the Stricker Learning Span and the Mayo Test Drive platform). Ronald C. Petersen receives funding from the National Institute on Aging: P30 AG062677, U01 AG006786, U01 AG024904, U24 AG057437 and National Institute for Neurological Disorders and Stroke: UF1 NS125417. He consults for Roche, Inc., Merck, Inc., Biogen, Inc., Genentech, Inc., Eisai, Inc. and Nestle, Inc. He receives royalties from Oxford University Press and UpToDate. John C. Morris is funded by NIH grants P30 AG066444; P01AG003991; P01AG026276; U19 AG032438; and U19 AG024904. Neither he nor his family owns stock or has equity interest (outside of mutual funds or other externally directed accounts) in any pharmaceutical or biotechnology company. Daniel Marson and UAB Research Foundation (UABRF) have previously received royalty income and other compensation for the use of the FCI‐SF in a clinical trial. He receives support from the NIH and is also the inventor of the FCI‐SF, which is owned by the UABRF. 
He and his company receive compensation for sales and services related to the FCI‐SF. He serves as a paid consultant to UCSD in connection to the e‐VAL research project and the present paper. Richard Kennedy and Yue Zhang receive support from NIH grants R01 AG057684 and R01AG059009. Erik D. Roberson receives support for his work from the following funding sources: NIH, Bluefield Project to Cure Frontotemporal Dementia, Alzheimer's Drug Discovery Foundation. He has also received support from the Alzheimer's Association, Alector, the Weston Brain Institute, and BrightFocus Foundation. He has served on advisory boards for Biogen, AGTC, and AVROBIO, and on a DSMB for Lilly. Michael W. Weiner receives support for his work from the following funding sources: NIH: 5U19AG024904‐14; 1R01AG053798‐01A1; R01 MH098062; U24 AG057437‐01; 1U2CA060426‐01; 1R01AG058676‐01A1; and 1RF1AG059009‐01, DOD: W81XWH‐15‐2‐0070; 0W81XWH‐12‐2‐0012; W81XWH‐14‐1‐0462; W81XWH‐13‐1‐0259, PCORI: PPRN‐1501‐26817, California Dept. of Public Health: 16–10054, U. Michigan: 18‐PAF01312, Siemens: 444951–54249, Biogen: 174552, Hillblom Foundation: 2015‐A‐011‐NET, Alzheimer's Association: BHR‐16‐459161; The State of California: 18–109929. He also receives support from Johnson & Johnson, Kevin and Connie Shanahan, GE, VUmc, Australian Catholic University (HBI‐BHR), The Stroke Foundation, and the Veterans Administration. He has served on Advisory Boards for Eli Lilly, Cerecin/Accera, Roche, Alzheon, Inc., Merck Sharp & Dohme Corp., Nestle/Nestec, PCORI/PPRN, Dolby Family Ventures, National Institute on Aging (NIA), Brain Health Registry and ADNI. He serves on the Editorial Boards for Alzheimer's & Dementia, TMRI, and MRI. 
He has provided consulting and/or acted as a speaker/lecturer to Cerecin/Accera, Inc., Alzheimer's Drug Discovery Foundation (ADDF), Merck, BioClinica, Eli Lilly, Indiana University, Howard University, Nestle/Nestec, Roche, Genentech, NIH, Lynch Group GLC, Health & Wellness Partners, Bionest Partners, American Academy of Neurology (AAN), NYU, Japanese Government Alliance, National Center for Geriatrics and Gerontology (Japan), US Against Alzheimer's, Society for Nuclear Medicine and Molecular Imaging (SNMMI), The Buck Institute for Research on Aging, FUJIFILM‐Toyama Chemical (Japan), Garfield Weston, Baird Equity Capital, and T3D Therapeutics. He holds stock options with Alzheon, Inc., Alzeca, and Anven. The following entities have provided funding for academic travel: Kenes, Intl., Merck, ADCS, ATRI, Eli Lilly, The Alzheimer's Association, Tokyo University, Kyoto University, AAN, AC Immune, CHU Toulouse, St. George Hospital University, Indiana U., U. Melbourne, Australian Catholic University, Japanese Government Alliance, National Center for Geriatrics and Gerontology (Japan), US Against Alzheimer's, NYU, USC, and SNMMI.

ACKNOWLEDGMENTS

Funding: Research reported in this publication was supported by the National Institute on Aging of the National Institutes of Health (RF1AG059909). This project was also supported by the National Center for Advancing Translational Sciences, National Institutes of Health, through UCSF‐CTSI Grant Number UL1 TR001872. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The authors gratefully acknowledge the following funding sources for the Brain Health Registry: National Institute on Aging, the Alzheimer's Association, California Department of Public Health, Patient‐Centered Outcomes Research Institute, Alzheimer's Drug Discovery Foundation, The Rosenberg Alzheimer's Project, the Ray and Dagmar Dolby Family Fund, Connie and Kevin Shanahan, General Electric, GHR, and The Drew Foundation. Additionally, the authors would like to acknowledge the Veterans Affairs and the Northern California Institute for Research and Education (NCIRE). The authors further appreciate the contributions of all eVAL participants and study partners, as well as all the individuals, including faculty and staff members, who contributed to the study planning and operations of the eVAL study. The ADRCs were instrumental in recruiting, evaluating, and following the participants being reported in this paper; the grants that support those ADRCs are as follows. For the Washington University Knight ADRC: P30 AG066444, P01 AG003991, and P01 AG026276. For the UAB ADRC: P20AG068024 and UL1TR003096. For the Mayo Clinic ADRC: P30 AG062677.

Howell T, Gummadi S, Bui C, et al. Development and implementation of an electronic Clinical Dementia Rating and Financial Capacity Instrument‐Short Form. Alzheimer's Dement. 2022;14:e12331. 10.1002/dad2.12331

Rachel L. Nosheny, Scott Mackin, and Michael W. Weiner should be considered joint senior authors.

REFERENCES

- 1. Grill JD, Karlawish J. Addressing the challenges to successful recruitment and retention in Alzheimer's disease clinical trials. Alzheimers Res Ther. 2010;2:34. 10.1186/alzrt58

- 2. Cummings J, Aisen P, Barton R, et al. Re‐Engineering Alzheimer Clinical Trials: global Alzheimer's Platform Network. J Prev Alzheimers Dis. 2016;3:114–120. 10.14283/jpad.2016.93

- 3. Pew Research Center. (2021, April 7). Demographics of Mobile Device Ownership and Adoption in the United States. Pew Research Center: Internet, Science & Tech. https://www.pewinternet.org/fact‐sheet/mobile/

- 4. Wild K, Howieson D, Webbe F, Seelye A, Kaye J. Status of computerized cognitive testing in aging: a systematic review. Alzheimers Dement. 2008;4:428–437. 10.1016/j.jalz.2008.07.003

- 5. Silverstein SM, Berten S, Olson P, et al. Development and validation of a World‐Wide‐Web‐based neurocognitive assessment battery: webNeuro. Behav Res Methods. 2007;39:940–949. 10.3758/BF03192989

- 6. Kane RL, Kay GG. Computerized assessment in neuropsychology: a review of tests and test batteries. Neuropsychol Rev. 1992;3:1–117. 10.1007/BF01108787

- 7. Naglieri JA, Drasgow F, Schmit M, et al. Psychological testing on the internet: new problems, old issues. Am Psychol. 2004;59:150–162. 10.1037/0003-066X.59.3.150

- 8. Marshall GA, Amariglio RE, Sperling RA, Rentz DM. Activities of daily living: where do they fit in the diagnosis of Alzheimer's disease? Neurodegener Dis Manag. 2012;2:483–491. 10.2217/nmt.12.55

- 9. Morris JC. The Clinical Dementia Rating (CDR): current version and scoring rules. Neurology. 1993;43:2412–2414. 10.1212/wnl.43.11.2412-a

- 10. Morris JC. Clinical dementia rating: a reliable and valid diagnostic and staging measure for dementia of the Alzheimer type. Int Psychogeriatr. 1997;9(Suppl 1):177–178.

- 11. Hughes CP, Berg L, Danziger W, Coben LA, Martin RL. A new clinical scale for the staging of dementia. Br J Psychiatry. 1982;140:566–572. 10.1192/bjp.140.6.566

- 12. Gerstenecker A, Eakin A, Triebel K, et al. Age and education corrected older adult normative data for a short form version of the financial capacity instrument. Psychol Assess. 2016;28:737–749. 10.1037/pas0000159

- 13. Marson DC, Sawrie SM, Snyder S, et al. Assessing financial capacity in patients with Alzheimer disease: a conceptual model and prototype instrument. Arch Neurol. 2000;57:877–884. 10.1001/archneur.57.6.877

- 14. Marson DC, Triebel KL, Gerstenecker A, et al. Detecting Declining Financial Skills in Preclinical Alzheimer's Disease: the Financial Capacity Instrument–Short Form 2014.

- 15. Vellas B, Bateman R, Blennow K, et al. Endpoints for pre‐dementia AD trials: a report from the EU/US/CTAD task force. J Prev Alzheimers Dis. 2015;2:128–135. 10.14283/jpad.2015.55

- 16. Marson D. Investigating functional impairment in preclinical Alzheimer's disease. J Prev Alzheimers Dis. 2015;2:4–6. 10.14283/jpad.2015.44

- 17. Weiner MW, Nosheny R, Camacho M, et al. The brain health registry: an internet‐based platform for recruitment, assessment, and longitudinal monitoring of participants for neuroscience studies. Alzheimers Dement. 2018;14:1063–1076. 10.1016/j.jalz.2018.02.021

- 18. Aisen P, Touchon J, Andrieu S, et al. Registries and cohorts to accelerate early phase Alzheimer's trials. a report from the E.U./U.S. Clinical Trials in Alzheimer's Disease Task Force. J Prev Alz Dis. 2016;3(2):68–74. 10.14283/jpad.2016.97

- 19. Morris JC, McKeel DW Jr, Fulling K, Torack RM, Berg L. Validation of clinical diagnostic criteria for Alzheimer's disease. Ann Neurol. 1988;24:17–22. 10.1002/ana.410240105

- 20. Berg L, Hughes CP, Coben LA, Danziger WL, Martin RL, Knesevich J. Mild senile dementia of Alzheimer type: research diagnostic criteria, recruitment, and description of a study population. J Neurol Neurosurg Psychiatry. 1982;45:962–968.

- 21. Rubin EH, Storandt M, Miller JP, et al. Influence of age on clinical and psychometric assessment of subjects with very mild or mild dementia of the Alzheimer type. Arch Neurol. 1993;50:380–383. 10.1001/archneur.1993.00540040042011

- 22. Homma A, Meguro K, Dominguez J, Sahadevan S, Wang Y‐H, Morris JC. Clinical dementia rating workshop: the Asian experience. Alzheimer Dis Assoc Disord. 2006;20:318–321. 10.1097/01.wad.0000213869.32676.d8

- 23. Burke WJ, Miller JP, Rubin EH, et al. Reliability of the Washington University clinical dementia rating. Arch Neurol. 1988;45:31–32. 10.1001/archneur.1988.00520250037015

- 24. McCulla MM, Coats M, Van Fleet N, Duchek J, Grant E, Morris JC. Reliability of clinical nurse specialists in the staging of dementia. Arch Neurol. 1989;46:1210–1211. 10.1001/archneur.1989.00520470070029

- 25. Schafer KA, Tractenberg RE, Sano M, et al. Reliability of monitoring the clinical dementia rating in multicenter clinical trials. Alzheimer Dis Assoc Disord. 2004;18:219–222.

- 26. Fillenbaum GG, Peterson B, Morris JC. Estimating the validity of the clinical Dementia Rating Scale: the CERAD experience. Consortium to Establish a Registry for Alzheimer's Disease. Aging Milan Italy. 1996;8:379–385. 10.1007/BF03339599

- 27. Coley N, Andrieu S, Jaros M, Weiner M, Cedarbaum J, Vellas B. Suitability of the clinical dementia rating‐sum of boxes as a single primary endpoint for Alzheimer's disease trials. Alzheimers Dement J Alzheimers Assoc. 2011;7:602–610. 10.1016/j.jalz.2011.01.005

- 28. Williams MM, Roe CM, Morris JC. Stability of the Clinical Dementia Rating, 1979–2007. Arch Neurol. 2009;66:773–777. 10.1001/archneurol.2009.69

- 29. Cedarbaum JM, Jaros M, Hernandez C, et al. Rationale for use of the clinical dementia rating sum of boxes as a primary outcome measure for Alzheimer's disease clinical trials. Alzheimers Dement J Alzheimers Assoc. 2013;9:S45–S55. 10.1016/j.jalz.2011.11.002

- 30. Embretson SE, Reise SP. Item response theory. Psychology Press; 2013.

- 31. Li Y, Xiong C, Aschenbrenner A, et al. Item response theory analysis of the Clinical Dementia Rating. J Prev Alzheimers Dis. 2019;6:37–38.

- 32. Li Y, Xiong C, Aschenbrenner AJ, et al. Item response theory analysis of the Clinical Dementia Rating. Alzheimers Dement. 2021;17:534–542. 10.1002/alz.12210

- 33. Roberts RO, Geda YE, Knopman DS, et al. The Mayo Clinic Study of aging: design and sampling, participation, baseline measures and sample characteristics. Neuroepidemiology. 2008;30:58–69. 10.1159/000115751

- 34. Triebel KL, Martin R, Griffith HR, et al. Declining financial capacity in mild cognitive impairment. Neurology. 2009;73:928–934. 10.1212/WNL.0b013e3181b87971

- 35. Gerstenecker A. Item Response Analysis of the Financial Capacity Instrument Short Form. Manuscript submitted for publication; 2021.

- 36. Dreyfus DM, Roe CM, Morris JC. Autobiographical memory task in assessing Dementia. Arch Neurol. 2010;67:862–866. 10.1001/archneurol.2010.145

- 37. Besser L, Kukull W, Knopman DS, et al. Version 3 of the National Alzheimer's Coordinating Center's uniform data set. Alzheimer Dis Assoc Disord. 2018;32:351–358. 10.1097/WAD.0000000000000279

- 38. Nosheny RL, Camacho MR, Insel PS, et al. Online study partner‐reported cognitive decline in the Brain Health Registry. Alzheimers Dement Transl Res Clin Interv. 2018;4:565–574. 10.1016/j.trci.2018.09.008

- 39. Nosheny RL, Camacho MR, Jin C, et al. Validation of online functional measures in cognitively impaired older adults. Alzheimers Dement. 2020;16:1426–1437. 10.1002/alz.12138

- 40. Mackin RS, Insel PS, Truran D, et al. Unsupervised online neuropsychological test performance for individuals with mild cognitive impairment and dementia: results from the Brain Health Registry. Alzheimers Dement Diagn Assess Dis Monit. 2018;10:573–582. 10.1016/j.dadm.2018.05.005

- 41. Ashford MT, Eichenbaum J, Williams T, et al. Effects of sex, race, ethnicity, and education on online aging research participation. Alzheimers Dement Transl Res Clin Interv. 2020;6(1):e12028. 10.1002/trc2.12028

- 42. Grill JD, Kwon J, Teylan MA, et al. Retention of Alzheimer's disease research participants. Alzheimer Dis Assoc Disord. 2019;33:299–306. 10.1097/WAD.0000000000000353

- 43. Kennedy RE, Cutter GR, Wang G, Schneider LS. Challenging assumptions about African American participation in Alzheimer's Trials. Am J Geriatr Psychiatry Off J Am Assoc Geriatr Psychiatry. 2017;25:1150–1159. 10.1016/j.jagp.2017.04.013

- 44. Bardach SH, Jicha GA, Shama K, Xuan Z, Abner EL. Genetic sample provision among National Alzheimer's Coordinating Center participants. J Alzheimers Dis JAD. 2019;69:123–133. 10.3233/JAD-181159

- 45. Bilbrey AC, Humber BC, Plowey E, et al. The impact of Latino values and cultural beliefs on brain donation: results of a pilot study to develop culturally appropriate materials and methods to increase rates of brain donation in this under‐studied patient group. Clin Gerontol. 2018;41:237–248. 10.1080/07317115.2017.1373178

- 46. Moulder KL, Besser LM, Beekly D, Blennow K, Kukull W, Morris JC. Factors influencing successful lumbar puncture in Alzheimer research. Alzheimer Dis Assoc Disord. 2017;31:287–294. 10.1097/WAD.0000000000000209

- 47. Gelman CR. Learning from recruitment challenges: barriers to diagnosis, treatment, and research participation for Latinos with symptoms of Alzheimer's disease. J Gerontol Soc Work. 2010;53:94–113. 10.1080/01634370903361847

- 48. Hughes TB, Varma VR, Pettigrew C, Albert MS. African Americans and clinical research: evidence concerning barriers and facilitators to participation and recruitment recommendations. Gerontologist. 2017;57:348. 10.1093/geront/gnv118

- 49. Gilmore‐Bykovskyi AL, Jin Y, Gleason C, et al. Recruitment and retention of underrepresented populations in Alzheimer's disease research: a systematic review. Alzheimers Dement Transl Res Clin Interv. 2019;5:751–770. 10.1016/j.trci.2019.09.018

- 50. Williams MM, Scharff DP, Mathews KJ, et al. Barriers and facilitators of African American participation in Alzheimer's disease biomarker research. Alzheimer Dis Assoc Disord. 2010;24:S24–S29. 10.1097/WAD.0b013e3181f14a14

- 51. George S, Duran N, Norris K. A systematic review of barriers and facilitators to minority research participation among African Americans, Latinos, Asian Americans, and Pacific Islanders. Am J Public Health. 2014;104:e16–e31. 10.2105/AJPH.2013.301706

- 52. Tanner A, Kim S‐H, Friedman DB, Foster C, Bergeron CD. Barriers to medical research participation as perceived by clinical trial investigators: communicating with rural and African American communities. J Health Commun. 2015;20:88–96. 10.1080/10810730.2014.908985

- 53. Airhihenbuwa CO, Ford CL. Critical race theory ‐ we are all others. Ethn Dis. 2018;28:219–222. 10.18865/ed.28.S1.219

- 54. Leach V, Redwood S, Lasseter G, et al. Research participation registers can increase opportunities for patients and the public to participate in health services research. J Health Serv Res Policy. 2016;21:183–187. 10.1177/1355819615625699

- 55. Saunders KT, Langbaum JB, Holt CJ, et al. Arizona Alzheimer's Registry: strategy and outcomes of a statewide research recruitment registry. J Prev Alzheimers Dis. 2014;1:74–79. 10.14283/jpad.2014.1

- 56. Langbaum JB, Karlawish J, Roberts JS, et al. GeneMatch: a novel recruitment registry using at‐home APOE genotyping to enhance referrals to Alzheimer's prevention studies. Alzheimers Dement J Alzheimers Assoc. 2019;15:515–524. 10.1016/j.jalz.2018.12.007

- 57. Zhong K, Cummings J. Healthybrains.org: from registry to randomization. J Prev Alzheimers Dis. 2016;3:123–126. 10.14283/jpad.2016.100

- 58. Grill JD, Hoang D, Gillen DL, et al. Constructing a local potential participant registry to improve Alzheimer's disease clinical research recruitment. J Alzheimers Dis JAD. 2018;63:1055–1063. 10.3233/JAD-180069

- 59. Langbaum JB, High N, Nichols J, Kettenhoven C, Reiman EM, Tariot PN. The Alzheimer's Prevention Registry: a large internet‐based participant recruitment registry to accelerate referrals to Alzheimer's‐focused studies. J Prev Alzheimers Dis. 2020;7:242–250. 10.14283/jpad.2020.31

- 60. Johnson SC, Koscik RL, Jonaitis EM, et al. The Wisconsin Registry for Alzheimer's prevention: a review of findings and current directions. Alzheimers Dement Diagn Assess Dis Monit. 2017;10:130–142. 10.1016/j.dadm.2017.11.007