Abstract

Diseases manifest not only as internal structural and functional abnormalities, but also as facial characteristics and appearance deformities. Specific facial phenotypes are potential diagnostic markers, especially for endocrine and metabolic syndromes, genetic disorders, and facial neuromuscular diseases. Facial recognition (FR) technology has been under development for more than half a century, but research on automated identification in clinical medicine has expanded rapidly only in the last decade. Artificial-intelligence-based FR has been found to have superior performance in the diagnosis of diseases. This interdisciplinary field is promising for optimizing the screening and diagnosis process and for assisting clinical evaluation and decision-making. However, only a few instances have been translated into practical use, and there is a need for an overview that integrates current work and offers future perspectives. This review focuses on the leading edge of the technology and its applications in a variety of diseases, and discusses implications for further exploration.

Keywords: facial recognition, disease diagnosis, artificial intelligence, automated identification

1. Introduction

The face is a unique marker of bioidentity for humankind. It provides information regarding age, sex, race, consciousness, emotion, and health status. As it is conveniently accessible and cost-effective, the face is widely accepted as a reliable biometric compared with the fingerprint and iris [1,2]. Various diseases manifest not only as internal structural and functional abnormalities, but also as facial characteristics and deformities. Diseases with facial manifestations are mainly endocrine and metabolic disorders [3], genetic syndromes [4], and neuromuscular diseases [5], some of which are complex and rare. Early diagnosis and differentiation of these diseases are essential for timely therapy and a better prognosis. Identifying typical facial features is part of the traditional diagnostic path, and it depends largely on expertise and experience.

Automatic facial recognition technology appeared in the 1960s, and mature approaches have since been deployed in real-world applications such as security surveillance, identity verification, forensic science, and law enforcement [1]. Pioneering research on facial recognition in disease diagnosis dates back to the 2000s. Children’s genetic syndromes [6,7] and facial neuromuscular dysfunction [8] were the first diseases detected by knowledge-based methods. In recent years, the emergence of artificial intelligence (AI) has changed human life and has also led to breakthroughs in healthcare. Medical image analysis is the most rapidly developing domain in AI medicine, and broad progress has been made in radiology, pathology, ophthalmology, dermatology, and gastroenterology [9,10]. Facial recognition, as an essential part of automatic image analysis, also displays outstanding efficacy in the era of AI. The number of publications has grown exponentially since 2013. Facial recognition has been introduced at a larger scale to assist the diagnosis of diseases that feature facial abnormalities, and more disease varieties and racial groups have been incorporated into this dynamic branch of AI medicine.

As automated image-based diagnosis matures, facial-recognition-based diagnosis is becoming one of the most promising and novel fields in interdisciplinary medical practice. It accelerates the screening and detection of diseases, allowing comprehensive treatment to start earlier. Although facial manifestations make identification possible simply from a patient’s appearance, diseases with such distinctive facial characteristics are mostly complex and rare. In traditional diagnosis, the first challenge is to suspect these diseases at all, especially for doctors without sufficient experience. The second challenge is to select the proper examinations and interpret their results definitively. Therefore, AI facial recognition technology offers, at least in principle, the opportunity for a faster and cheaper diagnostic path with less interference from empirical error.

However, to date, the numerous advancements in algorithms and applications remain widely scattered. Few studies summarize or integrate developments and cutting-edge updates in depth or in breadth. Thus, this is the first review on facial-recognition-based applications in disease diagnosis. The aim is to discuss the evolution and classification of the technology, and to focus on the clinical implications and advantages of facial recognition in diagnosis. This will help both clinicians and computer scientists to gain an overview of current research, to promote cooperation between medicine and engineering, and to stimulate interest in further investigation.

2. The Facial Recognition System: Approaches and Algorithms

2.1. Image Capture

Static cameras, video cameras, and mobile devices installed with photography software [4] have been used for image capture. After the development of three-dimensional (3D) photography, 3D scanners have also been used to photograph and synthesize facial features [11,12]. The environment setting is mostly in a hospital or a medical organization. In some advanced designs, the application is both patient-side and physiotherapist-side, connected via the cloud network. Images could be taken by patients themselves at home and viewed by doctors through the cloud [13].

Some controllable factors in photography were found to affect the accuracy of facial recognition, including occlusion, low resolution, noise, illumination, pose variation, and expressions [14]. Defects originated from the environment, camera conditions, user’s face conditions, and user-camera positioning [15]. To acquire images with high quality, the process of image capture is standardized. In current studies, clinicians ask patients to expose the entire face and ears; to tidy up hair; to open the eyes and look straight; to close the mouth; to present a neutral and relaxed expression [16,17]. In neurological disorders, patients are asked to perform speech or motor tasks to evaluate their facial neuromuscular function [18]. The position of the camera and patients is fixed in a preset distance and angle to ensure stable illumination intensity. A light source is also required to illuminate the face uniformly. In some research, photos of both sides of the face are taken for more information [3].

2.2. Workflow of Facial Recognition Technology

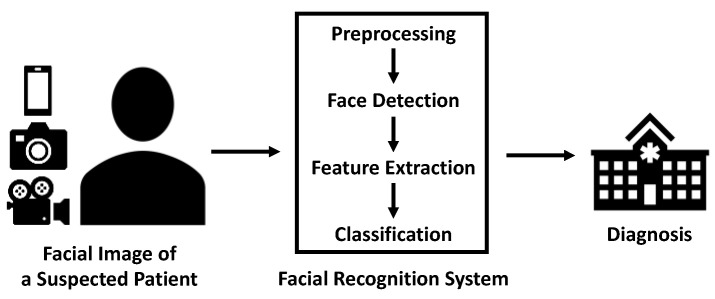

After facial images of acceptable quality are obtained, they are processed in three steps: face detection, feature extraction, and classification (Table 1) [1]. The image is first preprocessed and normalized. Face detection corresponds to localization of the human face in the image [19]. After detection, facial phenotypes are extracted based on knowledge, statistical analysis, or deep learning [1]. Then, similarity is calculated by matching these features to the database. When the similarity exceeds a certain threshold, the image is classified [20]. At the user interface, the user inputs facial images of a suspected patient into the facial recognition system. The automated classifier then outputs a categorical variable indicating whether the subject is a patient or a healthy control (Figure 1).
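The matching and thresholding step described above can be illustrated with a minimal sketch. It is not taken from any of the cited systems: the use of cosine similarity, the single reference template, the default threshold of 0.8, and the function names are all assumptions chosen for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector carries no direction; treat as dissimilar
    return dot / (norm_a * norm_b)


def classify(features, patient_template, threshold=0.8):
    """Label a face 'patient' when its similarity to a reference template
    exceeds the threshold; otherwise label it 'control'.

    The template and threshold are hypothetical; a real system would learn
    or calibrate both from labeled data.
    """
    score = cosine_similarity(features, patient_template)
    label = "patient" if score > threshold else "control"
    return label, score
```

In practice the threshold trades sensitivity against specificity, so it would normally be tuned on held-out data rather than fixed in advance.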

Table 1.

Facial Analysis Algorithms.

| Category | Algorithm |
|---|---|
| Appearance-based | Principal Component Analysis (PCA); Eigenface-Based Methods; PCA Algorithm; Kernel Principal Component Analysis (Kernel PCA); 2D-Image Principal Component Analysis (2D Image IPCA); Linear Discriminant Analysis (LDA); Discriminant Common Vectors (DCV); Independent Component Analysis (ICA); IPCA-ICA; Support Vector Machine (SVM) |
| Feature-based | Geometric Features; Local Binary Patterns (LBP); Elastic Bunch Graph Matching (EBGM); Histogram of Oriented Gradients (HoG); Elastic Bunch Graph (EBG); Hidden Markov Model (HMM) |
| Deep learning | Probabilistic-Decision-Based Neural Networks (PDBNN); Radial Basis Function (RBF); Convolutional Neural Network (CNN) |

Figure 1.

Workflow of facial recognition in disease diagnosis.

In the development stage of the system, images of both patients and controls are collected. Controls are normally age- and sex-matched individuals without the target disease. In some studies, the sample is divided into a training set and a testing set by cross-validation [17,21]. The training set is used to establish the algorithm and refine its parameters. The testing set is used to evaluate performance and diagnostic accuracy.
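As a rough illustration of how a sample can be divided into training and testing sets by cross-validation, the sketch below generates k-fold index splits. The function name, the default of five folds, and the fixed random seed are assumptions for illustration, and the cited studies may have used different schemes (for example, stratified or leave-one-out splits).

```python
import random


def k_fold_splits(n_samples, k=5, seed=0):
    """Return k (train_indices, test_indices) pairs.

    Every sample index appears in exactly one test fold, so each image is
    used for testing once and for training k - 1 times.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test))
    return splits
```

Averaging the test performance over the k folds gives a less optimistic estimate than evaluating on the data used for fitting.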

2.3. Facial Analysis Algorithms

Facial recognition algorithms are categorized into the appearance-based method, the local-feature-based method, and deep learning [14,19]. Classical and frequently used algorithms in disease diagnosis are summarized in Table 1.

2.3.1. Traditional Methods

The appearance-based method, also known as the global feature method, processes the human face as a whole. It extracts global features and matches the holistic face to the database. It requires no geometric knowledge, and it reduces the human face to only a few features or vectors. Principal Component Analysis (PCA), Linear Discriminant Analysis (LDA), Discriminant Common Vectors (DCV), and Independent Component Analysis (ICA) are common algorithms. Support Vector Machine (SVM) is often used for feature discrimination and improves the performance of PCA and ICA. The appearance-based method is sensitive to environmental interference and to the condition of the patient’s face [1,14,19].
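How PCA reduces a face to a few numbers can be written compactly in the standard eigenface formulation. The notation here is an assumption introduced for illustration: $x_i$ denotes the $i$-th of $N$ vectorized training images, and $K$ is the number of retained components.

```latex
\bar{x} = \frac{1}{N}\sum_{i=1}^{N} x_i, \qquad
C = \frac{1}{N}\sum_{i=1}^{N} (x_i - \bar{x})(x_i - \bar{x})^{\top}, \qquad
C\,u_k = \lambda_k u_k, \quad \lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_K

w = U_K^{\top}(x - \bar{x}), \qquad U_K = [\,u_1, \dots, u_K\,]
```

A new face $x$ is thus represented by the $K$-dimensional vector $w$, which can then be passed to a classifier such as an SVM.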

In contrast to appearance-based models, the feature-based method, also known as the local feature method, divides the human face into regions based on anatomical differences, such as the nose, eyes, and mouth. This method has been reported to achieve higher accuracy than the appearance-based method. Geometric Features, Local Binary Patterns (LBP), Elastic Bunch Graph Matching (EBGM), Histogram of Oriented Gradients (HoG), Elastic Bunch Graph (EBG), and Hidden Markov Model (HMM) are used for computation. However, these algorithms need prior knowledge to select proper facial features in the first step of division, which remains challenging [1,14,19].
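To make one of these local descriptors concrete, the following sketch computes the basic 3×3 Local Binary Pattern code and its histogram over a grayscale image given as a list of rows. It shows the textbook operator only; the neighbor ordering and the `>=` comparison are conventions assumed here, and the cited studies may use other LBP variants (for example, uniform or multi-scale patterns).

```python
def lbp_code(patch):
    """8-bit LBP code for a 3x3 grayscale patch (a list of three rows).

    Each neighbor contributes one bit: 1 if it is at least as bright as
    the center pixel, 0 otherwise.
    """
    center = patch[1][1]
    # Neighbors visited clockwise, starting at the top-left corner.
    offsets = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    code = 0
    for bit, (r, c) in enumerate(offsets):
        if patch[r][c] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            patch = [row[c - 1:c + 2] for row in image[r - 1:r + 2]]
            hist[lbp_code(patch)] += 1
    return hist
```

The histogram, usually computed per facial region and concatenated, serves as the feature vector passed to the classifier.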

2.3.2. Deep Learning Methods

Neural networks, machine learning, and deep learning have addressed the problem of processing multidimensional data. Probabilistic-Decision-Based Neural Networks (PDBNN) and Radial Basis Function (RBF) networks are neural-network-based algorithms with impressive performance on small datasets. The Convolutional Neural Network (CNN) has recently become prevalent in facial recognition because of its improved accuracy. Deep learning also makes it possible to mitigate emotional variance and the impact of illumination [1,14,19]. Deep learning algorithms have likewise been developed to analyze videos recording the pathological facial movements of certain diseases. The three-dimensional CNN, derived from the CNN, captures information across multiple adjacent frames [22] and is applied to detect neurological diseases with facial dysfunction. Other advanced deep learning models, such as long short-term memory (LSTM), have been combined with traditional methods for classification [23].

2.3.3. Mature Software

Mature facial recognition software has gradually been incorporated into the clinical development of diagnostic approaches. OpenFace 2.0 is an open-source facial behavior analysis tool available to users and researchers. Its framework includes facial landmark detection, head pose tracking, eye gaze estimation, and facial action unit recognition [24]. Some studies built their models directly on this software [5,25]. Such software enables clinical researchers to focus on adapting methods to disease characteristics.

3. The Facial Recognition System: Applications and Advantages

3.1. Performance in Varieties of Disease

3.1.1. Endocrine and Metabolic Diseases

Endocrine diseases are characterized by abnormal hormone levels. Patients present with metabolic disorders and typical changes in the facial bones, muscles, and soft tissues. General symptoms of these diseases at the early stage are easily confused with other metabolic syndromes. The gold-standard diagnostic process is complex, with multiple rounds of hormone testing and imaging examinations [26,27]. The high diagnostic performance of facial recognition has led to its consideration as a fast, accessible screening approach.

Acromegaly Due to increased release of growth hormone, individuals with acromegaly often have a rectangular face, enlargement of the nose and lips, prognathism, and a bulging forehead [26]. Various algorithms have been developed to detect the face of acromegaly patients. In 2006, Learned-Miller et al. [28] proposed a 3D morphable model to classify the frontal face into different categories in a semi-automated manner. Forty-nine acromegaly patients and controls were identified at an accuracy rate of 85.7%. Another study in 2013 [29] developed a method based on Gabor wavelet transformations to reduce unwanted noise. It was tested with 57 patients and 59 gender- and age-matched controls, reaching an overall correct classification rate of 81%. Gencturk et al. [30] proposed a coupled system of local binary patterns (LBP) and Manhattan classifiers, resulting in an accuracy rate of 97%. The introduction of larger datasets and machine learning methods has made facial recognition systems more effective. Kong et al. [31] constructed a dataset of 527 cases of acromegaly and 596 controls. The machine-learning-based system proved effective, with sensitivity and specificity both at 96%. Wei et al. [32] enrolled 1131 individuals with acromegaly and 12,598 normal individuals. The area under the receiver operating characteristic curve (AUC) was 0.9556 and the accuracy was 94.79%.
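Because the studies in this section report accuracy, sensitivity, specificity, and AUC, a short sketch of how these quantities are computed may help when comparing them. The function names and the coding of patients as 1 and controls as 0 are assumptions for illustration. The sketch also assumes that both classes are present; otherwise the denominators would be zero.

```python
def confusion_metrics(y_true, y_pred):
    """Sensitivity, specificity, and accuracy for binary labels (1 = patient)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return {
        "sensitivity": tp / (tp + fn),  # patients correctly flagged
        "specificity": tn / (tn + fp),  # controls correctly cleared
        "accuracy": (tp + tn) / len(y_true),
    }


def auc(y_true, scores):
    """AUC as the probability that a randomly chosen patient receives a
    higher score than a randomly chosen control (ties count as half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

Note that accuracy depends on the ratio of patients to controls in the sample, whereas sensitivity, specificity, and AUC do not, which matters when comparing studies with very different case-control ratios.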

Cushing’s Syndrome Under prolonged exposure to cortisol, Cushing’s syndrome is facially characterized by “moon face” with plethora and acne [33]. Kosilek et al. [34] included 60 female Caucasian Cushing’s syndrome patients in their first study. The automatic face classification system achieved an overall accuracy rate of 91.7%. To eliminate the impact of obesity sequelae, they further designed a body mass index (BMI)-matched sample study [3]. They enrolled controls suspected but ultimately negative for Cushing’s syndrome, and matched the two groups by age, gender, and BMI. The sample size was also increased to 180 patients. The classification accuracy decreased to 61.1% in females and 66.7% in males [33]. Semi-automatic facial nodes analysis was used in their research. In 2020, Wei et al. [32] constructed a facial image database of 814 endocrinally verified instances of Cushing’s syndrome. The deep learning model generated AUC of 0.9647 and accuracy of 95.93%.

3.1.2. Genetic and Chromosome Abnormalities

Genetic and chromosome abnormalities present as systemic syndromes and complications. Hereditary changes either directly dysregulate fetal facial development or indirectly affect the facial phenotype through an abnormal systemic environment. Standard diagnostic methods are genome sequencing and chromosome karyotyping to confirm the abnormalities of genetic material. This process requires early clinical suspicion, access to detection and analysis techniques, and patients who can bear the cost of genetic tests. Facial recognition makes screening possible in clinical practice even when these conditions are not met. Early diagnosis benefits patients by allowing an early start of therapy and lifelong support.

Down Syndrome (DS) DS is the most common chromosome aberration, caused by trisomy 21 and occurring in 1/800 newborns worldwide [35]. Facial features of DS patients include a flattened face, upward slanting palpebral fissures, epicanthus, small ears, and a protruding tongue [36]. In early studies [37,38,39], non-deep-learning methods were proposed for small numbers of samples. In 2014, Zhao et al. [36] designed a hierarchical constrained local model using ICA. This model located the anatomical facial landmarks accurately, achieving an accuracy of 96.7% in classifying 50 pediatric DS patients and 80 controls. Qin et al. [40] trained deep convolutional neural networks (DCNN) on a larger-scale database of 10,562 subjects. This model reached 95.87% in accuracy and 97.40% in specificity, demonstrating the potential of AI-based facial recognition for fast detection of genetic syndromes. These systems were trained and tested in Caucasians. In another three studies, Thai neonates [41], Thai children [42], and Congolese children [43] were enrolled as Asian and African subjects. DS is globally prevalent, and facial features vary naturally across races; the considerable AI performance in these studies therefore further indicates its practicability across populations.

Turner Syndrome (TS) TS is a complete or partial loss of one X chromosome that occurs in women, with a prevalence of approximately 1/2000. Patients display phenotypic changes in multiple systems. The typical TS face is characterized by epicanthus, deformity of the external ear, micrognathia, a high-arched palate, and multiple pigmented nevi [44]. Song et al. [16] first constructed a 68-feature-point model based on endocrinology observations. This computer-aided automatic classification system had an accuracy rate of 84.6%. Pan et al. [45] later developed a facial diagnostic system based on DCNN, with a larger dataset of 207 TS patients and 1074 female controls. As most of these patients had been photographed several times during follow-up, the study designed different photo selection scenarios to eliminate bias. This system achieved high accuracy, with an AUC over 0.95. They further conducted a small-sample-size prospective study of two TS patients and 35 controls. The system reached 96.7% in sensitivity and 97.0% in specificity. Photographs in both studies were collected from Chinese subjects.

Genetic Disorders Both DS and TS are chromosomal diseases. Genetic disorders not involving chromosomal abnormalities also have facial characteristics. Efforts have been made to establish facial image analysis in recent years. Facial dysmorphology novel analysis (FDNA) is an automatic face classification framework based on Bayesian networks and LBP [46]. DeepGestalt [4] is a facial image analysis framework using computer vision and deep-learning algorithms incorporated in a smartphone app Face2Gene (FDNA Inc., Boston MA, USA). DCNN was also proposed for congenital adrenal hyperplasia [21]. Method, sample size, and efficacy for eight genetic diseases tested by these novel approaches are summarized in Table 2. All except one study generated accuracy or AUC over 90%, indicating high efficacy of the facial recognition system in diagnosing genetic diseases.

Table 2.

Studies of facial-recognition-based diagnosis system for genetic disorders.

| Study | Disease | Method | Sample Size | Efficacy |
|---|---|---|---|---|
| Basel-Vanagaite et al. [46] | Cornelia de Lange syndrome | FDNA | 31 cases in training set, 17 cases in testing set | Accuracy = 87% (training), accuracy = 94% (testing) |
| Latorre-Pellicer et al. [47] | Cornelia de Lange syndrome | Face2Gene | 49 cases | Accuracy = 83.7% |
| Hadj-Rabia et al. [48] | X-linked hypohidrotic ectodermal dysplasia | Face2Gene | 136 cases, 717 controls | AUC ≥ 0.98 |
| Liehr et al. [49] | Emanuel syndrome (ES); Pallister-Killian syndrome (PKS) | Face2Gene | 59 ES, 70 PKS, 973 controls, 973 others | AUC ≥ 0.98 |
| Amudhavalli et al. [50] | Aymé-Gripp syndrome | Face2Gene | 13 cases, 20 controls, 20 DS | AUC = 0.994 (controls), AUC = 0.994 (DS) |
| Pode-Shakked et al. [51] | Mucolipidosis type IV | Face2Gene | 26 cases, 98 controls, 99 others | AUC = 0.822 (controls), AUC = 0.885 (others) |
| Wang et al. [52] | Kabuki syndrome | Face2Gene | 14 cases | Accuracy = 93% |
| AbdAlmageed et al. [21] | Congenital adrenal hyperplasia | DNN | 102 cases, 144 controls | AUC = 92% |
| Porras et al. [53] | Noonan syndrome (NS); Williams-Beuren syndrome (WBS) | LBP, SVM | 286 NS, 161 WBS | Accuracy = 85.68% |

Abbreviations and explanations: cases, patients; controls, patients without genetic disorders; others, patients with other genetic disorders; FDNA, facial dysmorphology novel analysis; Face2Gene (FDNA Inc., Boston, MA, USA); AUC, area under the curve; DS, Down syndrome; DNN, Deep Neural Network; LBP, Local Binary Pattern; SVM, Support Vector Machines.

3.1.3. Neuromuscular Diseases

Facial phenotypes are essential manifestations in neuromuscular diseases. AI has been extensively applied in the diagnosis of neurological diseases. Though facial recognition is still limited compared to other applications [54], it has demonstrated impressive prospective benefits in a few diseases.

Facial Paralysis Facial paralysis is weakness of the facial muscles or dysfunction of facial movement due to neuropathy. Its diagnosis depends on the doctor’s subjective rating of facial features and muscle movement [55]. Traditional methods are based on extracting the asymmetry between the two sides of the face. Video clips of 75 patients and 10 controls were evaluated by a method combining a Gabor filter, LBP, and AdaBoost classification, yielding an accuracy rate of 60.7% [56]. To increase the objectivity of assessment, deep learning methods have been proposed. Guo et al. [57] proposed an end-to-end solution that directly analyzes facial images via a fine-tuned DCNN. They collected four facial expression images from each of 105 patients and 75 controls and obtained a classification accuracy rate of 91.25%. 3DPalsyNet [58] is a facial palsy grading and motion recognition framework using a fully 3D CNN, showing accuracy of 82% in facial palsy and 86% in mouth motions, respectively.
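The idea of extracting asymmetry between the two sides of the face can be sketched with paired landmarks. This is an illustrative simplification rather than the method of any cited study: it assumes landmarks are already detected as (x, y) pixel coordinates, that left and right landmarks are paired, and that the facial midline is a vertical line at a known x position. The function name is hypothetical.

```python
import math


def asymmetry_score(landmark_pairs, midline_x):
    """Mean distance between each left landmark and the mirror image of its
    right counterpart, reflected across the vertical line x = midline_x.

    A perfectly symmetric face scores 0; larger values mean more asymmetry.
    """
    total = 0.0
    for (lx, ly), (rx, ry) in landmark_pairs:
        mirrored_x = 2 * midline_x - rx  # reflect the right point to the left
        total += math.hypot(lx - mirrored_x, ly - ry)
    return total / len(landmark_pairs)
```

For example, comparing the score at rest with the score during a smile would highlight a mouth corner that fails to rise on one side. A practical system would also normalize by face size and correct for head tilt.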

Neurodegenerative Diseases Damage or death of neurons in the central nervous system causes neurodegenerative diseases. Parkinson’s disease (PD) results from insufficient secretion of dopamine, and patients often present with a masked face. Alzheimer’s disease (AD) is the most common form of dementia. Patients progressively lose memory and thinking skills due to brain atrophy and brain cell death. Amyotrophic lateral sclerosis (ALS) is a rare but severe loss of the motor neurons that control voluntary muscles. Automated analysis of static images or movement videos has been developed to recognize these diseases [59]. Table 3 summarizes the data type, sample size, method, and efficacy of studies of PD, AD, and ALS. As a novel non-invasive diagnostic approach, facial recognition shows considerable efficacy.

Table 3.

Facial-recognition-based diagnosis system for neurodegenerative diseases.

| Study | Disease | Data | Sample Size | Method | Efficacy |
|---|---|---|---|---|---|
| Bandini et al. [60] | PD | Video | 17 PD, 17 HC | Intraface tracking algorithm, Euclidean distance, SVM | Difference (p < 0.05) between PD and HC |
| Rajnoha et al. [61] | PD | Image | 50 PD, 50 HC | Random Forests, XGBoost | Accuracy = 67.33% |
| Jin et al. [23] | PD | Video | 33 PD, 31 HC | Face++ [62], tremor extraction, LSTM neural network | Precision = 86% |
| Ali et al. [5] | PD | Video | 61 PD, 543 HC | OpenFace 2.0 [24], SVM | Accuracy = 95.6% |
| Hou et al. [63] | PD | Video | 70 PD, 70 HC | HOG, LBP, SVM, k-NN, Random Forests | F1 = 88% |
| Nam et al. [25] | AD | Video | 17 AD, 17 HC | OpenFace 2.0 [24], extract movement coordinates to calculate Spearman’s correlation coefficient | Difference (p < 0.05) between AD and HC |
| Umeda et al. [64] | AD | Image | 121 AD, 117 HC | Xception, SENet50, ResNet50, VGG16, and simple CNN with SGD and Adam optimizer | Xception with Adam showed the best accuracy = 94% |
| Bandini et al. [18] | ALS | Video | 11 ALS, 11 HC | AAM, CLM, ERT, SDM, FAN | Accuracy = 88.9% |

Abbreviations and explanations: PD, Parkinson’s disease; AD, Alzheimer’s disease; ALS, amyotrophic lateral sclerosis; HC, healthy control; SVM, Support Vector Machines; LSTM, Long Short-Term Memory; HOG, Histogram of Oriented Gradient; LBP, Local Binary Pattern; k-NN, k-Nearest Neighbors; AAM, active appearance models; CLM, constrained local model; ERT, ensemble of regression trees; SDM, supervised descent method; FAN, face alignment network.

Real-World and Public Datasets Efforts have also been made to construct datasets from real-world scenes and make them available for sharing. Recently, Zhuang et al. [65] built an “in-the-wild” static image dataset of facial weakness from YouTube, Google Image, and other public repositories. They combined landmarks and intensity features to detect pathological facial asymmetry, which yielded considerable accuracy. Bandini et al. [18] established an accessible gesture video dataset of oro-facial motion impairment, including post-stroke patients, amyotrophic lateral sclerosis (ALS) patients, and healthy controls. They incorporated clinical data, manual annotation, and DCNN models. More real-world and public data are needed to advance accurate approaches and improve the automatic identification of neurological disorders from videos and images.

3.1.4. Other Types of Disease

Acute and Severe Illness In recent years, facial recognition has been applied to acute illnesses for faster screening and patient triage in the emergency room. Forte et al. [66] established a CNN model to distinguish between healthy and simulated acutely ill individuals, yielding a sensitivity of 100% and a specificity of 42.11%. For severe illnesses, Lin et al. [67] proposed a deep learning model to identify coronary artery disease. In a multicenter cross-sectional study of 5796 patients, this method achieved a sensitivity of 0.80, a specificity of 0.54, and an AUC of 0.730. Zhuang et al. [65] have also built a model to identify the asymmetric face of stroke. These studies demonstrate the potential of automated facial video- or image-based assessment systems to detect acute and severe diseases.

Syndromes without Genetic Abnormality Fetal alcohol syndrome (FAS) results from excess alcohol ingestion during maternal pregnancy. Stereo-photogrammetry was used to measure facial features of 44 FAS subjects in an automated manner [7]. FDNA was further applied in fetal alcohol spectrum disorders, and showed considerable performance compared to manual examination [68]. Chronic fatigue syndrome (CFS) is a complicated disorder characterized by extreme fatigue with an unclarified underlying mechanism. Chen et al. [69] proposed a method based on Gabor wavelet filtering and AdaBoost classification for CFS facial recognition diagnosis. They enrolled 294 CFS volunteers and 297 healthy volunteers in their study and the system reached an average accuracy rate of 89.04% on the training set and 88.32% on the testing set.

3.2. Clinical Applications

Automated facial analysis has been incorporated in software, e.g., Face++ [62]. These tools are used for identity recognition, security surveillance, etc. in daily life. Clinical researchers have been working to develop similar technology so that a patient’s facial picture can be analyzed on a mobile phone and a diagnosis returned in seconds. Face2Gene (FDNA Inc., Boston, MA, USA) is one of the most widely used smartphone apps for facial recognition diagnosis [4]. Trained on 17,106 images of 10,953 subjects covering 216 different genetic syndromes, this app has been employed in several studies and has proven effective. Auto-eFACE is a facial assessment tool based on the deep learning software Emotrics (http://www.sircharlesbell.com/, accessed on 18 May 2022) for unilateral facial paralysis grading and evaluation [70].

Relevant programs have also attracted attention from the National Human Genome Research Institute (NHGRI), part of the National Institutes of Health (NIH). Research with NHGRI has been used to develop software to identify 22q11.2 deletion syndrome, also known as DiGeorge syndrome [71]. This system was trained by images of patients from diverse populations, including Caucasians, Africans, Asians, and Latin Americans. Sensitivity and specificity were both greater than 96.6% for all ethnic groups.

3.3. Advantages over Traditional Methods

Facial-recognition-based diagnosis has shown the potential to resolve problems in traditional approaches. Diseases amenable to facial diagnosis are mostly complicated, with varied but atypical clinical manifestations. Because a definite diagnosis is difficult to make from general inspection, these diseases are often diagnosed only after a long delay. For instance, acromegaly and Cushing’s syndrome have diagnostic delays of 6 years and 2 to 6 years, respectively [3]. Since these diseases are relatively rare, clinicians, especially primary care doctors, require experience and knowledge to recognize them. Differential diagnosis between diseases with similar symptoms is another challenge. Moreover, traditional approaches are complex and consume both time and money. Compared with traditional approaches in these respects, facial-recognition-based diagnosis is more accurate, more informative, and saves both time and cost.

3.3.1. Accurate and Objective

Accurate Studies have found that the automatic system achieves higher accuracy than clinicians in identifying the same facial images. In early diagnosis of acromegaly, the computer program achieved an accuracy of 86%, compared with 26% for physicians [72]. Another acromegaly-detecting system showed higher performance than medical experts and general internists, particularly in patients with moderate features [73]. Further studies recruited medical workers of different levels. Chen et al. [74] asked physicians and medical students to complete a web-based test including the same photographs of Turner syndrome used in computer testing. The automatic facial classification system showed higher sensitivity and specificity (p < 0.001) than the participants. Wei et al. [32] compared their AI-based face classifier of acromegaly and Cushing’s syndrome with medical students, residents, and attending doctors, respectively. The system was more accurate than the human doctors.

Objective In addition to clinicians, researchers have also compared facial analysis with several diagnostic approaches to explore its practicality. Pioneering studies in the 2000s compared automated facial recognition systems with manual measurement, demonstrating the objectivity of AI-based diagnosis. In the conventional diagnosis of FAS, facial anthropometric measurements made with a hand-held ruler by trained dysmorphologists were thought to be the best approach. A stereo-photogrammetric method was developed to measure the facial dysmorphology of FAS children and showed consistency with experts [7]. Another study developed an automated method to quantify facial motion. Its analysis was consistent with that of manual tracking in facial nerve disorders [8]. For diseases relying on subjective assessment with scales, AI classifiers have also proved accurate. Studies have been conducted in facial paralysis [57] and AD [64]. Facial-recognition-based diagnostics produced predictions similar to the House–Brackmann facial nerve grading system and the mini-mental state examination (MMSE) in these diseases, respectively.

3.3.2. Comprehensive and Informative

Comprehensive This novel screening technology not only differentiates patients from healthy individuals, but also provides a comprehensive range of diagnostic possibilities. For each input image, the mobile app Face2Gene (FDNA Inc., Boston, MA, USA) outputs a ranked list of 30 possible genetic disorders, with an accuracy rate of 91% for the top 10 diseases [4]. Mishima et al. [75] validated Face2Gene (FDNA Inc., Boston, MA, USA) in Japanese populations and confirmed its efficacy. Porras et al. [76] have also developed a deep phenotyping screening technology to support early risk stratification at the point of care in global populations.
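A top-10 accuracy figure of this kind counts a prediction as correct when the true diagnosis appears anywhere among the first ten entries of the ranked list. The sketch below shows the computation under that reading; the function name and the data layout (one ranked list of labels per image) are assumptions for illustration and do not reflect the app’s actual interface.

```python
def top_k_accuracy(ranked_predictions, true_labels, k=10):
    """Fraction of cases whose true label appears among the first k
    entries of the corresponding ranked prediction list."""
    hits = sum(
        1
        for ranked, truth in zip(ranked_predictions, true_labels)
        if truth in ranked[:k]
    )
    return hits / len(true_labels)
```

Top-k accuracy is always at least as high as top-1 accuracy, so the two should not be compared directly across studies.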

Informative. Beyond the classification result itself, automatic facial analysis generates additional clinical information. With the emergence of 3D technology, facial phenotypes can be quantified more accurately and can act as predictors. In acromegaly, facial features have been related to disease severity, progression, and recurrence after surgery. Meng et al. [12] identified a few key variables for disease prediction and gender variation. Guo et al. [77] revealed that insulin-like growth factor 1 (IGF-1) levels were linearly correlated with certain features. In another study of 668 patients, Fan et al. [78] showed that facial features provided a better estimate of the response to transsphenoidal surgery (TSS) than the traditional invasiveness grading based on pituitary imaging (Knosp grade).

3.3.3. Improvement of Healthcare System

The convergence of medicine and AI not only benefits patients and clinicians but also improves the healthcare system [79]. Although direct evidence in the field of facial analysis is insufficient, studies have shown that AI improves workflow and reduces medical errors. The most prominent advantage of AI-based facial recognition diagnosis is that it breaks down the knowledge barrier. Because the training dataset far exceeds the number of patients that even an experienced doctor will see, it gives doctors at any level access to a precise diagnosis. Moreover, as the system can be easily installed on a mobile device and processes images in seconds, it takes much less time than the traditional diagnostic pathway.

4. Future Outlook

4.1. Expansion of Database Volume

The sample size affects the performance of machine learning models. It is generally acknowledged that systems trained on larger datasets yield better estimates with less bias. Moreover, facial features naturally vary by age, sex, and race. Studies of demographic influence on face recognition algorithms suggest that female, Black, and younger individuals are more difficult to recognize [80]. For diseases with malformations or dysfunction of the trunk and limbs, simultaneous identification of these features would be essential. Langevin et al. [81] have established PARK for PD diagnosis and subsequent monitoring. This interactive framework asks the patient to complete six motor exercises and one audio task in front of a webcam. Considering that the previous atlas featured only individuals of northern European ancestry, the NHGRI launched the Atlas of Human Malformations in Diverse Populations (https://research.nhgri.nih.gov/atlas/, accessed on 16 May 2022) in September 2016. This atlas aims to collect photos of the physical traits of different inherited diseases around the world. Therefore, beyond genetic syndromes, comprehensive datasets of facial and body images covering different groups and various diseases are expected to refine current systems.

4.2. Factors Affecting Diagnostic Accuracy

Factors known to affect the accuracy of automated facial analysis include aging, pose variation, partial occlusion, illumination, and facial expression [14]. Technology is continuously being optimized to reduce the impact of these factors. Clinical scientists have also investigated potential confounding factors that influence the performance of facial-recognition-based diagnosis systems. Pantel et al. [82] selected genetic disorders with overlapping phenotypic spectra and demonstrated that a growing cohort increased the true positive rate, whereas ethnicity and sex had no significant effect. Furthermore, only the tip of the iceberg has been discovered regarding the patterns underlying disease severity and phenotypes. To quantify facial classification in disease diagnosis, Wu et al. [83] performed a systematic meta-analysis of 12,557 participants in seven single diseases. They found that the complexity of facial features, defined as Facial Recognition Intensity (FRI), contributed to diagnostic accuracy (p = 0.021). Increasing the training size and applying deep learning models will help to improve the accuracy for low-FRI diseases. The Object’s Complexity Theory (OCT) was proposed as a hypothesis: the complexity of the targeted objects determines the complexity of AI processing and plays a vital role in the performance of the model. More supportive evidence is needed to reveal the laws behind this.

4.3. Integration of Novel Technology

In recent years, 3D photography has begun to capture facial depth information and reduce shape distortion. Corresponding machine learning algorithms have been developed to discriminate between genetic syndromes [11]. Three-dimensional CNNs have also been proposed to extract and process motion features [22]. This novel technology broadens the range of identification and interpretation, especially for neuromuscular diseases. Moreover, real-time detection could be added to the system to optimize its clinical use. Facial liveness detection based on eye blinking discriminates a live human face from a photograph [84]. Detailed analysis of individual facial organs is also promising. Liu et al. [85] have developed a clinically aided diagnosis system to analyze eye images of patients with ocular myasthenia gravis. Combining such local feature analyses might yield better performance for whole-face identification.

In addition to facial images, human posture and movement can be identified with deep learning methods for kinematic analysis [86]. Emotion and expression are other essential dimensions of facial recognition technology. Emotional neural networks can detect expressions and improve AI learning and generalization [87]. Automatic pain detection technology has been developed for non-communicative patients; it assists caregivers and provides more objective assessments [88]. An automatic emotion annotation system based on 2.5D facial landmarking has also been proposed to help people who have difficulty interpreting facial expressions. It could improve the social lives of individuals with AD, low vision, or autism spectrum disorder [89]. Another model has been developed to evaluate facial reanimation after facial surgery [90].

Meanwhile, a systematic review has examined how algorithms can be refined when the sample size is limited [91]. Through an analysis of machine learning prediction of autism, this study found that nested cross-validation and train/test split approaches produce robust and unbiased estimates regardless of sample size. State-of-the-art machine learning will continue to expand and improve applications in this field.
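Nested cross-validation, mentioned above, separates model selection from performance estimation: an inner loop chooses hyperparameters using the training portion only, and an outer loop scores the chosen model on data that played no part in that choice. The following Python sketch illustrates the structure with standard-library code only. The one-dimensional k-nearest-neighbour classifier, the synthetic Gaussian data, and all function names are assumptions made for illustration and are not the models or data analysed in [91].

```python
import random
from statistics import mean


def k_fold_indices(n, n_folds, rng):
    """Shuffle indices 0..n-1 and deal them into n_folds disjoint folds."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[i::n_folds] for i in range(n_folds)]


def split(data, held_out):
    """Return (train, test) lists given the indices held out for testing."""
    held = set(held_out)
    train = [s for i, s in enumerate(data) if i not in held]
    test = [data[i] for i in held_out]
    return train, test


def knn_predict(train, x, k):
    """Majority vote among the k training samples closest to x (1-D feature)."""
    nearest = sorted(train, key=lambda s: abs(s[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if 2 * votes > len(nearest) else 0


def accuracy(train, test, k):
    return mean(1.0 if knn_predict(train, x, k) == y else 0.0 for x, y in test)


def cv_score(data, folds, k):
    """Mean accuracy of k-NN over the given folds of data."""
    return mean(accuracy(*split(data, held_out), k) for held_out in folds)


def nested_cv(data, k_grid=(1, 3, 5), outer_folds=5, inner_folds=3, seed=0):
    rng = random.Random(seed)
    outer_scores = []
    for held_out in k_fold_indices(len(data), outer_folds, rng):
        train, test = split(data, held_out)
        # Inner loop: pick k using the training portion only.
        inner = k_fold_indices(len(train), inner_folds, rng)
        best_k = max(k_grid, key=lambda k: cv_score(train, inner, k))
        # Outer loop: score the selected model on the untouched outer fold.
        outer_scores.append(accuracy(train, test, best_k))
    return mean(outer_scores)


# Synthetic two-class data: (feature, label) pairs drawn from two Gaussians.
data_rng = random.Random(42)
data = [(data_rng.gauss(0.0, 1.0), 0) for _ in range(30)]
data += [(data_rng.gauss(2.0, 1.0), 1) for _ in range(30)]
estimate = nested_cv(data)
```

Because the outer test fold never influences the choice of k, the averaged outer score is not inflated by hyperparameter tuning, which is the property that matters when samples are scarce.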

4.4. Applications beyond Diagnosis

Besides diagnosis and evaluation, facial analysis also has potential for therapy and medical education. Ridha et al. [13] have designed a 3D-printed headgear for physical therapy of facial paralysis. A Google Machine Learning kit supplied the input to a paralysis prediction neural network (PPNN), which predicts the percentage of paralysis. Coupled with the headgear, this AI system suggests routine therapy time for physiotherapists. Further tests in real patients and further technological integration are still to come. AI-based image analysis has also been applied in pathology and radiology education [92,93]. For instance, pathologists generate synthesized images for training. This is also useful for quality control and for eliminating perceptual and cognitive bias. In the field of facial recognition, phenotypes could be rendered as three-dimensional models to educate medical students and help them understand disease occurrence and development.

4.5. From Research to Products

The list of AI image interpretation algorithms approved by the Food and Drug Administration (FDA) is expanding rapidly [79]. Although studies and articles have proliferated over the last decade, only a few have been translated into diagnostic aids. Apps or tools that can be easily installed on mobile devices, such as Face2Gene (FDNA Inc., Boston, MA, USA), are the most practical. To ensure the safety and effectiveness of AI and machine-learning-based software as a medical device, the FDA has issued a regulatory framework and an action plan [94]. In the future, the focus will be not only on productizing the algorithms but also on eliminating bias and validating performance in real-world clinical settings.

4.6. Privacy and Security

The human face is now regarded as sensitive personal information. When asked to have their faces photographed, more patients worry about information leakage than before [95]. The NHGRI asked patients to sign a consent form before contributing facial images to its atlas website. The ethical implications of facial recognition technology require more regulations and laws. Security, privacy, autonomy, and democratic accountability are the most frequently considered aspects [96]. A comparative analysis of regulatory frameworks in the United States, Europe, and the United Kingdom suggested that facial recognition technology would improve if data protection impact assessments and human rights impact assessments were taken into account [97]. An integrative review by Roundtree et al. [98] synthesized academic studies from the past ten years on ethical issues in facial recognition. In clinical practice, facial images of patients should be handled as seriously as the medical record. However, external or internal attacks on deep learning frameworks could pose a safety threat [99]. Another security concern is the vulnerability to adversarial perturbations, especially in deep neural network (DNN) systems. Defenses against adversarial attacks can be divided into gradient masking, robust optimization, and adversarial example detection [100]. In facial recognition, although existing models have been evaluated and tested, few optimized models have been proposed [101,102]. More effort should be made to secure facial classifiers in clinical practice. All in all, more regulations and consensus are needed to raise ethical awareness when using this novel technique in disease diagnosis.
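To make the notion of an adversarial perturbation concrete, the following Python sketch applies the fast gradient sign method, one well-known attack that the text above does not name specifically, to a small logistic classifier. Each input value is shifted by at most epsilon in the direction that increases the classification loss. The weights, the three-element input, and the function names are hypothetical; a real facial classifier would be a DNN operating on image pixels.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability of the positive class under a logistic model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(value):
    """Return -1, 0, or 1 according to the sign of value."""
    return (value > 0) - (value < 0)


def fgsm_perturb(weights, bias, x, y, epsilon):
    """Shift each feature by epsilon in the direction that raises the loss.

    For cross-entropy loss on a logistic model, the gradient of the loss
    with respect to the input is (p - y) * w.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - y) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical model parameters and a correctly classified positive sample.
weights = [2.0, -1.0, 0.5]
bias = -0.2
x_clean = [0.6, 0.1, 0.4]
epsilon = 0.4

x_adv = fgsm_perturb(weights, bias, x_clean, y=1, epsilon=epsilon)
p_clean = predict_proba(weights, bias, x_clean)  # above 0.5: positive class
p_adv = predict_proba(weights, bias, x_adv)      # below 0.5: prediction flips
```

Of the defenses listed above, robust optimization would retrain the model on such perturbed inputs, whereas adversarial example detection would try to flag them before classification.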

5. Conclusions

Facial recognition technology has been developing for decades, but the intersection of facial analysis and disease diagnosis is still an emerging field. In clinical settings, the image capture process is standardized to ensure image quality. Traditional computing methods (appearance-based algorithms, feature-based algorithms) and deep learning have been developed for facial detection. Facial recognition systems have shown considerable performance in various types of disease, including endocrine and metabolic diseases, genetic and chromosomal abnormalities, neuromuscular diseases, and acute and severe illnesses. A few software programs have been applied in clinical practice. Compared with the routine diagnostic approach, facial-recognition-based detection is more accurate, objective, comprehensive, and informative. It also makes it possible to improve the efficiency of the healthcare system. Looking ahead, the volume of facial databases could be expanded, and factors affecting diagnostic accuracy remain to be investigated. Cutting-edge technology could be incorporated into the system to improve its performance. More mature products developed from research are forthcoming. Applications beyond diagnosis are under exploration. Privacy and security are essential ethical problems that need more consideration and regulation. Clinicians and scientists are making continuous efforts to better serve medicine and healthcare.

Author Contributions

Conceptualization, S.C., J.Q. and D.W.; resources, J.Q., D.W. and H.D.; writing—original draft preparation, J.Q.; writing—review and editing, S.C.; supervision, H.Z.; project administration, H.P. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Kaur P., Krishan K., Sharma S.K., Kanchan T. Facial-Recognition Algorithms: A Literature Review. Med. Sci. Law. 2020;60:131–139. doi: 10.1177/0025802419893168.

- 2. Fontaine X., Achanta R., Süsstrunk S. Face Recognition in Real-World Images; Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); New Orleans, LA, USA. 5–9 March 2017; pp. 1482–1486.

- 3. Kosilek R.P., Frohner R., Würtz R.P., Berr C.M., Schopohl J., Reincke M., Schneider H.J. Diagnostic Use of Facial Image Analysis Software in Endocrine and Genetic Disorders: Review, Current Results and Future Perspectives. Eur. J. Endocrinol. 2015;173:M39–M44. doi: 10.1530/EJE-15-0429.

- 4. Gurovich Y., Hanani Y., Bar O., Nadav G., Fleischer N., Gelbman D., Basel-Salmon L., Krawitz P.M., Kamphausen S.B., Zenker M., et al. Identifying Facial Phenotypes of Genetic Disorders Using Deep Learning. Nat. Med. 2019;25:60–64. doi: 10.1038/s41591-018-0279-0.

- 5. Ali M.R., Myers T., Wagner E., Ratnu H., Dorsey E.R., Hoque E. Facial Expressions Can Detect Parkinson’s Disease: Preliminary Evidence from Videos Collected Online. NPJ Digit. Med. 2021;4:1–4. doi: 10.1038/s41746-021-00502-8. [Retracted]

- 6. Loos H.S., Wieczorek D., Würtz R.P., von der Malsburg C., Horsthemke B. Computer-Based Recognition of Dysmorphic Faces. Eur. J. Hum. Genet. 2003;11:555–560. doi: 10.1038/sj.ejhg.5200997.

- 7. Meintjes E.M., Douglas T.S., Martinez F., Vaughan C.L., Adams L.P., Stekhoven A., Viljoen D. A Stereo-Photogrammetric Method to Measure the Facial Dysmorphology of Children in the Diagnosis of Fetal Alcohol Syndrome. Med. Eng. Phys. 2002;24:683–689. doi: 10.1016/S1350-4533(02)00114-5.

- 8. Wachtman G.S., Cohn J.F., VanSwearingen J.M., Manders E.K. Automated Tracking of Facial Features in Patients with Facial Neuromuscular Dysfunction. Plast. Reconstr. Surg. 2001;107:1124–1133. doi: 10.1097/00006534-200104150-00005.

- 9. Rajpurkar P., Chen E., Banerjee O., Topol E.J. AI in Health and Medicine. Nat. Med. 2022;28:31–38. doi: 10.1038/s41591-021-01614-0.

- 10. Yu K.-H., Beam A.L., Kohane I.S. Artificial Intelligence in Healthcare. Nat. Biomed. Eng. 2018;2:719–731. doi: 10.1038/s41551-018-0305-z.

- 11. Hallgrímsson B., Aponte J.D., Katz D.C., Bannister J.J., Riccardi S.L., Mahasuwan N., McInnes B.L., Ferrara T.M., Lipman D.M., Neves A.B., et al. Automated Syndrome Diagnosis by Three-Dimensional Facial Imaging. Genet. Med. 2020;22:1682–1693. doi: 10.1038/s41436-020-0845-y.

- 12. Meng T., Guo X., Lian W., Deng K., Gao L., Wang Z., Huang J., Wang X., Long X., Xing B. Identifying Facial Features and Predicting Patients of Acromegaly Using Three-Dimensional Imaging Techniques and Machine Learning. Front. Endocrinol. 2020;11:492. doi: 10.3389/fendo.2020.00492.

- 13. Ridha A.M., Shehieb W., Yacoub P., Al-Balawneh K., Arshad K. Smart Prediction System for Facial Paralysis; Proceedings of the 2020 7th International Conference on Electrical and Electronics Engineering (ICEEE); Antalya, Turkey. 14–16 April 2020; pp. 321–327.

- 14. Anwarul S., Dahiya S. A Comprehensive Review on Face Recognition Methods and Factors Affecting Facial Recognition Accuracy; Proceedings of the ICRIC 2019, Recent Innovations in Computing; Jammu, India. 8–9 March 2019; Berlin/Heidelberg, Germany: Springer; 2020. pp. 495–514.

- 15. Gao X., Li S.Z., Liu R., Zhang P. Standardization of Face Image Sample Quality; Proceedings of the International Conference on Biometrics; Seoul, Korea. 27–29 August 2007; pp. 242–251.

- 16. Song W., Lei Y., Chen S., Pan Z., Yang J.-J., Pan H., Du X., Cai W., Wang Q. Multiple Facial Image Features-Based Recognition for the Automatic Diagnosis of Turner Syndrome. Comput. Ind. 2018;100:85–95. doi: 10.1016/j.compind.2018.03.021.

- 17. Hong D., Zheng Y.-Y., Xin Y., Sun L., Yang H., Lin M.-Y., Liu C., Li B.-N., Zhang Z.-W., Zhuang J., et al. Genetic Syndromes Screening by Facial Recognition Technology: VGG-16 Screening Model Construction and Evaluation. Orphanet. J. Rare Dis. 2021;16:344. doi: 10.1186/s13023-021-01979-y.

- 18. Bandini A., Rezaei S., Guarin D.L., Kulkarni M., Lim D., Boulos M.I., Zinman L., Yunusova Y., Taati B. A New Dataset for Facial Motion Analysis in Individuals With Neurological Disorders. IEEE J. Biomed. Health Inform. 2021;25:1111–1119. doi: 10.1109/JBHI.2020.3019242.

- 19. Jayaraman U., Gupta P., Gupta S., Arora G., Tiwari K. Recent Development in Face Recognition. Neurocomputing. 2020;408:231–245. doi: 10.1016/j.neucom.2019.08.110.

- 20. Banerjee D., Yu K. Robotic Arm-Based Face Recognition Software Test Automation. IEEE Access. 2018;6:37858–37868. doi: 10.1109/ACCESS.2018.2854754.

- 21. AbdAlmageed W., Mirzaalian H., Guo X., Randolph L.M., Tanawattanacharoen V.K., Geffner M.E., Ross H.M., Kim M.S. Assessment of Facial Morphologic Features in Patients With Congenital Adrenal Hyperplasia Using Deep Learning. JAMA Netw. Open. 2020;3:e2022199. doi: 10.1001/jamanetworkopen.2020.22199.

- 22. Ji S., Xu W., Yang M., Yu K. 3D Convolutional Neural Networks for Human Action Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2013;35:221–231. doi: 10.1109/TPAMI.2012.59.

- 23. Jin B., Qu Y., Zhang L., Gao Z. Diagnosing Parkinson Disease Through Facial Expression Recognition: Video Analysis. J. Med. Internet Res. 2020;22:e18697. doi: 10.2196/18697.

- 24. Baltrušaitis T., Zadeh A., Lim Y., Morency L.-P. OpenFace 2.0: Facial Behavior Analysis Toolkit; Proceedings of the 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018); Xi’an, China. 15–19 May 2018.

- 25. Nam U., Lee K., Ko H., Lee J.-Y., Lee E.C. Analyzing Facial and Eye Movements to Screen for Alzheimer’s Disease. Sensors. 2020;20:5349. doi: 10.3390/s20185349.

- 26. Colao A., Grasso L.F.S., Giustina A., Melmed S., Chanson P., Pereira A.M., Pivonello R. Acromegaly. Nat. Rev. Dis Primers. 2019;5:20. doi: 10.1038/s41572-019-0071-6.

- 27. Loriaux D.L. Diagnosis and Differential Diagnosis of Cushing’s Syndrome. N. Engl. J. Med. 2017;376:1451–1459. doi: 10.1056/NEJMra1505550.

- 28. Learned-Miller E., Lu Q., Paisley A., Trainer P., Blanz V., Dedden K., Miller R. Detecting Acromegaly: Screening for Disease with a Morphable Model. Med. Image Comput. Comput. Assist. Interv. 2006;9:495–503. doi: 10.1007/11866763_61.

- 29. Frohner R., Würtz R.P., Kosilek R., Schneider H.J. Optimierung Der Gesichtsklassifikation Bei Der Erkennung von Akromegalie. J. Klin. Endokrinol. Stoffw. 2013;6:20–24.

- 30. Gencturk B., Nabiyev V.V., Ustubioglu A., Ketenci S. Automated Pre-Diagnosis of Acromegaly Disease Using Local Binary Patterns and Its Variants; Proceedings of the 36th International Conference on Telecommunications and Signal Processing (TSP); Rome, Italy. 2–4 July 2013; pp. 817–821.

- 31. Kong X., Gong S., Su L., Howard N., Kong Y. Automatic Detection of Acromegaly From Facial Photographs Using Machine Learning Methods. EBioMedicine. 2018;27:94–102. doi: 10.1016/j.ebiom.2017.12.015.

- 32. Wei R., Jiang C., Gao J., Xu P., Zhang D., Sun Z., Liu X., Deng K., Bao X., Sun G., et al. Deep-Learning Approach to Automatic Identification of Facial Anomalies in Endocrine Disorders. Neuroendocrinology. 2020;110:328–337. doi: 10.1159/000502211.

- 33. Popp K.H., Kosilek R.P., Frohner R., Stalla G.K., Athanasoulia-Kaspar A., Berr C., Zopp S., Reincke M., Witt M., Würtz R.P., et al. Computer Vision Technology in the Differential Diagnosis of Cushing’s Syndrome. Exp. Clin. Endocrinol. Diabetes. 2019;127:685–690. doi: 10.1055/a-0887-4233.

- 34. Kosilek R.P., Schopohl J., Grunke M., Reincke M., Dimopoulou C., Stalla G.K., Würtz R.P., Lammert A., Günther M., Schneider H.J. Automatic Face Classification of Cushing’s Syndrome in Women—A Novel Screening Approach. Exp. Clin. Endocrinol. Diabetes. 2013;121:561–564. doi: 10.1055/s-0033-1349124.

- 35. Bull M.J. Down Syndrome. N. Engl. J. Med. 2020;382:2344–2352. doi: 10.1056/NEJMra1706537.

- 36. Zhao Q., Okada K., Rosenbaum K., Kehoe L., Zand D.J., Sze R., Summar M., Linguraru M.G. Digital Facial Dysmorphology for Genetic Screening: Hierarchical Constrained Local Model Using ICA. Med. Image Anal. 2014;18:699–710. doi: 10.1016/j.media.2014.04.002.

- 37. Burçin K., Vasif N.V. Down Syndrome Recognition Using Local Binary Patterns and Statistical Evaluation of the System. Expert Syst. Appl. 2011;38:8690–8695. doi: 10.1016/j.eswa.2011.01.076.

- 38. Saraydemir S., Taşpınar N., Eroğul O., Kayserili H., Dinçkan N. Down Syndrome Diagnosis Based on Gabor Wavelet Transform. J. Med. Syst. 2012;36:3205–3213. doi: 10.1007/s10916-011-9811-1.

- 39. Zhao Q., Rosenbaum K., Okada K., Zand D.J., Sze R., Summar M., Linguraru M.G. Automated Down Syndrome Detection Using Facial Photographs. Annu Int Conf IEEE Eng. Med. Biol Soc. 2013;2013:3670–3673. doi: 10.1109/EMBC.2013.6610339.

- 40. Qin B., Liang L., Wu J., Quan Q., Wang Z., Li D. Automatic Identification of Down Syndrome Using Facial Images with Deep Convolutional Neural Network. Diagnostics. 2020;10:487. doi: 10.3390/diagnostics10070487.

- 41. Srisraluang W., Rojnueangnit K. Facial Recognition Accuracy in Photographs of Thai Neonates with Down Syndrome among Physicians and the Face2Gene Application. Am. J. Med. Genet. A. 2021;185:3701–3705. doi: 10.1002/ajmg.a.62432.

- 42. Vorravanpreecha N., Lertboonnum T., Rodjanadit R., Sriplienchan P., Rojnueangnit K. Studying Down Syndrome Recognition Probabilities in Thai Children with De-Identified Computer-Aided Facial Analysis. Am. J. Med. Genet. A. 2018;176:1935–1940. doi: 10.1002/ajmg.a.40483.

- 43. Porras A.R., Bramble M.S., Mosema Be Amoti K., Spencer D., Dakande C., Manya H., Vashist N., Likuba E., Ebwel J.M., Musasa C., et al. Facial Analysis Technology for the Detection of Down Syndrome in the Democratic Republic of the Congo. Eur. J. Med. Genet. 2021;64:104267. doi: 10.1016/j.ejmg.2021.104267.

- 44. Gravholt C.H., Viuff M.H., Brun S., Stochholm K., Andersen N.H. Turner Syndrome: Mechanisms and Management. Nat. Rev. Endocrinol. 2019;15:601–614. doi: 10.1038/s41574-019-0224-4.

- 45. Pan Z., Shen Z., Zhu H., Bao Y., Liang S., Wang S., Li X., Niu L., Dong X., Shang X., et al. Clinical Application of an Automatic Facial Recognition System Based on Deep Learning for Diagnosis of Turner Syndrome. Endocrine. 2021;72:865–873. doi: 10.1007/s12020-020-02539-3.

- 46. Basel-Vanagaite L., Wolf L., Orin M., Larizza L., Gervasini C., Krantz I., Deardoff M. Recognition of the Cornelia de Lange Syndrome Phenotype with Facial Dysmorphology Novel Analysis. Clin. Genet. 2016;89:557–563. doi: 10.1111/cge.12716.

- 47. Latorre-Pellicer A., Ascaso Á., Trujillano L., Gil-Salvador M., Arnedo M., Lucia-Campos C., Antoñanzas-Pérez R., Marcos-Alcalde I., Parenti I., Bueno-Lozano G., et al. Evaluating Face2Gene as a Tool to Identify Cornelia de Lange Syndrome by Facial Phenotypes. Int. J. Mol. Sci. 2020;21:1042. doi: 10.3390/ijms21031042.

- 48. Hadj-Rabia S., Schneider H., Navarro E., Klein O., Kirby N., Huttner K., Wolf L., Orin M., Wohlfart S., Bodemer C., et al. Automatic Recognition of the XLHED Phenotype from Facial Images. Am. J. Med. Genet. A. 2017;173:2408–2414. doi: 10.1002/ajmg.a.38343.

- 49. Liehr T., Acquarola N., Pyle K., St-Pierre S., Rinholm M., Bar O., Wilhelm K., Schreyer I. Next Generation Phenotyping in Emanuel and Pallister-Killian Syndrome Using Computer-Aided Facial Dysmorphology Analysis of 2D Photos. Clin. Genet. 2018;93:378–381. doi: 10.1111/cge.13087.

- 50. Amudhavalli S.M., Hanson R., Angle B., Bontempo K., Gripp K.W. Further Delineation of Aymé-Gripp Syndrome and Use of Automated Facial Analysis Tool. Am. J. Med. Genet. A. 2018;176:1648–1656. doi: 10.1002/ajmg.a.38832.

- 51. Pode-Shakked B., Finezilber Y., Levi Y., Liber S., Fleischer N., Greenbaum L., Raas-Rothschild A. Shared Facial Phenotype of Patients with Mucolipidosis Type IV: A Clinical Observation Reaffirmed by next Generation Phenotyping. Eur. J. Med. Genet. 2020;63:103927. doi: 10.1016/j.ejmg.2020.103927.

- 52. Wang Y., Li N., Su Z., Xu Y., Liu S., Chen Y., Li X., Shen Y., Hung C., Wang J., et al. The Phenotypic Spectrum of Kabuki Syndrome in Patients of Chinese Descent: A Case Series. Am. J. Med. Genet. A. 2020;182:640–651. doi: 10.1002/ajmg.a.61467.

- 53. Porras A.R., Summar M., Linguraru M.G. Objective Differential Diagnosis of Noonan and Williams-Beuren Syndromes in Diverse Populations Using Quantitative Facial Phenotyping. Mol. Genet. Genom. Med. 2021;9:e1636. doi: 10.1002/mgg3.1636.

- 54. Gautam R., Sharma M. Prevalence and Diagnosis of Neurological Disorders Using Different Deep Learning Techniques: A Meta-Analysis. J. Med. Syst. 2020;44:49. doi: 10.1007/s10916-019-1519-7.

- 55. Wang B., Wu X. Application of Artificial Intelligence in Recognition and Evaluation of Facial Paralysis. Chin. J. Med. Instrum. 2022;46:57–62. doi: 10.3969/j.issn.1671-7104.2022.01.012.

- 56. Ngo T.H., Seo M., Matsushiro N., Chen Y.-W. Quantitative Analysis of Facial Paralysis Based on Filters of Concentric Modulation; Proceedings of the 2015 12th International Conference on Fuzzy Systems and Knowledge Discovery (FSKD); Zhangjiajie, China. 15–17 August 2015; pp. 1758–1763.

- 57. Guo Z., Shen M., Duan L., Zhou Y., Xiang J., Ding H., Chen S., Deussen O., Dan G. Deep Assessment Process: Objective Assessment Process for Unilateral Peripheral Facial Paralysis via Deep Convolutional Neural Network; Proceedings of the 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017); Melbourne, Australia. 18–21 April 2017; pp. 135–138.

- 58. Storey G., Jiang R., Keogh S., Bouridane A., Li C.-T. 3DPalsyNet: A Facial Palsy Grading and Motion Recognition Framework Using Fully 3D Convolutional Neural Networks. arXiv. 2019. arXiv:1905.13607. doi: 10.1109/ACCESS.2019.2937285.

- 59. Tăuţan A.-M., Ionescu B., Santarnecchi E. Artificial Intelligence in Neurodegenerative Diseases: A Review of Available Tools with a Focus on Machine Learning Techniques. Artif. Intell. Med. 2021;117:102081. doi: 10.1016/j.artmed.2021.102081.

- 60. Bandini A., Orlandi S., Escalante H.J., Giovannelli F., Cincotta M., Reyes-Garcia C.A., Vanni P., Zaccara G., Manfredi C. Analysis of Facial Expressions in Parkinson’s Disease through Video-Based Automatic Methods. J. Neurosci. Methods. 2017;281:7–20. doi: 10.1016/j.jneumeth.2017.02.006.

- 61. Rajnoha M., Mekyska J., Burget R., Eliasova I., Kostalova M., Rektorova I. Towards Identification of Hypomimia in Parkinson’s Disease Based on Face Recognition Methods; Proceedings of the 2018 10th International Congress on Ultra Modern Telecommunications and Control Systems and Workshops (ICUMT); Moscow, Russia. 5–9 November 2018; pp. 1–4.

- 62. Jung S.-G., An J., Kwak H., Salminen J., Jansen B. Inferring Social Media Users’ Demographics from Profile Pictures: A Face++ Analysis on Twitter Users; Proceedings of the International Conference on Electronic Business (ICEB); Dubai, United Arab Emirates. 4–8 December 2017.

- 63. Hou X., Zhang Y., Wang Y., Wang X., Zhao J., Zhu X., Su J. A Markerless 2D Video, Facial Feature Recognition-Based, Artificial Intelligence Model to Assist With Screening for Parkinson Disease: Development and Usability Study. J. Med. Internet Res. 2021;23:e29554. doi: 10.2196/29554.

- 64. Umeda-Kameyama Y., Kameyama M., Tanaka T., Son B.-K., Kojima T., Fukasawa M., Iizuka T., Ogawa S., Iijima K., Akishita M. Screening of Alzheimer’s Disease by Facial Complexion Using Artificial Intelligence. Aging. 2021;13:1765–1772. doi: 10.18632/aging.202545.

- 65. Zhuang Y., McDonald M., Uribe O., Yin X., Parikh D., Southerland A.M., Rohde G.K. Facial Weakness Analysis and Quantification of Static Images. IEEE J. Biomed. Health Inform. 2020;24:2260–2267. doi: 10.1109/JBHI.2020.2964520.

- 66. Forte C., Voinea A., Chichirau M., Yeshmagambetova G., Albrecht L.M., Erfurt C., Freundt L.A., Carmo L.O.E., Henning R.H., van der Horst I.C.C., et al. Deep Learning for Identification of Acute Illness and Facial Cues of Illness. Front. Med. 2021;8:661309. doi: 10.3389/fmed.2021.661309.

- 67. Lin S., Li Z., Fu B., Chen S., Li X., Wang Y., Wang X., Lv B., Xu B., Song X., et al. Feasibility of Using Deep Learning to Detect Coronary Artery Disease Based on Facial Photo. Eur. Heart J. 2020;41:4400–4411. doi: 10.1093/eurheartj/ehaa640.

- 68. Valentine M., Bihm D.C.J., Wolf L., Hoyme H.E., May P.A., Buckley D., Kalberg W., Abdul-Rahman O.A. Computer-Aided Recognition of Facial Attributes for Fetal Alcohol Spectrum Disorders. Pediatrics. 2017;140:e20162028. doi: 10.1542/peds.2016-2028.

- 69. Chen Y., Liu W., Zhang L., Yan M., Zeng Y. Hybrid Facial Image Feature Extraction and Recognition for Non-Invasive Chronic Fatigue Syndrome Diagnosis. Comput. Biol. Med. 2015;64:30–39. doi: 10.1016/j.compbiomed.2015.06.005.

- 70. Miller M.Q., Hadlock T.A., Fortier E., Guarin D.L. The Auto-eFACE: Machine Learning-Enhanced Program Yields Automated Facial Palsy Assessment Tool. Plast. Reconstr. Surg. 2021;147:467–474. doi: 10.1097/PRS.0000000000007572.

- 71. Kruszka P., Addissie Y.A., McGinn D.E., Porras A.R., Biggs E., Share M., Crowley T.B., Chung B.H.Y., Mok G.T.K., Mak C.C.Y., et al. 22q11.2 Deletion Syndrome in Diverse Populations. Am. J. Med. Genet. A. 2017;173:879–888. doi: 10.1002/ajmg.a.38199.

- 72. Miller R.E., Learned-Miller E.G., Trainer P., Paisley A., Blanz V. Early Diagnosis of Acromegaly: Computers vs Clinicians. Clin. Endocrinol. 2011;75:226–231. doi: 10.1111/j.1365-2265.2011.04020.x.

- 73. Schneider H.J., Kosilek R.P., Günther M., Roemmler J., Stalla G.K., Sievers C., Reincke M., Schopohl J., Würtz R.P. A Novel Approach to the Detection of Acromegaly: Accuracy of Diagnosis by Automatic Face Classification. J. Clin. Endocrinol. Metab. 2011;96:2074–2080. doi: 10.1210/jc.2011-0237.

- 74. Chen S., Pan Z.-X., Zhu H.-J., Wang Q., Yang J.-J., Lei Y., Li J.-Q., Pan H. Development of a Computer-Aided Tool for the Pattern Recognition of Facial Features in Diagnosing Turner Syndrome: Comparison of Diagnostic Accuracy with Clinical Workers. Sci. Rep. 2018;8:9317. doi: 10.1038/s41598-018-27586-9.

- 75. Mishima H., Suzuki H., Doi M., Miyazaki M., Watanabe S., Matsumoto T., Morifuji K., Moriuchi H., Yoshiura K.-I., Kondoh T., et al. Evaluation of Face2Gene Using Facial Images of Patients with Congenital Dysmorphic Syndromes Recruited in Japan. J. Hum. Genet. 2019;64:789–794. doi: 10.1038/s10038-019-0619-z.

- 76. Porras A.R., Rosenbaum K., Tor-Diez C., Summar M., Linguraru M.G. Development and Evaluation of a Machine Learning-Based Point-of-Care Screening Tool for Genetic Syndromes in Children: A Multinational Retrospective Study. Lancet Digit. Health. 2021;3:e635–e643. doi: 10.1016/S2589-7500(21)00137-0.

- 77. Guo X., Meng T., Huang J., Wang X., Lian W., Deng K., Gao L., Wang Z., Xing B., Long X. 3D Facial Analysis in Acromegaly: Gender-Specific Features and Clinical Correlations. Front. Endocrinol. 2018;9:722. doi: 10.3389/fendo.2018.00722.

- 78. Fan Y., Li Y., Li Y., Feng S., Bao X., Feng M., Wang R. Development and Assessment of Machine Learning Algorithms for Predicting Remission after Transsphenoidal Surgery among Patients with Acromegaly. Endocrine. 2020;67:412–422. doi: 10.1007/s12020-019-02121-6.

- 79. Topol E.J. High-Performance Medicine: The Convergence of Human and Artificial Intelligence. Nat. Med. 2019;25:44–56. doi: 10.1038/s41591-018-0300-7.

- 80.Klare B.F., Burge M.J., Klontz J.C., Vorder Bruegge R.W., Jain A.K. Face Recognition Performance: Role of Demographic Information. IEEE Trans. Inf. Forensics Secur. 2012;7:1789–1801. doi: 10.1109/TIFS.2012.2214212. [DOI] [Google Scholar]

- 81.Langevin R., Ali M.R., Sen T., Snyder C., Myers T., Dorsey E.R., Hoque M.E. The PARK Framework for Automated Analysis of Parkinson’s Disease Characteristics. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019;3:1–22. doi: 10.1145/3328925. [DOI] [Google Scholar]

- 82.Pantel J.T., Zhao M., Mensah M.A., Hajjir N., Hsieh T.-C., Hanani Y., Fleischer N., Kamphans T., Mundlos S., Gurovich Y., et al. Advances in Computer-Assisted Syndrome Recognition and Differentiation in a Set of Metabolic Disorders. bioRxiv. 2017:219394. doi: 10.1007/s10545-018-0174-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Wu D., Chen S., Zhang Y., Zhang H., Wang Q., Li J., Fu Y., Wang S., Yang H., Du H., et al. Facial Recognition Intensity in Disease Diagnosis Using Automatic Facial Recognition. J. Pers. Med. 2021;11:1172. doi: 10.3390/jpm11111172. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Face Live Detection Method Based on Physiological Motion Analysis. Tsinghua Sci. Technol. 2009;14:685–690. doi: 10.1016/S1007-0214(09)70135-X. [DOI] [Google Scholar]

- 85. Guanjie Liu Y.W. A Computer-Aided System for Ocular Myasthenia Gravis Diagnosis. Tsinghua Sci. Technol. 2021;26:749–758. doi: 10.26599/TST.2021.9010025.

- 86. Arac A. Machine Learning for 3D Kinematic Analysis of Movements in Neurorehabilitation. Curr. Neurol. Neurosci. Rep. 2020;20:29. doi: 10.1007/s11910-020-01049-z.

- 87. Khashman A. Application of an Emotional Neural Network to Facial Recognition. Neural Comput. Appl. 2009;18:309–320. doi: 10.1007/s00521-008-0212-4.

- 88. Hassan T., Seus D., Wollenberg J., Weitz K., Kunz M., Lautenbacher S., Garbas J.-U., Schmid U. Automatic Detection of Pain from Facial Expressions: A Survey. IEEE Trans. Pattern. Anal. Mach. Intell. 2021;43:1815–1831. doi: 10.1109/TPAMI.2019.2958341.

- 89. Zhao X., Zou J., Li H., Dellandrea E., Kakadiaris I.A., Chen L. Automatic 2.5-D Facial Landmarking and Emotion Annotation for Social Interaction Assistance. IEEE Trans. Cybern. 2016;46:2042–2055. doi: 10.1109/TCYB.2015.2461131.

- 90. Boonipat T., Asaad M., Lin J., Glass G.E., Mardini S., Stotland M. Using Artificial Intelligence to Measure Facial Expression Following Facial Reanimation Surgery. Plast. Reconstr. Surg. 2020;146:1147–1150. doi: 10.1097/PRS.0000000000007251.

- 91. Vabalas A., Gowen E., Poliakoff E., Casson A.J. Machine Learning Algorithm Validation with a Limited Sample Size. PLoS ONE. 2019;14:e0224365. doi: 10.1371/journal.pone.0224365.

- 92. Niazi M.K.K., Parwani A.V., Gurcan M.N. Digital Pathology and Artificial Intelligence. Lancet Oncol. 2019;20:e253–e261. doi: 10.1016/S1470-2045(19)30154-8.

- 93. Hosny A., Parmar C., Quackenbush J., Schwartz L.H., Aerts H.J.W.L. Artificial Intelligence in Radiology. Nat. Rev. Cancer. 2018;18:500–510. doi: 10.1038/s41568-018-0016-5.

- 94. U.S. Food and Drug Administration. Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan. 2021. Available online: https://www.fda.gov (accessed on 20 May 2022).

- 95. Katsanis S.H., Claes P., Doerr M., Cook-Deegan R., Tenenbaum J.D., Evans B.J., Lee M.K., Anderton J., Weinberg S.M., Wagner J.K. A Survey of U.S. Public Perspectives on Facial Recognition Technology and Facial Imaging Data Practices in Health and Research Contexts. PLoS ONE. 2021;16:e0257923. doi: 10.1371/journal.pone.0257923.

- 96. Smith M., Miller S. The Ethical Application of Biometric Facial Recognition Technology. AI Soc. 2022;37:167–175. doi: 10.1007/s00146-021-01199-9.

- 97. Almeida D., Shmarko K., Lomas E. The Ethics of Facial Recognition Technologies, Surveillance, and Accountability in an Age of Artificial Intelligence: A Comparative Analysis of US, EU, and UK Regulatory Frameworks. AI Ethics. 2021:1–11. doi: 10.1007/s43681-021-00077-w.

- 98. Roundtree A.K. Ethics and Facial Recognition Technology: An Integrative Review; Proceedings of the 2021 3rd World Symp. Artif. Intell. (WSAI); Guangzhou, China. 18–20 June 2021; pp. 10–19.

- 99. Hongsong Chen Y.Z. Security Issues and Defensive Approaches in Deep Learning Frameworks. Tsinghua Sci. Technol. 2021;26:894–905. doi: 10.26599/TST.2020.9010050.

- 100. Silva S.H., Najafirad P. Opportunities and Challenges in Deep Learning Adversarial Robustness: A Survey. arXiv. 2020. arXiv:2007.00753.

- 101. Yang X., Yang D., Dong Y., Yu W., Su H., Zhu J. Delving into the Adversarial Robustness on Face Recognition. arXiv. 2020. arXiv:2007.04118.

- 102. Rozsa A., Günther M., Rudd E.M., Boult T.E. Facial Attributes: Accuracy and Adversarial Robustness. Pattern Recognit. Lett. 2019;124:100–108. doi: 10.1016/j.patrec.2017.10.024.

Data Availability Statement

Not applicable.