Abstract

Optical fiber bending, deformation or shape sensing are important measurement technologies and have been widely deployed in various applications including healthcare, structural monitoring and robotics. However, existing optical fiber bending sensors require complex sensor structures and interrogation systems. Here, inspired by the recent renewed interest in information-rich multimode optical fibers, we show that the multimode fiber (MMF) output speckles contain the three-dimensional (3D) geometric shape information of the MMF itself. We demonstrate proof-of-concept 3D multi-point deformation sensing via a single multimode fiber by using k-nearest neighbor (KNN) machine learning algorithm, and achieve a classification accuracy close to 100%. Our results show that a single MMF based deformation sensor is excellent in terms of system simplicity, resolution and sensitivity, and can be a promising candidate in deformation monitoring or shape-sensing applications.

Subject terms: Electrical and electronic engineering, Optical sensors

Introduction

Complex deformation, bending or shape sensing has received growing attention, due to their booming applications in interactive sensing devices1–4 and soft robots5–8. Optical fiber sensors provide an efficient and competitive sensing solution, owning to their unique properties, including light-weight, low cost, freedom from electromagnetic interference, and resistance to harsh environment and chemicals9–11. Among optical fiber bending sensors, fiber Bragg gratings (FBGs)12–14, long period fiber gratings (LPFGs)15,16 and specially designed optical fibers17,18 are popular choices owing to their robustness. For example, the fiber sensor presented in Ref.19 exhibits high sensitivity (up to 1270 pm/°) and can be used to monitor small bending angles (2°). In Ref.20, a two-dimensional bending sensor based on long period gratings (LPGs) fabricated in three-core fibers were demonstrated. Furthermore, FBGs were fabricated in a six-core fiber to achieve a spatial resolution down to 40 µm21. While these existing fiber-optic sensors have demonstrated good sensitivity and resolution, the application of these previous optical-fiber-shape sensors are limited by the complex fabrication process and expensive detection systems. The fabrication of high-accuracy FBGs and good-performance multicore fibers is complicated. The signal processing and interrogation systems involved are bulky and high cost, which especially limits their application in wearable and portable devices. Furthermore, the spatial resolution of fiber-grating-based bending sensors is restricted by the minimal length of individual gratings that need to be incorporated to measure each deformation point. Therefore, a deformation sensor consisting of only standard and low-cost fibers would be ideal.

Multimode fibers (MMFs) are high-capacity information channels22–24. The MMF output speckles contain rich information and have been used in applications such as spectrometers25, optical trapping26 and imaging27. Very recently, utilizing MMF output speckles, researchers employed deep learning in input-pattern reconstructions, including MINIST characters28,29, natural scenes30 and even randomly distributed spatial information31. Here, we hypothesize that the MMF speckles possess the information that relates to the complex deformation along the fiber itself. In this work. We attempt to use machine learning to interpret the complex deformation of the fiber with output speckles. In fact, speckle-based MMF bending classification was recently reported32–34 on one-point low dimensional to classify such MMF bending. In Ref.34, a spring needle was used to press the fiber to achieve bending at one point, where the freedom of deformation is relatively low as both ends of the fiber were fixed. In this work, we propose the following to experimentally study speckle-based 3D multi-point deformation classification through a single MMF.

Experiment and results

One-point deformation experiment

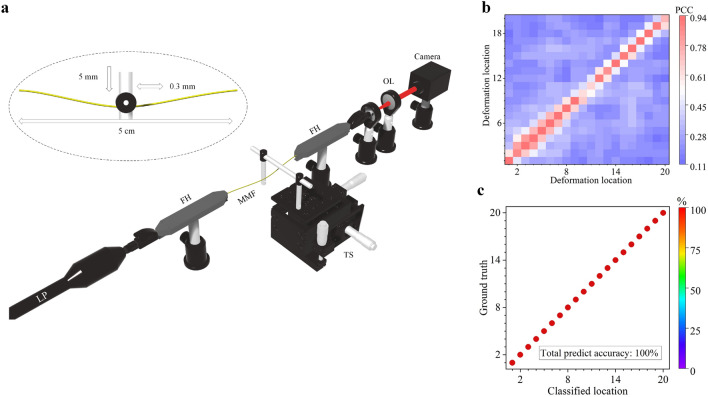

Firstly, we studied the bending-location classification along the MMF axial direction using MMF speckles. As shown in Fig. 1 (see “Methods” section for details), we used a 11 cm long MMF and fixed a 5 cm section of this MMF between two translation stages as the deformation region. A 6 mm diameter steel rod controlled by a translation stage was used to induce one-point deformation within the 5 cm bending region. The fiber was carefully aligned to avoid twisting during deformation and was also stabilized so that the rest of the MMF (except for the bending point) remained undisturbed. The deformation was then formed by moving the steel rod perpendicular to the fiber axial direction for 5 mm to bend the fiber at one point. The camera was used to acquire an output speckle image 5 s after the deformation was applied, leaving enough time for the MMF and the speckle to stabilize. The steel rod was then translated 0.3 mm along the axial direction of the fixed MMF and the speckle acquisition was repeated. Having acquired output speckles at 20 different fiber bending points, we started a new loop by moving the steel rod backwards along the MMF axial direction for another repeating measurements. This process was repeated until we collected 40 speckles for each deformation location of the MMF. Finally, within 2 h we collected in total 800 speckle images, which were subsequently used for the deformation classification.

Figure 1.

(a) Experiment setup for one-point deformation classification. (b) Average PCC for one-point deformation (the average PCC between the speckles collected in the first measurement and subsequently collected speckles in the rest 39 measurements). (c) Normalized confusion matrices for KNN classification results with one-point deformation experiment. KNN is trained by using only 50% dataset. LP Laser pointer, FH fiber holder, TS translation stage, OL objective lens.

Figure 1b shows the average Pearson correlation coefficients (PCC) between the speckles collected in the first measurement and speckles collected in the rest measurements. It can be seen in Fig. 1b that, although the average PCC between neighboring bending locations are high between 0.6 and 0.7. The correlation coefficient decreased to 0.2 after a few times of deformation. Bending locations can still be accurately classified because the PCC between speckles collected at the same bending location is higher. The classification results using k-nearest neighbors algorithm (KNN) are shown in Fig. 1c. The classification accuracy is defined as the percentage of the correctly predicted speckles within test datasets (50% of the total datasets). Here we did not randomly select the training and testing datasets from the 800 datasets. Instead, to show the stability of the MMF-speckle based bending sensor, speckles recorded during the first half of the repeating speckle collections (i.e. the first 20 repeating measurements in the first hour of the 2-h measurements) were used as the training data and the rest were used as the test data. In Fig. 1c, the numbers of the diagonal elements show the percentage of correctly classified speckles, where a 100% classification accuracy is achieved for each bending location.

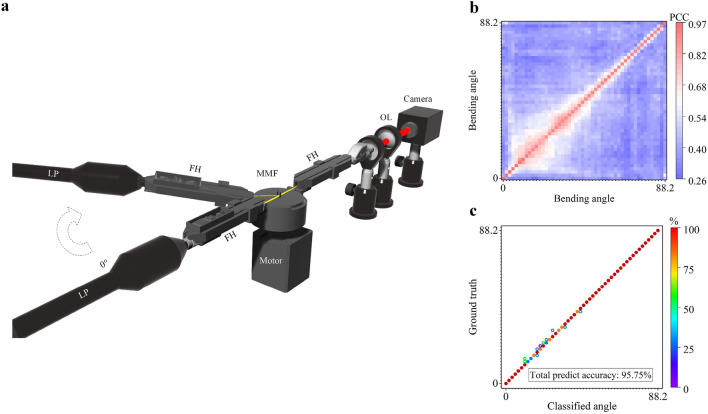

We then set up an electrical-motor driven system to test the classification of different bending angles at one deformation location on a piece of MMF (Fig. 2a). A 20 cm long MMF and electric motors (RS PRO Hybrid Stepper Motor 0.9°, 0.44 Nm, 2.8 V, 1.68 A, 4 Wires) were used in this experiment. The testing MMFs were secured in place on the motor by homemade 3D-printed fiber holders. An Arduino system was used to control the movement of the motor and to trigger the camera to record a speckle in a synchronized manner. One MMF end connected to the camera was fixed, and the other MMF end was movable and was fuse-spliced to a single-mode fiber connected to a laser pointer. The motor bent the MMF at one point with bending angles ranging from 0° to 88.2° at a step of 1.8°. At each bending angle, the camera recorded one speckle image. We allowed 5 s for the system to fully settle before each image acquisition. By repeating 50 different angles bending for 16 times, the entire process took about 1 h and in total 800 datasets were collected (16 speckles for each bending angle). Figure 2b is the average PCC of speckles between bending angles. The correlation coefficient decreased to 0.3 after several times of deformation. It can be seen that the average PCC between speckles collected at the same bending angle is higher than those between speckles collected at different bending angles. Using the first recorded 400 data to train the KNN and the rest 400 as the test data, we were able to achieve a classification accuracy of 95.75% (Fig. 2c). The small classification errors are believed to be a result of the variability of the motorized-bending system, i.e. the MMF and the motor may have not returned to exactly the same state after each movement.

Figure 2.

(a) Experimental setup for one-point motor bending angle classification. (b) Average PCC for one-point motor bending (the average PCC compared themselves with all pictures in other angles). (c) The KNN prediction result with 50% dataset as the training data. The colored circles in c indicate the location and percentage of classification errors while the solid circular dots on the diagonal show the classification accuracy.

The results presented above suggest: (i) the deformation applied at different points along the same MMF results in different speckle patterns and the machine learning algorithms (i.e. KNN here) is able to distinguish such subtle differences; and (ii) different degrees of deformation at the same point also result in different output speckles, which can subsequently be classified by KNN. These forms a good basis of the following complex deformation sensing experiments.

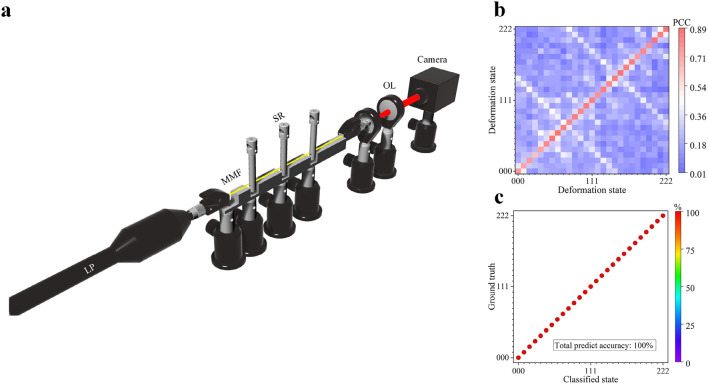

2D multi-point deformation experiment

Next, we tested the performance of our sensor for two-dimensional (2D) multi-point bending. We used a 23 cm long MMF and fixed 12 cm section of the fiber as the experimental deformation region (Fig. 3a). A homemade fiber holder separated this bending region into 4 equal-length 30 mm long sections. Three 6 mm-diameter steel rods were used to apply displacements at the joint between these sections. For each step of the displacement, one steel rod was translated by 0.05 mm to deform the MMF, and for each steel rod there were three displacement values (i.e. 0 mm, 0.05 mm and 0.1 mm). Hence, there were 27 deformation states in total. Note that here we used a much smaller displacement (0.05 mm) compared with one-point experiment, to show the high sensitivity of the system. Similarly, before collecting a speckle we allowed 5 s for the system to settle after the displacement. The deformation process was repeated 50 times. In total, 50 speckles were collected for each deformation state and the entire process lasted about 2 h. Figure 3b shows the PCC between the states of the MMF recorded. The average PCC calculated for speckles of the same deformation state was the highest compared with the PCC between speckles from different deformation states. The correlation coefficient decreased to 0.2 after a few times of deformation. Using the first half recorded data to train the KNN and the rest as the test data, 100% classification accuracy was achieved as shown in Fig. 3c.

Figure 3.

(a) Experimental setup for 2D multi-point deformation classification. (b) Average PCC between the speckle collected in the first measurements and speckles collected in the rest measurements. (c) Normalized confusion matrices for the KNN classification. The deformation states are defined by a deformation coordinates (Y1, Y2, Y3), where x of the Yx element represents the x-th bending rod, and the value of each element Yx defines the bending displacement step, i.e., 0 is 0 mm, 1 is 0.05 mm and 2 is 0.1 mm.

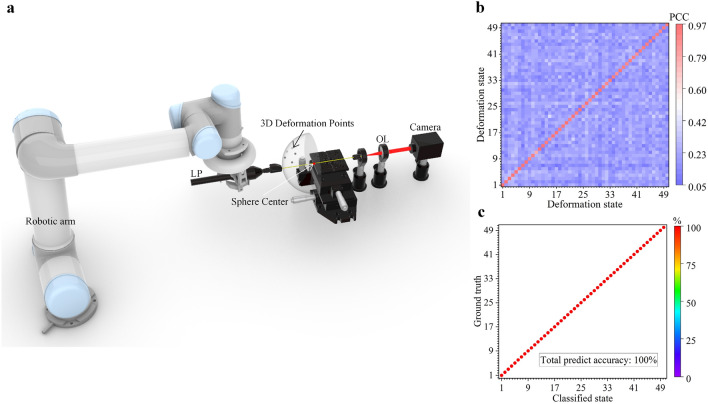

3D deformation experiment

Finally, we attached our MMF sensor to a robotic arm (UR5, Universal Robot Company) for the proof-of-concept demonstration of three-dimensional (3D) deformation sensing. We first attached a 5 cm section of a 12 cm long MMF to a 5 cm-long end-effector section of the robotic arm for 3D one-point deformation test, as shown in Fig. 4a. The movement of the top section of the robotic arm follows a spherical Fibonacci lattice and the tip of the robotic arm can reach any points on a half spherical surface. The attached MMF therefore follows the same movement pattern as the robotic arm and is tested for 3D one-point bending. Based on the spherical Fibonacci lattice, we generated 50 different points on a 5 cm radius half sphere and fed the point coordinates to the end effector of the robotic arm. The laser pointer and the MMF were stabilized on the end effector, the MMF was deformed together with the robotic arm and followed the same 3D bending angle. The output speckle from the other end of the MMF was acquired by a camera. After the robotic arm reached a 3D deformation state, we allowed 3 s for the system to stabilize before the camera recorded a corresponding speckle. Note that we used a short waiting time (3 s) here instead of 5 s used in the previous experiments, as 3 s was found sufficient and additionally we can shorten the overall data acquisition time. These 50 3D deformation states were repeated 16 times within 1 h. The average PCC between speckles collected at the same bending state were much higher than the PCC between speckles collected at different bending states (Fig. 4b). The correlation coefficient decreased to 0.2 after several times of deformation. Again, we trained the KNN using first recorded 400 samples and validated the bending classification with the rest 400 data recorded later. Figure 4c shows the classification accuracy for 3D one-point deformation. These results indicate that all 3D one-point deformation of the fiber can be successfully classified, and a classification accuracy 100% can be achieved. In addition, we also achieved 100% classification accuracy for 3D one-point deformation of the MMF using the Robotic arm at the same bending degree, but different orientation, and the results are given in Supplementary Information 1.

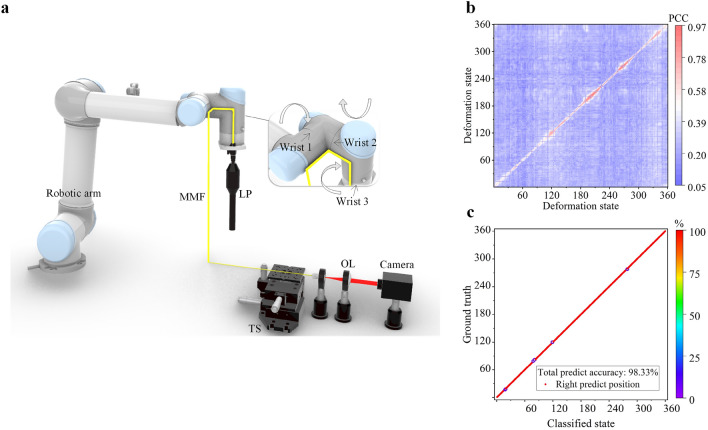

Figure 4.

(a) Experimental setup for 3D one-point deformation classification. (b) Average PCC for 3D one-point bending between the speckles collected in the first measurement and speckles collected in the rest measurements. (c) The KNN classification result for 3D one-point bending using first half collected data as the training dataset and the second half data as the test dataset.

Based on the 3D one-point deformation results above, we tested 3D three-point deformation sensing using a 220 cm-long MMF by attaching a 43 cm section of the fiber onto the entire robotic arm and covering three bendable joints, as shown in Fig. 5a. We used sealer tape to secure the fiber to the surface of the robotic arm (expect for the robotic arm bending joints, where the fiber was not covered by the sealer tape). The 3D three-point deformation was achieved by rotating these three robotic joints, resulting in the corresponding deformation on the attached MMF. For each bending joint, the bending angle can vary in the range from − 30° to + 30° with a minimal step of 1°. 360 deformation states in combination of movements of these three robotic joints were randomly generated and were repeated 5 times within 1 h. The camera recorded one speckle image within 1 s every time when the robotic arm moved to a bending state and a total of 1800 speckles are recorded. Similar to the electric motor experiment presented in the previous section, there appeared instabilities induced by relative fiber movements, i.e. the MMF may have not returned to the exactly same deformation state even though the robotic arm is back to the same state. This can be seen in the average PCC shown in Fig. 5b, where a reduced correlations between speckles collected at the same bending state are noted. The speckles have a low average correlation. We believe that such an instability is not the inherent property of the MMF sensor, but a result of the inevitable relative movements of the fiber under the sealer tape when the robotic arm was moved. Nevertheless, 98% classification accuracy was still achieved as shown in Fig. 5c when we used the first 80% recorded data to train the KNN and the rest 20% data as the test dataset. Further experimental results for 3D multi-point deformation are provided in Supplementary Information 2.

Figure 5.

(a) Experimental setup for 3D three-point deformation classification; (b) Average PCC for 3D three-point bending between the speckles collected in the first measurement and speckles collected in the rest measurements. (c) KNN classification result using the last 20% recorded data in 3D three-point bending. The blue-colored circles in c indicate where the classification is unsuccessful.

Discussions and conclusion

One foreseeable challenge when applying the single MMF deformation sensor in real world is the high variability of the MMF output speckles, which is sensitive to the environment conditions, including temperature, vibration, and mechanical stress. Any small changes in these can lead to variations of the speckles, as shown in Supplementary Information 3 where the decorrelation of the output speckles along the time is clearly seen. The drifting of the MMF output speckle can be minimized by specially designed packaging and fixtures. To compensate the drifting, more training data can be included to enable the neural network to learn all possible variations29, or to update the neural network continuously by semi-supervised learning31.

Most experiment results presented in our work show high classification accuracy of 100% or very close to 100%. We believe that those observe classification errors (although only a few) are not the inherent error of the MMF bending sensor itself but are mainly due to the experimental setup, including the errors of the motor, the elasticity of the fiber jacket material after being stretched, and the small but undesirable relative movements between the taped-fixed fiber and the robotic arm in each robotic arm movement. Again, these can be improved with a specially designed packaging to secure the fiber on the structure or device to be monitored.

In summary, we have demonstrated 3D multi-points deformation sensing via a single MMF using machine-learning based speckle classification. The demonstrated single MMF deformation sensor is advantageous compared with traditional optical fiber deformation sensors in several aspects. Firstly, the transducer itself is a standard MMF and is therefore extremely low cost. The sensing system can be incorporated as a compact and wearable device where only a light source and an image sensor are needed, without requiring complex wavelength interrogation systems such as those needed for FBG sensors. Secondly, our single MMF deformation sensor can be applied to various types of deformation and a good resolution is achieved. As shown in our experiment, a 0.3 mm spatial resolution is achieved in Fig. 1, and a displacement as small as 0.05 mm can be successfully classified along the MMF (Fig. 3). Our experiment results prove that the single MMF speckle-based deformation sensor has good flexibility and sensitivity. Regardless of the influence of variations such as temperature and vibration, a resolution of 1° for 3D deformation is demonstrated in our experiment. It is possible to achieve even smaller resolution in micrometer scale (e.g. 5 µm) as demonstrated in Refs.32–34. Thirdly, 3D multi-point deformation is achieved via a single standard MMF and this is expected to be extended to more complex deformation or 3D shape sensing. In comparison, FBG based 3D shape sensing requires multiple fibers or specially designed multicore fibers. The advantages of our MMF deformation sensor include: high degrees of freedom, simple detection setup without requiring additional wavelength interrogation devices, and an overall low-cost system. This single MMF based 3D multi-points deformation sensing technique may find applications in limb motions monitoring, surgical tools manipulation, wearable device sensing and robotic control.

Methods

Optical sensing experimental setup

Output from a laser pointer (650 nm, 1 mW, KLS-006, Kelushi) was coupled into MMFs (50 μm-core, 0.22NA, FG050UGA, Thorlabs) of different lengths between 110 and 2200 mm according to the requirements of different bending experiments). It has been experimentally demonstrated that multimode fibers with larger cores or smaller numerical apertures are more sensitive to deformation35. A longer MMF increases the variations between speckles for the same deformation but at the same time introduces more instability. The other end of the MMF was imaged with a digital CMOS camera (2048 × 2048 pixels, 6.5 μm × 6.5 μm pixel sizes, C11440-22CU, Flash4.0, Hamamatsu) through a 30 mm focal lens. All fibers were bare fibers and were tested without protective jacket or connectors. How the optical fiber speckle changes with deformation is given in Supplementary Information 4.

Optical sensing experimental setup

The KNN algorithm was used to analyze the experimental data owing to its simple implementation and good classification performance36–38. KNN is simple and requires tuning only one hyperparameter (the value of k), while neural network training involves many hyperparameters controlling the size and structure of the network as well as the optimization procedure. For advanced deep neural networks such as convolutional neural network (CNN), they require a much larger size dataset. Due to the small dataset size in our experiment, KNN is ideally positioned to classify the speckles. Another important justification is that compared it with deep neural networks, such as CNN, KNN can obtained a similar accuracy in our experiment dataset. The results are provided in Supplementary Information 5. For more complicated deformations, deep neural networks could be used for better recovery, for example, to predict states not included in the training.

The KNN classification is performed by using a training dataset which contains both the input and the target variables and then by comparing the test data which contains only the input variables to that reference set the distance of the unknown to k nearest neighbors determines its class assignment by either averaging the class numbers of the k nearest reference points or by obtaining a majority vote for them.

The speckles were firstly normalized and standardized before applying them with the KNN. The size of the speckle was set to 120 × 120. Image preprocessing was done by using scaler.fit_transform function within Python sklearn.preprocessing Library. Bending state labels were inserted in each figure and at the same time corresponding speckle patterns of different bending states were identified. Subsequently, the labels and their corresponding speckles were set up in a dataset. This dataset was then split into two data groups for training and testing, respectively. After the training of KNN, speckle images in the test dataset were sent to KNN to obtain the predicted bending-state labels. The predicted labels were compared with the original bending state labels to determine the accuracy of KNN classification.

The kNN algorithm involves three main factors: the training set, the distance measurement, and the size of k. The best model hyperparameters is k equals to 3. The distance metric for the proximity evaluation in this paper was chosen to be the Euclidean distance.

Supplementary Information

Acknowledgements

X. W. acknowledges PhD scholarship funding from the China Scholarship Council.

Author contributions

L.S. conceived the idea and designed the experiment with X.W. X.W. set up and performed the experiments with the contribution from Y.W. X.W. analyzed the data. X.W. and L.S. wrote manuscript with the contribution from Y.W. K.A. provided advice on bending sensors. K.Z. and K.A. provided support on the robotic arm.

Funding

Engineering and Physical Sciences Research Council (EP/L022559/1, EP/L022559/2, EP/V050311/1 and EP/W004399/1), Royal Society (RG130230, IE161214) and H2020 Marie Skłodowska-Curie Actions (790666).

Data availability

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-022-15781-8.

References

- 1.Moore JP, Rogge MD. Shape sensing using multi-core fiber optic cable and parametric curve solutions. Opt. Express. 2012;20:2967–2973. doi: 10.1364/OE.20.002967. [DOI] [PubMed] [Google Scholar]

- 2.Ryu, S. C. & Dupont, P. E. FBG-based shape sensing tubes for continuum robots. In IEEE Int. Conf. Robot. Autom. 3531–3537 (2014).

- 3.Villatoro J, et al. Ultrasensitive vector bending sensor based on multicore optical fiber. Opt. Lett. 2016;41:832–835. doi: 10.1364/OL.41.000832. [DOI] [PubMed] [Google Scholar]

- 4.Zhao Z, Soto MA, Tang M, Thévenaz L. Distributed shape sensing using Brillouin scattering in multi-core fibers. Opt. Express. 2016;24:25211–25223. doi: 10.1364/OE.24.025211. [DOI] [PubMed] [Google Scholar]

- 5.Bai H, et al. Stretchable distributed fiber-optic sensors. Science. 2020;370:848–852. doi: 10.1126/science.aba5504. [DOI] [PubMed] [Google Scholar]

- 6.Xiong J, Chen J, Lee PS. Functional fibers and fabrics for soft robotics, wearables, and human–robot interface. Adv. Mater. 2021;32:Article 2002640. doi: 10.1002/adma.202002640. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Must I, Sinibaldi E, Mazzolai B. A variable-stiffness tendril-like soft robot based on reversible osmotic actuation. Nat. Commun. 2019;10:344. doi: 10.1038/s41467-018-08173-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Van Meerbeek IM, De SCM, Shepherd RF. Soft optoelectronic sensory foams with proprioception. Sci. Robot. 2018;3:eaau2489. doi: 10.1126/scirobotics.aau2489. [DOI] [PubMed] [Google Scholar]

- 9.Lee B. Review of the present status of optical fiber sensors. Opt. Fiber Technol. 2003;9:57–79. doi: 10.1016/S1068-5200(02)00527-8. [DOI] [Google Scholar]

- 10.Campanella CE, Cuccovillo A, Campanella C, Yurt A, Passaro VMN. Fibre Bragg grating based strain sensors: Review of technology and applications. Sensors. 2018;18:3115. doi: 10.3390/s18093115. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Correia R, James S, Lee SW, Morgan SP, Korposh S, Lee SW, Korposh S. Biomedical application of optical fibre sensors. J. Opt. 2018;20:073003. doi: 10.1088/2040-8986/aac68d. [DOI] [Google Scholar]

- 12.Feng D, Zhou W, Qiao X, Albert J. Compact optical fiber 3D shape sensor based on a pair of orthogonal tilted fiber Bragg gratings. Sci. Rep. 2015;5:1–7. doi: 10.1038/srep17415. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Zheng D, Madrigal J, Chen H, Barrera D, Sales S. Multicore fiber-Bragg-grating-based directional curvature sensor interrogated by a broadband source with a sinusoidal spectrum. Opt. Lett. 2017;42:3710–3713. doi: 10.1364/OL.42.003710. [DOI] [PubMed] [Google Scholar]

- 14.Jang M, Kim JS, Um SH, Yang S, Kim J. Ultra-high curvature sensors for multi-bend structures using fiber Bragg gratings. Opt. Express. 2019;27:2074–2084. doi: 10.1364/OE.27.002074. [DOI] [PubMed] [Google Scholar]

- 15.Saffari P, Allsop T, Adebayo A, Webb D, Haynes R, Roth MM. Long period grating in multicore optical fiber: An ultra-sensitive vector bending sensor for low curvatures. Opt. Lett. 2014;39:3508–3511. doi: 10.1364/OL.39.003508. [DOI] [PubMed] [Google Scholar]

- 16.Barrera D, Madrigal J, Sales S. Long period gratings in multicore optical fibers for directional curvature sensor implementation. J. Light. Technol. 2018;36:1063–1068. doi: 10.1109/JLT.2017.2764951. [DOI] [Google Scholar]

- 17.Arrizabalaga O, et al. High-performance vector bending and orientation distinguishing curvature sensor based on asymmetric coupled multi-core fibre. Sci. Rep. 2020;10:14058. doi: 10.1038/s41598-020-70999-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Deng M, Tang CP, Zhu T, Rao YJ. Highly sensitive bend sensor based on Mach–Zehnder interferometer using photonic crystal fiber. Opt. Commun. 2011;284:2849–2853. doi: 10.1016/j.optcom.2011.02.061. [DOI] [Google Scholar]

- 19.Villatoro J, Minkovich VP, Zubia J. Photonic crystal fiber interferometric vector bending sensor. Opt. Lett. 2015;40:3113–3116. doi: 10.1364/OL.40.003113. [DOI] [PubMed] [Google Scholar]

- 20.Wang S, et al. Two-dimensional microbend sensor based on long period fiber gratings in an isosceles triangle arrangement three-core fiber. Opt. Lett. 2017;42:4938. doi: 10.1364/OL.42.004938. [DOI] [PubMed] [Google Scholar]

- 21.Ahmad R, Ko W, Feder KS, Westbrook PS. Measuring the shape of microbends in optical fibers. Opt. Express. 2020;45:5189–5192. doi: 10.1364/OL.392366. [DOI] [PubMed] [Google Scholar]

- 22.Richardson DJ, Fini JM, Nelson LE. Space-division multiplexing in optical fibres. Nat. Photon. 2013;7:354–362. doi: 10.1038/nphoton.2013.94. [DOI] [Google Scholar]

- 23.Fan S, Kahn JM. Principal modes in multimode waveguides. Opt. Lett. 2005;30:135–137. doi: 10.1364/OL.30.000135. [DOI] [PubMed] [Google Scholar]

- 24.Stuart HR. Dispersive multiplexing in multimode optical fiber. Science. 2000;289:281–283. doi: 10.1126/science.289.5477.281. [DOI] [PubMed] [Google Scholar]

- 25.Redding B, Cao H. Using a multimode fiber as a high-resolution, low-loss spectrometer. Opt. Lett. 2012;37:3384–3386. doi: 10.1364/OL.37.003384. [DOI] [PubMed] [Google Scholar]

- 26.Bianchi S, Di Leonardo R. A multi-mode fiber probe for holographic micromanipulation and microscopy. Lab Chip. 2012;12:635–639. doi: 10.1039/C1LC20719A. [DOI] [PubMed] [Google Scholar]

- 27.Čižmár T, Dholakia K. Exploiting multimode waveguides for pure fibre-based imaging. Nat. Commun. 2012;3:1027. doi: 10.1038/ncomms2024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Borhani N, et al. Learning to see through multimode fibers. Optica. 2018;5:960–966. doi: 10.1364/OPTICA.5.000960. [DOI] [Google Scholar]

- 29.Fan P, Zhao T, Su L. Deep learning the high variability and randomness inside multimode fibers. Opt. Express. 2019;27:20241–20258. doi: 10.1364/OE.27.020241. [DOI] [PubMed] [Google Scholar]

- 30.Caramazza P, Moran O, Murray-Smith R, Faccio D. Transmission of natural scene images through a multimode fibre. Nat. Commun. 2019;10:2029. doi: 10.1038/s41467-019-10057-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Fan P, Ruddlesden M, Wang Y, Zhao L, Lu C, Su L. Learning enabled continuous transmission of spatially distributed information through multimode fibers. Laser Photon. Rev. 2021;15:2000348. doi: 10.1002/lpor.202000348. [DOI] [Google Scholar]

- 32.Liu Y, Li G, Qi Q, Tan Z, Wang M, Yan F. Bending recognition based on the analysis of fiber specklegrams using deep learning. Opt. Laser Technol. 2020;131:106424. doi: 10.1016/j.optlastec.2020.106424. [DOI] [Google Scholar]

- 33.Sun K, Ding Z, Zhang Z. Fiber directional position sensor by multimode interference imaging and machine learning. Appl. Opt. 2020;59:5745–5751. doi: 10.1364/AO.394280. [DOI] [PubMed] [Google Scholar]

- 34.Ding Z, Zhang Z. 2D tactile sensor based on multimode interference and deep learning. Opt. Laser Technol. 2021;136:106760. doi: 10.1016/j.optlastec.2020.106760. [DOI] [Google Scholar]

- 35.Loterie D, Psaltis D, Moser C. Bend translation in multimode fiber imaging. Opt. Express. 2017;25:6263–6273. doi: 10.1364/OE.25.006263. [DOI] [PubMed] [Google Scholar]

- 36.Peterson LE. K-nearest neighbor. Scholarpedia. 2009;4:1883. doi: 10.4249/scholarpedia.1883. [DOI] [Google Scholar]

- 37.Dudani SA. The distance-weighted k-nearest-neighbor rule. IEEE Trans. Syst. Man Cybern. 1976;4:325–327. doi: 10.1109/TSMC.1976.5408784. [DOI] [Google Scholar]

- 38.Keller JM, Gray MR, Givens JA. A fuzzy k-nearest neighbor algorithm. IEEE Trans. Syst. Man Cybern. 1985;4:580–585. doi: 10.1109/TSMC.1985.6313426. [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.