Abstract

The state-of-the-art procedure for examining the lymph nodes in a lung cancer patient involves using an endobronchial ultrasound (EBUS) bronchoscope. The EBUS bronchoscope integrates two modalities into one device: (1) videobronchoscopy, which gives video images of the airway walls; and (2) convex-probe EBUS, which gives 2D fan-shaped views of extraluminal structures situated outside the airways. During the procedure, the physician first employs videobronchoscopy to navigate the device through the airways. Next, upon reaching a given node’s approximate vicinity, the physician probes the airway walls using EBUS to localize the node. Because lymph nodes lie beyond the airways, EBUS is essential for confirming a node’s location. Unfortunately, it is well documented that EBUS is difficult to use. In addition, while new image-guided bronchoscopy systems provide effective guidance for videobronchoscopic navigation, they offer no assistance for guiding EBUS localization. We propose a method for registering a patient’s chest CT scan to live surgical EBUS views, thereby facilitating accurate image-guided EBUS bronchoscopy. The method entails an optimization process that registers CT-based virtual EBUS views to live EBUS probe views. Results using lung cancer patient data show that the method correctly registered 28/28 (100%) regions of interest scanned by EBUS (27 lymph nodes and 1 azygos vein), with a mean registration time of 3.4 s. The mean position and direction errors of registered sites were 2.2 mm and 11.8°, respectively. Furthermore, sensitivity studies show the method’s robustness to parameter variations. Lastly, we demonstrate the method’s use in an image-guided system designed for guiding both phases of EBUS bronchoscopy.

Keywords: bronchoscopy, endobronchial ultrasound, image registration, image-guided surgery systems, multimodal imaging, lung cancer

1. Introduction

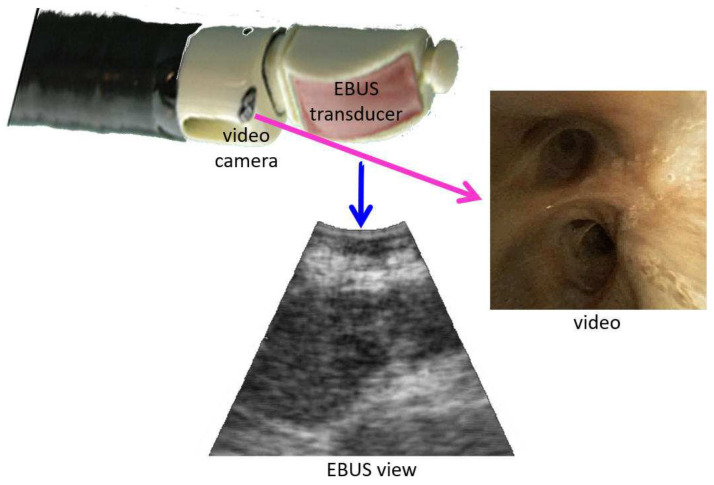

The state-of-the-art procedure for examining the lymph nodes in lung-cancer patients draws on an endobronchial ultrasound (EBUS) bronchoscope [1,2,3,4]. The EBUS bronchoscope, also referred to as a convex-probe EBUS or linear EBUS bronchoscope, integrates two modalities into one device (Figure 1): (1) videobronchoscopy, which gives video images of the airway walls (endoluminal surfaces); and (2) convex-probe EBUS, which gives 2D fan-shaped views of extraluminal structures situated outside the airways. Because lymph nodes lie outside the airways, and hence are unobservable by videobronchoscopy, EBUS is essential for confirming a node’s location.

Figure 1.

Device tip and example views for an integrated EBUS bronchoscope. The video camera provides bronchoscopic video, while the EBUS transducer gives 2D EBUS views. The magenta and blue arrows correspond to the videobronchoscope camera axis and the EBUS probe axis, respectively.

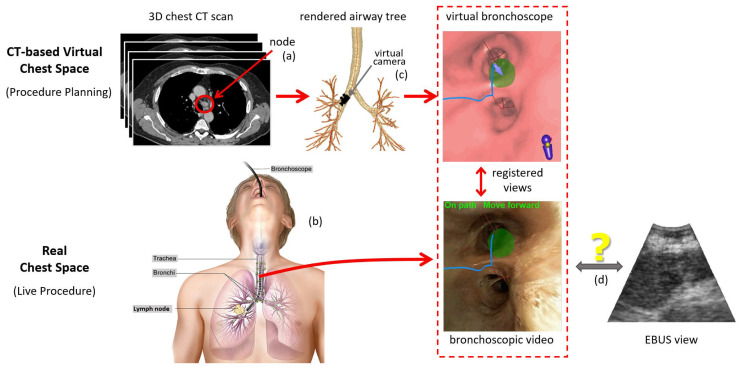

Before the procedure, the physician first examines a patient’s 3D chest computed tomography (CT) scan to select suspicious lymph nodes (Figure 2a). Later, during the live surgical procedure, the physician uses the EBUS bronchoscope to localize and biopsy the lymph nodes (Figure 2b). In particular, for each node, the physician first employs videobronchoscopy to navigate the device through the airways. Next, upon reaching the node’s approximate expected vicinity, the physician probes the nearby airway walls using EBUS to localize the node.

Figure 2.

Overview of an image-guided EBUS bronchoscopy procedure. Top part of the figure focuses on the 3D CT-based virtual chest space, whereas the bottom part features the analogous real 3D chest space. (a) Before the live procedure, the physician selects lymph nodes of interest from a patient’s chest CT scan. (b) During the live surgical procedure, the physician then uses this plan to navigate the bronchoscope toward the lymph node. (c) When using an image-guided bronchoscopy system, the physician receives additional graphical feedback on how to navigate the device toward the lymph node. VB views situated along a precomputed guidance path (blue line) lead the physician toward the lymph node. Image registration between the CT-based VB views and live bronchoscopic video (red dotted-line box) facilitates device synchronization during navigation and leads the physician to the proper airway closest to the node (green region). (d) Existing image-guided bronchoscopy systems offer no means for helping to place the EBUS probe for live localization of the extraluminal lymph node. (Bronchoscopy drawing by Terese Winslow, “Bronchoscopy,” NCI Visuals Online, National Cancer Institute.)

Unfortunately, EBUS can be difficult to use effectively [5]. This situation arises for three reasons [6,7,8]. First, the physician must mentally translate their anatomical knowledge to CT scan observations and live EBUS views. Second, EBUS views generally do not lie on the orthogonal 2D axial, coronal, or sagittal planes readily observed in CT, which makes it hard to correlate CT observations with live EBUS views. Finally, because of EBUS’s limited field of view (FOV), the physician performs an essentially blind, trial-and-error EBUS sweep to localize a node. This can be challenging given the typically small size of lymph nodes (≈10 mm long-axis length) and the complex 360° cylindrical span of the airway walls.

Recently proposed image-guided bronchoscopy systems have proven to make videobronchoscopic navigation through the airways a high-success, skill-independent operation [9,10]. Using the concept of virtual bronchoscopy (VB), the live bronchoscopic video can be readily correlated and registered to precomputed CT-based VB views that mimic the video views of the real bronchoscope’s video camera [11,12,13,14]. Figure 2c illustrates this operation during a live procedure. The physician follows the guidance system’s presented sequence of VB views along a preplanned guidance route, which, in turn, leads the physician to each diagnostic site. Along the way, image registration synchronizes the position of the real device and virtual camera.

These systems, however, do not offer any means to guide the placement of the EBUS probe for localizing extraluminal sites, as suggested by Figure 2d. Thus, accurate localization, crucial for effective biopsy, continues to be problematic [8,15]. To emphasize this point, a multi-physician study showed that a physician’s biopsy success rate was only 43% despite successfully navigating to the correct airway 78% of the time [16]. Other studies have shown that, even when image-guided navigation and EBUS are used together, biopsy success rates barely exceed 50% [17,18]. We propose a method for registering the preoperative chest CT scan to the live EBUS views, thereby facilitating accurate image-guided EBUS bronchoscopy.

Several recent works have attempted to extend image guidance to EBUS localization [19,20,21,22,23]. For EBUS bronchoscopy as applied to lymph node examination, the approach of Sato et al. entails considerable manual interaction to preplan sites for invoking EBUS, and offers no virtual-to-real EBUS registration to help with EBUS localization [19]. Sorger et al. proposed a commendable electromagnetic navigation system that draws upon an extra sensor attached to the EBUS bronchoscope [20,21]. However, the system does not register the live EBUS probe view to the pre-operative CT, thereby limiting optimal EBUS placement. It also adds the well-known issues arising from using electromagnetic technology, namely the extra device cost and susceptibility to patient motion [24].

The other works applied virtual bronchoscopy to the allied problem of peripheral nodule diagnosis [22,23]. The physician uses VB to navigate a standard videobronchoscope close to the nodule and then inserts a radial-probe EBUS, which gives 360° views of the extraluminal airway structure, into the videobronchoscope’s working channel. Since radial-probe EBUS is not designed for examining central-chest lymph nodes, it presents a different guidance scenario (separate devices, requires probe removal before biopsy, dissimilar imaging scenario) [1]. This research, however, does give impetus for work with convex-probe EBUS. The approach of Tamiya et al. does not give direct guidance for EBUS placement [22]. The method of Luo and Mori does accomplish EBUS localization. However, it needs extra sensors to track the device and was only tested in a controlled phantom environment [23].

Our proposed registration method draws upon the concept of a CT-based virtual EBUS, as introduced by Zang et al. [25,26]. The virtual EBUS, which mimics the FOV of the convex-probe EBUS, acts as a complementary analog to the virtual bronchoscope used for image-guided navigation. With the virtual EBUS and associated registration method, live image-guided EBUS probe localization can now be performed.

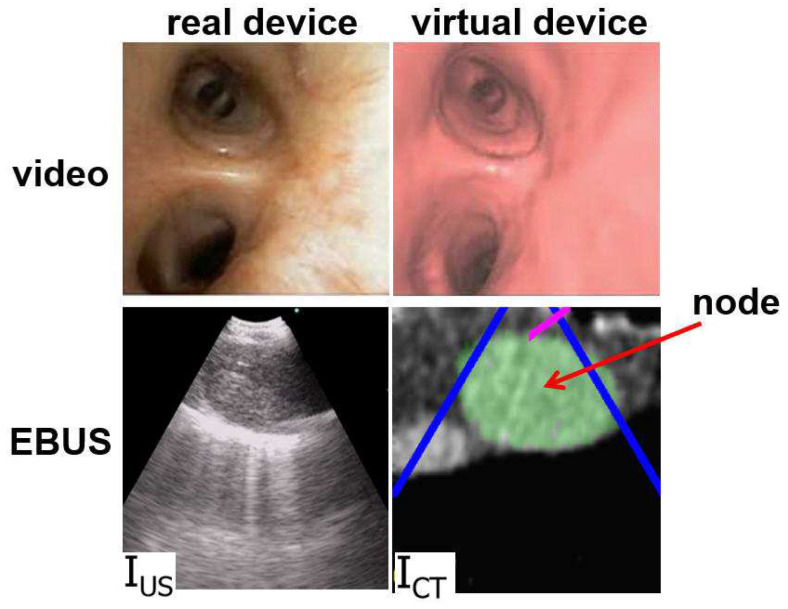

The method, partially motivated by previous research in ultrasound registration, draws upon the idea of mutual information (MI). MI techniques have been commonly used to register ultrasound and CT images since the MI metric does not require the two sources to have similar image appearances [27,28]. Figure 3, discussed in Section 2, clearly illustrates how the real and virtual EBUS views differ in appearance. Unfortunately, because the MI metric relies on a joint intensity probability model while completely ignoring spatial information, MI-based techniques can fail when shading artifacts exist [29,30]. To improve MI-based methods, Studholme et al. proposed a normalized MI (NMI) metric, which is more robust to changes in image overlap [31]. They also integrated spatial information by calculating mutual information among local regions [32]. As a further enhancement, researchers have included prior knowledge, such as the region shape, to achieve more robust and accurate registration. For example, Knops et al. performed k-means clustering to distinguish image pixels having similar intensities but belonging to different regions. They then used these modified intensity bins to calculate NMI during registration [33].

Figure 3.

Example videobronchoscope and EBUS views constituting the multimodal EBUS bronchoscope. (Top) Bronchoscopic video sources for the real EBUS bronchoscope and the virtual device. (Bottom) Corresponding fan-shaped EBUS views for the real and virtual devices, respectively. For the virtual EBUS view, the blue lines demarcate the EBUS FOV, the magenta line indicates the video camera’s viewing direction, and the green region denotes a lymph node predefined in the chest CT scan. In this figure, the videobronchoscope and EBUS view pairs are not at the same site. In addition, both view pairs are registered.

During live EBUS localization, our proposed method uses an optimization process, which combines both NMI and region shape information, to register CT-based virtual EBUS views to the live views generated by the real EBUS probe. We also integrated the method into an image-guided EBUS bronchoscopy system that features a multimodal virtual EBUS bronchoscope, which integrates both the standard virtual videobronchoscope and the virtual EBUS [26]. Thus, intuitive image guidance is now attainable for both videobronchoscopic navigation and EBUS localization. Section 2 details the method. Section 3 validates the method performance using lung cancer patient data. It also illustrates the method’s utility in our image-guided EBUS bronchoscopy system. Finally, Section 4 offers concluding comments.

2. Methods

2.1. Overview

We first overview the protocol employed by our image-guided EBUS bronchoscopy system [26]. Section 2.2 then discusses the method for registering the virtual EBUS to live EBUS probe views.

To begin, the system followed the standard protocol of nodal staging bronchoscopy. First, prior to the procedure, a guidance plan was derived from the patient’s high-resolution 3D chest CT scan (voxel dimensions < 1 mm) using methods similar to those employed by existing image-guided bronchoscopy systems [9,24,34]. This gives the airway tree’s endoluminal surfaces, a set of target lymph nodes, and an airway guidance route for each node. We used previously validated methods to generate the guidance plan [25,34,35,36,37].

Next, in the operating room, the EBUS bronchoscope was interfaced to the guidance computer. It was assumed that the physician uses the Olympus BF-UC180F EBUS bronchoscope, the de facto standard device for EBUS nodal staging [1,3]. This device produces data streams for both the videobronchoscope and EBUS probe (Figure 1), which serve as system inputs. Section 2.3 gives technical detail for the guidance computer and interfacing to the bronchoscopy suite.

Image-guided EBUS bronchoscopy fundamentally involves aligning planning data derived from the patient’s chest CT scan to live views provided by the EBUS bronchoscope. This requires the definition of virtual and real spaces representing the chest (Figure 2). For our scenario, the 3D chest CT scan defines a virtual chest space, whereby a virtual EBUS bronchoscope moves through the CT-based virtual airways. Similarly, as the real device navigates through the physical airways, the EBUS bronchoscope’s data streams give views inside the corresponding real 3D chest space. This is similar to current image-guided bronchoscopy systems, in which virtual and real videobronchoscopes simultaneously navigate through distinct manifestations of the same physical chest space [14,38]. For our problem, image guidance involves synchronizing the two spaces during both videobronchoscopic navigation and EBUS localization. In this way, the location of the real device can be confirmed at all times.

During the live procedure, the system used a multimodal virtual EBUS bronchoscope, which extends the concept of the CT-based virtual bronchoscope. This tool’s multimodal display mimics both components of the real EBUS bronchoscope, as shown in Figure 3. The CT-based virtual videobronchoscope simulated the views supplied by the real device’s videobronchoscope camera, as in existing image-guided bronchoscopy systems. In addition, a CT-based virtual EBUS probe simulated 2D fan-shaped EBUS views mimicking the scan plane of the real EBUS probe.

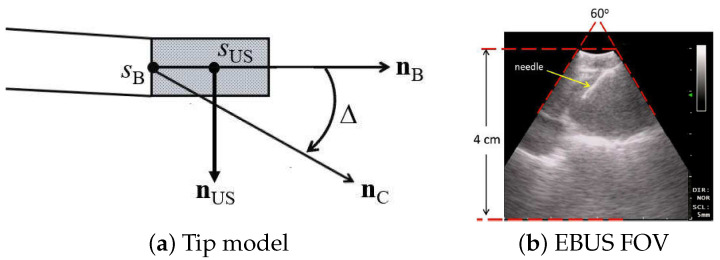

We designed the virtual device’s videobronchoscope camera and EBUS probe to comply with the known geometry and specifications of the Olympus BF-UC180F. Both components have 3D orientations identical to those of the real EBUS bronchoscope’s components, and both have FOVs identical to those of the real device. We give the specific design parameters below, per Figure 4:

The bronchoscope tip starts at a reference point and is oriented along the tip axis.

The videobronchoscope camera axis is offset by a fixed angle from the tip axis.

The EBUS probe axis is perpendicular to the tip axis, with its origin located 6 mm from the tip start point.

The 2D EBUS fan-shaped scan plane sweeps 60° with a range of 4 cm.
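To make the tip geometry concrete, the following Python sketch places the EBUS probe origin and tests whether a point in the scan plane falls inside the 60°, 4-cm fan. It assumes (our assumption, not stated above) that the probe origin lies 6 mm along a unit tip axis from the tip start, that the fan is symmetric about the probe axis, and that the query point already lies in the scan plane; the function names are hypothetical.

```python
import math

FAN_SWEEP_DEG = 60.0   # total fan angle, from the text
FAN_RANGE_MM = 40.0    # scan depth (4 cm), from the text
PROBE_OFFSET_MM = 6.0  # probe-axis origin distance from the tip start, from the text


def _unit(v):
    """Return v scaled to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def probe_origin(tip_start, tip_axis):
    """Assumed probe origin: 6 mm from the tip start along the unit tip axis."""
    t = _unit(tip_axis)
    return tuple(s + PROBE_OFFSET_MM * d for s, d in zip(tip_start, t))


def in_fan(point, tip_start, tip_axis, probe_axis):
    """True if an in-plane point lies within the 60-degree, 4-cm EBUS fan."""
    o = probe_origin(tip_start, tip_axis)
    v = tuple(p - q for p, q in zip(point, o))
    r = math.sqrt(sum(c * c for c in v))
    if r == 0.0:
        return True
    if r > FAN_RANGE_MM:
        return False
    cos_a = sum(a * b for a, b in zip(v, _unit(probe_axis))) / r
    angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))
    return angle <= FAN_SWEEP_DEG / 2.0  # fan assumed symmetric about the probe axis
```

For a tip starting at the origin with tip axis (0, 0, 1) and probe axis (1, 0, 0), the probe origin is (0, 0, 6); a point 20 mm straight along the probe axis lies in the fan, whereas a point 45° off the probe axis does not.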

Figure 4.

EBUS probe model: (a) device tip model; (b) 2D EBUS probe view; red lines denote the 60° fan-shaped view. Standard EBUS display settings were used throughout (gain = 19; contrast = 6).

With reference to Figure 1, the magenta arrow represents the virtual bronchoscope’s camera axis, and the blue arrow represents the virtual EBUS probe axis. In addition, per Figure 3, in the virtual EBUS view, the blue lines delineate the virtual EBUS’s fan-shaped FOV, whereas the magenta line denotes the camera axis. In this way, the virtual EBUS bronchoscope gives a mechanism for relating the two live data streams to the CT-based virtual space. In particular, it provides the necessary linkage to enable image-guided EBUS bronchoscopy.

For a given lymph node, the physician uses the system display as follows. First, the physician navigates the “real” EBUS bronchoscope close to the node along the preplanned airway guidance route by following the standard virtual bronchoscope. This step draws on an established approach for registering live bronchoscopic video to CT-based VB views (e.g., Merritt et al. [14]), which synchronizes the positions of the virtual and real devices. Next, the physician follows the virtual EBUS display to localize the lymph node via EBUS. As discussed in Section 2.2, this second step critically hinges on our proposed CT-EBUS registration method, which synchronizes the positions of the virtual and real EBUS probes. Section 3 later highlights the complete system during image-guided bronchoscopy, while Zang et al. give more system detail [26].

2.2. Virtual-to-Real EBUS Registration

Proper localization of a lymph node depends on our proposed method for registering the virtual and real EBUS probes. Specifically, registration involves aligning the virtual EBUS view derived from the preoperative CT scan with the live “real” intraoperative EBUS probe view.

For our EBUS bronchoscopy problem, we performed virtual-to-real EBUS registration by solving an optimization problem. Let $I_{\mathrm{US}}$ denote the target EBUS view in 3D real space and $I_{\mathrm{CT}}^{\mathbf{p}}$ denote a CT-based virtual EBUS view at pose $\mathbf{p}$ within the 3D virtual space, where

$$\mathbf{p} = (\alpha, \beta, \gamma, x, y, z) \tag{1}$$

$\alpha$, $\beta$, and $\gamma$ denote the Euler angles, and $(x, y, z)$ denotes the 3D position. Per Figure 4a, $\mathbf{p}$ specifies the location and probe-axis direction of the virtual EBUS probe. Upon reaching a lymph node’s general vicinity via standard videobronchoscopic guidance, as specified by a known pose $\mathbf{p}_0$ along the preplanned airway guidance route (e.g., using [14]), the physician next pushes the EBUS probe against the airway wall to give view $I_{\mathrm{US}}$. The pose of $I_{\mathrm{US}}$ is technically unknown, since the physician manually performs this scan at their discretion. However, assuming that the physician follows the cues supplied by the guidance system during the guided procedure, we can surmise that the pose of $I_{\mathrm{US}}$ is close to the known pose $\mathbf{p}_0$ of virtual view $I_{\mathrm{CT}}^{\mathbf{p}_0}$. Hence, pose $\mathbf{p}$ in (1) is initialized to $\mathbf{p}_0$ in virtual space. This is the starting point of our optimal registration problem.

To formulate the optimization, we employed a cost function that combines raw image intensity information and known ROI segmentation knowledge. In particular,

| (2) |

where $S_{\mathrm{NMI}}$ is the NMI metric, focusing on intensity information, and $S_{\mathrm{Dice}}$ is the Dice index, focusing on ROI shape knowledge. The NMI metric, adapted from Helferty et al. [39], is given by

| (3) |

whereas the Dice index, a commonly used measure of ROI overlap between two images [40,41], is given by

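$$S_{\mathrm{Dice}}\left(R_{\mathrm{US}}, R_{\mathrm{CT}}^{\mathbf{p}}\right) = \frac{2\left|R_{\mathrm{US}} \cap R_{\mathrm{CT}}^{\mathbf{p}}\right|}{\left|R_{\mathrm{US}}\right| + \left|R_{\mathrm{CT}}^{\mathbf{p}}\right|} \tag{4}$$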

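In (3), $H(I_{\mathrm{US}})$ and $H(I_{\mathrm{CT}}^{\mathbf{p}})$ are the marginal entropies of images $I_{\mathrm{US}}$ and $I_{\mathrm{CT}}^{\mathbf{p}}$, whereas $H(I_{\mathrm{US}}, I_{\mathrm{CT}}^{\mathbf{p}})$ is their joint entropy:

$$
\begin{aligned}
H(I_{\mathrm{US}}) &= -\sum_{k=0}^{L-1} p_{\mathrm{US}}(k) \log p_{\mathrm{US}}(k), \\
H(I_{\mathrm{CT}}^{\mathbf{p}}) &= -\sum_{j=0}^{L-1} p_{\mathrm{CT}}(j) \log p_{\mathrm{CT}}(j), \\
H(I_{\mathrm{US}}, I_{\mathrm{CT}}^{\mathbf{p}}) &= -\sum_{k=0}^{L-1} \sum_{j=0}^{L-1} p_{\mathrm{US,CT}}(k, j) \log p_{\mathrm{US,CT}}(k, j)
\end{aligned}
\tag{5}
$$

where $p_{\mathrm{US}}$ and $p_{\mathrm{CT}}$ are the respective marginal probability density functions of the pixel intensity values in images $I_{\mathrm{US}}$ and $I_{\mathrm{CT}}^{\mathbf{p}}$, $p_{\mathrm{US,CT}}$ is the joint density function between corresponding pixels in the two images, and $L$ is the number of gray levels that an image pixel can assume. In (4), $R_{\mathrm{CT}}^{\mathbf{p}}$ represents the target lymph node’s region of interest (ROI) as it appears in virtual EBUS view $I_{\mathrm{CT}}^{\mathbf{p}}$; i.e.,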

| (6) |

where the ROI was defined during planning. Similarly, $R_{\mathrm{US}}$ equals the segmented ROI appearing in EBUS frame $I_{\mathrm{US}}$, obtained with the previously validated automatic method of Zang et al. [42]. Thus, the collection of CT scan voxels constituting $R_{\mathrm{CT}}^{\mathbf{p}}$, as given by (6), corresponds to the known ROI knowledge that is correlated with $R_{\mathrm{US}}$ during optimization. The cost in (2) therefore has a bounded range, since $S_{\mathrm{NMI}}$ ranges from −1 (strongest NMI correlation) to 1 (no correlation) and $S_{\mathrm{Dice}}$ ranges from 0 (no ROI overlap) to 1 (total ROI overlap).
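The entropy and Dice terms can be sketched in Python as follows, estimating the entropies of (5) from normalized histograms and from the joint histogram of corresponding pixels, and computing the Dice index of (4) on flattened binary masks. Because the exact Helferty-based form of (3) is not reproduced here, the sketch substitutes the familiar Studholme normalization (H(A) + H(B))/H(A, B) purely for illustration; unlike the NMI term in (2), it increases with similarity, so it would need a sign or scale change before use in a minimized cost. All function names are hypothetical.

```python
import math
from collections import Counter


def dice_index(mask_a, mask_b):
    """Dice index: 2|A and B| / (|A| + |B|) for two flattened binary masks."""
    inter = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    total = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    return 2.0 * inter / total if total else 0.0  # both masks empty: treat as no overlap


def _entropy(counts, n):
    """Shannon entropy (natural log) of a histogram stored in a Counter."""
    return -sum((c / n) * math.log(c / n) for c in counts.values())


def entropies(img_a, img_b):
    """Marginal and joint entropies from normalized (joint) histograms.

    img_a and img_b are equal-length sequences of integer gray levels
    for corresponding pixels.
    """
    n = len(img_a)
    h_a = _entropy(Counter(img_a), n)
    h_b = _entropy(Counter(img_b), n)
    h_ab = _entropy(Counter(zip(img_a, img_b)), n)
    return h_a, h_b, h_ab


def studholme_nmi(img_a, img_b):
    """Studholme-style NMI: 1 for independent images, 2 for identical ones.

    Illustrative stand-in only; not the Helferty-based form of (3).
    """
    h_a, h_b, h_ab = entropies(img_a, img_b)
    if h_ab == 0.0:
        raise ValueError("NMI is undefined for two constant images")
    return (h_a + h_b) / h_ab
```

The logarithm base does not matter for the normalized ratio, since it cancels between numerator and denominator.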

Given (2)–(6), our optimization problem was formulated as

$$\mathbf{p}^{*} = \arg\min_{\mathbf{p} \in N(\mathbf{p}_0)} C(\mathbf{p}) \tag{7}$$

where $\mathbf{p}_0$ is the initial pose, $N(\mathbf{p}_0)$ is a search neighborhood around $\mathbf{p}_0$, and $\mathbf{p}^{*}$ is the optimal pose minimizing cost $C$. To solve (7), we adapted the simplex optimization algorithm [43] to our EBUS registration problem to iteratively search for the pose giving minimum cost. To this search, we added a constraint accounting for the fact that the physician must push the EBUS probe against the airway wall to acquire an EBUS view. This implies that $\mathbf{p}_0$ and $\mathbf{p}^{*}$ must be situated on the airway wall surface. Therefore, we interleaved a surface-voxel search with the simplex algorithm. We now summarize the complete procedure in Algorithm 1.

Algorithm 1: Multimodal CT-EBUS Registration Algorithm.

At the conclusion of the registration algorithm, we know the precise location in 3D chest space of the lymph node depicted in the live intraoperative EBUS probe view, as specified by the optimal pose. We elaborate on the algorithm’s steps below.

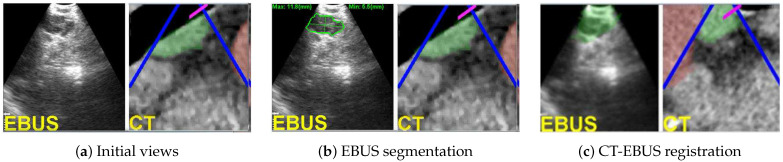

Regarding steps 1–2, Figure 5a,b depicts an example of initial real and virtual EBUS views for a station-10 lymph node. For the real space, the target EBUS view is initialized as an EBUS frame captured after completing bronchoscopic navigation. For the virtual space, the initial pose is the known final pose reached during navigation, whereas the virtual-view ROI equals the predefined nodal ROI as it appears in the initial virtual EBUS view (green regions in the right-side views of Figure 5a–c).

Figure 5.

CT-EBUS registration for a station-10 lymph node (case 21405-139). (a) Initial raw EBUS view (left) and corresponding CT-based virtual EBUS view (right). (b) Result after segmenting the nodal ROI in the EBUS view; the green outline signifies the ROI contour [42]. (c) Registered real and virtual EBUS views after final registration; the real EBUS view depicts the fused, registered CT-based ROI. In all virtual EBUS views, green regions denote nodal ROIs predefined during planning, whereas red regions represent blood vessels.

Step 3 next generates a simplex of suitable candidate search poses, defined by seven vertices situated on the airway wall within CT-based virtual space. Each simplex vertex represents a pose on the airway wall neighboring the current pose. To begin, we first derived a modified pose as the first voxel along the virtual EBUS device’s viewing axis whose value was > −600 HU (HU = Hounsfield units). In chest CT images, it is well known that air appears as dark voxels with HU value ≈ −1000, whereas the brighter surrounding airway walls have HU values in the range [50, 200]. Hence, we picked the conservative threshold −600 HU to identify potential airway wall voxels [35]. Next, to derive the simplex, we first computed six candidate vertices by separately changing each of the six pose parameters in (1) by a fixed increment [44]. We then adjusted the vertices to corresponding surface points on the airway wall.
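The wall search in step 3 can be sketched as ray marching through the CT volume until a voxel exceeds −600 HU, together with the one-parameter-at-a-time perturbations that seed the six candidate vertices. This Python sketch assumes a volume stored as nested lists indexed [z][y][x], isotropic voxels, nearest-voxel rounding, and a fixed step in voxel units; the function names and the step and increment values are hypothetical, and the subsequent adjustment onto airway-wall surface points is not shown.

```python
import math

AIRWAY_WALL_HU = -600  # conservative air/wall threshold, from the text


def first_wall_voxel(volume, start, direction, step=0.5, max_steps=400):
    """March from start along direction and return the first voxel (x, y, z)
    whose HU value exceeds the threshold, or None if the ray leaves the volume.

    volume is indexed volume[z][y][x]; coordinates are in (isotropic) voxel units.
    """
    norm = math.sqrt(sum(c * c for c in direction))
    d = [c / norm for c in direction]
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    for i in range(max_steps + 1):
        # Round the sample position to the nearest voxel index.
        x, y, z = (round(s + i * step * c) for s, c in zip(start, d))
        if not (0 <= x < nx and 0 <= y < ny and 0 <= z < nz):
            return None
        if volume[z][y][x] > AIRWAY_WALL_HU:
            return (x, y, z)
    return None


def candidate_vertices(pose, increments):
    """Six candidate simplex vertices: perturb one pose parameter at a time."""
    vertices = []
    for k, delta in enumerate(increments):
        v = list(pose)
        v[k] += delta
        vertices.append(tuple(v))
    return vertices
```

In a small test volume filled with air (−1000 HU) and a wall plane at x = 3 (100 HU), marching from (0, 2, 2) along +x stops at voxel (3, 2, 2), while marching along −x leaves the volume and returns None.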

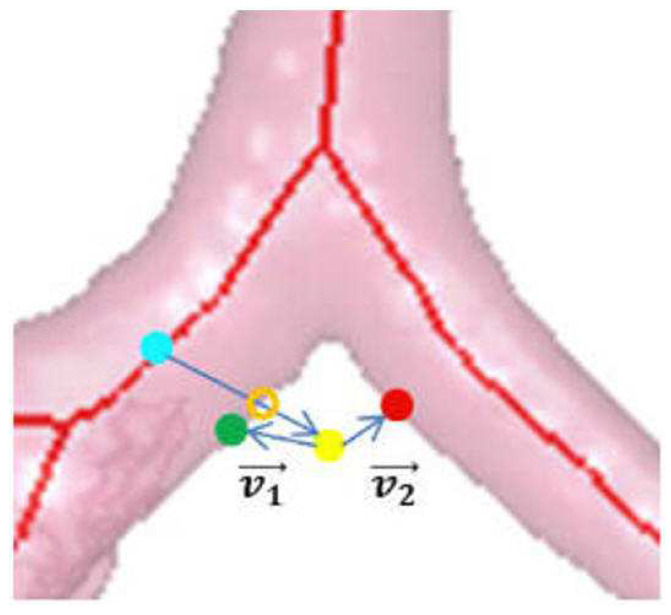

To find the surface points defining the simplex, we used the algorithm of Gibbs et al. [35]. The search drew upon the surface-voxel structure constituting the previously derived airway-tree endoluminal surfaces. To begin, we constructed a k-d tree for these voxels to facilitate a quick search. Subsequently, for each vertex of the initial simplex, we searched the k-d tree for the surface point closest to that vertex. Since the marching cubes algorithm was used to compute the airway-tree endoluminal surfaces, these surfaces are one voxel thick [45]. However, larger airways have walls thicker than one voxel; this introduces uncertainty into the surface voxel locations [35]. Thus, to account for thicker airway walls, we also considered voxels neighboring the closest surface point as valid candidate vertices. In particular, if a neighboring voxel has an HU value above the surface threshold and lies within a small fixed distance (in mm) of the surface point, then we accepted it as an adjusted vertex. Note, however, that the k-d tree was based only on surface voxel coordinates, without regard to airway-branch membership. Hence, the surface point closest to a given vertex could lie on the wrong airway tree branch, as Gibbs et al. noted [35]. To ensure that the adjusted vertex lies on the same airway branch as the current surface point, we performed the following steps, per Figure 6:

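1. Compute dot product , where and .

2. If the dot-product test is satisfied, the closest k-d tree point is part of the correct airway and is kept as the updated vertex.

3. Otherwise, the closest k-d tree point is from the wrong branch. Find the airway centerline point closest to the candidate position, and search for a new surface-voxel candidate, based on the HU threshold, along the direction from this centerline point to the candidate position.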
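The closest-surface-point search described above can be sketched with a small k-d tree. This pure-Python version is illustrative only (a production system would likely rely on an optimized library), and the function names are hypothetical. Like the structure described in the text, it ignores airway-branch membership, so its result may still need the Figure 6 branch check, which is not implemented here.

```python
import math


def build_kdtree(points, depth=0):
    """Build a 3D k-d tree as nested tuples (point, axis, left, right)."""
    if not points:
        return None
    axis = depth % 3
    pts = sorted(points, key=lambda p: p[axis])
    mid = len(pts) // 2
    return (pts[mid], axis,
            build_kdtree(pts[:mid], depth + 1),
            build_kdtree(pts[mid + 1:], depth + 1))


def nearest(node, target, best=None):
    """Return (distance, point) for the stored point closest to target."""
    if node is None:
        return best
    point, axis, left, right = node
    d = math.dist(point, target)
    if best is None or d < best[0]:
        best = (d, point)
    diff = target[axis] - point[axis]
    near, far = (left, right) if diff < 0 else (right, left)
    best = nearest(near, target, best)
    # Visit the far side only if the splitting plane is closer than the best hit.
    if abs(diff) < best[0]:
        best = nearest(far, target, best)
    return best
```

For example, after `tree = build_kdtree(surface_voxels)`, the call `nearest(tree, vertex)` returns the distance to, and coordinates of, the surface voxel closest to a simplex vertex.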

Figure 6.

Locating an airway-wall point. The green dot denotes the current surface point, the yellow dot denotes the candidate position, the red dot is the closest k-d tree point to the candidate position, the cyan dot is the closest airway centerline point to the candidate position, and the orange hollow dot is the correct surface voxel.

The six adjusted vertices, along with the modified pose, delineate the initial simplex for optimization step 4.

Step 4 then applies the iterative simplex algorithm to collapse the simplex around an optimal pose [43,44]. During each iteration, the cost was evaluated for all vertices. Depending on these results, geometric operations that reflect, expand, or contract the simplex vertices were performed, moving the simplex toward a minimum-cost voxel solution. The algorithm continued until the best vertex stayed unchanged for T iterations. Steps 3–4 of the top-level multimodal CT-EBUS registration algorithm were then repeated until the voxel corresponding to the optimal pose no longer changed.
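The simplex search of steps 3 and 4 can be sketched with a textbook Nelder–Mead loop (reflection, expansion, inside contraction, shrink) that stops once the best vertex is unchanged for T iterations. This is a generic Python sketch, not the authors’ adaptation: the airway-wall constraint is represented only by an optional, hypothetical `project` callback (for example, one that snaps a pose onto the nearest wall voxel), and the coefficient values are the conventional defaults rather than values from this work.

```python
def nelder_mead(cost, x0, increments, T=10, max_iter=1000,
                alpha=1.0, gamma=2.0, rho=0.5, sigma=0.5, project=None):
    """Minimize cost(pose) from x0 with a Nelder-Mead simplex.

    The initial simplex is x0 plus one vertex per parameter, each perturbed by
    its increment. The search stops when the best vertex has not changed for T
    consecutive iterations, or after max_iter iterations.
    """
    proj = project or (lambda p: p)  # hypothetical hook, e.g. snap onto airway wall
    n = len(x0)
    simplex = [proj(tuple(x0))]
    for k, delta in enumerate(increments):
        v = list(x0)
        v[k] += delta
        simplex.append(proj(tuple(v)))
    values = [cost(v) for v in simplex]

    best, unchanged = None, 0
    for _ in range(max_iter):
        # Sort vertices from best (lowest cost) to worst.
        order = sorted(range(n + 1), key=lambda i: values[i])
        simplex = [simplex[i] for i in order]
        values = [values[i] for i in order]
        if simplex[0] == best:
            unchanged += 1
            if unchanged >= T:
                break
        else:
            best, unchanged = simplex[0], 0

        worst = simplex[-1]
        centroid = [sum(v[j] for v in simplex[:-1]) / n for j in range(n)]

        def along(t):
            # Point centroid + t * (worst - centroid), projected if requested.
            return proj(tuple(c + t * (w - c) for c, w in zip(centroid, worst)))

        xr = along(-alpha)  # reflection
        fr = cost(xr)
        if fr < values[0]:
            xe = along(-alpha * gamma)  # expansion
            fe = cost(xe)
            simplex[-1], values[-1] = (xe, fe) if fe < fr else (xr, fr)
        elif fr < values[-2]:
            simplex[-1], values[-1] = xr, fr
        else:
            xc = along(rho)  # inside contraction
            fc = cost(xc)
            if fc < values[-1]:
                simplex[-1], values[-1] = xc, fc
            else:
                # Shrink every vertex toward the best one.
                b = simplex[0]
                simplex = [b] + [proj(tuple(bj + sigma * (vj - bj) for bj, vj in zip(b, v)))
                                 for v in simplex[1:]]
                values = [values[0]] + [cost(v) for v in simplex[1:]]

    i_best = min(range(n + 1), key=lambda i: values[i])
    return simplex[i_best], values[i_best]
```

For example, minimizing `(p[0] - 1) ** 2 + (p[1] + 2) ** 2` from (0, 0) with increments (0.5, 0.5) returns a point close to (1, −2). For a six-parameter pose as in (1), `x0` and `increments` would each have six entries.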

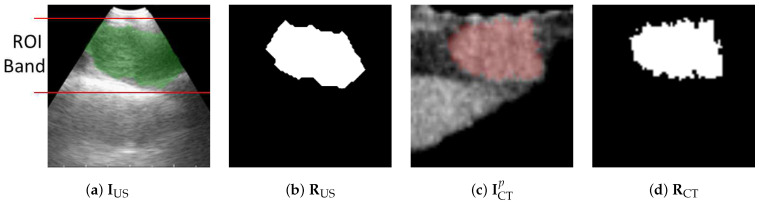

The marginal densities in (5) used by the cost function in (2)–(3) were estimated by the normalized histograms of the real and virtual EBUS views, while the joint density was given by the normalized joint histogram between the two images. These calculations, however, did not use an entire image. Since EBUS images are noisy and filled with bland, low-information regions, major portions of both views provided misleading or useless information. Instead, we first defined the smallest trapezoid that bounds the segmented ROI in the given image and stays within the EBUS’s fan-shaped scan region. This region contains the most useful findings. Next, for both views, we used only pixels within the bounding trapezoid to calculate the required histograms; see Figure 7.
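Restricting the histogram calculation to the bounding trapezoid amounts to masking pixels with a point-in-convex-polygon test before counting intensity pairs. The sketch below assumes images given as equal-sized 2D lists, pixel coordinates as (column, row), and trapezoid vertices listed in boundary order; the function names are hypothetical. The marginal histograms follow by summing the joint histogram over one index.

```python
from collections import Counter


def inside_convex(pt, poly):
    """True if pt lies inside or on the convex polygon poly (vertices in order)."""
    sign = 0
    n = len(poly)
    for i in range(n):
        (x1, y1), (x2, y2) = poly[i], poly[(i + 1) % n]
        cross = (x2 - x1) * (pt[1] - y1) - (y2 - y1) * (pt[0] - x1)
        if cross != 0:
            s = 1 if cross > 0 else -1
            if sign == 0:
                sign = s
            elif s != sign:
                return False  # point is on the outer side of some edge
    return True


def masked_pairs(img_a, img_b, trapezoid):
    """Intensity pairs (a, b) for co-located pixels inside the trapezoid."""
    pairs = []
    for r, (row_a, row_b) in enumerate(zip(img_a, img_b)):
        for c, (a, b) in enumerate(zip(row_a, row_b)):
            if inside_convex((c, r), trapezoid):
                pairs.append((a, b))
    return pairs


def joint_histogram(pairs):
    """Normalized joint histogram {(a, b): probability} over the masked pixels."""
    n = len(pairs)
    return {ab: count / n for ab, count in Counter(pairs).items()}
```

With 4 × 4 images and the trapezoid [(0, 0), (3, 0), (2, 2), (1, 2)], eight pixels fall inside (four in row 0 and two each in rows 1 and 2), so only those eight intensity pairs contribute to the histograms.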

Figure 7.

Limiting region of calculations for (5) in the cost function. (a) Trapezoidal EBUS region delineated by the lines encompassing the EBUS ROI in the real EBUS view, with (b) showing the segmented ROI. (c,d) Corresponding virtual EBUS view and predefined ROI.

For the station-10 node example of Figure 5, the final registration (Figure 5c) required 57 iterations of steps 3–5. The result confirms that the physician has settled at an effective site. Figure 3 gives another registration example for a station-4R node (case 21405-116).

2.3. Implementation

We ran all experiments on the guidance computer. For our tests, this computer was a Dell Precision T5500 64-bit Windows-based PC (dual 2.8 GHz 6-core CPUs, 24 GB RAM) powered by an Nvidia Quadro 4000 2GB PCIe graphics engine and a Matrox Vio IA/OA frame grabber. All software was implemented in C++. Many data-intensive operations were parallelized using Nvidia’s compute-unified device-architecture (CUDA) tools and OpenMP. A 24-inch Dell monitor served as the guidance system’s display. A standard Olympus Evis Exera III bronchoscopy suite was used for all tests (CV-190 video system, CLV-190 light source, EU-ME1 ultrasound unit, and display monitor). To interface the EBUS bronchoscope to the guidance computer, we connected a BNC-to-BNC cable from the bronchoscope display monitor’s PnP (picture-in-picture) video output to the guidance computer’s Matrox video input. This gave access to the live video streams from the EBUS bronchoscope (both the bronchoscopic video and EBUS).

3. Results

Section 3.1 tests the efficacy of our virtual-to-real EBUS registration method, whereas Section 3.2 presents example results using our method within a complete image-guided EBUS bronchoscopy system.

3.1. CT-EBUS Registration Study

We developed and tested our method using data collected retrospectively from 10 lung-cancer patients. These data were collected at our University’s lung cancer management clinic through two IRB-approved study protocols under informed consent. Chest CT scans were generated by Siemens CT scanners. Each scan was made up of 512 × 512 axial-plane sections (number of sections per scan: 570 to 720), with section thickness = 0.75 mm, section spacing = 0.5 mm, and axial-plane resolution ranging from 0.60 mm to 0.81 mm. Seven studies drew upon a standard definition EBUS bronchoscope video feed giving 300 × 300 EBUS views, and three studies drew upon a high-definition video feed giving 816 × 848 EBUS views. Over the 10 patients, 28 ROIs were predefined, with a typical long axis length >15 mm: 27 lymph nodes and 1 azygos vein. The lymph nodes were distributed over the nodal stations as follows: station 4, 10 nodes; station 7, 9 nodes; station 10, 5 nodes; and station 11, 3 nodes.

To define the ground truth for each ROI, we first picked a 2D EBUS frame that depicts the ROI. Next, we performed 2D EBUS segmentation using Zang’s method to extract the ROI in this frame [42]. Finally, we established the ground-truth virtual EBUS view best matching the EBUS frame. To achieve this, we started at the final pose of the ROI’s precomputed optimal airway route in CT-based virtual space [25]. We then manually moved the virtual EBUS bronchoscope around this pose to locate a ground-truth pose whose virtual EBUS view most closely mimics the anatomical appearance shown in the EBUS frame.

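To perform the test for each ROI, we began by running the registration method at the initial pose $\mathbf{p}_0$ in virtual space. Upon convergence, the method returned a virtual EBUS view at an optimal pose $\mathbf{p}^{*}$. Let $\mathbf{x}^{*}$ and $\mathbf{x}_G$ represent the 3D positions, and let $\mathbf{d}^{*}$ and $\mathbf{d}_G$ denote the unit direction vectors, of $\mathbf{p}^{*}$ and the ground-truth pose $\mathbf{p}_G$, respectively. To quantify the registration performance, we compared $\mathbf{p}^{*}$ and $\mathbf{p}_G$ using three metrics, as suggested in [14]:

1. Position difference $e_p$, which measures the Euclidean distance between $\mathbf{x}^{*}$ and $\mathbf{x}_G$:

   $$e_p = \left\| \mathbf{x}^{*} - \mathbf{x}_G \right\| \tag{8}$$

2. Direction error $e_d$, which gives the angle between $\mathbf{d}^{*}$ and $\mathbf{d}_G$:

   $$e_d = \cos^{-1}\left( \mathbf{d}^{*} \cdot \mathbf{d}_G \right) \tag{9}$$

3. Needle difference $e_n$, which indicates the distance between the two extended needle tips at $\mathbf{p}^{*}$ and $\mathbf{p}_G$:

   $$e_n = \left\| \left( \mathbf{x}^{*} + l\,\mathbf{d}^{*} \right) - \left( \mathbf{x}_G + l\,\mathbf{d}_G \right) \right\| \tag{10}$$

   where $l$ is the needle extension length.

Regarding these metrics, $e_p$ indicates the positional error of the bronchoscope on the airway surface, $e_d$ quantifies the orientation error, and $e_n$ measures the potential biopsy error.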
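The three metrics (8)–(10) reduce to a few lines of vector arithmetic. This Python sketch assumes that each extended needle tip lies a fixed length along its pose’s direction vector; the needle length is a caller-supplied, hypothetical parameter, and the function names are illustrative.

```python
import math


def position_difference(x_opt, x_gt):
    """Position difference (8): Euclidean distance between probe positions (mm)."""
    return math.dist(x_opt, x_gt)


def direction_error_deg(d_opt, d_gt):
    """Direction error (9): angle between the two direction vectors, in degrees."""
    cos_t = sum(a * b for a, b in zip(d_opt, d_gt)) / (math.hypot(*d_opt) * math.hypot(*d_gt))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))  # clamp for rounding


def needle_difference(x_opt, d_opt, x_gt, d_gt, needle_len_mm):
    """Needle difference (10): distance between needle tips extended along each direction."""
    def tip(x, d):
        n = math.hypot(*d)
        return tuple(xi + needle_len_mm * di / n for xi, di in zip(x, d))
    return math.dist(tip(x_opt, d_opt), tip(x_gt, d_gt))
```

For two poses at the same position whose directions differ by 90°, the position difference is 0, the direction error is 90°, and with a 10-mm needle the needle difference is √200 ≈ 14.1 mm, which illustrates how angular error is amplified at the needle tip.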

For the 28-ROI test set, the registration method correctly localized 28/28 ROIs (100%), with an average registration time of 3.4 s, which represents the time to complete the iterative simplex optimization for (7) after segmenting the ROI in the captured EBUS frame. (We chose for this test.) Table 1 gives the aggregate performance for the error metrics. Lastly, Figure 8 illustrates two registration examples. The results support the effectiveness of the method.

Table 1.

Registration performance over the 28-ROI test set.

| Metric | Mean ± Std. Dev. | [Low, High] |
|---|---|---|
| Position difference (mm) | 2.2 ± 2.3 | [0.2, 11.8] |
| Needle difference (mm) | 4.3 ± 3.0 | [1.1, 11.7] |
| Direction error (°) | 11.8 ± 8.8 | [0.4, 41.3] |

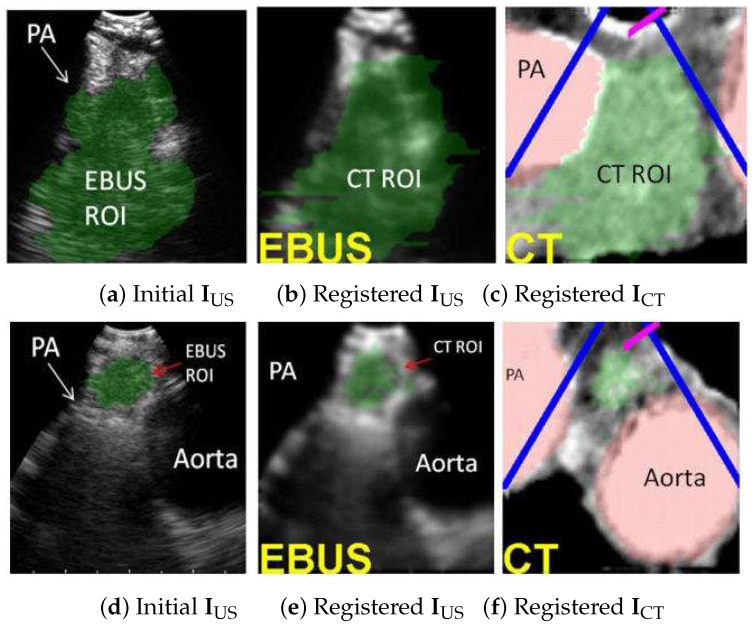

Figure 8.

CT-EBUS registration examples. (a–c) Station 4L node for case 20349-3-84. (d–f) Station 4L node for case 21405-108. Parts (a,d) show the automatically segmented ROI in EBUS frame using [42]. Parts (b,e) show the registered CT-based predefined ROI superimposed on . Parts (c,f) depict the CT-based virtual EBUS view after registration. In all views, the green region corresponds to the lymph node, whereas the red regions represent major vessels (PA = pulmonary artery).

We next performed a parameter sensitivity test for the registration method. For the test, we first randomly picked one ROI from each human case used in the 28-ROI study. We then performed tests on these 10 ROIs, whereby one search parameter was varied over a given range:

1. The three positional parameters of the pose: range [−10 mm, 10 mm], step size = 2.5 mm.

2. The three Euler-angle parameters of the pose: range [−100°, 100°], step size = 25°.

3. Iteration parameter T: from 5 to 25, step size = 5.

(The angle range accounts for the physician’s limitations in twisting the bronchoscope [46]). For each test, one parameter was changed, whereas all others were held at the default values. Each run always started at the ground truth pose that terminates an ROI’s derived optimal path.

Table 2, Table 3 and Table 4 summarize the results for three parameters; results for the other parameters are similar. All results are given over the 10-ROI test set. As a disclaimer, we point out that technician judgment was required in interactively deriving the “best matching” virtual EBUS bronchoscope views serving as the ground truth. As a result of the inherent degradations in EBUS views (broken edges, speckle, low detail content), it was difficult to locate the single pose for which the virtual EBUS view “best” matches the real EBUS frame. This adds bias to our ground truth. In addition, ambiguity can exist when registering a 2D EBUS image to a 3D CT subvolume because the lymph nodes often have approximately spherical/elliptical 3D shapes. Thus, results can vary over different initial conditions.

Table 2.

Registration sensitivity to variation in one positional parameter.

| Value (mm) | Bronchoscope position error (mm), mean ± SD | Range | Needle error (mm), mean ± SD | Range | Angle error (°), mean ± SD | Range |
|---|---|---|---|---|---|---|
| −10.0 | 3.7 ± 3.4 | [1.1, 11.7] | 7.3 ± 5.1 | [2.1, 14.6] | 18.1 ± 11.1 | [4.6, 38.3] |
| −7.5 | 4.3 ± 3.3 | [1.8, 12.2] | 8.4 ± 5.3 | [2.5, 18.7] | 21.2 ± 15.2 | [6.0, 55.9] |
| −5.0 | 4.5 ± 3.5 | [1.3, 12.2] | 6.4 ± 4.0 | [1.5, 11.4] | 18.9 ± 12.7 | [5.9, 45.8] |
| −2.5 | 4.6 ± 3.1 | [1.0, 10.2] | 7.8 ± 4.0 | [2.1, 14.4] | 22.5 ± 14.5 | [6.2, 46.5] |
| 0.0 | 3.7 ± 3.2 | [1.4, 10.5] | 7.8 ± 3.9 | [2.7, 14.4] | 22.8 ± 12.9 | [8.8, 46.2] |
| 2.5 | 4.0 ± 2.8 | [0.9, 9.4] | 8.5 ± 6.7 | [1.4, 20.9] | 25.9 ± 19.4 | [5.7, 61.5] |
| 5.0 | 3.8 ± 4.5 | [0.6, 14.5] | 6.4 ± 4.8 | [2.0, 14.9] | 19.3 ± 12.1 | [5.9, 41.8] |
| 7.5 | 2.8 ± 3.8 | [0.3, 11.8] | 4.8 ± 4.5 | [1.1, 11.7] | 10.8 ± 8.8 | [0.4, 22.9] |
| 10.0 | 3.8 ± 3.9 | [1.1, 12.0] | 5.7 ± 4.1 | [1.6, 11.7] | 16.0 ± 9.8 | [5.5, 30.3] |

Table 3.

Registration sensitivity to variation in one angle parameter.

| Value (°) | Bronchoscope position error (mm), mean ± SD | Range | Needle error (mm), mean ± SD | Range | Angle error (°), mean ± SD | Range |
|---|---|---|---|---|---|---|
| −100.0 | 6.6 ± 4.2 | [2.2, 13.4] | 9.0 ± 4.1 | [5.4, 14.5] | 25.0 ± 12.6 | [7.1, 41.2] |
| −75.0 | 4.8 ± 4.0 | [1.2, 11.5] | 8.6 ± 4.8 | [3.3, 16.2] | 24.2 ± 11.5 | [8.0, 40.4] |
| −50.0 | 5.0 ± 4.6 | [1.3, 14.9] | 6.7 ± 3.2 | [2.8, 12.3] | 17.3 ± 8.8 | [5.3, 28.2] |
| −25.0 | 3.6 ± 3.6 | [0.6, 11.2] | 8.2 ± 6.2 | [1.3, 20.1] | 23.3 ± 13.6 | [5.2, 50.8] |
| 0.0 | 4.4 ± 3.6 | [1.0, 11.8] | 8.0 ± 2.4 | [5.5, 12.0] | 26.3 ± 10.3 | [15.9, 47.9] |
| 25.0 | 3.5 ± 2.9 | [1.0, 9.9] | 7.0 ± 3.4 | [2.1, 13.4] | 20.1 ± 11.0 | [7.8, 36.0] |
| 50.0 | 2.8 ± 3.8 | [0.3, 11.8] | 4.8 ± 4.5 | [1.1, 11.7] | 10.8 ± 8.8 | [0.4, 22.9] |
| 75.0 | 3.1 ± 3.2 | [0.9, 10.4] | 6.1 ± 2.8 | [3.0, 11.5] | 16.5 ± 9.5 | [5.6, 29.4] |
| 100.0 | 3.6 ± 3.2 | [1.0, 11.0] | 5.0 ± 4.1 | [1.4, 10.5] | 19.0 ± 11.5 | [8.1, 43.7] |

Table 4.

Registration sensitivity to variation in the iteration number T. Time denotes the computation time needed to complete the optimization.

| T | Bronchoscope position error (mm), mean ± SD | Range | Needle error (mm), mean ± SD | Range | Angle error (°), mean ± SD | Range | Time (s) |
|---|---|---|---|---|---|---|---|
| 5 | 3.0 ± 4.2 | [0.9, 13.2] | 5.9 ± 5.2 | [2.0, 17.7] | 17.3 ± 9.3 | [6.4, 36.0] | 1.3 |
| 10 | 2.8 ± 3.8 | [0.9, 11.8] | 5.3 ± 4.2 | [1.3, 11.7] | 13.6 ± 7.3 | [1.3, 21.9] | 2.6 |
| 15 | 2.8 ± 3.8 | [0.3, 11.8] | 4.8 ± 4.5 | [1.1, 11.7] | 10.8 ± 8.8 | [0.4, 22.9] | 3.4 |
| 20 | 3.2 ± 3.9 | [0.3, 11.8] | 4.4 ± 3.9 | [1.1, 11.7] | 9.6 ± 7.5 | [0.4, 20.5] | 5.0 |
| 25 | 2.7 ± 3.8 | [0.3, 11.8] | 4.2 ± 3.7 | [1.1, 11.7] | 9.8 ± 7.6 | [0.4, 20.5] | 8.0 |
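The diminishing return from additional iterations can be read off Table 4 by dividing the drop in mean angle error by the added computation time between consecutive settings of T. The snippet below does this arithmetic on the table's own values; using the angle error as the yardstick, and the helper name `marginal_gain`, are our illustrative choices, not the paper's.

```python
# Mean values copied from Table 4 (10-ROI test set).
T_values = [5, 10, 15, 20, 25]
angle_err_deg = [17.3, 13.6, 10.8, 9.6, 9.8]
time_s = [1.3, 2.6, 3.4, 5.0, 8.0]


def marginal_gain(errors, times):
    """Reduction in mean error per extra second between consecutive settings."""
    return [
        (errors[i] - errors[i + 1]) / (times[i + 1] - times[i])
        for i in range(len(errors) - 1)
    ]


gains = marginal_gain(angle_err_deg, time_s)
for (t_lo, t_hi), g in zip(zip(T_values, T_values[1:]), gains):
    print(f"T {t_lo:>2} -> {t_hi:>2}: {g:+.2f} deg per extra second")
```

The gain is roughly 2.8 and 3.5°/s up to T = 15, about 0.75°/s from 15 to 20, and slightly negative from 20 to 25, which agrees with the observation that little is gained beyond 15 iterations.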

Overall, the initial step sizes, which affect the size of the initial simplex, had minimal impact on performance. The mean bronchoscope position and needle errors generally stayed under 5 mm and 9 mm, respectively, which is smaller than a typical clinically relevant lymph node (long axis > 10 mm). The mean angle error likewise fluctuated around or below 25°. The larger errors are attributable to the ambiguity noted above. Increasing T beyond five iterations did improve performance. The gain from 15 to 25 iterations was less significant, however, especially considering that the mean execution time increased from 3.4 s to 8.0 s. Hence, for the previous 28-ROI study and all later human studies, we chose T = 15 as the default iteration number.
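The link between step sizes and the initial simplex can be illustrated with the textbook Nelder–Mead initialization [43]. The sketch below assumes a 6-parameter pose (three positions in mm, three angles in degrees); the paper's exact simplex construction is not given in this section, and `initial_simplex` and the step values are hypothetical.

```python
def initial_simplex(x0, steps):
    """Return the n + 1 vertices of an axis-aligned Nelder-Mead start simplex.

    Vertex 0 is the starting pose x0; vertex i (i >= 1) offsets coordinate
    i - 1 by steps[i - 1], so larger |steps| give a larger initial simplex.
    """
    if len(x0) != len(steps):
        raise ValueError("x0 and steps must have the same length")
    simplex = [list(x0)]
    for i, step in enumerate(steps):
        vertex = list(x0)
        vertex[i] += step
        simplex.append(vertex)
    return simplex


# Hypothetical start: the ground-truth pose expressed as zero offsets.
x0 = [0.0] * 6                              # (x, y, z) in mm, 3 angles in degrees
steps = [7.5, 7.5, 7.5, 50.0, 50.0, 50.0]   # assumed step sizes
simplex = initial_simplex(x0, steps)
print(len(simplex))  # 7 vertices for a 6-parameter search
```

If SciPy is used, vertices built this way can be passed to `scipy.optimize.minimize(..., method="Nelder-Mead", options={"initial_simplex": simplex, "maxiter": T})`; treating T as the optimizer's iteration cap is an assumption.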

For the 28-ROI test, the mean registration time was 3.4 s ± 1.6 s (range, [1.9 s, 10.0 s]). The registration time depends somewhat on node size. In particular, the two largest nodes considered required the longest registration times: (1) 10.0 s for a station 7 node (long axis = 3.1 cm); and (2) 7.9 s for a station 4R node with all axes ≥ 1.6 cm (long axis = 2.7 cm). Excluding these two outliers, the mean registration time for the other 26 test nodes was 3.0 s ± 0.5 s (range, [1.9 s, 4.1 s]). As the Discussion indicates, these times are acceptable for real usage. Overall, these results support the method's robustness.
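The reported means are mutually consistent: removing the two outlier times from the 28-node total and dividing by the remaining 26 nodes recovers the reported 3.0 s. Because the 3.4 s overall mean is itself rounded, this check is only approximate.

```python
n_nodes = 28
mean_all_s = 3.4            # reported mean over all 28 nodes (rounded)
outliers_s = [10.0, 7.9]    # the two largest nodes

total_s = n_nodes * mean_all_s
mean_rest_s = (total_s - sum(outliers_s)) / (n_nodes - len(outliers_s))
print(f"{mean_rest_s:.2f} s")  # 2.97 s, i.e., about 3.0 s
```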

3.2. Image-Guided EBUS Bronchoscopy System

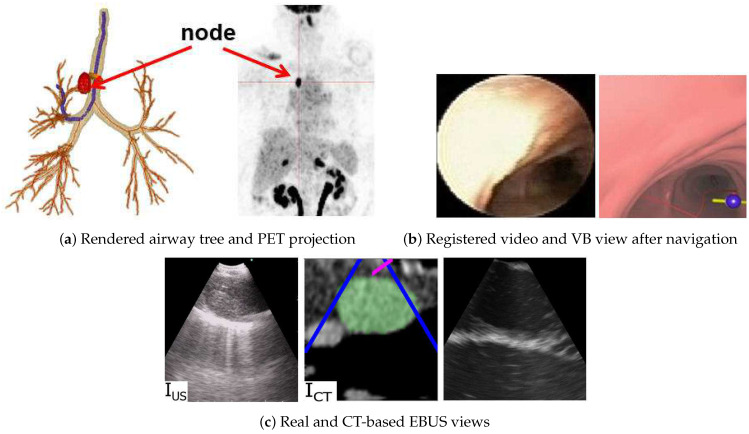

The registration method has been integrated into a complete image-guided EBUS bronchoscopy system, described more fully in [26]. Figure 9 illustrates a retrospective example of system guidance for a 68-year-old female lung cancer patient presenting a station 4R lymph node. Both a high-resolution chest CT and a whole-body PET/CT study were available. The chest CT, produced by a Siemens SOMATOM Definition Flash, has in-plane voxel spacing 0.77 mm, slice spacing 0.5 mm, and volume dimensions 512 × 512 × 570, while the PET/CT study, generated by a Philips TrueFlight integrated scanner, provided a PET scan with in-plane voxel spacing 4 mm, slice spacing 3 mm, and volume dimensions 144 × 144 × 284. The example depicts both the videobronchoscopic navigation and EBUS localization guidance phases. Figure 9c shows successful registration during EBUS localization of the node. Figure 9c also depicts a CT-based simulated EBUS view, generated by the method in [47]. While CT-EBUS registration is performed using the CT-based virtual EBUS view, the supplemental simulated EBUS view, which strongly resembles the real EBUS view, adds confidence that the real EBUS probe has reached the intended position.

Figure 9.

Image-guided EBUS bronchoscopy for a station 4R lymph node for patient 21405-116. (a) 3D airway tree rendering and whole-body PET projection image indicating the target lymph node (red). (b) Registered real video and VB view after navigation. (c) Registered real EBUS view, virtual EBUS view (green region = node), and CT-based simulated EBUS view, respectively, at the final site.
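The volume dimensions and voxel spacings quoted for this example determine each scan's physical field of view. The sketch below assumes that the first spacing value (0.77 mm for CT, 4 mm for PET) applies to both in-plane axes and the second (0.5 mm, 3 mm) to the axial direction; under that assumption the chest CT spans roughly 39 cm in-plane and 28.5 cm axially, whereas the PET volume spans about 85 cm axially, as expected for a whole-body study. The helper name `field_of_view_mm` is hypothetical.

```python
def field_of_view_mm(dims, spacing_mm):
    """Physical extent along (x, y, z): voxel count times voxel spacing."""
    return tuple(round(n * d, 2) for n, d in zip(dims, spacing_mm))


# Assumed spacing order: (in-plane x, in-plane y, axial z).
chest_ct = field_of_view_mm((512, 512, 570), (0.77, 0.77, 0.5))
pet = field_of_view_mm((144, 144, 284), (4.0, 4.0, 3.0))
print(chest_ct)  # (394.24, 394.24, 285.0)
print(pet)       # (576.0, 576.0, 852.0)
```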

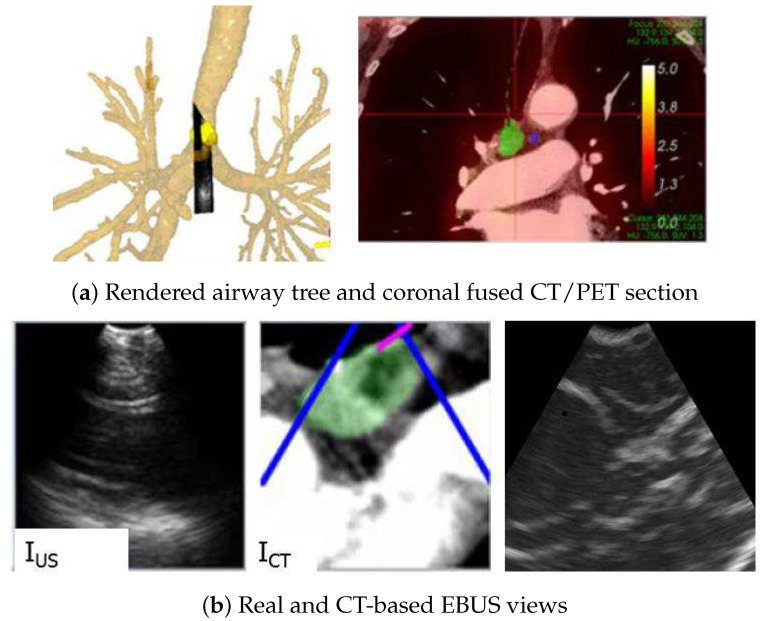

Figure 10 gives a second example, from a live prospective guided procedure for a 77-year-old female lung cancer patient presenting a station 4R node. As in the previous example, both a high-resolution chest CT and a whole-body PET/CT study were available. The chest CT, produced by a Siemens SOMATOM Definition Flash, has in-plane voxel spacing 0.57 mm, slice spacing 0.5 mm, and volume dimensions 512 × 512 × 663, while the PET/CT study, again generated by a Philips TrueFlight integrated scanner, provided a PET scan with in-plane voxel spacing 4 mm, slice spacing 3 mm, and volume dimensions 144 × 144 × 284. The composite display during EBUS localization indicates that the EBUS bronchoscope has reached the target node. In addition, the virtual and real EBUS views align well, and the simulated view corroborates the expected appearance of the real view.

Figure 10.

Image-guided EBUS bronchoscopy for a station 4R lymph node for patient 20349-3-87. (a) 3D airway tree rendering and coronal fused CT/PET section indicating the target lymph node (the color scale bar indicates PET SUV values). (b) Registered real EBUS view, virtual EBUS view (green region = node), and CT-based simulated EBUS view, respectively, at the final site.

4. Discussion

Lung cancer remains the leading cause of cancer death, with a mortality rate >85% [48]. Staging the chest lymph nodes via EBUS bronchoscopy is one of the major steps in managing a lung cancer patient. While physicians can readily navigate the device to the vicinity of a lymph node (i.e., get into the right "room," the airway), their subsequent attempt to localize the node with EBUS and perform an adequate biopsy (i.e., hit the right wall in the room, the airway wall) is well known to be problematic.

We proposed a near real-time CT-EBUS registration method that facilitates an accurate EBUS-based examination of a lymph node during EBUS bronchoscopy. In particular, after the physician navigates the bronchoscope into the lymph node's vicinity, the method enables accurate image-guided EBUS probe placement; i.e., the physician can immediately localize the lymph node without the uncertainty encountered in standard EBUS usage. Laboratory results using data from lung cancer patients demonstrate the registration method's robust performance.

The method has also been integrated into a complete image-guided EBUS bronchoscopy system designed for chest nodal staging, as demonstrated by the system examples. A companion paper by Zang et al. gives more detail on the system's guidance and display capabilities [26]. As discussed in that paper, the system's functionality and feasibility have been validated in both retrospective and prospective human studies at our University Hospital. In the 13-patient prospective study, run within our hospital's standard clinical workflow, 100% of preselected target lymph nodes were successfully localized using the system, a strong indicator of our registration method's efficacy. The mean time for performing image-guided EBUS for a particular node was 87.4 s, with a mean total procedure time of 6 min 43 s (4.61 nodes per patient). This time includes device navigation, EBUS localization, EBUS segmentation, and final registration, but excludes biopsy time. In addition, the system appeared to be safe and feasible in the live clinical setting, with no adverse events reported. Complete details of this study appear in [26].
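The per-node and per-patient times are consistent. Assuming the 87.4 s mean is taken over all nodes, the mean time per patient equals the per-node mean multiplied by the mean number of nodes per patient, because both averages share the same total time. A quick check, rounding to whole seconds:

```python
per_node_s = 87.4          # mean image-guided EBUS time per node
nodes_per_patient = 4.61   # mean number of nodes per patient

per_patient_s = per_node_s * nodes_per_patient
minutes, seconds = divmod(round(per_patient_s), 60)
print(f"{minutes} min {seconds} s")  # 6 min 43 s
```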

While these results point to the potential practicality of the method for live clinical practice, a larger multi-center study is needed to more fully ascertain our methodology's utility for enabling more efficacious EBUS-based nodal staging. One notable limitation is the need to segment ROIs live in the captured real EBUS frame; some ROIs required multiple segmentation attempts. Nevertheless, the prospective study gave an acceptable mean time of 18.1 s to segment an ROI in a real EBUS frame (part of the 87.4 s mean time per node). Finally, the system still needs to be integrated into an approved system meeting FDA quality standards.

As an additional point, for all lymph nodes considered in our studies, the physician specified the nodal station labels while selecting target lymph nodes on CT during procedure planning. Hence, during the later image-guided EBUS bronchoscopy procedure, registering a node scanned by the real EBUS probe to the target CT-based node not only confirms the node's 3D physical location but also implicitly confirms the real node's station label. Although not part of this paper, we previously devised methods for automatically defining the nodal stations and assigning station labels to lymph nodes identified in CT; e.g., [49,50].

We also readily adapted the registration method to the newer Olympus BF-UC190F EBUS bronchoscope by simply adjusting the scope tip specifications [51]. With a similar adjustment, other related devices, such as a recently proposed thinner EBUS bronchoscope capable of reaching deeper into the airway tree [52], could also be guided using our methodology. Finally, our methodology could help drive robotics-based bronchoscopy systems, which currently offer assistance for the bronchoscope only [10].
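Adapting the method to another EBUS bronchoscope by adjusting the scope tip specifications suggests that device-specific geometry is confined to a few parameters. The sketch below illustrates that idea for the 2D fan-shaped EBUS view only. The `ConvexProbeSpec` fields, the point-apex fan model (a real convex transducer is curved), and all numbers are hypothetical placeholders, not vendor specifications or the paper's parameter set.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ConvexProbeSpec:
    """Hypothetical tip geometry; values used below are placeholders."""
    fan_angle_deg: float   # full opening angle of the fan-shaped scan
    max_depth_mm: float    # imaging depth measured from the fan apex


def in_fan(spec: ConvexProbeSpec, lateral_mm: float, axial_mm: float) -> bool:
    """True if a point in the scan plane lies inside the fan.

    Coordinates are relative to the fan apex: axial_mm runs along the fan's
    center line and lateral_mm runs perpendicular to it in the scan plane.
    """
    depth = math.hypot(lateral_mm, axial_mm)
    off_axis_deg = math.degrees(math.atan2(abs(lateral_mm), axial_mm))
    return depth <= spec.max_depth_mm and off_axis_deg <= spec.fan_angle_deg / 2


# Two placeholder scopes that differ only in fan angle.
narrow = ConvexProbeSpec(fan_angle_deg=50.0, max_depth_mm=40.0)
wide = ConvexProbeSpec(fan_angle_deg=70.0, max_depth_mm=40.0)

# A point 20 mm from the apex, 30 degrees off the center line.
lat = 20.0 * math.sin(math.radians(30.0))
ax = 20.0 * math.cos(math.radians(30.0))
print(in_fan(narrow, lat, ax), in_fan(wide, lat, ax))  # False True
```

Keeping such geometry in a single specification object means that switching scopes changes data rather than code, which is the kind of "simple adjustment" the text describes.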

Author Contributions

Conceptualization, X.Z., W.Z. and W.H.; methodology, X.Z., W.Z. and W.H.; software, X.Z. and W.Z.; validation, X.Z., W.Z., J.T., R.B. and W.H.; formal analysis, X.Z. and W.Z.; investigation, X.Z., W.Z., J.T., R.B. and W.H.; resources, J.T., R.B. and W.H.; data curation, X.Z., W.Z., R.B. and W.H.; writing—original draft preparation, X.Z., W.Z., J.T., R.B. and W.H.; writing—review and editing, X.Z., W.Z. and W.H.; visualization, X.Z., W.Z. and W.H.; supervision, R.B. and W.H.; project administration, R.B. and W.H.; funding acquisition, W.H. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board of Penn State University, Hershey, PA (protocol 21405, approval date July 2021).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Conflicts of Interest

William E. Higgins and Penn State have an identified conflict of interest and financial interest related to this research. These interests have been reviewed by the University’s Institutional and Individual Conflict of Interest Committees and are currently being managed by the University and reported to the NIH.

Funding Statement

This research was funded by NIH National Cancer Institute grant R01-CA151433.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Wahidi M., Herth F., Chen A., Cheng G., Yarmus L. State of the Art: Interventional Pulmonology. Chest. 2020;157:724–736. doi: 10.1016/j.chest.2019.10.013.

2. Avasarala S.K., Aravena C., Almeida F.A. Convex probe endobronchial ultrasound: Historical, contemporary, and cutting-edge applications. J. Thorac. Dis. 2020;12:1085–1099. doi: 10.21037/jtd.2019.10.76.

3. Sheski F., Mathur P. Endobronchial Ultrasound. Chest. 2008;133:264–270. doi: 10.1378/chest.06-1735.

4. Kinsey C.M., Arenberg D.A. Endobronchial Ultrasound–guided Transbronchial Needle Aspiration for Non–Small Cell Lung Cancer Staging. Am. J. Respir. Crit. Care Med. 2014;189:640–649. doi: 10.1164/rccm.201311-2007CI.

5. Fernández-Villar A., Leiro-Fernández V., Botana-Rial M., Represas-Represas C., Núñez-Delgado M. The endobronchial ultrasound-guided transbronchial needle biopsy learning curve for mediastinal and hilar lymph node diagnosis. Chest. 2012;141:278–279. doi: 10.1378/chest.11-1986.

6. Ernst A., Herth F.J. Endobronchial Ultrasound: An Atlas and Practical Guide. Springer Science & Business Media; New York, NY, USA: 2009.

7. Davoudi M., Colt H., Osann K., Lamb C., Mullon J. Endobronchial ultrasound skills and tasks assessment tool. Am. J. Respir. Crit. Care Med. 2012;186:773–779. doi: 10.1164/rccm.201111-1968OC.

8. Folch E., Majid A. Point: Are > 50 supervised procedures required to develop competency in performing endobronchial ultrasound-guided transbronchial needle aspiration for mediastinal staging? Yes. Chest. 2013;143:888–891. doi: 10.1378/chest.12-2462.

9. Reynisson P.J., Leira H.O., Hernes T.N., Hofstad E.F., Scali M., Sorger H., Amundsen T., Lindseth F., Langø T. Navigated bronchoscopy: A technical review. J. Bronchol. Interv. Pulmonol. 2014;21:242–264. doi: 10.1097/LBR.0000000000000064.

10. Criner G.J., Eberhardt R., Fernandez-Bussy S., Gompelmann D., Maldonado F., Patel N., Shah P.L., Slebos D.J., Valipour A., Wahidi M.M., et al. Interventional Bronchoscopy: State-of-the-Art Review. Am. J. Respir. Crit. Care Med. 2020;202:29–50. doi: 10.1164/rccm.201907-1292SO.

11. Vining D.J., Liu K., Choplin R.H., Haponik E.F. Virtual bronchoscopy: Relationships of virtual reality endobronchial simulations to actual bronchoscopic findings. Chest. 1996;109:549–553. doi: 10.1378/chest.109.2.549.

12. Mori K., Hasegawa J., Toriwaki J., Anno H., Katada K. Recognition of bronchus in three dimensional X-Ray CT images with application to virtualized bronchoscopy system; Proceedings of the 13th International Conference on Pattern Recognition; Vienna, Austria. 25–29 August 1996; pp. 528–532.

13. Higgins W.E., Ramaswamy K., Swift R., McLennan G., Hoffman E.A. Virtual bronchoscopy for 3D pulmonary image assessment: State of the art and future needs. Radiographics. 1998;18:761–778. doi: 10.1148/radiographics.18.3.9599397.

14. Merritt S., Khare R., Bascom R., Higgins W. Interactive CT-Video Registration for Image-Guided Bronchoscopy. IEEE Trans. Med. Imaging. 2013;32:1376–1396. doi: 10.1109/TMI.2013.2252361.

15. Wahidi M.M., Hulett C., Pastis N., Shepherd R.W., Shofer S.L., Mahmood K., Lee H., Malhotra R., Moser B., Silvestri G.A. Learning experience of linear endobronchial ultrasound among pulmonary trainees. Chest. 2014;145:574–578. doi: 10.1378/chest.13-0701.

16. Merritt S.A., Gibbs J.D., Yu K.C., Patel V., Rai L., Cornish D.C., Bascom R., Higgins W.E. Image-Guided Bronchoscopy for Peripheral Lung Lesions: A Phantom Study. Chest. 2008;134:1017–1026. doi: 10.1378/chest.08-0603.

17. Ost D.E., Ernst A., Lei X., Feller-Kopman D., Eapen G.A., Kovitz K.L., Herth F.J., Simoff M. Diagnostic yield of endobronchial ultrasound-guided transbronchial needle aspiration: Results of the AQuIRE Bronchoscopy Registry. Chest. 2011;140:1557–1566. doi: 10.1378/chest.10-2914.

18. Ost D.E., Ernst A., Lei X., Kovitz K.L., Benzaquen S., Diaz-Mendoza J., Greenhill S., Toth J., Feller-Kopman D., Puchalski J., et al. Diagnostic Yield and Complications of Bronchoscopy for Peripheral Lung Lesions. Results of the AQuIRE Registry. Am. J. Respir. Crit. Care Med. 2016;193:68–77. doi: 10.1164/rccm.201507-1332OC.

19. Sato M., Chen F., Aoyama A., Yamada T., Ikeda M., Bando T., Date H. Virtual endobronchial ultrasound for transbronchial needle aspiration. J. Thorac. Cardiovasc. Surg. 2013;146:1204–1212. doi: 10.1016/j.jtcvs.2013.01.019.

20. Sorger H., Hofstad E.F., Amundsen T., Langø T., Leira H.O. A novel platform for electromagnetic navigated ultrasound bronchoscopy (EBUS). Int. J. Comput. Assist. Radiol. Surg. 2016;11:1431–1443. doi: 10.1007/s11548-015-1326-7.

21. Sorger H., Hofstad E., Amundsen T., Lango T., Bakeng J., Leira H. A multimodal image guiding system for Navigated Ultrasound Bronchoscopy (EBUS): A human feasibility study. PLoS ONE. 2017;12:e0171841. doi: 10.1371/journal.pone.0171841.

22. Tamiya M., Okamoto N., Sasada S., Shiroyama T., Morishita N., Suzuki H., Yoshida E., Hirashima T., Kawahara K., Kawase I. Diagnostic yield of combined bronchoscopy and endobronchial ultrasonography, under LungPoint guidance for small peripheral pulmonary lesions. Respirology. 2013;18:834–839. doi: 10.1111/resp.12095.

23. Luo X., Mori K. Beyond Current Guided Bronchoscopy: A Robust and Real-Time Bronchoscopic Ultrasound Navigation System. In: Mori K., Sakuma I., Sato Y., Barillot C., Navab N., editors. MICCAI 2013 Lecture Notes in Computer Science. Volume 8149. Springer; Berlin/Heidelberg, Germany: 2013. pp. 388–395.

24. Asano F. Practical Application of Virtual Bronchoscopic Navigation. In: Mehta A., Jain P., editors. Interventional Bronchoscopy. Humana; Totowa, NJ, USA: 2013. pp. 121–140.

25. Zang X., Gibbs J., Cheirsilp R., Byrnes P., Toth J., Bascom R., Higgins W. Optimal Route Planning for Image-Guided EBUS Bronchoscopy. Comput. Biol. Med. 2019;112:103361. doi: 10.1016/j.compbiomed.2019.103361.

26. Zang X., Cheirsilp R., Byrnes P.D., Kuhlengel T.K., Abendroth C., Allen T., Mahraj R., Toth J., Bascom R., Higgins W.E. Image-guided EBUS bronchoscopy system for lung-cancer staging. Inform. Med. Unlocked. 2021;25:1–13. doi: 10.1016/j.imu.2021.100665.

27. Huang X., Moore J., Guiraudon G., Jones D.L., Bainbridge D., Ren J., Peters T.M. Dynamic 2D Ultrasound and 3D CT Image Registration of the Beating Heart. IEEE Trans. Med. Imaging. 2009;28:1179–1189. doi: 10.1109/TMI.2008.2011557.

28. Kaar M., Hoffmann R., Bergmann H., Figl M., Bloch C., Kratochwil A., Birkfellner W., Hummel J. Comparison of two navigation system designs for flexible endoscopes using abdominal 3D ultrasound; Proceedings of the SPIE Medical Imaging 2011; Lake Buena Vista, FL, USA. 13–17 February 2011; pp. 18–25.

29. Rueckert D., Clarkson M.J., Hill D.L.G., Hawkes D.J. Non-rigid registration using higher-order mutual information; Proceedings of the SPIE Medical Imaging 2000; San Diego, CA, USA. 18–29 March 2000; pp. 438–447.

30. Sotiras A., Davatzikos C., Paragios N. Deformable Medical Image Registration: A survey. IEEE Trans. Med. Imaging. 2013;32:1153–1190. doi: 10.1109/TMI.2013.2265603.

31. Studholme C., Hill D.L.G., Hawkes D.J. An overlap invariant entropy measure of 3D medical image alignment. Pattern Recognit. 1999;32:71–86. doi: 10.1016/S0031-3203(98)00091-0.

32. Studholme C., Drapaca C., Iordanova B., Cardenas V. Deformation-based mapping of volume change from serial brain MRI in the presence of local tissue contrast change. IEEE Trans. Med. Imaging. 2006;25:626–639. doi: 10.1109/TMI.2006.872745.

33. Knops Z.F., Maintz J.B.A., Viergever M.A., Pluim J.P.W. Registration using segment intensity remapping and mutual information; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Saint-Malo, France. 26–29 September 2004; pp. 805–812.

34. Gibbs J., Graham M.W., Bascom R., Cornish D., Khare R., Higgins W. Optimal procedure planning and guidance system for peripheral bronchoscopy. IEEE Trans. Biomed. Eng. 2014;61:638–657. doi: 10.1109/TBME.2013.2285627.

35. Gibbs J.D., Graham M.W., Higgins W.E. 3D MDCT-based system for planning peripheral bronchoscopic procedures. Comput. Biol. Med. 2009;39:266–279. doi: 10.1016/j.compbiomed.2008.12.012.

36. Graham M.W., Gibbs J.D., Cornish D.C., Higgins W.E. Robust 3D Airway-Tree Segmentation for Image-Guided Peripheral Bronchoscopy. IEEE Trans. Med. Imaging. 2010;29:982–997. doi: 10.1109/TMI.2009.2035813.

37. Lu K., Higgins W.E. Segmentation of the central-chest lymph nodes in 3D MDCT images. Comput. Biol. Med. 2011;41:780–789. doi: 10.1016/j.compbiomed.2011.06.014.

38. Bricault I., Ferretti G., Cinquin P. Registration of Real and CT-Derived Virtual Bronchoscopic Images to Assist Transbronchial Biopsy. IEEE Trans. Med. Imaging. 1998;17:703–714. doi: 10.1109/42.736022.

39. Helferty J.P., Sherbondy A.J., Kiraly A.P., Higgins W.E. Computer-based system for the virtual-endoscopic guidance of bronchoscopy. Comput. Vis. Image Underst. 2007;108:171–187. doi: 10.1016/j.cviu.2006.10.010.

40. Crum W., Camara O., Hill D. Generalized Overlap Measures for Evaluation and Validation in Medical Image Analysis. IEEE Trans. Med. Imaging. 2006;25:1451–1461. doi: 10.1109/TMI.2006.880587.

41. Rueda S., Fathima S., Knight C.L., Yaqub M., Papageorghiou A.T., Rahmatullah B., Foi A., Maggioni M., Pepe A., Tohka J., et al. Evaluation and comparison of current fetal ultrasound image segmentation methods for biometric measurements: A grand challenge. IEEE Trans. Med. Imaging. 2014;33:797–813. doi: 10.1109/TMI.2013.2276943.

42. Zang X., Bascom R., Gilbert C., Toth J., Higgins W. Methods for 2-D and 3-D Endobronchial Ultrasound Image Segmentation. IEEE Trans. Biomed. Eng. 2016;63:1426–1439. doi: 10.1109/TBME.2015.2494838.

43. Nelder J.A., Mead R. A simplex method for function optimization. Comput. J. 1965;7:308–313. doi: 10.1093/comjnl/7.4.308.

44. Higgins W.E., Helferty J.P., Lu K., Merritt S.A., Rai L., Yu K.C. 3D CT-video fusion for image-guided bronchoscopy. Comput. Med. Imaging Graph. 2008;32:159–173. doi: 10.1016/j.compmedimag.2007.11.001.

45. Schroeder W., Martin K., Lorensen B. The Visualization Toolkit. 4th ed. Prentice Hall; Upper Saddle River, NJ, USA: 2008.

46. Khare R., Bascom R., Higgins W. Hands-Free System for Bronchoscopy Planning and Guidance. IEEE Trans. Biomed. Eng. 2015;62:2794–2811. doi: 10.1109/TBME.2015.2401514.

47. Zhao W., Ahmad D., Toth J., Bascom R., Higgins W.E. Endobronchial Ultrasound Image Simulation for Image-Guided Bronchoscopy. IEEE Trans. Biomed. Eng. 2022. doi: 10.1109/TBME.2022.3190165.

48. Bray F., Ferlay J., Soerjomataram I., Siegel R., Torre L., Jemal A. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 2018;68:394–424. doi: 10.3322/caac.21492.

49. Lu K., Taeprasartsit P., Bascom R., Mahraj R., Higgins W. Automatic definition of the central-chest lymph-node stations. Int. J. Comput. Assist. Radiol. Surg. 2011;6:539–555. doi: 10.1007/s11548-011-0547-7.

50. Kuhlengel T.K., Higgins W.E. Multi-Destination Planning for Comprehensive Lymph Node Staging Bronchoscopy. In: Fei B., Linte C., editors. SPIE Medical Imaging 2020: Image-Guided Procedures, Robotic Interventions, and Modeling. Volume 11315. SPIE; Bellingham, WA, USA: 2020. pp. 113151T-1–113151T-7.

51. Zhao W. Planning and Guidance Methods for Peripheral Bronchoscopy. Ph.D. Thesis. The Pennsylvania State University, Department of Electrical Engineering; State College, PA, USA: 2022.

52. Fujino K., Ujiie H., Kinoshita T., Lee C.Y., Igai H., Inage T., Motooka Y., Gregor A., Suzuki M., Yasufuku K. First Evaluation of the Next-Generation Endobronchial Ultrasound System in Preclinical Models. Ann. Thorac. Surg. 2019;107:1464–1471. doi: 10.1016/j.athoracsur.2018.11.068.
