Abstract

Given the current COVID-19 pandemic, medical research today focuses on epidemic diseases. Innovative technology is incorporated in most medical applications, with an emphasis on the automatic recognition of physical and emotional states. Most research is concerned with automatically identifying the symptoms displayed by patients through analysis of their body language. The development of technologies for recognizing and interpreting arm and leg gestures, facial features, and body postures is still at an early stage, and more extensive research on artificial intelligence (AI) techniques for disease detection is needed. This paper presents a comprehensive survey of research on body language processing. After defining and explaining the different types of body language, we justify the use of automatic recognition and its application in healthcare. We briefly describe the AI-based automatic recognition framework for the various elements of body language and discuss automatic gesture recognition approaches that help to better identify the external symptoms of epidemic and pandemic diseases. The surveyed studies show that the body has a language of its own, body language, and that this language can be analyzed and understood by machine learning (ML). Because diseases also produce clear and distinct symptoms in the body, they affect body language and give it features specific to each disease. Our examination indicates that these disease-specific features and changes in body language can be characterized. Hence, ML can be used to understand and detect diseases, including pandemic and epidemic diseases.

Keywords: body language, pandemic, epidemic, body language analysis, AI

1. Introduction

Body language is one of the languages of communication. Languages can be classified as verbal or non-verbal, and body language is a form of non-verbal language in which the movements and behaviors of the body are used instead of words to express and convey information. Body language may involve hand movements, facial expressions and cues, eye movements, tone of voice, body movements and positions, gestures, use of space, and the like. This research focuses on interpretations of human body language, a field known as kinesics.

Body language is entirely different from sign language, which is a complete language, like verbal language, with its own basic rules and complex grammar [1,2]. Body language, on the other hand, has no grammatical rules and is usually specific to, or classified according to, cultures [3]. Interpretations of body language may differ from country to country and from one culture to another, and there is some controversy over whether body language can be regarded as a universal language for all people. Some researchers have concluded that most communication among individuals involves physical signals or gestures, since body language allows information to be transmitted and understood quickly [4]. According to [5], body language conveys more, and better, content than verbal language. For example, when an individual speaks to someone over the phone about an inquiry, the information can become ambiguous because body language cannot be seen. An individual sitting directly in front of an audience faces fewer restrictions, and information is transmitted and received even more easily if the speaker is standing, which allows more freedom of movement. Thus, body language enhances communication. This work attempts to prove that body language enhances workplace positivity.

Several experiments on facial expressions and body movements affected by human emotions were performed in [6]. The study showed that facial expressions and body movements can accurately indicate human emotions, and that combining facial features and movements with body movements is essential for analyzing human expressions. Three stages of experiments were conducted to determine whether the two kinds of expression need to be combined, and they confirmed that combining them is essential for identification. Reading someone’s eyes should also not be ignored, as the eyes are an important factor in expressing and understanding human emotions; we can often tell what others want from their eye movements, so eye language has many effects. According to [7], the widening and narrowing of the eyes are affected by emotions and convey specific additional information to the observer. The human eye blinks, on average, 6 to 10 times per minute; however, when someone is attracted to another person, they blink less often. Study [8] found that human feelings can be identified through body position. For example, when a person feels angry, they push their body forward to express dominance over the other person, and their upper body tilts rather than remaining upright. Conversely, someone who feels intimidated by an opponent signals submission by leaning backward or moving their head back. A person’s emotional state can also be inferred from their sitting position. Someone sitting with the upper body and head tilted slightly forward signals attentiveness and eagerness to follow what is being said, whereas sitting with crossed legs and arms suggests that they do not wish to engage and are uncomfortable with what is being said or with the speaker [5].
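
Blink rate is one of the simpler cues above to quantify automatically. The sketch below is a minimal illustration, assuming an upstream face-landmark detector (not specified in the cited studies) that supplies six landmarks per eye for each video frame. It uses the eye aspect ratio (EAR) heuristic, a common choice in the blink-detection literature; the 0.2 threshold and the two-frame minimum are illustrative assumptions, not values from [7].

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio (EAR) from six landmarks p1..p6 ordered around one eye.

    p1 and p4 are the eye corners; (p2, p6) and (p3, p5) are upper/lower pairs.
    The ratio stays roughly constant while the eye is open and drops towards 0 as it closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Sequence[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames whose EAR is below `threshold`."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
            continue
        if run >= min_frames:
            blinks += 1
        run = 0
    if run >= min_frames:  # the clip ended while the eye was still closed
        blinks += 1
    return blinks


def blinks_per_minute(ear_series: Sequence[float], fps: float,
                      threshold: float = 0.2, min_frames: int = 2) -> float:
    """Blink rate over a clip sampled at `fps` frames per second."""
    if not ear_series or fps <= 0:
        raise ValueError("need a non-empty EAR series and a positive frame rate")
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, threshold, min_frames) / minutes
```

A rate computed this way could then be compared with the 6 to 10 blinks per minute cited above, bearing in mind that lighting, head pose, and landmark quality all affect the EAR signal.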

Careful body language analysis is also needed because a single movement can carry more than one meaning or purpose. For example, an expressive movement may result from a physical handicap or a compulsive movement rather than an intentional one. Moreover, a particular movement may not mean the same thing for different people; a person may rub their eyes because of itchiness rather than fatigue. Foreign cultures also require careful analysis because of social differences: although most body movements are universal, some are culture-specific and may vary from country to country, from region to region, and even from one social group to another.

Pandemic and epidemic diseases are a global risk factor responsible for the deaths of millions of people worldwide. The ability to detect and treat cases is limited, primarily by a lack of human and technical resources, and when patients are not physically accessible, remote diagnosis is required. Pandemic and epidemic diseases can be characterized by distinct body movements affecting the face, shoulders, chest, and hands, and AI technology has shown positive results in reading some of these gestures. Hence, the idea is to use body language to detect epidemic diseases early so that treatment can be provided. The primary catalyst for this study is COVID-19, which continues to affect the whole world. As researchers in information technology and computer science, we must play our part in detecting this disease rapidly.

This paper reviews the previous literature to identify body language expressions that indicate disease. Body language is defined here as the expressions, movements, and gestures that point to the physical and emotional state of a person. Different parts of the body can express different characteristics or feelings, and some studies have demonstrated that particular facial expressions reflect specific emotional states (e.g., joy, sadness, surprise, and anger). As for the relationship between diseases and body language, diseases affect parts of the body and their qualities, and these effects are reflected in the movements and expressions of those parts. Because different diseases affect different body parts, these effects can be measured, identified, and used for diagnosis.

Hence, this paper studies diseases that can be diagnosed by identifying and measuring external body movements. It also discusses the findings of previous studies to demonstrate the usefulness and contribution of AI in detecting diseases through body language. One of the biggest obstacles to treating COVID-19 patients effectively is the need for rapid diagnosis, while the large number of cases exceeds the capacity of most hospitals. AI offers a solution through ML, which can detect disease symptoms manifested in a patient’s body language and generate accurate readings and predictions.

Therefore, the main contribution of this paper is to show the potential of body language analysis in healthcare. The importance of body language analysis in healthcare and the AI-based analysis of patients’ body language are discussed in the following sections. Table 1 lists previous studies that used ML to identify symptoms through body expressions. The findings indicate that a patient’s body language can be analyzed using ML for diagnostic purposes.

2. Methodology

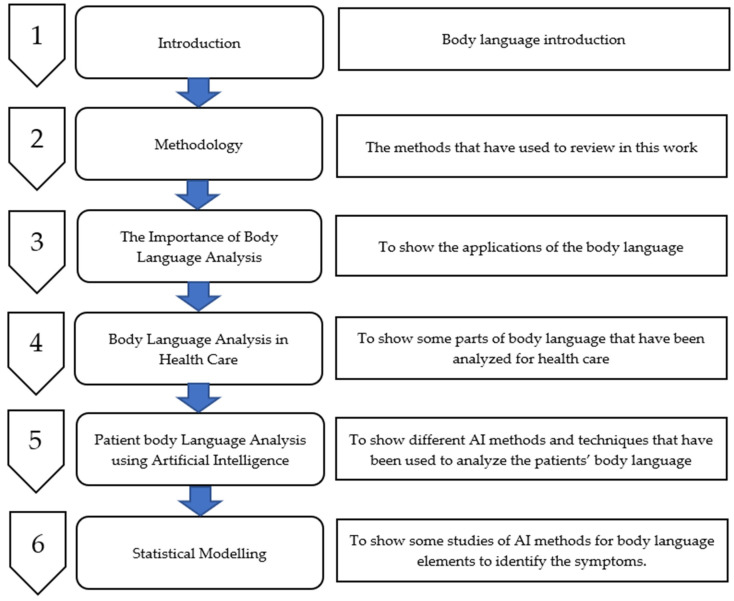

The review was conducted in four stages (see Figure 1). First, the importance of body language analysis is highlighted to show that body movements can be read and analyzed to produce outcomes useful in many applications. Second, body language analysis in healthcare is presented to show the importance of body language in medical diagnosis research. Third, studies in which ML was used successfully to identify characteristic symptoms are reviewed. Fourth, Table 1 lists studies that used ML as a diagnostic tool, together with the algorithms they used. Each topic is discussed separately in the following sections.

Figure 1.

The Review Stages.

Table 1.

Some Studies of AI Methods for Body Language Elements to Identify the Symptoms.

| References | Title | Study Purpose | Method | Year | Result Evaluation | Future Work |
|---|---|---|---|---|---|---|
| [36] | Early prediction of disease progression in COVID-19 pneumonia patients with chest CT and clinical characteristics | | | 2020 | | |
| [37] | Individual-Level Fatality Prediction of COVID-19 Patients Using AI Methods | | | 2020 | | |
| [38] | COVID-19 Prediction and Detection Using Deep Learning | | | 2020 | | |
| [39] | Artificial Intelligence was applied to chest X-ray images to detect COVID-19 automatically. A thoughtful evaluation approach | | | 2020 | | |
| [40] | A Machine Learning Model to Identify Early-Stage Symptoms of SARS-CoV-2 Infected Patients | | | 2020 | | |
| [41] | A combined deep CNN-LSTM network for the detection of novel coronavirus (COVID-19) using X-ray image | | | 2020 | | |
| [42] | Smart and automation technologies for ensuring the long-term operation of a factory amid the COVID-19 pandemic: An evolving fuzzy assessment approach | | | 2020 | | |
| [43] | A Rapid, Accurate, and Machine-Agnostic Segmentation and Quantification Method for CT-Based COVID-19 Diagnosis | | | 2020 | | |
| [44] | Coronavirus (COVID-19) Classification using CT Images by Machine Learning Methods | | | 2020 | | |
| [45] | Early Prediction of Mortality Risk Among Severe COVID-19 Patients Using Machine Learning | | | 2020 | | |
| [46] | Multi-task deep learning-based CT imaging analysis for COVID-19 pneumonia: Classification and segmentation | | | 2020 | | |
| [47] | Artificial Intelligence and COVID-19: Deep Learning Approaches for Diagnosis and Treatment | | | 2020 | | |
| [48] | Automated detection and quantification of COVID-19 pneumonia: CT imaging analysis by a deep learning-based software | | | 2020 | | |
| [49] | Common cardiovascular risk factors and in-hospital mortality in 3894 patients with COVID-19: survival analysis and machine learning-based findings from the multicenter Italian CORIST Study | | | 2020 | | |
| [50] | InstaCovNet-19: A deep learning classification model for the detection of COVID-19 patients using Chest X-ray | | | 2020 | | |
| [51] | Monitoring and analysis of the recovery rate of COVID-19 positive cases to prevent dangerous stages using IoT and sensors | | | 2020 | | |
| [52] | COVID-19 Patient Detection from Telephone Quality Speech Data | | | 2020 | | |
| [53] | Machine learning-based approaches to detect COVID-19 using clinical text data | | | 2020 | | |
| [54] | Data science and the role of Artificial Intelligence in achieving the fast diagnosis of COVID-19 | | | 2020 | | |
| [55] | DeepCOVIDNet: An Interpretable Deep Learning Model for Predictive Surveillance of COVID-19 Using Heterogeneous Features and Their Interactions | | | 2020 | | |
| [56] | COVID-19 Detection Through Transfer Learning Using Multimodal Imaging Data | | | 2020 | | |
| [57] | Early Detection of COVID19 by Deep Learning Transfer Model for Populations in Isolated Rural Areas | | The processes from image pre-processing, data augmentation, VGG16, VGG19, and transfer of knowledge using | 2020 | | |
| [58] | Deep learning-based detection and analysis of COVID-19 on chest X-ray images | | | 2020 | | |
| [59] | Analysis of novel coronavirus (COVID-19) using machine learning methods | | | 2020 | Results on average accuracy evaluation for the total number of cases across all four countries: For the average growth rate spread across all four countries: Simple Linear Average prediction score across all four countries: | |
| [11] | Facial emotion detection using deep learning | | | 2016 | | |
| [23] | Measuring facial expressions of emotion | | The three approaches of | 2007 | | |
| [60] | PRATIT: a CNN-based emotion recognition system using histogram equalization and data augmentation | | Pre-processing procedures such as | 2020 | | |
| [61] | Facial expression video analysis for depression detection in Chinese patients | | | 2018 | | |
| [62] | A Novel Facial Thermal Feature Extraction Method for Non-Contact Healthcare System | | Four models are trained using | 2020 | | |
| [63] | Emotion Detection Using Facial Recognition | | Emotion detection experiments are performed using | 2020 | | To extend facial recognition systems based on |
| [64] | Stress and anxiety detection using facial cues from videos | | | 2017 | | |
| [65] | Automatic Detection of ADHD and ASD from Expressive Behavior in RGBD Data | | | 2017 | | |
| [66] | A Facial-Expression Monitoring System for Improved Healthcare in Smart Cities | | | 2017 | | |
| [67] | Deep Pain: Exploiting Long Short-Term Memory Networks for Facial Expression Classification | | | 2017 | | |
| [68] | Patient State Recognition System for Healthcare Using Speech and Facial Expressions | | | 2016 | | |
| [69] | Facial expression monitoring system for predicting patient’s sudden movement during radiotherapy using deep learning | | | 2020 | | |
| [70] | Patient Monitoring System from Facial Expressions using Python | | | 2020 | | |
| [71] | Combining Facial Expressions and Electroencephalography to Enhance Emotion Recognition | | | 2019 | Each surpasses the most noteworthy performing single technique | |
| [72] | Gestures Controlled Audio Assistive Software for the Voice Impaired and Paralysis Patients | | | 2019 | | |
| [73] | Detecting speech impairments from temporal Visual facial features of aphasia patients | | | 2019 | | |
| [74] | Gestures Controlled Audio Assistive Software for Voice Impaired and Paralysis Patients | | | 2019 | | |
| [75] | Detecting Speech Impairments from Temporal Visual Facial Features of Aphasia Patients | | | 2018 | | |
| [22] | The Elements of End-to-end Deep Face Recognition: A Survey of Recent Advances | | | 2021 | | |
| [76] | Automated Facial Action Coding System for Dynamic Analysis of Facial Expressions in Neuropsychiatric Disorders | | The automated FACS system and its application to video analysis will be described. processed for feature extraction | 2011 | | |
| [23] | Measuring facial expression of emotion | | | 2015 | | |
| [10] | Classifying Facial Action | | Three methods are compared | 1996 | | |
| [24] | Real-Time Gait Analysis Algorithm for Patient Activity Detection to Understand and Respond to the Movements | | | 2012 | | |
| [25] | A Real-Time Patient Monitoring Framework for Fall Detection | | | 2019 | | |
| [26] | Anomaly Detection of Elderly Patient Activities in Smart Homes using a Graph-Based Approach | | | 2018 | | |
| [28] | Fall Detection and Activity Recognition with Machine Learning | | | 2008 | | |
| [30] | Vision-based detection of unusual patient activity | | | 2011 | | |
| [31] | MDS: Multi-level decision system for patient behavior analysis based on wearable device information | | | 2019 | | To widen the scope of decision-making and include other activities for different age groups. |
| [77] | Proposal Gesture Recognition Algorithm Combining CNN for Health Monitoring | | | 2019 | | |
| [78] | A robust method for VR-based hand gesture recognition using density-based CNN | | | 2020 | | |
| [79] | Hand Gesture Recognition Using Convolutional Neural Network for People Who Have Experienced a Stroke | | | 2019 | | |
| [80] | Determining the affective body language of older adults during socially assistive HRI | | | 2014 | | |
| [81] | Vision based body gesture meta features for Affective Computing | | | 2020 | | |
| [82] | Deep Learning and Medical Diagnosis: A Review of Literature | | | 2020 | | |
| [83] | Machine learning classification of design team members’ body language patterns for real time emotional state detection | | | 2015 | | |
| [84] | Towards Automatic Detection of Amyotrophic Lateral Sclerosis from Speech Acoustic and Articulatory Samples | | | 2016 | | |
| [85] | Towards improving diagnosis of skin diseases by combining deep neural network and human knowledge | | | 2018 | | |
| [86] | Early prediction of chronic disease using an efficient machine learning algorithm through adaptive probabilistic divergence-based feature selection approach | | | 2020 | | |
| [87] | Multi-Modal Depression Detection and Estimation | | | 2019 | | |
| [88] | Dual-hand detection for human-robot interaction by a parallel network based on hand detection and body pose estimation | | A deep parallel neural network uses two channels: | 2019 | | |
| [89] | Gesture recognition based on multi-modal feature weight | | | 2021 | Simulation experiments show that: | |
| [90] | Pose-based Body Language Recognition for Emotion and Psychiatric Symptom Interpretation | | | 2021 | | |

3. The Importance of Body Language Analysis

AI is one of the most significant technological developments of recent years; it is growing in popularity and is being used in almost every application area. One of the most important of these applications is healthcare. Health is among the most important factors in human life, and AI applications in healthcare have recently played a significant role in helping doctors detect diseases and improve human health. Many diseases produce symptoms on parts of the body, and these symptoms are reflected in the body’s movements and expressions, that is, in its body language. These body language features can therefore be used by ML models to detect and classify disease symptoms. In this section, we explain why body language is worth analyzing with AI. Body language carries features that AI can analyze to solve problems in many applications. For example, facial expressions can be analyzed to infer a person’s feelings, which is useful in psychotherapy or for assessing participants’ emotions in research studies. Likewise, movements of the hand, shoulder, or leg can reveal features that are valuable in medicine, security, and other fields. Because body language has so many benefits and applications, we suggest that it can also be used, with ML, to detect infectious diseases such as COVID-19.

It is now feasible to employ this technology in healthcare systems. Pandemic and epidemic diseases are an intractable problem that adversely affects human health, which is regarded as people’s most valuable asset. A further worry is that new pandemics or epidemics may suddenly appear and prove deadly, as COVID-19 has, having claimed nearly a million lives at the time of writing. This motivates us to develop AI technologies that help detect a disease’s external symptoms by analyzing patients’ body language. This section reviews general studies that demonstrate the importance of body language processing in various fields.

Most computer users interact with their devices via mouse and keyboard. Researchers are now developing computer systems that interact and respond through body language, such as hand gestures and movements. In [8], a comprehensive survey reviewed the published literature on the visual interpretation of hand gestures for interacting with computing devices and introduced more advanced methods of analyzing body language as an alternative to mouse and keyboard input. The study in [9] considered the problem of recognition accuracy in robots and proposed a fusion system that identifies types of fall movements and abnormal directions with an accuracy of 99.37%. A facial coding system was developed in [10] to measure and analyze facial muscle movements and identify facial expressions. A database of 1100 images was created, and the system analyzed and classified facial creases and wrinkles according to the movements that produce them. Combining the methods improved performance, with accuracy reaching 92%. Combining facial features and movements with body movements is also essential for analyzing individual expressions: three different experiments were conducted to determine whether facial expressions and body language should be combined, and they concluded that they should. Another study [11] focused on deep learning techniques for identifying the emotions revealed in facial expressions. It used purely convolutional neural networks and showed that such networks can successfully recognize emotions, significantly improving usability. A model proposed in [12] detected body gestures and movements from a pair of digital video images, producing a set of three-dimensional vectors.

The first study to show the relationship between the contraction of the internal facial muscles and facial movements was that of Hjortsjö (1970) [13], which developed a coding system by identifying the smallest units of facial muscle movement and then mapping the coordinates that define facial expressions. The recognition of people’s emotions has attracted much attention; however, detecting emotions from facial expressions and speech remains a difficult problem for researchers. The work presented in [14] offered a comprehensive survey to facilitate further research in this field. It focused on identifying gender-specific characteristics, establishing an automatic framework to determine the physical manifestation of emotions, and identifying static and dynamic body shape cues. It also examined recent studies on learning emotions by identifying gestures in photos or video, and it discussed several methods that combine speech, body, and facial gestures to improve emotion recognition. The study concluded that knowledge of a person’s feelings through these outward signals was still incomplete.

4. Body Language Analysis in Healthcare

In [10], a coding system was created to classify facial expressions by analyzing more than 1100 pictures. Three ways of classifying facial expressions were compared: analyzing image components in the gray-level field, measuring wrinkles, and using templates of facial movement. The coding system achieved accuracies of 89%, 57%, and 85% with the three methods, respectively, and 92% when the methods were combined. A challenge in online learning is gauging students’ participation in the learning process. In [15], an algorithm is introduced to assess student interactions and identify their problems. Two sources of evidence of student participation were used: facial expressions captured by a camera and hand movement data captured through mouse movements. Two models were trained, one on facial data combined with mouse data and one on facial data without mouse data. The first model performed better than the second, at 94.60% compared with 91.51%. Work [14] surveyed the recognition of facial and speech gestures as part of body language. It provided a framework for the automatic identification of dynamic and static emotional body gestures that combined facial and speech gestures to improve recognition of a person’s emotions. Paper [16] defines facial expressions by matching them with body positions. The work demonstrated that emotional expressions are more evident when the main cues on the face are congruent with those shown by the body. However, the results differ depending on whether the model relies on physical, dimensional, or latent properties. Another significant finding of the study is that expressions of fear are recognized better when paired with facial expressions than during task performance.
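
Several of the studies above ([10], [14], [15]) report that combining methods or modalities outperforms any single one. One simple way to combine them is score-level (late) fusion, sketched below under the assumption that each modality’s classifier already outputs class probabilities. The labels, probabilities, and weights are hypothetical, and the cited works may have fused their data differently (for example, at the feature level).

```python
from typing import Dict, Mapping, Sequence


def late_fusion(modality_probs: Sequence[Mapping[str, float]],
                weights: Sequence[float]) -> Dict[str, float]:
    """Score-level fusion: weighted average of per-modality class probabilities."""
    if len(modality_probs) != len(weights):
        raise ValueError("one weight per modality is required")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    fused: Dict[str, float] = {}
    for probs, weight in zip(modality_probs, weights):
        for label, p in probs.items():
            fused[label] = fused.get(label, 0.0) + weight * p / total
    return fused


def predict(fused: Mapping[str, float]) -> str:
    """Return the label with the highest fused score."""
    return max(fused, key=lambda label: fused[label])


# Hypothetical outputs for one student from a face-based and a mouse-based classifier.
face = {"engaged": 0.4, "disengaged": 0.6}
mouse = {"engaged": 0.9, "disengaged": 0.1}
print(predict(late_fusion([face, mouse], [1.0, 1.0])))  # engaged (0.65 vs 0.35)
```

With equal weights the confident mouse-based score overrides the uncertain facial one; the weights would normally be tuned on validation data rather than fixed by hand.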

In [17], the authors stated that a medical advisor must exhibit engaging communication qualities that make the patient feel comfortable enough to make a correct decision. They advised doctors to learn how to use facial expressions, the eyes, hand gestures, and other body expressions. A smile was described as the most powerful expression a doctor can use to communicate with patients, as it makes the patient feel comfortable. A comfortable patient appears confident and answers the doctor’s questions clearly, credibly, and confidently. Eye contact is also very important, as its absence may suggest to the patient that the doctor does not care about them. The research in [18] concludes that a doctor’s appropriate nonverbal communication has a positive impact on the patient. Objective evidence has shown that patients improve and recover better and faster when the doctor smiles and makes direct eye contact than when the doctor does neither. It was also concluded that patients who receive more attention, empathy, and engagement from the doctor respond better to treatment, as the doctor’s tone of voice, facial and body movements, and eye gaze all affect the patient. Clint [19] described his first day on the job in an intensive care unit. He felt fear and anxiety that day because of the scale and intensity of the unit, and he asked himself, “Is it worth working in this unit?” He had a patient whose sister was next to her. The patient noticed Clint’s nervousness and anxiety but did not dare ask him about it, so she whispered to her sister that the nurse was nervous. Her sister then asked Clint, “You are worried and anxious today; why? What is there to be so nervous about?” Trying to hide his nervousness and restore confidence, Clint smiled and replied, “I am not nervous. Sometimes we have to ask our patients ridiculous questions, and that makes us tense.” Clint notes that he could tell from the patient’s expression that he had not convinced her and that his stress was still visible. He made it clear that patients are affected by the body language and facial expressions of those caring for them and can read their own situation from it. Clint realized that he had been wrong: his anxiety and stress had begun to affect his patient, and her condition could worsen as a result.

In one of his articles [20], Henry wrote that treating a patient through behavior and body language can have a greater impact than using drugs. The work [21] concluded that non-verbal language between a doctor and their patient plays a vital role in treatment. The doctor can use non-verbal signals sent by the patient to gather information about the condition and to help decide on diagnosis and treatment. The research found that the non-verbal techniques a doctor uses toward the patient help both in obtaining information and in helping the patient recover. Examples include eye gaze, physical closeness to the patient, and relaxed facial and hand gestures. The research suggests that non-verbal cues have a positive effect on the patient and recommends that doctors be trained to use them as a significant way of dealing with patients to speed up their treatment.

5. Patient’s Body Language Analysis Using AI

Different AI methods and techniques have been used to analyze patients’ body language, and we briefly discuss some of the studies conducted so far in this area. Focusing first on facial recognition, a simple system was introduced in [22] to analyze facial muscles and thus identify different emotions. The proposed system automatically tracks faces in video and extracts geometric features of the face. The study was conducted on eight patients with schizophrenia and collected dynamic information on facial muscle movements. It showed that geometric measurements of individual faces can be obtained and their exact differences determined for recognition purposes. Three methods were used in [23] to measure facial expressions, define emotions, and identify persons with mental illness. The facial action coding system proposed in the study enabled the interpretation of emotional facial expressions and thus contributed to knowledge of therapeutic interventions for patients with mental illness.
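
The facial action coding system (FACS) describes an expression as a combination of action units (AUs). A minimal rule-based sketch of the last step, mapping detected AUs to an emotion label, is shown below. It assumes an upstream detector (hypothetical here) already reports which AUs are active, and the prototype table uses EMFACS-style combinations that are commonly quoted in the literature (e.g., AU6 + AU12 for happiness); it is an illustrative assumption, not the scheme used in [22] or [23].

```python
from typing import Dict, FrozenSet, Iterable, Tuple

# Illustrative AU prototypes (EMFACS-style combinations commonly quoted in the
# literature). This table is an assumption for the sketch, not taken from [22] or [23].
EMOTION_PROTOTYPES: Dict[str, FrozenSet[int]] = {
    "happiness": frozenset({6, 12}),          # cheek raiser + lip corner puller
    "sadness": frozenset({1, 4, 15}),         # inner brow raiser + brow lowerer + lip corner depressor
    "surprise": frozenset({1, 2, 5, 26}),     # brow raisers + upper lid raiser + jaw drop
    "anger": frozenset({4, 5, 7, 23}),        # brow lowerer + lid raiser/tightener + lip tightener
    "fear": frozenset({1, 2, 4, 5, 7, 20, 26}),
}


def match_emotion(active_aus: Iterable[int], min_score: float = 0.75) -> Tuple[str, float]:
    """Return the prototype with the largest fraction of its AUs present.

    Falls back to "neutral" when no prototype reaches `min_score`.
    """
    active = set(active_aus)
    best_label, best_score = "neutral", 0.0
    for label, prototype in EMOTION_PROTOTYPES.items():
        score = len(active & prototype) / len(prototype)
        if score > best_score:
            best_label, best_score = label, score
    if best_score < min_score:
        return "neutral", best_score
    return best_label, best_score
```

Scoring by the fraction of each prototype that is present lets partial matches count, which matters for subtle or blended expressions; a learned classifier over AU intensities would be the more usual choice in practice.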

Many people suffer from nervous system disorders that impair their movement and cause them to fall without prior warning. The study [24] aimed to improve the detection and identification rate of early warning signs using the R platform. Wireless sensor devices were placed on the chest and waist, and the collected data were fed into an analysis algorithm that extracted features and raised an alert when a risk was detected. The results showed that patients at risk engaged in specific typical movements that indicated an imminent fall. The authors further suggested applying this algorithm to patients with seizures to warn of an imminent attack and alert the emergency services.
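
The exact algorithm of [24] is not described here. As a point of reference, a common simple approach with a body-worn accelerometer is to flag a free-fall dip in acceleration magnitude followed shortly by an impact spike. The sketch below assumes three-axis samples in units of g; the 0.4 g and 2.5 g thresholds and the 10-sample window are illustrative assumptions, not values from [24].

```python
import math
from typing import List, Optional, Sequence, Tuple

Sample = Tuple[float, float, float]  # (ax, ay, az) from a body-worn accelerometer, in g


def magnitude(sample: Sample) -> float:
    """Euclidean norm of one 3-axis acceleration sample (about 1 g when still)."""
    return math.sqrt(sum(a * a for a in sample))


def detect_falls(samples: Sequence[Sample], free_fall_g: float = 0.4,
                 impact_g: float = 2.5, window: int = 10) -> List[int]:
    """Indices of impact samples that follow a free-fall sample within `window` samples."""
    events: List[int] = []
    last_free_fall: Optional[int] = None
    for i, sample in enumerate(samples):
        m = magnitude(sample)
        if m < free_fall_g:
            last_free_fall = i
        elif m > impact_g and last_free_fall is not None and i - last_free_fall <= window:
            events.append(i)
            last_free_fall = None  # report each fall once
    return events
```

Requiring both phases reduces false alarms from, for example, sitting down heavily (impact without free fall); detecting an *imminent* fall, as [24] aims to, would need features from the movements that precede the dip.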

In research [25], a computational framework was designed to monitor the movements of older adults to signal organ failure and other sudden drops in vital body functions. The system monitored the patient’s activity and determined its level using sensors placed on different body parts, and the experiments showed that it identified the correct locations in real time with an accuracy of 95.8%. Another data-analysis approach was presented in [26] for a smart home that uses sensors to monitor its residents’ movements and behaviors. This system helps detect behaviors and forecast diseases or injuries that residents, especially older people, may experience, and it helps doctors provide remote care and monitor their patients’ progress. The target-object grasping model proposed in [27] uses a region proposal network to detect the grasp position for a manipulator, combining color and depth image information through deep learning. It achieved a 94.3% grasp detection success rate on multiple-target detection datasets by fusing the color image information. The paper [28] deals with older adults and their struggle to continue living independently without relying on the support of others; the research project aimed to compare machine learning algorithms used to monitor their body functions and movements. Among the eight algorithms studied, the support vector machine (SVM) achieved the highest accuracy, 95%, using reference features. Some jobs require prolonged sitting, which can lead to long-term spinal injury and nerve disease. Earlier work designed sitting position monitoring systems (SPMS) that assess the position of a seated person using sensors attached to the chair, but these systems required too many sensors. This problem was resolved by [29], who designed an SPMS that needed only four sensors. 
This improved system distinguished six sitting positions by applying several machine learning algorithms to body-weight measurements from the sensors. The algorithms were compared to find the one giving the highest accuracy, and the results ranged from 97.20% to 97.94%. In many hospitals, medical staff worry that patients with mental illness may cause bodily harm, put staff at risk, or damage hospital equipment. The study [30] devised a method that analyzes a patient's movements and identifies the risk of harmful behavior using visual data from cameras installed in the patient's room. The method traced movement points, accumulated them, and extracted their properties, which were then analyzed according to spacing, position, and speed. The study concluded that the method could also be used to explore features for other purposes, such as assessing the nature of a disease and determining its stage of progression. In [31], wireless intelligent sensor applications and devices were designed to care for patient health, improve patient monitoring, and facilitate disease diagnosis. Wireless sensors installed on the body periodically monitored the patient's health, updated the information, and sent it to a service center. The researchers proposed a multi-level decision system (MDS) that monitors patient behaviors and matches them with stored historical data. This allows decision makers in medical centers to give treatment recommendations. The system could also record new cases and store new disease data, reducing the time and effort doctors spend examining patients. The results showed that MDS was accurate and reliable in predicting and monitoring patient states.
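
The load-cell idea in [29] can be made concrete with the Python sketch below. It computes the center of pressure on the seat from four load-cell readings, a feature that does not depend on body weight. It then classifies postures with a nearest-centroid rule. Everything here is an assumption for illustration: the corner layout `CELL_XY` and the posture labels are hypothetical. The nearest-centroid rule is a deliberately simple stand-in for the several ML algorithms compared in [29], not their method.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical seat layout: (x, y) positions of four load cells, ordered
# front-left, front-right, rear-left, rear-right (+y = front, +x = right).
CELL_XY = [(-1.0, 1.0), (1.0, 1.0), (-1.0, -1.0), (1.0, -1.0)]


def center_of_pressure(loads: Sequence[float]) -> Tuple[float, float]:
    """Load-weighted average of the cell positions.

    Dividing by the total load makes the feature independent of body weight.
    """
    total = sum(loads)
    if total <= 0:
        raise ValueError("no load on the seat")
    x = sum(w * cx for w, (cx, _) in zip(loads, CELL_XY)) / total
    y = sum(w * cy for w, (_, cy) in zip(loads, CELL_XY)) / total
    return x, y


def train_centroids(
    samples: List[Tuple[Sequence[float], str]]
) -> Dict[str, Tuple[float, float]]:
    """Average the center-of-pressure features of each labeled posture."""
    sums: Dict[str, List[float]] = {}
    for loads, label in samples:
        x, y = center_of_pressure(loads)
        acc = sums.setdefault(label, [0.0, 0.0, 0.0])
        acc[0] += x
        acc[1] += y
        acc[2] += 1.0
    return {label: (a[0] / a[2], a[1] / a[2]) for label, a in sums.items()}


def classify(loads: Sequence[float],
             centroids: Dict[str, Tuple[float, float]]) -> str:
    """Return the posture whose centroid is nearest (Euclidean distance)."""
    point = center_of_pressure(loads)
    return min(centroids, key=lambda label: math.dist(point, centroids[label]))


if __name__ == "__main__":
    # Hypothetical labeled readings (arbitrary load units).
    training = [
        ([20, 20, 20, 20], "upright"),
        ([21, 19, 20, 20], "upright"),
        ([35, 35, 5, 5], "leaning_forward"),
        ([5, 5, 35, 35], "leaning_back"),
        ([30, 10, 30, 10], "leaning_left"),
    ]
    centroids = train_centroids(training)
    print(classify([40, 40, 10, 10], centroids))  # leaning_forward
```

The center of pressure is a load-weighted average. Scaling all four readings by the same factor, as happens with a heavier or lighter sitter, therefore leaves the features unchanged. This is one reason such normalized features suit chair-based monitoring.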

The study [32] applied the short-time Fourier transform (STFT) to monitor a patient's movements and voice using sensors and microphones. The system transmitted sound and accelerometer data, analyzed them to identify the patient's condition, and achieved high accuracy. Reference [33] reports three experiments on the recognition of whole-body expressions. The first experiment matched body expressions to a range of emotions, and fear was the most difficult emotion to express. The second experiment focused on facial expressions. Their recognition was strongly influenced by body expression and therefore became ambiguous. The third experiment examined emotional tone of voice and how its recognition relates to the body. The authors concluded that the results of all three experiments must be considered together to reveal the true meaning of body expression.
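
The short-time Fourier transform used in [32] splits a signal into short overlapping frames and applies a window to each one. It then computes the spectrum of every frame, which makes changes in frequency content over time visible. The dependency-free Python sketch below illustrates the transform itself. The frame length, hop size, Hann window, and dominant-frequency-per-frame feature are assumptions for illustration, not the configuration of [32]. For clarity it uses a direct DFT, whereas a real system would use an FFT library.

```python
import cmath
import math
from typing import List, Sequence


def hann(n: int) -> List[float]:
    """Symmetric Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def stft_magnitude(signal: Sequence[float], frame_len: int = 64,
                   hop: int = 32) -> List[List[float]]:
    """Magnitude STFT: one list of |X[k]|, k = 0..frame_len//2, per frame.

    Uses a direct O(N^2) DFT per frame for readability.
    """
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        seg = [signal[start + i] * window[i] for i in range(frame_len)]
        spectrum = [
            abs(sum(seg[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                    for n in range(frame_len)))
            for k in range(frame_len // 2 + 1)
        ]
        frames.append(spectrum)
    return frames


def dominant_frequencies(frames: List[List[float]],
                         sample_rate: float) -> List[float]:
    """Frequency (Hz) of the strongest non-DC bin in each frame."""
    out = []
    for spectrum in frames:
        frame_len = 2 * (len(spectrum) - 1)  # assumes an even frame length
        k = max(range(1, len(spectrum)), key=lambda i: spectrum[i])
        out.append(k * sample_rate / frame_len)
    return out


if __name__ == "__main__":
    fs = 640.0  # Hz; with 64-sample frames each bin is 10 Hz wide
    tone = [math.sin(2 * math.pi * 50.0 * t / fs) for t in range(256)]
    frames = stft_magnitude(tone)
    print(len(frames), dominant_frequencies(frames, fs))
```

Take a 256-sample, 50 Hz tone sampled at 640 Hz and analyzed with 64-sample frames and a 32-sample hop. The sketch produces 7 frames, and each one reports a dominant frequency of 50 Hz. Features like these, taken from both the microphone and the accelerometer, could then be passed to a classifier.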

A study at MIT [34] developed a system that detects pain in patients by analyzing brain activity recorded with a wearable device. Such a system is intended to help diagnose and treat patients who have lost consciousness or the sense of touch. The researchers placed several functional near-infrared spectroscopy (fNIRS) sensors on the patient's forehead to measure activity in the frontal lobe. They then developed ML models that relate levels of oxygenated hemoglobin to pain. The results showed that pain was detected with an accuracy of 87%.
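
The general idea of relating oxygenated-hemoglobin (HbO) levels to pain with an ML model can be illustrated as follows. The Python sketch below summarizes each HbO window by two features and fits a logistic regression by gradient descent. It is not the model of [34]. The two features (mean level and least-squares slope), the logistic regression classifier, and the synthetic data are all assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple


def window_features(hbo: Sequence[float]) -> List[float]:
    """Mean level and least-squares slope of one HbO window (arbitrary units)."""
    n = len(hbo)
    mean = sum(hbo) / n
    t_mean = (n - 1) / 2
    cov = sum((t - t_mean) * (v - mean) for t, v in enumerate(hbo))
    var = sum((t - t_mean) ** 2 for t in range(n))
    return [mean, cov / var]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X: List[List[float]], y: List[int], lr: float = 0.5,
                   epochs: int = 2000) -> Tuple[List[float], float]:
    """Fit logistic regression by batch gradient descent on the mean log-loss."""
    w = [0.0] * len(X[0])
    b = 0.0
    m = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj / m
            grad_b += err / m
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict_pain(hbo: Sequence[float], w: List[float], b: float) -> bool:
    """True if the modeled probability of pain is at least 0.5."""
    x = window_features(hbo)
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5


if __name__ == "__main__":
    # Synthetic windows: rising HbO labeled pain (1), flat HbO labeled no pain (0).
    pain = [[0.1 * i for i in range(10)], [0.5 + 0.08 * i for i in range(10)]]
    rest = [[0.0] * 10, [0.05, -0.05] * 5]
    X = [window_features(win) for win in pain + rest]
    w, b = train_logistic(X, [1, 1, 0, 0])
    print(predict_pain([0.2 + 0.1 * i for i in range(10)], w, b))  # True
```

The usage block trains on two rising and two flat synthetic windows and then flags a new rising window as pain. Real fNIRS pipelines would also need signal filtering, baseline correction, and validation on held-out patients.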

The study [35] treated the heartbeat as a type of body language, since checking a patient's heartbeat is a crucial medical examination tool. The researchers proposed a one-dimensional convolutional neural network (1D CNN) model that classified heart sound signals as normal or abnormal. The model used a denoising autoencoder (DAE) to extract features. The results showed that it classified heart sound signals with an accuracy of up to 99%.
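
The core operation of a 1D CNN such as the one in [35] is a filter slid along the signal, followed by a nonlinearity and pooling. The Python sketch below shows one such block: a valid convolution, then ReLU, then max pooling, using a hand-picked, hypothetical kernel. In a trained network the kernel weights and bias are learned from data. The denoising autoencoder stage of [35] is not shown.

```python
from typing import List, Sequence


def conv1d(x: Sequence[float], kernel: Sequence[float],
           bias: float = 0.0) -> List[float]:
    """'Valid' 1D convolution as used in CNNs (cross-correlation, no kernel flip)."""
    k = len(kernel)
    return [sum(x[i + j] * kernel[j] for j in range(k)) + bias
            for i in range(len(x) - k + 1)]


def relu(v: Sequence[float]) -> List[float]:
    """Elementwise max(0, a)."""
    return [max(0.0, a) for a in v]


def max_pool1d(v: Sequence[float], size: int = 2) -> List[float]:
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(v[i:i + size]) for i in range(0, len(v) - size + 1, size)]


def conv_block(x: Sequence[float], kernel: Sequence[float],
               bias: float = 0.0, pool: int = 2) -> List[float]:
    """One CNN feature-extraction block: convolution -> ReLU -> max pooling."""
    return max_pool1d(relu(conv1d(x, kernel, bias)), pool)


if __name__ == "__main__":
    beat = [0, 0, 1, 2, 1, 0, 0]   # toy pulse standing in for a heart sound
    kernel = [1, 2, 1]             # hypothetical smoothing/peak filter
    print(conv1d(beat, kernel))
    print(conv_block(beat, kernel, bias=-2.0))
```

For the toy input `[0, 0, 1, 2, 1, 0, 0]` and kernel `[1, 2, 1]`, the convolution gives `[1, 4, 6, 4, 1]`. With a bias of -2, ReLU and pooling of size 2 reduce this to `[2, 4]`. Stacking several such blocks and ending with a classifier layer gives the overall shape of a 1D CNN.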

6. Discussion

We conclude from this study that reading and understanding body language through AI could help detect epidemic diseases automatically. During an epidemic, identifying every infected person is a major obstacle, and COVID-19 is the most prominent current example. Developed, middle-income, and developing countries alike have struggled to test for the disease because of the large number of infected people and its rapid spread. As infections increased, detection could not keep pace. We suggest conducting a study to determine the body movements and gestures associated with epidemic diseases such as COVID-19. We expect an epidemic disease to produce distinctive movements in some body parts. Thermal cameras that detect high body temperature certainly play a significant role in flagging possible patients. However, they have three limitations. First, it is difficult to determine from temperature alone which disease a person has. Second, a patient with an epidemic disease may not have a significantly elevated temperature. Third, fever may appear late, when the patient is already in a critical stage of treatment. This study therefore focuses on the body language of epidemics, especially COVID-19, which has changed our lives for the worse. We have learned a harsh lesson from this deadly enemy: not to stand still. We must help our people, our countries, and the world to defend against and fight this disease. Hence, we propose studying body language using AI. In upcoming studies, on which we are currently working, we hope to collect and identify the gestures of body parts that characterize the epidemic.

Table 1 lists studies that have used ML to detect diseases and symptoms from gestures, hand movements, and facial expressions. The table suggests that CNN algorithms are the most common and most efficient methods for identifying disease symptoms from facial expressions and hand gestures. Some studies indicate that analyzing other body parts with ML algorithms such as SVM and LSTM also helps identify some types of disease. We therefore expect that combining a CNN with a new algorithm for recognizing facial expressions could yield high-quality results for detecting some epidemic diseases. The first step is to study the symptoms that characterize an epidemic disease and how they are reflected in body expressions. The next is to select the ML algorithm that identifies these expressions most efficiently.

The studies in Table 1 are classified as follows:

(1) Studies on medical diagnosis using AI for analyzing body language.
(2) Studies on medical diagnosis using electronic devices and AI for analyzing body language.
(3) Studies on COVID-19 diagnosis using other methods.

This study surveys research that uses ML algorithms to identify body features, movements, and expressions. Our premise is that disease affects movement and that each disease has a distinct effect on the body. As a result, certain body parts undergo changes that point to a specific disease. We therefore propose using ML algorithms to capture and analyze images of body movements and expressions in order to identify diseases. This study surveyed a selection of existing studies that use different ML algorithms to detect body movements and expressions. These studies do not apply this approach to epidemiology, so this study seeks to document how ML algorithms could be used to detect epidemics such as COVID-19. Our survey indicates that body movements and expressions can be identified automatically. It also indicates that ML methods, particularly convolutional neural networks, are the most proficient at recognizing body language.

From an epidemiological, diagnostic, and pharmacological standpoint, AI has yet to play a substantial part in the fight against the coronavirus. Its application is limited by a shortage of data, outliers, and an abundance of noise. It is vital to create unbiased time series data for AI training. The growing number of worldwide activities in this area is promising, but more diagnostic testing is required. Testing would not only supply training data for AI models but also help control the epidemic and reduce the loss of human life and economic harm. Clearly, data are crucial in determining whether AI can be used to combat future diseases and pandemics. As [91] notes, the risk is that public health concerns will override data privacy concerns. Long after the epidemic has passed, governments may choose to continue the unparalleled surveillance of their populations. Concerns about data privacy are therefore reasonable.

7. Conclusions

According to patient surveys, communication is one of the most crucial skills a physician should have. However, communication encompasses more than what is spoken. From a patient's first visit to a physician, their nonverbal communication, or “body language”, helps determine the course of therapy. Body language encompasses all nonverbal forms of communication, including posture, facial expression, and body movements. Being aware of such habits can help doctors connect better with their patients. Effective nonverbal communication can influence patient involvement, compliance, and treatment outcomes.

Pandemic and epidemic illnesses are a worldwide threat that might kill millions. Doctors have a limited ability to recognize and treat patients, and human and technological resources remain in short supply during epidemics and pandemics. Remote diagnosis is necessary to improve the treatment process and for patients who cannot travel to a treatment facility, and the patient's status should be examined automatically. Many signs can characterize pandemic and epidemic illnesses. These include changes in facial wrinkles, movements of the eyes and eyebrows, protrusion of the nose, and changes to the lips. They also include certain motions of the hands, shoulders, chest, head, and other areas of the body. AI technology has shown promise in understanding these motions and cues in some cases. Because these diseases spread and killed people so quickly, the idea arose of using body language to identify epidemic diseases early, treat patients sooner, and help doctors recognize them. COVID-19, which horrified the entire world and transformed daily life, was the crucial motivator for this study. In it, we reviewed body language analysis research in healthcare and defined an automatic recognition framework that uses AI to recognize various body language elements.

As researchers in information technology and computer science, we aim to contribute to the development of automatic gesture recognition models that help identify the external symptoms of epidemic and pandemic diseases, for the benefit of humanity.

Acknowledgments

The first author's research was supported by Grant RMCG20-023-0023, Malaysia International Islamic University, and the second author's work was supported by the United Arab Emirates University Start-Up Grant 31T137.

Author Contributions

Conceptualization, R.A. and S.T.; methodology, R.A.; software, R.A.; validation, R.A. and S.T.; formal analysis, R.A.; investigation, M.A.H.A.; resources, M.A.H.A.; data curation, R.A.; writing—original draft preparation, R.A.; writing—review and editing, S.T.; visualization, M.A.H.A.; supervision, R.A. and S.T.; project administration, R.A. and S.T.; funding acquisition, R.A. and S.T. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research was funded by Grant RMCG20-023-0023, Malaysia International Islamic University, and United Arab Emirates University Start-Up Grant 31T137.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Klima E.S., Bellugi U. The Signs of Language. Harvard University Press; Cambridge, MA, USA: 1979.

- 2. Sandler W., Lillo-Martin D. Sign Language and Linguistic Universals. Cambridge University Press; Cambridge, UK: 2006.

- 3. Garfield B. Top 100 Advertising Campaigns of the Century. Ad Age; New York, NY, USA: 2006. pp. 8–15.

- 4. Onsager M. Body Language Dictionary. HarperCollins; New York, NY, USA: 2014. Understanding the importance of non-verbal communication.

- 5. Kurien D.N. Body language: Silent communicator at the workplace. IUP J. Soft Skills. 2010;4:29–36.

- 6. Gu Y., Mai X., Luo Y.-J. Do Bodily Expressions Compete with Facial Expressions? Time Course of Integration of Emotional Signals from the Face and the Body. PLoS ONE. 2013;8:e66762. doi: 10.1371/journal.pone.0066762.

- 7. Eye Reading (Body Language). Available online: https://www.psychologistworld.com/body-language/eyes (accessed on 2 June 2022).

- 8. Murthy G.R.S., Jadon R.S. A review of vision based hand gestures recognition. Int. J. Inf. Technol. Knowl. Manag. 2009;2:405–410.

- 9. Yang T., Gao X., Gao R., Dai F., Peng J. A Novel Activity Recognition System for Alternative Control Strategies of a Lower Limb Rehabilitation Robot. Appl. Sci. 2019;9:3986. doi: 10.3390/app9193986.

- 10. Bartlett M.S., Viola P.A., Sejnowski T.J., Larsen J., Hager J., Ekman P. Advances in Neural Information Processing Systems. The MIT Press; Cambridge, MA, USA: 1996. Classifying facial action; pp. 823–829.

- 11. Spiers D.L. Master’s Thesis. Uppsala University; Uppsala, Sweden: 2016. Facial Emotion Detection Using Deep Learning.

- 12. Campbell L.W., Becker D.A., Azarbayejani A., Bobick A.F., Pentland A. Invariant features for 3-D gesture recognition; Proceedings of the Second International Conference on Automatic Face and Gesture Recognition; Killington, VT, USA. 14–16 October 1996; pp. 157–162.

- 13. Hjortsjö C.-H. Man’s Face and Mimic Language. Studentlitteratur; Lund, Sweden: 1969.

- 14. Noroozi F., Corneanu C.A., Kaminska D., Sapinski T., Escalera S., Anbarjafari G. Survey on Emotional Body Gesture Recognition. IEEE Trans. Affect. Comput. 2018;12:505–523. doi: 10.1109/TAFFC.2018.2874986.

- 15. Zhang Z., Li Z., Liu H., Cao T., Liu S. Data-driven Online Learning Engagement Detection via Facial Expression and Mouse Behavior Recognition Technology. J. Educ. Comput. Res. 2020;58:63–86. doi: 10.1177/0735633119825575.

- 16. Mondloch C.J., Nelson N.L., Horner M. Asymmetries of Influence: Differential Effects of Body Postures on Perceptions of Emotional Facial Expressions. PLoS ONE. 2013;8:e73605. doi: 10.1371/journal.pone.0073605.

- 17. Reena M. Body Language in Patient Counselling. Available online: https://www.scribd.com/document/439511370/Body-language (accessed on 2 June 2022).

- 18. Chahal L. How your body language affects patient care. Curr. Psychiatry. 2017;16:41–42.

- 19. Lange C. Nursing and the importance of body language. Nursing. 2016;46:48–49. doi: 10.1097/01.NURSE.0000481421.03964.34.

- 20. Nasrallah H.A. The most powerful placebo is not a pill. Curr. Psychiatry. 2011;10:18–19.

- 21. Mast M.S. On the importance of nonverbal communication in the physician-patient interaction. Patient Educ. Couns. 2007;67:315–318. doi: 10.1016/j.pec.2007.03.005.

- 22. Du H., Shi H., Zeng D., Zhang X.-P., Mei T. The elements of end-to-end deep face recognition: A survey of recent advances. ACM Comput. Surv. (CSUR). 2020. doi: 10.1145/3507902.

- 23. Wolf K. Measuring facial expression of emotion. Dialogues Clin. Neurosci. 2015;17:457–462. doi: 10.31887/DCNS.2015.17.4/kwolf.

- 24. Jalil A. Master’s Thesis. Blekinge Institute of Technology; Karlskrona, Sweden: 2012. Real-Time Gait Analysis Algorithm for Patient Activity Detection to Understand and Respond to the Movements.

- 25. Ajerla D., Mahfuz S., Zulkernine F. A Real-Time Patient Monitoring Framework for Fall Detection. Wirel. Commun. Mob. Comput. 2019;2019:1–13. doi: 10.1155/2019/9507938.

- 26. Paudel R., Eberle W., Holder L.B. Anomaly detection of elderly patient activities in smart homes using a graph-based approach; Proceedings of the 2018 International Conference on Data Science; Singapore. 22–22 July 2018; pp. 163–169.

- 27. Jiang D., Li G., Sun Y., Hu J., Yun J., Liu Y. Manipulator grabbing position detection with information fusion of color image and depth image using deep learning. J. Ambient. Intell. Humaniz. Comput. 2021;12:10809–10822. doi: 10.1007/s12652-020-02843-w.

- 28. Luštrek M., Kaluža B. Fall detection and activity recognition with machine learning. Informatica. 2009;33:205–212.

- 29. Roh J., Park H.-J., Lee K.J., Hyeong J., Kim S., Lee B. Sitting Posture Monitoring System Based on a Low-Cost Load Cell Using Machine Learning. Sensors. 2018;18:208. doi: 10.3390/s18010208.

- 30. Borges P.V.K., Nourani-Vatani N. Health Informatics: The Transformative Power of Innovation. IOS Press; Amsterdam, The Netherlands: 2011. Vision-based detection of unusual patient activity; pp. 16–23.

- 31. Tolba A., Said O., Al-Makhadmeh Z. MDS: Multi-level decision system for patient behavior analysis based on wearable device information. Comput. Commun. 2019;147:180–187. doi: 10.1016/j.comcom.2019.08.022.

- 32. Doukas C., Maglogiannis I. Advanced patient or elder fall detection based on movement and sound data; Proceedings of the 2008 Second International Conference on Pervasive Computing Technologies for Healthcare; Tampere, Finland. 30 January–1 February 2008; pp. 103–107.

- 33. Van den Stock J., Righart R., de Gelder B. Body expressions influence recognition of emotions in the face and voice. Emotion. 2007;7:487–494. doi: 10.1037/1528-3542.7.3.487.

- 34. Lopez M. Detecting Patients’ Pain Levels via Their Brain Signals. 2019. Available online: https://news.mit.edu/2019/detecting-pain-levels-brain-signals-0912 (accessed on 12 September 2019).

- 35. Li F., Liu M., Zhao Y., Kong L., Dong L., Liu X., Hui M. Feature extraction and classification of heart sound using 1D convolutional neural networks. EURASIP J. Adv. Signal Process. 2019;2019:1–11. doi: 10.1186/s13634-019-0651-3.

- 36. Feng Z., Yu Q., Yao S., Luo L., Zhou W., Mao X., Li J., Duan J., Yan Z., Yang M., et al. Early prediction of disease progression in COVID-19 pneumonia patients with chest CT and clinical characteristics. Nat. Commun. 2020;11:1–9. doi: 10.1038/s41467-020-18786-x.

- 37. Li Y., Horowitz M.A., Liu J., Chew A., Lan H., Liu Q., Sha D., Yang C. Individual-Level Fatality Prediction of COVID-19 Patients Using AI Methods. Front. Public Health. 2020;8:566. doi: 10.3389/fpubh.2020.587937.

- 38. Alazab M., Awajan A., Mesleh A., Abraham A., Jatana V., Alhyari S. Covid-19 prediction and detection using deep learning. Int. J. Comput. Inf. Syst. Ind. Manag. Appl. 2020;12:168–181.

- 39. Arias-Londoño J.D., Gomez-Garcia J.A., Moro-Velazquez L., Godino-Llorente J.I. Artificial Intelligence applied to chest X-ray images for the automatic detection of COVID-19. A thoughtful evaluation approach. arXiv. 2020:2011.14259. doi: 10.1109/ACCESS.2020.3044858.

- 40. Ahamad M., Aktar S., Al Mahfuz R., Uddin S., Liò P., Xu H., Summers M.A., Quinn J.M., Moni M.A. A machine learning model to identify early stage symptoms of SARS-Cov-2 infected patients. Expert Syst. Appl. 2020;160:113661. doi: 10.1016/j.eswa.2020.113661.

- 41. Islam Z., Islam M., Asraf A. A combined deep CNN-LSTM network for the detection of novel coronavirus (COVID-19) using X-ray images. Inform. Med. Unlocked. 2020;20:100412. doi: 10.1016/j.imu.2020.100412.

- 42. Chen T., Lin C.-W. Smart and automation technologies for ensuring the long-term operation of a factory amid the COVID-19 pandemic: An evolving fuzzy assessment approach. Int. J. Adv. Manuf. Technol. 2020;111:3545–3558. doi: 10.1007/s00170-020-06097-w.

- 43. Zhou L., Li Z., Zhou J., Li H., Chen Y., Huang Y., Xie D., Zhao L., Fan M., Hashmi S., et al. A Rapid, Accurate and Machine-Agnostic Segmentation and Quantification Method for CT-Based COVID-19 Diagnosis. IEEE Trans. Med. Imaging. 2020;39:2638–2652. doi: 10.1109/TMI.2020.3001810.

- 44. Barstugan M., Ozkaya U., Ozturk S. Coronavirus (covid-19) classification using ct images by machine learning methods. arXiv. 2020:2003.09424.

- 45. Chen X., Liu Z. Early prediction of mortality risk among severe COVID-19 patients using machine learning. arXiv. 2020:2003.09424.

- 46. Amyar A., Modzelewski R., Li H., Ruan S. Multi-task deep learning-based CT imaging analysis for COVID-19 pneumonia: Classification and segmentation. Comput. Biol. Med. 2020;126:104037. doi: 10.1016/j.compbiomed.2020.104037.

- 47. Jamshidi M., Lalbakhsh A., Talla J., Peroutka Z., Hadjilooei F., Lalbakhsh P., Jamshidi M., La Spada L., Mirmozafari M., Dehghani M., et al. Artificial intelligence and COVID-19: Deep learning approaches for diagnosis and treatment. IEEE Access. 2020;8:109581–109595. doi: 10.1109/ACCESS.2020.3001973.

- 48. Zhang H., Zhang J., Zhang H., Nan Y., Zhao Y., Fu E., Xie Y.-H., Liu W., Li W.-P., Zhang H.-J. Automated detection and quantification of COVID-19 pneumonia: CT imaging analysis by a deep learning-based software. Eur. J. Nucl. Med. Mol. Imaging. 2020;47:2525–2532. doi: 10.1007/s00259-020-04953-1.

- 49. Di Castelnuovo A., Bonaccio M., Costanzo S., Gialluisi A., Antinori A., Berselli N., Blandi L., Bruno R., Cauda R., Guaraldi G., et al. Common cardiovascular risk factors and in-hospital mortality in 3,894 patients with COVID-19: Survival analysis and machine learning-based findings from the multicentre Italian CORIST Study. Nutr. Metab. Cardiovasc. Dis. 2020;30:1899–1913. doi: 10.1016/j.numecd.2020.07.031.

- 50. Gupta A., Anjum, Gupta S., Katarya R. InstaCovNet-19: A deep learning classification model for the detection of COVID-19 patients using Chest X-ray. Appl. Soft Comput. 2020;99:106859. doi: 10.1016/j.asoc.2020.106859.

- 51. Kumar K.R., Iyapparaja M., Niveditha V.R., Magesh S., Magesh G., Marappan S. Monitoring and analysis of the recovery rate of Covid-19 positive cases to prevent dangerous stage using IoT and sensors. Int. J. Pervasive Comput. Commun. 2020. doi: 10.1108/IJPCC-07-2020-0088.

- 52. Ritwik K.V.S., Kalluri S.B., Vijayasenan D. COVID-19 Patient Detection from Telephone Quality Speech Data. arXiv. 2020:2011.04299.

- 53. Khanday A.M.U.D., Rabani S.T., Khan Q.R., Rouf N., Din M.M.U. Machine learning-based approaches for detecting COVID-19 using clinical text data. Int. J. Inf. Technol. 2020;12:731–739. doi: 10.1007/s41870-020-00495-9.

- 54. Vinod D.N., Prabaharan S. Data science and the role of Artificial Intelligence in achieving the fast diagnosis of Covid-19. Chaos Solitons Fractals. 2020;140:110182. doi: 10.1016/j.chaos.2020.110182.

- 55. Ramchandani A., Fan C., Mostafavi A. DeepCOVIDNet: An Interpretable Deep Learning Model for Predictive Surveillance of COVID-19 Using Heterogeneous Features and Their Interactions. IEEE Access. 2020;8:159915–159930. doi: 10.1109/ACCESS.2020.3019989.

- 56. Horry M.J., Chakraborty S., Paul M., Ulhaq A., Pradhan B., Saha M., Shukla N. COVID-19 Detection Through Transfer Learning Using Multimodal Imaging Data. IEEE Access. 2020;8:149808–149824. doi: 10.1109/ACCESS.2020.3016780.

- 57. Qjidaa M., Mechbal Y., Ben-Fares A., Amakdouf H., Maaroufi M., Alami B., Qjidaa H. Early detection of COVID19 by deep learning transfer Model for populations in isolated rural areas; Proceedings of the 2020 International Conference on Intelligent Systems and Computer Vision (ISCV); Fez, Morocco. 9–11 June 2020; pp. 1–5.

- 58. Jain R., Gupta M., Taneja S., Hemanth D.J. Deep learning based detection and analysis of COVID-19 on chest X-ray images. Appl. Intell. 2020;51:1690–1700. doi: 10.1007/s10489-020-01902-1.

- 59. Yadav M., Perumal M., Srinivas M. Analysis on novel coronavirus (COVID-19) using machine learning methods. Chaos Solitons Fractals. 2020;139:110050. doi: 10.1016/j.chaos.2020.110050.

- 60. Mungra D., Agrawal A., Sharma P., Tanwar S., Obaidat M.S. PRATIT: A CNN-based emotion recognition system using histogram equalization and data augmentation. Multimed. Tools Appl. 2019;79:2285–2307. doi: 10.1007/s11042-019-08397-0.

- 61. Wang Q., Yang H., Yu Y. Facial expression video analysis for depression detection in Chinese patients. J. Vis. Commun. Image Represent. 2018;57:228–233. doi: 10.1016/j.jvcir.2018.11.003.

- 62. Wang Z.-H., Horng G.-J., Hsu T.-H., Chen C.-C., Jong G.-J. A Novel Facial Thermal Feature Extraction Method for Non-Contact Healthcare System. IEEE Access. 2020;8:86545–86553. doi: 10.1109/ACCESS.2020.2992908.

- 63. Patil S., Joshi P., Deore G., Taley A., Sawant P. Emotion Detection Using Facial Recognition. 2020. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3645477 (accessed on 2 June 2022).

- 64. Giannakakis G., Pediaditis M., Manousos D., Kazantzaki E., Chiarugi F., Simos P., Marias K., Tsiknakis M. Stress and anxiety detection using facial cues from videos. Biomed. Signal Process. Control. 2017;31:89–101. doi: 10.1016/j.bspc.2016.06.020.

- 65. Jaiswal S., Valstar M.F., Gillott A., Daley D. Automatic detection of ADHD and ASD from expressive behaviour in RGBD data; Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017); Washington, DC, USA. 30 May–3 June 2017; pp. 762–769.

- 66. Muhammad G., Alsulaiman M., Amin S.U., Ghoneim A., Alhamid M.F. A Facial-Expression Monitoring System for Improved Healthcare in Smart Cities. IEEE Access. 2017;5:10871–10881. doi: 10.1109/ACCESS.2017.2712788.

- 67. Rodriguez P., Cucurull G., Gonzalez J., Gonfaus J.M., Nasrollahi K., Moeslund T.B., Roca F.X. Deep Pain: Exploiting Long Short-Term Memory Networks for Facial Expression Classification. IEEE Trans. Cybern. 2017;52:3314–3324. doi: 10.1109/TCYB.2017.2662199.

- 68. Hossain M.S. Patient State Recognition System for Healthcare Using Speech and Facial Expressions. J. Med. Syst. 2016;40:272. doi: 10.1007/s10916-016-0627-x.

- 69. Kim K.H., Park K., Kim H., Jo B., Ahn S.H., Kim C., Kim M., Kim T.H., Lee S.B., Shin D., et al. Facial expression monitoring system for predicting patient’s sudden movement during radiotherapy using deep learning. J. Appl. Clin. Med. Phys. 2020;21:191–199. doi: 10.1002/acm2.12945.

- 70. Scholar U.G., Sudharsan A., Yaswanth U.G.S.A., Hemapriya M.K. Patient Monitoring System from Facial Expressions using Python. Int. J. Sci. Res. Eng. Trends. 2020;6:1460–1464.

- 71. Huang Y., Yang J., Liu S., Pan J. Combining facial expressions and electroencephalography to enhance emotion recognition. Future Internet. 2019;11:105. doi: 10.3390/fi11050105.

- 72. Chanu O.R., Mishra S., Gupta N., Lhazay N. Gestures Controlled Audio Assistive Software for the Voice Impaired and Paralysis Patients. J. Phys. Conf. Ser. 2019;1362:012029. doi: 10.1088/1742-6596/1362/1/012029.

- 73. Einfalt M., Lienhart R., Lee M., Kennedy L. Detecting speech impairments from temporal Visual facial features of aphasia patients; Proceedings of the 2019 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR); San Jose, CA, USA. 28–30 March 2019; pp. 103–108.

- 74. Bishay M., Palasek P., Priebe S., Patras I. SchiNet: Automatic Estimation of Symptoms of Schizophrenia from Facial Behaviour Analysis. IEEE Trans. Affect. Comput. 2019;12:949–961. doi: 10.1109/TAFFC.2019.2907628.

- 75. Ketcham M., Inmoonnoy V. The message notification for patients care system using hand gestures recognition; Proceedings of the 2017 International Conference on Digital Arts, Media and Technology (ICDAMT); Chiang Mai, Thailand. 1–4 March 2017; pp. 412–416.

- 76. Hamm J., Kohler C.G., Gur R.C., Verma R. Automated facial action coding system for dynamic analysis of facial expressions in neuropsychiatric disorders. J. Neurosci. Methods. 2011;200:237–256. doi: 10.1016/j.jneumeth.2011.06.023.

- 77. Huu P.N., Thu H.N.T. Proposal Gesture Recognition Algorithm Combining CNN for Health Monitoring; Proceedings of the 2019 6th NAFOSTED Conference on Information and Computer Science (NICS); Hanoi, Vietnam. 12–13 December 2019; pp. 209–213.

- 78. Liliana L., Chae J.-H., Lee J.-J., Lee B.-G. A robust method for VR-based hand gesture recognition using density-based CNN. Telkomnika. 2020;18:761. doi: 10.12928/telkomnika.v18i2.14747.

- 79. Alnaim N., Abbod M., Albar A. Hand Gesture Recognition Using Convolutional Neural Network for People Who Have Experienced A Stroke; Proceedings of the 2019 3rd International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT); Ankara, Turkey. 11–13 October 2019; pp. 1–6.

- 80. McColl D., Nejat G. Determining the affective body language of older adults during socially assistive HRI; Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems; Chicago, IL, USA. 14–18 September 2014; pp. 2633–2638.

- 81. Orton I.J.D. Vision based body gesture meta features for Affective Computing. arXiv. 2020:2003.00809.

- 82. Bakator M., Radosav D. Deep Learning and Medical Diagnosis: A Review of Literature. Multimodal Technol. Interact. 2018;2:47. doi: 10.3390/mti2030047.

- 83. Behoora I., Tucker C.S. Machine learning classification of design team members’ body language patterns for real time emotional state detection. Des. Stud. 2015;39:100–127. doi: 10.1016/j.destud.2015.04.003.

- 84. Wang J., Kothalkar P.V., Cao B., Heitzman D. Towards Automatic Detection of Amyotrophic Lateral Sclerosis from Speech Acoustic and Articulatory Samples. Interspeech. 2016;2016:1195–1199.

- 85. Zhang X., Wang S., Liu J., Tao C. Towards improving diagnosis of skin diseases by combining deep neural network and human knowledge. BMC Med. Inform. Decis. Mak. 2018;18:69–76. doi: 10.1186/s12911-018-0631-9.

- 86. Hegde S., Mundada M.R. Early prediction of chronic disease using an efficient machine learning algorithm through adaptive probabilistic divergence based feature selection approach. Int. J. Pervasive Comput. Commun. 2020;17:20–36. doi: 10.1108/IJPCC-04-2020-0018.

- 87. Yang L. Multi-Modal Depression Detection and Estimation; Proceedings of the 2019 8th International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW); Cambridge, UK. 3–6 September 2019; pp. 26–30.

- 88. Gao Q., Liu J., Ju Z., Zhang X. Dual-Hand Detection for Human–Robot Interaction by a Parallel Network Based on Hand Detection and Body Pose Estimation. IEEE Trans. Ind. Electron. 2019;66:9663–9672. doi: 10.1109/TIE.2019.2898624.

- 89. Duan H., Sun Y., Cheng W., Jiang D., Yun J., Liu Y., Zhou D. Gesture recognition based on multi-modal feature weight. Concurr. Comput. Pract. Exp. 2021;33:e5991. doi: 10.1002/cpe.5991.

- 90. Yang Z., Kay A., Li Y., Cross W., Luo J. Pose-based Body Language Recognition for Emotion and Psychiatric Symptom Interpretation; Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR); Milan, Italy. 10–15 January 2021.

- 91. Naudé W. Artificial Intelligence Against COVID-19: An Early Review. Institute of Labor Economics; Bonn, Germany: 2020.
