Key Points

Question

What is the quality and perceived usefulness of patient-submitted dermatology images?

Findings

In this survey study, 10 dermatologists found patient-submitted images useful for decision-making and sufficient in quality 55.1% and 62.2% of the time, respectively. Interrater agreement was fair to substantial for diagnostic categorization and fair to moderate for image quality and perceived usefulness, and the odds that an image was considered useful for decision-making were highest when the image depicted a wound, was in focus, and the evaluator was a senior faculty member.

Meaning

A slight majority of patient-submitted images were of adequate quality and perceived usefulness; thus, in-focus images may be useful for wound surveillance when reviewed by experienced attending physicians.

Abstract

Importance

Patient-submitted images vary considerably in quality and usefulness. Studies that characterize patient-submitted images in a real-life setting are lacking.

Objective

To evaluate the quality and perceived usefulness of patient-submitted images as determined by dermatologists and characterize agreement of their responses.

Design, Setting, and Participants

This survey study included patient images submitted to the Department of Dermatology at Duke University (Durham, North Carolina) between August 1, 2018, and December 31, 2019. From a total pool of 1200 images, 10 dermatologists evaluated 200 or 400 images each, with every image being evaluated by 3 dermatologists. Data analysis occurred during the year leading up to the article being written.

Main Outcomes and Measures

The primary outcomes were the responses to 2 questions, which were analyzed using frequency counts and interrater agreement (Fleiss κ) to assess image quality and perceived usefulness. We fit a random-effects logistic regression model to investigate factors associated with evaluators’ decision-making comfort. We hypothesized that most images would be of low quality and perceived usefulness, and that interrater agreement would be poor.

Results

A total of 259 of 2915 patient-submitted images (8.9%) did not depict a skin condition at all. The final analysis comprised 3600 unique image evaluations. Dermatologist evaluators indicated that 1985 images (55.1%) were useful for medical decision-making and 2239 (62.2%) were of sufficient quality. Interrater agreement for a given image’s diagnostic categorization was fair to substantial (κ range, 0.36-0.64), while agreement on image quality (κ range, 0.35-0.47) and perceived usefulness (κ range, 0.29-0.38) was fair to moderate. Senior faculty had higher odds of feeling comfortable with medical decision-making than junior faculty (odds ratio [OR], 3.68; 95% CI, 2.9-4.66; P < .001) and residents (OR, 5.55; 95% CI, 4.38-7.04; P < .001). Images that depicted wounds rather than inflammatory skin conditions (OR, 1.75; 95% CI, 1.18-2.58; P = .01) and images that were in focus (OR, 5.56; 95% CI, 4.63-6.67; P < .001) had higher odds of being considered useful for decision-making.

Conclusions and Relevance

In this survey study including 10 dermatologists, a slight majority of patient-submitted images were judged to be of adequate quality and perceived usefulness. Fair agreement between dermatologists was found regarding image quality and perceived usefulness, suggesting that store-and-forward teledermatology initiatives should consider a physician’s individual experiences and comfort level. The study results suggest that images are most likely to be useful when they are in focus and reviewed by experienced attending physicians for wound surveillance, but dermatologists may be burdened by irrelevant or unsuitable images.

This survey study examines the quality and perceived usefulness of patient-submitted dermatology images.

Introduction

Store-and-forward teledermatology uses electronically stored data, including patient photographs and demographic information, for clinical decision-making. Although adequate quality of patient-submitted photographs is important for diagnostic accuracy, poor-quality images are common (10%-40%).1,2,3 While previous studies used motivated patient cohorts and controlled study formats, we characterized the images that patients transmitted in a real-life setting. During a period of 1.5 years at our institution (Duke University; Durham, North Carolina), a slight majority of patient-submitted images were of adequate quality and considered helpful for medical decision-making.

Methods

This study met the criteria for exemption by the Duke University institutional review board, which included a waiver of informed consent. We included 2915 images that patients submitted to the Department of Dermatology at Duke University between August 1, 2018, and December 31, 2019. We excluded 72 images that could not be deidentified and 259 images that did not contain a skin condition (eFigure in the Supplement). Images were extracted from the electronic health record and uploaded to the Duke University Research Electronic Data Capture (REDCap) platform.4 Within REDCap, we generated surveys that contained a questionnaire for every image based on previously published studies, guidelines for clinical photography, and our determination of important information (eAppendix in the Supplement).5,6,7

The first 1200 images uploaded to REDCap were evaluated by dermatology faculty (n = 6) and dermatology resident physicians (n = 4) between March 5, 2021, and July 20, 2021 (eFigure and eAppendix in the Supplement). Each set of 200 images was evaluated by a unique combination of 3 image evaluators (eFigure in the Supplement). Every dermatology faculty member completed 400 evaluations, and resident physicians completed 200 or 400 evaluations each. Faculty evaluators consisted of senior faculty (associate professor or professor) and junior faculty (assistant professor).

Because of low sample sizes, categorically similar diagnoses were combined for analyses of interrater agreement and logistic regression (Figure). Agreement among 3 evaluators was determined using Fleiss κ statistics. Kappa values of 0.01 to 0.2 indicated slight agreement, 0.21 to 0.4 fair agreement, 0.41 to 0.6 moderate agreement, and 0.61 to 0.8 substantial agreement.8 A random-effects logistic regression model was fit to study the association between the evaluator’s comfort with medical decision-making and the clinical experience of evaluators, depicted skin conditions, and components of image quality. Correlations between evaluations of the same image by different evaluators and between evaluations of different images by the same evaluator were accounted for using a compound symmetry covariance structure. Statistical significance was assessed at α = .05. Statistical analyses were performed using SAS, version 9.4 (SAS Institute).
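As an illustration of the agreement statistic named above, the following is a minimal sketch of Fleiss κ for a fixed number of raters per item. It is not the SAS procedure used in the study; the function name `fleiss_kappa` and the toy ratings are hypothetical and chosen only to show the calculation.

```python
from collections import Counter


def fleiss_kappa(ratings):
    """Fleiss' kappa for items that were each rated by the same number of raters.

    `ratings` is a list of lists; each inner list holds the category labels
    that the raters assigned to one item (for example, one image).
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if any(len(item) != n_raters for item in ratings):
        raise ValueError("every item needs the same number of ratings")

    categories = sorted({label for item in ratings for label in item})
    counts = [Counter(item) for item in ratings]

    # Observed agreement: for each item, the share of rater pairs that agree.
    per_item = [
        (sum(c[cat] ** 2 for cat in categories) - n_raters)
        / (n_raters * (n_raters - 1))
        for c in counts
    ]
    p_observed = sum(per_item) / n_items

    # Chance agreement from the pooled category proportions.
    total = n_items * n_raters
    p_expected = sum((sum(c[cat] for c in counts) / total) ** 2 for cat in categories)

    if p_expected == 1:
        # Only one category was ever used, so kappa is undefined.
        return float("nan")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical toy data: 4 images, each rated by 3 evaluators.
toy_ratings = [
    ["yes", "yes", "yes"],
    ["yes", "yes", "no"],
    ["no", "no", "no"],
    ["yes", "no", "no"],
]
print(round(fleiss_kappa(toy_ratings), 3))  # 0.333
```

In the toy data, 2 of 4 images have full agreement, yet κ is only about 0.33 because half of the observed agreement is expected by chance when both answers are equally common.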

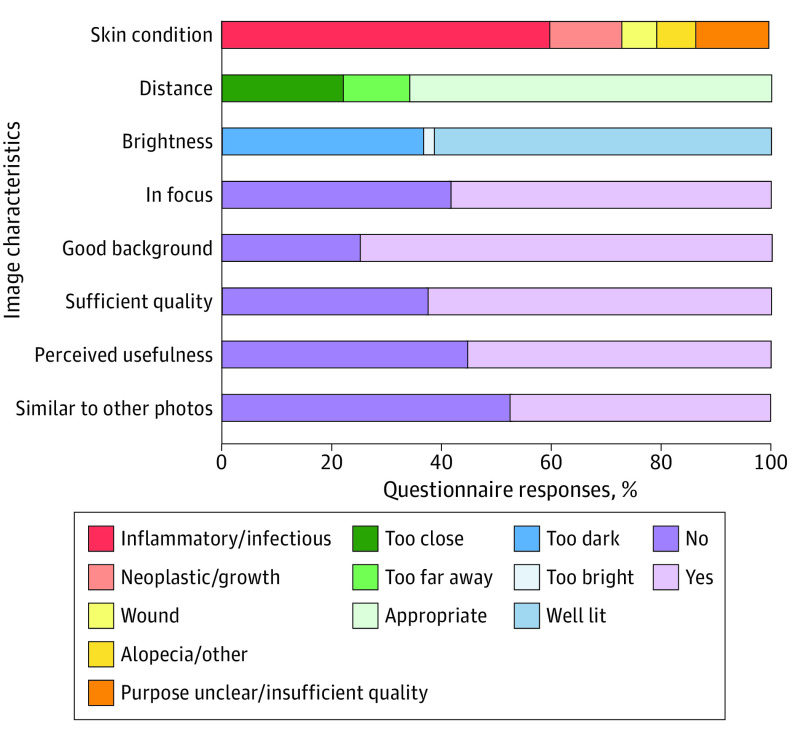

Figure. Frequency Counts of Questionnaire Responses to 3600 Image Evaluations.

Results are displayed as percentages. For the analysis of diagnostic categorization (question 1), the initial answers “inflammatory” and “infectious” were combined as “inflammatory”; “alopecia” and “other” were combined as “other”; and “the purpose of the photo is unclear” and “the image quality is insufficient to make a guess” were combined as “insufficient quality/information.”

Results

Characterization of Image Quality and Perceived Usefulness

The final analysis comprised 3600 image evaluations. Evaluators indicated that they would feel comfortable with medical decision-making for 1985 images (55.1%) and that 2239 images (62.2%) were of sufficient quality for the electronic health record (Figure). A total of 2352 images (65.3%) were appropriately distanced, 2189 (60.8%) sufficiently lit, 2091 (58.1%) in focus, and 2677 (74.4%) free of background distractions (Figure). A total of 2153 images (59.8%) depicted inflammatory skin conditions, 485 (13.5%) neoplasms/growths, 227 (6.3%) wounds, and 253 (7.0%) other skin conditions (Figure). Diagnostic categorization could not be assigned for 482 evaluations (13.4%) because of insufficient image quality or context (Figure). Additionally, 1697 responses (47.1%) indicated that images were similar to another image in the set (Figure).
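The percentages in this paragraph follow directly from the reported counts. A short sketch of that arithmetic is given below; the dictionary keys and the helper name `percent` are illustrative labels, not names from the study.

```python
TOTAL_EVALUATIONS = 3600  # 1200 images, each evaluated by 3 dermatologists

# Counts of diagnostic categorizations as reported in the text.
diagnostic_counts = {
    "inflammatory": 2153,
    "neoplasm/growth": 485,
    "wound": 227,
    "other": 253,
    "insufficient quality or context": 482,
}


def percent(count, total=TOTAL_EVALUATIONS):
    """Share of all evaluations, rounded to 1 decimal place as in the article."""
    return round(100 * count / total, 1)


# The five diagnostic categories should account for every evaluation.
assert sum(diagnostic_counts.values()) == TOTAL_EVALUATIONS

diagnostic_shares = {name: percent(n) for name, n in diagnostic_counts.items()}
for name, share in diagnostic_shares.items():
    print(f"{name}: {share}%")

print("useful for decision-making:", percent(1985))
print("sufficient quality:", percent(2239))
```

The check that the category counts sum to 3600 confirms that each evaluation received exactly one diagnostic categorization.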

Agreement Between Image Evaluators

Interrater agreement measures the degree to which responses of 2 or more evaluators are concordant.9 Across different levels of training, the interrater diagnostic agreement was fair to substantial (κ range, 0.36-0.64) (Table 1). Interrater agreement was fair to moderate for image quality (κ range, 0.35-0.47) and fair for perceived usefulness (κ range, 0.29-0.38) (Table 1). For the comparison between senior faculty only, agreement for diagnostic categorization was moderate (κ, 0.54; 95% CI, 0.47-0.62) while agreement for image quality (κ, 0.32; 95% CI, 0.23-0.42) and decision-making (κ, 0.32; 95% CI, 0.22-0.42) was fair (Table 1). Agreement in diagnosis and image quality between junior faculty and between resident physicians was similar to that between the senior faculty, while agreement on subjective usefulness in decision-making was lower compared with senior faculty (Table 1).

Table 1. Interrater Agreement of Different Evaluator Combinations for Key Survey Questions<sup>a</sup>

| Comparison | Diagnosis | Quality sufficient for EHR | Utility in decision-making |
|---|---|---|---|
| Senior faculty vs junior faculty vs resident<sup>b</sup> | 0.36 (0.29-0.43) | 0.35 (0.25-0.44) | 0.29 (0.19-0.38) |
| Senior faculty vs junior faculty vs resident<sup>b</sup> | 0.55 (0.48-0.63) | 0.37 (0.28-0.47) | 0.36 (0.26-0.45) |
| Senior faculty vs junior faculty vs resident<sup>b</sup> | 0.64 (0.58-0.71) | 0.47 (0.37-0.57) | 0.38 (0.29-0.48) |
| Senior faculty ×3 | 0.54 (0.47-0.62) | 0.32 (0.23-0.42) | 0.32 (0.22-0.42) |
| Junior faculty ×3 | 0.54 (0.47-0.61) | 0.28 (0.17-0.38) | 0.11 (0.02-0.19) |
| Resident ×3 | 0.47 (0.39-0.55) | 0.36 (0.26-0.46) | 0.18 (0.09-0.26) |

Abbreviation: EHR, electronic health record.

<sup>a</sup> Values are presented as Fleiss κ (95% CI).

<sup>b</sup> The senior faculty vs junior faculty vs resident physician comparison was performed 3 times; therefore, 3 Fleiss κ values are reported for this comparison. The remaining evaluator comparisons were each performed once.
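To read the κ values in Table 1 against the agreement bands quoted in the Methods, a small lookup such as the sketch below can help. The function name `agreement_band` is hypothetical. The "almost perfect" band comes from Landis and Koch (reference 8) and the label for values at or below 0 is an assumption; neither is listed in the Methods.

```python
def agreement_band(kappa):
    """Map a kappa value to the agreement bands quoted in the Methods."""
    if kappa > 0.8:
        return "almost perfect"  # band from Landis and Koch, not listed in the Methods
    if kappa > 0.6:
        return "substantial"
    if kappa > 0.4:
        return "moderate"
    if kappa > 0.2:
        return "fair"
    if kappa > 0:
        return "slight"
    return "no agreement beyond chance"  # assumed label for kappa <= 0


# Point estimates for utility in decision-making, copied from Table 1.
utility_kappas = {
    "mixed comparison 1": 0.29,
    "mixed comparison 2": 0.36,
    "mixed comparison 3": 0.38,
    "senior faculty x3": 0.32,
    "junior faculty x3": 0.11,
    "resident x3": 0.18,
}

for comparison, kappa in utility_kappas.items():
    print(f"{comparison}: kappa {kappa} -> {agreement_band(kappa)}")
```

Applied to the utility column, the three mixed comparisons and the senior faculty comparison fall in the fair band, whereas the junior faculty and resident comparisons fall in the slight band.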

Regression Analysis

Senior faculty had higher odds of feeling comfortable making a medical decision for a given image than junior faculty (odds ratio [OR], 3.68; 95% CI, 2.9-4.66; P < .001) and residents (OR, 5.55; 95% CI, 4.38-7.04; P < .001) (Table 2). The odds that an image evaluator felt comfortable with decision-making were 75% higher for wounds than inflammatory/infectious skin conditions (OR, 1.75; 95% CI, 1.18-2.58; P = .01) (Table 2). Conversely, the odds that neoplasms were considered useful for medical decision-making were 36% lower than for inflammatory/infectious skin conditions (OR, 0.64; 95% CI, 0.5-0.81; P < .001) (Table 2). Individual components of image quality were all significantly associated with higher odds of perceived decision-making usefulness when these components were evaluated positively (Table 2). In-focus images (OR, 5.56; 95% CI, 4.63-6.67; P < .001) and appropriately distanced images (OR, 3.96; 95% CI, 3.26-4.81; P < .001) had the highest odds of being considered useful (Table 2).

Table 2. Logistic Regression of Factors Associated With Image Decision-Making Utility<sup>a</sup>

| Covariates | Odds ratio (95% CI) | P value |
|---|---|---|
| Experience | | |
| Junior faculty vs resident | 1.51 (1.22-1.87) | <.001 |
| Senior faculty vs resident | 5.55 (4.38-7.04) | <.001 |
| Senior faculty vs junior faculty | 3.68 (2.9-4.66) | <.001 |
| Skin condition | | |
| Insufficient quality vs inflammatory | 0.04 (0.03-0.06) | <.001 |
| Neoplastic/growth vs inflammatory | 0.64 (0.5-0.81) | <.001 |
| Wound vs inflammatory | 1.75 (1.18-2.58) | .01 |
| Other vs inflammatory | 0.79 (0.57-1.09) | .15 |
| Image quality components | | |
| Appropriately distanced vs too close/far | 3.96 (3.26-4.81) | <.001 |
| Well lit vs too bright/dark | 2.7 (2.23-3.26) | <.001 |
| In focus vs not | 5.56 (4.63-6.67) | <.001 |
| Background free of distraction vs not | 1.49 (1.21-1.83) | <.001 |

<sup>a</sup> The answer to question 7 (feeling comfortable making a medical decision based on the attached image) was the outcome in the model. Experience level, diagnostic categorization of the depicted skin condition, and components of image quality were covariates in the model.
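The "75% higher" and "36% lower" statements in the text are the odds ratios re-expressed as a percentage change in odds, (OR − 1) × 100. The sketch below shows that conversion for 3 rows of Table 2. It also checks that each reported odds ratio sits near the geometric midpoint of its confidence interval, which would be expected if the intervals are symmetric on the log-odds scale; that symmetry is an assumption here, since the article does not state how the intervals were computed. All function and variable names are illustrative.

```python
import math

# Odds ratios and 95% CI bounds copied from Table 2: (OR, lower, upper).
TABLE_2_ROWS = {
    "wound vs inflammatory": (1.75, 1.18, 2.58),
    "neoplastic/growth vs inflammatory": (0.64, 0.50, 0.81),
    "in focus vs not": (5.56, 4.63, 6.67),
}


def percent_change_in_odds(odds_ratio):
    """Express an odds ratio as a percentage change in odds versus the reference."""
    return (odds_ratio - 1) * 100


def ci_geometric_midpoint(ci_low, ci_high):
    """Midpoint of a CI on the log scale, transformed back to the OR scale."""
    return math.sqrt(ci_low * ci_high)


for label, (odds_ratio, ci_low, ci_high) in TABLE_2_ROWS.items():
    change = percent_change_in_odds(odds_ratio)
    midpoint = ci_geometric_midpoint(ci_low, ci_high)
    print(f"{label}: {change:+.0f}% odds; CI midpoint {midpoint:.2f} vs reported OR {odds_ratio}")
```

Note that these are changes in odds, not in probability: an odds ratio of 5.56 for in-focus images does not mean that such images were 5.56 times as likely to be considered useful.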

Discussion

Use of store-and-forward teledermatology has recently increased and may improve access to dermatologic care. This study found that 62.2% of patient-submitted images were of sufficient quality and 55.1% were considered useful for decision-making.

There was fair to moderate concordance in image evaluators’ perceptions of quality and usefulness. Despite this relatively low concordance, there was substantially higher agreement among image evaluators on diagnosis of a depicted skin condition; dermatologists felt more comfortable providing a diagnostic categorization rather than decision-making based on the images (86.6% vs 55.1% of responses). Previous studies suggested that diagnoses could be moderately to substantially concordant even when image reviewers had lower comfort with decision-making.10,11 Evaluator agreement has the potential to be higher during in-person appointments; one study observed that the diagnostic agreement between 2 dermatologists evaluating patients in person was substantially higher than the agreement between the same dermatologists who reviewed patient images remotely (88% vs 68%).12

Previous research found that increased messages and images from patients were correlated with higher burnout scores for clinicians.13 In this study, 8.9% of all images did not depict skin conditions, evaluators were unable to assign a diagnosis 13.4% of the time, and evaluators felt uncomfortable with decision-making for a given image 44.9% of the time, possibly contributing to burnout associated with reviewing high volumes of patient-submitted images.

It is important to consider the circumstances in which patient-submitted photographs are most useful for clinical practice. Image evaluation for postoperative wound assessment could yield strong agreement between in-person and remote clinical review for diagnosing surgical site infections.14 In another study, wound photography was associated with increased specificity and surgeon confidence but decreased sensitivity of surgical site infection diagnosis in a practical simulation.15 Although both studies used clinician/researcher-taken photographs, the present study may support the utility of patients sending digital images to physicians for postoperative wound surveillance.

Limitations

This study had several limitations. Patient-submitted images were deidentified and presented without clinical context. Additional clinical information from patient messages or electronic health record review may have increased the image evaluator’s comfort with medical decision-making and strengthened diagnostic categorization. A substantial portion of patient-submitted images were similar to one another. Without patient identification, we were unable to ascertain the weight that each set of low-quality or high-quality images contributed. Finally, we analyzed interrater agreement for remotely reviewed images but did not include in-person or histopathological outcomes. Future studies should examine outcomes following transmission of patient-submitted photographs.

Conclusions

The results of this survey study suggest that a slight majority of patient-submitted images were of sufficient quality and perceived usefulness, but many were not considered helpful for decision-making. Dermatologists and dermatology residents were in fair to moderate agreement regarding image quality and perceived usefulness. Patient-submitted images are most likely to be useful for wound surveillance when they are in focus and reviewed by experienced dermatologists.

eFigure. Experimental set up to evaluate 1200 patient-submitted images

eAppendix. Questionnaire assigned to each image and instructions for evaluators

References

- 1. Hubiche T, Valério L, Boralevi F, et al; Research Group of the French Society of Pediatric Dermatology Groupe de Recherche de la Société Française de Dermatologie Pédiatrique. Visualization of patients’ skin lesions on their smartphones: a new step during dermatology visits. JAMA Dermatol. 2016;152(1):95-97. doi: 10.1001/jamadermatol.2015.2977

- 2. Rimner T, Blozik E, Fischer Casagrande B, Von Overbeck J. Digital skin images submitted by patients: an evaluation of feasibility in store-and-forward teledermatology. Eur J Dermatol. 2010;20(5):606-610. doi: 10.1684/ejd.2010.1019

- 3. Weingast J, Scheibböck C, Wurm EM, et al. A prospective study of mobile phones for dermatology in a clinical setting. J Telemed Telecare. 2013;19(4):213-218. doi: 10.1177/1357633x13490890

- 4. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377-381. doi: 10.1016/j.jbi.2008.08.010

- 5. O’Connor DM, Jew OS, Perman MJ, Castelo-Soccio LA, Winston FK, McMahon PJ. Diagnostic accuracy of pediatric teledermatology using parent-submitted photographs: a randomized clinical trial. JAMA Dermatol. 2017;153(12):1243-1248. doi: 10.1001/jamadermatol.2017.4280

- 6. Muraco L. Improved medical photography: key tips for creating images of lasting value. JAMA Dermatol. 2020;156(2):121-123. doi: 10.1001/jamadermatol.2019.3849

- 7. Finnane A, Curiel-Lewandrowski C, Wimberley G, et al; International Society of Digital Imaging of the Skin for the International Skin Imaging Collaboration. Proposed technical guidelines for the acquisition of clinical images of skin-related conditions. JAMA Dermatol. 2017;153(5):453-457. doi: 10.1001/jamadermatol.2016.6214

- 8. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159-174. doi: 10.2307/2529310

- 9. McHugh ML. Interrater reliability: the kappa statistic. Biochem Med (Zagreb). 2012;22(3):276-282. https://pubmed.ncbi.nlm.nih.gov/23092060. doi: 10.11613/BM.2012.031

- 10. Gabel CK, Nguyen E, Karmouta R, et al. Use of teledermatology by dermatology hospitalists is effective in the diagnosis and management of inpatient disease. J Am Acad Dermatol. 2021;84(6):1547-1553. doi: 10.1016/j.jaad.2020.04.171

- 11. Trinidad J, Gabel CK, Han JJ, et al. Telemedicine and dermatology hospital consultations during the COVID-19 pandemic: a multi-centre observational study on resource utilization and conversion to in-person consultations during the COVID-19 pandemic. J Eur Acad Dermatology Venereol. Published online December 21, 2021. doi: 10.1111/jdv.17898

- 12. Börve A, Holst A, Gente-Lidholm A, Molina-Martinez R, Paoli J. Use of the mobile phone multimedia messaging service for teledermatology. J Telemed Telecare. 2012;18(5):292-296. doi: 10.1258/jtt.2012.120206

- 13. Borre ED, Nicholas MW. The disproportionate burden of electronic health record messages with image attachments in dermatology. J Am Acad Dermatol. 2022;86(2):492-494. doi: 10.1016/j.jaad.2021.09.026

- 14. Totty JP, Harwood AE, Wallace T, Smith GE, Chetter IC. Use of photograph-based telemedicine in postoperative wound assessment to diagnose or exclude surgical site infection. J Wound Care. 2018;27(3):128-135. doi: 10.12968/jowc.2018.27.3.128

- 15. Kummerow Broman K, Gaskill CE, Faqih A, et al. Evaluation of wound photography for remote postoperative assessment of surgical site infections. JAMA Surg. 2019;154(2):117-124. doi: 10.1001/jamasurg.2018.3861
