Abstract

Background and Objectives: Plain radiographs are commonly the first step in routine trauma imaging, yet up to 67% of fractures of the thoracolumbar (TL) spine are missed on them. The aim of this study was to develop a deep learning model that detects traumatic fractures on sagittal radiographs of the TL spine. Identifying vertebral fractures on simple radiographic projections would have a significant clinical and financial impact, especially in low- and middle-income countries where computed tomography (CT) and magnetic resonance imaging (MRI) are not readily available. Such a model could also help select the patients who need second-level imaging, thus improving cost-effectiveness. Materials and Methods: Imaging studies (radiographs, CT, and/or MRI) of 151 patients were used. An expert group of three spinal surgeons reviewed all available images to confirm the presence and type of fractures. In total, 630 single vertebra images were extracted from the sagittal radiographs of the 151 patients: 302 exhibiting a vertebral body fracture and 328 exhibiting no fracture. Following augmentation, these single vertebra images were used to train, validate, and comparatively test two deep learning convolutional neural network models, namely ResNet18 and VGG16. A heatmap analysis was then conducted to better understand the predictions of each model. Results: ResNet18 demonstrated better performance, achieving higher sensitivity (91%), specificity (89%), and accuracy (88%) than VGG16 (90%, 83%, and 86%, respectively). In 81% of the cases, the “warm zone” in the heatmaps correlated with the findings suggestive of fracture within the vertebral body seen in the imaging studies. Vertebrae T12 to L2 were the most frequently involved, accounting for 48% of the fractures. A4, A3, and A1 were the most frequent fracture types according to the AO Spine Classification. Conclusions: ResNet18 could accurately identify traumatic vertebral fractures on TL sagittal radiographs. In most cases, the model based its prediction on the same areas that human expert classifiers used to determine the presence of a fracture.

Keywords: vertebral fracture, fracture detection, heatmap, machine learning, artificial intelligence

1. Introduction

The thoracolumbar (TL) spine is the most frequent site of traumatic fractures [1,2], with blunt trauma being the most common cause [3,4]. Traumatic TL fractures are serious injuries associated with decreased physical function, a severe reduction in quality of life, and the lowest rate of return to work among all major organ injuries [2,3,4,5,6]. Although controversy exists, plain radiographs of the spine are commonly the first step in routine trauma imaging, especially in hemodynamically stable patients and in low- and middle-income countries with limited availability of computed tomography (CT) and magnetic resonance imaging (MRI) [7,8,9,10,11,12]. Despite their widespread use, the reported false-negative rates for diagnosing TL fractures on plain radiographs remain high, ranging from 24 to 67% [13,14,15,16]. A missed vertebral fracture can result in chronic pain, deformity, and delayed injury to the spinal cord and/or adjacent nerve roots, which occurs in 19–50% of cases [14,17].

The recent explosion of labeled data, so-called ‘big data’, has brought the era of artificial intelligence (AI) to medical diagnostics, and imaging in particular has benefited from AI-based innovations [18]. In spine imaging, promising results have recently been published for the assessment of degenerative disorders [19], adult deformities [20], and adolescent idiopathic scoliosis [21], as well as for the detection of primary and secondary bone tumors [22,23] and vertebral fractures [24]. Deep learning (DL) is a machine learning method that uses an algorithmic structure most commonly based on neural networks, such as convolutional neural networks. DL has been reported to perform as well as or even better than humans in image classification [25]. The power of this technique lies in its ability to identify and extract relevant features from labeled data at a large scale [26].

The main aim of this study was to adapt existing DL models to accurately detect vertebral fractures on sagittal radiographs of the TL spine. The secondary aim was to gain a deeper understanding of the model’s interpretation of the “fracture zone” through a heatmap representation. This study did not aim to compare the diagnostic accuracy of the DL models against expert human classifiers such as surgeons or radiologists. By supporting the treating physician in identifying fractures in routine trauma imaging, a diagnostic aid tool is expected to reduce the rate of vertebral fractures missed on plain radiographs.

2. Materials and Methods

2.1. Patient Selection and Image Acquisition

Imaging studies of 362 patients older than 12 years who were treated for traumatic vertebral fractures from 2010 to 2020 at a spine surgery reference center (ASST Grande Ospedale Metropolitano Niguarda, Milano, Italy) were retrospectively reviewed. Patients were identified using internal disease and procedure codes corresponding to traumatic injuries of the spine (Table 1).

Table 1.

Disease and procedure codes.

| Disease Codes | Procedure Codes |
|---|---|
| Fracture of thoracic spine | Thoracolumbar instrumentation |
| Fracture of thoracolumbar spine | Instrumentation lumbar spine |
| Fracture of lumbar spine | Instrumentation thoracic spine |
| Vertebra fracture | Osteosynthesis of the spine |
| Vertebra injury | Spinopelvic fixation |
| | Kyphoplasty |
| | Spinal fixation |

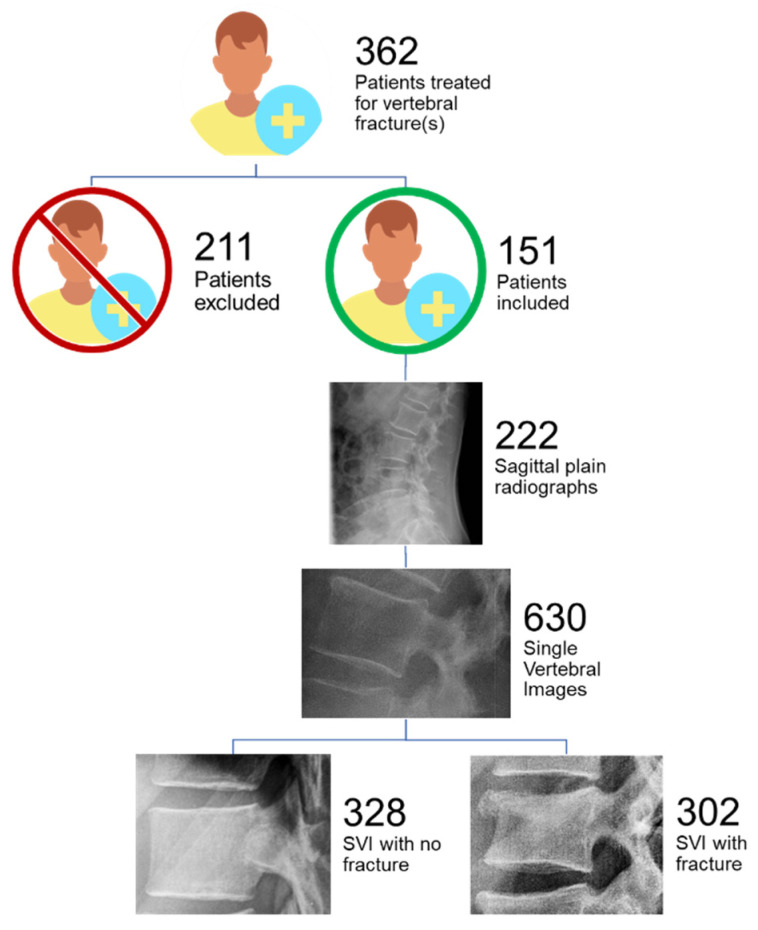

Fractures resulting from mechanisms other than trauma, such as osteoporotic or pathologic fractures, were excluded. Of the remaining patients, only those with complete imaging studies, defined as a plain sagittal radiograph plus CT and/or MRI data, were included. Applying these criteria, 151 patients were selected for the final analysis.

In total, 222 sagittal radiographs of the TL spine and their corresponding CT and/or MRI data were obtained from the 151 patients. When repeated X-rays of the same patient showed a change in the observed fracture morphology, more than one sagittal projection from that patient was used, which is why there are more radiographs (222) than patients (151) (Figure 1).

Figure 1.

Patient inclusion and image set acquisition. SVI: single vertebra images.

2.2. Standard of Reference

An expert group of three spinal surgeons with more than 20 years of combined experience (approx. 15, 6, and 5 years) identified fractures on each sagittal radiograph, using all available imaging modalities to ensure a high diagnostic standard, including bone windows on multidetector CT, low-dose spine CT, and short tau inversion recovery (STIR) sequences on MRI. The fractures were then classified according to the AO Spine Classification. Each case was first evaluated independently by each surgeon. Where disagreement existed, meetings were held to reach unanimous consensus.

2.3. Image Processing and Annotation

Each sagittal radiograph was annotated using custom C++ software developed specifically for this study. Following the surgeons’ indications, the annotator located all fractured vertebrae on each sagittal radiograph and cropped each one to produce a single vertebra image. This resulted in 302 single vertebra images classified as “fracture”. The vertebral level (T1 to L5) and the fracture type according to the AO Spine Classification were then assigned to each image. In addition, radiologically confirmed non-fractured vertebrae from the same spine segments as the fractured vertebrae (i.e., thoracic or lumbar) were cropped from the same radiographs and included in a control group. To keep the groups balanced, 328 single vertebra images classified as “non-fracture” were cropped, for a total of 630 single vertebra images.
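The annotation tool used in the study was custom C++ software that is not described further. As a rough illustration of the cropping step only, the Python sketch below cuts single vertebra images out of a radiograph array using rectangular boxes. The `VertebraBox` record, the box convention (inclusive top-left, exclusive bottom-right), the optional `margin`, and all function names are hypothetical and are not taken from the study.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

import numpy as np


@dataclass
class VertebraBox:
    """Hypothetical annotation record for one vertebra on a sagittal radiograph."""
    level: str                # vertebral level, e.g. "L1" (T1 to L5 in the study)
    label: str                # "fracture" or "non-fracture"
    ao_type: Optional[str]    # AO Spine type for fractures (e.g. "A3"), None otherwise
    x0: int                   # left edge (column index, inclusive)
    y0: int                   # top edge (row index, inclusive)
    x1: int                   # right edge (column index, exclusive)
    y1: int                   # bottom edge (row index, exclusive)


def crop_vertebra(radiograph: np.ndarray, box: VertebraBox, margin: int = 0) -> np.ndarray:
    """Cut one single vertebra image out of a 2D radiograph.

    The box is optionally enlarged by `margin` pixels on every side and then
    clipped to the image borders, so the crop never goes out of bounds.
    """
    height, width = radiograph.shape[:2]
    y0 = max(box.y0 - margin, 0)
    y1 = min(box.y1 + margin, height)
    x0 = max(box.x0 - margin, 0)
    x1 = min(box.x1 + margin, width)
    return radiograph[y0:y1, x0:x1].copy()


def build_single_vertebra_images(
    radiograph: np.ndarray, boxes: List[VertebraBox], margin: int = 0
) -> List[Tuple[np.ndarray, str, str]]:
    """Return (crop, label, level) triples for every annotated vertebra."""
    return [(crop_vertebra(radiograph, b, margin), b.label, b.level) for b in boxes]
```

Fractured and control vertebrae from the same radiograph go through the same function, so both classes share identical acquisition conditions and differ only in their label.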

2.4. Adapting the Deep Learning Model

To achieve our aim, we first pre-selected, adapted, and then compared the performance of two existing DL models in the task of identifying traumatic fractures on plain sagittal radiographs of the TL spine.

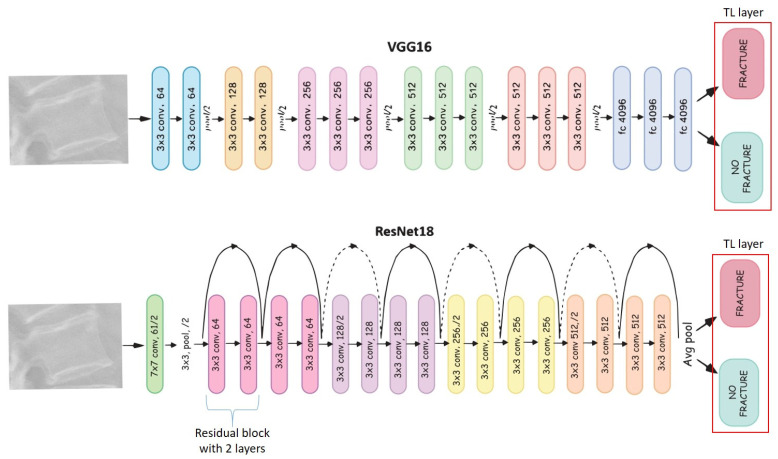

Two deep learning convolutional neural network models, VGG16 [27] and ResNet18 [28], were pre-selected as building blocks because of their state-of-the-art performance on computer vision tasks such as image classification, object detection, and landmark localization [29]. The main difference between the two models is that VGG16 is a plain network in which the image is compressed step by step until the final classification layer, whereas ResNet tries to preserve as much of the input of each block as possible using skip connections (indicated by the arrows in Figure 2).

Figure 2.

Comparison of the two deep learning convolutional neural network architectures VGG16 and ResNet18. Each colored block corresponds to a layer. The “fracture” and “no fracture” blocks are the output neurons. The last original layer of both architectures is removed and replaced by a layer with two neurons, namely “fracture” and “no fracture”. Replacing the last layer of a pre-trained network in this way is a common form of transfer learning. The dotted lines indicate an increase in the number of convolutional filters at the residual block’s input to match the number of output filters of the same block. TL: thoracolumbar; conv.: convolution.
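To make the difference between a plain and a residual block concrete, here is a minimal PyTorch sketch of two blocks that differ only in the shortcut. It is a simplification, not the exact VGG16 or ResNet18 definition: the real VGG16 layers have no batch normalization, and the `conv_body` helper and block names are our own.

```python
import torch
import torch.nn as nn


def conv_body(in_ch: int, out_ch: int, stride: int = 1) -> nn.Sequential:
    """Two 3x3 convolutions with batch normalization, as in a ResNet18 basic block."""
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=3, stride=stride, padding=1, bias=False),
        nn.BatchNorm2d(out_ch),
        nn.ReLU(),
        nn.Conv2d(out_ch, out_ch, kernel_size=3, padding=1, bias=False),
        nn.BatchNorm2d(out_ch),
    )


class PlainBlock(nn.Module):
    """No shortcut: the output depends only on the transformed features."""

    def __init__(self, in_ch: int, out_ch: int, stride: int = 1):
        super().__init__()
        self.body = conv_body(in_ch, out_ch, stride)
        self.relu = nn.ReLU()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.relu(self.body(x))


class ResidualBlock(nn.Module):
    """Skip connection: the block input is added back to the transformed features."""

    def __init__(self, in_ch: int, out_ch: int, stride: int = 1):
        super().__init__()
        self.body = conv_body(in_ch, out_ch, stride)
        if stride != 1 or in_ch != out_ch:
            # 1x1 projection that raises the number of filters (and downsamples) so the
            # input can be added to the output; cf. the dotted lines in Figure 2
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_ch, out_ch, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(out_ch),
            )
        else:
            self.shortcut = nn.Identity()
        self.relu = nn.ReLU()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.relu(self.body(x) + self.shortcut(x))
```

If all convolution weights are set to zero, the residual block still passes ReLU(x) through while the plain block outputs zeros. This is one way to see how the skip connection preserves the block's input.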

Since the number of available images was limited, we used transfer learning [30,31], a technique in which a model trained on one task is repurposed for a second, related task using the knowledge acquired on the first. Specifically, the last two residual blocks of ResNet18 and the final classification block of VGG16 were repurposed for the new task of vertebral fracture classification. A 10-fold cross-validation was performed. The adaptations were implemented in Python using PyTorch (Version 1.7, developed by Meta, Menlo Park, CA, USA) [32].

2.5. Training and Test Sets

The single vertebra image dataset was split into a training set (N = 578) and a test set (N = 52), both containing a balanced mix of classes: 278 “fracture” and 300 “non-fracture” images in the training set, and 24 “fracture” and 28 “non-fracture” images in the test set. To increase the generalization capability of the model, we used augmentation techniques such as random rotation, flipping, and shifting, so that the model was trained on different versions of the same single vertebra images across training epochs. The images were resized to 512 × 512 pixels and normalized to zero mean and unit variance following the conventions of the ImageNet challenge, a reference standard for computer vision tasks.

For training and evaluation, we used a Linux workstation with an NVIDIA Quadro RTX 5000 GPU (Salt Lake City, UT, USA). The models were trained for 200 epochs with a batch size of 32 and a learning rate of 0.00016. We used the Adam optimizer as implemented in PyTorch, together with a scheduler that reduced the learning rate by a factor of 0.1 if the accuracy did not improve for 10 consecutive epochs (ReduceLROnPlateau in PyTorch). The models were implemented using the PyTorch library, and the results and statistical evaluation were computed with the NumPy and scikit-learn libraries.
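The optimizer and learning-rate schedule described above map onto PyTorch roughly as follows. This is a sketch: the loss function (cross-entropy) is an assumption, the monitored accuracy is assumed to be validation accuracy, and `patience=10` is our reading of “10 consecutive epochs”. Note that PyTorch lowers the rate only once the number of epochs without improvement exceeds `patience`.

```python
from typing import Iterable, Tuple

import torch
import torch.nn as nn

BATCH_SIZE = 32         # reported batch size
NUM_EPOCHS = 200        # reported number of epochs
LEARNING_RATE = 1.6e-4  # reported learning rate (0.00016)


def make_training_objects(model: nn.Module, lr: float = LEARNING_RATE):
    """Adam on the trainable parameters, plus a plateau scheduler on accuracy."""
    params = [p for p in model.parameters() if p.requires_grad]
    optimizer = torch.optim.Adam(params, lr=lr)
    scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(
        optimizer,
        mode="max",     # the monitored value (accuracy) should increase
        factor=0.1,     # multiply the learning rate by 0.1 on a plateau
        patience=10,    # assumed mapping of "10 consecutive epochs"
    )
    criterion = nn.CrossEntropyLoss()  # assumption: loss function not stated in the text
    return optimizer, scheduler, criterion


def train_one_epoch(model: nn.Module,
                    loader: Iterable[Tuple[torch.Tensor, torch.Tensor]],
                    optimizer: torch.optim.Optimizer,
                    criterion: nn.Module,
                    device: str = "cpu") -> float:
    """One pass over the training batches; returns the mean batch loss."""
    model.train()
    total, n_batches = 0.0, 0
    for images, labels in loader:
        images, labels = images.to(device), labels.to(device)
        optimizer.zero_grad()
        loss = criterion(model(images), labels)
        loss.backward()
        optimizer.step()
        total += loss.item()
        n_batches += 1
    return total / max(n_batches, 1)
```

In a full loop, each epoch would call `train_one_epoch`, compute the validation accuracy, and pass it to `scheduler.step(val_accuracy)`. Batches of 32 images would come from a `torch.utils.data.DataLoader(dataset, batch_size=BATCH_SIZE, shuffle=True)`.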

2.6. Model’s Performance Parameters

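The model’s performance was assessed quantitatively by calculating the accuracy, sensitivity, and specificity of fracture identification. Accuracy represents the ability of the model to assign the images to the correct class [33], in this case, to correctly predict the presence or absence of a fracture in each single vertebra image. Sensitivity is the proportion of fractured vertebrae that the model correctly identifies as fractures, and specificity is the proportion of non-fractured vertebrae that it correctly identifies as not fractured. The negative predictive value, the proportion of “no fracture” predictions that are correct, is also reported.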
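These metrics follow directly from the confusion matrix counts. The plain-Python helper below is a sketch with “fracture” treated as the positive class. The names are ours, and the counts in the example are made up for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # fractured vertebra predicted as "fracture"
    fn: int  # fractured vertebra predicted as "no fracture" (missed fracture)
    tn: int  # intact vertebra predicted as "no fracture"
    fp: int  # intact vertebra predicted as "fracture"


def counts_from_labels(y_true: Sequence[int], y_pred: Sequence[int]) -> ConfusionCounts:
    """Tally a confusion matrix; 1 = fracture, 0 = no fracture."""
    pairs = list(zip(y_true, y_pred))
    return ConfusionCounts(
        tp=sum(1 for t, p in pairs if t == 1 and p == 1),
        fn=sum(1 for t, p in pairs if t == 1 and p == 0),
        tn=sum(1 for t, p in pairs if t == 0 and p == 0),
        fp=sum(1 for t, p in pairs if t == 0 and p == 1),
    )


def binary_metrics(c: ConfusionCounts) -> Dict[str, float]:
    total = c.tp + c.fn + c.tn + c.fp
    return {
        "sensitivity": c.tp / (c.tp + c.fn),  # share of fractures detected
        "specificity": c.tn / (c.tn + c.fp),  # share of intact vertebrae recognized
        "npv": c.tn / (c.tn + c.fn),          # share of "no fracture" calls that are correct
        "accuracy": (c.tp + c.tn) / total,    # share of all images classified correctly
    }
```

For example, with 40 true positives, 10 false negatives, 45 true negatives, and 5 false positives, sensitivity is 0.80, specificity 0.90, and accuracy 0.85. Accuracy is a weighted average of sensitivity and specificity (weighted by the shares of fractured and non-fractured images). It therefore always lies between the two when all three are computed on the same set.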

2.7. Understanding the Model’s Prediction

To verify that the model’s predictions were based on correct identification of the fracture zone, we conducted a heatmap analysis based on class activation maps. These depict the areas of the image that led the model to classify the vertebra as “fracture” or “no fracture” by displaying a “warm zone”. Technically, they were obtained by multiplying the feature maps of the second-to-last layer of the network by the weights connecting that layer to the output neuron of the class predicted by the model. Finally, the same surgeons who set the standard of reference evaluated each heatmap to determine whether the “warm zones” correlated with the fracture zones seen in the CT and MRI data.
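This heatmap computation corresponds to the class activation map (CAM) technique, which applies to networks that end in global average pooling followed by a single fully connected layer, as ResNet18 does. The NumPy sketch below assumes the last convolutional feature maps and the final layer's weight matrix have already been extracted. For a 512 × 512 input, torchvision's ResNet18 yields 512 maps of 16 × 16 from `layer4`, and the weights are `model.fc.weight`. Clipping negative values, scaling to [0, 1], and nearest-neighbour upsampling are display conventions we assume here; the text does not report them.

```python
from typing import Optional, Tuple

import numpy as np


def class_activation_map(
    features: np.ndarray,
    fc_weights: np.ndarray,
    class_idx: int,
    out_size: Optional[Tuple[int, int]] = None,
) -> np.ndarray:
    """Class activation map for one image.

    features:   (C, h, w) maps from the last convolutional layer
    fc_weights: (num_classes, C) weights of the final fully connected layer
    class_idx:  index of the class to explain (e.g. the predicted one)
    out_size:   optional (H, W) to upsample the map to the input image size
    """
    # Weighted sum over channels: sum_c w[class_idx, c] * features[c]
    cam = np.tensordot(fc_weights[class_idx], features, axes=([0], [0]))
    cam = np.maximum(cam, 0.0)         # keep only evidence for the class
    if cam.max() > 0:
        cam = cam / cam.max()          # scale to [0, 1] for display as a heatmap
    if out_size is not None:
        height, width = out_size
        rows = (np.arange(height) * cam.shape[0]) // height
        cols = (np.arange(width) * cam.shape[1]) // width
        cam = cam[np.ix_(rows, cols)]  # nearest-neighbour upsampling
    return cam
```

The resulting map can be overlaid on the single vertebra image as a color heatmap. High values form the “warm zone” that drove the prediction for `class_idx`.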

3. Results

3.1. Epidemiological Distribution of TL Fractures

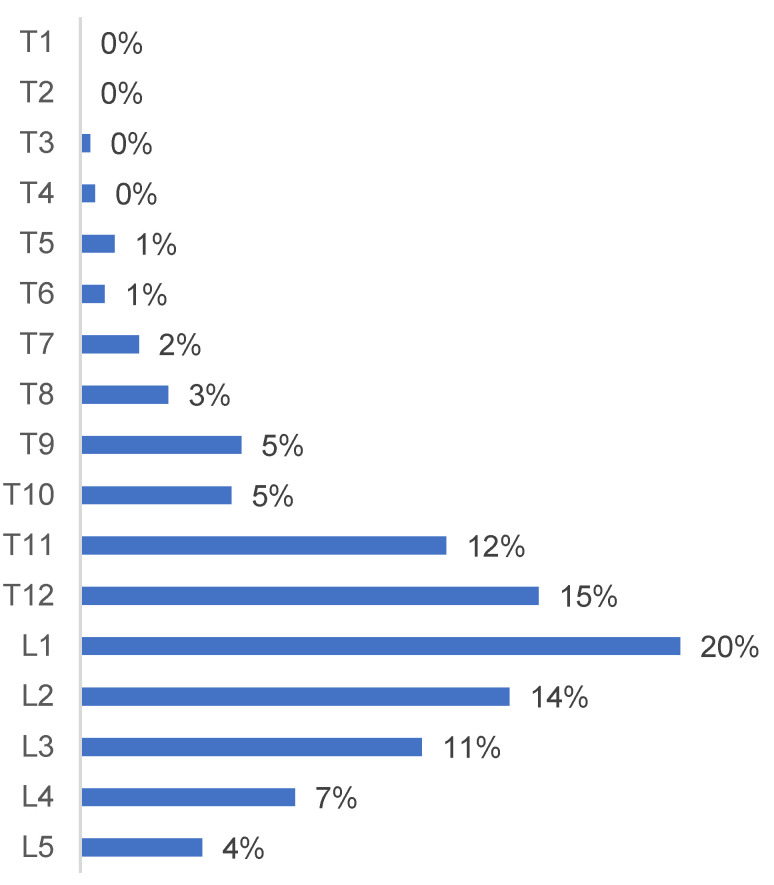

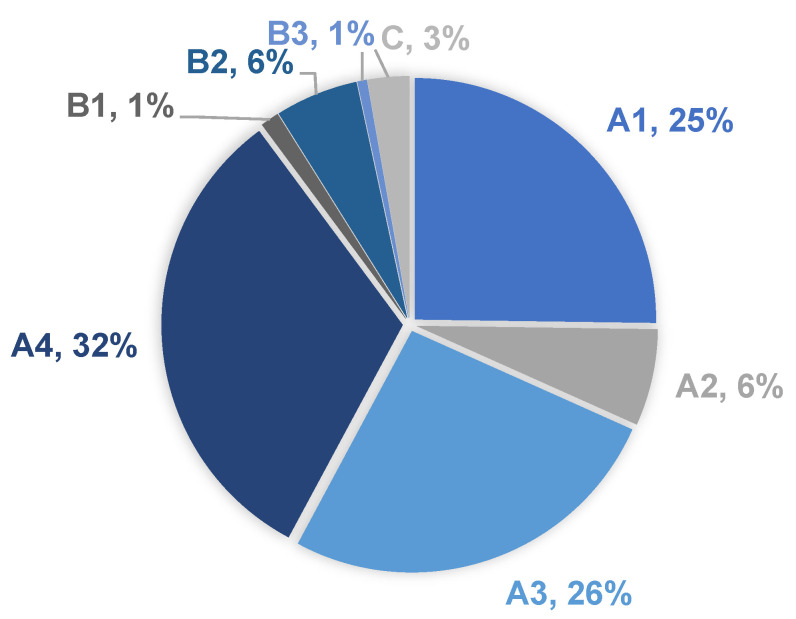

Vertebrae T12 to L2 were the most frequently involved, accounting for 48% of the fractures (Figure 3). A4, A3, and A1 were the most frequent fracture types according to the AO Spine Classification (Figure 4).

Figure 3.

Epidemiological distribution of the thoracolumbar fractures at vertebral levels from T1 to L5.

Figure 4.

Thoracolumbar fracture types according to the AO Spine Classification and their distribution among the patients.

3.2. Deep Learning Model Performance

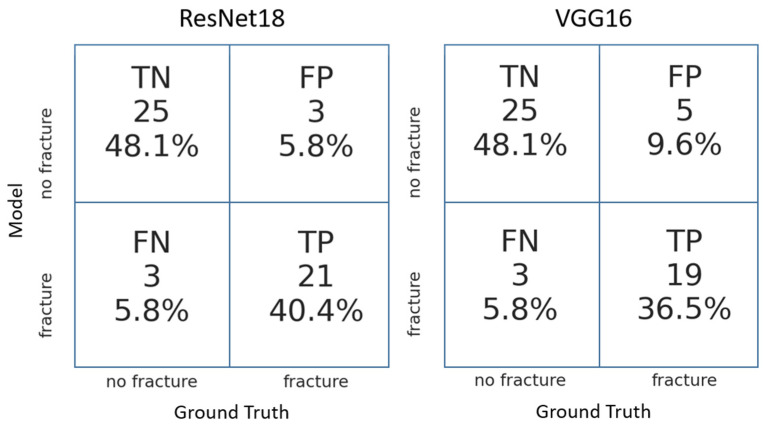

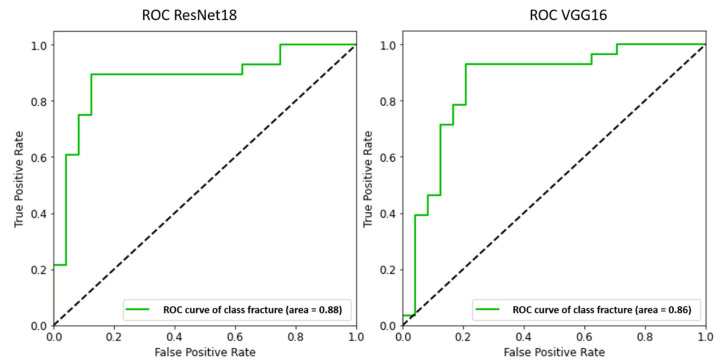

Both DL models achieved high accuracy, sensitivity, and specificity after hyperparameter optimization. In the direct comparison, ResNet18 performed better, achieving higher sensitivity and specificity (Figure 5, Table 2) as well as higher accuracy (Figure 6, Table 2).

Figure 5.

Confusion matrices obtained with the two deep learning convolutional neural network models ResNet18 and VGG16. TN: true negative; FN: false negative; TP: true positive; FP: false positive.

Table 2.

Performance comparison of the two deep learning convolutional neural network models ResNet18 and VGG16.

| Model | Sensitivity | Specificity | Negative Predictive Value | Accuracy |
|---|---|---|---|---|
| ResNet18 | 0.91 | 0.89 | 0.89 | 0.88 |
| VGG16 | 0.90 | 0.83 | 0.89 | 0.86 |

Figure 6.

Comparison of the receiver operating characteristic (ROC) curves obtained with the two deep learning convolutional neural network models ResNet18 and VGG16.

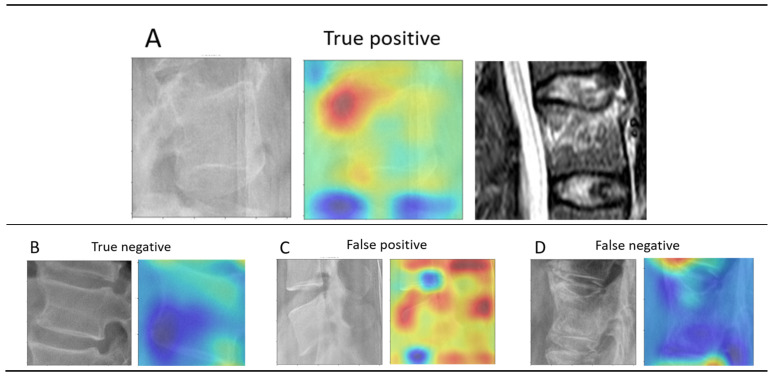

3.3. Heatmap Analysis

In 81% of the single vertebra images, the “warm zone” correlated with the fracture zone observed in the corresponding CT or MRI data (Figure 7). In the remaining 19% of cases, the “warm zone” was located in the immediate vicinity of the fracture zone.

Figure 7.

Heatmap analysis of the fracture zone. (A) Although challenging to observe on the radiograph (left), the signal hyperintensity in the MRI image (right) correlates with the “warm zone” on the activation map (middle). (B) No “warm zone” is displayed, thus ruling out the presence of a fracture. (C) Multiple “warm zones” are displayed, thus incorrectly suggesting presence of fracture(s). (D) No “warm zone” is displayed within the vertebral body, incorrectly ruling out the presence of a fracture.

4. Discussion

This study demonstrated that existing DL models can be adapted to accurately detect vertebral fractures on sagittal radiographs of the TL spine. Both models achieved sensitivity and specificity similar to those reported for expert surgeons and radiologists [24,34,35,36,37,38,39]. However, our results should not be extrapolated to a human-versus-machine comparison, given the lack of a strict comparison methodology. ResNet18 performed better in the fracture identification task. One reason could be that its skip connections aim to preserve as much information from the original image as possible, whereas VGG16 compresses the original image layer by layer in a sequential way, thus sacrificing part of the original input information. Additionally, ResNet18 was less resource-intensive in terms of memory (43 MB versus 524 MB) and faster at inference. To our knowledge, this is the first study to use an adapted version of ResNet18 for the task of fracture identification.

4.1. Heatmap Analysis

In 81% of the cases, ResNet18 predictions were related to the regions of the vertebral body corresponding to the fracture zones observed in the CT and MRI data. Although this finding should be considered cautiously given its illustrative nature, it shows the potential of AI to support physicians’ decisions in the clinical workflow. Interestingly, a detailed analysis of the false-negative images showed that all images in which the model failed to predict the presence of a fracture corresponded to acute A1 injuries with no apparent dislocation or deformation on the X-ray. In these cases, the fracture could only be confirmed with second-level imaging. Although elucidating the exact mechanism of the model’s predictions is outside the scope of this work and touches on the “black box” dilemma of DL algorithms, one could infer that the model tries to recognize the pattern of a “no fracture” vertebra. When this pattern is lacking, a higher probability of fracture is computed.

4.2. Choice of DL Model for Fracture Classification Task

Only recently have AI-based models been adapted for fracture detection. Chung et al. applied a ResNet-152 convolutional neural network to cropped anteroposterior radiographs of the shoulder to distinguish fractured from normal humeri, achieving an accuracy of 95%, an area under the curve (AUC) of 0.996, a sensitivity of 99%, and a specificity of 97% [36]. Kim and MacKinnon used a version of the Inception V3 model to identify distal radius fractures on sagittal radiographs, achieving an AUC of 0.954 [37]. Their model analyzed the complete radiograph instead of a cropped region of interest (the approach taken by us and most other researchers). However, their study excluded radiographs with a single lateral projection that was inconclusive for the presence of a fracture, thereby eliminating precisely the cases in which such a tool would be most useful in clinical practice. Adams et al. found that GoogLeNet achieved a higher overall accuracy (90.6%) than AlexNet in predicting the presence of femoral neck fractures, also using cropped radiographs. Their reference standard was set by confirming the presence of a fracture intraoperatively, thereby minimizing the introduction of bias into the model [35]. Similarly, we minimized annotation bias by training the model exclusively on radiographs in which the presence of a fracture was confirmed with CT or MRI data.

A model based on Visual Recognition V3 (IBM, Armonk, NY, USA) was recently used by Murata et al. to identify vertebral fractures, achieving an accuracy, sensitivity, and specificity of 86.0%, 84.7%, and 87.3%, respectively [24]. While their results are similar to ours, there are important methodological differences to consider. To avoid introducing systematic errors while training the model, all of the fractures included in our study were evaluated individually by expert spinal surgeons before annotation and then discussed in consensus meetings in case of discrepancy. In contrast, each classifying surgeon in the study by Murata et al. evaluated only a single subgroup of images. Our model was trained to identify anomalies in single vertebrae to eliminate confounding factors and ensure future clinical applicability (as demonstrated in the heatmap analyses, Figure 7), whereas Murata’s group analyzed the entire radiograph. Their exclusion of cases with multiple traumatic fractures limits the application of their model in clinical practice. On the other hand, their inclusion of anteroposterior radiographs resembles a regular clinical scenario in which both projections would be evaluated. Together with the use of a different model, these factors might have contributed to the marginally better performance achieved in our study.

4.3. Clinical Relevance of AI for Automated Traumatic Lesion Detection in Radiographs

The reported rates of missed fractures on TL radiographs in the trauma setting remain high [13,14,16]. Plain radiographs of the spine are commonly the first step in routine trauma imaging [7,8]. They provide insights into the nature of the injury and the involvement of one or more spinal columns, and can be used to assess the need for more advanced imaging [40]. Whereas in most high-income countries CT and/or MRI are typically performed as the next diagnostic step, these modalities are not readily available in the emergency setting in many low- and middle-income countries. In these countries, the use of plain radiographs to rule out vertebral fractures might be far more widespread than reported [10,11,12]. Additionally, and independently of economic factors, no validated decision aid exists to determine which trauma patients warrant TL spine imaging [14]. This controversy is highlighted by the seemingly opposing recommendations of various health institutions. The Eastern Association for the Surgery of Trauma and the American College of Radiology recommend CT as the modality of choice [41]. In contrast, the Advanced Trauma Life Support (ATLS) program and the National Institute for Health and Care Excellence recommend plain radiographs as the initial imaging modality [7,8]. Some centers have applied a stepwise approach using only plain radiographs to clear the thoracic and lumbar spine, resorting to contrast-enhanced CT only when deformity or pain was present, among other parameters such as hemodynamic instability [9]. Despite their widespread use, false-negative rates of up to 67% have been reported for diagnosing TL fractures on plain radiographs [13,14,15,16]. The Institute of Medicine classifies delayed or missed diagnoses as diagnostic errors [42], which carry important legal and clinical implications. Legally, misdiagnoses are the most common source of malpractice claims or litigation [43]. Clinically, missed fractures on radiographs have consequences such as malunion with restricted range of motion, posttraumatic osteoarthritis, and joint collapse [44]. In this context, an AI-based diagnostic aid tool that supports clinicians in identifying vertebral fractures could reduce the frequency of such undesirable outcomes. A commonly cited argument against the implementation of AI-based algorithms is the so-called “black box” problem, in which the clinician is blind to the “reasoning” behind the model’s prediction [43]. Visualization techniques such as heatmaps could improve the acceptance of fracture detection systems in clinical practice.

4.4. Limitations

The present study had some limitations. First, some centers favor CT or MRI as the initial imaging modality for detecting spine injuries, which obviates the need for diagnostic aid tools such as our model. Second, the image set was relatively small. However, the impact of this limitation was mitigated by aggressive image augmentation and by using models pre-trained on the ImageNet dataset. Third, the aim of this study was to develop a model capable of identifying anomalies correlating with the presence of a fracture in a single radiographic projection, whereas a physician relies on several imaging modalities and clinical findings to establish the diagnosis of a fracture. Future studies should explore the diagnostic capabilities of AI-based algorithms on additional radiographic projections and, eventually, on other imaging modalities. Regarding the heatmaps, it should be noted that activation maps do not necessarily visualize the fracture zone, but rather the regions most important in determining the classifier’s output, which may not correspond to the fracture itself.

5. Conclusions

This study demonstrated that existing DL models can be adapted to accurately identify traumatic vertebral fractures on TL sagittal radiographs, as shown by the performance of our adapted versions of VGG16 and ResNet18. Specifically, the adapted version of ResNet18 achieved higher sensitivity and accuracy and a lower false-negative rate. Its performance was similar to that of other models reported in the literature. In most cases, the model based its prediction on the same image areas that led human experts to diagnose a fracture. Our findings suggest that current AI-based applications could be optimized to create a diagnostic aid tool that supports clinicians in identifying vertebral fractures. Implementing such a tool could reduce the frequency of diagnostic errors and thus improve patient outcomes.

Author Contributions

Conceptualization, E.G.; Methodology, P.V. and E.G.; Software, F.G. and A.C.; Human expert classification, G.R.S. and P.D.G.; Funding acquisition, B.G., M.A., and F.G.; Data curation, G.S.R.; Writing—original draft preparation, G.S.R. and A.C.; Writing—review and editing, E.G., P.V., M.A., B.G., G.R.S., P.D.G. and F.G.; All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Ethical Committee approval was granted by the Comitato Etico Milano Area 3 on 19 October 2020 (Ref. No. 359-24062020).

Informed Consent Statement

Due to the retrospective nature of the study, the minimal risk to the subjects, and the anonymization of the material, a waiver of informed consent was approved.

Data Availability Statement

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request. However, to comply with the requirements of the Ethical Committee, the image set itself is not available on request due to data privacy policies.

Conflicts of Interest

The named authors have no conflict of interest, financial or otherwise.

Funding Statement

This study was performed with the assistance of the AO Foundation and the Italian Ministry of Health (“Ricerca Corrente”).

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Leucht P., Fischer K., Muhr G., Mueller E.J. Epidemiology of traumatic spine fractures. Injury. 2009;40:166–172. doi: 10.1016/j.injury.2008.06.040. [DOI] [PubMed] [Google Scholar]

- 2.Liu B., Zhu Y., Liu S., Chen W., Zhang F., Zhang Y. National incidence of traumatic spinal fractures in China. Medicine. 2018;97:e12190. doi: 10.1097/MD.0000000000012190. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Holmes J.F., Miller P.Q., Panacek E.A., Lin S., Horne N.S., Mower W.R. Epidemiology of thoracolumbar spine injury in blunt trauma. Acad. Emerg. Med. 2001;8:866–872. doi: 10.1111/j.1553-2712.2001.tb01146.x. [DOI] [PubMed] [Google Scholar]

- 4.Katsuura Y., Osborn J.M., Cason G.W. The epidemiology of thoracolumbar trauma: A Meta-Analysis. J. Orthop. 2016;13:383–388. doi: 10.1016/j.jor.2016.06.019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Hu R., Mustard C.A., Burns C. Epidemiology of Incident Spinal Fracture in a Complete Population. Spine. 1966;21:492–499. doi: 10.1097/00007632-199602150-00016. [DOI] [PubMed] [Google Scholar]

- 6.Daly M.C., Pate M.S., Bhatia N.N., Bederman S.S. The Influence of Insurance Status on the Surgical Treatment of Acute Spinal Fractures. Spine. 2016;41:E37–E45. doi: 10.1097/BRS.0000000000001177. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Advanced trauma life support (ATLS®): The ninth edition. J. Trauma Acute Care Surg. 2013;74:1363–1366. doi: 10.1097/TA.0B013E31828B82F5. [DOI] [PubMed] [Google Scholar]

- 8.Spinal Injury Assessment and Initial Management. National Institute for Health and Care Excellence; London, UK: 2016. [PubMed] [Google Scholar]

- 9.Reitano E., Briani L., Sammartano F., Cimbanassi S., Luperto M., Vanzulli A., Chiara O. Torso computed tomography in blunt trauma patients with normal vital signs can be avoided using non-invasive tests and close clinical evaluation. Emerg. Radiol. 2019;26:655–661. doi: 10.1007/s10140-019-01712-0. [DOI] [PubMed] [Google Scholar]

- 10.Silva H.P., Viana A.L.D. Health technology diffusion in developing countries: A case study of CT scanners in Brazil. Health Policy Plan. 2011;26:385–394. doi: 10.1093/heapol/czq076. [DOI] [PubMed] [Google Scholar]

- 11.Ngoya P.S., Muhogora W.E., Pitcher R.D. Defining the diagnostic divide: An analysis of registered radiological equipment resources in a low-income African country. Pan Afr. Med. J. 2016;25:99. doi: 10.11604/pamj.2016.25.99.9736. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Alibhai A., Hendrikse C., Bruijns S.R. Poor access to acute care resources to treat major trauma in low- and middle-income settings: A self-reported survey of acute care providers. Afr. J. Emerg. Med. 2019;9:S38. doi: 10.1016/j.afjem.2019.01.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Brandt M.-M., Wahl W.L., Yeom K., Kazerooni E., Wang S.C. Computed Tomographic Scanning Reduces Cost and Time of Complete Spine Evaluation. J. Trauma Inj. Infect. Crit. Care. 2004;56:1022–1028. doi: 10.1097/01.TA.0000124304.68584.2C. [DOI] [PubMed] [Google Scholar]

- 14.VandenBerg J., Cullison K., Fowler S.A., Parsons M.S., McAndrew C.M., Carpenter C.R. Blunt Thoracolumbar-Spine Trauma Evaluation in the Emergency Department: A Meta-Analysis of Diagnostic Accuracy for History, Physical Examination, and Imaging. J. Emerg. Med. 2019;56:153–165. doi: 10.1016/j.jemermed.2018.10.032. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Wintermark M., Mouhsine E., Theumann N., Mordasini P., Van Melle G., Leyvraz P.F., Schnyder P. Thoracolumbar spine fractures in patients who have sustained severe trauma: Depiction with multi-detector row CT. Radiology. 2003;227:681–689. doi: 10.1148/radiol.2273020592. [DOI] [PubMed] [Google Scholar]

- 16.Pizones J., Izquierdo E., Álvarez P., Sánchez-Mariscal F., Zúñiga L., Chimeno P., Benza E., Castillo E. Impact of magnetic resonance imaging on decision making for thoracolumbar traumatic fracture diagnosis and treatment. Eur. Spine J. 2011;20:390–396. doi: 10.1007/s00586-011-1913-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Levi A.D., Hurlbert R.J., Anderson P., Fehlings M., Rampersaud R., Massicotte E.M., France J.C., Le Huec J.C., Hedlund R., Arnold P. Neurologic deterioration secondary to unrecognized spinal instability following trauma-A multicenter study. Spine. 2006;31:451–458. doi: 10.1097/01.brs.0000199927.78531.b5. [DOI] [PubMed] [Google Scholar]

- 18.Yang J., Zhang Y.S., Yue K., Khademhosseini A. Cell-laden hydrogels for osteochondral and cartilage tissue engineering. Acta Biomater. 2017;57:1–25. doi: 10.1016/j.actbio.2017.01.036. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Jamaludin A., The Genodisc Consortium. Lootus M., Kadir T., Zisserman A., Urban J., Battié M., Fairbank J., McCall I. ISSLS PRIZE IN BIOENGINEERING SCIENCE 2017: Automation of reading of radiological features from magnetic resonance images (MRIs) of the lumbar spine without human intervention is comparable with an expert radiologist. Eur. Spine J. 2017;26:1374–1383. doi: 10.1007/s00586-017-4956-3. [DOI] [PubMed] [Google Scholar]

- 20.Weng C.-H., Wang C.-L., Huang Y.-J., Yeh Y.-C., Fu C.-J., Yeh C.-Y., Tsai T.-T. Artificial Intelligence for Automatic Measurement of Sagittal Vertical Axis Using ResUNet Framework. J. Clin. Med. 2019;8:1826. doi: 10.3390/jcm8111826. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Vergari C., Skalli W., Gajny L. A convolutional neural network to detect scoliosis treatment in radiographs. Int. J. Comput. Assist. Radiol. Surg. 2020;15:1069–1074. doi: 10.1007/s11548-020-02173-4. [DOI] [PubMed] [Google Scholar]

- 22.Maki S., Furuya T., Horikoshi T., Yokota H., Mori Y., Ota J., Kawasaki Y., Miyamoto T., Norimoto M., Okimatsu S., et al. A Deep Convolutional Neural Network With Performance Comparable to Radiologists for Differentiating Between Spinal Schwannoma and Meningioma. Spine. 2020;45:694–700. doi: 10.1097/BRS.0000000000003353. [DOI] [PubMed] [Google Scholar]

- 23.Chmelik J., Jakubicek R., Walek P., Jan J., Ourednicek P., Lambert L., Amadori E., Gavelli G. Deep convolutional neural network-based segmentation and classification of difficult to define metastatic spinal lesions in 3D CT data. Med. Image Anal. 2018;49:76–88. doi: 10.1016/j.media.2018.07.008. [DOI] [PubMed] [Google Scholar]

- 24. Murata K., Endo K., Aihara T., Suzuki H., Sawaji Y., Matsuoka Y., Nishimura H., Takamatsu T., Konishi T., Maekawa A., et al. Artificial intelligence for the detection of vertebral fractures on plain spinal radiography. Sci. Rep. 2020;10:20031. doi: 10.1038/s41598-020-76866-w.

- 25. Krizhevsky A., Sutskever I., Hinton G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM. 2017;60:84–90. doi: 10.1145/3065386.

- 26. Gyftopoulos S., Lin D., Knoll F., Doshi A., Rodrigues T.C., Recht M.P. Artificial Intelligence in Musculoskeletal Imaging: Current Status and Future Directions. AJR Am. J. Roentgenol. 2019;213:506–513. doi: 10.2214/AJR.19.21117.

- 27. Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv. 2014. arXiv:1409.1556.

- 28. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.

- 29. Galbusera F., Casaroli G., Bassani T. Artificial intelligence and machine learning in spine research. JOR Spine. 2019;2:e1044. doi: 10.1002/jsp2.1044.

- 30. Shin H.-C., Roth H.R., Gao M., Lu L., Xu Z., Nogues I., Yao J., Mollura D., Summers R.M. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans. Med. Imaging. 2016;35:1285–1298. doi: 10.1109/TMI.2016.2528162.

- 31. Panigrahi S., Nanda A., Swarnkar T. A Survey on Transfer Learning. Smart Innov. Syst. Technol. 2021;194:781–789. doi: 10.1007/978-981-15-5971-6_83.

- 32. Paszke A., Gross S., Massa F., Lerer A., Bradbury J., Chanan G., Killeen T., Lin Z., Gimelshein N., Antiga L., et al. PyTorch: An imperative style, high-performance deep learning library. arXiv. 2019. arXiv:1912.01703.

- 33. Sidey-Gibbons J.A.M., Sidey-Gibbons C.J. Machine learning in medicine: A practical introduction. BMC Med. Res. Methodol. 2019;19:64. doi: 10.1186/s12874-019-0681-4.

- 34. Olczak J., Fahlberg N., Maki A., Razavian A.S., Jilert A., Stark A., Sköldenberg O., Gordon M. Artificial intelligence for analyzing orthopedic trauma radiographs: Deep learning algorithms—Are they on par with humans for diagnosing fractures? Acta Orthop. 2017;88:581–586. doi: 10.1080/17453674.2017.1344459.

- 35. Adams M., Chen W., Holcdorf D., McCusker M.W., Howe P.D., Gaillard F. Computer vs. human: Deep learning versus perceptual training for the detection of neck of femur fractures. J. Med. Imaging Radiat. Oncol. 2019;63:27–32. doi: 10.1111/1754-9485.12828.

- 36. Chung S.W., Han S.S., Lee J.W., Oh K.-S., Kim N.R., Yoon J.P., Kim J.Y., Moon S.H., Kwon J., Lee H.-J., et al. Automated detection and classification of the proximal humerus fracture by using deep learning algorithm. Acta Orthop. 2018;89:468–473. doi: 10.1080/17453674.2018.1453714.

- 37. Kim D.H., MacKinnon T. Artificial intelligence in fracture detection: Transfer learning from deep convolutional neural networks. Clin. Radiol. 2018;73:439–445. doi: 10.1016/j.crad.2017.11.015.

- 38. Chen J.-X., Goswami A., Xu D.-L., Xuan J., Jin H.-M., Xu H.-M., Zhou F., Wang Y.-L., Wang X.-Y. The radiologic assessment of posterior ligamentous complex injury in patients with thoracolumbar fracture. Eur. Spine J. 2017;26:1454–1462. doi: 10.1007/s00586-016-4687-x.

- 39. Yang S., Yin B., Cao W., Feng C., Fan G., He S. Diagnostic accuracy of deep learning in orthopaedic fractures: A systematic review and meta-analysis. Clin. Radiol. 2020;75:713.e17–713.e28. doi: 10.1016/j.crad.2020.05.021.

- 40. Rajasekaran S., Vaccaro A.R., Kanna R.M., Schroeder G.D., Oner F.C., Vialle L., Chapman J., Dvorak M., Fehlings M., Shetty A.P., et al. The value of CT and MRI in the classification and surgical decision-making among spine surgeons in thoracolumbar spinal injuries. Eur. Spine J. 2017;26:1463–1469. doi: 10.1007/s00586-016-4623-0.

- 41. Sixta S., Moore F.O., Ditillo M.F., Fox A.D., Garcia A.J., Holena D., Joseph B., Tyrie L., Cotton B. Screening for thoracolumbar spinal injuries in blunt trauma: An eastern association for the surgery of trauma practice management guideline. J. Trauma Acute Care Surg. 2012;73:S326–S332. doi: 10.1097/TA.0b013e31827559b8.

- 42. Balogh E.P., Miller B.T., Ball J.R. Improving Diagnosis in Health Care. National Academies Press; Washington, DC, USA: 2016.

- 43. Fazal M.I., Patel M.E., Tye J., Gupta Y. The past, present and future role of artificial intelligence in imaging. Eur. J. Radiol. 2018;105:246–250. doi: 10.1016/j.ejrad.2018.06.020.

- 44. Lindsey R., Daluiski A., Chopra S., Lachapelle A., Mozer M., Sicular S., Hanel D., Gardner M., Gupta A., Hotchkiss R., et al. Deep neural network improves fracture detection by clinicians. Proc. Natl. Acad. Sci. USA. 2018;115:11591–11596. doi: 10.1073/pnas.1806905115.

Data Availability Statement

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request. However, to comply with the requirements of the Ethics Committee, the image set itself cannot be shared because of data privacy policies.