Abstract

Early detection of esophageal cancer has always been difficult, which reduces the overall five-year survival rate of patients. In this study, semantic segmentation was used to predict and label esophageal cancer in its early stages. U-Net was used as the basic artificial neural network, with ResNet extracting the feature maps used to classify and locate esophageal cancer. A total of 75 white-light images (WLI) and 90 narrow-band images (NBI) were used. These images were classified into three categories: normal, dysplasia, and squamous cell carcinoma. After labeling, the data were divided into a training set, validation set, and test set. The training set was passed through the encoder–decoder model to train the prediction model. The results show that predicting each image in the test set takes 111 ms on average, and the evaluation is calculated in pixel units. Sensitivity is measured based on the severity of the cancer. In addition, NBI has a higher accuracy of 84.724% compared with the 82.377% accuracy of WLI, making it a suitable method for detecting esophageal cancer using the algorithm developed in this study.

Keywords: esophageal cancer, small data, semantic segmentation, encoder–decoder model, U-Net, ResNet152V2, white-light imaging, narrow-band imaging

1. Introduction

Esophageal cancer (EC) is an extremely dangerous and minimally researched cancer [1,2,3,4]. It is the eighth leading cause of cancer-related mortality and the sixth most common cancer type [5]. By the end of this decade, at least one in a hundred men in European countries such as the United Kingdom and the Netherlands will have EC [6]. Two out of five patients with EC are likely to be diagnosed in late stages; hence, less than 20% of patients survive more than 3 years [7,8,9]. Nevertheless, if the disease is diagnosed in the early stages, the five-year survival rate exceeds 90%, whereas it falls below 10% when the disease is detected in the later stages. Thus, early detection of EC is important for increasing the survival rate [10,11]. At present, endoscopists are unable to draw a conclusion from endoscopic images of the esophagus during the early stages of EC [12]. Therefore, the disease can go unnoticed in its earlier stages. A precise assessment of individualized treatment depends on the accuracy of the initial diagnosis [13]. Endoscopic images acquired with generic equipment are affected by tissue secretions or instrument specifications, which may directly or indirectly lead to misjudgments in diagnosis. Computer-aided diagnosis (CAD) algorithms can be categorized by the type of endoscopic image they use, either narrow-band imaging (NBI) or white-light imaging (WLI). These techniques exploit the penetration properties of light. WLI uses a broad range of visible light to characterize the mucosa. By contrast, in NBI, two filters are placed in front of the light source to pass narrow bands of blue (415 nm) and green (540 nm) light [14]. The blue light penetrates less deeply than longer-wavelength light. Nonetheless, it corresponds to a hemoglobin absorption peak, so veins, capillaries, and other structures with a higher hemoglobin content appear darker and contrast clearly with the surrounding mucosa, which reflects the light. The second wavelength (540 nm) corresponds to the secondary hemoglobin absorption peak; thus, deeper mucosal and submucosal vessels are made evident by the 540 nm light and are displayed in cyan [15,16].

In the past few years, considerable research on diagnosing EC with deep learning models, CAD, and other methodologies has been published, and most of it has shown potential for application [17,18,19,20,21,22,23,24,25,26,27,28,29]. For example, Shahidi and Bourke examined the use of WLI and NBI to detect EC precisely through artificial intelligence (AI) [30]. Tokai et al. employed convolutional neural networks (CNN) to ascertain the invasion depth of EC under WLI; their results showed that AI can detect esophageal squamous cell carcinoma (ESCC) more efficiently than endoscopists [31]. Fukuda et al. conducted a similar CAD study for the identification of ESCC and found that the CAD systems had better sensitivity and accuracy [32]. Wang et al. built a CNN-based single-shot multibox detector for identifying EC [18]. De Groof et al. built a CAD algorithm based on a hybrid ResNet-UNet model [33]. Li et al. also built a ResNet-based FCN backbone network to recognize cancerous areas in the esophagus [34]. Barbeiro et al. (2019) used NBI to study gastrointestinal endoscopy and found that NBI can serve as an important auxiliary image for WLI, which can improve the detection of gastrointestinal lesions. Apart from CAD, many biosensors have recently been researched to detect different cancer types [35,36,37]. However, most of these biosensors have a high limit of detection, which leads to poor sensitivity in early-stage cancer detection. With the increase in household air pollution, the risk of EC is constantly increasing [38,39]. Despite the rapid development of AI in recent years, EC continues to have a low survival rate because its symptoms are difficult to detect. Most recent research results are displayed as bounding boxes, which locate the disease less accurately than semantic segmentation.

Therefore, this study aims to use semantic segmentation, with U-Net as the basic artificial neural network and ResNet as the feature extractor, to classify and predict the location of EC in its early stages.

2. Materials and Methods

2.1. Image Pre-Processing

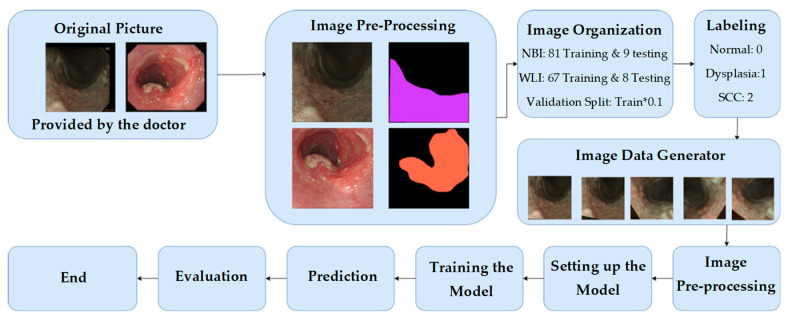

As shown in Figure 1, the data used in this experiment are endoscopic images, which generally have black frames. These black frames carry no useful information for training. Therefore, the black frame was cropped so that only the esophagus image was provided for training, and each image was resized to 608 × 608 pixels. A further screening removed heavily blurred images and images with rainbow lens flare or bubble interference. These images had to be removed because the data set was already small, so even minor interference could significantly affect training. Afterward, doctors marked the areas of the images that showed symptoms. The data were then organized into two types: WLI and NBI. The training/validation set accounted for 90% of the total data, and the test set accounted for 10%. The number of images in each category was as follows:

WLI: 67 training/validation images plus eight test images

NBI: 81 training/validation images plus nine test images
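The cropping and 90/10 split described above can be sketched in plain Python. This is a minimal illustration, not the authors' code: the helper names `crop_black_frame` and `split_train_test`, the brightness threshold, the shuffling seed, and the choice to round the test share up are all assumptions (the rounding is chosen only because it reproduces the 67/8 and 81/9 counts). Resizing to 608 × 608 pixels is omitted because it requires an image library.

```python
import random


def crop_black_frame(image, threshold=10):
    """Crop a grayscale image (list of rows, values 0-255) to its non-black area.

    `threshold` is a hypothetical cut-off: pixels at or below it count as frame.
    """
    rows = [r for r, row in enumerate(image) if any(p > threshold for p in row)]
    cols = [c for c in range(len(image[0])) if any(row[c] > threshold for row in image)]
    if not rows or not cols:
        return []  # the whole image is black
    top, bottom = rows[0], rows[-1]
    left, right = cols[0], cols[-1]
    return [row[left:right + 1] for row in image[top:bottom + 1]]


def split_train_test(items, test_percent=10, seed=0):
    """Shuffle and split into (train/validation, test), rounding the test share up."""
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed keeps the split reproducible
    n_test = (len(items) * test_percent + 99) // 100  # integer ceiling
    return items[n_test:], items[:n_test]


# Image counts taken from the text: 75 WLI and 90 NBI images.
wli_train, wli_test = split_train_test(range(75))
nbi_train, nbi_test = split_train_test(range(90))
print(len(wli_train), len(wli_test), len(nbi_train), len(nbi_test))
```

Integer arithmetic is used for the 10% share so that the counts do not depend on floating-point rounding.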

Figure 1. Overall experimental flow chart.

After allocating the data, the markers in the images were divided into two categories: category 0 was normal, and category 1 was a combination of dysplasia and squamous cell carcinoma (SCC). All the data were saved as .npy files to speed up data loading. Data augmentation was performed to increase the amount of data using the ImageDataGenerator class from the Keras library. The rotation range was set to 60, within which the images were rotated randomly. The shear range was set to 0.5, which randomly applies shearing transformations.
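To make the two augmentation settings concrete, the sketch below illustrates the geometry of a random rotation combined with a random shear. It is not the Keras implementation and not the authors' code. It assumes that both ranges are interpreted in degrees (our reading of the Keras documentation), and the function names, the image centre, and the shear parameterization are illustrative choices. In a segmentation setting, the same sampled transform has to be applied to an image and to its label mask, which is why the random generator is seeded explicitly.

```python
import math
import random


def sample_affine(rotation_range=60.0, shear_range=0.5, rng=None):
    """Sample one rotation and one shear angle and return their 2x2 product.

    Angles are drawn uniformly from [-range, +range] degrees (an assumption).
    """
    if rng is None:
        rng = random.Random()
    theta = math.radians(rng.uniform(-rotation_range, rotation_range))
    shear = math.radians(rng.uniform(-shear_range, shear_range))
    rotation = ((math.cos(theta), -math.sin(theta)),
                (math.sin(theta), math.cos(theta)))
    # One common way to parameterize a shear by an angle.
    shearing = ((1.0, -math.sin(shear)),
                (0.0, math.cos(shear)))
    return tuple(
        tuple(sum(rotation[i][k] * shearing[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


def transform_point(matrix, x, y, center=(303.5, 303.5)):
    """Apply the matrix about the centre of a 608 x 608 image."""
    cx, cy = center
    dx, dy = x - cx, y - cy
    return (cx + matrix[0][0] * dx + matrix[0][1] * dy,
            cy + matrix[1][0] * dx + matrix[1][1] * dy)


# The same seed yields the same transform for an image and its mask.
image_transform = sample_affine(rng=random.Random(42))
mask_transform = sample_affine(rng=random.Random(42))
print(image_transform == mask_transform)
print(transform_point(image_transform, 0, 0))
```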

2.2. Network Architecture

In this research, ResNet152V2+U-Net was chosen as the network design. In a typical U-Net, the contracting path follows the design of a conventional convolutional network: it consists of the repeated application of two 3 × 3 convolutions (unpadded convolutions), each followed by a rectified linear unit (ReLU), and a 2 × 2 max pooling operation with stride 2 for downsampling [40,41]. In the algorithm developed in this study, the overall structure remained a U-Net, but the contracting path was replaced with ResNet152V2, whereas the expansion path kept the original U-Net design. Four of the five convolutional blocks of ResNet matched the number of convolutional blocks in the original U-Net expansion path, and the respective contraction and expansion paths were joined accordingly. This design relies on a more complex model to obtain a superior feature map. In repeated tests against the original U-Net, VGG19, and ResNet50 as the contracting path, it resulted in a significant accuracy improvement. The block from the first layer of ResNet152V2 was used as the input. The blocks transferred to the expansion path were made compatible through an iterative procedure. Conv1 was used for the second level, and its output was passed to the expansion path for connection. The third, fourth, and fifth levels then used Conv2_x, Conv3_x, and Conv4_x, respectively, each of which can be linked to the corresponding expansion path layer. The model architecture diagrams are shown in the Supplementary Materials.
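The level-by-level pairing of the contracting and expansion paths can be checked with simple shape bookkeeping. The sketch below is an assumption-laden illustration rather than the authors' model: it assumes the nominal ResNet strides (Conv1 at 1/2 resolution, Conv2_x at 1/4, Conv3_x at 1/8, Conv4_x at 1/16), treats the first level as full resolution, and assumes each decoder step upsamples by a factor of two. The actual tensor sizes in a given framework may differ; the diagrams in the Supplementary Materials are the authoritative description.

```python
# Hypothetical level names and strides, following the order given in the text.
LEVELS = ("input", "Conv1", "Conv2_x", "Conv3_x", "Conv4_x")
STRIDES = (1, 2, 4, 8, 16)


def encoder_sizes(input_size=608, strides=STRIDES):
    """Spatial size of the feature map taken from each encoder level."""
    sizes = []
    for stride in strides:
        if input_size % stride != 0:
            raise ValueError(f"{input_size} is not divisible by stride {stride}")
        sizes.append(input_size // stride)
    return sizes


def decoder_sizes(skip_sizes):
    """Walk up from the deepest level, doubling the size at every step.

    Each upsampled map must match the skip connection it is joined with.
    """
    size = skip_sizes[-1]
    path = [size]
    for skip in reversed(skip_sizes[:-1]):
        size *= 2  # one 2x upsampling step
        if size != skip:
            raise ValueError(f"upsampled size {size} does not match skip {skip}")
        path.append(size)
    return path


skips = encoder_sizes()
for name, size in zip(LEVELS, skips):
    print(f"{name:8s} -> {size} x {size}")
print("decoder path:", decoder_sizes(skips))
```

Under these assumptions, a 608-pixel input divides evenly at every level, so each upsampled decoder map lines up with its skip connection without cropping or padding.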

3. Results

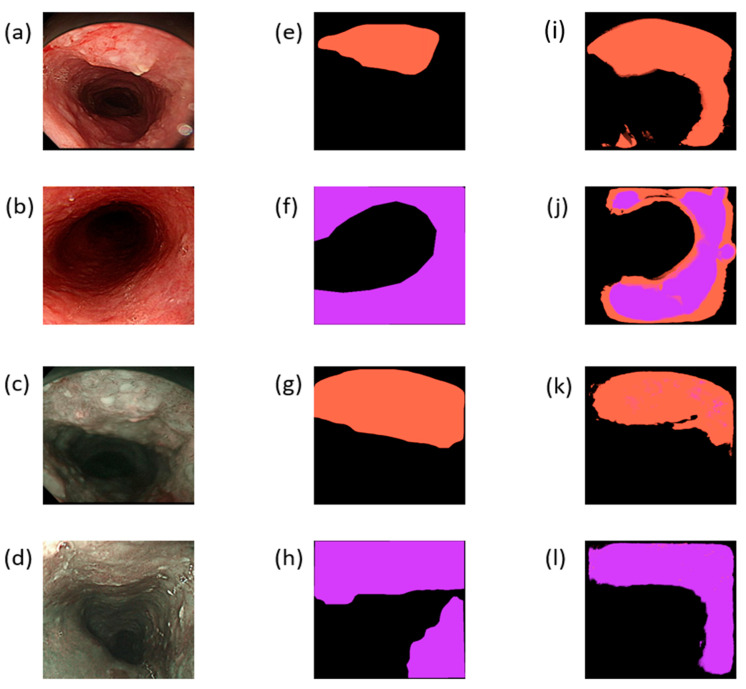

The results of this study are divided into two parts: WLI and NBI. A total of 67 images were included in the training/validation set for WLI, whereas 81 images were included for NBI. During training, WLI and NBI were trained simultaneously. The first 25 epochs used a learning rate of 0.001, and the learning rate for the last 10 epochs was lowered to 0.0002. The batch size was set to 1, and the number of steps per epoch equaled the number of samples. The loss function was categorical_crossentropy and the accuracy metric was categorical_accuracy because this study involved a multi-class classification problem.

The training accuracy was almost stable and increased gradually over the first 25 epochs of training. The validation accuracy was higher than the training accuracy except at a few unstable points. Over the next 10 epochs, the training accuracy increased gradually and the validation accuracy was stable. The overall training effect was good, and the number of epochs was limited so that the gradual increase in training accuracy did not lead to overfitting. For NBI, the validation accuracy over the first 25 epochs of training was likewise higher than the training accuracy except for a few instabilities. Over the last 10 epochs, the training accuracy fluctuated while gradually increasing, whereas the validation accuracy fluctuated while gradually decreasing toward the training accuracy. Despite this potential trend toward overfitting, the data had also been augmented; thus, several epochs must be considered to achieve the best training.

When the trained model was applied to the test set, WLI and NBI had eight and nine test images, respectively. Several evaluation criteria were used for the results. Sensitivity, also known as the true positive rate or recall, indicates the probability of successfully detecting positive samples among all truly positive samples. The second criterion is precision, which indicates the probability that a sample predicted as positive is truly positive. The F1-score is the harmonic mean of precision and recall and serves as a rough indicator of the model's performance. A confusion matrix presents the prediction results of the test set in tabular form, and the three abovementioned evaluation indicators can be calculated from it.

Figure 2 shows a schematic diagram of the results of this research. Figure 2a,b show the input WLI images after cropping, whereas Figure 2c,d show the input NBI images after cropping. Figure 2e–h are ground-truth images marked by doctors based on the corresponding input images, whereas Figure 2i–l show the corresponding prediction results. The orange-marked area corresponds to SCC, whereas the purple-marked area corresponds to dysplasia. Semantic segmentation makes the marked position intuitively visible in the image. As shown in Figure 2i, some extra areas were marked as EC; in the corresponding endoscopic image, a small lens flare is present (Figure 2a). The similarity between the ground truth and the predicted results can be observed in Figure 2k. As shown in Figure 2j, the two categories were marked similarly, which outperformed the detection of an expert doctor. In the NBI part, the symptoms of SCC and dysplasia were almost perfectly marked. Therefore, NBI images can detect EC better than WLI images.
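The two-phase learning-rate schedule described at the start of this section can be written as a small function. This is a sketch under stated assumptions: the function names are hypothetical, epochs are indexed from zero, and the step count assumes one pass over the samples per epoch, which is how we read "the number of steps was the number of samples" with a batch size of 1.

```python
def learning_rate(epoch, first_phase_epochs=25, second_phase_epochs=10,
                  first_lr=0.001, second_lr=0.0002):
    """Learning rate for a zero-based epoch index: 25 epochs at 0.001, then 10 at 0.0002."""
    total_epochs = first_phase_epochs + second_phase_epochs
    if not 0 <= epoch < total_epochs:
        raise ValueError(f"epoch must be in [0, {total_epochs})")
    return first_lr if epoch < first_phase_epochs else second_lr


def steps_per_epoch(num_samples, batch_size=1):
    """Number of batches needed to see every sample once (ceiling division)."""
    return -(-num_samples // batch_size)


schedule = [learning_rate(epoch) for epoch in range(35)]
print(schedule[24], schedule[25])
print(steps_per_epoch(67), steps_per_epoch(81))
```

A function of this shape could, for example, be passed to a per-epoch scheduler callback such as Keras's `LearningRateScheduler`; whether the authors did so or simply ran two consecutive training calls is not stated.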

Figure 2. (a,b) Input images after cropping the original WLI images; (c,d) input images after cropping the original NBI images. (e–h) Ground-truth maps marked by doctors based on the corresponding input images. (i–l) Prediction results of the corresponding graphs; the orange mark corresponds to SCC, whereas the purple mark corresponds to dysplasia.

The accuracy, sensitivity, and F1 score were evaluated according to disease severity (normal, dysplasia, and SCC), with dysplasia and SCC reported as one combined category. The results for NBI are shown in Table 1, whereas the results for WLI are shown in Table 2. The overall accuracy was 84.7245% for NBI. The precision of the combined dysplasia and SCC category was 85.67%, which was higher than the precision of the normal category (83.95%). In WLI, however, the overall accuracy was reduced to 82.377%. Moreover, the precision of the dysplasia and SCC category was only 77.24%, which was much lower than the required medical standards, whereas the precision of the normal category was 85.89%. Based on these results, we can infer that NBI is a suitable method for detecting EC in its early stages using the algorithm developed in this study.
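For reference, the pixel-level quantities discussed here follow the standard definitions, written below for one class treated as positive, where TP, FP, FN, and TN denote the numbers of true-positive, false-positive, false-negative, and true-negative pixels. The symbols are ours; the worked line uses the NBI pixel counts reported in Table 1.

```latex
\begin{align}
\text{Precision} &= \frac{TP}{TP + FP}, &
\text{Sensitivity} &= \frac{TP}{TP + FN}, \\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Sensitivity}}
            {\text{Precision} + \text{Sensitivity}}, &
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}, \\
\text{Accuracy}_{\text{NBI}} &= \frac{525{,}422 + 439{,}809}{1{,}139{,}258}
  \approx 0.8472.
\end{align}
```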

Table 1. Confusion matrix for narrow-band imaging.

| Predicted (rows) / Actual (columns) | Normal | Dysplasia and SCC | Precision | F1 Score | IoU |
| --- | --- | --- | --- | --- | --- |
| Normal | 525,422 | 100,490 | 83.95% | 0.857922 | 67.89% |
| Dysplasia and SCC | 73,537 | 439,809 | 85.67% | 0.834833 | 71.35% |
| True Positive Rate | 87.72% | 81.40% | | | |

Table 2. Confusion matrix for white-light imaging.

| Predicted (rows) / Actual (columns) | Normal | Dysplasia and SCC | Precision | F1 Score | IoU |
| --- | --- | --- | --- | --- | --- |
| Normal | 504,168 | 82,798 | 85.89% | 0.852622 | 71.79% |
| Dysplasia and SCC | 91,495 | 310,531 | 77.24% | 0.780861 | 54.48% |
| True Positive Rate | 84.64% | 78.95% | | | |
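The accuracy, precision, true positive rate, and F1 values in Tables 1 and 2 can be recomputed from the pixel counts. The sketch below is our own illustration, with a hypothetical function name and with rows read as predicted classes and columns as actual classes, which is the reading under which the tabulated precision and true positive rate are reproduced. The IoU column is not recomputed here.

```python
def per_class_metrics(matrix):
    """Compute accuracy and per-class precision, recall, and F1.

    matrix[i][j] is the number of pixels predicted as class i whose
    actual class is j (rows = predicted, columns = actual).
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n))
    classes = []
    for i in range(n):
        true_positive = matrix[i][i]
        predicted_total = sum(matrix[i])                     # row sum
        actual_total = sum(matrix[r][i] for r in range(n))   # column sum
        precision = true_positive / predicted_total
        recall = true_positive / actual_total
        f1 = 2 * precision * recall / (precision + recall)
        classes.append({"precision": precision, "recall": recall, "f1": f1})
    return {"accuracy": correct / total, "classes": classes}


# Pixel counts copied from Table 1 (NBI) and Table 2 (WLI).
NBI = [[525_422, 100_490], [73_537, 439_809]]
WLI = [[504_168, 82_798], [91_495, 310_531]]

for name, matrix in (("NBI", NBI), ("WLI", WLI)):
    result = per_class_metrics(matrix)
    print(name, f"accuracy = {result['accuracy']:.4%}")
    for label, m in zip(("Normal", "Dysplasia and SCC"), result["classes"]):
        print(f"  {label}: precision = {m['precision']:.2%}, "
              f"recall = {m['recall']:.2%}, F1 = {m['f1']:.6f}")
```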

4. Discussion

In recent years, research on AI, biosensors, and medical treatment has continually produced new methods to increase the probability of recovery after illness and reduce the possibility of morbidity or serious illness. Esophageal cancer has a low survival rate. Endoscopy can help doctors identify the precise location of the disease at the first examination, rather than only an approximate location, and it can reduce the chance that patients suffer from the use of iodine dyes. Semantic segmentation makes the marked position intuitively visible on the map. In the method developed in this study, SCC and dysplasia symptoms were marked with high accuracy, particularly in NBI. In addition, NBI images can detect dysplasia and SCC in the early stages of EC better than WLI images. Concerning accuracy, the symptoms resemble the pixel data of the normal esophagus. Moreover, the amount of data in the training/validation set is relatively small, and the amount of disease information is insufficient. Hence, some results were not accurate. The accuracy of the developed model could be increased substantially by increasing the number of NBI and WLI images used for the training set.

5. Conclusions

Semantic segmentation classifies each pixel, and the information obtained from every pixel is important. When this technique is used in medical imaging, various abnormal areas can be marked. However, the symptoms of EC are subtle. Hence, the amount of training and testing data and the image resolution are important factors in detection and classification. If the color of a single pixel is not accurate enough, the model will incur a color-difference error when predicting that pixel and reproducing the ground truth. Moreover, endoscopic data are difficult to acquire. In this study, semantic segmentation was used to predict and label EC, with ResNet152V2 and U-Net as the AI architecture. A total of 165 images were used. For WLI, 67 images were used for training and validation and eight for prediction; for NBI, 81 images were used for training and validation and nine for prediction. Predicting each image in the test set takes only 111 ms. The results indicate that NBI has a higher accuracy of 84.724% compared with the 82.377% accuracy of WLI, making it a suitable method for detecting EC using the algorithm developed in this study.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/jpm12081204/s1, Figure S1: Simple encoder-decoder model diagram. Figure S2: U-Net original architecture diagram. Figure S3: Residual block. Figure S4: Bottleneck building block. Figure S5: ResNet152V2+U-Net Architecture Diagram. Table S1: Architecture diagram of ResNet for ImageNet.

Author Contributions

Conceptualization, Y.-J.F., C.-W.H. and H.-C.W.; data curation, Y.-M.T., A.M. and H.-C.W.; formal analysis, C.-W.H. and H.-C.W.; funding acquisition, Y.-M.T. and H.-C.W.; investigation, C.-W.H., A.M., Y.-J.F. and H.-C.W.; methodology, C.-W.H. and Y.-M.T.; software, Y.-M.T., Y.-J.F. and A.M.; supervision, H.-C.W.; validation, C.-W.H. and H.-C.W.; writing—original draft, A.M.; writing—review and editing, H.-C.W. and A.M. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The study was conducted in accordance with the guidelines of the Declaration of Helsinki and approved by the Institutional Review Board of National Taiwan University Hospital (NTUH) (NTUH-202009096RIND).

Informed Consent Statement

Written informed consent was waived in this study because of the retrospective, anonymized nature of the study design.

Data Availability Statement

Data sharing is not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research was supported by the Ministry of Science and Technology, The Republic of China, under grant MOST 111-2221-E-194-007. This work was also partially supported financially by the Advanced Institute of Manufacturing with High-tech Innovations (AIM-HI) and the Center for Innovative Research on Aging Society (CIRAS) from The Featured Areas Research Center Program within the framework of the Higher Education Sprout Project by the Ministry of Education (MOE), the National Chung Cheng University-National Taiwan University Hospital Yunlin Branch Joint Research Program (CCU-NTUHYB-2022-01), and Kaohsiung Armed Forces General Hospital research project 111-010 in Taiwan.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Holmes R.S., Vaughan T.L. Seminars in Radiation Oncology. Elsevier; Amsterdam, The Netherlands: 2007. Epidemiology and pathogenesis of esophageal cancer; pp. 2–9.

2. Layke J.C., Lopez P.P. Esophageal cancer: A review and update. Am. Fam. Physician. 2006;73:2187–2194.

3. Mariette C., Markar S.R., Dabakuyo-Yonli T.S., Meunier B., Pezet D., Collet D., D’Journo X.B., Brigand C., Perniceni T., Carrère N. Hybrid minimally invasive esophagectomy for esophageal cancer. N. Engl. J. Med. 2019;380:152–162. doi: 10.1056/NEJMoa1805101.

4. Horie Y., Yoshio T., Aoyama K., Yoshimizu S., Horiuchi Y., Ishiyama A., Hirasawa T., Tsuchida T., Ozawa T., Ishihara S. Diagnostic outcomes of esophageal cancer by artificial intelligence using convolutional neural networks. Gastrointest. Endosc. 2019;89:25–32. doi: 10.1016/j.gie.2018.07.037.

5. Barret M., Prat F. Diagnosis and treatment of superficial esophageal cancer. Ann. Gastroenterol. 2018;31:256. doi: 10.20524/aog.2018.0252.

6. Arnold M., Laversanne M., Brown L.M., Devesa S.S., Bray F. Predicting the future burden of esophageal cancer by histological subtype: International trends in incidence up to 2030. Off. J. Am. Coll. Gastroenterol. ACG. 2017;112:1247–1255. doi: 10.1038/ajg.2017.155.

7. Ferlay J., Colombet M., Soerjomataram I., Mathers C., Parkin D., Piñeros M., Znaor A., Bray F. Estimating the global cancer incidence and mortality in 2018: GLOBOCAN sources and methods. Int. J. Cancer. 2019;144:1941–1953. doi: 10.1002/ijc.31937.

8. Hur C., Miller M., Kong C.Y., Dowling E.C., Nattinger K.J., Dunn M., Feuer E.J. Trends in esophageal adenocarcinoma incidence and mortality. Cancer. 2013;119:1149–1158. doi: 10.1002/cncr.27834.

9. Thrift A.P. The epidemic of oesophageal carcinoma: Where are we now? Cancer Epidemiol. 2016;41:88–95. doi: 10.1016/j.canep.2016.01.013.

10. Kunzmann A.T., McMenamin Ú.C., Spence A.D., Gray R.T., Murray L.J., Turkington R.C., Coleman H.G. Blood biomarkers for early diagnosis of oesophageal cancer: A systematic review. Eur. J. Gastroenterol. Hepatol. 2018;30:263–273. doi: 10.1097/MEG.0000000000001029.

11. Janse M.H., van der Sommen F., Zinger S., Schoon E.J. Medical Imaging 2016: Computer-Aided Diagnosis. SPIE; Bellingham, WA, USA: 2016. Early esophageal cancer detection using RF classifiers; pp. 344–351.

12. Tsai C.-L., Mukundan A., Chung C.-S., Chen Y.-H., Wang Y.-K., Chen T.-H., Tseng Y.-S., Huang C.-W., Wu I., Wang H.-C. Hyperspectral Imaging Combined with Artificial Intelligence in the Early Detection of Esophageal Cancer. Cancers. 2021;13:4593. doi: 10.3390/cancers13184593.

13. Wu I., Syu H.-Y., Jen C.-P., Lu M.-Y., Chen Y.-T., Wu M.-T., Kuo C.-T., Tsai Y.-Y., Wang H.-C. Early identification of esophageal squamous neoplasm by hyperspectral endoscopic imaging. Sci. Rep. 2018;8:13797. doi: 10.1038/s41598-018-32139-1.

14. Song L.M.W.K., Adler D.G., Conway J.D., Diehl D.L., Farraye F.A., Kantsevoy S.V., Kwon R., Mamula P., Rodriguez B., Shah R.J. Narrow band imaging and multiband imaging. Gastrointest. Endosc. 2008;67:581–589. doi: 10.1016/j.gie.2008.01.013.

15. Kuznetsov K., Lambert R., Rey J.-F. Narrow-band imaging: Potential and limitations. Endoscopy. 2006;38:76–81. doi: 10.1055/s-2005-921114.

16. Gono K., Obi T., Yamaguchi M., Oyama N., Machida H., Sano Y., Yoshida S., Hamamoto Y., Endo T. Appearance of enhanced tissue features in narrow-band endoscopic imaging. J. Biomed. Opt. 2004;9:568–577. doi: 10.1117/1.1695563.

17. Le Berre C., Sandborn W.J., Aridhi S., Devignes M.-D., Fournier L., Smail-Tabbone M., Danese S., Peyrin-Biroulet L. Application of artificial intelligence to gastroenterology and hepatology. Gastroenterology. 2020;158:76–94.e2. doi: 10.1053/j.gastro.2019.08.058.

18. Wang Y.-K., Syu H.-Y., Chen Y.-H., Chung C.-S., Tseng Y.S., Ho S.-Y., Huang C.-W., Wu I., Wang H.-C. Endoscopic images by a single-shot multibox detector for the identification of early cancerous lesions in the esophagus: A pilot study. Cancers. 2021;13:321. doi: 10.3390/cancers13020321.

19. Cai S.-L., Li B., Tan W.-M., Niu X.-J., Yu H.-H., Yao L.-Q., Zhou P.-H., Yan B., Zhong Y.-S. Using a deep learning system in endoscopy for screening of early esophageal squamous cell carcinoma (with video). Gastrointest. Endosc. 2019;90:745–753.e2. doi: 10.1016/j.gie.2019.06.044.

20. Ebigbo A., Mendel R., Probst A., Manzeneder J., de Souza Jr L.A., Papa J.P., Palm C., Messmann H. Computer-aided diagnosis using deep learning in the evaluation of early oesophageal adenocarcinoma. Gut. 2019;68:1143–1145. doi: 10.1136/gutjnl-2018-317573.

21. Zhao Y.-Y., Xue D.-X., Wang Y.-L., Zhang R., Sun B., Cai Y.-P., Feng H., Cai Y., Xu J.-M. Computer-assisted diagnosis of early esophageal squamous cell carcinoma using narrow-band imaging magnifying endoscopy. Endoscopy. 2019;51:333–341. doi: 10.1055/a-0756-8754.

22. Guo L., Xiao X., Wu C., Zeng X., Zhang Y., Du J., Bai S., Xie J., Zhang Z., Li Y. Real-time automated diagnosis of precancerous lesions and early esophageal squamous cell carcinoma using a deep learning model (with videos). Gastrointest. Endosc. 2020;91:41–51. doi: 10.1016/j.gie.2019.08.018.

23. Kumagai Y., Takubo K., Kawada K., Aoyama K., Endo Y., Ozawa T., Hirasawa T., Yoshio T., Ishihara S., Fujishiro M. Diagnosis using deep-learning artificial intelligence based on the endocytoscopic observation of the esophagus. Esophagus. 2019;16:180–187. doi: 10.1007/s10388-018-0651-7.

24. Tseng K.-W., Hsiao Y.-P., Jen C.-P., Chang T.-S., Wang H.-C. Cu2O/PEDOT: PSS/ZnO Nanocomposite Material Biosensor for Esophageal Cancer Detection. Sensors. 2020;20:2455. doi: 10.3390/s20092455.

25. Wu C.-W., Wang H.-C., Tasi J.-M., Horng C.-T. Radio-chemotherapy with Cisplatin, 5-Fluorouracil and Cetuximab for patients with locally advanced esophageal cancers. N. Y. Sci. J. 2018;11:26–34.

26. Nan C.-C., Wang H.-C., Tasi J.-M., Horng C.-T. Stereotactic radiotherapy in the role of treating local recurrences of esophageal cancers. J. Am. Sci. 2018;14:45–51.

27. Wang Y.K., Chuang Y.S., Wu T.S., Lee K.W., Wu C.W., Wang H.C., Kuo C.T., Lee C.H., Kuo W.R., Chen C.H. Endoscopic screening for synchronous esophageal neoplasia among patients with incident head and neck cancer: Prevalence, risk factors, and outcomes. Int. J. Cancer. 2017;141:1987–1996. doi: 10.1002/ijc.30911.

28. Wang H.-C., Nguyen N.-V., Lin R.-Y., Jen C.-P. Characterizing esophageal cancerous cells at different stages using the dielectrophoretic impedance measurement method in a microchip. Sensors. 2017;17:1053. doi: 10.3390/s17051053.

29. Wu I.-C., Weng Y.-H., Lu M.-Y., Jen C.-P., Fedorov V.E., Chen W.C., Wu M.T., Kuo C.-T., Wang H.-C. Nano-structure ZnO/Cu2O photoelectrochemical and self-powered biosensor for esophageal cancer cell detection. Opt. Express. 2017;25:7689–7706. doi: 10.1364/OE.25.007689.

30. Shahidi N., Bourke M.J. Can artificial intelligence accurately diagnose endoscopically curable gastrointestinal cancers? Tech. Innov. Gastrointest. Endosc. 2020;22:61–65. doi: 10.1016/j.tgie.2019.150639.

31. Tokai Y., Yoshio T., Aoyama K., Horie Y., Yoshimizu S., Horiuchi Y., Ishiyama A., Tsuchida T., Hirasawa T., Sakakibara Y. Application of artificial intelligence using convolutional neural networks in determining the invasion depth of esophageal squamous cell carcinoma. Esophagus. 2020;17:250–256. doi: 10.1007/s10388-020-00716-x.

32. Fukuda H., Ishihara R., Kato Y., Matsunaga T., Nishida T., Yamada T., Ogiyama H., Horie M., Kinoshita K., Tada T. Comparison of performances of artificial intelligence versus expert endoscopists for real-time assisted diagnosis of esophageal squamous cell carcinoma (with video). Gastrointest. Endosc. 2020;92:848–855. doi: 10.1016/j.gie.2020.05.043.

33. de Groof A.J., Struyvenberg M.R., Fockens K.N., van der Putten J., van der Sommen F., Boers T.G., Zinger S., Bisschops R., Peter H., Pouw R.E. Deep learning algorithm detection of Barrett’s neoplasia with high accuracy during live endoscopic procedures: A pilot study (with video). Gastrointest. Endosc. 2020;91:1242–1250. doi: 10.1016/j.gie.2019.12.048.

34. Li S., Zhang J., Jin Y., Zheng L., Xu J., Yu G., Zhang Y. Automatic segmentation of esophageal cancer pathological sections based on semantic segmentation; Proceedings of the 2018 International Conference on Orange Technologies (ICOT); Bali, Indonesia. 23–26 October 2018; pp. 1–5.

35. Mukundan A., Tsao Y.-M., Artemkina S.B., Fedorov V.E., Wang H.-C. Growth Mechanism of Periodic-Structured MoS2 by Transmission Electron Microscopy. Nanomaterials. 2022;12:135. doi: 10.3390/nano12010135.

36. Hsiao Y.P., Mukundan A., Chen W.C., Wu M.T., Hsieh S.C., Wang H.C. Design of a Lab-On-Chip for Cancer Cell Detection through Impedance and Photoelectrochemical Response Analysis. Biosensors. 2022;12:405. doi: 10.3390/bios12060405.

37. Mukundan A., Feng S.-W., Weng Y.-H., Tsao Y.-M., Artemkina S.B., Fedorov V.E., Lin Y.-S., Huang Y.-C., Wang H.-C. Optical and Material Characteristics of MoS2/Cu2O Sensor for Detection of Lung Cancer Cell Types in Hydroplegia. Int. J. Mol. Sci. 2022;23:4745. doi: 10.3390/ijms23094745.

38. Chen C.-W., Tseng Y.-S., Mukundan A., Wang H.-C. Air Pollution: Sensitive Detection of PM2.5 and PM10 Concentration Using Hyperspectral Imaging. Appl. Sci. 2021;11:4543. doi: 10.3390/app11104543.

39. Josyula S., Lin J., Xue X., Rothman N., Lan Q., Rohan T.E., Hosgood H.D. Household air pollution and cancers other than lung: A meta-analysis. Environ. Health. 2015;14:24. doi: 10.1186/s12940-015-0001-3.

40. Zunair H., Hamza A.B. Sharp U-Net: Depthwise convolutional network for biomedical image segmentation. Comput. Biol. Med. 2021;136:104699. doi: 10.1016/j.compbiomed.2021.104699.

41. Ronneberger O., Fischer P., Brox T. U-net: Convolutional networks for biomedical image segmentation; Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; Munich, Germany. 5–9 October 2015; Cham, Switzerland: Springer; 2015. pp. 234–241.
