Abstract.

Functional optical imaging in neuroscience is rapidly growing with the development of optical systems and fluorescence indicators. To realize the potential of these massive spatiotemporal datasets for relating neuronal activity to behavior and stimuli and uncovering local circuits in the brain, accurate automated processing is increasingly essential. We cover recent computational developments in the full data processing pipeline of functional optical microscopy for neuroscience data and discuss ongoing and emerging challenges.

Keywords: fluorescence microscopy, calcium imaging, functional imaging, data analysis

1. Introduction

Modern neuroscience has been propelled forward by the development of new technologies that offer unique windows into the brain’s activity. These new techniques, including high-density electrodes,1,2 functional ultrasound imaging,3–6 high magnetic field functional Magnetic Resonance Imaging (fMRI),7–9 and optical imaging10,11 all stand to further our fundamental understanding of the brain and represent potential new tools for future therapies. One of the fastest growing directions in this push for advanced neural recording technologies is functional optical microscopy, in particular, in-vivo recordings using fluorescence microscopy.

Fluorescence microscopy holds a number of unique advantages over other imaging methods. Unlike fMRI and functional ultrasound, the activity measured is more directly related to neural activity and not as spatially and temporally blurred by the hemodynamic response.12,13 Furthermore, optical methods do not require inserting probes into the brain, making them typically less invasive than electrophysiology. The drawback to this advantage is the limited penetration depth of the photons. Imaging deeper structures requires more invasive methods, such as implanting a gradient refractive index (GRIN) lens.14,15 Additionally, fluorescence microscopy provides entire images that capture not just the neural activity, but also the morphology of the cells. Thus fields of view can in theory be registered across days to enable chronic long-term recordings of the same identified neurons, e.g., during learning. However, these high-dimensional and spatiotemporally rich data require significant computational power to extract the core information contained within. Moreover, fluorescence microscopy is actively growing and new volumetric imaging techniques10,11,16–18 promise to even further increase the scale of such data and the spatiotemporal statistics that must be leveraged in the analysis. To solve this “big data” explosion and to process the ever-growing datasets, there is an ongoing need to meet the challenge of designing robust automated algorithms to accurately extract information from these rich data.

In functional optical imaging of neurons, a fluorescing indicator (typically a protein) sensitive to a biomarker of neural activity is introduced to a cell. Examples of biomarkers include voltage, calcium, potassium, glutamate, sodium, etc.19–23 Out of these, calcium indicators have been the most widespread. When the level of given biomarker changes, i.e., during or right after a neural firing event, a fluorescent property such as the brightness or emission color of the indicator changes as well. At each image frame, the tissue is illuminated with light at a specific wavelength, and any fluorescing indicators have a probability of raising their energy level. When the energy level falls back to the lower-energy state, light at a longer wavelength is emitted and collected by the microscope. The measured value of the collected light thus reflects the value of the biomarker at a given location.

Practically, the tissue can be illuminated in a number of ways. For example, in single photon widefield microscopy, an entire plane is illuminated at once, using a camera to collect full frames simultaneously. Widefield microscopy can thus acquire high-resolution videos at kilohertz framerates; however, it is limited in its ability to image deeper regions in highly scattering tissues. Specifically, scattering of light in brain tissue greatly blurs images, requiring optical methods that better localize fluorescence at depth. Multiphoton imaging, e.g., two- and three-photon microscopy can penetrate deeper tissue 400 to , but rely on raster scanning technologies to sequentially measure small volumes throughout the plane of imaging.24–26 Hence, multiphoton imaging is often used to image smaller structures, such as axons, dendrites, and somas, whereas one-photon imaging is used at meso- and cortex-wide scales24,27–29 or at somatic resolution under challenging optical conditions, such as via endoscopes and miniscopes in freely moving animals.30,31

Here, we discuss the emerging line of work that has focused on the task of building the analysis tools required to realize the full potential of high-resolution large scale imaging. The primary goal is extracting time-courses of all the individual units (e.g., neurons, dendrites, brain regions, etc.) from the data so as to relate these to behavior and stimuli in downstream analyses. This is often accomplished by decomposing the movies into two sets of variables: the spatial profiles, representing the area in the field-of-view (FOV) that a unit occupies, and the corresponding temporal fluorescence traces. While this goal is simply stated, the unique properties of in-vivo fluorescence imaging create a number of challenges in misalignment of data, tissue aberrations and signal distortion, imaging-dependent noise levels, and severe lack of large-scale ground truth data for validation. These challenges have led to a myriad of approaches, from solving one specific step in the process, e.g., denoising,32 to whole pipeline implementations.33 Approaches also range from imaging of specific structures, e.g., widefield34 to methods aimed at many imaging classes.35 We aim to provide here a walkthrough of the basic challenges, the landscape of current approaches, and finally emerging challenges with no current solutions.

2. Functional Fluorescence Microscopy

Functional fluorescence microscopy in neuroscience is used to capture the dynamics of neural activity in a wide variety of animal models and targets. Improvements in bioengineering have allowed researchers to study targets from nanometer-sized targets in individually labeled neurons to spanning multiple brain regions across centimeter-wide fields of view. Recordings are taken at several different timescales and framerates, up to hours long recordings at kilohertz rates. Accordingly, specialized microscopes have been developed to tackle these individual experimental requirements.

Most microscopes can be described by two properties: illumination and sampling, of which either one or both may be time-varying. The illumination is a structured light source that aims to excite fluorophores (indicators) in the targeted region of interest without needlessly exciting other areas. The sampling is a mapping of the light emitted from targeted regions onto the sensor. The two most common configurations used for functional imaging today are two-photon laser scanning microscopy (TPM)36 [Figs. 1(a) and 1(b)] and widefield microscopy [Figs. 1(c) and 1(d)].

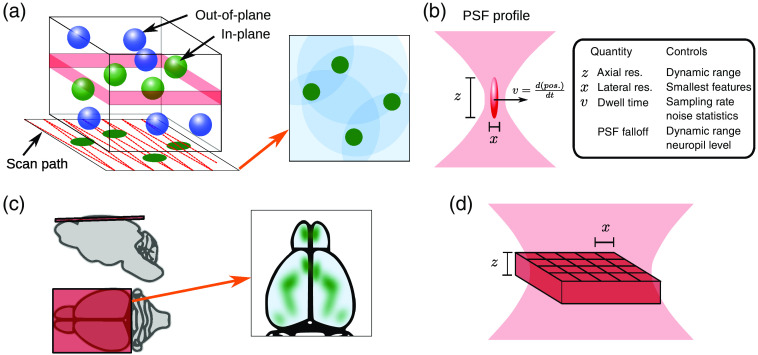

Fig. 1.

Optical imaging basics. (a) Laser scanning microscopy (e.g., two-photon microscopy) focuses the laser on a point inside the tissue, sequentially illuminating a chosen plane. Neurons intersecting the plane show up in the rendered images, whereas out-of-plane neurons accumulate as background, and combined nonsomatic activity (e.g., smaller dendritic and axonal components) as neuropil. (b) Choices in the optical illumination impact image properties. The image resolution is defined by the PSF, which is determined by microscope optics and optical properties of the sample. The dwell time controls the time spent at each location and is related to the scanning speed, i.e., the framerate, and can influence the number of photons collected per pixel and thus the signal strength. In laser scanning microscopy, these, in combination with scanning and laser technology, the image size, resolution, framerate, and noise characteristics of the image are determined. (c) Widefield imaging simultaneously illuminates the entire cortical surface plane, capturing dynamics across multiple brain areas. (d) In widefield imaging, camera characteristics primarily determine the image acquisition size and rate. The optical resolution for widefield imaging is strongly limited by microscope optics and optical aberrations from the sample.

In TPM, the illumination is a focused, pulsed near-infrared (NIR) laser that is raster-scanned in a two-dimensional (2D) pattern [Fig. 1(a)] and the sensor is a single pixel detector (typically a photo-multiplier tube) collecting all emitted light from the whole FOV. The fluorophores are excited via two-photon absorption,37 which has a squared-law relationship with the intensity of the excitation beam. This results in the absorption process being confined to a small, point-like focal volume [Fig. 1(b)]. As emitted light is typically bulk-collected in TPM, the excitation focal volume describes the point-spread function (PSF) for these systems. TPM has been widely adopted primarily due to its excellent optical sectioning properties and use of NIR wavelengths. Because scattering of NIR light is much lower in brain tissue than visible light and scattering of the fluorescence does not impact image quality, TPM achieves a practical imaging depth of up to , even in the highly scattering mammalian or avian brain.

In widefield microscopy [Fig. 1(c)], the illumination is a visible LED or laser that excites the imaged volume and the sensor is a camera that maps onto the target volume. The excitation light propagates through the whole imaging volume, strongest near the surface of the sample, and is attenuated over a couple of into the sample via tissue absorption. The emitted light is imaged onto the camera, with the contribution from the focal plane mapped most closely onto the sensor and out-of-focus light diffusely collected as well [Fig. 1(d)]. The PSF for the system is determined by the imaging optics, with light from the focal plane having the sharpest response and blurring away from the focus. The image quality is further degraded by scattering in the emission. In reasonably transparent animal models (such as C. elegans or larval zebrafish), this effect is minimal. In highly scattering tissues, like the mammalian brain, this effect limits the overall depth with acceptable imaging quality for neuron-scale recordings to typically . While the emitted light frequently comes from the whole volume, as in densely labeled samples, genetic targeting or microscopy techniques can isolate the signal to a smaller population of cells. A major advantage of widefield microscopy is the ease of setup in a variety of animal models and scalability to very large areas and framerates.

Several microscopes have been designed to optimize functional neural recordings. For TPM, development has focused on improving the acquisition speed and volume size. The illumination shape of the PSF has been transformed for imaging sparse tissues effectively38 or for volumetric imaging.16 Three-photon microscopy enables even deeper imaging.39 Changing the illumination time course with custom scanning paths can make fast jumps from cell to cell.40 Live updating of both the scanning pattern and timecourse is used for high-speed image acquisition.41 For collection, cameras have been used to replace the single-pixel detectors to improve acquisition rates.42 Recently, the combination of multiple techniques and technologies has reported cellular resolution imaging of up to a million neurons simultaneously.11

Changes to the techniques of widefield microscopy have focused on optical sectioning and volume scanning. Optical sectioning can be achieved by structuring the illumination such that only the plane mapping onto the camera is excited. This has been achieved primarily through light-sheet microscopy, which has been scanned to image three-dimensional (3D) volumes in larval zebrafish43 and also with a single objective in mice.10 Light field microscopy has been used to structure mapping of the sampling from the imaged region to the camera44 and in combination with confocal microscopy enables high-quality optical sectioning.45 One of the most major developments has been the miniaturization of microscopes (miniscopes) for head-mounted functional imaging, which has greatly expanded the types of problems and brain areas researchers can explore.46–48

All of the advances outlined have enriched the space of acquired brain recordings, especially when intersected with the range of available indicators. Specifically, they create a range of spatiotemporal resolutions, signal quality, and imaged morphology, a subsection of which we depict in Fig. 2. To bridge the gap between the raw data and scientific discovery, these data must be analyzed to extract useful neuronal activity.

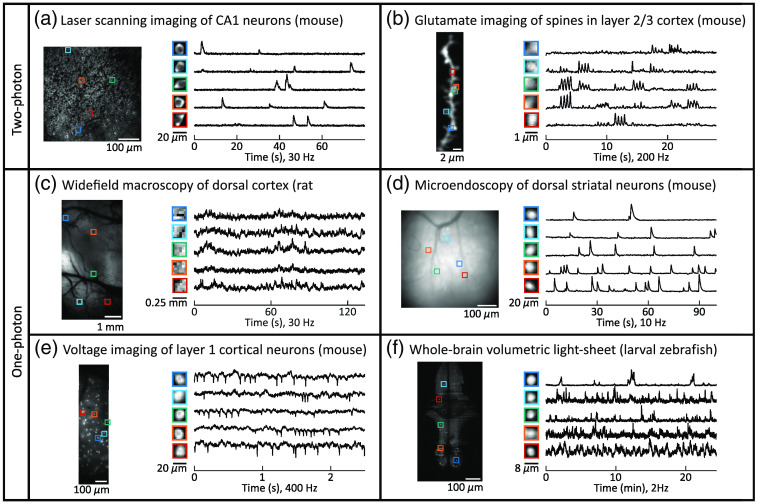

Fig. 2.

Examples of functional microscopy data. Functional microscopy data obtained via different modalities, at different scales, and in different animal models expressing different indicators can take on a diversity of signal characteristics. Shown are six examples spanning a range of datasets. (a) Laser scanning two-photon calcium imaging (GCaMP5) in mouse hippocampus.49 (b) Glutamate (iGluSnFR3.v857) spine imaging using laser-scanning two-photon microscopy in layer two-third mouse cortex.50 (c) One-photon widefield calcium (GCaMP6f) imaging of rat dorsal cortex using a head-mounted macroscope.48 (d) One-photon calcium imaging using a head-mounted microendoscope in mouse striatum.51 (e) One-photon voltage imaging (Voltron525-ST) in layer 1 of mouse cortex.52 (f) Whole-brain light-sheet calcium imaging (GCaMP6f) in larval zebrafish.53 Only one of 29 imaging planes each recorded at 2 Hz is depicted here. Note that the spatial and temporal statistics range dramatically across domains, requiring flexible data processing approaches.

3. Fluorescence Microscopy in Space and Time

The current state of functional fluorescence microscopy analysis can be understood through the long history of developments that have led to today’s state-of-the-art. Initially, fluorescence microscopy had long scan times and was thus focused on imaging static tissue (see Ref. 54 for a review of the very early history of fluorescence microscopy for biology). Thus, all the relevant information was anatomical in nature and could be traced manually or, later on, identified automatically to discover complex biological structures. Fluorescence microscopy continues to play a vital role in imaging static anatomical structures and morphological fitting algorithms have evolved with the technology.55–57

As scan times improved and the ability to inject fluorescent indicators into live tissue emerged, fluorescence microscopy expanded to the imaging of time-varying biomarkers, e.g., voltage indicators in the 1970s58,59 and calcium indicators in the 1980s.60 With this shift came the added dimension of time, as now fluorescence was a temporal quantity. In neuroscience, this enabled the study of the activity of the neurons over time. Initially, however, labeling methods were in their nascent stage and the use of viruses to introduce fluorescing proteins into cells created a high variability in labeling strength and longevity. The result was often sparsely labeled tissue where overlapping neurons were rare. Without overlap, isolating a single cell’s activity could thus be accomplished by identifying individual neurons anatomical extent in the video (e.g., based on the temporal mean or variance of the imaging stack), and then averaging the pixels belonging to each neuron to extract their time-trace.

To see this, we consider the statistical assumption that each neuron is modulated in a linear way (i.e., the spatial shape and temporal fluctuations are independent). Each -pixel frame at time , stacked as an vector, can be thought of as the linear combination of the cell shapes for and its activation at time :

| (1) |

where is a matrix consisted of the spatial components and is the activation of all cells at time . Solving a least-squares optimization of the form

| (2) |

for the activity of all cells at time can then be written as the pseudo-inverse . With no overlap the Gram matrix, is simply a diagonal matrix with the norms of the cell shapes on the diagonal. Thus, the activity for the cell at time is simply a weighted average of the pixel values at that time-point, which has been exactly the methodology with hand-drawn spatial profiles, sometimes also called regions of interest (ROIs).

As labeling methods advanced and indicator designs provided brighter fluorescent tags, overlaps between neurons and with other processes (e.g., dendrites) became more common. The increased cell count vastly increased the burden of manual annotation and the increased overlaps mathematically results in a nondiagonal Gram matrix , removing the validity of direct averaging. Thus, new conceptual approaches were required for cell identification. Rather than solely relying on spatial cues to isolate neurons, activity had to be demixed in space and time. In fact, as opposed to purely anatomical studies, many modern systems neuroscience analyses abstract away from space, analyzing a neuron--time matrix irrelevant of anatomy. Methods such as dimensionality reduction,61–63 dynamical systems analysis,64 statistical connectivity,65 etc. operate on the time-traces and thus suffer more from inexact estimation of neural activity rather than inexact estimation of spatial shapes.

Under the statistical assumption that each neuron is modulated in a linear way, i.e., the spatial profile is constant over time with brightness controlled by a time-vector representing the cell’s activity, the problem of isolating neurons can be considered as a matrix factorization problem. If the data matrix is the pixel-by-time fluorescence video matrix, each neuron contributes one rank-1 component

| (3) |

where is again the ’th neuron’s spatial profile (how it appears visually in the data), and is the matrix where the ’th column, is the ’th neuron’s time-trace (how the biomarker-driven fluorescence changes over time). To find the rank- decomposition, Mukamel et al.66 used a combination of PCA and ICA: first, PCA was used to reduce the overall dimension of the data and to obtain an initial guess , where and are the left and right singular vectors, respectively. ICA was then performed on the set of vectors , i.e., identifying a rotation of that makes the columns more independent, and the parameter trades off importance of the spatial and temporal components obtained via PCA (i.e., the left and right singular vectors). The rotation in the ICA step transforms the PCA components into their independent components, demixing both the spatial and the temporal components at once. This procedure allowed for overlapping components and included temporal independence when identifying neurons.

The matrix factorization approach continued to expand,67–69 primarily formulated as an optimization program:

| (4) |

where and represent appropriate regularization terms over space and time, respectively, that can vary between methods, and often include terms such as the component norms, number of components, sparsity, non-negativity, and spatial cohesion. As direct optimization is often difficult for problems of this size, alternating descent type algorithms are often employed, i.e., iteratively solving

Positive aspects of this approach include that each of these optimization problems can be solved reasonably efficiently (conditioned on judicious choices of regularization) and that different assumptions on the time-traces and component shapes can be incorporated naturally via regularization.

Thus, in the current landscape of algorithms that extract neural signals from fluorescence microscopy data we now see a combination of approaches. One approach focuses primarily on anatomical identification, leaving the identification of functional traces as a later stage, and another which places identification of the time-traces on equal ground (or more) with the spatial maps and extracts the two jointly.

3.1. Methods Focusing on Space

The class of methods that focus on anatomical identification have been mostly inspired by image segmentation, using both classical and modern approaches. Each of these methods relies on a spatial model of the data, either preset or learned from the data.

One such approach, dictionary learning, stems from the broader image processing literature.70 Dictionary learning assumes a sparse generative model for image patches71 and has been applied to calcium imaging to learn spatial dictionaries whose atoms represent neuronal shapes. The identified neuron shapes can then be used to estimate corresponding time courses.72–74 An early approach ignored temporal information entirely and developed a spatial generative model based on convolutional sparse block coding,72 applied to normalized mean projections of the imaging data. A dictionary of cellular shapes was learned with corresponding locations of these shapes in the mean image.

Modern deep learning methods for image segmentation have also been adapted to the ROI extraction problem. These are supervised approaches that require labeled training data. UNet2DS75 is a neural network based on the fully convolutional UNet76 model, an architecture that was developed for biomedical image segmentation. UNet2DS takes the mean image as an input and outputs two probability masks of a pixel belonging to either a cell or the background. Since U-Net2DS ignores temporal information, it has difficulty separating overlapping neurons and detecting sparsely firing cells. Such methods also cannot differentiate active cells, i.e., cells that exhibit deviations from baseline fluorescence consistent with spiking events, from nonactive cells.

3.2. Methods Using Time Information to Identify Spatial Masks

Another class of methods still emphasizes the identification of the spatial components, however, they rely on temporal information, e.g., in calculating correlations between pixels in the FOV based on their temporal activity.

One approach is to view cell detection as a clustering problem.77,78 For example, in HNCcorr78 after selecting a set of seeds (superpixels), this method aims to find a cluster within a patch containing the seed by solving the Hochbaum’s normalized cut (HNC) problem, which is similar to normalized cut. The HNC formulation balances a coherence term, which maximizes the total similarity of the pixels within each cluster, with a distinct term that minimizes the similarity between the cluster and all others, where similarity is based on time-trace activity. Local selective spectral clustering (LSSC)79 also solves the cell identification problem as clustering pixels in a high-dimensional feature space. Following the construction of a sparse graph whose nodes are the pixels and whose weights are determined by pairwise similarity of the time-traces of the pixels, the nodes are embedded using the eigenvectors of the random-walk graph Laplacian. Pixels are then clustered together in an iterative approach based on selecting subsets of the eigenvectors that best separate a cluster from the rest of the data, and enabling overlapping clusters. After detecting neuronal components (the graph construction allows for morphology-agnostic clustering), time traces are demixed and calculated by projecting to a low-rank space.

Activity-based level set segmentation (ABLE)80 is an image segmentation approach relying on active contours.81 ABLE defines multiple coupled active contours in the FOV where an active contour seeks to partition a local region into an interior corresponding to a neuronal component and a local exterior, such that the pixels within each region are similar to one another temporally. The evolution of the contours is performed by the level set method,82 where only neighboring cells directly affect a cell’s evolution. ABLE handles overlapping cells by coupling the evolution of active contours that are close to one another. ABLE automatically merges two cells if they are close and temporally correlated and prunes cells if their size is too small or too large. An advantage of this method is that it makes no assumption on a cell’s morphology or temporal activity, therefore it can potentially generalize to different indicators and spatial morphologies.

Convolutional deep learning networks taking into account temporal statistics or activity have also been developed in recent years.83–85 A (2+1)D convolutional neural network83 was trained on spatiotemporal sliding windows and the output represented the probability of a pixel belonging to an ROI centroid. Apthorpe et al.83 demonstrated that adding the temporal domain helps suppress noisy detections compared with a 2D network that only took as input the time-averaged image. However, the network they trained only learned spatial 2D kernels.

In comparison, STNeuroNet84 is a 3D convolutional neural network based on DenseVNet (similar to a U-Net) also trained on overlapping spatiotemporal blocks, and its output is a binarized probability map. By adding a temporal max-pooling layer to a typical DenseVNet architecture, the spatiotemporal features are reduced to a spatial output, which increases the speed of training and inference. This gain is important for network validation and low-latency inference in closed-loop experiments. Shallow U-net neuron segmentation (SUNS)85 aims to simplify the neural network architecture of STNeuroNet to further improve speed while still incorporating temporal information. To this end, a temporal matched filter tailored for shot noise is applied to the input video, thereby enhancing calcium transients occurring across multiple frames, and reducing temporal information into individual 2D frames. Preprocessing also includes whitening, which normalizes the fluorescence time series of each pixel by the estimated noise of that pixel. This yields an SNR representation that highlighted active neurons and obscured inactive neurons. The SNR video is then processed by a shallow UNet, whose output is a probability map.

Postprocessing for both of these deep-learning methods includes clustering of the output probability maps to detect individual cells and aggregation of detected cells across the probability maps for merging and pruning. Finally, deep learning, instance segmentation, and correlations (DISCo)86 uses a combination of time-trace correlation-based pixel segmentation on a graph and a convolutional neural network to identify individual spatial profiles. Note that while these methods identify cells based on a spatiotemporal analysis, they do not address the issue of estimating time-traces.

3.3. Methods Focusing on Space and Time

Rather than leave time-trace estimation as a secondary step, spatiotemporal demixing methods aim to simultaneously identify the spatial maps with their corresponding fluorescence traces.

One such method builds off of the dictionary learning framework first used in spatially-focused cell identification. Specifically, Diego et al.73 generate a space-time dictionary learning extension of convolutional sparse coding to video data with nonuniform and temporally varying background components. Petersen et al.74 approach the dictionary learning of spatial components via iterative merging and clustering. The former of these extracts sparse spike trains in the convolutional dictionary. The latter mainly emphasizes identifying the spatial maps but, as a by-product of the decomposition algorithm, computes the time traces as well.

Many algorithms that jointly estimate both spatial profiles and time traces aim to optimize the cost of the form of the optimization program in Eq. (4). In particular, many of these methods follow developments in analyzing somatic calcium imaging data, which are based on matrix factorization with non-negative constraints that represent the expected positive deviations from baseline: non-negative matrix factorization (NMF).35,67–69,87–89 The main difference between these methods is the specific choices of regularization and the specific implementation of the algorithmic steps. For example, multiple methods follow the aforementioned alternating optimization,35,67,69,87 whereas another approach instead uses semidefinite programming.68 Versions of matrix factorization methods have been perhaps the most widespread, with special versions being designed for one-photon endoscopy data,90 widefield data,34,35 and voltage data.91

Regularization has been applied both on the temporal and spatial dimensions. Temporally, sparsity over the time traces has been a popular constraint. Specifically, neurons often fire infrequently, relative to the number of frames in a video.16,67 Taking advantage of this sparsity requires accounting for the biophysically-induced exponential decay of fluorescence. While more sophisticated methods have been developed for postprocessing deconvolution (see Sec. 4.5), a simple way that has been applied to cheaply deconvolve data is based on the observation that exponentially decaying responses (i.e., a single-pole response function) with decay rate can be expressed as the difference equation , where is the instantaneous impulses over time. Thus simply computing should give a reasonable estimate of a sparse impulse train. The parameter can further be tuned to maximize sparsity .

Spatial regularization is more complex and often requires a model for neural processes of interest. Initial ideas included ensuring connected components, or by minimizing the total variation (TV) norms to minimize the complexity of shapes and remove spurious pixels.68 In more recent versions of matrix factorization algorithms, deep networks trained on human-annotated labeled data have been used to classify true and false cells,49 the resulting system serving as a posthoc filter that effectively double-checks the spatial regularization.

As an alternative to shape-based regularization, recent work has leveraged sparsity and spatial correlations in a new way.35 Instead of using spatial locations of pixels to constrain the components’ spatial profiles, graph-filtered temporal (GraFT) dictionary learning builds a graph among all pixels that enables like-pixels to share time-trace decompositions. This approach effectively gives up completely on space and instead focuses on the learning of a dictionary of time traces over the data-driven pixel graph.

More recently, another important modification to the base optimization in (4) has been emerging. Rather than focusing on changing the regularization terms used, the least-squares form of the data fidelity term has instead been reconsidered. The least-squares cost enforces a linear-Gaussian data generation hypothesis, however, a number of nonlinearities in fluorescence dynamics, incompleteness in component discovery, and the non-Gaussian statistics of the photo-diodes all contribute to various extents of errors in demixing. Four main alternatives have emerged, including a robust-statistical approach leveraging a Huber cost function,87 a contamination-aware generative model approach,92 a zero-Gamma mixture model,93 and a deep-learning approach.94

4. Imaging Analysis Pipeline

The fundamental output of an optical functional imaging analysis pipeline is the identified spatial profiles and more importantly their corresponding time traces. While demixing thus serves as the core of analysis, often multiple preprocessing and postprocessing steps are typically part of the pipeline to facilitate this output (Fig. 3). Motion correction is typically the first step in the analysis pipeline, to register all frames in the imaging stack such that the neuronal components to be extracted occupy the same spatial footprint in all frames. Denoising can be applied as a preprocessing step either temporally35 or spatially79 to improve the detection of ROIs. Normalizing per-pixel time-traces, e.g., by -scoring, can enhance dim cells, and improve cell detection. Following ROI extraction, postprocessing in the temporal domain can include the following: neuropil estimation, denoising of time traces (if not implicitly part of the extraction itself), and calculating normalized time traces (). Postprocessing in the spatial domain can include automatic classification49 of identified components into true or false neuronal components or manual quality control. To identify single spiking events, deconvolution is a postprocessing step that aims to recover sparse firing events from the fluorescence time traces. Finally, in longitudinal studies, postprocessing in the spatial domain can include registration of imaging sessions and matching of ROIs across sessions.

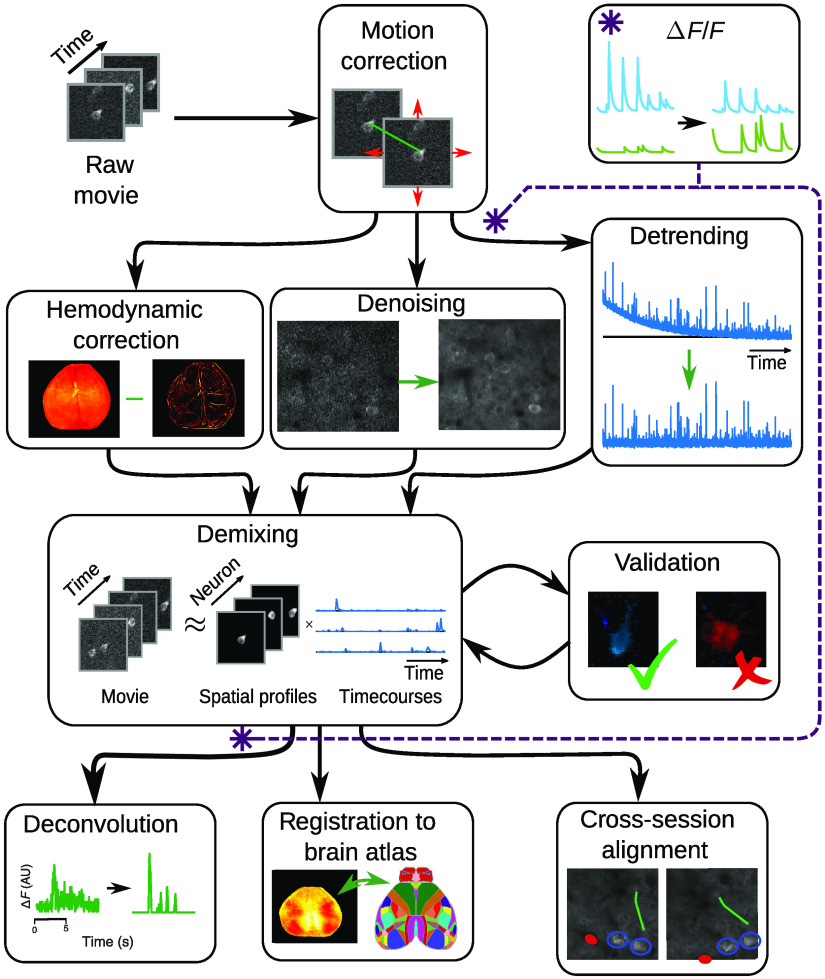

Fig. 3.

The optical imaging pipeline, expanded. The first step in analyzing fluorescence microscopy data is to correct any motion, reducing interframe misalignment. Between motion correction and demixing (i.e., cell-finding), steps to remove imaging noise and confounding factors are taken. Specific steps include de-trending to remove photobleaching, denoising to increase SNR, and hemodynamic correction in widefield data. The core pipeline stage of focus is demixing or identification of individual fluorescing components. Demixing should always be paired with some sort of validation on the output to prevent artifacts and other errors. The output of the demixing can be used to infer firing events (in single-cell resolution imaging), align multiple imaging sessions, or register to a global brain atlas (in widefield data). One important step: the calculation, which normalized fluorescence traces to their baseline, can be computed before or after demixing.

4.1. Motion Correction

The majority of ROI extraction methods rely on neuronal components being within a fixed/consistent spatial footprint in the FOV – thus necessitating image registration of the individual frames. Motion between frames can be due to several factors:95–99 animal motion during imaging (e.g., locomotion), scanning artifacts, mechanical strain, drift relative to the objective, changes in the brain, e.g., due to hydration, etc. Given the length of imaging sessions (tens of thousands of time frames), computational complexity of the registration approach is an important consideration. For fast acquisition rates, interframe motion can be considered as a global constant offset of the FOV, therefore rigid registration of translational shifts is sufficient.100–102 For example, registration can be performed to a reference (template) image using cross-correlation or phase-correlation.69 The reference image is typically set as an average over the initial frames and then regularly updated as an average over later subsets of frames, or an average over the full stack. The reference frame can be made more precise by an iterative refinement procedure to reduce blurring.69,103 As an alternative to performing correlation-based translation registration, bright cells can be detected and tracked over time using particle tracking.104 A rigid (translation and rotation) transform is then calculated for each frame to the next by minimizing the residual displacements of all tracked cells. This approach can also be applied to volumetric imaging.

Nonrigid motion artifacts can occur due to lower acquisition speeds, which result in artifacts such as shearing in later scanned lines of the image, or slow distortions over long recording sessions due to mechanical (-drift with respect to the objective) and biological issues (warping of brain tissue due to metabolic activity, dilation of blood vessels, and liquid reward consumption).105 Nonrigid motion correction usually relies on splitting the image into overlapping spatial patches and performing registration at the patch level. This registration can be rigid at subpixel resolution69,106 or a more flexible affine transform.103

Most methods for motion correction target two-photon somatic imaging. However, such methods can struggle with nonsomatic neuronal components such as dendrites, due to the difference in size and impact of -drift. While a cell-body is typically on the order of —with variation depending on brain area, species, etc.—the width of an axon or a dendrite is . Thus, slight registration errors can have a significant effect on identifying the spatial footprint of these components. Furthermore, -drift can cause segments of tuft dendrites to shift in and out of the FOV. This can lead to difficulties in aligning the dendrite to the reference image. From a computer vision perspective, this can be thought of as image registration under occlusions.

Another potential complication is the use of GRIN lenses to image deeper structures in the brain. Optical aberrations near the edges of GRIN lenses can significantly change the motion characteristics, requiring distortion-aware realignment. Finally, niche technologies can also create novel situations, such as the rotating platform developed for near-freely moving imaging.107,108

4.2. Denoising and Normalization

The absolute noise levels present in optical imaging create a challenging signal extraction environment. It is only because many sequential frames of the same population are recorded that individual cells can be identified. This process, however, can be improved by modest noise filtering as a preprocessing stage. A number of methods (with example applications) are used across the literature, including median or low-pass filtering,16,79 downsampling, PCA projection,69,79 z-scoring, wavelet denoising,35 other hierarchical models,109 and deep learning-based denoising.32,110 All these approaches make different noise and signal model assumptions and should be used judiciously.

For example, median/low-pass filtering and downsampling, are simple, quick steps that can be run on the data at the early stages. Median filtering is effective at reducing shot noise common in low-photon environments, at the cost of making shapes in the image more “convex” (i.e., filling in corners). Low-pass filtering and downsampling reduce high-frequency noise, however, blur the data via the convolutional filter. Downsampling83,111 has the further benefit of reducing the data size in space or time, thus reducing later computational costs, however, effectively reduces the sampling resolution.

Other signal models are more complex. Hierarchical models can provide flexible ways of both incorporating different noise classes (e.g., Poisson) and flexible signal models (e.g., via interpixel correlations), however, at a heavy computational overhead.109 Wavelet-based denoising112,113 is perhaps the most versatile in this class, as both per-image and per-pixel time-trace denoising are computationally efficient, readily implemented across programming languages, and can handle sharp transitions, thereby reducing blurring.

Yet other methods model the noise in ways based on the data itself. PCA-based projections identify a low-dimensional space that captures much of the data variance, effectively assuming that low-variance principal components represent noise. Sparsely firing cells or dim cells, however, can often end up in low-variance components and thus removed with the noise. Penalized matrix decomposition91 denoises the imaging data by performing a patch-wise penalized low-rank decomposition. Recently, general advances from deep learning32,110,114 have been proposed for denoising calcium imaging.

For example, DeepInterpolation32 uses a clever design that trains a neural network to predict a movie frame based on previous and past frames. Independent noise, which cannot be predicted, is thus filtered out. A broader image-restoration method CARE,115 uses a U-net trained on high- and low-resolution images to enhance signal quality. As with all deep-learning methods, the drawbacks include training the network (if the pre-trained options do not fit the application) and a general lack of knowledge as to the exact expected biases of the black box system.

Usually performed at the same time as denoising, normalization plays an important role in the numerical stability of algorithms. Having data with large (or small) overall fluorescence values can cause problems in the condition numbers of the matrices used in optimizing costs in the demixing step. Normalizing, e.g., to unit-median or unit-max values help constrain these effects, and all normalization can be undone after demixing to return meaningful fluorescence values to the identified time-traces. As an example, methods based on deep networks typically employ preprocessing to ensure appropriate dynamic range across the data, regardless of background or other inhomogeneities in the illumination (e.g., via homomorphic filtering85).

As a final note, a form of normalization that often is required in preprocessing is detrending to remove photobleaching116 (e.g., see Refs. 117 and 118). Photobleaching is the reduction in overall fluorescence over time stemming from the fluorescent proteins becoming trapped in an intermediate quantum state and becoming inactive. The result is a large imbalance over time in the dynamic range of the signal, which can hinder most demixing algorithms.

4.3. Neuropil Estimation

In 2p imaging, neuropil is a “background” signal that contains the fluorescence of neuronal elements that are out-of-focus (e.g., dendritic and axonal) and scattering. This signal can contaminate the estimated fluorescence of an ROI if it is not properly accounted for. Some methods estimate neuropil as part of the ROI extraction process by automatically identifying the signal of the exterior surrounding the cell,80 or explicitly or implicitly adding at least one background signal in the linear decomposition model,35,49,67,89 e.g.

| (5) |

where and are the spatial and temporal components of the background, respectively. In other methods, neuropil is estimated in a posthoc process by calculating the average time trace from the pixels within the ring surrounding each extracted spatial profile.69,119 The signal is then subtracted from the time trace of the spatial profile. Care should be taken in neuropil subtraction not to over-correct. Over-correction can often be identified by noting significant negative dips in the fluorescence baseline

4.4. Normalized Fluorescence ()

Neurons have different concentrations of fluorescence indicator, e.g., GCaMP, which can result in varying levels of fluorescence both across cell populations and between individual neurons.120,121 Additional variation also occurs across the image field of view due to imaging technique, microscope optics, and sample variation.

Therefore, to obtain comparable signals from varying neural sources, extracted time-traces are normalized122 to remove the baseline fluorescence activity and adjust the amplitude as follows:

| (6) |

where is the extracted time trace and is the baseline fluorescence.

Calculation of baseline fluorescence relies on the assumption that neurons have sufficiently sparse activity, and thus a baseline level that does not correspond to neuronal activity can be estimated using varying heuristics. A common method of estimating is averaging the activity of the time frames with lowest activity (the exact value of can vary from lab to lab, for widefield imaging may be used to instead describe the percent change from the mean activity level). This method assumes neurons are quiescent for long enough periods that the change in fluorescence level is effectively zero at this percentile. A lower percentile is not used to account for error caused by sources such as shot noise or axial motion.

The running percentile calculation additionally assumes there is little to no fluorescence from other sources. In datasets that are densely labeled or have high background fluorescence, the technique will consistently underestimate the true as contributions from other sources get added to the baseline estimate. Estimating the baseline fluorescence with overlapping sources requires separating the fluorescence contributions from individual sources and any background source, which is automatically achieved in most segmentation algorithms.67,69 This approach may be more reliable than the running percentile calculation by integrating additional information provided by activity transients. A test using synthetic data demonstrated that these estimates of baseline fluorescence were reliable for the brightest cells, and another estimation procedure making use of pixel-wise spatial information further improved estimates of baseline activity.123

4.5. Deconvolution

The product of the core demixing stage includes a set of time traces—one per component—that contain the temporal fluorescence fluctuations. Due to the complex interactions and resulting buffering of nonvoltage fluorescence-inducing biomarkers, the transient increases in fluorescence due to individual spiking events are stretched out over time. For example, calcium indicators can observe deviations from baseline in the recorded fluorescence from a single spiking even for seconds.

As spiking events are typically considered the primary source of neural communication, efforts to infer underlying spiking from fluorescent data have emerged, taking a number of forms.73,124–131 The attempt to invert a generative model of fluorescence as a function of spike times is fundamental. The full biophysical model dictates that at each time of a spike event, a stochastic influx of the biomarker (e.g., calcium) flows into the cell and drives nonlinear differential equations and nonlinearity that determine the level of bound fluorescent protein over time. While the full biophysical model includes nonlinear differential equations and nonlinearities,123,132,133 this model can be linearly approximated as

| (7) |

where is the response curve (e.g., exponential rise-and-decay) to a single event and represents the stochasticity of the influx of biomarker at the ’th event.

Given the convolutional form of this model, identifying the time-points of events has taken the form of deconvolution. These algorithms have taken the form of assuming functional forms over , such as exponential67 or double exponential124 kernels, or taken more model-free approaches in a deep-learning framework.130,131,134 Specific algorithms have also varied widely, including exact -regularized optimization,127 marked point process,129 interior point optimization,135 active-set methods,136 and variational autoencoders.134

One of the core difficulties in deconvolution is the probabilistic relationship between spikes and fluorescence. In addition to the biomarker levels being variable, simultaneous recordings of electrophysiology and calcium imaging show that at times optical imaging can miss single events at significant levels (i.e., missing 70% to 80% of individual spikes).137 Thus, in general across noise, indicators, and other experimental conditions, deconvolution may have highly varying performance limits. In fact, for assessment, benchmarking attempts have avoided direct timing comparisons, opting instead for using local rate averages over bins as a validation metric.130 Interestingly, spike ambiguity in optical imaging has seeded another approach: to remove spiking events from the equation—literally—by marginalizing out the spiking events and directly estimating a latent spike rate.138

4.6. Multisession Registration

The ability to record from the same identified population of neurons with functional optical imaging in longitudinal experiments across multiple days is a major advance and enables understanding of long-term processes such as learning and memory. One of the crucial postprocessing steps of such longitudinal experiments is the alignment of the recorded imaging data across days to enable one-to-one mapping of neurons across all sessions. This is essential to understanding the changes in neural representation over time. However, alignment is challenging due to the 3D nonrigid transformations between imaging sessions. These are a result of, for example, day-to-day variance in the imaging angle due to slight changes in the angular placement of the microscope objective, day-to-day variance in optical clarity of cranial windows, and changes in the brain tissue over days. Specifically, in TPM, this can lead to differences in the shape of recorded cells since slight -drift and tilts can result in relatively large changes in the cross-section of a cell. Semiautomated approaches to calculate the transform between imaging sessions and match neurons exist, however these rely on user input to select matching ROIs and only align pairs of sessions.69 Recent methods propose registering imaging sessions based on fully affine invariant methods originally developed for natural image registration.139 A recent approach140 based on the classical SIFT141 algorithm enables fast automatic registration of calcium imaging sessions and one-to-one matching of ROIs, even if the neuron was not detected in all sessions.

In one-photon imaging, alignment is challenging due to light scattering and lack of optical sectioning, which increase the similarity between the time traces of neighboring neurons in the FOV.142 In addition, only active cells can be tracked, as opposed to multiphoton imaging. Sheintuch et al.142 developed a probabilistic method for automated registration across one-photon imaging sessions that is adaptive and optimized to different datasets. First, all cells are mapped to the same image by registering each session to a reference session using a rigid transformation based on the centroid locations of extracted ROIs. Next, the probability for any pair of neighboring cells from different sessions to be the same cell is calculated, given their spatial correlation and centroid distance and a probabilistic model for similar and dissimilar matched cells. Cells are finally aligned across sessions by an iterative procedure based on the estimated probabilities.

5. Widefield Imaging Analysis

Multiphoton microscopy, which was the main focus of the previous sections, provides a way to record neuronal activity at the cellular and sub-cellular levels in a given FOV, typically limited to a few . These recordings allow one to thoroughly investigate local microcircuits comprised from 100s to 1000s of cells at a time. At the other end of the spectrum, cortex-wide (i.e., widefield) imaging trades cellular resolution for increased FOV (millimeters) and enables exploration of the overall activity through imaging of the entire cortical surface through one-photon illumination143 [Figs. 4(a) and 4(b)]. The captured signal exhibits significantly different statistics from micron-resolution imaging both in time and space: the spatial resolutions are too coarse to isolate activity signals of individual neurons, but instead can capture brain-wide activity patterns.48 As a developing modality it presents many open questions regarding processing and analysis. Current approaches are nascent and inconsistent across labs. Moreover, validation and comparisons between distinct approaches are limited. In this section, we review the arising challenges and leading technological and computational approaches for capturing and processing of widefield calcium imaging.

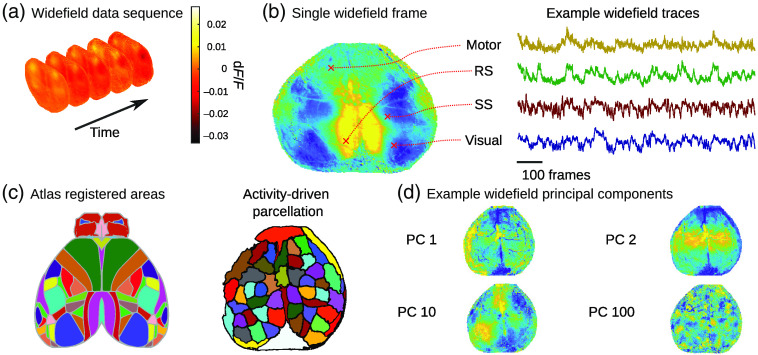

Fig. 4.

Widefield imaging. (a) Imaging frames of widefield signal. (b) Left—a single frame, red “” correspond to motor, retrosplenial (RS), somatosensory (SS), and visual cortices, right—time-traces of the corresponding locations. (c) Left—anatomical atlas CCFv3, right—functional parcellation by LSSC. (d) Spatial components derived by SVD. Data from Ref. 144.

The collection of widefield signals is performed using a scientific CMOS camera, capable of imaging hundreds of frames per second. To control the acquired data size and increase framerate, most researchers reduce the spatial resolution to pixels, resulting in to spatial resolution.

The acquired time traces reflect an aggregated summary of the neuronal activity captured from thousands of cells, cellular compartments, and depths (although mostly superficial layers12). Estimation of spike rates is usually not performed for widefield signals as the captured signals may originate from various cell parts such as axons, dendrites as well as somas, each related to a different kernels. While this issue can be resolved by calcium indicators targeting specific parts of the cell at the expense of limited temporal resolution,145,146 most researchers find the standard GCaMP indicators as the (current) best in terms of spatial and temporal resolution for capturing synaptic activity, providing a valuable tool for exploration of the dynamics of large-scale networks and their relation to complex behavior, perception, and cognition.147,148

Preprocessing of widefield recordings typically includes four stages: alignment, normalization, hemodynamics correction, and parcellation. Below we describe the motivation for each stage and common practices. Imaging of the entire cortical surface naturally enables analysis/modeling across animals. To facilitate a one-to-one correspondence of data acquired from different animals, frames of each session are registered to align to a global template of the cortex, according to several anatomical control points using an affine transform.149 In many cases, the captured time traces exhibit a slow decrease in baseline activity, ascribed to bleaching. This effect is easily removed by subtracting the slow trend (evaluated by low-pass filtering) from each pixel.150 To equalize spatial differences of expression levels, each pixel is normalized with respect to its own overall variance post detrending.

The next stage of preprocessing aims to correct the hemodynamics artifacts, which is unique to widefield signals and is not typically present in multiphoton imaging. Fluctuations in blood flow and oxygenation alter excitation and emission of photons due to hemoglobin absorption. This phenomenon contaminates the captured signals with unwanted dynamic components.12 The most common approach to correct this artifact is to alternate the emitted light with an additional reference channel, for example, UV light (). As GCaMP6 is isosbestic to UV light, the emitted photons are assumed to be independent of neuronal activity whereas the data channel (typically blue ) will cause emission of photons affected by fluctuations of both neuronal activity and hemodynamics signals.

The Beer–Lambert law is an exponential model for the measured light intensity as a function of wavelength, absorption, and traveled path. Assuming that temporal deviations from the average signal are small (as they often are for widefield calcium imaging) this relation is simplified by taking a first-order estimation of the signal in a given pixel

| (8) |

where and are -dimensional vectors of the recorded signals at time through the blue and UV channels respectively and is the number of pixels in a frame. The corrected signal, , is evaluated using a pixelwise linear regression.150,151 Recently, a computational approach for improving hemodynamics reduction based on a single reference wavelength was proposed.152 This approach exploits spatial dependencies between pixels by formulating a multivariate model

| (9) |

where , and are the -dimensional vectors, and is the number of pixels included in a certain local patch. The corrected signal is estimated using the optimal linear predictor, , where the matrices are evaluated from the signals at both wavelengths. Taking the patch size to be 1, this approach reduces to pixelwise regression Eq. (8) where using leads to improved reduction of the hemodynamics artifact.152 An alternative approach relies on using two reference wavelength (thus alternating three channels altogether) to obtain a more accurate correction of hemodynamic absorption.153

The signals at this stage are time-traces of the activity at individual pixels. The data are high-dimensional in space (typically over pixels) and subject to several noise sources (e.g., electronic, photonic shot noise). The final stage of widefield preprocessing is therefore to extract a compact representation of brain activity and filter out the noise component. As with spatiotemporal decomposition models in TPM, most methods for extracting this representation can be formulated as a linear decomposition model: , where is a matrix of the activity of pixels at time frames, is a matrix of spatial components, is a matrix of temporal components, and . Different methods for decomposing vary from solely relying on anatomical features to being completely data-driven, which affects the spatial interpretability of accordingly. Choosing one method over another should be done considering what downstream analyses will be used and the overall biological hypothesis of the research.

Using singular value decomposition (SVD) to reduce the dimension of widefield data relies on the assumption that the variance of neuronal activity within the widefield signal is significantly higher than the noise variance. The activity is decomposed to where is a diagonal matrix of the singular values and are orthogonal matrices and a low-dimensional representation is obtained by setting

| (10) |

where and are the columns of and , respectively and is the singular values. The number of components, , is selected to capture at least 80% to 90% of variance explained of the data, assuming that the remaining 10% to 20% relates to noise. The spatial components are not constrained to be localized or non-negative and therefore the temporal components extracted in Eq. (10) do not indicate a trace of activity related to a specific brain region. Postprocessing is performed in the reduced dimension domain and then projected back to the full dimension using . For example, in Ref. 149, SVD components were used to measure how well external variables (behavior and stimuli) can predict brain activity. The trained regression parameters, each computed per temporal component, were projected to the brain-mask domain to biologically interpret the statistical findings.

A different approach is to take advantage of the spatial structure of widefield signals where adjacent pixels are typically highly correlated while noise is uncorrelated. Therefore a compact and (spatially) filtered representation can be obtained by dividing the brain into localized subregions (parcels) and extracting the average trace within each parcel. In this case is non-negative where each row relates to a specific brain parcel with the corresponding column in as its activity time trace. Unlike imaging of local circuits, where identifying cell boundaries is a well-defined task (although not simple for automation), detection of parcel boundaries is not straightforward.

The most common approach for cortex-wide parcellation is to use a predefined atlas based on anatomical features such as the common coordinate framework (CCFv3) proposed by the Allen Institute for Brain Research154 or the mouse brain atlas of Paxinos and Franklin155 [Fig. 4(c)]. Parcellation based on anatomical atlases presents many advantages—each brain parcel represents a well-known biological functionality (e.g., vision, motor, and sensory) and a straightforward way to compare neuronal activity across animals (and studies). However, it is often observed that the spatial patterns of activity in some regions are not well described by anatomical outlines [e.g., via principal components analysis Fig. 4(d)]. Identification of subcortical borders can be performed experimentally by presenting sensory stimuli, e.g., the auditory cortex156 or the visual cortex.157 These methods are highly efficient for detecting boundaries of subregions within a specific cortical area (visual cortex and auditory cortex), but cannot be used to detect regions that are not responsive to sensory stimuli. Therefore, computational methods for functional parcellation [Fig. 4(c)] have been a major target of research in recent years for calcium imaging as well as in the fMRI community.158

Localized seminonnegative matrix factorization (LocaNMF) is a recently proposed approach aiming to tackle this issue by formulating an optimization problem for minimizing the mean-squared error between the widefield signal and the estimated signal such that the columns of are non-negative and localized according to anatomical clusters.34 LocaNMF produces, by nature, spatial patterns that are similar to the anatomical boundaries used by the optimization process and therefore typically does not deviate much from the anatomical atlas. Related to NMF, a linear one-hidden-layer autoencoder has also been used to identify parcellations in auditory cortex.159 Similar in spirit, GraFT,35 being agnostic to spatial morphology due to its underlying graph-based modeling, has also been applied to extracting (potentially overlapping) widefield spatial maps in rat and mouse macroscopic data. In a recent study, functional parcellation took a different turn by adding a temporal component to brain parcellation. This approach is based on finding repeated spatiotemporal patterns of activation termed motifs, which can be viewed as a time-varying brain parcel. The overall activity is therefore represented as a sum of convolution terms between each motif and its corresponding time trace.160

A different approach for brain parcellation is to cluster the brain into regions of coactivity, with no regard to anatomical features. In this case, the matrix is comprised of binary vectors, each corresponding to a specific brain parcel. Li et al.161 proposed an iterative greedy algorithm for parcellating the brain based on correlation similarity. Other approaches use correlations as graph weights connecting pixels serving as nodes. Parcels are obtained by clustering the graph using Ncut, where the number of parcels is a hyperparameter,162 or a greedy adaptation of spectral clustering, where the number of parcels is learned from the data.163

Overall, the product of functional parcellation methods describe the spatial distribution of coactivity in a given session (per animal). These patterns may be consistent across sessions163 but are not, in general, uniform across animal and obviously vary with the nature of the experiment (e.g., spontaneous activity and task directed). In that regard, as performed for the SVD approach, postprocessing values produced per functional parcel (e.g., goodness of fit and modeling coefficients) can be projected onto the brain mask using the spatial components.

Mapping of cortical neuronal activity is also recently addressed experimentally through multimodal imaging where widefield calcium imaging recording is performed simultaneously with fRMI signals164 or imaging of local circuit using two-photon microscopy150 or electrophysiology165–168 where specific regions are identified as highly correlated to a specific cell (or subpopulation).

6. Modern Challenges in Optical Functional Imaging

While there remain numerous challenges in widespread multiphoton and widefield imaging, there are further emerging challenges that will necessitate new data processing solutions in the near future. We outline here a number of primary avenues that we believe show promise but have many outstanding problems to solve.

6.1. Imaging Morphologies Beyond the Soma

More recently, variants of optical imaging have aimed to expand the scope of accessible brain signals by imaging both larger and smaller neural structures. At one end of this spectrum, zooming in enables the imaging of dendritic and spine structures, which captures how individual neurons communicate.169–174 Dendritic175 and axonal176 imaging, while also having sparse temporal statistics, can have long, thin spatial profiles that span the entire FOV. We note that these types of neural morphologies are also vital in some species, such as Drosophila melanogaster, where dendritic activity is vital to tracking neural processing.177,178 Approaches to dendritic/axonal imaging have largely followed the path of somatic imaging analysis. For example, recent versions of Suite2p69 can be run in “dendrite mode.” The long, stringy morphologies, however, are at odds with typical built-in assumptions of spatial locality. A more recent approach instead redefines pixel ordering on a data-driven graph to better identify irregular morphologies.35 Astrocytes also exhibit irregular morphologies and size, for which targeted approaches have been recently developed.179,180 Interestingly, larger-scale optical imaging of hemodynamic activity also captures components (i.e., blood vessels) that have spatial statistics more similar to dendrites than somas.181

6.2. Voltage Imaging

Voltage imaging is a technique that has been used for decades to record changes in neural activity using voltage-sensitive dyes.182 Voltage imaging, as compared with recording changes in calcium, is a more direct way of measuring neural signals. A comparison of population responses to optical recordings using calcium indicators and voltage indicators showed major differences in the temporal response of the recorded calcium signal as compared with the voltage signal.183 Widefield voltage imaging has also been explored184 and faces many of the same challenges as widefield imaging with calcium sensors (see Sec. 4.6).

Technology for the optical recording of voltage signals has improved rapidly over the past few years. The development of improved voltage sensors in the form of bright genetically encoded voltage indicators (GEVIs) has enabled high-resolution voltage recordings at multiple spatial scales.185 Genetic targeting of these indicators to subcellular structures isolate signal to particular neuronal structures, further increasing the signal-background ratio (SBR). These improvements have allowed researchers to generate optical voltage recordings from a population of cells in awake, behaving animals.186

Several challenges still exist that prevent the generation of large-scale optical voltage recordings. Voltage indicators are membrane-bound, which limits the total concentration of the sensors is compared with calcium indicators, which may fill the whole cytoplasm. Generally, GEVIs are, at least for now, dimmer than their GECIs counterparts. To take advantage of the improved temporal response function, recordings must be made at much higher framerates as compared with calcium indicators (versus ). The combination of these challenges reduces the overall spatial scale of current cellular or subcellular resolution recordings with voltage indicators.

Current-voltage imaging analysis includes both matrix factorization91,187 and deep learning52 demixing approaches similar to methods used in calcium imaging. The potential for low-SNR and non-Gaussian noise statistics can complicate demixing. Moreover, extremely high temporal resolutions create much larger datasets, increasing the computational cost of processing. Finally, non-negativity is a basic assumption built into many calcium imaging analysis methods: i.e., deviations from baseline are only positive, however, voltage traces have no such constraint.

6.3. Computational Imaging

One critical avenue that may completely upend much of how optical imaging data is processed is computational imaging. Computational imaging represents a paradigm wherein optical and algorithmic components are codesigned to compensate and enhance each other and achieve superior results to advances in either area independently.188,189 Codesigned approaches are nascent in in-vivo functional imaging of the brain, with only a few examples aimed at faster imaging41,190 or volumetric imaging.16,45,191,192 The algorithmic designs for computational imaging tend to require specialized and often unique processing elements that invert the optical path of the co-designed microscope.189 Examples include tomography,41 light-field imaging,45,192–194 combined light-field and tomography,191 stereoscopy,16 and computational systems for imaging through scattering tissue.195

While basic denoising or other discussed techniques might still be applicable, more highly coded optics may not even be able to use the same motion correction, let alone the other advances in demixing of individual neuronal signals. For example, lightfield and stereoscopy methods need demixing methods for decoding projections of volumetric components onto 2D arrays. Thus, new frontiers are constantly expanding and requiring novel advances in our handling of functional optical data.

7. Validation and Assessment

One of the most difficult tasks in creating widely applicable and robust calcium image processing methods is proper assessment.196 The effects of mismatch between the necessary simplified statistical assumptions in the signal processing models and the actual data properties must be explored in terms of the effect on the fidelity of extracted signals and, when possible, the later scientific analyses. A prime example of such an effect is explored in Ref. 92, in which it is shown that the i.i.d. Gaussian noise assumption often considered can create bleed-through between overlapping cells and additional fluorescent biological processes in the tissue. The result is that the time traces have high levels (between 15% and 25%) of transient events being false transients in that they do not reflect the activity of that cell, but rather other, nearby, fluorescing components in the tissue. While significant in and of itself, it is further noted that these errors can cause errors in the interpretation of the neural activity, including the skewed discovery of location encoding in the hippocampus.92

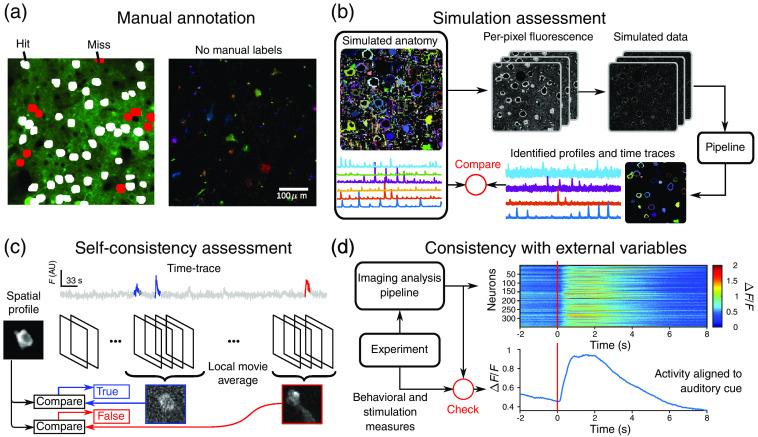

To identify the accuracy of optical imaging processing algorithms, a number of avenues have emerged. Specifically, four current available avenues are assessment based on (1) manual annotation, (2) biophysical simulation, (3) local self consistency of global decompositions, and (4) consistency based on external experimental variables.

We note that a fifth form of validating signals extracted from optical measurements take the form of simultaneous electrophysiological recording and optical imaging.130,137,197 The number of simultaneous cells that can be recorded during imaging within an FOV, however, is limited. Thus these recordings have primarily taken a role in assessing either the accuracy of estimated spikes from optically recorded calcium,130,197 or the similarity between electrically and optically recorded neural signals.137 Until now, this approach does not meet the scale required to completely assess the processing of a full FOV.

7.1. Manual Annotation

The most basic assessments of optical imaging analysis are the manual labeling of cells. This is typically done by annotating an image summarizing the structure in an imaging session, i.e., temporal max projection, temporal average, local temporal correlation,198 or nonlinear embeddings.199 We note that while manual labeling is typically done on the processed data, labels can also be obtained via anatomical imaging (e.g., -stacks or nuclear labeling with activity-invariant fluorescent proteins). Comparison to manual annotation gives clear metrics: hits (manually identified cells that overlapped significantly with a match in the returned spatial profiles) and misses (those with no match) [Fig. 5(a)].

Fig. 5.

Assessing optical imaging analysis outputs. (a) Manual annotation, e.g., the NeuroFinder benchmark dataset (example shown here), can help detect true detections (white) and missed cells (red), as compared with a labeling of the spatial area imaged (right). Manual annotation, however, does not provide information on cells that do not match any of the manual annotations (left). (b) Simulation-based assessment uses in silico simulations of activity and anatomy to create faux fluorescence microscopy data. The outputs of an analysis pipeline using the data can be compared with the simulated ground truth. (c) Self-consistency measures use the global demixed components and analyze local space-time extents of the movie to check if the spatial and temporal components still describe the data well. For example, is activity erroneously attributed to a found cell. (d) Consistency with external variables examines if expected gross behavior of the imaged neural populations match behavioral or stimulation events corecorded with the fluorescence video. For example, shown here are neurons in motor cortex responding to a motion made by a mouse responding to an auditory cue.200

However, there are limitations to assessment via manual annotation. Time traces are not available as ground truth and must be inferred from the data given in the annotations. Additionally, the annotations are often incomplete, excluding sparsely firing cells, or nonsomatic components. The effect is that found profiles that do not match well to the manual annotations could appear as “false alarms” but fall into many categories. They may be actual cells in the data missed by the annotator, they may be algorithmic artifacts caused by merging or splitting parts of cells,92 or they may be overfitting to noise or neuropil. Thus at best, manual annotations give a lower bound on true hits and missed detections but not much information on false positives. Some of the limitations stemming from human error can be removed in new datasets consisting of electron microscopy reconstructions of the imaged tissue.51 This dataset, and any that follow, provide anatomical ground truth in the form of a registerable volume to match spatial components to, although time traces are still unavailable.

7.2. Biophysical Simulations

Another important form of assessment is in-silico simulation.123,201 Simulation plays an important role in assessing the fundamental limits of algorithms across signal processing and machine learning. In particular, simulations are vital when ground truth information is difficult to obtain, either efficiently, or at all. Functional fluorescence microscopy is exactly one such situation. The time, effort, and expense of simultaneous recording fluorescence imaging and electrophysiology is a remarkable effort for only a limited portion of the ground-truth data. Simulations offer a potential solution by leveraging anatomical and physical knowledge of the system to generate data where the underlying activity and anatomy driving the synthetic observations are completely known and can be compared with Fig. 5(b).

Simulations, however, pose their own risk. Simplistic simulations can miss complexities in the real-data imaging statistics, e.g., non-Gaussian or non-i.i.d. noise. Complex simulations run the opposite risk of being so detailed that the computational run-time and memory requirements become excessive and capture details irrelevant to the assessment being conducted. Recent work on the neural anatomy and optical microscopy (NAOMi) simulator seeks to balance these competing needs.123 To date, NAOMi has been applied to a number of scenarios, such as testing different demixing algorithms,35 denoising methods,32 sensitivity to negative transients,202 and testing/training calcium imaging demixing algorithms.131

7.3. Data Consistency

A third form of assessment uses no ground truth data, manual or synthetic, and instead focuses on self-consistency of the data model. The spatial profiles and time traces give a global decomposition of the data by minimizing data fidelity and regularization terms over the entire dataset. In this approach, one can focus on a smaller segment of the data and check if the global decomposition matches the local statistics. Specifically, one can check if in the movie frames, during which a given cell was purported to have fired (a transient burst in the time trace during those frames), if the shape of the cell truly appears in the video. Recent work used this local averaging idea to identify how many algorithms are not locally consistent: i.e., demonstrating that activity from different sources bleed into each other92 [Fig. 5(c)]. The resulting errors (termed false transients) can influence scientific findings and can appear in the time-trace estimates of many different algorithms and across many datasets.92 To address this finding, additional work has sought to develop more robust time-trace estimators that prevent these errors.87,92,94

7.4. Consistency with External Measures

The final form of validation we discuss is with respect to external measures outside the imaged brain area. To explore the relation between brain activity and behavior, perception, and cognition most experimental setups record, simultaneously with the brain activity, external variables such as spontaneous behavior (whisking, running, and pupil size), responses to sensory stimuli, and task-related behavior. A thorough examination of the extracted traces of activity with respect to external variables can be an important tool for the assessment of fluorescence microscopy processing. For example, aligning the activity traces to external onsets such as repeated presentations of a sensory cue, specifically trained behavior or even running onsets allows one to examine brain activity in a behavioral context. Applying this strategy should be done with caution, as uninstructed behavior may cause significant cell-to-cell and trial-to-trial variability.149 Still, averaging across dozens of well-pronounced presentations (visual or auditory cues for example) and across cells usually yields a significant increase of activity in the visual/auditory cortices203,204 or to a distinct pattern of activation of the motor cortex in well-trained animals200 [Fig. 5(d)]. For widefield imaging, these same strategies can be applied with respect to the appropriate brain parcels. If no sensory cues are presented, averaging across running onsets should lead to a significant increase in the overall activity of the cortex.152 Alternatively, additional modalities of neuronal activity can be used for validation of calcium imaging signals such as electrophysiology,115 fMRI,164 or dual imaging of widefield and two-photon imaging.150

8. Discussion

We have aimed to review here a large portion of the literature related to the analysis of functional optical microscopy data. The topics we have covered aim to provide a practical overview of how optics choices affect signal processing challenges, and the many methods that have been developed to solve these challenges. While broad categories, such as denoising, motion correction, and in particular signal extraction, have been explored in detail in the literature, there are many other challenges that have yet to be solved.