Thaler and Sunstein’s “nudge” (1) has spawned a revolution in behavioral science research. Despite its popularity, the “nudge approach” has been criticized for having a “limited evidence base” (e.g., ref. 2). Mertens et al. (3) seek to address that limitation with a timely and comprehensive metaanalysis. Mertens et al.’s headline finding is that “choice architecture [nudging] is an effective and widely applicable behavior change tool” (p. 8). We propose their finding of “moderate publication bias” (p. 1) is the real headline; when this publication bias is appropriately corrected for, no evidence for the effectiveness of nudges remains (Fig. 1).

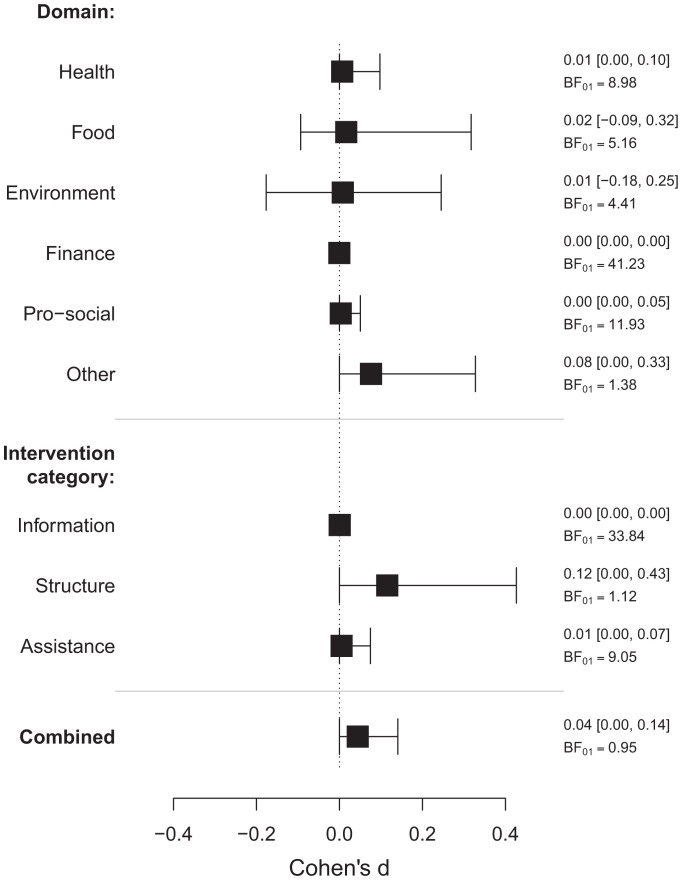

Fig. 1.

RoBMAPSMA model-averaged posterior mean effect size estimates with 95% credible intervals and Bayes factors for the absence of the effect for the combined sample or split by either the domain or intervention category (ignoring the clustering of SEs). BF01 quantifies evidence for the null hypothesis. BF01 larger than one corresponds to evidence in favor of the null hypothesis, and BF01 lower than one corresponds to evidence in favor of the alternative hypothesis (evidence for the alternative hypothesis can be obtained by reciprocating the Bayes factor; BF10 = 1/BF01). As a rule of thumb, Bayes factors between 3 and 10 indicate moderate evidence, and Bayes factors larger than 10 indicate strong evidence.
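The reading of BF01 described in the caption can be sketched in a few lines. This is a minimal illustration, not part of the original analysis: the function names are hypothetical, and the label "weak or indecisive" for Bayes factors below 3 is an assumption, since the caption's rule of thumb only names the "moderate" and "strong" bands.

```python
def bf10_from_bf01(bf01: float) -> float:
    """Reciprocate a Bayes factor: BF10 = 1 / BF01."""
    if bf01 <= 0:
        raise ValueError("A Bayes factor must be positive.")
    return 1.0 / bf01


def describe_bf01(bf01: float) -> tuple[str, str]:
    """Return (favored hypothesis, strength label) for a given BF01.

    Bands follow the caption's rule of thumb: 3 to 10 is moderate,
    above 10 is strong. The label for values below 3 is an assumption.
    """
    if bf01 >= 1:
        favored, strength = "null", bf01
    else:
        favored, strength = "alternative", bf10_from_bf01(bf01)

    if strength > 10:
        label = "strong"
    elif strength >= 3:
        label = "moderate"
    else:
        label = "weak or indecisive"  # assumed label, not from the caption
    return favored, label


# Values taken from Table 1 (column 2): Information, Assistance, Combined.
for value in (33.84, 9.05, 0.95):
    print(value, describe_bf01(value))
```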

Mertens et al. (3) find significant publication bias using Egger regression. Their sensitivity analysis (4) indicates that the true effect size could be as low as d = 0.08 (if publication bias is severe). Mertens et al. argue that severe publication bias is only partially supported by the funnel plot and proceed largely without taking publication bias into account in their subsequent analyses. However, the reported Egger coefficient (b = 2.10) itself qualifies as “severe” (5).
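To make the Egger coefficient concrete, the following is a minimal sketch of a funnel asymmetry regression: a weighted least squares fit of effect sizes on their SEs with weights 1/SE². It rests on several assumptions: that the reported b corresponds to the slope on the SE, that the three-level structure used by Mertens et al. can be ignored for illustration, and that the cutoffs of 1 and 2 in `selectivity_label` match the guidelines in ref. 5 (they should be checked against that source). All function names and the toy data are hypothetical.

```python
def fat_pet(effects, ses):
    """Weighted least squares fit of effect_i = b0 + b1 * se_i.

    Weights are 1 / se_i**2. Returns (b0, b1): b1 is the funnel
    asymmetry (Egger-type) slope, b0 the effect extrapolated to SE = 0.
    """
    if len(effects) != len(ses) or len(effects) < 2:
        raise ValueError("Need at least two (effect, se) pairs of equal length.")
    weights = [1.0 / se ** 2 for se in ses]
    total = sum(weights)
    x_bar = sum(w * x for w, x in zip(weights, ses)) / total
    y_bar = sum(w * y for w, y in zip(weights, effects)) / total
    sxx = sum(w * (x - x_bar) ** 2 for w, x in zip(weights, ses))
    sxy = sum(w * (x - x_bar) * (y - y_bar)
              for w, x, y in zip(weights, ses, effects))
    if sxx == 0:
        raise ValueError("All standard errors are identical; slope is undefined.")
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1


def selectivity_label(b1):
    """Classify the slope using assumed cutoffs of 1 and 2 (see lead-in)."""
    size = abs(b1)
    if size > 2:
        return "severe"
    if size >= 1:
        return "substantial"
    return "little to modest"


# Toy data constructed so that effects grow with SE (small-study effect).
toy_ses = [0.05, 0.10, 0.20, 0.30]
toy_effects = [0.10 + 2.1 * se for se in toy_ses]
intercept, slope = fat_pet(toy_effects, toy_ses)
print(round(intercept, 3), round(slope, 3), selectivity_label(slope))
```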

A newly proposed bias correction technique, robust Bayesian metaanalysis (RoBMA) (6), avoids an all-or-none debate over whether or not publication bias is “severe.” RoBMA simultaneously applies 1) selection models that estimate relative publication probabilities (7) and 2) models of the relationship between effect sizes and SEs [i.e., Precision Effect Test and Precision Effect Estimate with Standard Error (6, 8, 9)]. Multimodel inference is then guided mostly by those models that predict the observed data best (6, 9, 10). RoBMA makes multimodel inferences about the presence or absence of an effect, heterogeneity, and publication bias (6, 9).
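The multimodel logic can be illustrated with a toy Bayesian model-averaging calculation. This is a sketch under stated assumptions, not the RoBMA implementation: the log marginal likelihoods, prior model probabilities, and per-model estimates are made-up inputs, and the function names are hypothetical. It shows how models that predict the data better receive more posterior weight, and how an inclusion Bayes factor for the absence of an effect follows from the change from prior to posterior odds.

```python
import math


def posterior_model_probs(log_marglik, prior):
    """Posterior model probabilities from log marginal likelihoods and priors."""
    log_post = [lm + math.log(p) for lm, p in zip(log_marglik, prior)]
    top = max(log_post)  # subtract the maximum for numerical stability
    unnorm = [math.exp(lp - top) for lp in log_post]
    total = sum(unnorm)
    return [u / total for u in unnorm]


def inclusion_bf01(post, prior, has_effect):
    """Posterior odds (null vs. effect models) divided by prior odds."""
    post_null = sum(p for p, e in zip(post, has_effect) if not e)
    post_alt = sum(p for p, e in zip(post, has_effect) if e)
    prior_null = sum(p for p, e in zip(prior, has_effect) if not e)
    prior_alt = sum(p for p, e in zip(prior, has_effect) if e)
    return (post_null / post_alt) / (prior_null / prior_alt)


def model_averaged_effect(post, estimates):
    """Average per-model estimates, weighted by posterior model probability.

    Models without an effect contribute an estimate of zero.
    """
    return sum(p * est for p, est in zip(post, estimates))


# Toy example: one null model and one effect model with equal prior weight.
log_ml = [math.log(3.0), 0.0]  # the null model predicts the data 3x better
prior = [0.5, 0.5]
has_effect = [False, True]
estimates = [0.0, 0.4]

post = posterior_model_probs(log_ml, prior)
print(post, inclusion_bf01(post, prior, has_effect),
      model_averaged_effect(post, estimates))
```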

Table 1 compares the unadjusted results to the publication bias–adjusted results.* Since publication bias–corrected three-level selection models are computationally intractable, we analyzed the data in two ways: 1) ignoring the three-level structure (column 2) and 2) using only the most precise estimate from studies with multiple results (column 3). Strikingly, we find no evidence for an overall effect, and we find evidence against an effect in the “information” and “assistance” intervention categories, whereas the evidence is undecided for “structure” interventions. When using only the most precise estimates, we further find evidence against an effect in most of the domains, apart from “other,” “food,” and “prosocial” (where the evidence is indecisive), and weak evidence for the overall effect.† However, all intervention categories and domains apart from “finance” show evidence for heterogeneity, which implies that some nudges might be effective, even when there is evidence against the mean effect. Finally, we find strong evidence for publication bias across all subdomains (BFpb > 10), apart from food, when using only the most precise estimates (BFpb = 2.49).
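The second analysis strategy, keeping only the most precise estimate from each study, amounts to selecting the row with the smallest SE per study. A minimal sketch follows; the field names `study_id`, `d`, and `se` and the toy rows are hypothetical and do not reflect the column names of the actual dataset.

```python
def most_precise_per_study(rows):
    """Keep, for each study, the estimate with the smallest standard error.

    `rows` is an iterable of dicts with (hypothetical) keys
    'study_id', 'd' (effect size), and 'se' (standard error).
    Ties are resolved in favor of the first row encountered.
    """
    best = {}
    for row in rows:
        key = row["study_id"]
        if key not in best or row["se"] < best[key]["se"]:
            best[key] = row
    return list(best.values())


toy_rows = [
    {"study_id": "A", "d": 0.50, "se": 0.20},
    {"study_id": "A", "d": 0.30, "se": 0.10},  # most precise estimate for A
    {"study_id": "B", "d": 0.10, "se": 0.30},
]
print(most_precise_per_study(toy_rows))
```

Reducing each study to a single estimate removes the dependence between effect sizes from the same study, at the cost of discarding the remaining estimates.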

Table 1.

Comparison of unadjusted and adjusted effect size estimates for all studies and for subsets of studies based on different categories or domains

| | Random effects | RoBMAPSMA | RoBMAPSMA (precise) |
|---|---|---|---|
| Combined | 0.43 [0.38, 0.48] | 0.04 [0.00, 0.14] | 0.11 [0.00, 0.24] |
| | t(333) = 16.51 | BF01 = 0.95 | BF01 = 0.31 |
| Intervention category | | | |
| Information | 0.25 [0.19, 0.30] | 0.00 [0.00, 0.00] | 0.00 [0.00, 0.07] |
| | t(88) = 8.79 | BF01 = 33.84 | BF01 = 10.57 |
| Structure | 0.58 [0.50, 0.66] | 0.12 [0.00, 0.43] | 0.23 [0.00, 0.49] |
| | t(186) = 13.93 | BF01 = 1.12 | BF01 = 0.33 |
| Assistance | 0.22 [0.15, 0.29] | 0.01 [0.00, 0.07] | 0.01 [0.00, 0.12] |
| | t(65) = 6.42 | BF01 = 9.05 | BF01 = 8.00 |
| Domain | | | |
| Health | 0.31 [0.22, 0.39] | 0.01 [0.00, 0.10] | 0.02 [0.00, 0.19] |
| | t(64) = 7.03 | BF01 = 8.98 | BF01 = 3.53 |
| Food | 0.66 [0.52, 0.81] | 0.02 [−0.09, 0.32] | 0.27 [0.00, 0.64] |
| | t(81) = 9.01 | BF01 = 5.16 | BF01 = 0.55 |
| Environment | 0.48 [0.37, 0.58] | 0.01 [−0.18, 0.25] | 0.00 [−0.44, 0.34] |
| | t(56) = 9.16 | BF01 = 4.41 | BF01 = 3.05 |
| Finance | 0.23 [0.15, 0.31] | 0.00 [0.00, 0.00] | 0.00 [0.00, 0.00] |
| | t(34) = 6.08 | BF01 = 41.23 | BF01 = 30.95 |
| Prosocial | 0.32 [0.22, 0.42] | 0.00 [0.00, 0.05] | 0.05 [0.00, 0.27] |
| | t(38) = 6.36 | BF01 = 11.93 | BF01 = 1.89 |
| Other | 0.40 [0.29, 0.50] | 0.08 [0.00, 0.33] | 0.04 [−0.22, 0.40] |
| | t(55) = 7.66 | BF01 = 1.38 | BF01 = 2.45 |

First column: Random effects metaanalysis estimates with 95% CI based on clustered SEs, all P values < 0.001. Second and third columns: RoBMAPSMA model-averaged posterior mean effect size estimates with 95% credible intervals and Bayes factors for the absence of the effect (BF01), either ignoring the clustering of SEs or using only the most precise estimates (precise). Results differ slightly from the moderator analysis presented in the article because we analyzed each subfield separately to allow 1) testing for the presence of the effect in each category/domain in the Bayesian framework, and 2) publication bias to operate differently in different subdomains.

We conclude that the “nudge” literature analyzed in ref. 3 is characterized by severe publication bias. Contrary to Mertens et al. (3), our Bayesian analysis indicates that, after correcting for this bias, no evidence remains that nudges are effective as tools for behavior change.

Acknowledgments

We thank Mertens et al. for sharing well-documented data and code.

Footnotes

The authors declare no competing interest.

*Our analysis is based on the corrected dataset in ref. 12.

†We also reanalyzed the data by including only models of selection for statistical significance, confirming our results.

Data Availability

Data and analysis script are available in ref. 11.

References

- 1. Thaler R. H., Sunstein C. R., Nudge: Improving Decisions about Health, Wealth, and Happiness (Yale University Press, 2008).

- 2. Lin Y., Osman M., Ashcroft R., Nudge: Concept, effectiveness, and ethics. Basic Appl. Soc. Psych. 39, 293–306 (2017).

- 3. Mertens S., Herberz M., Hahnel U. J. J., Brosch T., The effectiveness of nudging: A meta-analysis of choice architecture interventions across behavioral domains. Proc. Natl. Acad. Sci. U.S.A. 119, e2107346118 (2022).

- 4. Vevea J. L., Woods C. M., Publication bias in research synthesis: Sensitivity analysis using a priori weight functions. Psychol. Methods 10, 428–443 (2005).

- 5. Doucouliagos C., Stanley T. D., Theory competition and selectivity: Are all economic facts greatly exaggerated? J. Econ. Surv. 27, 316–339 (2013).

- 6. Maier M., Bartoš F., Wagenmakers E.-J., Robust Bayesian meta-analysis: Addressing publication bias with model-averaging. Psychol. Methods, 10.1037/met0000405 (2022).

- 7. Vevea J. L., Hedges L. V., A general linear model for estimating effect size in the presence of publication bias. Psychometrika 60, 419–435 (1995).

- 8. Stanley T. D., Doucouliagos H., Meta-regression approximations to reduce publication selection bias. Res. Synth. Methods 5, 60–78 (2014).

- 9. Bartoš F., Maier M., Wagenmakers E.-J., Doucouliagos H., Stanley T. D., No need to choose: Model-averaging across complementary publication bias adjustment methods. Evidence Synthesis Methods, in press.

- 10. Hoeting J. A., Madigan D., Raftery A. E., Volinsky C. T., Bayesian model averaging: A tutorial. Stat. Sci. 14, 382–417 (1999).

- 11. Maier M. et al., Code and data for analyses in “No evidence for nudging after adjusting for publication bias.” Open Science Framework. https://osf.io/svz6e/. Deposited 6 January 2022.

- 12. Mertens S., Herberz M., Hahnel U. J. J., Brosch T., mhhb_nma_data_corrected.csv. Open Science Framework. https://osf.io/ubt9a/. Accessed 3 May 2022.
