Abstract

We introduce a class of scalable Bayesian hierarchical models for the analysis of massive geostatistical datasets. The underlying idea combines ideas on high-dimensional geostatistics by partitioning the spatial domain and modeling the regions in the partition using a sparsity-inducing directed acyclic graph (DAG). We extend the model over the DAG to a well-defined spatial process, which we call the Meshed Gaussian Process (MGP). A major contribution is the development of a MGPs on tessellated domains, accompanied by a Gibbs sampler for the efficient recovery of spatial random effects. In particular, the cubic MGP (Q-MGP) can harness high-performance computing resources by executing all large-scale operations in parallel within the Gibbs sampler, improving mixing and computing time compared to sequential updating schemes. Unlike some existing models for large spatial data, a Q-MGP facilitates massive caching of expensive matrix operations, making it particularly apt in dealing with spatiotemporal remote-sensing data. We compare Q-MGPs with large synthetic and real world data against state-of-the-art methods. We also illustrate using Normalized Difference Vegetation Index (NDVI) data from the Serengeti park region to recover latent multivariate spatiotemporal random effects at millions of locations. The source code is available at github.com/mkln/meshgp.

Keywords: Bayesian, spatial, large n, graphical models, domain partitioning, sparsity

1. Introduction

Collecting large quantities of spatial and spatiotemporal data is now commonplace in many fields. In ecology and forestry, massive datasets are collected using satellite imaging and other remote sensing instruments such as LiDAR that periodically record high-resolution images. Unfortunately, clouds frequently obstruct the view resulting in large regions with missing information. Figure 1 shows this phenomenon in Normalized Difference Vegetation Index (NDVI) data from the Serengeti region. Filling such gaps in the data is an important goal as is quantifying uncertainty in predictions. This goal is achieved through stochastic modeling of the underlying phenomenon, which involves the specification of a spatial or spatiotemporal process characterizing dependence from a finite realization. Gaussian processes (GP) are a customary choice to characterize spatial dependence, but their implementation is notoriously burdened by their O(n3) computational complexity. Consequently, intense research has been devoted in recent years to developing scalable models for large spatial datasets – see detailed reviews by Sun et al. (2011) and Banerjee (2017).

Figure 1:

Left: NDVI in the Serengeti region on 2016-12-17. White areas correspond to missing data due to cloud cover. Right: elevation data for the same region.

Computational complexity can be reduced by considering low-rank models; among these, knot-based methods motivated by “kriging” ideas enjoy some optimality properties but over-smooth the estimates of spatial random effects unless the number of knots is large, and require corrections to avoid overestimation of the nugget (Banerjee et al., 2008; Cressie and Johannesson, 2008; Banerjee et al., 2010; Guhaniyogi et al., 2011; Finley et al., 2012). Other methods reduce the computational burden by introducing sparsity in the covariance matrix; strategies include tapering (Furrer et al., 2006; Kaufman et al., 2008) or partitioning of the spatial domain into regions with a typical assumption of independence across regions (Sang and Huang, 2012; Stein, 2014). These can be improved by considering a recursive partitioning scheme, resulting in a multi-resolution approximation (MRA; Katzfuss 2017). Other assumptions on conditional independence assumptions also have a good track record in terms of scalability to large spatial datasets: Gaussian random Markov random fields (GMRF; Rue and Held, 2005), composite likelihood methods (Eidsvik et al., 2014), and neighbor-based likelihood approximations (Vecchia, 1988) belong to this family.

The recent literature has witnessed substantial activity surrounding the so called Vecchia approximation (Vecchia, 1988). This approximation can be regarded as a special case of the GMRF approximations with a simplified neighborhood structure motivated from a directed acyclic graphical (DAG) representation of a Gaussian process likelihood. Extensions leading to well-defined spatial processes to accommodate inference at arbitrary locations by extending the DAG representation to the entire domain include Nearest neighbor Gaussian processes (NNGP; Datta et al. 2016a,b) and further generalizations by constructing DAGs over the augmented space of outcomes and spatial effects (Katzfuss and Guinness, 2017). These approaches render computational scalability by introducing sparsity in the precision matrix. The DAG relies upon a specific topological ordering of the locations, which also determine the construction of neighborhood sets, and certain orderings tend to deliver improved performance of such models (Katzfuss and Guinness, 2017; Guinness, 2018).

When inference on the latent process is sought, Bayesian inference has the benefits of providing direct probability statements based upon the posterior distribution of the process. Inference based on asymptotic approximations are avoided, but there remain challenges in computing the posterior distribution given that inference is sought on a very high-dimensional parameter space (including the realizations of the latent process). One possibility, available for Gaussian first-stage likelihoods, is to work with a collapsed or marginalized likelihood by integrating out the spatial random effects. However, Gibbs samplers and other MCMC algorithms for the collapsed models can be inexorably slow and are impractical when data are in the millions. A sequential Gibbs sampler that updates the latent spatial effects (Datta et al., 2016a) is faster in updating the parameters but suffers from high autocorrelation and slow mixing. Another possibility emerges when interest lies in prediction or imputation of the outcome variable only and not the latent process. Here, a so called “response” model that models the outcome itself using an NNGP can be constructed. This model is much faster and enjoys superior convergence properties, but we lose inference on the latent process and its predictive performance tends to be inferior to the latent process model. Furthermore, these options are unavailable in non-Gaussian first-stage hierarchical models or when the focus is not uniquely on prediction. A detailed comparison of different approaches for computing Bayesian NNGP models is presented in Finley et al. (2019).

Our current contribution introduces a class of Meshed Gaussian Process (MGP) models for Bayesian hierarchical modeling of large spatial datasets. This class builds upon the aforementioned works that build upon Vecchia (1988) and other DAG based models. The inferential focus remains within the context of massive spatial datasets over very large domains. We exploit the demonstrated benefits of the DAG based models, but we now adapt them to partitioned domains. We describe dependence across regions of a partitioned domain using a small, patterned DAG which we refer to as a mesh. Within each region, some locations are selected as reference and collectively mapped to a single node in the DAG. Relationships among nodes are governed by kriging ideas. In the resulting MGP, regions in the spatial domain depend on each other through the reference locations. Realizations at all other locations are assumed independent, conditional upon the reference locations. This construction leads to a valid standalone spatial process.

As a particular subclass of MGPs, we propose a novel partitioning and graph design based on domain tessellations. Unlike methods that build sparse DAGs by limiting dependence to m nearest neighbors, our approach shapes the underlying DAG with a known, repeating pattern corresponding to the chosen tessellation geometry. The underlying sparse DAG enables scaling computations to large data settings and its known pattern guarantees the availability of block-parallel sampling schemes; furthermore, large computational savings can be achieved at no additional approximation cost if data are collected on patterned lattices. Finally, extensions to spatiotemporal and/or multivariate data are straightforward once a suitable covariance function has been defined. We use axis-parallel domain partitioning and the corresponding cubic DAG – resulting in cubic MGPs or Q-MGPs – to show substantial improvements in computational time and inferential performance relative to other models with data sizes ranging from the thousands to the several millions, for both spatial and spatiotemporal data and using multivariate spatial processes.

The present work may appear to share similarities with the block-NNGP model of Quiroz et al. (2019), who advocate building sparse DAGs on grouped locations based on their ordering and subsequent identification of m “past” neighbors. Unlike block-NNGPs, our tessellated GPs consider the domain tessellation as generating the DAG; the number of parents of any node is thus fixed and depends on the geometry of the chosen tessellation rather than on a user-defined parameter. Inclusion of more distant locations in the parent set of any location will, therefore, not proceed by increasing the number of neighbors m, but rather by increasing the regions’ size and/or modifying their shape. Central to tessellated GPs is the idea of forcing a DAG with known coloring on the data, resulting in guaranteed efficiencies when recovering the latent spatial effects. This strategy is analogous in spirit to multi-resolution approximations (Katzfuss, 2017; Gramacy and Lee, 2008), which also force a DAG on the data, resulting in conditional independence patterns that are known in advance and that can be used to improve computations. However, while multi-resolution approximations are defined by branching graphs associated to recursive domain partitioning, tessellated GPs use a single domain partition, with each region connected in the DAG only to its immediate neighbors. Compared to treed graphs, tessellated GPs are associated to DAGs with fewer conditionally-independent groups and whose repeated patterns facilitate the identification of redundant matrix operations arising when one or more coordinate margins are gridded. We also note that while the idea of partitioning domains to create approximations is not new, construction of the DAG-based approximation over partitioned domains has received considerably less attention. Finally, our focus here is in developing tessellated GPs as a methodology that enables the efficient recovery of the latent spatial random effects and the Bayesian estimation of covariance parameters via MCMC; we are thus not focusing on alternative computational algorithms (see e.g. Finley et al. 2019), which have been developed for NNGPs but can nonetheless all be adapted to general MGP models.

The balance of this paper proceeds as follows. Section 2 introduces our general framework for hierarchical Bayesian modeling of spatial processes using networks of grouped spatial locations. The MGP is outlined in Section 3, where we provide a general, scalable computing algorithm in Section 3.1. Tessellation-based schemes and the specific case of Q-MGPs are outlined in Section 4, which highlights their properties and computational advantages. We illustrate the performance of our proposed approach in Section 5 using simulation experiments and an application on a massive dataset with millions of spatiotemporal locations. We conclude the paper with a discussion and pointers to further research. Supplementary material accompanying this manuscript as an Appendix is available online and contains further comparisons of Q-MGPs with several state-of-the-art methods for spatial data.

2. Spatial processes on partitioned domains

A q × 1 spatial process assigns a probability law on , where w(ℓ) is a q × 1 random vector with elements wi(ℓ) for i = 1,2,…,q. In the following general discussion we will not distinguish between spatial and spatiotemporal domains , and denote spatial or spatiotemporal locations as ℓ,s, or u.

For any finite set of spatial locations of size , let P(·) denote the probability law of the random vector with probability density p(·). The joint density of can be expressed as a DAG (or a Bayesian network model) with respect to the ordered set of locations as

| (1) |

where the conditional set for each w(ℓi) can be interpreted as the set of its parents in a large, dense Bayesian network. Defining a simplified valid joint density on by reducing the size of the conditioning sets is a popular strategy for fast likelihood approximations in the context of large spatial datasets. One typically limits dependence to “past” neighboring locations with respect to the ordering in (1) (Vecchia, 1988; Gramacy and Apley, 2015; Stein et al., 2004; Datta et al., 2016a; Katzfuss and Guinness, 2017). The neighbors are defined and fixed and model performance may benefit from the addition of some distant locations (Stein et al., 2004). The ordering in is also fixed and inferential performance may benefit from the use of some fixed permutations (Guinness, 2018). The result of shrinking the conditional sets to a smaller set of neighbors from the past yields a sparse DAG or Bayesian network, which yields potentially massive computational gains.

We proceed in a similar manner, but instead of defining a sparse DAG at the level of each individual location, we map entire groups of locations to nodes in a much smaller graph; the same graph will be used to model the dependence between any location in the spatial domain and, therefore, to define a spatial process. Let be a partition of into M mutually exclusive subsets so that and whenever i ≠ j. Similar to the nomenclature in the NNGP, we fix a reference set , which itself is partitioned using by letting . The set of non-reference locations is similarly partitioned with so that for each j = 1,2,…,M. We now construct a DAG to model dependence within and between and . Let be a graph with nodes V = A ∪ B, where we refer to A = {a1,…,aM} as the reference nodes and to B = {b1,…,bM} as the non-reference, or simply “other”, nodes. Let A ∩ B = ∅. We introduce a map such that

| (2) |

This surjective many-to-one map links each location in and to a node in . The edges connecting nodes in are E = {Pa[v1],…,Pa[v2M]} where Pa[v] ⊂ V denotes the set of parents of any v ∈ V and, hence, identifies the directed edges pointing to v. We let be acyclic, i.e., there is no chain of elements of V such that and . Crucially, we assume that Pa[v] ⊂ A for all v ∈ V, i.e., that only reference nodes have children, to distinguish the reference nodes A from the other nodes B. Apart from the assumption that aj ∈ Pa[bj], we refrain from defining the parents of a node, thereby retaining flexibility. In general, however, all locations in will share the same parent set. In Section 4 we will consider meshes associated to domain tessellations.

Consider the enumeration , where , and let be the niq × 1 random vector listing elements of w(s) for each . We now rewrite (1) as a product of M conditional densities

| (3) |

The conditioning sets are then reduced based on the graph :

| (4) |

where we denote w[i] = {wj : aj ∈ Pa[ai]}, and Pa[ai] ⊂ {a1,…,ai−1} ⊂ A. This is a proper multivariate joint density since the graph is acyclic (Lauritzen, 1996). It is instructive to note how the above approximation behaves when the size of the parent set shrinks, for a given domain partitioning scheme. To this end, we adapt a result in Banerjee (2020) and show that sparser DAGs correspond to a larger Kullback-Leibler (KL) divergence from the base density p. This result has been proved earlier for Gaussian likelihoods by Guinness (2018), but the argument given below is free of distributional assumptions and is linked to the submodularity of entropy and the “information never hurts” principle (see e.g. Cover and Thomas, 1991).

Consider random vector w and some partition of the domain corresponding to nodes V = {v1,…,vM} via map η. Let the base process correspond to graph where E0 = {Pa0[v1],…,Pa0[vM]}. Then, let where E1 = {Pa1[v1],…,Pa1[vM]} and Pa1[vi] ⊆ Pa0[vi] for all i ∈ {1,…,M}. Finally construct by letting Pa2[vi*] = Pa1[vi*] \ {v*} for some v* ∈ Pa1[vi*]. In other words, graph is obtained by removing the directed edge v* → vi* from . We approximate p using densities p1 and p2 based on and , respectively, obtaining

| (5) |

Considering the Kullback-Leibler divergence of each density from p, and denoting V * = V \ {{i*} ∪ Pa1[i*]}, we find

| (6) |

where we use (5), the fact that V * and {i*} ∪ Pa1[i*] are disjoint, and Jensen’s inequality. This result implies that larger parent sets are preferrable as they correspond to better approximations to the full model; the choice of sparser graphs will be driven by computational considerations – see Section 3.2.

We construct the spatial process over arbitrary locations by enumerating other locations as and extending (4) to the non-reference locations. Given the partition of defined earlier with components for j = 1,2,…,M, for each we set η(u) = bj and recall that Pa[bi] ⊂ A by construction. For each , we denote and define the conditional density of given as

| (7) |

Therefore, for any finite subset of spatial locations we can let and obtain

We show (see Appendix A, available online) that this is a well-defined process by verifying the Kolmogorov consistency conditions. This new process can be built starting from a base process, a fixed reference set, domain partition and a graph . Next, we elucidate with Gaussian processes.

3. Meshed Gaussian Processes

Let be a q-variate multivariate Gaussian process, denoted as w(ℓ) ~ GP(0, C(·, · | θ)). The cross-covariance C(·,· | θ) indexed by parameters θ is a function , where is a subset of (the space of all q × q real matrices) such that the (i, j)-th entry of C(ℓ, ℓ′ | θ) evaluates the covariance between the i-th and j-th elements of w(ℓ) at ℓ and ℓ′, respectively, i.e., cov(wi(ℓ), wj(ℓ′)). We omit dependence on θ to simplify notation. The cross-covariance function itself needs to be neither symmetric nor positive-definite, but must satisfy the following two properties: (i) C(ℓ, ℓ′) = C(ℓ′, ℓ)⊤; and (ii) for any integer n and any finite collection of points {ℓ1, ℓ2, …, ℓn} and for all . See Genton and Kleiber (2015) for a review of cross-covariance functions for multivariate processes. The (partial) realization of the multivariate process over any finite set has a multivariate normal distribution where is the column vector and is the block matrix with the q×q matrix C(ℓi, ℓj) as its (i, j) block for .

We construct the MGP from a base, or parent, multivariate GP for w(ℓ) and then, using the graph defined in Section 2, represent the joint density at the reference set as

| (8) |

where , and for j > 1, and . The resulting joint density is multivariate normal with covariance and a precision matrix . The precision matrix for Gaussian graphical models is easily derived using customary linear model representations for each conditional regression. Consider the DAG in (4). Each wi is niq × 1 and let Ji = |Pa[ai]| be the number of parents for ai in the graph . Furthermore, let Ci,j be the niq × njq covariance matrix between wi and wj, Ci,[i] be the niq × Jiq covariance matrix between wi and w[i], and C[i],[i] be the Jiq × Jiq covariance matrix between w[i] and itself. Representing each conditional density in (4) as a linear regression on wi, we get

| (9) |

where each Hij is an niq×njq is a coefficient matrix representing the multivariate regression of wj given w[i], for i = 1,2,…,M, and each Ri is an niq × niq residual covariance matrix. We set Hii = O and Hij = O, where O is the matrix of zeros, whenever j ∈ {j : aj ∉ Pa[ai]}. For j ∈ {j : aj ∈ Pa[ai]}, let be the indices in Pa[ai] and let be the block matrix formed by stacking side by side for each . Since , we obtain and each can be obtained from the respective submatrix of Hi[i]. We also obtain . Therefore, all the Hij’s and Ri’s can be computed from the base cross-covariance function.

The distribution of can be obtained by noting that w = Hw+ ω, where H = {Hij} is the block matrix with {Hij} as (i, j)-th block. Therefore, , where R is block-diagonal with Ri as the (i, i)-th block. Note that I − H is block lower-triangular with 1’s on the diagonal, hence non-singular. Also, the precision matrix is sparse because of Hij = O whenever aj ∉ Pa[ai]. Block-sparsity of can be induced by building with few, carefully placed directed edges among nodes in A; Appendix B, available online, contains a more in-depth treatment. We extend (8) to the collection of non-reference locations :

| (10) |

where and , analogously to (8), while and are analogous to and . Clearly, given that all the densities are Gaussian, all finite dimensional distributions will also be Gaussian. We have constructed a Gaussian process with the following cross-covariance function for any two locations

For a given base Gaussian covariance function C, domain partitioning , mesh , and reference set , we denote the corresponding meshed Gaussian process as MGP(, , ,C).

3.1. Bayesian hierarchical model and Gibbs sampler

Meshed GPs produce block-sparse precision matrices that are constructed cheaply from their block-sparse Cholesky factors by solving small linear systems. General purpose sparse-Cholesky algorithms (Davis, 2006; Chen et al., 2008) can then be used to obtain collapsed samplers as in Finley et al. (2019). Unfortunately, these algorithms can only be used on Gaussian first stage models and are computationally impracticable for data in the millions. Hence, we develop a more general scalable Gibbs sampler for the recovery of spatial random effects in hierarchical MGP models that entirely circumvents large matrix computations.

Consider a multivariate spatiotemporally-varying regression model at ,

| (11) |

where is the multivariate point-referenced outcome, is a l × p = l × ∑pi matrix of spatially referenced predictors linked to constant coefficients β, w(ℓ) is the spatial process, Z(ℓ) is a l × q design matrix, ε(ℓ) is measurement error such that and . A simple univariate regression model with a spatially-varying intercept can be obtained with l = 1, Z(ℓ) = 1. For observed locations , we write the above model compactly y = Xβ + Zw + ε, where y = (y(ℓ1)⊤, …, y(ℓn)⊤)⊤, w and ε are similarly defined, X = [X(ℓ1) : ⋯ : X(ℓn)]⊤, , and .

For subsets , let , with analogous definitions for and , , and . After fixing a reference set , we obtain and . We partition the domain as above to obtain , , for j = 1, …, M and model w(ℓ) using the MGP which yields . We complete the model specification by assigning β ~ N(β | μβ, Σβ), , θ ~ p(θ).

The resulting full conditional distribution for β is , where , . For , r = 1, …, q, the full conditional is Inverse-Gamma with parameters and where Er = y·r −X·rβ−Z·rw and y·r,X·r,Z·r are the subsets of y,X,Z corresponding to outcome r (out of q).

The Gibbs update of the components can proceed simultaneously as all blocks in have no children and their parents are in . The full conditional for for j = 1,…,M is thus where and , where is the spatial process at locations corresponding to the parents of bj ∈ B ⊂ V.

We update for j = 1,…,M via its full conditional . Let be the vector of indicators that identify locations with non-missing outputs, and let aj ∈ V be the node in corresponding to . Then,

| (12) |

where with , and and ⊙ denotes the Hadamard or Schur (element-by-element) product. Finally, θ is updated via a Metropolis step with target density using (8) and (10). The Gibbs sampling algorithm will iterate across the above steps and, upon convergence, will produce samples from .

We obtain posterior predictive inference at arbitrary by evaluating p(y(ℓ) | y). If , then we draw one sample of y(ℓ) ~ N(X(ℓ)⊤β + Z(ℓ)⊤w(ℓ), D) for each draw of the parameters from . Otherwise, considering that for some j and thus η(ℓ) = bj, with parent nodes Pa[bj] and children Ch[bj] = ∅, we sample w(ℓ) from the full conditional , where and , then draw y(ℓ) ~ N(X(ℓ)⊤β + Z(ℓ)⊤w(ℓ), D).

3.2. Non-separable multivariate spatiotemporal covariances

We provide an account of the computational cost of general MGPs as a starting point to motivate the introduction of more efficient tessellated MGPs, and specifically Q-MGPs, in Section 4. We consider (11) and take l = 1 to simplify our exposition. In the resulting model, β is the regression coefficient on the p point-referenced regressors with a static effect on the outcome, whereas the q-variate spatiotemporal process w(·) captures the dynamic effect of the Z regressors. Typically in geostatistical modeling p and q are small, hence sampling β and τ2 carries a negligible computational cost. The cost of each Gibbs iteration is dominated by updates of θ and w. Let us assume, solely for expository purposes, that each of the M blocks comprise the same number of locations, i.e. , for all j = 1,…,M. Thus, and the graph nodes have J or fewer parents and L or fewer children.

The evaluation of and dominates the computation. Each term in the product entails and , both of size qm×qm, and their determinants. These require of size Jqm×Jqm or less, resulting in flops via Cholesky decomposition. Reasonably, J and m are fixed so M may grow linearly with sample size and the cost is O(nq3J3) considering M ∝ n. The total computing time is with K processors for computing the 2M densities. Sampling and from their full conditional distributions requires O(2Mq3m3 +MLq2m2 +Mq2m2) flops, assuming and are stored in the previous step. The first term in the complexity order is due to the Cholesky decomposition of covariance matrices, the second is due to sampling the reference nodes, and the third comes from sampling other nodes. Without further assumptions, parallelization reduces complexity to , since the covariances can be computed beforehand and the M components of are independent given . With fixed block size m, the overall complexity for a Gibbs iteration is , linear in the sample size and cubic in J, highlighting the computational speedup of sparse graphs (J small), the negative impact of large q, and the serial sampling of .

In terms of storage, Hj and Rj correspond to a storage requirement of O(4Mq2m2) = O(q2n). The matrix Z of size qn×qn can be represented as a list of 2M block-diagonal (hence sparse) Zj matrices. Furthermore, computing Zw (dimension n×1) can be vectorized as the row-wise sum of Z* ⊙ w* where Z* and w* are n×q matrices with jth column representing the jth space-time varying predictor. The cost of storing Z is thus O(2qn).

Complexity is further reduced by considering a graph with small J or a finer partition resulting in large M and small m, whereas the overall time can be reduced by distributing computations on K processors. Possible choices for include nearest-neighbor graphs and multiresolution trees. In settings with large q, adjusting J and M may be insufficient to reduce the computational burden. Covariance functions that are separable in the variables (but perhaps non-separable in space and time) bring the cost of Choesky factorizations of Jqm × Jqm matrices from O(J3q3m3) to O(J3m3 + q3) because , where Ch,u is the Jm×Jm space-time component of the cross-covariance, and Cv the q × q variable component. Savings accrue when evaluating the likelihood and in sampling from the full-conditionals at the cost of realism in describing the spatial process.

The next section develops a novel MGP design based on domain tessellations or tiling – i.e. partitions of the domain forming repeated patterns – to which we associate similarly patterned meshes. If observations are also located in patterns, the bulk of the largest linear solvers will be redundant, resulting in a significant reduction in computational time. In either scenario, sampling will also proceed in parallel with improved mixing.

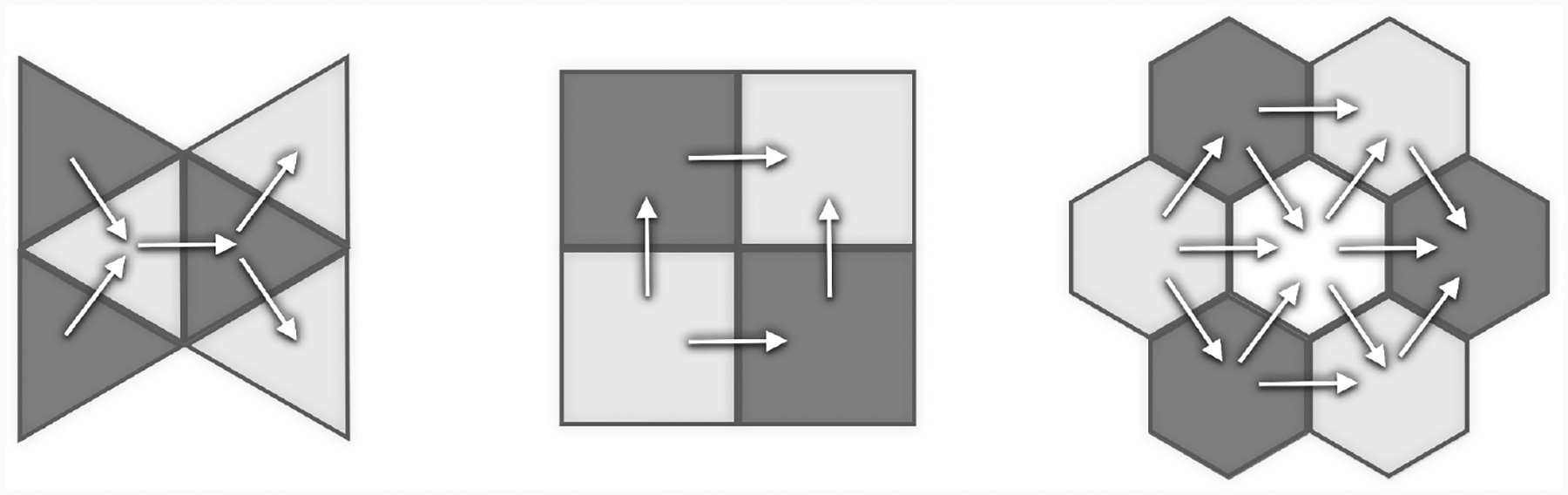

4. MGPs based on domain tessellation or tiling

We construct MGPs based on a tessellation or tiling of the domain. For spatial domains (d = 2, Figure 2), regular tiling results in triangular, quadratic, or hexagonal tessellations; mixed designs are also possible. These partition schemes can be linked to a DAG by drawing directed outgoing edges starting from an originating node/tile. The same fixed pattern can be repeated over a surface of any size. In dimensions d > 2, which may include time, space-filling tessellations or honeycombs can be constructed analogously, along with their corresponding meshes. Constructions of MGPs based on these ideas simply requires partitioning the locations into subsets based on the chosen tessellation.

Figure 2:

Regular tessellation base units and corresponding MGP graphs for spatial domains.

This subclass of MGP models corresponds by design to graphs with known coloring, with each color linked to a subgraph conditionally independent of all nodes of other colors, regardless of the dimension of the domain. This feature enables large-scale parallel sampling of and improves mixing without the need to implement heuristic graph-coloring algorithms. Furthermore, regions in a tessellated domain are typically translations and/or rotations of a single geometric shape. Carefully choosing , it will be possible to avoid computing the bulk of linear solvers, resulting in substantial computational gains. Subsequently, we focus on axis-parallel partitioning (quadratic or cubic tessellation) and cubic meshes, but analogous constructions and the same properties hold with other tessellation schemes.

A cubic MGP (Q-MGP) is constructed by partitioning each coordinate axis into intervals. In d + 1 dimensions, splitting each axis into L intervals results in Ld+1 regions. Consider a spatiotemporal domain , where is the time dimension. We partition each coordinate axis into Lr disjoint sets: , where if j ≠ k and denotes the sth interval in the rth coordinate axis. Solely for exposition, and without loss of generality, assume that and Lr = L for r = 1,…,d + 1. Any location will be such that for some i1,…,id+1 and with j = 1,…,M, where M = Ld+1. We refer to this axis-parallel partition scheme as a cubic tessellation and denote it by . We use T to partition the reference set as for j = 1,…,Ld+1.

Next, we define η(ℓ) = (η1(ℓ), …,ηL(ℓ)) ∈ {1, …, L}d+1, where ηj = ηj(ℓ) = r if . Then, let be a directed acyclic graph with V = A∪B and reference nodes . Therefore, for any j = 1,…,Ld+1 if then η(s) = aj ∈ A ⊂ V. We write each node v ∈ V as . The directed edges are constructed using a “line-of-sight” strategy. Suppose Pa[v] = {x(1),…,x(d+1)}. The hth parent of v is defined as , where k ≥ 1 is the smallest integer such that x(h) ∈ A. Consequently x(h) = ∅ if ah = 1. Thus, the parents of node v = η(ℓ) are the ones that precede it along each of the d + 1 coordinates. If , then η(ℓ) = bj ∈ B and Pa[bj] = {aj} ∪ Pa[aj] where aj ∈ A is a reference node. To avoid Pa[bj] = ∅ we set . The two parents along the hth dimension are where ki is the smallest positive integer such that , i = 1,2. In this setting J = 2(d + 1). The construction is finalized by fixing the cross-covariance function C(ℓ, ℓ′); Figure 3 shows that the same basic structure can be immediately extended to higher dimensions, including time.

Figure 3:

Q-MGP meshes used for spatial data on d = 2 (left) can be extended for use on spatiotemporal data d = 3 (right). Node colors correspond to Gibbs sampler blocks.

4.1. Caching redundant expensive matrix operations

The key computational bottleneck for the Gibbs sampler in Section 3.2 is calculating, for j = 1,…,2M, of (i) (2MJ3q3m3 flops) and (ii) , 4Mq3m3 flops). The former is costlier than the latter by a factor of J3/2. Q-MGPs are designed to greatly reduce this cost. We start with an axis-parallel tessellation of the domain in equally-sized regions , storing observed locations in to create , which we assume, for simplicity, to be no larger than m in size. Taking a stationary base-covariance function C, implies that , where is used to shift all locations in the sets. Recall that the reference set of MGPs can include unobserved locations. Hence, we can build on a lattice of regularly spaced locations. Since domain partitions have the same size, we have for j = 1,…,M, where is a single “prototype set” using which one can locate all other reference subsets. Also, since Pa[aj] ⊂ Pa[bj], there will be 4(d + 1) prototype sets for parents, i.e. for some r ∈ {1,…,4(d + 1)} and j = 1,…,2M. Then, we can build maps and linking each of and to a parent prototype. This ensures that for each j = 1,…,2M. One only needs the maps and , cache the r unique inverses, and reuse them. The same method applies to cache on reference sets, but not on other locations since no redundancy arises in for j = 1,…,M. See Figure 4 for an illustration. Compared to general MGPs (see Table 1), the number of large linear system solvers is now constant with sample size and (d + 1) ≪ M significantly reduces computational cost.

Figure 4:

Visualizing redundancies: a spatial domain is partitioned in M = 25 regions and linked to a quadratic mesh. The reference set is fixed on a regular grid, with m = 9. Parent locations of the orange (resp. purple) are in green (resp. blue). Using a stationary covariance, Cblue,blue = Cgreen,green. Therefore only one inversion is necessary; this can be replicated at no cost across 9 of the 16 regions.

Table 1:

Summary of computational cost of general MGPs and Q-MGPs. Rows are sorted from most expensive (top) to least expensive (bottom).

| Sampling , | ||||||

|---|---|---|---|---|---|---|

| MGPs (all cases) | 2MJ3q3m3 | Mq 3 m 3 | Mq 3 m 3 | Mq 3 m 3 | Mq 3 m 3 | MLq2m2 + Mq2m2 |

| Q-MGPs | ||||||

| — Irregular locations | 4(d + 1)J3q3m3 | 4(d + 1)q3m3 | Mq 3 m 3 | Mq 3 m 3 | Mq 3 m 3 | MLq2m2 + Mq2m2 |

| — Pattern lattice w/missing | 2M*J3q3m3 | 2M*q3m3 | Mq 3 m 3 | MLq 2 m 2 | ||

| — Lattice w/missing | 4(d + 1)J3q3m3 | 4(d + 1)q3m3 | Mq 3 m 3 | MLq 2 m 2 | ||

| — Full lattice and Z(ℓ) = Iq | 4(d + 1)J3q3m3 | 4(d + 1)q3m3 | 2(d + 2)(d + 1)q3m3 | MLq 2 m 2 |

Furthermore, Q-MGPs automatically adjust to settings where observed locations are on partly regular lattices, i.e., they are located at patterns repeating in space or time which emerge after initial inspections of the data. Appendix G, available online, outlines a simple algorithm to identify such patterns and create maps and . In such cases, we fix and . In addition to the above mentioned savings, we now do not have to compute and . If is not a regular lattice over the whole domain, 4(d + 1) is a lower bound and in general there are M* ≪ M inverses to compute. If is a fully observed regular lattice and if Z(ℓ) = I (a varying intercept model), then we save in computing the full conditional covariances as well, since all Dj = I. See Appendix C, available online, for details on choosing and .

4.2. Improved mixing via parallel sampling

With caching, a much larger proportion of time is spent on sampling; parallelization may in general be achieved via appropriate node coloring algorithms (see e.g. Molloy and Reed, 2002; Gonzalez et al., 2011; Lewis, 2016), but this step is unnecessary in Q-MGPs as the colors in are set in advance independently of the data and result in efficient parallel sampling of the latent effects. Reference nodes A of are colored to achieve independence conditional on realizations of nodes of all other colors. For example, we partition spatial domains (d = 2) into M1 × M2 regions and link each region to a reference node in a quadratic mesh. A “central” reference node v+ will have two parents and two children, i.e. Pa[v+] = {vl, vb} and Ch[v+] = {vr, vt}, with l, b, r, t respectively denoting left, bottom, right, top – refer to Figure 3 (left). We have Pa[vt] = {v+, vtl} and Pa[vr] = {v+, vbr}. The Markov blanket of v+, denoted as mb(v+), is the set of neighbors of v+ in the undirected “moral” graph , hence mb(v+) = Pa[v+] ∪ Ch[v+] ∪ {vtl, vbr}. The corresponding spatial process is such that . Denoting vbl = Pa[vl] ∩ Pa[vb] and vtr = Ch[vr] ∩ Ch[vt], we note that {vbl, vtr} ∩ mb(v+) = ∅. We partition reference nodes A into four groups {A(1),A(2),A(3),A(4)}, such that {v+} ⊂ A(1), {vb, vt} ⊂ A(2), {vl, vr} ⊂ A(3), and {vtl,vtr, vbl, vbr} ⊂ A(4). This 3 × 3 pattern is repeated over the whole graph. Then, if v ∈ A(j), mb(v) ∩ A(j) = ∅. Denoting by the other variables in the Gibbs sampler, we get:

Since parallelization is possible within each of the groups, only be four serial steps are needed; time savings are due to M/4 typically being orders of magnitude larger than the number of available processors. Extensions to other tessellation schemes and higher dimensional domains and the associated graphs follow analogously.

5. Data analysis

Satellite imaging and remote sensing data are nowadays frequently collected in large quantities and processed to be used in geology, ecology, forestry, and other fields, but clouds and atmospheric conditions obstruct aerial views and corrupt the data creating gaps. Recovery of the underlying signal and quantification of the associated uncertainty are thus the major goals to enable practitioners in the natural sciences to fully exploit these data sources. Several scalable geostatistical models based on Gaussian processes have been implemented on tens or hundreds of thousands of data points, with few exceptions. In considering larger data sizes, one must either have a large time budget – usually several days – or reduce model flexibility and richness. Scalability concerns become the single most important issue in multivariate spatiotemporal settings. In fact, repeated collection of aerial images and multiple spatially-referenced predictors modeled to have a variable effect on the outcome have a multiplicative effect on data size. With no separability assumptions, the dimension of the latent spatial random effects that one may wish to recover will be huge even when individual images would be manageable when considered individually.

The lack of software to implement scalable models for spatiotemporal data makes it difficult to compare our proposed approach with others in these settings. On the other hand, a recent article (Heaton et al., 2019) pins many state-of-the-art models against each other in a spatial (d = 2) prediction contest. On the same data, we show in Appendix E, available online, that Q-MGPs can outperform all competitors in terms of predictive performance and coverage while using a similar computational budget.

5.1. Non-separable multivariate spatiotemporal base covariance

In our analyses, we choose a class of multivariate space-time cross-covariances that models the covariance between variables i and j at the space-time lags as:

| (13) |

where δij > 0 (and with δij = δji) is the latent dissimilarity between variables i and j. In the resulting cross-covariance function C(h, u, v) in , each component of the q-variate spatial process is represented by a point in a k-dimensional latent space, k ≤ q. Refer to Apanasovich and Genton (2010) for a more in-depth discussion. We set ϕ1(x) = exp(−cx) and ψj(x) = (ajxαj + 1)βj, j = 1,2; see Gneiting (2002) for alternatives. We also fix , and seek to estimate θ = (σ2, c, a1, β1, a2, β2, {δij}i<j,j=1,…,q) a posteriori. The usual exponential covariance arises in univariate spatial settings.

5.2. Synthetic data

We mimick real world satellite imaging data analyzed later in Section 5.3 at a much smaller scale by generating 81 datasets from the model y(ℓ) = Z(ℓ)⊤w(ℓ) + ε(ℓ), where ε(ℓ) ~ N(0, τ2) with and is a regular grid of size 40×40×10, resulting in nall = 16,000 total locations. We take w ~ GP(0,C) where C is as in (13), ψ2 ≡ 1 and σ2 = 1. We generate one dataset for each combination of τ2 ∈ {1/1000,1/20,1/10}, temporal range α ∈ {5,50,500}, space-time separability , and spatial range c ∈ {1,5,25}.

We compare Q-MGPs with the similarly-targeted Gapfill method of Gerber et al. (2018) as implemented in the R package gapfill. We create “synthetic clouds” of radius and with center (c1,t, c2,t) ∈ [0,1/20]2 where to cover the outcomes at six randomly selected times for each of the 81 datasets. Outcomes at two of the remaining four time periods were then randomly selected to be completely unobserved at all but 10 locations in order to avoid errors from gapfill. Refer to Figure 5 for an illustration.

Figure 5:

Artificial cloud coverin in synthetic data.

A Q-MGP model with M = 500 was fit by partitioning each spatial axis into 10 intervals and the time axis into 5 intervals. The priors were τ2 ~ Inv.G.(2,1), σ2 ~ Inv.G.(2,1), β ~ U(0,1), α ~ U(0,104), c ~ U(0,104); 7000 iterations of Gibbs sampling were run, of which 5000 used for burn-in and thinning the remaining 2000 to obtain a posterior sample of size 1000. For each of the 81 datasets we calculate the mean absolute prediction error (MAE) and the root mean squared prediction error (RMSE). Figure 6 compares Gapfill’s 90% intervals with 90% posterior equal-tailed credible intervals for the Q-MGP predictions obtained from 1000 posterior samples. In terms of MAE, the Q-MGP model outperformed Gapfill in all datasets; in terms of RMSE, it outperformed Gapfill in all but one dataset. The average MAE of Q-MGP was 0.4094 against Gapfill’s 0.5366; the average RMSE was 0.5308 against Gapfill’s 0.6820. The Q-MGP also yielded improved coverage of the prediction intervals, although some under-coverage was observed possibly due to the large M. This comparison may favor Q-MGPs as the data were generated from a Gaussian process. Appendix K, available online, confirms similar findings on non-Gaussian data (a GIF image).

Figure 6:

Performance of Q-MGP and Gapfill in out-of-sample predictions in 81 spatiotemporal datasets, at the three tested levels of noise variance τ2.

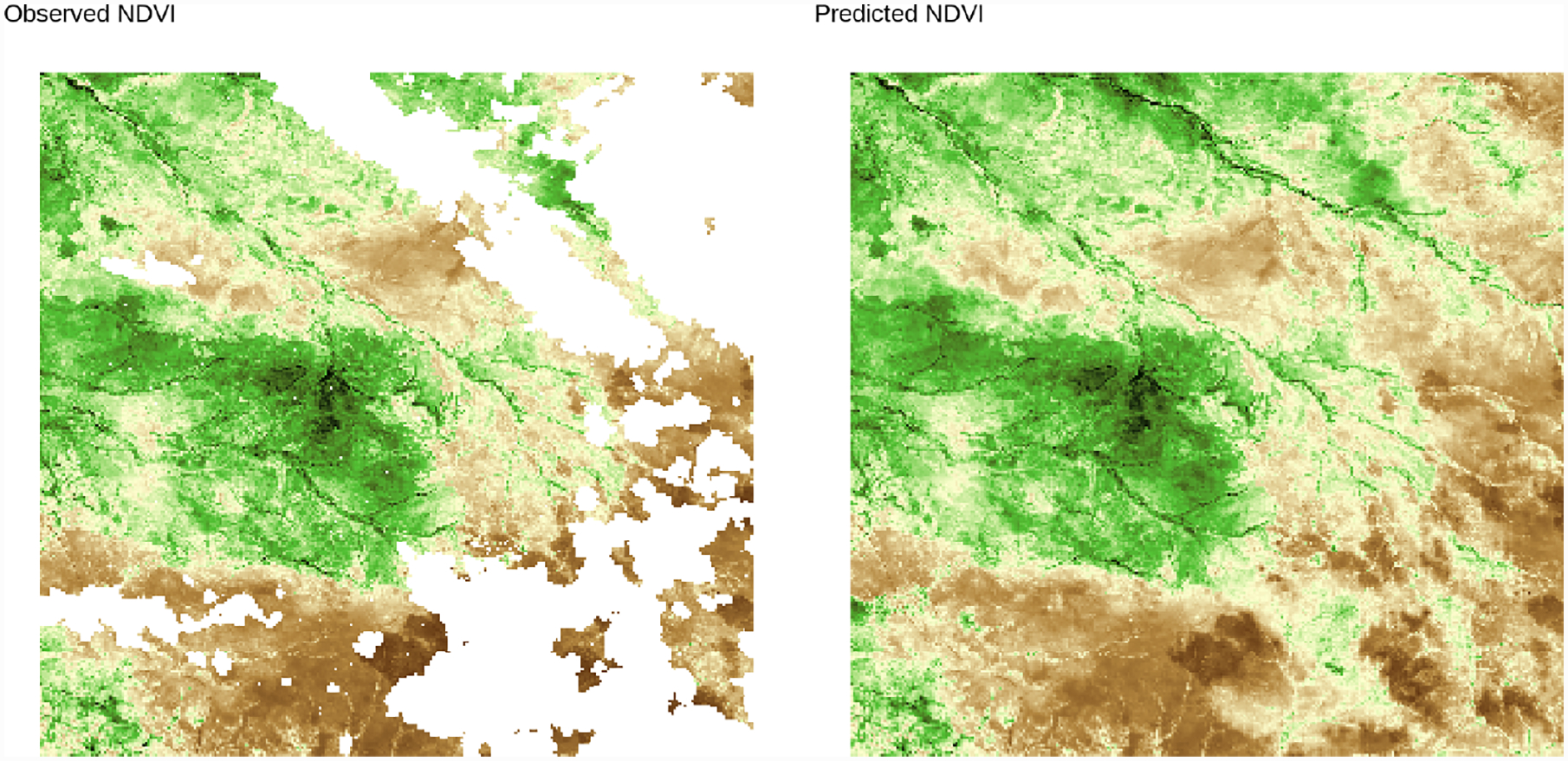

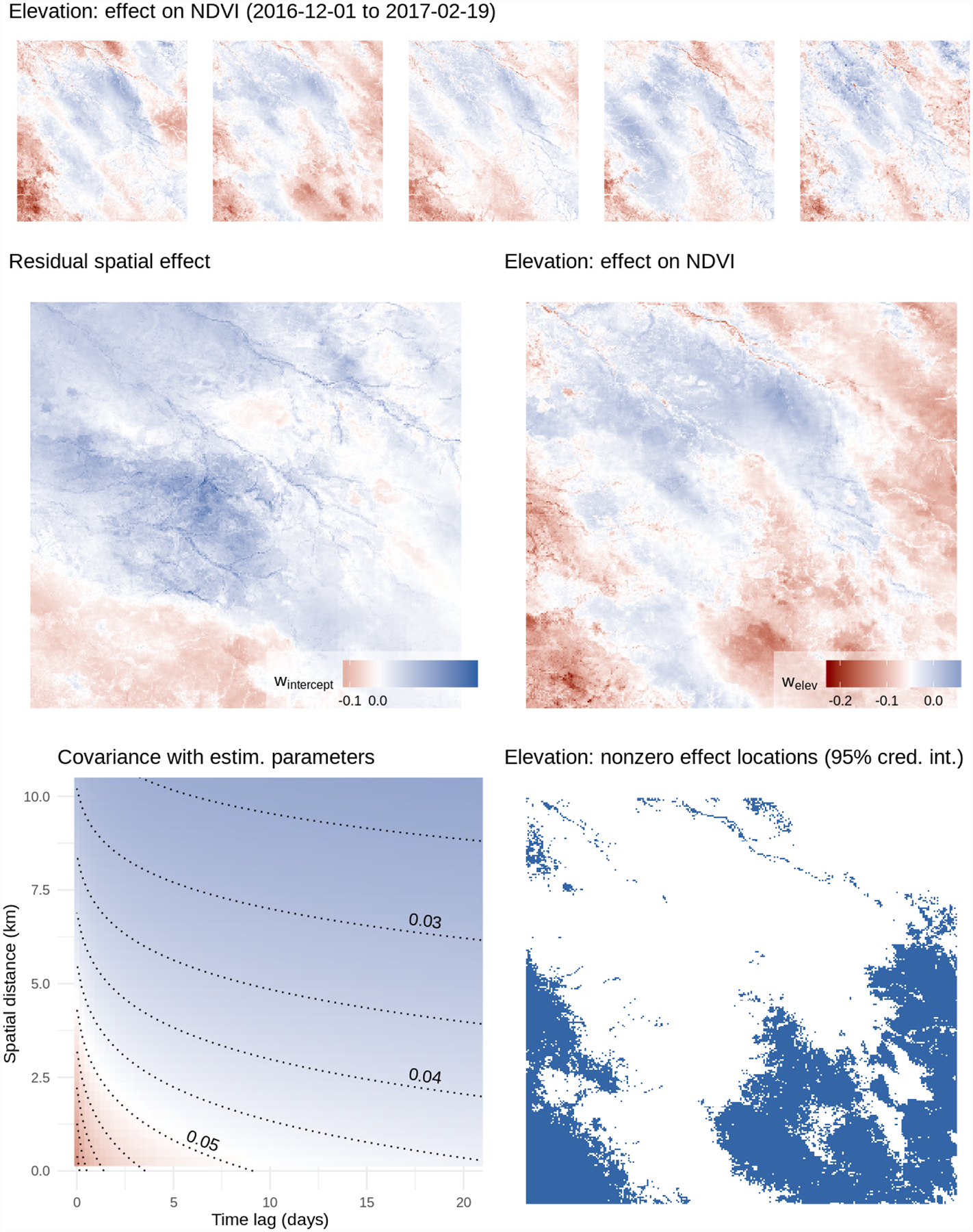

5.3. NDVI data from the Serengeti ecosystem

Time series of Normalized Difference Vegetation Index (NDVI) derived from satellite imagery are used to understand spatial patterns in vegetation phonology. For such studies, image pixel-level NDVI values are observed over time to assess seasonal trends in vegetation green-up, growing season length and peak, and senescence. These analyses typically require NDVI values for all pixels over the region and time period of interest. As noted in the beginning of this section, atmospheric conditions, e.g., cloud cover, and sensor malfunction cause missing NDVI pixel values and hence predicting such values, i.e., gap-filling, is of key interest to the remote sensing community. Here, we consider NDVI data derived from the LandSat 8 sensor (which provides a ~30×30 m spatial resolution pixel) taken over Serengeti National Park, Tanzania, Africa. These data were part of a larger study conducted by Desanker et al. (2019) that looked at environmental drivers in vegetation phonology change. The data cover an area of 30×30km and 34 months, and correspond to 64 images of size 1000×1000 collected at 16-day intervals. Data on NDVI are complemented with elevation and soil moisture data, for a total of three spatially referenced predictors. We are thus interested in understanding their varying effect in space and time, in addition to predicting NDVI output at missing locations. We achieve both these goals by implementing model (11), where Z(ℓ) = X(ℓ) includes the intercept and three predictors; their varying effect will be represented by w(ℓ), which we recover by implementing Q-MGP models. Storing posterior samples of the multivariate spatially-varying coefficients for the full data with q = 4 is impossible using our computing resources as each sample would be of size 1000×1000×64×4 = 2.56e+8. For this reason, we consider two feasible setups. Denote by nall the number of observed and missing locations. In model (1), we subsample each image to obtain 64 frames of size 500 × 500, and fit a regression model with Z(ℓ) = 1 resulting in a spatially-varying intercept model on nobs = 12,582,484 observed locations, a total of nall = 16,000,000 locations for prediction, and a latent spatial random effect w of the same size. The Q-MGP was fit using M = 328,125 space-time regions of size ~48.

The base covariance of (13) becomes a univariate non-separable spatiotemporal covariance as in Gneiting (2002). In model (2), we aim to estimate the varying effect of elevation on NDVI. We subsample each image to obtain 64 frames of size 278 × 278, each covering an area of 25×25km, and take Z(ℓ) = (1 Xelev(ℓ)) resulting in q = 2 and targeting the recovery of latent effects of size 9,892,352. Considering the additional computational burden of the multivariate latent effects, in this case we used M = 156,800, corresponding to smaller space-time regions of average size ~31. In this model there is a single unknown δij in (13) which corresponds to the latent dissimilarity between the intercept and elevation. We thus consider ψ2 = (a2δij + 1)β2 as the unknown parameter. We assign priors βr ~ N(0,100) for r = 1,…,q, σ2 ~ Inv.G.(2,1), τ2 ~ Inv.G(2,1), and uniform priors to other covariance parameters (their support is reported in Table 2).

Table 2:

Posterior summaries of Q-MGP models implemented on the Serengeti data.

| Q-MGP Model (1) | Q-MGP Model (2) | |

|---|---|---|

| n all | 16, 000, 000 | 4, 946, 176 |

| n obs | 12, 755,856 | 3, 961, 715 |

| M | 328125 | 156800 |

| q | 1 | 2 |

| β elevation | 0.0017(0.0014,0.0021) | 0.0415(0.0398,0.0432) |

| β topoindex | 5.54e-4(4.72e-4,6.30e-4) | −0.0011(−0.0012,−0.0008) |

| β accum | −4.84e-4(−5.66e-4,−4.02e-4) | 7.88e-4(6.94e-4,9.06e-4) |

| σ 2 | 0.0585(0.0583,0.0587) | 0.0728(0.0711,0.0749) |

| τ 2 | 1.05e-4(1.05e-4, 1.05e-4) | 1.27e-4(1.21e-4,1.32e-4) |

| c ~ U(0, 1e+6) | 7.0331(7.0146,7.0519) | 3.0710(3.0562,3.0846) |

| a1 ~ U(0, 1e+6) | 433.98(429.67,439.50) | 3857.6(3492.6,4154.7) |

| β1 ~ U(0,1) | 0.0694(0.0690,0.0697) | 0.1058(0.1043,0.1080) |

| ψ2 ~ U(0, 1e+6) | – | 221.36(198.09,240.57) |

| 95% coverage | 94.96 | 95.66 |

| RMSE | 0.0175 | 0.0253 |

| Time/it. (s) | 6.18 | 4.53 |

| Time (hours) | 42.9 | 31.5 |

In both cases, approximate posterior samples of the latent random effects and the other unknown parameters were obtained by running the proposed Gibbs sampler for a total of 25,000 iterations. A posterior sample of size 500 was obtained by using the first 22,000 iterations as burn-in, and thinning the remaining 3,000 by a factor of 6. Additional computational details are at Appendix F, available online. Posterior summaries of the unknown parameters for these models are reported in Table 2, along with RMSE in predicting NDVI at 10,000 left-out locations, 95% posterior coverage at those locations, and run times. Both models achieved similar out-of-sample predictive performance and coverage. Figure 7 shows the NDVI predictions of model (2) at one of the 64 time points. This reveals that the varying effect of elevation on NDVI output (see e.g. Figure 8) is credibly different from zero at 42.54% of the space-time locations (95% c. i.). In particular, it highlights the extent to which higher elevation reduces vegetation. The spatial range is approximately 4km; the time range is about 8 days. The large estimated ψ2 indicates that the correlation between the two covariates of the latent random process is very small at all spatial and temporal lags. The predicted NDVI and latent spatiotemporal effects are supplied as animated GIF images in the supplement.

Figure 7:

NDVI predictions from Q-MGP model (2) at time 60 (2016-12-17).

Figure 8:

Top: the effect of elevation on NDVI output, evolving over five time periods. Middle left: effect on NDVI not explained by elevation; right: effect on NDVI attributable to elevation. Bottom left: estimated covariance at different space-time lags; right, in blue: locations with credibly nonzero effect of elevation on NDVI output.

6. Discussion

We have developed a class of Bayesian hierarchical models for large spatial and spatiotemporal datasets based on linking domain partitions to directed acyclic graphs. These models can be tailored for specific algorithmic needs, and we have demonstrated the advantages of using a cubic tessellation scheme (Q-MGP) when targeting the efficient recovery of spatial random effects in Bayesian hierarchical models using Gibbs samplers.

When considering alternative computational strategies, the proposed Q-MGP may not be optimal. For example, Gaussian first stage models enable marginalization of the latent spatial effects; posterior sampling of unknown covariance parameters via MCMC is typically associated by better mixing. Future research may thus focus on identifying “optimal” DAGs for collapsed samplers. Furthermore, the blocked conditional independence structure of Q-MGPs may be suboptimal as it corresponds to possibly restrictive conditional independence assumptions in neighboring locations. While we have not focused on the effect of different tessellations or partitioning choices in this article, alternative tessellation schemes (e.g. hexagonal) may be associated to less stringent assumptions and possibly better performance, while retaining all the desirable features of Q-MGP.

Other natural extensions to high-dimensional spatiotemporal statistics include settings where there are a very large number of spatiotemporal outcomes in addition to a large number of spatial and temporal locations. Here there are a few different avenues. One approach is in the same spirit of joint modeling pursued here, but instead of modeling the cross-covariance functions explicitly, as has been done here, we pursue dimension reduction using factor models (see, e.g., Christensen and Amemiya, 2003; Lopes et al., 2008; Ren and Banerjee, 2013; Taylor-Rodriguez et al., 2019). The aforementioned references have attempted to model the factor models using spatial processes some of which have used scalable low-rank predictive processes or the NNGP. We believe that modeling latent factors using spatiotemporal MGPs will impart some of the computational and inferential benefits seen here. However, this will need further development especially with regard to identifiability of loading matrices (Lopes et al., 2008; Ren and Banerjee, 2013) and process parameters.

A different approach to multivariate spatial modeling has relied upon conditional or hierarchical specifications. This has been well articulated in the text by Cressie and Wikle (2011); see also Royle and Berliner (1999) and the recent developments in Cressie and Zammit-Mangion (2016). An advantage of the hierarchical approach is that the multivariate processes are valid stochastic processes, essentially by construction and without requiring spectral representations, and can also impart considerable computational benefits. It will be interesting to extend the ideas in Cressie and Zammit-Mangion (2016) to augmented spaces of DAGs to further introduce conditional independence, and therefore sparsity, in MGP models with high-dimensional outcomes.

Finally, it is worth pointing out that alternate computational algorithms, particularly tuned for high-dimensional Bayesian models, should also be explored. Recent developments on algorithms based upon classes of piecewise deterministic Markov processes (see e.g., Fearnhead et al., 2018; Bierkens et al., 2019, and references therein) that avoid Gibbs samplers and even reversible MCMC algorithms are being shown to be increasingly effective for high-dimensional Bayesian inference. Adapting such algorithms to MGP and Q-MGP for scalable Bayesian spatial process models will constitute natural extensions of our current offering.

Supplementary Material

Acknowledgements

Banerjee was supported by the NSF grants DMS-1513654, IIS-1562303, and DMS-1916349; and by the National Institute of Health grants NIEHS-R01ES027027 and NIEHS-R01ES030210. Finley and Peruzzi were supported by National Science Foundation (NSF) EF-1253225 and DMS-1916395, and National Aeronautics and Space Administration’s Carbon Monitoring System project. Peruzzi was supported in part by 1R01ES028804 of the National Institute of Environmental Health Sciences of the National Institutes of Health and European Union project 856506.

References

- Apanasovich TV and Genton MG (2010). Cross-covariance functions for multivariate random fields based on latent dimensions. Biometrika, 97:15–30. doi: 10.1093/biomet/asp078. [DOI] [Google Scholar]

- Banerjee S (2017). High-dimensional Bayesian geostatistics. Bayesian Analysis, 12(2):583–614. doi: 10.1214/17-BA1056R. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Banerjee S (2020). Modeling massive spatial datasets using a conjugate Bayesian linear modeling framework. Spatial Statistics, in press. doi: 10.1016/j.spasta.2020.100417. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Banerjee S, Finley AO, Waldmann P, and Ericsson T (2010). Hierarchical spatial process models for multiple traits in large genetic trials. Journal of American Statistical Association, 105(490):506–521. doi: 10.1198/jasa.2009.ap09068. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Banerjee S, Gelfand AE, Finley AO, and Sang H (2008). Gaussian predictive process models for large spatial data sets. Journal of the Royal Statistical Society, Series B, 70:825–848. doi: 10.1111/j.1467-9868.2008.00663.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bierkens J, Fearnhead P, and Roberts G (2019). The zig-zag process and super-efficient sampling for bayesian analysis of big data. The Annals of Statistics, 47(3):1288–1320. doi: 10.1214/18-AOS1715. [DOI] [Google Scholar]

- Chen Y, Davis TA, Hager WW, and Rajamanickam S (2008). Algorithm 887: CHOLMOD, Supernodal Sparse Cholesky Factorization and Update/Downdate. ACM Trans. Math. Softw, 35(3). doi: 10.1145/1391989.1391995. [DOI] [Google Scholar]

- Christensen WF and Amemiya Y (2003). Modeling and prediction for multivariate spatial factor analysis. Journal of Statistical Planning and Inference, 115(2):543–564. doi: 10.1016/S0378-3758(02)00173-8. [DOI] [Google Scholar]

- Cover TM and Thomas JA (1991). Elements of information theory. Wiley Series in Telecommunications and Signal Processing. Wiley Interscience. [Google Scholar]

- Cressie N and Johannesson G (2008). Fixed rank kriging for very large spatial data sets. Journal of the Royal Statistical Society, Series B, 70:209–226. doi: 10.1111/j.1467-9868.2007.00633.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cressie N and Zammit-Mangion A (2016). Multivariate spatial covariance models: a conditional approach. Biometrika, 103(4):915–935. doi: 10.1093/biomet/asw045. [DOI] [Google Scholar]

- Cressie NAC and Wikle CK (2011). Statistics for spatio-temporal data. Wiley series in probability and statistics. Hoboken, N.J. Wiley. [Google Scholar]

- Datta A, Banerjee S, Finley AO, and Gelfand AE (2016a). Hierarchical nearest-neighbor gaussian process models for large geostatistical datasets. Journal of the American Statistical Association, 111:800–812. doi: 10.1080/01621459.2015.1044091. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Datta A, Banerjee S, Finley AO, Hamm NAS, and Schaap M (2016b). Non-separable dynamic nearest neighbor gaussian process models for large spatio-temporal data with an application to particulate matter analysis. The Annals of Applied Statistics, 10:1286–1316. doi: 10.1214/16-AOAS931. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Davis TA (2006). Direct Methods for Sparse Linear Systems. SIAM, Philadelphia, PA. doi: 10.1137/1.9780898718881. [DOI] [Google Scholar]

- Desanker G, Dahlin KM, and Finley AO (2019). Environmental controls on landsat-derived phenoregions across an east african megatransect. Ecosphere, In press. [Google Scholar]

- Eidsvik J, Shaby BA, Reich BJ, Wheeler M, and Niemi J (2014). Estimation and prediction in spatial models with block composite likelihoods. Journal of Computational and Graphical Statistics, 23:295–315. doi: 10.1080/10618600.2012.760460. [DOI] [Google Scholar]

- Fearnhead P, Bierkens J, Pollock M, and Roberts GO (2018). Piecewise deterministic Markov processes for continuous-time Monte Carlo. Statistical Science, 33(3):386–412. doi: 10.1214/18-STS648. [DOI] [Google Scholar]

- Finley AO, Banerjee S, and Gelfand AE (2012). Bayesian dynamic modeling for large space-time datasets using Gaussian predictive processes. Journal of Geographical Systems, 14:29–47. doi: 10.1007/s10109-011-0154-8. [DOI] [Google Scholar]

- Finley AO, Datta A, Cook BD, Morton DC, Andersen HE, and Banerjee S (2019). Efficient algorithms for Bayesian nearest neighbor Gaussian processes. Journal of Computational and Graphical Statistics, 28:401–414. doi: 10.1080/10618600.2018.1537924. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Furrer R, Genton MG, and Nychka D (2006). Covariance tapering for interpolation of large spatial datasets. Journal of Computational and Graphical Statistics, 15:502–523. doi: 10.1198/106186006X132178. [DOI] [Google Scholar]

- Genton MG and Kleiber W (2015). Cross-covariance functions for multivariate geostatistics. Statistical Science, 30:147–163. doi: 10.1214/14-STS487. [DOI] [Google Scholar]

- Gerber F, Furrer R, Schaepman-Strub G, de Jong R, and Schaepman ME (2018). Predicting missing values in spatio-temporal remote sensing data. IEEE Transactions on Geoscience and Remote Sensing, 56(5):2841–2853. doi: 10.1109/TGRS.2017.2785240. [DOI] [Google Scholar]

- Gneiting T (2002). Nonseparable, stationary covariance functions for space-time data. Journal of the American Statistical Association, 97:590–600. doi: 10.1198/016214502760047113. [DOI] [Google Scholar]

- Gonzalez J, Low Y, Gretton A, and Guestrin C (2011). Parallel Gibbs sampling: From colored fields to thin junction trees. In Gordon G, Dunson D, and Dudík M, editors, Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, volume 15 of Proceedings of Machine Learning Research, pages 324–332, Fort Lauderdale, FL, USA. PMLR. [Google Scholar]

- Gramacy RB and Apley DW (2015). Local Gaussian process approximation for large computer experiments. Journal of Computational and Graphical Statistics, 24:561–578. doi: 10.1080/10618600.2014.914442. [DOI] [Google Scholar]

- Gramacy RB and Lee HKH (2008). Bayesian treed Gaussian process models with an application to computer modeling. Journal of the American Statistical Association, 103:1119–1130. doi: 10.1198/016214508000000689. [DOI] [Google Scholar]

- Guhaniyogi R, Finley AO, Banerjee S, and Gelfand AE (2011). Adaptive Gaussian predictive process models for large spatial datasets. Environmetrics, 22:997–1007. doi: 10.1002/env.1131. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Guinness J (2018). Permutation and grouping methods for sharpening Gaussian process approximations. Technometrics, 60(4):415–429. doi: 10.1080/00401706.2018.1437476. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Heaton MJ, Datta A, Finley AO, Furrer R, Guinness J, Guhaniyogi R, Gerber F, Gramacy RB, Hammerling D, Katzfuss M, Lindgren F, Nychka DW, Sun F, and Zammit-Mangion A (2019). A case study competition among methods for analyzing large spatial data. Journal of Agricultural, Biological and Environmental Statistics, 24(3):398–425. doi: 10.1007/s13253-018-00348-w. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Katzfuss M (2017). A multi-resolution approximation for massive spatial datasets. Journal of the American Statistical Association, 112:201–214. doi: 10.1080/01621459.2015.1123632. [DOI] [Google Scholar]

- Katzfuss M and Guinness J (2017). A general framework for Vecchia approximations of Gaussian processes. arXiv:1708.06302. [Google Scholar]

- Kaufman CG, Schervish MJ, and Nychka DW (2008). Covariance tapering for likelihood-based estimation in large spatial data sets. Journal of the American Statistical Association, 103:1545–1555. doi: 10.1198/016214508000000959. [DOI] [Google Scholar]

- Lauritzen S,L (1996). Graphical Models. Clarendon Press, Oxford, UK. [Google Scholar]

- Lewis R (2016). A guide to graph colouring. Springer International Publishing. doi: 10.1007/978-3-319-25730-3. [DOI] [Google Scholar]

- Lopes HF, Salazar E, and Gamerman D (2008). Spatial dynamic factor analysis. Bayesian Analysis, 3(4):759–792. doi: 10.1214/08-BA329. [DOI] [Google Scholar]

- Molloy M and Reed B (2002). Graph colouring and the probabilistic method. Springer-Verlag; Berlin Heidelberg. doi: 10.1007/978-3-642-04016-0. [DOI] [Google Scholar]

- Quiroz ZC, Prates MO, and Dey DK (2019). Block nearest neighboor Gaussian processes for large datasets. arXiv:1908.06437. [Google Scholar]

- Ren Q and Banerjee S (2013). Hierarchical factor models for large spatially misaligned data: A low-rank predictive process approach. Biometrics, 69(1):19–30. doi: 10.1111/j.1541-0420.2012.01832.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Royle JA and Berliner LM (1999). A hierarchical approach to multivariate spatial modeling and prediction. Journal of Agricultural, Biological, and Environmental Statistics, 1(4):29–56. doi: 10.2307/1400420. [DOI] [Google Scholar]

- Rue H and Held L (2005). Gaussian Markov Random Fields: Theory and Applications. Chapman & Hall/CRC. doi: 10.1201/9780203492024. [DOI] [Google Scholar]

- Sang H and Huang JZ (2012). A full scale approximation of covariance functions for large spatial data sets. Journal of the Royal Statistical Society, Series B, 74:111–132. doi: 10.1111/j.1467-9868.2011.01007.x. [DOI] [Google Scholar]

- Stein ML (2014). Limitations on low rank approximations for covariance matrices of spatial data. Spatial Statistics, 8:1–19. doi:doi: 10.1016/j.spasta.2013.06.003. [DOI] [Google Scholar]

- Stein ML, Chi Z, and Welty LJ (2004). Approximating likelihoods for large spatial data sets. Journal of the Royal Statistical Society, Series B, 66:275–296. doi: 10.1046/j.1369-7412.2003.05512.x. [DOI] [Google Scholar]

- Sun Y, Li B, and Genton M (2011). Geostatistics for large datasets. In Montero J, Porcu E, and Schlather M, editors, Advances and Challenges in Space-time Modelling of Natural Events, pages 55–77. Springer-Verlag, Berlin Heidelberg. doi: 10.1007/978-3-642-17086-7. [DOI] [Google Scholar]

- Taylor-Rodriguez D, Finley AO, Datta A, Babcock C, Andersen HE, Cook BD, Morton DC, and Banerjee S (2019). Spatial factor models for high-dimensional and large spatial data: An application in forest variable mapping. Statistica Sinica, 29(3):1155–1180. doi: 10.5705/ss.202018.0005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Vecchia AV (1988). Estimation and model identification for continuous spatial processes. Journal of the Royal Statistical Society, Series B, 50:297–312. doi: 10.1111/j.2517-6161.1988.tb01729.x. [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.