Abstract

Experimental research controls for past experience yet prior experience influences how we learn. Here, we tested whether we could recruit a neural population that usually encodes rewards to encode aversive events. Specifically, we found that GABAergic neurons in the lateral hypothalamus (LH) were not involved in learning about fear in naïve rats. However, if these rats had prior experience with rewards, LH GABAergic neurons became important for learning about fear. Interestingly, inhibition of these neurons paradoxically enhanced learning about neutral sensory information, regardless of prior experience, suggesting that LH GABA neurons normally oppose learning about irrelevant information. These experiments suggest that prior experience shapes the neural circuits recruited for future learning in a highly specific manner, reopening the neural boundaries we have drawn for learning of particular types of information from work in naïve subjects.

Prior experience shapes the way we view our world. War movies are rife with veterans jumping at the sound of a car door slamming when they return home, and lottery winners may view life through rose-tinted glasses. We use our prior experience to build models of our environment, and preferentially interpret new experiences in a manner consistent with these models1–3. Despite this, experimental research often does not consider a role for prior experience as a factor. Research involving the human population samples from homogeneous groups, controlling factors that might give rise to differences. And of course, one of the main benefits of animal research is an ability to eliminate prior experience. Rats and mice, and to a lesser extent even primates, used for experimental research are housed in experiential vacuums, devoid of any exposure to events that may contaminate their naivety.

The few paradigms that investigate prior experience as an experimental variable have focused on extreme cases, where humans or other animals are exposed to traumatic events4–7. Here, prior trauma appears to “prime” fear circuits to learn, so that individuals who have experienced trauma learn about an aversive event so mild that others would usually ignore it4,5,8. This work has been integral to understanding some of the factors that could contribute to pathological fear, such as that seen in post-traumatic stress disorder (PTSD). But what if these impacts can occur outside of a pathological environment?

Here, we focused on GABAergic neurons in the LH, which are generally implicated in learning about rewards9–13, to test whether non-aversive experiences might prime other circuits to encode fear memories. We found that LH GABA neurons were important for encoding fear memories, but only in rats that had experience with reward learning. Interestingly, reward learning did not recruit LH GABA neurons to encode all types of learning; inhibiting these neurons while rats learnt about cue-cue relationships paradoxically enhanced such learning, whether or not rats had experience with reward learning. This suggests that these neurons oppose the development of associations between cues regardless of prior experience, biasing learning towards rewards, but that they can be recruited to learn about other motivationally-significant events, like aversive events, with appropriate prior experience.

Results

Experiment 1: GABAergic neurons in the lateral hypothalamus are not necessary to learn about aversive events in naïve rats.

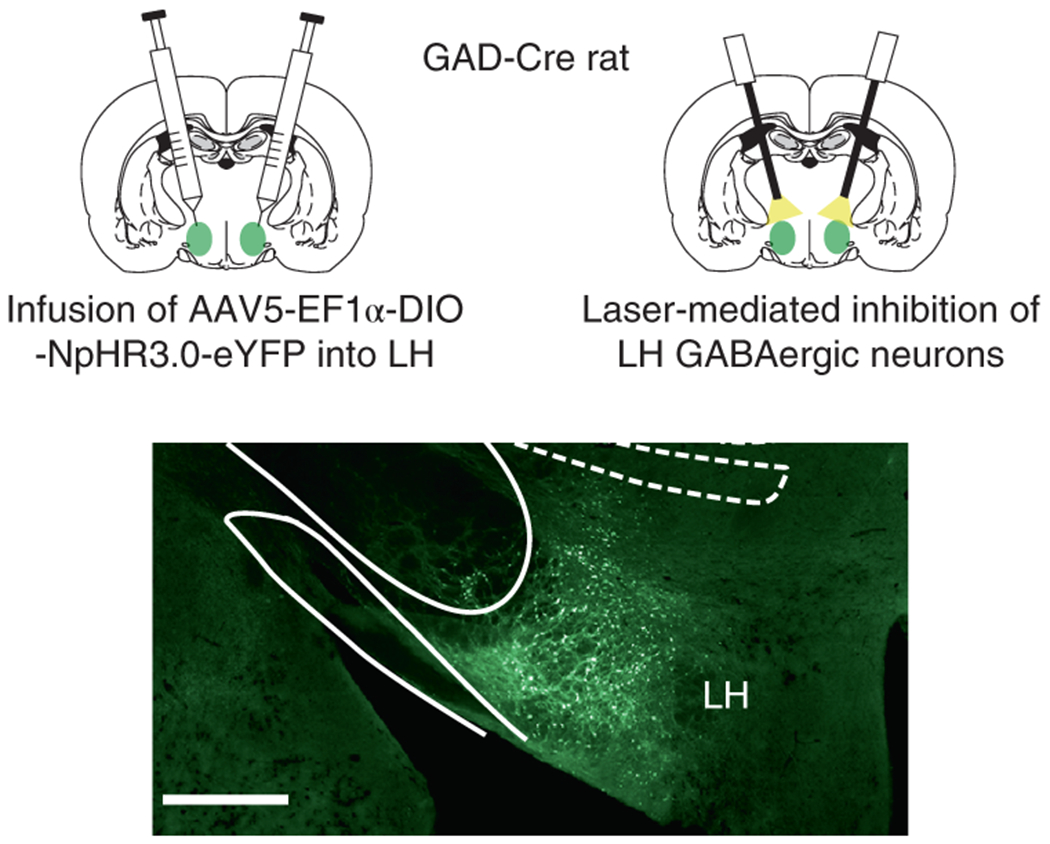

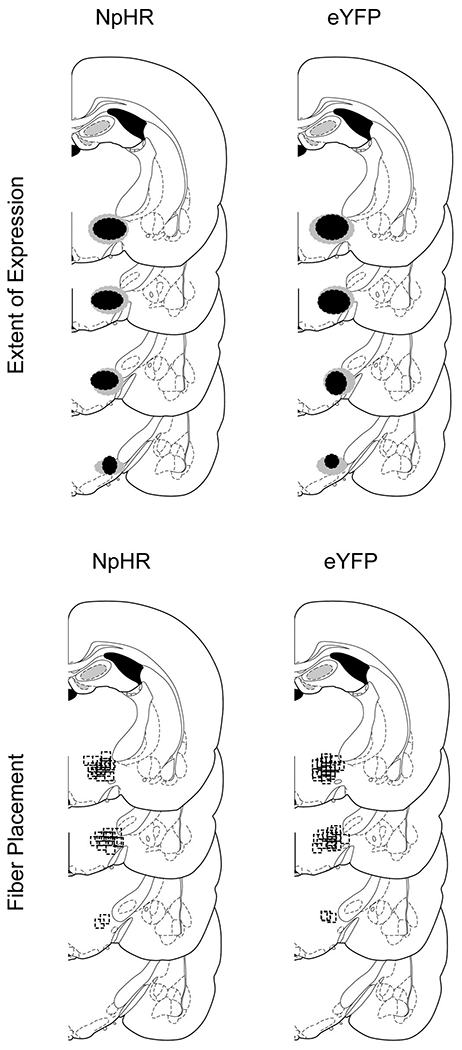

Prior to training, all rats underwent surgery to infuse virus and implant fiber optics targeting the lateral hypothalamus (LH; Fig 1; Extended Data Figure 1). We infused a Cre-dependent AAV carrying halorhodopsin (AAV5-EF1α-DIO-eNpHR3.0-eYFP; NpHR experimental group; n = 4) or the control AAV vector (AAV5-EF1α-DIO-eYFP; eYFP control group; n = 4) into the LH of rats expressing Cre recombinase from the glutamate decarboxylase 1 (GAD1) promoter, one of two genes encoding the GAD enzyme that converts glutamate to GABA (hereafter referred to as GAD-Cre rats)10. During this surgery, we also implanted optic fibers terminating 0.5mm above the injection site in LH, allowing us to silence GABAergic neurons in the LH by delivering green light (532nm, 16mW).

Figure 1. Inhibition of LH GABA neurons was achieved by infusion of a Cre-dependent AAV virus carrying halorhodopsin (NpHR) into the LH of GAD-Cre rats.

Top panels indicate optogenetic technique used to inactivate neurons carrying the Cre-dependent NpHR virus. Rats were first infused with AAV5-Ef1α-DIO-NpHR-eYFP or AAV5-Ef1α-DIO-eYFP into the LH. During this surgery, 200-μm fiber optics were implanted above the LH. Bottom panel shows LH virus expression selective to GABAergic neurons10. See Extended Data 1 for individual virus expression and fiber placement. Scale bar = 1mm.

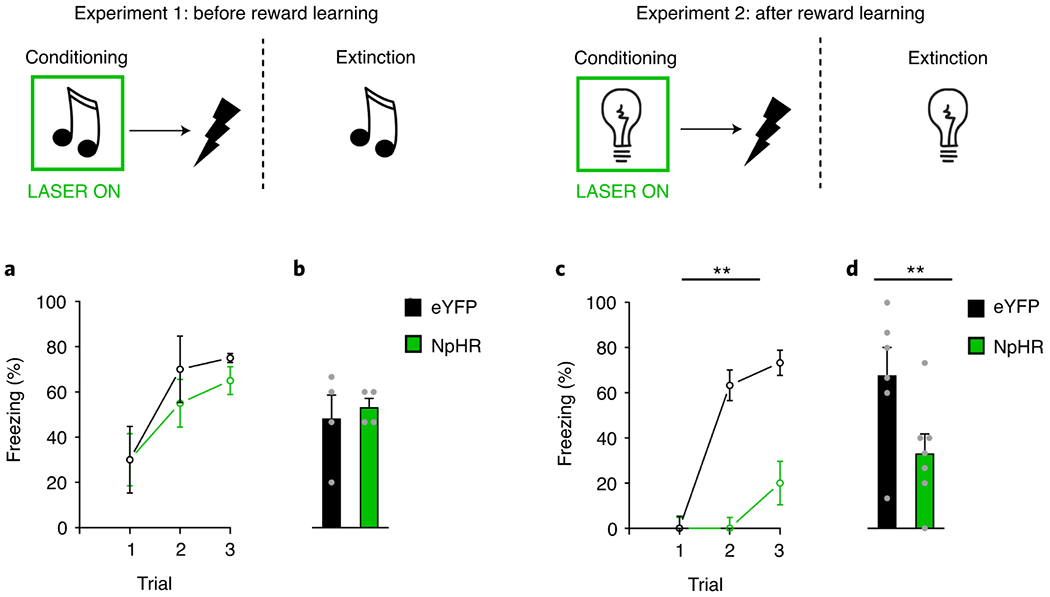

During conditioning, rats received three tone-shock pairings, with an inter-trial interval (ITI) varying around a 7min mean. A 10s auditory tone (70dB) was presented followed by a 1s footshock (0.5mA), after a 1s delay. Light was delivered into the brain during the tone, beginning 500ms before tone onset, and continuing until 500ms after tone offset. During this conditioning, all rats learnt to fear the tone stimulus, exhibiting an increase in freezing elicited by the tone, with no difference between groups (Fig 2A; trial: F2,12=5.812, p=0.017; trial x group: F2,12=0.187, p=0.831; group: F1,6=0.377, p=0.562). There were no differences in levels of freezing exhibited to the background contextual cues prior to cue presentation [mean % (±SEM): eYFP 45 (22.17), NpHR 35 (15); F1,6=0.140, p=0.722]. Following conditioning, we tested whether inactivation of LH GABA neurons during conditioning would impact freezing the following day. To test this, rats were presented with the tone again without shock or light delivery into LH. Rats exhibited high levels of fear to the tone and the level of freezing did not differ between groups (Fig 2B; group: F1,6=0.206, p=0.666). Thus, LH GABA neurons are not normally necessary for the acquisition of conditioned fear in naïve rats.
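The trial timing described above can be laid out explicitly to check that the inhibition window spans the tone but ends before the shock. The following is a minimal sketch, assuming that the 1s delay is measured from tone offset and that all times are in seconds relative to tone onset. The function and variable names are hypothetical and do not come from the experimental control software.

```python
def trial_windows(tone_dur=10.0, light_lead=0.5, light_lag=0.5,
                  shock_delay=1.0, shock_dur=1.0):
    """Return (start, end) windows in seconds relative to tone onset.

    Assumption: the shock delay is measured from tone offset.
    """
    tone = (0.0, tone_dur)
    # Light starts 500ms before tone onset and ends 500ms after tone offset.
    light = (tone[0] - light_lead, tone[1] + light_lag)
    shock_start = tone[1] + shock_delay
    shock = (shock_start, shock_start + shock_dur)
    return {"tone": tone, "light": light, "shock": shock}


def overlaps(a, b):
    """True if two half-open (start, end) windows share any time."""
    return a[0] < b[1] and b[0] < a[1]


windows = trial_windows()
# Time between the end of light delivery and shock onset.
gap = windows["shock"][0] - windows["light"][1]
print(windows, gap)
```

Under these assumptions, light delivery ends 0.5s before shock onset, so inhibition covers the cue period and not the shock itself.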

Figure 2. LH GABAergic neurons are necessary to encode fear memories after reward learning.

Responding is shown as mean level of freezing (%; ±SEM). Top: LH GABAergic neurons were inhibited by light (green rectangle) during the tone or light, and not during shock presentation, in both naïve rats (Exp 1; left) and a separate group of rats that had experienced reward learning (Exp 2; right). Bottom left: (A) shows freezing during conditioning in naïve rats, where inhibition of LH GABA neurons in the NpHR group (n=4 rats) had no impact on learning about fear relative to the eYFP control group (n=4 rats; trial: F2,12=5.812, p=0.017; trial x group: F2,12=0.187, p=0.831; group: F1,6=0.377, p=0.562). Similarly, (B) demonstrates that there were no differences in levels of freezing between groups during extinction (group: F1,6=0.206, p=0.666). Bottom right: (C) in a separate group of rats that had prior experience with rewards, inhibition of LH GABAergic neurons in our NpHR group (n=7 rats) significantly attenuated fear learning during conditioning relative to eYFP controls (n=6 rats; trial: F2,22=17.886, p<0.001; trial x group: F2,22=9.133, p=0.001; group: F1,11=29.615, p<0.001). (D) shows this difference was maintained in an extinction test with LH GABA neurons intact (group: F1,11=5.553, p=0.038). Data were analyzed with a repeated-measures ANOVA, which utilizes a two-sided test.

Experiment 2: GABAergic neurons in the lateral hypothalamus are necessary to learn about aversive events in rats that have previous experience learning about rewards.

Next we tested whether LH GABAergic neurons could be recruited for learning about fear if rats had been trained in a procedure that is dependent on LH GABAergic function10. We first trained rats to associate a tone with delivery of a sucrose pellet, then we again tested the effects of optogenetic inhibition of LH GABAergic neurons on fear conditioning.

Prior to training, all rats underwent surgery to infuse virus and implant fiber optics targeting the LH. We infused a Cre-dependent AAV carrying halorhodopsin (NpHR experimental group; n = 7) or the control AAV vector (eYFP control group; n = 6) into the LH of GAD-Cre rats, as in the previous experiment. Following appetitive learning, rats began aversive training. As in Experiment 1, rats received three cue-shock pairings during conditioning, with an inter-trial interval (ITI) varying around a 7min mean. Since the rats had learnt to associate auditory cues with reward in the prior appetitive training, we used a light cue to predict delivery of a mild foot shock (0.35mA), delivered 1s later. LH GABAergic neurons were again inhibited only during the cue. Across conditioning, rats in the eYFP group learnt to associate the light with shock, increasing levels of freezing during light presentation as conditioning progressed. But this time, inactivation of LH GABAergic neurons significantly attenuated this fear learning, as rats in the NpHR group were slower to increase freezing to the light (Fig 2C; trial: F2,22=17.886, p<0.001; trial x group: F2,22=9.133, p=0.001; group: F1,11=29.615, p<0.001). There were no differences in levels of freezing to the background contextual cues [mean % (±SEM): eYFP 36.67 (18.20), NpHR 14.28 (14.28); F1,11=0.962, p=0.348].

Subsequently, we gave the rats a final test session in which the light stimulus was presented without shock. Here, we did not deliver light into the brain. This allowed us to test if the deficit seen in our NpHR group during learning was due to impaired learning, or a transient effect on expression of the fear response. Consistent with deficient learning, the NpHR group continued to show lower freezing to the cue in this test session relative to the eYFP group (Fig 2D; group: F1,11=5.553, p=0.038). Thus, LH GABAergic neurons become important for encoding fear memories after rats have had experience with reward learning.
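The degrees of freedom reported for Experiments 1 and 2 follow directly from the mixed design (group as a between-subjects factor, trial as a within-subjects factor). The sketch below is an illustrative check only, assuming no sphericity correction was applied; the function name `mixed_anova_df` is hypothetical and is not part of any statistics package.

```python
def mixed_anova_df(n_subjects, n_groups, n_levels):
    """Degrees of freedom for a two-way mixed ANOVA.

    n_subjects: total number of rats
    n_groups:   levels of the between-subjects factor (e.g. eYFP, NpHR)
    n_levels:   levels of the within-subjects factor (e.g. trials)
    Assumes uncorrected (sphericity-assumed) degrees of freedom.
    """
    between_error = n_subjects - n_groups
    within_num = n_levels - 1
    within_error = within_num * between_error
    return {
        "group": (n_groups - 1, between_error),
        "within": (within_num, within_error),
        "interaction": ((n_groups - 1) * within_num, within_error),
    }


exp1 = mixed_anova_df(n_subjects=8, n_groups=2, n_levels=3)   # 4 NpHR + 4 eYFP
exp2 = mixed_anova_df(n_subjects=13, n_groups=2, n_levels=3)  # 7 NpHR + 6 eYFP
print(exp1)
print(exp2)
```

With 8 rats and three trials this yields F2,12 for trial and F1,6 for group, and with 13 rats it yields F2,22 and F1,11, matching the values reported above.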

Experiment 3: GABAergic neurons in the lateral hypothalamus are recruited to encode fear memories in rats following experience with reward contingencies, and not as a result of other experimental factors.

Our first two experiments suggested that LH GABAergic neurons become necessary for the acquisition of fear memories after rats have had experience with rewards. However, it is unclear whether this is because of experience with the contingency between cues and rewards versus other factors in the combined appetitive and aversive conditioning that are absent in simple fear conditioning. Further, the role of LH GABA neurons in fear learning after experience with cues and rewards might be due to a role for these neurons in segregating prior reward learning from the new fear memories, rather than due to a specific role in encoding fear memories per se. In order to exclude these other possibilities, we ran another experiment where we controlled for these factors.

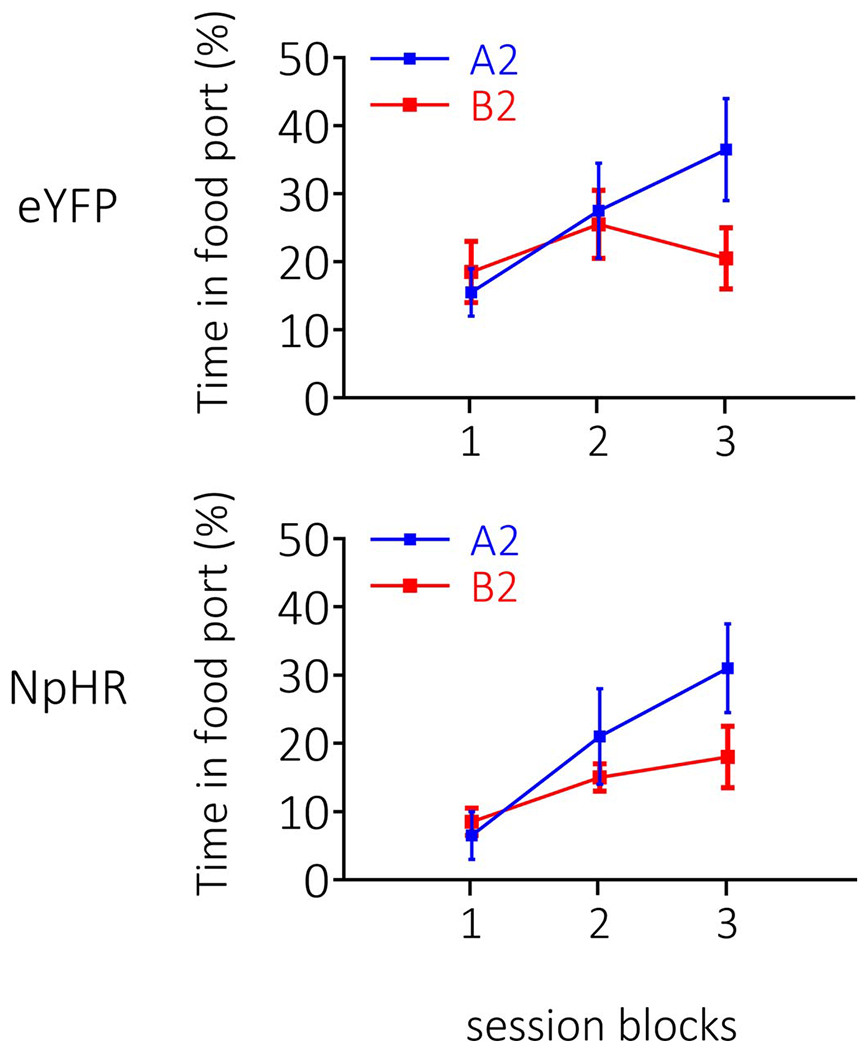

Prior to training, all rats underwent surgery to infuse virus and implant fiber optics targeting the LH. We infused a Cre-dependent AAV carrying halorhodopsin (NpHR experimental groups; n = 12) or the control AAV vector (eYFP control groups; n = 12) into the LH of GAD-Cre rats. Rats were then randomly allocated into one of two conditions: “learners”, which received light-food pairings prior to fear conditioning, or “naïve” rats, which experienced all aspects of the experimental procedures without reward presentations (Fig 3). This yielded four groups: NpHR learners (n=6), eYFP learners (n=6), NpHR naïve (n=6), and eYFP naïve (n=6). Reward learning occurred in one context (context A), differentiated by flooring, wallpaper, and scent, from the context in which aversive procedures would take place (context B). In context A, learners experienced light presentations paired with delivery of sucrose pellets; NpHR and eYFP learners showed appetitive learning across sessions, increasing the time spent in the food port during light presentations as conditioning progressed, with no differences in the rates or ultimate levels of learning between groups (Fig 3A; session: F4,40=11.171, p<0.001; session x group: F4,40=0.174, p=0.951; group: F1,10=1.498, p=0.249). During this stage, the NpHR naïve and eYFP naïve groups received presentations of the light stimulus in context A without presentation of reward. Unsurprisingly, rats in these groups did not show learning about the light stimulus (Fig 3D; session: F4,40=0.498, p=0.737; session x group: F4,40=0.951, p=0.445; group: F1,10=0.263, p=0.620). During appetitive learning, all rats were exposed to context B on alternate sessions (i.e. morning or afternoon, counterbalanced across days and subjects), to enhance context discrimination.

Figure 3. LH GABAergic neurons are recruited to encode fear memories only in rats that experience contingencies between cues and rewards.

Responding is shown as mean level of responding (%; ±SEM) during Exp 3. Top: LH GABAergic neurons were inhibited (green rectangle) during fear learning in rats with a prior history of reward learning. (A) Prior to aversive learning, rats were trained to associate a light with food delivery in context A, with no differences between NpHR learners (n=6 rats) and eYFP learners (n=6 rats; session: F4,40=11.171, p<0.001; session x group: F4,40=0.174, p=0.951; group: F1,10=1.498, p=0.249). (B) During fear learning, we found that NpHR learners showed less learning about the tone, and greater learning to the context (simple main effect after group interaction: F1,20=4.831, p=0.040), in contrast to eYFP reward learners (F1,20=0.773, p=0.390). (C) The deficit in learning about the tone was maintained in the extinction test, after extinction to the contextual cues, with LH GABAergic neurons intact (group: F1,10=6.085, p=0.033). Bottom: LH GABAergic neurons were inhibited (green rectangle) during fear learning in rats that received light presentations without reward. (D) Prior to aversive learning, NpHR naïve (n=6 rats) and eYFP naïve (n=6 rats) groups received presentations of the light and did not acquire an appetitive response across days (session: F4,40=0.498, p=0.737; session x group: F4,40=0.951, p=0.445; group: F1,10=0.263, p=0.620). (E) During fear learning, NpHR naïve rats without LH GABAergic activity showed no difference in freezing to the tone and contextual cues (F1,20=0.435, p=0.517), similarly to eYFP naïve rats (F1,20=0.048, p=0.828). (F) There was no difference in the expression of fear to the tone during the subsequent extinction test when LH GABAergic neurons were intact (group: F1,10=0.303, p=0.594). Data were analyzed with a repeated-measures ANOVA, where analyses of simple-main effects were warranted after a significant interaction was determined and did not necessitate controls for multiple comparisons. In the case of an expected interaction, one-tailed tests were used to warrant investigation of further simple-main effects.

Following appetitive learning, all rats underwent fear conditioning in context B. Here, rats received three tone-shock pairings, with an inter-trial interval (ITI) varying around a 7min mean. As in Experiment 1, a 10s auditory tone cue (70dB) was presented, and 1s later a mild foot shock (0.35mA) was delivered. LH GABAergic neurons were inhibited by light during the tone. In contrast to the prior experiments, all rats showed higher levels of freezing to the background contextual cues as a consequence of our context-specific design, which made the context a competing predictor of shock. As a result, when we disrupted learning during the tone, these rats learned more about the context. Specifically, while there was no difference in responding to the tone and context overall at the end of conditioning (Fig 3B and E; F1,20=0.193, p=0.665; measured by freezing during the pre-tone period and tone on the last trial), there was a significant interaction as a function of prior experience with reward learning (i.e. condition), and whether or not LH GABAergic neurons were inhibited (i.e. virus: eYFP or NpHR; tone x context x virus x condition: F1,20=3.913, p=0.031). Follow-up analyses showed that this effect was due to greater freezing to the contextual than tone cues in NpHR reward learners (Fig 3B: F1,20=4.831, p=0.040) but not in the other groups (Fig 3B and E; eYFP reward learners: F1,20=0.773, p=0.390; eYFP naïve: F1,20=0.048, p=0.828; NpHR naïve: F1,20=0.435, p=0.517). Thus in the reward learners, inhibition of LH GABAergic neurons selectively disrupted learning for the tone and produced a compensatory increase in learning about the contextual cues present during fear conditioning.

Following conditioning, rats were again exposed to context B in the absence of shock to extinguish fear to the contextual cues (see Extended Data 2). Then, we tested responding to the tone under extinction while LH GABAergic neurons were online. This allowed us to get a clean test of what the rats had learnt about the tone during conditioning, without confounding this measure with differential levels of contextual fear.

We found that our eYFP and NpHR groups no longer showed differences in freezing to the background contextual cues at the beginning of the tone test (see Extended Data 2). However, during the tone test, the deficit in learning about the tone was maintained in our NpHR learners group. Specifically, responding to the tone was significantly reduced relative to the eYFP learners group (Fig 3C; group: F1,10=6.085, p=0.033). Again, this was not seen in our NpHR naïve group, which demonstrated similar levels of responding to the tone relative to the eYFP naïve group (Fig 3F; group: F1,10=0.303, p=0.594). These data demonstrate that LH GABAergic neurons become recruited to learn about aversive events as an explicit result of experiencing the contingency between the cue and reward.

GABAergic neurons in the lateral hypothalamus contribute to fear learning by reducing associative strength acquired to conditioned stimuli.

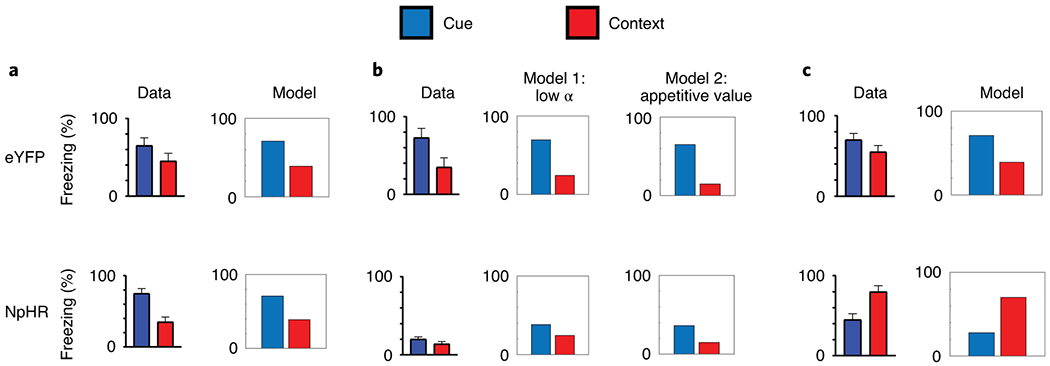

We next performed computational modeling to test whether a role for LH GABA neurons in learning about the shock-predictive cue could explain our specific pattern of results found after experience with reward learning. To do this, we used temporal-difference reinforcement learning (TDRL)14, in the form of the multi-step TD(λ)15, combined with Mackintosh’s (1975) attentional model, known to be at play in fear conditioning using these parameters16–18. We allowed inhibition of LH GABA neurons to partially (70%) block the update of the associative weights accumulating to the shock-predictive cue across learning, after rats had experience with reward learning (Fig 4). The degree of this block in learning is consistent with what we have found in ex vivo experiments manipulating neural activity in cell bodies using optogenetics19,20. We found that this model could predict two critical features of our fear conditioning data: 1) the reduction of learning that accrues to the shock-predictive cue, and 2) the differences in context learning seen across our fear conditioning experiments.

Figure 4. Computational modeling of the fear conditioning data supports a role for LH GABA neurons in learning about the shock-predictive cue after experience with rewards.

We tested whether a computational model of reinforcement learning could predict our specific pattern of data seen in Figures 2 and 3. We modeled LH GABA neurons blocking the update of learning attributed to the shock-predictive cue after experience with rewards. We used the data from Exp 1 (A) to set the parameters for our remaining experiments. This model predicted the differences seen in context learning between Exp 2 (B) and Exp 3 (C). The model can account for the low levels of context fear seen in Exp 2 in two ways: as reward learning also occurred in the fear context, the context could either receive little attention as the rats have received a lot of exposure to it (model 1), or the context acquires appetitive value (model 2). The higher levels of contextual fear occur in Exp 3 as learning to the cue is blocked by LH GABA neuronal inhibition, and is relayed instead to the background contextual cues (present when LH GABA neurons are not inhibited, and unique to the fear conditioning procedures). The model supports the validity of our context-specific design in Exp 3, as it suggests generalization between the fear and reward contexts was low, and that was unlikely the reason for LH GABA involvement in fear conditioning after reward learning.

In particular, one prominent feature of our data from Exp 3 was low levels of learning about the shock-predictive cue, but a higher level of learning about the contextual cues present during fear conditioning. Using the parameters set by our control experiment with naïve rats (i.e. Exp 1; Figure 4, A), we found that when we modeled LH GABA neuronal inhibition as blocking the learning update attributed to the tone in Exp 3, it produced lower levels of learning about the tone, and higher levels of learning about the context (Figure 4, C). This happens because inhibition of LH GABA neurons during the cue blocks learning about the cue, so the learning that is produced by the shock is attributed to the contextual cues, which are present outside inhibition of LH GABA neurons (unlike the cue).

In Exp 2, which tests a role for LH GABA neurons in learning about the shock-paired cue after reward learning in the same context, we see low levels of freezing to the cue and unchanged levels of context fear. The model predicts this in two ways: either the context acquires a low learning rate (α; attention) across time during reward learning, or the context develops appetitive value across reward learning (that opposes predicting shock; Figure 4, B). In either case, this results in the context gaining aversive value during fear conditioning more slowly, and so it does not accrue much learning across the few cue-shock pairings rats receive. This validated our reasoning for performing Exp 3 using a context-specific design to ensure that LH GABA neurons were not recruited for fear learning because they were necessary to bring the appetitive memory to mind (i.e. the context-food association). Further, it added validity to our design in Exp 3 segregating the reward and fear learning episodes, as attributing appetitive value to the context could not explain our data from Exp 3. Thus, the modeling provided support for the notion that inhibition of LH GABA neurons blocks the learning that accrues to the shock-predictive cue after rats have had experience with rewards.
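The logic of this account, in which a blocked cue update leaves more of the shock-driven prediction error to the context, can be illustrated with a much simpler model than the one we fit. The sketch below is not the multi-step TD(λ) with Mackintosh attention described above; it is a trial-level delta rule (Rescorla-Wagner style) with a shared prediction error, and every parameter value in it (learning rates, shock magnitude) is a hypothetical choice made for illustration. Only the 70% block and the three cue-shock pairings are taken from the text.

```python
def simulate(n_trials=3, alpha_cue=0.3, alpha_ctx=0.1, block=0.0, shock=1.0):
    """Trial-level delta rule with a cue and a context sharing one error term.

    block: fraction of the cue's weight update removed by inhibition
           (0.7 corresponds to the 70% block described in the text).
    All other parameter values are illustrative assumptions.
    """
    v_cue, v_ctx = 0.0, 0.0
    for _ in range(n_trials):
        # Shared prediction error: shock minus the summed prediction.
        delta = shock - (v_cue + v_ctx)
        # Inhibition scales down only the cue's update.
        v_cue += alpha_cue * (1.0 - block) * delta
        v_ctx += alpha_ctx * delta
    return v_cue, v_ctx


# Exp 3-like case: context is novel to reward learning and competes freely.
control_cue, control_ctx = simulate(block=0.0)
blocked_cue, blocked_ctx = simulate(block=0.7)

# Exp 2-like case (model 1): context has a low learning rate after
# extensive exposure during reward learning.
lowattn_cue, lowattn_ctx = simulate(block=0.7, alpha_ctx=0.01)

print(control_cue, control_ctx)
print(blocked_cue, blocked_ctx)
print(lowattn_cue, lowattn_ctx)
```

In this simplified setting, blocking the cue update lowers the cue weight and raises the context weight relative to control, whereas a context with a low learning rate accrues little value even when the cue update is blocked. This mirrors the qualitative pattern described for Exp 3 and Exp 2 (model 1), without reproducing the fitted model.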

LH GABAergic neurons oppose learning of cue-cue associations, regardless of prior experience.

The previous experiments show that LH GABAergic neurons become necessary for learning about fear following experience with reward learning. We next asked how reward learning experience affects the role of LH GABAergic neurons in learning about other experiences that do not involve fear. To determine this, we inhibited LH GABA neurons during second-order conditioning, a procedure in which rats first learn to associate a cue with reward and then learn to associate another cue with the reward-paired cue. We inhibited LH GABAergic neurons during the second phase of training to test whether LH GABA neurons would be necessary for associating the two cues, in the absence of reward.

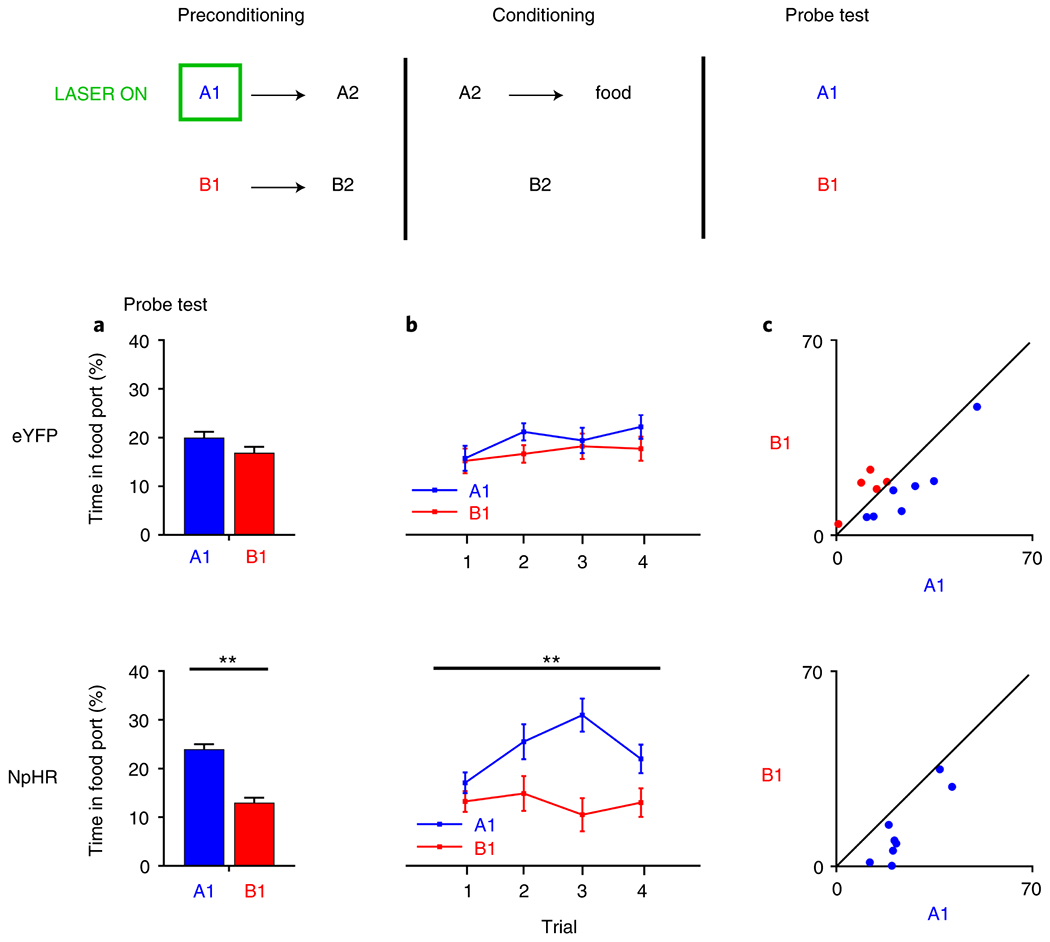

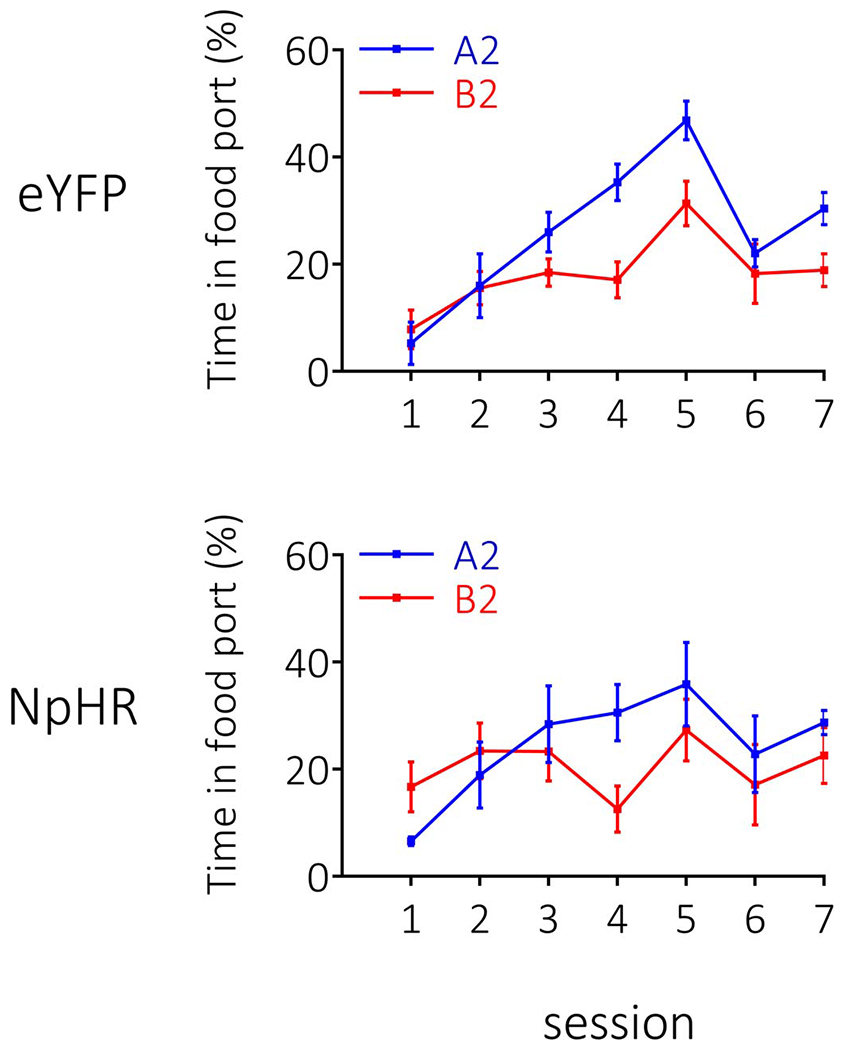

Prior to training, all rats underwent surgery to infuse virus and implant fiber optics targeting the LH. We infused a Cre-dependent AAV carrying halorhodopsin (NpHR experimental groups; n = 8) or the control AAV vector (eYFP control groups; n = 12) into the LH of GAD-Cre rats. Behavioral procedures began with 7 days of conditioning. On each day rats were presented with 6 pairings of A2 and the sucrose pellets, and 6 presentations of B2 without consequence (here, A2 and B2 are either tone or siren, counterbalanced across rats21–23). During this time, all rats acquired the conditioned response to enter the food port when A2 was presented, and not when B2 was presented, with no differences in the rate or ultimate level of learning between groups (see Extended Data 3; stimulus: F1,18=12.383, p=0.002; stimulus x group: F1,18=1.151, p=0.298; session: F6,108=10.799, p<0.001; session x group: F6,108=1.008, p=0.424; stimulus x session: F6,108=5.440, p<0.001; stimulus x session x group: F6,108=0.233, p=0.965).

Following conditioning, rats began second-order conditioning with the A1→A2 and B1→B2 pairs. Here, A1 and B1 were either clicker or white noise, counterbalanced across rats21–24. Specifically, on 12 trials, A1 was presented and followed 1s later by A2, and on the other 12 trials, B1 was followed 1s later by B2. The 1s gap between the cues was used to mimic our previous studies with cues and food10, and cues and shock, originally adopted to allow us to inhibit LH GABA neurons across just the antecedent cue and not the delivery of the outcome. To accomplish this, light (532nm, 16mW) was delivered during A1, beginning 500ms before A1 onset, and ending 500ms after A1 offset10.

Across this stage, we found that inhibition of LH GABA neurons surprisingly facilitated the learning of the A1→A2 association. That is, rats without LH GABA activity on A1→A2 trials showed a greater increase in responding directed towards A1 relative to B1 (Figure 5, left, orange). Importantly, this difference was maintained in a probe test session where we presented A1 and B1 without delivery of light (Figure 5, right, gray). These observations were confirmed by statistical analyses with a three-factor ANOVA on responding to A1 and B1 across these sessions revealing a significant main effect of stimulus (F1,18=10.576, p=0.004), and a significant interaction by group (stimulus x group: F1,18=4.657, p=0.045), with no difference in the magnitude of this effect across sessions (stimulus x session: F2,36=0.368, p=0.695). Follow-up analyses revealed the significant stimulus x group interaction was due to a significant difference between responding to A1 and B1 in the NpHR group (F1,18=12.195, p=0.003) that was not present in the eYFP group (F1,18=0.748, p=0.398), likely because the insertion of the 1s gap between the cue pairs reduced their association25,26, which allowed us to detect enhanced learning in our NpHR group. Thus, inhibition of LH GABA activity facilitated the development of the A1→A2 association during second-order conditioning, in contrast to our previous findings where inhibition of LH GABA neurons impaired the development of associations between cues and food or shock.

Figure 5. LH GABAergic neurons oppose learning of cue-cue associations after reward learning.

Responding is represented as the time spent in the food port (%; ±SEM) during stimulus presentation when rats were learning the second-order contingencies (orange box; left panels early trials, right panels late trials), and during the probe test (gray box; left panels index group means, right panels indicate individual rat responses). Scatterplots represent rats’ individual responses, where equivalent rates of responding to the cues mean responses should congregate on the diagonal. Rats first learnt to associate A2 with food, and to differentiate that from B2, which did not predict food. Then, during second-order learning, rats were presented with A1→A2 and B1→B2 pairs. During this time, light (532nm, 16mW, green rectangle) was delivered into the brain during A1, resulting in inhibition of LH GABA neurons in our NpHR group (n=8 rats) but not our eYFP group (n=12 rats). Finally, we conducted a probe test, where we presented A1 and B1 alone and without reward. Across both learning and the probe test, rats in our NpHR group showed an enhancement of responding to A1 relative to B1 (stimulus: F1,18=10.576, p=0.004; stimulus x group: F1,18=4.657, p=0.045), which was maintained in the probe test without LH GABA neuronal inhibition (stimulus x session: F2,36=0.368, p=0.695). Follow-up analyses showed a significant difference in responding to A1 and B1 in our NpHR group (F1,18=12.195, p=0.003) that was not present in the eYFP group (F1,18=0.748, p=0.398). This demonstrated that inhibition of LH GABA neuronal activity enhanced learning about cue-cue relationships after experience with reward learning. Data were analyzed with a repeated-measures ANOVA, with simple-main effects following a significant interaction, not necessitating control for multiple comparisons.

To further explore the unexpected result in second-order conditioning, we next inhibited LH GABA neurons during sensory preconditioning. This procedure is essentially identical to the second-order conditioning procedure, except that pairing of A1→A2 and B1→B2 occurs before any appetitive conditioning has occurred (with the exception of magazine training, see discussion). Appetitive conditioning only happens in later phases of the task, when A2 is paired with food; the conditioning only serves to allow us to test what rats learned in the first phase about the cue pairs by presenting A1 and B1 alone and without reward in a probe test after conditioning. If rats have learnt the association between the cue pairs in the first phase, they will increase appetitive responding directed towards A1 relative to B1, as A1 is associated with A2, which predicts reward. Importantly, this procedure allows us to isolate learning about the cue-cue relationships in rats that have not had experience with cues and rewards.

Prior to training, all rats underwent surgery to infuse virus and implant fiber optics targeting the LH. We infused a Cre-dependent AAV carrying halorhodopsin (NpHR experimental groups; n = 8) or the control AAV vector (eYFP control groups; n = 12) into the LH of GAD-Cre rats. Four weeks later, all rats were food restricted and began behavioral procedures. Rats were first presented with the neutral cue pairs. Accordingly, on 12 trials A1 was presented and followed 1s later by A2, and on the other 12 trials, B1 was presented and followed 1s later by B2. Light (532nm, 16mW) was delivered during A1, beginning 500ms before A1 onset and ending 500ms after A1 offset10. Then, conditioning commenced and continued for 6 days. On each day of conditioning, rats were presented with 6 trials of A2 paired with food reward, and 6 trials where B2 was presented without consequence. During conditioning, all rats acquired the conditioned response to enter the food port when A2 was presented, and not when B2 was presented, with no difference in the rate or ultimate levels of conditioning between groups (see Extended Data 4; stimulus: F1,18=3.553, p=0.076; stimulus x session: F2,36=8.281, p=0.001; stimulus × group: F1,18=0.13, p=0.911), with follow-up comparisons, granted by the significant stimulus x session interaction, showing an increase in learning about A2 across sessions (F2,17=8.860, p=0.002), that was not present with relation to B2 (F2,17=1.953, p=0.172).

Following conditioning, we gave rats a probe test where A1 and B1 were presented without their associates. We found that all rats showed an increase in responding to A1 relative to B1, but rats in the NpHR group showed a significantly greater difference in responding (Figure 6). This was confirmed with a cue x group ANOVA, showing a significant main effect of cue (F1,18=15.438, p=0.001), as well as a significant cue x group interaction (F1,18=5.691, p=0.028); simple main effects analyses demonstrated this was due to a significant difference between responding to A1 and B1 in the NpHR group (F1,18=16.615, p=0.001) that was not present in the eYFP control group (F1,18=1.489, p=0.238). Thus, inhibition of LH GABA neurons while naïve rats are learning about neutral cue-cue relationships significantly enhanced learning about the cues. This suggests that a general function of LH GABA neurons may be to oppose the development of associations between cues, independent of the changes in fear learning induced by reward experience.

Figure 6. LH GABAergic neurons oppose learning of cue-cue associations in naïve rats.

Responding is represented as time spent in the food port (%; ±SEM) during stimulus presentation in the probe test (gray box). Left panels index group mean responses, middle panels show responding by trial, and the right panel shows individual rats’ responses. Rats first learnt novel cue pairs, A1→A2 and B1→B2, where we delivered light into the brain during A1, inhibiting LH GABAergic neurons in our NpHR group (n=8 rats) and not our eYFP group (n=12 rats). Then, A2 was paired with food and B2 was presented without consequence. Pairing A2 with reward allowed us to test what rats had learnt during learning of A1→A2 and B1→B2 in the first phase. (A) shows mean levels of responding by group for all rats, demonstrating more appetitive responding towards A1, showing they had learnt the A1→A2 association and so inferred A1 predicts food after its associate A2 was paired with reward. However, rats without LH GABA neuronal activity in the first phase showed an enhancement of this effect, demonstrating that inhibition of LH GABA neurons strengthened the A1→A2 association. This was confirmed with statistical analyses, showing a main effect of stimulus (F1,18=15.438, p=0.001), as well as a significant cue x group interaction (F1,18=5.691, p=0.028), where simple main effects analyses afforded by the interaction demonstrated this was due to a significant difference between responding to A1 and B1 in the NpHR group (F1,18=16.615, p=0.001) that was not present in the eYFP control group (F1,18=1.489, p=0.238). (B) shows the difference between A1 and B1 across trials in the analyses represented in A, and (C) shows rats’ individual responses to A1 and B1 in the probe test; to the extent that responding to these cues is equivalent, points should congregate around the diagonal. Data were analyzed with a repeated-measures ANOVA, with simple-main effects following a significant interaction, not necessitating control for multiple comparisons.

LH GABA neurons are necessary for the downregulation of processing of explicitly irrelevant cues.

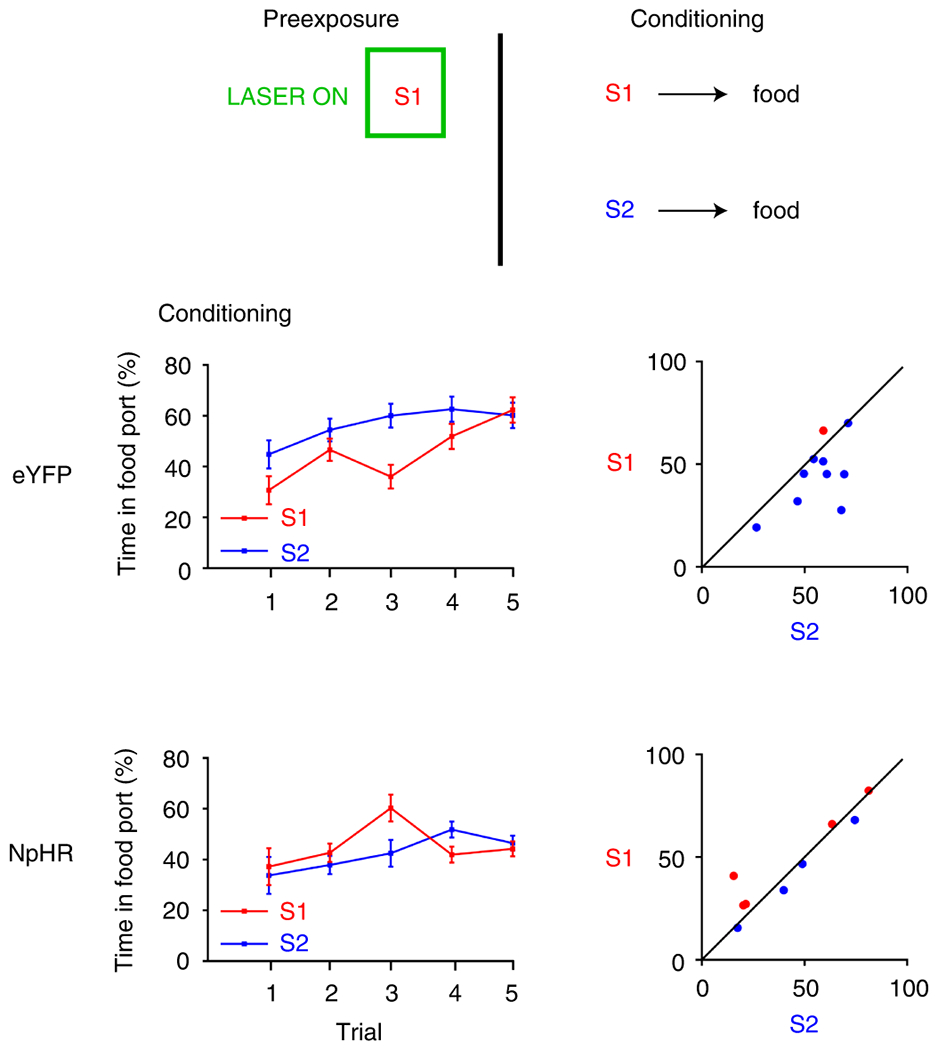

If LH GABA neurons normally oppose learning about information that does not directly produce something motivationally-significant, then they might also contribute to the normal decline in processing of cues that are explicitly irrelevant to reward prediction. To test this, we performed one last experiment using latent inhibition27–29. We first presented rats with an auditory stimulus, S1, repeatedly over three pre-exposure sessions, inhibiting LH GABA neurons during presentation of S1. Then, rats received conditioning where S1 was paired with food. We compared the amount of learning about the pre-exposed S1 stimulus with that seen to a different, novel cue, S2, that was also paired with food. Our eYFP group showed robust latent inhibition, characterized by less learning about S1 relative to S2 (Figure 7). In contrast, our NpHR group failed to demonstrate this effect, showing equivalent amounts of conditioning about S1 and S2. To formally analyze this effect, we conducted analyses on the first trial (before the reward had been presented, which reflects unconditioned responding) and then all subsequent presentations of each stimulus. There were no differences in unconditioned responding to S1 and S2 on the first trial (cue: F1,17=1.436, p=0.247; cue x group: F1,17=0.267, p=0.612; group: F1,17=0.007, p=0.933). During the subsequent trials, a repeated-measures ANOVA showed no main effect of cue (F1,17=2.246, p=0.152), but a significant interaction between cue and group (F1,17=6.333, p=0.022), due to a significant difference between responding for S1 and S2 in the eYFP group (F1,17=8.508, p=0.010) not present in the NpHR group (F1,17=0.492, p=0.492). There was no significant between-group difference in responding to S1 (F1,17=0.001, p=0.980), or S2 (F1,17=2.263, p=0.151). Finally, there was no between-group difference in overall levels of responding during the cues (F1,17=0.667, p=0.425).
This result suggests that activity in LH GABA neurons is necessary for the normal decline in processing of the reward-irrelevant, pre-exposed stimulus, here S1.

Figure 7. LH GABAergic neurons are necessary for the downregulation of processing of explicitly irrelevant cues.

Responding is represented as the time spent in the food port (%; ±SEM) during stimulus presentation when S1 and S2 were paired with food reward. Plots to the left indicate group means by trial, while plots on the right show individual rats’ responses. To the extent that responding to the cues is equivalent, points should congregate on the diagonal. Rats first received pre-exposure to S1. Here, we optogenetically inhibited LH GABA neurons during S1 in our NpHR group (n=9 rats). Then, rats received S1 and S2 paired individually with food. During this session, our control group (eYFP; n=10 rats), showed slower learning about S1 relative to novel S2 (top), demonstrating that our control group downregulated processing of S1 during pre-exposure. However, rats in our NpHR group did not show this effect, demonstrating equivalent rates of learning about S1 and S2 across conditioning (bottom). This shows that LH GABA neurons are necessary to downregulate processing of the irrelevant S1, consistent with the idea that LH GABA neurons usually oppose learning and processing of information that does not predict something motivationally significant. This was confirmed with statistical analyses. A repeated-measures ANOVA showed no main effect of cue (F1,17=2.246, p=0.152), but a significant interaction between cue and group (F1,17=6.333, p=0.022), due to a significant difference between responding for S1 and S2 in the eYFP group (F1,17=8.508, p=0.010) that was not present in the NpHR group (F1,17=0.492, p=0.492). There was no significant between-group difference in responding to S1 (F1,17=0.001, p=0.980), or S2 (F1,17=2.263, p=0.151). Finally, there was no between-group difference in overall levels of responding during the cues (F1,17=0.667, p=0.425). Data were analyzed with a repeated-measures ANOVA, with simple-main effects following a significant interaction, not necessitating control for multiple comparisons.

The finding that inhibition of LH GABA neurons reduces latent inhibition is consistent with the proposal that LH GABA neurons oppose learning that does not lead to reward, here the downregulation of processing of an explicitly irrelevant cue. This result also rules out the possibility that facilitated second-order conditioning and sensory preconditioning might reflect reduced generalization or better discrimination between cues as the result of LH GABA inhibition. If LH GABA neurons were important for generalizing learning across cues, then inhibiting them should have enhanced the difference between S1 and S2 and produced better, not worse, latent inhibition.

Discussion

Our prior work has shown that LH GABAergic neurons are necessary for rats to associate cues with rewards10. In the present experiments, we first showed that optogenetic inhibition of LH GABAergic neurons during a cue, just prior to delivery of shock, did not have a similar impact on the acquisition of conditioned fear. This is as expected, given the overwhelming evidence that the LH is involved in feeding and reward processing9–11,13,30. However, in our second experiment, the very same manipulation in rats that had prior experience with reward learning significantly attenuated learning. This demonstrates that LH GABAergic neurons become involved in encoding fear memories following experience with rewards.

We then tested the conditions of LH involvement in aversive learning. Using a context-specific design that reduced generalization between the appetitive and aversive settings, we demonstrated that LH GABAergic neurons were only necessary for fear learning in rats that had prior experience with the reward contingency, and not those that experienced all other aspects of the experimental process. This confirms that LH GABAergic neurons become necessary to encode aversive memories following reward learning, which is a phenomenon dependent on LH GABAergic function10.

We next modelled these data using TDRL14,15, which provided support that the pattern of results we saw was similar to that predicted by models of reinforcement learning. Here, we modelled inhibition of LH GABA neurons blocking the associative (or learning) weights attributed to the shock-predictive cue across learning. We view this model as consistent with a role for LH GABA neurons in accumulating and storing the predictive status of the shock-paired stimulus, similarly to our previous work implicating these neurons in accumulation and storage of cue-food associations10. The parameters that produced results closest to the observed data treated LH GABA neuronal inhibition as blocking this weight by 70%, consistent with the fact that we still observed some learning accruing to the shock-predictive cue when LH GABA neurons were inhibited. This amount of blocking is also supported by ex vivo optogenetic studies from our lab, which have shown that inhibition of cell bodies using halorhodopsin blocks neuronal activity by about 70% in our hands19,20. However, it is also possible that the remaining learning seen to the shock-predictive cue reflects a role for LH GABA neurons as an arbitrator of learning, rather than the nucleus necessary for fear learning per se. That is, perhaps LH GABA neurons influence the development of fear associations after reward learning, rather than being the site for plasticity, which is likely to involve amygdala circuits31–33. Indeed, an exciting direction for future research would be to investigate how LH GABA neurons interact with the known fear circuit to influence learning about shock-predictive cues.

We also examined the extent to which LH GABAergic neurons could be recruited for learning about other types of information following experience with reward learning. Specifically, we asked whether these neurons could become necessary for learning about cue-cue relationships. We found that this was not the case. Instead, inhibition of LH GABAergic neurons as rats were learning about cue-cue relationships, after they had had experiences with reward learning (i.e. second-order conditioning34,35), enhanced the association between the cues. This showed that a function of these neurons is to oppose learning about cue-cue relationships. Thus, when we remove the influence of these neurons on the circuit, it allows other areas of the brain to “overlearn” the cue-cue association, resulting in enhancement of the cue-cue association.

We next asked if the involvement of LH GABAergic neurons in opposing the development of cue-cue relationships was due to a prior history of reward learning, or if this was a general feature of this neuronal population. Accordingly, we inhibited LH GABAergic neurons as rats were learning to associate cues together prior to any experience with reward learning, using the sensory preconditioning procedure35,36. Here, we found that inhibition of LH GABAergic neurons again enhanced the development of the cue-cue association. This showed that a general function of these neurons is to oppose learning about cue-cue relationships and is not dependent on prior experience, in contrast to our current findings with fear learning.

It is also interesting to note that these two procedures assessing cue-cue learning, second-order conditioning and sensory preconditioning, are generally thought of as assessing two distinct learning phenomena22,35. That is, second-order conditioning is thought to involve the backpropagation of reward value from the reward-predictive cue (in our hands, A2) to the neutral cue (A1)35. In this sense it involves learning about something that is motivationally significant. On the other hand, sensory preconditioning involves the chaining of information together (e.g. A1 → A2 → food), in a manner that is independent of value backpropagation22,35. That inhibition of LH GABA neurons produces an enhancement of learning about cue-cue relationships in both procedures suggests that these neurons are sensitive to how information relates to directly predicting something relevant to current state; if a cue is not currently predicting food (or something else relevant to the animal’s state), LH GABA neurons will function to oppose learning involving that stimulus. In this sense, LH GABA neurons seem to transcend differences in associative structure in favor of their direct relevance for predicting unconditioned stimuli.

We found additional support for this hypothesis by testing whether LH GABA neurons would be involved in downregulating attention to an explicitly irrelevant cue. Specifically, we tested whether inhibition of LH GABA neurons during pre-exposure of a cue, S1, would impact the ability of rats to reduce processing of that stimulus (i.e. the latent inhibition effect28,29). Consistent with this idea, rats without LH GABA function during pre-exposure of S1 showed equivalent amounts of learning about S1 when it was later paired with food, when compared with a novel cue, S2, that was experienced for the first time and paired with reward. This provided additional support for the idea that LH GABA neurons oppose learning about cues that are not directly relevant for predicting motivationally-significant events.

Overall, these data are important for two reasons. Firstly, they demonstrate that a neuronal population overwhelmingly implicated in learning about rewards is recruited to learn about aversive events, simply by giving subjects prior experience learning about rewards. This is important because it suggests we need to look outside traditional fear circuits when searching for the fear engram. Secondly, these data show that this effect is selective; prior reward learning does not recruit LH GABAergic neurons to become necessary for all associative learning. Instead, these neurons appear to oppose development of cue-cue associations in a manner that is not dependent on prior experience. In addition to demonstrating selectivity of the effect of reward learning, this second point is important in its own right. To our knowledge, this is the first time one region has been implicated in opposing the development of cue-cue relationships in favor of learning about motivationally-significant events, suggesting the LH is a powerful arbitrator between learning processes in the brain. This is important because changes in this balance of learning have been heavily implicated in psychological disorders, such as addiction37,38 and schizophrenia39,40.

But there is more work to do. Would LH GABA neurons show an increase in activity to shock-paired cues after reward learning and not before? And we do not currently know the boundary parameters around LH GABA involvement in fear learning. For example, in our fear conditioning experiments, we did not expose our “naïve” groups to food rewards for fear of them learning about the food. Similarly, rats in our sensory preconditioning procedure received exposure to food pellets in the form of magazine training, which could have produced learning prior to learning the cue-cue associations. Understanding the complexities of these data would be facilitated by formalizing these observations into a coherent computational model that can explain the dissociations we see here, beyond the fear conditioning results. This may require looking outside standard reinforcement learning frameworks. This will not only determine the mechanism by which LH GABA neurons are primed to learn about fear, but also tell us how this relates to the human condition: what is the balance of experience that shifts learning towards one system over another?

Taken together, these data paint a complex picture of a neuronal population that is specialized to learn about rewards, but can become necessary for learning about other important outcomes like shock, while generally functioning to oppose learning that is not directly related to predicting something motivationally significant. From a more general perspective, these findings suggest caution in interpreting data that suggests learning is restricted to particular neural circuits in naïve subjects. That is, these findings suggest that prior work investigating the specialization of learning in particular neural circuits may be oversimplified, providing the impetus to explore how the involvement of other circuits in learning may change with prior experience.

Online methods

Subjects:

One hundred and six male and female Long-Evans transgenic rats carrying a GAD1-dependent Cre expressing system (10; NIDA animals breeding facility) were used in these experiments. Rats were aged 2-5 months prior to surgical procedures. Rats were randomly allocated to groups and matched for age and sex. Rats were maintained on a 12-hour light-dark cycle, where all behavioral experiments took place during the light cycle. Rats had ad libitum access to food and water unless undergoing the behavioral experiment (with the exception of Experiment 1), during which they received sufficient chow to maintain them at ~85% of their free-feeding body weight. All experimental procedures were conducted in accordance with the Institutional Animal Care and Use Committee of the US National Institutes of Health guidelines.

Surgical Procedures:

Surgical procedures have been described elsewhere10,23. Briefly, rats received bilateral infusions of 1μL AAV5-EF1α-DIO-NpHR-eYFP (n=48) or AAV5-EF1α-DIO-eYFP (n=58) into the LH at the following coordinates relative to bregma: AP: −2.4; ML: ±3.5; DV: −8.4 (female) and −9.0 (male) at an angle of 10° pointed towards the midline. Virus was obtained from the Vector Core at University of North Carolina at Chapel Hill (UNC Vector Core). During surgery, optic fibers were implanted bilaterally (200μm diameter, Thorlabs) at the following coordinates relative to bregma: AP: −2.4; ML: ±3.5; DV: −7.9 (female) and −8.5 (male) at an angle of 10° pointed towards the midline.

Apparatus:

Training was conducted in 8 standard behavioral chambers (Coulbourn Instruments; Allentown, PA), which were individually housed in light- and sound-attenuating boxes (Jim Garmon, JHU Psychology Machine Shop). Chambers for appetitive experiments were equipped with a pellet dispenser that delivered 45-mg pellets into a recessed magazine when activated. Access to the magazine was detected by means of infrared detectors mounted across the opening of the recess. A computer equipped with GS3 software (Coulbourn Instruments, Allentown, PA) controlled the equipment and recorded the responses. Raw data was output and processed in Matlab (Mathworks, Natick, MA) to extract relevant response measures. The chambers contained a speaker connected to white noise and tone generators and a relay that delivered a 5 kHz clicker stimulus, as well as a house light that could illuminate the chambers when programmed. Chambers used for fear experiments lacked the food port magazine, but were otherwise similar. Data from fear conditioning procedures were hand scored by two experimenters blind to the conditions of the experiment. Scoring between these experimenters was generally very similar, falling within 90% of one another. In Experiment 3, we further distinguished the aversive and appetitive chambers by adding Perspex flooring, scents, and sandpaper walls to the appetitive chambers to create “context A”. Here, the aversive chambers were left bare, to create a distinct “context B” (Figure 3).

Behavioral procedures:

All conditioning trials consisted of 10-s cues as described below. Training procedures used a total of 7 different stimuli, 5 of which were drawn from stock equipment available from Coulbourn (tone, siren, clicker, white noise, flashing house light), and another two produced from an Arduino (warp and chime). Assignment of these stimuli to the cues depicted in Figures 5 and 6 was as follows: A1 and B1 were either click or white noise, A2 and B2 were either tone or siren21–23. All stimuli represented in Figures 5 and 6 were counterbalanced across rats. Assignment of stimuli to the cues depicted in Figure 7 was as follows: S1 and S2 were either warp or chime, produced from the Arduino. These stimuli were counterbalanced across rats (e.g. half of the rats received warp as S1, and the other half received chime as S1).

Experiment 1:

Naïve rats were kept on ad lib food for the duration of the experiment. Rats were handled for three days prior to commencing behavioral procedures. Day 1 of training consisted of placing rats in the aversive chambers for 45 min to habituate them to the contextual cues. On Day 2, rats underwent fear conditioning, where a 10s 77dB tone predicted arrival of a 0.5mA shock 1s later. Rats received three such pairings, separated by an inter-trial interval (ITI) averaging 7 min. Light was delivered into the brain continuously (532nm, 16mW, Shanghai Laser & Optics Century Co., Ltd), beginning 500ms prior to tone onset and ending 500ms after tone offset10. As in our prior food reward experiment, we again left a 1s gap between the cue and the outcome (here, shock) to account for any rebound that could occur after we release inhibition of LH GABA neurons 500ms after cue offset, although there are now published data to show that halorhodopsin does not produce a rebound after 10s of inhibition41, and we have shown using similar parameters that inhibition of LH GABA neurons ex vivo does not produce a rebound10. On Day 3, all rats were again placed in the aversive chambers for 45 min to extinguish any residual contextual fear. On Day 4, rats received a test session where they were presented with the tone 5 times without presentation of shock. Finally, on Day 5, rats received another test session where they were again presented with 5 presentations of the tone without shock.
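The timing of a single conditioning trial can be laid out explicitly. The following is a minimal sketch, assuming times are expressed in seconds relative to tone onset; the class and function names are hypothetical and are not part of the GS3 control software. The default values are those stated above (10 s tone, light from 500 ms before tone onset to 500 ms after tone offset, shock 1 s after tone offset).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrialTimeline:
    """Event times in seconds, relative to tone onset (hypothetical helper)."""
    light_on: float
    tone_on: float
    tone_off: float
    light_off: float
    shock_on: float


def fear_trial_timeline(tone_dur=10.0, light_pad=0.5, gap=1.0):
    # Defaults follow the text: 10 s tone, light padded by 500 ms on either
    # side of the tone, and shock arriving 1 s after tone offset.
    tone_on = 0.0
    tone_off = tone_on + tone_dur
    return TrialTimeline(
        light_on=tone_on - light_pad,
        tone_on=tone_on,
        tone_off=tone_off,
        light_off=tone_off + light_pad,
        shock_on=tone_off + gap,
    )


trial = fear_trial_timeline()
light_duration = trial.light_off - trial.light_on   # total time the light is on
light_free_gap = trial.shock_on - trial.light_off   # light-free interval before shock
print(light_duration, light_free_gap)
```

With these values the light is on for 11 s in total and is switched off 500 ms before shock onset, so the shock itself is delivered without light.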

Experiment 2:

Rats first received reward training for 7 days, with one auditory cue predicting reward, and another presented without consequence. On each day, rats received 6 presentations of each cue, separated by a 5-min ITI. Rats were maintained on food restriction following reward training. Then, they underwent fear conditioning in the same manner described above, with the exception that they received a flashing light stimulus paired with a 0.35mA shock (factors controlled for in the following experiment).

Experiment 3:

All rats were first food restricted. Care was taken to deliver their daily food chow in a way that would not allow them to associate any specific event with arrival of food chow, so as not to produce learning about food in our “naïve” groups. Here, rats in the “learners” group first received pairings of a light with food in “context A” across 5 days. In each conditioning session, rats received 12 pairings separated by a 4-min ITI. Rats in the “naïve” group received the same procedures, with the exception that the light stimulus was presented without consequence. During this training, rats were also pre-exposed to context B to facilitate context discrimination. Their pre-exposure was counterbalanced such that one rat might receive exposure in context B in the morning, and reward training in context A in the afternoon, and then the following day this would be switched. Following conditioning and pre-exposure, rats underwent fear conditioning in context B. The procedures for fear conditioning were the same as in Experiment 1, with the exception that a 0.35mA shock followed the 10s 77dB tone conditioned stimulus.

Experiment 4: Second-order conditioning.

These procedures have been described elsewhere22. Briefly, rats first received conditioning across 7 days, where A2 was paired with delivery of two 45-mg sucrose pellets (5TUT; Test Diet, MO), and B2 was presented without consequence. On each day, rats received a total of 12 trials, separated by a 5-min ITI, half of which were presentations of the A2 stimulus and the other half presentations of the B2 stimulus. Cues were presented in an inter-mixed and counterbalanced fashion. Following conditioning, rats received 2 days of second-order conditioning. On each day, the rats received 6 presentations of A1 → A2, and 6 presentations of B1 → B2, where a 10-s presentation of either A1 (or B1) was followed by a 10s presentation of A2 (or B2) separated by a 1s delay. During A1 presentation, light was delivered into the LH for 11 s beginning 500 ms before onset of the A1 stimulus, as in our previous study with cues and rewards10. Finally, rats received two probe tests in which each of the critical test cues (A1 and B1) was presented 6 times alone and without reward, again in an intermixed and counterbalanced fashion.

Experiment 5: Sensory preconditioning:

These procedures have been described elsewhere21–23. Briefly, training began with 2 days of pairings of the neutral stimuli A1-A2 and B1-B2. On each day, the rats received 6 presentations of A1 → A2, and 6 presentations of B1 → B2, where a 10-s presentation of either A1 (or B1) was followed by a 10s presentation of A2 (or B2) separated by a 1s delay. During A1 presentation, light was delivered into the LH for 11 s beginning 500 ms before onset of the A1 stimulus, as in our previous study with cues and rewards10. Following pairings of the neutral cues, rats underwent 6 days of conditioning in which A2 was presented 6 times each day followed immediately by delivery of two 45-mg sucrose pellets (5TUT; Test Diet, MO). During these sessions, B2 was also presented but without delivery of the sucrose pellets. Finally, rats received two probe tests in which each of the critical test cues (A1 and B1) was presented 6 times alone and without reward.

Experiment 6: Latent Inhibition:

Training began with 3 days of pre-exposure to S1. On each day, rats received 12 presentations of S1. During S1 presentation, light (532nm, 16-18mW output, Shanghai Laser & Optics Century Co., Ltd) was delivered into the LH for 11 s beginning 500 ms before onset of the S1 stimulus, as in our previous experiment with cues and rewards21. Following pre-exposure to the S1 stimulus, rats received the single critical conditioning session in which S1 and another novel stimulus S2 were presented 6 times each followed immediately by delivery of two 45-mg sucrose pellets (5TUT; Test Diet, MO).

Histology and Immunohistochemistry:

All rats were euthanized with an overdose of carbon dioxide and perfused with phosphate buffered saline (PBS) followed by 4% Paraformaldehyde (Sigma-Aldrich Inc, NJ). Fixed brains were cut in 40μm sections to examine fiber tip position under a fluorescence microscope (Olympus Microscopy, Japan). Images of these brain slices were acquired by a fluorescence Virtual Slide microscope (Olympus America, NY) and later analyzed in Adobe Photoshop.

Statistical Analyses:

All statistics were conducted using the SPSS 25 statistics package (IBM), using data processed in MATLAB and Excel. Generally, analyses were conducted using a mixed-design repeated-measures analysis of variance (ANOVA). All analyses of simple main effects were planned and orthogonal and therefore did not necessitate controlling for multiple comparisons. For effects in the expected direction, one-tailed tests were used to assess significance of the expected interaction42. Data distribution was assumed to be normal but homoscedasticity was not formally tested. No statistical methods were used to pre-determine sample sizes but our sample sizes are similar to those used in our previous publications9,17–19. Data collection and analysis were not performed blind to the experimental conditions; however, at the time of scoring of behavior or histological assessment of virus and fiber placement, which is a subjective measure, the experimenter was blind to the conditions. Statistical analyses on Pavlovian responses in Experiments 4 and 5 were conducted on the duration of time that rats spent in the magazine during the last 5s of cue presentation, as done previously43. Probe test data from Experiments 1–3 were analyzed across the first 3 trials of both test sessions. Analyses of the Experiment 4 and 5 probe tests were conducted on the first four trials of each cue across all test sessions. Analyses on data from the critical conditioning session in Experiment 6 were conducted on all trials following the first presentation of each stimulus, because on the first trial rats were unaware of the consequences of the cue and, therefore, responding to the cues was unconditioned and did not reflect learning. Where appropriate (i.e. where statistical tests involve within-subject comparisons), standard errors of the mean (SEM) were calculated using the Loftus procedure44,45, which takes into account the within-subject variability in the data.
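For readers less familiar with the mixed design, the cue x group interaction in a 2 (cue, within-subject) x 2 (group, between-subject) ANOVA can be illustrated with a short Python sketch. It relies on a standard identity: in this design the interaction F equals the squared pooled-variance t statistic comparing the two groups on each rat's difference score (e.g. A1 minus B1). This is not the SPSS procedure used for the reported analyses; the function name and the example numbers are hypothetical, and no p-value is computed.

```python
from statistics import mean, variance


def interaction_f(diff_group1, diff_group2):
    """Cue x group interaction F for a 2x2 mixed design.

    Each argument is a list of within-subject difference scores
    (one per rat), e.g. responding to A1 minus responding to B1.
    Returns (F, (df_numerator, df_denominator)).
    """
    n1, n2 = len(diff_group1), len(diff_group2)
    df_error = n1 + n2 - 2
    # Pooled variance of the difference scores across the two groups.
    pooled = ((n1 - 1) * variance(diff_group1)
              + (n2 - 1) * variance(diff_group2)) / df_error
    t = (mean(diff_group1) - mean(diff_group2)) / (pooled * (1 / n1 + 1 / n2)) ** 0.5
    return t * t, (1, df_error)


# Hypothetical difference scores, for illustration only (not data from this study).
f_value, df = interaction_f([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
print(f_value, df)
```

The error degrees of freedom are the total number of rats minus two, which is why groups of 8 and 12 rats give F1,18 and groups of 9 and 10 rats give F1,17.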

Modeling

Simulations of the behavioral designs were run using a multi-step temporal difference learning algorithm, TD(λ)15. This algorithm was used to estimate the value of different states of the behavioral paradigm with states being determined by the stimuli present at any particular time. Linear function approximation was used in order to estimate the value, V, of a given state, st, by the features present during that state according to

$$V(s_t) = \sum_j w_j \, x_j(s_t)$$

where j is indexed through all possible components of the feature vector x and corresponding weight vector w. The feature vector is considered to be the set of possible observed stimuli such that if stimulus j is present during state s at time t, then xj(st) = 1, and zero otherwise. The weights are adjusted over time in order to best approximate the value of each state given the current set of stimuli. Weights, wj, corresponding to each feature, xj, are updated at each time step according to the TD error rule

$$\delta_t = r_t + \gamma V(s_{t+1}) - V(s_t)$$

under linear value function approximation where rt is the outcome received at time t and γ is the temporal discounting factor. The weights are updated as

$$w_j \leftarrow w_j + \alpha \, \delta_t \, \varepsilon_j$$

in which the scalar α is the learning rate, and ε is the eligibility trace of previous states such that ε ∈ [0,1]. The eligibility trace updates on each trial by reducing the impact of previous features experienced in previous states. The decay of previous features is set by λ and the temporal discounting factor, γ, according to the update rule:

$$\varepsilon_j \leftarrow \begin{cases} 1 & \text{if } x_j(s_t) = 1 \\ \gamma \lambda \, \varepsilon_j & \text{otherwise} \end{cases}$$

which results in the ‘replacing traces’ method whenever a feature is reexperienced, such that the eligibility trace is reset back to one.

The linear value approximation reduces the size of the possible state space by generalizing states based on the features present. This approximation results in the calculation of the total expected value of a state as the sum of the expected value of each stimulus element present in the current state, a computation which is consistent with a global prediction error as stipulated by the Rescorla-Wagner model.

As fear conditioning has also been shown to engage an attentional mechanism, we also allowed α to fluctuate according to the well-established Mackintosh (1975) attentional model16–18, where α increases to cues that are better predictors of an outcome, and decreases to cues that are poorer predictors of their outcomes, relative to their competitors, according to the following:

where λ in this case represents the maximal evoked unconditioned stimulus. Within the TD(λ) model described above, the Mackintosh rule was applied as follows:

In which N is the number of features and w is the weight vector. The λ term was dropped since only one outcome was modeled.

Modeling of optogenetic manipulation of LH GABAergic neurons:

Inhibition of LH GABA neurons disrupts the update of the weights resulting from the prediction error δt.

where η again determines whether the inhibition was present. η is set at 0.3, meaning optical inhibition of LH GABA neurons produces a partial block on the update, designed to mimic ex vivo recordings from our lab, which have estimated the degree of inhibition achieved using the cell body inhibition with optogenetics19,20.

Model Parameterization:

For all simulations, we set θ at 0.2, the initial α for the tone at 0.3, λ at 0.7, and γ at 0.95. The α for the context varied depending on the experiment, as described in the text.

Conditioned responding (freezing), CR, at each state was modeled using a logistic function

in which the parameters were determined based on empirical estimates of the maximal responding, c, the baseline responding, a, as well as the steepness of the learning curve, b. These were set as 90, 0.4, and 5 respectively for all simulations.
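The exact form of the logistic mapping is not reproduced here, so the following Python sketch uses a standard three-parameter logistic as a stand-in. It assumes that c is the asymptote, b the steepness, and a the value at which responding is half-maximal. This is only one possible reading of "baseline responding", and the function name `conditioned_response` is hypothetical.

```python
import math


def conditioned_response(value, c=90.0, a=0.4, b=5.0):
    """Map a state value onto percent freezing (assumed logistic form)."""
    return c / (1.0 + math.exp(-b * (value - a)))


# Responding at low, intermediate, and high state values.
low = conditioned_response(0.0)
mid = conditioned_response(0.4)
high = conditioned_response(1.0)
```

Under these assumptions, responding rises monotonically with state value and never exceeds the ceiling of 90% freezing.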

Extended Data

Extended Data Fig. 1. Histological verification of Cre-dependent NpHR and eYFP in GAD+ neurons and fiber placement in the LH for all experiments.

Top row: Unilateral representation of the bilateral viral expression in the LH, −2 mm to −3 mm posterior to bregma. Bottom row: Approximate location of fiber tips in the LH, indicated by black squares, −2 mm to −3 mm posterior to bregma.

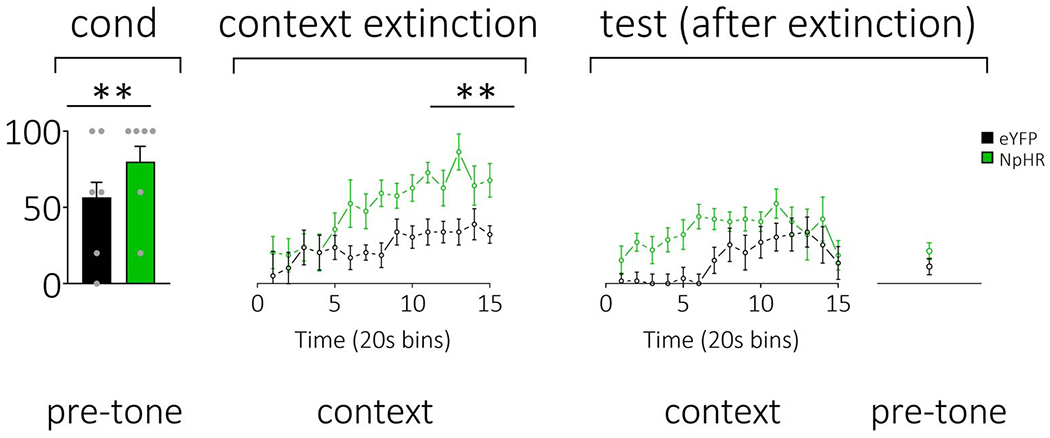

Extended Data Fig. 2. Rats in our NpHR learners group showed a persistent increase in conditioned fear to the contextual cues, which extinguished before tone presentations in the extinction test.

During conditioning, our NpHR learners group showed high levels of fear to the background contextual cues (left; see Figure 3 in main text for more information). To reduce this context fear before the test, rats received a context extinction session 24 hours after conditioning, in which they were placed in the experimental chambers without any stimuli. Here, we found that our NpHR learners group maintained a higher level of context fear relative to our eYFP learners group (middle). This context extinction was effective in reducing contextual fear, as all rats showed low levels of freezing at the beginning of the next test session, where we presented the tone under extinction to examine the fear that had been acquired to this stimulus (right). A mixed-design repeated-measures ANOVA on levels of freezing to the contextual cues across the context and tone extinction sessions showed a main effect of time (F14,140=4.614, p<0.001) and a significant session × time × group interaction (F14,140=1.697, p=0.032). This interaction was due to a between-group difference in freezing during context extinction, which emerged most prominently towards the end of the scoring period (group: F1,10=5.939, p=0.035) and was not seen in the tone extinction test (group: F1,10=0.007, p=1.000). Further, there was a significant difference in freezing exhibited by the NpHR group when comparing the context extinction session with the tone test (n=6 rats; F1,10=8.071, p=0.018) that was not present in the eYFP control group (n=6 rats; F1,10=1.161, p=0.307). Finally, a one-way ANOVA showed no between-group difference in freezing to the context immediately before tone presentations in the tone test, after context extinction had taken place (F1,10=1.943, p=0.194). Error bars = SEM.

Extended Data Fig. 3. Responding during conditioning in the second-order conditioning experiment (see Figure 4 main text).

Rates of responding are represented as time spent in the food port (%; ±SEM). Rats (n=12 eYFP; n=8 NpHR) learnt to distinguish between A2 and B2 during conditioning, with no difference in the rates of learning between groups. Note that patch cords were attached to rats in session 6 of conditioning to habituate them to the cords prior to pairings of A1→A2 and B1→B2, which accounts for the dip in responding in that session. A repeated-measures ANOVA revealed a main effect of stimulus (F1,18=12.383, p=0.002), a main effect of session (F6,108=10.799, p<0.001), and a stimulus × session interaction (F6,108=5.440, p<0.001), with no interactions by group (stimulus × group: F1,18=1.151, p=0.298; session × group: F6,108=1.008, p=0.424; stimulus × session × group: F6,108=0.233, p=0.965).

Extended Data Fig. 4. Responding during conditioning in the sensory-preconditioning experiment (see Figure 5 main text).

Rates of responding are represented as time spent in the food port (%; ±SEM). Rats (n=12 eYFP; n=8 NpHR) learnt to distinguish between A2 and B2 during conditioning, with no difference in the rates or ultimate levels of learning between groups. A repeated-measures ANOVA revealed a main effect of stimulus (F1,18=3.553, p=0.076) and a stimulus × session interaction (F2,36=8.281, p=0.001; stimulus × group: F1,18=0.13, p=0.911). Follow-up comparisons, warranted by the significant stimulus × session interaction, showed an increase in responding to A2 across sessions (F2,17=8.860, p=0.002) that was not present for B2 (F2,17=1.953, p=0.172).

Acknowledgements

The authors would like to thank Marisela Morales and Carlos Mejias-Aponte for their assistance. This work was supported by R01-MH098861 (to G.S.), the Intramural Research Program at NIDA ZIA-DA000587 (to G.S.), and an NHMRC CJ Martin Fellowship (to M.J.S). The opinions expressed in this article are the authors’ own and do not reflect the view of the NIH/DHHS.

Footnotes

Competing Interests

The authors declare no competing interests.

Code Availability: Simulations were performed using custom-written functions in MATLAB (MathWorks, Natick, MA), available on GitHub at https://github.com/mphgardner/LH_Inact_Model.

Data Availability

The data that support the findings of this study, and any associated custom programs used for its acquisition, are available from the corresponding authors upon reasonable request.

References

- 1. Axelrod R Schema theory: An information processing model of perception and cognition. American political science review 67, 1248–1266 (1973).

- 2. Bem SL Gender schema theory and its implications for child development: Raising gender-aschematic children in a gender-schematic society. Signs: Journal of women in culture and society 8, 598–616 (1983).

- 3. Rumelhart DE Schemata: The building blocks. Theoretical issues in reading comprehension: Perspectives from cognitive psychology, linguistics, artificial intelligence and education 11, 33–58 (2017).

- 4. Rau V, DeCola JP & Fanselow MS Stress-induced enhancement of fear learning: an animal model of posttraumatic stress disorder. Neuroscience & biobehavioral reviews 29, 1207–1223 (2005).

- 5. Rau V & Fanselow MS Exposure to a stressor produces a long lasting enhancement of fear learning in rats: Original research report. Stress 12, 125–133 (2009).

- 6. Sillivan SE et al. Susceptibility and resilience to posttraumatic stress disorder–like behaviors in inbred mice. Biological psychiatry 82, 924–933 (2017).

- 7. Holmes NM, Parkes SL, Killcross AS & Westbrook RF The basolateral amygdala is critical for learning about neutral stimuli in the presence of danger, and the perirhinal cortex is critical in the absence of danger. Journal of neuroscience 33, 13112–13125 (2013).

- 8. Ponomarev I, Rau V, Eger EI, Harris RA & Fanselow MS Amygdala transcriptome and cellular mechanisms underlying stress-enhanced fear learning in a rat model of posttraumatic stress disorder. Neuropsychopharmacology 35, 1402 (2010).

- 9. Nieh EH et al. Inhibitory input from the lateral hypothalamus to the ventral tegmental area disinhibits dopamine neurons and promotes behavioral activation. Neuron 90, 1286–1298 (2016).

- 10. Sharpe MJ et al. Lateral hypothalamic GABAergic neurons encode reward predictions that are relayed to the ventral tegmental area to regulate learning. Current Biology 27, 2089–2100.e2085 (2017).

- 11. Stuber GD & Wise RA Lateral hypothalamic circuits for feeding and reward. Nature neuroscience 19, 198 (2016).

- 12. Urstadt KR & Berridge KC Optogenetic mapping of feeding and self-stimulation within the lateral hypothalamus of the rat. Plos one 15, e0224301 (2020).

- 13. Margules D & Olds J Identical "feeding" and "rewarding" systems in the lateral hypothalamus of rats. Science 135, 374–375 (1962).

- 14. Sutton RS & Barto AG in Proceedings of the ninth annual conference of the cognitive science society. 355–378 (Seattle, WA).

- 15. Seijen H & Sutton R in International Conference on Machine Learning. 692–700.

- 16. Sharpe MJ & Killcross S The prelimbic cortex directs attention toward predictive cues during fear learning. Learning & Memory 22, 289–293 (2015).

- 17. Sharpe MJ & Killcross S Modulation of attention and action in the medial prefrontal cortex of rats. Psychological review 125, 822 (2018).

- 18. Mackintosh NJ A theory of attention: Variations in the associability of stimuli with reinforcement. Psychological review 82, 276 (1975).

- 19. Gardner MP et al. Medial orbitofrontal inactivation does not affect economic choice. Elife 7, e38963 (2018).

- 20. Chang CY, Gardner MP, Conroy JC, Whitaker LR & Schoenbaum G Brief, but not prolonged, pauses in the firing of midbrain dopamine neurons are sufficient to produce a conditioned inhibitor. Journal of Neuroscience 38, 8822–8830 (2018).

- 21. Sharpe MJ et al. Dopamine transients do not act as model-free prediction errors during associative learning. Nature Communications 11, 1–10 (2020).

- 22. Sharpe MJ, Batchelor HM & Schoenbaum G Preconditioned cues have no value. Elife 6, e28362 (2017).

- 23. Sharpe MJ et al. Dopamine transients are sufficient and necessary for acquisition of model-based associations. Nature Neuroscience 20, 735 (2017).

- 24. Langdon AJ, Sharpe MJ, Schoenbaum G & Niv Y Model-based predictions for dopamine. Current Opinion in Neurobiology 49, 1–7 (2018).

- 25. Holland PC CS–US interval as a determinant of the form of Pavlovian appetitive conditioned responses. Journal of Experimental Psychology: Animal Behavior Processes 6, 155 (1980).

- 26. Lavin MJ The establishment of flavor-flavor associations using a sensory preconditioning training procedure. Learning and Motivation 7, 173–183 (1976).

- 27. Killcross A, Dickinson A & Robbins T Effects of the neuroleptic α-flupenthixol on latent inhibition in aversively-and appetitively-motivated paradigms: evidence for dopamine-reinforcer interactions. Psychopharmacology 115, 196–205 (1994).

- 28. Lubow R & Moore A Latent inhibition: the effect of nonreinforced pre-exposure to the conditional stimulus. Journal of comparative and physiological psychology 52, 415 (1959).

- 29. Lubow RE & Gewirtz JC Latent inhibition in humans: data, theory, and implications for schizophrenia. Psychological bulletin 117, 87 (1995).

- 30. Jennings JH, Rizzi G, Stamatakis AM, Ung RL & Stuber GD The inhibitory circuit architecture of the lateral hypothalamus orchestrates feeding. Science 341, 1517–1521 (2013).

- 31. Fanselow MS & LeDoux JE Why we think plasticity underlying Pavlovian fear conditioning occurs in the basolateral amygdala. Neuron 23, 229–232 (1999).

- 32. Maren S Neurotoxic basolateral amygdala lesions impair learning and memory but not the performance of conditional fear in rats. Journal of Neuroscience 19, 8696–8703 (1999).

- 33. Ressler RL & Maren S Synaptic encoding of fear memories in the amygdala. Current opinion in neurobiology 54, 54–59 (2019).

- 34. Holland PC & Rescorla RA Second-order conditioning with food unconditioned stimulus. Journal of comparative and physiological psychology 88, 459 (1975).

- 35. Rizley RC & Rescorla RA Associations in second-order conditioning and sensory preconditioning. Journal of comparative and physiological psychology 81, 1 (1972).

- 36. Brogden WJ Sensory pre-conditioning. Journal of Experimental Psychology 25, 323 (1939).

- 37. Ersche KD et al. Carrots and sticks fail to change behavior in cocaine addiction. Science 352, 1468–1471 (2016).

- 38. Everitt BJ & Robbins TW Neural systems of reinforcement for drug addiction: from actions to habits to compulsion. Nature neuroscience 8, 1481 (2005).

- 39. Corlett PR & Fletcher PC Delusions and prediction error: clarifying the roles of behavioural and brain responses. Cognitive neuropsychiatry 20, 95–105 (2015).

- 40. Powers AR, Mathys C & Corlett P Pavlovian conditioning–induced hallucinations result from overweighting of perceptual priors. Science 357, 596–600 (2017).

Methods-only references

- 41. Mahn M, Prigge M, Ron S, Levy R & Yizhar O Biophysical constraints of optogenetic inhibition at presynaptic terminals. Nature neuroscience 19, 554 (2016).

- 42. Howell DC Statistical methods for psychology. (Cengage Learning, 2012).

- 43. Jones JL et al. Orbitofrontal cortex supports behavior and learning using inferred but not cached values. Science 338, 953–956 (2012).

- 44. Loftus GR & Masson ME Using confidence intervals in within-subject designs. Psychonomic bulletin & review 1, 476–490 (1994).

- 45. Franz VH & Loftus GR Standard errors and confidence intervals in within-subjects designs: Generalizing Loftus and Masson (1994) and avoiding the biases of alternative accounts. Psychonomic bulletin & review 19, 395–404 (2012).
