Summary

Animals adaptively integrate sensation, planning, and action to navigate toward goal locations in ever-changing environments, but the functional organization of cortex supporting these processes remains unclear. We characterized encoding in approximately 90,000 neurons across mouse posterior cortex during a virtual-navigation task with rule-switching. The encoding of task and behavioral variables was highly distributed across cortical areas but differed in magnitude, resulting in three spatial gradients encoding visual cue, spatial position plus dynamics of choice formation, and locomotion, with peaks respectively in visual, retrosplenial, and parietal cortices. Surprisingly, the conjunctive encoding of these variables in single neurons was similar throughout posterior cortex, creating high-dimensional representations in all areas instead of revealing computations specialized for each area. We propose that, for guiding navigation decisions, posterior cortex operates in parallel rather than hierarchically, and collectively generates a state representation of the behavior and environment, with each area specialized in handling distinct information modalities.

In Brief

In mice navigating in dynamic environments, Tseng, Chettih et al. find highly distributed but specialized encoding of visual, cognitive, and locomotor signals in posterior cortex with similar conjunctive codes across areas. They propose posterior cortex integrates distinct information modalities to form a general-purpose state representation of the environment and behavior.

Introduction

As an animal navigates an ever-changing environment, it adaptively incorporates acquired sensory information into a navigation plan to guide its movements. The neural circuits supporting this behavior must integrate sensory processing, navigation planning, and motor execution, and furthermore adapt the rules governing their integration in response to experience. Evidence in rodents suggests densely interconnected dorsal-posterior cortical areas are critical for visually guided and navigation-based decision-making, including primary (V1) and secondary visual cortices, retrosplenial cortex (RSC), and posterior parietal cortex (PPC) (Zingg et al., 2014). However, it remains uncertain how the set of processes for navigation-based decision-making is represented across posterior cortex, and what principles specify the functional organization of these areas.

A longstanding view is that cortex is organized as anatomically and functionally distinct modules that encode different information, reflecting their specialized functions. Accordingly, many studies have aimed to identify how specific areas contribute to behavior by identifying the variables each area encodes. For example, visual areas in posterior cortex encode visual features with increasing complexity between primary and secondary areas and are proposed to serve distinct functions in visual processing (Andermann et al., 2011; Glickfeld and Olsen, 2017; Marshel et al., 2011; Siegle et al., 2021). PPC has roles in accumulating sensory evidence and history-dependent signals (Hanks et al., 2015; Hattori et al., 2019; Hwang et al., 2017; Morcos and Harvey, 2016; Pinto et al., 2019), transforming stimuli into motor outputs (Goard et al., 2016; Harvey et al., 2012; Licata et al., 2017; Pho et al., 2018; Raposo et al., 2014), and monitoring navigation route progression (Nitz, 2006), often in egocentric coordinates (Nitz, 2012; Wilber et al., 2014). In RSC, information for navigation and spatial memory is prevalent, including for heading direction (Cho and Sharp, 2001; Jacob et al., 2017), landmark cues (Fischer et al., 2020), and goal locations (Miller et al., 2019; Vale et al., 2020), and is often represented as conjunctions of variables in an allocentric reference frame (Alexander and Nitz, 2017).

Recent studies have observed that the encoding of actions and spatial position is distributed widely across posterior cortex and, relatedly, that individual areas encode many variables (Allen et al., 2017, 2019; Kauvar et al., 2020; Minderer et al., 2019; Musall et al., 2019; Steinmetz et al., 2019; Stringer et al., 2019). Furthermore, even sensory areas like V1 exhibit conjunctive, multi-modal tuning (Keller et al., 2012; Saleem et al., 2018; Shuler and Bear, 2006). It is unclear how to reconcile this highly distributed encoding in posterior cortex with evidence for specialized functions in distinct areas. One possibility is that studies proposing specialized functions typically examined only one or two cortical areas with different experimental designs, and thus underemphasized the commonalities in encoding across areas. Another possibility is that some variables are encoded in a distributed manner and others more modularly. In particular, distributed encoding of bodily movements observed during spontaneous or simple behaviors might contrast with modular encoding of cognitive variables in more complex tasks.

Functional organization is determined not only by the “distributedness” of encoding for individual variables but also the pattern of variable combinations in single neurons and the resulting population geometry of encoding for multiple variables. For instance, given distributed encoding of two variables, one area may encode each variable in separate neurons and relay them to distinct downstream targets, whereas another area may perform arithmetic manipulation of the two to generate new quantities useful for specialized computations. Areas may build increasingly complex multi-modal, high-dimensional population codes along a functional hierarchy (Bernardi et al., 2020; Rigotti et al., 2013). Alternatively, even if areas specialize in which variables they encode, variables may be combined in similar ways across areas, suggesting general rules of integration that underlie shared computational goals. Thus, a quantitative analysis of functional organization must look beyond the “distributedness” of encoding for single variables to consider the “conjunctive structure”, namely the way variables are integrated by single neurons and the resulting geometry of population representations.

Here we determine how the various processes underlying flexible, navigation-based decision-making are functionally organized, using a single experimental and analysis framework for quantitative comparison of the encoding of ~90,000 neurons across posterior cortex. We find that encoding of all variables is more distributed than modular, despite significant differences in encoding strength across areas for visual, locomotor, position, and choice variables. Surprisingly, each area does not create unique conjunctions of variables, as would be expected for areas performing distinct computations. Instead, all areas combine variables similarly, resulting in a high-dimensional representation of variable conjunctions that is shared across areas. We propose that posterior cortical areas integrate qualitatively distinct input modalities to form a general-purpose state representation of the environment and behavior, which is used by downstream circuits to guide flexible navigation decisions.

Results

Mice learned flexible cue-choice associations in a virtual reality decision-making task

We designed a behavioral task to study how sensation, planning, movement, and recent experience are integrated during navigation decisions. Mice were trained to navigate a virtual reality Y-maze using visual cues (black or white walls) to make decisions to run toward rewarded locations (left or right arms), based on learned and changing rules (Figure 1A, 1B and S1A). We switched the rule determining the rewarded cue-choice associations every 100–175 trials in a session, without explicitly signaling the rule or rule switch. To maximize reward, the mouse had to combine the visual cue with an estimate of the current rule to generate a choice and update its rule estimate following reward.
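The block structure of the task can be illustrated with a small simulation of one session's trial schedule. This is a sketch only: the specific cue-choice mapping for each rule is an assumption (rule A: black to left, white to right; rule B: the reverse), since the actual associations are defined in Figure 1A, and the names `CORRECT_CHOICE` and `make_session` are hypothetical. Block lengths are drawn uniformly from 100 to 175 trials, matching the switching interval stated above.

```python
import numpy as np

# Hypothetical mapping (assumption; Figure 1A defines the real associations):
# rule "A": black -> left, white -> right; rule "B": the reverse.
CORRECT_CHOICE = {
    ("A", "black"): "left", ("A", "white"): "right",
    ("B", "black"): "right", ("B", "white"): "left",
}

def make_session(n_trials, rng, min_block=100, max_block=175):
    """Return per-trial rule, cue, and rewarded choice, with unsignaled rule switches."""
    rules, cues, rewarded = [], [], []
    rule = str(rng.choice(["A", "B"]))
    remaining = rng.integers(min_block, max_block + 1)  # trials left in current block
    for _ in range(n_trials):
        if remaining == 0:
            # Switch the rule; nothing in the trial itself signals the switch.
            rule = "B" if rule == "A" else "A"
            remaining = rng.integers(min_block, max_block + 1)
        cue = str(rng.choice(["black", "white"]))
        rules.append(rule)
        cues.append(cue)
        rewarded.append(CORRECT_CHOICE[(rule, cue)])
        remaining -= 1
    return rules, cues, rewarded
```

Because the rule is hidden, the cue alone predicts the rewarded arm only at chance across a session; a model of the mouse must also carry a running estimate of the rule, updated from outcomes.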

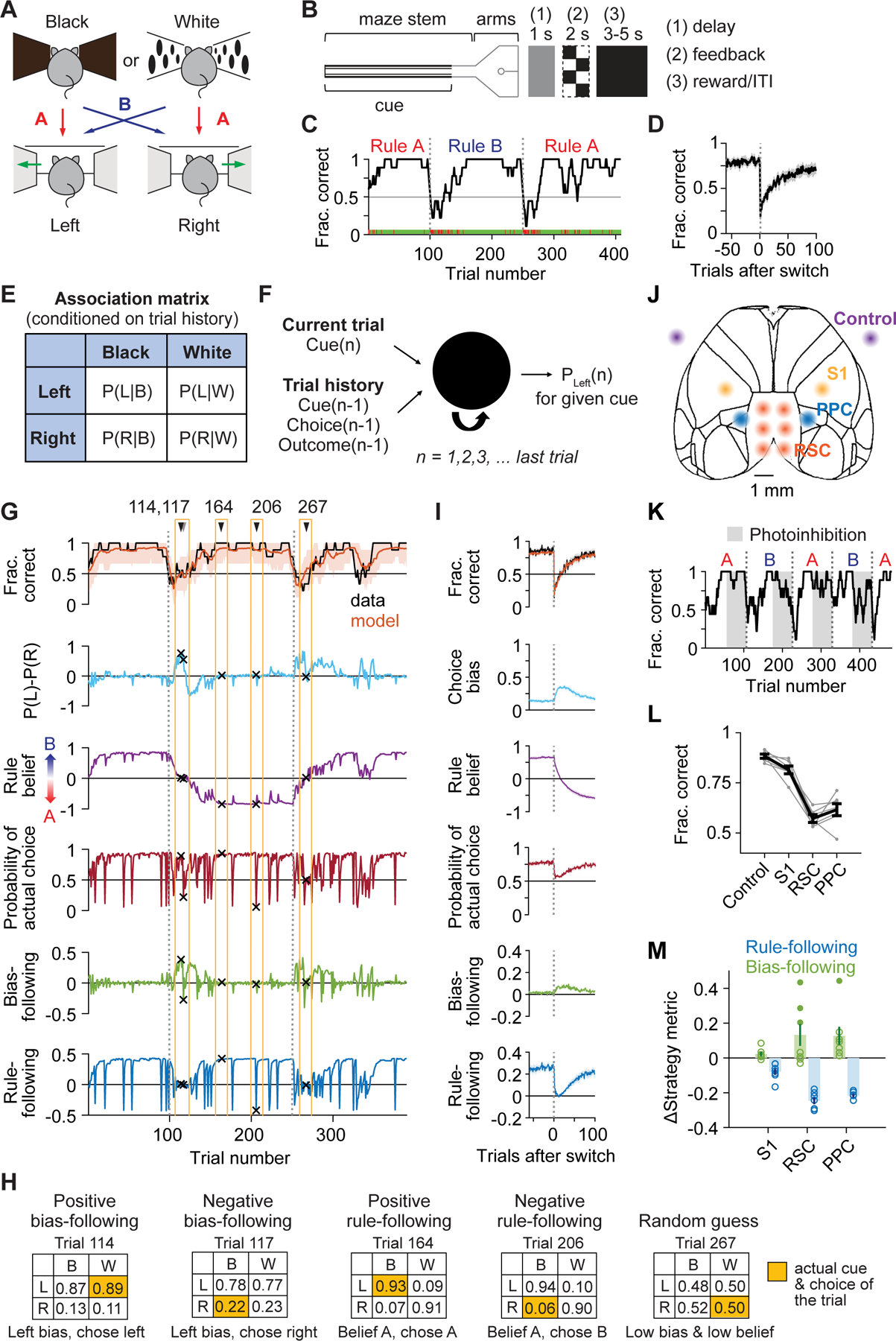

Figure 1. Diverse decision-making strategies during flexible navigation decisions and photoinhibition in posterior cortex.

(A) Rewarded cue-choice associations for rules A and B.

(B) Maze configuration and structure of trial epochs. At the trial end, after a delay, mice received visual feedback about the correctness of their choice, followed by a reward and an inter-trial interval.

(C) Task performance for an example session. Green ticks, correct trials; red ticks, incorrect trials; black line, smoothed performance (boxcar of 9 trials); gray dashed line, rule switches.

(D) Switch-aligned performance. n = 513 switches from 8 mice.

(E) Association matrix used to quantify strategy variables: the probability of choosing left or right given a black or white cue for a given trial, conditioned on its trial history.

(F) Schematic of LSTM for deriving the association matrix on each trial.

(G) Modeled fraction correct and strategy variables for an example session. Orange shading, 90% CI from 1000 simulations of task performance from the model.

(H) Association matrices for the 5 example trials in (G).

(I) Switch-aligned modeled fraction correct and strategy variables. n = 265 switches.

(J) Bilateral inhibition sites in VGAT-ChR2 mice.

(K) Task performance of an example session during photoinhibition.

(L) Effects of photoinhibition on task performance. Gray lines, individual mice; black line, all mice. Control vs. RSC or PPC: p < 10⁻⁴; control vs. S1: p = 0.0002; S1 vs. RSC or PPC: p < 10⁻⁴; RSC vs. PPC: p = 0.058. n = 164 sessions from 7 mice.

(M) Effects of photoinhibition on strategy variables, measured as differences from control. Open circles, average for individual mice. For bias-following, p = 0.025 for S1, p = 0.018 for RSC, p < 10⁻⁴ for PPC; for rule-following, p < 10⁻⁴ for all targets. Filled circles indicate mice with a large increase in bias-following (greater than 0.2; 3 mice for RSC and 1 mouse for PPC).

Data and statistics in (D), (I), (L), (M) are presented as hierarchical bootstrap mean ± SEM.

See also Figure S1.
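The legends report statistics as hierarchical bootstrap mean ± SEM. A minimal sketch of one common three-level scheme is shown below, assuming resampling with replacement of mice, then sessions within each sampled mouse, then units (trials or neurons) within each sampled session; the exact levels and number of resamples used here are given in STAR Methods, not in this sketch. The function name `hierarchical_bootstrap` is hypothetical.

```python
import numpy as np

def hierarchical_bootstrap(values_by_mouse, n_boot=1000, seed=0):
    """values_by_mouse: list over mice of lists over sessions of 1-D arrays of unit values.

    Resamples mice, then sessions within each resampled mouse, then units within each
    resampled session (all with replacement). Returns (mean, SEM), where SEM is the
    standard deviation of the bootstrap means.
    """
    rng = np.random.default_rng(seed)
    boot_means = np.empty(n_boot)
    n_mice = len(values_by_mouse)
    for b in range(n_boot):
        pooled = []
        for m in rng.integers(n_mice, size=n_mice):
            sessions = values_by_mouse[m]
            for s in rng.integers(len(sessions), size=len(sessions)):
                units = np.asarray(sessions[s], dtype=float)
                pooled.append(units[rng.integers(len(units), size=len(units))])
        boot_means[b] = np.concatenate(pooled).mean()
    return boot_means.mean(), boot_means.std(ddof=1)
```

Resampling at the mouse level first means that the SEM reflects variability between animals rather than treating every trial or neuron as independent.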

After training, mice learned both rules and adapted to rule switches over tens of trials multiple times within a single session (Figure 1C). Their behavioral performance was high before rule switches and typically dropped below chance after switches before gradually recovering to high accuracy by the end of a block, without signs of anticipating rule switches (Figure 1D and S1B–S1F). This task encouraged behavioral variability driven by trial-and-error even in expert mice, particularly following rule switches, as we characterize below.

Decision-making strategy varied between rule-guided, biased, and random modes

The performance of the mouse varied greatly within a session, including high performance before rule switches and many errors immediately after rule switches. A mouse’s choice might reflect a variety of time-varying strategies such as following a specific cue-choice mapping based on a rule, or repeatedly making the same choice regardless of the cue identity, or making random choices. We modeled decision-making strategy on each trial by estimating the conditional probability that the mouse would select a choice given a specific cue and its trial history using a long short-term memory recurrent neural network (LSTM) (Figure 1E and 1F). The LSTM accurately predicted a mouse’s choices in held-out sessions (80.2% ± 5.1%, mean ± SD) and served as a simple descriptive model, in contrast to more interpretable but less accurate reinforcement learning models (Figure S1G and S1H).

We extracted a set of strategy variables using the model to describe a wide range of behaviors exhibited during flexible decision-making (STAR Methods – Decision-making strategy variables). From model predictions, we computed “rule belief” as the probability that the cues informed choices consistent with one rule versus the other, and “choice bias” as the tendency to choose left versus right independent of the cue. In individual sessions, we observed periods of strong rule belief (Figure 1G and 1H, example trials 164, 206), choice bias (trials 114, 117), and unpredictable choices or “random guessing” (trial 267). We also compared model predictions to the mouse’s actual choice to determine the degree to which individual decisions were influenced by bias (bias-following) or rule belief (rule-following). These metrics were positive when actual choices followed a bias (example trial 114) or rule belief (trial 164), negative when choices were opposite a bias (trial 117) or rule belief (trial 206), and near zero for random guessing.
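To make the sign conventions concrete, the sketch below computes strategy-like quantities from a 2×2 association matrix summarized by P(left | black) and P(left | white). These formulas are hypothetical stand-ins, not the definitions in STAR Methods: they are chosen only so that the following-metrics are positive when the actual choice follows the bias or rule belief, negative when it goes against it, and zero for random guessing, as described above. They also assume rule A maps black to left and white to right.

```python
import numpy as np

def strategy_variables(p_left_black, p_left_white, cue, choice):
    """Hypothetical strategy metrics from one trial's association matrix.

    p_left_black, p_left_white: model P(choose left | cue) for each cue.
    cue: +1 for black, -1 for white; choice: +1 for left, -1 for right (actual choice).
    """
    # Cue dependence: +1 = pure rule A (assumed black->left), -1 = pure rule B.
    rule_belief = p_left_black - p_left_white
    # Cue-independent tendency: +1 = always left, -1 = always right.
    choice_bias = p_left_black + p_left_white - 1.0
    # Positive when the actual choice matches the cue-choice mapping of the believed rule.
    rule_following = rule_belief * cue * choice
    # Positive when the actual choice matches the direction of the bias.
    bias_following = choice_bias * choice
    return rule_belief, choice_bias, rule_following, bias_following
```

For a random-guessing trial (both probabilities 0.5), all four quantities are zero, which matches the qualitative description of trial 267.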

On average, prior to a rule switch, mice performed with little choice bias and followed the correct rule (Figure 1I and S1I). After a rule switch, the randomness of choices increased rapidly, as seen by near chance levels of the model’s probability of the actual choice, and bias-following increased moderately. Then, rule-following recovered gradually for the new rule belief. Although behavior on individual rule blocks and sessions was highly variable around these average trends (Figure S1J), our strategy variables quantified these variations at a single-trial level. This task and behavioral modeling thus dissociated both cue and choice from the diverse decision-making strategies on a trial-by-trial basis.

Photoinhibition of posterior cortical areas impaired rule-following

Previous studies have indicated that various areas of posterior cortex are necessary for navigation and decision-making, including RSC and PPC (Harvey et al., 2012; Licata et al., 2017; Pinto et al., 2019). We tested the necessity of these areas using transcranial optogenetic excitation of GABAergic interneurons, leading to inhibition of nearby excitatory cells (Figure 1J) (Guo et al., 2014; Li et al., 2019). Inhibition was performed throughout maze traversal on randomized trials after mice reached high performance between rule switches (Figure 1K). Inhibiting either RSC or PPC led to markedly lower task performance compared to control trials or trials in which part of primary somatosensory cortex (S1) was inhibited (Figure 1L).

We analyzed the behavioral changes underlying impaired task performance by including the inhibition sites as an input to our LSTM model of decision-making strategy. PPC and RSC inhibition decreased rule-following compared to control trials and S1 inhibition (Figure 1M, S1K and S1L). Inhibition also caused large increases in bias-following in a subset of mice (Figure 1M, filled green dots), but this was inconsistent across mice and uncorrelated with effects on rule-following (Figure S1M). Our results are consistent with and extend previous work using tasks with static rules in which inhibition of PPC did not disrupt basic sensory or motor function but prevented abstract sensory cues from appropriately guiding actions (Harvey et al., 2012), which in our study was quantified as rule-following.

Running trajectories reflected the within-trial dynamics of choice formation

Mice used the presented cue and their rule belief to report their choice at the end of the maze. However, the choice might develop at any point in the trial and with different time courses from trial to trial, potentially depending on the mouse’s decision-making strategy. We reasoned that choice formation might be reflected in the mouse’s running during navigation. For example, early in a trial, mice might exhibit movements in preparation to report their choice when confident (Figure 2B; right panel, trials 1 and 2) but may delay such movements (trial 3) or alternate between options (trial 4) when uncertain. Indeed, mice exhibited diverse running trajectories in the maze stem which were typically predictive of the choice reported at the end of the trial, even though the virtual heading and lateral position in the maze were fixed by task design until the end of the maze stem (Figure 2A; 2B, left panel; Figure S2A). This variability in running trajectories increased following a rule switch, suggesting that running variability reflected differences in underlying decision-making strategies (Figure 2C and S2B).

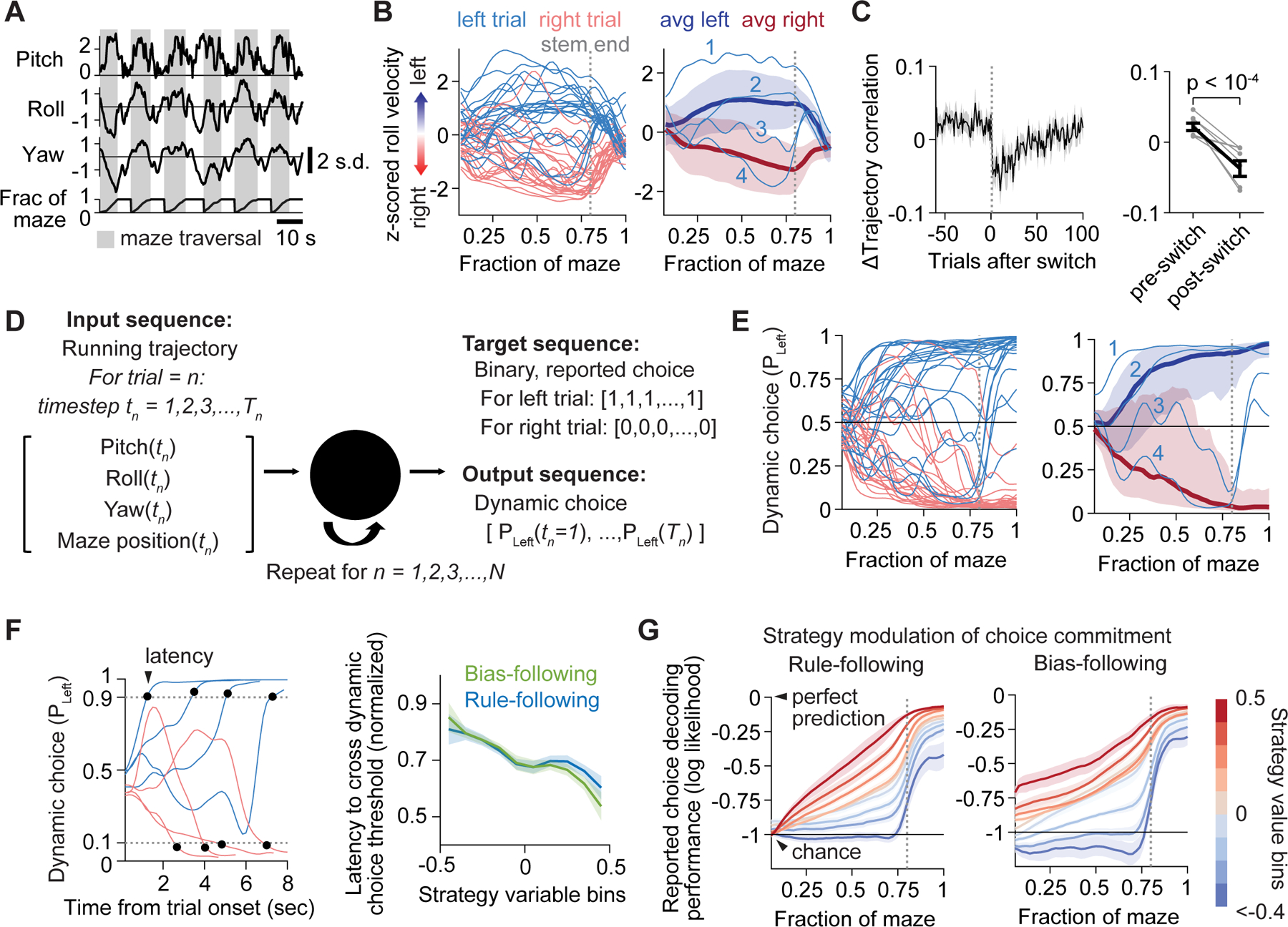

Figure 2. Choice formation estimated from running trajectories.

(A) Example normalized treadmill velocities and position in the maze.

(B) Roll velocity aligned to maze position. Left: example single trials from one session. Right: four example left trials compared to average left and right trials; mean ± SD.

(C) Correlation of running trajectories for choice-matched trials, measured as difference from session average, aligned to the switch (left; n = 265 switches) or averaged for 20 trials before vs. after switches (right; gray lines, individual mice).

(D) Schematic of LSTM for decoding reported choice from running trajectories. The output is dynamic choice (Pleft).

(E) Dynamic choice for same data shown in (B).

(F) Left: latency to dynamic choice crossing a threshold (dashed line) for example trials. Right: relationship between latency (normalized by session-averaged trial duration, 8.95 ± 2.04 s, mean ± SD) and strategy variables. n = 85,463 trials.

(G) Time course of choice commitment (LSTM decoding performance for reported choice, calculated as log likelihood with log base 2), binned by values of rule-following (left) and bias-following (right). n = 68,249 trials.

Data and statistics in (C), (F), (G) are presented as hierarchical bootstrap mean ± SEM.

See also Figure S2.

To estimate choice formation from running, we quantified how well the running trajectory in a single trial predicted the mouse’s reported choice on that trial with an LSTM (Figure 2D). At each timepoint, the model used all previous timepoints to estimate the probability that the mouse eventually chose left or right. This estimate evolved with varying time courses and settled on correct predictions at different maze positions in different trials, recapitulating the variability seen in running trajectories (Figure 2E). We termed this quantity “dynamic choice”, to distinguish it from the binary, reported choice, and interpret it as a real-time estimate of the mouse’s choice formation. We also observed running trajectories that reflected the identity of the cue early in a trial, which we refer to as “cue-biased running”; however, this behavior was variable across mice and sessions and was uncorrelated with task performance and the time course of dynamic choice (Figure S2C–S2K).

We validated our interpretation of dynamic choice by demonstrating that it varied in an expected manner with differences in decision-making strategy. In particular, when a mouse is confident in its choice, due to high rule- or bias-following, it will select its choice more rapidly than on trials with random or unpredictable choices (rule- or bias-following ≤ 0). As expected, the latency to dynamic choice crossing a threshold was shorter on trials with higher rule- or bias-following (Figure 2F). We then analyzed how strategy shaped the within-trial time course of dynamic choice by calculating how accurately dynamic choice at each timepoint predicted the actual reported choice (“choice commitment”). On trials with high rule-following, choice commitment started near chance but increased rapidly during maze traversal (Figure 2G). In contrast, when rule-following was low, choice commitment was low until late in the trial, consistent with indecisiveness or changes of mind when mice were uncertain of the rule. Furthermore, when mice followed a bias, choice commitment was high at trial onset, reflecting a choice formed early and irrespective of the cue. Bias-following and rule-following thus both affected choice commitment with distinct and sensible dynamics (Figure S2L), and consistent effects were observed in photoinhibition experiments (Figure S2M). Our findings support the interpretation that running trajectories reveal nuances of decision-making beyond the reported choice.
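Given a dynamic choice trace P(left) over one trial, the two summary measures above can be sketched as follows. The threshold value (0.8) and the symmetric crossing rule (either side counts) are assumptions for illustration, and choice commitment is computed here as the raw per-timepoint log₂ likelihood of the reported choice, so that 0 bits means certainty and −1 bit means chance; whether an offset is applied in the original analysis is not stated in this section. Both function names are hypothetical.

```python
import numpy as np

def latency_to_threshold(p_left, threshold=0.8):
    """Index of the first timepoint where dynamic choice commits to either side,
    i.e. P(left) >= threshold or P(left) <= 1 - threshold; None if never crossed.
    The threshold value is a hypothetical choice."""
    p = np.asarray(p_left, dtype=float)
    crossed = np.flatnonzero((p >= threshold) | (p <= 1 - threshold))
    return int(crossed[0]) if crossed.size else None

def choice_commitment(p_left, reported_left):
    """Per-timepoint log2 likelihood of the reported choice under dynamic choice.
    0 bits = fully committed to the reported choice; -1 bit = chance (P = 0.5)."""
    # Clip to avoid log2(0) when the decoder output saturates.
    p = np.clip(np.asarray(p_left, dtype=float), 1e-6, 1 - 1e-6)
    p_reported = p if reported_left else 1 - p
    return np.log2(p_reported)
```

In the analysis described above, latencies are additionally normalized by session-averaged trial duration, and commitment curves are averaged across trials binned by rule- or bias-following.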

Calcium imaging of neural activity across posterior cortex

The task and behavioral modeling allowed for the study of neural representations of visual, motor, and a variety of cognitive variables, including choice and decision-making strategy. To determine the functional organization of posterior cortex, we aimed to quantify the spatial distribution of the encoding for these variables and their conjunctive structure. We used two-photon calcium imaging to measure the activity of hundreds of neurons simultaneously in a local region as mice performed the task and tiled imaging windows across posterior cortex over multiple sessions (Figure 3A and 3B). Within each mouse, we sampled neurons across V1, areas adjacent and medial to V1 (anteromedial, or AM, and posteromedial, or PM), areas between V1 and S1 (anterior, or A, and a small portion of rostrolateral, or RL), RSC, and an area adjacent and lateral to RSC (mediomedial, or MM) (Gămănuț et al., 2018; Paxinos and Franklin, 2013). Using surface vasculature patterns and retinotopic mapping, we registered all fields-of-view into the Allen Institute Mouse Common Coordinate Framework (CCF) and assigned coordinates to 93,881 layer 2/3 neurons imaged from 141 sessions of 8 mice (Figure 3C, 3D and S3A–S3C). This registration permitted analysis of neural activity as a function of cortical location, without assuming pre-defined area boundaries.

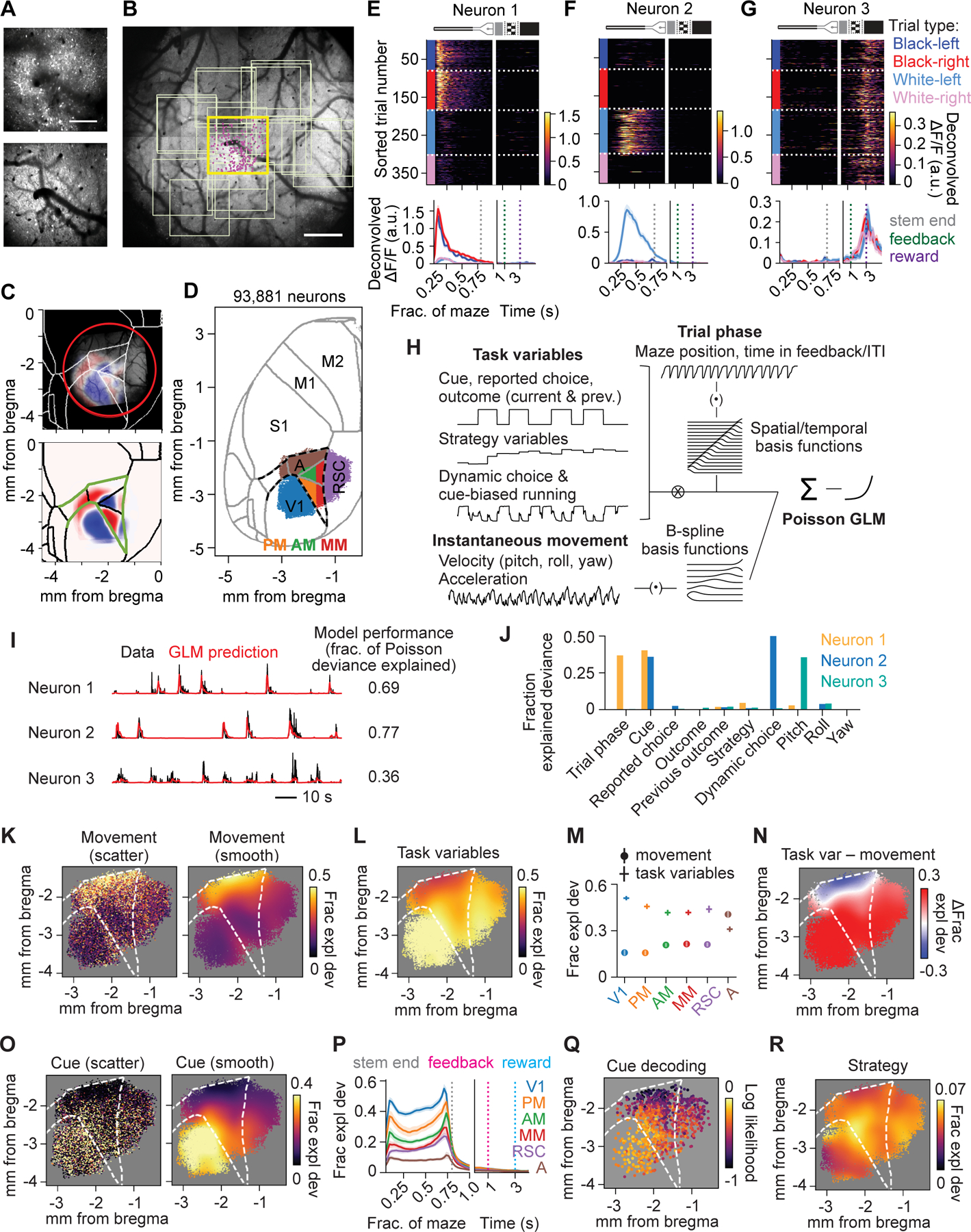

Figure 3. Calcium imaging in posterior cortex and encoding of instantaneous movement and task variables.

(A) Example mean GCaMP6s image (top) and overlying vasculature pattern near the brain surface (bottom) for an example field of view (FOV).

(B) Overview image of the vasculature pattern within the cranial window. Yellow box, location of the FOV in (A); pink dots, locations of neurons recorded in that FOV; light yellow boxes, other FOVs.

(C) Top: Registered field sign map overlaid with the vasculature pattern in (B). White lines, area contours from Allen Institute Mouse CCF; red circle, cranial window location. Bottom: Mean field sign map of 8 mice. Green lines: anterior medial border of V1, lateral border of RSC, and posterior border of S1; used as anatomical landmarks in subsequent figures.

(D) Parcellation of all recorded neurons into 6 discrete areas, overlaid with area borders from CCF. n = 93,881 neurons from 141 sessions from 8 mice.

(E-G) Deconvolved activity of three example RSC neurons. Top: heatmap of single trial activity sorted by trial types. Bottom: trial-type average activity; mean ± SEM. The x-axis is in spatial units during maze traversal and in time units during feedback period/ITI.

(H) Schematic of the GLM.

(I) Example traces of deconvolved activity and GLM prediction on held-out data for the three neurons shown in (E-G).

(J) Encoding profiles (fraction explained deviance for individual variables) of the three neurons in (E-G).

(K) Left: encoding magnitude of instantaneous movement for single neurons (individual dots) at their cortical locations. Right: smoothed encoding map (Gaussian filter, SD = 150 μm). n = 42,998 well-fit neurons from 8 mice.

(L) Smoothed encoding map for task variables.

(M) Average encoding magnitude of instantaneous movement and task variables for 6 areas. Hierarchical bootstrap mean ± SEM (error bars for movement are smaller than the symbols). Area A had higher encoding for movement and lower encoding for task variables than every other area (p < 10⁻³), while V1 had higher encoding for task variables than every other area (p < 10⁻³).

(N) Smoothed map showing difference between encoding magnitude of task variables and movement.

(O) Left: encoding magnitudes of cue for individual neurons during stem traversal at their cortical locations. Right: smoothed encoding map.

(P) Time course of cue encoding for 6 areas for sessions with cue offset at 0.76 of maze length. Hierarchical bootstrap mean ± SEM.

(Q) Decoding performance for cue from population activity, quantified as log likelihood with log base 2. Each point represents one population decoder consisting of ~100 nearby neurons, plotted at the mean location of all member neurons. n = 974 decoders.

(R) Encoding map of strategy variables, including individual strategy variables and their interactions with task variables.

Single neurons had diverse responses across trial types, and many were seemingly selective for specific maze positions or time points within a trial (“trial phase”) (Figure 3E–3G). To systematically quantify and distinguish the contributions of many visual, cognitive, and motor components, we built a generalized linear model (GLM) to fit and predict the activity of single neurons (Figure 3H). Predictors of neural activity included task variables like cue, strategy variables and dynamic choice, as well as variables of instantaneous movement, measured as rotational velocities and accelerations of the treadmill around three axes.

The GLM explained a substantial amount of moment-to-moment variability in a neuron’s activity (fraction of Poisson deviance explained: 0.25 ± 0.17, mean ± SD; Figure 3I and S3D–S3F), and for subsequent analyses, we focused on well-fit neurons, although results were robust to this criterion (Figure S8K–S8O). To build an encoding profile for each neuron, we quantified the fraction of explained deviance accounted for by each individual variable by measuring the decrease in cross-validated prediction performance after zeroing the variable’s coefficients or by refitting the GLM after excluding a given variable (Figure 3J, S3G and S3H).
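The encoding measure above can be sketched for a Poisson GLM with a log link. The code computes the fraction of Poisson deviance explained relative to a constant-rate null model, and a single variable's contribution as the drop in that fraction when the variable's coefficients are zeroed (the first of the two methods described). The log link, the use of the held-out mean as the null rate, and the function names are assumptions for illustration; deconvolved activity is non-negative but not integer, which the deviance formula tolerates.

```python
import numpy as np

def poisson_deviance(y, mu):
    """Poisson deviance 2 * sum(y*log(y/mu) - (y - mu)), with y*log(y/mu) = 0 where y == 0."""
    y = np.asarray(y, dtype=float)
    mu = np.asarray(mu, dtype=float)
    term = np.zeros_like(y)
    pos = y > 0
    term[pos] = y[pos] * np.log(y[pos] / mu[pos])
    return 2.0 * np.sum(term - (y - mu))

def fraction_deviance_explained(y, mu):
    """1 - D(model) / D(null), where the null model predicts the mean of y at every timepoint."""
    y = np.asarray(y, dtype=float)
    null_mu = np.full_like(y, np.mean(y))
    return 1.0 - poisson_deviance(y, mu) / poisson_deviance(y, null_mu)

def variable_contribution(X, beta, intercept, y, cols):
    """Drop in fraction deviance explained when the coefficients in `cols` are set to zero.
    X, y should be held-out data; a log-link Poisson GLM (mu = exp(intercept + X @ beta)) is assumed."""
    mu_full = np.exp(intercept + X @ beta)
    beta_zeroed = beta.copy()
    beta_zeroed[cols] = 0.0
    mu_zeroed = np.exp(intercept + X @ beta_zeroed)
    return fraction_deviance_explained(y, mu_full) - fraction_deviance_explained(y, mu_zeroed)
```

Here `cols` would index all predictor columns belonging to one variable (for example, the basis functions for cue), so that a multi-column variable is removed as a unit.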

Distinct encoding gradients for task variables and instantaneous movement

To examine the distribution of encoding, we constructed encoding maps by plotting each neuron’s fraction of explained deviance for selected variables at the neuron’s cortical location and smoothed these maps to show trends over space. We observed that the encoding of both task variables and instantaneous movement was present throughout all areas (Figure 3K and 3L). However, the encoding of task variables exhibited an anterior-posterior gradient with highest strength in V1, intermediate in RSC, and lowest in area A, whereas movement encoding strength exhibited a gradient in the opposite direction (Figure 3M, 3N and S4A–S4I). Posterior cortex therefore had widespread encoding of both task variables and instantaneous movement, consistent with previous literature (Musall et al., 2019; Stringer et al., 2019), but with distinct quantitative gradients.
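The smoothed encoding maps (Gaussian filter, SD = 150 μm; Figure 3K) can be approximated by a Gaussian-kernel weighted average of per-neuron encoding values evaluated on a grid of cortical coordinates. This kernel-averaging formulation is an assumption about how the filter is applied to scattered neuron locations, and `smoothed_encoding_map` is a hypothetical name.

```python
import numpy as np

def smoothed_encoding_map(xy_um, values, grid_x_um, grid_y_um, sd_um=150.0):
    """Gaussian-kernel weighted average of per-neuron encoding values on a grid.

    xy_um: (n, 2) neuron coordinates in micrometres (e.g. after CCF registration).
    values: (n,) per-neuron encoding magnitude (fraction explained deviance).
    Returns an array of shape (len(grid_y_um), len(grid_x_um)).
    """
    xy_um = np.asarray(xy_um, dtype=float)
    values = np.asarray(values, dtype=float)
    gx, gy = np.meshgrid(grid_x_um, grid_y_um)       # grid point coordinates, (ny, nx)
    dx = gx[..., None] - xy_um[:, 0]                 # (ny, nx, n) offsets to each neuron
    dy = gy[..., None] - xy_um[:, 1]
    w = np.exp(-(dx**2 + dy**2) / (2 * sd_um**2))    # Gaussian weight of each neuron
    return (w * values).sum(axis=-1) / w.sum(axis=-1)
```

Dividing by the summed weights makes the map a local average rather than a local sum, so regions with dense sampling are not inflated. The (grid × neurons) weight array grows quickly for tens of thousands of neurons, so in practice the grid would be processed in chunks; grid points far from every neuron can underflow to zero weight and return NaN.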

Encoding of the visual cue identity was strongest in V1 and neighboring areas AM and PM, weaker in RSC, and weakest in area A (Figure 3O, S4J and S4K), which was corroborated by decoding cue identity from the activity of ~100 simultaneously recorded neurons (Figure 3Q). Cue encoding increased rapidly after cue onset and decreased after the cue disappeared, without major differences in the average time course between areas (Figure 3P). Variables related to the decision-making strategy, including their interactions with other task variables, collectively exhibited a moderate encoding strength distributed evenly across posterior cortex (Figure 3R and S4L–S4O). Strategy variables reflect a complex function of trial history, but we also examined direct representations of the previous trial’s cue, choice, and outcome (Akrami et al., 2018; Hattori et al., 2019; Hwang et al., 2019; Koay et al., 2021; Morcos and Harvey, 2016). The previous trial’s outcome was much more strongly encoded than the previous trial’s cue or choice, which may relate to the task demands imposed by rule-switching, and its encoding was evenly distributed over space (Figure S4P–S4S). In summary, whereas cue encoding showed a gradient with enrichment in visual areas, decision-making strategy had modulatory and widespread effects on activity in posterior cortex.

Encoding of dynamic choice was enriched in RSC and distinct from instantaneous movement

We next examined where and how choice was encoded across posterior cortex. We considered that, as mice traversed the maze, neural activity may be more related to dynamic choice than the eventual reported choice because dynamic choice approximates the actual time course of the mouse’s choice formation within a trial. Indeed, during traversal of the maze stem, encoding of dynamic choice was distributed across all areas but enriched in RSC and adjacent medial areas, accounting for a substantial amount of neural activity compared to near-zero encoding of reported choice (Figure 4A, 4B and S5A, S5D, S5E). In contrast, during the feedback and ITI periods, we observed greater encoding of reported choice than dynamic choice (Figure 4C and S5B–S5E). This is sensible since choice encoding during the feedback epoch is a memory of a recent decision, rather than an evolving variable linked to ongoing movements. The magnitude and spatial distribution of choice encoding were similar between the maze stem and feedback epochs, with a medial-to-lateral gradient distinct from cue and movement.

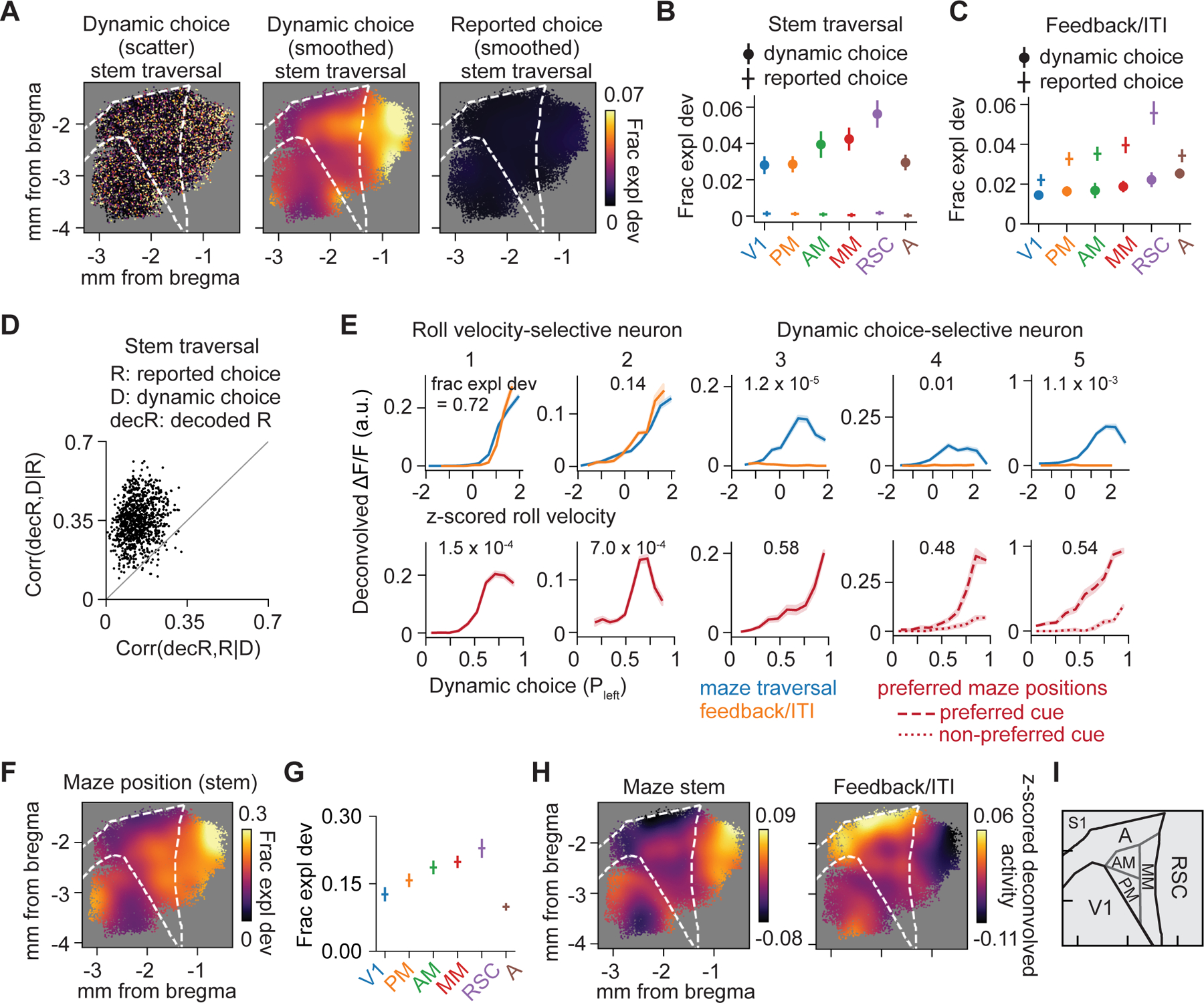

Figure 4. Encoding of choice and maze position.

(A) Encoding magnitude (left) and smoothed map of dynamic choice (middle) and smoothed map of reported choice (right) during stem traversal.

(B) Average encoding magnitude of dynamic choice and reported choice during stem traversal for 6 areas. All 6 areas: dynamic choice vs. reported choice, p < 10⁻³. Dynamic choice: RSC vs. V1, PM or A, p < 10⁻³; RSC vs. AM or MM, p > 0.05. Reported choice: each area vs. zero, p > 0.05.

(C) Same as (B), but in feedback period/ITI. All 6 areas: reported choice vs. dynamic choice, p < 10⁻³. Reported choice, RSC vs. each other area, p < 0.05.

(D) Partial correlation (Spearman) of decoded reported choice (decR) with dynamic choice (D), conditioned on reported choice (R) (bootstrap mean ± SEM, 0.35 ± 0.02) vs. partial correlation of decR with R, conditioned on D (bootstrap mean ± SEM, 0.14 ± 0.02) during stem traversal. Each point represents one population decoder consisting of ~100 nearby neurons. Mean difference between the two partial correlations is greater than 0 (bootstrap mean difference ± SEM, 0.21 ± 0.02, p < 10⁻³). n = 974 decoders.

(E) Tuning curves for roll velocity (top; plotted during maze traversal and feedback period/ITI) and dynamic choice (bottom; plotted at each neuron’s preferred maze position) for two roll velocity-selective neurons (neuron 1 and 2) and three dynamic choice-selective neurons (neuron 3–5). The GLM-derived encoding magnitude (fraction explained deviance) for that variable is indicated on each panel.

(F) Smoothed encoding map of maze position during stem traversal.

(G) Average encoding magnitude for maze position during stem traversal for 6 areas. RSC vs. V1, PM, or A, p < 10⁻³; RSC vs. AM or MM, p > 0.05.

(H) Smoothed maps of average z-scored deconvolved activity during the maze stem (left) and feedback period/ITI (right).

(I) Schematic of area parcellation.

Data and statistics in (B), (C), (G) are presented as hierarchical bootstrap mean ± SEM.

See also Figure S5.

Our finding of near-zero encoding of reported choice during stem traversal suggests posterior cortex contained little information about upcoming choice beyond that embodied in behavior. We more closely examined this by training neural decoders to predict the mouse’s reported choice based on population activity (Figure S5F) and examined whether reported choice decoding contained information that was not accounted for by dynamic choice. We calculated the partial correlation between the reported choice decoder’s output and either the reported choice it was designed to predict or the dynamic choice, conditioned on the value of the other. Partial correlations were greater for dynamic choice than reported choice, revealing that even decoders trained to predict reported choice were more closely related to dynamic choice (Figure 4D and S5G). Thus, choice-related activity in posterior cortex closely reflected the embodied process summarized by dynamic choice.
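The partial Spearman correlation in Figure 4D can be sketched by rank-transforming all three variables, regressing the conditioning variable's ranks out of the other two by least squares, and correlating the residuals. Average ranks are used because reported choice is binary and therefore heavily tied. This is one standard way to compute a partial Spearman correlation, shown under that assumption; it is not necessarily the exact implementation used, and the function names are hypothetical.

```python
import numpy as np

def average_ranks(x):
    """1-based ranks with ties assigned the average of their positions."""
    x = np.asarray(x, dtype=float)
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    upper = np.cumsum(counts)                  # highest rank within each tied group
    avg_rank = upper - (counts - 1) / 2.0      # mean rank of each tied group
    return avg_rank[inverse.ravel()]

def partial_spearman(a, b, c):
    """Spearman correlation between a and b after removing the linear effect of c on the ranks."""
    ra, rb, rc = (average_ranks(v) for v in (a, b, c))
    design = np.column_stack([np.ones_like(rc), rc])   # intercept + conditioning ranks

    def residualize(r):
        coef, *_ = np.linalg.lstsq(design, r, rcond=None)
        return r - design @ coef

    return np.corrcoef(residualize(ra), residualize(rb))[0, 1]
```

With decR as `a`, the comparison in Figure 4D corresponds to `partial_spearman(decR, D, R)` versus `partial_spearman(decR, R, D)`.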

Our finding of distinct encoding gradients for instantaneous movement and dynamic choice may seem surprising given that dynamic choice was derived from running trajectories. To clarify the distinction, we generated model-free tuning curves for single neurons identified by the GLM as selective to left-right running (roll velocity) or dynamic choice (Figure 4E and S5H). The activity of roll velocity-selective neurons (neurons 1 and 2) closely tracked instantaneous movement, importantly, during both maze traversal and the feedback period/ITI, and their activity was thus somewhat correlated with dynamic choice. Dynamic choice-selective neurons had weak and inconsistent activity correlations with roll velocity and were instead most active at specific positions in the maze with strong choice (neuron 3) and often cue (neurons 4 and 5) selectivity. Dynamic choice encoding, and its enrichment in RSC, was not explained by more complex or temporally integrated movement encoding (Figure S5I–S5N).

Because choice in this task corresponded to a navigation goal in the maze, we also considered whether RSC and medial areas encoded other navigation-related signals. Encoding of maze position was present in all areas, but strongest in RSC, with a similar medial-to-lateral gradient as choice encoding (Figure 4F and 4G). RSC was also most active in the maze stem and least active during feedback and ITI periods, when area A was more active (Figure 4H and S5O). Together, these differences justify a distinction between the anterior-posterior movement encoding gradient and a medial-lateral choice and position encoding gradient.

Encoding of most variables is highly distributed

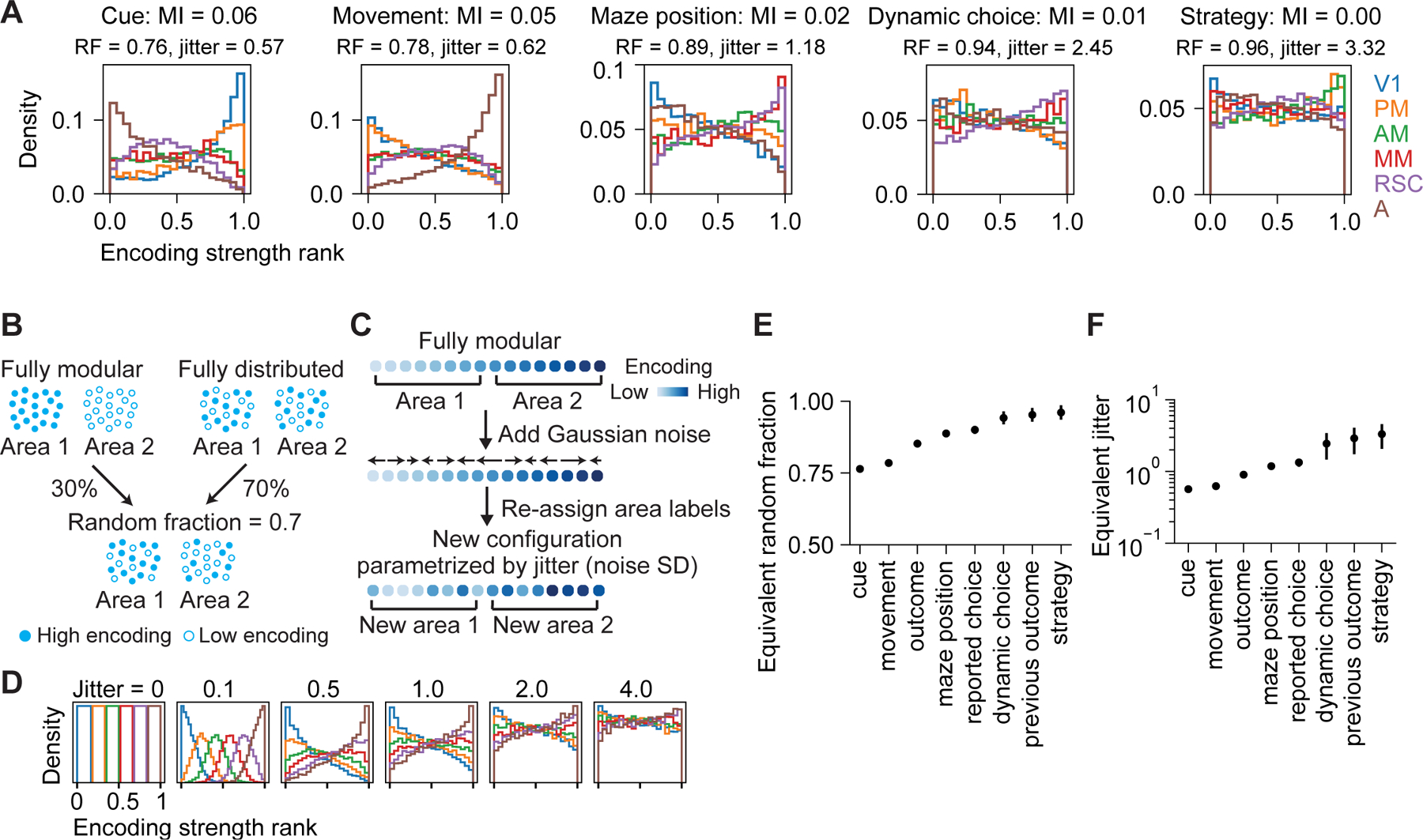

Our results show that specializations in encoding coincide with widely distributed encoding, and we sought to quantify where each variable’s encoding lies on the spectrum of distributedness. We first quantified the mutual information between encoding strength and area identity, with higher values indicating encoding that is more modular, i.e., specialized to specific locations in posterior cortex (Figure 5A and S6A). We then compared these values to two intuitive models that we used to generate synthetic encoding strength distributions spanning the entire spectrum from “fully distributed” to “fully modular” (Figure 5B–5D; STAR Methods – Quantification of distributedness). One model mixed encoding strengths from modular and distributed distributions according to a “random fraction”, while the other added Gaussian “jitter” to a modular encoding strength distribution. By varying each model’s underlying parameter to produce encoding strength distributions with the same mutual information as the empirical data (Figure S6B and S6C), we obtained “equivalent” random fraction and jitter parameters, which summarize the distributedness of encoding for a single variable along an intuitive quantitative spectrum.
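The matching procedure for the random-fraction model can be sketched as below. This is a toy reconstruction under stated assumptions, not the STAR Methods implementation: encoding strength is discretized into 10 quantile bins, mutual information is normalized with scikit-learn's `normalized_mutual_info_score` (default arithmetic normalization), the "fully modular" configuration assigns each of K areas its own strength interval [k/K, (k+1)/K), and the equivalent random fraction is found by grid search. All function names are hypothetical.

```python
import numpy as np
from sklearn.metrics import normalized_mutual_info_score


def binned_nmi(strength, area, n_bins=10):
    """Normalized MI between area labels and quantile-binned encoding strength."""
    edges = np.quantile(strength, np.linspace(0, 1, n_bins + 1)[1:-1])
    return normalized_mutual_info_score(area, np.digitize(strength, edges))


def random_fraction_model(area, f, rng):
    """Toy encoding strengths in [0, 1) for neurons with integer area labels.

    Fully modular: area k of K draws its strength uniformly from [k/K, (k+1)/K).
    Fully distributed: every neuron draws uniformly from [0, 1).
    Each neuron is drawn from the distributed version with probability f.
    """
    n_areas = area.max() + 1
    modular = (area + rng.random(area.size)) / n_areas
    distributed = rng.random(area.size)
    return np.where(rng.random(area.size) < f, distributed, modular)


def equivalent_random_fraction(strength, area, grid=None, seed=0):
    """Grid value of f whose toy model best matches the empirical NMI."""
    grid = np.linspace(0, 1, 51) if grid is None else grid
    rng = np.random.default_rng(seed)
    target = binned_nmi(strength, area)
    model_nmi = np.array([binned_nmi(random_fraction_model(area, f, rng), area) for f in grid])
    return float(grid[np.argmin(np.abs(model_nmi - target))])
```

Under these assumptions, an equivalent random fraction near 1 means the empirical NMI is about what a fully distributed layout would give. A jitter-based model would plug into the same matching step in place of `random_fraction_model`.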

Figure 5. Distributedness of encoding across posterior cortical areas.

(A) Distribution of encoding strength rank of single neurons in 6 areas for various variables. MI, normalized mutual information between encoding strength and area identity; RF: equivalent random fraction; jitter, equivalent jitter; see (B) and (C).

(B) Schematic of toy models generated by mixing fully modular and fully distributed configurations with random fraction = 0.7.

(C) Schematic of toy models generated by perturbing the encoding strength rank of the fully modular configurations by adding Gaussian noise (parametrized by jitter, or Gaussian noise SD) to the rank.

(D) Distribution of encoding strength rank for toy models in (C) generated with different jitter values.

(E-F) Equivalent random fraction and jitter for various variables. Bootstrap mean ± SEM.

See also Figure S6.

Cue and movement were the two variables with the highest mutual information between encoding strength and posterior cortical area. However, their equivalent random fractions were greater than 0.75, implying that the representations were closer to “fully distributed” (random fraction = 1) than “fully modular” (random fraction = 0) (Figure 5E). Similarly, their equivalent jitters were near 0.6, which is large relative to the normalized encoding strength range of 0–1 (Figure 5F). The encoding of maze position and choice was even more distributed than that of cue and movement, with equivalent random fractions near 0.9. All other variables were also highly distributed, with decision-making strategy being the most distributed (random fraction near 1). Because all variables were closer to a fully distributed than a fully modular organization, these results are consistent with studies showing distributed movement encoding across mouse cortex. Interestingly, however, many cognitive variables were even more widely distributed than movement, at least within posterior cortex.

Single-neuron encoding profiles confirm functional gradients and distributed representations

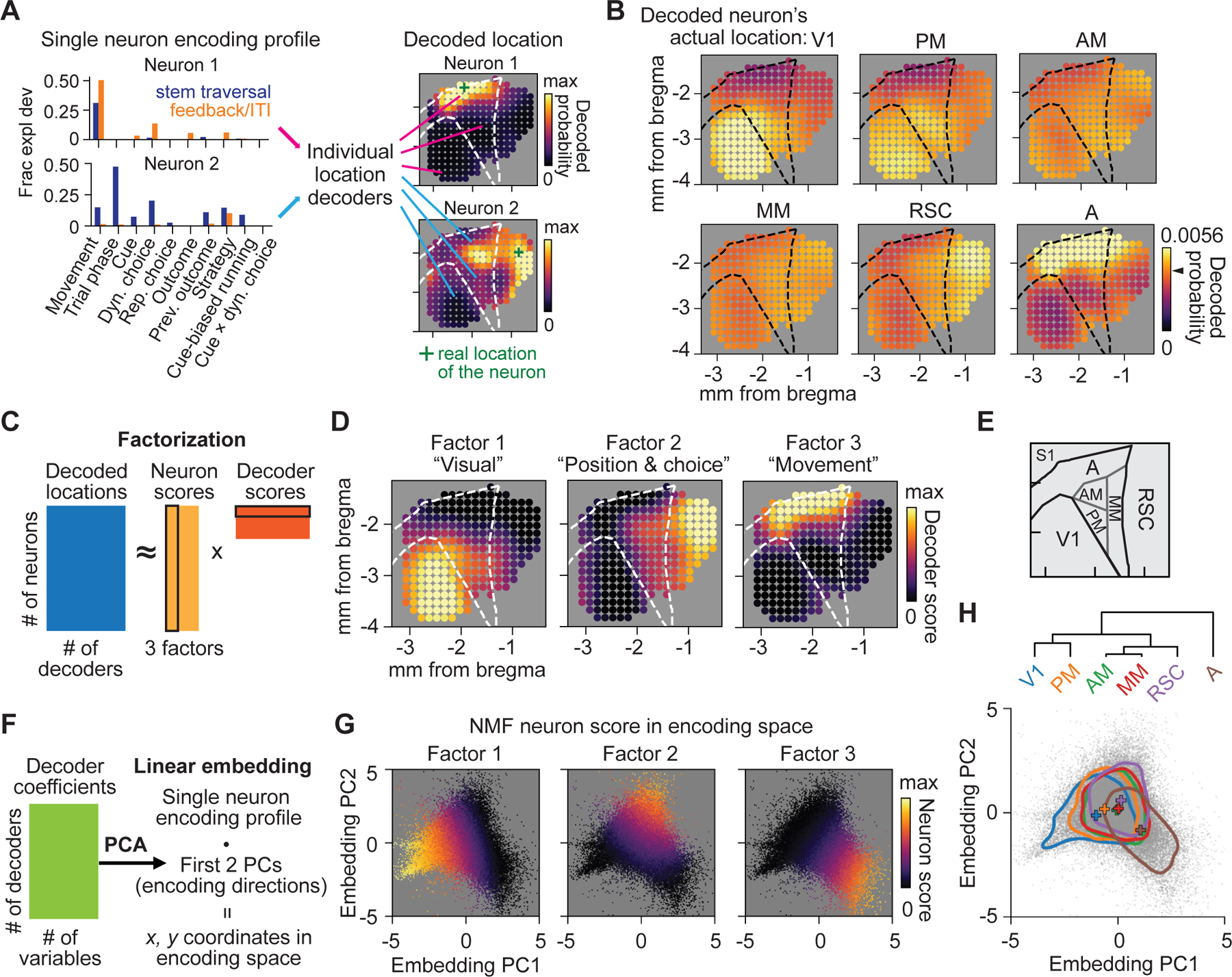

We have so far analyzed the distributedness of encoding only for single variables, but it is possible that a more modular picture of cortical organization emerges if we consider multiple variables at once. We designed analyses to identify the patterns of encoding that best distinguish between cortical areas when considering multiple variables and to visualize the distributedness of these patterns across posterior cortex. We first identified location-informative encoding by training decoders to predict the probability that each neuron was located at each site in a grid across cortex based on the neuron’s encoding strengths for multiple variables, which we termed a neuron’s “encoding profile” (Figure 6A, S6E and S6F). Neurons in V1, A, and RSC were typically predicted to reside in their actual areas of origin, indicating that these neurons had distinctive encoding profiles, in contrast to neurons from AM and MM that were predicted to reside in all sites across posterior cortex (Figure 6B).

Figure 6. Distinct spatial gradients of encoding in posterior cortex.

(A) Examples of decoding cortical locations from GLM-derived encoding profiles of single neurons.

(B) For all neurons in one of 6 areas, the average decoded probability distribution of a neuron’s location over posterior cortex. Chance level is 0.0041 (1/number of location decoders; black arrow on the color bar).

(C) Schematic of the non-negative matrix factorization (NMF) of the decoded locations of all neurons.

(D) NMF decoder scores plotted spatially for each non-negative factor.

(E) Schematic of area parcellation.

(F) Schematic of embedding of single neuron encoding profiles.

(G) All neurons embedded in the encoding space, colored with the NMF neuron scores for each factor.

(H) Top: dendrogram showing hierarchical clustering of 6 areas by centroid locations. Bottom: Summary of distribution of neurons in 6 areas in the encoding space. Colored lines, contours at 25% of the peak density; plus signs, centroid locations.

See also Figure S6.

To identify the most significant spatial differences in encoding profiles across posterior cortex, we applied low-rank factorization to the predictions of these decoders – the probability that a neuron resided at each anatomical site based on its encoding profile (Figure 6C; STAR Methods – Non-negative matrix factorization of decoded locations). Three non-negative factors dominated the factorization (Figure S6I), each representing an encoding profile most typical to a set of distinct locations. Strikingly, these factors formed three distinct spatial gradients that dominated the anatomical organization of encoding with peaks in V1, RSC and A, respectively (Figure 6D). Although each factor’s encoding profile had contributions from diverse task and behavior variables, cue was greatest in factor 1, maze position and choice were greatest in factor 2, and movement was greatest in factor 3 (Figure S6G).
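The factorization step can be sketched with scikit-learn's `NMF`. This is a minimal illustration assuming the input is a (neurons × locations) matrix of decoded location probabilities and that a 3-factor Frobenius-norm NMF is used; the solver settings and the function name `factorize_decoded_locations` are assumptions, not taken from the STAR Methods.

```python
import numpy as np
from sklearn.decomposition import NMF


def factorize_decoded_locations(prob, n_factors=3, seed=0):
    """Factorize a (neurons x locations) matrix of decoded location probabilities.

    Returns neuron scores W (neurons x factors) and spatial maps H
    (factors x locations) such that prob is approximately W @ H,
    with all entries non-negative.
    """
    model = NMF(n_components=n_factors, init="nndsvda", max_iter=1000, random_state=seed)
    W = model.fit_transform(prob)   # how strongly each neuron expresses each factor
    H = model.components_           # each row: one factor's map over cortical locations
    return W, H
```

Each row of `H`, reshaped to the imaging grid, would correspond to one spatial map as in Figure 6D, and the columns of `W` to the per-neuron factor scores used to color neurons in Figure 6G.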

Although factorization revealed encoding profiles enriched in distinct areas, the actual locations of neurons exhibiting these encoding profiles were distributed throughout all areas (Figure S6H). To visualize the heterogeneity of encoding, we developed a linear embedding of each neuron’s encoding profile based on dimensionality reduction of the learned location decoder coefficients across all cortical locations (Figure 6F and S6J; STAR Methods – Linear embedding of single neuron encoding properties). This embedding positioned neurons nearby that, based on encoding profiles, were predicted to be in similar anatomical locations. The embedding mapped neurons onto a triangular manifold, corresponding closely to the representative encoding profiles of the three factors (Figure 6G and S6K). Neurons from individual cortical areas formed continuous, overlapping distributions in embedded space (Figure 6H and S6L). Although the centroids of the distributions (i.e. most typical encoding profiles) were offset from one another, many neurons were functionally closer to the centroid of a different area than to the centroid of the area where they resided. Our analyses showed how specialization and distributed representation coexist in posterior cortex: while V1, RSC, and A each contained neurons whose encoding profiles were mostly unique to each area, corresponding to the peaks of three spatial gradients, the majority of neurons in all areas had encoding profiles that could occur in any area.
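One way to realize such a linear embedding is sketched below, under explicit assumptions: each cortical location has a hypothetical linear decoder whose weights over the encoding variables form one column of `coef`, so a neuron's location logits are `coef.T @ profile`. Subtracting the mean weight vector across locations leaves only the part of a profile that changes the relative predictions between locations, and the top singular vectors of that centered matrix give a low-dimensional basis in which neurons with similar predicted locations land near each other. This is an illustrative sketch, not the STAR Methods procedure.

```python
import numpy as np


def embed_profiles(profiles, coef, n_dims=2):
    """Project encoding profiles onto the main axes of location-decoder weights.

    profiles: (neurons, variables) encoding strengths.
    coef: (variables, locations) hypothetical linear decoder weights,
          one column per cortical location.
    Returns (embedding of shape (neurons, n_dims), orthonormal basis).
    """
    # Remove the weight vector shared by all locations: it shifts every
    # location's logit equally and so carries no location information.
    centered = coef - coef.mean(axis=1, keepdims=True)
    U, _, _ = np.linalg.svd(centered, full_matrices=False)
    basis = U[:, :n_dims]  # (variables, n_dims), orthonormal columns
    return profiles @ basis, basis
```

Because of the centering, adding the same offset to every location's decoder leaves the embedding unchanged (up to the sign of each axis).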

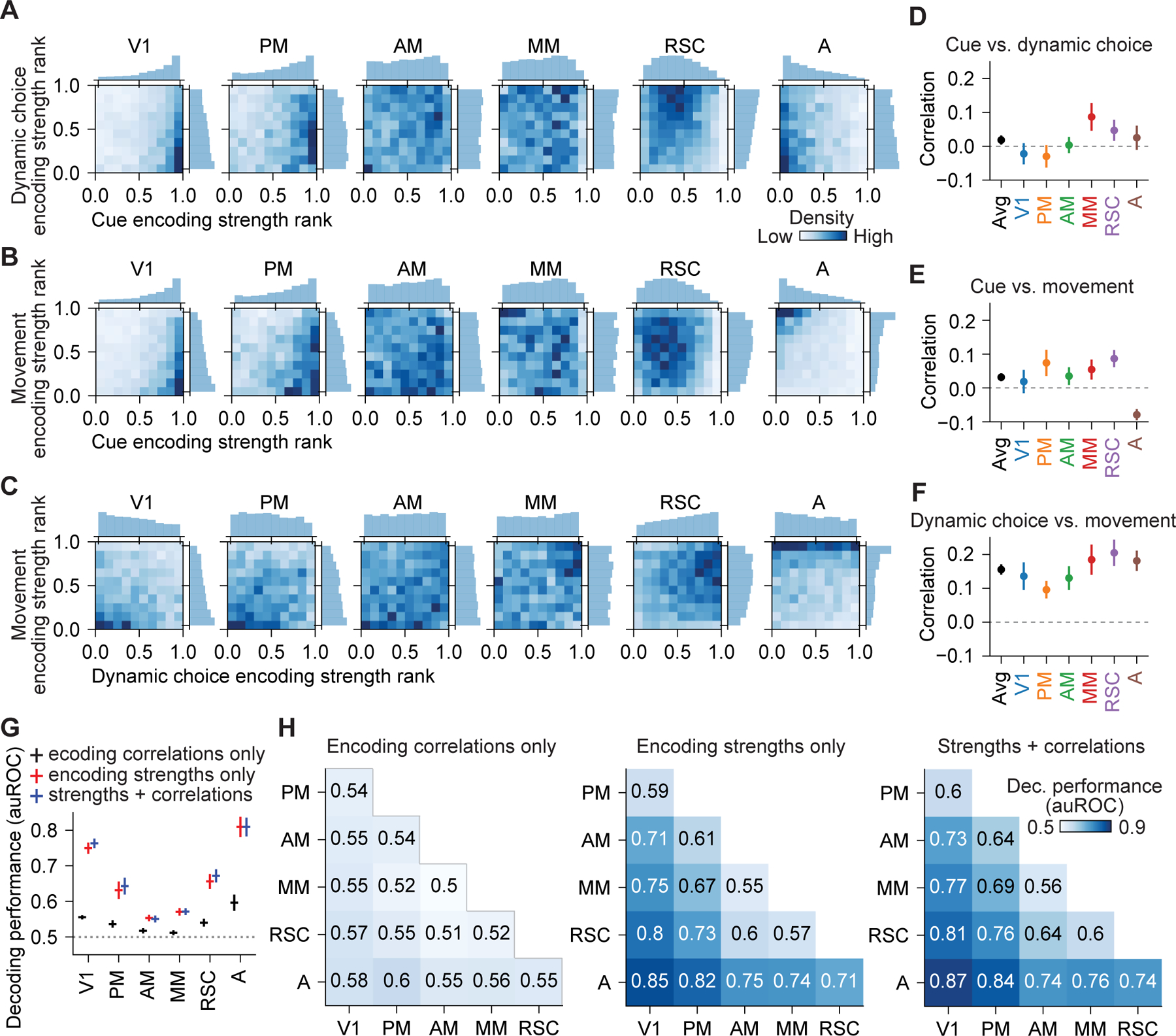

Conjunctive structure of encoding in single neurons is similar across posterior cortex

The analyses of the encoding profiles of neurons revealed how different variables’ encoding strengths varied across cortical space, but they do not specify the higher-order principles by which variables are combined in single neurons, or conjunctive structure. Conjunctive structure may indicate computational functions that differ across posterior cortical space beyond those revealed in encoding strengths alone. For example, an area which integrates current movement with previous position to estimate present position should exhibit single neurons whose activity is modulated by both movement and position. In contrast, another area could encode these same variables with similar encoding strength, but with different variables preferentially encoded by separate neurons and relayed to distinct downstream targets. We therefore consider conjunctive structure a key component of cortical organization and examined whether such structures are similar or different across posterior cortical areas, which we refer to respectively as “generic” or “specialized” integration.

We first examined conjunctive coding in single neurons by visualizing joint histograms of the encoding strength rank for pairs of variables (Figure 7A–7C and S7A–S7C). We observed a wide distribution in how much individual cells encoded both variables, including cells that showed prominent conjunctive coding. Differences between joint histograms for each area were apparent, but mostly reflected the different marginal distributions of encoding strength in each area. To isolate conjunctive structure, we measured the correlation in encoding strength for both variables in single neurons within each area. A positive correlation indicates the variables are conjunctively encoded in single neurons, a correlation near zero reveals the encoding strength for one variable in a neuron is uninformative of encoding for other variables, and a negative correlation means the variables are encoded in distinct neurons. Strikingly, the correlations in encoding between pairs of cue, choice, and movement variables were similar across areas, despite major differences in the encoding strengths of these variables between areas (Figure 7D–7F and S7D–S7H). In addition, the correlation coefficients were close to zero, indicating near-random mixing of the variables within each area, which includes some neurons that conjunctively encode both variables.
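The per-area encoding correlation can be sketched as follows. This simplified version resamples neurons with replacement within each area (a flat bootstrap), whereas the figures report a hierarchical bootstrap; the function name `encoding_correlation` is hypothetical.

```python
import numpy as np


def encoding_correlation(strength_a, strength_b, area, n_boot=1000, seed=0):
    """Per-area Pearson correlation between two variables' encoding strengths.

    Returns {area: (bootstrap mean, bootstrap SEM)}. Neurons are resampled
    with replacement within each area (flat, not hierarchical, bootstrap).
    """
    rng = np.random.default_rng(seed)
    out = {}
    for a in np.unique(area):
        x, y = strength_a[area == a], strength_b[area == a]
        idx = rng.integers(0, x.size, size=(n_boot, x.size))  # one resample per row
        boots = np.array([np.corrcoef(x[i], y[i])[0, 1] for i in idx])
        out[a] = (boots.mean(), boots.std(ddof=1))  # SD of bootstrap means = SEM
    return out
```

A positive value indicates conjunctive encoding in single neurons, a value near zero indicates that one variable's encoding strength is uninformative about the other's, and a negative value indicates the variables are carried by separate neurons.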

Figure 7. Quantification of encoding correlations showed generic integration.

(A) Joint and marginal distributions for encoding strength rank of cue and dynamic choice during stem traversal in 6 areas.

(B) Same as (A), but for cue and movement.

(C) Same as (A), but for dynamic choice and movement.

(D) Pearson correlation between the encoding strength of cue and dynamic choice during stem traversal for neurons in 6 areas. Bootstrap mean ± SEM. Correlations in all areas were not significantly different from one another (p > 0.05).

(E) Same as (D), but for cue and movement. A vs. each other area, p < 0.013, whereas correlations in other 5 areas were not significantly different from each other (p > 0.05).

(F) Same as (D), but for dynamic choice and movement. Correlations in all areas were not significantly different from one another (p > 0.05).

(G) Decoding performance for one-vs.-others decoders that distinguished neurons in each of the 6 areas from neurons in all other areas based on encoding correlations only, encoding strengths only, and both, during stem traversal. Mean ± SEM with leave-one-mouse-out procedure. All decoding was above chance (p < 0.05), except encoding correlations only for PM and AM. Encoding strengths only vs. encoding correlations only: p < 0.05 in all areas except for AM. Encoding strengths only vs. strengths + correlations: p > 0.03 for all areas, not significant after multiple comparison correction. Wilcoxon signed-rank test.

(H) Decoding performance for pairwise decoders that distinguished neurons in a pair of areas during stem traversal.

See also Figure S7.

We then more thoroughly searched for differences in conjunctive structure by decoding the area a neuron resided in based on either the encoding strengths of variables or the encoding correlations between pairs of variables. The ability to decode a neuron’s location was dominated by the encoding of individual variables rather than the encoding correlations between variables (Figure 7G, 7H and S7H, S7I). Although decoding based on encoding correlations allowed above-chance performance, it was poor relative to decoding from encoding strengths alone. Further, decoding with both encoding correlations and encoding strengths was barely improved from decoding with encoding strengths alone. Therefore, differences in conjunctive structure between areas were not substantial, and posterior cortex exhibited generic integration across areas, rather than specialized integration in different areas.

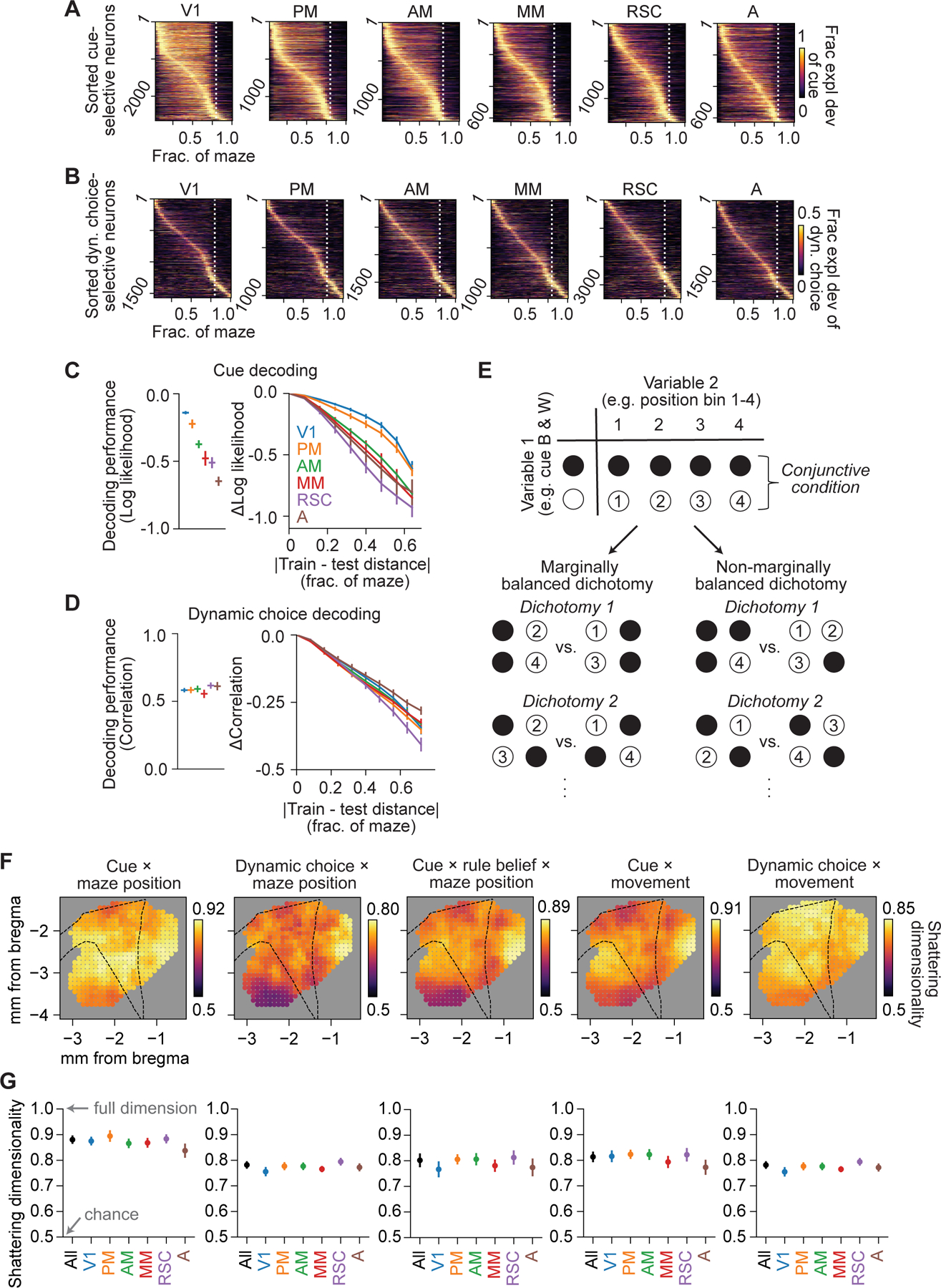

Flexible, high-dimensional representations in posterior cortex

Having observed generic integration in the conjunctive structure of single-neuron encoding strengths, we next considered whether similar results would hold at the population level of representational geometry. Nonlinear mixing of variables in single neurons can create high-dimensional population representations, which provide downstream areas with flexibility and specificity in responding to task conditions (Fusi et al., 2016; Rigotti et al., 2013). As one example, we observed cue- and choice-selective sequences of activity during navigation (Harvey et al., 2012; Koay et al., 2021) (Figure 8A and 8B). These represent a mixing of tuning for position and cue or choice in individual neurons, leading to a high-dimensional representation, as decoders trained to predict cue or choice at one maze position degraded in accuracy when tested at other maze positions (Figure 8C and 8D). The high-dimensional representation for cue and choice with position would allow linear downstream readouts to trigger relevant actions at specific maze positions, dependent on a given cue or choice.
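The train-at-one-position, test-at-another analysis can be illustrated on simulated sequence data. Everything here is an assumption for illustration: binary cue labels, Gaussian place fields gated by a preferred cue, logistic-regression decoders, and a single random train/test split of trials. The paper's decoders used ~100 nearby neurons and reported log-likelihood, which this sketch does not reproduce.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression


def simulate_sequences(n_pos=10, n_trials=200, n_neurons=100, seed=0):
    """Toy cue-selective sequences: each neuron fires near one position for one cue."""
    rng = np.random.default_rng(seed)
    cue = rng.integers(0, 2, n_trials)
    pref_pos = rng.integers(0, n_pos, n_neurons)
    pref_cue = rng.integers(0, 2, n_neurons)
    pos = np.arange(n_pos)[:, None]
    field = np.exp(-0.5 * ((pos - pref_pos) / 0.7) ** 2)        # (positions, neurons)
    gate = (cue[:, None] == pref_cue[None, :]).astype(float)     # (trials, neurons)
    noise = 0.3 * rng.normal(size=(n_pos, n_trials, n_neurons))
    return field[:, None, :] * gate[None, :, :] + noise, cue     # X: (positions, trials, neurons)


def cross_position_accuracy(X, y, train_frac=0.5, seed=0):
    """acc[i, j]: accuracy of a decoder trained at position i, tested at position j."""
    n_pos, n_trials, _ = X.shape
    perm = np.random.default_rng(seed).permutation(n_trials)
    n_train = int(train_frac * n_trials)
    tr, te = perm[:n_train], perm[n_train:]                      # held-out trials for testing
    acc = np.zeros((n_pos, n_pos))
    for i in range(n_pos):
        clf = LogisticRegression(max_iter=1000).fit(X[i, tr], y[tr])
        for j in range(n_pos):
            acc[i, j] = clf.score(X[j, te], y[te])
    return acc
```

In this toy setting the diagonal of `acc` is near 1 and accuracy falls toward chance as the train-test distance grows, because different neurons carry the cue at different positions.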

Figure 8. High dimensional representation of conjunctive variables across posterior cortex.

(A) Fraction explained deviance of cue for the top 25% cue-selective neurons across all cells, separated in 6 areas and sorted by peak location.

(B) Same as (A), except the top 25% of dynamic choice-selective neurons.

(C) Left: average decoding performance for cue based on populations of ~100 nearby neurons for 6 areas, quantified as log likelihood with log base 2. Right: change in decoding performance as a function of the distance between maze positions of the data that the decoders were trained on and tested on (restricted to positions where cue was present). n = 698 decoders.

(D) Same as (C), but for decoding performance of dynamic choice, quantified as the Spearman correlation between decoded and real values. n = 974 decoders.

(E) Schematic for identifying marginally balanced dichotomies over conjunctive conditions formed by a pair of variables.

(F) Spatial maps of shattering dimensionality (average decoding accuracy over all marginally balanced dichotomies) during stem traversal. Each dot indicates a population of 1000 nearby neurons centered on that cortical location.

(G) Shattering dimensionality based on populations of 1000 neurons subsampled from all neurons and each of the 6 areas. All datapoints were not significantly different from one another (p > 0.01, not significant after multiple comparison correction).

Data and statistics in (C), (D), (G) are presented as hierarchical bootstrap mean ± SEM.

See also Figure S8.

We compared the dimensionality of population representations across posterior cortex by adapting recent techniques to measure “shattering dimensionality” for a range of conjunctions including cue, choice, position, movement, and rule belief (Bernardi et al., 2020). Shattering dimensionality refers to the fraction of arbitrary groupings of task conditions that a linear decoder of population activity can discriminate between, which approaches one as the dimensionality of neural encoding approaches the dimensionality of task conditions. We generated conjunctive task conditions for a combination of variables by dividing each variable into discrete bins and averaged neural activity for each combination of bins across trials for pseudo-populations across posterior cortex. Dichotomies of conjunctive conditions with unbalanced marginal distributions were discarded, so that shattering dimensionality was quantified as decoding accuracy on only “marginally balanced” dichotomies, to strictly measure the nonlinear conjunctive coding rather than individual variable encoding strengths (Figure 8E; STAR Methods – Quantification of shattering dimensionality for conjunctive variables).
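A minimal sketch of shattering dimensionality restricted to marginally balanced dichotomies is shown below. Assumptions: conditions are all combinations of discrete bins, a dichotomy is kept only if each bin of each variable appears equally often on both sides, and each dichotomy is scored by 5-fold cross-validated logistic regression on single trials (the paper instead averaged activity across trials for pseudo-populations). Function names are hypothetical, and exhaustive enumeration is only practical for small numbers of conditions.

```python
import itertools

import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score


def balanced_dichotomies(levels):
    """Marginally balanced dichotomies of all conjunctive conditions.

    levels: number of bins per variable, e.g. (2, 2).
    Returns (conditions, list of sets of condition indices on one side).
    """
    conds = list(itertools.product(*[range(n) for n in levels]))
    n = len(conds)
    out = []
    for side in itertools.combinations(range(n), n // 2):
        if 0 not in side:  # count each {side, complement} pair once
            continue
        other = [c for c in range(n) if c not in side]
        balanced = all(
            sorted(conds[c][v] for c in side) == sorted(conds[c][v] for c in other)
            for v in range(len(levels))
        )
        if balanced:
            out.append(set(side))
    return conds, out


def shattering_dimensionality(X, cond_idx, dichotomies, cv=5):
    """Mean cross-validated linear decoding accuracy over the given dichotomies.

    X: (trials, neurons) activity; cond_idx: (trials,) condition index per trial.
    """
    accs = []
    for side in dichotomies:
        y = np.isin(cond_idx, list(side)).astype(int)
        accs.append(cross_val_score(LogisticRegression(max_iter=1000), X, y, cv=cv).mean())
    return float(np.mean(accs))
```

For two binary variables the only marginally balanced dichotomy is the XOR split, which is linearly decodable only if neurons mix the variables nonlinearly; purely additive (linear) mixing keeps it near or below 75%.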

Shattering dimensionality for all conjunctions in all areas was well above chance, and interestingly, shattering dimensionality for each conjunction was similar across posterior cortex (Figure 8F, 8G and S8A–S8C). Almost all differences between areas were not statistically significant and fell within a range of < 10% classification accuracy for each conjunction (Figure 8G). Also, pseudo-populations including neurons from all areas exhibited nearly identical shattering dimensionality as when including only individual areas. Thus, for a range of conjunctions of variables, similar high-dimensional codes were present across posterior cortex, consistent with the generic integration scheme, and in contrast with significant differences in the encoding strength of individual variables. For example, cue and maze position conjunctions were decoded well by populations in V1, RSC, and A, despite cue and maze position encoding being strongest in V1 and RSC, respectively, and weak in A. Our results suggest that previously described neural sequences are examples of a more general function of posterior cortex, which may integrate diverse variables into a distributed, high-dimensional representation of task and behavioral state, while individual areas are specialized to handle inputs of different modalities.

Discussion

We observed that posterior cortical areas differed in the quantitative degree to which they encoded variables, not which variables they encoded, and surprisingly not the way variables are combined in single neurons or the resulting population geometry of conjunctive representation. This organization is poorly fit to the common notion of a “functional hierarchy”. Association areas (e.g. A, RSC) did not exhibit more complex representations than sensory areas (e.g. V1), and encoding of all variables was highly distributed, particularly for more abstract quantities like decision-making strategy variables. These results also argue against a “specialized integration” principle, in which areas generate distinct combinations of variables to subserve distinct computations. Instead, during flexible navigation decisions, our results point to a parallel organization of posterior cortex based on generic integration. While posterior cortical areas have differential enrichment in the modalities of information they process, all areas share a high-dimensional code for relevant task and behavioral variables. We caution that our analyses do not capture the conjunctions of behavior or task variables that were not measured in our task or modeled by the GLMs, and thus it is possible that posterior cortical specialization could be greater for different variables and tasks. However, in contrast to prevalent theories which conceive of cortical areas performing modular and hierarchically organized computations, the functional organization we observed suggests new hypotheses for the role of posterior cortex in navigation decisions.

In the task studied here, determining the next action to take at any moment depends on a combination of many variables, including visual cues, current position, goal location, internal rule estimate, and ongoing movement. We have shown that many parts of posterior cortex represent this high-dimensional state of variable conjunctions, which provides linear downstream readouts great flexibility and specificity in selecting appropriate actions, such as “run left at the Y-intersection when seeing the black cue during Rule A”. It is possible that identifying relevant combinations of variables and generating a corresponding representation of the behavioral and environmental state is a primary function of posterior cortex in guiding navigation decisions. In this case, areas may be specialized to integrate different signal modalities into a distributed, general high-dimensional state representation, which is then available to a range of circuits downstream of posterior cortex. This hypothesis has similarities to theories that cortex performs unsupervised learning on its inputs (Doya, 1999) and the machine learning concept of representation learning (Xie et al., 2020). In addition, results from the inactivation of PPC and RSC in this and previous studies are consistent with the notion that downstream areas use the posterior cortex’s state representation to guide navigation decisions, instead of PPC and RSC having a direct role in sensory perception or motor control, as inhibiting these areas eliminates associations between cue and choice without disrupting the mouse’s ability to locomote, or to perceive and respond to visual stimuli (Arlt et al., 2021; Harvey et al., 2012; Pinto et al., 2019). 
Furthermore, across studies, PPC and RSC have been shown to be necessary for a variety of decision tasks that lack common computational requirements (Akrami et al., 2018; Arlt et al., 2021; Hwang et al., 2017; Lyamzin and Benucci, 2019), consistent with these areas participating in a general-purpose state representation. It is likely this state representation coexists with localized and specialized computations that differ across posterior cortex. However, it is interesting to speculate that diverse cognitive functions proposed for posterior cortex across tasks, such as evidence accumulation or maintaining trial history of task variables, might be task-specific computations which contribute to the synthesis of a task-appropriate state representation.

The functional specializations we observed as encoding gradients, along with the great extent of conjunctive coding, are consistent with much prior work that investigated one or two areas at a time. The enrichment of visual signals in V1 and PM is consistent with studies mapping visual representations in posterior cortex, and the presence of spatial and motor information in these areas is in agreement with recent results showing a surprising degree of non-visual signals in V1 (Fiser et al., 2016; Keller et al., 2012; Parker et al., 2020; Saleem et al., 2018; Shuler and Bear, 2006; Stringer et al., 2019; Zmarz and Keller, 2016). The enrichment of choice and position information in RSC is consistent with its well-characterized role in navigation, and the conjunctions of sensory, movement, position, and choice variables are in line with previous work (Alexander and Nitz, 2015; Bicanski and Burgess, 2016; Cho and Sharp, 2001; Fischer et al., 2020; Hinman et al., 2018; Keshavarzi et al., 2021; Mao et al., 2020). Our work highlights an important role of RSC in encoding dynamic choice, which is closely related to representation of navigation goals (Miller et al., 2019; Vale et al., 2020). The enrichment of encoding of navigation-relevant movement in area A relates to its identified roles in representing postures and self-motion in rodents (Mimica et al., 2018; Whitlock et al., 2012), and to results in primates that suggest PPC contributes to movement intention and planning (Andersen and Cui, 2009; Desmurget et al., 2009; Hanks et al., 2006; Roitman and Shadlen, 2002; Thier and Andersen, 1998). However, this result is perhaps surprising given that area A is sometimes considered to be a secondary visual area (Wang and Burkhalter, 2007; Wang et al., 2020). Our findings are also consistent with our previous study identifying distributed encoding of tens of task and behavior-related features in posterior cortex during a visually guided locomotion task (Minderer et al., 2019).
Because that study did not explicitly quantify the number of distinct spatial gradients, here we showed that variability in tuning across cortical space was mostly captured by three functional modes (Figure 6D; see also STAR Methods – Analysis of dimensionality of encoding across neurons versus encoding across cortical space).

One critical feature of our approach was utilizing a model to extract a continuously evolving estimate of the animal’s decision from its running trajectory (dynamic choice). This was possible because navigation decisions were executed by continuous movement over many seconds, during which choice could evolve and influence the ongoing navigation trajectory of the mouse. Notably, similar embodiment of cognitive processes has been observed across diverse species and laboratory tasks (Kaufman et al., 2015; Lakshminarasimhan et al., 2020; Pinto et al., 2018; Redish, 2016; Resulaj et al., 2009; Song and Nakayama, 2009). Previous work has suggested that heading angle during navigation may predict neural activity better than (reported) choice (Krumin et al., 2018), and that it may reflect an accumulation of evidence (Pinto et al., 2018). Since heading angle was constant throughout maze traversals in our task by design, we believe these findings can be explained alternatively by neural representations for a continuously evolving choice signal that is tightly coupled to behavioral output. We note this relationship is sensitive to the incentives created by task design, as some studies have observed neural encoding of upcoming choice without substantial accompanying behavioral embodiment (Harvey et al., 2012). However, more generally, we anticipate that modeling behavioral outputs to infer cognitive processes will prove fruitful, especially in tasks with increasing complexity and unconstrained behaviors (Brunton et al., 2013; Havenith et al., 2018, 2019; Lakshminarasimhan et al., 2018; Rosenberg et al., 2021; Roy et al., 2021).

Our work uncovers an organizing principle for posterior cortex and proposes a functional role for it in flexible, goal-directed navigation. A major direction for future work will be to understand what aspects of the representations studied here arise within posterior cortex or are inherited from other regions. This important direction regarding the functional organization of representation versus computation can potentially be addressed with functional imaging at synaptic and dendritic levels, simultaneous recording of multiple brain areas, labeling neurons based on projection targets, and monitoring changes in neural activity during targeted perturbation of neural populations.

STAR METHODS

RESOURCE AVAILABILITY

Lead Contact

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Christopher Harvey (harvey@hms.harvard.edu).

Material Availability

This study did not generate new unique reagents.

Data and Code Availability

Calcium imaging and mouse behavioral data reported in this paper will be shared by the lead contact upon request.

The original code for fitting generalized linear models has been deposited at GitHub and is publicly available as of the date of publication. The DOI is listed in the Key Resources Table.

Other code to run all analyses and produce all figures in this paper will be shared by the lead contact upon request.

Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

KEY RESOURCES TABLE

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Bacterial and virus strains | | |
| AAV2/1-synapsin-GCaMP6s-WPRE-SV40 | UPenn Vector Core | Catalog No: AV-1-PV2824 |
| Deposited data | | |
| Allen Mouse Common Coordinate Framework | Allen Institute for Brain Science | http://help.brain-map.org/display/mousebrain/Documentation |
| Retinotopic field sign maps | Allen Institute for Brain Science | https://portal.brain-map.org/ |
| Experimental models: Organisms/strains | | |
| C57BL/6J mouse | The Jackson Laboratory | RRID: IMSR_JAX:000064 |
| VGAT-ChR2-EYFP mouse | The Jackson Laboratory | RRID: IMSR_JAX:014548 |
| Software and algorithms | | |
| MATLAB | The MathWorks | https://www.mathworks.com/ |
| ViRMEn (Virtual Reality Mouse Engine) | Aronov and Tank, 2014 | https://pni.princeton.edu/pni-software-tools/virmen |
| ScanImage 2016a | Vidrio Technologies | https://vidriotechnologies.com/scanimage/ |
| Image preprocessing and motion correction code | Chettih and Harvey, 2019 | https://github.com/HarveyLab/Acquisition2P_class |
| OASIS algorithm for calcium imaging deconvolution | Friedrich et al., 2017 | https://github.com/zhoupc/OASIS_matlab |
| CNMF algorithm for calcium imaging source extraction | Pnevmatikakis et al., 2016 | https://github.com/Selmaan/NMF-Source-Extraction |
| DataJoint 0.13 | DataJoint | https://datajoint.io/ |
| Python 3.6 | Python | https://www.python.org/ |
| TensorFlow v1 | Google Inc. | https://www.tensorflow.org/ |
| Code for fitting generalized linear models | This study | https://zenodo.org/badge/latestdoi/491659726 |

EXPERIMENTAL MODEL AND SUBJECT DETAILS

Animals

All experimental procedures were approved by the Harvard Medical School Institutional Animal Care and Use Committee and were performed in compliance with the Guide for the Care and Use of Laboratory Animals. Behavioral and imaging data were obtained from eight male C57BL/6J mice from Jackson Laboratory (stock no. 000664). Mice were 10–12 weeks old at the start of behavioral training, and 3–6 months old during imaging. For optogenetic inhibition experiments, seven male VGAT-ChR2-YFP mice from Jackson Laboratory (stock no. 014548) were used. These mice were 10 weeks to 1 year old during the photoinhibition experiments. All mice were kept on a reversed 12-hour dark/light cycle and housed in groups of 2–3 littermates per cage.

METHOD DETAILS

Behavioral task and training

Virtual reality system

For calcium imaging experiments, we used a previously described virtual reality system (Harvey et al., 2009; Morcos and Harvey, 2016). Mazes were constructed using ViRMEn (Virtual Reality Mouse Engine; Aronov and Tank, 2014) in MATLAB. Images were back-projected onto a half-cylindrical screen (24-inch diameter) using a PicoPro projector (Celluon) at a 60 Hz frame rate. For optogenetics experiments, a compact virtual reality system was used (https://github.com/HarveyLab/mouseVR). The compact system was assembled with laser-cut acrylic pieces and mirrors, with overall dimensions of 15 inches wide × 21 inches deep × 18 inches high. Images were projected onto a double-mirror system and a half-cylindrical screen (15-inch diameter) using a Laser Beam Pro projector. In both systems, head-restrained mice ran on an air-supported styrofoam spherical treadmill (8-inch diameter), and ball movement was recorded using a pair of optical sensors (ADNS-9800, Avago Technologies) and converted into 3-dimensional rotation velocity signals with a microcontroller (Teensy 3.2, PJRC). The pitch velocity was used to translate forward/backward position throughout the maze, and the roll velocity controlled the lateral position. View angle was fixed along the forward direction.
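The velocity-to-position mapping described above, together with the 5 cm wall margin from the task description, can be sketched as a per-frame update. This is not ViRMEn code: it assumes velocities are already in cm/s, a unit gain (the `gain` parameter is hypothetical), a 60 Hz update, and simple clamping of lateral position; the `Agent` class and `step` function are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    x: float = 0.0  # lateral position (cm); 0 is the stem midline
    y: float = 0.0  # forward position along the stem (cm)


def step(agent, pitch_vel, roll_vel, dt=1 / 60, stem_width=10.0, wall_margin=5.0, gain=1.0):
    """Advance one frame: pitch drives forward motion, roll drives lateral motion.

    The view angle is never updated (fixed forward). Lateral position is
    clamped so the agent stays at least `wall_margin` cm from either wall.
    """
    agent.y += gain * pitch_vel * dt
    half = max(stem_width / 2 - wall_margin, 0.0)  # allowed lateral excursion
    agent.x = min(max(agent.x + gain * roll_vel * dt, -half), half)
    return agent
```

With a 10 cm stem and a 5 cm margin the allowed excursion is zero, so roll velocity has no effect in the stem, which is why lateral movement was impossible during imaging. With the 60 cm training stem the same rule allows up to 25 cm on either side.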

Task description

The task took place in a virtual Y-maze (Figure 1B and S1A). For behavioral and imaging experiments, the full length of the maze was 250 cm, with 200 cm for the stem and 50 cm for the arms. During behavioral training, the stem had a width of 60 cm, and the visual scene varied as the mouse made lateral movements in the stem. To control the visual stimulus during imaging experiments, stem width was reduced to 10 cm, starting a few sessions before imaging began. Because the virtual agent could not approach closer than 5 cm to any wall, this prevented any lateral movement in the maze stem. Therefore, during imaging experiments, the visual scene in the Y-stem was controlled entirely by the mouse’s position along the long axis of the Y-stem, with no lateral movement along the short axis and no angular rotation (view angle changes). While running down the stem of the Y-maze, mice were randomly presented with one of two distinct cue patterns on the wall (black background with white dots or white background with black dots) and moved laterally into one of the two arms after passing the Y-intersection. In most sessions, the cue disappeared either 10 cm or 60 cm before the Y-intersection and was replaced by a gray wall pattern (“cue delay” sessions; fixed delay length per session). In other sessions, the cue remained visible in the Y-intersection until the mouse entered a maze arm (“no cue delay” sessions). We noticed that task performance declined as the mouse experienced multiple sessions with a long cue delay. Thus, to keep task performance stable over sessions, the presence and length of the cue delay were manually adjusted based on the mouse’s performance in previous sessions. After the mouse entered an arm, its lateral position was adjusted and locked to a central position such that every trial exhibited an identical view of a gray wall on all sides.
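The stem geometry above determines what the mouse sees at each position during imaging. A hypothetical sketch, assuming the cue switches to gray exactly at a forward position of 200 cm minus the delay length, and that the agent's center is kept at least 5 cm from each wall; the function names and the string labels for cue patterns are illustrative only.

```python
STEM_LENGTH_CM = 200.0  # stem length in the imaging maze (from the text)
MIN_WALL_DIST_CM = 5.0  # closest approach of the virtual agent to any wall


def lateral_range(stem_width_cm):
    """Allowed lateral excursion (cm) on either side of the stem midline."""
    return max(stem_width_cm / 2.0 - MIN_WALL_DIST_CM, 0.0)


def stem_wall_pattern(position_cm, cue, cue_delay_cm=None):
    """Wall pattern seen at a forward position within the stem.

    cue is 'black' or 'white'; cue_delay_cm is 10, 60, or None for a
    "no cue delay" session. The boundary convention (>=) is an assumption.
    """
    if cue_delay_cm is not None and position_cm >= STEM_LENGTH_CM - cue_delay_cm:
        return 'gray'  # cue replaced by the gray delay pattern
    return cue


print(lateral_range(10.0), lateral_range(60.0))
print(stem_wall_pattern(150.0, 'black', 60), stem_wall_pattern(150.0, 'black', 10))
```

With a 10 cm stem the allowed lateral range collapses to zero, which is why position along the long axis alone specifies the visual scene; the 60 cm training stem leaves 25 cm of play on each side.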
After a one-second delay, visual feedback (a checkerboard pattern) replaced the gray wall for 2 seconds if the mouse made a correct choice, after which reward (3 μl of 10x diluted condensed milk, Eagle Brand) was delivered through a lick spout as the screen turned dark for 3 seconds before the onset of the next trial. On incorrect trials, the wall stayed gray during the 2-second feedback period, followed by a 5-second timeout with a dark screen. Some representative maze views are shown in Figure S1A. The associations between visual cues and rewarded arms were determined by one of two rules (rule A: black cue-left arm/white cue-right arm; rule B: black cue-right arm/white cue-left arm; Figure 1A). The rule alternated in blocks with a predetermined length of 100–175 trials, without explicit signaling of the rule switches. Therefore, to maximize reward, the mouse had to combine the visual cue with an estimate of the current rule (rule belief) to generate a choice, and update its rule belief by combining a memory of a trial’s cue and choice with the outcome. A typical session consisted of 350–450 trials with 2–3 switches. Mice consumed all rewards throughout each session, and licking was monitored with a lick sensor.
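The cue-choice contingencies and block structure can be written out explicitly. This sketch is illustrative, not the task code: the uniform draw of block lengths, the starting rule, and all function names are assumptions. `rule_consistent_with` shows what an ideal observer could infer from a single trial under deterministic reward; it is not the model of the mice's strategies used in this study.

```python
import random

# Cue -> rewarded arm under each rule (from the text).
RULES = {
    'A': {'black': 'left', 'white': 'right'},
    'B': {'black': 'right', 'white': 'left'},
}


def rewarded_arm(cue, rule):
    """Arm that is rewarded for this cue under the given rule."""
    return RULES[rule][cue]


def rule_consistent_with(cue, choice, rewarded):
    """Rule implied by one trial's cue, choice, and outcome.

    The two rules map each cue to opposite arms, so a rewarded choice
    identifies the rule that maps the cue to the chosen arm, and an
    unrewarded choice identifies the other rule.
    """
    matching = 'A' if RULES['A'][cue] == choice else 'B'
    if rewarded:
        return matching
    return 'B' if matching == 'A' else 'A'


def make_block_schedule(n_trials, min_len=100, max_len=175, seed=0):
    """Per-trial rule labels alternating in blocks of random length."""
    rng = random.Random(seed)
    rule, schedule = 'A', []  # starting with rule A is an assumption
    while len(schedule) < n_trials:
        schedule.extend([rule] * rng.randint(min_len, max_len))
        rule = 'B' if rule == 'A' else 'A'
    return schedule[:n_trials]


schedule = make_block_schedule(400)
print(schedule.index('B'))  # trial index of the first rule switch
```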

For photoinhibition experiments, a modified configuration of the virtual maze was used. The full length of the maze was 180 cm with 100 cm for the stem and 80 cm for the arms. The two cue patterns were vertical and horizontal bars, and these patterns extended into the walls in the maze arms.

For all experiments, we interleaved a small fraction (typically 0 to 20%) of visually guided trials to support behavioral performance. In these trials, the checkerboard pattern was present on the end wall of the rewarded arm and was visible to the mice before they entered an arm. The inclusion of visually guided trials helped the mice stay engaged and maintain stable performance, and we manually set the fraction based on each mouse’s task performance in previous sessions. A visually guided trial never occurred as the first trial after a rule switch. We found that visually guided trials did not influence updates of the mouse’s rule belief more than other trials did (see the section Modeling of decision-making strategies), and we excluded them from all analyses unless mentioned otherwise.
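The interleaving constraint can be sketched as independent per-trial sampling that skips the first trial after each switch. Bernoulli sampling and the name `assign_visually_guided` are assumptions made for illustration; the text states only the target fraction and the post-switch exclusion.

```python
import random


def assign_visually_guided(rules, fraction, seed=0):
    """Flag trials as visually guided with probability `fraction`.

    rules: per-trial rule labels. The first trial after any rule switch is
    never flagged, matching the constraint described in the text.
    """
    rng = random.Random(seed)
    flags = []
    for i, rule in enumerate(rules):
        first_after_switch = i > 0 and rule != rules[i - 1]
        flags.append((not first_after_switch) and rng.random() < fraction)
    return flags


rules = ['A'] * 50 + ['B'] * 50
flags = assign_visually_guided(rules, fraction=0.1)
# Trials kept for analysis: those not flagged as visually guided.
analyzed = [i for i, guided in enumerate(flags) if not guided]
```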

Training procedure

Three to five days before behavioral training, mice were placed on a water restriction schedule that limited their water consumption to 1 mL per day. Their body weight was monitored daily and kept above 80% of the pre-training weight, with additional water supplied when necessary. In the first stage of training, mice were head-restrained on top of the spherical treadmill and placed in a virtual linear track, with reward available at the end of the track. On each trial, mice were randomly presented with one of the two cue patterns on the side walls, and the checkerboard pattern was always present on the wall at the end of the track before reward delivery (to encourage association of reward with the checkerboard pattern). We gradually increased the length of the linear track from 15 cm to 300 cm as mice learned to run straight forward on the treadmill. This stage took 7–10 days, with one session per day. After the mice demonstrated proficient running, we moved them to the Y-maze, with visual cues present throughout the maze (“no cue delay”). Both rules were introduced in alternating blocks within every session, with at least 2 switches (3 blocks). Early in this stage, all trials were visually guided, and the mice learned to follow the checkerboard pattern and move the ball laterally to enter the rewarded arm. As training progressed, we gradually decreased the fraction of visually guided trials, so that the mice learned the rewarded cue-choice associations imposed by both rules. Late in training, we added a cue delay before the Y-intersection. Mice were considered well trained when accuracy reached 70–75% with a low fraction of visually guided trials (0 to 20%). This training process took around 1–2 months and varied across individual mice.

Surgery

Cranial window

Prior to behavioral training, a cranial window implant surgery was performed. Mice were injected with dexamethasone (2 μg per g body weight) 4–12 hours before the surgery. For the surgery, mice were anesthetized with 1–2% isoflurane. A skin incision was made to expose the skull, and a titanium headplate was affixed to the skull with dental cement (Metabond, Parkell) mixed with India ink for light-proofing. A 3.5 mm-diameter craniotomy was made over the left hemisphere, centered 2 mm lateral and 2.5 or 2.75 mm posterior to bregma, and the dura was removed. A glass plug constructed from two 3.5 mm-diameter inner coverslips and one 4.0 mm-diameter outer coverslip (#1 thickness, Warner Instruments) bonded together with optical adhesive (Norland Optics NOA 65) was inserted and sealed with dental cement. Mice then began behavioral training. After they learned to perform the task reliably, they were given one day of free access to water and then anesthetized again for injection of adeno-associated virus (AAV). The dental cement around the window and the glass plug were removed, and 60–100 nL of AAV2/1-synapsin-GCaMP6s-WPRE-SV40 (U. Penn Vector Core, cat. no. AV-1-PV2824) diluted in phosphate-buffered saline (1/10 dilution, final titer ~4 × 10¹² gc/ml) was injected into layer 2/3 and layer 5 (250 μm and 500 μm below the pial surface, respectively). Injections were targeted to 7–9 sites spaced evenly across areas of interest, including primary visual cortex (V1), posteromedial (PM), anteromedial (AM), mediomedial (MM), retrosplenial cortex (RSC), and anterior (A). Gradual, continuous injections were made using a glass pipette and a custom air-pressure system over 2–3 min per depth per site, and the pipette was left in place for an additional 3–5 min. A new glass plug was then inserted and sealed with dental cement.

Before the headplate implantation, mice were also injected with two retrogradely transported AAVs (AAV2retro-Syn-mTagBFP2, undiluted, concentration ~1.5 × 10¹³ gc/ml; and AAV2retro-Syn-mScarlet, 1/5 dilution in PBS, final concentration ~5 × 10¹¹ gc/ml; both obtained from Boston Children’s Hospital Viral Core) in projection target areas of posterior cortex. Each mouse was injected with AAVretro-mTagBFP2 and AAVretro-mScarlet into one of two sets of targets through craniotomies on the left hemisphere: (1) anterior ACC/M2 (3 sites: 1 mm anterior, 0.5 mm lateral, 0.3 and 1.0 mm in depth; 1 mm anterior, 0.8 mm lateral, 0.4 mm in depth; 300 nl AAVretro-mTagBFP2 per site) and dorsomedial striatum (3 sites: 1 mm anterior, 1.2 mm lateral, 2.1 mm in depth; 1 mm anterior, 1.5 mm lateral, 2.1 mm in depth; 0.2 mm posterior, 1.75 mm lateral, 2.1 mm in depth; 300 nl AAVretro-mScarlet per site), or (2) posterior ACC/M2 (4 sites: 0 mm anterior, 0.35 mm lateral, 0.4 and 0.8 mm in depth; 0 mm anterior, 0.7 mm lateral, 0.3 and 0.8 mm in depth; 300 nl AAVretro-mTagBFP2 per site) and orbitofrontal areas (ORBvl: 2.45 mm anterior, 0.75 mm lateral, 1.8 mm in depth; ORBl: 2.45 mm anterior, 1.25 mm lateral, 1.8 mm in depth; 500 nl AAVretro-mScarlet per site). Craniotomies were sealed with dental cement before implantation of the headplate. Note that the labeling resulting from AAVretro injections was not analyzed in this study.
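The viral titers above relate stock concentration, dilution, and injected volume by simple arithmetic. A short sketch with hypothetical helper names; the GCaMP stock titer it computes is inferred from the stated final titer and dilution rather than reported directly, and the example volumes are taken from the per-site values in the text.

```python
def final_titer(stock_gc_per_ml, dilution_factor):
    """Titer after diluting stock by a factor (e.g. 10 for a 1/10 dilution)."""
    return stock_gc_per_ml / dilution_factor


def genome_copies(volume_nl, titer_gc_per_ml):
    """Genome copies delivered by an injection of volume_nl nanoliters."""
    return volume_nl * 1e-6 * titer_gc_per_ml  # 1 nl = 1e-6 ml


# GCaMP6s: a 1/10 dilution to ~4e12 gc/ml implies a stock of ~4e13 gc/ml.
gcamp_stock = 4e12 * 10
print(genome_copies(100, final_titer(gcamp_stock, 10)))  # ~4e8 gc in 100 nl
print(genome_copies(300, 1.5e13))  # AAVretro-mTagBFP2, undiluted: ~4.5e9 gc
print(genome_copies(500, 5e11))    # AAVretro-mScarlet, ORB site: ~2.5e8 gc
```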

In four of the mice used for imaging, only the headplate was implanted before behavioral training. AAVretro injections, cranial window implantation, and GCaMP injections were performed in a single surgery after the mice achieved proficient task performance.

Clear skull cap

The surgical procedures for optogenetics experiments were described previously (Guo et al., 2014; Minderer et al., 2019). Mice were anesthetized with 1–2% isoflurane. The scalp was resected to expose the dorsal skull surface, and the periosteum was removed. A thin layer of cyanoacrylate glue (Insta-Cure, Bob Smith Industries) followed by several layers of transparent dental acrylic (Jet Repair Acrylic, Lang Dental, P/N 1223-clear) was applied to the skull to create the cap. A bar-shaped titanium headplate was affixed to the interparietal bone using dental cement for use during training. Prior to beginning photoinhibition, mice were anesthetized again, and the skull cap was polished with a polishing drill (Model 6100, Vogue Professional) using denture polishing bits (HP0412, AZDENT). A layer of clear nail polish (Electron Microscopy Sciences, 72180) was applied to the polished skull cap. An aluminum ring was then attached to the skull using dental cement mixed with India ink for light-proofing.

Photoinhibition experiments

We built the photostimulation system based on a previous design (Minderer et al., 2019). Light from a 470 nm collimated laser (LRD-0470-PFFD-00200, Laserglow Technologies) was steered by a pair of galvanometric scan mirrors (6210H, Cambridge Technology) and focused onto the skull using an achromatic doublet lens (f = 300 mm, AC508–300-A-ML, Thorlabs). Combined with analog modulation of laser power, the mirrors moved the laser beam rapidly between target sites, allowing effectively simultaneous stimulation of multiple sites. The focused laser beam had a diameter of approximately 200 µm.

Data from six of the seven mice used for photoinhibition were also included, with different analyses, in an independent study (Arlt et al., 2021). We began photoinhibition after mice reached steady-state performance within a rule block. Three cortical targets were selected: PPC (1 spot: 2 mm posterior, 1.75 mm lateral), RSC (3 spots: 1.5, 2.5, 3.5 mm posterior, 0.5 mm lateral), and S1 (1 spot: 0.5 mm posterior, 2.5 mm lateral), together with an out-of-cortex control site on the dental cement (1 spot: 2 mm anterior, 5 mm lateral) (Figure 1J). For the single-spot targets (PPC, S1, and control), laser power was sinusoidally modulated at 40 Hz with a time-averaged power of approximately 6.5 mW per spot. For RSC (3 spots), laser power was modulated at 20 Hz with a mean of 5 mW per spot. Inhibition was performed bilaterally, with an estimated spatial spread of 1–2 mm radius on cortex (Guo et al., 2014; Pinto et al., 2019). Given this spatial resolution, inhibition at the PPC coordinate would be expected to also affect adjacent areas, including A, AM, MM, and small portions of PM, the lateral part of RSC, and the anterior part of V1 (the distance between PPC and the anterior border of V1 is ~0.8 mm). Inhibition of RSC would be expected to also affect MM and small portions of AM, PM, and A. Most of V1 would be less affected because its center is more than 2 mm away from the PPC and RSC coordinates.
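The spatial-overlap reasoning and the power settings can be checked numerically. This sketch computes in-plane distances between the listed stimulation coordinates and a sinusoidal power waveform with a given mean. The waveform shape (swinging between 0 and twice the mean) is an assumption, since the text states only the modulation frequency and time-averaged power; the names `SPOTS`, `distance_mm`, and `sinusoidal_power` are hypothetical.

```python
import math

# Stimulation coordinates from the text: (mm posterior to bregma, mm lateral).
SPOTS = {
    'PPC': [(2.0, 1.75)],
    'RSC': [(1.5, 0.5), (2.5, 0.5), (3.5, 0.5)],
    'S1': [(0.5, 2.5)],
}


def distance_mm(a, b):
    """Euclidean distance between two (posterior, lateral) coordinates in mm."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def sinusoidal_power(t_s, mean_mw, freq_hz):
    """Laser power (mW) at time t_s; assumed to oscillate from 0 to 2 * mean."""
    return mean_mw * (1.0 - math.cos(2.0 * math.pi * freq_hz * t_s))


def mean_power_over_period(mean_mw, freq_hz, n=1000):
    """Average the waveform over one period sampled at n evenly spaced points."""
    period = 1.0 / freq_hz
    return sum(sinusoidal_power(k * period / n, mean_mw, freq_hz)
               for k in range(n)) / n


ppc = SPOTS['PPC'][0]
print([round(distance_mm(ppc, r), 2) for r in SPOTS['RSC']])  # all under 2 mm
print(round(mean_power_over_period(6.5, 40.0), 3))  # PPC, S1, control spots
print(round(mean_power_over_period(5.0, 20.0), 3))  # each RSC spot
```

All three RSC spots lie within about 1.35–1.95 mm of the PPC spot, which is comparable to the estimated 1–2 mm spread and consistent with expecting partial overlap between the PPC and RSC inhibition zones.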

In each session, an inhibition block of 50 trials started when the mouse’s performance reached 85% correct over the past 30 trials, and a rule switch immediately followed the end of each inhibition block. The targets of the inhibition trials were randomly interleaved, with at least half of the trials targeting the control site. Within each trial, inhibition started 0.5 s before trial onset and lasted until the mouse reached the end of the maze. Overall, we collected data from 164 sessions from 7 mice. Each session included 10.7 ± 4.3 trials per target and 34.0 ± 15.5 control trials (mean ± SD).
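The block-triggering criterion and target interleaving can be sketched as follows. The sliding-window criterion follows the text; constructing each block from fixed counts (exactly half control, the rest split round-robin across targets) and then shuffling is an assumption about how "randomly interleaved" was implemented, and the function names are hypothetical.

```python
import math
import random

TARGETS = ['PPC', 'RSC', 'S1']


def block_should_start(outcomes, window=30, threshold=0.85):
    """True once accuracy over the last `window` trials reaches `threshold`.

    outcomes: list of 1 (correct) / 0 (incorrect) for completed trials.
    """
    if len(outcomes) < window:
        return False
    return sum(outcomes[-window:]) / window >= threshold


def make_inhibition_block(n_trials=50, control_fraction=0.5, seed=0):
    """Randomly ordered targets for one block, at least half control trials."""
    rng = random.Random(seed)
    n_control = math.ceil(n_trials * control_fraction)
    # Spread the remaining trials evenly (round-robin) across cortical targets.
    targeted = [TARGETS[i % len(TARGETS)] for i in range(n_trials - n_control)]
    block = ['control'] * n_control + targeted
    rng.shuffle(block)
    return block


block = make_inhibition_block()
print(block.count('control'), block.count('PPC'), block.count('RSC'))
```

In use, a session loop would call `block_should_start` after each trial, run the 50 trials returned by `make_inhibition_block`, and then switch the rule.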

Two-photon calcium imaging

Microscope design