Abstract

Purpose

Virtual reality (VR) simulation has the potential to advance surgical education, procedural planning, and intraoperative guidance. “SurgiSim” is a VR platform developed for the rehearsal of complex procedures using patient-specific anatomy, high-fidelity stereoscopic graphics, and haptic feedback. SurgiSim is the first VR simulator to include a virtual operating room microscope. We describe the process of designing and refining the VR microscope user experience (UX) and user interface (UI) to optimize surgical rehearsal and education.

Methods

Human-centered VR design principles were applied in the design of the SurgiSim microscope to optimize the user’s sense of presence. Throughout the UX’s development, the team of developers met regularly with surgeons to gather end-user feedback. Supplemental testing was performed on four participants.

Results

Through observation and participant feedback, we made iterative design upgrades to the SurgiSim platform. We identified the following key characteristics of the VR microscope UI: overall appearance, hand controller interface, and microscope movement.

Conclusion

Our design process identified challenges arising from the disparity between VR and physical environments that pertain to microscope education and deployment. These roadblocks were addressed using creative solutions. Future studies will investigate the efficacy of VR surgical microscope training on real-world microscope skills as assessed by validated performance metrics.

Keywords: Surgical simulation, Virtual reality, Surgery, Microsurgery, Microscope, Medical education

Introduction

Medical education is evolving across surgical specialties [1]. Training hours have been reduced while the emphasis on excellent patient care continues, which has motivated innovative education solutions [1]. For example, most North American programs have moved from an apprenticeship model to a more standardized competency-based evaluation model [1]. Surgical simulation has emerged as a compelling adjunct to developing surgical skills in a safe environment and assessing these skills in a structured fashion [1, 2]. Virtual reality (VR), as a simulation tool, has the potential to advance surgical education, procedural planning, and intraoperative guidance [3].

“SurgiSim” (http://www.surgisim.ca) is a VR platform developed for the exploration and manipulation of patient-specific anatomy derived from clinical imaging studies [4]. The platform allows surgeons and trainees to rehearse complex procedures using high-fidelity stereoscopic graphics and haptic feedback in an immersive VR environment (video here: https://youtu.be/ejHGFa1hUtk) [4].

To our knowledge, SurgiSim is the first VR simulator to include a virtual microscope (video here: https://youtu.be/gaTWqWX-Aeo). The integration of such novel technology in surgical practice requires the development of a refined user experience (UX) and user interface (UI) optimized specifically for clinicians and trainees [4]. In designing the SurgiSim UX/UI, our objectives were to optimize the ability of surgeons and trainees to realize the benefits of immersive simulation. We describe the process of designing and refining a VR microscope UX/UI specifically for surgical rehearsal and education.

Methods

VR Platform

“SurgiSim” is a VR platform, built on the original “CardinalSim” platform, developed for the patient-specific exploration and manipulation of anatomy generated from clinical imaging studies [4–6]. It is a collaborative multi-institutional effort between the University of Calgary, Western University, and Stanford University. The platform currently supports the use of either the Oculus (Meta, Irvine, CA) Rift S, Quest, or Quest 2 to view the high-fidelity stereoscopic graphics in VR (Fig. 1). A 2D or “Monitor Mode” also exists for users who do not have access to a VR headset.

Fig. 1.

A surgeon wearing an Oculus Rift S with his left hand holding a controller and his right hand holding a haptic hand controller

The SurgiSim environment includes anatomical models that users can explore and compare to patient-specific anatomy. The anatomical renderings are provided courtesy of the Neurosurgical Atlas (https://www.neurosurgicalatlas.com/). The Neurosurgical Atlas is an online resource curated by Dr. Aaron Cohen-Gadol that hosts multimodal neurosurgical education tools, including interactive 3D anatomical renderings. The anatomical dissections are provided courtesy of Stanford NeuroTraIn (https://med.stanford.edu/neurosurgery/research/SNFTVR_Lab.html). Stanford NeuroTraIn creates the cadaveric dissections and subsequently constructs 3D models of the specimens using photogrammetry [7, 8].

With SurgiSim, a user creates realistic surgical scenes derived from clinical volumetric computed tomography (CT) imaging data from DICOM files. Segmented anatomic structures are incorporated into this texture-based volume rendering. Volumetric structures may be represented with predefined or customized material properties and viewed with a variety of stereoscopic displays using real-time volumetric rendering. The image datasets underwent auto-segmentation using a machine learning model that was developed by the University of Brasilia, Stanford University, and Western University to identify critical anatomic structures, including the facial nerve, sigmoid sinus, and carotid artery, among other structures [9]. Virtual dissections can be performed with the support of high-fidelity haptic feedback from a variety of supported hardware. The dissections can be compared with intraoperative video for retrospective analysis. The first released version of SurgiSim (VI) is set up to explore user interactions with anonymized clinical CT imaging from selected skull base cases.

Throughout the development of SurgiSim’s UX, the developers met regularly with a team of surgeons to gather feedback about the end-user interaction with the surgical specimens, including the VR microscope and the haptic feedback.

Creation of the VR microscope

The SurgiSim operating room (OR) houses a surgical microscope that can be used to view generic and patient-specific anatomical models (Fig. 2). The scene also includes a surgical table, one instrument tray, a Mayo stand for the anatomical models, a ceiling-mounted surgical light, and a television screen. The VR microscope geometry was modeled based on the ZEISS (Carl Zeiss AG, Germany) Kinevo® 900 surgical microscope (Fig. 2). Unlike conventional surgical microscopes, the VR microscope is not attached to a boom and is free-floating. This was done to improve ergonomics while allowing for the placement of a large virtual screen that projects the video output from the microscope.

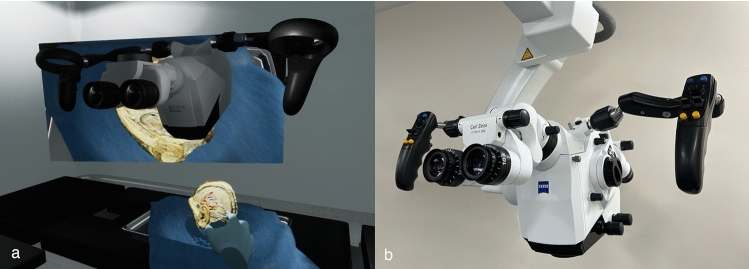

Fig. 2.

Comparison of SurgiSim microscope (a) and the ZEISS (Carl Zeiss AG, Germany) Kinevo® 900 surgical microscope (b), after which it was modeled. The user’s hands, the tray, a surgical specimen, and the surgical table can be seen below the microscope, with the television screen in the background. The projected specimen was provided courtesy of Stanford NeuroTraIn

The microscope was modeled using Blender 3D (Blender Foundation, Amsterdam) and was based on reference photos sourced locally and online. It is divided into 3 parts: the head, arms, and the eyepiece. This division provided the eyepiece with an independent pivot point, allowing it to rotate separately from the microscope head. Plastic, metal, and glass-like materials were created to mimic the surfaces and details of the microscope. A single texture containing SurgiSim's logo along with black details was created using Photoshop (Adobe, Berkeley, CA) and applied to the head of the microscope.

SurgiSim is a real-time application; therefore, interactivity and speed are paramount. The main goal of the 3D object modeling was to minimize the number of polygons while maintaining the original appearance and details of the microscope. This goal was achieved by using simple shapes like cylinders and cubes. For the most complex parts of the microscope, the decimate modifier was applied to reduce the number of triangles while maintaining the design and shape of the object.

VR microscope UI design

The microscope does not include a traditional interactive UI in which one would click with a mouse, tap with a finger, or in VR, point to a target with the controller. Instead, we aimed to create a seamless experience that mirrored the physical world. Therefore, the interaction was designed to rely only on the Oculus controllers to move the microscope. A 3D transparent aura of the controllers was added to the virtual world and was pinned to the user's exact hand position. When the user presses the trigger (Fig. 4), labels/tooltips appear displaying the functionality of each button. This is a useful way to provide information because most users are unfamiliar with the buttons on a VR controller. One of the challenges with this approach is the limited number of buttons on a controller, which constrains the number of functions. Our solution was to combine actions that a single button could perform. For instance, the thumbstick, when rotated, controls the translation of the microscope; if the trigger is concurrently pressed and held, then the thumbstick controls the rotation. This solution, however, still requires further validation and feedback from users.
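The combined thumbstick mapping described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the SurgiSim implementation: the names `ControllerState`, `MicroscopeCommand`, and `map_thumbstick`, as well as the dead-zone value, are hypothetical and do not appear in the platform description.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ControllerState:
    """Hypothetical snapshot of one hand controller for a single frame."""
    thumbstick_x: float  # deflection in the range -1.0 .. 1.0
    thumbstick_y: float  # deflection in the range -1.0 .. 1.0
    trigger_held: bool


@dataclass(frozen=True)
class MicroscopeCommand:
    """Hypothetical per-frame command: a translation and a rotation input."""
    translate: Tuple[float, float] = (0.0, 0.0)
    rotate: Tuple[float, float] = (0.0, 0.0)


DEAD_ZONE = 0.1  # assumed threshold for ignoring small thumbstick drift


def map_thumbstick(state: ControllerState) -> MicroscopeCommand:
    """Map one thumbstick to two functions, selected by the trigger."""
    x, y = state.thumbstick_x, state.thumbstick_y
    if abs(x) < DEAD_ZONE and abs(y) < DEAD_ZONE:
        return MicroscopeCommand()  # no input this frame
    if state.trigger_held:
        # Trigger pressed and held: the thumbstick controls rotation.
        return MicroscopeCommand(rotate=(x, y))
    # Trigger released: the thumbstick controls translation.
    return MicroscopeCommand(translate=(x, y))
```

Using the trigger as a modifier doubles the number of functions available on one thumbstick, at the cost of a two-handed or two-finger gesture that users must learn.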

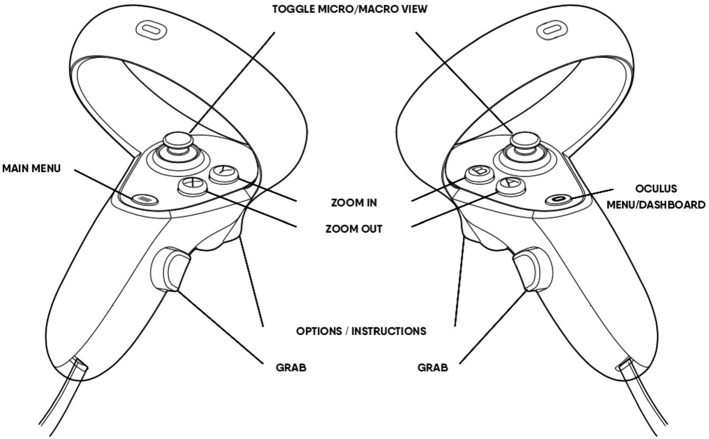

Fig. 4.

Illustration of the Oculus Quest 2 hand controllers with labels indicating their function within the SurgiSim environment

Participants

Four participants including two graduate students, one professor from Stanford University, and one Neurotology Fellow from Stanford participated in the testing of our VR microscope. Two participants were microscope naive, one was familiar with the microscope, and one was an expert surgical microscope user. No ethics approval was required given that we did not collect data from the participants. The participants provided oral consent to use their feedback for both the improvement of the platform and inclusion in this manuscript.

Results

Through observation and participant feedback, we made iterative design changes to the SurgiSim platform. During our design process, we identified the following key characteristics of the VR microscope UX/UI: the overall appearance, the hand controllers, the boom, the zoom, the microscopic view, and the human factors.

The microscope appearance

The overall microscope appearance was based on the ZEISS Kinevo® 900 microscope (Fig. 2). Based on the feedback collected at the end of the VR microscope testing, all of the participants found the appearance of the microscope to be realistic. All of the participants consistently indicated that they were satisfied with the graphics within the VR environment. The appearance of the microscope did not require any updating throughout the design iterations.

The microscope controllers

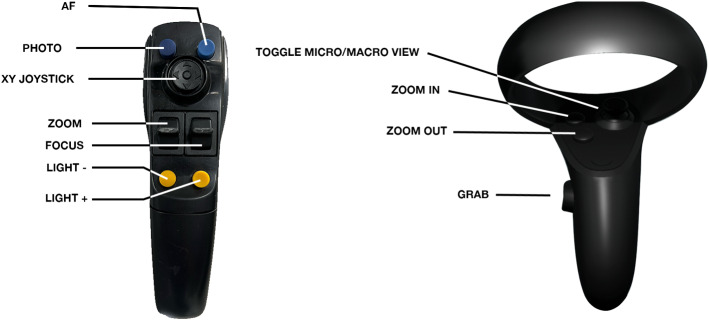

The virtual microscope’s button layout was based on the Oculus controller given the similarity in layouts (Fig. 3). Users reach for the microscope handles with the Oculus controllers in hand, and vibratory confirmation from the controller notifies the user that they are sufficiently close to the virtual microscope handles to operate its controls. With the controller buttons, users can optically zoom in and out of the surgical field, adjust the pitch of the microscope head for microscopic movement, or release the boom for macroscopic movement. The VR controller buttons were mapped to mimic the handles of the Kinevo microscope as closely as possible.

Fig. 3.

Comparison of the ZEISS (Carl Zeiss AG, Germany) Kinevo® 900 surgical microscope handle and the SurgiSim VR surgical microscope handle

Unlocking and moving the microscope head is triggered with the Oculus “grip” buttons on each controller (Fig. 4), which is consistent with the conventional approach to grabbing objects in VR. The grip buttons are located in similar positions to the buttons that are used to move conventional microscopes.

Unlike with conventional microscopes, users cannot move their faces to the microscope to enter the microscopic view. Instead, the user must click the thumbstick on either Oculus controller to move into the microscopic view. The VR microscope also differs in that it does not include buttons that allow the user to focus the microscope, given that the objects in the VR environment are always in focus as long as the image is of sufficient quality.

The Oculus controllers house several buttons (Fig. 4). Only the grip button, the thumbsticks, and the X/Y/A/B buttons are required to use the VR microscope. Some of the participants indicated that they were confused by the additional buttons that are assigned to other functions in the VR environment. A training module about the controller buttons already existed in SurgiSim, and therefore this problem was solved by integrating this training module into the experiment training.

We observed that participants quickly learned to use the grip button to move the microscope and the X/Y/A/B buttons to zoom in and out. This rapid adaptation was most evident in the two participants who were not microscope naive, given that the buttons were located in very similar locations to those on conventional surgical microscopes. All of the participants initially interacted with the thumbstick like a joystick and therefore required a demonstration of how to click down on the thumbstick to enter the microscopic view. This particular instruction was incorporated into the SurgiSim training module.

The microscope boom

The weight, resistance, and lock position of the microscope boom presented significant, and to some extent, unsolvable challenges. Conventional microscopes are of substantial size and weight, whereas the floating SurgiSim microscope head was initially simulated to feel weightless. Early surgeon feedback asked that weight and resistance be added to the microscope. Implementing this in VR required that hand interaction physics be applied to the microscope. This interference between the two dynamic objects introduced another challenge, in that if the hands bumped the microscope, it would always move, even if slightly. Two participants provided feedback that they could move the microscope head out of the locked position, without using the grip buttons, by contacting the head with enough force. None of the participants reported that maneuvering the VR microscope felt realistic. This maneuverability presented such a problem that all of the participants reported that they would not use the VR platform, in its current state, for microscope training. We addressed this maneuverability issue by implementing a "lock" solution. Whenever the microscope was not being grabbed by the handles, its weight would be set to 500 kg; therefore, the hands would not affect it because it was simply too heavy. Once the hands touched the handles and used the grip button, the simulated microscope weight would be set to around 50 kg, a much more manageable weight.
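The "lock" solution can be illustrated with a simplified one-dimensional rigid body. This is a sketch under stated assumptions rather than the SurgiSim code: the class `MicroscopeBody` and its methods are hypothetical, the "weight" in the text is modeled as the body's mass, and only the two values (500 kg when released, about 50 kg when grabbed) come from the description above.

```python
from dataclasses import dataclass

LOCKED_MASS_KG = 500.0   # from the text: used whenever the handles are not grabbed
GRABBED_MASS_KG = 50.0   # from the text: approximate mass while the handles are held


@dataclass
class MicroscopeBody:
    """Hypothetical 1D stand-in for the microscope head in a physics engine."""
    mass_kg: float = LOCKED_MASS_KG
    velocity: float = 0.0  # metres per second along one axis

    def update_grab(self, touching_handle: bool, grip_pressed: bool) -> None:
        """Switch mass depending on whether the user is holding the handles."""
        grabbed = touching_handle and grip_pressed
        self.mass_kg = GRABBED_MASS_KG if grabbed else LOCKED_MASS_KG

    def apply_impulse(self, impulse_ns: float) -> None:
        """Apply a collision impulse (newton-seconds): delta v = J / m."""
        self.velocity += impulse_ns / self.mass_kg
```

In this model, the same accidental bump produces one tenth of the velocity change when the microscope is locked compared with when it is grabbed, which is the effect the mass switch relies on.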

One participant noted that they experience a slight drop as they release the button to move conventional microscopes, whereas this did not occur within the SurgiSim environment. This is consistent with the absence of gravity effects on the VR microscope head. This was felt to be a benefit in interaction design that users would accommodate.

Conventional microscopes are limited by the movements of the boom, unlike the floating VR microscope head. This difference in movements was noted by the one expert microscope user participant. The participant provided feedback that learning to maneuver the microscope within these physical constraints was an important part of microscope education. Future work will apply physical constraints to the VR microscope head that will reflect those experienced in an OR.

The microscope zoom

One challenge within the VR space is the ability to zoom indefinitely, which contrasts with the limits of the Kinevo’s optical zoom. To mimic these constraints, the zoom functionality was restricted.
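Restricting the zoom amounts to clamping the zoom factor to a fixed range. The sketch below shows one way to do this; the function name `apply_zoom` and the bounds `MIN_ZOOM` and `MAX_ZOOM` are assumptions for illustration, since the limits used in SurgiSim are not stated here.

```python
MIN_ZOOM = 1.0   # assumed lower bound, not taken from the source
MAX_ZOOM = 10.0  # assumed upper bound, not taken from the source


def apply_zoom(current: float, delta: float,
               lo: float = MIN_ZOOM, hi: float = MAX_ZOOM) -> float:
    """Return the new zoom factor, clamped to the range [lo, hi]."""
    return max(lo, min(hi, current + delta))
```

For example, `apply_zoom(9.5, 1.0)` stops at the assumed upper bound instead of continuing to 10.5.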

The location of the VR microscope zoom buttons is similar to that of a conventional microscope (Fig. 3). All of the participants found the zoom buttons realistic and easy to use. None of the participants found the absence of focus buttons confusing, which is likely because the object of interest remains in focus in the VR environment.

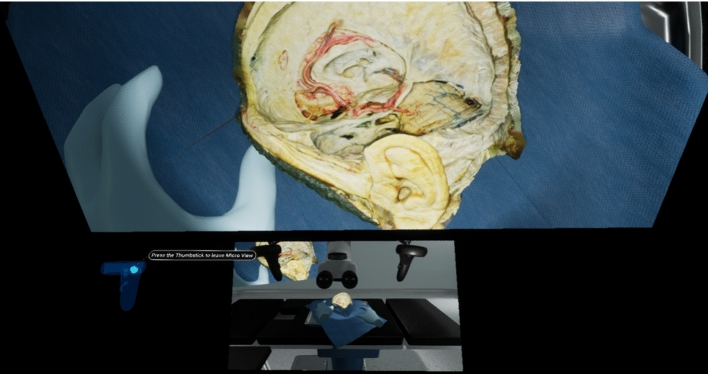

Microscopic view

All of the participants provided feedback that it was difficult to find the microscope handles once they entered the microscopic view. All of the participants frequently needed to exit the microscopic view to locate the handles in space. This problem was solved by adding a small layout map at the bottom of the microscopic view that displays the larger OR environment so that users could easily locate the microscope handles (Fig. 5).

Fig. 5.

Screenshot of microscopic view with the OR layout map visualized at the bottom of the user’s field of view

Unlike with a physical microscope, the Oculus controllers remain in the user's hands, which means that users do not experience the tactile feedback of coming into contact with the static handles. This was addressed by providing the user with vibratory feedback when the handles are encountered. All of the participants provided feedback that they experienced a learning curve as they adapted to this different kind of haptic feedback. During microscope testing, all of the participants noted that the vibration had occurred and yet they were still unable to move the microscope. This problem was addressed by enlarging the sphere of space in which the user could use the buttons to move the microscope.
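One plausible reading of this fix is that the region that triggers vibration and the region in which the grip button takes effect were two spheres of different size around each handle. The sketch below illustrates that interpretation only; the function `handle_feedback`, the radii, and the idea that the two regions were separate spheres are assumptions, not details confirmed by the platform description.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def handle_feedback(hand: Vec3, handle: Vec3, grip_pressed: bool,
                    vibration_radius_m: float = 0.10,  # assumed value
                    grab_radius_m: float = 0.10        # assumed value
                    ) -> Tuple[bool, bool]:
    """Return (vibrate, can_move) for one controller and one handle."""
    distance = math.dist(hand, handle)
    vibrate = distance <= vibration_radius_m
    can_move = grip_pressed and distance <= grab_radius_m
    return vibrate, can_move
```

With a grab radius smaller than the vibration radius, a hand can sit in the gap between the two spheres, where the controller vibrates but the grip button does nothing. Enlarging the grab radius to at least the vibration radius removes that gap.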

Discussion

Providing trainees with sufficient surgical experience to ensure safe and effective care is an ongoing challenge [1, 10, 11]. During the COVID-19 pandemic, medical students remained at home and the operative experience of residents was limited [10]. Visiting elective rotations were canceled and an estimated 28 million elective surgeries were postponed worldwide [10]. These circumstances highlight the need for access to surgical rehearsal technology [10]. VR is increasingly recognized as an important part of surgical education and has been acknowledged by both the Royal College of Physicians and Surgeons of Canada and the Accreditation Council for Graduate Medical Education as a validated means of training. This technology promises to both increase user experience and minimize patient harm [3, 4].

Microsurgery is a fundamental component of many surgical specialties, including otolaryngology, neurosurgery, plastic surgery, ophthalmology, general surgery, orthopedic surgery, gynecology, and oral and maxillofacial surgery [12]. Despite the ubiquitous nature of this tool among surgical specialties, microscope-specific training is absent [11, 13–15]. Post-graduate trainees often observe microscope use without maneuvering the microscope themselves, which may result in errors in magnification and selection of the surgical field of view [13]. The literature describes alternative microscope training platforms that include smartphones, tablets, and VR headsets with and without the addition of digital cameras, and training on models that include cadaver specimens and physical mock-ups [11, 14–17]. To our knowledge, no microscope-specific VR training programs exist.

Previous research has demonstrated that training surgical residents on VR simulators results in fewer surgical errors and increases proficiency when compared to those lacking VR training [18]. In addition, technological advances in VR have increased the fidelity of surgical simulation through improved graphics and haptics [19]. When paired with increased accessibility due to decreased hardware size and costs, the potential to advance microscope-specific training abounds. However, commercially available temporal bone surgery simulators, such as the Voxel-Man® (Voxel-Man Group, Hamburg, Germany), are expensive and not yet routinely incorporated into surgical practice [6, 20].

A 2017 systematic review by Musbahi et al. identified 64 simulators within otolaryngology, with 12 of these being VR temporal bone surgery simulators [21]. Similarly, in 2019, a systematic review by Alwani et al. identified 11 VR neuro-otologic simulators [22]. Some of these platforms incorporate microscopes, either in the form of physical microscope eyepieces or VR environments that simulate a microscope view [23–28]. One group incorporated microscope positioning into their simulation; however, this was controlled with a haptic arm and therefore does not replicate the maneuvering of a conventional microscope [27]. Other groups acknowledged the absence of microscope positioning as a limitation in their temporal bone simulators [29]. None of the platforms included a VR representation of a microscope or was deployed on a head-mounted display, such as the Oculus.

In this paper, we present a novel VR surgical simulator that incorporates a surgical microscope. Many VR design paradigms have been proposed; however, there is an evolution toward more user-centered, iterative processes [30]. High-level principles for human-centered VR design fall into three categories: communication, the form of reality, and presence [31]. Within “communication”, VR must prioritize the UX [31]. The communication between the user and the technology should be simple and transparent to foster a sense of presence [31]. We employed an iterative design process that allowed us to learn more about the subtle differences between VR and physical environments and how they pertain to microscope education and use.

The “form of reality” design principle requires decisions about where the technology lies on the reality-virtuality spectrum and what type of input and output hardware to employ [31]. This category also includes the development of the more abstract story and layout of the VR environment [31]. The design of the VR microscope presented several unpredictable challenges surrounding the lack of physical constraints in VR. For instance, the VR world allows for a microscope head to exist without being attached to a supporting microscope boom. Although operative ergonomics improved, locking the microscope in place became more challenging. The floating microscope head also allows the user to view the object of interest from an infinite number of angles, which is unrealistic. This is useful in exploring specimens. However, its role as a conventional microscope trainer requires the presence of constraints that mirror the real world. Future versions of the microscope will include articulations and rigging of the entire microscope to better emulate real-world constraints.

“Presence” manifests from the user's interpretation of immersion [31]. Immersion describes the extent to which a technology can deliver an illusion of reality to the senses of the user [32]. To maximize the illusion of presence, a VR platform should minimize breaks in presence and optimize the VR world stability and depth cues [31]. Presence is also enhanced by representations of the user’s body, physical interactions, and social communication. Defining the fidelity of the VR environment also contributes to the experience of presence [31]. Certain fundamental differences between the physical and virtual worlds, such as gravity and the physical constraints of the Oculus headset and controllers, resulted in unique challenges to the user’s sense of presence. For instance, the Oculus headset and controllers are attached to the user, whereas a user interacts with the head and handles on a conventional microscope as needed. With a physical microscope, surgeons can freely move their heads without affecting the image from the microscope. This runs counter to the VR principle of continuous head tracking, whereby the image moves with any head movement. This design challenge was overcome by allowing the user to enter the microscope view, which is independent of head movement, with a click of the thumbstick. Moreover, in the physical world, surgeons can easily pull their heads back and adjust the microscope head for large-scale movement. To emulate this, a proximity zone near the eyepieces was created in which users place their eyes to enter “microscope mode.” Our initial testing feedback from expert surgeons suggested significant head and neck fatigue because any subtle head movement would inadvertently pull the surgeon out of the microscope. Although activating microscope mode through the proximity of the surgeon’s eyes to the virtual eyepieces is highly intuitive, expert feedback favored locking into the microscopic view by pressing the thumbstick.
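The thumbstick-locked microscope view can be sketched as a small state machine. This is an illustrative sketch, not the SurgiSim implementation: the class `MicroscopeView`, the function `camera_pose`, and the assumption that a second click exits the view are all hypothetical.

```python
class MicroscopeView:
    """Hypothetical toggle for the microscope view, driven by thumbstick clicks."""

    def __init__(self) -> None:
        self.active = False
        self._was_pressed = False

    def update(self, thumbstick_pressed: bool) -> bool:
        """Call once per frame; toggles only on the press edge, not while held."""
        if thumbstick_pressed and not self._was_pressed:
            self.active = not self.active
        self._was_pressed = thumbstick_pressed
        return self.active


def camera_pose(view_active: bool, head_pose, microscope_pose):
    """In microscope view, render from the microscope and ignore head tracking."""
    return microscope_pose if view_active else head_pose
```

Because the view changes only on an explicit click, small head movements cannot pull the user out of the microscope, which is the source of the fatigue reported with the proximity-zone approach.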

Navigating these differences presented an interesting design challenge that we addressed with creative workarounds. These workarounds included the vibration feedback upon encountering the microscope handles and the click of the thumbstick to move into the microscopic view. Each iterative modification brought us closer to the high-level design principles of communication, the form of reality, and presence in our effort to create a human-centered VR design for the operating microscope. Because our aim is to increase users’ proficiency in maneuvering an operative microscope, the next steps are geared toward establishing whether skills acquired in VR translate to the physical microscope.

Future directions

The SurgiSim platform will first be introduced to four otolaryngology training programs in Canada (Western University, the University of Calgary, the University of British Columbia, and the University of Toronto). Data collection from the initial interactions of trainees with the platform will allow the SurgiSim team to iteratively refine both the UX/UI and the overall platform using validated software design principles. As the platform is integrated into the first otolaryngology training programs, we will further understand which changes to the UX/UI are needed to facilitate its use by clinicians.

Given that SurgiSim employs CT imaging data, the platform is most applicable to the rehearsal of bony anatomy dissection. For this reason, our initial focus is on temporal bone dissection. Future versions of SurgiSim will apply the platform to a broader range of surgical cases, beginning with anterior cranial base procedures. Our team is also working on incorporating magnetic resonance imaging to create 3D models of soft tissues, which will make the platform more relevant for procedures that involve soft tissue dissection. We will begin with procedures in adjacent regions, thereby expanding our scope to include neurosurgery and plastic surgery. Our expansion into neurosurgery will allow users to compare their dissections of patient-specific data to the anatomical teaching models provided by the Neurosurgical Atlas and Stanford NeuroTraIn.

We will incorporate SurgiSim into a variety of academic centers internationally to improve its utility as a training tool and to maximize its educational impact. Future studies will investigate the efficacy of VR surgical microscope training on real-world microscope skills as assessed by validated performance metrics. SurgiSim will also facilitate access to training on novel technologies by incorporating devices such as surgical exoscopes into the platform. Ultimately, we aim to incorporate SurgiSim into surgical practice to improve training and improve patient outcomes.

Conclusion

SurgiSim is a novel surgical simulator that includes a unique VR microscope. The development of this microscope involved the careful consideration of VR design principles and elegant workarounds to address the discrepancies between the physical and VR worlds. We recommend incorporating these learnings into the development of future VR tools for surgical rehearsal and education.

Acknowledgements

This research was supported by the Ohlson Research Initiative and the Karren Family Endowment at the Cumming School of Medicine, University of Calgary.

Author contributions

Material preparation was performed by MdLB, AVB, and TEC. MdLB, AVB, CL, TEC, JD, NB, and JL were involved in project conception and design. The first draft of the manuscript was written by MdLB, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Funding

No funding was received to assist with the preparation of this manuscript.

Declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Villanueva C, Xiong J, Rajput S. Simulation-based surgical education in cardiothoracic training. ANZ J Surg. 2020;90:978–983. doi: 10.1111/ans.15593. [DOI] [PubMed] [Google Scholar]

- 2.Lui JT, Hoy MY. Evaluating the effect of virtual reality temporal bone simulation on mastoidectomy performance: a meta-analysis. Otolaryngol Neck Surg. 2017;156:1018–1024. doi: 10.1177/0194599817698440. [DOI] [PubMed] [Google Scholar]

- 3.Pelargos PE, Nagasawa DT, Lagman C, et al. Utilizing virtual and augmented reality for educational and clinical enhancements in neurosurgery. J Clin Neurosci. 2017;35:1–4. doi: 10.1016/j.jocn.2016.09.002. [DOI] [PubMed] [Google Scholar]

- 4.Chan S, Li P, Locketz G, et al. High-fidelity haptic and visual rendering for patient-specific simulation of temporal bone surgery. Comput Assist Surg. 2016;21:85–101. doi: 10.1080/24699322.2016.1189966. [DOI] [PubMed] [Google Scholar]

- 5.Locketz GD, Lui JT, Chan S, et al. Anatomy-specific virtual reality simulation in temporal bone dissection: perceived utility and impact on surgeon confidence. Otolaryngol Neck Surg. 2017;156:1142–1149. doi: 10.1177/0194599817691474. [DOI] [PubMed] [Google Scholar]

- 6.Compton EC, Agrawal SK, Ladak HM, et al. Assessment of a virtual reality temporal bone surgical simulator: a national face and content validity study. J Otolaryngol - Head Neck Surg. 2020;49:17. doi: 10.1186/s40463-020-00411-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Rubio RR, Shehata J, Kournoutas I, et al. Construction of neuroanatomical volumetric models using 3-dimensional scanning techniques: technical note and applications. World Neurosurg. 2019;126:359–368. doi: 10.1016/j.wneu.2019.03.099. [DOI] [PubMed] [Google Scholar]

- 8.Vigo V, Pastor-Escartín F, Doniz-Gonzalez A, et al. The smith-robinson approach to the subaxial cervical spine: a stepwise microsurgical technique using volumetric models from anatomic dissections. Oper Neurosurg. 2021;20:83–90. doi: 10.1093/ons/opaa265. [DOI] [PubMed] [Google Scholar]

- 9.Neves C, Tran E, Kessler I, Blevins N. Fully automated preoperative segmentation of temporal bone structures from clinical CT scans. Sci Rep. 2021;11:116. doi: 10.1038/s41598-020-80619-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Dedeilia A, Sotiropoulos MG, Hanrahan JG, et al. Medical and surgical education challenges and innovations in the COVID-19 era: a systematic review. In Vivo. 2020;34:1603–1611. doi: 10.21873/invivo.11950. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Margulies IG, Xu H, Henderson PW. Microsurgery training in the digital era: a systematic review of accessible digital resources. Ann Plast Surg. 2020;85:337–343. doi: 10.1097/SAP.0000000000002214. [DOI] [PubMed] [Google Scholar]

- 12.Mavrogenis AF, Markatos K, Saranteas T, et al. The history of microsurgery. Eur J Orthop Surg Traumatol. 2019;29:247–254. doi: 10.1007/s00590-019-02378-7. [DOI] [PubMed] [Google Scholar]

- 13.Wickens B, Shamsil A, Husein M, et al. Comprehensive metrics for evaluating surgical microscope use during tympanostomy tube placement. Int J Comput Assist Radiol Surg. 2021;16:1587–1594. doi: 10.1007/s11548-021-02428-8. [DOI] [PubMed] [Google Scholar]

- 14. Sayadi LR, Fligor JE, Couchois S, et al. A novel application of digital microscope for microsurgery training. J Reconstr Microsurg Open. 2020;05:e32–e35. doi: 10.1055/s-0040-1710346.

- 15. Pafitanis G, Hadjiandreou M, Miller R, et al. The use of mobile computing devices in microsurgery. Arch Plast Surg. 2019;46:102–107. doi: 10.5999/aps.2018.00150.

- 16. Huang TC-T, Sabbagh MD, Adabi K, et al. Compact and economical microsurgical training made possible with virtual reality. Plast Reconstr Surg. 2018;142:993e. doi: 10.1097/PRS.0000000000005059.

- 17. Choque-Velasquez J, Colasanti R, Collan J, et al. Virtual reality glasses and “eye-hands blind technique” for microsurgical training in neurosurgery. World Neurosurg. 2018;112:126–130. doi: 10.1016/j.wneu.2018.01.067.

- 18. Ro C, Toumpoulis I, Ashton R, et al. The LapSim: a learning environment for both experts and novices. Stud Health Technol Inform. 2005;111:414–417.

- 19. Lungu AJ, Swinkels W, Claesen L, et al. A review on the applications of virtual reality, augmented reality and mixed reality in surgical simulation: an extension to different kinds of surgery. Expert Rev Med Devices. 2021;18:47–62. doi: 10.1080/17434440.2021.1860750.

- 20. Abbas JR, Kenth JJ, Bruce IA. The role of virtual reality in the changing landscape of surgical training. J Laryngol Otol. 2020;134:863–866. doi: 10.1017/S0022215120002078.

- 21. Musbahi O, Aydin A, Al Omran Y, et al. Current status of simulation in otolaryngology: a systematic review. J Surg Educ. 2017;74:203–215. doi: 10.1016/j.jsurg.2016.09.007.

- 22. Alwani M, Bandali E, Larsen M, et al. Current state of surgical simulation training in otolaryngology: systematic review of simulation training models. Arch Otorhinolaryngol-Head Neck Surg AOHNS. 2019. doi: 10.24983/scitemed.aohns.2019.00109.

- 23. Zhao YC, Kennedy G, Hall R, O’Leary S. Differentiating levels of surgical experience on a virtual reality temporal bone simulator. Otolaryngol Head Neck Surg. 2010;143:30–35. doi: 10.1177/019459981014305S02.

- 24. Arora A, Khemani S, Tolley N, et al. Face and content validation of a virtual reality temporal bone simulator. Otolaryngol Head Neck Surg. 2012;146:497–503. doi: 10.1177/0194599811427385.

- 25. Wiet GJ, Stredney D, Kerwin T, et al. Virtual temporal bone dissection system: OSU virtual temporal bone system. Laryngoscope. 2012;122:S1–S12. doi: 10.1002/lary.22499.

- 26. Ho AK, Alsaffar H, Doyle PC, et al. Virtual reality myringotomy simulation with real-time deformation: development and validity testing. Laryngoscope. 2012;122:1844–1851. doi: 10.1002/lary.23361.

- 27. Huang C, Cheng H, Bureau Y, et al. Face and content validity of a virtual-reality simulator for myringotomy with tube placement. J Otolaryngol - Head Neck Surg. 2015;44:40. doi: 10.1186/s40463-015-0094-2.

- 28. Wheeler B, Doyle PC, Chandarana S, et al. Interactive computer-based simulator for training in blade navigation and targeting in myringotomy. Comput Methods Progr Biomed. 2010;98:130–139. doi: 10.1016/j.cmpb.2009.09.010.

- 29. Zirkle M, Roberson DW, Leuwer R, Dubrowski A. Using a virtual reality temporal bone simulator to assess otolaryngology trainees. Laryngoscope. 2007;117:258–263. doi: 10.1097/01.mlg.0000248246.09498.b4.

- 30. Sutcliffe AG, Poullis C, Gregoriades A, et al. Reflecting on the design process for virtual reality applications. Int J Human-Comput Interact. 2019;35:168–179. doi: 10.1080/10447318.2018.1443898.

- 31. Jerald J. The VR book: human-centered design for virtual reality. United States: Morgan & Claypool; 2015.

- 32. Slater M, Wilbur S. A framework for immersive virtual environments (FIVE): speculations on the role of presence in virtual environments. Presence Teleoper Virtual Environ. 1997;6:603–616. doi: 10.1162/pres.1997.6.6.603.