Abstract

Background.

Publicly reported hospital risk-standardized mortality rates (RSMRs) for acute myocardial infarction (AMI) are calculated for Medicare beneficiaries. Outcomes for older AMI patients may not reflect outcomes more generally.

Objective.

To examine the relationship of hospital 30-day RSMRs for older patients (≥65 years-old) with 30-day RSMRs for younger patients (18–64 years-old) and all patients (≥18 years-old) with AMI.

Design.

Retrospective cohort study.

Setting.

986 hospitals in the ACTION Registry®-Get With the Guidelines™.

Participants.

AMI hospitalizations from 10/01/2010–09/30/2014.

Measurements.

We calculated hospital 30-day RSMRs for older, younger, and all patients using a National Quality Forum-endorsed EHR measure of AMI mortality. We ranked hospitals by their 30-day RSMRs for these 3 age groups and plotted agreement in rankings. We also calculated the correlation in hospital AMI achievement scores for each age group using the Hospital Value-Based Purchasing (HVBP) Program methodology computed with the EHR measure.

Results.

We identified 267,763 and 276,031 AMI hospitalizations among older and younger patients, respectively. Median hospital 30-day RSMRs were 9.4%, 3.0%, and 6.2% for older, younger, and all patients. Most top and bottom performing hospitals for older patients were neither top nor bottom performers for younger patients. In contrast, most top and bottom performers for older patients were also top and bottom performers for all patients. Analogously, HVBP achievement scores for older patients correlated weakly with scores for younger patients (R=0.30) and strongly with scores for all patients (R=0.92).

Limitations.

The study included a minority of US hospitals.

Conclusions.

Hospital mortality rankings for older patients with AMI inconsistently reflect rankings for younger patients. Incorporation of younger patients into hospital outcomes assessment would permit further examination of the presence and impact of age-related quality differences.

Primary funding source.

American College of Cardiology

INTRODUCTION

The Centers for Medicare & Medicaid Services (CMS) measures and publicly reports hospital mortality after acute myocardial infarction (AMI). To accomplish this, CMS calculates 30-day risk-standardized mortality rates (RSMRs) using data for Medicare fee-for-service beneficiaries who are 65 years of age and older. While 30-day RSMRs provide a signal of hospital quality for older patients, it is not known whether they also reflect quality for younger patients who are less than 65 years old.

Despite this uncertainty, outcomes for Medicare beneficiaries are often used as a proxy for hospital quality more broadly. For example, hospitals and specialty societies use these data to support quality improvement initiatives for all patients with AMI (1, 2). Researchers use this information to select hospitals for study and identify interventions believed to be associated with high and low quality of care (3–5), and the media often report Medicare outcomes as if they reflect care for all populations (6, 7). Yet the relationship of hospital outcomes for Medicare beneficiaries with outcomes for other hospitalized patients has not been well characterized. To date, the only data examining hospital mortality rates by age are from Veterans Affairs hospitals (8), which differ from non-federal hospitals with regard to their patient composition, treatment patterns, and outcomes (9, 10). If data from older patients can also be used to accurately reflect hospital quality for younger patients, clinicians, administrators, and payers will be better positioned to estimate, benchmark, and improve outcomes for all hospitalized patients with AMI, not just Medicare beneficiaries. However, if data from older patients do not prove broadly reflective of hospital quality, expanding quality and outcomes measurement to include younger patients may be warranted. We therefore sought to examine the relationship of hospital 30-day mortality rates for older patients with AMI with hospital 30-day mortality rates for younger patients and all adult patients with AMI.

METHODS

Study overview

We analyzed hospitalizations for AMI from the ACTION Registry®-Get With the Guidelines™ (ACTION-GWTG), the largest US clinical registry of AMI. Using a methodology developed by CMS to calculate hospital 30-day RSMRs using clinical data (11, 12) and a novel linkage between ACTION-GWTG and the National Death Index, we examined the relationship of hospital 30-day RSMRs for older AMI patients ≥65 years old with 30-day RSMRs for younger AMI patients 18–64 years old and 30-day RSMRs for all adult AMI patients ≥18 years old. We also calculated the correlation in hospital AMI achievement scores for each age group using an approach analogous to that of the CMS Hospital Value-Based Purchasing (HVBP) Program, which considers AMI mortality in hospital reimbursement (13). Results of these analyses will help clarify whether measurement of AMI outcomes should be expanded beyond the Medicare population to estimate hospital quality and outcomes for all patients, including younger patients.

Dataset

We used data from ACTION-GWTG, a collaboration of the American College of Cardiology Foundation and the American Heart Association that captures detailed clinical information about adult AMI hospitalizations from over 900 US hospitals. The database contains more than 300 elements selected and vetted by cardiovascular experts to capture patient characteristics, care processes, and outcomes (14–17). ACTION-GWTG has a data quality program that promotes consistent and accurate data abstraction and entry (18). We utilized ACTION-GWTG data from October 1, 2010 to September 30, 2014, the time period for which registry hospitalizations had been linked to the National Death Index using direct identifiers to determine vital status.

Patient sample

We included all hospitalizations for adult patients with AMI. We applied exclusion criteria consistent with the CMS electronic measure for AMI mortality (11, 12). Specifically, we excluded patients transferred in from another hospital since mortality is attributed by CMS to the first hospital providing treatment (12, 19), and not all transferring hospitals participate in ACTION-GWTG. We also excluded patients leaving against medical advice (11, 12). We randomly selected one hospitalization per year for patients with multiple hospitalizations.

Outcomes

The outcome for all analyses was mortality within 30 days of the admission date. Vital status was determined through linkage of ACTION-GWTG to the National Death Index.

Determination of Hospital 30-Day Risk-Standardized Mortality Rates (RSMRs)

We calculated hospital 30-day RSMRs for AMI using an established electronic quality measure developed by CMS for risk adjustment using clinical data from electronic health records (11, 12). This measure was originally created and validated using ACTION-GWTG and was endorsed by the National Quality Forum as suitable for public reporting. We used this electronic measure to calculate hospital 30-day RSMRs rather than the administrative claims measure used for public reporting (19) because incorporation of physiologic biomarkers in risk adjustment has face validity and because pre-admission claims data were not available for non-Medicare beneficiaries in ACTION-GWTG.

The methods used to identify candidate variables for inclusion in the CMS electronic measure have been described previously (11, 12). Variables had to be clinically relevant to AMI, demonstrate strong association with 30-day mortality, and be feasibly extracted from structured fields within the electronic health record. Variables also needed to be consistently obtained, captured with standard definitions, and recorded in standard formats. Variables unrelated to clinical status at hospital presentation or potentially related to post-admission events such as treatments, complications, and post-admission laboratory results were not included. The final measure included variables for patient age, heart rate at presentation, systolic blood pressure at presentation, creatinine at presentation, and troponin ratio (initial troponin value/troponin upper range limit for hospital) at presentation. We used these same variables in our study. Variables for ST-segment elevation on the electrocardiogram at presentation, cardiogenic shock at presentation, acute heart failure at presentation, and cardiac arrest at presentation were not included because these variables were often recorded in non-standard formats across hospitals and in non-structured fields within the electronic health record. However, these variables were included in a sensitivity analysis described below.

Hospital mortality rates for AMI were calculated using hospital 30-day RSMRs in a manner consistent with publicly reported outcomes measures (20). The method for calculating 30-day RSMRs involves fitting hierarchical logistic regression models for the outcome of death within 30 days of the admission date with adjustment for predictor variables at hospital presentation (12, 19). These models account for patient clustering by hospital. Data are modeled simultaneously at patient and hospital levels, and hospital random intercepts are calculated to account for variance in outcomes within and between hospitals. The hospital-specific 30-day RSMR is the ratio of the predicted number of deaths to the expected number of deaths for a specific patient population within the hospital, multiplied by the unadjusted 30-day mortality rate for that patient population across all hospitals (19). The expected number of deaths for each hospital was estimated by applying estimated regression coefficients to the patient characteristics of each hospital’s patients, adding the average of the hospital-specific intercepts, and after transformation, summing over all patients in the hospital to obtain the expected count. The predicted number of deaths was calculated by applying the estimated regression coefficients to the patient characteristics of each hospital’s patients, adding the hospital-specific intercept (representing baseline mortality risk at the specific hospital), and after transformation, summing over all patients in the hospital to obtain the predicted count.
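The ratio calculation described above can be illustrated with a minimal Python sketch. It assumes that the regression coefficients, the hospital-specific intercept, and the average of the hospital-specific intercepts have already been estimated by the hierarchical model (the fitting step is not shown). All function and parameter names are hypothetical and are not part of the CMS measure specification.

```python
import math


def inv_logit(x):
    """Transform a log-odds value into a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def hospital_rsmr(patients, coefs, hospital_intercept, mean_intercept, overall_rate):
    """Illustrative RSMR for one hospital.

    patients: list of covariate lists, one per patient at this hospital
    coefs: estimated regression coefficients (assumed already fitted)
    hospital_intercept: this hospital's random intercept
    mean_intercept: average of the hospital-specific intercepts
    overall_rate: unadjusted 30-day mortality rate across all hospitals
    """
    predicted = 0.0  # deaths predicted using this hospital's own intercept
    expected = 0.0   # deaths expected using the average intercept
    for x in patients:
        linear = sum(b * v for b, v in zip(coefs, x))
        predicted += inv_logit(hospital_intercept + linear)
        expected += inv_logit(mean_intercept + linear)
    return (predicted / expected) * overall_rate
```

When a hospital's intercept equals the average intercept, the predicted and expected counts coincide and the RSMR equals the overall unadjusted rate. A lower hospital intercept yields an RSMR below that rate.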

As a sensitivity analysis, we recalculated hospital 30-day RSMRs for AMI using additional clinical variables previously shown to be predictive of AMI mortality (17, 21) including ST-segment elevation on the electrocardiogram, cardiogenic shock, acute heart failure, and cardiac arrest at presentation.

Determination of Hospital Value-Based Purchasing (HVBP) Program AMI Achievement Scores

To calculate achievement scores for each hospital, we applied the methodology utilized by CMS as part of its HVBP Program, which was created by the Affordable Care Act to incentivize hospital quality improvements (13). HVBP quantifies hospital performance with regard to care processes, patient experiences, efficiency, and outcomes (13). Hospital 30-day mortality for AMI is a component measure of hospitals’ HVBP scores. Briefly, CMS awards achievement points by comparing individual hospital 30-day RSMRs during a 3-year performance period to all hospitals’ 30-day RSMRs during a 1-year baseline period (13). A maximum of 10 achievement points is awarded to hospitals with 30-day RSMRs below a benchmark rate, defined as the mean of the bottom decile of hospital 30-day RSMRs in the baseline period (13). Hospitals with 30-day RSMRs above a threshold rate, defined as the 50th percentile of hospital 30-day RSMRs in the baseline period, are awarded zero achievement points (13). Hospitals with 30-day RSMRs that are above the benchmark rate and equal to or below the achievement threshold receive 1–10 achievement points (13). HVBP achievement scores calculated in this study utilized RSMRs that were computed using the CMS electronic measure for AMI rather than the usual publicly reported claims-based measure.
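The scoring rules above can be sketched in Python as follows. The text states only that hospitals between the benchmark and the achievement threshold receive 1–10 points. The linear interpolation with rounding used here, and the treatment of an RSMR exactly equal to the benchmark as earning 10 points, are assumptions made for illustration. Function names are hypothetical.

```python
import math
import statistics


def hvbp_cutoffs(baseline_rsmrs):
    """Return (benchmark, threshold) from baseline-period hospital RSMRs.

    benchmark: mean of the bottom (lowest-mortality) decile
    threshold: 50th percentile (median)
    """
    ordered = sorted(baseline_rsmrs)
    n_decile = max(1, len(ordered) // 10)
    benchmark = statistics.mean(ordered[:n_decile])
    threshold = statistics.median(ordered)
    return benchmark, threshold


def achievement_points(rsmr, benchmark, threshold):
    """Illustrative achievement points for one hospital's RSMR."""
    if rsmr <= benchmark:
        return 10  # at or below the benchmark (assumed tie handling)
    if rsmr > threshold:
        return 0   # above the achievement threshold
    # Assumed interpolation: position between threshold (0) and benchmark (1),
    # scaled to 9 points, offset by 0.5, then rounded half up.
    frac = (threshold - rsmr) / (threshold - benchmark)
    points = math.floor(9 * frac + 0.5 + 0.5)
    return min(10, max(1, points))
```

For example, with baseline RSMRs of 1.0 through 20.0 in steps of 1.0, the benchmark is 1.5 and the threshold is 10.5. A hospital exactly at the threshold would receive 1 point under these assumptions.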

Statistical Analyses

To compare patient characteristics, hospital characteristics, and 30-day mortality between older and younger patients, we calculated frequencies and percentages for categorical variables and means and standard deviations (SD) for continuous variables. We used chi-square tests for categorical variables and t tests for continuous variables to test differences between older and younger patients.

Primary Analyses

We used the CMS electronic measure methodology to separately calculate hospital 30-day RSMRs for older patients, younger patients, and all adult patients with AMI. To better estimate hospital 30-day RSMRs for each age group, we separately calculated coefficients for predictor variables in models for older patients, younger patients, and all adult patients. We examined the discrimination of patient-level 30-day mortality models for all three age groups using the C-statistic (area under the Receiver Operating Characteristic curve).
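As an illustration of what the C-statistic measures, the sketch below computes it directly as the proportion of (died, survived) patient pairs in which the patient who died had the higher predicted risk, counting ties as one half. This is a generic pairwise formulation written for clarity, not the SAS procedure used in the study, and the function name is hypothetical.

```python
def c_statistic(risks, died):
    """Concordance (area under the ROC curve) from predicted risks and outcomes.

    risks: predicted 30-day mortality probabilities
    died: matching 0/1 (or False/True) outcome indicators
    """
    concordant = 0.0
    pairs = 0
    for risk_d, d in zip(risks, died):
        if not d:
            continue
        for risk_s, s in zip(risks, died):
            if s:
                continue
            pairs += 1
            if risk_d > risk_s:
                concordant += 1.0
            elif risk_d == risk_s:
                concordant += 0.5  # ties count as half-concordant
    if pairs == 0:
        raise ValueError("need at least one death and one survivor")
    return concordant / pairs
```

The double loop is quadratic in the number of patients, so it is suitable only for small examples. A rank-based calculation would be preferable at registry scale.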

We ranked hospitals by their 30-day RSMRs for older patients, younger patients, and all adult patients with AMI and plotted agreement in these rankings. We grouped hospitals in three ways as has been done in prior studies of hospital performance (22): (1) top 20%, middle 60%, bottom 20%; (2) top 10%, middle 80%, bottom 10%; (3) better-than-expected, no different-than-expected, and worse-than-expected per the CMS measurement algorithm, which incorporates 95% confidence intervals around each hospital’s 30-day RSMR estimate. We restricted calculations to hospitals with ≥50 AMI hospitalizations in each age group.
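The percentile-based groupings can be sketched as below. Hospitals are ranked by RSMR (lower is better), labeled top, middle, or bottom, and then cross-tabulated between two age groups. The handling of tied RSMRs and the rounding of group sizes are assumptions, and the confidence-interval-based CMS classification is not shown. All names are hypothetical.

```python
from collections import Counter


def tiers(rsmr_by_hospital, tail_frac):
    """Label each hospital 'top', 'middle', or 'bottom' by RSMR rank.

    tail_frac: fraction of hospitals in each tail (e.g., 0.2 or 0.1)
    """
    order = sorted(rsmr_by_hospital, key=rsmr_by_hospital.get)
    k = max(1, round(len(order) * tail_frac))
    labels = {}
    for i, hospital in enumerate(order):
        if i < k:
            labels[hospital] = "top"        # lowest mortality
        elif i >= len(order) - k:
            labels[hospital] = "bottom"     # highest mortality
        else:
            labels[hospital] = "middle"
    return labels


def agreement_table(rsmr_older, rsmr_younger, tail_frac):
    """Count hospitals by (older tier, younger tier)."""
    older = tiers(rsmr_older, tail_frac)
    younger = tiers(rsmr_younger, tail_frac)
    return Counter((older[h], younger[h]) for h in older if h in younger)
```

Each key of the returned counter corresponds to one cell of a 3-by-3 agreement table. Cells off the diagonal represent hospitals whose ranking group differs between the two age groups.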

Finally, we calculated Pearson correlation coefficients describing the association of CMS electronic measure-based HVBP achievement scores for older patients with HVBP achievement scores for younger patients and all adult patients with AMI. CMS does not incorporate measures of uncertainty, such as 95% confidence intervals, when calculating HVBP achievement scores. Correlation estimates were restricted to hospitals with ≥50 AMI hospitalizations in each age group.
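A minimal sketch of this step is shown below: hospitals are first restricted to those with at least 50 hospitalizations in both age groups, and the Pearson correlation coefficient is then computed from the paired achievement scores. The dictionary-based inputs and function names are hypothetical conveniences for illustration.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def correlate_scores(scores_a, scores_b, volume_a, volume_b, min_n=50):
    """Correlate hospital scores for two age groups.

    Only hospitals with at least min_n hospitalizations in both
    age groups contribute to the estimate.
    """
    keep = [
        h for h in scores_a
        if h in scores_b
        and volume_a.get(h, 0) >= min_n
        and volume_b.get(h, 0) >= min_n
    ]
    return pearson_r([scores_a[h] for h in keep], [scores_b[h] for h in keep])
```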

Sensitivity Analyses

We repeated the above analyses after adding additional predictor variables for ST-segment elevation on the electrocardiogram, cardiogenic shock, acute heart failure, and cardiac arrest at presentation to the CMS electronic measure for AMI when calculating hospital 30-day RSMRs.

All statistical tests were 2-sided, and a P value <0.05 was considered statistically significant. Analyses were conducted using SAS 9.4 (SAS Institute Inc.). The Yale Human Investigation Committee approved analyses of ACTION-GWTG using a limited data set.

Role of the Funding Source

This research was supported by the American College of Cardiology Foundation’s National Cardiovascular Data Registry (NCDR). The NCDR research committee reviewed the final manuscript before submission but had no role in the design, conduct, or reporting of the study.

RESULTS

We identified 543,794 adult hospitalizations for AMI from 986 hospitals. Of these, 267,763 (49.2%) involved patients ≥65 years-old and 276,031 (50.8%) involved patients <65 years-old. Characteristics of these hospitalizations are shown in Table 1. Average age was 76.3 years among older patients, 53.6 years among younger patients, and 64.8 years among all adult patients. Compared with younger patients, older patients were less likely to have ST-elevation myocardial infarction and more likely to have acute heart failure on admission (P<0.001 for both). Death within 30 days occurred in 9.3% of older patients, 3.1% of younger patients, and 6.2% of all adult patients.

Table 1.

Characteristics of Patients with Acute Myocardial Infarction

| Patient Characteristic | All Adult Patients ≥18 Years-Old (N=543,794) | Older Patients ≥65 Years-Old (N=267,763) | Younger Patients 18–64 Years-Old (N=276,031) | P Value for Difference between Older and Younger Patients |
|---|---|---|---|---|
| Mean age (SD) | 64.8 (13.8) | 76.3 (8.1) | 53.6 (7.8) | --- |
| Mean heart rate at presentation in BPM (SD) | 84.5 (24.3) | 85.1 (25.1) | 83.8 (23.4) | <0.001 |
| Mean systolic blood pressure at presentation in mm Hg (SD) | 145.3 (35.0) | 144.5 (35.3) | 146.2 (34.7) | <0.001 |
| Mean serum creatinine at presentation in mg/dL (SD) | 1.3 (1.2) | 1.4 (1.1) | 1.2 (1.2) | <0.001 |
| Troponin ratio at presentation (SD) | 70.7 (926.8) | 66.0 (853.1) | 75.2 (993.4) | <0.001 |
| ST-elevation on ECG at presentation (%) | 39.3 | 31.7 | 46.7 | <0.001 |
| Acute heart failure at presentation (%) | 13.4 | 19.1 | 7.8 | <0.001 |
| Cardiogenic shock at presentation (%) | 4.2 | 4.5 | 3.9 | <0.001 |
| Cardiac arrest at presentation (%) | 3.8 | 3.2 | 4.4 | <0.001 |

The distribution of patients by hospital characteristic for older patients, younger patients, and all adult patients is shown in Appendix Table 1. There were small differences in hospital location (rural, suburban, urban), hospital type (government, private/community, university), hospital teaching status, and hospital census region across age groups.

Hospital AMI volumes were large. Median hospital AMI volume was 389 (interquartile range [IQR] 141–760) for all adult patients, 182 (IQR 66–373) for older patients, and 195 (IQR 73–385) for younger patients.

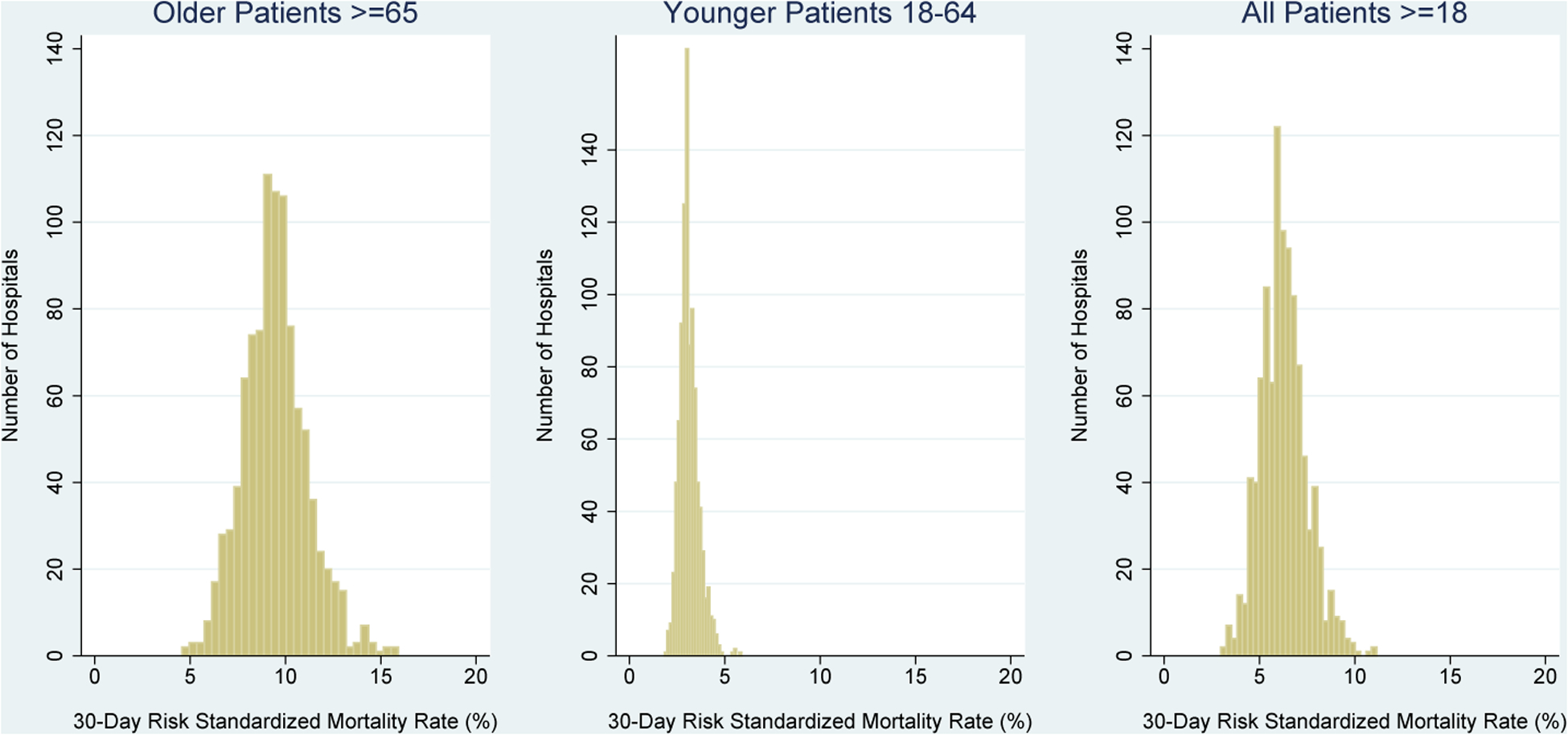

Distributions of hospital 30-day RSMRs for older patients, younger patients, and all adult patients are shown in Figure 1. Median hospital 30-day RSMRs were 9.4% (IQR 8.4%−10.4%) for older patients, 3.0% (IQR 2.8%−3.4%) for younger patients, and 6.2% (IQR 5.4%−7.0%) for all adult patients.

Figure 1. Distribution of Hospital 30-Day Risk-Standardized Mortality Rates for Older Patients, Younger Patients, and All Adult Patients with Acute Myocardial Infarction.

Hospital 30-day risk-standardized mortality rates for older patients ≥65 years-old, younger patients 18–64 years-old, and all adult patients ≥18 years-old are presented sequentially from left to right. Hospital risk-standardized mortality rates were calculated with the Centers for Medicare & Medicaid Services electronic measure for acute myocardial infarction. RSMR: risk-standardized mortality rate.

Primary Analysis of Hospital 30-Day RSMRs (CMS Electronic Measure)

Model discrimination was high. C-statistics were 0.78, 0.83, and 0.83 in models for older patients, younger patients, and all adult patients, respectively.

Most top and bottom performing hospitals for older patients were neither top nor bottom performers for younger patients (Table 2). Among 303 hospitals ranked in the top 20% or bottom 20% for older patients, 59% (178) were ranked differently for younger patients. Among 151 hospitals ranked in the top 10% or bottom 10% for older patients, 70% (105) were ranked differently for younger patients. Finally, among 131 hospitals with better-than-expected or worse-than-expected mortality performance for older patients per the CMS algorithm (11), 88% (115) were ranked differently for younger patients. No hospitals with better-than-expected performance for older patients had worse-than-expected performance for younger patients, or vice versa.

Table 2.

Hospital Ranking Agreements for Older Patients and Younger Patients with Acute Myocardial Infarction

| | Hospital 30-Day RSMRs* for Older Patients ≥65 Years-Old | | |
|---|---|---|---|
| Hospital 30-Day RSMRs* for Younger Patients 18–64 Years-Old | Top 20% (n=153) | Middle 60% (n=456) | Bottom 20% (n=150) |
| Top 20% (n=158) | 61 | 82 | 15 |
| Middle 60% (n=447) | 84 | 292 | 71 |
| Bottom 20% (n=154) | 8 | 82 | 64 |
| Hospital 30-Day RSMRs* for Younger Patients 18–64 Years-Old | Top 10% (n=77) | Middle 80% (n=608) | Bottom 10% (n=74) |
| Top 10% (n=79) | 25 | 52 | 2 |
| Middle 80% (n=605) | 51 | 503 | 51 |
| Bottom 10% (n=75) | 1 | 53 | 21 |
| Hospital 30-Day RSMRs* for Younger Patients 18–64 Years-Old | Better-Than-Expected (n=62) | No Different-Than-Expected (n=628) | Worse-Than-Expected (n=69) |
| Better-Than-Expected (n=10) | 6 | 4 | 0 |
| No Different-Than-Expected (n=728) | 56 | 613 | 59 |
| Worse-Than-Expected (n=21) | 0 | 11 | 10 |

Each cell in table indicates number of hospitals (total N=759 hospitals, 227 hospitals omitted due to <50 AMI hospitalizations among older adults or younger adults). Shaded cells correspond to movements in hospital mortality rankings for older patients compared with younger patients.

RSMR: risk-standardized mortality rate.
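The discordance figures reported in the text can be reproduced from the Table 2 cell counts with the short Python sketch below. Rows are tiers for younger patients and columns are tiers for older patients, as in the table. The function name is hypothetical.

```python
def discordance(table):
    """Return (discordant, outliers) for a 3x3 agreement table.

    table[i][j]: rows = younger-patient tier, columns = older-patient tier,
    each ordered as (top, middle, bottom).
    outliers: hospitals in the top or bottom tier for older patients
    discordant: outliers placed in a different tier for younger patients
    """
    outliers = sum(row[0] + row[2] for row in table)
    concordant = table[0][0] + table[2][2]
    return outliers - concordant, outliers


# Cell counts copied from Table 2.
top20 = [[61, 82, 15], [84, 292, 71], [8, 82, 64]]
top10 = [[25, 52, 2], [51, 503, 51], [1, 53, 21]]
cms = [[6, 4, 0], [56, 613, 59], [0, 11, 10]]

for name, table in [("20% tails", top20), ("10% tails", top10), ("CMS algorithm", cms)]:
    discordant, outliers = discordance(table)
    print(f"{name}: {discordant}/{outliers} = {100 * discordant / outliers:.0f}%")
```

This yields 178 of 303 (59%), 105 of 151 (70%), and 115 of 131 (88%), matching the values reported for the three grouping approaches.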

In contrast, most top and bottom performing hospitals for older patients were also top and bottom performers for all adult patients (Table 3). Among 312 hospitals ranked in the top 20% or bottom 20% for older patients, only 18% (55) were ranked differently for all adult patients. Among 156 hospitals ranked in the top 10% or bottom 10% for older patients, 23% (36) were ranked differently for all adult patients. Finally, among 133 hospitals with better-than-expected or worse-than-expected mortality performance for older patients, 13% (17) were ranked differently for all adult patients.

Table 3.

Hospital Ranking Agreements for Older Patients and All Adult Patients with Acute Myocardial Infarction

| | Hospital 30-Day RSMRs* for Older Patients ≥65 Years-Old | | |
|---|---|---|---|
| Hospital 30-Day RSMRs* for All Adult Patients ≥18 Years-Old | Top 20% (n=156) | Middle 60% (n=470) | Bottom 20% (n=156) |
| Top 20% (n=168) | 135 | 33 | 0 |
| Middle 60% (n=458) | 21 | 403 | 34 |
| Bottom 20% (n=156) | 0 | 34 | 122 |
| Hospital 30-Day RSMRs* for All Adult Patients ≥18 Years-Old | Top 10% (n=78) | Middle 80% (n=626) | Bottom 10% (n=78) |
| Top 10% (n=86) | 62 | 24 | 0 |
| Middle 80% (n=616) | 16 | 580 | 20 |
| Bottom 10% (n=80) | 0 | 22 | 58 |
| Hospital 30-Day RSMRs* for All Adult Patients ≥18 Years-Old | Better-Than-Expected (n=62) | No Different-Than-Expected (n=649) | Worse-Than-Expected (n=71) |
| Better-Than-Expected (n=85) | 57 | 28 | 0 |
| No Different-Than-Expected (n=617) | 5 | 600 | 12 |
| Worse-Than-Expected (n=80) | 0 | 21 | 59 |

Each cell in table indicates number of hospitals (total N=782 hospitals, 204 hospitals omitted due to <50 AMI hospitalizations among older adults). Shaded cells correspond to movements in hospital mortality rankings for older patients compared with all adult patients.

RSMR: risk-standardized mortality rate.

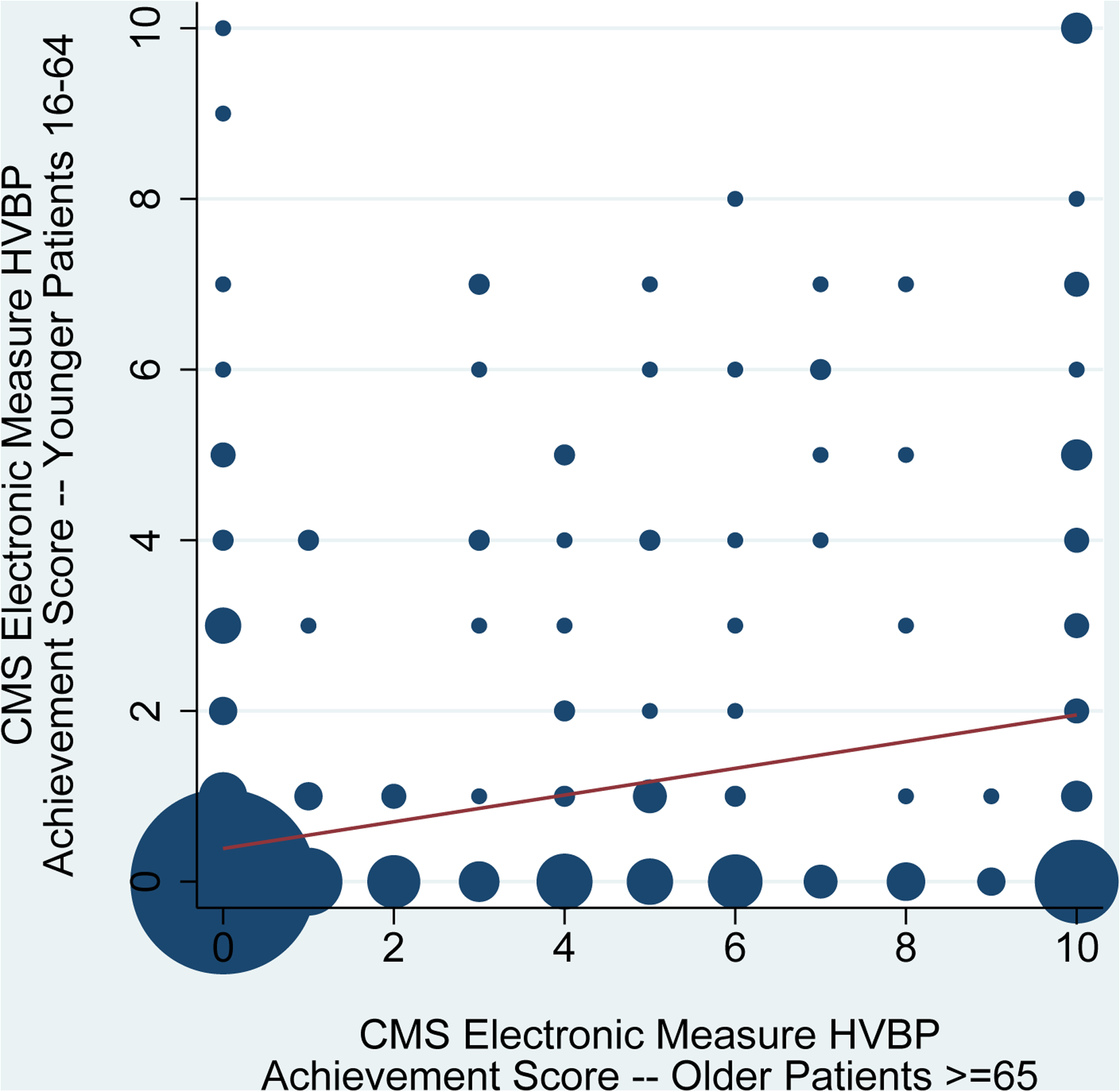

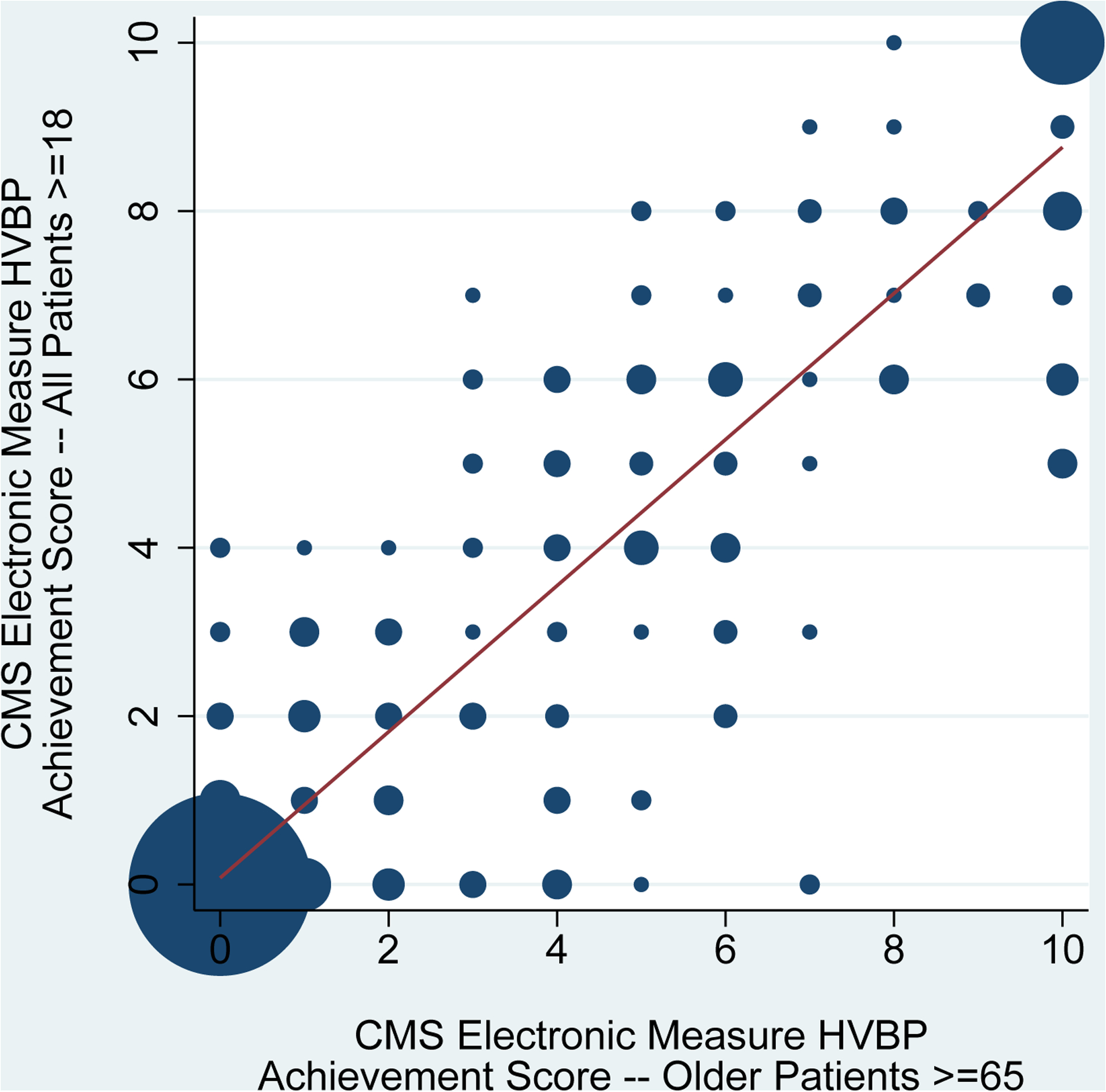

Consistent with these findings, CMS electronic measure-based HVBP achievement scores for older patients were weakly correlated with HVBP achievement scores for younger patients (correlation coefficient 0.30, Figure 2A) and strongly correlated with HVBP achievement scores for all adult patients (correlation coefficient 0.92, Figure 2B).

Figure 2. Correlation of CMS Electronic Measure Hospital Value-Based Purchasing Program Achievement Scores for Older Patients with Achievement Scores for Younger Patients and All Adult Patients with Acute Myocardial Infarction.

Hospital Value-Based Purchasing (HVBP) Program achievement scores for acute myocardial infarction were calculated using the Centers for Medicare & Medicaid Services’ methodology. Risk-standardized mortality rates were computed using the Centers for Medicare & Medicaid Services’ electronic measure for acute myocardial infarction rather than the usual publicly reported claims-based measure. The correlation of HVBP Program achievement scores for older patients ≥65 years-old with HVBP Program achievement scores for younger patients 18–64 years-old is shown in panel 2A (n=495 hospitals). The correlation of HVBP Program achievement scores for older patients ≥65 years-old with HVBP Program achievement scores for all adult patients ≥18 years-old is shown in panel 2B (n=490 hospitals). Circle size is proportional to the number of hospitals. The volume-weighted trend line is displayed. HVBP: Hospital Value-Based Purchasing.

Sensitivity Analysis of Hospital 30-Day RSMRs (CMS Electronic Measure + Additional Clinical Predictors of Mortality)

Model discrimination was higher after adding variables for ST-segment elevation on the electrocardiogram, cardiogenic shock, acute heart failure, and cardiac arrest at presentation. C-statistics were 0.82, 0.88, and 0.86 in models for older patients, younger patients, and all adult patients, respectively.

Adding more clinical variables for risk adjustment, however, did not materially change findings. As above, most top and bottom performing hospitals for older patients were neither top nor bottom performers for younger patients (Appendix Table 2). For example, among 153 hospitals considered to have better-than-expected or worse-than-expected mortality performance for older patients, 87% (133) were ranked differently for younger patients. Similarly, most top and bottom performing hospitals for older patients were also top and bottom performers for all adult patients (Appendix Table 3). For example, among 157 hospitals considered to have better-than-expected or worse-than-expected mortality performance for older patients, only 11% (17) were ranked differently for all adult patients.

Consistent with these findings, CMS electronic measure-based HVBP achievement scores for older patients were again weakly correlated with HVBP achievement scores for younger patients (correlation coefficient 0.26, Appendix Figure 2) and strongly correlated with HVBP achievement scores for all adult patients (correlation coefficient 0.91, Appendix Figure 3).

DISCUSSION

In this analysis of detailed clinical data from the largest US registry of AMI, we found that hospital mortality rankings for older patients with AMI inconsistently reflected rankings for younger patients. Most top and bottom performing hospitals for older patients were ranked differently for younger patients, regardless of the approach used to identify performance outliers. These differences resulted in large discrepancies in achievement scores analogous to those used by the CMS Hospital Value-Based Purchasing Program. These results suggest that hospital quality for Medicare beneficiaries, the most commonly used benchmark for performance assessment, should not be assumed to reflect outcomes for younger persons.

Our results support the need to incorporate younger patients into hospital outcomes assessment. Data from younger patients are needed to permit examination of the presence and impact of age-related differences in hospital quality. These data are also needed for hospitals and clinicians to benchmark performance and continuously improve outcomes over time. The lack of focus on hospital outcomes for younger patients is a missed opportunity, as initial hospital quality for AMI may influence long-term mortality (23, 24). AMI is a noteworthy case, as almost half of patients are less than 65 years of age (25).

Yet substantial barriers exist to measuring hospital quality and outcomes for younger patients. While many states have embarked on building databases containing administrative claims from all payers (26, 27), there is no centralized database with health information on younger patients across the US. This stands in contrast to data for Medicare fee-for-service beneficiaries, which are organized in an integrated repository (28). At present, clinical data for younger patients largely exist in databases of individual health plans or hospitals that do not easily link to one another. Interoperable medical record systems and devices that can exchange and interpret shared data (29) and link to national death indices may therefore be needed to examine hospital 30-day mortality rates for younger patients. The Office of the National Coordinator for Health Information Technology has released a roadmap for interoperability with the goal of achieving nationwide interoperability by 2024 (30). Yet many impediments remain, including technical challenges, privacy and security concerns, and lack of alignment with payment incentives (30).

The reasons why hospital mortality performance differs for older and younger patients with AMI are unknown. It may be that care patterns diverge by age in variable ways across hospitals. Previous studies have shown that high-risk older patients with AMI are less likely to receive guideline-concordant medical therapies and primary percutaneous coronary intervention (PCI) (31–33). Older patients undergoing PCI are also less likely to receive bleeding avoidance strategies like transradial access and vascular closure devices (34, 35). Treatment also varies across hospitals (36–38). However, it is not known whether variability in treatment by age and by hospital interact to influence outcomes. As incentive programs to improve quality for AMI have largely involved Medicare beneficiaries (20, 39, 40), it is also possible that our findings reflect differential attention to treatment and outcomes for older and younger patients. Alternatively, observed differences in hospital RSMRs by age may result from particular difficulties with measuring hospital quality for younger patients due to relatively low mortality rates in this population and relatively little variation in mortality performance across hospitals, both of which may cause increased instability in hospital rankings. In contrast, it is unlikely that our findings are explained by differences in model performance for older and younger patients, as model discrimination was high for all age groups across analyses.

While we found that hospital 30-day RSMRs for older patients may provide a useful overall signal of hospital-wide quality for AMI due to the preponderance of deaths among older adults, this result does not resolve measurement gaps for younger patients. We did not know a priori what our analyses would show and were hopeful that hospital mortality rates for older patients would be strongly correlated with mortality rates for both younger patients and all patients with AMI. As quality measures have proliferated and made reporting requirements increasingly burdensome (41, 42), a strong alignment in mortality outcomes for older, younger, and all patients would have suggested that current public reporting for Medicare beneficiaries may be sufficient to characterize hospital-wide mortality for AMI. Such consolidation of quality measures would be consistent with recently articulated priorities of CMS (43), the Institute of Medicine (44), and other organizations (45, 46). Unfortunately, our results have shown that Medicare outcomes in isolation may not provide a reliable signal of quality for younger adults. Stratified reporting of quality measures with separate estimates of hospital quality for both younger and older patients would permit further examination of the presence and impact of age-related differences in hospital quality.

Our study should be interpreted in the context of the following potential limitations. First, ACTION-GWTG only includes a minority of US hospitals caring for AMI. However, characteristics of patients and treatment patterns in ACTION-GWTG compare favorably with national estimates (47), and ACTION-GWTG has been used extensively to study quality and outcomes after AMI (48, 49). Second, we did not calculate 30-day RSMRs with the administrative claims measure currently used by CMS for hospital profiling of AMI mortality (19). Rather, we chose to use an electronic measure with clinical data to calculate hospital 30-day RSMRs, as incorporation of physiologic biomarkers describing clinical status for risk adjustment has face validity and leverages rich data within ACTION-GWTG. This electronic measure was applied to all study cohorts, and findings were consistent across analyses. Third, our finding of a weak relationship between hospital mortality for older and younger patients may relate to low mortality rates and limited ranges in hospital 30-day RSMRs for younger patients, both of which increase imprecision of hospital quality estimates. However, model performance was especially high for younger patients in both primary and sensitivity analyses (C-statistics of 0.83 and 0.88, respectively) and exceeded model performance for older adults (respective C-statistics of 0.78 and 0.82). In addition, findings were based on large sample sizes with four years of study data and were unchanged in further sensitivity analyses that were restricted to hospitals with greater than 200 AMI hospitalizations among both older and younger adults during the study period (results available on request). Fourth, we only studied persons hospitalized with AMI and therefore cannot comment on the relationship of hospital mortality rates by age for patients with other conditions.

CONCLUSIONS

Hospital mortality rankings for older patients with AMI inconsistently reflect rankings for younger patients. Estimates of hospital quality using data from Medicare beneficiaries should therefore not be assumed to reflect quality for younger persons, who constitute almost one-half of patients with AMI. Additional outcomes measurement beyond Medicare beneficiaries may be required to understand and report care quality more broadly within hospitals.

Supplementary Material

Correlation of CMS Electronic Measure Hospital Value-Based Purchasing Program Achievement Scores for Older Patients with Achievement Scores for Younger Patients with Acute Myocardial Infarction (Sensitivity Analysis). Hospital Value-Based Purchasing (HVBP) Program achievement scores for acute myocardial infarction were calculated using the Centers for Medicare & Medicaid Services’ methodology. Risk-standardized mortality rates were computed using the Centers for Medicare & Medicaid Services’ electronic measure for acute myocardial infarction with additional predictor variables for ST-segment elevation on the electrocardiogram at presentation, cardiogenic shock at presentation, acute heart failure at presentation, and cardiac arrest at presentation rather than the usual publicly reported claims-based measure (n=495 hospitals). Circle size is proportional to the number of hospitals. The volume-weighted trend line is displayed. HVBP: Hospital Value-Based Purchasing.

Correlation of CMS Electronic Measure Hospital Value-Based Purchasing Program Achievement Scores for Older Patients with Achievement Scores for All Adult Patients with Acute Myocardial Infarction (Sensitivity Analysis). Hospital Value-Based Purchasing (HVBP) Program achievement scores for acute myocardial infarction were calculated using the Centers for Medicare & Medicaid Services’ methodology. Risk-standardized mortality rates were computed using the Centers for Medicare & Medicaid Services’ electronic measure for acute myocardial infarction with additional predictor variables for ST-segment elevation on the electrocardiogram at presentation, cardiogenic shock at presentation, acute heart failure at presentation, and cardiac arrest at presentation rather than the usual publicly reported claims-based measure (n=490 hospitals). Circle size is proportional to the number of hospitals. The volume-weighted trend line is displayed. HVBP: Hospital Value-Based Purchasing.

Funding/Support:

This project was funded by the American College of Cardiology. At the time this research was conducted, Dr. Dharmarajan was supported by grant K23AG048331 from the National Institute on Aging and the American Federation for Aging Research through the Paul B. Beeson Career Development Award Program. He was also supported by grant P30AG021342 via the Yale Claude D. Pepper Older Americans Independence Center. Drs. Spatz and Desai are supported by grant K12HS023000 from the Agency for Healthcare Research and Quality. Dr. Curtis is supported by grant 1U01HL105270 (Center for Cardiovascular Outcomes Research at Yale University) from the National Heart, Lung, and Blood Institute. The content is solely the responsibility of the authors and does not necessarily represent the official views or policies of the American College of Cardiology, Department of Health and Human Services, the National Institutes of Health, or the American Federation for Aging Research.

Conflict of Interest Disclosures:

KD, RLM, YW, JSR, ESS, NRD, SMB, and JPC work under contract with the Centers for Medicare & Medicaid Services to develop and maintain performance measures. At the time this research was performed, KD was a consultant for and member of a scientific advisory board for Clover Health. RLM is on an adjudication committee for Pfizer. YW and JPC work under contract with the ACC/NCDR. JSR receives support from the Food and Drug Administration to develop methods for post-market surveillance of medical devices. JSR and NRD report receiving research support through Yale University from Medtronic, Inc. and Johnson & Johnson to develop methods of clinical trial data sharing and from the Blue Cross Blue Shield Association to better understand medical technology evidence generation. JAdL is a consultant for Roche Diagnostics, Abbott Diagnostics, and Ortho Clinical Diagnostics, serves on an endpoint committee for Siemens Healthcare Diagnostics and Radiometer, and serves on a steering committee for Amgen. GCF serves as vice-chair and PAH serves as chair of the ACC/AHA Task Force on Performance Measures.
DLB discloses the following relationships - Advisory Board: Cardax, Elsevier Practice Update Cardiology, Medscape Cardiology, Regado Biosciences; Board of Directors: Boston VA Research Institute, Society of Cardiovascular Patient Care; Chair: American Heart Association Quality Oversight Committee; Data Monitoring Committees: Duke Clinical Research Institute, Harvard Clinical Research Institute, Mayo Clinic, Population Health Research Institute; Honoraria: American College of Cardiology (Senior Associate Editor, Clinical Trials and News, ACC.org), Belvoir Publications (Editor in Chief, Harvard Heart Letter), Duke Clinical Research Institute (clinical trial steering committees), Harvard Clinical Research Institute (clinical trial steering committee), HMP Communications (Editor in Chief, Journal of Invasive Cardiology), Journal of the American College of Cardiology (Guest Editor; Associate Editor), Population Health Research Institute (clinical trial steering committee), Slack Publications (Chief Medical Editor, Cardiology Today’s Intervention), Society of Cardiovascular Patient Care (Secretary/Treasurer), WebMD (CME steering committees); Other: Clinical Cardiology (Deputy Editor), NCDR-ACTION Registry Steering Committee (Chair), VA CART Research and Publications Committee (Chair); Research Funding: Amarin, Amgen, AstraZeneca, Bristol-Myers Squibb, Eisai, Ethicon, Forest Laboratories, Ischemix, Medtronic, Pfizer, Roche, Sanofi Aventis, The Medicines Company; Royalties: Elsevier (Editor, Cardiovascular Intervention: A Companion to Braunwald’s Heart Disease); Site Co-Investigator: Biotronik, Boston Scientific, St. Jude Medical; Trustee: American College of Cardiology; Unfunded Research: FlowCo, PLx Pharma, Takeda.

Appendix Table 1.

Distribution of Patients By Hospital Characteristic for All Adult Patients, Older Patients, and Younger Patients with Acute Myocardial Infarction

| Hospital Characteristic | All Adult Patients (N=543,794) | Older Patients (N=267,763) | Younger Patients (N=276,031) | P Value for Difference between Hospitals Caring for Older and Younger Patients |
|---|---|---|---|---|
| Hospital location | | | | <0.001 |
| Rural (%) | 81,836 (15.0) | 41,093 (15.4) | 40,743 (14.8) | |
| Suburban (%) | 169,062 (31.1) | 85,902 (32.1) | 83,160 (30.1) | |
| Urban (%) | 292,877 (53.9) | 140,761 (52.6) | 152,116 (55.1) | |
| Participant category | | | | <0.001 |
| Government (%) | 9,712 (1.8) | 4,161 (1.6) | 5,551 (2.0) | |
| Private/community (%) | 480,368 (88.3) | 240,373 (89.8) | 239,995 (87.0) | |
| University (%) | 53,695 (9.9) | 23,222 (8.7) | 30,473 (11.0) | |
| Teaching hospital (%) | 248,311 (45.7) | 119,056 (44.5) | 129,255 (46.8) | <0.001 |
| Public hospital (%) | 285,384 (52.5) | 141,055 (52.7) | 144,329 (52.3) | 0.36 |
| US census region | | | | <0.001 |
| Midwest (%) | 150,924 (27.8) | 75,935 (28.4) | 74,989 (27.2) | |
| Northeast (%) | 52,846 (9.7) | 26,979 (10.1) | 25,867 (9.4) | |
| South (%) | 269,118 (49.5) | 127,756 (47.7) | 141,362 (51.2) | |
| West (%) | 70,705 (13.0) | 36,977 (13.8) | 33,728 (12.2) | |

Appendix Table 2.

Hospital Ranking Agreements for Older Patients and Younger Patients with Acute Myocardial Infarction (Sensitivity Analysis)

| | Hospital 30-Day RSMRs* for Older Patients ≥65 Years-Old | | |
|---|---|---|---|
| Hospital 30-Day RSMRs* for Younger Patients <65 Years-Old | Top 20% (n=153) | Middle 60% (n=458) | Bottom 20% (n=148) |
| Top 20% (n=153) | 61 | 82 | 10 |
| Middle 60% (n=453) | 86 | 302 | 65 |
| Bottom 20% (n=153) | 6 | 74 | 73 |
| Hospital 30-Day RSMRs* for Younger Patients <65 Years-Old | Top 10% (n=77) | Middle 80% (n=609) | Bottom 10% (n=73) |
| Top 10% (n=77) | 20 | 55 | 2 |
| Middle 80% (n=604) | 56 | 497 | 51 |
| Bottom 10% (n=78) | 1 | 57 | 20 |
| Hospital 30-Day RSMRs* for Younger Patients <65 Years-Old | Better-Than-Expected (n=58) | No Different-Than-Expected (n=606) | Worse-Than-Expected (n=95) |
| Better-Than-Expected (n=20) | 7 | 13 | 0 |
| No Different-Than-Expected (n=701) | 51 | 568 | 82 |
| Worse-Than-Expected (n=38) | 0 | 25 | 13 |

Each cell in table indicates number of hospitals (total N=759 hospitals, 227 hospitals omitted due to <50 AMI hospitalizations among older adults or younger adults). Shaded cells correspond to movements in hospital mortality rankings for older patients compared with younger patients. We used the CMS electronic measure methodology with additional predictor variables for ST-segment elevation on the electrocardiogram at presentation, cardiogenic shock at presentation, acute heart failure at presentation, and cardiac arrest at presentation when calculating hospital 30-day RSMRs for older patients and younger patients with AMI. We ranked hospitals by their 30-day RSMRs for older patients and younger patients with AMI and plotted agreement in these rankings.

RSMR: risk-standardized mortality rate.
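As an illustration of how the agreement in Appendix Table 2 can be read, the following minimal Python sketch tallies the top 20%, middle 60%, and bottom 20% block of the table. The counts are copied from the table; the function names are hypothetical, and the summary proportions are an illustrative reading of the table rather than an analysis reported in the study.

```python
# Rows: ranking category for younger patients; columns: ranking category
# for older patients (order: top 20%, middle 60%, bottom 20%).
# Counts are copied from the first block of Appendix Table 2.
counts = [
    [61, 82, 10],
    [86, 302, 65],
    [6, 74, 73],
]


def column_totals(table):
    """Number of hospitals in each older-patient category."""
    return [sum(row[j] for row in table) for j in range(len(table[0]))]


def overall_agreement(table):
    """Share of all hospitals placed in the same category for both groups."""
    total = sum(sum(row) for row in table)
    diagonal = sum(table[i][i] for i in range(len(table)))
    return diagonal / total


def same_category_share(table):
    """For each older-patient category, the share of its hospitals that
    fall in the same category for younger patients."""
    totals = column_totals(table)
    return [table[j][j] / totals[j] for j in range(len(totals))]


col_totals = column_totals(counts)
agreement = overall_agreement(counts)
shares = same_category_share(counts)

labels = ["Top 20%", "Middle 60%", "Bottom 20%"]
print(f"Overall agreement: {agreement:.1%}")
for label, n, share in zip(labels, col_totals, shares):
    print(f"{label} for older patients (n={n}): {share:.1%} in same category for younger patients")
```

Read this way, 61 of the 153 hospitals in the top 20% for older patients, and 73 of the 148 in the bottom 20%, hold the same category for younger patients.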

Appendix Table 3.

Hospital Ranking Agreements for Older Patients and All Adult Patients with Acute Myocardial Infarction (Sensitivity Analysis)

| | Hospital 30-Day RSMRs* for Older Patients ≥65 Years-Old | | |
|---|---|---|---|
| Hospital 30-Day RSMRs* for All Adult Patients ≥18 Years-Old | Top 20% (n=156) | Middle 60% (n=470) | Bottom 20% (n=156) |
| Top 20% (n=163) | 128 | 35 | 0 |
| Middle 60% (n=455) | 28 | 403 | 24 |
| Bottom 20% (n=164) | 0 | 32 | 132 |
| Hospital 30-Day RSMRs* for All Adult Patients ≥18 Years-Old | Top 10% (n=78) | Middle 80% (n=626) | Bottom 10% (n=78) |
| Top 10% (n=83) | 60 | 23 | 0 |
| Middle 80% (n=616) | 18 | 584 | 14 |
| Bottom 10% (n=83) | 0 | 19 | 64 |
| Hospital 30-Day RSMRs* for All Adult Patients ≥18 Years-Old | Better-Than-Expected (n=58) | No Different-Than-Expected (n=625) | Worse-Than-Expected (n=99) |
| Better-Than-Expected (n=88) | 52 | 36 | 0 |
| No Different-Than-Expected (n=570) | 6 | 553 | 11 |
| Worse-Than-Expected (n=124) | 0 | 36 | 88 |

Each cell in the table indicates the number of hospitals (total N=782 hospitals, 204 hospitals omitted due to <50 AMI hospitalizations among older adults). Shaded cells correspond to movements in hospital mortality rankings for older patients compared with all adult patients. We used the CMS electronic measure methodology with additional predictor variables for ST-segment elevation on the electrocardiogram at presentation, cardiogenic shock at presentation, acute heart failure at presentation, and cardiac arrest at presentation when calculating hospital 30-day RSMRs for older patients and all adult patients with AMI. We ranked hospitals by their 30-day RSMRs for older patients and all adult patients with AMI and plotted agreement in these rankings.

RSMR: risk-standardized mortality rate.
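Agreement in a cross-classification such as Appendix Table 3 can also be summarized with a chance-corrected statistic. The sketch below computes an unweighted Cohen's kappa for the top 20%, middle 60%, and bottom 20% block of the table. Kappa was not used in this study; it is shown only as an assumed, illustrative summary, and the function name is hypothetical.

```python
# Rows: ranking category for all adult patients; columns: ranking category
# for older patients (order: top 20%, middle 60%, bottom 20%).
# Counts are copied from the first block of Appendix Table 3.
counts = [
    [128, 35, 0],
    [28, 403, 24],
    [0, 32, 132],
]


def cohens_kappa(table):
    """Unweighted Cohen's kappa for a square table of counts.

    Illustrative only: this statistic is an assumption of the sketch,
    not a method reported by the study.
    """
    size = len(table)
    total = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(size)) for j in range(size)]
    # Observed share of hospitals on the diagonal (same category twice).
    observed = sum(table[i][i] for i in range(size)) / total
    # Share expected on the diagonal if the two rankings were independent.
    expected = sum(row_totals[i] * col_totals[i] for i in range(size)) / total**2
    return (observed - expected) / (1 - expected)


kappa = cohens_kappa(counts)
print(f"Cohen's kappa, older vs. all adult patients: {kappa:.2f}")
```

Kappa equals 1 when every hospital falls on the diagonal and 0 when agreement is no better than expected by chance, so it complements the raw cell counts shown in the table.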

REFERENCES

- 1. Curry LA, Linnander EL, Brewster AL, Ting H, Krumholz HM, Bradley EH. Organizational culture change in U.S. hospitals: a mixed methods longitudinal intervention study. Implement Sci. 2015;10:29.

- 2. American College of Cardiology. H2H: Hospital to Home. Accessed at http://cvquality.acc.org/Initiatives/H2H.aspx on 17 July 2016.

- 3. Curry LA, Spatz E, Cherlin E, Thompson JW, Berg D, Ting HH, et al. What distinguishes top-performing hospitals in acute myocardial infarction mortality rates? A qualitative study. Ann Intern Med. 2011;154:384–90.

- 4. Bradley EH, Curry LA, Spatz ES, Herrin J, Cherlin EJ, Curtis JP, et al. Hospital strategies for reducing risk-standardized mortality rates in acute myocardial infarction. Ann Intern Med. 2012;156:618–26.

- 5. Bradley EH, Sipsma H, Brewster AL, Krumholz HM, Curry L. Strategies to reduce hospital 30-day risk-standardized mortality rates for patients with acute myocardial infarction: a cross-sectional and longitudinal survey. BMC Cardiovasc Disord. 2014;14:126.

- 6. Becker’s Infection Control & Clinical Quality. 48 hospitals with the lowest mortality rates from heart attacks. Accessed at http://www.beckershospitalreview.com/quality/48-hospitals-with-lowest-mortality-rates-from-heart-attacks.html on 28 July 2016.

- 7. The Advisory Board. Where Hospitals Have the Most Readmissions. Accessed at https://www.advisory.com/daily-briefing/2014/01/06/where-hospitals-have-the-most-readmissions on 18 November 2016.

- 8. Render ML, Almenoff PL, Christianson A, Sales AE, Czarnecki T, Deddens JA, et al. A hybrid Centers for Medicaid and Medicare service mortality model in 3 diagnoses. Med Care. 2012;50:520–6.

- 9. Landrum MB, Guadagnoli E, Zummo R, Chin D, McNeil BJ. Care following acute myocardial infarction in the Veterans Administration Medical Centers: a comparison with Medicare. Health Serv Res. 2004;39:1773–92.

- 10. Nuti SV, Qin L, Rumsfeld JS, Ross JS, Masoudi FA, Normand SL, et al. Association of Admission to Veterans Affairs Hospitals vs Non-Veterans Affairs Hospitals With Mortality and Readmission Rates Among Older Men Hospitalized With Acute Myocardial Infarction, Heart Failure, or Pneumonia. JAMA. 2016;315:582–92.

- 11. Centers for Medicare & Medicaid Services. Hybrid 30-day risk-standardized acute myocardial infarction (AMI) mortality measure with electronic health record (EHR)-extracted risk factors. Technical report version 1.1 March 2015.

- 12. McNamara RL, Wang Y, Partovian C, Montague J, Mody P, Eddy E, et al. Development of a Hospital Outcome Measure Intended for Use With Electronic Health Records: 30-Day Risk-standardized Mortality After Acute Myocardial Infarction. Med Care. 2015;53:818–26.

- 13. Centers for Medicare & Medicaid Services. Medicare Learning Network. Hospital Value-Based Purchasing, September 2015. Accessed at https://www.cms.gov/Outreach-and-Education/Medicare-Learning-Network-MLN/MLNProducts/downloads/Hospital_VBPurchasing_Fact_Sheet_ICN907664.pdf on 24 April 2017.

- 14. Cannon CP, Battler A, Brindis RG, Cox JL, Ellis SG, Every NR, et al. American College of Cardiology key data elements and definitions for measuring the clinical management and outcomes of patients with acute coronary syndromes. A report of the American College of Cardiology Task Force on Clinical Data Standards (Acute Coronary Syndromes Writing Committee). J Am Coll Cardiol. 2001;38:2114–30.

- 15. Cannon CP, Brindis RG, Chaitman BR, Cohen DJ, Cross JT Jr., Drozda JP Jr., et al. 2013 ACCF/AHA key data elements and definitions for measuring the clinical management and outcomes of patients with acute coronary syndromes and coronary artery disease: a report of the American College of Cardiology Foundation/American Heart Association Task Force on Clinical Data Standards (Writing Committee to Develop Acute Coronary Syndromes and Coronary Artery Disease Clinical Data Standards). Circulation. 2013;127:1052–89.

- 16. Peterson ED, Roe MT, Chen AY, Fonarow GC, Lytle BL, Cannon CP, et al. The NCDR ACTION Registry-GWTG: transforming contemporary acute myocardial infarction clinical care. Heart. 2010;96:1798–802.

- 17. Chin CT, Chen AY, Wang TY, Alexander KP, Mathews R, Rumsfeld JS, et al. Risk adjustment for in-hospital mortality of contemporary patients with acute myocardial infarction: the acute coronary treatment and intervention outcomes network (ACTION) registry-get with the guidelines (GWTG) acute myocardial infarction mortality model and risk score. Am Heart J. 2011;161:113–22.e2.

- 18. Messenger JC, Ho KK, Young CH, Slattery LE, Draoui JC, Curtis JP, et al. The National Cardiovascular Data Registry (NCDR) Data Quality Brief: the NCDR Data Quality Program in 2012. J Am Coll Cardiol. 2012;60:1484–8.

- 19. Krumholz HM, Wang Y, Mattera JA, Wang Y, Han LF, Ingber MJ, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with an acute myocardial infarction. Circulation. 2006;113:1683–92.

- 20. Centers for Medicare & Medicaid Services. Hospital Compare. Accessed at https://www.medicare.gov/hospitalcompare/search.html on 15 November 2016.

- 21. McNamara RL, Kennedy KF, Cohen DJ, Diercks DB, Moscucci M, Ramee S, et al. Predicting In-Hospital Mortality in Patients With Acute Myocardial Infarction. J Am Coll Cardiol. 2016;68:626–35.

- 22. Fonarow GC, Pan W, Saver JL, Smith EE, Reeves MJ, Broderick JP, et al. Comparison of 30-day mortality models for profiling hospital performance in acute ischemic stroke with vs without adjustment for stroke severity. JAMA. 2012;308:257–64.

- 23. Bucholz EM, Butala NM, Normand SL, Wang Y, Krumholz HM. Association of Guideline-Based Admission Treatments and Life Expectancy After Myocardial Infarction in Elderly Medicare Beneficiaries. J Am Coll Cardiol. 2016;67:2378–91.

- 24. Bucholz EM, Butala NM, Ma S, Normand ST, Krumholz HM. Life Expectancy after Myocardial Infarction, According to Hospital Performance. N Engl J Med. 2016;375:1332–42.

- 25. Yeh RW, Sidney S, Chandra M, Sorel M, Selby JV, Go AS. Population trends in the incidence and outcomes of acute myocardial infarction. N Engl J Med. 2010;362:2155–65.

- 26. Love D, Custer W, Miller P. All-payer Claims Databases: State Initiatives to Improve Health Care Transparency. The Commonwealth Fund Issue Brief, September 2010. Accessed at http://www.commonwealthfund.org/~/media/Files/Publications/IssueBrief/2010/Sep/1439_Love_allpayer_claims_databases_ib_v2.pdf on 5 October 2016.

- 27. All-Payer Claims Database Council. Interactive State Report Map. Accessed at https://www.apcdcouncil.org/state/map on 5 October 2016.

- 28. Centers for Medicare & Medicaid Services. CMS Integrated Data Repository. Accessed at https://www.cms.gov/Research-Statistics-Data-and-Systems/Computer-Data-and-Systems/IDR/ on 17 July 2016.

- 29. Healthcare Information and Management Systems Society (HIMSS). What is interoperability? Accessed at http://www.himss.org/library/interoperability-standards/what-is-interoperability on 28 July 2016.

- 30. Office of the National Coordinator for Health Information Technology. Connecting health and care for the nation: a shared nationwide interoperability roadmap. Accessed at https://www.healthit.gov/sites/default/files/hie-interoperability/nationwide-interoperability-roadmap-final-version-1.0.pdf on 17 July 2016.

- 31. Krumholz HM, Radford MJ, Ellerbeck EF, Hennen J, Meehan TP, Petrillo M, et al. Aspirin in the treatment of acute myocardial infarction in elderly Medicare beneficiaries. Patterns of use and outcomes. Circulation. 1995;92:2841–7.

- 32. Rathore SS, Mehta RH, Wang Y, Radford MJ, Krumholz HM. Effects of age on the quality of care provided to older patients with acute myocardial infarction. Am J Med. 2003;114:307–15.

- 33. Schoenenberger AW, Radovanovic D, Stauffer JC, Windecker S, Urban P, Eberli FR, et al. Age-related differences in the use of guideline-recommended medical and interventional therapies for acute coronary syndromes: a cohort study. J Am Geriatr Soc. 2008;56:510–6.

- 34. Marso SP, Amin AP, House JA, Kennedy KF, Spertus JA, Rao SV, et al. Association between use of bleeding avoidance strategies and risk of periprocedural bleeding among patients undergoing percutaneous coronary intervention. JAMA. 2010;303:2156–64.

- 35. Wimmer NJ, Resnic FS, Mauri L, Matheny ME, Piemonte TC, Pomerantsev E, et al. Risk-treatment paradox in the selection of transradial access for percutaneous coronary intervention. J Am Heart Assoc. 2013;2:e000174.

- 36. Vora AN, Peterson ED, McCoy LA, Garratt KN, Kutcher MA, Marso SP, et al. The Impact of Bleeding Avoidance Strategies on Hospital-Level Variation in Bleeding Rates Following Percutaneous Coronary Intervention: Insights From the National Cardiovascular Data Registry CathPCI Registry. JACC Cardiovasc Interv. 2016;9:771–9.

- 37. Peterson ED, Roe MT, Mulgund J, DeLong ER, Lytle BL, Brindis RG, et al. Association between hospital process performance and outcomes among patients with acute coronary syndromes. JAMA. 2006;295:1912–20.

- 38. Mehta RH, Chen AY, Alexander KP, Ohman EM, Roe MT, Peterson ED. Doing the right things and doing them the right way: association between hospital guideline adherence, dosing safety, and outcomes among patients with acute coronary syndrome. Circulation. 2015;131:980–7.

- 39. Kocher RP, Adashi EY. Hospital readmissions and the Affordable Care Act: paying for coordinated quality care. JAMA. 2011;306:1794–5.

- 40. Medicare Program; Hospital Inpatient Prospective Payment Systems for Acute Care Hospitals and the Long-Term Care Hospital Prospective Payment System Policy Changes and Fiscal Year 2016 Rates; Revisions of Quality Reporting Requirements for Specific Providers, Including Changes Related to the Electronic Health Record Incentive Program; Extensions of the Medicare-Dependent, Small Rural Hospital Program and the Low-Volume Payment Adjustment for Hospitals. Final rule; interim final rule with comment period. Fed Regist. 2015;80:49325–886.

- 41. Panzer RJ, Gitomer RS, Greene WH, Webster PR, Landry KR, Riccobono CA. Increasing demands for quality measurement. JAMA. 2013;310:1971–80.

- 42. Meyer GS, Nelson EC, Pryor DB, James B, Swensen SJ, Kaplan GS, et al. More quality measures versus measuring what matters: a call for balance and parsimony. BMJ Qual Saf. 2012;21:964–8.

- 43. Conway PH. The Core Quality Measures Collaborative: a rationale and framework for public-private quality measure alignment. Health Affairs Blog [blog on the Internet]. 2015 Jun 23. Accessed at http://healthaffairs.org/blog/2015/06/23/the-core-quality-measures-collaborative-a-rationale-and-framework-for-public-private-quality-measure-alignment/ on 17 July 2016.

- 44. Blumenthal D, McGinnis JM. Measuring Vital Signs: an IOM report on core metrics for health and health care progress. JAMA. 2015;313:1901–2.

- 45. Cassel CK. Making measurement meaningful. Am J Manag Care. 2015;21:332b–c.

- 46. Cassel CK, Kronick R. Learning From the Past to Measure the Future. JAMA. 2015;314:875–6.

- 47. Mathews R, Fonarow GC, Li S, Peterson ED, Rumsfeld JS, Heidenreich PA, et al. Comparison of performance on Hospital Compare process measures and patient outcomes between hospitals that do and do not participate in Acute Coronary Treatment and Intervention Outcomes Network Registry-Get With The Guidelines. Am Heart J. 2016;175:1–8.

- 48. Wang TY, Nallamothu BK, Krumholz HM, Li S, Roe MT, Jollis JG, et al. Association of door-in to door-out time with reperfusion delays and outcomes among patients transferred for primary percutaneous coronary intervention. JAMA. 2011;305:2540–7.

- 49. Pokorney SD, Miller AL, Chen AY, Thomas L, Fonarow GC, de Lemos JA, et al. Implantable Cardioverter-Defibrillator Use Among Medicare Patients With Low Ejection Fraction After Acute Myocardial Infarction. JAMA. 2015;313:2433–40.
