Abstract

Agricultural crop productivity can be reduced by many factors, such as weeds, pests, and diseases. Traditional methods based on ground machinery, handheld devices, and farmers' naked-eye inspection are limited in accuracy and in the time required to cover large fields. Precision agriculture based on deep learning algorithms and Unmanned Aerial Vehicles (UAVs) now provides an effective solution for agricultural applications, including plant disease identification and treatment. In the last few years, plant disease monitoring using UAV platforms has become one of the most important agricultural applications and has attracted increasing interest from researchers. Accurate detection and treatment of plant diseases at early stages is crucial to improving agricultural production. To this end, in this review, we analyze recent advances in the use of deep learning-based computer vision techniques and UAV technologies to identify and treat crop diseases.

Keywords: Computer vision, Deep learning, Unmanned Aerial Vehicles, Precision agriculture, Plant disease, Convolutional neural network

Introduction

Agriculture is one of the most important human activities and plays a crucial role in improving the economy of any country Thangaraj et al. [76]. However, several issues have imposed additional challenges on the agricultural sector in terms of crop productivity and food security, including continuous population growth, climate change, shortage of arable land, plant diseases, and more recently the rapid spread of the COVID-19 pandemic Bouguettaya et al. [18], Rahman et al. [61].

Plant diseases have always been considered one of the most significant threats to crops, restricting food productivity Vishnoi et al. [78], Jiang et al. [41] and increasing economic losses. They occur in agricultural fields due to many factors, including climate change, water stress, and insects Card et al. [21], Bondre and Sharma [16]. According to Thangaraj et al. [76], plant diseases cause losses of around 40% of the food supply every year. In addition, more than 5 million tons of wheat are lost every year due to yellow rust disease Beddow et al. [14]. Unfortunately, due to the Russian-Ukrainian war, wheat losses are expected to increase because these two countries are among the main wheat producers and exporters.

Reduced crop yields eventually result in starvation and insufficient food supplies. Therefore, early and efficient detection and diagnosis of crop and plant diseases are required to increase food productivity. Traditional methods based on human experts and on-ground machine scouting can be useful for monitoring small crops, but covering large crops with them is time-consuming, exhausting, and in some cases impossible, which makes them unsuitable for early identification of crop and plant diseases Zhang et al. [84], Kerkech et al. [43], Guo et al. [33].

To overcome the aforementioned issues sustainably and at low cost without affecting the environment, we need an agricultural revolution based on innovative ideas and technologies. To this end, over the last few decades, agriculture has been shifting from human labor to smart agricultural machines and robots thanks to the continuous adoption of advanced technologies, including Internet of Things devices, intelligent algorithms, sophisticated sensors, and modern machines Reddy Maddikunta et al. [63], Moysiadis et al. [53], Ouhami et al. [55]. This new paradigm is called Precision Agriculture or Smart Farming. Its main role is to optimize the use of agricultural resources such as water, agrochemical products, and labor to improve crop quality and quantity while saving time and money Neupane and Baysal-Gurel [54].

To detect crop diseases, several studies have adopted satellite, airplane, on-ground machinery, and UAV platforms to collect high-resolution images. However, airplane and satellite technologies face several limitations in terms of spatial and temporal resolution, and their viewing capacity is restricted by weather conditions. Moreover, they are very expensive. Similarly, on-ground technologies suffer from low area coverage and need a long time to cover large fields. As a result, UAVs equipped with intelligent visual systems could be an effective low-cost solution for crop and plant disease detection in small, medium, and large agricultural fields, allowing farmers to apply the right treatment in the right amount, at the right place, and at the right time.

To analyze the collected images, we require efficient algorithms. Several studies have adopted traditional machine learning techniques, including Support Vector Machine (SVM) and Random Forest Sujatha et al. [73]. However, these techniques face many limitations because they depend on manual feature extraction, which makes them inefficient, especially in complex environments. Recently, deep learning algorithms have emerged as an effective solution to improve computer vision-based systems for automatic crop disease monitoring. They extract features automatically, without human intervention, and provide valuable information that could help farmers make the right decisions while reducing crop treatment costs and increasing productivity. Therefore, the combination of recent UAV camera sensor technologies and deep learning algorithms could be an efficient solution for the early detection of crop diseases.

Since the introduction of the AlexNet architecture in 2012, the Convolutional Neural Network (CNN) has been considered one of the most effective deep learning approaches in the computer vision field. Currently, a wide range of CNN-based deep learning algorithms and architectures are used to detect and classify different crop diseases Bouguettaya et al. [18]. The application of computer vision techniques and deep learning algorithms to UAV-based crop disease identification is now an active research field that can help solve problems related to the early and effective identification of different plant diseases. To make UAV platforms able to detect and treat crop diseases autonomously, several disciplines must be addressed, including agriculture, electronic control, remote sensing technologies, computer vision, and artificial intelligence, among others. Therefore, in this review paper, we investigate the effectiveness of UAV platforms, remote sensing technologies, preprocessing techniques, and deep learning-based computer vision models to detect and treat different crop diseases at their early stages.

There are several review papers in the literature targeting crop and plant disease identification through deep learning methods. However, most of these studies have targeted disease recognition using datasets collected with on-ground technologies. For example, the authors in Thangaraj et al. [76] provided a review of different machine learning and deep learning algorithms targeting tomato leaf diseases from non-aerial images. However, in addition to relying on datasets collected from non-aerial platforms, that review lacks methods based on object detection and image segmentation, since most of the reviewed papers are based on image classification techniques. Similarly, the authors in Sirohi et al. [68] provided a short review of some recent deep learning algorithms to identify and classify plant diseases from their leaves. However, none of these studies focused on the use of UAVs as the main platform to collect data. Some other reviews, like Zhang et al. [86] and Barbedo [12], covered a few studies targeting plant disease identification through UAV technologies, but they did not investigate either the use of UAVs or deep learning techniques in detail. To the best of our knowledge, this is the first review study that focuses in detail on the combination of deep learning techniques and UAV technologies to identify crop and plant diseases. The main contributions of the present paper are summarized as follows:

Presenting different UAV and remote sensing technologies adopted for crop and plant diseases recognition from aerial images, while providing their important characteristics and advantages over other available technologies.

Providing new and effective solutions that may improve crop productivity, while reducing cost and drudgery.

Providing a detailed investigation of the effectiveness of different deep learning algorithms and computer vision techniques employed to improve crop disease identification, including image classification, object detection, and image segmentation.

Presenting the challenges and limitations that farmers face in UAV-based plant disease identification, along with potential solutions to overcome them.

The rest of the paper is organized as follows. In Sect. 2, we discuss the research methodology adopted in this study to select the appropriate papers to be reviewed. A survey on different agricultural UAV types and their applications is presented in Sect. 3, while diverse UAV-based camera sensors used to detect crop and plant diseases are presented in Sect. 4. In Sect. 5, we present the effectiveness of different deep learning algorithms to identify crop and plant diseases from UAV imagery, including image classification, object detection, and image segmentation techniques. In Sect. 6, we present the challenges, limitations, and potential solutions to overcome some plant disease detection-related issues. A comparison study to analyze computation offloading mechanisms in terms of evaluation tools, case study, utilized techniques, and performance metrics is presented in Sect. 6.2. Finally, Sect. 8 concludes the paper and provides some future directions.

Research methodology

We adopted a step-by-step process to carefully select the published papers that address the application of computer vision-based deep learning algorithms to plant and crop disease recognition from UAV platforms. The overall process of the research methodology is shown in Fig. 1.

Fig. 1.

Search strategy flowchart

The review process is carried out by observing the existing UAV and deep learning-based solutions for the following research questions.

What are the targeted crop and disease types?

What are the adopted UAV and sensor types?

Which deep learning technique is adopted for diseases recognition?

Which model provides better results?

To achieve our goal, a keyword-based search was conducted in different well-known scientific databases, including IEEE Xplore, Google Scholar, ScienceDirect, MDPI, and Taylor & Francis. To select the related journal and conference papers, we used different combinations of the following keywords: [“crop disease” OR “plant disease”] AND [“detection” OR “identification” OR “diagnosis”] AND [“UAV” OR “drone”] AND “artificial intelligence” AND “deep learning” AND “computer vision”.
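As a minimal sketch of how such keyword combinations can be generated programmatically, the following Python snippet rebuilds the boolean search string above and also expands it into individual queries. The group names and the helper functions `or_group`, `build_query`, and `expand_combinations` are illustrative assumptions; they are not part of the search protocol itself, and each database may require its own query syntax.

```python
from itertools import product

# Keyword groups copied from the search string in the text.
SUBJECT = ["crop disease", "plant disease"]
TASK = ["detection", "identification", "diagnosis"]
PLATFORM = ["UAV", "drone"]
REQUIRED = ["artificial intelligence", "deep learning", "computer vision"]


def or_group(terms):
    """Join terms into a quoted, parenthesised OR group (hypothetical helper)."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query():
    """Build one boolean query that ANDs every keyword group together."""
    parts = [or_group(SUBJECT), or_group(TASK), or_group(PLATFORM)]
    parts += [f'"{t}"' for t in REQUIRED]
    return " AND ".join(parts)


def expand_combinations():
    """Expand the OR groups into one flat query per keyword combination."""
    tail = " AND ".join(f'"{t}"' for t in REQUIRED)
    return [
        f'"{s}" AND "{t}" AND "{p}" AND {tail}'
        for s, t, p in product(SUBJECT, TASK, PLATFORM)
    ]


if __name__ == "__main__":
    print(build_query())
    for query in expand_combinations():
        print(query)
```

Expanding the groups yields 2 × 3 × 2 = 12 individual queries, which can be useful for databases that do not accept nested boolean expressions.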

Firstly, we downloaded papers relevant to crop and plant disease recognition, detection, or identification from UAV imagery using different artificial intelligence techniques from the aforementioned electronic databases. Only documents published between 2004 and 2021 were considered. Thus, we collected more than 100 published scientific papers related to our study. In the next step, we read each paper and classified it according to crop and plant type, disease type, and deep learning model. Finally, by applying inclusion and exclusion criteria, we reduced the number of papers to around 45, keeping only those strongly related to the topic of our study, namely crop and plant disease detection from UAV imagery.

An overview on agricultural Unmanned Aerial Vehicles and their applications

Unmanned Aerial Vehicles are flying robots without a human pilot on board. These flying machines can be controlled remotely using remote controller devices, or can perform some missions autonomously through an onboard computer that executes intelligent algorithms. Recently, smart UAVs have been widely used in a broad range of applications, including search and rescue operations Martinez-Alpiste et al. [52], wildfire detection Bouguettaya et al. [20], vehicle detection Bouguettaya et al. [19], precision agriculture Di Nisio et al. [27], Delavarpour et al. [26], package delivery Shahzaad et al. [64], and smart cities Abualigah et al. [5], to name a few.

The agriculture sector has changed considerably over the past few decades, and new technologies have played a significant role in this transformation. Several spatial and aerial platforms have been used to perform different agricultural tasks, including satellites, airplanes, and UAVs. Satellites and airplanes can cover very large areas in a very short time, whereas UAV platforms take longer to cover large fields. However, in addition to the huge budget needed to accomplish missions with satellites and airplanes, these platforms suffer from poor spatial and temporal image resolutions compared to those provided by UAVs and terrestrial technologies. Furthermore, they are very sensitive to cloudy and rainy weather, which could affect the overall performance of these systems.

The introduction of agricultural UAVs is one of the most significant changes leading to smart farming. Due to their high flexibility and mobility, UAVs can perform flight missions at different altitudes and viewing angles above dangerous and difficult areas that are impossible to reach with piloted airplanes or satellites. Recently, different UAV types equipped with high-resolution camera sensors have been widely adopted for different agriculture-related activities, including crop disease identification and treatment. They can be categorized into three main classes: fixed-wing, rotary-wing, and hybrid VTOL UAVs (Fig. 2). In this section, we present the various UAV types along with their properties and the agricultural operations they are used for.

Fig. 2.

Different agricultural UAV types

Rotary-wing UAVs

Multirotor UAVs, also called rotary-wing UAVs (Fig. 2), are the most popular UAV type for different agricultural tasks. Their high flexibility and their ability to hover and fly at low altitudes above the targeted crop provide farmers with good images, allowing them to detect crop diseases at early stages through small visual symptoms on different parts of the plant, including at the leaf level Bouguettaya et al. [18]. Multirotor UAVs rely on multiple propellers to fly, which makes them the best choice for covering crops in difficult areas from different altitudes. They can be classified according to the number of rotors mounted on them. UAVs with three rotors are called tricopters, and those with four rotors are called quadcopters. There are also hexacopters and octocopters, with six and eight propellers, respectively. The latter two categories are mostly preferred for lifting heavy payloads, which makes them more suitable for precise crop spraying operations. For example, the authors in Pittu and Gorantla [58] adopted a hexacopter to detect the exact diseased areas and spray pesticides. However, UAVs with six and eight rotors have a serious problem of high energy consumption, which results in short flying times. Thus, quadcopters are considered the most appropriate UAV category for crop disease monitoring due to many factors, including high flexibility, ease of use, and higher endurance compared to hexacopters and octocopters. Another rotary-wing type, similar to a helicopter, was adopted in Théau et al. [77] for potato crop surveying to reduce the impact of diseases and pests on crop productivity. This type of UAV has a higher payload capacity than the aforementioned rotary-wing UAVs.

Fixed-wing UAVs

Fixed-wing UAVs (Fig. 2) are another UAV type, capable of covering larger areas in less time than rotary-wing UAVs due to their long endurance, high speed, and high flight altitude Raeva et al. [60]. These properties make them more suitable for surveillance of forests and large crops. Fixed-wing UAVs are already widely used for crop disease monitoring. For example, the authors in Albetis et al. [10], Albetis et al. [9] adopted the long-range DT-18 fixed-wing UAV to detect Flavescence dorée and Grapevine Trunk Diseases within seven different vineyards. However, in addition to their high cost, fixed-wing UAVs suffer from low flexibility, which makes small crop monitoring very difficult. Moreover, they require runways and space to take off and land Zhang et al. [85].

Hybrid VTOL UAVs

More recently, hybrid Vertical Take-Off and Landing (VTOL) UAVs (Fig. 2) have emerged as an effective solution to overcome the problems of multirotor and fixed-wing UAVs Song and Park [70]. They combine the VTOL capability of multirotor UAVs with the cruise flight of fixed-wing UAVs Delavarpour et al. [26]. Unfortunately, this type of UAV is still not widely used to monitor crop diseases.

In Table 1, we highlight the UAVs most used in the literature for crop disease monitoring, where we find that DJI UAVs are the most common. Similarly, the pros and cons of the different agricultural UAV categories are summarized in Table 2.

Table 1.

Most used UAVs in the literature for crop disease monitoring

| UAV brand | UAV category | Product name | References |
|---|---|---|---|
| DJI | Quadcopter | Phantom 3 Pro | Tetila et al. [75] |
| | | Phantom 4 | Huang et al. [39] |
| | | Sentinel 2 | Pan et al. [56] |
| | | Phantom 4 RTK | Wu et al. [82] |
| | | Phantom 4 Pro | Gomez Selvaraj et al. [31], Hu et al. [38], Hu et al. [37], Dang et al. [25], Heidarian Dehkordi et al. [35], Hu et al. [36] |
| | | Matrice 100 | Su et al. [72], Zhang et al. [87] |
| | Hexacopter | Matrice 600 | Wiesner-Hanks et al. [80], Bohnenkamp et al. [15], Görlich et al. [32], Stewart et al. [71], Wu et al. [81] |
| | | Matrice 600 Pro | Abdulridha et al. [3], Abdulridha et al. [2], Liu et al. [50] |
| | Octocopter | S1000 | Zhang et al. [84] |
| 3D Robotics | Quadcopter | 3DR SOLO | Gomez Selvaraj et al. [31] |
| | | 3DR IRIS+ | Duarte-Carvajalino et al. [28] |
| ING Robotic Aviation | Helicopter | Responder | Théau et al. [77] |
| Italdron | Quadcopter | 4HSE EVO | Di Nisio et al. [27] |
| Feima Robotics | Quadcopter | Feima D200 | Qin et al. [59] |
| Delair-Tech | Fixed-wing | DT-18 | Albetis et al. [10], Albetis et al. [9] |

Table 2.

Pros and cons of agricultural UAV types

| UAV type | Pros | Cons |
|---|---|---|
| Rotary-wing | Easy control and maneuver; ability to hover; take off and land vertically; very stable; better spatial/temporal resolutions; low cost; accessibility to difficult areas | Limited flying time; low area coverage; small payload capabilities; high energy consumption |
| Fixed-wing | High payload capabilities; long flying time; large area coverage; high speed; lower energy consumption | Expensive; require a launcher to put in the air; difficult to land; do not have hovering ability |
| Hybrid VTOL | Long flying time; large area coverage; high speed; vertical take-off and landing ability; relatively low energy consumption | Expensive; do not have hovering ability |

UAV-based visual remote sensing systems used to identify crop diseases

Sensors are one of the fundamental building blocks of any UAV. Through intelligent algorithms, they allow it to perform different tasks autonomously with little or no human intervention, including navigation, detecting and geolocating potential diseases in crops from visual data, and providing a map of crop status that could be helpful to farmers or to other machines working in coordination with the UAV Dammer et al. [24], Lytridis et al. [51]. Remote sensing systems based on camera sensors mounted on UAV platforms can be classified according to two main factors: the UAV type and the camera sensor type. UAV-based aerial imaging is one of the most important and useful data types for improving the agricultural field. Usually, the choice of UAV platform and sensor type depends on the purpose of the targeted application and on the crop type. Therefore, UAVs can be equipped with different types of cameras, including RGB, spectral, and thermal. Fixed-wing and VTOL UAVs are capable of carrying more sophisticated cameras than multirotor UAVs, especially heavy hyperspectral cameras. Also, UAVs equipped with sophisticated cameras can help farmers improve crop yield while saving time and money by automating processes that would otherwise require a group of people. However, cameras mounted on multirotor UAVs provide a finer Ground Sampling Distance (GSD) due to their ability to fly at lower altitudes. To this end, in this section, we present the most used camera sensors for crop disease monitoring.
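The link between flight altitude and GSD can be made concrete with the standard relation for a nadir-pointing camera, GSD = (sensor width × altitude) / (focal length × image width). The short Python sketch below applies this relation. The function name `ground_sampling_distance` and the camera parameters in the example are illustrative assumptions and are not taken from any of the reviewed studies.

```python
def ground_sampling_distance(sensor_width_mm, focal_length_mm,
                             image_width_px, altitude_m):
    """Return the ground footprint of one pixel, in metres per pixel.

    Assumes a nadir-pointing camera over flat terrain (pinhole model).
    """
    if focal_length_mm <= 0 or image_width_px <= 0:
        raise ValueError("focal length and image width must be positive")
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


if __name__ == "__main__":
    # Hypothetical camera: 13.2 mm wide sensor, 8.8 mm lens, 5472 px wide images.
    for altitude in (20, 50, 100):
        gsd = ground_sampling_distance(13.2, 8.8, 5472, altitude)
        print(f"{altitude:>4} m -> {gsd * 100:.2f} cm/pixel")
```

Because GSD grows linearly with altitude, halving the flight height halves the ground size of each pixel, which is why low-flying multirotor UAVs can resolve leaf-level symptoms that are lost at higher altitudes.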

RGB cameras

According to Table 3, the visible (or RGB) camera is one of the most widely adopted sensor types for different agricultural tasks, including crop disease identification. Its wide adoption is due to many factors, such as low price, ease of use, low weight, and high spatial resolution Heidarian Dehkordi et al. [35], Abdulridha et al. [3]. These cameras are the best choice for smallholder farmers with limited financial resources, providing valuable visual information for identifying plant disease symptoms at the leaf level Kerkech et al. [43], Tetila et al. [75].

Table 3.

Deep learning-based crop and plant diseases identification methods from UAV imagery

| References | Crop | Disease | Altitude/GSD (m) | Data type | Model | Data size | Input data size | Performance |
|---|---|---|---|---|---|---|---|---|
| Tetila et al. [75] | Soybean | Leaf Diseases | 2/– | RGB | Inception-v3 (FT 75%) | 3000 | 256 × 256 | 99.04% (accuracy) |
| | | | | | ResNet-50 (FT 75%) | | | 99.02% (accuracy) |
| | | | | | VGG-19 (FT 100%) | | | 99.02% (accuracy) |
| | | | | | Xception (FT 100%) | | | 98.56% (accuracy) |
| Gomez Selvaraj et al. [31] | Banana | BBTD, BXW | 50 to 100/1 to 3 | RGB | VGG-16 | 3300 | 64 × 64 | 85% (accuracy) |
| | | | | | Custom CNN | | | 92% (accuracy) |
| Görlich et al. [32] | Sugar beet | Cercospora Leaf Spot | – | RGB | FCN | – | 320 × 320 | 76% (precision), 83.87% (recall), 75.74% (F1) |
| Dang et al. [25] | Radish | Fusarium Wilt | 3, 7, and 15/– | RGB | RadRGB | 1700 | 64 × 64 | 96.4% (accuracy), 0.043 s/image (testing time) |
| | | | | | Inception-V3 | | | 95.7% (accuracy), 0.1 s/image (testing time) |
| | | | | | VGG-16 | | | 93.1% (accuracy), 0.22 s/image (testing time) |
| Wu et al. [82] | Pine | Pine Wilt Disease | 120/0.04 | RGB | Faster R-CNN (ResNet-50) | 476 | – | 60.2% (mAP), 134 MB (model size), 0.191 (FPS) |
| | | | | | Faster R-CNN (ResNet-101) | | | 62.2% (mAP), 208 MB (model size), 0.18 (FPS) |
| | | | | | YOLOv3 (DarkNet-53) | | | 64% (mAP), 241 MB (model size), 1.066 (FPS) |
| | | | | | YOLOv3 (MobileNet) | | | 63.2% (mAP), 95 MB (model size), 1.393 (FPS) |
| Hu et al. [38] | Pine | – | 103/– | RGB | Proposed approach (with Augmentor) | – | – | 46.4% (precision), 92.9% (recall), 61.9% (F1) |
| | | | | | Proposed approach (with DCGAN) | | | 56.5% (precision), 92.9% (recall), 70.3% (F1) |
| Hu et al. [37] | Pine | – | 103/0.025 | RGB | AlexNet | 1486 | 64 × 64 | 39.1% (recall) |
| | | | | | VGGNet | | | 91.3% (recall) |
| | | | | | Inception-v3 | | | 73.7% (F1), 61.8% (precision), 91.3% (recall) |
| | | | | | AlexNet + Adaboost | | | 71.2% (F1), 58.3% (precision), 91.3% (recall) |
| | | | | | VGGNet + Adaboost | | | 76.9% (F1), 69% (precision), 87% (recall) |
| | | | | | Proposed method | | | 86.3% (F1), 78.6% (precision), 95.7% (recall) |
| Qin et al. [59] | Pine | Pine Wood Nematode Disease | 150–200/0.1–0.125 | Multispectral | SCANet | 4862 | – | 79.33% (OA), 86% (precision), 91% (recall), 88.43% (F1) |
| | | | | | DeepLabV3+ | | | 56.62% (OA), 68% (precision), 77% (recall), 72.22% (F1) |
| | | | | | HRNet | | | 56.9% (OA), 75.66% (precision), 68.66% (recall), 72% (F1) |
| | | | | | DenseNet | | | 54.7% (OA), 64.33% (precision), 76.66% (recall), 70% (F1) |
| Yu et al. [83] | Pine | Pine Wilt Disease | 100/12 | Multispectral | Faster R-CNN | 1905 | 800 × 800 | 60.98% (mAP), 113.43 MB (model size), 10.51 (FPS) |
| | | | | | YOLOv4 | | | 57.07% (mAP), 243.96 MB (model size), 25.55 (FPS) |
| Shi et al. [65] | Potato | Late Blight Disease | 30/2.5 | Hyperspectral | CropdocNet | – | – | 98.2% (OA), 0.812 (Kappa), 721 ms (computing time) |
| | | | | | SVM | | | 82.7% (OA), 0.571 (Kappa), 162 ms (computing time) |
| | | | | | RF | | | 78.8% (OA), 0.615 (Kappa), 117 ms (computing time) |
| | | | | | 3D-CNN | | | 88.8% (OA), 0.771 (Kappa), 956 ms (computing time) |
| Abdulridha et al. [2] | Tomato | Target Spot | 30/0.1 | Hyperspectral | MLP | – | – | TS: 97% (accuracy) |
| | | Bacterial Spot | | | | | | BS: 98% (accuracy) |
| Duarte-Carvajalino et al. [28] | Potato | Late Blight | 30/0.008 | Multispectral | MLP (NIR-G-B) | 748,071 | 50 × 40 | 16.37 (MAE), 23.25 (RMSE), 0.47 (R²) |
| | | | | | MLP (NDVI) | | | 18.71 (MAE), 21.98 (RMSE), 0.44 (R²) |
| | | | | | MLP (Band Differences) | | | 13.23 (MAE), 16.28 (RMSE), 0.75 (R²) |
| | | | | | MLP (PCA) | | | 16.60 (MAE), 21.87 (RMSE), 0.48 (R²) |
| | | | | | SVR (Band Differences) | | | 17.34 (MAE), 21.06 (RMSE), 0.45 (R²) |
| | | | | | RF (Band Differences) | | | 12.96 (MAE), 16.15 (RMSE), 0.75 (R²) |
| | | | | | CNN (NIR-G-B) | | | 11.72 (MAE), 15.09 (RMSE), 0.74 (R²) |
| Kerkech et al. [43] | Vineyard | Esca | 25/0.01 | RGB | CNN + YUV + ExGR | 70,560 | (16 × 16), (32 × 32), (64 × 64) | 95.92% (accuracy) |
| Wiesner-Hanks et al. [79] | Vineyard | Mildew disease | 25/– | Multispectral | VddNet | – | 256 × 256 | 93.72% (accuracy) |
| | | | | | SegNet | | | 92.75% (accuracy) |
| | | | | | U-Net | | | 90.69% (accuracy) |
| | | | | | DeepLabv3+ | | | 88.58% (accuracy) |
| | | | | | PSPNet | | | 84.63% (accuracy) |
| Raj et al. [62] | Vineyard | Mildew disease (leaf-level) | 25/0.01 | RGB | SegNet | 105,515 (RGB) | 360 × 480 | 85.13% (accuracy) |
| | | | | Infrared | | 98,895 (IR) | | 78.72% (accuracy) |
| | | | | Fusion AND | | | | 82.20% (accuracy) |
| | | | | Fusion OR | | | | 90.23% (accuracy) |
| | | Mildew disease (grapevine-level) | | RGB | | | | 94.41% (accuracy) |
| | | | | Infrared | | | | 89.16% (accuracy) |
| | | | | Fusion AND | | | | 88.14% (accuracy) |
| | | | | Fusion OR | | | | 95.02% (accuracy) |
| Wu et al. [81] | Maize (Corn) | Northern Leaf Blight | 6/– | RGB | CNN (ResNet-34) | 6267 | 224 × 224 | 97.76% (accuracy), 97.85% (recall), 98.42% (precision) |
| Wiesner-Hanks et al. [80] | Maize (Corn) | Northern Leaf Blight | 6/– | RGB | CNN (ResNet-34) + CRF | 18,222 | 224 × 224 | 99.79% (accuracy), 71.53 (F1) |
| Stewart et al. [71] | Maize (Corn) | Northern Leaf Blight | 6/– | RGB | Mask R-CNN | 3000 | 512 × 512 | 96% at IoU = 0.5 (average precision) |
| Huang et al. [39] | Wheat | Helminthosporium Leaf Blotch | 80/0.034 | RGB | Color Histogram + SVM | 246 | 100 × 100 | 85.92% (OA) |
| | | | | | LBPH + SVM | | | 65.10% (OA) |
| | | | | | VI + SVM | | | 87.65% (OA) |
| | | | | | Color Histogram + LBPH + VI + SVM | | | 90% (OA) |
| | | | | | CNN (LeNet) | | | 91.43% (OA) |
| Liu et al. [50] | Wheat | Fusarium Head Blight | 60/0.04 | Hyperspectral | SVM | – | – | 95% (OA), 0.9 (Kappa) |
| | | | | | RF | | | 95% (OA), 0.9 (Kappa) |
| | | | | | BPNN | | | 98% (OA), 0.96 (Kappa) |
| Su et al. [72] | Wheat | Yellow Rust | 20/0.013 | Multispectral | U-Net | 1600 | 256 × 256 | 91.3% (precision), 92.6% (recall), 92% (F1) |
| Zhang et al. [87] | Wheat | Yellow Rust | 20/0.013 | Multispectral | Ir-UNet | – | 256 × 256 | 94.63% (precision), 95.15% (recall), 94.89% (F1), 97.13% (OA) |
| Pan et al. [56] | Wheat | Yellow Rust | 30/0.007 | RGB | BPNN | 5580 | 256 × 256 | 86% (accuracy), 0.65 (Kappa) |
| | | | | | FCN | | | 90% (accuracy), 0.81 (Kappa) |
| | | | | | U-Net | | | 94% (accuracy), 0.89 (Kappa) |
| | | | | | PSPNet | | | 98% (accuracy), 0.96 (Kappa) |

Several studies adopted this type of camera to detect plant diseases from UAV imagery. For example, Tetila et al. [75] used a Sony EXMOR RGB camera mounted on a DJI Phantom 3 Pro to recognize soybean leaf diseases. Also, the authors in Wu et al. [82] used an RGB camera mounted on a DJI Phantom 4 RTK UAV to diagnose pine wilt disease at an early stage. However, compared to other available technologies, visible cameras perform worse at detecting diseases in their early stages. They are extremely susceptible to environmental conditions, such as sunlight angle and shadows, that can affect the crop disease identification system and cause false detections Ganchenko and Doudkin [29]. The authors in Li et al. [49] used another type of visible camera, called RGB-D, that is less sensitive to light. This camera provides depth information that could be used to improve the detection and localization of targeted objects in agricultural tasks.

To obtain more information about crop status, we need other types of camera sensors that provide more details. For example, a multispectral sensor operating in the Near-InfraRed (NIR) wavelength is required to generate a Normalized Difference Vegetation Index (NDVI) map. On the other hand, multispectral cameras are more expensive than conventional RGB cameras and require complex and time-consuming calibration procedures. To this end, the authors in Costa et al. [23] proposed a solution based on genetic algorithms to create, from RGB data, an NDVI map, which is one of the most useful vegetation indices for identifying crop diseases.
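For reference, NDVI is computed per pixel from the red and NIR reflectance bands as NDVI = (NIR − Red) / (NIR + Red), giving values between −1 and 1 for non-negative reflectances. The following NumPy sketch shows this standard computation. The function name `ndvi` and the choice of returning 0 where both bands are zero are assumptions made for illustration, and the snippet does not reproduce the RGB-based estimation of Costa et al. [23].

```python
import numpy as np


def ndvi(nir, red):
    """Compute the per-pixel NDVI from NIR and red reflectance arrays.

    Pixels where NIR + Red == 0 are set to 0 (an illustrative convention)
    to avoid division by zero.
    """
    nir = np.asarray(nir, dtype=np.float64)
    red = np.asarray(red, dtype=np.float64)
    denom = nir + red
    out = np.zeros_like(denom)
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out


if __name__ == "__main__":
    # Toy 2 x 2 reflectance bands: dense vegetation reflects strongly in NIR.
    nir_band = np.array([[0.50, 0.40], [0.10, 0.00]])
    red_band = np.array([[0.10, 0.20], [0.10, 0.00]])
    print(ndvi(nir_band, red_band))
```

Healthy vegetation typically yields high NDVI values because it absorbs red light and reflects NIR, so a local drop in NDVI can flag stressed or diseased plants.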

Multispectral and hyperspectral cameras

Depending on their spectral resolution, spectral imaging systems can be categorized into two main types: multispectral and hyperspectral. They provide information in the electromagnetic spectrum ranging from the visible to the Near-Infrared (NIR), allowing the calculation of different robust vegetation indices such as NDVI Zhang et al. [84]. These characteristics make spectral cameras one of the most adopted sensor types for crop and plant disease identification from UAV platforms (Table 3). The main differences between multispectral and hyperspectral imaging systems can be summarized as follows: 1) hyperspectral cameras have many more channels than multispectral ones, and 2) hyperspectral cameras are more expensive. Multispectral and hyperspectral cameras can be an effective tool for the automatic detection of disease symptoms. They are more robust than RGB cameras under different illumination conditions, which makes them more reliable for distinguishing between healthy and stressed plants Zhang et al. [87]. According to Théau et al. [77], it is difficult to distinguish between different crop stress types from multispectral data. However, hyperspectral cameras provide more detail, allowing the measurement of different Vegetation Indices (VIs) that could be used to discriminate stress types accurately.

Thermal infrared cameras

Thermal infrared cameras are another type of sensor that can be used for crop disease identification from aerial images. The InfraRed (IR) region consists of several spectral bands, including Near InfraRed (NIR), Short-Wave InfraRed (SWIR), Mid-Wave InfraRed (MWIR), Long-Wave InfraRed (LWIR), and Far InfraRed (FIR) Khalid et al. [46]. Due to their characteristics, these cameras can be used both during the day and at night. Recently, UAV-based thermal cameras have been widely used in several agriculture-related tasks, including water stress and crop disease monitoring. Unlike RGB cameras that measure visible light, thermal cameras are sensitive to infrared spectra, providing information about plant status that cannot be obtained using visible, multispectral, or hyperspectral cameras. They provide a heatmap by measuring the radiation emitted by the targeted crop. The measured energy can be used to estimate crop status through temperature irregularities and anomalies Sishodia et al. [69]. This information can improve the visual inspection abilities of UAV-based crop disease identification systems. Therefore, thermal remote sensing data could be used to identify crop diseases even before they can be distinguished by the naked eye Kerkech et al. [43], Khanal et al. [47]. However, according to Raj et al. [62], a few concerns must be handled when employing thermal images, including the temporal and spatial resolutions of the images, environmental conditions, the diversity of crop species and their growth stage, and the flight altitude and viewing angle.

Deep learning algorithms to identify crop diseases from UAV-based visual data

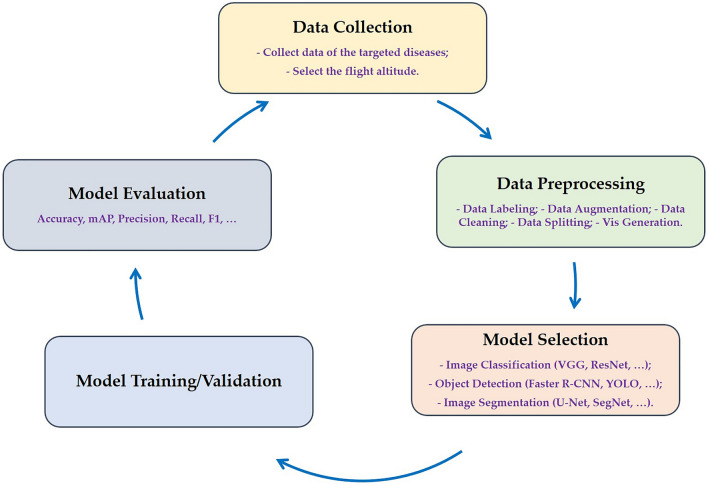

Over the last decade, deep learning-based computer vision techniques have achieved promising results in many fields, including agriculture. Crops affected by diseases show several visual symptoms, such as changes in plant color, leaf winding, leaf spots, and fruit spots Thangaraj et al. [76], Kerkech et al. [43]. This makes deep learning algorithms a strong candidate for identifying these diseases. Three main computer vision tasks can be used to identify crop diseases from UAV imagery: image classification Tetila et al. [75], object detection Wu et al. [82], and image segmentation Pan et al. [56], Qin et al. [59]. The workflow used by deep learning algorithms to detect and classify crop diseases from UAV imagery is illustrated in Figure 3.

Fig. 3.

Deep learning-based plant disease identification workflow

Recently, there has been considerable interest in crop diseases diagnosis using deep learning algorithms to process images acquired through UAV platforms. Several recent studies on crop diseases detection from UAV imagery are based on deep learning models to overcome the limitations of traditional techniques, especially Convolutional Neural Network (CNN) algorithms. Most of these studies targeted subsistence crops such as wheat Pan et al. [56], Su et al. [72], Zhang et al. [87], maize Wiesner-Hanks et al. [80], Stewart et al. [71], potato Théau et al. [77], Siebring et al. [66], and tomato Abdulridha et al. [3], Abdulridha et al. [2].

Major grain crops diseases identification

Several recent studies have successfully combined UAV-based images and deep learning algorithms to identify diseases that affect the major grain crops, including wheat and maize. For example, the authors in Pan et al. [56], Su et al. [72], Zhang et al. [87] targeted the detection of yellow rust disease using different deep learning-based computer vision models. Yellow rust is one of the most dangerous wheat diseases, causing production losses estimated at more than 5 million tonnes each year Beddow et al. [14]. To minimize the impact of this disease, the authors in Zhang et al. [87] proposed a novel semantic segmentation method derived from the U-Net model to identify wheat crop regions infected with yellow rust using multispectral data collected through a UAV platform. To improve the main U-Net architecture, they embedded three modules: the Irregular Encoder Module (IEM), the Irregular Decoder Module (IDM), and the Content-aware Channel Re-weight Module (CCRM). They investigated the impact of the input data type on the overall performance of the deep learning model in detecting yellow rust in wheat crops. They found that the proposed Ir-Unet model provides good results using all five bands gathered with the RedEdge multispectral camera, achieving an overall accuracy of 96.95% and an F1-score of 94.66%, exceeding the F1-score of 92% obtained in Su et al. [72]. Furthermore, they achieved a slightly better overall accuracy of 96.97% using a combination of all the raw bands and the Selected Vegetation Indices (SVIs) computed from them. Also, by applying feature re-weighting using the CCRM, the Ir-Unet model provides an overall accuracy of 97.13%. Similarly, the authors in Pan et al. [56] investigated the performance of different machine learning and deep learning models to identify yellow rust disease in wheat crops from UAV-based RGB images. They found that the PSPNet (98%) and SVM (96%) algorithms provide the best accuracy among all the tested models, including Random Forest (73%), BPNN (86%), FCN (90%), and U-Net (94%). Liu et al. [50] proposed a BPNN model to monitor Fusarium Head Blight from aerial hyperspectral imagery, achieving the highest overall accuracy of 98% and outperforming SVM and RF, which both reached 95%. Huang et al. [39] targeted another wheat disease, Helminthosporium Leaf Blotch Disease (HLBD), from UAV-based RGB images. They proposed a LeNet-based CNN model to classify HLBD according to its severity at different disease progression stages. The adopted CNN model achieved an overall accuracy of 91.43%, outperforming the different techniques combined with the SVM model (Table 3).

Other studies targeted one of the major maize crop diseases, Northern Leaf Blight (NLB). This disease caused annual production losses estimated at approximately 14 million tonnes between 2012 and 2015 in the United States and Ontario alone. To identify NLB in maize crops, these studies used the dataset created in Wiesner-Hanks et al. [79]. In Stewart et al. [71], the authors adopted an instance segmentation technique (Mask R-CNN) to detect NLB disease from low-altitude RGB aerial images collected using a DJI Matrice 600. The proposed approach was able to identify and segment individual lesions with an average precision of 96%. In Wu et al. [81], a ResNet-based model was adopted to classify healthy and diseased maize leaves from low-altitude UAV imagery, providing an accuracy of 97.76%, a recall rate of 97.85%, and a precision rate of 98.42%. Similarly, Wiesner-Hanks et al. [80] combined crowdsourced ResNet-based CNN and Conditional Random Field (CRF) techniques to segment UAV-based RGB images into regions affected or unaffected by the NLB disease, where the crowdsourced CNN is used to generate heatmaps and the CRF classifies each pixel in the image as lesion or non-lesion. With this approach, they were able to identify the NLB disease in maize crops down to the millimeter level, achieving an accuracy of 99.79% and outperforming the approach adopted in Wu et al. [81] by more than 2 percentage points (Table 3).

Vineyards diseases identification

Vines are another important crop susceptible to different diseases, and several studies have targeted vineyard diseases using aerial images collected through UAVs. In Kerkech et al. [43], the authors adopted the LeNet-5 architecture to identify infected grapevine regions from RGB aerial images gathered by a UAV flown at an altitude of 25 meters above the ground. Combining the YUV color space and the ExGR vegetation index as input to the CNN model, they achieved the best accuracy of 95.92% (using patches of and a filter size of ) among all the adopted combinations, including YUV & ExG (95.41%), YUV & ExR (95.70%), YUV & GRVI (95.70%), YUV & NDI (95.52%), and YUV & RGI (95.73%). In Kerkech et al. [45], a deep learning-based semantic segmentation approach was developed to automatically identify Mildew disease in vineyards from RGB images, infrared images, and multispectral data by combining the visible and infrared bands collected through a UAV platform. They used the SegNet model to classify each pixel in the image as diseased or not at both leaf-level and grapevine-level. According to Table 3, the proposed method achieved accuracies of 85.13%, 78.72%, 82.20%, and 90.23% at leaf-level and 94.41%, 89.16%, 88.14%, and 95.02% at grapevine-level using visible, infrared, fusion AND, and fusion OR data, respectively. Also, Kerkech et al. [44] developed VddNet, a semantic segmentation model inspired by the VGGNet, SegNet, and U-Net architectures. It consists of three parallel VGGNet-based encoders, one for each type of input data (RGB, Near InfraRed (NIR), and depth map), and one decoder that generates a disease map at the pixel level. The proposed model achieved an accuracy of 93.72%, outperforming state-of-the-art semantic segmentation algorithms, namely SegNet (92.75%), U-Net (90.69%), DeepLabv3+ (88.58%), and PSPNet (84.63%) (Table 3).
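To make the RGB-based vegetation indices named above more concrete, the sketch below computes ExG, ExR, and ExGR for a single pixel using their commonly cited definitions on chromatic coordinates (ExG = 2g - r - b, ExR = 1.4r - g, ExGR = ExG - ExR). It is only an illustration: the exact normalization and preprocessing used in Kerkech et al. [43] are not described here, and the pixel values and function name are hypothetical.

```python
def excess_indices(red, green, blue):
    """Return (ExG, ExR, ExGR) for one RGB pixel.

    The channels are first normalized to chromatic coordinates
    r, g, b = R/(R+G+B), G/(R+G+B), B/(R+G+B); this normalization is
    an assumption of the sketch, not a detail taken from the review.
    """
    total = red + green + blue
    if total == 0:  # black pixel: indices are undefined, return zeros
        return 0.0, 0.0, 0.0
    r, g, b = red / total, green / total, blue / total
    exg = 2 * g - r - b   # Excess Green
    exr = 1.4 * r - g     # Excess Red
    return exg, exr, exg - exr


# Hypothetical pixels: a green leaf and a brownish soil background.
leaf_exg, leaf_exr, leaf_exgr = excess_indices(60, 120, 40)
soil_exg, soil_exr, soil_exgr = excess_indices(120, 90, 70)
```

In this toy example the leaf pixel yields a positive ExGR and the soil pixel a negative one, which is why such indices are often used to separate vegetation from background before or alongside a CNN.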

Pine tree crops diseases identification

Several studies targeted the detection of pine tree diseases from UAV-based aerial imagery. For example, Qin et al. [59] developed the Spatial-Context-Attention Network (SCANet) architecture to segment pine nematode disease in multispectral aerial images of pine trees gathered using UAV technology. The SCANet architecture consists of a Spatial Information Retention Module (SIRM) and a Context Information Module (CIM), where the SIRM was used to obtain low-level features and the CIM was designed to expand the receptive field. Their approach provides better results than state-of-the-art semantic segmentation algorithms, achieving a mean F1-score of more than 88%, while DeepLabV3+, HRNet, and DenseNet provide only 72.22%, 72%, and 70%, respectively. Also, Hu et al. [37] combined a Deep Convolutional Neural Network (DCNN), a Deep Convolutional Generative Adversarial Network (DCGAN), and an AdaBoost classifier to improve the detection of diseased pine trees from RGB UAV imagery. The proposed approach outperforms traditional machine learning methods, providing an F1-score of 86.3% and a recall of 95.7% against recall rates of 78.3% and 65.2% for the SVM and AdaBoost classifiers, respectively (Table 3). Similarly, in Hu et al. [38], a combination of MobileNet, Faster R-CNN, Augmentor, and DCGAN architectures was adopted to recognize diseased pine trees from UAV imagery. The DCGAN model was used to increase the number of images used in the training process, while the MobileNet architecture was used to reduce complex background information, such as roads, soils, and shadows, that shares some features with the targeted pine tree disease; then, Faster R-CNN was used to detect diseased pine trees. According to Table 3, the proposed method with DCGAN-based data augmentation provides acceptable results, achieving an F1-score of 70.3%, a recall rate of 92.9%, and a precision rate of 56.5%, while it achieved only 61.9%, 92.9%, and 46.4%, respectively, with Augmentor-based data augmentation. 
Also, the authors in Wu et al. [82] focused on the detection of Pine Wilt Disease (PWD) using UAV technology and two state-of-the-art deep learning-based detectors. The first detector is Faster R-CNN (a two-stage detector), while the second is YOLOv3 (a one-stage detector). These detectors rely on different backbone architectures for feature extraction: ResNet-50 and ResNet-101 for Faster R-CNN, and DarkNet-53 and MobileNet for YOLOv3. They achieved a mAP of 60.2%, 62.2%, 64%, and 63.2% using Faster R-CNN (ResNet-50), Faster R-CNN (ResNet-101), YOLOv3 (DarkNet-53), and YOLOv3 (MobileNet), respectively (Table 3). According to Table 3, the DarkNet-53-based YOLOv3 provides the best mAP among all the tested detectors, but with the largest model size of 241 MB. On the other hand, MobileNet-based YOLOv3 provides the highest inference speed with around 1.4 FPS while keeping a competitive mAP. Similarly, in another study Yu et al. [83], the authors adopted ResNet50-based Faster R-CNN and DarkNet53-based YOLOv4 detectors to identify PWD in pine trees at different growing stages, including green, early, middle, and late stages. According to Table 3, Faster R-CNN provides a better mAP (61%) and a smaller model size (113M) than YOLOv4, which achieved an mAP of only around 57% with a model size of 224M. Compared with the work of Wu et al. [82], YOLOv4 performs worse than YOLOv3, which could be due to the dataset type, image size, image preprocessing, and model configuration. However, YOLOv4 provides real-time PWD detection, achieving an inference speed of more than 25 FPS. Nevertheless, these results are still relatively low, which could be due to many factors such as the flight altitude and the small size of the targeted disease.

Other crops diseases identification

Deep learning and UAVs have also been adopted to identify diseases in many other crop types, including potato, tomato, soybean, and banana, among others. For example, the authors in Tetila et al. [75] proposed a computer vision technique that combines the SLIC algorithm and different CNN models to identify soybean diseases at leaf level from RGB images captured through a DJI Phantom 3 Pro equipped with a Sony EXMOR camera. To classify soybean leaf diseases, they adopted several deep CNN architectures with different parameter fine-tuning, including Inception-v3 Szegedy et al. [74], ResNet-50 He et al. [34], VGG-19 Simonyan and Zisserman [67], and Xception Chollet [22]. According to Table 3, Inception-v3 with a fine-tuning rate of 75% slightly outperforms the other architectures in terms of accuracy and training time. It achieved an accuracy of 99.04% against 99.02%, 99.02%, and 98.56% achieved by ResNet-50, VGG-19, and Xception, respectively. Moreover, its training time was 6, 31, and 42 hours shorter than that of ResNet-50, VGG-19, and Xception, respectively. The authors in Gomez Selvaraj et al. [31] used a RetinaNet detector based on the ResNet-50 architecture to detect banana plants from UAV-based RGB aerial images, achieving an F1-score of around 84% on the training set and 70% on the test set, which is relatively low. The small size of banana plants when viewed from high altitudes could explain the detector’s poor performance. Then, they investigated the performance of two CNN models to classify each of the detected plants as healthy or diseased, achieving an accuracy of 85% for VGG-16 and 92% for the proposed architecture. To determine the severity of potato Late Blight disease from multispectral UAV imagery, the authors in Duarte-Carvajalino et al. [28] used different methods, including traditional machine learning approaches (MLP, RF, and SVR) and a deep learning model (CNN). 
According to Table 3, CNN and RF provide better results than MLP and SVR, achieving an R² of 0.74 for CNN and 0.75 for RF. Similarly, the authors in Shi et al. [65] developed a 3D-CNN model called CropdocNet to detect potato Late Blight disease from hyperspectral images collected using a UAV platform. The proposed model achieved an average accuracy of around 98% and 96% on the training and independent testing sets, respectively. However, their study targeted only a single potato disease type. The authors in Abdulridha et al. [2] developed a system based on MLP and VIs to detect Target Spot (TS) and Bacterial Spot (BS) diseases in tomato crops from UAV-based hyperspectral images in both laboratory and field conditions and at three different disease development stages: healthy, early, and late. Applying MLP, they were able to identify TS and BS diseases from UAV imagery with an accuracy of 97% and 98%, respectively. Dang et al. [25] proposed the RadRGB model to classify Fusarium Wilt Disease in radish crops. Compared to VGG-16 and Inception-V3, the proposed architecture provides the best results in terms of accuracy and testing time, with 96.4% and 0.043 s/image, respectively, while VGG-16 and Inception-V3 achieved slightly lower accuracies of 93.1% and 95.7%, respectively, and longer testing times of 0.1 (VGG-16) and 0.22 (Inception-V3) s/image (Table 3). Cercospora leaf spot detection from UAV imagery of sugar beet crops was targeted in Görlich et al. [32] using a semantic segmentation model (FCN) based on the fully convolutional DenseNet (FC-DenseNet) proposed in Jégou et al. [40]. The proposed FCN approach takes RGB images as input and provides a pixel-wise map. The adopted method achieved an F1-score of 75.74% on data under similar field conditions to the training data and a similar F1-score of 75.55% under changing field conditions.

Discussions

Early crop and plant disease identification is a crucial task to improve crop productivity. The adoption of recent UAV-based technologies and advanced deep learning algorithms has emerged as a new effective solution, where their adoption to detect crop diseases has gained high importance in several studies over the last few years. In this review paper, we investigated the importance of several UAV platforms, camera sensors technologies, and deep learning algorithms to improve crop and plant disease identification. These techniques and technologies provide better performance than traditional ones that are based on spatial and terrestrial technologies and machine learning-based methods such as SVM and random forest classifiers. In this section, we aim to provide readers and farmers with the most challenging issues that could face them to detect crop diseases from UAV-based aerial images and how they can select the appropriate technology and algorithm to achieve better results.

Challenges, limitations, and potential solutions related to the UAV and camera technologies

Despite its many benefits, the UAV industry is still facing several challenges in carrying out different agricultural tasks, including large-field monitoring and pesticide spraying. In addition to the regulations that could restrict flying in several countries and areas across the world, the short flight time is considered one of the most important limitations of UAVs. It results from many factors, including battery capacity, the computational power needed to run deep learning algorithms, and the high payload. Unfortunately, these issues could limit the use of UAVs in modern smart agriculture. Several solutions were proposed in many studies to minimize the impact of these factors on the overall efficiency of UAV technology. For example, the authors in Gao et al. [30] proposed to plan the UAV flight route in advance to ensure that the entire crop is checked following the shortest flight path to reduce energy consumption. However, this approach is not always applicable due to many factors, including crop structure and location. Similarly, to reduce energy consumption and increase flight time, the adoption of lightweight models and offboard processing could be other effective solutions Bouguettaya et al. [17]. Furthermore, to cover larger fields, the use of UAV swarms is an interesting solution Albani et al. [8], Ju and Son [42]. However, it is still very challenging to control a large number of UAVs simultaneously. Also, fixed-wing and hybrid VTOL UAVs can be used to monitor large crops. However, their lower spatial resolution could reduce the performance of early disease identification systems compared to multirotor UAVs, which can fly at lower altitudes and provide better resolution, allowing farmers to monitor crops even at leaf level.

The camera type selection is a crucial task that we need to consider. Multispectral, hyperspectral, and thermal camera sensors provide richer information than visible cameras making them more suitable for early crop diseases identification from aerial images Wu et al. [82], Pineda et al. [57]. Several studies have focused on calculating different VIs using multispectral and hyperspectral data allowing farmers to identify general crop stress, including different diseases Kerkech et al. [43], Abdulridha et al. [1], Bagheri [11]. However, UAVs equipped with visible cameras are the most used in the agriculture field due to their price, weight, and availability Bouguettaya et al. [18], Wu et al. [82]. Also, the performance of the different imaging systems depends on the flight altitudes and different viewing angles. For example, flying at high altitudes could increase the scene complexity that may restrict the deep learning model’s performance in the case of small-scale visual symptoms. Also, flying at very low altitudes can affect the detection performance due to the wind generated by the rotation of propellers resulting in the continuous movement of the plants’ leaves that can cause hiding of some symptoms.

Challenges, limitations, and potential solutions related to the deep learning models

Recently, deep learning models have emerged as a novel technology that has shown promising results in visual data processing. They provide significant benefits over classical machine learning approaches. For example, deep learning algorithms can extract relevant features automatically instead of relying on manual feature extraction, which is a time-consuming task. Generalization is another important property that favors deep learning models over classical techniques. For example, the model in Görlich et al. [32] provides similar results both under field conditions similar to the training data and under changing field conditions, such as varying illumination and orientation. However, deep learning models are still facing several challenges and limitations.

The use of deep learning methods requires a huge amount of data, which is not always achievable in the case of crop disease identification from UAV imagery due to the large variety of crops, plants, and diseases. Several approaches were adopted in different studies to reduce the impact of the low availability of datasets, including transfer learning, fine-tuning, and techniques employed within the deep learning architecture such as dropout and normalization Tetila et al. [75], Gomez Selvaraj et al. [31], Duarte-Carvajalino et al. [28]. Also, data augmentation could be another effective solution to overcome this issue by artificially increasing the training dataset size. This can be done through image processing techniques like rotation, zooming, mirroring, and adding noise and brightness changes to the images Stewart et al. [71], Kerkech et al. [44]. For example, the authors in Stewart et al. [71] applied rotations to each image to increase the number of images used for the training process, resulting in seven additional augmented images per image. Similarly, the authors in Tetila et al. [75] used a total of 3000 images for six different classes to train the adopted CNN architectures. Each of the six classes consists of 500 UAV-based images, which is not sufficient to train deep learning models. To this end, they applied different geometric transformations to increase the number of image samples in each class, including rotation, rescaling, scrolling, and zooming operations. Another way to augment data is to use deep learning algorithms, such as different types of Generative Adversarial Networks (GANs), to generate new unseen artificial data. For example, the authors in Hu et al. [38], Hu et al. [37] used the DCGAN architecture to increase the data size by generating new unseen data to improve the model performance. Also, to overcome the lack of data to train an efficient deep learning model, the authors in Tetila et al. [75] applied dropout and data augmentation techniques using the Keras library. They also investigated the impact of transfer learning and fine-tuning techniques on the overall performance of the CNN model, where various models pre-trained on the ImageNet dataset were tested. These techniques provide much better results than training deep learning models from scratch with random weight initialization. Data type and data preprocessing are other fundamental factors to consider when developing efficient deep learning models, especially in the case of crop disease identification from UAV-based aerial images. Thus, the data type selection may improve the performance of the developed model. Several studies adopted different data fusion approaches to improve the model performance. For example, the authors in Zhang et al. [84] proposed to combine spectral and spatial information. Similarly, the authors in Kerkech et al. [45] combined visible and infrared data to improve the model performance in identifying diseases. Also, a combination of different vegetation indices and color spaces was adopted in Kerkech et al. [43], where the choice of the right vegetation index could affect the overall performance of the crop disease identification system, as shown in many studies, including Guo et al. [33], Abdulridha et al. [2], and Bagheri [11].
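The geometric augmentation described above can be sketched in a few lines. The example below produces seven additional variants per image by combining 90-degree rotations with horizontal mirroring. This particular set of transforms is our assumption for illustration: the review only states that rotations yielded seven additional images per image in Stewart et al. [71], not which angles or operations were used. Images are represented as plain lists of rows so the sketch has no dependencies; all function names are hypothetical.

```python
def rotate90(img):
    """Rotate a 2D image (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def mirror(img):
    """Flip a 2D image horizontally (left-right)."""
    return [row[::-1] for row in img]


def augment(img):
    """Return 7 extra variants: 3 rotations and 4 mirrored rotations."""
    variants = []
    current = img
    for _ in range(4):  # 0, 90, 180, and 270 degrees
        variants.append(current)
        variants.append(mirror(current))
        current = rotate90(current)
    return variants[1:]  # drop the first entry, the untouched original


# Hypothetical 2x2 single-channel patch.
patch = [[1, 2], [3, 4]]
augmented = augment(patch)
```

Because these transforms only rearrange pixels, the class label of each patch is unchanged, which is what makes them safe to use for enlarging a small training set.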

Also, several studies focused on the deep learning model architecture, such as the chosen backbone for features extraction, the number of layers, the loss functions, to name a few. The selected architecture is a fundamental parameter that could affect the performance of the detection. As presented in Table 3, the authors in Gomez Selvaraj et al. [31], Wu et al. [82], and Kerkech et al. [45] investigated the impact of the selected backbone architecture on the effectiveness of the used algorithms. For example, the authors in Wu et al. [82] showed that YOLOv3 based on DarkNet architecture as the main feature extractor achieved a better mAP of 64% than the one based on MobileNet achieving a relatively smaller mAP of 63.2%. However, the latter provides better processing speed and model size making it more suitable for small devices with limited computational resources.

Comparison

Regarding crop and plant disease detection, computer vision-based methods generally fall into three main tasks: image classification, object detection, and image segmentation. The selection of the appropriate computer vision task depends on the targeted objective. Therefore, in this section, we aim to provide a detailed analysis of the most adopted deep learning-based computer vision categories for crop disease identification to help researchers and farmers select the appropriate models and tools for the most important study cases. Also, different evaluation metrics for computer vision tasks are presented.

Image classification case

Image classification is one of the first deep learning approaches to be widely used in the field of crop disease detection, where the classifier receives an image as input and tries to assign a label to the entire image from a predefined set of categories. According to Table 3, deep learning-based image classification is the most used technique for crop and plant disease identification from RGB images collected by UAVs that fly at low altitudes.

Case study Deep learning-based image classification models are widely used to recognize different plant and crop diseases. In most cases, the image classification task is utilized to recognize diseases at the leaf level. Thus, most of the reviewed studies adopted image classification techniques to identify plant diseases from UAV-based RGB images collected from low altitudes (Table 3).

Adopted techniques Due to their high effectiveness in the image classification task, CNNs are considered the main deep learning architectures for plant and crop diseases identification. According to the reviewed papers, in addition to some custom CNN architectures, AlexNet, VGGNet, ResNet, Inception, and Xception were among the most employed CNN architectures for plant diseases classification. For example, the authors in Tetila et al. [75] adopted four state-of-the-art CNN architectures to classify soybean diseases, which are Inception-V3, ResNet-50, VGG-19, and Xception. Other researchers developed their own custom CNN architectures to classify diseases, including the study of Dang et al. [25].

Performance The performance of plant disease identification using image classification techniques is measured using different evaluation metrics, including accuracy, precision, recall, F1-score, learning error, training time, and inference time. Image classification based on deep learning models provides high recognition rates compared to traditional machine learning models. For example, the authors in Duarte-Carvajalino et al. [28] showed that CNN achieved lower error rates than Random Forest and MLP. Shallow CNN models are among the preferred solutions for plant disease classification from small-size images. For example, the authors in Dang et al. [25] showed the effectiveness of using a shallow CNN architecture with only five convolution layers in identifying fusarium wilt of radish from 64 × 64 images. In contrast, deeper networks, such as the ResNet-50 adopted as the main CNN classifier in Gomez Selvaraj et al. [31], may lose significant information about small objects at the deeper layers.
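As a reminder of how three of these metrics relate, the sketch below computes precision, recall, and F1-score from their standard definitions. It is a worked check under our own naming, not code from any reviewed study. Plugging in the precision and recall values reported earlier for Hu et al. [38] reproduces the F1-scores quoted for that work, which confirms that the reported figures are mutually consistent; the confusion counts in the last line are hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true positives, false positives, false negatives."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def f1_score(precision, recall):
    """F1 is the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Values reported for Hu et al. [38] in this review (as fractions).
f1_dcgan = f1_score(0.565, 0.929)      # DCGAN-based augmentation
f1_augmentor = f1_score(0.464, 0.929)  # Augmentor-based augmentation

print(round(100 * f1_dcgan, 1), round(100 * f1_augmentor, 1))  # 70.3 61.9

# Hypothetical confusion counts, for illustration only.
p, r = precision_recall(tp=8, fp=2, fn=2)
```

The example also shows why a high recall alone can be misleading: with the same recall of 92.9%, the F1-score drops by more than eight points when precision falls from 56.5% to 46.4%.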

Image classification could be an effective and even faster way when we target single disease identification per image. However, in the case of multiple diseases in the same image, we need more advanced and complicated techniques such as object detection and image segmentation that can identify multiple diseases in the same image.

Object detection case

Object detection is another important computer vision task adopted to identify crop and plant diseases from UAV imagery. Unlike image classification, deep learning-based object detection models can classify and localize multiple diseases that are present in the input image providing bounding boxes around each detected disease with its appropriate class.
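Detection metrics such as the mAP values reported in this review are built on the overlap between predicted and ground-truth bounding boxes, usually measured by Intersection over Union (IoU). The following minimal sketch shows that computation for axis-aligned boxes. The box format `(x_min, y_min, x_max, y_max)`, the coordinates, and the function name are assumptions made for the example; the IoU thresholds used in the reviewed studies are not stated here.

```python
def box_iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the intersection rectangle.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Width and height are clamped at 0 when the boxes do not overlap.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical predicted and ground-truth boxes around a diseased tree crown.
predicted = (0, 0, 10, 10)
ground_truth = (5, 5, 15, 15)
overlap = box_iou(predicted, ground_truth)
```

A prediction is typically counted as correct only if its IoU with a ground-truth box exceeds a chosen threshold, which is one reason small targets seen from high altitudes are penalized heavily: a shift of a few pixels changes the IoU substantially.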

Case study According to the studies available in the literature, object detection-based techniques are mainly adopted to identify diseases in tree crops, including pine and banana (Table 3).

Adopted techniques There are two main object detection categories used to detect plant and crop diseases from aerial images: two-stage and single-stage algorithms. Due to its high performance compared to other region-based models, Faster R-CNN is the most used two-stage detector and is adopted in several studies, including Wu et al. [82] and Yu et al. [83], whereas different YOLO versions are used as the main single-stage detectors. YOLOv3 and YOLOv4 were adopted in Wu et al. [82] and Yu et al. [83], respectively, to identify pine wilt disease. Other studies combined object detection and image classification techniques to improve the model performance. The authors in Gomez Selvaraj et al. [31] used a RetinaNet based on the ResNet-50 architecture to detect banana plants from high-altitude UAV imagery. Then, they cropped the detected plants from the original image and fed them to VGG-16 or a custom CNN architecture to classify each detected plant as diseased or not.

Performance Compared to image classification and image segmentation techniques, object detection-based plant and crop diseases identification provides relatively low performance. For example, the detection performance achieved in Gomez Selvaraj et al. [31] using the RetinaNet model (F1-score of around 84% on the training set and 70% on the test set) could be improved using a larger input data size, but it comes with the cost of longer training time and higher processing power. Thus, one of the major drawbacks of object detection approaches is that their overall performance depends on the flight altitude and also on the adopted feature extractor.

According to Table 3, object detection-based methods are the least adopted techniques for plant and crop disease identification from UAV imagery due to their relatively low performance.

Image segmentation case

Image segmentation aims to classify each pixel in the image according to its class. In the plant and crop diseases identification field, deep learning-based image segmentation techniques provide a more precise location of the detected disease by classifying each pixel in the image according to the disease types. The problem of detecting diseases that are in arbitrary poses and cluttered and/or occluded environments using object detection algorithms remains unsolved making image segmentation techniques more appropriate in such cases.
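Pixel-wise outputs also make it straightforward to combine predictions obtained from different sensors. The results reported earlier for Kerkech et al. [45] include "fusion AND" and "fusion OR" data; the sketch below shows one plausible reading of these terms, namely a pixel-wise logical AND or OR of two binary disease masks. This interpretation, the mask values, and the function name are our assumptions and are not confirmed by the review.

```python
def fuse_masks(mask_a, mask_b, mode="or"):
    """Combine two equally sized binary masks pixel by pixel.

    mode="and": a pixel is diseased only if both masks agree.
    mode="or":  a pixel is diseased if either mask flags it.
    """
    if mode not in ("and", "or"):
        raise ValueError("mode must be 'and' or 'or'")
    if mode == "and":
        combine = lambda a, b: a and b
    else:
        combine = lambda a, b: a or b
    return [
        [int(bool(combine(a, b))) for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(mask_a, mask_b)
    ]


# Hypothetical 2x2 masks predicted from visible and infrared images (1 = diseased).
visible_mask = [[1, 0], [1, 0]]
infrared_mask = [[1, 1], [0, 0]]

fused_and = fuse_masks(visible_mask, infrared_mask, mode="and")
fused_or = fuse_masks(visible_mask, infrared_mask, mode="or")
```

Under this reading, AND fusion is the conservative choice (fewer false alarms, more missed pixels), while OR fusion is the permissive one, which would be consistent with the higher accuracies reported above for the fusion OR data.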

Case study Image segmentation techniques based on deep learning models are mostly used to identify plant and crop diseases from multispectral and hyperspectral images collected using UAV platforms that fly at different altitudes.

Adopted techniques Image segmentation algorithms are divided into two main categories: semantic segmentation and instance segmentation. Several studies adopted image segmentation algorithms to identify crop and plant diseases from UAV imagery. For example, the authors in Stewart et al. [71] adopted an instance segmentation algorithm called Mask R-CNN to identify Northern Leaf Blight in maize crops from images acquired through a UAV, achieving an average precision of 96%. However, according to Table 3, semantic segmentation is the most used image segmentation technique and is adopted in several studies. FCN, U-Net, SegNet, PSPNet, and DeepLab-V3 are the most adopted semantic segmentation models, but their performance depends on several factors, including data type, flight altitude, crop type, and disease type.

Performance In the case of plant and crop diseases identification from high altitude UAV imagery, according to Table 3, image segmentation models provide better recognition rates compared to object detection-based techniques. For example, using object detection models, the authors in Wu et al. [82] achieved precision rates varying between 60% and 64% to identify pine disease, whereas adopting semantic segmentation techniques, the authors in Qin et al. [59] were able to achieve a precision rate between 68% and 86%. However, these results did not only depend on the used model but also the disease type.

Object detection and image segmentation techniques may require more computational power than the processing systems onboard UAV platforms can provide. To overcome this problem, several approaches were adopted over the last few years, including online off-board data processing Li et al. [48], Hu et al. [37], offline data processing Bayraktar et al. [13], and lightweight deep learning models implementation Bouguettaya et al. [17]. However, each of these techniques has its pros and cons. For example, the online off-board processing method raises data privacy and security issues because the collected data must be transmitted in real time using different communication protocols, whereas offline data processing does not provide real-time solutions in the case of high-resolution data. Also, lightweight versions of deep learning models have lower accuracy compared to large and complex deep learning models.

Deep learning-based crop and plant diseases identification through visual data acquired from UAVs may suffer in terms of performance due to many factors related to the crop and disease characteristics. Thus, several techniques can be used to improve their performance. For example, the choice of CNN architecture to extract effective features plays a crucial role in terms of accuracy and speed. Table 3 shows that the selected CNN has a significant impact on the model accuracy. Tuning model-related parameters, like the learning rate, optimizer, normalization, and number of epochs, may improve the recognition accuracy. Also, various bio-inspired meta-heuristic optimization techniques were proposed over the last few years to improve the performance of developed models, including Reptile Search Algorithm (RSA) Abualigah et al. [7], Arithmetic Optimization Algorithm (AOA) Abualigah et al. [4], and Aquila Optimizer (AO) Abualigah et al. [6].

Evaluation metrics

To evaluate the performance of deep learning models, several evaluation metrics were proposed over the years, including accuracy, precision, recall, and F1-score, among others. Table 4 summarizes the most used metrics to evaluate the performance of the developed deep learning-based computer vision models.

Table 4.

List of the most used metrics to evaluate deep learning-based computer vision models

| Metric | Formula | Description |
|---|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ | The accuracy is the most used evaluation metric. It measures the proportion of predictions that the developed model got right in the classification task. |
| Recall (sensitivity) | $\frac{TP}{TP + FN}$ | The recall rate indicates the proportion of actual disease instances that the developed model correctly identifies. A better recall rate means fewer false-negative predictions. |
| Precision | $\frac{TP}{TP + FP}$ | The precision rate indicates the proportion of the model's predictions for a certain disease class that are correct. A better precision rate means fewer false-positive predictions. |
| F1-score (F-measure) | $2 \times \frac{Precision \times Recall}{Precision + Recall}$ | The F1-score metric represents the harmonic mean of precision and recall rates. |
| Average Precision | $\int_0^1 p(r)\,dr$ | The AP is a metric to evaluate the performance of an object detection model, which is calculated for each class as the area under the precision-recall curve $p(r)$. |
| Mean Average Precision | $\frac{1}{N}\sum_{i=1}^{N} AP_i$ | The mAP is the average of AP over all $N$ classes. In the case when we have a single class, mAP and AP are the same metrics. |
| Kappa coefficient | $\frac{p_0 - p_e}{1 - p_e}$ | The Kappa coefficient is a statistic that measures inter-annotator agreement, corrected for the agreement expected by chance. |
| Intersection over Union | $\frac{\text{Area of overlap}}{\text{Area of union}}$ | The IoU measures the overlap between the ground truth area and the predicted area. |
| FPS | $\frac{\text{Number of processed frames}}{\text{Processing time in seconds}}$ | The FPS (frames per second) is used to measure the detection speed. |

The abbreviations TP, TN, FP, and FN in Table 4 denote:

- True Positives (TP): the number of correctly identified diseases;
- True Negatives (TN): the number of samples correctly identified as not diseased;
- False Positives (FP): the number of incorrectly identified diseases;
- False Negatives (FN): the number of non-identified diseases.

Also, $p_0$ and $p_e$ denote the observed proportional agreement and the expected agreement by chance, respectively.
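To make the metrics of Table 4 concrete, the following sketch computes accuracy, precision, recall, F1-score, and the Kappa coefficient from the four counts defined above, together with the IoU of two axis-aligned bounding boxes. It assumes a binary (diseased vs. not diseased) setting; the function names, the `(x_min, y_min, x_max, y_max)` box layout, and the example counts are illustrative assumptions rather than values taken from the reviewed studies.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute Table 4 metrics from binary confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    # Kappa: observed agreement p0 versus chance agreement pe, where pe is
    # derived from the marginal totals of predictions and ground truth.
    p0 = accuracy
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / total**2
    kappa = (p0 - pe) / (1 - pe) if pe != 1 else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "kappa": kappa,
    }


def box_iou(box_a, box_b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union else 0.0


# Made-up counts for 100 test samples (illustration only).
metrics = classification_metrics(tp=40, tn=45, fp=5, fn=10)
# Predicted box versus ground-truth box, overlapping in a unit square.
iou = box_iou((0, 0, 2, 2), (1, 1, 3, 3))
```

With these example counts, the accuracy is 0.85 while the recall is 0.80, which illustrates why accuracy alone can hide missed disease instances when the classes are imbalanced.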

Conclusions and future directions

In the present paper, we reviewed the existing deep learning-based computer vision methods to identify and classify crop and plant diseases from UAV-based aerial images. Firstly, we introduced the different UAV types used in the agricultural field, including rotary-wing, fixed-wing, and hybrid VTOL UAVs. Secondly, we highlighted various camera sensors that could be mounted on UAV platforms adopted to identify crop diseases, such as RGB, multispectral, hyperspectral, and thermal cameras. Thirdly, different deep learning models used to identify crop and plant diseases from aerial images were presented. Finally, we investigated the most challenging issues that farmers could face when detecting crop diseases from UAV imagery and how they can select the appropriate deep learning model and technology to achieve better results.

Developing accurate, real-time, reliable, and autonomous UAV-based systems for plant and crop diseases identification is becoming more and more essential in modern agriculture. These systems require complex and efficient algorithms that can overcome the encountered problems and challenges, such as lighting condition changes, disease size, occlusion, and changes in viewpoints, among others. In addition, it is necessary to combine recent deep learning architectures and UAV platforms with advanced technologies to build a system that works efficiently to improve crop productivity. One other major problem that we have to deal with is agricultural data availability. Therefore, we need to collect more data or develop sophisticated algorithms based on generative deep learning architectures to generate realistic datasets.

Biographies

Abdelmalek Bouguettaya

received the master’s and Ph.D. degrees in telecommunications from Badji Mokhtar University, Annaba, Algeria, in 2011 and 2017, respectively. He was a member of the “Embedded and Detection” Advanced Systems Division, Laboratoire d’Etude et de Recherche en Instrumentation et en Communication d’Annaba (LERICA Laboratory), Annaba, from 2009 to 2018. He is currently a Senior Researcher with the Research Center in Industrial Technologies (CRTI), Chéraga, Algeria. His current research interests include deep learning, computer vision, intelligent embedded systems, and Unmanned Aerial Vehicles (UAVs).

Hafed Zarzour

received the Ph.D. degree in computer science from Annaba University, Annaba, Algeria, in 2013. He is currently an Associate Professor of computer science with the University of Souk Ahras, Souk-Ahras, Algeria. He has published several research articles in international journals and conferences of high repute, including IEEE, Springer, Elsevier, Wiley, ACM, Taylor and Francis, IGI Global, and Inderscience. His research focuses on deep learning, artificial intelligence, and educational technology.

Ahmed Kechida

received his M.S. degree in electronics from the Polytechnic Military School, Algiers, Algeria, in 2003 and the Ph.D. degree in electronics from Saad Dahleb Blida 1 University, Blida, Algeria, in 2016. He is currently a Senior Researcher with the Research Center in Industrial Technologies (CRTI), Algiers. His research interests include signal processing, image analysis, embedded systems for drones, and non-destructive evaluation by ultrasound.

Amine Mohammed Taberkit

was born in Tlemcen, Algeria, in 1988. He received the master’s degree in electronic instrumentation and the Ph.D. degree from the University of Tlemcen, Tlemcen, in 2012 and 2018, respectively. In 2010, he became an Electronic Engineer at the University of Tlemcen. He was a member of the Research Unit of Materials and Renewable Energy Research (URMER) and a part-time Teacher with the University of Tlemcen from 2013 to 2019. He is currently a Senior Researcher with the Research Center in Industrial Technologies (CRTI), Chéraga, Algiers. His previous research interests included strain silicon technology, MOSFET transistors, and nanotechnology. His current research interests include artificial intelligence, intelligent embedded systems, unmanned aerial vehicles, and computer vision.

Author contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by [AB], [HZ], [AK] and [AMT]. The first draft of the manuscript was written by [AB] and [HZ] and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Data availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

Declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Consent to participate

Not applicable.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Abdelmalek Bouguettaya and Hafed Zarzour have contributed equally to this work.

Contributor Information

Abdelmalek Bouguettaya, Email: a.bouguettaya@crti.dz.

Hafed Zarzour, Email: hafed.zarzour@univ-soukahras.dz.

Ahmed Kechida, Email: a.kechida@crti.dz.

Amine Mohammed Taberkit, Email: a.taberkit@crti.dz.

References

- 1. Abdulridha J, Ampatzidis Y, Ehsani R, et al. Evaluating the performance of spectral features and multivariate analysis tools to detect laurel wilt disease and nutritional deficiency in avocado. Comput. Electron. Agric. 2018;155:203–211. doi: 10.1016/j.compag.2018.10.016.

- 2. Abdulridha J, Ampatzidis Y, Kakarla SC, et al. Detection of target spot and bacterial spot diseases in tomato using UAV-based and benchtop-based hyperspectral imaging techniques. Precision Agric. 2020;21(5):955–978. doi: 10.1007/s11119-019-09703-4.

- 3. Abdulridha J, Ampatzidis Y, Qureshi J, et al. Laboratory and UAV-based identification and classification of tomato yellow leaf curl, bacterial spot, and target spot diseases in tomato utilizing hyperspectral imaging and machine learning. Remote Sens. 2020. doi: 10.3390/rs12172732.

- 4. Abualigah L, Diabat A, Mirjalili S, et al. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 2021;376:113609. doi: 10.1016/j.cma.2020.113609.

- 5. Abualigah L, Diabat A, Sumari P, et al. Applications, deployments, and integration of internet of drones (IoD): a review. IEEE Sens. J. 2021;21(22):25532–25546. doi: 10.1109/JSEN.2021.3114266.

- 6. Abualigah L, Yousri D, Abd Elaziz M, et al. Aquila optimizer: a novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021;157:107250. doi: 10.1016/j.cie.2021.107250.

- 7. Abualigah L, Elaziz MA, Sumari P, et al. Reptile search algorithm (RSA): a nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 2022;191:116158. doi: 10.1016/j.eswa.2021.116158.

- 8. Albani D, Nardi D, Trianni V. Field coverage and weed mapping by UAV swarms. In: 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 4319–4325. 2017. doi: 10.1109/IROS.2017.8206296.

- 9. Albetis J, Duthoit S, Guttler F, et al. Detection of flavescence dorée grapevine disease using unmanned aerial vehicle (UAV) multispectral imagery. Remote Sens. 2017. doi: 10.3390/rs9040308.

- 10. Albetis J, Jacquin A, Goulard M, et al. On the potentiality of UAV multispectral imagery to detect flavescence dorée and grapevine trunk diseases. Remote Sens. 2019. doi: 10.3390/rs11010023.

- 11. Bagheri N. Application of aerial remote sensing technology for detection of fire blight infected pear trees. Comput. Electron. Agric. 2020;168:105147. doi: 10.1016/j.compag.2019.105147.

- 12. Barbedo JGA. A review on the use of Unmanned Aerial Vehicles and imaging sensors for monitoring and assessing plant stresses. Drones. 2019. doi: 10.3390/drones3020040.

- 13. Bayraktar E, Basarkan ME, Celebi N. A low-cost UAV framework towards ornamental plant detection and counting in the wild. ISPRS J. Photogramm. Remote. Sens. 2020;167:1–11. doi: 10.1016/j.isprsjprs.2020.06.012.

- 14. Beddow JM, Pardey PG, Chai Y, et al. Research investment implications of shifts in the global geography of wheat stripe rust. Nat. Plants. 2015;1(10):1–5. doi: 10.1038/nplants.2015.132.

- 15. Bohnenkamp D, Behmann J, Mahlein AK. In-field detection of yellow rust in wheat on the ground canopy and UAV scale. Remote Sens. 2019. doi: 10.3390/rs11212495.