Abstract

Introduction

Augmented reality (AR) has promise as a clinical teaching tool, particularly for remote learning. The Chariot Augmented Reality Medical (CHARM) simulator integrates real-time communication into a portable medical simulator with a holographic patient and monitor. The primary aim was to analyze feedback from medical and physician assistant students regarding acceptability and feasibility of the simulator.

Methods

Using the CHARM simulator, we created an advanced cardiovascular life support (ACLS) simulation scenario. After IRB approval, preclinical medical and physician assistant students volunteered to participate from August to September 2020. We delivered augmented reality headsets (Magic Leap One) to students before the study. Prior to the simulation, via video conference, we introduced students to effective communication skills during a cardiac arrest. Participants then, individually and remotely from their homes, synchronously completed an instructor-led ACLS AR simulation in groups of three. After the simulation, students participated in a structured focus group using a qualitative interview guide. Our study team coded their responses and interpreted them using team-based thematic analysis.

Results

Eighteen medical and physician assistant students participated. We identified four domains that reflected trainee experiences: experiential satisfaction, learning engagement, technology learning curve, and opportunities for improvement. Students reported that the simulator was acceptable and enjoyable for teaching trainees communication skills; however, there were some technical difficulties associated with initial use.

Conclusion

This study suggests that multiplayer AR is a promising and feasible approach for remote medical education of communication skills during medical crises.

Supplementary Information

The online version contains supplementary material available at 10.1007/s40670-022-01598-7.

Keywords: Augmented reality, Medical education, Medical students, Simulation, Qualitative

Introduction

Virtual reality (VR) and augmented reality (AR) have supplemented traditional medical simulation programs with novel tools that provide immersive learning experiences [1]. Students and teachers can interact within a mix of virtual environments, real-world assets, and holographic projections. Unlike VR, in which users are immersed in a computer-generated environment, AR enables interactive simulation experiences that overlay holograms on real-world environments.

AR is a promising tool for medical education [2]. The use of AR simulation technology in medical student education increases learning motivation and competency [3]. Its utility may be further increased in a pandemic and post-pandemic environment due to renewed interest in distance learning [4]. However, medical trainees’ satisfaction with a socially distanced AR simulation has not been studied, particularly one in which they participate from their home environment.

Using the Mobile AR Education (MARE) framework, we developed an advanced cardiovascular life support (ACLS) AR simulation hosted on the Magic Leap One (ML1) headset (Plantation, FL, USA) [5, 6]. The simulator, Chariot Augmented Reality Medical simulator (CHARM simulator, Stanford Chariot Program, Stanford, CA, USA), integrates elements of teamwork and real-time communication into a portable, remote learning–enabled medical simulator. The AR simulation depicts challenging clinical scenarios while allowing participants to keep interacting with their current environment. Holographic patients, beds, and monitors are placed into the real world and are controlled through holographic touch pads seen only by the instructors. With AR simulations, instructors can modulate scenario difficulty in response to trainees, unlike hard-coded software with predetermined responses. This makes AR simulations well suited to supporting a constructivist approach to teaching and learning. Because the CHARM simulator can be completed while maintaining social distance, it is a potential tool for encouraging collaborative learning in a safe environment during a global pandemic. Medical simulation, particularly with augmented reality, is traditionally completed in medical environments. This study explored whether realism could be maintained in a non-medical environment.

Given the novel study intervention and limited prior research in this area, a qualitative methodology provided optimal opportunity to explore feasibility and acceptability. We focused on usability testing, observation of implementation, and assessment of participant experience to determine if their home environment provided a suitable environment for the AR simulation [7]. The purpose of this study was to analyze qualitative feedback from medical and physician assistant students piloting the CHARM simulator.

Methods

Study Design and Researcher Characteristics

This was a descriptive, qualitative study that adhered to the Standards for Reporting Qualitative Research (SRQR) guidelines [8]. No research personnel had supervisory roles over the participants. When approaching students for the study, the trained research personnel clarified that participation was voluntary.

Context

This study was conducted at a large, academic medical and physician assistant school in Northern California. There are 482 medical students and 82 physician assistant students enrolled in the respective schools. Regarding other simulation exposure, students have access to a 28,000-square-foot Immersive Learning Center, which had reduced capabilities due to county social restriction guidelines during the COVID-19 pandemic.

This study was conducted remotely to maintain safe social distance. We delivered sanitized study equipment to participants and conducted the simulation and subsequent focus group interviews via video conference (Zoom Video Communications, San Jose, CA). Data collection occurred between August and September 2020.

Sampling Strategy

Research assistants (RAs) recruited a purposeful sample of eligible medical and physician assistant students via email and Slack (Slack Technologies, San Francisco, CA). A priori, we sought to enroll 18 students in order to reach thematic saturation [9]. Students eligible for inclusion were second-year medical or physician assistant students who were 18 years or older (Table 1). We selected second-year medical and physician assistant students because they have learned cardiac and pulmonary pathophysiology and have relatively homogeneous experiences with life support crisis management training. Students who currently had nausea or had a history of severe motion sickness or seizures were excluded from the study. Students who wore corrective glasses were also ineligible, since glasses were not compatible with the AR goggles.

Table 1.

Demographics

| Characteristic | Value |
|---|---|
| Age (years) | 24.9 ± 1.7 |
| Sex (n) | |
| Male | 11 |
| Female | 7 |
| Race* | |
| American Indian or Alaskan Native | 1 (5.6%) |
| Asian | 6 (33.3%) |
| Black or African American | – |
| Native Hawaiian or Other Pacific | – |
| White | 12 (66.7%) |
| Ethnicity | |
| Hispanic or Latino | 4 (22.2%) |
| Not Hispanic or Latino | 14 (77.8%) |
| Training program | |
| M.D. Program | 15 (83.3%) |
| Master of Science Physician Assistant (Level MSPA2) | 3 (16.7%) |
| Level of resuscitation certification* | |
| None | 2 (11.1%) |
| Basic Life Support | 16 (88.9%) |
| Advanced Cardiac Life Support | 1 (5.6%) |
| Pediatric Advanced Life Support | – |
| Neonatal Resuscitation Program | – |
| Previous exposure to AR | |
| Yes (1–2 times) | 8 (44.4%) |
| None | 10 (55.6%) |
| Number of times initiated resuscitative efforts on a person | |
| None | 16 (88.9%) |
| 1–2 times | 2 (11.1%) |
| Received training on effective communication skills during resuscitation | |
| Yes | 8 (44.4%) |
| No | 10 (55.6%) |
| Worked as a frontline healthcare worker with direct contact with patients who were critically ill and in need of resuscitation | |
| Yes | 5 (27.8%) |
| 1–6 months | 2/5 (40%) |
| 1–2 years | 3/5 (60%) |
| No | 13 (72.2%) |

*Multiple answers allowed

Human Subject Ethical Approval

The Stanford University Institutional Review Board approved this study. Participants were considered vulnerable because they are students. Consent was obtained prior to participation in the simulation and focus group. Demographic questionnaires permitted participants to refuse answering questions without study exclusion. Participants were also allowed to refuse answering questions during the focus group interview.

Data Collection Methods

Qualitative data were collected during focus groups following each simulation, with the focus group consisting of the students who had just participated in the session. Focus group transcripts were generated using video recording (Zoom) with automated audio transcription service (Otter.ai, Los Altos, CA). Transcripts were reviewed and edited for accuracy by a trained RA.

Data Collection Instruments

After we obtained consent and prior to the simulation, each participant completed a questionnaire to obtain information about general demographics, stage of training, experience with AR, and first aid background. We administered the questionnaire electronically using REDCap [10, 11].

We developed a qualitative focus group guide for data collection, which did not change over the course of the study. The focus group guide contained six questions accompanied by probing questions and prompts that the facilitator could use to increase the amount of information obtained from a given question (see Supplemental Digital Content 1, the focus group interview guide). The same study investigator administered these questions after each simulation. The questions addressed attitudes and opinions about the simulation experience and the quality of learning, as well as perceptions on the best way to learn medical skills, teamwork during the simulation, and limitations of this paradigm. Our phenomenological, data-driven methodology for interviewing was similar to that of a previous qualitative study assessing medical student experiences using an online multiplayer virtual world for cardiopulmonary resuscitation [12]. While that study used a focus group interview guide with fourteen targeted questions, we selected six open-ended questions with optional prompting questions to increase the response depth. Additionally, we piloted these questions with medical students who had previous experience with simulation-based learning and modified them based upon their feedback.

Simulation Design and Delivery

The goal of the CHARM simulation was to present students with a realistic, interactive scenario in which they would participate in a cardiac arrest medical crisis. As described in the function layer of the MARE framework, suitable activities in an appropriate environment are required for augmented reality–based learning [6]. Part of assessing feasibility and acceptability involved exploring whether we could create an immersive clinical environment in the learners’ homes. The activities performed in the simulation, specifically communication with other students in response to a dynamic situation, were designed to be adaptable to the various personal paradigms of students, another element in the MARE framework.

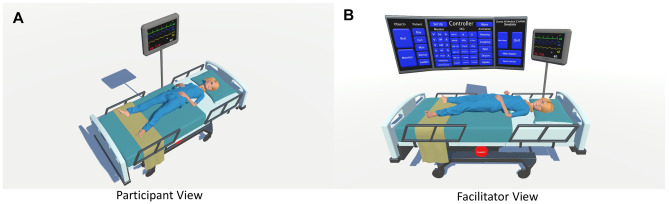

Each simulation included a hologram of a hospital bed with a patient that would animate in response to the facilitator’s modifications of the patient’s presentation, such as seizing or sleeping. A holographic monitor indicated the patient’s heart rate, blood pressure, oxygen saturation, and electrocardiogram next to the bed and was similarly adjustable by the simulation instructor (Fig. 1). Each participant appeared in the simulation as an avatar head with holographic hands that followed the movement of the handheld controller. Participants were able to hear and speak with other participants via ML1 spatial audio capabilities. The facilitator controlled all aspects of the simulation remotely and also communicated with students using the headset.

Fig. 1.

A The hologram of the hospital bed, patient, and monitor. B The holographic controller seen only by the simulation facilitator

We randomly assigned students to groups of three to participate in a simulation [13]. A study staff member delivered the ML1 headsets in a contactless manner. Via an interactive lecture over video conference, the facilitator conducted an orientation module that reviewed the core tenets of effective communication skills during a medical crisis. The learning objectives were (1) to learn and practice closed-loop communication skills during a medical crisis simulation and (2) to understand and enact the various roles of individuals on a code team. We used learning objectives to drive motivation for the simulation experience. However, the primary aim of the study was not to quantifiably measure achievement of these learning objectives, but to assess feasibility and acceptability.

The facilitator then played a video example of a team managing a medical crisis, which highlighted effective and ineffective communication styles. After a video debrief, we randomly assigned participants to one of three roles: compressor, drug administrator, and team leader/defibrillator [13]. The facilitator described these roles to the students, providing examples of verbalized actions that each role can perform. For example, the compressor would communicate the rate of compressions they planned to administer and would simulate compressions with their holographic hand via their controller. The drug administrator would describe the dose and medication type they planned to administer. These verbal, closed-loop communications aligned with the learning objectives of the simulation.
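The random assignment to groups and roles described above can be illustrated with a short sketch. The source does not describe how randomization was actually performed, so everything here is an assumption made for illustration: the function name `assign_groups_and_roles`, the placeholder participant identifiers, and the fixed seed are all hypothetical.

```python
import random

# Role names are taken from the text; the data layout is an assumption.
ROLES = ["compressor", "drug administrator", "team leader/defibrillator"]


def assign_groups_and_roles(participants, seed=None):
    """Shuffle participants, split them into groups of three, and
    randomly give each group member one of the three roles.

    Hypothetical helper: the study's real randomization procedure
    is not specified in the source.
    """
    group_size = len(ROLES)
    if len(participants) % group_size != 0:
        raise ValueError("participants must divide evenly into groups of three")

    rng = random.Random(seed)  # a seed makes the allocation reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)

    groups = []
    for start in range(0, len(shuffled), group_size):
        members = shuffled[start:start + group_size]
        roles = list(ROLES)
        rng.shuffle(roles)  # randomize roles independently within each group
        groups.append(dict(zip(roles, members)))
    return groups


# Placeholder identifiers for 18 participants (not real study data).
students = [f"S{i:02d}" for i in range(1, 19)]
groups = assign_groups_and_roles(students, seed=2020)
```

With 18 participants this yields six groups, each mapping the three roles to three distinct students, which matches the group structure reported in the study.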

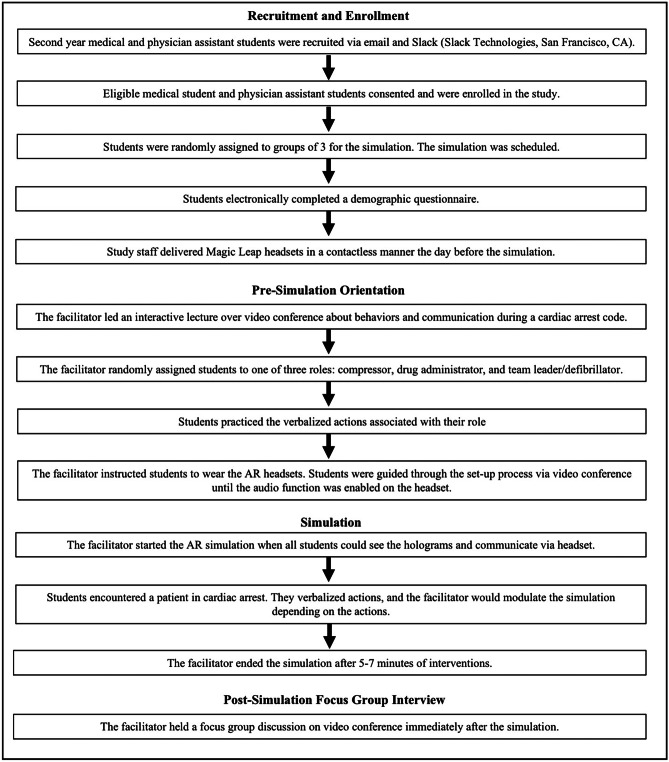

After the participants were oriented to these communication skills and their roles, the simulation commenced (Fig. 2). Students muted their video conference audio and enabled their ML1 headsets, which allowed them to hear and communicate with the other students and study simulation facilitator.

Fig. 2.

Outline of methods used to conduct the simulation and focus groups

AR Simulation Description and Facilitator Response

When students entered the simulation, the patient was apneic in ventricular fibrillation arrest. The facilitator adjusted the holographic patient’s vital signs in real time in response to verbal cues from the students. The simulation finished after the students enacted a series of interventions (approximately 5–7 min), and the students returned to video conference for the focus group discussion. The primary learning objective of the simulation was to practice closed-loop communication skills within each role. Participants practiced delivering instructions, acknowledging receipt of information, clarifying if necessary, and confirming their resulting action with the team. Return of spontaneous circulation was achieved after commencement of verbalized chest compressions, one dose of epinephrine, and two defibrillations after recognition of the arrhythmia. The code leader had to rely on the other two participants to verbalize their actions.
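The scenario's end condition can be written down as a simple checklist. The following is only an illustrative sketch: in the study the facilitator adjusted the holographic patient manually, and nothing here reflects the CHARM software itself. The action labels, the `REQUIRED` mapping, and both function names are hypothetical.

```python
from collections import Counter

# Minimum verbalized interventions before return of spontaneous circulation,
# as stated in the scenario description. The labels are hypothetical.
REQUIRED = {"compressions": 1, "epinephrine": 1, "defibrillation": 2}


def missing_interventions(verbalized_actions):
    """Return how many of each required intervention are still outstanding."""
    counts = Counter(verbalized_actions)
    return {
        action: needed - counts[action]
        for action, needed in REQUIRED.items()
        if counts[action] < needed
    }


def rosc_criteria_met(verbalized_actions):
    """True once every required intervention has been verbalized often enough."""
    return not missing_interventions(verbalized_actions)


# Example log of actions the team has verbalized so far (made-up data).
log = ["compressions", "defibrillation", "epinephrine"]
outstanding = missing_interventions(log)   # one defibrillation still needed
log.append("defibrillation")
done = rosc_criteria_met(log)
```

A checklist of this kind only tracks whether the interventions were verbalized; it does not capture the closed-loop communication quality that the learning objectives targeted.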

Debrief and Focus Group

A debrief was conducted after the simulation to review the learning objectives and provide additional context on medical crisis scenarios in the hospital setting. Students were taught how to verbalize drug doses or shock administration in the orientation session, and these decisions were discussed in greater detail during the debrief. The focus group consisted of open-ended questions from the previously created guide, with probes to further clarify ambiguous statements or response inconsistencies. Each focus group consisted of the students who had just completed the simulation.

Data Processing

Two study investigators independently read a random sample of two transcripts to identify preliminary topics to analyze [13]. After this, the two investigators compared topics for similarities and redundancies. We then defined each code with representative quotations (Table 2). Next, three study staff independently analyzed the six transcripts, with each individual coding four different transcripts so that each transcript was coded by two individuals. This was an iterative process, in which codes were added or adapted based upon content that arose in transcripts.
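The coding workload described above is internally consistent: three coders each coding four transcripts gives 12 coding passes, which is exactly two passes for each of six transcripts. One balanced allocation that satisfies these constraints can be sketched as follows. The source does not state which coder received which transcript, so the rotation scheme, the coder and transcript labels, and the function names are assumptions.

```python
from itertools import combinations, cycle


def assign_coders(transcripts, coders, coders_per_transcript=2):
    """Rotate through every pair of coders so that workload stays balanced.

    Hypothetical allocation scheme; the study's actual allocation
    is not reported in the source.
    """
    pairs = cycle(combinations(coders, coders_per_transcript))
    return {transcript: next(pairs) for transcript in transcripts}


def workload(assignment):
    """Count how many transcripts each coder was given."""
    counts = {}
    for pair in assignment.values():
        for coder in pair:
            counts[coder] = counts.get(coder, 0) + 1
    return counts


# Placeholder labels (not real study identifiers).
transcripts = [f"T{i}" for i in range(1, 7)]
coders = ["coder_A", "coder_B", "coder_C"]

assignment = assign_coders(transcripts, coders)
load = workload(assignment)
```

Because three coders form exactly three distinct pairs, cycling through the pairs twice covers all six transcripts and gives every coder four transcripts.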

Table 2.

Focus group domains, defining themes, and representative transcript excerpts

| Domain | Definition | Representative statements |
|---|---|---|
| Experiential satisfaction | Overall impression of the simulation, particularly emotional valence and level of satisfaction | “I agree. It’s [AR] a super fun experience, honestly. Great way to learn, it’s fun.” “I thought it [AR] was really fun.” “I felt very excited, especially after the whole stimulation. My heart was kind of pumping and racing.” “It [AR] was very exciting. And you feel like a sense of responsibility with what you’re doing.” “I felt like it was really immersive. There’s a level of emotional excitement when you’re simulating something, so it makes the whole situation feel a little bit more intense.” |
| Learning engagement | Quality of the learning experience, including the perception of skills that were practiced and the realism of the scenario | “Doing it this way just seemed a lot more higher stakes. I could see the person dying. I think this is a great way to learn material.” “The components of a real-life situation with the beeping of the monitor added pressure and made it more emotionally realistic.” “It’s very different seeing a patient start to seize, instead of just reading it. And I think that was a huge thing. That’s a disconnect between book learning and clinical learning.” “I really liked that we could see the vitals change. I like that because it felt less of an exercise in imagination.” “I think like in terms of practicing communication, I think it definitely was more stressful than if we were just sitting in a room talking through the scenario. So definitely felt a little more intense which is probably good for practice” “I also liked the teamwork aspect of it. It’s difficult, I think, to really capture that in a setting, which is not emergency or not acute. And so having put us into a sort of stressful situation where something happened, which we weren’t expecting, was a good way to really engage our teamwork skills and to make sure or to assess if we can keep calm and work as a team, even though things are changing out of our control.” “I could almost smell the patient. I think it just took the educational material to the next level.” |
| Technology learning curve | Assessment of the feasibility, novelty, and ease of use of the software and hardware | “There’s fluency with the technology that only comes with using it.” “I think that there is a little bit of just startup cost to kind of understanding how to use the technology itself. And ways that could help with that.” “The setup was a bit challenging.” “It’s definitely not like anything I’ve done before.” |
| Opportunities for improvement | Areas where the simulation could benefit from added or modified features | “Maybe a syringe table or just a few more features in the room to make it feel like slightly more realistic.” “Maybe having something to hold in your hand…you could actually feel tired doing compressions…picking up a fake syringe would make it a bit more real.” “I think that I would have liked to see physical things. So instead of verbalizing actions, but instead physically placing defibrillator pads.” |

Data Analysis

One study staff member who had participated in transcription performed thematic analysis using all six transcripts. Two other individuals who had participated in coding review modified these findings until consensus was reached between all three individuals. Our qualitative analysis methods also mirror those used in other medical simulation–based studies that relied on post-simulation interview data [14–18].

Techniques to Enhance Trustworthiness

After the thematic analysis, we presented two study participants from two of the groups with the results for member-checking. The participants provided independent agreement that the results represented their sentiments.

Results

Participants

Eighteen medical and physician assistant students participated in one of six groups of three students each. Nearly 90% of students had previously received basic life support training. Fewer than half of the participants had previous exposure to AR, and 44% of participants reported previously receiving training on communication skills during resuscitation (Table 1).

Focus Group Findings

Across six simulations, sessions typically lasted 60 to 90 min, with approximately 30 min devoted to the post-simulation focus group. Thematic analysis indicated four domains: experiential satisfaction, learning engagement, technology learning curve, and opportunities for improvement (Table 2).

Experiential Satisfaction

Participants consistently expressed satisfaction with their experience, describing the simulation as “engaging” and “fun.” They frequently noted the positive emotion evoked by the simulation, and how these feelings made the learning experience both enjoyable and effective. Participants felt that their emotional response stemmed from the immersive nature of the AR. Several participants mentioned feeling an elevated heart rate or sweating in response to the simulation. One participant reported the experience made them feel like they could smell the patient. Immersivity was also evidenced by the fact that participants were in their own residences and reported forgetting they were still there. Participants noted that the stress and pressure they felt during the simulation were beneficial for their experience, and some thought that increasing it further would improve the experience. Finally, participants reflected upon the monotony of socially distanced learning during the pandemic and felt this clinical simulation made learning feel fun again.

Learning Engagement

Participants reported that their emotional reactions promoted learning and that they believed this would enhance their memory of events during a future medical crisis. They also reported that the sense of urgency in the scenario augmented their engagement and fostered strong team communication. Students averred that their intellectual engagement had suffered during distance learning, so they found practicing communication to be even more important than usual. They felt that AR was a valuable tool for practicing such communication.

Many participants reported that the distanced AR simulation disallowed nonverbal cues between participants, which elevated the importance of verbal communication. While some noted that this made completing their roles more challenging in the simulation, most participants felt it was beneficial to be pushed to rely on effective verbal communication. Participants noted that isolating this one type of communication would not be practical without this technology.

Participants consistently reported that the AR patient realism and real-time vital sign modulation enhanced their learning compared to other distance learning modalities that they have experienced. Specifically, many participants felt it was preferable to watching videos or completing live remote sessions facilitated by professors. Some students previously completed experiences in which their responses to prompts by an instructor would result in changes on a monitor shared by the instructor over video conference. Though they had used similar verbal cues in such an experience, participants felt that it was not as engaging or effective as the AR simulation. They emphasized that the immersivity of the AR simulation was a key difference that enhanced their learning. Students also highlighted that the adaptability of the scenario was important, and they felt that their learning benefitted from the real-time vital sign modulation. Participants compared the simulation positively to in-person simulation, as they reported the simulation seeming more realistic than a static mannequin in the simulation center.

Technology Learning Curve

Technology learning curve was defined as difficulties encountered during the simulation due to inexperience with the hardware or software. There was a learning curve to using the technology, even for participants who had previously used AR. Some challenges were hardware-specific, and others were related to the software. Participants felt that the challenges could be overcome with practice but that they made the initial simulation more difficult. Some participants reported technological difficulties that were preventable with experience, such as being unable to project the holograms in a reasonable location. For example, the hospital bed was not anchored to the floor or was rendered at an unrealistic size, and participants were unsure how to resize and move the holographic assets. Some participants also reported not knowing what to expect when entering the simulation and felt confused or disoriented during the set-up process, which included using the trackpad on the AR controller and modifying the volume on the headset. Participants generally felt that the positive aspects of their experience outweighed the technological challenges.

Opportunities for Improvement

Some students reported that it would be useful to introduce the simulation design and controls to them in greater depth before starting the simulation. Almost all students stated that additional holograms would improve the simulation realism, including a medication tray with syringes, a defibrillator, and extra healthcare providers in the room. Other students suggested adding real-time projections with decision algorithms to aid clinical decisions. Some students recommended gamifying the simulation for increased enjoyment. Many students felt that supplementing the hologram with a mannequin or physical equipment could further improve their experience.

Discussion

This qualitative study investigated medical and physician assistant student experiences while piloting a novel, socially distanced, AR medical simulator. Core focus group domains included discussion around experiential satisfaction, learning engagement, technology learning curve, and opportunities for improvement. The collective responses from each of these domains suggest that the AR simulator was an engaging and enjoyable way for medical trainees to learn communication skills, although there were some technical challenges and aspects of the simulation that would benefit from further refinement. This study is a proof of concept that medical AR simulation can feasibly be deployed in a socially distanced manner as an additional method of remote learning.

Medical trainees across all simulation groups conveyed that traditional remote learning lacked engagement and experiential satisfaction, which is similar to previously reported findings [19]. Although online pedagogy has replaced in-person learning for many institutions’ preclinical curriculums, a hybrid learning model may persist in the post-pandemic period [20, 21]. The CHARM simulator was well-received by preclinical students, with many citing it as the most engaging remote learning activity in which they have participated since transitioning to online coursework, and they reported it was effective in promoting content understanding. As one of the first multiplayer, distance AR applications for medical trainees, this study presents a promising and realistic learning modality that leverages recent technological advances. Exposure to technology for medical trainees has become increasingly critical due to the rapid expansion of telemedicine and adoption of advanced digital health tools for clinical care [22, 23].

Students consistently reported feeling emotional arousal during the simulation, referring to the “intensity” and “pressure” they felt when making decisions. In prior research, preclinical students randomized to a high-anxiety medical simulation performed better on a written competency test 6 months later when compared to those in a less stressful situation [24]. Additionally, emotional arousal in the form of mild to moderate stress can increase retention [25, 26]. Anesthesiology residents found that high-fidelity simulation could be anxiety-provoking, but residents reported feeling equipped to provide emergent care and practice safely in high-intensity situations [27]. These previously published studies and our novel findings together demonstrate the importance of realistic, immersive simulations to create genuine excitement and stress in students. This also supports the development of additional holographic assets, such as a defibrillator or medications, to enhance the fidelity of the AR simulation.

In addition to its standalone use with holographic features only, the CHARM software and other AR technologies can be supplemented with physical models when in-person learning is feasible. For example, a chest task trainer may be positioned into the holographic patient to provide chest compressions. Participants in this study supported supplementing the holograms with real-world equipment, and this mixed reality technique has obtained positive feedback in other settings [28–31]. Additionally, participant feedback from this study suggested that designing responsive prompts to guide students’ clinical decision-making would enhance both supplemented mixed reality and pure AR simulations. These enhancements could also leverage the integrated eye tracking software in the ML1 headsets to guide students to attend to the most important stimuli during a cardiac arrest simulation.

This study included several limitations. First, the fidelity of avatars would benefit from enhanced participant discrimination given that all the avatars look the same, and the lack of tangible interaction reduces its utility for technical skill advancement. This also raises the question of the importance of non-verbal cues in an emergency given that the avatars do not reflect more nuanced maneuvers or body language. Second, though technological learning is more scalable than in-person simulations, the cost of AR headsets can be an impediment. Third, the learning curve of the technology requires priming of participants, though exposure to such technology is beneficial for medical trainees given the role these technologies are likely to play in the future of healthcare delivery. Finally, the incompatibility of the headset with glasses and challenges for individuals susceptible to motion sickness may present a barrier to use by some students.

Although the beneficial reduction of cognitive load associated with AR-based visual cues during task performance is less evident in novice users, more experienced users have been found to have enhanced task performance in multiple application domains. This suggests that increased exposure to AR technology may overcome initial cognitive load demand, resulting in more optimal learning [32]. The initial challenges can be reduced with a pre-simulation module that introduces students to the simulation and hardware in greater detail, which may also assist in reducing the initial increase in cognitive load associated with using a new technology. Given the small sample size and lack of generalizability inherent in qualitative methods, controlled trials with a larger number of participants are warranted. Finally, as is common for in situ simulations at our institution, the same facilitator conducted the debrief. This introduces the possibility of social desirability bias into our study, although this is reduced by the fact that the facilitator had no supervising role over participants.

Conclusion

Medical and physician assistant students reported positive experiences with the CHARM simulator and felt that it was an engaging, effective way to conduct socially distanced learning. This qualitative, proof-of-concept study supports the use of multiplayer AR medical simulation technology as a feasible method of remote instruction for medical trainees. Future studies should quantify learning outcomes and compare distance AR simulations to traditional modalities.

Declarations

Ethical Approval

Ethical approval was granted by the Stanford University Institutional Review Board, #55657.

Competing Interests

TJ Caruso, E Wang, and S Rodriguez are on the board of directors for Invincikids, a federal, tax-exempt, non-profit organization that seeks to train providers to use technologies in healthcare and to distribute technologies to sick children. They receive no realized or potential financial benefit from their board positions or the results of this study. On behalf of all authors, the corresponding author states that there is no conflict of interest.

Disclaimers

None.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Previous Presentation

None.

Jimmy Qian contributed as co-first author and Nick Haber contributed as a co-senior author.

References

- 1. Tang KS, Cheng DL, Mi E, Greenberg PB. Augmented reality in medical education: a systematic review. Can Med Ed J. 2020;11:e81–e96. doi: 10.36834/cmej.61705.

- 2. Barsom EZ, Graafland M, Schijven MP. Systematic review on the effectiveness of augmented reality applications in medical training. Surg Endosc. 2016;30(10):4174–4183. doi: 10.1007/s00464-016-4800-6.

- 3. Moro C, Štromberga Z, Raikos A, Stirling A. The effectiveness of virtual and augmented reality in health sciences and medical anatomy. Anat Sci Educ. 2017;10(6):549–559. doi: 10.1002/ase.1696.

- 4. Remtulla R. The present and future applications of technology in adapting medical education amidst the COVID-19 pandemic. JMIR Med Educ. 2020;6(2):e20190. doi: 10.2196/20190.

- 5. Kamphuis C, Barsom E, Schijven M, Christoph N. Augmented reality in medical education? Perspect Med Educ. 2014;3(4):300–311. doi: 10.1007/s40037-013-0107-7.

- 6. Zhu E, Lilienthal A, Shluzas LA, et al. Design of mobile augmented reality in health care education: a theory-driven framework. JMIR Med Educ. 2015;1(2):e10. doi: 10.2196/mededu.4443.

- 7. Cook DA, Ellaway RH. Evaluating technology-enhanced learning: a comprehensive framework. Med Teach. 2015;37(10):961–970. doi: 10.3109/0142159X.2015.1009024.

- 8. O'Brien BC, Harris IB, Beckman TJ, et al. Standards for reporting qualitative research: a synthesis of recommendations. Acad Med. 2014;89(9):1245–1251. doi: 10.1097/ACM.0000000000000388.

- 9. Guest G, Namey E, Chen M. A simple method to assess and report thematic saturation in qualitative research. PLoS ONE. 2020;15(5):e0232076. doi: 10.1371/journal.pone.0232076.

- 10. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap) – a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–381. doi: 10.1016/j.jbi.2008.08.010.

- 11. Harris PA, Taylor R, Minor BL, et al. The REDCap consortium: building an international community of software partners. J Biomed Inform. 2019;95:103208. doi: 10.1016/j.jbi.2019.103208.

- 12. Creutzfeldt J, Hedman L, Felländer-Tsai L. Cardiopulmonary resuscitation training by avatars: a qualitative study of medical students’ experiences using a multiplayer virtual world. JMIR Serious Games. 2016;4(2):e22. doi: 10.2196/games.6448.

- 13. Suresh K. An overview of randomization techniques: an unbiased assessment of outcome in clinical research. J Hum Reprod Sci. 2011;4(1):8–11. doi: 10.4103/0974-1208.82352. [Retracted]

- 14. Kumar A, Wallace EM, East C, et al. Interprofessional simulation-based education for medical and midwifery students: a qualitative study. Clin Simul Nurs. 2017;13(5):217–227. doi: 10.1016/j.ecns.2017.01.010.

- 15. Isaza-Restrepo A, Gómez MT, Cifuentes G, Argüello A. The virtual patient as a learning tool: a mixed quantitative qualitative study. BMC Med Educ. 2018;18(1):297. doi: 10.1186/s12909-018-1395-8.

- 16. Ballangrud R, Hall-Lord ML, Persenius M, Hedelin B. Intensive care nurses’ perceptions of simulation-based team training for building patient safety in intensive care: a descriptive qualitative study. Intensive Crit Care Nurs. 2014;30(4):179–187. doi: 10.1016/j.iccn.2014.03.002.

- 17. Weller JM, Janssen AL, Merry AF, Robinson B. Interdisciplinary team interactions: a qualitative study of perceptions of team function in simulated anaesthesia crises. Med Educ. 2008;42(4):382–388. doi: 10.1111/j.1365-2923.2007.02971.x.

- 18. Sørensen JL, Navne LE, Martin HM, et al. Clarifying the learning experiences of healthcare professionals with in situ and off-site simulation-based medical education: a qualitative study. BMJ Open. 2015;5:e008345. doi: 10.1136/bmjopen-2015-008345.

- 19. Rajab MH, Gazal AM, Alkattan K. Challenges to online medical education during the Covid-19 pandemic. Cureus. 2020;12(7):e8966. doi: 10.7759/cureus.8966.

- 20. Shetty S, Shilpa C, Dey D, Kavya S. Academic crisis during COVID 19: online classes, a panacea for imminent doctors. Indian J Otolaryngol Head Neck Surg. 2020;1–5.

- 21. Gaur U, Majumder MAA, Sa B, Sarkar S, Williams A, Singh K. Challenges and opportunities of preclinical medical education: COVID-19 crisis and beyond. SN Compr Clin Med. 2020;1–6.

- 22. Chandrashekar P. A digital health preclinical requirement for medical students. Acad Med. 2019;94(6):749. doi: 10.1097/ACM.0000000000002685.

- 23. Poncette AS, Glauert DL, Mosch L, Braune K, Balzer F, Back DA. Undergraduate medical competencies in digital health and curricular module development: mixed methods study. J Med Internet Res. 2020;22(10):e22161. doi: 10.2196/22161.

- 24. DeMaria S, Jr, Bryson EO, Mooney TJ, et al. Adding emotional stressors to training in simulated cardiopulmonary arrest enhances participant performance. Med Educ. 2010;44(10):1006–1015. doi: 10.1111/j.1365-2923.2010.03775.x.

- 25. Wood R, Kakebeeke B, Debowski S, Frese M. The impact of enactive exploration on intrinsic motivation, strategy, and performance in electronic search. Appl Psychol. 2000;49(2):263–283. doi: 10.1111/1464-0597.00014.

- 26. Simons PRJ, Jong FPD. Self-regulation and computer-aided instruction. Appl Psychol. 1992;41(4):333–346. doi: 10.1111/j.1464-0597.1992.tb00710.x.

- 27. Price JW, Price JR, Pratt DD, Collins JB, McDonald J. High-fidelity simulation in anesthesiology training: a survey of Canadian anesthesiology residents’ simulator experience. Can J Anaesth. 2010;57(2):134–142. doi: 10.1007/s12630-009-9224-5.

- 28. Samosky JT, Wang B, Nelson DA, et al. BodyWindows: enhancing a mannequin with projective augmented reality for exploring anatomy, physiology and medical procedures. Stud Health Technol Inform. 2012;173:433–439.

- 29. Sutherland C, Hashtrudi-Zaad K, Sellens R, Abolmaesumi P, Mousavi P. An augmented reality haptic training simulator for spinal needle procedures. IEEE Trans Biomed Eng. 2013;60(11):3009–3018. doi: 10.1109/TBME.2012.2236091.

- 30. Rochlen LR, Levine R, Tait AR. First-person point-of-view-augmented reality for central line insertion training: a usability and feasibility study. Simul Healthc. 2017;12(1):57–62. doi: 10.1097/SIH.0000000000000185.

- 31. Samosky JT, Nelson DA, Wang B, et al. BodyExplorerAR: enhancing a mannequin medical simulator with sensing and projective augmented reality for exploring dynamic anatomy and physiology. In: Proceedings of the Sixth International Conference on Tangible, Embedded and Embodied Interaction: ACM;2012:263–270.

- 32. Jeffri NFS, Awang Rambli DR. A review of augmented reality systems and their effects on mental workload and task performance. Heliyon. 2021;7(3):e06277. doi: 10.1016/j.heliyon.2021.e06277.
