Abstract

Background

Researchers conducting cohort studies may wish to investigate the effect of episodes of COVID-19 illness on participants. A definitive diagnosis of COVID-19 is not always available, so studies have to rely on proxy indicators. This paper seeks to contribute evidence that may assist the use and interpretation of these COVID-indicators.

Methods

We described five potential COVID-indicators: self-reported core symptoms; a symptom algorithm; self-reported suspicion of COVID-19; self-reported external results; and home antibody testing based on a 'lateral flow' antibody (IgG/IgM) test cassette. Included were staff and postgraduate research students at a large London university who volunteered for the study and were living in the UK in June 2020. Excluded were those who did not return a valid antibody test result. We provide descriptive statistics of prevalence and overlap of the five indicators.

Results

Core symptoms were the most common COVID-indicator (770/1882 participants positive, 41%), followed by suspicion of COVID-19 (n = 509/1882, 27%), a positive symptom algorithm (n = 298/1882, 16%), study antibody lateral flow positive (n = 124/1882, 7%) and a positive external test result (n = 39/1882, 2%), thus a 20-fold difference between least and most common. Meeting any one indicator increased the likelihood of all others, with concordance between 60 and 94%. Report of a low suspicion of having had COVID-19 predicted a negative antibody test in 98%, but positive suspicion predicted a positive antibody test in only 20%. Those who reported previous external antibody tests were more likely to have received a positive result from the external test (24%) than the study test (15%).

Conclusions

Our results support the use of proxy indicators of past COVID-19, with the caveat that none is perfect. Differences from previous antibody studies, most significantly in lower proportions of participants positive for antibodies, may be partly due to a decline in antibody detection over time. Subsequent to our study, vaccination may have further complicated the interpretation of COVID-indicators, only strengthening the need to critically evaluate what criteria should be used to define COVID-19 cases when designing studies and interpreting study results.

Supplementary Information

The online version contains supplementary material available at 10.1186/s12889-022-13889-0.

Keywords: COVID-19, Cohort studies, Public health, Classification, COVID-19 serological testing

Introduction

The vast majority of people who experience a COVID-19 illness will not require hospitalisation for that illness but stay in the community [1–3]. Proportions of people requiring hospitalisation vary over time and place, and rely on getting an accurate incidence of COVID-19, but one modelling study estimated that only 2% of people with COVID-19 were admitted to hospital in the first wave of COVID-19 in the UK [4]. Research is urgently needed about medium and long-term outcomes of cases outside the hospital, particularly so-called "long COVID" [5–10]. In hospitals, COVID-19 status is determined using clinical assessment and investigations, particularly antigen detection by polymerase chain reaction (PCR) on nasopharyngeal swab samples and lung imaging (usually Computed Tomography, CT); therefore, hospital-based cohorts can have a strong basis for COVID-19 diagnosis [11]. In community settings, particularly during the first wave of COVID-19, such information was often unavailable, [4] and may continue to be so in resource-limited environments. As investigations for COVID-19 are time-sensitive, participants in cohort studies may have missed the window in which a definitive diagnosis might be made. Since there are no "gold standard" methods by which community-based studies can distinguish between cases and controls, researchers have had to rely on proxy indicators of COVID-19. Despite guidance on clinical tests for COVID-19, [11–15] there is little evidence aimed explicitly at choosing and interpreting proxy indicators of past COVID-19 infection in research contexts.

Potential indicators of past COVID-19 infection (COVID-indicators) include self-report – for instance, an individual’s belief they have had COVID-19, symptoms they recall and test results they report. These are all dependent on participant recall. Some variables may also be available through electronic health records, which are not prone to recall effects but bias case-finding towards those in contact with health services during the pandemic [16, 17]. To provide an objective measure for past infection, it is possible to detect antibodies to SARS-CoV-2 (the virus that causes COVID-19) in blood samples, but it is not yet clear how long these antibodies remain detectable [18]. This paper describes concordance between proxy COVID-indicators, both self-report and antibody, in a cohort study of staff and postgraduate research students (PGRs) of a university in London, United Kingdom (UK) [19] during the first wave of the pandemic (winter-spring 2020). The aim is to provide evidence to inform the design and the interpretation of future studies of COVID-19 in non-hospitalised participants.

Methods

This cohort study conforms to The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) reporting guidelines, [20] documented in appendix 1 of supplementary materials. Ethical approval was granted by King's Psychiatry, Nursing and Midwifery Research Ethics Committee (HR-19/20–18,247) and research was performed in accordance with the Declaration of Helsinki.

Setting

The King's College London Coronavirus Health and Experiences of Colleagues at King's (KCL CHECK) study explores the health and wellbeing outcomes of the COVID-19 pandemic on staff and PGRs. A protocol is available [19]. Briefly, on 16 April 2020, all KCL staff and PGRs were invited via email and internal social media to participate in an online survey (‘the baseline survey’). The survey was open for enrolment for two weeks. Participants provided informed consent for their data to be used internally and for research purposes, and were given the opportunity to opt in to follow-up surveys (surveys every two months and shorter surveys every two weeks) and antibody testing.

Participants

All KCL CHECK participants who consented to follow-up and gave a valid UK address were sent a test kit. Participants residing outside the UK in June 2020 were excluded for logistical reasons. Participants were included in this analysis if they returned a valid antibody test result by 13th July 2020.

Data collection

Table 1 shows the schedule for follow-up surveys, with the first referred to as Period 1 (P1). Questions in the baseline and longer follow-up surveys asked about experiences in the last two months (e.g. P4); questions in the shorter fortnightly surveys referred to the last two weeks. This analysis reports data from surveys at P0 (baseline) to P5, which took place between April and June 2020.

Table 1.

Periods of data collection for KCL CHECK to week 18 (April – Aug 2020)

| Timepoint (data collection period) | P0 (Baseline) | P1 | P2 | P3 | P4 | P5 | P6 | P7 | P8 |
|---|---|---|---|---|---|---|---|---|---|
| Week of study | 1–3 | 3–4 | 5–6 | 7–8 | 9–10 | 11–12 | 13–14 | 15–16 | 17–18 |
| Month | April | May | May | June | June | June | July | July | Aug |
| Long survey ("In the last two months") | x | | | | x | | | | x |
| Short survey ("In the last two weeks") | | x | x | x | | x | x | x | |
| Antibody test | | | | | | x | | | |

The antibody test provided was the SureScreen Diagnostics Rapid COVID-19 IgG/IgM Immunoassay Test Cassette, which detects antibodies to the 'spike' protein of SARS-CoV-2. The performance of this test (under laboratory conditions) has been shown to be good. For example, using samples from 268 keyworkers who self-reported positive COVID-19 antigen/PCR tests and 1,995 historical samples, it had 94.0% sensitivity and 97.0% specificity, also showing 96.3% agreement with the SARS-CoV-2 spike antibody enzyme-linked immunosorbent assay (ELISA) result for 2,847 keyworkers [21]. An internal pilot demonstrated that participants could use the test cassette without specific training [22]. We developed our procedure and detailed illustrated instructions following pilot feedback, shown in supplementary material appendix 2. In late June 2020, the test kit was posted to participants, including the test cassette and a lancet for providing a blood spot. Participants uploaded a photograph of their result to a secure server. Participants were asked to email the team if they had difficulties; the team answered within two working days and could arrange for a replacement kit (sent in early July 2020).

Deriving COVID-indicators

Self-reported COVID-indicators were measured at baseline and follow-up surveys as follows.

- Suspicion of COVID-19 illness: At baseline (P0), participants were asked, "Do you think that you have had COVID-19 (coronavirus) at any time? Definitely/Probably/Unsure/No". At P1, P2, P3, and P5, participants were asked, "Do you think that you have had COVID-19 (coronavirus) in the last two weeks?" At P4, participants were asked, "Do you think that you have had COVID-19 (coronavirus) in the last two months?". Positive suspicion was defined as a response of "Definitely" or "Probably" in any survey (P0-P5).

- COVID-19 symptoms: We used a symptom list derived from the ZOE coronavirus daily reporting app (part of the COVID symptom study, [23, 24]), adapted to cover two-month periods (P0 and P4) or two-week periods (P1, P2, P3 and P5) and used to define multiple possible symptom states. (a) Any symptom: Responded to the screening question "How have you felt physically?" with 'Not quite right' rather than 'Normal'. (b) Core symptoms: Any report of 'fever', 'new persistent cough' or 'loss of smell/taste'. (c) Symptom algorithm: The COVID symptom study reported an algorithm (including age, gender, core symptoms of COVID-19, 'severe fatigue' and 'skipped meals') with scores above a cut-off representing a high likelihood of having had COVID-19 [23]. Combining these definitions (a-c), a positive algorithm was considered the most specific category, followed by core symptoms and then any symptoms. An overall symptom category was assigned as the most specific category reached in any survey (P0-P5).

- COVID-19 test results. We asked, "Have you had a test for COVID-19 (coronavirus)?" and "What was the result?" at baseline, repeated at P1, P2, P3, and P5 for the two preceding weeks and at P4 for the preceding two months. We did not ask for any evidence. Those who reported a positive test at any point P0-P5 were defined as positive on external tests. To further differentiate between tests for current infection and tests for previous infection, at P8, we asked about different types of tests. Those who endorsed having a "blood/blood spot test to look for evidence of past infection" (excluding the test they had received through the KCL CHECK study) were allocated 'external antibody test'. Those who had reported a test in P0-P5 and endorsed "swab of the throat and/or nose to look for infection" or who did not report at P8 were allocated 'antigen/PCR test'.

Because each indicator is summarised over time as positive if ever met and negative in all other cases, missing values caused by participants not completing a survey were treated as a negative result at that time.
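The summarisation rule described above can be illustrated with a minimal sketch. This is not the study's own code (the analysis was run in R); the function names, the category labels and the example participant are hypothetical and are used only to show the "ever positive" rule and the choice of the most specific symptom category, with missing surveys treated as negative.

```python
from typing import Optional, Sequence

# Symptom categories ordered from least to most specific (labels are
# illustrative assumptions, not variable names from the study).
SYMPTOM_ORDER = ["none", "any", "core", "algorithm"]


def ever_positive(responses: Sequence[Optional[bool]]) -> bool:
    """Return True if the indicator was met in any survey period.

    A survey that was not completed (None) counts as negative for that period.
    """
    return any(r is True for r in responses)


def most_specific_symptom(levels: Sequence[Optional[str]]) -> str:
    """Return the most specific symptom category reached in any period."""
    reached = [SYMPTOM_ORDER.index(lv) for lv in levels if lv is not None]
    return SYMPTOM_ORDER[max(reached)] if reached else "none"


# Hypothetical participant, periods P0..P5, with P1 and P2 not completed.
suspicion = [False, None, None, True, False, False]
symptoms = ["any", None, None, "core", "none", "any"]

print(ever_positive(suspicion))         # True
print(most_specific_symptom(symptoms))  # core
```

Under this rule a participant who skipped every follow-up survey is classified from the baseline response alone, which is why incomplete follow-up can only lower, never raise, the apparent prevalence of a self-reported indicator.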

Antibody result: Results of KCL CHECK home antibody testing for spike IgM or IgG, using the SureScreen Diagnostics Rapid COVID-19 Immunoassay Test Cassette, were adjudicated by the KCL CHECK team based on an uploaded photograph, as explained in a previous paper [22] and illustrated in supplementary material appendix 2. Extraction of these results took place on 13 July and included all photos uploaded by this date.

Participant characteristics

All characteristics were self-reported in the baseline survey. Ethnicity was asked using recommended wording from the Office for National Statistics with 18 groups, [24] and is reported in grouped categories because of small numbers in some of the 18 ethnic groups. Participants were also asked whether they were “key workers” based on government definitions of essential workers. Population characteristics of all staff and PGRs at King’s College London were obtained from KCL administrative sources to describe sample representativeness.

Analysis

Datasets from each period (P0-5 and P8) and antibody testing were merged using R 4.0.0 and associated packages [25–28]. We summarised participation and missing data. Overlap of the indicators was explored through descriptive analyses and figures. Concordance between pairs of indicators is the proportion of participants in whom both indicators agree (both positive or both negative). Additionally, we compared self-report indicators to the KCL CHECK antibody tests using sensitivity (proportion of antibody positive participants also identified by indicator) and specificity (proportion of antibody negative participants also negative by indicator). However, the use of these statistics does not imply that we regard the antibody test results as a gold-standard for defining past COVID-19 cases. We give proportions to the nearest percentage point, unless under 1%, with 95% confidence intervals calculated using Wilson's method.
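As an illustration of the interval method named above, the following is a minimal Python sketch of the Wilson score interval (without continuity correction). It is not the code used for the analysis, which was run in R; the function name is an assumption, and the counts are taken from the Results.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, n: int, z: float = 1.959964) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (no continuity correction).

    z defaults to the standard normal quantile for a 95% interval.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z ** 2 / n
    centre = (p + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return centre - half_width, centre + half_width


# Study antibody test: 124 positive out of 1882 participants.
low, high = wilson_interval(124, 1882)
print(f"{124 / 1882:.0%} (95% CI {low:.0%} to {high:.0%})")
```

For 124/1882 this gives an interval of roughly 5.6% to 7.8%, which rounds to the 6–8% reported for the study antibody test.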

Results

Participants

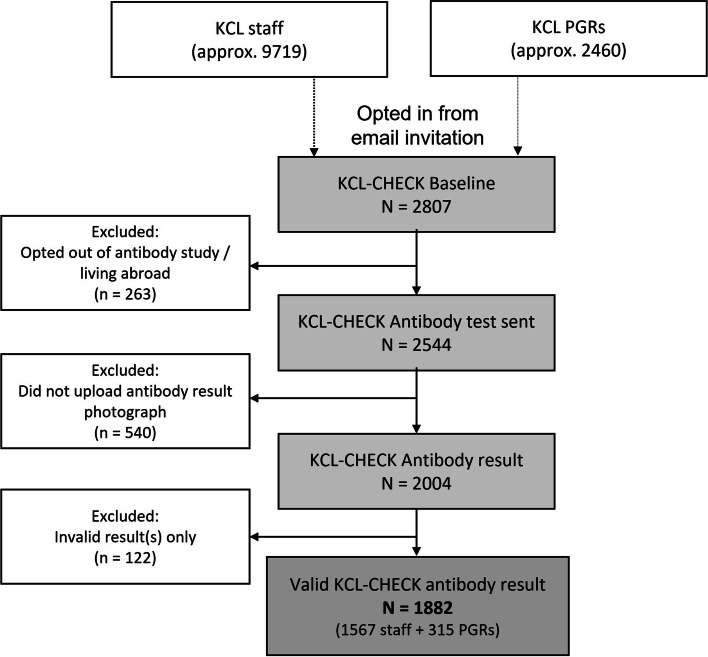

Out of approximately 9719 staff and 2460 PGRs in KCL, 2807 (23%) volunteered for KCL CHECK (see Fig. 1). A total of 2544 participants who consented to the longitudinal study and antibody testing, and who were residing in the UK, received a testing kit. Excluding those who did not return a valid result left 1882 participants for analysis. Table ST1 in supplementary materials shows these exclusions did not greatly affect the composition of the sample.

Fig. 1.

Study flowchart

Sample characteristics

In the analytical sample (n = 1882) 88% identified as being from a White ethnic background, 71% identified as female and 13% as keyworkers, with a median age of 37 years. Compared to the characteristics of the university staff (Table ST2 in supplementary materials) and PGRs (Table ST3 in supplementary materials), White and female people were over-represented in our sample.

The antibody test was sent to participants 10–12 weeks after they completed their baseline survey. Table 1 shows five opportunities to complete follow-up surveys in this time. Of the sample, 98% completed at least one survey and 68% completed all five. A total of 1687 (90%) participants took part in the first two-month survey (P4), which covered eight of the 10–12 weeks that elapsed. We considered these participants in a secondary analysis to see whether more complete reporting would alter the results. Prevalence and overlap of COVID-indicators were similar in the two cohorts, so the larger cohort is reported.

Prevalence of COVID-indicators

Table 2 shows the prevalence of COVID-indicators in our sample. Of 1882 participants, 124 (7%, 95% confidence interval [CI] 6–8%) tested positive on the study antibody test. Core symptoms were reported by 770/1882 (41%, CI 39–43%); 90% (694/770) of these experienced those symptoms before the baseline survey (April 2020). Of all participants, 298/1882 (16%, CI 14–18%) also met the criteria for the symptom algorithm. Suspicion of having had COVID (probable or definite) was reported by 509/1882 (27%, CI 25–29%); 96% (487/509) of these reported it before baseline. A total of 373 participants reported they had been tested elsewhere (235 antigen test only, 88 antibody test only, 50 both), with 39/1882 (2%, CI 2–3%) reporting at least one positive external test result (six antigen positive, 29 antibody positive, four both). This means that 10 of our 1882 participants (0.5%, CI 0.3–1%) are known to have had a positive antigen test during this wave of COVID-19.

Table 2.

Prevalence and overlap of positive COVID-19 indicators in KCL CHECK (n = 1882)

| | One or more core COVID-19 symptoms reported | Participant thinks they have had COVID-19 | Symptom algorithm positive | KCL CHECK antibody test positive | Reports positive test result from elsewhere |
|---|---|---|---|---|---|
| Overall prevalence | 770/1882, 41% | 509/1882, 27% | 298/1882, 16% | 124/1882, 7% | 39/1882, 2% |
| Number and proportion of column who also have: | | | | | |
| One or more core COVID symptoms reported | | 429 / 509, 84% | 298 / 298, 100% | 106 / 124, 85% | 31 / 39, 79% |
| Participant thinks they have had COVID | 429 / 770, 56% | | 214 / 298, 72% | 101 / 124, 81% | 33 / 39, 85% |
| Symptom algorithm positive | 298 / 770, 39% | 214 / 509, 42% | | 83 / 124, 67% | 25 / 39, 64% |
| KCL CHECK antibody test positive | 106 / 770, 14% | 101 / 509, 20% | 83 / 298, 28% | | 24 / 39, 62% |
| Reports positive test result from elsewhere | 31 / 770, 4% | 33 / 509, 6% | 25 / 298, 8% | 24 / 124, 19% | |

Indicators in order of prevalence in the main cohort

When gender, age and ethnicity are considered (see Table ST4 in supplementary materials), proportions positive on the study antibody test were broadly the same in all groups. However, younger age groups reported core symptoms more often (44% in under-45s, 34% in those aged 45+) and men reported suspicion of COVID-19 illness more often (33% in men, 25% in women). There were no significant results by ethnicity, but given small numbers there was low certainty around the estimates for Asian and other minority ethnic groups.

Overlap between alternative indicators of COVID-19

We found overlap between alternative indicators (Table 2), whereby any indicator being positive increased the likelihood of other indicators being positive. For instance, those with core symptoms had a several-fold higher proportion of positive tests, both study tests (106/770, 14%) and external tests (31/770, 4%), compared to those without core symptoms. Of those who tested positive on the KCL CHECK antibody tests, 85% had experienced core symptoms, 81% thought they had had COVID-19, and 67% met the symptom algorithm. In the KCL CHECK antibody-positive group, 28% reported receiving an external test, and 19% reported at least one positive external test. Concordance of indicator status between pairs of outcomes is shown in supplementary material table ST5. Concordance ranged from 60% for core symptoms and external test to 94% for the KCL CHECK antibody test and external test.
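The concordance range quoted above can be reproduced from the counts in Table 2. The sketch below is illustrative only; the function name and argument names are assumptions, and concordance is taken, as defined in the Analysis section, as the proportion of participants in whom both indicators agree.

```python
def concordance(both_pos: int, a_pos: int, b_pos: int, n: int) -> float:
    """Proportion of participants in whom indicators A and B agree.

    both_pos: positive on both indicators
    a_pos, b_pos: total positive on A and on B respectively
    n: total participants
    """
    a_only = a_pos - both_pos
    b_only = b_pos - both_pos
    both_neg = n - both_pos - a_only - b_only
    return (both_pos + both_neg) / n


N = 1882
# Core symptoms (770 positive) vs external test (39 positive), 31 positive on both.
core_vs_external = concordance(31, 770, 39, N)
# Study antibody test (124 positive) vs external test (39 positive), 24 positive on both.
antibody_vs_external = concordance(24, 124, 39, N)

print(f"{core_vs_external:.0%}")      # 60%
print(f"{antibody_vs_external:.0%}")  # 94%
```

Because concordance counts agreement on negatives as well as positives, pairs of rare indicators (such as the two test-based indicators) agree for most participants simply because most participants are negative on both.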

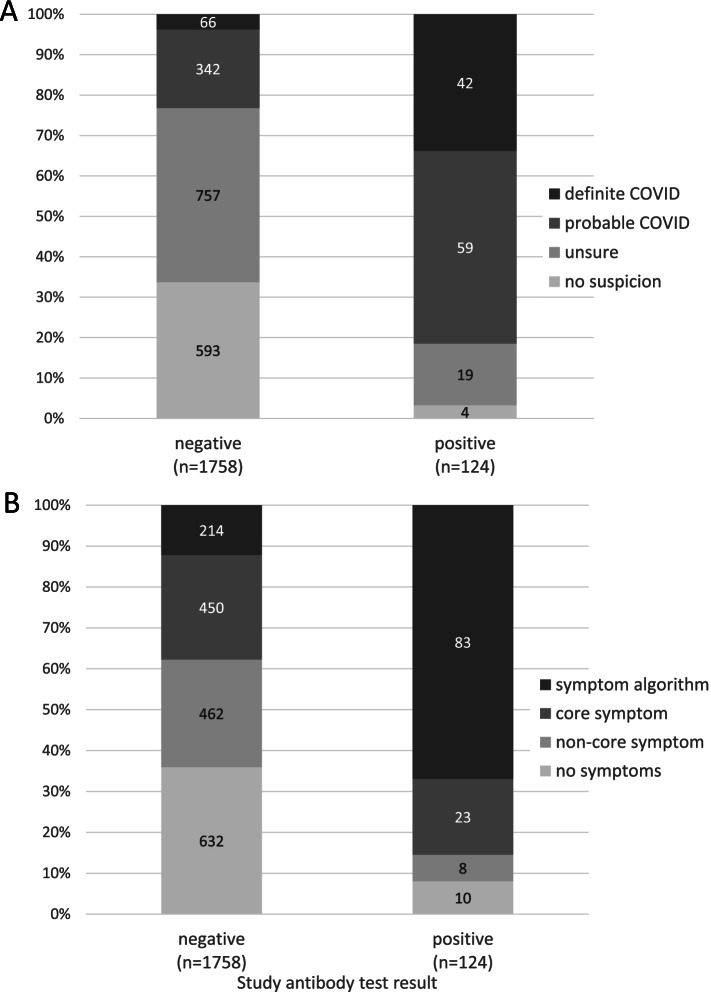

Figure 2A and Table ST6 show that participants who thought they had not experienced COVID-19 were very unlikely to get a positive antibody test result (4/597, 0.7%). Probable or definite suspicion of COVID-19 infection had 81% sensitivity (101/124) and 77% specificity (1350/1758) for the KCL CHECK antibody test. Figure 2B and Table ST7 show that most people who tested positive on the KCL CHECK antibody test were positive on the symptom algorithm, which had 67% sensitivity (83/124) and 88% specificity (1544/1758) for the KCL CHECK antibody test. Core symptoms (including those also algorithm positive) had 85% sensitivity (106/124) and 62% specificity (1094/1758).

Fig. 2.

(A-B) KCL CHECK antibody test result in June by suspicion and symptoms. A. participant suspicion that they had experienced COVID-19; B. highest level of symptoms
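The sensitivity and specificity of suspicion against the study antibody test, together with the corresponding predictive values, follow from a two-by-two table built from the counts reported above. The sketch below is illustrative; the class and attribute names are assumptions, and, as noted in the Analysis section, treating the antibody test as the reference does not imply that it is a gold standard.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    tp: int  # indicator positive, antibody positive
    fp: int  # indicator positive, antibody negative
    fn: int  # indicator negative, antibody positive
    tn: int  # indicator negative, antibody negative

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    @property
    def ppv(self) -> float:
        return self.tp / (self.tp + self.fp)

    @property
    def npv(self) -> float:
        return self.tn / (self.tn + self.fn)


# Probable or definite suspicion (509 positive) vs study antibody test (124 positive),
# with 101 participants positive on both, out of 1882.
suspicion = TwoByTwo(tp=101, fp=509 - 101, fn=124 - 101, tn=1882 - 509 - (124 - 101))

for name in ("sensitivity", "specificity", "ppv", "npv"):
    print(f"{name}: {getattr(suspicion, name):.0%}")
```

With these counts the four quantities come out at about 81%, 77%, 20% and 98%, matching the sensitivity and specificity above and the predictive values quoted in the Abstract.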

Combining the range of symptom and suspicion reports, Table ST8 shows the number of participants at each intersection of symptom and suspicion level. Adding KCL CHECK antibody test results, Table 3 and Table ST9 show the proportion testing positive in each intersect (except where there were fewer than ten participants). Table 3 shows that, among participants at the same symptom level, greater suspicion of having had COVID-19 was associated with a greater proportion antibody positive. Of those who were positive for the symptom algorithm and had definite suspicion, 49% were positive on the KCL CHECK antibody test.

Table 3.

Intersect of suspicion and self-reported symptoms domains, showing the proportion positive on the KCL CHECK antibody test for participants in each intersect (a)

Cells show % KCL CHECK antibody positive.

| Most specific symptoms reported (rows) by highest suspicion reported (columns) | no suspicion (n = 597) | unsure (n = 776) | probable (n = 401) | definite (n = 108) | Totals |
|---|---|---|---|---|---|
| symptom algorithm (n = 298) | NR | 13% | 27% | 49% | 28% |
| core symptoms (n = 472) | 0% | 1% | 8% | 17% | 5% |
| non-core symptoms (n = 470) | 0% | 2% | 6% | NR | 2% |
| no symptoms (n = 642) | 1% | 2% | 4% | NR | 2% |
| Totals | 1% | 2% | 15% | 39% | 7% |

(a) NR: not reported because there were fewer than ten participants in the intersect

Secondary analyses

Table ST10 compares the external antibody test results and KCL CHECK antibody test results for the 138 participants with external antibody test results. Concordance was 88%. Using the external tests would indicate 24% (CI 18–32) of the 138 participants positive for antibodies, but this falls to 15% (CI 10–22) positive on the KCL CHECK antibody test.

Discussion

Ascertaining cases and controls of COVID-19 in the community is challenging [1]. There are no ‘gold-standard’ diagnostic criteria, [11] and for people who have not had the time-sensitive tests, there may be no opportunity to get diagnostic certainty. KCL CHECK used retrospective ascertainment of COVID-19 based on multiple COVID-indicators. Self-reported COVID-indicators included suspicion of COVID-19, symptoms and test results. An objective measure was home antibody testing. The results show that depending on which COVID-indicator is used, there could be a wide range of estimates of COVID-19 illness history. Our cohort had prevalence of COVID-indicators that ranged from 2% (external test positive) to 41% (core symptom of COVID) in June 2020. Self-reported positive external tests were reported by the fewest participants, but given that antigen testing in the community was unavailable during the March 2020 wave of infections (only 285 (15%) of the cohort had accessed antigen testing by June 2020) this will greatly under-estimate the prevalence of mild-moderate cases of COVID-19. Antigen testing (both PCR and lateral-flow) in the UK expanded subsequently, but performance of the test depends on timing and swab technique, [14] while accessing tests and reporting results requires engagement with authorities, [29] such that reporting of positives from routine antigen tests probably still underestimates true COVID-19 prevalence [30]. Reporting a core COVID-19 symptom, in contrast, is likely to over-estimate true prevalence of COVID-19 since the symptoms overlap with other common illnesses. Other indicators were suspicion of having had COVID-19 (reported by 25% of female and 33% of male participants), symptom algorithm (positive for 16%) and antibody testing (positive for 7%).

Antibody testing

Antibody testing in KCL CHECK used an IgG/IgM test kit based on “lateral flow” technology, sent to participants, which was simple to use and has high validity (under lab conditions) [31]. Antibody tests can give false positives in up to 2 per 100 tests, through cross-reactivity with antibodies unrelated to SARS-CoV-2, [12, 21] which can be problematic in large studies with low prevalence. Testing negative after having had COVID-19 is also a concern, since small numbers of people do not produce anti-spike antibodies, [32, 33] and these antibodies are detected more inconsistently in mild and asymptomatic COVID-19, [31, 34, 35] and decline over time [36–38]. Testing in KCL CHECK (June-July 2020) occurred at least three months after the onset of most participants’ symptoms (Feb-March 2020). Antibodies may cease to be detectable some months after exposure, especially on lateral flow devices [39, 40]. We found 7% of our participants were positive for anti-spike IgG/IgM. Compared with two studies done at around this time in England, the proportion of positive antibody results is lower than in TwinsUK (12%) [41] and around the level of REACT-2 (6%) [42]. However, these findings are not directly comparable, as TwinsUK used ELISA (which is more sensitive than lateral flow tests) and REACT-2 had a different participant profile. Among KCL CHECK participants who reported previous antibody testing, 15% were positive on the KCL CHECK antibody test, compared with 24% in their prior reported test. This may suggest time-dependent loss of reactivity, although there were likely also differences in test specifications and this comparison is based on small numbers of participants. Further rounds of testing of our cohort may help clarify this [43]. Augmenting antibody testing with testing for T cell response to better track long-term immunity may be possible in the future [44].
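The concern about false positives at low prevalence can be made concrete with a short calculation of positive predictive value. The sketch below is illustrative only: the function name is an assumption, the sensitivity of 94% is the laboratory figure quoted in the Methods and may not hold for home use, the specificity of 98% corresponds to the "2 per 100" false-positive rate mentioned above, and the prevalence values are hypothetical.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Probability that a positive test reflects true past infection (Bayes' rule)."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# Assumed test characteristics (see lead-in); prevalences are hypothetical.
SENSITIVITY = 0.94
SPECIFICITY = 0.98

for prevalence in (0.02, 0.07, 0.20):
    ppv = positive_predictive_value(SENSITIVITY, SPECIFICITY, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.0%}")
```

Under these assumptions roughly half of positive results would be false at 2% prevalence, compared with about one in five at 7% and fewer than one in ten at 20%, which is why a small false-positive rate matters most in large, low-prevalence cohorts.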

Agreement between antibody testing and other indicators

Some other studies have explored the agreement of symptoms and objective test results. The COVID symptom study found that their algorithm had 65% sensitivity and 78% specificity for antigen/PCR test results [23]. Researchers compared the algorithm with antibody testing in the TwinsUK cohort, where being algorithm positive in daily symptom recording at any point in March to April was 37% sensitive and 95% specific for antibodies via ELISA in April-June [41]. In our study, the algorithm was more sensitive (67%) and slightly less specific (88%), indicating that a greater proportion of people who were antibody positive had significant symptoms. The known epidemiology for COVID-19 suggests that a proportion of people have COVID-19 without any obvious symptoms, [1] and thus a proportion of those who were antibody positive would not recall symptoms. In our study, 10 people with no symptoms tested positive (10/642, 1.6%), as well as 8 people who reported feeling "not quite right" but did not have core symptoms (8/470, 1.7%), making up 15% (18/124) of those who were antibody positive. This is low compared to the proportion without core symptoms who tested positive in TwinsUK (27%) and REACT-2 (39%) [41, 42].

We found that for a given level of symptoms, a higher suspicion of having had COVID-19 added to the likelihood of testing positive, presumably because a participant's suspicion includes context such as symptom unusualness and contacts with COVID-19. However, it is also true that even for participants who were definite they had experienced COVID-19 and had symptoms severe enough to be positive on the symptom algorithm, the majority (51%) were negative on antibody testing. This surprisingly low level of confirmation, taken together with other studies that have tested our lateral flow device [31, 32] and comparison with TwinsUK and REACT-2, [45] suggests that the home antibody testing was of low sensitivity, missing some past cases of COVID-19 that may have been positive if subject to laboratory testing or testing closer to the time of illness [45].

Implications

There was a 20-fold difference in apparent prevalence of past COVID-19 between different COVID-indicators, which means that it is important to consider how a history of COVID-19 has been ascertained when interpreting studies that report past COVID-19 illness. In particular, our results suggest that cohort studies using external testing (which will include record-linkage to testing results, e.g. in UK Biobank [17]) will underestimate the proportion with COVID-19. The under-ascertainment may not be evenly seen across characteristics of the population or illness (e.g. people who had more severe symptoms will have been more likely to be tested), and that can incorporate bias or lead to a lack of generalisability of results [46]. While this is most relevant for infections in the first wave when testing in the UK was severely limited, accessibility of testing may be limited and likely skewed at times of greatest infection and in places with poorer infrastructure or where there are low levels of trust in the authorities [29]. Also notable is that while antibody testing gives an objective outcome, timing is important, and accuracy may be an issue depending on how it was used; in our study likely leading to an under-estimate of cases.

For researchers designing studies and considering which COVID-indicator to use, multiple measures may be preferable. As the difficulties in retrospective ascertainment mean there is underlying uncertainty, a small number of compound variables (e.g., one wide definition and one narrow definition) may be more suitable than a binary case and control adjudication. More specifically, collecting symptom report for the COVID symptom study algorithm [23] will assist in finding those who have had a typical COVID-19-like illness, and considering the participants' own suspicion can add both sensitivity and specificity. Adding a high specificity antibody test to self-report may help to identify past cases that were asymptomatic or atypically symptomatic. However, the low sensitivity of tests such as ours means that they are unlikely to make a good 'rule-out' for people who suspect they had COVID-19 some months before. More generally, there are great benefits to be had from a standardised set of criteria that can be used across studies for reproducibility and comparison, [47] but this does not need to be at the expense of a plurality of approaches that are tailored to the aims of each study.

Strengths and weaknesses

The strengths of this study include the survey repeating every fortnight to minimise recall bias. We incorporated a symptom checklist that has been previously evaluated. The antibody test kit was highly specific for SARS-CoV-2, suited to minimise false positives in population screening. Home testing maximised uptake of the test at a time when people may have been hesitant about attending a clinic. We attempted to reduce inter-individual variation through our pilot, illustrated instructions and responsive email enquiry team [22].

There are three key limitations of which to be aware. The first regards the antibody test lateral flow cassettes, which are designed for use by a trained person rather than the general public, and are known to be less sensitive and more inconsistent when testing outside the laboratory and on capillary blood [21, 35]. Secondly, as we did not exclude people with missing longitudinal surveys, we had incomplete data about self-reported items after the baseline. However, COVID-19 infections were much less common in May–June 2020 than they had been in March, [48] so we would expect relatively few positives to occur after the April baseline. This, and a comparison involving participants with more complete data, leads us to believe that this had minimal effect on our outcomes. Thirdly, our cohort was not representative of the general population, and even within our target population (all KCL staff and PGRs) female gender and White ethnicity were overrepresented in our cohort [49]. We expect that our general findings will still be useful to studies that have different participant composition, but care would need to be taken in populations with very different expected COVID-19 prevalence. Since the time of this analysis there have been a number of further waves of COVID-19 throughout the world, which may lead to a higher proportion of people having been affected. There has also been a vaccination roll-out nationally, which makes antibody test results much more complicated to interpret.

Conclusions

This paper compares alternative indicators of past COVID-19 illness and acknowledges the complicated context: the time-course of detectable antigen and antibody, initial poor access to routine testing, symptoms common to other respiratory illnesses, asymptomatic infection, and the high profile of the illness. We found that there was overlap in the occurrence of the indicators, as expected since they reflect similar underlying concepts, but there are large differences in prevalence of different indicators in the community. This analysis is from relatively early in the COVID-19 pandemic, and the prevalence of COVID-indicators will have been affected by events such as vaccination since our analysis. However, our findings are still of relevance for public health planning insofar as they highlight the importance of indicator choice when ascertaining past COVID-19 status, and how the choice of indicator has a large influence on the proportion of a cohort who will be identified as having a history of COVID-19. This may go on to influence downstream results and findings from a cohort. We encourage researchers to consider the use of algorithms that maximise COVID-19 case history, rather than relying on single measures which may give a false sense of certainty.

Acknowledgements

The authors acknowledge the wider KCL CHECK research team, who supported the planning of the study and scientific outputs and provided logistic support, in particular: Jonathan Edgeworth, Liam Jones, Lisa Sanderson, Jana Kim, Laila Danesh, Charlotte Williamson, Laurence Blight, Rupa Bhundia, Lucy O'Neill, Candice Middleton. We thank Mark Zuckerman for comments on an earlier draft of this paper. We would also like to thank the participants of KCL CHECK. This paper represents independent research supported by the National Institute for Health Research (NIHR) Biomedical Research Centre at South London and Maudsley NHS Foundation Trust and King's College London. The views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR or the Department of Health and Social Care. MHM is a Wellcome Trust Investigator.

Abbreviations

- ELISA: Enzyme-linked immunosorbent assay [used for quantitatively measuring antibodies]

- KCL CHECK: King's College London Coronavirus Health and Experiences of Colleagues at King's

- P0-P8: Periods in the research study, each with one survey issued

- PCR: Polymerase chain reaction [used to detect SARS-CoV-2 antigen]

- PGRs: Postgraduate research students

Authors’ contributions

KASD analysed and interpreted the data and wrote the paper; EC/MH were major contributors to analysis and writing the paper; DL/VV/GBC/GL/AW were key in data collection and logistics; SAMS/RR/MH designed and arranged funding for the KCL CHECK study. All authors contributed to and approved the final manuscript.

Funding

This study was funded by King's College London. Decisions regarding design, collection, analysis and interpretation were made independently by the investigators.

Availability of data and materials

Researchers may access pseudonymised data upon reasonable request to the Principal Investigators (Professor Matthew Hotopf and Professor Reza Razavi, email: check@kcl.ac.uk).

Declarations

Ethics approval and consent to participate

All participants gave informed consent. Ethical approval was granted by King's Psychiatry, Nursing and Midwifery Research Ethics Committee (HR-19/20–18247) and research was performed in accordance with the Declaration of Helsinki.

Consent for publication

Not applicable (no identifiable information).

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Reza Razavi and Matthew Hotopf are joint last authors.

References

- 1. Anderson RM, Hollingsworth TD, Baggaley RF, Maddren R, Vegvari C. COVID-19 spread in the UK: the end of the beginning? Lancet. 2020;396:587–590. doi: 10.1016/S0140-6736(20)31689-5.

- 2. Davies NG, Kucharski AJ, Eggo RM, Gimma A, Edmunds WJ, Jombart T, et al. Effects of non-pharmaceutical interventions on COVID-19 cases, deaths, and demand for hospital services in the UK: a modelling study. Lancet Public Health. 2020;5(7):e375–e385. doi: 10.1016/S2468-2667(20)30133-X.

- 3. Neil Ferguson, Azra Ghani, Wes Hinsley, Erik Volz, Imperial College COVID-19 response team. Hospitalisation risk for Omicron cases in England. https://www.imperial.ac.uk/mrc-global-infectious-disease-analysis/covid-19/report-50-severity-omicron/: MRC Centre for Global Infection Disease Analysis; 2021. [Accessed 30 May 2022].

- 4. Cunniffe NG, Gunter SJ, Brown M, Burge SW, Coyle C, De Soyza A, et al. How achievable are COVID-19 clinical trial recruitment targets? A UK observational cohort study and trials registry analysis. BMJ Open. 2020;10(10):e044566. doi: 10.1136/bmjopen-2020-044566.

- 5. de Erausquin GA, Snyder H, Carrillo M, Hosseini AA, Brugha TS, Seshadri S, et al. The chronic neuropsychiatric sequelae of COVID-19: The need for a prospective study of viral impact on brain functioning. Alzheimer's & Dementia. 2021. doi: 10.1002/alz.12255.

- 6. Lancet T. Facing up to long COVID. Lancet. 2020;396(10266):1861. doi: 10.1016/S0140-6736(20)32662-3.

- 7. Del Rio C, Collins LF, Malani P. Long-term health consequences of COVID-19. JAMA. 2020;324(17):1723–1724. doi: 10.1001/jama.2020.19719.

- 8. National Institute for Health and Care Excellence, Royal College of General Practitioners, Scotland HI. COVID-19 rapid guideline: managing the long-term effects of COVID-19. https://www.nice.org.uk/guidance/ng188; 2020. [Accessed 30 May 2022].

- 9. Yelin D, Wirtheim E, Vetter P, Kalil AC, Bruchfeld J, Runold M, et al. Long-term consequences of COVID-19: research needs. Lancet Infect Dis. 2020;20(10):1115–1117. doi: 10.1016/S1473-3099(20)30701-5.

- 10. Thompson EJ, Williams DM, Walker AJ, Mitchell RE, Niedzwiedz CL, Yang TC, et al. Risk factors for long COVID: analyses of 10 longitudinal studies and electronic health records in the UK. medRxiv. 2021:2021.06.24.21259277.

- 11. Watson J, Whiting PF, Brush JE. Interpreting a covid-19 test result. BMJ. 2020;369:m1808. doi: 10.1136/bmj.m1808.

- 12. Hanson KE, Caliendo AM, Arias CA, Englund JA, Lee MJ, Loeb M, et al. Infectious Diseases Society of America guidelines on the diagnosis of COVID-19. Clinical Infectious Diseases. 2020. doi: 10.1093/cid/ciaa760.

- 13. Deeks JJ, Dinnes J, Takwoingi Y, Davenport C, Spijker R, Taylor-Phillips S, et al. Antibody tests for identification of current and past infection with SARS-CoV-2. Cochrane Database of Syst Rev. 2020;6(6):CD013652. doi: 10.1002/14651858.CD013652.

- 14. Sethuraman N, Jeremiah SS, Ryo A. Interpreting diagnostic tests for SARS-CoV-2. JAMA. 2020;323(22):2249–2251. doi: 10.1001/jama.2020.8259.

- 15. Mlcochova P, Collier D, Ritchie A, Assennato SM, Hosmillo M, Goel N, et al. Combined point-of-care nucleic acid and antibody testing for SARS-CoV-2 following emergence of D614G Spike variant. Cell Reports Med. 2020;1(6):100099. doi: 10.1016/j.xcrm.2020.100099.

- 16. Wood A, Denholm R, Hollings S, Cooper J, Ip S, Walker V, et al. Linked electronic health records for research on a nationwide cohort of more than 54 million people in England: data resource. BMJ. 2021;373:n826. doi: 10.1136/bmj.n826.

- 17. Armstrong J, Rudkin JK, Allen N, Crook DW, Wilson DJ, Wyllie DH, et al. Dynamic linkage of COVID-19 test results between Public Health England's Second Generation Surveillance System and UK Biobank. Microb Genom. 2020;6(7):mgen000397. doi: 10.1099/mgen.0.000397.

- 18. Alter G, Seder R. The Power of Antibody-Based Surveillance. NEJM. 2020. doi: 10.1056/NEJMe2028079.

- 19. Davis KA, Stevelink SA, Al-Chalabi A, Bergin-Cartwright G, Bhundia R, Burdett H, et al. The King's College London Coronavirus Health and Experiences of Colleagues at King's Study (KCL CHECK) protocol paper: a platform for study of the effects of coronavirus pandemic on staff and postgraduate students. medRxiv. 2020. doi: 10.1101/2020.06.16.20132456.

- 20. von Elm E, Altman D, Egger M, Pocock S, Gotzsche P, Vandenbroucke J. The strengthening the reporting of observational studies in epidemiology (STROBE) statement: guidelines for reporting observational studies. BMJ. 2007;335(7624):806–808. doi: 10.1136/bmj.39335.541782.AD.

- 21. Jones HE, Mulchandani R, Taylor-Phillips S, Ades AE, Shute J, Perry KR, et al. Accuracy of four lateral flow immunoassays for anti SARS-CoV-2 antibodies: a head-to-head comparative study. EBioMedicine. 2021;68:103414. doi: 10.1016/j.ebiom.2021.103414.

- 22. Leightley D, Vitiello V, Bergin-Cartwright G, Wickersham A, Davis KA, Stevelink S, et al. The King's College London Coronavirus Health and Experiences of Colleagues at King's Study: SARS-CoV-2 antibody response in an occupational sample. medRxiv. 2020. doi: 10.1101/2021.01.26.21249744.

- 23. Menni C, Valdes AM, Freidin MB, Sudre CH, Nguyen LH, Drew DA, et al. Real-time tracking of self-reported symptoms to predict potential COVID-19. Nat Med. 2020;26(7):1037–1040. doi: 10.1038/s41591-020-0916-2.

- 24. Office for National Statistics (ONS). Ethnic group, national identity and religion: A guide for the collection and classification of ethnic group, national identity and religion data in the UK. GOV.UK; 2016 [updated 18 January 2016]. Available from: https://www.ons.gov.uk/methodology/classificationsandstandards/measuringequality/ethnicgroupnationalidentityandreligion. [Accessed 30 May 2022].

- 25. Dowle M, Srinivasan A. data.table: Extension of `data.frame`. R package version 1.12.8. 2019.

- 26. R Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2020.

- 27. Wickham H, Bryan J. readxl: Read Excel Files. R package version 1.3.1. 2019.

- 28. Wickham H, François R, Henry L, Müller K. dplyr: A Grammar of Data Manipulation. R package version 1.0.0. 2020.

- 29. Marshall GC, Skeva R, Jay C, Silva MEP, Fyles M, House T, et al. Public perceptions and interactions with UK COVID-19 Test, Trace and Isolate policies, and implications for pandemic infectious disease modelling. medRxiv. 2022:2022.01.31.22269871.

- 30. Beauté J, Adlhoch C, Bundle N, Melidou A, Spiteri G. Testing indicators to monitor the COVID-19 pandemic. Lancet Infect Dis. 2021;21(10):1344–1345. doi: 10.1016/S1473-3099(21)00461-8.

- 31. Pickering S, Betancor G, Pedro Galao R, Merrick B, Signell AW, Wilson HD, et al. Comparative assessment of multiple COVID-19 serological technologies supports continued evaluation of point-of-care lateral flow assays in hospital and community healthcare settings. PLoS Pathogens. 2020. doi: 10.1371/journal.ppat.1008817.

- 32. Flower B, Brown JC, Simmons B, Moshe M, Frise R, Penn R, et al. Clinical and laboratory evaluation of SARS-CoV-2 lateral flow assays for use in a national COVID-19 seroprevalence survey. Thorax. 2020;75:1082–1088. doi: 10.1136/thoraxjnl-2020-215732.

- 33. Wu F, Liu M, Wang A, Lu L, Wang Q, Gu C, et al. Evaluating the association of clinical characteristics with neutralizing antibody levels in patients who have recovered from mild COVID-19 in Shanghai, China. JAMA Intern Med. 2020;180(10):1356–1362. doi: 10.1001/jamainternmed.2020.4616.

- 34. Gudbjartsson DF, Norddahl GL, Melsted P, Gunnarsdottir K, Holm H, Eythorsson E, et al. Humoral immune response to SARS-CoV-2 in Iceland. N Engl J Med. 2020;383:1724–1734. doi: 10.1056/NEJMoa2026116.

- 35. Morshed M, Sekirov I, McLennan M, Levett PN, Chahil N, Mak A, et al. Comparative analysis of capillary versus venous blood for serologic detection of SARS-CoV-2 antibodies by rPOC lateral flow tests. Open Forum Infect Dis. 2021;8(3):ofab043. doi: 10.1093/ofid/ofab043.

- 36. Korte W, Buljan M, Rösslein M, Wick P, Golubov V, Jentsch J, et al. SARS-CoV-2 IgG and IgA antibody response is gender dependent; and IgG antibodies rapidly decline early on. J Infect. 2020;82:e11–e14. doi: 10.1016/j.jinf.2020.08.032.

- 37. Patel MM, Thornburg NJ, Stubblefield WB, Talbot HK, Coughlin MM, Feldstein LR, et al. Change in Antibodies to SARS-CoV-2 Over 60 Days Among Health Care Personnel in Nashville, Tennessee. JAMA. 2020;324(17). doi: 10.1001/jama.2020.18796.

- 38. Self WH. Decline in SARS-CoV-2 Antibodies After Mild Infection Among Frontline Health Care Personnel in a Multistate Hospital Network—12 States, April–August 2020. MMWR Morb Mortal Wkly Rep. 2020;69(47):1762–1766. doi: 10.15585/mmwr.mm6947a2.

- 39. Muecksch F, Wise H, Batchelor B, Squires M, Semple E, Richardson C, et al. Longitudinal analysis of clinical serology assay performance and neutralising antibody levels in COVID19 convalescents. medRxiv. 2020:2020.08.05.20169128.

- 40. Kontou PI, Braliou GG, Dimou NL, Nikolopoulos G, Bagos PG. Antibody tests in detecting SARS-CoV-2 infection: a meta-analysis. Diagnostics. 2020;10(5):319. doi: 10.3390/diagnostics10050319.

- 41. Wells PM, Doores KJ, Couvreur S, Nunez RM, Seow J, Graham C, et al. Estimates of the rate of infection and asymptomatic COVID-19 disease in a population sample from SE England. J Infect. 2020;81:931–936. doi: 10.1016/j.jinf.2020.10.011.

- 42. Ward H, Atchison CJ, Whitaker M, Ainslie KEC, Elliott J, Okell LC, et al. SARS-CoV-2 antibody prevalence in England following the first peak of the pandemic. Nature Communications. 2021;12(905). doi: 10.1038/s41467-021-21237-w.

- 43. Davis KA, Oetzmann C, Carr E, Malim M, Boshell VJ, Lavelle G, et al. Unexplained longitudinal variability in COVID-19 antibody status by Lateral Flow Immuno-Antibody testing. medRxiv. 2021.

- 44. Altmann DM, Boyton RJ. SARS-CoV-2 T cell immunity: Specificity, function, durability, and role in protection. Sci Immunol. 2020;5(49):eabd6160. doi: 10.1126/sciimmunol.abd6160.

- 45. Ward H, Cooke G, Atchison CJ, Whitaker M, Elliott J, Moshe M, et al. Prevalence of antibody positivity to SARS-CoV-2 following the first peak of infection in England: Serial cross-sectional studies of 365,000 adults. Lancet Regional Health - Europe. 2021;4:100098. doi: 10.1016/j.lanepe.2021.100098.

- 46. Hand DJ. Statistical challenges of administrative and transaction data. J R Stat Soc A Stat Soc. 2018;181(3):555–605. doi: 10.1111/rssa.12315.

- 47. Holmes EA, O'Connor RC, Perry VH, Tracey I, Wessely S, Arseneault L, et al. Multidisciplinary research priorities for the COVID-19 pandemic: a call for action for mental health science. Lancet Psychiatry. 2020;7:547–560. doi: 10.1016/S2215-0366(20)30168-1.

- 48. Office for National Statistics (ONS), University of Oxford, University of Manchester, Public Health England, Wellcome Trust. Coronavirus (COVID-19) Infection Survey pilot: England, Wales and Northern Ireland, 25 September 2020: ONS; 2020 [updated 25 September 2020]. Available from: https://www.ons.gov.uk/peoplepopulationandcommunity/healthandsocialcare/conditionsanddiseases/bulletins/coronaviruscovid19infectionsurveypilot/englandwalesandnorthernireland25september2020. [Accessed 30 May 2022].

- 49. Carr E, Davis K, Bergin-Cartwright G, Lavelle G, Leightley D, Oetzmann C, et al. Mental health among UK university staff and postgraduate students in the early stages of the COVID-19 pandemic. Occup Environ Med. 2021;79(4). doi: 10.1136/oemed-2021-107667.
