Abstract

Automated sorting systems are used in industrial sectors to increase the rate of production. This research developed a sorting system that uses machine vision to match a captured image against a stored base image. The system performs matching and sorting in real time at a conveyor belt speed of 5 cm/s. The vision system reaches the sorting decision in three stages: the first stage converts the captured image to a binary image, the second stage applies edge detection to the product, and the third stage matches the result with the base image. The system successfully sorted products of complex shape with high efficiency, and it can detect and sort any product or machine element at any position or orientation. The decision is delivered at the sorting gate at the end of the conveyor belt: if the gate opens, the product matches the base image. Three different products were selected to investigate the response and accuracy of the system. The maximum detection error did not exceed 2% in all cases.

1. Introduction

The automated sorting system has become an essential element in industrial sectors because it decreases sorting time and increases the sorting rate. Such systems use sensors connected to a microcontroller or PLC that produces the sorting decision. The sorting method depends on the color, shape, volume, size, and type of the products to be detected. The sorting actuator may be a robot arm, a sorting gate, or a hydraulic link. The sorting process relies mainly on computer vision combined with artificial intelligence and statistical methods.

Sorting systems are applied in all industrial sectors. One example is sorting fruits by size into three categories by finding the radius and calculating the area and perimeter using a light detection and ranging (LIDAR) sensor [1]. Some researchers designed systems that sort fruits using a vision system and a robot that tracks their positions [2, 3]. A PLC controller was used to sort objects by material, such as wood, steel, and plastic, using three sensors at different positions with an acceptable average time [4]. Machine vision has been added to sorting systems for detection and automated sorting [5–7]. An image processing approach combined with deep learning has also been used to sort objects [8].

Object sorting is based on four approaches: color, shape, texture, and hybrid [9]. The support vector machine (SVM) has been used with a mobile manipulator that accomplishes tasks such as recognizing objects at varying scales and rotations, motion planning for mobile manipulation, and grasp planning for objects at any position [10].

Part sorting for remanufacturing uses logic cube inspection to detect the product and sort it by weight and volume with machine vision, but that system does not work in real time [11]. Automated defect detection by a visual inspection system for cylindrical objects is performed using a camera and a multimirror handle, but that system does not work in real time either [12]. Some vision systems use robots to recognize and sort objects, and such systems sometimes use PLC control units [13, 14].

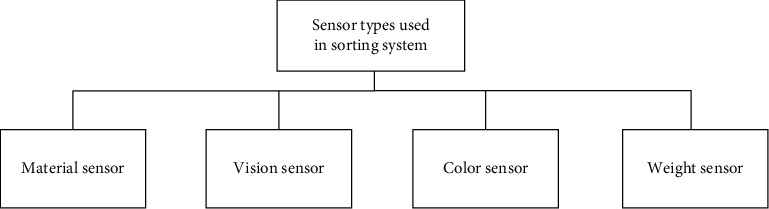

Sorting systems are also used for sorting lima beans and for ordering the connected characters of multiline license plates [15, 16]. Automated vision-based sorting systems for fruit detect the size and shape of the fruit in real time [17, 18]. 3D vision sorting systems detect the object and pick it up with a robot arm, using two cameras to obtain 3D detection [19]. Figure 1 shows the types of sensors used in sorting systems in different applications.

Figure 1.

Sensor types that are used in sorting systems.

Ilas et al. [20] explored different types of algorithms to find the possible trajectories of an autonomous robot moving among different types of obstacles. In their approach, the distance between the robot and the obstacles was determined by taking the width of the robot into account, and the optimal trajectory could then be chosen to plan the robot's motion. The most significant advantages of the presented algorithms are their high accuracy and simplicity, even when the computational resources are at a medium level.

Benicky et al. [21] presented a technique focused on real-time operation. They found a partial solution for detecting fast movement using different algorithms, such as corner detection and edge detection, and presented a way to classify the motion. Furthermore, they found that corner and edge detection methods give effective forms for applications with redundant data. They recommended filtering the redundant data (the input image) before it is used in real-time analysis.

Alexander and Thathan [22] presented a control circuit for a solar-fed cascaded multilevel inverter in a 3-kW solar plant. The semiconductor switches of the multilevel inverter were reduced, and the total harmonic distortion was decreased, which leads to enhanced output power. With the developed system, the level of the output voltage was increased without using the output transformers of boost converters. MATLAB/Simulink was used to simulate the developed system under different working conditions. The results obtained from Simulink agreed completely with the experimental results. The presented inverter was also found to be better suited to grid-connected and standalone systems.

Table 1 shows the most common sensors and methods used for different types of sorting operations. Following the available literature that used a camera with edge detection, the Hough line transform was used to detect all line edges of the product, and the cross-correlation method was used to scan all lines of the template and the captured product image.

Table 1.

The sensors and methods that are used in sorting operations.

| No. | Sensor or method | Type of sorting |
|---|---|---|
| 1 | Camera sensor [2, 3] | Color, size, shape |
| 2 | LIDAR [1] | Size |
| 3 | Heuristic method [1] | Shape |
| 4 | Oscillator frequency [4] | Material types |
| 5 | Histogram + SVM [6] | Object properties |
| 6 | Deep learning + CCD sensor [8] | Object properties |
| 7 | Municipal solid waste + SVM [10] | Recyclable materials |
| 8 | Camera + weight sensor + silhouette algorithm [11] | Defect detection and remanufacturing |
| 9 | Camera + fuzzy logic algorithm [12] | Defect detection and classification |
| 10 | HMI (human-machine interface) [14] | Color box |

The main objective of this work is to develop a real-time sorting system that can pick out a specific product of complex shape from among different products, using a camera and an image processing approach at minimum cost and time. The matching method runs at a speed determined by the characteristics of the camera, such as its frame rate. Improving the specifications of the selected camera will increase the system performance and accuracy. The developed system can match the target product with high accuracy at different positions and orientations. The main contribution of this work is a new low-cost, high-accuracy system that sorts any complex product or object in an acceptable time. According to the available literature, most researchers used or developed complex and expensive sorting systems, which is an economic disadvantage from the viewpoint of the industrial sector.

1.1. Description and Working Principle of Sorting System

The developed sorting system consists of a conveyor belt, driven by a DC motor, that carries the products to be detected. Two IR sensors are fixed below the conveyor belt. The first sensor detects a product arriving under the camera and sends the trigger signal to the camera. The camera is fixed above the conveyor belt to capture images of the products in real time. The second IR sensor is located before the sorting gate to detect the arrival of the target product. The gate is connected to a second DC motor that changes the path of the target product: when the target product arrives at the second IR sensor, the sorting gate is opened. Figure 2 illustrates the elements of the developed sorting system.

Figure 2.

The main parts of the developed sorting system.

The microcontroller is the control unit of the system; it connects to the software platform and relays the input and output control signals. An optical encoder is connected to the shaft of the conveyor belt to measure the belt speed, which must stay within the range that the camera frame rate can handle. The sorting gate subsystem consists of a gate connected to a DC motor with a limit switch, so that the gate opens to the gap best suited for the desired product to pass. The gate then closes after the short time needed for the product to pass, as shown in Figure 3.

Figure 3.

The sorting gate subsystem.
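The relation between belt speed and camera frame rate can be illustrated with a short calculation. The sketch below is only an illustration: the 5 cm/s belt speed comes from the text, while the frame rate of 30 frames/s and the 20 cm field of view along the belt are hypothetical values that the paper does not state.

```python
# Belt speed is taken from the paper; the other two values are assumptions.
BELT_SPEED_CM_S = 5.0        # from the text
FRAME_RATE_FPS = 30.0        # hypothetical camera frame rate
FIELD_OF_VIEW_CM = 20.0      # hypothetical camera field of view along the belt


def displacement_per_frame_cm(belt_speed_cm_s, frame_rate_fps):
    """Distance the product moves between two consecutive frames."""
    return belt_speed_cm_s / frame_rate_fps


def frames_in_view(field_of_view_cm, belt_speed_cm_s, frame_rate_fps):
    """Number of whole frames captured while a point crosses the field of view."""
    time_in_view_s = field_of_view_cm / belt_speed_cm_s
    return int(time_in_view_s * frame_rate_fps)


shift_cm = displacement_per_frame_cm(BELT_SPEED_CM_S, FRAME_RATE_FPS)
n_frames = frames_in_view(FIELD_OF_VIEW_CM, BELT_SPEED_CM_S, FRAME_RATE_FPS)
print(f"shift per frame: {shift_cm:.3f} cm, frames in view: {n_frames}")
```

A smaller shift per frame means less motion between captures, which is one way to read the requirement that the belt speed match the camera frame rate.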

1.2. Theoretical Methodology of the Target Product Matching

The template image of the target product is prepared for matching against the captured color image of every product. The Canny edge detection method was applied to detect the desired lines. The Canny method comprises six steps for edge detection [23]. The gradient of the 2D image $P(x, y)$ is calculated from its partial derivatives in the x-direction and y-direction as

$$\nabla P(x, y) = \left[ G_x,\; G_y \right]^{T} \tag{1}$$

The rate of change of pixel intensity in the x-direction is

$$G_x = \frac{\partial P(x, y)}{\partial x} \tag{2}$$

and the rate of change of pixel intensity in the y-direction is

$$G_y = \frac{\partial P(x, y)}{\partial y} \tag{3}$$

The gradient magnitude can be found from

$$\left| \nabla P(x, y) \right| = \sqrt{G_x^{2} + G_y^{2}} \tag{4}$$
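The gradient step can be sketched in a few lines. The code below is a minimal illustration, not the authors' implementation: it approximates the two partial derivatives with central differences (an assumption, since the paper does not state the discretization) and combines them into a gradient magnitude. The function name and the test image are hypothetical.

```python
import math


def gradient_magnitude(image):
    """Return (gx, gy, mag) for a 2D grayscale image given as a list of rows.

    Central differences approximate the partial derivatives in x and y;
    border pixels are left at zero for simplicity.
    """
    rows, cols = len(image), len(image[0])
    gx = [[0.0] * cols for _ in range(rows)]
    gy = [[0.0] * cols for _ in range(rows)]
    mag = [[0.0] * cols for _ in range(rows)]
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            gx[y][x] = (image[y][x + 1] - image[y][x - 1]) / 2.0
            gy[y][x] = (image[y + 1][x] - image[y - 1][x]) / 2.0
            mag[y][x] = math.hypot(gx[y][x], gy[y][x])
    return gx, gy, mag


# 5x5 image with a vertical step edge: dark on the left, bright on the right.
step_image = [[0, 0, 10, 10, 10] for _ in range(5)]
gx, gy, mag = gradient_magnitude(step_image)
print(mag[2])  # the magnitude is nonzero only next to the step
```

The magnitude peaks on the two columns adjacent to the step and vanishes in the flat regions, which is the property that the later edge-thinning and thresholding stages of an edge detector rely on.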

Next, the Hough line transform is applied to find the target line in the captured image and determine its coordinates. The Hough transform is based on the polar (normal) form of a straight line:

$$r = x \cos\theta + y \sin\theta \tag{5}$$

Here, r is the distance from the origin to the closest point on the straight line, and θ is the angle between the x-axis and the line connecting the origin with that closest point. It is therefore possible to associate a pair (r, θ) with each line of the image. The (r, θ) plane is referred to as the Hough space for the set of straight lines in two dimensions. This representation makes the Hough transform conceptually very close to the two-dimensional Radon transform. The Hough transform is mathematically equivalent to the Radon transform, but the two transforms have different computational interpretations traditionally associated with them. Given a single point in the plane, the set of all straight lines passing through that point corresponds to a sinusoidal curve in the (r, θ) plane, which is unique to that point. A set of two or more points that form a straight line will produce sinusoids that cross at the (r, θ) for that line. Hence, the problem of detecting collinear points can be converted to the problem of finding concurrent curves. The computational attractiveness of the Hough transform arises from subdividing the rθ parameter space into so-called accumulator cells, where [rmin, rmax] and [θmin, θmax] are the expected ranges of the parameter values. Usually, the maximum range of values is

$$-90^{\circ} \le \theta \le 90^{\circ} \quad \text{and} \quad -B \le r \le B \tag{6}$$

where B is the farthest distance between opposite corners of the image. The cell at coordinates (i, j), with accumulator value A(i, j), corresponds to the square associated with the parameter-space coordinates $(r_i, \theta_j)$. Initially, these cells are set to zero. Then, for each non-background point $(x_k, y_k)$ in the image plane (i.e., the xy-plane), θ is set equal to each of the allowed subdivision values on the θ-axis, and the corresponding r is obtained from the equation $r = x_k \cos\theta + y_k \sin\theta$.

The resulting r-values are then rounded to the nearest allowed cell value along the r-axis, and the corresponding accumulator cell is incremented. At the end of this procedure, a value of Q in A(i, j) means that Q points in the xy-plane lie on the line $x \cos\theta_j + y \sin\theta_j = r_i$. The number of subdivisions in the rθ-plane determines the accuracy of the collinearity of these points. The accumulator array is referred to in the toolbox as the Hough transform matrix, or simply as the Hough transform.
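The voting procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: it uses a 1 degree θ step and a 1 pixel r step, takes a list of edge points as input, and all function names are hypothetical.

```python
import math


def hough_accumulator(points, width, height, theta_step_deg=1):
    """Vote in (r, theta) space for a list of (x, y) edge points.

    Returns (acc, thetas_deg, r_offset); acc[i][j] counts the points lying
    on the line x*cos(theta_j) + y*sin(theta_j) = i - r_offset.
    """
    b = int(math.ceil(math.hypot(width - 1, height - 1)))  # image diagonal B
    # +90 degrees is omitted: it describes the same lines as -90 with r negated.
    thetas_deg = list(range(-90, 90, theta_step_deg))
    acc = [[0] * len(thetas_deg) for _ in range(2 * b + 1)]  # cells start at zero
    for x, y in points:
        for j, t in enumerate(thetas_deg):
            theta = math.radians(t)
            r = x * math.cos(theta) + y * math.sin(theta)
            acc[int(round(r)) + b][j] += 1  # round to the nearest r cell and vote
    return acc, thetas_deg, b


def strongest_line(acc, thetas_deg, r_offset):
    """Return (r, theta_deg, votes) for the accumulator cell with most votes."""
    votes, i, j = max(
        (acc[i][j], i, j)
        for i in range(len(acc))
        for j in range(len(thetas_deg))
    )
    return i - r_offset, thetas_deg[j], votes


# 50 collinear edge points on the vertical line x = 20 in a 64x64 image.
edge_points = [(20, y) for y in range(50)]
acc, thetas_deg, r_offset = hough_accumulator(edge_points, 64, 64)
best = strongest_line(acc, thetas_deg, r_offset)
print(best)
```

All 50 points vote for the same cell only at θ = 0 and r = 20, so the peak of the accumulator identifies the vertical line; a finer θ or r step trades memory and time for angular and positional resolution.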

An improved Hough transform (HT) technique has been proposed to recognize line segments in images with complicated backgrounds; that work concentrates on detecting line segments of specific lengths, entirely independent of prior knowledge of the original image [24]. A statistical method based on the Hough transform for line segment detection considers quantization error, image noise, pixel disturbance, and peak spreading, and also takes the choice of the coordinate origin into account [25, 26]. A modified Hough transformation has been applied to improve image processing for a seam tracking system in gas metal arc (GMA) welding [27]. An improved Hough algorithm for line detection shares the comparative advantages of the modified Hough transform (MHT) and the windowed random Hough transform (RHT) [28]. The Hough transform has found immense practical application in vision problems such as object detection, motion detection, biometric authentication, medical imaging, remote data processing, and robot navigation [29]. A Hough transform formulation based on the inverse Radon operator relates the location and orientation of the lines in the image to the noisy input image [30].

A matching method combining the Hough transform and random sample consensus (RANSAC) turns the problem of finding the correct pixel-wavelength matching pairs into that of finding the right linear model [31]. An efficient algorithm for line detection from LRS data uses a novel hopping-points singular value decomposition (SVD) and Hough transform-based approach, in which SVD is applied to intermittent LRS points to accelerate the computation [32]. A modified accumulation scheme for the Hough transform makes it suitable for computer systems with small but fast read-write memory [33]. Real-time lane detection for a driving system using edge detection and the Hough transform has been developed to assist the driver in lane departure decision making, reducing loss of concentration and preventing accidents while driving [34].

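The cross-correlation method is applied to scan the pixels of the template and the captured image and to measure the similarity between them. The cross-correlation is calculated as

$$(f \star g)(\tau) = \int_{-\infty}^{\infty} \overline{f(t)}\, g(t + \tau)\, dt \tag{7}$$

where $\overline{f(t)}$ is the complex conjugate of $f(t)$, and $f$ and $g$ are the functions that represent the pixels of the two images being compared.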
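For discrete, real-valued images, the cross-correlation becomes a sum of products between the template and each window of the captured image. The sketch below is one possible illustration, not the authors' implementation: it uses the zero-mean normalized form (an assumption, chosen so that the peak score is 1 for a perfect match), searches over translations only, and does not handle rotation. The function name and the test data are hypothetical.

```python
import math


def ncc_match(image, template):
    """Slide the template over the image and return (best_score, (row, col)).

    The score is the zero-mean normalized cross-correlation, in [-1, 1].
    """
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    t_vals = [v for row in template for v in row]
    t_mean = sum(t_vals) / len(t_vals)
    t_dev = [v - t_mean for v in t_vals]
    t_norm = math.sqrt(sum(d * d for d in t_dev))
    best = (-2.0, (0, 0))
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            w_vals = [image[r + i][c + j] for i in range(th) for j in range(tw)]
            w_mean = sum(w_vals) / len(w_vals)
            w_dev = [v - w_mean for v in w_vals]
            w_norm = math.sqrt(sum(d * d for d in w_dev))
            if t_norm == 0 or w_norm == 0:
                continue  # flat template or window: correlation is undefined
            score = sum(a * b for a, b in zip(t_dev, w_dev)) / (t_norm * w_norm)
            if score > best[0]:
                best = (score, (r, c))
    return best


# Binary edge template (a small closed contour) placed at row 2, column 1.
edge_template = [[1, 1, 1], [1, 0, 1], [1, 1, 1]]
scene = [[0] * 6 for _ in range(6)]
for i in range(3):
    for j in range(3):
        scene[2 + i][1 + j] = edge_template[i][j]

score, position = ncc_match(scene, edge_template)
print(score, position)
```

In a gate-control setting, the peak score would be compared against a threshold to decide whether the product matches; the threshold value is a design choice that the paper does not specify.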

1.3. Experiment of the Developed Sorting System

The first step of the sorting system is capturing a picture of the product. Canny edge detection, the Hough line transform, and cross-correlation are applied in the developed sorting system. The next step is the matching process between the captured image and the template of the selected product. Figure 4 shows the flowchart of the main steps of the process. The matching operation scans the pixels of the template and compares them with the pixels of the captured image. The comparison is made between the product edge detected in the template image and the edge detected in the captured image. If a match is obtained, the gate is opened to change the path of the selected product. The microcontroller is the center of the control unit: the IR1 sensor sends its signal to the microcontroller, which then triggers the capture of a new image. If a match occurs, the software platform signals the microcontroller, which waits for the signal from the IR2 sensor before driving the gate motor to open the gate. Figure 5 demonstrates the block diagram of the control unit in the developed sorting system.

Figure 4.

Flowchart showing the steps of the system process.

Figure 5.

The main elements in the control unit of the sorting system.
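The control sequence described above (IR1 triggers capture and matching; a match arms the gate; IR2 opens it) can be sketched as a two-state machine. This is a simplified illustration, not the PIC firmware: it assumes only one product travels between the two sensors at a time, and the state names and function signatures are hypothetical.

```python
from enum import Enum, auto


class State(Enum):
    WAIT_PRODUCT = auto()  # waiting for IR1 to see a product under the camera
    WAIT_GATE = auto()     # a matched product is travelling towards IR2


def step(state, ir1, ir2, capture_and_match):
    """Advance one control cycle and return (next_state, open_gate).

    ir1 and ir2 are booleans read from the sensors; capture_and_match is a
    callable that captures an image and returns True when it matches.
    """
    if state is State.WAIT_PRODUCT:
        if ir1 and capture_and_match():
            return State.WAIT_GATE, False
        return State.WAIT_PRODUCT, False
    if ir2:  # matched product has reached the gate
        return State.WAIT_PRODUCT, True
    return State.WAIT_GATE, False


def run(events, capture_and_match):
    """Feed a sequence of (ir1, ir2) readings and log the gate command."""
    state = State.WAIT_PRODUCT
    gate_log = []
    for ir1, ir2 in events:
        state, open_gate = step(state, ir1, ir2, capture_and_match)
        gate_log.append(open_gate)
    return gate_log


events = [(False, False), (True, False), (False, False), (False, True)]
matched_log = run(events, lambda: True)    # target product passes through
rejected_log = run(events, lambda: False)  # non-matching product passes through
print(matched_log, rejected_log)
```

The gate command is issued only when a match has been recorded and IR2 subsequently fires; a non-matching product passes IR2 without opening the gate.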

The microcontroller circuit consists of a PIC16F84A microcontroller and two relays that control the sorting gate motor, with a buffer that conditions the signal coming from the software platform (Figure 6). The system was designed and implemented with low-cost components and achieves high sorting efficiency in real time.

Figure 6.

The microcontroller circuit.

2. Results of the Sorting System and Discussions

The result of the automated industrial sorting system is the decision to select the target product from among other products. The template image was obtained by applying edge detection to the image of the target product. The system scans all pixels of the captured image to detect a match with the template image; Figures 7, 8, and 9 show the results of product detection.

Figure 7.

The matching of sample 1: (a) product image captured; (b) template image.

Figure 8.

The matching of sample 2: (a) product image captured; (b) template image.

Figure 9.

The matching of sample 3: (a) product image captured; (b) template image.

The results show that the template edge is drawn as a red line on the detected edge of the product; the system then tracks the product until it arrives at the IR2 sensor. The template has a different orientation from the product, but the system can scan the pixels of the captured image of the product to apply the matching process. The template is simply the image of the product taken at an early stage, to which edge detection with filtering was applied to obtain the edge template. For any product that needs to be sorted, the system calls up the corresponding template and starts the process.

In the experimental work, three different product samples were used to examine the accuracy of the sorting operation. The developed system can sort these three product shapes, but with different detection times. The detection time (image matching) is a function of the product shape: it increases when the geometry of the product is complex and decreases when it is simple. For each sample, 100 tests were performed to explore the sorting accuracy and the average detection time, as shown in Table 2. The lowest detection time (6 s) was found for sample 2 and the highest (10 s) for sample 3. The reason is the shape of the sample: the number of lines and curves it contains significantly affects the sorting process. Table 3 shows the detection accuracy of the developed sorting system for the same samples. The highest accuracy (99%) was obtained for samples 1 and 2, and the lowest (98%) for sample 3.

Table 2.

The average time needed for the detection of the samples.

| Product sample | No. of experiments | Average detection time (s) |
|---|---|---|
| Sample no. 1 | 100 | 8 |
| Sample no. 2 | 100 | 6 |
| Sample no. 3 | 100 | 10 |
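The detection times in Table 2 can be related to the belt speed with a simple calculation. The sketch below assumes that the belt keeps moving at the stated 5 cm/s while matching runs and that the tabulated time is the full matching latency; both are assumptions, since the paper does not describe the timing in this detail. Under them, the distance a product travels during detection gives a lower bound on the camera-to-gate spacing.

```python
BELT_SPEED_CM_S = 5.0  # from the text

# Average detection times from Table 2, in seconds.
AVG_DETECTION_TIME_S = {"sample 1": 8.0, "sample 2": 6.0, "sample 3": 10.0}


def travel_during_detection_cm(belt_speed_cm_s, detection_time_s):
    """Distance covered on the belt while the matching is still running."""
    return belt_speed_cm_s * detection_time_s


travel_cm = {
    name: travel_during_detection_cm(BELT_SPEED_CM_S, t)
    for name, t in AVG_DETECTION_TIME_S.items()
}
# The slowest sample sets the minimum spacing (under the assumptions above).
min_camera_to_gate_cm = max(travel_cm.values())
print(travel_cm, min_camera_to_gate_cm)
```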

Table 3.

The accuracy of the detection of the samples.

| Product sample | No. of experiments | No. of successful detections |
|---|---|---|
| Sample no. 1 | 100 | 99 |
| Sample no. 2 | 100 | 99 |
| Sample no. 3 | 100 | 98 |
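The accuracy and error figures follow directly from the counts in Table 3. The short calculation below reproduces them; the variable and function names are illustrative only.

```python
# (number of experiments, number of successful detections) from Table 3.
RESULTS = {
    "sample 1": (100, 99),
    "sample 2": (100, 99),
    "sample 3": (100, 98),
}


def accuracy_percent(trials, successes):
    """Share of successful detections, in percent."""
    return 100.0 * successes / trials


accuracy = {name: accuracy_percent(n, k) for name, (n, k) in RESULTS.items()}
error = {name: 100.0 - acc for name, acc in accuracy.items()}
max_error = max(error.values())

total_trials = sum(n for n, _ in RESULTS.values())
total_successes = sum(k for _, k in RESULTS.values())
overall_accuracy = accuracy_percent(total_trials, total_successes)

print(accuracy, max_error, round(overall_accuracy, 2))
```

The largest per-sample error is 2%, consistent with the bound stated in the abstract, and the pooled accuracy over all 300 tests is about 98.67%.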

The developed sorting system detects the target product from a captured image at any position or orientation. One cause of the observed errors is a lack of clarity in the captured image, which makes the edge of the product indistinct. Vibration of the camera or the product can be considered one of the main sources that reduce the stability of the captured images used in the matching process.

In this work, other edge detection techniques were explored to verify the selected technique and to show its advantages over the alternatives. Figures 10–12 show the sorting results based on the Prewitt, Roberts, and Sobel techniques. The results show errors in detecting the desired product. The Prewitt method finds the product but not in the correct position, which leads to an inaccurate sorting process and affects the overall performance of the system, as shown in Figure 10.

Figure 10.

The matching of Prewitt edge method: (a) product image captured; (b) template image.

Figure 11.

The matching of Roberts edge method: (a) product image captured; (b) template image.

Figure 12.

The matching of Sobel edge method: (a) product image captured; (b) template image.

On the other hand, the Roberts and Sobel methods cannot find the desired product and report an incorrect position, so the sorting gate was opened at the wrong time, as shown in Figures 11 and 12. The reason for the incorrect detection is that the detected edges do not cover the entire outer edge of the template for the desired product, so the image processing program produces wrong pixel boundaries in the template and in the captured product image. Based on all the obtained results, the Canny method used in this system performed best: it finds the complete outer edge of the product and yields high-performance sorting.
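For reference, the three alternative operators differ mainly in the small kernels they use to estimate the gradient. The sketch below lists the standard kernels and evaluates them on a vertical step edge; it is a generic illustration and not the authors' comparison code, and the helper names are hypothetical. Canny additionally applies Gaussian smoothing, non-maximum suppression, and hysteresis thresholding on top of a gradient estimate of this kind.

```python
import math

# Standard horizontal-gradient kernels; the vertical ones are their transposes
# (Sobel, Prewitt) or the second diagonal kernel (Roberts).
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
PREWITT_X = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
ROBERTS_X = [[1, 0], [0, -1]]
ROBERTS_Y = [[0, 1], [-1, 0]]


def transpose(kernel):
    """Swap rows and columns of a kernel."""
    return [list(row) for row in zip(*kernel)]


def correlate_at(image, kernel, top, left):
    """Sum of kernel * image over the window whose top-left corner is given."""
    return sum(
        kernel[i][j] * image[top + i][left + j]
        for i in range(len(kernel))
        for j in range(len(kernel[0]))
    )


def edge_strength(image, kx, ky, top, left):
    """Gradient magnitude from a pair of directional kernels."""
    gx = correlate_at(image, kx, top, left)
    gy = correlate_at(image, ky, top, left)
    return math.hypot(gx, gy)


# 4x4 image with a vertical step edge between columns 1 and 2.
step_image = [[0, 0, 1, 1] for _ in range(4)]

sobel = edge_strength(step_image, SOBEL_X, transpose(SOBEL_X), 0, 0)
prewitt = edge_strength(step_image, PREWITT_X, transpose(PREWITT_X), 0, 0)
roberts = edge_strength(step_image, ROBERTS_X, ROBERTS_Y, 0, 1)
print(sobel, prewitt, roberts)
```

The operators respond to the same edge with different strengths, so a fixed threshold keeps different sets of edge pixels for each of them.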

3. Conclusions and Remarks

In this paper, a new automated sorting system was designed and implemented that can sort any product in real time. The system can detect the product at any position or orientation and determine the edge of the target product. The template image is prepared outside the processing loop of the system and changes with the type of product. The processing time varies: it is a function of the size of the captured image and the volume of the detected product. One disadvantage that increases the detection time and decreases the accuracy is the low resolution of the camera used. Capturing images at a high conveyor belt speed increases the sorting error because the image is distorted and the matching process needs sufficient time for detection. The main advantage of the new sorting system is its ability to sort products of different shapes, even complex ones. Complex-shaped products have sharp edges and many curves, for example, sample no. 3 and gears. The limitation of the system arises when products have similar outer edges, for example, when they are all circular or all square. Another important point is the illumination, which should have a suitable intensity and distance from the conveyor belt so that the image is captured clearly.

One of the most important points to be addressed in detail in subsequent research is how to sort products that are identical in their external borders but contain holes and cavities of different locations and sizes. This kind of sorting requires studying the internal details of the object or product, such as the locations and sizes of the holes, and sorting it in any orientation.

Data Availability

No data were used to support the study.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

1. Iqbal S., Gopal A., Sankaranarayanan P., Nair A. B. Estimation of size and shape of citrus fruits using image processing for automatic grading. Proceedings of the 2015 3rd International Conference on Signal Processing, Communication and Networking (ICSCN); 2015; Chennai, India. IEEE.

2. Phuoc Tho T., Truong Thinh N. Design and development of the sorting system based on robot. Proceedings of the 2015 15th International Conference on Control, Automation and Systems (ICCAS); 2015; Busan, Korea. IEEE.

3. Phuoc Tho T., Truong Thinh N., Huy Bich N. Design and development of the vision sorting system. Proceedings of the 2016 3rd International Conference on Green Technology and Sustainable Development (GTSD); 2016; Kaohsiung, Taiwan. IEEE.

4. Oladapo B. I., Balogun V. A., Adeoye A. O. M., et al. Model design and simulation of automatic sorting machine using proximity sensor. Engineering Science and Technology, an International Journal. 2016;19. doi: 10.1016/j.jestch.2016.04.007.

5. Bagade A. A., Shete S. S., Khavale D. D. Object sorting on conveyor belt by using MATLAB software. Department of Mechanical Engineering. 2017;2.

6. Soans R. V., Pradyumna G. R., Fukumizu Y. Object sorting using image processing. Proceedings of the 2018 3rd IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT); 2018; Bangalore, India. IEEE.

7. Zhang Y., Cheng W. Vision-based robot sorting system. IOP Conference Series: Materials Science and Engineering. 2019;592. doi: 10.1088/1757-899x/592/1/012154.

8. Yadav P., Uikey M., Lonkar P., Kayande S., Amit M. Sorting of objects using image processing. Proceedings of the 2020 IEEE International Conference for Innovation in Technology (INOCON); 2020; Bengaluru, India. IEEE.

9. Dinesh R., Bharadwaj N. K. Possible approaches to arecanut sorting/grading using computer vision: a brief review. Proceedings of the 2017 International Conference on Computing, Communication and Automation (ICCCA); 2017; Greater Noida, India. IEEE.

10. Paulraj G., Sathish S. H., Thakur A. Automated Municipal Solid Waste Sorting for Recycling Using a Mobile Manipulator. New York, NY, USA: American Society of Mechanical Engineers (ASME); 2016.

11. Schlüter M., Niebuhr C., Lehr J., Krüger J. Vision-based identification service for remanufacturing sorting. Procedia Manufacturing. 2018;21:384–391. doi: 10.1016/j.promfg.2018.02.135.

12. Ali M. A. H., Lun A. K. A cascading fuzzy logic with image processing algorithm-based defect detection for automatic visual inspection of industrial cylindrical object’s surface. International Journal of Advanced Manufacturing Technology. 2019;102:81–94. doi: 10.1007/s00170-018-3171-7.

13. Kuts V., Otto T., Tähemaa T., Bukhari K., Pataraia T. Adaptive Industrial Robots Using Machine Vision. New York, NY, USA: American Society of Mechanical Engineers (ASME); 2018.

14. Fadhil Al Hinai R., Abdelhamied Farh A. F. Building color sensor based on image processing technology for automatic color object sorting using PLC system. Proceedings of the 2019 International Conference on Digitization (ICD); 2019; Sharjah, United Arab Emirates. IEEE.

15. Injante H., Gutiérrez E., Vinces L. A vibratory conveying system for automatic sorting of Lima beans through image processing. Proceedings of the 2020 IEEE XXVII International Conference on Electronics, Electrical Engineering and Computing (INTERCON); 2020; Lima, Peru. IEEE.

16. Minhuz Uddin Ahmed A. J. M., Uddin A., Rahman A. Developing an algorithm for sequential sorting of discrete and connected characters using image processing of multi-line license plates. Array. 2021;10. doi: 10.1016/j.array.2021.100063.

17. Zhang Z., Lu Y., Lu R. Development and evaluation of an apple infield grading and sorting system. Postharvest Biology and Technology. 2021;180. doi: 10.1016/j.postharvbio.2021.111588.

18. Fermo I. R., Cavali T. S., Bonfim-Rocha L., Srutkoske C. L., Flores F. C., Andrade C. M. G. Development of a low-cost digital image processing system for oranges selection using hopfield networks. Food and Bioproducts Processing. 2021;125(2021):181–192. doi: 10.1016/j.fbp.2020.11.012.

19. Tejaswini S., Spoorthi M. P., Sandeep B. S. Real time object sorting with 3D parameter using image processing and robotic ARM system. SN Computer Science. 2021;2:162–167. doi: 10.1007/s42979-021-00527-6.

20. Ilas C., Paturca S., Ilas M. Real-time image processing algorithms for object and distances identification in mobile robot trajectory planning. Journal of Control Engineering and Applied Informatics. 2011;13(2):32–37.

21. Benicky P., Jurišica L., Vitko A. Motion data preprocessing in robotic applications. Journal of Control Engineering and Applied Informatics. 2015;17(1):3–11.

22. Alexander A., Thathan M. Design and development of digital control strategy for solar photovoltaic inverter to improve power quality. Journal of Control Engineering and Applied Informatics. 2014;16(4):20–29.

23. Pratt W. K. Digital Image Processing. 1994;19(3).

24. Ji J., Chen G., Sun L. A novel Hough transform method for line detection by enhancing accumulator array. Pattern Recognition Letters. 2011;32. doi: 10.1016/j.patrec.2011.04.011.

25. Xu Z., Shin B. S., Klette R. A statistical method for line segment detection. Computer Vision and Image Understanding. 2015;138:61–73. doi: 10.1016/j.cviu.2015.05.008.

26. Shin Z., Shin B. A statistical method for peak localization in hough space by analysing butterflies. Image and Video Technology. 2014;8333. doi: 10.1007/978-3-642-53842-1_10.

27. Lee J. P., Park J. P., Jin M. H., et al. A study on the modified Hough algorithm for image processing in weld seam tracking. Journal of Mechanical Science and Technology. 2015;29. doi: 10.1007/s12206-015-1033-x.

28. Chaudhuri P., Chaudhuri B. B. A survey of hough transform. Pattern Recognition. 2015;48:993–1010. doi: 10.1016/j.patcog.2014.08.027.

29. Dagao Duan D., Meng Xie M., Qian Mo Q., Zhongming Han Z., Yueliang Wan Y. An improved Hough transform for line detection. Proceedings of the 2010 International Conference on Computer Application and System Modeling (ICCASM 2010); 2010; Taiyuan, China.

30. Aggarwal N., Karl W. C. Line detection in images through regularized hough transform. IEEE Transactions on Image Processing. 2006;15. doi: 10.1109/TIP.2005.863021.

31. Wang Z., Song Q., Yang L., Jia W., Yang L. Matching method combined hough transform and RANSAC for spectrometer wavelength calibration. Proceedings of the 2017 13th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD); 2017; Guilin, China.

32. Ravankar A., Ravankar A. A., Hoshino Y., Emaru T., Kobayashi Y. On a hopping-points SVD and hough transform-based line detection algorithm for robot localization and mapping. International Journal of Advanced Robotic Systems. 2016;13. doi: 10.5772/63540.

33. Jošth R., Dubská M., Herout A., Havel J. Real-time line detection using accelerated high-resolution hough transform. Image Analysis. 2011;6688. doi: 10.1007/978-3-642-21227-7_73.

34. Ganokratanaa T., Ketcham M., Sathienpong S. Real-time lane detection for driving system using image processing based on edge detection and hough transform. 2013. https://www.semanticscholar.org/paper/REAL-TIME-LANE-DETECTION-FOR-DRIVING-SYSTEM-USING-Ganokratanaa-Ketcham/77b4240001a6965848ffd0bb7c436c91aa9a7ecc
