Abstract

Objectives

Transparent reporting of clinical trials is essential to assess the risk of bias and translate research findings into clinical practice. While existing studies have shown that deficiencies are common, detailed empirical and field-specific data are scarce. Therefore, this study aimed to examine current clinical trial reporting and transparent research practices in sports medicine and orthopaedics.

Setting

Exploratory meta-research study on reporting quality and transparent research practices in orthopaedics and sports medicine clinical trials.

Participants

The sample included clinical trials published in the top 25% of sports medicine and orthopaedics journals over 9 months.

Primary and secondary outcome measures

Two independent reviewers assessed pre-registration, open data and criteria related to scientific rigour, like randomisation, blinding, and sample size calculations, as well as the study sample, and data analysis.

Results

The sample included 163 clinical trials from 27 journals. While the majority of trials mentioned rigour criteria, essential details were often missing. Sixty per cent (95% confidence interval (CI) 53% to 68%) of trials reported sample size calculations, but only 32% (95% CI 25% to 39%) justified the expected effect size. Few trials indicated the blinding status of all main stakeholders (4%; 95% CI 1% to 7%). Only 18% (95% CI 12% to 24%) included information on randomisation type, method and concealed allocation. Most trials reported participants’ sex/gender (95%; 95% CI 92% to 98%) and information on inclusion and exclusion criteria (78%; 95% CI 72% to 84%). Only 20% (95% CI 14% to 26%) of trials were pre-registered. No trials deposited data in open repositories.

Conclusions

These results will aid the sports medicine and orthopaedics community in developing tailored interventions to improve reporting. While authors typically mention blinding, randomisation and other factors, essential details are often missing. Greater acceptance of open science practices, like pre-registration and open data, is needed. As these practices have been widely encouraged, we discuss systemic interventions that may improve clinical trial reporting.

Keywords: clinical trials, statistics & research methods, sports medicine, rehabilitation medicine, orthopaedic & trauma surgery, medical education & training

Strengths and limitations of this study.

The present study provides an in-depth assessment of clinical trial reporting quality, and utilisation of transparent research practices in a recent sample of published clinical trials on orthopaedics and sports medicine.

A comprehensive set of outcome parameters was assessed, covering fundamental aspects like scientific rigour, the study sample and data analysis but also the utilisation of pre-registration and open science practices.

All assessments were performed by two independent reviewers and disagreements were resolved by consensus.

The cross-sectional design and exploratory nature of the present study cannot provide information about cause–effect relationships. The odds ratios (ORs) calculated in the present study were exploratory post-hoc calculations.

The sample consisted of the top 25% of sports medicine and orthopaedics journals, hence our findings may not be generalisable to journals that are not indexed by PubMed, lower tier journals or non-English journals.

Introduction

The overarching goal of medical research is to improve healthcare for patients, which requires the biomedical community to translate study outcomes into clinical practice.1 Clinical trials are central to this process, as properly conducted trials reduce the risk of bias and increase the likelihood that results about new treatments will be trustworthy, reproducible and generalisable.2 3 Clinical trials must be properly designed, conducted and reported4 to facilitate translation. Poorly designed and conducted trials may not be trustworthy or reproducible. This undermines public trust in biomedical research and raises concerns about whether the trial costs and patient risks were justified.5 6 Poor reporting makes it difficult to distinguish between trials with and without a high risk of bias.

To improve clinical trial reporting, the Consolidated Standards of Reporting Trials (CONSORT) guidelines7 8 have been recommended by the International Committee of Medical Journal Editors (ICMJE) and widely disseminated by the EQUATOR network.9 10 While reporting has improved over time, major deficiencies that can impair translation are still common.11 12 These previous studies show that details needed to assess the risk of bias were missing from many published trials. More than half of all trials failed to address allocation concealment, and almost one-third of the studies did not address blinding of participants and personnel.12 Similarly, among randomised controlled trials published in the top five orthopaedics journals, 60% failed to address the blinding status of the participants and 58% did not specify the number of participants included in the final analysis.13 However, these results are available only for a relatively narrow set of criteria, and it is unclear whether they still apply to recently published literature and to a broader range of journals.

Orthopaedics and sports medicine researchers have joined efforts to improve study design and reporting. Newly formed societies14 15 and editorial series16 focus on improving research quality in sports medicine and orthopaedics. These efforts are urgently needed, as only 1% of the studies in high-impact orthopaedic journals reported all 10 criteria needed for risk of bias assessment.13 In 42% of the papers, risk of bias could not be assessed due to incomplete reporting.13 Incomplete reporting of exercise interventions17 makes it impossible to implement interventions in clinical practice or to assess the appropriateness of the control intervention.18

In sports medicine-related fields, meta-researchers suggested that scientists may be using questionable research practices, such as those described in table 1, after observing overinflated effect sizes19 and an unreasonably high number of papers that support the study hypothesis.20 Comprehensive reporting may prevent biases like selective reporting, selection bias, attrition bias, outcome switching or wrong sample size bias, or make them easier to detect (see table 1 for selected definitions). However, earlier studies have shown that reporting deficiencies are still common in orthopaedics13 and general medical journals.12 21 Yet, available studies either examine older publications, assessed a small number of criteria or are not specific to orthopaedics and sports medicine. Comprehensive data on current reporting practices of orthopaedics and sports medicine clinical trials are lacking.

Table 1.

Terminology and concepts. Created by the authors

| Concept | Definition |
|---|---|
| Questionable research practices | Questionable research practices are defined as ‘Design, analytical or reporting practices that have been questioned because of the potential for the practice to be employed with the purpose of presenting biased evidence in favour of an assertion’.70 |
| Selective reporting/cherry picking | The decision about whether to publish a study or parts of a study is based on the direction or statistical significance of the results.71 72 Pre-registration and Registered Reports may prevent selective reporting,26 73 which is also known as cherry picking. |
| Publication bias | The decision about whether to publish research findings depends on the strength and direction of the findings.74 The odds of publication are nearly four times higher among clinical trials with positive findings, compared with trials with negative or null findings.75 |
| Outcome reporting bias | Only particular outcome variables are included in publications and decisions about which variables to include are based on the statistical significance or direction of the results.71 Outcomes that are statistically significant have higher odds of being fully reported than non-significant outcomes.76 77 |
| Attrition bias | Attrition refers to reductions in the number of participants throughout the study due to withdrawals, dropouts or protocol deviations. Attrition bias occurs when there are systematic differences between people who leave the study and those who continue.78 For example, a trial shows no differences between two treatments. In one group, however, half of the participants dropped out because they underwent surgery due to worsening symptoms. |
| Null hypothesis statistical testing (NHST) | NHST is originally based on theories of Fisher and Neyman-Pearson. The null hypothesis is rejected or accepted depending on the position of an observed value in a test distribution. While NHST is standard practice in many fields, the International Committee of Medical Journal Editors warns against the inappropriate use and sole reliance on NHST due to several shortcomings of using this approach inappropriately.79 |
| p-Hacking | Describes the process of analysing the data in multiple ways until statistically significant results are found. |
| HARKing | HARKing, or hypothesising after results are known, is defined as presenting a post-hoc hypothesis as if it were an a priori hypothesis.80 |

Therefore, this meta-research study examined reporting among clinical trials published in the top 25% of sports medicine and orthopaedics journals as determined by Scientific Journal Rank. Our objective was to assess the prevalence of reporting for selected criteria, including pre-registration, open data and reporting of randomisation, blinding, sample size calculations, data analysis and the flow of participants through the study. Meta-research data on clinical trial design, conduct and reporting will help researchers in sports medicine to implement targeted measures to improve trial design and reporting.

Methods

Protocol pre-registration

The study was pre-registered on the Open Science Framework (RRID:SCR_003238) and all generated data were made openly available.22 Additional details regarding sample selection and screening, data abstraction, a sample size calculation and data for each included study can be found in the online supplemental materials.


Sample selection and screening

We systematically examined clinical trials published in the top 25% of orthopaedics and sports medicine journals over 9 months. This sampling strategy provides an overview of practices in the field, particularly among journals whose articles receive the most attention. The large number of journals included ensures that findings are not driven by practices or policies of individual journals. Journals in the orthopaedics and sports medicine category were selected based on the Scimago Journal Rank indicator23 (online supplemental methods). The top 25% of journals (n=65) were entered into the PubMed search with article type (clinical trial) and publication date (2019/12:2020/08) filters. The search was run on 16 September 2020. All articles (n=175 from 27 journals) were uploaded into Rayyan (RRID:SCR_017584)24 to screen titles and abstracts.
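The search described above can be sketched as a query string. This is a minimal illustration only: it assumes that the article type and publication date filters correspond to the PubMed field tags `[Publication Type]` and `[Date - Publication]`, and the journal names are placeholders rather than journals from the sample (the actual list is in the online supplemental methods). The function name `build_pubmed_query` is hypothetical.

```python
def build_pubmed_query(journals, start="2019/12", end="2020/08"):
    """Combine journal names with article-type and publication-date filters."""
    # One clause per journal, joined with OR and restricted to the journal field.
    journal_clause = " OR ".join(f'"{name}"[Journal]' for name in journals)
    return (
        f"({journal_clause}) "
        "AND clinical trial[Publication Type] "
        f"AND {start}:{end}[Date - Publication]"
    )


# Placeholder names only (assumption); not the journals used in the study.
example_journals = ["Journal A", "Journal B"]
query = build_pubmed_query(example_journals)
print(query)
```

Building the query programmatically keeps the journal list, article type and date window in one place, which makes the search easier to document and rerun.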

Inclusion and exclusion criteria

Two reviewers (RS, GL) screened titles and abstracts to exclude articles that were obviously not clinical trials, as defined by the ICMJE. The ICMJE defines a clinical trial as any research project that ‘prospectively assigns people or a group of people to an intervention, with or without concurrent comparison or control groups, to study the relationship between a health-related intervention and a health outcome’.9 Two independent reviewers (RS, GL, RP) then performed full-text screening. All papers meeting the ICMJE clinical trial definition were included, whereas articles that did not meet the definition were excluded. Studies looking at both health-related and non-health-related outcomes were included but data abstraction focused on health-related outcomes only. Disagreements were resolved by consensus.

Data abstraction

Two independent assessors (RS, GL, RP) reviewed each article and its supplemental files to evaluate the reporting of pre-specified criteria and extracted data using preformatted Excel spreadsheets. Table 2 presents the main criteria that were abstracted and a reason for their selection. The transparency and rigour criteria are based on CONSORT criteria for methods and results reporting.7 8 We also abstracted additional open science criteria, focusing on the open access status of the trial publication, whether a data availability statement was included and whether data were deposited in a public repository.25 The abstraction protocol was deposited on the Open Science Framework (RRID:SCR_003238) at https://osf.io/q8b46/.

Table 2.

Criteria for reporting and transparent research practices. The table shows specific questions used to assess each outcome criterion and provides a brief justification for why each criterion was selected. Created by the authors

| Category | Assessment | Rationale and context |
|---|---|---|
| Sample size calculation | Was an a priori sample size calculation performed? What type of sample size calculation was performed? Did the authors provide a justification for the expected effect size? | |
| Randomisation and concealed allocation | Did the authors address whether randomisation was used? If so, were the randomisation type and method mentioned? Were the following details of the allocation concealment procedure addressed? | |
| Blinding | Did the article include a statement on blinding? Was the blinding status of each of the major stakeholders mentioned (participants, healthcare providers, outcome assessors, data analysts)? Was each stakeholder group blinded? | |
| Flow of participants | Were the inclusion and exclusion criteria clearly stated? Did the authors define how many participants were excluded at each phase of the study and list reasons for exclusion? Did the authors present this information in a flow chart? | |
| Data analysis | Was a study hypothesis presented and a primary outcome specified? Was the hypothesis supported or rejected? If null hypothesis statistical testing was performed, were exact p values, df and the test statistics presented? Were standardised effect sizes and their precision reported? | |
| Data visualisation | Were bar graphs used to visualise continuous data? | |
| Intervention reporting | What type of intervention was performed (eg, exercise, physical therapy, surgery)? For exercise interventions: | |
| Transparency criteria | Was the study registered or pre-registered? Was a data availability statement included? Were the data publicly available? Was the study openly accessible? | |

Protocol deviations

For trials with exercise interventions, we assessed the frequency, intensity and volume of exercise for experimental and control interventions. The protocol was modified if the control intervention did not involve exercise. Control interventions were rated as fully reported if the frequency, content and duration were described. Control groups that received no intervention (eg, wait-and-see) were rated as fully reported if the activity status or number of other treatments were monitored.

Trial registration statement assessments were amended to determine whether trials were registered prospectively or retrospectively. Two abstractors (RS, MP) assessed each trial registration. Trials were considered pre-registered if their registration was completed before the first participant was enrolled. Otherwise, the trial was classified as retrospectively registered. If the primary outcome was changed after the study began, the trial was classified as retrospectively registered.
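The classification rule for trials with a registration statement can be written as a short decision function. This is an illustrative sketch, not the abstraction tool used in the study: the function name, argument names and the strict "earlier than" date comparison are assumptions, and the "unclear" category mirrors trials for which the available information was insufficient.

```python
from datetime import date
from typing import Optional


def classify_registration(
    registered_on: Optional[date],
    first_enrolment: Optional[date],
    primary_outcome_changed: bool = False,
) -> str:
    """Classify a trial that has a registration statement."""
    # Without both dates, the timing of registration cannot be determined.
    if registered_on is None or first_enrolment is None:
        return "unclear"
    # A primary outcome changed after the study began counts as retrospective.
    if primary_outcome_changed:
        return "retrospective"
    # Pre-registered only if registration preceded the first enrolment
    # (strict comparison is an assumption for same-day cases).
    if registered_on < first_enrolment:
        return "pre-registered"
    return "retrospective"


print(classify_registration(date(2018, 3, 1), date(2018, 6, 1)))
print(classify_registration(date(2018, 3, 1), date(2018, 6, 1), True))
```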

Statistical analysis

This exploratory study assessed the prevalence of reporting for selected criteria in sports medicine and orthopaedics clinical trials. Results are presented as the percentage of trials reporting each outcome measure, with a 95% confidence interval (CI).
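As an illustration of how a prevalence estimate and its CI can be obtained, the sketch below uses the normal approximation (Wald interval). The interval method is an assumption, as it is not specified in the text; the counts used in the example (98 of 163 trials reporting a sample size calculation) are taken from the Results.

```python
from math import sqrt
from statistics import NormalDist


def proportion_ci(count, total, level=0.95):
    """Proportion with a normal-approximation (Wald) confidence interval."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% CI
    p = count / total
    half_width = z * sqrt(p * (1 - p) / total)
    # Clip to the valid range for a proportion.
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


p, lower, upper = proportion_ci(98, 163)
print(f"{p:.0%} (95% CI {lower:.0%} to {upper:.0%})")  # 60% (95% CI 53% to 68%)
```

The Wald interval is simple but can perform poorly for proportions close to 0% or 100%; alternatives such as the Wilson interval may be preferable in those cases.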

Odds ratios (ORs) and their 95% CIs were calculated to examine the relationship between the completeness of reporting and pre-registration, the use of flow charts or the presence of sample size calculations. ORs were interpreted as unclear if the CI included 1. These analyses were not pre-registered.
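A minimal sketch of an OR calculation from a 2×2 table is shown below. It assumes the standard log (Woolf) method for the CI, which is not stated in the text, and the cell counts in the example are hypothetical rather than data from this study.

```python
from math import exp, log, sqrt
from statistics import NormalDist


def odds_ratio_ci(a, b, c, d, level=0.95):
    """OR and CI for a 2x2 table.

    a, b: group 1 with / without the reporting item
    c, d: group 2 with / without the reporting item
    All cells must be non-zero for this method.
    """
    z = NormalDist().inv_cdf(0.5 + level / 2)
    odds_ratio = (a * d) / (b * c)
    se_log_or = sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = exp(log(odds_ratio) - z * se_log_or)
    upper = exp(log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


def interpret(lower, upper):
    """Apply the rule that an OR is unclear if its CI includes 1."""
    return "unclear" if lower <= 1 <= upper else "clear"


# Hypothetical counts, for illustration only.
or_, or_lower, or_upper = odds_ratio_ci(20, 10, 10, 20)
print(f"OR {or_:.1f}; 95% CI {or_lower:.1f} to {or_upper:.1f}; {interpret(or_lower, or_upper)}")
```

Tables with a zero cell would need a continuity correction or an exact method, which this sketch does not cover.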

Sample size calculation

This exploratory study does not require formal sample size calculations. However, we adhered to conventional sample size recommendations for exploratory designs and performed a precision-based sample size calculation to obtain rough estimates of relevant sample sizes (online supplemental methods). Depending on the assumptions, a required sample size of 124 to 203 trials was estimated.
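For readers unfamiliar with precision-based calculations, the following sketch shows one common form: the number of trials needed to estimate a proportion within a chosen margin of error. The formula choice, expected proportion and margin are illustrative assumptions and are not the assumptions used to obtain the range reported above (see the online supplemental methods for those).

```python
from math import ceil
from statistics import NormalDist


def precision_sample_size(expected_proportion, margin, level=0.95):
    """Sample size so that the CI half-width for a proportion is at most `margin`."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    variance = expected_proportion * (1 - expected_proportion)
    return ceil(z**2 * variance / margin**2)


# Hypothetical inputs: expected prevalence of 50% (the most conservative
# choice) and a margin of error of 7 percentage points.
print(precision_sample_size(0.5, 0.07))  # 196
```

Narrower margins or prevalences closer to 50% increase the required sample size, which is why such calculations yield a range under different assumptions.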

Patient and public involvement

Patients and the public were not involved in the design, conduct, reporting or dissemination plans of our research.

Results

One hundred and seventy-five articles were screened, and 168 articles were reviewed from 27 sports medicine and orthopaedics journals (online supplemental figure S1, online supplemental table S1). Eleven articles were excluded because they did not meet the ICMJE clinical trial criteria. One extended conference abstract was excluded because it was not a full-length research article. Analyses included the remaining 163 papers.

Rigour and sample criteria

Sample size calculations

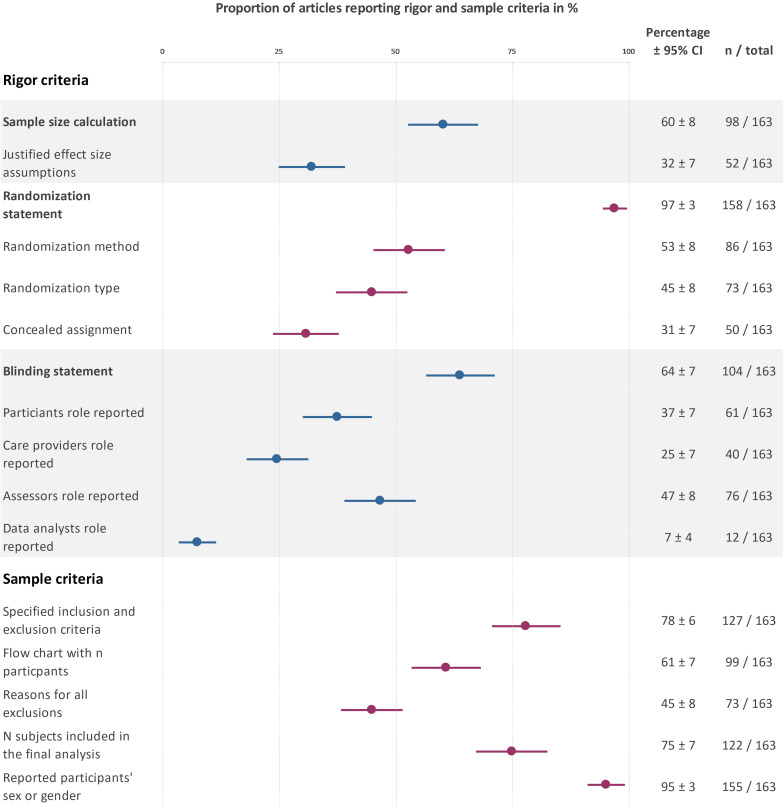

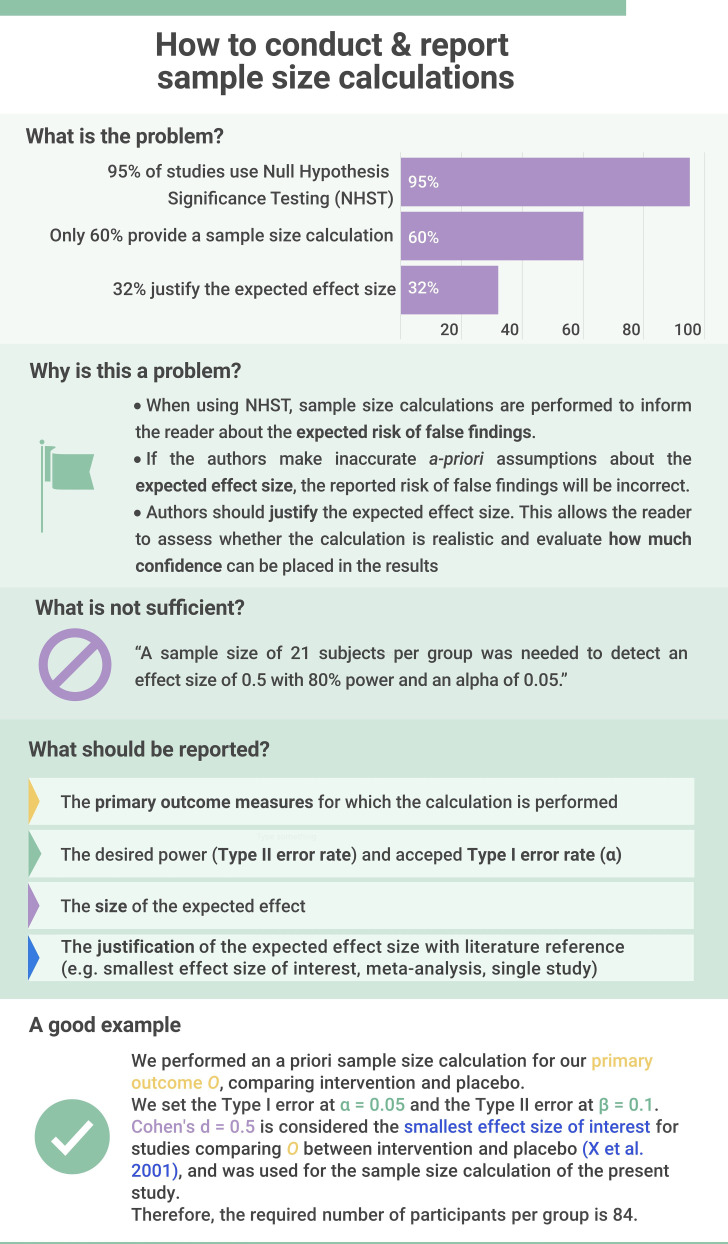

The reporting prevalence of sample size calculations and related results can be found in figure 1. In trials not reporting a priori sample size calculation (figure 1), 2% (95% CI 0% to 5%; n=4) reported that no sample size calculation was performed because the study was an exploratory pilot study. Among trials reporting sample size calculations (n=98), 53% (95% CI 43% to 63%; n=52) included a justification for the expected effect size. The remaining trials either presented no justification (33%; 95% CI 23% to 42%; n=32) or used arbitrary effect size thresholds (14%; 95% CI 7% to 21%; n=14). Almost all sample size calculations were based on statistical power (93%; 95% CI 88% to 98%; n=96). Two sample size calculations were based on precision (2%; 95% CI 0% to 5%). No calculations were based on Bayes methods.

Figure 1.

Reporting prevalence for rigour and sample criteria. This plot displays the percentage of trials that addressed each criterion. For information on the actual randomisation or blinding status, please refer to the text. The different coloured data points are for better visual differentiation of each subcategory. Created by the authors.

Randomisation and allocation concealment

The reporting prevalence of randomisation, allocation concealment and related results can be found in figure 1. In trials not addressing randomisation (figure 1), two trials (1%; 95% CI 0% to 3%) were not randomised, and five trials did not mention randomisation (3%; 95% CI 0% to 6%).

Complete information on the allocation concealment procedure was provided by 8% (95% CI 4% to 12%; n=13) of the trials (defined as reporting who generated the randomisation sequence, and who enrolled participants and assigned them to interventions). Some of this information was available in 23% (95% CI 16% to 29%; n=37) of trials, and 69% (95% CI 62% to 76%; n=113) did not report any information. Few studies reported at least some information on all three factors needed to assess randomisation and allocation concealment (randomisation type, method and allocation concealment; 18%; 95% CI 12% to 24%; n=30).

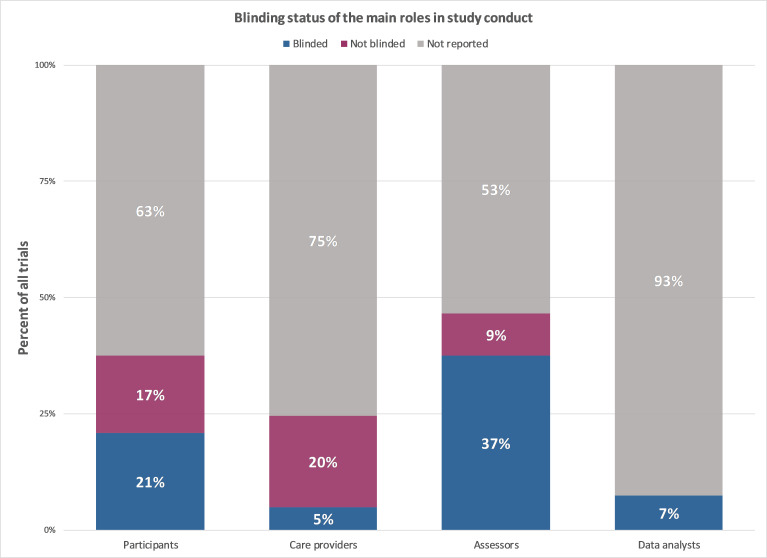

Blinding

The reporting prevalence of statements on blinding of different stakeholders can be found in figure 1. The actual blinding status of included trials is visualised in figure 2. Two-thirds of the trials addressed blinding (figure 2). Among trials that addressed blinding (figure 1), 81% (95% CI 73% to 88%; n=84) used blinding, while 19% (95% CI 12% to 27%; n=20) were not blinded. Only 4% (95% CI 1% to 7%; n=7) of all trials addressed the blinding status of all four stakeholder groups (figure 2). Trials were most likely to address the blinding status of the outcome assessors and the participants. The blinding status of data analysts is typically unreported.

Figure 2.

Blinding status of the main stakeholder groups across all clinical trials (n=163). Created by the authors.

Sample-related criteria

The reporting prevalence of criteria related to the study sample can be found in figure 1. Approximately three-quarters of the trials reported the inclusion and exclusion criteria and provided complete information on the number of participants at enrolment, after enrolment and included in data analysis (figure 1). Fewer trials used a flow chart to illustrate the number of included and excluded participants at each stage. Among trials that did not report the reasons for all exclusions after enrolment (figure 1), 17% (95% CI 11% to 22%; n=24/90) reported the reasons for some exclusions and 33% (95% CI 26% to 41%; n=41/90) did not report any information.

In trials that stated participants’ sex or gender (figure 1), a median of 51% (IQR 27%–71%) of participants were women in the group with the highest proportion of women, versus 49% (IQR 22%–66%) in the group with the lowest proportion of women.

Intervention criteria

The most frequent intervention type was exercise (44%; 95% CI 37% to 52%; n=72), followed by surgery (26%; 95% CI 19% to 32%; n=42). Diet (6%; 95% CI 2% to 9%; n=9), physical therapy (5%; 95% CI 2% to 8%; n=8), pharmacological interventions (4%; 95% CI 1% to 7%; n=7) and manual therapy (1%; 95% CI 0% to 2%; n=1) were uncommon. Fifteen per cent (95% CI 9% to 20%; n=24) of studies used other interventions.

We next examined reporting of details needed to assess or implement exercise interventions. Sixty-two per cent (95% CI 50% to 73%; n=42) of trials with exercise interventions monitored adherence or compliance, one trial (1%; 95% CI 0% to 4%) reported that adherence was not monitored, and 37% (95% CI 25% to 48%; n=25) of trials did not mention intervention adherence or compliance. All trials reported at least some information about the experimental exercise intervention, and most trials provided complete information (table 2) (83%; 95% CI 75% to 92%; n=60). Fewer trials reported complete information for the control interventions (63%; 95% CI 51% to 74%; n=45). Five trials did not provide any information about the control intervention (7%; 95% CI 1% to 13%).

Data analysis and transparency criteria

Hypotheses and outcome measures

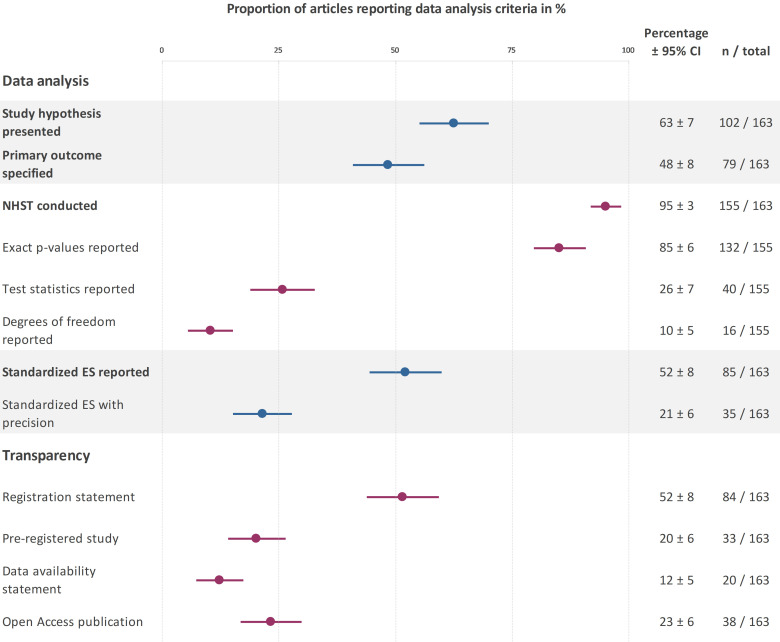

The reporting prevalence of the study hypotheses and outcome measures can be found in figure 3. Nearly half of the articles specified a primary outcome and almost two-thirds of the articles presented a hypothesis (figure 3). Among clinical trials that reported a hypothesis (figure 3), 61% (95% CI 53% to 68%; n=62) supported the main hypothesis, while 39% of trials (95% CI 32% to 47%; n=40) did not support the main hypothesis.

Figure 3.

Reporting prevalence for data analysis and transparency criteria. This plot displays the percentage of trials that addressed each criterion. Created by the authors. ES, effect size; NHST, null hypothesis statistical testing.

Statistical reporting

Figure 3 shows the reporting prevalence of criteria related to statistical reporting and data visualisation. Almost all studies used NHST (figure 3). While most trials reported exact p values, few reported test statistics and degrees of freedom (df). Approximately half of the trials reported standardised effect sizes but only 21% included the precision of the effect size estimates. One study reported Bayesian statistics (1%; 95% CI 0% to 2%).

Data visualisation

Bar graphs were used to display continuous data in 21% (95% CI 15% to 27%; n=34) of trials.

Transparency

The reporting prevalence of transparency criteria is shown in figure 3. Most of the studies with registration statements (figure 3) were registered in ClinicalTrials.gov (n=52), followed by the Australian New Zealand Clinical Trials Registry (n=9), International Standard Randomized Controlled Trial Number Register (n=4) and other regional clinical trials registries (n=9). Less than half of the registered trials, and 20% of all trials, were pre-registered. The remaining trials with registration statements were registered retrospectively (58%; 95% CI 48% to 69%; n=49/84). This included six prospectively registered trials where the primary outcome was changed after data collection started. Two studies with registration statements did not provide sufficient information to determine whether the study was registered prospectively or retrospectively (2%; 95% CI 0% to 6%; n=2/84).

Data availability statements were uncommon (figure 3). No trial with a data availability statement deposited data publicly in an open repository. Twenty-one per cent of the trials with data availability statements (95% CI 15% to 27%; n=4) noted that data were not publicly available, whereas 74% (95% CI 67% to 80%; n=15) stated that data were available on request. One study (5%; 95% CI 2% to 9%) reported that all data were available in the main text and its supplements; however, raw data were not available in either location.

Exploratory analyses

Pre-registration and reporting

Compared with unregistered or retrospectively registered studies, pre-registered studies were more likely to report complete information for randomisation (type and method) and allocation concealment (OR 4.3; 95% CI 1.9 to 10.0), whether all stakeholders were blinded (OR 8.6; 95% CI 1.6 to 46.5), a priori sample size calculations (OR 2.5; 95% CI 1.1 to 5.8), justifications for expected effect sizes used in power calculations (OR 2.5; 95% CI 1.1 to 5.8) and specifying the primary outcome measure (OR 3.3; 95% CI 1.5 to 7.1). The odds of reporting (OR 1.0; 95% CI 0.48 to 2.1) or rejecting (OR 1.0; 95% CI 0.42 to 2.6) the study hypothesis were not clearly different between unregistered and pre-registered studies.

Sample size calculations and reporting

The odds of rejecting the main hypothesis were not clearly different between trials with and without a priori sample size calculations (OR 1.3; 95% CI 0.6 to 2.8). Trials that provided justifications for the expected effect size were more likely to reject the study hypothesis (OR 2.5; 95% CI 1.2 to 5.2).

Flow charts and reporting

The odds of reporting all reasons for dropouts (OR 4.6; 95% CI 2.3 to 9.3) and explicitly reporting the number of participants in each group that were included in the data analysis (OR 163.3; 95% CI 21.4 to 1248.5) were higher among studies that used flow charts to track participant flow, compared with those that did not.

Discussion

Sports medicine and orthopaedics researchers have recently emphasised rigorous study design and reporting to make research easier to understand, interpret and translate into clinical practice.16 Calls for more transparent reporting in orthopaedics and sports medicine19 26 27 followed older studies suggesting that poor clinical trial reporting limits readers’ ability to assess study quality and risk of bias.13 28 29 Our study shows that while most studies include a general statement about rigour criteria, like blinding or randomisation, these statements lack essential details needed to assess the risk of bias. The majority of the trials report criteria related to the study sample, such as the sex of participants, inclusion and exclusion criteria or the number of participants finally included in the analysis. Only 20% of the studies were pre-registered. No study shared data in open repositories.

Opportunities to improve reporting

These results highlight two main opportunities to improve transparency and reproducibility in sports medicine and orthopaedics clinical trials: improving reporting for essential details of the main CONSORT elements and increasing uptake of open science practices.

First, our results indicate that most authors are aware that they need to address factors like blinding, randomisation and sample size calculations; however, few provide the essential details required to evaluate the trial and interpret the results. Two-thirds of the trials addressed blinding, for example, but only 4% reported the blinding status of all main stakeholders. Educational efforts should emphasise the difference between informative and uninformative reporting (see example in figure 4).

Figure 4.

A priori sample size calculations are essential for generating meaningful results with clinical trials. Created by the authors. This infographic focuses on key elements of a priori sample size calculations that should be reported in clinical trial publications. However, it is important to note that each element should be justified individually, including the thresholds for type 1 and type 2 errors and the expected effect size. Lakens’ free article on sample size justification provides an excellent overview of aspects to consider when planning empirical research studies.96

CONSORT writing templates may also help.28 Target criteria should include the blinding status of all main stakeholders, randomisation type and method, how and by whom concealed allocation was performed and effect size justifications in sample size calculations.
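To make concrete why the expected effect size needs justification, the sketch below computes the sample size per group for a two-group comparison of means from the type 1 error threshold, the desired power and a standardised effect size. It uses the normal approximation, which gives slightly smaller numbers than t-distribution-based software; the function name and all input values are illustrative assumptions rather than recommendations.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Participants per group for a two-sided, two-group comparison of means."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # type 1 error threshold
    z_beta = NormalDist().inv_cdf(power)           # power = 1 - type 2 error
    return ceil(2 * (z_alpha + z_beta) ** 2 / effect_size_d**2)


# The expected effect size dominates the result: a smaller assumed effect
# requires many more participants.
print(n_per_group(0.5))  # 63
print(n_per_group(0.3))  # 175
```

Because the required sample size scales with the inverse square of the expected effect size, an unjustified or optimistic effect size can leave a trial substantially underpowered.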

Second, interventions are needed to increase pre-registration and data sharing. Although the ICMJE has required clinical trial pre-registration since 2005,29 only one-fifth of the trials were pre-registered. Pre-registered studies had higher odds of reporting several rigour criteria, potentially suggesting that authors who pre-register may be more aware of reporting guidelines. Our results are consistent with previous findings30 that trial registrations were among the least reported CONSORT items in sports medicine. A recent study in kinesiology shows even lower rates of pre-registration, data availability statements and data sharing in open repositories.31 Sports medicine researchers have already noted that pre-registration and registered reports can prevent questionable research practices26 (table 1) or make them easier to detect.32

Data were not shared in public repositories, suggesting that this topic requires special attention. The benefits of data sharing for authors include more citations,33 34 likely increased trustworthiness,35 and increased opportunities to collaborate with researchers who want to perform secondary analyses.36 Recent materials have addressed many common concerns about sharing patient data, including data privacy and confidentiality.37–39 Regulations vary by country and institution. Some institutions have designated support staff for data sharing. Researchers should contact their institutions’ data privacy, statistics or ethics offices to identify local experts. Seventy-four per cent of the trials with data availability statements noted that data were available on request. This is problematic, as such data are often unavailable and the odds of obtaining data decline precipitously with time since publication.40

Interestingly, our exploratory analysis revealed that the odds of rejecting the study hypothesis were 2.5 (95% CI 1.2 to 5.2) times higher in trials that provided a justification for the expected effect size in sample size calculations. This might indicate overinflated effect sizes, as trials that based their sample size calculation on effect sizes published in earlier studies more often failed to find a similar sized effect. Inflated effect sizes were also observed in the psychological science reproducibility project, where replicated effects were generally smaller than those in the initial studies.41

Authors should also be encouraged to report the data analysis transparently. Our study shows that more than one-fifth of the included trials used bar graphs to visualise continuous data. While this practice is common in many fields,42 these figures are problematic because many different data distributions can lead to the same summary statistics shown in bar graphs. Researchers should use data visualisations that show the data distribution, such as dot plots, box plots or violin plots.43 44 Reporting of test statistics and degrees of freedom, as well as of standardised effect sizes and their precision, leaves much room for improvement. Instead of making decisions based on p values alone, reporting the size and precision of effects in combination with the p value provides a more complete representation of the results and reduces the likelihood of spurious findings. P values that do not match the reported test statistic and degrees of freedom were included in 25% to 38% of medical articles,45 and up to 50% in psychology papers.46 These inaccurate p values may alter study conclusions in 13% of psychology papers.46 Our study shows that these assessments are impossible in sports medicine and orthopaedics clinical trials, as test statistics and degrees of freedom are rarely reported.
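The point about bar graphs can be illustrated with a small constructed example: two samples with identical means and standard deviations, and therefore identical bars and error bars, but clearly different distributions. The values are invented for illustration and are not trial data.

```python
from statistics import mean, pstdev

# Two hypothetical samples: one right-skewed, one bimodal.
skewed = [2, 4, 4, 4, 5, 5, 7, 9]
bimodal = [3, 3, 3, 3, 7, 7, 7, 7]

for name, values in (("skewed", skewed), ("bimodal", bimodal)):
    # A bar graph would show only these two summary numbers.
    print(f"{name}: mean={mean(values)}, SD={pstdev(values)}, values={sorted(values)}")
```

Both samples have a mean of 5 and a (population) standard deviation of 2, so a bar graph could not distinguish them, whereas a dot plot would show the difference immediately.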

Reporting of criteria related to the study sample and to exercise interventions highlighted some positive points. Whereas Costello et al47 observed that less than 40% of sports and exercise study participants were women, indicating sex bias, our study, on average, shows an even distribution of sex/gender. Similarly, the number of participants included in the analysis was reported in 75% of trials in the present study, compared with 42% of randomised controlled trials in orthopaedic journals.13 The introduction of flow charts to display the participant flow in CONSORT 2010 may improve reporting for sample-related criteria, as trials that included flow charts were more likely to report the number of participants included in the analysis and reasons for all exclusions. While the majority of studies reported key details of exercise interventions, reporting was less comprehensive for the control intervention and for intervention adherence or compliance.

Options for systemic interventions to improve reporting

Ongoing reporting deficiencies in clinical trials highlight the need for systemic interventions to improve reporting. The 2010 CONSORT guideline has been endorsed by more than 50% of the core medical journals and the ICMJE.48 Transparent research practices and reporting need to be incentivised on different levels and by different stakeholders in the academic research lifecycle.49 50 Persistent reporting deficiencies12 21 indicate that endorsement without enforcement is insufficient,51 52 and engaging individuals, journals, funders, and institutions is necessary to improve reporting.49 53

One option to improve reporting is for journals to enforce existing guidelines and policies. All journals in our sample were peer reviewed, yet essential details were often missing from published trials. This suggests that peer review alone is insufficient. Alternatives include rigorous manual review by trained ‘trial reporting’ assessors, automated screening or a combined approach. A journal programme that trained early career researchers to check for common data visualisation errors was well accepted by authors and increased compliance with data presentation guidelines.54 Implementing similar programmes, using paid staff, could improve CONSORT compliance. Alternatively, automated screening tools may efficiently flag missing information for peer reviewers.55 56 Peer review systems at several journals include an automated tool that checks statistical reporting and guideline adherence.57 Tools are available to screen for risk of bias (RobotReviewer; RRID:SCR_021064),58 and CONSORT methodology criteria (CONSORT-TM; RRID:SCR_021051).59 The CONSORT tool performs well for frequently reported criteria, but needs more training data for less often reported criteria.59 New tools may need to be created to assess details like the specifics of allocation concealment, blinding of specific stakeholders or justifications of expected effect sizes. As 52% of clinical trials in our sample were published in only five journals, systemic efforts to improve reporting in these journals could make a noticeable difference to clinical trial reporting in the field.
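To illustrate what the simplest form of automated screening might look like, the following sketch flags reporting items that are never mentioned in a manuscript text. It is a toy keyword screen and does not represent how RobotReviewer or CONSORT-TM work; the item names, patterns and function name are hypothetical and chosen for illustration only.

```python
import re

# Hypothetical keyword patterns, chosen for illustration only
PATTERNS = {
    "randomisation": r"\brandomi[sz](ed|ation)\b",
    "allocation concealment": r"\b(allocation concealment|concealed allocation|sealed envelopes?)\b",
    "blinding": r"\b(blind(ed|ing)|mask(ed|ing))\b",
    "sample size calculation": r"\b(sample size|power) (calculation|analysis)\b",
    "registration": r"\b(pre-?regist(ered|ration)|trial registration|clinicaltrials\.gov)\b",
}


def flag_missing_items(text):
    """Return the items whose keyword pattern never matches the text."""
    return [
        item
        for item, pattern in PATTERNS.items()
        if not re.search(pattern, text, flags=re.IGNORECASE)
    ]


# Hypothetical methods excerpt
methods = "Participants were randomised using sealed envelopes. Assessors were blinded."
print(flag_missing_items(methods))
```

A screen of this kind can only detect whether an item is mentioned. It cannot judge whether the essential details are present, which is the main deficiency identified in this study, and this limitation is one reason why more specialised tools and human review remain necessary.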

A second option is automated screening of sports medicine and orthopaedics preprints. Preprints, which are posted on public servers such as medRxiv and sportRxiv prior to peer review, allow authors to receive feedback and improve their manuscripts before journal submission. Large-scale automated screening of bioRxiv and medRxiv preprints for rigour and transparency criteria is feasible and could raise awareness about factors affecting transparency and reproducibility.60 Automated screening has limitations—the tools make mistakes and cannot always determine whether a particular item is relevant to a given study. Automated screening may complement peer review, but is not a replacement. The value of this approach will also depend on the proportion of trials that are posted as preprints.

Dashboards may offer a third option for monitoring changes in practice over time, and raising awareness about the importance of specific reporting practices among researchers, policymakers and the public. When used to inform incentive systems, dashboards may contribute to improved reporting. Dashboards may work best in combination with other measures, like policy changes, incorporating practices described in dashboards into researcher assessments or rewarding researchers for improving reporting. Policymakers and the scientific community can use dashboards to evaluate the effectiveness of interventions to improve scientific practice. Data from dashboards can show whether interventions impact scientific practice or demonstrate that further incentives are needed to drive change. Examples include dashboards on open science,61 and trial results reporting.62 In sports medicine and orthopaedics, clinical trial dashboards could track transparent research practices for journals, society publishers or all publications, and should include commonly missed items identified in this study. Researchers may need to develop new automated tools to track some criteria.

The scientific community has long relied on educational resources to improve reporting. On-demand resources include the CONSORT guideline use webinar by Altman,63 and open webinars on pre-registration, sample size justification and other topics offered by the Society for Transparency, Openness and Replication in Kinesiology.64 Creating a single platform with field-relevant resources, and then collaborating with large journals, publishers and societies, may help to disseminate materials to the global orthopaedics and sports medicine community.

Limitations

Our CONSORT-based evaluation criteria for intervention reporting were not optimised for non-exercise or wait-and-see control interventions. While the assessments required by guidelines for intervention reporting65 66 were beyond the scope of this study, previous studies assessed intervention reporting in detail.17 67–69 Larger, confirmatory studies are needed to examine relationships between different variables, as ORs calculated in the present study were exploratory post-hoc calculations. We examined the top 25% of sports medicine and orthopaedics journals; hence, our findings may not be generalisable to journals that are not indexed by PubMed, lower tier journals, non-English journals or unpublished trials. The use of the clinical trial filter may have led to the exclusion of a small number of trials that were incorrectly classified on indexing.

Conclusions

The present study of recent sports medicine and orthopaedics clinical trials shows that authors often report general information on rigour criteria, but few provide the essential details required by existing guidelines to assess risk of bias. Examples include the blinding status of all main stakeholders, information on concealed allocation or the justification of expected effect sizes in sample size calculations. Further, transparent research practices like pre-registration or data sharing are rarely used in sports medicine and orthopaedics.

As guidelines for clinical trial reporting are long established and well accepted across medical fields, the persistent lack of detailed reporting suggests that education and existing guidelines alone are not working. Better incentives, further interventions and other innovative approaches are needed to improve clinical trial reporting. We present options for future interventions, which might include rigorous peer-reviewer training, automated screening of submitted manuscripts and preprints, and field-specific dashboards that monitor reporting and transparent research practices, raise awareness and track improvements over time. Our results show which aspects of clinical trial reporting have the greatest need for improvement. Researchers can use these data to tailor future interventions to the needs of the sports medicine and orthopaedics community.

Supplementary Material

Acknowledgments

We would like to acknowledge Mia Pattillo for her valuable contributions to extracting the registration status of trials.

Footnotes

Twitter: @RSchulz_, @T_Weissgerber

Contributors: Project conceptualisation: RS, TLW. Project administration: RS, TLW. Methodology: RS, TLW. Investigation: RS, RP, GL. Validation: GL. Data curation: RS, GL. Visualisation: RS created visualisations and TW provided feedback. Data analysis: RS. Supervision: TLW, MC. Writing—original draft: RS, TLW. Writing—reviewing and editing: RS, TLW, GL, RP, MC.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None declared.

Patient and public involvement: Patients and/or the public were not involved in the design, or conduct, or reporting, or dissemination plans of this research.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Data availability statement

All data are available on the Open Science Framework and may be accessed under the Creative Commons Attribution V.4.0 International License at the following link: https://osf.io/q8b46/.

Ethics statements

Patient consent for publication

Not applicable.

Ethics approval

Not applicable.

References

- 1. Emanuel EJ, Wendler D, Grady C. What makes clinical research ethical? JAMA 2000;283:2701–11. doi:10.1001/jama.283.20.2701

- 2. Califf RM, DeMets DL. Principles from clinical trials relevant to clinical practice: Part I. Circulation 2002;106:1015–21. doi:10.1161/01.cir.0000023260.78078.bb

- 3. Gerstein HC, McMurray J, Holman RR. Real-World studies no substitute for RCTs in establishing efficacy. Lancet 2019;393:210–1. doi:10.1016/S0140-6736(18)32840-X

- 4. Zarin DA, Goodman SN, Kimmelman J. Harms from uninformative clinical trials. JAMA 2019;322:813–4. doi:10.1001/jama.2019.9892

- 5. Feudtner C, Schreiner M, Lantos JD. Risks (and benefits) in comparative effectiveness research trials. N Engl J Med 2013;369:892–4. doi:10.1056/NEJMp1309322

- 6. van Delden JJM, van der Graaf R. Revised CIOMS international ethical guidelines for health-related research involving humans. JAMA 2017;317:135–6. doi:10.1001/jama.2016.18977

- 7. Schulz KF, Altman DG, Moher D, et al. Consort 2010 statement: updated guidelines for reporting parallel group randomised trials. PLoS Med 2010;7:e1000251. doi:10.1371/journal.pmed.1000251

- 8. Moher D, Hopewell S, Schulz KF, et al. Consort 2010 explanation and elaboration: updated guidelines for reporting parallel group randomised trials. BMJ 2010;340:c869. doi:10.1136/bmj.c869

- 9. ICMJE. Recommendations for the Conduct, Reporting, Editing and Publication of Scholarly Work in Medical Journals [Internet], 2020. Available: http://www.ICMJE.org [Accessed 04 Aug 2020].

- 10. Moher D, Simera I, Schulz KF, et al. Helping editors, peer reviewers and authors improve the clarity, completeness and transparency of reporting health research. BMC Med 2008;6:13. doi:10.1186/1741-7015-6-13

- 11. Turner L, Shamseer L, Altman DG, et al. Does use of the CONSORT statement impact the completeness of reporting of randomised controlled trials published in medical journals? A cochrane review. Syst Rev 2012;1:60. doi:10.1186/2046-4053-1-60

- 12. Dechartres A, Trinquart L, Atal I, et al. Evolution of poor reporting and inadequate methods over time in 20 920 randomised controlled trials included in Cochrane reviews: research on research study. BMJ 2017;357:j2490. doi:10.1136/bmj.j2490

- 13. Chess LE, Gagnier J. Risk of bias of randomized controlled trials published in orthopaedic journals. BMC Med Res Methodol 2013;13:76. doi:10.1186/1471-2288-13-76

- 14. Nielsen RO, Shrier I, Casals M, et al. Statement on methods in sport injury research from the 1st methods matter meeting, Copenhagen, 2019. Br J Sports Med 2020;54:941. doi:10.1136/bjsports-2019-101323

- 15. Zenko Z, Steele J, Mills J. Communications in Kinesiology: a new open access Journal from the Society for transparency, openness, and replication in Kinesiology. Communications in Kinesiology 2020;1:1–3. doi:10.5526/cik.v1i1.26

- 16. Verhagen E, Stovitz SD, Mansournia MA, et al. BJSM educational editorials: methods matter. Br J Sports Med 2018;52:1159–60. doi:10.1136/bjsports-2017-097998

- 17. Holden S, Rathleff MS, Jensen MB, et al. How can we implement exercise therapy for patellofemoral pain if we don't know what was prescribed? A systematic review. Br J Sports Med 2018;52:385. doi:10.1136/bjsports-2017-097547

- 18. Losina E. Why past research successes do not translate to clinical reality: gaps in evidence on exercise program efficacy. Osteoarthritis Cartilage 2019;27:1–2. doi:10.1016/j.joca.2018.09.006

- 19. Knudson D. Confidence crisis of results in biomechanics research. Sports Biomech 2017;16:425–33. doi:10.1080/14763141.2016.1246603

- 20. Büttner F, Toomey E, McClean S, et al. Are questionable research practices facilitating new discoveries in sport and exercise medicine? the proportion of supported hypotheses is implausibly high. Br J Sports Med 2020;54:1365–71. doi:10.1136/bjsports-2019-101863

- 21. Kane RL, Wang J, Garrard J. Reporting in randomized clinical trials improved after adoption of the CONSORT statement. J Clin Epidemiol 2007;60:241–9. doi:10.1016/j.jclinepi.2006.06.016

- 22. Schulz R, Langen G, Prill R, et al. The devil is in the details: reporting and transparent research practices in sports medicine and orthopedic clinical trials, 2021. Available: https://osf.io/dm549

- 23. SCImago. SJR - SCImago Journal & Country Rank [Portal] [Internet], 2020. Available: https://www.scimagojr.com/journalrank.php?category=2732 [Accessed 22 Feb 2021].

- 24. Ouzzani M, Hammady H, Fedorowicz Z, et al. Rayyan-a web and mobile APP for systematic reviews. Syst Rev 2016;5:210. doi:10.1186/s13643-016-0384-4

- 25. Nosek BA, Alter G, Banks GC, et al. Scientific standards. promoting an open research culture. Science 2015;348:1422–5. doi:10.1126/science.aab2374

- 26. Caldwell AR, Vigotsky AD, Tenan MS, et al. Moving sport and exercise science forward: a call for the adoption of more transparent research practices. Sports Med 2020;50:449–59. doi:10.1007/s40279-019-01227-1

- 27. Halperin I, Vigotsky AD, Foster C, et al. Strengthening the practice of exercise and Sport-Science research. Int J Sports Physiol Perform 2018;13:127–34. doi:10.1123/ijspp.2017-0322

- 28. Barnes C, Boutron I, Giraudeau B, et al. Impact of an online writing aid tool for writing a randomized trial report: the COBWEB (Consort-based web tool) randomized controlled trial. BMC Med 2015;13:221. doi:10.1186/s12916-015-0460-y

- 29. De Angelis C, Drazen JM, Frizelle FA, et al. Clinical trial registration: a statement from the International Committee of medical Journal editors. Ann Intern Med 2004;141:477–8. doi:10.7326/0003-4819-141-6-200409210-00109

- 30. Harris JD, Cvetanovich G, Erickson BJ, et al. Current status of evidence-based sports medicine. Arthroscopy 2014;30:362–71. doi:10.1016/j.arthro.2013.11.015

- 31. Twomey R, Yingling V, Warne J, et al. Nature of our literature. Commun in Kinesiology 2021;1. doi:10.51224/cik.v1i3.43

- 32. Warren M. First analysis of ‘pre-registered’ studies shows sharp rise in null findings. Nature 2018;112. doi:10.1038/d41586-018-07118-1

- 33. Christensen G, Dafoe A, Miguel E, et al. A study of the impact of data sharing on article citations using Journal policies as a natural experiment. PLoS One 2019;14:e0225883. doi:10.1371/journal.pone.0225883

- 34. Colavizza G, Hrynaszkiewicz I, Staden I, et al. The citation advantage of linking publications to research data. PLoS One 2020;15:e0230416. doi:10.1371/journal.pone.0230416

- 35. Lesk M, Mattern JB, Moulaison Sandy H. Are Papers with Open Data More Credible? An Analysis of Open Data Availability in Retracted PLoS Articles. In: Taylor NG, Christian-Lamb C, Martin MH, et al., eds. Information in contemporary Society. Cham: Springer International Publishing, 2019: 154–61.

- 36. Lo B, DeMets DL. Incentives for clinical Trialists to share data. N Engl J Med 2016;375:1112–5. doi:10.1056/NEJMp1608351

- 37. Mello MM, Francer JK, Wilenzick M, et al. Preparing for responsible sharing of clinical trial data. N Engl J Med 2013;369:1651–8. doi:10.1056/NEJMhle1309073

- 38. Taichman DB, Sahni P, Pinborg A, et al. Data Sharing Statements for Clinical Trials - A Requirement of the International Committee of Medical Journal Editors. N Engl J Med 2017;376:2277–9. doi:10.1056/NEJMe1705439

- 39. Keerie C, Tuck C, Milne G, et al. Data sharing in clinical trials - practical guidance on anonymising trial datasets. Trials 2018;19:25. doi:10.1186/s13063-017-2382-9

- 40. Vines TH, Albert AYK, Andrew RL, et al. The availability of research data declines rapidly with article age. Curr Biol 2014;24:94–7. doi:10.1016/j.cub.2013.11.014

- 41. Open Science Collaboration. Psychology. estimating the reproducibility of psychological science. Science 2015;349:aac4716. doi:10.1126/science.aac4716

- 42. Riedel N, Schulz R, Kazezian V. Replacing bar graphs of continuous data with more informative graphics: are we making progress? Clin Sci 2022:CS20220287. doi:10.1042/CS20220287

- 43. Weissgerber TL, Milic NM, Winham SJ, et al. Beyond bar and line graphs: time for a new data presentation paradigm. PLoS Biol 2015;13:e1002128. doi:10.1371/journal.pbio.1002128

- 44. Weissgerber TL, Winham SJ, Heinzen EP, et al. Reveal, Don't Conceal: Transforming Data Visualization to Improve Transparency. Circulation 2019;140:1506–18. doi:10.1161/CIRCULATIONAHA.118.037777

- 45. García-Berthou E, Alcaraz C. Incongruence between test statistics and P values in medical papers. BMC Med Res Methodol 2004;4:13. doi:10.1186/1471-2288-4-13

- 46. Nuijten MB, Hartgerink CHJ, van Assen MALM, et al. The prevalence of statistical reporting errors in psychology (1985-2013). Behav Res Methods 2016;48:1205–26. doi:10.3758/s13428-015-0664-2

- 47. Costello JT, Bieuzen F, Bleakley CM. Where are all the female participants in sports and exercise medicine research? Eur J Sport Sci 2014;14:847–51. doi:10.1080/17461391.2014.911354

- 48. CONSORT. Consort - Endorsers [Internet], 2021. Available: http://www.consort-statement.org/about-consort/endorsers1

- 49. Macleod MR, Michie S, Roberts I, et al. Biomedical research: increasing value, reducing waste. The Lancet 2014;383:101–4. doi:10.1016/S0140-6736(13)62329-6

- 50. Mellor D. Improving norms in research culture to incentivize transparency and rigor. Educ Psychol 2021;56:122–31. doi:10.1080/00461520.2021.1902329

- 51. Hirst A, Altman DG. Are peer reviewers encouraged to use reporting guidelines? A survey of 116 health research journals. PLoS One 2012;7:e35621. doi:10.1371/journal.pone.0035621

- 52. Shamseer L, Hopewell S, Altman DG, et al. Update on the endorsement of CONSORT by high impact factor journals: a survey of journal "Instructions to Authors" in 2014. Trials 2016;17:301. doi:10.1186/s13063-016-1408-z

- 53. Moher D. Reporting guidelines: doing better for readers. BMC Med 2018;16:233. doi:10.1186/s12916-018-1226-0

- 54. Keehan KH, Gaffney MC, Zucker IH. CORP: assessing author compliance with data presentation guidelines for manuscript figures. Am J Physiol Heart Circ Physiol 2020;318:H1051–8. doi:10.1152/ajpheart.00071.2020

- 55. Halffman W, Horbach SPJM. What are innovations in peer review and editorial assessment for? Genome Biol 2020;21:87. doi:10.1186/s13059-020-02004-4

- 56. Checco A, Bracciale L, Loreti P, et al. AI-assisted peer review. Humanit Soc Sci Commun 2021;8:1–11. doi:10.1057/s41599-020-00703-8

- 57. BMC. Advancing peer review at BMC [Internet], 2021. Available: https://www.biomedcentral.com/about/advancing-peer-review

- 58. Soboczenski F, Trikalinos TA, Kuiper J, et al. Machine learning to help researchers evaluate biases in clinical trials: a prospective, randomized user study. BMC Med Inform Decis Mak 2019;19:96. doi:10.1186/s12911-019-0814-z

- 59. Kilicoglu H, Rosemblat G, Hoang L, et al. Toward assessing clinical trial publications for reporting transparency. J Biomed Inform 2021;116:103717. doi:10.1016/j.jbi.2021.103717

- 60. Weissgerber T, Riedel N, Kilicoglu H, et al. Automated screening of COVID-19 preprints: can we help authors to improve transparency and reproducibility? Nat Med 2021;27:6–7. doi:10.1038/s41591-020-01203-7

- 61. European Commission. Open science monitor [Internet], 2018. Available: https://ec.europa.eu/info/research-and-innovation/strategy/goals-research-and-innovation-policy/open-science/open-science-monitor_en

- 62. EU Trials Tracker. EU Trials Tracker - Who’s not sharing clinical trial results? [Internet]: Evidence-Based Medicine Data Lab; University of Oxford, 2021. Available: https://eu.trialstracker.net/

- 63. Altman DG. WEBINAR: Doug Altman – CONSORT Statement guidance for reporting randomised trials | The EQUATOR Network [Internet]: EQUATOR, 2013. Available: https://www.equator-network.org/2013/06/24/webinar-doug-altman-consort-statement-guidance-for-reporting-randomised-trials/

- 64. Society for Transparency, Openness, and Replication in Kinesiology. Stork - Resources [Internet], 2021. Available: https://storkinesiology.org/resources/

- 65. Hoffmann TC, Glasziou PP, Boutron I, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ 2014;348:g1687. doi:10.1136/bmj.g1687

- 66. Slade SC, Dionne CE, Underwood M, et al. Consensus on exercise reporting template (CERT): modified Delphi study. Phys Ther 2016;96:1514–24. doi:10.2522/ptj.20150668

- 67. Slade SC, Keating JL. Exercise prescription: a case for standardised reporting. Br J Sports Med 2012;46:1110–3. doi:10.1136/bjsports-2011-090290

- 68. Verhagen EALM, Hupperets MDW, Finch CF, et al. The impact of adherence on sports injury prevention effect estimates in randomised controlled trials: looking beyond the CONSORT statement. J Sci Med Sport 2011;14:287–92. doi:10.1016/j.jsams.2011.02.007

- 69. Hoffmann TC, Erueti C, Glasziou PP. Poor description of non-pharmacological interventions: analysis of consecutive sample of randomised trials. BMJ 2013;347:f3755. doi:10.1136/bmj.f3755

- 70. Banks GC, O’Boyle EH, Pollack JM, et al. Questions about questionable research practices in the field of management. J Manage 2016;42:5–20. doi:10.1177/0149206315619011

- 71. Hutton JL, Williamson PR. Bias in meta-analysis due to outcome variable selection within studies. Journal of the Royal Statistical Society: Series C 2000;49:359–70.

- 72. Bernard R, Weissgerber TL, Bobrov E, et al. fiddle: a tool to combat publication bias by getting research out of the file drawer and into the scientific community. Clin Sci 2020;134:2729–39. doi:10.1042/CS20201125

- 73. Chambers C. What's next for registered reports? Nature 2019;573:187–9. doi:10.1038/d41586-019-02674-6

- 74. Chalmers I. Underreporting research is scientific misconduct. JAMA 1990;263:1405. doi:10.1001/jama.1990.03440100121018

- 75. Hopewell S, Loudon K, Clarke MJ, et al. Publication bias in clinical trials due to statistical significance or direction of trial results. Cochrane Database Syst Rev 2009:MR000006. doi:10.1002/14651858.MR000006.pub3

- 76. Dwan K, Gamble C, Williamson PR, et al. Systematic review of the empirical evidence of study publication bias and outcome reporting bias - an updated review. PLoS One 2013;8:e66844. doi:10.1371/journal.pone.0066844

- 77. Kirkham JJ, Altman DG, Chan A-W, et al. Outcome reporting bias in trials: a methodological approach for assessment and adjustment in systematic reviews. BMJ 2018;362:k3802. doi:10.1136/bmj.k3802

- 78. Nunan D, Aronson J, Bankhead C. Catalogue of bias: attrition bias. BMJ Evid Based Med 2018;23:21–2. doi:10.1136/ebmed-2017-110883

- 79. ICMJE. Recommendations | Preparing a Manuscript for Submission to a Medical Journal: Methods - statistics [Internet], 2021. Available: http://www.icmje.org/recommendations/browse/manuscript-preparation/preparing-for-submission.html

- 80. Kerr NL. HARKing: hypothesizing after the results are known. Pers Soc Psychol Rev 1998;2:196–217. doi:10.1207/s15327957pspr0203_4

- 81. Button KS, Ioannidis JPA, Mokrysz C, et al. Power failure: why small sample size undermines the reliability of neuroscience. Nat Rev Neurosci 2013;14:365–76. doi:10.1038/nrn3475

- 82. Kinney AR, Eakman AM, Graham JE. Novel effect size interpretation guidelines and an evaluation of statistical power in rehabilitation research. Arch Phys Med Rehabil 2020;101:2219–26. doi:10.1016/j.apmr.2020.02.017

- 83. Charles P, Giraudeau B, Dechartres A, et al. Reporting of sample size calculation in randomised controlled trials: review. BMJ 2009;338:b1732. doi:10.1136/bmj.b1732

- 84. Abdul Latif L, Daud Amadera JE, Pimentel D, et al. Sample size calculation in physical medicine and rehabilitation: a systematic review of reporting, characteristics, and results in randomized controlled trials. Arch Phys Med Rehabil 2011;92:306–15. doi:10.1016/j.apmr.2010.10.003

- 85. Hewitt C, Hahn S, Torgerson DJ, et al. Adequacy and reporting of allocation concealment: review of recent trials published in four general medical journals. BMJ 2005;330:1057–8. doi:10.1136/bmj.38413.576713.AE

- 86. Armijo-Olivo S, Saltaji H, da Costa BR, et al. What is the influence of randomisation sequence generation and allocation concealment on treatment effects of physical therapy trials? A meta-epidemiological study. BMJ Open 2015;5:e008562. doi:10.1136/bmjopen-2015-008562

- 87. Holman L, Head ML, Lanfear R, et al. Evidence of experimental bias in the life sciences: why we need blind data recording. PLoS Biol 2015;13:e1002190. doi:10.1371/journal.pbio.1002190

- 88. Haahr MT, Hróbjartsson A. Who is blinded in randomized clinical trials? A study of 200 trials and a survey of authors. Clinical Trials 2006;3:360–5. doi:10.1177/1740774506069153

- 89. Mathieu S, Boutron I, Moher D, et al. Comparison of registered and published primary outcomes in randomized controlled trials. JAMA 2009;302:977–84. doi:10.1001/jama.2009.1242

- 90. Chen T, Li C, Qin R, et al. Comparison of clinical trial changes in primary outcome and reported intervention effect size between trial registration and publication. JAMA Netw Open 2019;2:e197242. doi:10.1001/jamanetworkopen.2019.7242

- 91. Lakens D. Calculating and reporting effect sizes to facilitate cumulative science: a practical primer for t-tests and ANOVAs. Front Psychol 2013;4:863. doi:10.3389/fpsyg.2013.00863

- 92. ICMJE. Recommendations | Clinical Trials [Internet], 2021. Available: http://www.icmje.org/recommendations/browse/publishing-and-editorial-issues/clinical-trial-registration.html

- 93. McKiernan EC, Bourne PE, Brown CT, et al. How open science helps researchers succeed. Elife 2016;5:e16800. doi:10.7554/eLife.16800

- 94. Vasilevsky NA, Minnier J, Haendel MA, et al. Reproducible and reusable research: are Journal data sharing policies meeting the mark? PeerJ 2017;5:e3208. doi:10.7717/peerj.3208

- 95. European Commission. Facts and Figures for open research data: Figures and case studies related to accessing and reusing the data produced in the course of scientific production. [Internet], 2019. Available: https://ec.europa.eu/info/research-and-innovation/strategy/goals-research-and-innovation-policy/open-science/open-science-monitor/facts-and-figures-open-research-data_en#funderspolicies

- 96. Lakens D. Sample size Justification. Collabra: Psychology 2021;8:33267. doi:10.1525/collabra.33267
