Abstract

The spread of COVID-19 caused wide-scale disruptions in the educational sector across the globe. Digital education, which involves the use of digital tools, virtual platforms and online learning, is seen as one of the viable alternatives to continue academic activities in such an environment. Higher education institutions have largely switched to this new mode of learning and continue to rely on the digital mode in many parts of the world, due to the ongoing pandemic threat. However, learners' competency to effectively engage in online courses and the impact of their socioeconomic background on this competency have not been adequately addressed in the literature. The present study was an attempt to explore these aspects, as they are crucial to the success of digital education. The study was conducted with 833 undergraduate, postgraduate, and doctoral students from an agricultural university to assess their digital competencies and the factors that influence effective participation in online courses. The Digital Competence Framework 2.0 of EU Science Hub (DIGCOMP) was adapted and used for this study. Our findings suggest that the learners have a satisfactory level of competence in most aspects of digital competence. The majority of the participants relied on smartphones both as the device for accessing the internet and for their learning activities. The results of Tukey's test for differences in means reveal that learners' digital competence varies significantly by gender, economic profile, and academic level. This finding can be attributed to differences in their socio-economic background, which confirms a digital divide among learners. Our findings have implications for the design of digital higher education strategies and institutional management to ensure effective learner participation, especially for higher education institutions in developing countries.

Keywords: Competence, Learning management system, Pandemic, Lock-down, Digital divide

1. Introduction

The outbreak of the coronavirus disease 2019 (COVID-19) pandemic affected every country in the world. To contain the transmission of the disease, ‘lockdown and staying at home’ strategies were implemented by many countries, which resulted in the closure of schools and higher education institutions worldwide (Pokhrel and Chhetri, 2021; Sintema, 2020). About 186 countries closed their educational institutions due to this pandemic, and switched from learning at the institution to remote learning using online tools and resources (UNESCO, 2020). Consequently, the entire academic landscape faced massive disruption due to the suspension of physically co-located classes. Nevertheless, in the era of “Living with COVID”, many alternatives were developed to replace the old system of knowledge transfer, which was characterized by direct physical classroom interactions. Thus, the pandemic situation has triggered higher order reforms in the teaching and learning process and paved the way to ‘digital education’ as a strategy to defy the unprecedented health challenge (Pokhrel and Chhetri, 2021; Yang and Huang, 2021).

India has the world's largest educational population of about 500 million and the higher education sector of the country is third largest in the world (Singh, 2021). More than 320 million learners have been affected in India due to the lockdown strategy to combat COVID-19 (Mathivanan et al., 2021). The outbreak of the pandemic forced the closure of various higher education institutions for an indefinite period of time, which brought the entire academic activities of these institutions to a halt (Mishra, Gupta, and Shree, 2020). The Government of India (GoI) proactively made some efforts to revive teaching and learning activities by promoting knowledge delivery platforms and digital education (Ministry of Education, Government of India, 2021). In line with these efforts, universities in India have taken various mitigation measures, including the adoption of digital tools and learning management systems (LMS), the delivery of online courses via video conferencing platforms, and the development of e-learning content. Online learning is defined as learning experiences in synchronous or asynchronous environments using different devices with internet access, in which students can learn and interact with instructors from any location (Singh & Thurman, 2019). The synchronous learning environment is more structured and characterized by live lectures, real time interactions and instant feedback in contrast to the asynchronous learning environment which is less structured (Dhawan 2020). It can be used interchangeably with other terms such as open learning, computer mediated learning, web-based learning (Dhawan 2020) and digital learning (Holzberger, Philipp, and Kunter, 2013). Essentially, it involves deploying educational technologies to learning situations to achieve the learner-centered individualized learning (Kaklamanou, Pearce, and Nelson, 2012). 
However, the learners should have the competence to access, evaluate and adapt resources using these technologies for effective learning in any modified learning situation (Ferrari, 2013; Zhao et al., 2021; Wang et al., 2021). This competence, which enables a learner to use technological tools effectively to learn, to work, and to participate in society, is identified as digital competence (Vázquez-Cano et al., 2020). Any attempt to integrate digital tools in an educational setting must be based on knowledge of learners' level of digital competence (Calvani et al., 2012). Nevertheless, the usage of digital tools does not guarantee that the learner has digital competence (Sánchez-Caballé, Gisbert Cervera, & Esteve-Mon, 2020). Furthermore, learners' competence may not be sufficient to achieve the learning objective in an online learning context (Watkins, Leigh, and Triner, 2004). Hasan and Bao (2020) contend that inadequate digital competence can lead to a poor learning experience, particularly when the learning options for the learners are limited to the online mode. Hence, it became relevant to investigate learners' level of digital competence as an essential condition for active participation in digital learning.

Researchers have given much attention to the prospects of digital education in developing countries since the very beginning of the COVID-19 pandemic (Bao, 2020; Bond, Marín, Dolch, Bedenlier, & Zawacki-Richter, 2018; Budi et al., 2020; Sevillano-García and Vázquez-Cano, 2015). These studies largely focus on the status of online education in terms of its effectiveness (Dhawan, 2020), acceptability and quality (Maity et al., 2020), required essentialities (Mishra et al., 2020) and policy environment (Bhorkar, Khanapurkar, Dandare, & Kathole, 2020). However, critical investigations on the digital competence of higher education learners, which refers to the skills and abilities to use computers and other technologies to improve learning, productivity and performance, are scarce (Burgos-Videla et al., 2021). The concept of digital competence encompasses those skills and abilities that enable learners to produce and communicate information using digital technology in an academic environment (Hatlevik and Christophersen, 2013; Kim, Hong, and Song, 2019). Digital competence is even regarded as a key determinant for understanding and interpreting digital learning resources and online learning services (López-Meneses, Sirignano, Vázquez-Cano, & Ramírez-Hurtado, 2020). Although there are multiple factors that require a thorough understanding, such as the structure of the learning activities, learning behaviors and engagement, and learning environment design, we were specifically interested in examining the digital competence that enables students to meet the challenges of online learning (Wang et al., 2021). While there are a couple of studies on the digital competence of students (Martzoukou et al., 2020; Sánchez-Caballé et al., 2020), few empirical studies have been conducted on the digital competence of higher education students of a professional course.

Researchers have attempted to understand the concept of digital competence in a variety of ways, including using a digital competence scale (Tzafilkou, Perifanou, & Economides, 2022), digital competence models (Amaro, Oliveira, & Veloso, 2017; Tourón, Deborah Martin, Enrique Navarro, Silvia Pradas, & Victoria Iñigo, 2018), digital competence building blocks (Janssen et al., 2013) and comprehensive frameworks (Vukčević et al., 2021). Some of the frameworks to assess digital competence include the European Computer Driving License (Leahy and Dolan, 2010), the iCritical Thinking framework of the International ICT Literacy Panel (Verizon et al., 2002) and the Digital Competence Assessment Framework (Calvani et al., 2008). On the other hand, studies have also attempted to assess digital competence using qualitative (Çebi & Reisoğlu, 2020) as well as mixed method approaches (Burgos-Videla et al., 2021). In addition, there were attempts to identify and analyze the concept of digital competence in different ways, such as internet skills (van Deursen & van Dijk, 2011; Hargittai, 2010), digital literacy (Eshet-Alkalai & Yoram, 2012), digital readiness (Arthur-Nyarko & Moses, 2019), and digital skills (Reedy, Boitshwarelo, Barnes, & Billany, 2015). In this study, an attempt was made to empirically assess the digital competencies of university graduates using the Digital Competence Assessment Framework (DIGCOMP). Although the original framework was modified by Evangelinos and Holley (2015), the current version proposed by the EU Science Hub in 2019 is used for this study.

Specifically, the present study sought to address the following questions: (1) Is it possible to assess the digital competence of online learners? (2) Do online learners have an adequate level of digital competence to effectively participate in online learning? (3) How does digital competence vary among learners based on their socioeconomic characteristics? Hence, this study aims to critically analyze the digital competence of online learners of a professional degree programme and their socio-economic situation that influence their effective participation in online classes beyond the issues of access and availability of digital technologies (Afolabi, 2015).

2. Methodology

2.1. Theoretical concepts

Digital transformation, the incorporation of digital technologies into behavior, processes and strategies, is triggering major changes in higher education and research (Barzman et al., 2021). In essence, it denotes the kind of changes that result from the application of digital technologies (Agarwal, Gao, DesRoches, & Jha, 2010). The constraints triggered by the COVID-19 pandemic have initiated such a transition by vigorously enforcing online learning and teaching in the higher education system (Iivari, Sharma, and Ventä-Olkkonen, 2020; Zawacki-Richter, 2021). Digitization of education became one of the most important features of this change, with the massive use of information and communication technologies (ICTs) for teaching and learning, as well as for information delivery (Arthur-Nyarko and Kariuki, 2019). With these changes, many critical concerns emerged that challenged learners' ability to adapt to these changes (Levy, 2017; Maity et al., 2020). Of these concerns, learners’ digital competence is important because it is crucial for the effective participation in a knowledge society (Ilomäki, Paavola & Lakkala, 2016). Thus, this research is based on the premise that learners should be digitally competent, to effectively participate in online learning and use digitized learning materials (Arthur-Nyarko and Kariuki, 2019).

Research on digital competence in education has proliferated in recent years. The concept of digital competence is multifaceted, broad, and sensitive to sociocultural context (Ilomäki et al., 2016; Olofsson, Fransson, and Lindberg, 2019). In this regard, the importance of technology related skills is widely discussed in the literature. A number of studies have tried to define the concept of digital competence (Falloon, 2020; Gallardo-Echenique et al., 2015; Janssen et al., 2013). Fundamentally, digital competence refers to the set of skills and abilities required to learn and navigate environments critically, creatively and responsibly (Ilomäki et al., 2016). The underlying skills include information retrieval, content creation, communication, problem solving, and technical competence (Sánchez-Caballé et al., 2020). In addition, there exists a causal relationship between students' digital competence and their previous experience with the digital environment in everyday life (Martzoukou et al., 2020). Thus, the concept of digital competence has a broader meaning than just technical know-how and extends to critical aspects related to learners’ attitude, confidence, and active engagement in a knowledge society (Svensson and Baelo, 2015).

2.2. Data collection

The study was conducted among graduate and postgraduate students of Kerala Agricultural University (KAU), an autonomous, publicly funded professional educational institution under the Indian Council of Agricultural Research (ICAR). The university was purposively selected because it is located in the state of Kerala, one of India's leading states in terms of various digital infrastructure parameters, including internet access, possession of digital devices by its citizens and their level of e-literacy (Government of India, 2020). Due to the implementation of the COVID-19 lockdown, the university has shifted all classes from ‘physically present’ mode to various online learning platforms and LMS since early March of 2020. The data was collected in September 2020 (after six months of implementing online learning) using an online survey tool named Survey Monkey®.

The university has four campuses with 2046 higher education learners, and the questionnaire was pre-tested with 50 students from these different colleges to check the clarity of the concepts and understandability. The questionnaire was then finalized with the necessary modifications. At the beginning of the survey, the participants were informed about the objectives of the study in order to get as many responses as possible. Then, the survey link was shared with the respondents through personal emails. One week after deployment of the questionnaire, the survey was followed up by contacting class representatives to maximize participation. Within the stipulated time, a total of 984 students responded to the survey. However, 151 responses were omitted because they were incomplete, either through partial completion of the survey or through dropping the schedule after attempting a few questions. Thus, only 833 responses were considered for further analysis.

2.3. Method

The study followed a descriptive research design. Survey questions were prepared based on previous studies and in collaboration with subject matter experts. Questions included information about participants' ownership of and access to digital devices for learning, level of experience with various software applications for learning, and level of digital competency. To assess respondents' digital competence, the latest version of DIGCOMP, the Digital Competence Framework 2.0 of EU Science Hub (2019), was adapted and used for this study. The framework emphasizes critical skills needed to use ICTs for learning, self-development and participation in the society. The original framework consists of five dimensions of digital competence and a total of 21 competencies that have been adapted and used for the purpose of this study. To be precise, we omitted some of the subcomponents, such as copyright and licensing, and programming, which falls under digital content creation, because they were not relevant to the study participants. Furthermore, two additional questions: ‘managing data, information and digital content’ and ‘integrating and re-elaborating digital content’ were included as they were relevant for this study. In order to develop relevant questions for our study, we first reviewed each dimension of the framework and transformed each item into individual questions.

2.3.1. Assessment of digital competence

In order to assess the participants’ digital competence, a composite index was constructed by incorporating five competence areas, namely information and data literacy, communication and collaboration, digital content creation, safety, and problem solving, proposed by Evangelinos and Holley (2015). These five competence areas were renamed as the dimensions for this particular study. Each of these dimensions was described by a set of indicators in the original framework. Table A1 in the Appendix provides a description of the DIGCOMP framework that was adapted and used in this study. In this study, each of these dimensions was explored by framing appropriate questions. Responses of the participants to the questions pertaining to each of the dimensions were collected on a five-point continuum (highly competent, moderately competent, competent, less competent and incompetent) to represent the varying levels of digital competence. Further, Exploratory Factor Analysis (EFA) was performed in SPSS 20 to derive an appropriate number of factors and to assign weights to the various sub-dimensions.

2.3.2. Assignment of weights to the indicators

EFA was performed with the dataset to obtain factor loadings and eigenvalues. The Kaiser criterion was applied to identify the eigenvalues greater than 1, and the same number of components was extracted using the varimax rotation method. Then, the extracted component matrix was multiplied by the eigenvalues, taking only the absolute values. The values obtained were summed for each indicator to obtain the weight for that particular indicator, following Ramadas et al. (2017). As all the measures were ordinal and collected at the same level (1–5 continuum), no normalization was performed. The indicators were multiplied by the assigned weights to construct the indices separately for each dimension of the DIGCOMP framework.
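The weighting step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the loadings and eigenvalues below are made-up numbers, the function names `retained_components` and `indicator_weights` are hypothetical, and the factor extraction and varimax rotation themselves (done in SPSS in the study) are not reproduced here.

```python
def retained_components(eigenvalues, threshold=1.0):
    """Return the indices of components whose eigenvalue exceeds the threshold."""
    return [k for k, ev in enumerate(eigenvalues) if ev > threshold]


def indicator_weights(loadings, eigenvalues):
    """Weight of each indicator: sum over retained components of
    |loading| * eigenvalue, as described in the text.

    loadings: one row per indicator, one column per component
    (assumed to be the rotated component matrix).
    """
    keep = retained_components(eigenvalues)
    return [sum(abs(row[k]) * eigenvalues[k] for k in keep) for row in loadings]


# Hypothetical values for illustration only (not taken from the study).
all_eigenvalues = [2.0, 1.5, 0.5]          # third component is dropped (<= 1)
rotated_loadings = [
    [0.80, 0.10, 0.30],
    [0.70, -0.20, 0.10],
    [0.15, 0.75, -0.20],
    [-0.05, 0.85, 0.05],
]

weights = indicator_weights(rotated_loadings, all_eigenvalues)
for i, w in enumerate(weights, start=1):
    print(f"indicator {i}: weight = {w:.3f}")
```

Taking absolute values means that an indicator loading negatively on a component still contributes positively to its weight, which matches the description of "taking only the absolute values".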

2.3.3. Digital competence index (DCI)

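Each respondent was awarded a score for each dimension. The weighted indicator scores under each dimension were summed and averaged to obtain the score for that particular dimension (Equation (1)):

$$D_j = \frac{1}{n}\sum_{i=1}^{n} w_i x_i, \qquad j = 1, \dots, 5 \tag{1}$$

where $D_1$ to $D_5$ denote the five dimensions, $n$ corresponds to the number of indicators under each dimension, $w_i$ is the weight assigned to indicator $i$, and $x_i$ is the respondent's response on that indicator.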

Finally, the scores of all the five competence areas were added together to obtain a respondent's digital competence score. However, the scores do not represent an absolute measure of the participants' digital competence. Rather, they are used as a benchmark for a comparative assessment of the participants. Based on the scores, the respondents were classified into low, medium and high categories by following percentile values. Further, one way ANOVA and Post Hoc (Tukey HSD) tests were performed to check differences among the learners with regard to selected variables such as gender, academic level and economic status, which were found worth exploring in line with other studies (Burgos-Videla et al., 2021; Wang et al., 2021).
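The scoring and classification steps can be illustrated with a short Python sketch. It assumes that a dimension score is the mean of the weighted indicator responses and that the cut-offs are the 25th and 75th percentiles of the total scores; the helper names, the unit weights and the sample data are hypothetical and serve only to show the mechanics.

```python
from statistics import mean, quantiles


def dimension_score(responses, weights):
    """Mean of the weighted indicator responses for one dimension (assumed form)."""
    return mean(w * x for w, x in zip(weights, responses))


def total_score(responses_by_dim, weights_by_dim):
    """Digital competence score: sum of the five dimension scores."""
    return sum(dimension_score(r, w)
               for r, w in zip(responses_by_dim, weights_by_dim))


def classify(score, q1, q3):
    """Low: up to the 25th percentile; high: above the 75th; medium otherwise."""
    if score <= q1:
        return "low"
    if score > q3:
        return "high"
    return "medium"


# One hypothetical respondent: responses on the 1-5 continuum for 5 dimensions.
responses = [[4, 3, 5, 4], [3, 3, 4], [2, 3, 3], [4, 4, 3, 5, 4], [3, 2, 4, 3]]
unit_weights = [[1.0] * len(dim) for dim in responses]  # placeholder weights
respondent_total = total_score(responses, unit_weights)

# Hypothetical total scores for a small sample, used to derive the cut-offs.
sample_scores = [12.0, 13.5, 15.0, 16.2, 17.0, 18.0, 19.1, 20.3]
q1, _median, q3 = quantiles(sample_scores, n=4)
labels = [classify(s, q1, q3) for s in sample_scores]
print(respondent_total, list(zip(sample_scores, labels)))
```

Because the cut-offs are percentiles of the sample itself, the categories are relative: they rank respondents against one another rather than against an absolute standard of competence.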

3. Results

In this study, 640 female and 193 male students participated in the survey, reflecting the predominance of female students enrolled in agricultural degree courses in Kerala. The socio-demographic data of the respondents show that the majority of them (61.10%) belong to rural areas, followed by peri-urban (20.89%) and urban (18.01%) backgrounds. The average age of the respondents is 21.45 years. Further, most of the students belong either to the category with an average monthly family income of less than 340 US$ (42.61%) or to the category between 340 and 1020 US$ (32.49%). Almost all (99.03%) of the respondents were residing with their parents during the lockdown period.

3.1. Access to and awareness of digital technologies

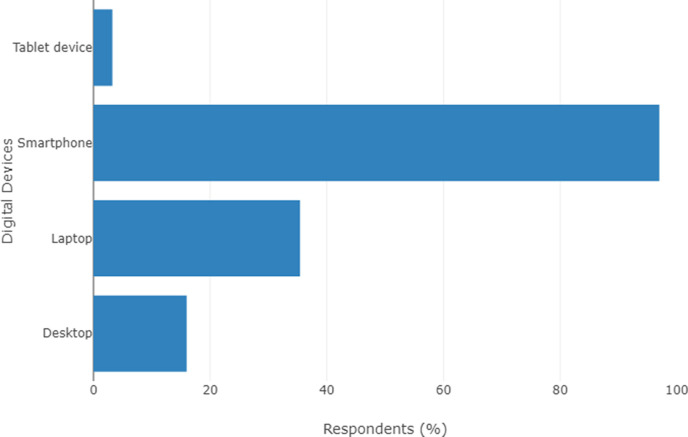

Almost all students (97%) had their own smartphones and more than one third had a laptop (Fig. 1). Overall, 36.58% had access to any two digital devices and 7.56% had access to more than two digital devices for online learning.

Fig. 1.

Access to various digital devices by the respondents.

The availability of digital devices for the respondents by economic status indicates that the lowest income group (<340 US$) has digital devices such as desktops and tablets to a lesser extent (Fig. 2). The data distribution shows that the majority of the lower income category (63.94%) and nearly half of the middle income category (47.42%) have a single digital device for learning. Out of the total respondents, 94.35% stated that they access the internet within the home premises. Regarding the means of internet connectivity, an overwhelming majority (86.91%) stated that they mainly rely on their smartphones. In terms of frequency of internet access, 92.7% of respondents use the internet daily. Further, the majority (56.6%) of the respondents who access the internet daily spend at least 3 hours browsing various topics of interest.

Fig. 2.

Access to digital devices based on the economic status of the respondents.

3.2. Digital competence of learners

Student competence is assessed in terms of the five competence domains described in the DIGCOMP framework. Fig. 3 depicts the respondents’ competence levels in relation to the 21 components of digital competence. Under the first dimension, information and data literacy, the majority of the respondents had a moderate level of competence with respect to the various components. These include the ability to collect relevant information from various web sources for learning, to collect recent and genuine information, to access various digital platforms for coursework, and to systematically store and retrieve collected information (see Fig. 3).

Fig. 3.

Competence level of the respondents for various dimensions of digital competence.

Fig. 4.

Difference in Digital Competence Scores segregated by Academic Levels, Income Groups and Gender.

Under the second dimension, communication and collaboration, more than one third of the respondents had a moderate level of competence in the skills to engage in digital interaction across classes, share study resources digitally, use digital collaborative tools to do class assignments, adhere to standard practices while attending online classes, and manage their digital identity. Further, more than a quarter of the respondents had a high level of competence for the first four components. However, about 30% of the learners reported low levels of competence in the last component, which is a point of concern. The third dimension, digital content creation, comprised three components, namely participating in relevant social issues, the ability to develop digital content, and modifying and adapting digital content. While half of the study participants had a moderate level of competence with regard to the second component, less than one third and just over one third did so for the first and third components, respectively. Besides, more than one third reported being less competent in the first component.

The fourth dimension addressed the safety aspect of digital competence by examining five components. More than one third of the students were found to be moderately competent with regard to their ability to adapt and mix various media for study purposes, protect personal data and privacy, protect health and wellbeing while using digital technologies, and understand the impact of digital technologies on the environment. About one third of the respondents had the competence to check risks and threats from the cyber world. However, the fact that almost 30 percent of the respondents showed low competence with regard to the first component is a cause of concern. Finally, the fifth dimension, problem solving, had four components, namely the ability to resolve technical glitches during online learning, selecting appropriate digital tools and customizing them for course work, being creative in using digital tools in their communication efforts, and self-assessing their digital skills. Almost one third of the respondents each belonged to the moderately competent and competent categories with regard to the last three components. However, more than a quarter of the respondents had less competence with respect to the first three components. The figure shows the distribution of respondents across the different dimensions of digital competence measurement. Overall, the majority of the respondents were either moderately competent or competent with regard to the various dimensions of digital competence.

3.3. Assessing the level of digital competence of learners

The digital competence scores were calculated for all the respondents for a comparative assessment of their digital competence. Based on their overall score, they were categorized into low, medium and high categories of digital competence (Table 1).

Table 1.

Classification of the respondents according to level of digital competence scores.

| Competence level | Competence score | Number of respondents |
|---|---|---|
| Low | Up to 14.19 | 208 |
| Medium | 14.2 to 18.5 | 416 |
| High | 18.51 and above | 209 |

Note: Low: up to 25th percentile; Medium: between 25th and 75th percentile; High: above 75th percentile.

In addition, respondents were categorized into different groups based on specific variables to provide better insight into their digital competence. To get an overview of the differences in digital competence scores, we used Tukey's test for differences in means, applied to the overall score and separately within the three categories of low, medium and high competence level. Here, Tukey's test compares the mean of each group with the mean of every other group by pairwise comparison, using the studentized range statistic, which corrects for multiple comparisons. To be precise, we use the Tukey-Kramer procedure, which provides actual probabilities in close agreement with the corresponding nominal probabilities when the number of observations differs between groups (Smith, 1971). Table 2 summarizes the results of Tukey's test on the differences between the means of the various pairs and their adjusted p-values for the overall score and separately for the low, medium and high levels of competence.
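The pairwise comparison can be sketched as follows. This is a minimal illustration of the Tukey-Kramer statistic for unequal group sizes, not the authors' analysis script: the function name `tukey_kramer_q` and the sample scores are hypothetical, and the sketch stops at the studentized range statistic q. Turning q into an adjusted p-value requires the studentized range distribution, which is not in the Python standard library (SciPy, for example, provides `scipy.stats.studentized_range` and `scipy.stats.tukey_hsd`).

```python
from itertools import combinations
from math import sqrt
from statistics import mean


def tukey_kramer_q(groups):
    """Pairwise mean differences and Tukey-Kramer q statistics.

    groups: dict mapping a group label to a list of scores.
    Returns {(b, a): (mean_b - mean_a, q)} for every pair of groups.
    """
    k = len(groups)
    n_total = sum(len(values) for values in groups.values())
    means = {g: mean(values) for g, values in groups.items()}

    # Pooled within-group variance (mean square error from one-way ANOVA).
    sse = sum((x - means[g]) ** 2 for g, values in groups.items() for x in values)
    mse = sse / (n_total - k)

    results = {}
    for a, b in combinations(groups, 2):
        diff = means[b] - means[a]
        # The Kramer adjustment averages the reciprocal group sizes.
        se = sqrt(mse / 2 * (1 / len(groups[a]) + 1 / len(groups[b])))
        results[(b, a)] = (diff, abs(diff) / se)
    return results


# Hypothetical scores for three economic-status groups (1 = low, 3 = high).
scores = {
    1: [14.0, 15.0, 16.0],
    2: [16.0, 17.0, 18.0, 17.0],
    3: [18.0, 19.0, 20.0],
}
for pair, (diff, q) in tukey_kramer_q(scores).items():
    print(f"{pair[0]}-{pair[1]}: difference = {diff:.4f}, q = {q:.4f}")
```

The pair labels follow the convention of Table 2, where "2–1" denotes the mean of group 2 minus the mean of group 1.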

Table 2.

Classification of the respondents segregated by gender, economic level, academic level and levels of digital competence.

| Variable | Pair | Total: Difference | Total: Adj. p-value | Low: Difference | Low: Adj. p-value | Medium: Difference | Medium: Adj. p-value | High: Difference | High: Adj. p-value |
|---|---|---|---|---|---|---|---|---|---|
| Gender | 2–1 | 0.5721 | 0.0140 | −0.0380 | 0.8785 | −0.1280 | 0.3791 | 0.4947 | **0.0051** |
| Economic Status | 2–1 | 0.3655 | 0.2069 | −0.2867 | 0.3509 | 0.3267 | 0.0412** | −0.0713 | 0.9199 |
| | 3–1 | 1.1064 | **0.0002** | −0.2721 | 0.6570 | 0.2639 | 0.2844 | 0.3596 | 0.1877 |
| | 3–2 | 0.7409 | 0.0213** | 0.0145 | 0.9989 | −0.0628 | 0.9290 | 0.4308 | 0.0984* |
| Academic Level | 2–1 | 0.4978 | 0.2337 | 0.1320 | 0.9805 | 0.2117 | 0.6103 | 0.1084 | 0.9846 |
| | 3–1 | 0.2157 | 0.9689 | 0.1939 | 0.9729 | 0.0480 | 0.9994 | 0.3394 | 0.7812 |
| | 4–1 | 0.5281 | 0.6763 | 0.3077 | 0.9166 | 0.6539 | 0.0888* | 0.0525 | 0.9999 |
| | 5–1 | 1.1528 | **0.0019** | 0.3086 | 0.8781 | 0.3074 | 0.5437 | 0.3582 | 0.5376 |
| | 3–2 | −0.2821 | 0.9154 | 0.0620 | 0.9997 | −0.1637 | 0.9307 | 0.2310 | 0.9297 |
| | 4–2 | 0.0303 | 1.0000 | 0.1757 | 0.9895 | 0.4422 | 0.4134 | −0.0559 | 0.9998 |
| | 5–2 | 0.6550 | 0.1946 | 0.1766 | 0.9839 | 0.0957 | 0.9881 | 0.2498 | 0.7940 |
| | 4–3 | 0.3124 | 0.9611 | 0.1138 | 0.9989 | 0.6059 | 0.2446 | −0.2869 | 0.9453 |
| | 5–3 | 0.9371 | 0.1076 | 0.1146 | 0.9984 | 0.2594 | 0.8288 | 0.0189 | 1.0000 |
| | 5–4 | 0.6247 | 0.6135 | 0.0009 | 1.0000 | −0.3465 | 0.7511 | 0.3057 | 0.8953 |

Note: Bold values denote a significance level of p < 0.01, ** and * 0.05 and 0.1, respectively. The pairs are as follows: Gender: male = 2, female = 1; Economic status: low = 1, medium = 2 and high = 3; Academic level: I year graduates = 1, II year graduates = 2, III year graduates = 3, IV year graduates = 4 and post graduates/PhDs = 5.

We found that the mean overall score of digital competence for male students is significantly higher than for female students. Classifying respondents based on their economic status and academic level also showed significant differences between groups of respondents. Specifically, we found that the level of digital competence differs significantly by economic status between the high and low income groups and between the high and medium income groups (p < 0.05), while we did not find a difference between the low and medium income groups. In addition, postgraduate and doctoral students had a higher mean overall score compared to first year graduates, while the other pairs showed no difference. We found no other significant differences between the low, medium and high competence levels.

The analysis also delved deeper into the levels of the five dimensions of digital competence of the respondents when they are divided into the different groups mentioned above. Similar to the previous analysis, we used Tukey's test to compare the means of the five dimensions of digital competence, segregated by academic level, economic status and gender. Fig. 4 gives a graphical representation of the results for the five dimensions (Dim 1 to Dim 5). We found that male students have a significantly higher level (p < 0.01) of average competencies with regard to digital content creation, safety and problem solving, reiterating the trend observed for the overall scores on digital competence (Table 2). It is important to note that there was no significant difference between male and female participants with regard to access to digital devices, though the study did not take into account female-specific cultural disadvantages.

When grouped by academic level, postgraduate students have the highest mean scores with regard to the various dimensions of digital competence. The result can be explained by the fact that they are more familiar with the tools than students at the degree level. For the first dimension of digital competence, namely information and data literacy, a significant difference was found only between first year graduate and postgraduate students. This also holds true for the fourth dimension, safety. However, with regard to digital content creation (Dim 3), a significant difference (p < 0.01) was found between first and second year students, between first and fourth year graduate students, and between first, second and third year students and postgraduate students. Further, with regard to the second and fifth dimensions (communication and collaboration, problem solving), no significant difference was noticed among the various academic groups.

Regarding the three economic levels, the first and third categories differed significantly (p < 0.01) in information and data literacy as well as safety. In terms of communication and collaboration, the first and second categories as well as the second and third categories varied significantly, albeit at different levels (p < 0.01 and p < 0.05, respectively). Similar results were observed for the third and fifth dimensions, digital content creation and problem solving. Mean values for digital competencies were the highest in the third category. Overall, the results signaled significant differences among the various strata of respondents across all the dimensions, more so when categorized by economic status than by the other variables.

4. Discussion

Although the study primarily aimed to understand learners' digital competence in the context of online learning, other dimensions of digital competence are also highlighted, such as access to digital technologies, access to the internet to use the digital devices, and learners' knowledge of key digital applications for learning. As shown in the results, the smartphone was the most important digital device that the majority of the respondents had access to, compared to other devices such as laptops, desktop computers, or tablets. This is consistent with the findings of Arthur-Nyarko and Kariuki (2019). Learners increasingly relied on smartphones for learning since the start of COVID-19 and the consequent shift of classes to the online mode (Biswas, Roy, & Roy, 2020). However, it is important to note that smartphones were the default choice for the learners, as the smartphone was the only digital device to which most learners had access. Hence, the choice of the digital device for learning was mostly driven by access rather than availability. Moreover, most of the respondents had access to a single digital device for learning, which is not a desirable scenario for unhindered online learning. Access to the right and adequate technologies is crucial for effective participation in technology-based learning (Arthur-Nyarko and Kariuki, 2019). In addition, access to digital devices disaggregated by economic status illustrated that the learners' income level plays a crucial role in determining access to digital technologies, confirming the findings of Scherer and Siddiq (2019). As indicated by the findings, internet connectivity was not an issue of concern, as 95% of the respondents accessed the internet daily without interruption, in contrast to the findings of Coman et al. (2020). However, learners’ over-reliance on smartphones for internet access may lead to limitations in their participation in online classes (Muthuprasad et al., 2021).

Students' digital competence is very important, as they need to carry out all of their academic activities in the digital environment (Kim, Hong, and Song 2019). Our findings show that, in most dimensions of digital competence, the respondents were moderately competent or competent, which is in line with the findings of Zhao et al. (2021). The high level of competence of a significant portion of learners in certain components, such as the ability to share learning content online or to follow standard practices in the digital learning environment, could indicate their ability to adapt quickly to the new mode of learning. Overall, the findings signaled that students are equipped to participate in online classes, as they have most of the required digital competencies, possibly due to their regular exposure to online classes. However, there are certain components in which students' competence needs to be developed. For example, competence in solving technical glitches, mixing various digital media forms, and checking for cyber risks and threats was found to be insufficient. Competence in these components is critical to effective and safe participation in online classes and to fulfilling course requirements such as the submission of assignments. Given the persistence of online learning and the prospects for more blended approaches to education in the near future, it would be helpful if students were supported with specific training programs, e.g., orientation sessions to address common technical issues, production of animated videos on course content, etc. (Røkenes and Krumsvik 2016). Studies have raised serious concerns about the impact of online learning on learners’ physical and mental health (Chaturvedi, Kumar Vishwakarma & Singh, 2021; Singh & Thurman, 2019; Lischer, Safi, and Dickson, 2021). The present study also found various health issues faced by learners in online classes. Since these are a barrier to effective participation in virtual classes, immediate attention is needed in this regard. To this end, online classes can be modified by ensuring intervals between classes, balancing the frequency and duration of classes, and providing options for more flexibility in learning activities (Stern, 2004).

Nearly a quarter of the respondents were found to have low levels of digital competence, which requires urgent attention. This might result in low engagement of students with online classes and learning materials. Besides, the level of digital competence was found to vary with the socio-economic profile of the respondents. This finding is congruent with the studies of Wang et al. (2021) and Hatlevik, Ottestad, and Throndsen (2015). To gain better insight into these differences, we looked more closely at the dimensions of digital competence. The analysis focused on the digital competence of learners, separated by gender, academic level and economic status, in relation to the various dimensions. Male respondents tended to have higher mean scores in specific dimensions of digital competence; this comparison does not factor in the cultural disadvantages faced by female learners. In a similar study, Wild and Schulze Heuling (2020) reported higher scores for male students in the dimensions “problem solving” and “safety”. In the present study, the same results were obtained, with a significant difference in one additional dimension, “digital content creation”. Nevertheless, the marginal difference between the two categories in the mean score of the overall measure of digital competence suggests that the gender difference in digital competence is specific rather than general. Some studies explain this trend by arguing that males tend to have a greater inclination towards digital technologies (Kuhlemeier and Hemker, 2007). However, this finding is not in line with the results of Scherer and Siddiq (2019), who found higher levels of digital literacy among females than males. Further, significantly higher mean scores were observed for postgraduate and doctoral students than for undergraduate students, which confirms the findings of Yu (2021). This result is significant in that the level of education can strongly predict the outcome of digital learning (Huang and Fang 2013). Finally, the higher mean scores on various dimensions of digital competence for the group with the higher economic profile confirm the findings of other studies (Ritzhaupt et al., 2013), although opposite findings also exist (Hohlfeld, Ritzhaupt, and Barron, 2013).

5. Conclusion

The goal of the study was to analyze the digital competence of higher education students and the factors underpinning their varying levels of digital competence. Given the magnitude of the pandemic, online learning is likely to continue over an extended period of time. The study results suggest that learners have a satisfactory level of competence in most aspects of digital competence to participate effectively in their online classes, although there are some gaps. With regard to access to learning technologies, almost all study participants had at least one kind of digital device. Personal devices such as smartphones have been the main driver of online learning as well as of access to the internet, a common feature reported in most developing countries. This can limit the quality and effectiveness of learning, given the length of online learning activities. Furthermore, the study confirms the digital divide argument in terms of learners' access to and competence in using technologies for learning. Although gender differences are observed in learners' digital competence, they tend to be specific rather than general. Besides, the economic status and academic level of the student are also key determinants of learners' digital competence, as can be seen from the results. Hence, it can be concluded that various socioeconomic factors are significant determinants of learners' access to digital technologies and their level of competence in using these technologies. Based on the findings, specific training can be planned to build learners' skills in the areas of lower competency, with special attention to economically disadvantaged learners and female learners. Further, the delivery of content can be made more learner-friendly by scheduling more breaks between classes and keeping lectures shorter, since a large proportion of learners use smartphones to learn. Further studies should be conducted to understand the impact of digital competence on learning outcomes and its differential impact on various categories of learners.

The policy guidelines can focus on various aspects of online learning, e.g., policies related to course syllabus, software standards, student code of conduct, discussion policy, intellectual property rights, etc. Among these aspects, security and privacy concerns are very important, yet they were neglected or given the least consideration by the students surveyed. As the National Education Policy of India reiterates the importance of implementing hybrid learning, the above concerns should be addressed along with the creation of digital infrastructure.

The study was conducted among students from only one agricultural university in India, so it may be difficult to generalize the results to other disciplines and HEIs. Furthermore, the study relied only on components of the DIGCOMP framework, adapting the indicators to the specifics of the learners. This could also be considered one of the limitations of the study. Nevertheless, the findings of the study can be generalized to other comparable settings with suitable adaptation.

CRediT authorship contribution statement

Sreeram Vishnu: Conceptualization, Data curation, Writing - original draft. Archana Raghavan Sathyan: Data curation, Formal analysis, Writing - review and editing. Anu Susan Sam: Writing - review and editing. Aparna Radhakrishnan: Data curation, Writing - review and editing. Sulaja Olaparambil Ragavan: Data curation, Writing - review and editing. Jasna Vattam Kandathil: Data curation, Writing - review and editing. Christoph Funk: Formal analysis, Writing - review and editing, Funding acquisition, Supervision.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgement

Christoph Funk acknowledges funding by the German Academic Exchange Service (DAAD) from funds of the Federal Ministry for Economic Cooperation (BMZ), SDGnexus Network (Grant No. 57526248), program “exceed – Hochschulexzellenz in der Entwicklungszusammenarbeit.”

Footnotes

Class representative refers to a student who belongs to a particular batch, represents that batch of students, and facilitates various arrangements for the smooth conduct of classes.

1 US$ = 73 Indian Rupee.

Appendix.

Table A1.

DIGCOMP Framework adapted and used for the study

| Competence Area | Original Dimensions | Dimensions adapted and used for the present study |
|---|---|---|
| 1. Information and Data Literacy | 1.1 Browsing, searching and filtering data, information and digital content | 1.1 Browsing, searching and filtering data, information and digital content |
| | 1.2 Evaluating data, information and digital content | 1.2 Evaluating data, information and digital content |
| | 1.3 Managing data, information and digital content | 1.3 Managing data, information and digital content |
| 2. Communication and Collaboration | 2.1 Interacting through digital technologies | 2.1 Interacting through digital technologies |
| | 2.2 Sharing through digital technologies | 2.2 Sharing through digital technologies |
| | 2.3 Engaging in citizenship through digital technologies | 2.3 Engaging in citizenship through digital technologies |
| | 2.4 Collaborating through digital technologies | 2.4 Collaborating through digital technologies |
| | 2.5 Netiquette | 2.5 Netiquette |
| | 2.6 Managing digital identity | 2.6 Managing digital identity |
| 3. Digital Content Creation | 3.1 Developing digital content | 3.1 Developing digital content |
| | 3.2 Integrating and re-elaborating digital content | 3.2 Integrating and re-elaborating digital content |
| | 3.3 Copyright and licenses∗ | |
| | 3.4 Programming∗ | |
| 4. Safety | 4.1 Protecting devices | 4.1 Protecting devices |
| | 4.2 Protecting personal data and privacy | 4.2 Protecting personal data and privacy |
| | 4.3 Protecting health and well-being | 4.3 Protecting health and well-being |
| | 4.4 Protecting the environment | 4.4 Protecting the environment |
| 5. Problem Solving | 5.1 Solving technical problems | 5.1 Solving technical problems |
| | 5.2 Identifying needs and technological responses | 5.2 Identifying needs and technological responses |
| | 5.3 Creatively using digital technologies | 5.3 Creatively using digital technologies |
| | 5.4 Identifying digital competence gaps | 5.4 Identifying digital competence gaps |

∗ Components not considered for the present study, since the focus was only on digital competence in the online learning situation of the student respondents.

References

- Afolabi A.A. Availability of online learning tools and the readiness of teachers and students towards it in Adekunle Ajasin University, Akungba-Akoko, Ondo State, Nigeria. Procedia - Social and Behavioral Sciences. 2015;176:610–615. doi: 10.1016/j.sbspro.2015.01.517.

- Agarwal R., Gao G.(G.), DesRoches C., Jha A.K. Research commentary: The digital transformation of healthcare: Current status and the road ahead. Information Systems Research. 2010;21(4):796–809.

- Amaro A.C., Oliveira L., Veloso A. Intergenerational and collaborative use of tablets: «in-Medium» and «in-Room» communication, learning and interaction. Observatorio. 2017;11:83–94. doi: 10.15847/obsOBS1102017995.

- Arthur-Nyarko E., Kariuki M. Learner access to resources for ELearning and preference for ELearning delivery mode in distance education programs in Ghana. International Journal of Educational Technology. 2019;6(2):1–8.

- Bao W. COVID-19 and online teaching in higher education: A case study of Peking University. Human Behavior and Emerging Technologies. 2020;2(2):113–115. doi: 10.1002/hbe2.191.

- Barzman M., Gerphagnon M., Aubin-Houzelstein G., Baron G.-L., Benard A., Bouchet F., et al. Exploring digital transformation in higher education and research via scenarios. Journal of Futures Studies. 2021;25(3):65–78.

- Bhorkar S., Khanapurkar R., Dandare K., Kathole P. Strengthening the online education ecosystem in India. ORF Occasional Paper; 2020. https://www.orfonline.org/research/strengthening-the-online-education-ecosystem-in-india/

- Biswas B., Roy S., Roy F. Students perception of mobile learning during COVID-19 in Bangladesh: University student perspective. 2020;4:1–9.

- Bond M., Marín V.I., Dolch C., Bedenlier S., Zawacki-Richter O. Digital transformation in German higher education: Student and teacher perceptions and usage of digital media. International Journal of Educational Technology in Higher Education. 2018;15(1):48. doi: 10.1186/s41239-018-0130-1.

- Budi H.S., Ludjen J.S.M., Aula A.C., Prathama F.A., Maulana R., Siswoyo L.A.H., et al. Distance learning (DL) strategies to fight coronavirus (COVID-19) pandemic at higher education in Indonesia. International Journal of Psychosocial Rehabilitation. 2020;24:8777–8782. doi: 10.37200/IJPR/V24I7/PR270859.

- Burgos-Videla G.C., Castillo Rojas W.A., López Meneses E., Martínez J. Digital competence analysis of university students using latent classes. Education Sciences. 2021;11(8):385.

- Calvani A., Cartelli A., Fini A., Ranieri M. Models and instruments for assessing digital competence at school. Journal of e-Learning and Knowledge Society. 2008;4:183–193. doi: 10.20368/1971-8829/288.

- Calvani A., Fini A., Ranieri M., Picci P. Are young generations in secondary school digitally competent? A study on Italian teenagers. Computers & Education. 2012;58:797–807. doi: 10.1016/j.compedu.2011.10.004.

- Çebi A., Reisoğlu İ. Digital competence: A study from the perspective of pre-service teachers in Turkey. Journal of New Approaches in Educational Research. 2020;9(2):294–308.

- Chaturvedi K., Kumar Vishwakarma D., Singh N. COVID-19 and its impact on education, social life and mental health of students: A survey. Children and Youth Services Review. 2021;121. doi: 10.1016/j.childyouth.2020.105866.

- Coman C., Țîru L., Schmitz L.M., Stanciu C., Bularca M. Online teaching and learning in higher education during the coronavirus pandemic: Students' perspective. Sustainability. 2020;12. doi: 10.3390/su122410367.

- van Deursen A.J.A.M., van Dijk J.A.G.M. Internet skills and the digital divide. New Media & Society. 2011;13:893–911.

- Dhawan S. Online learning: A panacea in the time of COVID-19 crisis. Journal of Educational Technology Systems. 2020:1–18. doi: 10.1177/0047239520934018.

- Eshet-Alkalai Y. Thinking in the digital era: A revised model for digital literacy. Issues in Informing Science and Information Technology. 2012;9:267–276. doi: 10.28945/1621.

- Evangelinos G., Holley D. A qualitative exploration of the DIGCOMP digital competence framework: Attitudes of students, academics and administrative staff in the health faculty of a UK HEI. EAI Endorsed Transactions on E-Learning. 2015;2:e1. doi: 10.4108/el.2.6.e1.

- Falloon G. From digital literacy to digital competence: The teacher digital competency (TDC) framework. Educational Technology Research & Development. 2020;68(5):2449–2472. doi: 10.1007/s11423-020-09767-4.

- Ferrari A. DIGCOMP: A Framework for Developing and Understanding Digital Competence in Europe. Publications Office of the European Union; 2013. https://publications.jrc.ec.europa.eu/repository/handle/JRC83167

- Gallardo-Echenique E., Oliveira J., Molías L.M., Esteve F. Digital competence in the knowledge society. MERLOT Journal of Online Learning and Teaching (JOLT). 2015;11:1–16.

- Government of India. Household social consumption on education in India: NSS 75th round, July 2017 - June 2018. New Delhi; 2020. http://mospi.nic.in/sites/default/files/publication_reports/Report_585_75th_round_Education_final_1507_0.pdf

- Hargittai E. Digital na(t)ives? Variation in internet skills and uses among members of the ‘net generation’. Sociological Inquiry. 2010;80(1):92–113. doi: 10.1111/j.1475-682X.2009.00317.x.

- Hasan N., Bao Y. Impact of ‘e-learning crack-up’ perception on psychological distress among college students during COVID-19 pandemic: A mediating role of ‘fear of academic year loss’. Children and Youth Services Review. 2020;118(November). doi: 10.1016/j.childyouth.2020.105355.

- Hatlevik O.E., Christophersen K.-A. Digital competence at the beginning of upper secondary school: Identifying factors explaining digital inclusion. Computers & Education. 2013;63:240–247. doi: 10.1016/j.compedu.2012.11.015.

- Hatlevik O.E., Ottestad G., Throndsen I. Predictors of digital competence in 7th grade: A multilevel analysis. Journal of Computer Assisted Learning. 2015;31(3):220–231. doi: 10.1111/jcal.12065.

- Hohlfeld T.N., Ritzhaupt A.D., Barron A.E. Are gender differences in perceived and demonstrated technology literacy significant? It depends on the model. Educational Technology Research & Development. 2013;61(4):639–663. doi: 10.1007/s11423-013-9304-7.

- Holzberger D., Philipp A., Kunter M. How teachers’ self-efficacy is related to instructional quality: A longitudinal analysis. Journal of Educational Psychology. 2013;105:774. doi: 10.1037/a0032198.

- Huang S., Fang N. Predicting student academic performance in an engineering dynamics course: A comparison of four types of predictive mathematical models. Computers & Education. 2013;61:133–145. doi: 10.1016/j.compedu.2012.08.015.

- Iivari N., Sharma S., Ventä-Olkkonen L. Digital transformation of everyday life – how COVID-19 pandemic transformed the basic education of the young generation and why information management research should care? International Journal of Information Management. 2020;55. doi: 10.1016/j.ijinfomgt.2020.102183.

- Ilomäki L., Paavola S., Lakkala M., Kantosalo A. Digital competence – an emergent boundary concept for policy and educational research. Education and Information Technologies. 2016;21:655–679. doi: 10.1007/s10639-014-9346-4.

- Janssen J., Stoyanov S., Ferrari A., Punie Y., Pannekeet K., Sloep P. Experts' views on digital competence: Commonalities and differences. Computers & Education. 2013. doi: 10.1016/j.compedu.2013.06.008.

- Kaklamanou D., Pearce J., Nelson M. Food and academies: A qualitative study. Department for Education, United Kingdom; 2012. https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/182196/SFT-00045-2012_Food_and_Academies_-_a_qualitative_study.pdf

- Kim H.J., Hong A.J., Song H.-D. The roles of academic engagement and digital readiness in students' achievements in university e-learning environments. International Journal of Educational Technology in Higher Education. 2019;16(1):21. doi: 10.1186/s41239-019-0152-3.

- Kuhlemeier H., Hemker B. The impact of computer use at home on students' internet skills. Computers & Education. 2007;49:460–480. doi: 10.1016/j.compedu.2005.10.004.

- Leahy D., Dolan D. Digital literacy: A vital competence for 2010? In: Reynolds N., Turcsányi-Szabó M., editors. Key competencies in the knowledge society. Springer Berlin Heidelberg; Berlin, Heidelberg: 2010. pp. 210–21.

- Levy D. Online, blended and technology-enhanced learning: Tools to facilitate community college student success in the digitally-driven workplace. Contemporary Issues In Education Research. 2017;10:255. doi: 10.19030/cier.v10i4.10039.

- Lischer S., Safi N., Dickson C. Remote learning and students' mental health during the covid-19 pandemic: A mixed-method enquiry. Prospects. 2021:1–11. doi: 10.1007/s11125-020-09530-w.

- Maity S., Nath Sahu T., Sen N. Panoramic view of digital education in COVID-19: A new explored avenue. Review of Education. n.d. doi: 10.1002/rev3.3250.

- López-Meneses E., Sirignano F.M., Vázquez-Cano E., Ramírez-Hurtado J.M. University students’ digital competence in three areas of the DigCom 2.1 model: A comparative study at three European universities. Australasian Journal of Educational Technology. 2020;36(3):69–88.

- Martzoukou K., Fulton C., Kostagiolas P., Lavranos C. A study of higher education students' self-perceived digital competences for learning and everyday life online participation. Journal of Documentation. 2020; ahead of print. doi: 10.1108/JD-03-2020-0041.

- Mathivanan S.K., Jayagopal P., Ahmed S., Manivannan S.S., Kumar P.J., Thangam Raja K., et al. Adoption of E-learning during lockdown in India. International Journal of System Assurance Engineering and Management. 2021. doi: 10.1007/s13198-021-01072-4.

- Ministry of Education. Steps taken by the government to improve learning levels. Government of India; 2021. https://pib.gov.in/PressReleaseIframePage.aspx?PRID=1739159

- Mishra L., Gupta T., Shree A. Online teaching-learning in higher education during lockdown period of COVID-19 pandemic. International Journal of Educational Research Open. 2020;1. doi: 10.1016/j.ijedro.2020.100012.

- Muthuprasad T., Aiswarya S., Aditya K.S., Jha G.K. Students' perception and preference for online education in India during COVID-19 pandemic. Social Sciences & Humanities Open. 2021;3(1). doi: 10.1016/j.ssaho.2020.100101.

- Olofsson A.D., Fransson G., Lindberg J. A study of the use of digital technology and its conditions with a view to understanding what ‘adequate digital competence’ may mean in a national policy initiative. Educational Studies. 2019;46:1–17. doi: 10.1080/03055698.2019.1651694.

- Pokhrel S., Chhetri R. A literature review on impact of COVID-19 pandemic on teaching and learning. Higher Education for the Future. 2021;8. doi: 10.1177/2347631120983481.

- Ramadas S., Kumar A., Singh S., Verma A., Venkatesh K., Gupta V. Data analysis tools and approaches (DATA) in agricultural sciences. ICAR-Indian Institute of Wheat and Barley Research; 2017. pp. 54–56.

- Reedy A., Boitshwarelo B., Barnes J., Billany T. Swimming with Crocs: Professional Development in a Northern Context. Refereed paper presented at ‘Strengthening partnerships in teacher education: Building community, connections and creativity’, the annual conference of the Australian Teacher Education Association (ATEA); 2015. https://atea.edu.au/?option=com_jdownloads&view=viewcategories&Itemid=132

- Ritzhaupt A., Liu F., Dawson K., Barron A. Differences in student information and communication technology literacy based on socio-economic status, ethnicity, and gender. Journal of Research on Technology in Education. 2013;45:291–307. doi: 10.1080/15391523.2013.10782607.

- Røkenes F.M., Krumsvik R.J. Prepared to teach ESL with ICT? A study of digital competence in Norwegian teacher education. Computers & Education. 2016;97:1–20. doi: 10.1016/j.compedu.2016.02.014.

- Sánchez-Caballé A., Gisbert Cervera M., Esteve-Mon F.M. The digital competence of university students: A systematic literature review. Revista de Psicologia. 2020;38(1):63–74. doi: 10.51698/aloma.2020.38.1.63-74.

- Scherer R., Siddiq F. The relation between students' socioeconomic status and ICT literacy: Findings from a meta-analysis. Computers & Education. 2019;138:13–32. doi: 10.1016/j.compedu.2019.04.011.

- Sevillano-García M.L., Vázquez-Cano E. The impact of digital mobile devices in higher education. Journal of Educational Technology & Society. 2015;18(1):106–118.

- Singh V.K. Challenges in Indian higher education. Current Science. 2021;121(3):339–340. https://www.currentscience.ac.in/Volumes/121/03/0339.pdf

- Singh V., Thurman A. How many ways can we define online learning? A systematic literature review of definitions of online learning (1988-2018). American Journal of Distance Education. 2019;33(4):289–306. doi: 10.1080/08923647.2019.1663082.

- Sintema E.J. Effect of COVID-19 on the performance of grade 12 students: Implications for STEM education. Eurasia Journal of Mathematics, Science and Technology Education. 2020;16. doi: 10.29333/ejmste/7893.

- Smith R.A. The effect of unequal group size on Tukey's HSD procedure. Psychometrika. 1971;36(1):31–34. doi: 10.1007/BF02291420.

- Stern B. A comparison of online and face-to-face instruction in an undergraduate foundations of American education course. Contemporary Issues in Technology and Teacher Education. 2004;4(2):196–213.

- Svensson M., Baelo R. Teacher students' perceptions of their digital competence. Procedia - Social and Behavioral Sciences. 2015;180:1527–1534. doi: 10.1016/j.sbspro.2015.02.302.

- Tourón J., Deborah Martin R., Enrique Navarro A., Silvia Pradas M., Victoria Iñigo M. Construct validation of a questionnaire to measure teachers' digital competence (TDC). Revista Espanola de Pedagogia. 2018;76. doi: 10.22550/REP76-1-2018-10.

- Tzafilkou K., Perifanou M., Economides A. Development and validation of students' digital competence scale (SDiCoS). International Journal of Educational Technology in Higher Education. 2022;19. doi: 10.1186/s41239-022-00330-0.

- UNESCO. COVID-19 educational disruption and response. United Nations Educational, Scientific and Cultural Organization; 2020. https://en.unesco.org/news/covid-19-educational-disruption-and-response

- Vázquez-Cano E., Urrutia M.L., Parra-González M.E., López Meneses E. Analysis of interpersonal competences in the use of ICT in the Spanish university context. Sustainability. 2020;12(2). doi: 10.3390/su12020476.

- Verizon, P.G., L.A. Taylor, and M. Lennon. n.d. “Digital Transformation A Framework for ICT Literacy A Report of the International.” https://www.ets.org/Media/Research/pdf/ICTREPORT.pdf. Accessed on 18 February 2020.

- Vukcevic N., Abramović N., Perović N. Research of the level of digital competencies of students of the University ‘Adriatic’ Bar. SHS Web of Conferences. 2021;111:1008. doi: 10.1051/shsconf/202111101008.

- Wang X., Zhang R., Wang Z., Li T. How does digital competence preserve university students' psychological well-being during the pandemic? An investigation from self-determined theory. Frontiers in Psychology. 2021;12. doi: 10.3389/fpsyg.2021.652594.

- Watkins R., Leigh D., Triner D. Assessing readiness for E‐learning. Performance Improvement Quarterly. 2004;17:66–79. doi: 10.1111/j.1937-8327.2004.tb00321.x.

- Wild S., Schulze Heuling L. How do the digital competences of students in vocational schools differ from those of students in cooperative higher education institutions in Germany? Empirical Research in Vocational Education and Training. 2020;12(1):5. doi: 10.1186/s40461-020-00091-y.

- Yang B., Huang C. Turn crisis into opportunity in response to COVID-19: Experience from a Chinese university and future prospects. Studies in Higher Education. 2021;46(1):121–132. doi: 10.1080/03075079.2020.1859687.

- Yu Z. The effects of gender, educational level, and personality on online learning outcomes during the COVID-19 pandemic. International Journal of Educational Technology in Higher Education. 2021;18(1):14. doi: 10.1186/s41239-021-00252-3.

- Zawacki-Richter O. The current state and impact of covid-19 on digital higher education in Germany. Human Behavior and Emerging Technologies. 2021;3(1):218–226. doi: 10.1002/hbe2.238.

- Zhao Y., Pinto Llorente A.M., Sánchez Gómez M.C. Digital competence in higher education research: A systematic literature review. Computers & Education. 2021;168. doi: 10.1016/j.compedu.2021.104212.