Abstract

Artificial intelligence (AI) and machine learning are changing our world through their impact on sectors including health care, education, employment, finance, and law. AI systems are developed using data that reflect the implicit and explicit biases of society, and there are significant concerns about how the predictive models in AI systems amplify inequity, privilege, and power in society. The widespread applications of AI have led to mainstream discourse about how AI systems are perpetuating racism, sexism, and classism; yet, concerns about ageism have been largely absent in the AI bias literature. Given the globally aging population and proliferation of AI, there is a need to critically examine the presence of age-related bias in AI systems. This forum article discusses ageism in AI systems and introduces a conceptual model that outlines intersecting pathways of technology development that can produce and reinforce digital ageism in AI systems. We also describe the broader ethical and legal implications and considerations for future directions in digital ageism research to advance knowledge in the field and deepen our understanding of how ageism in AI is fostered by broader cycles of injustice.

Keywords: Bias, Gerontology, Machine learning, Technology

The intersection of an aging population with rapid technological advancements has given rise to novel considerations in the realm of Artificial Intelligence (AI). As defined by Russell and Norvig, AI is the “study of agents that receive percepts from the environment and perform actions” (Russell & Norvig, 2010, p. viii).

Current research examining biases in AI is largely focused on racial and gender biases and the serious consequences that arise as a result (Zhavoronkov et al., 2019); however, little attention has been paid to age-related bias (known as ageism) in AI (Butler, 1969). Ageism is a societal bias conceptualized as (a) prejudicial attitudes toward older adult populations and the process of aging, (b) discriminatory practices against older adults, and/or (c) institutionalized policies and social practices that foster these attitudes and actions (Rosales & Fernández-Ardèvol, 2020; Wilkinson & Ferraro, 2002). The pervasiveness of ageism has been highlighted in the coronavirus disease 2019 (COVID-19) pandemic, during which older adults were considered to be the sickest and most vulnerable population (Vervaecke & Meisner, 2021). A report from the World Health Organization (WHO) and United Nations (UN) calls for urgent action to combat ageism because of its negative effects on well-being and its links to premature death and higher health costs (WHO & UN, 2021). As noted in the WHO and UN (2021) report, scarce health care resources are sometimes allocated based on age, which means that an individual’s age may influence whether or not they receive an essential health intervention. With biases in AI recognized as a critical problem requiring urgent action, it is essential to invest in evidence-based strategies to prevent and tackle age-related bias in AI systems. These strategies can inform future legal and social policy developments to help mitigate this bias and advance social equity. In this Forum, we introduce the term digital ageism, which we define as age bias in technology such as AI, and discuss the mechanisms that lead to biases in AI systems. In the subsequent sections, we describe ageism in AI systems, broader ethical and legal implications, and considerations for future directions in research.

Biases in AI Systems

AI has experienced exceptional advancements in its ability to learn and reason and accordingly has been described as the “fastest-moving technology” (Brown, 2020). As a tool, there are no inherent limits to the potential range of uses for AI. At their most fundamental, AI tools work by subjecting large data sets—the bigger the better—to rapid machine learning algorithms capable of pattern recognition, statistical correlation, prediction, inference, and problem-solving (Presser et al., 2021). A recent report indicates that a “digital world” of more than 2.5 quintillion bytes of data is produced each day (O’Keefe et al., 2020). As a result of its immense capability to process data for predictive modeling, AI has been touted for its transformative potential and has become increasingly salient as a matter of public and political interest. The ability of AI to supplement human decision making at super speed and on a large population or global scale positions AI to fundamentally change the nature of the global economy (Margetts & Dorobantu, 2019; Presser et al., 2021).

Notwithstanding its immense promise, AI applications released to the public are not free from racial and gender biases (Chen, Szolovits & Ghassemi, 2019; Howard & Borenstein, 2018). For instance, a widely deployed AI algorithm was shown to underestimate the health risks of Black patients compared to White patients (Obermeyer et al., 2019). The algorithm’s prediction was based on individuals’ health care costs, but it failed to consider the primary cause of Black patients’ lower spending on health care which is reduced health care access due to systemic racism. Other instances of racial bias include AI systems assigning longer jail sentences to Black inmates (Angwin et al., 2016) and imprecise facial recognition algorithms misidentifying Black faces at a 5 times higher rate than White faces (Simonite, 2019). AI bias against women has also been identified with serious socioeconomic consequences including women being less likely to receive job search advertisements for high-paying positions (Dastin, 2018) and job discrimination (Datta et al., 2015). This bias can be attributed to the way AI’s predictive algorithms learn from not only quantitative data but also text (i.e., corpus), which insidiously encodes historical–cultural associations that result in semantic biases, such as associations between stereotypical male names and working in the labor force or, conversely, female names and family/child-rearing (Caliskan et al., 2017).

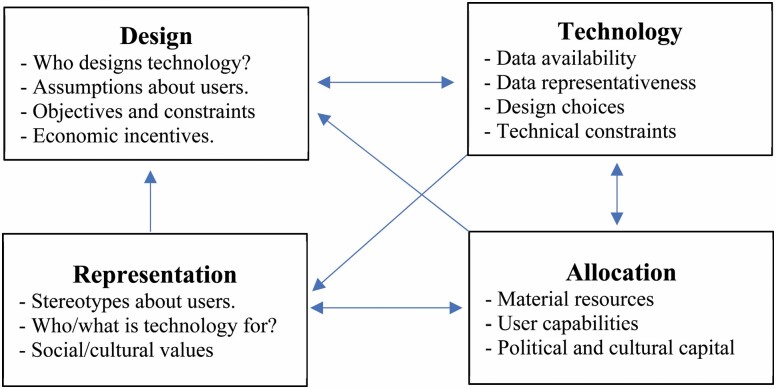

One of the earliest definitions of bias in computer systems refers to a system’s ability to “systematically and unfairly discriminate against certain individuals or groups of individuals in favor of others. A system discriminates unfairly if it denies an opportunity or a good or if it assigns an undesirable outcome to an individual or group of individuals on grounds that are unreasonable or inappropriate” (Friedman & Nissenbaum, 1996, p. 332). Two unique types of undesirable outcomes can result from algorithmic bias: harms of allocation and harms of representation (Crawford, 2017). Harms of allocation refer to the distribution of resources and opportunities. This includes opportunities like when to be released on bail, receiving notification about potential job prospects, and access to health care resources or services. In contrast, harms of representation refer to how different groups or identities are represented and perceived by society. It is important to note that the underlying causes of these types of harms are complex. While technical factors, such as biased data and design choices, play an important role, biases can also arise from the context of use, for example, how human users interpret system outputs or from a mismatch between the capabilities and values assumed in the design of the system and those of its actual users (Danks & London, 2017; Friedman & Nissenbaum, 1996). These contextual factors can reflect underlying individual and social biases from as early on as technology inception, like who is involved in the design of technologies and the assumptions they make about end-users, to technology use by end-users, who have discrepancies in resources and capabilities to use existing technologies that affect what kind of data (and about whom) is readily collected. 
All of these factors are in turn shaped by both the allocative and representational effects of existing technologies, potentially creating a “cycle of injustice” (Whittlestone et al., 2019), where technological, individual, and social biases interact to produce and mutually reinforce each other (Figure 1). In the literature examining biases in AI, age-related bias is seldom discussed in comparison to racial and gender biases. It is time to critically reflect on and consider the experience of ageism in AI: the process of growing old in an increasingly digital world that directly and insidiously reinforces social inequities, exclusion, and marginalization. The next sections will focus on the digital divide, cycles of injustice that reinforce ageism, and the ethical and legal aspects of digital ageism.

Figure 1.

Cycles of injustices in how technology is developed, applied, and understood by members of society (Whittlestone et al., 2019).

Ageism and the Digital Divide

Both the development and use of technology have excluded older adults, producing a “physical–digital divide,” which exists when a group feels ostracized when they are unable to engage with the technologies being used around them (Ball et al., 2017). The social exclusion of older adults from the development and use of digital platforms results in data symptomatic of age-related bias in AI (Rosales & Fernández-Ardèvol, 2020; Wilkinson & Ferraro, 2002). There is a misconception that older adults are a homogenous group of people who are “in decline,” incompetent, and in need of younger people’s guidance when it comes to technology (Mannheim et al., 2019). Furthermore, these paternalistic stereotypes and patronizing sentiments contribute to harmful compassionate ageism—“stereotypes concerning older persons that have permeated public rhetoric” (Binstock, 1983)—which is then reinforced and internalized by older adults (Vervaecke & Meisner, 2021). Internalized negative stereotypes can cause older adults to experience a decline in cognitive (e.g., memory) and psychological performances (Hehman & Bugental, 2015; Hess et al., 2003).

Furthermore, in a society where AI is becoming increasingly prevalent, older adults are at risk of further social exclusion and retrogression due to a digital divide (Rosales & Fernández-Ardèvol, 2020). The risk of a gap or distinction that delineates this aging population according to those with access to information technology and those without grows as technology advances (Srinuan & Bohlin, 2011). While older adults are using technology in greater numbers (Anderson et al., 2017) and benefitting from technology use (Anguera et al., 2017; Cotten et al., 2011; Czaja et al., 2018; Decker et al., 2019; Harerimana et al., 2019; Hurling et al., 2007; Irvine et al., 2013; Tomasino et al., 2017; White et al., 2002), they continue to be the least likely age cohort to have access to a computer and the internet due to physical barriers (e.g., physical disability) and/or psychological factors (e.g., lack of confidence in using technology; Anderson et al., 2017; Tomasino et al., 2017). One report from the European Union indicates that one third of older adults report never using the internet (Anderson et al., 2017). A survey of 17 European countries showed that internet use in older adults varied depending on location and age, with the rates of internet nonusers increasing with each decade of age (König et al., 2018). Results show that 52% of individuals 65 years and older were internet nonusers and the percentage of internet nonusers increased to 92% in those 80–84 years old, indicating that “many older Europeans do not use the Internet and are particularly affected by the digital divide” (König et al., 2018, p. 626). Similarly, in Toronto, Canada, residents aged 60 and older report having lower rates of access to home internet compared to younger residents, with those who have access experiencing internet speeds below the Canadian national target of 50 Mbps (Andrey et al., 2021).
Additionally, almost one third (30%) of this older adult cohort lack a device through which they can connect to the internet (Andrey et al., 2021). Older people may also experience more disparities in material access to technologies, education, and support to learn new technology (Ball et al., 2017; Cronin, 2003; Lagacé et al., 2015). For some older adults, the challenge to learn to use technology and the fear that technology will fail to work when most needed can be stressful (Cotten et al., 2011).

Ageist Cycles of Injustice in Digital Technologies

The barriers to technological access outlined above offer possible explanations for the exclusion of older adults from the research, design, and development process of digital technologies (Baum et al., 2014; Kanstrup & Bygholm, 2019; Lagacé et al., 2015). Older adults are sometimes referred to as “invisible users” in the literature, alluding to their exclusion from the process of technology design, which makes their interests and values invisible (Kanstrup & Bygholm, 2019; Rosales & Fernández-Ardèvol, 2019). Their perspectives are unlikely to be considered, or are considered inaccurately, during technology design or product development, activities that are dominated by younger people. Research by Charness (1990, 1992, 2009, 2020) highlights a misalignment of person–system fit that is generated when normative age-related changes, such as those in perception, cognition, and psychomotor abilities, are not accounted for, which contributes to older adults’ low adoption rates and suboptimal user experiences. The impact of this mismatch will intensify over time as society transitions to an increased use of technology (e.g., health care technologies, information and communication technologies), leaving older adults further behind in a technology-enabled world.

Additionally, ageist attitudes (Abbey & Hyde, 2009), which manifest in marketing and research studies (Ayalon & Clemens, 2018), influence the design of technology through a historical exclusion of older adults, particularly at arbitrary upper age limits (50+ or 60+) (Mannheim et al., 2019). The perception of older adults as a homogenous group potentially results in a loss of recognizing the nuanced needs of older people. Moreover, a disproportionate amount of information technology targets older adults specifically for health care and chronic disease management (Mannheim et al., 2019), rather than for leisure, joy, or fun. The underlying assumption of this phenomenon is that older adults are unhealthy and that managing health conditions is the only reason that they may seek to use and benefit from technology. This assumption could consequently create a feedback loop that reinforces negative stereotypes. Specifically, if most technologies marketed toward older adults are designed to resolve or manage health problems, then this could easily reinforce the impression that older adults are mainly unhealthy, in need of support, and/or in decline. There is evidence of significant age bias as demonstrated by Díaz et al. (2018) who used sentiment analysis on a large corpus of text data from Wikipedia, Twitter, and web crawling the internet. Díaz et al. (2018) found age-related bias with respect to explicit and implicit encoded ageist stereotypes. For example, sentences containing “young” were 66% more likely to be scored positively than the same sentences containing “old” when controlling for other sentential content, and in their analysis of word embedding to explore implicit bias, they found “youth” was associated with words like “courageous” and the words “old” and “older” were associated with “stubborn” and “obstinate.” Another effect is that the data collected from these technologies end up representing only a segment of older adults with health issues. 
This selection bias does not enable technologies to capture the heterogeneity of the aging population, causing a mismatch between targeted technology such as AI and the actual needs of older adults (Crawford, 2017).
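The kind of test described above, in which otherwise identical sentences are scored once with “young” and once with “old,” can be illustrated with a minimal sketch. The scorer, its lexicon weights, and the sentence templates below are hypothetical stand-ins chosen for illustration. They are not the models, data, or procedure used by Díaz et al. (2018), and the toy lexicon deliberately assigns “old” a negative weight to mimic an encoded bias.

```python
# Toy lexicon standing in for a real sentiment model. The weights are
# hypothetical; "old" is given a negative weight to mimic an encoded bias.
TOY_LEXICON = {"great": 1.0, "friendly": 0.5, "slow": -0.5, "old": -0.5, "young": 0.5}


def toy_sentiment(sentence: str) -> float:
    """Sum the lexicon weights of the words in a sentence (hypothetical scorer)."""
    words = sentence.lower().replace(".", "").split()
    return sum(TOY_LEXICON.get(word, 0.0) for word in words)


def age_term_gap(templates, scorer, young_term="young", old_term="old"):
    """Mean score difference between the 'young' and 'old' version of each template."""
    gaps = []
    for template in templates:
        young_score = scorer(template.format(age=young_term))
        old_score = scorer(template.format(age=old_term))
        gaps.append(young_score - old_score)
    return sum(gaps) / len(gaps)


# Sentences that differ only in the age term, so any gap is due to that term.
TEMPLATES = [
    "The {age} customer was friendly.",
    "A {age} driver had a great day.",
    "My {age} neighbor is slow to reply.",
]

gap = age_term_gap(TEMPLATES, toy_sentiment)
print(f"mean young-minus-old sentiment gap: {gap:+.2f}")
```

A gap near zero would suggest that the scorer treats the two age terms alike on these templates, whereas a consistently positive gap indicates that the age term alone shifts the sentiment score. In practice, the toy scorer would be replaced by the sentiment tool under audit.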

Taken together, there is not enough data from older adults available for training AI models, and the corpus that is available shows an explicit and implicit age-related bias (Díaz et al., 2018). Problems arise when the corpus may be mined by algorithms to understand attitudes toward or about products or services, and the “sentiment output is less positive simply because the sentences describe an older person taking part in an interaction” (Díaz et al., 2018, p. 9). This can result in further bias that leads to nongeneralizable AI models and the development of future AI systems that ignore the use, interests, and values of older adults while reinforcing or amplifying existing disadvantages (Coiro, 2003). In addition, this bias could influence or reduce the products or services targeted for older individuals (Díaz et al., 2018).

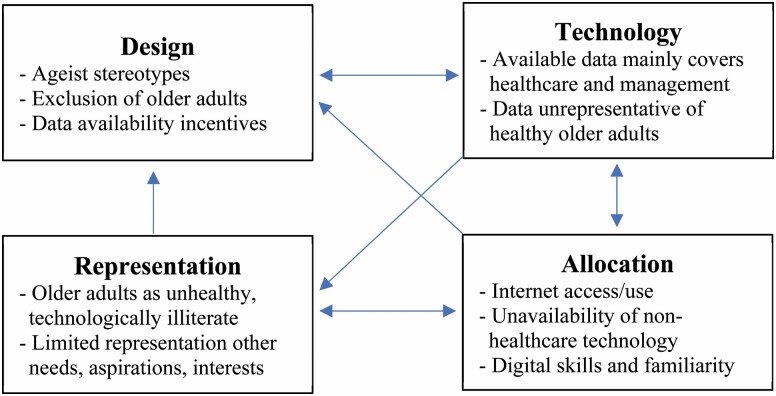

AI systems can produce and reinforce ageist biases through multiple pathways. Addressing bias requires a deeper understanding of how ageism fits into a broader cycle of injustice as illustrated in Figure 2. Existing stereotypes of older adults as unhealthy and/or technologically incompetent (Representation) affect the assumptions made about older adults, which can lead to the exclusion of older adults from research and design processes (Design). Ageist stereotypes are further reinforced by the fact that new information technologies for older adults mostly focus on health and health care management (Design/Technology). The digital divide (Allocation), together with patterns in existing applications, results in data sets that inaccurately represent healthy older adults (Technology). These biased data sets incentivize further technology development that primarily focuses on health care needs (Design). The limited availability of digital technologies serving other needs, interests, and aspirations of older adults can further entrench the digital divide (Allocation).

Figure 2.

How cycles of injustice in digital technologies result in digital ageism.

In this way, new systems reinforce inequality and magnify societal exclusion for subsets of the population who are considered a “digital underclass” (Petersen & Bertelsen, 2017), primarily made up of older, poor, racialized, and marginalized groups. This raises questions about how older adults are included and viewed in our increasingly digital world, and how our societal structures that enforce ageism are represented in AI systems. There is a pressing need to address these foundational questions, especially with the surge of digital technology use during the COVID-19 pandemic (De' et al., 2020).

Ethical and Legal Implications of Ageism in AI

Ageism is an overlooked bias within AI ethics. This is evident from our search of the AI Ethics Guidelines Global Inventory (AlgorithmWatch, 2021), a repository that compiles documents about how AI systems can conduct ethical automated decision making. Most of these guidelines highlight fairness as a key governing ethical principle; fairness typically incorporates considerations of equity and justice. In the repository, there are 146 documents created by government, private, civil society, and international organizations, which are accessible and available in English. The research team searched these documents for the terms ageism and similar concepts like age bias, age, old/older, senior(s), and elderly. We found that only 34 (23.3%) of these documents mention ageism as a bias for a total of 53 unique mentions. Of these, 19 (54.7%) merely listed “age” as part of a general list of protected characteristics. For example, the UNI Global Union Top 10 Principles for Ethical AI (2018) states “In the design and maintenance of AI, it is vital that the system is controlled for negative or harmful human bias, and that any bias—be it gender, race, sexual orientation, age, etc.—is identified and is not propagated by the system” (p. 8). Only 12 (8.2%) of the examined documents provided slightly more context about bias against older adults, often no more than one or two sentences. For example, the Academy of Medical Royal Colleges’ Artificial Intelligence in Healthcare report (2019) states “It might be argued that the level of regulation should be varied according to the risks—for example psychiatric patients, the young and the elderly [sic] might be at particular risk from any ‘bad advice’ from digitised systems” (p. 28).

Ultimately, our overview of these documents demonstrates that ageism directed toward older adults is insufficiently recognized as a specific and unique ethical implication of AI in current literature. To ensure that AI is developed in an ethically defensible manner, such that it promotes equity and rejects unjust bias, this implication ought to be explicitly recognized and addressed. As indicated in previous sections of this Forum article, failing to appropriately involve and accurately represent older people leads to a digital divide that may contribute to further preventable inequities.

One significant concern about failing to respond to ageism in AI relates to the presence of ageism in AI-powered hiring systems. Consider for example an AI-powered résumé-screening tool that excludes job candidates based on their date of graduation. In 2017, AI-driven hiring platforms including Jobr were under investigation for prohibiting applicants from selecting either graduation year or any first job before 1980 (Ajunwa, 2019). Similarly, an algorithm may prioritize young, male applicants to reflect the current employee composition of an organization in an attempt to emulate the employer’s past hiring behavior, and in doing so, perpetuate preexisting biases (Kuei & Mixon, 2020). From an ethical and legal perspective, providing people with a fair opportunity is often considered an important part of what it means to treat people equally and justly (UN, 1945). Failing to provide suitable individuals with the ability to pursue a career opportunity on the basis of immutable characteristics (e.g., graduation year, gender) with no bearing on ability directly opposes the fair equality of opportunity principle.

The widespread use of AI tools to make recommendations with transformative consequences for individuals and society has given rise to an “urgent set of legal questions and concerns” (Presser et al., 2021). These concerns include security, fairness, bias and discrimination, legal personhood, intellectual property, privacy and data protection, and liability for damages (Rodrigues, 2020). There is growing recognition of the need for “normative frameworks for the development and deployment of AI” (Martin-Bariteau & Scassa, 2021). Regulatory governance frameworks are important in preventing and mitigating harm occasioned by the deployment of AI algorithms and can outline the legal recourse available to an aggrieved individual or entity. In the development context, regulatory governance frameworks provide guidance for the ethical development and deployment of AI (including recognizing and minimizing embedded bias).

In recent years, a wave of lawsuits has plagued major employers like Google and LinkedIn who used software algorithms to target internet job advertisements to younger applicants, excluding applicants older than 40 years (Ajunwa, 2018). There have also been multiple lawsuits and settlements based on Facebook’s paid advertisement platform, which enabled advertisers to micro-target ads to exclude users based on protected classes, such as age, in violation of federal and state civil rights laws (American Civil Liberties Union, 2019). These discriminatory advertising practices prevented older people from seeing ads for job opportunities, ostensibly denying them the opportunity for employment.

Stakeholders and regulators face unique challenges in AI regulation and governance. There is no uniform global legal code for AI governance. International sources of AI law may be persuasive in other jurisdictions but will not be binding. This means that lawmakers may look internationally for guidance on how other states or countries have navigated the challenges posed by the proliferation of AI, but will ultimately have to develop and implement regulatory systems that accord with their own legal structures. For example, the proposed Canadian Digital Charter Implementation Act (2020) was modeled on the European Union’s General Data Protection Regulation (2016).

Developing laws and regulations for technology poses global challenges with regard to their application within and across national boundaries. For example, in the Canadian context, governments and regulators must grapple with regulating AI within our federal and constitutional setting (Martin-Bariteau & Scassa, 2021) because powers over health care and human rights are shared between federal and provincial governments. As a result, “[c]oherent, consistent and principled AI regulation in Canada [necessitates] considerable federal-provincial co-operation as well as strong inter agency collaboration—both that may be difficult to count on” (Martin-Bariteau & Scassa, 2021). Beyond jurisdictional issues, governments have sought to balance competing regulatory interests, including the need to protect the public and the need to exercise regulatory restraint so as not to stifle innovation (Martin-Bariteau & Scassa, 2021). Adding to this challenge, some AI algorithms are proprietary and thus are afforded intellectual property protections. These intellectual property protections have precluded aggrieved individuals (including criminal defendants) from having access to and examining the AI algorithm (see State v Loomis 881 N.W.2d 749 (Wis. 2016) 754 (US)). The owners of AI algorithms behind many social, political, and legal applications of AI have used intellectual property protections to avoid legal and research scrutiny.

Transparency and careful examination for age-related bias (such as through research) are required given the complexity of AI systems; without a deeper investigation, we are not able to assess from a legal standpoint whether these systems are perpetuating the ageism that is pervasive in society. Ultimately, the concern is that AI will simply “reproduce existing hierarchies and vulnerabilities of social relations …” with regard to age and in a manner that avoids scrutiny through obscurity and lack of transparency (Martin-Bariteau & Scassa, 2021). Even with its widespread adoption, there is very little training, support, auditing, or oversight of AI-driven activities from a regulatory or legal perspective (Presser et al., 2021), and Canada’s current AI regulatory regime is lagging (Martin-Bariteau & Scassa, 2021). With the regulation of AI in Canada in its relative infancy, it remains unclear whether existing legal frameworks are sufficient to protect or offer any meaningful recourse to those who are victims of ageist bias occurring because of the use of AI.

Looking Ahead

Although much of the discussion about AI and bias has focused on its potential to cause harm, we are optimistic that AI can be developed to mitigate human bias. In the area of employment, for example, new AI-based hiring platforms can help overcome human recruiter bias by detecting qualified candidates who may be overlooked in traditional hiring processes that use resumes and cover letters (Wiggers, 2021). More technology development research is also being conducted with older adults (Chu et al., 2021; Harrington et al., 2018), but there is a need for continued analysis of the process to address aspects of ageism (Mannheim et al., 2019). Additionally, mitigating biases in health care is an area gaining more attention. In this context, the validation of the representativeness of the data set is suggested as the best approach to combat algorithmic bias (Ho et al., 2020). Looking ahead, we remain optimistic that the bias of digital ageism can be acknowledged and addressed through a multifaceted approach. First and foremost, from the lens of critical gerontology, it is crucial to include older adults throughout the pipeline when developing AI systems. This will require addressing structural issues such as access, time, training, and the means to participate in research and development, as well as existing funding constraints of research grants and technology development (Grenier et al., 2021). Next, an interdisciplinary approach is warranted, one in which gerontologists, social scientists, philosophers, legal scholars, ethicists, clinicians, and technologists work collaboratively and lend their expertise to address digital ageism. An interdisciplinary and critical examination of age as a bias is necessary to capture the full picture for effective AI deployment, especially in the context of the COVID-19 pandemic, where, in some jurisdictions, age was the sole criterion for health care access and lifesaving treatments (WHO & UN, 2021).
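One simple way to picture a representativeness check of the kind mentioned above is to compare each age group’s share of a data set with its share of a reference population. The sketch below rests on stated assumptions: the age bands, the reference shares, the sample counts, and the 0.8 flagging threshold are all hypothetical values chosen for illustration, and the approach is not drawn from Ho et al. (2020).

```python
from collections import Counter


def representation_ratios(sample_groups, reference_shares):
    """Ratio of each group's share of the sample to its share of the reference
    population. A ratio below 1 means the group is under-represented."""
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: (counts.get(group, 0) / total) / share
        for group, share in reference_shares.items()
    }


# Hypothetical numbers for illustration only (not taken from any cited study).
REFERENCE_SHARES = {"18-39": 0.40, "40-64": 0.40, "65+": 0.20}
SAMPLE = ["18-39"] * 60 + ["40-64"] * 35 + ["65+"] * 5

ratios = representation_ratios(SAMPLE, REFERENCE_SHARES)

# The 0.8 cut-off is an arbitrary choice for this sketch, not an established standard.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]

print(ratios)
print("under-represented groups:", flagged)
```

In this hypothetical sample, adults aged 65 and older make up 5% of the records against an assumed 20% of the reference population, so the group is flagged. A check like this only addresses how many older adults appear in the data; it does not show whether the older adults who are included reflect the heterogeneity of the aging population.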

There is an urgency and opportunity to better understand and address digital ageism. To date, the AI developed may be insufficient to meet the needs of older adults and may prove to be exclusionary and discriminatory. However, there is also an opportunity to develop programs and mechanisms that include older adults and to delineate what is fair and ethical with regard to AI. This is especially the case given the sociocultural shift where more and more people will, and are expected to, incorporate technology into their lives to remain connected to our technology-enabled world. Projections show that older adults are likely to make up the largest proportion of technology (e.g., health related, information and communication) consumers in the future as today’s tech-savvy adults grow older (Foskey, 2001; Kanstrup & Bygholm, 2019; Rosales & Fernández-Ardèvol, 2019). The COVID-19 pandemic was a significant accelerator of technology use and uptake for day-to-day needs (e.g., online groceries, shopping, health care) and social communication. Such ubiquitous use of technology (De' et al., 2020) indicates that there is an increased number of people who are likely to be both excluded from these means of communication and affected by implicit biases in current AI systems. Together, these conditions underscore the need for more research on digital ageism.

For future directions, our research team will establish a multiphase research program to further explore the extent of ageism in AI and develop insights about the potential for age-related bias in AI applications that can perpetuate social inequity for older adults. We aim to expand on the described conceptual framework of how older adults experience ageism in and through AI to raise broad awareness of this bias and contribute to a more socially conscious approach to AI development. As the current younger generation may have grown up with widespread access to information and communication technologies like computers, social media, and the internet (referred to as “digital natives” [International Telecommunication Union, 2013; UN]), it is expected that these tech-savvy end-users will have greater expectations for fair and just AI applications as older adults in the future. To meet these future expectations, our interdisciplinary team aims to create data sets with more representations of older adults for fair algorithm development of AI technologies like facial recognition. Furthermore, we will develop partnerships with older adults’ organizations, governments, AI researchers and developers, and other stakeholders to shape legal and social policy with the aim of reducing technology-driven exclusion and inequities for older adults.

Conclusions

Ageism is a bias that currently remains understudied in AI research. The exclusion of older adults from technology development maintains a broader cycle of injustice including societal ageist attitudes and exacerbates the digital divide. Thus, we urge future AI development and research to consider and include digital ageism as a concept in the research and policy agenda toward building fair and ethical AI.

Contributor Information

Charlene H Chu, Lawrence S. Bloomberg Faculty of Nursing, University of Toronto, Toronto, Ontario, Canada; KITE—Toronto Rehabilitation Institute, University Health Network, Toronto, Ontario, Canada.

Rune Nyrup, Leverhulme Centre for the Future of Intelligence, University of Cambridge, Cambridge, UK.

Kathleen Leslie, Faculty of Health Disciplines, Athabasca University, Athabasca, Alberta, Canada.

Jiamin Shi, Lawrence S. Bloomberg Faculty of Nursing, University of Toronto, Toronto, Ontario, Canada; Dalla Lana School of Public Health, University of Toronto, Toronto, Ontario, Canada.

Andria Bianchi, Dalla Lana School of Public Health, University of Toronto, Toronto, Ontario, Canada; University Health Network, Toronto, Ontario, Canada.

Alexandra Lyn, Faculty of Health Disciplines, Athabasca University, Athabasca, Alberta, Canada.

Molly McNicholl, University of Cambridge, Cambridge, UK; London School of Hygiene and Tropical Medicine, University of London, London, UK.

Shehroz Khan, KITE—Toronto Rehabilitation Institute, University Health Network, Toronto, Ontario, Canada; Institute of Biomedical Engineering, University of Toronto, Toronto, Ontario, Canada.

Samira Rahimi, Department of Family Medicine, McGill University, Montreal, Quebec, Canada; Mila—Quebec AI Institute, Montréal, Quebec, Canada.

Amanda Grenier, Factor-Inwentash Faculty of Social Work, University of Toronto, Toronto, Ontario, Canada; Baycrest Hospital, Toronto, Ontario, Canada.

Funding

The work was led by Dr. C. H. Chu (Principal Investigator) and funded by the Social Sciences and Humanities Research Council in Canada (grant number 00362).

Conflict of Interest

None declared.

References

- Abbey, R., & Hyde, S. (2009). No country for older people? Age and the digital divide. Journal of Information, Communication and Ethics in Society, 7(4), 225–242. doi: 10.1108/14779960911004480

- Academy of Royal Medical Colleges. (2019). Artificial intelligence in healthcare. https://www.aomrc.org.uk/reports-guidance/artificial-intelligence-in-healthcare/

- Ajunwa, I. (2018). How artificial intelligence can make employment discrimination worse. The Independent. https://suindependent.com/artificial-intelligence-can-make-employment-discrimination-worse/

- Ajunwa, I. (2019). Beware of automated hiring. The New York Times. https://www.nytimes.com/2019/10/08/opinion/ai-hiring-discrimination.html

- AlgorithmWatch. (2021). AI ethics guidelines global inventory. https://algorithmwatch.org/en/ai-ethics-guidelines-global-inventory/

- American Civil Liberties Union. (2019). Facebook agrees to sweeping reforms to curb discriminatory ad targeting practices. https://www.aclu.org/press-releases/facebook-agrees-sweeping-reforms-curb-discriminatory-ad-targeting-practices

- Anderson, M., & Perrin, A. (2017). Tech adoption climbs among older adults. Washington, DC: Pew Research Center for Internet & Technology. https://www.pewresearch.org/internet/wp-content/uploads/sites/9/2017/05/PI_2017.05.17_Older-Americans-Tech_FINAL.pdf

- Andrey, S., Masoodi, M. J., Malli, N., & Dorkenoo, S. (2021). Mapping Toronto’s digital divide (Issue January). https://brookfieldinstitute.ca/mapping-torontos-digital-divide/

- Anguera, J. A., Gunning, F. M., & Areán, P. A. (2017). Improving late life depression and cognitive control through the use of therapeutic video game technology: A proof-of-concept randomized trial. Depression and Anxiety, 34(6), 508–517. doi: 10.1002/da.22588

- Angwin, J., Kirchner, L., Larson, J., & Mattu, S. (2016). Machine bias: There’s software used across the country to predict future criminals. And it’s biased against blacks. ProPublica.

- Ayalon, L., & Tesch-Römer, C. (Eds.). (2018). Contemporary perspectives on ageism (Vol. 19). Springer International Publishing. doi: 10.1007/978-3-319-73820-8

- Ball, C., Francis, J., Huang, K.-T., Kadylak, T., Cotten, S. R., & Rikard, R. V. (2017). The physical–digital divide: Exploring the social gap between digital natives and physical natives. Journal of Applied Gerontology, 38(8), 1167–1184. doi: 10.1177/0733464817732518

- Baum, F., Newman, L., & Biedrzycki, K. (2014). Vicious cycles: Digital technologies and determinants of health in Australia. Health Promotion International, 29(2), 349–360. doi: 10.1093/heapro/das062

- Binstock, R. H. (1983). The Donald P. Kent memorial lecture. The aged as scapegoat. The Gerontologist, 23(2), 136–143. doi: 10.1093/geront/23.2.136

- Brown, P. (2020). Artificial intelligence: The fastest moving technology. New York Law Journal. https://www.law.com/newyorklawjournal/2020/03/09/artificial-intelligence-the-fastest-moving-technology/

- Butler, R. N. (1969). Age-ism: Another form of bigotry. The Gerontologist, 9(4), 243–246. doi: 10.1093/geront/9.4_part_1.243

- Caliskan, A., Bryson, J. J., & Narayanan, A. (2017). Semantics derived automatically from language corpora contain human-like biases. Science (New York, N.Y.), 356(6334), 183–186. doi: 10.1126/science.aal4230

- Charness, N., & Boot, W. R. (2009). Aging and information technology use: Potential and barriers. Current Directions in Psychological Science, 18(5), 253–258. doi: 10.1111/j.1467-8721.2009.01647.x

- Charness, N., & Bosman, E. A. (1990). Human factors and design for older adults. In Birren J. & Schaie W. (Eds.), Handbook of the psychology of aging (3rd ed., pp. 446–464). Academic Press.

- Charness, N., & Bosman, E. A. (1992). Age and human factors. In Craik F. I. M. & Salthouse T. A. (Eds.), The handbook of aging and cognition (pp. 495–551). Erlbaum.

- Charness, N., Yoon, J. S., & Pham, H. (2020). Designing products for older consumers: A human factors perspective. In The aging consumer (pp. 215–234). Routledge. doi: 10.4324/9780429343780

- Chen, I. Y., Szolovits, P., & Ghassemi, M. (2019). Can AI help reduce disparities in general medical and mental health care? AMA Journal of Ethics, 21(2), E167–E179. doi: 10.1001/amajethics.2019.167

- Chu, C. H., Biss, R. K., Cooper, L., Quan, A. M. L., & Matulis, H. (2021). Exergaming platform for older adults residing in long-term care homes: User-centered design, development, and usability study. JMIR Serious Games, 9(1), e22370. doi: 10.2196/22370

- Coiro, J. (2003). Reading comprehension on the Internet: Expanding our understanding of reading comprehension to encompass new literacies. The Reading Teacher, 56(5), 458–464. https://www.jstor.org/stable/20205224

- Cotten, S. R., McCullough, B. M., & Adams, R. G. (2011). Technological influences on social ties across the lifespan S. https://www.springerpub.com/

- Crawford, K. (2017). The trouble with bias—NIPS 2017 Keynote—Kate Crawford #NIPS2017. The Artificial Intelligence Channel. https://www.youtube.com/watch?v=fMym_BKWQzk

- Cronin, B. (2003). The digital divide a complex and dynamic phenomenon. The Information Society, 19(4), 315–326. doi: 10.1080/01972240390227895

- Czaja, S. J., Boot, W. R., Charness, N., Rogers, W. A., & Sharit, J. (2018). Improving social support for older adults through technology: Findings from the PRISM randomized controlled trial. The Gerontologist, 58(3), 467–477. doi: 10.1093/geront/gnw249

- Danks, D., & London, A. J. (2017). Algorithmic bias in autonomous systems. IJCAI international joint conference on artificial intelligence, pp. 4691–4697. doi: 10.24963/ijcai.2017/654

- Dastin, J. (2018). Amazon scraps secret AI recruiting tool that showed bias against women. Reuters. https://www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK08G

- Datta, A., Tschantz, M. C., & Datta, A. (2015). Automated experiments on ad privacy settings. Proceedings on Privacy Enhancing Technologies, 2015(1), 92–112. doi: 10.1515/popets-2015-0007

- De’, R., Pandey, N., & Pal, A. (2020). Impact of digital surge during COVID-19 pandemic: A viewpoint on research and practice. International Journal of Information Management, 55, 102171. doi: 10.1016/j.ijinfomgt.2020.102171

- Decker, V., Valenti, M., Montoya, V., Sikorskii, A., Given, C. W., & Given, B. A. (2019). Maximizing new technologies to treat depression. Issues in Mental Health Nursing, 40(3), 200–207. doi: 10.1080/01612840.2018.1527422

- Díaz, M., Johnson, I., Lazar, A., Piper, A. M., & Gergle, D. (2018, April). Addressing age-related bias in sentiment analysis. In Proceedings of the 2018 CHI conference on human factors in computing systems, Montreal, Quebec, Canada, pp. 1–14. doi: 10.1145/3173574.3173986

- Digital Charter Implementation Act. (2020). Bill C-11, 2nd Session, 43rd Parliament. https://parl.ca/DocumentViewer/en/43-2/bill/C-11/first-reading

- Foskey, R. (2001). Technology and older people: Overcoming the great divide. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.199.6890&rep=rep1&type=pdf

- Friedman, B., & Nissenbaum, H. (1996). Bias in computer systems. ACM Transactions on Information Systems, 14(3), 330–347.

- General Data Protection Regulation. (2016). Regulation 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC, OJ L 119 at art. 3. https://eur-lex.europa.eu/eli/reg/2016/679/oj

- Grenier, A., Gontcharov, I., Kobayashi, K., & Burke, E. (2021). Critical knowledge mobilization: Directions for social gerontology. Canadian Journal on Aging, 40(2), 344–353. doi: 10.1017/S0714980820000264

- Harerimana, B., Forchuk, C., & O’Regan, T. (2019). The use of technology for mental healthcare delivery among older adults with depressive symptoms: A systematic literature review. International Journal of Mental Health Nursing, 28(3), 657–670. doi: 10.1111/inm.12571

- Harrington, C. N., Wilcox, L., Connelly, K., Rogers, W., & Sanford, J. (2018, May). Designing health and fitness apps with older adults: Examining the value of experience-based co-design. In Proceedings of the 12th EAI international conference on pervasive computing technologies for healthcare, New York, NY, USA, pp. 15–24. doi: 10.1145/3240925.3240929

- Hehman, J. A., & Bugental, D. B. (2015). Responses to patronizing communication and factors that attenuate those responses. Psychology and Aging, 30(3), 552–560. doi: 10.1037/pag0000041

- Hess, T. M., Auman, C., Colcombe, S. J., & Rahhal, T. A. (2003). The impact of stereotype threat on age differences in memory performance. The Journals of Gerontology, Series B: Psychological Sciences and Social Sciences, 58(1), 3–11. doi: 10.1093/geronb/58.1.p3

- Ho, C., Martin, M., Ratican, S., Teneja, D., & West, S. (2020). How to mitigate algorithmic bias in healthcare. MedCityNews. https://medcitynews.com/2020/08/how-to-mitigating-algorithmic-bias-in-healthcare/

- Howard, A., & Borenstein, J. (2018). The ugly truth about ourselves and our robot creations: The problem of bias and social inequity. Science and Engineering Ethics, 24(5), 1521–1536. doi: 10.1007/s11948-017-9975-2

- Hurling, R., Catt, M., Boni, M. D., Fairley, B. W., Hurst, T., Murray, P., Richardson, A., & Sodhi, J. S. (2007). Using internet and mobile phone technology to deliver an automated physical activity program: Randomized controlled trial. Journal of Medical Internet Research, 9(2), e7. doi: 10.2196/jmir.9.2.e7

- International Telecommunication Union. (2013). Measuring information society report. https://www.itu.int/en/ITU-D/Statistics/Documents/publications/mis2013/MIS2013_without_Annex_4.pdf

- Irvine, A. B., Gelatt, V. A., Seeley, J. R., Macfarlane, P., & Gau, J. M. (2013). Web-based intervention to promote physical activity by sedentary older adults: Randomized controlled trial. Journal of Medical Internet Research, 15(2), e19. doi: 10.2196/jmir.2158

- Kanstrup, A. M., & Bygholm, A. (2019). The lady with the roses and other invisible users: Revisiting unused data on nursing home residents in living labs. In Neves B. B. & Vetere F. (Eds.), Ageing and digital technology: Designing and evaluating emerging technologies for older adults (pp. 17–33). Springer. doi: 10.1007/978-981-13-3693-5_2

- König, R., Seifert, A., & Doh, M. (2018). Internet use among older Europeans: An analysis based on SHARE data. Universal Access in the Information Society, 17, 621–633. doi: 10.1007/s10209-018-0609-5

- Kuei, J., & Mixon, M. (2020). Legal risks of using artificial intelligence in hiring. The Center for Association Leadership. https://www.asaecenter.org/resources/articles/an_plus/2020/may/legal-risks-of-using-artificial-intelligence-in-hiring

- Lagacé, M., Laplante, J., Charmarkeh, H., & Tanguay, A. (2015). How ageism contributes to the second-level digital divide. Journal of Technologies and Human Usability, 11(4), 1–13. doi: 10.18848/2381-9227/cgp/v11i04/56439

- Mannheim, I., Schwartz, E., Xi, W., Buttigieg, S. C., McDonnell-Naughton, M., Wouters, E. J. M., & van Zaalen, Y. (2019). Inclusion of older adults in the research and design of digital technology. International Journal of Environmental Research and Public Health, 16(19), 3718. doi: 10.3390/ijerph16193718

- Margetts, H., & Dorobantu, C. (2019). Rethink government with AI. Nature, 568(7751), 163–165. doi: 10.1038/d41586-019-01099-5

- Martin-Bariteau, F., & Scassa, T. (2021). Artificial intelligence and the law in Canada. LexisNexis Canada.

- O’Keefe, C., Flynn, C., Cihon, P., Leung, J., Garfinkel, B., & Dafoe, A. (2020). The windfall clause: Distributing the benefits of AI for the common good. AIES 2020—Proceedings of the AAAI/ACM conference on AI, ethics, and society, New York, NY, USA, pp. 327–331. doi: 10.1145/3375627.3375842

- Obermeyer, Z., Powers, B., Vogeli, C., & Mullainathan, S. (2019). Dissecting racial bias in an algorithm used to manage the health of populations. Science (New York, N.Y.), 366(6464), 447–453. doi: 10.1126/science.aax2342

- Petersen, L. S., & Bertelsen, P. (2017). Equality challenges in the use of eHealth: Selected results from a Danish citizens survey. Studies in Health Technology and Informatics, 245, 793–797. doi: 10.3233/978-1-61499-830-3-793

- Presser, J., Beatson, J., & Chan, G. (2021). Litigating artificial intelligence. Emond Montgomery Publications Limited. https://emond.ca/ai21

- Rodrigues, R. (2020). Legal and human rights issues of AI: Gaps, challenges and vulnerabilities. Journal of Responsible Technology, 4, 100005. doi: 10.1016/j.jrt.2020.100005

- Rosales, A., & Fernández-Ardèvol, M. (2019). Structural ageism in big data approaches. Nordicom Review, 40(s1), 51–64. doi: 10.2478/nor-2019-0013

- Rosales, A., & Fernández-Ardèvol, M. (2020). Ageism in the era of digital platforms. Convergence (London, England), 26(5–6), 1074–1087. doi: 10.1177/1354856520930905

- Russell, S. J., Norvig, P., & Davis, E. (2010). Artificial intelligence: A modern approach (3rd ed.). Upper Saddle River, NJ: Prentice Hall.

- Simonite, T. (2019). The best algorithms still struggle to recognize black faces. Wired. https://www.wired.com/story/best-algorithms-struggle-recognize-black-faces-equally/

- Srinuan, C., & Bohlin, E. (2011). Understanding the digital divide: A literature survey and ways forward. 22nd European Regional Conference of the International Telecommunications Society (ITS2011). http://hdl.handle.net/10419/52191

- Tomasino, K. N., Lattie, E. G., Ho, J., Palac, H. L., Kaiser, S. M., & Mohr, D. C. (2017). Harnessing peer support in an online intervention for older adults with depression. The American Journal of Geriatric Psychiatry, 25(10), 1109–1119. doi: 10.1016/j.jagp.2017.04.015

- United Nations. (1945). Charter of the United Nations. https://www.un.org/en/about-us/un-charter/full-text

- Vervaecke, D., & Meisner, B. A. (2021). Caremongering and assumptions of need: The spread of compassionate ageism during COVID-19. The Gerontologist, 61(2), 159–165. doi: 10.1093/geront/gnaa131

- White, H., McConnell, E., Clipp, E., Branch, L. G., Sloane, R., Pieper, C., & Box, T. L. (2002). A randomized controlled trial of the psychosocial impact of providing internet training and access to older adults. Aging & Mental Health, 6(3), 213–221. doi: 10.1080/13607860220142422

- Whittlestone, J., Nyrup, R., Alexandrova, A., Dihal, K., & Cave, S. (2019). Ethical and societal implications of algorithms, data, and artificial intelligence: A roadmap for research. http://www.nuffieldfoundation.org/sites/default/files/files/Ethical-and-Societal-Implications-of-Data-and-AI-report-Nuffield-Foundat.pdf

- Wiggers, K. (2018). Plum uses AI to hire people ‘that never would have been discovered through a traditional hiring process.’ VentureBeat. https://venturebeat.com/2018/06/13/plum-uses-ai-to-hire-people-that-never-would-have-been-discovered-through-a-traditional-hiring-process/

- Wilkinson, J., & Ferraro, K. F. (2002). Thirty years of ageism research. In T. D. Nelson (Ed.), Ageism. The MIT Press. doi: 10.7551/mitpress/1157.003.0017

- World Health Organization & United Nations. (2021). Ageism is a global challenge: UN. https://www.who.int/news/item/18-03-2021-ageism-is-a-global-challenge-un

- Zhavoronkov, A., Mamoshina, P., Vanhaelen, Q., Scheibye-Knudsen, M., Moskalev, A., & Aliper, A. (2019). Artificial intelligence for aging and longevity research: Recent advances and perspectives. Ageing Research Reviews, 49, 49–66. doi: 10.1016/j.arr.2018.11.003