Abstract

We report the results of an academic survey into the theoretical and methodological foundations, common assumptions, and current state of the field of consciousness research. The survey consisted of 22 questions and was distributed at two annual meetings of the Association for the Scientific Study of Consciousness (2018 and 2019). We examined responses from 166 consciousness researchers with different backgrounds (e.g. philosophy, neuroscience, psychology, and computer science) and at various stages of their careers (e.g. junior/senior faculty and graduate/undergraduate students). The results reveal considerable ongoing debate among the surveyed researchers about the definition of consciousness and the way it should be studied. To highlight a few observations, a majority of respondents believe that machines could have consciousness, that consciousness is a gradual phenomenon in the animal kingdom, and that unconscious processing is extensive, encompassing both low-level and high-level cognitive functions. Further, we show which theories of consciousness respondents currently consider most promising and how supposedly different theories cluster together, which dependent measures are considered best for indexing the presence or absence of consciousness, and which neural measures are thought to be the most likely signatures of consciousness. These findings provide a snapshot of the current views of researchers in the field and may therefore help prioritize research and theoretical approaches to foster progress.

Keywords: consciousness, survey, definitions, ASSC

Introduction

The Association for the Scientific Study of Consciousness (ASSC) was established in 1994 to foster (empirical) scientific progress towards understanding the nature of consciousness and to facilitate information exchange among a community of actively interested scientists and philosophers. Since then, the field has grown rapidly, the annual number of publications on consciousness has increased 10-fold, and ASSC’s annual conference, now in its 25th iteration, continues to grow.

One of the community’s main ambitions is to uncover the neural mechanisms underlying fluctuations in consciousness level (e.g. being awake and aware versus in a coma) and conscious content (e.g. dissociating unconscious processes from conscious processes). The neural processes associated with conscious, as compared to unconscious, level and content are often referred to as the ‘neural correlates of consciousness’ (NCC) (Crick and Koch 1995; Block 1996). Identifying NCCs has remained one of the main goals of consciousness researchers for decades. Sophisticated technological approaches, scientific rigour, and methodological innovations have allowed the field to make impressive progress towards this goal. In addition, the community’s efforts have resulted not only in increased theoretical understanding of the nature of consciousness but also in practical, clinical applications, such as the ability to diagnose residual consciousness in brain-damaged, non-communicative patients using neuroimaging techniques (Casali et al. 2013; Fernández-Espejo and Owen 2013).

Despite this progress, it is essential to recognize that conceptual choices and assumptions about the nature of the phenomenon under study strongly influence which experiments are conducted and how their outcomes are interpreted (Barrett 2009; Irvine 2013; Francken and Slors 2014; Abend 2017). Indeed, although there seems to be broad consensus about what needs to be explained (i.e. subjective experience), consciousness researchers have argued extensively about what that refers to (i.e. how it should be operationalized), what would constitute an explanation, and how valid experiments should be designed (Block 1995; Chalmers 1995, 2000; Dehaene and Naccache 2001; Dennett 2001; Lamme 2006; Tononi 2008; Dehaene and Changeux 2011; Doerig et al. 2020; Hohwy and Seth 2020; see Seth and Bayne 2022 for a review).

If different researchers use different conceptualizations and operationalizations of consciousness, we risk not studying the same phenomenon even though we use the same label (Figdor 2013; Irvine 2013; Francken and Slors 2014). As a consequence, empirical findings will not easily build on each other towards a unified understanding, meta-analyses become difficult to perform and interpret, and misunderstandings may arise when communicating scientific findings both inside and outside the community (Bilder et al. 2009). It is therefore crucial to acknowledge and examine the theoretical (and sometimes pre-theoretical) and conceptual disagreements, as we do here. We hope that the outcome of such examinations can be used to promote conceptual clarity in consciousness science.

We surveyed consciousness researchers who attended the annual conference of the ASSC (2018 and 2019; see the ‘Methods and Materials’ section) with several goals. First, we aimed to uncover the often-implicit theoretical foundations on which current and future empirical studies are built. Second, we set out to examine the theoretical attitudes and methodological preferences of a sample of consciousness researchers and to explore areas of consensus and disagreement about fundamental concepts, experimental tasks, theories, and findings in the field. Last, we aimed to gauge opinions about other long-standing and fundamental questions about consciousness, such as whether machines may have it (Dehaene et al. 2017), whether it has an evolutionary advantage (Frith 2010; Frith and Metzinger 2016; Ginsburg and Jablonka 2019), and whether it is present or absent in other species (Birch et al. 2020; Edelman and Seth 2009a; Nieder et al. 2020).

Methods and materials

Data collection

The consciousness survey was administered between June 2018 and August 2019. We distributed the survey online via SurveyMonkey (2018 and 2019) and on paper (2018 only) during the annual ASSC conference in Krakow (Poland) from June 26 to 29, 2018, and the ASSC conference in London, Ontario (Canada) from June 25 to 28, 2019. We advertised the survey via social media, flyers, and slides included in conference talks. Although the survey was promoted mainly to ASSC attendees, others could also respond.

Participants

Before people could participate in the survey, they were provided with information about the aim of the study, instructions and procedure, their right to stop participating at any time, the confidentiality of their data (anonymous data collection and processing), and contact information of the main researchers. They then gave (digital) consent before they could start answering the survey questions. The survey procedure was approved by the University of Amsterdam Ethics Committee. For the full questionnaire, see Supplementary Table S1.

In total, we received 307 responses to our survey. Because our main interest lies with the ASSC attendees, and because they form a relatively well-controlled population, we report only this subgroup’s results here. We thus excluded 99 submissions from people who did not attend the ASSC conferences in 2018 or 2019. The survey version that we distributed from June 2019 onwards (during the annual ASSC conference) included an extra question asking whether participants had previously filled out the survey. Thirteen of the 208 remaining submissions answered this question positively and were therefore excluded from further analyses. To make sure that only consciousness researchers would be included, we added a question to the background variables section of the survey explicitly asking about this (QD1 ‘Do you study or research consciousness?’). In all, 166 of the 195 remaining respondents answered this question positively, and we excluded the others from our data analyses. The results of our entire group of respondents (N = 232; excluding double submissions and respondents who did not self-identify as consciousness researchers) can be found in the Supplementary data.
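The exclusion steps above can be checked with a few lines of arithmetic. This is a minimal sketch that uses only the counts reported in this paragraph; the variable names are our own descriptive labels.

```python
# Participant-exclusion funnel, using only the counts reported in the text.
total_responses = 307
excluded_non_attendees = 99      # did not attend ASSC 2018 or 2019
excluded_repeat = 13             # indicated they had filled out the survey before
consciousness_researchers = 166  # answered positively to QD1

after_attendance = total_responses - excluded_non_attendees          # 208
after_repeats = after_attendance - excluded_repeat                   # 195
excluded_non_researchers = after_repeats - consciousness_researchers # 29

print(after_attendance, after_repeats, excluded_non_researchers)  # 208 195 29
```

Each subtraction corresponds to one exclusion criterion, so the final figure (29) is the number of ASSC attendees who did not self-identify as consciousness researchers.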

Even though we only report the results of ASSC attendees here, it is worth noting that the response tendencies of the non-ASSC attendees who study consciousness were similar to those of our subsample. To establish this, we correlated the mean responses to all Likert scale questions (i.e. excluding demographic and ranking questions, which left 54 items) between three groups: ASSC 2018 attendees, ASSC 2019 attendees, and non-ASSC attendees. All three groups showed very strong similarity in their responses [ASSC 2018 vs. ASSC 2019: r(52) = 0.96, p < 0.001; ASSC 2018 vs. non-ASSC: r(52) = 0.93, p < 0.001; ASSC 2019 vs. non-ASSC: r(52) = 0.92, p < 0.001; see Supplements for the results of all three groups together].
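The group comparison correlates, item by item, the mean Likert score in one group with the mean score in another. We assume Pearson's r, as the notation r(52) suggests: with 54 items the test has 54 − 2 = 52 degrees of freedom. The sketch below computes this in plain Python. The short lists are made-up illustration values, not survey data.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of item means.

    Returns (r, df), where df = n - 2 is the value reported as r(df).
    """
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two equal-length sequences with at least 3 items")
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    r = sxy / math.sqrt(sxx * syy)
    return r, n - 2  # 54 Likert items would give r(52)


# Hypothetical item means for two groups (illustration only, not survey data).
group_a = [2.1, 3.4, 1.8, 2.9, 3.7]
group_b = [2.3, 3.2, 1.9, 3.1, 3.5]
r, df = pearson_r(group_a, group_b)
print(f"r({df}) = {r:.2f}")
```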

Of our sample of 166 ASSC attendees, most (76%) responded in 2018, representing approximately 37% of all ASSC attendees in 2018; the remaining 24% responded in 2019 (16% of all ASSC attendees in that year). Our overall sample consists of approximately 39% graduate students, 19% postdoctoral researchers, and 28% faculty (see Fig. 1), mostly from the fields of (cognitive) neuroscience, psychology, and philosophy.

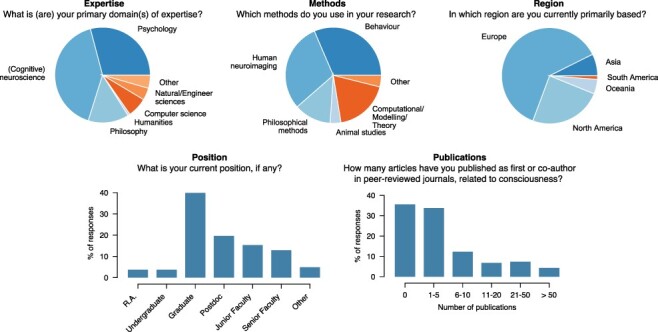

Figure 1.

Background variables. Five questions were included in the survey to obtain background characteristics of the respondents (N = 166). The ‘Expertise’ and ‘Methods’ questions had tick-box answer options, allowing for multiple responses. See the ‘Methods and Materials’ section and Supplementary Table S1 for a complete overview.

Background variables

We collected data on several background variables to get a sense of the makeup of our group of respondents and to study possible correlations between background variables and answers to the main questions. Background variables were assessed with two questions with tick-box answer options (‘expertise’ and ‘methods’), which allowed multiple answers, and four questions with check-box answer options (see Supplementary Table S1 for the full list of questions). To analyse the background variables, we calculated the frequency and percentage of each response option.

Main questions

The survey included 22 main questions aimed at gauging consciousness researchers’ theoretical and methodological perspectives on the scientific study of consciousness. In the survey introduction, we explicitly restricted our questions to ‘conscious content’, defined as ‘what one is conscious of, when one is conscious’. We could not verify whether respondents in fact restricted their answers to conscious content, as opposed to (for example) conscious level, since applying any particular criterion would have introduced arbitrary exclusions. Questions covered definitions of consciousness, theories of consciousness, methodological approaches, and potential outcomes of consciousness research.

Survey questions had two types of response options (see Supplementary Table S1 for the full list of questions). First, we used 5-point Likert scale questions to evaluate respondents’ beliefs about and attitudes towards various aspects of (the scientific study of) consciousness. For each question, we calculated the mean and standard deviation of the responses. We also calculated the percentage of responses for each answer option, from which we derived the sum percentage of ‘yes’ responses (Likert scale points 1 + 2). Note that Q15 had only three answer options instead of five.
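The per-question summary described above can be sketched as follows. This is a minimal sketch with several assumptions: responses are coded 1 (‘definitely yes’) to 5 (‘definitely not’), and the standard deviation is the sample SD (n − 1 denominator), as statistics packages typically report. The response list is invented for illustration.

```python
import statistics
from collections import Counter


def summarize_likert(responses, n_points=5):
    """Mean, sample SD, per-option percentages and summed 'yes' percentage.

    Assumes coding 1 = 'definitely yes', 2 = 'probably yes', ...,
    n_points = 'definitely not'; 'yes' responses are points 1 + 2.
    """
    n = len(responses)
    counts = Counter(responses)
    percentages = {point: 100 * counts.get(point, 0) / n
                   for point in range(1, n_points + 1)}
    return {
        "mean": statistics.mean(responses),
        "sd": statistics.stdev(responses),  # sample SD (n - 1 denominator)
        "percentages": percentages,
        "yes_percent": percentages[1] + percentages[2],
    }


# Hypothetical responses from ten respondents (not survey data).
summary = summarize_likert([1, 2, 2, 3, 2, 4, 1, 5, 2, 3])
print(round(summary["mean"], 2), round(summary["yes_percent"], 1))  # 2.5 60.0
```

The `n_points` parameter lets the same function handle a shorter scale such as Q15's, although how that 3-option question was coded numerically is not stated here.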

Second, there were three ranking questions (Q16, Q17, and Q19) in which we asked participants to rank their preferred answer options by placing the numbers 1, 2, and 3 in front of the options of their choice (note that we asked them to rank only their top three). Q18 was a follow-up to Q17 with two answer options (‘yes’ and ‘no’), plus an open-answer field in the case of ‘no’. For each ranking question, we calculated, for each answer option, the frequencies and percentages of first, second, and third rankings as well as the summed frequency and percentage.
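The ranking summaries can be tallied as follows. This sketch assumes that each respondent's answer is stored as a mapping from rank (1, 2, 3) to the chosen option. The option labels are shortened from the Results section, and the three responses are invented.

```python
from collections import Counter


def tally_rankings(responses):
    """Count, per option, how often it was ranked 1st, 2nd and 3rd, plus the total.

    Each response maps rank (1, 2 or 3) to an option label; respondents may
    leave lower ranks empty, in which case those ranks are simply absent.
    """
    by_rank = {rank: Counter() for rank in (1, 2, 3)}
    for response in responses:
        for rank, option in response.items():
            by_rank[rank][option] += 1
    options = set().union(*by_rank.values())
    return {
        option: {
            "first": by_rank[1][option],
            "second": by_rank[2][option],
            "third": by_rank[3][option],
            "total": sum(by_rank[r][option] for r in (1, 2, 3)),
        }
        for option in sorted(options)
    }


# Hypothetical responses (not survey data).
responses = [
    {1: "subjective report", 2: "PAS", 3: "confidence"},
    {1: "PAS", 2: "subjective report"},
    {1: "subjective report", 2: "forced-choice detection", 3: "PAS"},
]
table = tally_rankings(responses)
print(table["subjective report"])
```

The ‘total’ and ‘first’ fields correspond to the ‘total number of rankings’ and ‘number one rankings’ reported in the Results. Dividing by the number of respondents gives the percentages.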

For all survey questions, we wanted to estimate the level of consensus among our respondents. To this end, we used a criterion of 75%: consensus was reached when more than 75% of responses were either ‘definitely yes’ or ‘probably yes’, or when more than 75% were ‘probably no’ or ‘definitely no’.
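The 75% consensus criterion amounts to a simple rule applied to the two ends of the scale. This sketch assumes the same 1 to 5 coding (1 = ‘definitely yes’, 5 = ‘definitely no’) and assumes that ‘neutral’ responses count towards neither side. The example counts are hypothetical.

```python
def consensus(counts, threshold=75.0):
    """Return 'yes', 'no' or None under the >75% consensus criterion.

    counts maps Likert point (1..5) to the number of respondents; points 1-2
    are 'yes' responses, points 4-5 are 'no' responses, point 3 is neutral.
    """
    n = sum(counts.values())
    yes = 100 * (counts.get(1, 0) + counts.get(2, 0)) / n
    no = 100 * (counts.get(4, 0) + counts.get(5, 0)) / n
    if yes > threshold:
        return "yes"
    if no > threshold:
        return "no"
    return None


print(consensus({1: 60, 2: 30, 3: 5, 4: 3, 5: 2}))    # 90% 'yes' -> yes
print(consensus({1: 20, 2: 40, 3: 15, 4: 15, 5: 10}))  # 60% 'yes' -> None
```

Because the comparison is strict (`>`), exactly 75% does not count as consensus, matching the ‘>75%’ wording.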

To take the ordinal nature of our data into account, we used Spearman’s rho for correlation analyses to assess regularities in responses to different questions, and ordinal regression analyses to assess potential differences in response tendencies between senior and junior researchers (JASP Team 2020). For the ordinal regression, junior researchers were defined as research assistants and graduate and undergraduate students; postdoctoral researchers and junior and senior faculty were considered senior researchers. p values for the correlation analyses reported in the ‘Results’ section were FDR-corrected for multiple testing (using the Python function statsmodels.stats.multitest.fdrcorrection). Interested readers can explore all possible correlations (and review the analyses presented here, filter cases, and perform many other analyses) in the publicly available JASP file (see the Supplementary Information for details and relevant links).
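The correlation pipeline can be illustrated with three pieces. The first maps career stages to the junior/senior groups defined above. The second computes Spearman's rho as the Pearson correlation of average ranks, so ties are handled. The third applies the Benjamini-Hochberg procedure, which is what `statsmodels.stats.multitest.fdrcorrection` computes with its default `method='indep'`. This is a plain-Python sketch written to be self-contained, not the authors' code. The career-stage strings are hypothetical paraphrases of the answer options, p values for rho are left to a statistics package, and the example p values are invented.

```python
# Career-stage grouping used for the ordinal regression (label strings are
# hypothetical paraphrases of the survey's answer options).
JUNIOR_STAGES = {"research assistant", "undergraduate student", "graduate student"}
SENIOR_STAGES = {"postdoctoral researcher", "junior faculty", "senior faculty"}


def career_group(stage):
    """Map a career-stage answer to 'junior' or 'senior' as defined in the text."""
    if stage in JUNIOR_STAGES:
        return "junior"
    if stage in SENIOR_STAGES:
        return "senior"
    raise ValueError(f"unknown career stage: {stage!r}")


def average_ranks(values):
    """1-based ranks, assigning tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j share their average rank
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of average ranks.

    Assumes neither variable is constant (otherwise the denominator is zero).
    """
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    return sxy / (sxx * syy) ** 0.5


def fdr_bh(pvals, alpha=0.05):
    """Benjamini-Hochberg: return (reject flags, adjusted p values) in input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running_min = 1.0  # also caps adjusted p values at 1
    # Walk from the largest p value down, keeping adjusted values monotone.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min
    reject = [p_adj <= alpha for p_adj in adjusted]
    return reject, adjusted


rho = spearman_rho([1, 2, 3, 4, 5], [5, 6, 7, 8, 7])
reject, adjusted = fdr_bh([0.01, 0.04, 0.03, 0.20])
print(round(rho, 3), reject)  # 0.821 [True, False, False, False]
```

In the example, only the smallest p value survives correction: 0.03 and 0.04 become 0.053 after adjustment, which is above the 0.05 threshold.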

Finally, we carried out a network analysis on Q13 (‘theories’) to explore clustering among the different consciousness theories, using the ‘Network module’ in JASP. Observed variables (Likert scale scores indicating the evaluation of each of the 10 theories) are referred to as nodes, and estimated relations are called edges. Node labels are abbreviations of the theories’ names (see Fig. 3). The EBICglasso estimator was used to fit a Gaussian graphical model, i.e. a network of regularized partial correlations, based on the polychoric correlation matrix of the data (Foygel and Drton 2010; Epskamp and Fried 2018). Each edge between two nodes represents the relation between those nodes after conditioning on all other nodes. A thicker, darker edge indicates that the corresponding relation between two nodes (its weight) is estimated to be stronger than that of a thinner, lighter edge.
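For readers unfamiliar with the estimator, the quantities involved can be written out as below. This is the standard textbook formulation of the graphical lasso and the extended BIC (Foygel and Drton 2010), not a description of JASP's internal implementation. In this notation, S is the (polychoric) correlation matrix of the p = 10 theory ratings, Θ is the estimated precision (inverse correlation) matrix, n is the number of respondents, λ is the lasso penalty, ℓ is the Gaussian log-likelihood, E_λ is the set of nonzero edges, and γ is the EBIC hyperparameter, which is commonly set to 0.5.

$$
\begin{aligned}
\hat{\Theta}_{\lambda} &= \arg\max_{\Theta \succ 0}\Big[\log\det\Theta - \operatorname{tr}(S\Theta) - \lambda \sum_{i \neq j} \lvert\theta_{ij}\rvert\Big],\\
\mathrm{EBIC}_{\gamma}(\lambda) &= -2\,\ell\big(\hat{\Theta}_{\lambda}\big) + \lvert E_{\lambda}\rvert \log n + 4\gamma\,\lvert E_{\lambda}\rvert \log p,\\
\omega_{ij} &= -\frac{\hat{\theta}_{ij}}{\sqrt{\hat{\theta}_{ii}\,\hat{\theta}_{jj}}}.
\end{aligned}
$$

The penalty λ is selected from a grid of candidate values as the one that minimizes EBIC; a larger γ favours sparser networks. The edge weights ω_ij are partial correlations. An edge between two theories therefore reflects the association between their ratings after conditioning on the ratings of the other eight theories.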

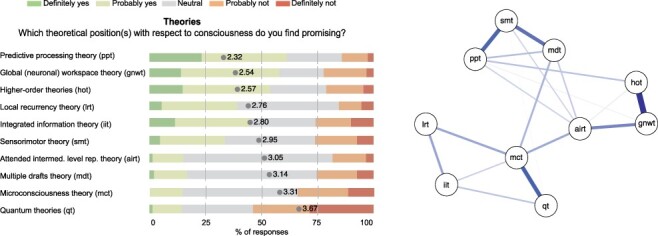

Figure 3.

Theories of consciousness. (Left) We asked participants to indicate, on a 5-point Likert scale ranging from ‘definitely yes’ (dark green) to ‘definitely not’ (red), whether they regarded each of 10 consciousness theories as promising. Mean scores are indicated on the bars. (Right) Network analysis of theories of consciousness. Thicker, darker edges represent stronger weights. The largest weight (between HOT and GNWT) equals 0.47. See left for node abbreviations. See Supplementary Table S3 for the weight matrix of the network analysis.

Results

The consciousness survey included 22 main questions and was administered between June 2018 and August 2019 (see the ‘Methods and Materials’ section for details and Supplementary Table S1 for the full questionnaire). For each question, we calculated the mean and standard deviation of the Likert scale responses and the sum percentage of ‘yes’ responses (combining ‘definitely yes’ and ‘probably yes’). Below, we first report the results without extensive elaboration. In the ‘Discussion’ section, we then place these results in a broader perspective and discuss them in light of the existing literature on consciousness.

Background variables

As can be seen from Fig. 1 (and Supplementary Table S1), the majority of respondents are (cognitive) neuroscientists, psychologists, or philosophers. The interdisciplinarity of the consciousness science community becomes even clearer from the fact that 37.6% of the respondents chose two domains and 11.5% chose three or more. Moreover, only a minority indicated that they use just one method of study (21.2%, e.g. behaviour or human neuroimaging), whereas most respondents use two (33.9%), three (35.8%), or even more (8.5%) different methods. Figure 1 also shows that more than one-third of our respondents are graduate students, with the percentage gradually decreasing towards senior faculty, who nevertheless make up 12.7% of our respondents. Based on information we received from the ASSC, this distribution resembles the typical makeup of ASSC attendees. The number of consciousness-related publications shows a similar pattern.

For all main questions, we performed additional analyses to explore whether respondents’ career stage was associated with their answers (see the ‘Methods and Materials’ section). In general, there were no such associations, so we report them only where a significant effect was observed.

General questions

First, we asked participants some general questions about consciousness. These ranged from explicit questions about the definition of consciousness (e.g. does consciousness require higher-order states?) to well-known thought experiments [e.g. the ‘zombie’ question (Kirk 2019) and the ‘Mary’ question (Jackson 1982)]. The results are presented in Fig. 2 (and Supplementary Table S1) and interpreted below.

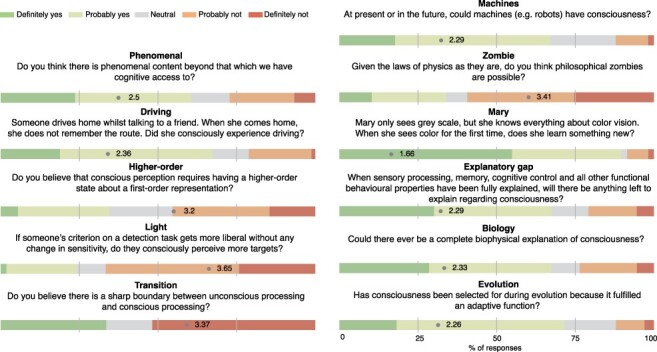

Figure 2.

General questions. Eleven questions were included in the survey to explore how consciousness researchers perceive their object of study. For all questions except ‘Transition’, a 5-point Likert scale was used, ranging from ‘definitely yes’ (dark green) to ‘definitely not’ (red). Mean scores are indicated on the bars. For ‘Transition’, three answer options were provided: ‘sharp boundary’ (dark green), ‘neutral’ (grey), and ‘continuous transition’ (red). Owing to space constraints, question labels are sometimes abbreviated in the figure. See Supplementary Table S1 for a complete overview and the exact formulation of all questions.

Several questions probed participants’ definition of consciousness. First, a small majority of respondents believe that there is phenomenal content beyond that to which we have cognitive access (Q3 ‘phenomenal’; mean: 2.50, SD: 1.24, ‘yes’ responses: 60.6%), indicating a lack of consensus. In the survey, we did not give explicit definitions of phenomenal and access consciousness because these terms are commonly used in the field: phenomenal consciousness is often described as ‘what is it like for a subject to have an experience’ (Block 2011, glossary p. 567), whereas ‘a representation is access-conscious if it is made available to cognitive processing’ (Block 2011, glossary p. 567). A more indirect question, implicitly assessing the relevance and existence of phenomenal consciousness, shows a comparable pattern of results, leaning somewhat more towards phenomenal consciousness beyond access (Q1 ‘driving’; mean: 2.36, SD: 1.04, ‘yes’ responses: 67.3%). Second, fewer than half of the respondents believe that consciousness requires higher-order states (Q4 ‘higher-order’; mean: 3.20, SD: 1.17, ‘yes’ responses: 34.5%), but again there is no consensus on this issue. Third, a majority of respondents do not regard response bias as part of conscious experience (Q2 ‘light’; mean: 3.65, SD: 1.13, ‘yes’ responses: 24.2%). The final explicit definition question asks whether the transition from unconscious to conscious processing (of content, not level¹) is discrete or continuous (Q15 ‘transition’). Most respondents believe the latter, i.e. that there is no sharp boundary (mean: 3.37, SD: 1.82, ‘sharp boundary’ responses: 33.5%; note that this question used a 3-point scale with the options ‘sharp boundary’, ‘neutral’, and ‘continuous transition’). Interestingly, the senior researchers we surveyed are more inclined than our sample of junior researchers to regard the transition between unconscious and conscious processing as a sharp boundary, once more demonstrating a lack of consensus (senior: mean: 3.26, SD: 1.76, ‘sharp boundary’ responses: 32.6%; junior: mean: 3.75, SD: 1.67, ‘sharp boundary’ responses: 22.8%; Wald χ² = 6.73, p = 0.009).

Three questions presupposed a particular definition of consciousness. First, we asked whether machines could have consciousness (Q9 ‘machines’): two-thirds of respondents believe that this is or will be the case (mean: 2.29, SD: 0.94, ‘yes’ responses: 67.1%). Next, most respondents believe that philosophical zombies (Kirk 2019) are not possible given the laws of physics as they are (Q10 ‘zombie’; mean: 3.41, SD: 1.36, ‘yes’ responses: 33.9%). Finally, probing another famous philosophical thought experiment (Jackson 1982; Nida-Rümelin and O Conaill 2019), a very large majority of respondents believe that Mary learns something new about colour vision when she enters the real world after living in a black-and-white basement² (Q11 ‘Mary’; mean: 1.66, SD: 0.94, ‘yes’ responses: 89.6%). This is the only general question for which we find consensus (>75% ‘definitely yes’ or ‘probably yes’ responses).

The scientific explanation of consciousness was explored using three questions. A majority of respondents believe that when all functional and behavioural properties have been fully explained, something will still be left out of the explanation of consciousness (Q5 ‘explanatory gap’; mean: 2.29, SD: 1.21, ‘yes’ responses: 67.5%). This suggests that most respondents do not adhere to a fully functionalist interpretation of consciousness (Dennett 1991; Cohen and Dennett 2011), although respondents are divided on this issue. Moreover, this question is the standard way of asking whether there is a ‘hard problem’ of consciousness over and above the ‘easy problems’ (Chalmers 2017). In this light, most respondents appear to believe that there is a hard problem, although, again, there is no consensus. We note, however, that the formulation of Q5 (‘explanatory gap’) may have been ambiguous and could have been interpreted in at least two other ways. It could be read as asking whether consciousness can be explained in terms of (substrate-independent) input–output relations (i.e. broadly speaking, the philosophical position of functionalism), or as asking whether consciousness has a function (vs. being epiphenomenal). Here, functions could be either teleological (e.g. resulting from evolutionary selection) or what has been called dispositional (i.e. the role played by a process in the operation of a larger system; see Cummins 1975). Further surveying would be required to establish what views consciousness researchers hold about the relevance and meaning of ‘function’ to the explanation of consciousness.

Most respondents believe that we could have a complete biophysical explanation of consciousness (Q6 ‘biology’; mean: 2.33, SD: 1.22, ‘yes’ responses: 67.3%), although, again, there is no strong consensus. Finally, the majority of respondents support the hypothesis that consciousness evolved because it fulfils an adaptive function (Q7 ‘evolution’; mean: 2.26, SD: 0.96, ‘yes’ responses: 71.5%). Correlations between responses to the different questions across participants are shown in Table 1.

Table 1.

Spearman’s correlations for the general questions

| | Driving | Light | Phenomenal | Higher-order | Explanatory gap | Biology | Evolution | Machines | Zombie | Mary | Transition |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Driving | | | | | | | | | | | |
| Light | 0.001 | | | | | | | | | | |
| Phenomenal | 0.212* | 0.004 | | | | | | | | | |
| Higher-order | −0.039 | −0.003 | −0.191 | | | | | | | | |
| Explanatory gap | 0.087 | 0.100 | 0.300* | −0.083 | | | | | | | |
| Biology | −0.133 | −0.015 | −0.140 | −0.032 | −0.221* | | | | | | |
| Evolution | −0.176 | 0.022 | −0.096 | 0.047 | −0.084 | 0.247* | | | | | |
| Machines | 0.034 | 0.027 | −0.117 | −0.100 | −0.122 | 0.281* | 0.113 | | | | |
| Zombie | 0.021 | −0.072 | 0.183 | −0.048 | 0.226* | −0.146 | −0.220* | −0.096 | | | |
| Mary | 0.040 | 0.127 | 0.338* | 0.116 | 0.304* | −0.155 | 0.026 | −0.172 | 0.046 | | |
| Transition | −0.099 | −0.048 | −0.110 | 0.130 | 0.069 | 0.173 | 0.045 | 0.108 | −0.130 | 0.001 | |

Note. Significant correlations (FDR-corrected) are marked with an asterisk (*). See Supplementary Table S2 for uncorrected and FDR-corrected p values.

Several theories of consciousness have been proposed in the field (Klink et al. 2015; Doerig et al. 2020; Seth and Bayne 2022). We assessed attitudes towards them by asking respondents to indicate, for each of 10 popular theories, to what extent they regarded it as promising (Fig. 3A). Note that ‘promising’ was not defined. Some respondents rated only 1 theory as promising (score 1 or 2 on the Likert scale; 10.2% of respondents). Others rated 2 theories (13.9%), 3–9 theories (60.8%), none of the theories (13.9%), or even all 10 theories (<1%) as promising. Interestingly, ‘predictive processing theory’ (PPT) was not yet an elaborated theory of consciousness within the consciousness research community when we surveyed our respondents (Williford et al. 2018; Hohwy and Seth 2020). Despite, or perhaps because of, this, PPT is considered the most promising theory (mean: 2.32, SD: 1.04, ‘promising’ responses: 61.3%). PPT is closely followed by ‘global (neuronal) workspace theory (GNWT)’ (Baars 1988; Dehaene et al. 2003; Mashour et al. 2020) and ‘higher-order theories (HOT)’ of consciousness (Rosenthal 2005; Lau and Rosenthal 2011; Brown et al. 2019) (GNWT: mean: 2.54, SD: 1.05, ‘promising’ responses: 58.0%; HOT: mean: 2.57, SD: 1.07, ‘promising’ responses: 53.8%). All three theories are thus regarded as promising by a majority of respondents.
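The distribution of how many theories each respondent rated as promising is obtained by counting, per respondent, the ratings of 1 or 2. This is a minimal sketch using invented ratings for three respondents across the 10 theories. It assumes that `None` marks a skipped item, which is an assumption about how missing answers are stored.

```python
from collections import Counter


def n_promising(ratings):
    """Number of theories rated 1 ('definitely yes') or 2 ('probably yes')."""
    return sum(1 for r in ratings if r is not None and r <= 2)


def promising_distribution(all_ratings):
    """Percentage of respondents by number of theories rated as promising."""
    counts = Counter(n_promising(r) for r in all_ratings)
    n = len(all_ratings)
    return {k: 100 * v / n for k, v in sorted(counts.items())}


# Hypothetical 1-5 ratings of the 10 theories by three respondents
# (None marks a skipped item; not survey data).
ratings = [
    [2, 3, 3, 4, 3, 3, 5, 3, 4, 3],     # one promising theory
    [1, 2, 2, 3, 4, 3, 3, 3, 5, 4],     # three promising theories
    [3, 3, 4, 3, 3, 3, 5, 4, 3, None],  # none
]
print(promising_distribution(ratings))
```

Binning the resulting counts into 0, 1, 2, 3–9, and 10 gives the categories reported above.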

For three other theories, the number of ‘promising’ responses outweighed the number of ‘not promising’ responses: ‘local recurrency theory’ [LRT; mean: 2.76, SD: 0.91, ‘promising’ responses: 39.2%; (Lamme 2006, 2010)], ‘integrated information theory’ [IIT; mean: 2.80, SD: 1.15, ‘promising’ responses: 44.9%; (Tononi 2004, 2008)], and ‘sensorimotor theory (SMT)’ [mean: 2.95, SD: 0.98, ‘promising’ responses: 33.6%; (O’Regan and Noë 2001)].

Three other theories of consciousness received more negative than positive responses: ‘multiple drafts theory (MDT)’ [mean: 3.14, SD: 0.83, ‘promising’ responses: 16.8%; (Dennett 1991)], ‘attended intermediate level representation theory (AIRT)’ [mean: 3.05, SD: 0.69, ‘promising’ responses: 15.0%; (Prinz 2000)], and ‘microconsciousness theory (MCT)’ [mean: 3.32, SD: 0.86, ‘promising’ responses: 14.4%; (Zeki and Bartels 1999)]. These three theories may be less well known among consciousness researchers, as also suggested by the large number of ‘neutral’ responses they received compared to the other theories. Moreover, our sample included relatively few philosophers (22.3%; see Fig. 1), and these theories, although based on neuroscience, are more philosophical in nature (apart from microconsciousness theory). Finally, although the number of positive responses for ‘quantum theories’ (QT) is comparable to that for these latter theories [mean: 3.67, SD: 1.07, ‘promising’ responses: 14.3%; (Atmanspacher 2020)], QT received a remarkably high proportion of ‘not promising’ responses (53.9%). We further analysed the responses to see whether respondents typically favoured one theory over the others or instead endorsed multiple theories.

We performed a network analysis (Fig. 3B) to look for structure in the evaluation of theories, i.e. to see which theories tend to be rated as promising by the same respondents (see the ‘Methods and Materials’ section for details). Three clusters emerged in which the weights between theories within a cluster were larger than the weights between theories from different clusters (see Supplementary Table S3 for the weight matrix of the network). To verify these results, which were based on the visual representation of the estimated network model, we also performed a principal component analysis, which yielded exactly the same three clusters of theories.

The first cluster includes microconsciousness theory, integrated information theory (IIT), quantum theories (QT), and local recurrency theory (LRT). The second cluster includes sensorimotor theory, multiple drafts theory, and predictive processing theory (PPT). The third cluster includes global (neuronal) workspace theory, higher-order theories, and attended intermediate level representation theory. In the principal component analysis, three components were retained on the basis of the scree plot, and the component loadings showed the same pattern as the network analysis. Thus, there appears to be overlap or similarity (in fact or in appeal) between different theories, resulting in three main groups of theories.

Finally, we tested whether particular responses to a subset of the general questions go along with specific theoretical preferences. We report correlations between the ratings of the five theories evaluated as most promising and the answers to the questions that directly assess important aspects of the definition (‘phenomenal’ and ‘higher-order’) and explanation (‘explanatory gap’) of consciousness (Table 2; see Supplementary Table S2 for the complete analysis).

Table 2.

Spearman’s correlations for five main theory questions and main definition (general) questions

| | Phenomenal | Higher-order | Explanatory gap |
|---|---|---|---|
| PPT | 0.049 | 0.186* | −0.127 |
| Global (neuronal) workspace theory | −0.246* | 0.183* | −0.120 |
| Higher-order theories | −0.243* | 0.502* | −0.119 |
| LRT | 0.174^ | −0.051 | 0.075 |
| IIT | 0.297* | −0.214* | 0.218* |

Note. Significant correlations (p < 0.05, FDR-corrected) are marked with an asterisk (*). ^ reflects p = 0.058 after FDR correction. See Supplementary Table S2 for FDR-corrected and uncorrected p values and complete analyses.

The ratings of ‘PPT’ correlated positively with the answers to the higher-order question. This means that respondents who evaluated PPT as promising tend to believe that conscious perception requires higher-order states about first-order representations. Although there are several variants of PPT (e.g. Pennartz 2018; Williford et al. 2018; Hohwy and Seth 2020), they share with higher-order theories the notion that consciousness depends on higher-level ‘interpretation’ of lower-level content. The ratings of ‘global (neuronal) workspace theory’ also correlated positively with answers to the higher-order question and negatively with answers to the phenomenal question. As expected, the strongest positive correlation was between the ratings of higher-order theories and the answers to the higher-order question. Moreover, respondents who favour higher-order theories tend not to think that there is phenomenal content beyond that to which we have cognitive access.

In contrast to the above-mentioned theories, respondents who evaluated ‘LRT’ and ‘IIT’ positively tend to think that there is phenomenal content beyond that to which we have access (for LRT, this correlation only approached significance; p = 0.058 after FDR correction). In addition, adherents of IIT tend to dismiss the importance of higher-order states for conscious perception. Broadly speaking, the correlations of the theories with the higher-order question and with the phenomenal question tend to go in opposite directions, as one would expect, and this overall pattern is largely consistent with the perspectives of the theories. The explanatory gap question correlated significantly (and positively) only with IIT, meaning that adherents of IIT appeared to believe that a functionalist explanation of consciousness would be insufficient. Answers to the explanatory gap question did not correlate significantly with the ratings of the other theories.

Note that we do not wish to imply any judgement about the degree to which respondents displayed coherent ideas; rather, we aimed to quantify the degree to which certain sets of responses tend to go together in the evaluation of theories and definitions.

Methodology questions

Next, we aimed to assess how respondents (ideally) manipulate and measure their object of study. The methodology questions included three ranking questions in which we asked participants to rank their preferred answer options by placing the numbers 1, 2, and 3 in front of the options of their choice. For each question, we calculated how often each answer option was ranked first, second, and third (frequencies and percentages), as well as the summed frequency and percentage across the three ranks.
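The tallying step described above can be sketched as follows. The sketch counts, for each answer option, how often it was ranked 1st, 2nd, and 3rd, plus the total number of rankings. It assumes each respondent's answers are stored as a dictionary from option name to rank and that percentages use the number of respondents as denominator. Both of these are assumptions, and the function name is hypothetical.

```python
from collections import Counter


def tally_rankings(responses, options):
    """Summarize a rank-1/2/3 question (hypothetical helper).

    responses: one dict per respondent, mapping option name -> rank (1, 2, or 3).
               Options a respondent did not rank are simply absent from the dict.
    options:   all answer options offered for the question.

    Returns, per option, how often it was ranked 1st, 2nd, and 3rd, the total
    number of rankings, and that total as a percentage of respondents
    (the choice of denominator is an assumption).
    """
    n_respondents = len(responses)
    summary = {}
    for option in options:
        counts = Counter(r[option] for r in responses if option in r)
        row = {rank: counts[rank] for rank in (1, 2, 3)}
        row["total"] = row[1] + row[2] + row[3]
        row["total_pct"] = 100.0 * row["total"] / n_respondents if n_respondents else 0.0
        summary[option] = row
    return summary
```

In this representation, the "number one rankings" reported in the text correspond to `row[1]` and the "total number of rankings" to `row["total"]`.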

The first methodology question concerns what participants consider the best dependent variable to measure the presence (or absence) of conscious perception (Fig. 4). There is a clear preference among respondents for the option ‘subjective report on whether the stimulus is seen’ (total number of rankings: 97, number one rankings: 48). The preference for a ‘subjective’ dependent variable based on subjects’ introspective reports is further reflected by the other options in the top four: ‘perceptual awareness scale’ (total number of rankings: 71, number one rankings: 17), ‘description of phenomenology’ (total number of rankings: 52, number one rankings: 16), and ‘confidence about correctness of response’ (total number of rankings: 43, number one rankings: 9).

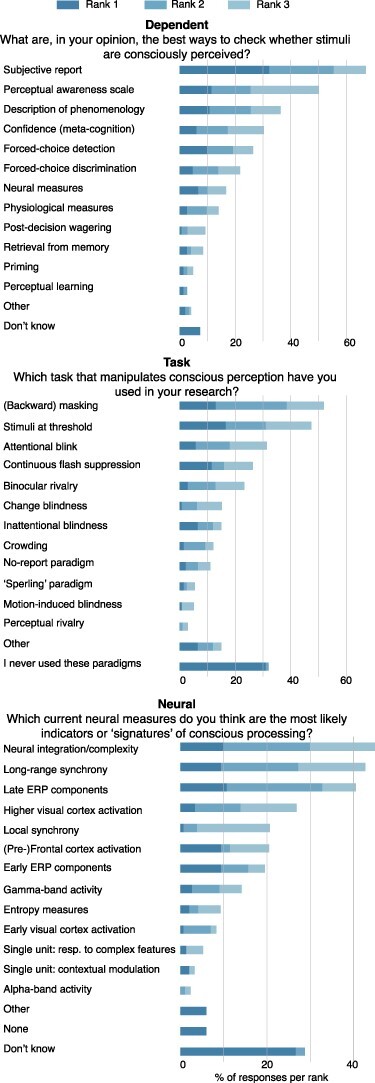

Figure 4.

Methodology questions. Three questions were included in the survey to explore how consciousness researchers experimentally manipulate and study consciousness. For these questions, participants were asked to rank their preferred answer options by placing the numbers 1, 2, and 3 in front of the options of their choice (ranks 1 to 3 are shown from dark blue to light blue).

Only after these ‘subjective’ measures do we find the more ‘objective’ (i.e. performance-based or non-behavioural) measures of conscious perception: ‘forced-choice detection’ (total number of rankings: 38, number one rankings: 15), ‘forced-choice discrimination’ (total number of rankings: 31, number one rankings: 7), ‘neural measures’ (total number of rankings: 24, number one rankings: 10), and ‘physiological measures’ (e.g. pupil size, skin conductance; total number of rankings: 20, number one rankings: 4). The remaining options were chosen fewer than 20 times. Thus, it appears that ‘subjective’ measures are generally preferred over ‘objective’ measures (263 vs. 151 rankings), although both are considered important.

Second, we asked participants which tasks for manipulating conscious perception they had used in their research, ranking the options by how frequently they had used them in the past. The most frequently used task among respondents is ‘(backward) masking’ (total number of rankings: 50, number one rankings: 18), followed by ‘stimuli at threshold’ (total number of rankings: 47, number one rankings: 23), the ‘attentional blink’ (total number of rankings: 28, number one rankings: 8), and ‘continuous flash suppression’ (total number of rankings: 27, number one rankings: 16). Three other options, ranked lower, were each selected at least 10 times: ‘binocular rivalry’ (total number of rankings: 20, number one rankings: 4), ‘inattentional blindness’ (total number of rankings: 16, number one rankings: 9), and ‘change blindness’ (total number of rankings: 12, number one rankings: 1). Note that a large number of respondents indicated that they had never used any of these paradigms (total number of rankings: 45). In contrast to the dependent-variable question, there does not seem to be a clear preference for a particular (type of) experimental paradigm to manipulate conscious perception.

Last, we surveyed participants about the hypothesized ‘neural correlates of consciousness (NCC)’ (which could be measured with different techniques, e.g. fMRI, EEG, and single-unit recordings) by asking them to rank the neural measures most likely to indicate the ‘signatures’ of conscious processing of visual content. Three neural ‘indicators’ were clearly preferred: ‘neural integration/complexity measures’ (total number of rankings: 46, number one rankings: 15), ‘late ERP components’ (total number of rankings: 43, number one rankings: 16), and ‘long-range synchrony’ (total number of rankings: 43, number one rankings: 14). Next, three other options were selected (almost) equally often: ‘higher visual cortex activation’ (total number of rankings: 25, number one rankings: 6), ‘early ERP components’ (total number of rankings: 23, number one rankings: 14), and ‘(pre-)frontal cortex activation’ (total number of rankings: 23, number one rankings: 14). Further options, including ‘none’, were chosen fewer than 20 times. It is important to note that a large number of respondents answered this question with ‘don’t know’ (total number of rankings: 42).³ Taken together, these results suggest that most respondents hypothesize that the neural mechanisms of consciousness involve extensive neural processing that occurs relatively late in time and spans multiple brain regions. At the same time, however, a large variety of neural indicators was selected, indicating a lack of consensus, or of a common hypothesis, concerning one of the main goals of the scientific study of consciousness.

Outcome questions

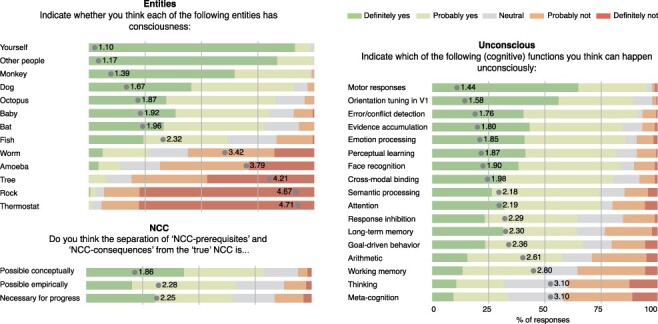

Finally, we aimed to evaluate participants’ ideas about the (future) conclusions of the scientific study of consciousness (see Fig. 5 and Supplementary Table S1). First, we gauged how they think about the presence of consciousness in other species. Unsurprisingly, almost all respondents believe that other people besides themselves have consciousness. Interestingly, babies, the other category of human beings that we probed, ended up quite low in the ranking (‘baby’: mean: 1.92, SD: 0.94, ‘yes’ responses: 79.9%). Respondents judged monkeys, dogs, and octopuses more likely to have consciousness than babies, and babies only slightly more likely to have consciousness than bats. Furthermore, the clearest boundary between conscious and unconscious creatures or entities lies between fish and worm: here we find both the largest difference between two neighbouring entities in the ranking and the reversal from a majority of ‘yes’ responses to a majority of ‘no’ responses (‘fish’: mean: 2.32, SD: 1.03, ‘yes’ responses: 61.5%; ‘worm’: mean: 3.42, SD: 1.17, ‘yes’ responses: 26.2%). This boundary may be an artefact of not including insects. The 2020 PhilPapers Survey (Bourget and Chalmers 2020), a survey of philosophers, asked a question similar to ours (titled ‘Other minds’): ‘For which groups are some members conscious?’. Their results were very comparable to ours: fish received 65.3% positive responses and worms 24.2%. Interestingly, flies fell between fish and worms, with 34.5% ‘yes’ answers, leaving a less distinct boundary between fish and worms than our results suggest.

Figure 5.

Outcome questions. Three questions assessed participants’ opinions about outcomes of consciousness research for multiple items. Five-point Likert scales were used, ranging from ‘definitely yes’ (dark green) to ‘definitely not’ (red). Mean score is indicated on the bars.
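The item summaries in this section have the form "mean, SD, ‘yes’ responses". The sketch below shows how such a summary could be computed. It assumes a coding of 1 = ‘definitely yes’ to 5 = ‘definitely not’, which is consistent with lower means indicating more ‘yes’ answers (e.g. ‘baby’: mean 1.92, 79.9% ‘yes’). It also assumes that ‘yes’ counts the two yes-side categories (codes 1 and 2) and that the SD is the sample SD. All of these are assumptions, not the authors' stated procedure. The labels of codes 2 to 4 are not given in the caption.

```python
from statistics import mean, stdev

# Assumed coding: 1 = 'definitely yes', 5 = 'definitely not';
# codes 2-4 are intermediate categories (their labels are not given here).
# Assumption: 'yes' responses are the two yes-side codes.
YES_CODES = {1, 2}


def summarize_likert(scores):
    """Return (mean, sample SD, percentage of 'yes' responses) for 1-5 codes."""
    if len(scores) < 2:
        raise ValueError("need at least two responses for a sample SD")
    yes_pct = 100.0 * sum(s in YES_CODES for s in scores) / len(scores)
    return mean(scores), stdev(scores), yes_pct
```

For five hypothetical responses coded 1, 1, 2, 3, and 5, this gives a mean of 2.4, an SD of about 1.67, and 60% ‘yes’ responses.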

A common approach to identify the NCC is to contrast ‘seen’ with ‘unseen’ stimuli. According to some authors, the result of this contrast includes neural processes that correlate with, but are not part of, the underlying neural mechanism of consciousness, because they include, for example, report-related neural activity (for discussion on this issue, see Tsuchiya et al. 2015b; Block 2019). This concern has led to the proposal that to isolate the ‘true’ NCC, we need to distinguish it from ‘NCC-prerequisites’ (NCC-pr) and ‘NCC-consequences’ (NCC-con) (Aru et al. 2012; de Graaf et al. 2012; Tsuchiya et al. 2015a). In this context, a large majority of respondents believe that separating the true-NCC from NCC-pr and NCC-con is conceptually possible (‘NCC conceptually’; mean: 1.86, SD: 0.95, ‘yes’ responses: 78.7%). A smaller majority believe that the separation is also possible empirically (‘NCC empirically’; mean: 2.28, SD: 0.98, ‘yes’ responses: 66.3%). Finally, a majority of respondents still believe that separating the NCC’s components is necessary for progress (‘NCC necessary’; mean: 2.25, SD: 1.13, ‘yes’ responses: 64.6%). Taken together, the results indicate a positive stance (but not a consensus) towards the ‘true-NCC’ procedure.

One of the main debates in the literature concerns the existence, extent, and scope of unconscious processing (Dehaene and Naccache 2001; Kunde et al. 2012; van Gaal and Lamme 2012; Hassin 2013; Newell and Shanks 2014; Meyen et al. 2021). We probed which of 17 perceptual and cognitive functions can happen unconsciously (‘unconscious’). Intriguingly, a majority of respondents believe that almost all of these functions can occur unconsciously, with ‘yes’ percentages between 75% and 100% for nine functions. Unconscious ‘motor responses’ are rated most likely, but this list of nine functions also includes emotion processing, semantic processing, error/conflict monitoring, and response inhibition (see Fig. 5). The strongest controversies concern ‘working memory’ (mean: 2.80, SD: 1.17, ‘yes’ responses: 47.9%), ‘thinking’ (mean: 3.10, SD: 1.19, ‘yes’ responses: 31.9%), and ‘metacognition’ (mean: 3.10, SD: 1.17, ‘yes’ responses: 33.8%), with approximately equal numbers of ‘yes’ and ‘no’ responses.

Discussion

We surveyed scientists and philosophers active in the field of consciousness research (N = 166) who attended the ASSC conference in 2018 or 2019, in order to explore theoretical attitudes, methodological preferences, and opinions about fundamental issues related to the scientific study of consciousness. We will now put the results of our survey in a broader perspective. Formulating clear and unified objectives is an important catalyst for scientific progress; yet, overall, our survey results show that this condition is currently not fully met in our sample of consciousness researchers. We therefore also give recommendations for future development.

Respondents do not share a single definition of ‘what’ consciousness science needs to explain. We observed particularly strong disagreement about whether consciousness has phenomenal content beyond cognitive access (arguably one of the most crucial defining aspects of consciousness), as well as about other central issues such as whether consciousness requires higher-order states and how unconscious processing shifts to conscious processing (Fig. 2). One might argue that we could use empirical (brain) data to settle these disagreements. However, mental phenomena, including consciousness, always require operationalization to enable (neuro)scientific investigation. Operationalization is theory-laden, meaning that a particular conceptualization of consciousness is implicitly or explicitly accepted and introduced in an experiment through specific experimental manipulations (e.g. masking vs. not masking a stimulus). The resulting empirical data are informative only ‘within’ or with reference to the context of a particular operationalization. As a consequence, researchers who start from different conceptualizations of consciousness may not be investigating the same phenomenon, even though they use the same overarching label (‘consciousness’) (Figdor 2013; Irvine 2013; Francken and Slors 2014).

To illustrate, it is currently debated whether conscious states, by definition, need to be reportable (Block 1995; Lamme 2006; Tsuchiya et al. 2015a; Pitts et al. 2018). To foster future progress, it would be useful for the community to converge on a shared definition of consciousness or on specific definitions for the different aspects of consciousness that require explanation (Dehaene et al. 2006; Seth and Bayne 2022). A crucial first step is to make one’s definition of consciousness ‘explicit’. Thus, instead of stating that one is going to study ‘consciousness’, we recommend stating clearly what the term means in the experiment at hand, in the form of a conceptual or computational definition. This is already done, for example, when referring to ‘conscious access’ (e.g. Mashour et al. 2020) versus ‘phenomenal consciousness’ (e.g. Fahrenfort et al. 2017), but clearly stating one’s definition should become common practice in the field. This explication will enable readers to decide whether they share the authors’ definition, and it will facilitate a more meaningful integration of empirical results because it will be clear which experiments study similar ‘kinds’ of consciousness (Bisenius et al. 2015). Consequently, some empirical disputes may dissolve once it becomes clear that they rest on conceptual disagreement about the phenomenon being studied. For example, the debate about whether consciousness resides in the ‘front’ or the ‘back’ of the brain (Boly et al. 2017; Odegaard et al. 2017) may turn on different conceptions of consciousness.

Our survey asked participants to indicate, for each theoretical position on consciousness, whether they found it promising or not. As we did not define ‘promising’, respondents could have interpreted its meaning in different ways, for instance as a matter of explanatory potential in the (near) future (‘promising’) or as a matter of actual development and explanatory use (‘proponent’), and other interpretations probably exist. Interestingly, out of all theories, PPT received the most positive responses, even though, at the time the survey was conducted, most varieties of PPT were not first and foremost theories of consciousness (Seth and Tsakiris 2018; Williford et al. 2018; Hohwy and Seth 2020). Many studies have, however, examined the relation between predictions and conscious perception and access (e.g. Melloni et al. 2011; Pinto et al. 2015; Stein and Peelen 2015; Meijs et al. 2018, 2019). This surprising result might be the consequence of respondents interpreting ‘promising’ as having future explanatory potential. Because newer theories (such as PPT) have not been put to the test to the same extent as older, more established theories, they might inherently carry more promise than these established theories. Two other (established) theories received a majority of positive evaluations, namely global (neuronal) workspace theory and higher-order theories, but they still received a considerable number of (strong or moderate) negative ratings (Fig. 3). Our results show that there is no single theory that the majority of respondents currently endorse, in line with the lack of agreement on the definitional aspects of consciousness.

We observed correlations between responses to a subset of the general questions (phenomenal, higher-order, and explanatory gap) and theory preferences (e.g. respondents in our survey who favour higher-order theories and global (neuronal) workspace theory tend to think that there is no phenomenal content beyond that to which we have cognitive access), indicating that certain sets of responses tend to go together in the evaluation of theories and definitions. These correlations revealed a clear distinction between theories that adhere to phenomenal consciousness (LRT and IIT) and theories that do so less (i.e. PPT, global (neuronal) workspace theory, and higher-order theories).

Finally, there was considerable overlap in respondents’ preference for particular theories, which resulted in three separate network clusters. This might reflect that various theories of consciousness share commonalities and may not be as distinct as they are sometimes presented to be. In line with this, recently several attempts have been made to look for commonalities between the main theories in the field (Shea and Frith 2019; Northoff and Lamme 2020; Wiese 2020; Seth and Bayne 2022), and our approach of assessing commonalities in the evaluation of theories might be informative for such efforts to realize theoretical alignment.

Despite a lack of consensus regarding the definition and most promising theory of consciousness, there appears to be agreement about how to best measure its presence/absence (Fig. 4). Our respondents prefer ‘subjective’ measures based on subjects’ introspective reports (‘have you seen the stimulus? yes/no’) over ‘objective’ measures, which are purely performance-based (e.g. the ability to discriminate between two alternatives). Whilst most respondents think the best way to check for consciousness is by subjective report, most also believe that the ‘true-NCC’ approach is necessary. This reveals a tension, because the latter approach attempts to exclude prerequisites and consequences of consciousness (e.g. report-related processes), which are often impossible to rule out using subjective measures in combination with post hoc trial sorting (Schmidt 2015; King et al. 2016; Shanks 2017). Respondents agreed that conscious perception should be separated from response biases in the search for the NCC (Fig. 2), and it has been argued in the past that subjective measures of consciousness are more susceptible to criterion shifts and response biases than objective measures striving for zero sensitivity (d′ = 0; Reingold and Merikle 1990; Holender 1992; Merikle and Reingold 1998; Duscherer and Holender 2005; Schmidt 2015).
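To make "zero sensitivity (d′ = 0)" concrete, the standard signal-detection formula d′ = z(H) − z(F) can be sketched as follows. Here H is the hit rate, F is the false-alarm rate, and z is the inverse standard normal CDF. An observer whose hit rate equals their false-alarm rate has d′ = 0, whatever their response criterion. The function name and the example rates are illustrative and are not taken from the cited studies.

```python
from statistics import NormalDist


def d_prime(hit_rate, false_alarm_rate):
    """Signal-detection sensitivity d' = z(H) - z(F).

    z is the inverse of the standard normal CDF. Both rates must lie strictly
    between 0 and 1; rates of exactly 0 or 1 need a correction first
    (e.g. a log-linear correction), which is not applied here.
    """
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(false_alarm_rate)
```

With hypothetical rates, `d_prime(0.5, 0.5)` and `d_prime(0.3, 0.3)` are both 0, whereas `d_prime(0.8, 0.2)` is about 1.68. Because d′ separates sensitivity from the response criterion, it is the kind of measure that objective approaches aim to drive to zero.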

A wide range of tasks is being used to manipulate conscious perception (Fig. 4, ‘task’), in line with the wide-ranging conceptual definitions of consciousness, allowing for manipulations that target multiple, different aspects of consciousness (Hulme et al. 2009; Irvine 2013). This may partly reflect the assumption that different types of manipulations are best combined with different neuroimaging methods. One consequence of the diverse combinations of methods and analysis schemes (e.g. time-domain vs. frequency-domain responses) is that, when looked at collectively, putative signatures of consciousness cover large swathes of the brain (excluding the cerebellum; Dehaene 2014). A more promising avenue for theory disambiguation might focus on the temporal profile of processes related to consciousness. Here, theories such as global workspace theory and higher-order theories emphasize relatively late signatures, whilst others, such as LRT, IIT, and some interpretations of PPT, emphasize relatively early signatures. Interestingly, empirical evidence for early versus late signatures is currently hotly debated (compare, for example, Dembski et al. 2021; Sergent et al. 2021).

Some putative neural signatures of consciousness have been observed in several species, including macaque monkeys (Supèr et al. 2001; van Vugt et al. 2018), corvids (Nieder et al. 2020), ferrets (Yin et al. 2020), and mice (Allen et al. 2017; Steinmetz et al. 2019). Not surprisingly, our respondents tend to believe that consciousness is a gradual phenomenon in the animal kingdom (Fig. 5). Because consciousness is not considered a uniquely human characteristic, integrating human and non-human animal studies is important for a full understanding of the phenomenon, in line with several recent proposals (Edelman and Seth 2009b; Birch et al. 2020; Nieder et al. 2020). To integrate findings successfully across scientific disciplines, it is all the more important to elucidate and unify conceptual and methodological goals within the field and its subfields.

There was strong agreement regarding the possibility of unconscious occurrence for almost all functions we included in our survey. This is in line with the idea that many cognitive and perceptual processes in the brain can be activated unconsciously and that consciousness is associated with the integration of information among distant brain modules (Lamme and Roelfsema 2000; Dehaene and Naccache 2001). Our respondents were more divided regarding whether high-level cognitive functions, such as arithmetic, working memory, thinking, and metacognition, could be implemented without consciousness, reflecting recent debates in the literature, e.g. on unconscious arithmetic (Sklar et al. 2012; Karpinski et al. 2019) and unconscious working memory (Soto et al. 2011; Stein et al. 2016; Trübutschek et al. 2017). It seems that the general assumption among those surveyed is that virtually all cognitive functions can happen unconsciously. Even for the two ‘highest’ cognitive functions, thinking and metacognition, the ‘yes’ responses were on par with the ‘no’ responses, despite scarce empirical evidence. Thus, even though several methodological caveats have been identified over the years in the study of unconscious cognition (Schmidt and Vorberg 2006; Newell and Shanks 2014; Shanks 2017; Meyen et al. 2021; Stein et al. 2021) and the depth and scope of unconscious processes are debated (Dehaene and Naccache 2001; Kunde et al. 2012; van Gaal and Lamme 2012; Hassin 2013; Newell and Shanks 2014; Beauny et al. 2020; Meyen et al. 2021), it seems that at present at least some members of the consciousness research community are convinced of the existence of extensive unconscious processing in the brain.

Limitations and future outlook

Our survey focused on theoretical, neuroscientific, and methodological issues in the field of consciousness, targeting scientists and philosophers who are currently studying consciousness. In contrast, previous surveys about consciousness and related topics have targeted academics and/or non-academics, focusing more on metaphysics (Barušs and Moore 1998; Demertzi et al. 2009; Bourget and Chalmers 2014, 2020) and on funding and job opportunities in the field of consciousness science (Michel et al. 2018). The data presented here were collected during the annual ASSC conferences in 2018 (Poland, Europe) and 2019 (Canada, North America); see the Supplements for results that also include non-ASSC attendees. By mainly targeting ASSC attendees, we aimed to survey a group of consciousness researchers who are actively involved in the field. However, the sample was a self-selected convenience sample of researchers attending ASSC, and although ASSC is the main/largest interdisciplinary consciousness science conference, we have not formally established whether they form a representative sample of consciousness scientists and philosophers at large. It therefore remains possible that more extensive surveys covering multiple years and multiple conferences would paint a different picture.

Because we aimed to limit the time needed to fill out the questionnaire to increase the response rate, we had to decide which questions to include. Therefore, some questions that could have been illuminating were omitted. For example, it is likely that the responses to several questions are associated with people’s commitment to a particular position regarding the mind–brain relationship (or mind–body problem), but we did not explicitly ask respondents about their commitments in this regard. It is possible that respondents with different commitments, such as dualists compared to (reductive) materialists (or non-physicalists vs. physicalists), may have provided distinctive responses to several questions that we did ask. These include questions about the ‘explanatory gap’ (whether, once all functional and behavioural properties have been fully explained, something will still be left out of the explanation of consciousness) and ‘biology’ (whether we could have a complete biophysical explanation of consciousness). It has also been shown that taking different positions towards the mind–brain relationship has implications for both scientific practice and clinical work (Demertzi et al. 2009). Furthermore, although our survey included several questions related to the definition of consciousness, we did not ask participants to write down their explicit (working) definition of consciousness. Whilst such a question might have been informative, we expected that many respondents would have difficulty formulating their definition. Moreover, responses to such open questions would have been difficult to analyse and interpret.

Our results show that many views and opinions currently coexist in the consciousness community. Moreover, individual respondents appear to hold views that are not always completely consistent from a theoretical point of view. It should be acknowledged that the consciousness community spans people from various fields (philosophy, neuroscience, psychology, etc.) and that the questions in our survey tackled central issues in each of these fields. It may therefore be that, for example, not all neuroscientists are familiar with the philosophical thought experiments, whilst some philosophers may not be so familiar with the neural measures, possibly partly explaining observed inconsistencies in the response patterns of our participants.

Obviously, the results presented here do not reflect the ‘truth’ about consciousness, and in that respect, they will not play any evidential role in the science of consciousness. Rather, they provide us with a snapshot of the current views of a sample of consciousness researchers. Whilst this sociological information is interesting in itself, we believe our findings also have the potential to aid the improvement of the research practices of empirical consciousness science. Our results show that the theoretical foundations of contemporary consciousness research require development to achieve one of the community’s main ambitions: uncovering the neural mechanisms that give rise to conscious experience. Specifically, our results show that opinions differ about the definition of ‘what’ we are searching for, as well as about the most appropriate methodological approach for ‘how’ to search for it. If researchers have different objectives and pursue them in different ways, it is unlikely they will converge on one, unified solution (or indeed, on several interlocking solutions). This situation is reminiscent of a young field of research, will likely improve naturally over time, and is not unique to consciousness science. For example, in the field of memory science, it has been recognized that the alignment of cross-disciplinary understanding of key concepts (e.g. learning and retrieval) is critical for a successful science of memory (Roediger et al. 2007).

In conclusion, by providing a snapshot of differing views and assumptions among consciousness researchers, our survey reveals opportunities for conceptual development and underlines the importance of consciousness researchers being clear about what they are talking about when they talk about consciousness.

Supplementary Material

Funding

This study was funded by an Amsterdam Brain & Cognition Talent Grant (2016) from the University of Amsterdam to J.C.F., an NWO Research Talent Grant (number 406.17.109) to L.B. and S.v.G., and an ERC Starting Grant (number 715605) to S.v.G. A.K.S. is grateful to the Dr. Mortimer and Theresa Sackler Foundation; to the Canadian Institute for Advanced Research Program on Brain, Mind, and Consciousness; and to the European Research Council (Advanced Investigator Grant number 101019254).

Footnotes

In this survey, our questions were restricted to conscious ‘content’, and respondents were instructed to focus on it, in the following sense: ‘what one is conscious of, when one is conscious’.

The formulation of this question might have been suboptimal as we wrote that Mary knows ‘everything about colour vision’. It would have been better to say that Mary knows all the physical facts, which leaves room to argue about whether she knows everything. However, based on the results, it appears the respondents have interpreted this along the lines of ‘physical facts’, presumably because the knowledge argument is very familiar.

Note that it was not possible to compare the response tendencies of senior and junior researchers on the methodology questions: the ranking format of the answer options resulted in too many missing values, leaving too small an effective sample size for statistical analyses.

Contributor Information

Jolien C Francken, Department of Psychology, University of Amsterdam, Nieuwe Achtergracht 129-B, 1018 WS, Amsterdam, the Netherlands; Amsterdam Brain and Cognition, University of Amsterdam, Nieuwe Achtergracht 129-B, 1018 WS, Amsterdam, the Netherlands; Institute for Interdisciplinary Studies, University of Amsterdam, Science Park 904, 1098 XH, Amsterdam, the Netherlands; Faculty of Philosophy, Theology and Religious Sciences, Radboud University, Erasmusplein 1, 6525 HT, Nijmegen, the Netherlands.

Lola Beerendonk, Department of Psychology, University of Amsterdam, Nieuwe Achtergracht 129-B, 1018 WS, Amsterdam, the Netherlands; Amsterdam Brain and Cognition, University of Amsterdam, Nieuwe Achtergracht 129-B, 1018 WS, Amsterdam, the Netherlands.

Dylan Molenaar, Department of Psychology, University of Amsterdam, Nieuwe Achtergracht 129-B, 1018 WS, Amsterdam, the Netherlands; Amsterdam Brain and Cognition, University of Amsterdam, Nieuwe Achtergracht 129-B, 1018 WS, Amsterdam, the Netherlands.

Johannes J Fahrenfort, Department of Psychology, University of Amsterdam, Nieuwe Achtergracht 129-B, 1018 WS, Amsterdam, the Netherlands; Amsterdam Brain and Cognition, University of Amsterdam, Nieuwe Achtergracht 129-B, 1018 WS, Amsterdam, the Netherlands; Department of Experimental and Applied Psychology, Vrije Universiteit, Van der Boechorststraat 7, 1081 BT, Amsterdam, the Netherlands.

Julian D Kiverstein, Academic Medical Centre, University of Amsterdam, Meibergdreef 9, 1105 AZ, Amsterdam, the Netherlands.

Anil K Seth, Department of Informatics, University of Sussex, Sussex House, Falmer, Brighton BN1 9RH, UK; Sackler Centre for Consciousness Science, University of Sussex, Sussex House, Falmer, Brighton BN1 9RH, UK; Canadian Institute for Advanced Research (CIFAR) Program on Brain, Mind, and Consciousness, MaRS Centre, West Tower, 661 University Avenue, Toronto, ON M5G 1M1, Canada.

Simon van Gaal, Department of Psychology, University of Amsterdam, Nieuwe Achtergracht 129-B, 1018 WS, Amsterdam, the Netherlands; Amsterdam Brain and Cognition, University of Amsterdam, Nieuwe Achtergracht 129-B, 1018 WS, Amsterdam, the Netherlands.

Supplementary data

Supplementary data is available at NCONSC online.

Data availability

Data are available at the OSF: https://osf.io/xz3ua/.

Author contributions

J.C.F., J.J.F., A.K.S., and S.v.G. developed the study concept and design. Data collection was performed by J.C.F. and L.B. J.C.F., L.B., D.M., and S.v.G. performed the data analysis and interpretation. J.C.F. drafted the manuscript, and L.B., J.J.F., J.D.K., A.K.S., and S.v.G. provided critical revisions. All authors approved the final version of the manuscript for submission.

Conflict of interest statement

None declared.

References

- Abend G. What are neural correlates neural correlates of? BioSocieties 2017;12:415–38.

- Allen WE, Kauvar IV, Chen MZ et al. Global representations of goal-directed behavior in distinct cell types of mouse neocortex. Neuron 2017;94:891–907.e6.

- Aru J, Bachmann T, Singer W et al. Distilling the neural correlates of consciousness. Neurosci Biobehav Rev 2012;36:737–46.

- Atmanspacher H. Quantum Approaches to Consciousness. In: Zalta EN (ed.), The Stanford Encyclopedia of Philosophy, 2020.

- Baars BJ. A Cognitive Theory of Consciousness. Cambridge University Press, 1988.

- Barrett LF. The future of psychology: connecting mind to brain. Psychol Inq 2009;4:326–39.

- Barušs I, Moore RJ. Beliefs about consciousness and reality of participants at “Tucson II”. J Conscious Stud 1998;5:483–96.

- Beauny A, de Heering A, Muñoz Moldes S et al. Unconscious categorization of submillisecond complex images. PLoS One 2020;15:e0236467.

- Bilder RM, Sabb FW, Parker DS et al. Cognitive ontologies for neuropsychiatric phenomics research. Cogn Neuropsychiatry 2009;14:419–50.

- Birch J, Schnell AK, Clayton NS. Dimensions of animal consciousness. Trends Cogn Sci 2020;24:789–801.

- Bisenius S, Trapp S, Neumann J et al. Identifying neural correlates of visual consciousness with ALE meta-analyses. NeuroImage 2015;122:177–87.

- Block N. On a confusion about a function of consciousness. Behav Brain Sci 1995;18:227–87.

- Block N. How can we find the neural correlate of consciousness? Trends Neurosci 1996;19(11):456–9.

- Block N. Perceptual consciousness overflows cognitive access. Trends Cogn Sci 2011;15:567–75.

- Block N. What is wrong with the no-report paradigm and how to fix it. Trends Cogn Sci 2019;23:1003–13.

- Boly M, Massimini M, Tsuchiya N et al. Are the neural correlates of consciousness in the front or in the back of the cerebral cortex? Clinical and neuroimaging evidence. J Neurosci 2017;37:9603–13.

- Bourget D, Chalmers DJ. What do philosophers believe? Philos Stud 2014;170:465–500.

- Bourget D, Chalmers DJ. Philosophers on Philosophy: The 2020 PhilPapers Survey. 2020.

- Brown R, Lau H, LeDoux JE. Understanding the higher-order approach to consciousness. Trends Cogn Sci 2019;23:754–68.

- Casali AG, Gosseries O, Rosanova M et al. A theoretically based index of consciousness independent of sensory processing and behavior. Sci Transl Med 2013;5(198):198ra105. doi: 10.1126/scitranslmed.3006294

- Chalmers DJ. Facing up to the problem of consciousness. J Conscious Stud 1995;2:200–19.

- Chalmers DJ. What is a neural correlate of consciousness? In: Metzinger T (ed.), Neural Correlates of Consciousness: Empirical and Conceptual Questions. MIT Press, 2000, 31. doi: 10.1093/acprof:oso/9780195311105.001.0001

- Chalmers DJ. The hard problem of consciousness. Blackwell Companion Conscious 2017;32–42. doi: 10.1002/9781119132363.ch3

- Cohen MA, Dennett DC. Consciousness cannot be separated from function. Trends Cogn Sci 2011;15:358–64.

- Crick F, Koch C. Are we aware of neural activity in primary visual cortex? Nature 1995;375:121–3.

- Cummins R. Functional analysis. J Philos 1975;72:741–65.

- de Graaf TA, Hsieh PJ, Sack AT. The “correlates” in neural correlates of consciousness. Neurosci Biobehav Rev 2012;36:191–7.

- Dehaene S. Consciousness and the Brain: Deciphering How the Brain Codes Our Thoughts. Penguin Putnam Inc, 2014.

- Dehaene S, Changeux JP. Experimental and theoretical approaches to conscious processing. Neuron 2011;70:200–27.

- Dehaene S, Changeux JP, Naccache L et al. Conscious, preconscious, and subliminal processing: a testable taxonomy. Trends Cogn Sci 2006;10:204–11.

- Dehaene S, Lau H, Kouider S. What is consciousness, and could machines have it? Science 2017;358:486–92.

- Dehaene S, Naccache L. Towards a cognitive neuroscience of consciousness: basic evidence and a workspace framework. Cognition 2001;79:1–37.

- Dehaene S, Sergent C, Changeux JP. A neuronal network model linking subjective reports and objective physiological data during conscious perception. PNAS 2003;100:8520–5.

- Dembski C, Koch C, Pitts M. Perceptual awareness negativity: a physiological correlate of sensory consciousness. Trends Cogn Sci 2021;25:660–70.

- Demertzi A, Liew C, Ledoux D et al. Dualism persists in the science of mind. Ann N Y Acad Sci 2009;1157:1–9.

- Dennett DC. Consciousness Explained. Little, Brown, 1991.

- Dennett DC. Are we explaining consciousness yet? Cognition 2001;79:221–37.

- Doerig A, Schurger A, Herzog MH. Hard criteria for empirical theories of consciousness. Cogn Neurosci 2020;1–22.

- Duscherer K, Holender D. The role of decision biases in semantic priming effects. Swiss J Psychol 2005;64:249–58.

- Edelman DB, Seth AK. Animal consciousness: a synthetic approach. Trends Neurosci 2009a;32:476–84.

- Edelman DB, Seth AK. Animal consciousness: a synthetic approach. Trends Neurosci 2009b;32:476–84.

- Epskamp S, Fried EI. A tutorial on regularized partial correlation networks. Psychol Methods 2018;23:617–34.

- Fahrenfort JJ, van Leeuwen J, Olivers CNL et al. Perceptual integration without conscious access. Proc Natl Acad Sci 2017;114:3744–9.

- Fernández-Espejo D, Owen AM. Detecting awareness after severe brain injury. Nat Rev Neurosci 2013;14:801–9.

- Figdor C. What is the “cognitive” in cognitive neuroscience? Neuroethics 2013;6:105–14.

- Foygel R, Drton M. Extended Bayesian information criteria for Gaussian graphical models. Advances in Neural Information Processing Systems, 2010;23.

- Francken JC, Slors M. From commonsense to science, and back: the use of cognitive concepts in neuroscience. Conscious Cogn 2014;29:248–58.

- Frith CD. What is consciousness for? Pragmat Cogn 2010;18:497–551.

- Frith CD, Metzinger T. What’s the use of consciousness? In: Engel AK, Friston KF, Kragic D (eds.), Where’s the Action? The Pragmatic Turn in Cognitive Science. Cambridge, MA: MIT Press, 2016.

- Ginsburg S, Jablonka E. The Evolution of the Sensitive Soul. MIT Press, 2019.

- Hassin RR. Yes it can: on the functional abilities of the human unconscious. Perspectives Psychol Sci 2013;8:195–207.

- Hohwy J, Seth A. Predictive processing as a systematic basis for identifying the neural correlates of consciousness. Philos Mind Sci 2020;1(II):3. doi: 10.33735/phimisci.2020.II.64

- Holender D. Expectancy effects, congruity effects and the interpretation of response latency measurement. In: Alegria J, Holender D, Junca de Morais J et al. (eds.), Analytic Approaches to Human Cognition. Amsterdam: Elsevier Science Publishers, 1992, 351–75.

- Hulme OJ, Friston KF, Zeki S. Neural correlates of stimulus reportability. J Cogn Neurosci 2009;21:1602–10.

- Irvine E. Consciousness as a Scientific Concept. Springer, 2013.

- Jackson F. Epiphenomenal qualia. Philos Q 1982;32:127.

- JASP Team. JASP (Version 0.13.1). 2020.

- Karpinski A, Briggs JC, Yale M. A direct replication: unconscious arithmetic processing. Eur J Soc Psychol 2019;49:637–44.

- King J-R, Pescetelli N, Dehaene S et al. Brain mechanisms underlying the brief maintenance of seen and unseen sensory information. Neuron 2016;92:1122–34.

- Kirk R. Zombies. In: Zalta EN (ed.), The Stanford Encyclopedia of Philosophy, 2019.

- Klink PC, Self MW, Lamme VA, Roelfsema PR et al. The Constitution of Phenomenal Consciousness. Toward a Science and Theory. Theories and methods in the scientific study of consciousness, 2015, 92.

- Kunde W, Reuss H, Kiesel A. Consciousness and cognitive control. Adv Cogn Psychol 2012;8:9–18.

- Lamme VAF. Towards a true neural stance on consciousness. Trends Cogn Sci 2006;10:494–501.

- Lamme VAF. How neuroscience will change our view on consciousness. Cogn Neurosci 2010;1:204–20.

- Lamme VAF, Roelfsema PR. The distinct modes of vision offered by feedforward and recurrent processing. Trends Neurosci 2000;23:571–9.

- Lau H, Rosenthal D. Empirical support for higher-order theories of conscious awareness. Trends Cogn Sci 2011;15:365–73.

- Mashour GA, Roelfsema P, Changeux JP et al. Conscious processing and the global neuronal workspace hypothesis. Neuron 2020;105(5):776–98.

- Meijs EL, Mostert P, Slagter HA et al. Exploring the role of expectations and stimulus relevance on stimulus-specific neural representations and conscious report. Neurosci Conscious 2019;2019(1). doi: 10.1093/nc/niz011

- Meijs EL, Slagter HA, de Lange FP et al. Dynamic interactions between top–down expectations and conscious awareness. J Neurosci 2018;38:2318–27.

- Melloni L, Schwiedrzik CM, Müller N et al. Expectations change the signatures and timing of electrophysiological correlates of perceptual awareness. J Neurosci 2011;31:1386–96.

- Merikle PM, Reingold EM. On demonstrating unconscious perception: comment on Draine and Greenwald (1998). J Exp Psychol Gen 1998;127:304–10.

- Meyen S, Zerweck IA, Amado C et al. Advancing research on unconscious priming: when can scientists claim an indirect task advantage? J Exp Psychol Gen 2022;151(1):65.

- Michel M, Fleming SM, Lau H et al. An informal internet survey on the current state of consciousness science. Front Psychol 2018;9:2134.

- Newell BR, Shanks DR. Unconscious influences on decision making: a critical review. Behav Brain Sci 2014;38:1–19.

- Nida-Rümelin M, O Conaill D. Qualia: The Knowledge Argument. In: Zalta EN (ed.), The Stanford Encyclopedia of Philosophy, 2019.

- Nieder A, Wagener L, Rinnert P. A neural correlate of sensory consciousness in a corvid bird. Science 2020;369:1626–9.

- Northoff G, Lamme VAF. Neural signs and mechanisms of consciousness: is there a potential convergence of theories of consciousness in sight? Neurosci Biobehav Rev 2020;118:568–87.

- O’Regan JK, Noë A. A sensorimotor account of vision and visual consciousness. Behav Brain Sci 2001;24:939–73.

- Odegaard B, Knight RT, Lau H et al. Should a few null findings falsify prefrontal theories of conscious perception? J Neurosci 2017;37:9593–602.

- Pennartz CMA. Consciousness, representation, action: the importance of being goal-directed. Trends Cogn Sci 2018;22:137–53.

- Pinto Y, van Gaal S, de Lange FP et al. Expectations accelerate entry of visual stimuli into awareness. J Vis 2015;15:13.

- Pitts MA, Lutsyshyna LA, Hillyard SA. The relationship between attention and consciousness: an expanded taxonomy and implications for ‘no-report’ paradigms. Philos Transact Royal Soc B 2018;373:20170348.

- Prinz J. A neurofunctional theory of visual consciousness. Conscious Cogn 2000;9:243–59.

- Reingold EM, Merikle PM. On the inter‐relatedness of theory and measurement in the study of unconscious processes. Mind Lang 1990;5:9–28.

- Roediger H, Dudai Y, Fitzpatrick S (eds.). Science of Memory: Concepts. Oxford University Press, 2007.

- Rosenthal D. Consciousness and Mind. Clarendon, 2005.

- Schmidt T. Invisible stimuli, implicit thresholds: why invisibility judgments cannot be interpreted in isolation. Adv Cogn Psychol 2015;11:31–41.

- Schmidt T, Vorberg D. Criteria for unconscious cognition: three types of dissociation. Percept Psychophys 2006;68:489–504.

- Sergent C, Corazzol M, Labouret G et al. Bifurcation in brain dynamics reveals a signature of conscious processing independent of report. Nat Commun 2021;12:1–19.

- Seth AK, Bayne T. Theories of consciousness. Nat Rev Neurosci 2022;2022:1–14.

- Seth AK, Tsakiris M. Being a beast machine: the somatic basis of selfhood. Trends Cogn Sci 2018;22:969–81.

- Shanks DR. Regressive research: the pitfalls of post hoc data selection in the study of unconscious mental processes. Psychon Bull Rev 2017;24:752–75.

- Shea N, Frith CD. The global workspace needs metacognition. Trends Cogn Sci 2019;23:560–71.

- Sklar AY, Levy N, Goldstein A et al. Reading and doing arithmetic nonconsciously. PNAS 2012;109:19614–9.

- Soto D, Mäntylä T, Silvanto J. Working memory without consciousness. Curr Biol 2011;21:R912–3.

- Stein T, Kaiser D, Fahrenfort JJ et al. The human visual system differentially represents subjectively and objectively invisible stimuli. PLoS Biol 2021;19:e3001241.

- Stein T, Kaiser D, Hesselmann G. Can working memory be non-conscious? Neurosci Conscious 2016;2016:niv011.

- Stein T, Peelen MV. Content-specific expectations enhance stimulus detectability by increasing perceptual sensitivity. J Exp Psychol Gen 2015;144:1089–104.

- Steinmetz NA, Zatka-Haas P, Carandini M et al. Distributed coding of choice, action and engagement across the mouse brain. Nature 2019;576:266–73.