Abstract

Purpose: To evaluate the quality of images generated by eight commercially available intraoral sensors. Methods: Eighteen clinicians ranked the quality of bitewings acquired from one subject with eight different intraoral sensors. Analytical methods included the Visual Grading Characteristics method, which quantifies subjective opinions so that they can be analysed statistically. Results: The Dexis sensor image was ranked significantly better than the Sirona and Carestream-Kodak sensor images, and the image captured with the Carestream-Kodak sensor was ranked significantly worse than those captured with the Dexis, Schick and Cyber Medical Imaging sensors. The ImageWorks sensor image was rated the lowest by all clinicians. All other comparisons were non-significant. Conclusions: None of the sensors generated images of significantly better quality than all of the other sensors tested. Further research should be directed towards determining the clinical significance of the differences in image quality reported in this study.

Key words: Imaging, oral diagnosis, digital sensors, complementary metal-oxide semiconductor, Visual Grading Characteristics

INTRODUCTION

The use of computing and digital technologies is an emerging trend in dentistry. Already in the 1990s, 66.8% of dentists in the USA used computers in their practice1. In 2000, an estimated 5% of practitioners in North America used digital radiography2; in 2005, 25% of surveyed dentists used some form of digital radiography and 18% planned to purchase digital equipment within 1 year3. The proportion of dentists using digital radiography was reported to be 30% in 2010, and this proportion is expected to continue to increase4. Of the digital technologies expected to be adopted in practice, digital radiography is quickly becoming the leading imaging technique in dentistry3. The most significant factors in deciding whether to adopt digital imaging in a dental practice are the availability and cost of the computer system. Dentists reported that, in addition to the elimination of processing chemicals, lower exposure levels, the ease and cost of image storage and perceived time savings, improved clinical image quality is a prime motive for integrating this technology into practice5., 6.. Others have asserted that the most significant advantages of digital radiography are image archiving and access, computer-aided image interpretation and tools for image enhancement7. Overall, the vast majority of owners of digital imaging systems are satisfied and believe that their productivity has increased3.

Intraoral solid-state rigid sensors are based on either charge-coupled device (CCD) or complementary metal–oxide–semiconductor (CMOS) technology. There is debate as to which technology is more advantageous: CMOS sensors have lower energy requirements, but both CCD and CMOS sensors can capture 12-bit images and have clinically acceptable spatial resolution3., 7.. CMOS technology is currently incorporated in the products of several leading manufacturers.

It has already been shown that intraoral digital sensors provide diagnostic images. Sanderink et al.8 found that early digital systems were useful for evaluating endodontic file lengths up to a file size of 15, and a more recent study by Brito-Júnior et al.9 found that measurements were precise for files up to a size of 6. The detection of primary and recurrent caries is similar with digital images and with film10., 11.. In addition, studies have shown no significant difference in the sensitivity and specificity of dentinal caries detection between digital and film-based bitewings10., 11..

The topic of image quality generated by intraoral digital sensors is complex, because 'defining image quality is a complicated process…part of a longer chain of procedures and actions'7. As Wenzel stated, 'there is a continuous need for the evaluation of new digital intraoral radiography systems that appear on the market, first and foremost for their image quality…'12. Subjective image-quality evaluations have reportedly been performed by small numbers of evaluators, using several digital systems, and usually in vitro13., 14., 15., with prefabricated phantoms or cadavers16., 17., 18., 19..

Image quality can also be affected by placement of the rigid sensor in the mouth, a manoeuvre that is more challenging than placement of film3. Furthermore, clinicians must adapt to digital images, which cover a smaller area than film: for example, the total active area of size 2 film is 1,235 mm², whereas similarly sized digital sensors have active areas of only 802–940 mm². As with conventional radiography, lighting conditions are important for digital image evaluation16., 20., 21., but observers' performance has been found to be independent of the visual characteristics of the display monitor22., 23., 24..
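
To put these area figures in proportion, the short Python calculation below (an illustrative sketch that uses only the areas quoted above) expresses the sensor active areas as a fraction of the active area of size 2 film. On these figures, such sensors cover roughly 65–76% of the film's active area.

```python
# Active areas quoted in the text, in mm^2 (size 2 film vs. similarly sized sensors).
FILM_ACTIVE_AREA_MM2 = 1235
SENSOR_ACTIVE_AREA_RANGE_MM2 = (802, 940)


def fraction_of_film(sensor_area_mm2: float, film_area_mm2: float = FILM_ACTIVE_AREA_MM2) -> float:
    """Return a sensor's active area as a fraction of the film's active area."""
    return sensor_area_mm2 / film_area_mm2


for area in SENSOR_ACTIVE_AREA_RANGE_MM2:
    # Prints "802 mm^2 = 64.9% of size 2 film" and "940 mm^2 = 76.1% of size 2 film".
    print(f"{area} mm^2 = {fraction_of_film(area):.1%} of size 2 film")
```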

In the present study we used a greater number of sensors than previously reported and the image evaluated was captured in vivo. Images acquired with eight digital intraoral sensors were evaluated by faculty who teach undergraduate dental students. The null hypothesis was that no difference would be found in the clinical image quality between the sensors.

MATERIAL AND METHODS

Of 12 companies contacted to provide equipment for this evaluation, eight responded and provided size 2 CMOS intraoral sensors: XDR (Cyber Medical Imaging, Los Angeles, CA, USA); RVG 6100 (Carestream Dental LLC, Atlanta, GA, USA); Platinum (DEXIS LLC, Hatfield, PA, USA); CDR Elite (Schick Technologies, Long Island City, NY, USA); ProSensor (Planmeca, Helsinki, Finland); EVA (ImageWorks, Elmsford, NY, USA); XIOS Plus (Sirona, Bensheim, Germany); and GXS-700 (Gendex Dental Systems, Hatfield, PA, USA). The Platinum sensor is available in a single size and is considered size 2 because it is used for radiographs of posterior teeth and for bitewings. Each sensor was used to capture one left bitewing from the same subject (one of the authors).

Institutional Review Board (IRB) approval was sought; however, because this was a single-subject study, the Case Western Reserve University IRB concluded that it did not require further review or approval. The faculty member who volunteered as the subject (one of the authors) provided verbal consent and was informed of the effective radiation dose of the bitewings (a total of 10 μSv for eight posterior bitewings)25.

The volunteer was protected with a lead apron and a thyroid collar. Sensors were positioned intraorally with a Rinn kit (model XCP-DS; Dentsply Rinn, Elgin, IL, USA), and the X-ray source was a Planmeca Intra X-ray DC machine (Planmeca) with rectangular collimation, set to exposure parameters for digital imaging (63 kVp, 8 mA and 0.064 s). The bitewings were taken by one of the authors (W.A.R.), an oral and maxillofacial radiologist; in total, eight bitewings were taken, and no retakes were necessary.

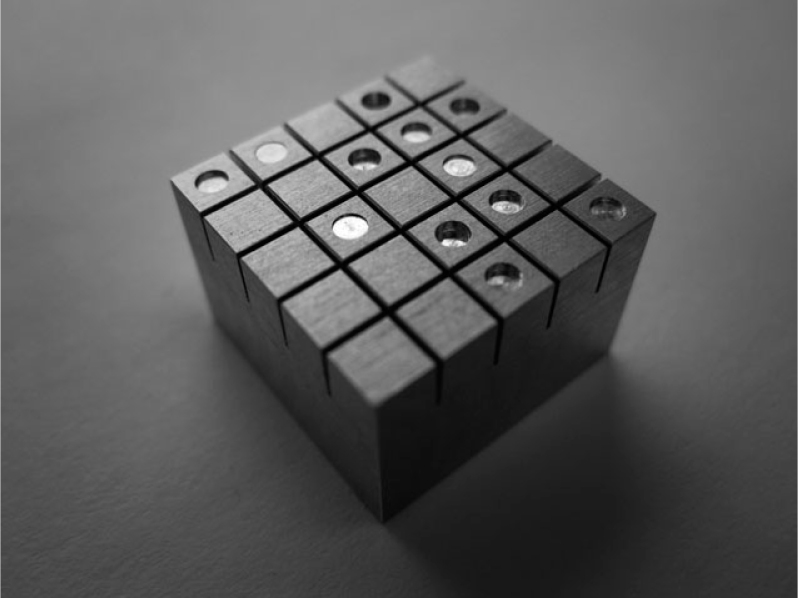

The same sensors were used to capture the image of an aluminum phantom (99% pure aluminum; manufactured according to our specifications by Bien Air Dental SA, Bienne, Switzerland), 1.5 cm × 1.5 cm × 1 cm (w × l × h) in size (Figure 1). This type of phantom has been previously described16., 19.. The same X-ray machine and settings that were used for the subject were used for the phantom. The phantom was divided into 25 squares (3 mm × 3 mm), of which 12 had a round well with a diameter of 1.5 mm and with a depth varying from 0.05 to 0.6 mm, in increments of 0.05 mm. The wells were randomly distributed over the surface of the phantom. All dimensions had a size tolerance of ±0.005 mm.

Figure 1.

Aluminum Phantom with 12 wells of 1.5 mm in diameter and with a depth varying from 0.05 to 0.6 mm, in increments of 0.05 mm.

All sensors were operated with their native software, installed on a 15″ MacBook Pro (Apple Inc., Cupertino, CA, USA) with a Core 2 Duo processor and 4 GB of RAM. The computer ran a 32-bit version of Windows 7 Professional (Microsoft Corporation, Redmond, WA, USA), with the latest updates, installed through Boot Camp, which allows Intel-based Apple computers to run Windows natively, as on a PC.

The latest version of the software application (at the time of testing) was used to capture radiographs from each sensor: XDR 3.0.5 Beta (XDR; Cyber Medical Imaging); KDI 6.11.7.0 (RVG 6100; Carestream Dental LLC); Dexis 9.2 (Platinum; DEXIS LLC); CDR DICOM 4.5.0.92 (CDR Elite; Schick Technologies); Romexis 2.3.1.R (ProSensor; Planmeca); EVAsoft 1.0 (EVA; ImageWorks); Sidexis 2.5.2 (XIOS Plus; Sirona); and VixWin Platinum 2.0 (Gendex GXS-700; Gendex Dental Systems).

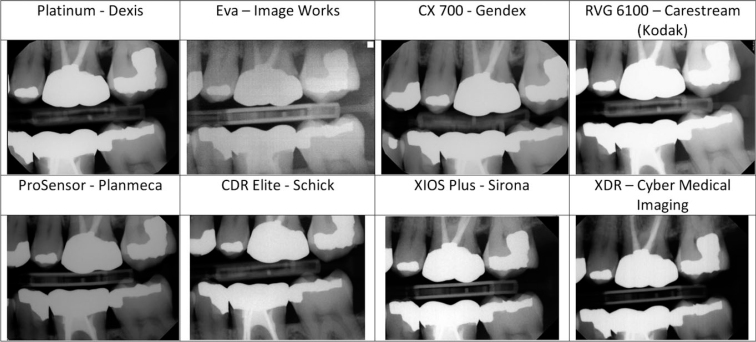

Images were saved in uncompressed TIFF format (Figure 2). For evaluation, the images were displayed on a Dell G2410 monitor at a resolution of 1920 × 1080 pixels in a room without ambient light. Clinical and phantom images were displayed in separate templates created in Adobe Lightroom version 3.5, 64-bit (Adobe Systems Incorporated, San Jose, CA, USA). In the Lightroom templates, images were not labelled with the names of the sensors (Figure 3), and the phantom images were rotated randomly. The templates displayed the images side by side; double-clicking an image enlarged it, and double-clicking it again returned to the template view. The evaluators were allowed to enlarge each image as desired but were not allowed to adjust other parameters, such as contrast or brightness.

Figure 2.

Clinical images acquired with the intraoral digital sensors tested.

Figure 3.

Clinical images displayed in Adobe Lightroom for evaluation.

Image evaluation

Eighteen clinicians evaluated the clinical and the phantom images. All evaluators had at least 1 year of experience with digital radiography in the undergraduate clinic. For the clinical images, the clinicians were presented with the following instructions: ‘Arrange the images according to the image clinical quality (best being 1st, worst being 8th). Image quality parameters include but are not limited to clarity, diagnostic value, contrast, sharpness, etc. Please provide your overall evaluation of the clinical quality of the image’. The results of the evaluation were recorded on a separate form for each clinician.

For the phantom images, the clinicians were given a form with a 5 × 5 grid of squares representing the phantom. The evaluators were asked to identify the presence of wells at the 25 possible locations and to mark the results on the form. These identifications were used to determine whether any evaluator's assessments should be considered outliers; if so, the protocol stated that the scores generated by that evaluator for the clinical images would be excluded from the analysis.
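
Scoring a phantom form amounts to comparing the marked squares with the known well positions. The Python sketch below shows one way to do this. The 0-based (row, column) coordinates and the example well layout are hypothetical, because the actual positions of the 12 wells are not reported.

```python
from typing import NamedTuple, Set, Tuple

Cell = Tuple[int, int]  # (row, column) on the phantom grid, 0-based; hypothetical convention
GRID_SIZE = 5  # the phantom is divided into 5 x 5 squares


class PhantomScore(NamedTuple):
    true_positives: int   # wells that were marked
    false_positives: int  # marked squares that contain no well
    false_negatives: int  # wells that were missed


def score_phantom_form(marked: Set[Cell], wells: Set[Cell]) -> PhantomScore:
    """Compare one evaluator's marked squares with the answer key of well positions."""
    for row, col in marked | wells:
        if not (0 <= row < GRID_SIZE and 0 <= col < GRID_SIZE):
            raise ValueError(f"square {(row, col)} lies outside the {GRID_SIZE} x {GRID_SIZE} grid")
    return PhantomScore(
        true_positives=len(marked & wells),
        false_positives=len(marked - wells),
        false_negatives=len(wells - marked),
    )


# Hypothetical answer key: 12 of the 25 squares contain a well.
EXAMPLE_WELLS = {(0, 0), (0, 1), (0, 3), (1, 0), (1, 4), (2, 2),
                 (2, 4), (3, 1), (3, 3), (4, 0), (4, 2), (4, 4)}

score = score_phantom_form({(0, 1), (2, 2), (3, 3), (1, 1)}, EXAMPLE_WELLS)
print(score)  # PhantomScore(true_positives=3, false_positives=1, false_negatives=9)
```

Summing the false positives per evaluator across all phantom images gives the counts used in the outlier analysis.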

Analytical methods

To detect outliers, the frequency and distribution of the evaluators' false-positive responses for the phantom images were computed. A 95% confidence interval for the mean number of true-positive identifications across all evaluators was also computed.
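
The outlier screen can be sketched as follows: average each evaluator's true-positive count over the phantom images, compute a 95% confidence interval for the mean of those averages, and flag evaluators whose average falls outside it. This Python sketch assumes a normal-approximation interval, because the text does not state how the interval was computed, and the example data are hypothetical. Because a confidence interval for the mean narrows as evaluators are added, a prediction interval is a common alternative when the aim is to screen individual evaluators.

```python
from statistics import NormalDist, mean, stdev
from typing import Dict, List, Sequence, Tuple


def mean_ci(values: Sequence[float], level: float = 0.95) -> Tuple[float, float]:
    """Normal-approximation confidence interval for the mean (an assumption, not the study's stated method)."""
    m = mean(values)
    se = stdev(values) / len(values) ** 0.5
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    return m - z * se, m + z * se


def flag_outliers(hits: Dict[str, List[int]], level: float = 0.95) -> Tuple[Tuple[float, float], List[str]]:
    """hits maps an evaluator ID to that evaluator's true-positive counts, one per phantom image.

    Returns the interval and the sorted IDs of evaluators whose mean count falls outside it.
    """
    evaluator_means = {evaluator: mean(counts) for evaluator, counts in hits.items()}
    low, high = mean_ci(list(evaluator_means.values()), level)
    flagged = sorted(e for e, m in evaluator_means.items() if not low <= m <= high)
    return (low, high), flagged


# Hypothetical data: five evaluators, two phantom images each.
example = {"A": [4, 4], "B": [4, 4], "C": [4, 4], "D": [4, 4], "E": [1, 1]}
interval, flagged = flag_outliers(example)
print(flagged)  # ['E']
```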

For the evaluation of clinical images, a Visual Grading Characteristics (VGC) analysis was performed26., 27.. This method was designed to determine the difference in image quality between two modalities when multiple observers rate images on an ordinal scale. In the current study, the data consisted of multiple ratings for each image on an ordinal scale. For each image to be compared, the frequency of the evaluators' ratings at each level of the scale was calculated. These frequencies were then transformed into cumulative proportions for each level of the evaluation scale, which served as the basis for generating VGC curves.
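
The construction of a VGC curve from rank frequencies can be sketched in Python as below. Cumulative proportions follow the convention of Table 1 (the proportion of ratings at a given rank or worse); each threshold gives one operating point, and the area is computed with the trapezoidal rule. This is a non-parametric estimate in the spirit of Båth and Månsson26, whereas the study obtained its AUC values from ROCKIT, which fits a binormal model, so the results will not match Table 2 exactly. The function names are illustrative.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def cumulative_proportions(freqs: Sequence[int]) -> List[float]:
    """freqs[i] is the number of ratings at rank i + 1 (rank 1 = best).

    Returns cp[i] = proportion of ratings at rank i + 1 or worse, the convention used in Table 1.
    """
    total = sum(freqs)
    remaining = total
    cp = []
    for f in freqs:
        cp.append(remaining / total)
        remaining -= f
    return cp


def vgc_points(freqs_a: Sequence[int], freqs_b: Sequence[int]) -> List[Point]:
    """Operating points (x, y) = (cp_a, cp_b), from the strictest threshold (worst rank) to rank 1."""
    cp_a = cumulative_proportions(freqs_a)
    cp_b = cumulative_proportions(freqs_b)
    return [(0.0, 0.0)] + list(zip(reversed(cp_a), reversed(cp_b)))


def trapezoidal_auc(points: Sequence[Point]) -> float:
    """Area under the piecewise-linear curve through the points (x must be non-decreasing)."""
    return sum((x2 - x1) * (y1 + y2) / 2 for (x1, y1), (x2, y2) in zip(points, points[1:]))


# Rank frequencies from Table 1 (ranks 1 to 8).
PLATINUM = [3, 5, 4, 1, 2, 1, 1, 0]
RVG_6100 = [0, 0, 3, 5, 1, 4, 4, 0]

auc = trapezoidal_auc(vgc_points(PLATINUM, RVG_6100))
print(round(auc, 3))  # 0.811
```

An area above 0.5 here means that the second sensor (RVG 6100) tended to receive worse ranks than the first (Platinum). The trapezoidal estimate of about 0.81 is close to, but not identical with, the 0.8279 that ROCKIT produced for this pair.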

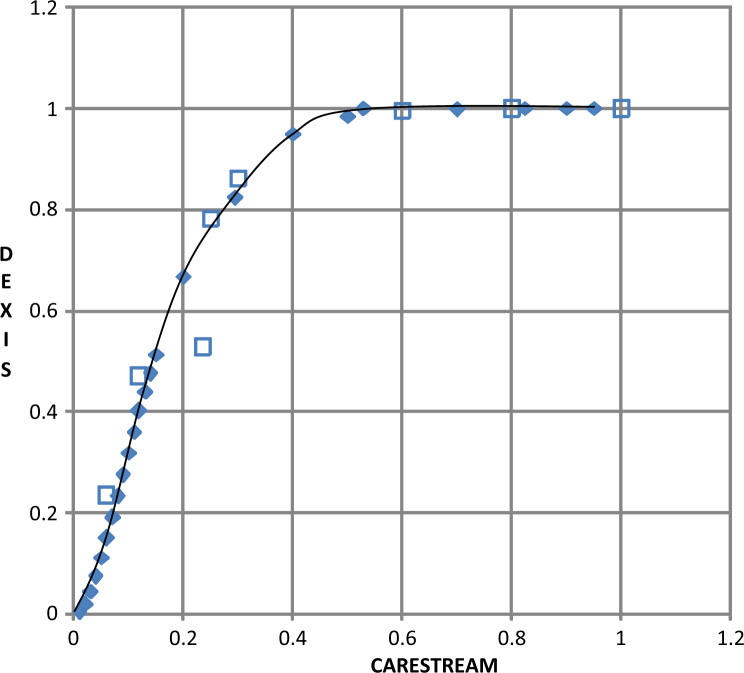

The area under the curve (AUC) was used as a single measure of the difference in image quality between the two modalities compared (i.e. each pair of sensors)26. The AUC represents the difference in overall rankings between the two images for which the VGC curve was generated: an AUC of 0.5 indicates no difference, and an AUC significantly different from 0.5 represents a significant difference between the rankings of the two images.
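
The significance rule can be illustrated with a simple resampling sketch in Python. It estimates the AUC as the probability that a rating of sensor B is worse than a rating of sensor A (ties counted as one half), which equals the trapezoidal area under the empirical VGC curve, and obtains a percentile bootstrap confidence interval. This is not the ROCKIT procedure used in the study. As a simplifying assumption, it resamples each sensor's ratings independently and so ignores the fact that the same evaluators ranked every sensor, because Table 1 reports only per-sensor frequencies.

```python
import random
from typing import List, Sequence, Tuple


def expand(freqs: Sequence[int]) -> List[int]:
    """Turn rank frequencies (rank 1 first) into a list of individual ranks."""
    return [rank for rank, f in enumerate(freqs, start=1) for _ in range(f)]


def pairwise_auc(ranks_a: Sequence[int], ranks_b: Sequence[int]) -> float:
    """P(rank_b > rank_a) + 0.5 * P(tie): how often sensor B is ranked worse than sensor A."""
    wins = sum((b > a) + 0.5 * (b == a) for a in ranks_a for b in ranks_b)
    return wins / (len(ranks_a) * len(ranks_b))


def bootstrap_ci(ranks_a: Sequence[int], ranks_b: Sequence[int], n_boot: int = 2000,
                 level: float = 0.95, seed: int = 1) -> Tuple[float, float]:
    """Percentile bootstrap CI for pairwise_auc, resampling each sensor's ratings independently."""
    rng = random.Random(seed)
    estimates = sorted(
        pairwise_auc(rng.choices(ranks_a, k=len(ranks_a)), rng.choices(ranks_b, k=len(ranks_b)))
        for _ in range(n_boot)
    )
    low_index = round((1 - level) / 2 * n_boot)
    high_index = round((1 + level) / 2 * n_boot) - 1
    return estimates[low_index], estimates[high_index]


def differs_from_chance(ci: Tuple[float, float]) -> bool:
    """Significant at the chosen level when the interval excludes 0.5."""
    low, high = ci
    return not (low <= 0.5 <= high)


PLATINUM = expand([3, 5, 4, 1, 2, 1, 1, 0])  # Table 1 frequencies, ranks 1 to 8
RVG_6100 = expand([0, 0, 3, 5, 1, 4, 4, 0])

ci = bootstrap_ci(PLATINUM, RVG_6100)
print(round(pairwise_auc(PLATINUM, RVG_6100), 3), ci, differs_from_chance(ci))
```

The same `differs_from_chance` check applied to an interval reported in Table 2, such as 0.642–0.937 for Platinum versus RVG 6100, returns `True`, whereas 0.475–0.83 for Platinum versus GXS-700 returns `False`.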

The VGC curves and the corresponding AUC values for each pair of sensors were calculated using the ROCKIT software, ver. 0.9.1 Beta, downloaded from http://metz-roc.uchicago.edu/MetzROC/software28. All other statistics were generated in SPSS for Windows ver. 20 (IBM, Armonk, NY, USA).

RESULTS

Eighteen faculty clinicians who teach in the undergraduate clinic at Case Western Reserve University School of Dental Medicine (CWRU) evaluated the clinical and phantom images. The evaluators' mean time since graduation was 25.12 years (standard deviation = 8.13; range: 11–39 years).

In the outlier analysis, of a total of 15 false positives, nine were attributed to one clinician (four other clinicians had one false positive each, and another clinician had two). This clinician's mean number of true-positive 'hits' (1.92) was outside the 95% confidence interval for the total sample of evaluators (3.23–4.07). In light of these results, this clinician's evaluations were considered outliers and were excluded from the analysis of the clinical images. Consequently, all reported results reflect the evaluations of the remaining 17 clinicians.

To assess the reliability of the rankings of the clinical images by these 17 evaluators, we calculated the inter-rater reliability as the intraclass correlation coefficient (ICC), based on a random-effects model. The ICC was calculated assuming absolute agreement (i.e. only identical rankings from all evaluators count as perfect agreement). For this sample, the ICC was 0.92 (95% confidence interval: 0.80–0.98).
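
For readers who want to reproduce this kind of reliability estimate, the Python sketch below computes the two-way random-effects, absolute-agreement ICC from ANOVA mean squares (McGraw and Wong's ICC(A,1) and ICC(A,k), numerically equivalent to Shrout and Fleiss's ICC(2,1) and ICC(2,k)). The text does not state whether the reported value is the single-measure or the average-measure form, so both are returned; confidence intervals, which require the F distribution, are not computed. The input layout (rows = images, columns = evaluators) is an assumption, and the example data are the worked example from Shrout and Fleiss (1979), not data from this study.

```python
from typing import Sequence, Tuple


def icc_absolute_agreement(scores: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Two-way random-effects ICC for absolute agreement.

    scores[i][j] is the score (here: rank) that evaluator j gave to image i.
    Returns (single-measure ICC, average-measure ICC).
    """
    n = len(scores)      # targets (images)
    k = len(scores[0])   # raters (evaluators)
    grand_mean = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_total = sum((x - grand_mean) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    single = (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)
    average = (ms_rows - ms_error) / (ms_rows + (ms_cols - ms_error) / n)
    return single, average


# Worked example from Shrout and Fleiss (1979): 6 targets rated by 4 judges.
EXAMPLE = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
single, average = icc_absolute_agreement(EXAMPLE)
print(round(single, 2), round(average, 2))  # 0.29 0.62
```

When every evaluator ranks the same set of images, each column has the same mean, so the rater (column) mean square is zero; the absolute-agreement formula still applies in that case.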

The evaluators' rankings of the sensors were compared between all possible sensor pairs using the VGC method26., 27.. Table 1 presents the frequency of each rank (1 = best, 8 = worst) and the cumulative proportions for each level of the evaluation scale. The EVA sensor was not included in the analysis because all evaluators consistently ranked it 8 (worst); as no other sensor was ranked 8, the analysis included only seven ranking levels. We calculated the AUC for each pair of the remaining seven sensors (21 pairs in total) and its 95% confidence interval (Table 2). The AUC is the area under the VGC curve generated for each pair of sensors, as illustrated in Figure 4; the VGC curve is constructed analogously to a receiver operating characteristic (ROC) curve. A difference was considered significant (P < 0.05) when the confidence interval did not include 0.5; in other words, the rankings of two sensors differed significantly when the AUC differed significantly from 0.5. The results show that the clinical image acquired with the Platinum (DEXIS LLC) sensor was ranked significantly better than those captured with the XIOS Plus (Sirona) and RVG 6100 (Carestream Dental LLC) sensors, and that the image captured with the RVG 6100 (Carestream Dental LLC) sensor was ranked significantly worse than the images captured with the Platinum (DEXIS LLC), CDR Elite (Schick Technologies) and XDR (Cyber Medical Imaging) sensors. All other comparisons showed non-significant differences.

Table 1.

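Frequency and cumulative proportion of each clinical image quality rank, by sensor (rank 1 = best, 8 = worst; the cumulative proportion is the proportion of the 17 evaluators who assigned that rank or a worse one)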

| Rank | Platinum–DEXIS frequency | Platinum cumulative proportion | EVA–ImageWorks frequency | EVA cumulative proportion | GXS-700–Gendex frequency | GXS-700 cumulative proportion | RVG 6100–Carestream (Kodak) frequency | RVG 6100 cumulative proportion |
|---|---|---|---|---|---|---|---|---|
| 1 | 3 | 1 | 0 | 1 | 2 | 1 | 0 | 1 |
| 2 | 5 | 0.824 | 0 | 1 | 3 | 0.882 | 0 | 1 |
| 3 | 4 | 0.529 | 0 | 1 | 2 | 0.706 | 3 | 1 |
| 4 | 1 | 0.294 | 0 | 1 | 0 | 0.588 | 5 | 0.824 |
| 5 | 2 | 0.235 | 0 | 1 | 3 | 0.588 | 1 | 0.529 |
| 6 | 1 | 0.118 | 0 | 1 | 3 | 0.412 | 4 | 0.471 |
| 7 | 1 | 0.059 | 0 | 1 | 4 | 0.235 | 4 | 0.235 |
| 8 | 0 | 0 | 17 | 1 | 0 | 0 | 0 | 0 |
| Total | 17 | | 17 | | 17 | | 17 | |

| Rank | ProSensor–Planmeca frequency | ProSensor cumulative proportion | CDR Elite–Schick frequency | CDR Elite cumulative proportion | XIOS Plus–Sirona frequency | XIOS Plus cumulative proportion | XDR–Cyber Medical Imaging frequency | XDR cumulative proportion |
|---|---|---|---|---|---|---|---|---|
| 1 | 3 | 1 | 2 | 1 | 2 | 1 | 5 | 1 |
| 2 | 2 | 0.824 | 3 | 0.882 | 0 | 0.882 | 4 | 0.706 |
| 3 | 3 | 0.706 | 1 | 0.706 | 2 | 0.882 | 2 | 0.471 |
| 4 | 0 | 0.529 | 5 | 0.647 | 5 | 0.765 | 1 | 0.353 |
| 5 | 2 | 0.529 | 3 | 0.353 | 4 | 0.471 | 2 | 0.294 |
| 6 | 4 | 0.412 | 3 | 0.176 | 2 | 0.235 | 0 | 0.176 |
| 7 | 3 | 0.176 | 0 | 0 | 2 | 0.118 | 3 | 0.176 |
| 8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Total | 17 | | 17 | | 17 | | 17 | |

XDR (Cyber Medical Imaging), RVG 6100 (Carestream Dental LLC), Platinum (DEXIS LLC), CDR Elite (Schick Technologies), ProSensor (Planmeca), EVA (ImageWorks), XIOS Plus (Sirona) and Gendex GXS-700 (Gendex Dental Systems).

Table 2.

Visual Grading Characteristics (VGC) results for the clinical images ranking

| | Platinum–DEXIS | GXS-700–Gendex | RVG 6100–Carestream (Kodak) | ProSensor–Planmeca | CDR Elite–Schick | XIOS Plus–Sirona |
|---|---|---|---|---|---|---|
| GXS-700–Gendex | 0.67 (0.094); 0.475–0.83 | | | | | |
| RVG 6100–Carestream (Kodak) | 0.8279 (0.0756); 0.642–0.937* | 0.595 (0.104); 0.389–0.778 | | | | |
| ProSensor–Planmeca | 0.6315 (0.097); 0.433–0.80 | 0.463 (0.101); 0.277–0.658 | 0.36 (0.100); 0.189–0.566 | | | |
| CDR Elite–Schick | 0.6041 (0.097); 0.408–0.776 | 0.373 (0.098); 0.202–0.574 | 0.267 (0.086); 0.128–0.456* | 0.41 (0.102); 0.232–0.615 | | |
| XIOS Plus–Sirona | 0.699 (0.0895); 0.507–0.847* | 0.46 (0.100); 0.278–0.657 | 0.38 (0.097); 0.214–0.581 | 0.509 (0.101); 0.318–0.699 | 0.598 (0.098); 0.403–0.772 | |
| XDR–Cyber Medical Imaging | 0.478 (0.103); 0.287–0.674 | 0.347 (0.096); 0.183–0.546 | 0.246 (0.094); 0.102–0.460* | 0.383 (0.098); 0.250–1.446 | 0.416 (0.101); 0.236–0.616 | 0.343 (0.096); 0.180–0.543 |

Each entry lists the area under the curve (AUC), followed by the standard deviation (SD) and then by the 95% confidence interval. *Indicates significant differences between pairs (P < 0.05). XDR (Cyber Medical Imaging), RVG 6100 (Carestream Dental LLC), Platinum (DEXIS LLC), CDR Elite (Schick Technologies), ProSensor (Planmeca), EVA (ImageWorks), XIOS Plus (Sirona) and Gendex GXS-700 (Gendex Dental Systems).

Figure 4.

Example of a Visual Grading Characteristics (VGC) curve, comparing the RVG 6100 (Carestream Dental LLC) sensor with the Platinum (DEXIS LLC) sensor. The empty small square boxes represent the operating points corresponding to the evaluators’ ranking of the sensor.

DISCUSSION

Intraoral solid-state sensors have been tested in different settings. However, these studies tested small numbers of sensors, and the resulting images were evaluated by relatively small numbers of clinicians13., 14., 15., 29.. This study has greater validity than previous studies, all of which used fewer types of sensors, fewer evaluators or both. Moreover, our study used images generated from a single human subject in the same area of the mouth, which enabled us to standardise the clinical conditions while testing a broad range of available products.

Because the correlation between physical measures obtained with phantoms and clinical image quality is poor, there is no justification for extrapolating such measurements to the clinical performance of a sensor15., 27., 30.. However, phantoms such as the aluminum block used in this project provide valuable information for quality control and standardisation31. In the present study, the clinicians were not limited to evaluating a single clinical parameter, such as the presence of caries or the quality of a restoration, but were instructed to rank the overall quality of each image. Subjective quality estimations can serve as the baseline for objective quality methodology as long as no model adequately describes a situation as complex as the quality of an X-ray image32. This approach is consistent with the definition of image quality as the degree to which an image satisfies the requirements imposed on it, and it is therefore relevant to the end user33. Image-quality evaluation is considered a high-level interpretative process of perception that cannot be dissected by analysing only 'low-level' physical image characteristics, such as sharpness, noise, brightness and contrast32., 34.. In this context, only a weak relationship has been reported between image fidelity (the ability to discriminate between two images based on their physical characteristics) and image quality (the preference for one image over another)35.

The VGC method used in this study is a relatively easy way to quantify subjective opinions and make them suitable for analysis, whilst providing the AUC as a single measure of the difference in image quality between the two modalities being compared26., 27.. The results show that the Platinum (DEXIS LLC) sensor image was ranked significantly better than the images from two other sensors, XIOS Plus (Sirona) and RVG 6100 (Carestream Dental LLC), but no significant differences were found between the Platinum (DEXIS LLC) sensor and the remaining sensors. For example, the image generated by the ProSensor (Planmeca) sensor was not statistically significantly different from the images generated by either the Platinum (DEXIS LLC) or the RVG 6100 (Carestream Dental LLC) sensor, even though these two differed significantly from each other. This finding indicates that even statistically significant differences may be so subtle that their clinical significance is unclear.

In this study, many parameters were standardised as far as possible: a single clinical subject was used, the image was captured from the same area of the mouth with each sensor, a positioning device aligned the X-ray machine with the sensor, and the evaluation conditions and the profile of the evaluators were standardised. The number of evaluators may nonetheless be a limitation of this study. Using a single subject and exposing only one area of the mouth not only contributed to standardisation but also enabled the authors to adhere to the ALARA (As Low As Reasonably Achievable) principle of minimising radiation exposure. Although ALARA principles were carefully observed and exposure was kept to an absolute minimum, researchers using this type of single-subject design should carefully weigh the benefits against the radiation hazards. However, this standardisation may itself be a limitation, because imaging results can be expected to be influenced by subject characteristics (size, age, ethnicity, systemic bone pathology, etc.) and by the area of the mouth imaged.

Another potential limitation is that the clinicians were not required to justify their ratings; thus, we could not analyse whether a single perceived factor had a major influence on image quality. Although a positioning device was used to align the X-ray source with the sensor, the subject may have bitten on it with a different force each time, so the alignment may have varied slightly. In addition, because not all sensors have the same dimensions or physical configuration, different bitewing images may depict more or less of the crestal bone. Using a standardised exposure from one machine may also have affected the image quality of some sensors, because the chosen parameters may not fall within the optimal dynamic range of those devices. Finally, intra-rater reliability over time was not assessed, so the raters' results might differ on retesting.

In conclusion, the null hypothesis was rejected because there were statistically significant differences between the images captured with different sensors. Clearly, the EVA (ImageWorks) sensor generated an image that was consistently rated worst by all clinicians, whereas, at the other end of the spectrum, no sensor could be identified as generating higher-quality images than the other sensors tested. Further research should be directed towards determining the clinical significance of the differences in image quality found in this study.

Acknowledgements

The study received no funding.

Conflicts of interest

The authors report no conflict of interest.

References

1. Ludlow J, Abreu M. Performance of film, desktop monitor and laptop displays in caries detection. Dentomaxillofac Radiol. 1999;28:26–30. doi: 10.1038/sj.dmfr.4600400.

2. Miles D, Razzano M. The future of digital imaging in dentistry. Dent Clin North Am. 2000;44:427–438.

3. Parks ET. Digital radiographic imaging: is the dental practice ready? J Am Dent Assoc. 2008;139:477–481. doi: 10.14219/jada.archive.2008.0191.

4. The Dental Market: Techniques, Equipment & Materials (HLC028D). BCC Research; 2012.

5. Brian J, Williamson G. Digital radiography in dentistry: a survey of Indiana dentists. Dentomaxillofac Radiol. 2007;36:18–23. doi: 10.1259/dmfr/18567861.

6. Wenzel A, Møystad A. Decision criteria and characteristics of Norwegian general dental practitioners selecting digital radiography. Dentomaxillofac Radiol. 2001;30:197–202. doi: 10.1038/sj.dmfr.4600612.

7. Van Der Stelt PF. Filmless imaging: the uses of digital radiography in dental practice. J Am Dent Assoc. 2005;136:1379–1387. doi: 10.14219/jada.archive.2005.0051.

8. Sanderink GC, Huiskens R, Van der Stelt PF, et al. Image quality of direct digital intraoral x-ray sensors in assessing root canal length: the RadioVisioGraphy, Visualix/VIXA, Sens-A-Ray, and Flash Dent systems compared with Ektaspeed films. Oral Surg Oral Med Oral Pathol. 1994;78:125–132. doi: 10.1016/0030-4220(94)90128-7.

9. Brito-Júnior M, Santos LAN, Baleeiro ÉN, et al. Linear measurements to determine working length of curved canals with fine files: conventional versus digital radiography. J Oral Sci. 2009;51:559–564. doi: 10.2334/josnusd.51.559.

10. Anbiaee N, Mohassel A, Imanimoghaddam M, et al. A comparison of the accuracy of digital and conventional radiography in the diagnosis of recurrent caries. J Contemp Dent Pract. 2010;11:25–32.

11. Ulusu T, Bodur H, Odabaş M. In vitro comparison of digital and conventional bitewing radiographs for the detection of approximal caries in primary teeth exposed and viewed by a new wireless handheld unit. Dentomaxillofac Radiol. 2010;39:91–94. doi: 10.1259/dmfr/15182314.

12. Wenzel A. A review of dentists' use of digital radiography and caries diagnosis with digital systems. Dentomaxillofac Radiol. 2006;35:307–314. doi: 10.1259/dmfr/64693712.

13. Bhaskaran V, Qualtrough A, Rushton V, et al. A laboratory comparison of three imaging systems for image quality and radiation exposure characteristics. Int Endod J. 2005;38:645–652. doi: 10.1111/j.1365-2591.2005.00998.x.

14. Kitagawa H, Farman A, Scheetz J, et al. Comparison of three intra-oral storage phosphor systems using subjective image quality. Dentomaxillofac Radiol. 2000;29:272–276. doi: 10.1038/sj/dmfr/4600532.

15. Borg E, Attaelmanan A, Gröndahl H. Subjective image quality of solid-state and photostimulable phosphor systems for digital intra-oral radiography. Dentomaxillofac Radiol. 2000;29:70–75. doi: 10.1038/sj/dmfr/4600501.

16. Welander U, McDavid WD, Higgins NM, et al. The effect of viewing conditions on the perceptibility of radiographic details. Oral Surg Oral Med Oral Pathol. 1983;56:651–654. doi: 10.1016/0030-4220(83)90084-1.

17. Yoshiura K, Kawazu T, Chikui T, et al. Assessment of image quality in dental radiography, part 1: phantom validity. Oral Surg Oral Med Oral Pathol Oral Radiol Endod. 1999;87:115–122. doi: 10.1016/s1079-2104(99)70304-5.

18. Yoshiura K, Kawazu T, Chikui T, et al. Assessment of image quality in dental radiography, part 2: optimum exposure conditions for detection of small mass changes in 6 intraoral radiography systems. Oral Surg Oral Med Oral Pathol Oral Radiol Endod. 1999;87:123–129. doi: 10.1016/s1079-2104(99)70305-7.

19. Yoshiura K, Stamatakis H, Shi X, et al. The perceptibility curve test applied to direct digital dental radiography. Dentomaxillofac Radiol. 1998;27:131–135. doi: 10.1038/sj/dmfr/4600332.

20. Kutcher MJ, Kalathingal S, Ludlow JB, et al. The effect of lighting conditions on caries interpretation with a laptop computer in a clinical setting. Oral Surg Oral Med Oral Pathol Oral Radiol Endod. 2006;102:537–543. doi: 10.1016/j.tripleo.2005.11.004.

21. Orafi I, Worthington H, Qualtrough A, et al. The impact of different viewing conditions on radiological file and working length measurement. Int Endod J. 2010;43:600–607. doi: 10.1111/j.1365-2591.2010.01744.x.

22. Cederberg R, Frederiksen N, Benson B, et al. Influence of the digital image display monitor on observer performance. Dentomaxillofac Radiol. 1999;28:203–207. doi: 10.1038/sj/dmfr/4600441.

23. Isidor S, Faaborg-Andersen M, Hintze H, et al. Effect of monitor display on detection of approximal caries lesions in digital radiographs. Dentomaxillofac Radiol. 2009;38:537–541. doi: 10.1259/dmfr/21071028.

24. Cederberg RA. Intraoral digital radiography: elements of effective imaging. Compend Contin Educ Dent. 2012;33:656.

25. Ludlow JB, Davies-Ludlow LE, White SC. Patient risk related to common dental radiographic examinations. J Am Dent Assoc. 2008;139:1237–1243. doi: 10.14219/jada.archive.2008.0339.

26. Båth M, Månsson LG. Visual grading characteristics (VGC) analysis: a non-parametric rank-invariant statistical method for image quality evaluation. Br J Radiol. 2007;80:169–176. doi: 10.1259/bjr/35012658.

27. Månsson L. Methods for the evaluation of image quality: a review. Radiat Prot Dosimetry. 2000;90:89–99.

28. Metz CE, Herman BA, Roe CA. Statistical comparison of two ROC-curve estimates obtained from partially-paired datasets. Med Decis Making. 1998;18:110–121. doi: 10.1177/0272989X9801800118.

29. Oliveira M, Ambrosano G, Almeida S, et al. Efficacy of several digital radiographic imaging systems for laboratory determination of endodontic file length. Int Endod J. 2011;44:469–473. doi: 10.1111/j.1365-2591.2011.01860.x.

30. Tapiovaara M. Review of relationships between physical measurements and user evaluation of image quality. Radiat Prot Dosimetry. 2008;129:244–248. doi: 10.1093/rpd/ncn009.

31. Busch H, Faulkner K. Image quality and dose management in digital radiography: a new paradigm for optimisation. Radiat Prot Dosimetry. 2005;117:143–147. doi: 10.1093/rpd/nci728.

32. Radun J, Leisti T, Virtanen T, et al. Evaluating the multivariate visual quality performance of image-processing components. ACM Trans Appl Percept. 2010;7:16.

33. Janssen T, Blommaert F. A computational approach to image quality. Displays. 2000;21:129–142.

34. Radun J, Leisti T, Häkkinen J, et al. Content and quality: interpretation-based estimation of image quality. ACM Trans Appl Percept. 2008;4:2.

35. Silverstein D, Farrell J. The relationship between image fidelity and image quality. In: Proceedings of the International Conference on Image Processing, 1996. IEEE; 1996. p. 881–884.