Abstract

Predicting another person’s emotional response to a situation is an important component of emotion concept understanding. However, little is known about the developmental origins of this ability. The current studies examine whether 10-month-olds expect facial configurations/vocalizations associated with negative emotions (e.g., anger, disgust) to be displayed after specific eliciting events. In Experiment 1, 10-month-olds (N = 60) were familiarized to an Emoter interacting with objects in a positive event (Toy Given) and a negative event (Toy Taken). Infants expected the Emoter to display a facial configuration associated with anger after the negative event, but did not expect the Emoter to display a facial configuration associated with happiness after the positive event. In Experiment 2, 10- and 14-month-olds (N = 120) expected the Emoter to display a facial configuration associated with anger, rather than one associated with disgust, after an “anger-eliciting” event (Toy Taken). However, only the 14-month-olds provided some evidence of linking a facial configuration associated with disgust, rather than one associated with anger, to a “disgust-eliciting event” (New Food). Experiment 3 found that 10-month-olds (N = 60) did not expect an Emoter to display a facial configuration associated with anger after an “anger-eliciting” event involving an Unmet Goal. Together, these experiments suggest that infants start to refine broad concepts of affect into more precise emotion concepts over the first 2 years of life, before learning emotion language. These findings are a first step toward addressing a long-standing theoretical debate in affective science about the nature of early emotion concepts.

Electronic supplementary material

The online version of this article (10.1007/s42761-020-00005-x) contains supplementary material, which is available to authorized users.

Keywords: Emotion concepts, Emotion understanding, Infancy, Event-emotion matching

Emotions are complex social phenomena, expressed in variable ways across different situations. Despite this variability, humans perceive emotions in terms of categories. A conceptual emotion category, or “emotion concept,” is a group of expressive behaviors, eliciting events, behavioral consequences, labels, and so on, that are related to a specific emotion (Fehr & Russell, 1984; Widen & Russell, 2011). For example, receiving a desired gift may elicit a wide smile, which is labeled as “happiness.” There is considerable disagreement, however, as to the nature and acquisition of emotion concepts in infancy. Classical emotion theories suggest that infants have innate or early emerging concepts for certain “basic” emotions (i.e., happiness, sadness, anger, fear, and disgust; Ekman, 1994; Izard, 1994). In contrast, constructionist theories argue that preverbal infants have broad concepts of affect (i.e., positive vs. negative valence) that gradually narrow into more specific emotion concepts (e.g., anger vs. disgust) over the first decade of life (Widen, 2013; Widen & Russell, 2008). This narrowing is thought to occur alongside the acquisition of emotion labels (e.g., “happy”), beginning at around age 2 or 3 (Barrett, 2017; Barrett, Lindquist, & Gendron, 2007; Lindquist & Gendron, 2013). To date, it is unclear which theory best accounts for emotion concept development in infancy. The current studies provide new insights on this issue by examining (a) how 10-month-olds associate different negative facial configurations and vocalizations with eliciting events and (b) potential developmental changes in this ability between 10 and 14 months of age.

Extant Research on Infants’ Emotion Concepts

Studies on infants’ emotion concepts have largely examined infants’ ability to perceive differences between facial configurations associated with different emotions (for a review, see Ruba & Repacholi, 2019). These studies suggest that, by 5 to 7 months of age, infants can perceptually discriminate between and categorize facial configurations associated with positive and negative emotions (e.g., happy vs. fear; Bornstein & Arterberry, 2003; Geangu et al., 2016; Kestenbaum & Nelson, 1990; Ludemann, 1991; Krol, Monakhov et al., 2015; Safar & Moulson, 2017) as well as facial configurations associated with different negative emotions (e.g., anger vs. disgust; Ruba et al., 2017; White et al., 2019; Xie et al., 2019). However, these findings cannot speak to the nature of infants’ emotion concepts, since infants may discriminate and categorize these facial configurations based on salient facial features (e.g., amount of teeth shown), rather than on affective meaning (Barrett, 2017; Caron, Caron, & Myers, 1985; Ruba, Meltzoff, & Repacholi, 2020). Ultimately, it is unclear from these studies whether preverbal infants perceive facial configurations only in terms of (a) positive and negative valence (in line with a constructionist view) or (b) the specific emotions with which they are associated (in line with a classical view). In other words, these studies cannot determine whether infants perceive emotions in terms of broad concepts of affect, or more narrow concepts of specific emotions.

With this in mind, researchers have recently used the violation-of-expectation (VOE) paradigm (Baillargeon, Spelke, & Wasserman, 1985) to further explore infants’ emotion concepts. These studies have focused on event-emotion matching (the ability to associate facial configurations with eliciting events), which is thought to reflect infants’ conceptual understanding of emotional causes (Chiarella & Poulin-Dubois, 2013; Hepach & Westermann, 2013). In these studies, infants are presented with a video of an eliciting event (e.g., receiving a gift) followed by an Emoter displaying a “congruent” (e.g., happiness) or “incongruent” facial configuration (e.g., sadness). Infants’ looking time (or pupil dilation, see Hepach & Westermann, 2013) to the two facial configurations is recorded, under the premise that infants tend to look longer at events and stimuli that are novel or unexpected (Aslin, 2007; Oakes, 2010). For example, after viewing a specific event (e.g., receiving a gift), infants might attend longer to the “incongruent” facial configuration (e.g., sadness) compared to the “congruent” facial configuration (e.g., happiness). If so, it is assumed that infants have expectations about the types of emotional responses that follow specific eliciting events. These VOE studies have the potential to provide clearer insights into whether infants perceive emotions in terms of broad concepts of affect or more narrow concepts of specific emotions.

Using this paradigm, an emerging literature suggests that, between 12 and 18 months of age, infants associate different facial configurations and/or vocalizations with specific eliciting events. Specifically, it has been demonstrated that infants associate “positive” facial configurations (e.g., happiness) with positive events (e.g., receiving a desired object) and “negative” facial configurations (e.g., anger) with negative events (e.g., failing to meet a goal) (Chiarella & Poulin-Dubois, 2013; Hepach & Westermann, 2013; Reschke, Walle, Flom, & Guenther, 2017). However, two studies suggest that infants’ emotion concepts may extend beyond positive and negative valence. For example, Ruba, Meltzoff, and Repacholi (2019) found that 14- to 18-month-olds expected an Emoter to display facial configurations/vocalizations associated with (a) disgust, rather than anger or fear, after tasting a novel food, and (b) anger, rather than disgust or fear, after failing to complete a goal. In addition, Wu, Muentener, and Schulz (2017) demonstrated that 12- to 23-month-olds associate different “positive,” within-valence vocalizations (e.g., “sympathy,” “excitement”) with specific events (e.g., a crying infant, a light-up toy). Together, these studies suggest that infants in the second year of life may not perceive emotions exclusively in terms of broad concepts of affect (for similar findings in a social referencing paradigm, see Walle, Reschke, Camras, & Campos, 2017).

However, it is possible that infants in the second year of life have acquired sufficient social, environmental, and linguistic experiences to begin disentangling these emotions. For example, a significant minority of 14- to 18-month-olds in Ruba et al. (2019) were reported to “understand” some emotion labels (e.g., “anger”). It is important to examine a younger age in order to determine if infants with fewer experiences with language and others’ emotions are also able to make these distinctions. These data would provide insights into whether emotion concepts are early emerging or acquired later in infancy. To date, only two studies have examined whether younger infants (in the first year of life) have expectations about the types of facial configurations/vocalizations associated with events. Skerry and Spelke (2014) familiarized 10-month-olds with an agent (a geometric circle) that moved around barriers in order to reach an object. If the agent reached the object, infants looked longer when the agent posed positive affect (smiling, giggling, and bouncing) compared to negative affect (frowning, crying, and slowly rocking side-to-side). However, if the agent failed to reach the object, there were no differences in infants’ looking time to the two responses. Similarly, Hepach and Westermann (2013) showed infants a human Emoter displaying either a positive or negative facial configuration (i.e., happy or anger) while engaging in a positive action (i.e., patting a stuffed animal) or a negative action (i.e., hitting a stuffed animal). Infants’ pupil dilation suggested that 10-month-olds expected a positive action to be linked with the positive facial configuration, but they did not expect a negative action to be linked to the negative facial configuration.

Current Studies

Together, these two studies suggest that, prior to 12 months of age, infants do not expect negative facial configurations to follow negative events. Thus, in contrast to both classical and constructionist theories, it is possible that infants in the first year of life may not have emotion concepts based on valence. The current studies provide a new examination of this topic. Experiment 1 examined whether 10-month-olds associate positive and negative facial configurations/vocalizations (i.e., happiness vs. anger) with positive and negative events, respectively. Experiments 2 and 3 further explored (a) whether 10-month-olds could also link different negative facial configurations/vocalizations (i.e., anger vs. disgust) to specific negative events, and (b) potential changes in this ability from 10 to 14 months of age. These experiments differ from previous research (Hepach & Westermann, 2013; Skerry & Spelke, 2014) by using (a) both facial configurations and vocalizations, displayed by human Emoters, and (b) interpersonal events, rather than intrapersonal events, which may be easier for infants to understand (Reschke et al., 2017; Walle & Campos, 2012).

Experiment 1

Experiment 1 examined whether 10-month-olds expect an Emoter to display a negative facial configuration/vocalization (anger) after a “negative” event (Toy Taken) and a positive facial configuration/vocalization (happy) after a “positive” event (Toy Given). These two emotions were selected because they differ in terms of valence but are both high arousal (Russell, 1980). In the Toy Taken event, an Actor took a desired object away from the Emoter. In the Toy Given event, the Emoter received a desired object from an Actor. Prosocial “giving” and antisocial “taking” actions were chosen since infants in the first year of life discriminate and differentially respond to these actions (Hamlin & Wynn, 2011; Hamlin, Wynn, Bloom, & Mahajan, 2011).

After each event, the Emoter produced either a “congruent” or an “incongruent” facial configuration and vocalization. For example, after viewing the Toy Taken event, infants in the anger condition saw a congruent response at test, while infants in the happy condition saw an incongruent response. Infants’ looking time to the facial configurations was recorded for each event, with the assumption that infants would attend longer to the incongruent configurations if they viewed this response as “unexpected” for the given event. Thus, we hypothesized that infants would attend significantly longer to: (a) the facial configuration associated with anger (compared to the one associated with happiness) following the Toy Given event, and (b) the facial configuration associated with happiness (compared to the one associated with anger) following the Toy Taken event.

Methods

Participants

A power analysis indicated that a sample size of 60 infants (30 per emotion condition) would be sufficient to detect reliable differences, assuming a small-to-medium effect size (f = 0.20) at the 0.05 alpha level with a power of 0.80. The effect size was based on previous research using this paradigm with older infants (Ruba et al., 2019). This sample size was preselected as the stopping rule for the study. The final sample consisted of 60 (36 female) 10-month-old infants (M = 10.01 months, SD = 0.23, range = 9.57–10.45). The study was conducted following APA ethical standards and with approval of the Institutional Review Board (IRB) at the University of Washington (Approval Number 50377, Protocol Title: “Emotion Categories Study”). Infants were recruited from a university database of parents who expressed interest in participating in research studies. All infants were healthy, full-term, and of normal birth weight. Parents identified their infants as Caucasian (77%, n = 46), multiracial (17%, n = 10), or Asian (7%, n = 4). Approximately 8% of infants (n = 5) were identified as Hispanic or Latino. There was no attrition in this study. According to parental report, few infants “understood” or “said” the words “happy” or “anger/mad” (Table 1). Thus, most infants at this age did not yet have these emotion words in their vocabularies.

Table 1.

Total number of infants (and proportion of infants in sample) who were reported to have the following emotion labels in their receptive and productive vocabularies

| Vocabulary and label | Experiment 1 (10 mos) | Experiment 2 (10 mos) | Experiment 2 (14 mos) | Experiment 3 (10 mos) |
|---|---|---|---|---|
| Receptive | | | | |
| “Happy” | 13 (0.22) | N/A | N/A | N/A |
| “Anger/mad” | 2 (0.03) | 4 (0.07) | 16 (0.27) | 8 (0.13) |
| “Disgusted” | N/A | 1 (0.02) | 2 (0.03) | 4 (0.07) |
| Productive | | | | |
| “Happy” | 0 (0.00) | N/A | N/A | N/A |
| “Anger/mad” | 0 (0.00) | 0 (0.00) | 0 (0.00) | 0 (0.00) |
| “Disgusted” | N/A | 0 (0.00) | 0 (0.00) | 0 (0.00) |

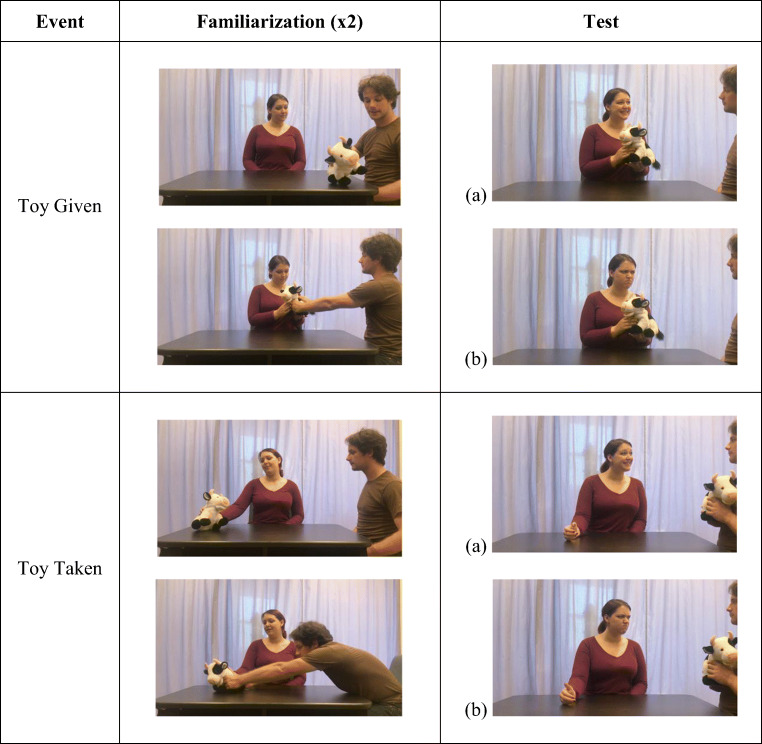

Design

As in Ruba et al. (2019), a mixed design was used, with emotion condition as a between-subjects variable and event as a within-subjects variable. Equal numbers of male and female infants were randomly assigned to one of two emotion conditions: happy or anger (n = 30 per condition). Each infant watched two different videotaped events (Toy Taken and Toy Given) of an Emoter interacting with another person and an object. Infants were first presented with two familiarization trials of one of these events (e.g., Toy Taken), followed by a test trial in which the Emoter produced a facial configuration/vocalization (e.g., anger in the anger condition). Total looking time to the facial configuration was measured. Following this test trial, infants were presented with two familiarization trials of the other event (e.g., Toy Given), followed by a test trial of the same facial configuration/vocalization (e.g., anger; Fig. 1). The order in which the two events were presented was counterbalanced across participants.
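The assignment scheme described above can be illustrated with a minimal sketch. It assumes that sex was balanced across the two emotion conditions and that event order was fully crossed with condition; the function name, the cell labels, and the infant identifiers are hypothetical and are not taken from the study materials.

```python
import random
from itertools import product
from typing import Dict, List, Tuple

CONDITIONS = ("happy", "anger")                    # between-subjects factor
ORDERS = ("toy_taken_first", "toy_given_first")    # counterbalanced event order


def assign_infants(ids_by_sex: Dict[str, List[str]],
                   seed: int = 0) -> Dict[str, Tuple[str, str]]:
    """Randomly assign infants to (condition, order) cells, balanced by sex.

    Assumption: within each sex, infants are shuffled and then dealt
    round-robin into the four condition x order cells.
    """
    rng = random.Random(seed)
    cells = list(product(CONDITIONS, ORDERS))
    assignment: Dict[str, Tuple[str, str]] = {}
    for ids in ids_by_sex.values():
        shuffled = list(ids)
        rng.shuffle(shuffled)
        for i, infant_id in enumerate(shuffled):
            assignment[infant_id] = cells[i % len(cells)]
    return assignment


# Hypothetical identifiers matching the reported sample (36 female, 24 male).
sample = {
    "female": [f"F{i:02d}" for i in range(36)],
    "male": [f"M{i:02d}" for i in range(24)],
}
assignment = assign_infants(sample, seed=1)
```

With 36 female and 24 male infants, this scheme yields 30 infants per emotion condition (18 female and 12 male in each) and 30 infants per event order.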

Fig. 1.

Experiment 1 design. Test trials are depicted for each condition. (a) Happy. (b) Anger

Stimuli

Familiarization Trials

The familiarization trials were two videotaped events in which two adults (an “Actor” and an “Emoter”) interacted with objects (described below). The Actor and Emoter were pleasantly neutral in their face and voice. Each event was approximately 20 s in length (Fig. 1).

In the Toy Given event, the Actor played with a stuffed toy cow on the table, while the Emoter watched silently. The Emoter verbally requested the toy and the Actor complied by placing the toy in the Emoter’s hands. The event ended with the Actor looking at the Emoter, who now had the toy in her hands. In the Toy Taken event, the Emoter played with the toy on the table, while the Actor watched silently. The Actor verbally requested the toy but the Emoter continued to play with it. Then, the Actor reached across the table and grabbed the toy out of the Emoter’s hands. The event ended with the Emoter looking at the Actor, who now had the toy in his hands.

Test Trials

The test trials were videotapes of the Emoter’s response to each event, which consisted of a facial configuration and corresponding vocalization. The onset of the response occurred after the Emoter was given the toy (Toy Given) or after the Actor took the toy away (Toy Taken). Facial configurations followed the criteria outlined by Ekman and Friesen (1975). Happiness was conveyed by a wide toothy smile, and anger was conveyed with furrowed eyebrows and a tight mouth. Vocalizations consisted of “That’s blicketing. That’s so blicketing,” spoken in either a “happy” (i.e., pleasant) or “angry” (i.e., sharp) tone of voice. Nonsense words were used to minimize infants’ reliance on the lexical content of the vocalization.

During the response, the Emoter maintained her gaze in the direction of the Actor. These test videos were cropped so that the Emoter was centered in the frame. After the Emoter finished her script (approximately 5 s), the video was frozen to provide a still-frame of the Emoter’s facial configuration. This still-frame was shown for a maximum of 60 s, during which infants’ looking behavior was recorded (see “Scoring”). Full videos can be accessed here: https://osf.io/vsxm8/?view_only=2e149765de734f3bb7884369e01c9b9b. For validation information, see the Supplementary Materials.

Apparatus

Each infant was tested in a small room, divided by an opaque curtain into two sections. In one half of the room, infants sat on their parent’s lap approximately 60 cm away from a 48-cm color monitor with audio speakers. A camera was located approximately 10 cm above the monitor, focused on the infant’s face to capture their looking behavior. In the other half of the room, behind the curtain, the experimenter operated a laptop computer connected to the test display monitor. A secondary monitor displayed a live feed of the camera focused on the infant’s face, from which the experimenter observed the infant’s looking behavior. Habit 2 software (Oakes, Sperka, DeBolt, & Cantrell, 2019) was used to present the stimuli, record infants’ looking times, and calculate the looking time criteria (described below).

Procedure

Infants were seated on their parent’s lap in the testing room. During the session, parents were instructed to look down and not speak to their infant or point to the screen. All parents complied with this request. Before each familiarization and test trial, an “attention-getter” (a blue flashing, chiming circle) attracted infants’ attention to the monitor. The experimenter began each trial when the infant was looking at the monitor.

Infants were initially presented with two familiarization trials of one event (e.g., Toy Given). After these two event trials, infants were shown the Emoter’s emotional response (e.g., “happiness”), and infants’ looking to the still frame of the Emoter’s facial configuration was recorded. For a look to be counted, infants had to look continuously for at least 2 s. The test trial played until infants looked away for more than two continuous seconds or until the 60-s trial ended. Following the first test trial, infants were presented with two familiarization trials of the second event (e.g., Toy Taken), followed by a test trial of the same emotional response (e.g., “happiness”; see Fig. 1). The order in which the two events were presented was counterbalanced across participants.
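The looking-time criteria above (a 2-s minimum look, a 2-s look-away cutoff, and a 60-s ceiling) can be sketched as a small function. This is an illustration under stated assumptions, not the Habit 2 implementation: the segment format is hypothetical, looks shorter than 2 s are assumed to be ignored, and the look-away cutoff is assumed to apply only after the first counted look.

```python
from typing import Iterable, Tuple

MIN_LOOK_S = 2.0       # a look must last at least 2 s to be counted
MAX_LOOK_AWAY_S = 2.0  # a look-away longer than 2 s ends the trial
MAX_TRIAL_S = 60.0     # the still frame is shown for at most 60 s


def total_looking_time(segments: Iterable[Tuple[bool, float]]) -> float:
    """Sum counted looking time (in seconds) for one test trial.

    `segments` is a hypothetical coding format: consecutive
    (is_looking, duration_in_seconds) pairs starting at still-frame onset.
    """
    elapsed = 0.0
    total = 0.0
    has_counted_look = False
    for is_looking, duration in segments:
        # Clip the segment so the trial never exceeds the 60-s ceiling.
        duration = min(duration, MAX_TRIAL_S - elapsed)
        if duration <= 0:
            break
        elapsed += duration
        if is_looking:
            if duration >= MIN_LOOK_S:
                total += duration
                has_counted_look = True
        elif has_counted_look and duration > MAX_LOOK_AWAY_S:
            # Assumed rule: a long look-away after a counted look ends the trial.
            break
    return total


# Two counted looks (5 s and 3 s), then a 2.5-s look-away ends the trial.
example = [(True, 5.0), (False, 1.0), (True, 3.0), (False, 2.5), (True, 10.0)]
looking_time = total_looking_time(example)
```

In this example the final 10-s look is never reached, because the preceding look-away exceeds the 2-s cutoff.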

Prior work with this paradigm has used a 45-s response window (Reschke et al., 2017; Skerry & Spelke, 2014). However, pilot testing suggested that a longer response window was necessary for this study, as several infants attended for the entire 45 s. We believe this was due to the dynamic nature of the events (i.e., the Emoter was very engaging and spoke directly to the infants). For this reason, we also included two familiarization trials to further acclimate infants to the task. Because prior work has not included a familiarization period, the number of familiarization trials was kept low.

Scoring

Infants’ looking behavior was live-coded by a trained research assistant. For each event, this coding began at the end of the Emoter’s vocalization. Because the online coder was aware of the emotion the infant was currently viewing, a second trained research assistant recoded 100% of the videotapes offline, without sound. This second coder was kept fully blind as to the participant’s experimental condition. Agreement between the live and naïve coders was excellent, r = 0.99, p < 0.001. Identical results were obtained using the online and offline coding (analyses with the offline reliability coding are reported below).
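Inter-coder agreement of this kind is a Pearson correlation between the two coders’ looking times for the same trials. The sketch below shows the computation; the looking times are invented for illustration and are not data from the study.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equally long sequences of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    # Raises ZeroDivisionError if either coder's scores have no variance.
    return cov / math.sqrt(ss_x * ss_y)


# Hypothetical looking times (s) for five test trials, coded live and offline.
live_coding = [33.5, 24.9, 60.0, 12.3, 41.0]
offline_coding = [33.1, 25.4, 60.0, 12.0, 40.2]
agreement = pearson_r(live_coding, offline_coding)
```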

Analysis Plan and Hypotheses

Initially, a 2 (event) × 2 (emotion) × 2 (order) ANOVA was conducted. However, in line with previous research (Ruba et al., 2019), we also planned between-subjects analyses for each event. If infants have expectations about the types of emotional responses likely to follow specific events, they should look longer at the facial configuration when it is “incongruent” with the preceding event. Thus, after viewing the Toy Taken event, infants should look longer at the facial configuration associated with happiness, relative to the facial configuration associated with anger. On the other hand, after viewing the Toy Given event, infants should look longer to the facial configuration associated with anger relative to the facial configuration associated with happiness. All analyses were conducted in R (R Core Team, 2014), and figures were produced using the package ggplot2 (Wickham, 2009). Analysis code can be found here: https://osf.io/vsxm8/?view_only=2e149765de734f3bb7884369e01c9b9b.

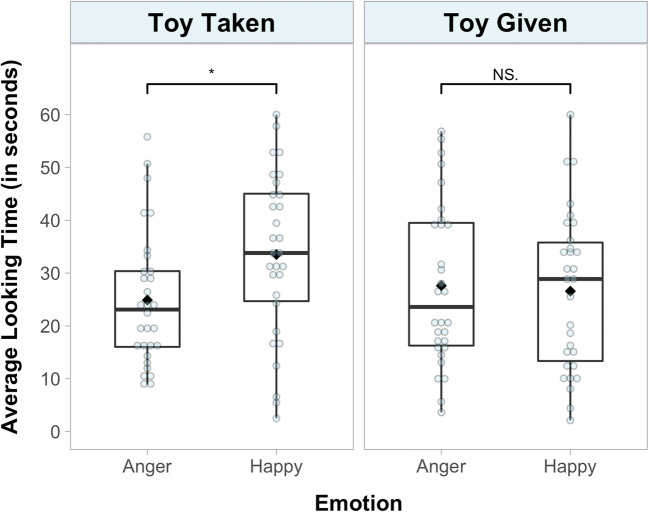

Results

All infants, across both emotion conditions, attended to the entirety of the familiarization trials. A 2 (event: toy taken/toy given) × 2 (emotion: happy/anger) × 2 (order: toy-taken first/toy-given first) mixed-design analysis of variance (ANOVA) was conducted for infants’ looking times to the test trials (Fig. 2). The event × emotion interaction was significant, F(1, 56) = 6.15, p = 0.016, ηp² = 0.10. Between-subjects planned comparisons were then conducted for each event. For the Toy Taken event, infants attended significantly longer to the facial configuration in the happy condition (M = 33.52 s, SD = 15.59) than the anger condition (M = 24.88 s, SD = 12.71), t(58) = 2.35, p = 0.022, d = 0.61. However, contrary to our predictions, for the Toy Given event, infants did not attend significantly longer to the facial configuration in the anger condition (M = 27.61 s, SD = 15.34) compared to the happy condition (M = 26.57 s, SD = 15.13), t(58) = 0.26, p > 0.25, d = 0.07. There were no other significant main effects or interactions: order, F(1, 56) = 3.33, p = 0.073, ηp² = 0.06; condition, F(1, 56) = 1.43, p = 0.24, ηp² = 0.02; order × condition, F(1, 56) = 1.18, p > 0.25, ηp² = 0.02; event, F(1, 56) = 1.17, p > 0.25, ηp² = 0.02; order × event, F(1, 56) = 3.12, p = 0.083, ηp² = 0.05; order × condition × event, F(1, 56) = 0.36, p > 0.25, ηp² = 0.01 (Table 2).
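The reported test statistics and effect sizes can be recomputed from the summary statistics alone. The sketch below assumes a pooled-variance (Student) independent-samples t-test, which is consistent with the reported 58 degrees of freedom for two groups of 30, and the standard conversion from F to partial eta squared; the function names are illustrative.

```python
import math


def pooled_sd(sd1: float, sd2: float, n1: int, n2: int) -> float:
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def cohens_d(m1: float, m2: float, sd1: float, sd2: float, n1: int, n2: int) -> float:
    """Standardized mean difference using the pooled SD."""
    return (m1 - m2) / pooled_sd(sd1, sd2, n1, n2)


def independent_t(m1: float, m2: float, sd1: float, sd2: float, n1: int, n2: int) -> float:
    """Student t statistic (equal variances assumed), df = n1 + n2 - 2."""
    se = pooled_sd(sd1, sd2, n1, n2) * math.sqrt(1 / n1 + 1 / n2)
    return (m1 - m2) / se


def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


# Toy Taken event: happy condition vs. anger condition (n = 30 each).
t_taken = independent_t(33.52, 24.88, 15.59, 12.71, 30, 30)
d_taken = cohens_d(33.52, 24.88, 15.59, 12.71, 30, 30)

# Toy Given event: anger condition vs. happy condition (n = 30 each).
t_given = independent_t(27.61, 26.57, 15.34, 15.13, 30, 30)
d_given = cohens_d(27.61, 26.57, 15.34, 15.13, 30, 30)

# Event x emotion interaction, F(1, 56) = 6.15.
eta_interaction = partial_eta_squared(6.15, 1, 56)
```

Under these assumptions, the recomputed values agree with the reported t(58) = 2.35, d = 0.61, t(58) = 0.26, d = 0.07, and ηp² = 0.10 after rounding to two decimals.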

Fig. 2.

Experiment 1. Infants’ looking time during the test trials, *p < 0.05 for pairwise comparisons between events

Table 2.

Inferential statistics for 2 (emotion) × 2 (event) × 2 (order) × 2 (age) ANOVA for Experiment 2

| Effect | df | MSE | F | ηp² | p |
|---|---|---|---|---|---|
| Order | 1, 112 | 334 | 3.12 | 0.03 | 0.080 |
| Emotion | 1, 112 | 334 | 0.98 | 0.01 | 0.32 |
| Age | 1, 112 | 334 | 4.13 | 0.04 | 0.045 |
| Order × emotion | 1, 112 | 334 | 0.05 | 0.00 | 0.83 |
| Order × age | 1, 112 | 334 | 0.01 | 0.00 | 0.93 |
| Emotion × age | 1, 112 | 334 | 0.20 | 0.00 | 0.65 |
| Order × emotion × age | 1, 112 | 334 | 3.11 | 0.03 | 0.081 |
| Event | 1, 112 | 178 | 0.00 | 0.00 | 0.97 |
| Order × event | 1, 112 | 178 | 3.57 | 0.03 | 0.062 |
| Emotion × event | 1, 112 | 178 | 7.77 | 0.06 | 0.006 |
| Age × event | 1, 112 | 178 | 0.94 | 0.01 | 0.34 |
| Order × emotion × event | 1, 112 | 178 | 0.31 | 0.00 | 0.58 |
| Order × age × event | 1, 112 | 178 | 0.05 | 0.00 | 0.82 |
| Emotion × age × event | 1, 112 | 178 | 1.11 | 0.01 | 0.29 |
| Order × emotion × age × event | 1, 112 | 178 | 1.04 | 0.01 | 0.31 |

Discussion

These findings are the first to suggest that infants in the first year of life have expectations about the types of emotional responses that follow negative events. Specifically, 10-month-olds expected that the Emoter would display a facial configuration associated with anger, rather than one associated with happiness, after a person took away a desired toy. In previous studies with different events (failed goal; hitting), infants did not associate negative facial configurations with negative events at this age (Hepach & Westermann, 2013; Skerry & Spelke, 2014). However, in the current study, we used a negative event that may have been less ambiguous and more familiar for infants. In particular, we chose a negative “taking” event because (a) infants have self-experience with taking actions, and (b) infants at this age differentiate between “taking” and “giving” actions (Hamlin & Wynn, 2011; Hamlin et al., 2011). In addition, our event was interpersonal, as opposed to intrapersonal. It has been suggested that it is easier for infants to understand emotions when they are expressed in a social context (Reschke et al., 2017; Walle & Campos, 2012). Finally, it is also possible that the facial configurations themselves were easier for infants to process. In the current study, the Emoter was human (rather than a geometric shape; Skerry & Spelke, 2014) and her emotional response was multi-modal (a facial configuration and vocalization). Consequently, this may have provided 10-month-olds with a richer set of cues with which to interpret the Emoter’s affect.

Unexpectedly, and in contrast to previous research (Hepach & Westermann, 2013; Skerry & Spelke, 2014), infants in the current study did not seem to have expectations about the types of emotional responses that follow positive events. Specifically, 10-month-olds did not expect the Emoter to display a facial configuration associated with happiness, rather than one associated with anger, after receiving a desired toy. One possible explanation is that the Toy Given event was more difficult to process compared to the positive events used in previous studies with this age group (completed goal; patting toy). In the current study, the Emoter verbally requested the toy (i.e., “Can I have the cow?”) without any accompanying gesture (i.e., reaching out her hands). As a result, 10-month-olds may not have understood that the Emoter desired the toy. When viewed in this way, negative affect could be an appropriate response to receiving a (potentially undesired) object from another person. Prior work also supports this interpretation. For example, 12.5-month-olds do not expect an Agent to choose the same toy that they previously received from another person (Eason, Doctor, Chang, Kushnir, & Sommerville, 2018). Thus, young infants may not view “receiving” actions as a strong indicator of a person’s future object-directed behavior or emotional responses. Future research could explore whether adding nonverbal cues (e.g., a reaching gesture) to the Toy Given event influences infants’ expectations.

Although this study suggests that infants in the first year of life expect a negative emotional response to follow a negative event, it is unable to determine whether infants at this age perceive emotions in terms of more specific emotion concepts (e.g., anger vs. disgust). As with virtually all studies on infants’ emotion concepts (see Ruba & Repacholi, 2019), we only compared emotions across the dimension of valence. Therefore, it is impossible to determine whether infants perceived the emotions in terms of broad concepts of affect (i.e., positive vs. negative) or more specific emotion concepts (i.e., happiness vs. anger). To address this question, infants’ perception of emotions within a dimension of valence and arousal would need to be compared. If infants have narrower emotion concepts, they should expect a specific high arousal, negative facial configuration/vocalization (e.g., “disgust”) to be associated with a particular negative event (e.g., tasting unpleasant food) compared to another high arousal, negative facial configuration/vocalization (e.g., “anger”).

Experiment 2

Experiment 2 examined (a) whether 10-month-olds associate specific negative facial configurations/vocalizations with different negative events, and (b) whether this ability changes between 10 and 14 months of age. Specifically, infants were tested on their ability to link facial configurations/vocalizations associated with anger to an “anger-eliciting” event (Toy Taken) and facial configurations/vocalizations associated with disgust to a “disgust-eliciting” event (New Food). Anger and disgust were selected because they are both high arousal, negative emotions (Russell, 1980). As in Experiment 1, an Actor took a desired object away from the Emoter in the Toy Taken event. In the New Food event, an Actor fed the Emoter a bite of food.

After each event, infants saw the Emoter produce either a “congruent” or an “incongruent” emotional response. For example, after viewing the New Food event, infants in the disgust condition saw a congruent response, while infants in the anger condition saw an incongruent response. If infants view specific facial configurations/vocalizations as “unexpected” after an event, then they should attend significantly longer to: (a) a facial configuration associated with disgust (compared to one associated with anger) following the Toy Taken event, and (b) a facial configuration associated with anger (compared to one associated with disgust) following the New Food event. Consistent with prior research (Ruba et al., 2019), we predicted that 14-month-olds would have expectations about the specific types of negative emotional responses that follow certain events. We did not have specific hypotheses for the 10-month-olds.

Methods

Participants

The final sample consisted of 60 (28 female) 10-month-old infants (M = 10.06 months, SD = 0.19, range = 9.70–10.42) and 60 (38 female) 14-month-old infants (M = 14.05 months, SD = 0.23, range = 13.51–14.43). Parents identified their infants as Caucasian (78%, n = 93), multiracial (19%, n = 23), Asian (2%, n = 3), or African American (1%, n = 1). Approximately 5% of infants (n = 6) were identified as Hispanic or Latino. Participants were recruited in the same manner as Experiment 1. Four additional infants were tested but excluded from the final sample for procedural error (n = 2; one 10-month-old) or failure to finish the study (n = 2; one 10-month-old). According to parental report, few infants “understood” or “said” the words “anger/mad” or “disgusted” (Table 1).

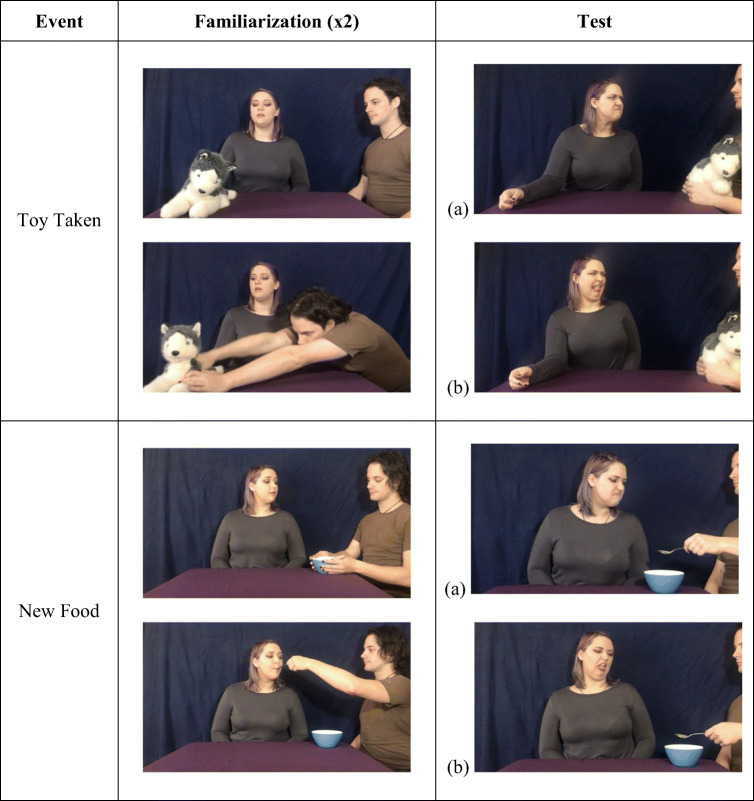

Design

The design of the study was similar to Experiment 1. Equal numbers of male and female infants from each age group were randomly assigned to one of two conditions: anger or disgust (n = 30 per age/condition). Each infant watched two different videotaped events of an Emoter interacting with another person and an object: the Toy Taken event and the New Food event (order was counterbalanced).

Stimuli

Familiarization Trials

The familiarization trials involved two videotaped events of two adults (an “Actor” and an “Emoter”) interacting with objects. The same adults from Experiment 1 were used for these videos. Each event was approximately 15 s in length (see Fig. 3). In the New Food event, the Actor placed a spoon and a bowl containing some nondescript food on the table. The Emoter inquired about the food, and the Actor used the spoon to feed the Emoter a bite. The event ended when the spoon was in the Emoter’s mouth. This event was similar, but not identical, to the New Food event used in Ruba et al. (2019), as different Actors were used. The Toy Taken event was similar to Experiment 1 with two changes: (a) a different toy was used (i.e., a stuffed dog) and (b) the Actor reached out his arms when verbally requesting the toy (i.e., to further indicate that he desired the toy).

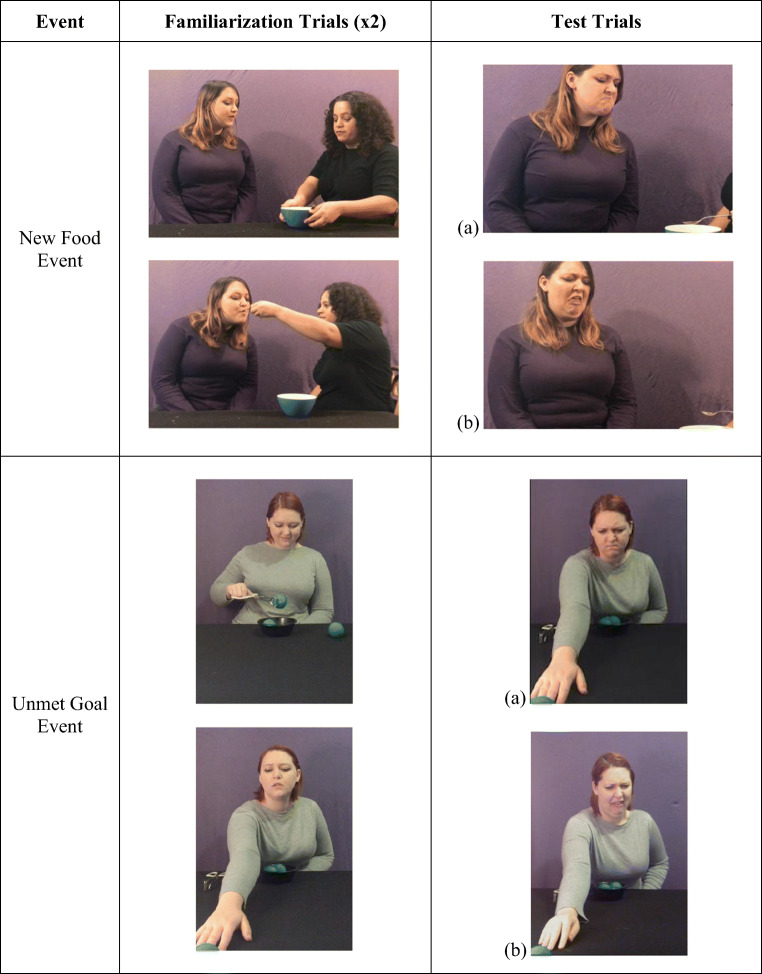

Fig. 3.

Experiment 2 design. Test trials are depicted for each condition. a Anger. b Disgust

Test Trials

The test trials were videotapes of the Emoter’s response to each event. The onset of the Emoter’s facial configuration and vocalization occurred after she was fed the food (New Food) or after the toy was taken away (Toy Taken). Anger was displayed with furrowed eyebrows and a tight mouth, and disgust was displayed with a protruding tongue and scrunched nose. Vocalizations began with an emotional utterance (e.g., a guttural “ugh” for anger, “ewww” for disgust) followed by, “That’s blicketing. That’s so blicketing,” spoken in an “angry” or “disgusted” tone. These test videos were cropped so that only the Emoter and the object (i.e., spoon/bowl, toy) were present in the frame. During the response, the Emoter maintained her gaze on the spoon (which was pulled away from her mouth) or the toy (which was in the Actor’s hands). After the Emoter finished her script (approximately 5 s), the video was frozen to create a still-frame of the Emoter’s facial configuration for a maximum of 60 s, and infants’ looking behavior was recorded. Full videos can be accessed here: https://osf.io/vsxm8/?view_only=2e149765de734f3bb7884369e01c9b9b. For validation information, see the Supplementary Materials.

Apparatus and Procedure

The apparatus and procedure were identical to Experiment 1, except that infants viewed the Toy Taken and New Food events.

Scoring

A second trained research assistant recoded 100% of the tapes offline, without sound. The coder was kept fully blind as to the participant’s experimental condition. Agreement was excellent, r = 0.99, p < 0.001. Identical results are obtained using the online and offline coding (analyses with the offline reliability coding are reported below).
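To illustrate how an agreement statistic of this kind can be computed, the following is a minimal sketch of a Pearson correlation between two coders' looking times. The looking-time values and variable names are hypothetical placeholders, not data from the study, and the original reliability analysis may have been run with different software.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squared deviations
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical looking times (in seconds) for six test trials,
# scored once online and once offline by a second coder.
online_coder = [12.4, 30.1, 60.0, 8.7, 22.5, 45.3]
offline_coder = [12.1, 30.6, 60.0, 9.0, 22.0, 44.8]

r = pearson_r(online_coder, offline_coder)
print(f"inter-coder agreement: r = {r:.3f}")
```

With real data, each list would hold one looking time per infant per test trial, in the same order for both coders.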

Results

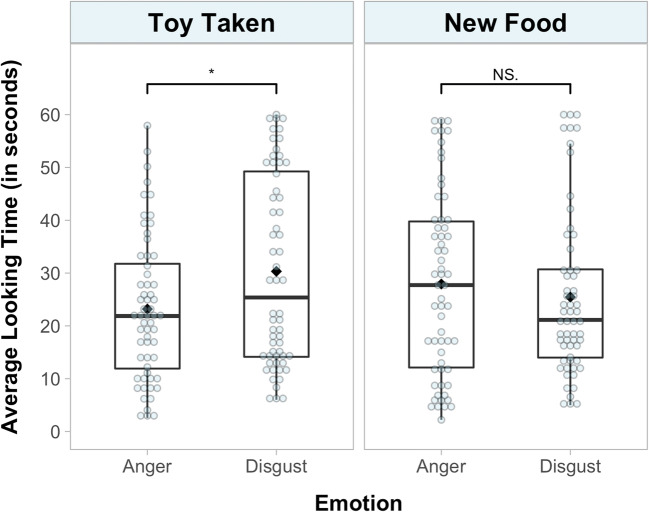

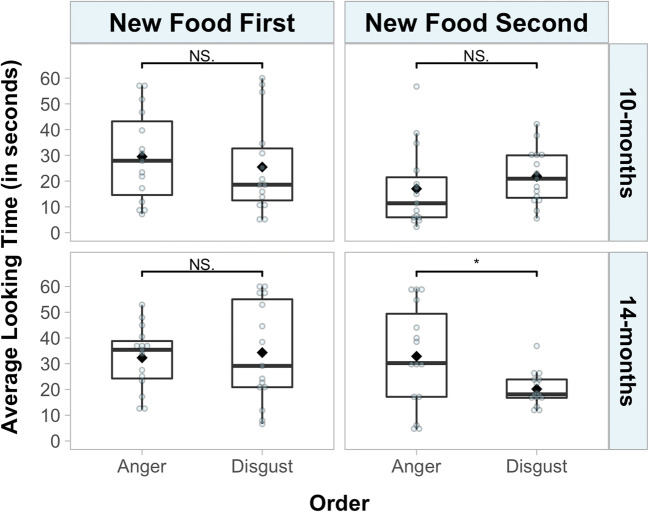

All infants, across both emotion conditions and age groups, attended to the entirety of the familiarization trials. A 2 (age: 10 months/14 months) × 2 (emotion: anger/disgust) × 2 (order: toy-taken first/new-food first) × 2 (event: toy taken/new food) mixed-design ANOVA was conducted for infants’ looking times to the test trials. There was a significant main effect of age, F(1, 112) = 4.13, p = 0.045, ηp2 = 0.04, indicating that the 14-month-olds attended longer to the facial configurations (M = 29.12 s, SD = 16.59 s) compared to the 10-month-olds (M = 24.33 s, SD = 15.79). A significant emotion × event interaction also emerged, F(1, 112) = 7.77, p = 0.006, ηp2 = 0.06. Planned between-subjects comparisons were conducted separately for each event (Fig. 4). For the Toy Taken event, infants looked longer at the facial configuration in the disgust condition (M = 30.32 s, SD = 17.97) compared to the anger condition (M = 23.18 s, SD = 13.59), t(118) = 2.45, p = 0.016, d = 0.45. However, for the New Food event, infants did not look significantly longer at the facial configuration in the anger condition (M = 27.92 s, SD = 17.41) compared to the disgust condition (M = 25.45 s, SD = 15.56), t(118) = 0.82, p > 0.25, d = 0.15. There were no other significant main effects or interactions (Table 2).
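As a worked check on the planned comparisons, the t statistics and effect sizes can be recomputed from the reported means and standard deviations. The sketch below assumes a pooled-variance independent-samples t-test with 60 infants per emotion condition (30 per age group, collapsed across age) and Cohen's d based on the pooled standard deviation; the function names are illustrative and are not taken from the authors' analysis scripts.

```python
import math


def pooled_sd(sd1, sd2, n1, n2):
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))


def independent_t_and_d(m1, sd1, n1, m2, sd2, n2):
    """Return (t, df, d) for a pooled-variance independent-samples t-test."""
    sp = pooled_sd(sd1, sd2, n1, n2)
    d = (m1 - m2) / sp                                   # Cohen's d
    t = (m1 - m2) / (sp * math.sqrt(1 / n1 + 1 / n2))    # t statistic
    return t, n1 + n2 - 2, d


N_PER_CONDITION = 60  # assumption: 30 infants per age group x 2 age groups

# Toy Taken: disgust condition vs. anger condition
toy_taken = independent_t_and_d(30.32, 17.97, N_PER_CONDITION,
                                23.18, 13.59, N_PER_CONDITION)
# New Food: anger condition vs. disgust condition
new_food = independent_t_and_d(27.92, 17.41, N_PER_CONDITION,
                               25.45, 15.56, N_PER_CONDITION)

for label, (t, df, d) in (("Toy Taken", toy_taken), ("New Food", new_food)):
    print(f"{label}: t({df}) = {t:.2f}, d = {d:.2f}")
```

Under these assumptions the sketch gives t(118) = 2.45, d = 0.45 for the Toy Taken event and t(118) = 0.82, d = 0.15 for the New Food event.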

Fig. 4.

Experiment 2. Infants’ looking time during the test trials, *p < 0.05 for pairwise comparisons between emotions

Exploratory New Food Analyses

Given that 14-month-olds in Ruba et al. (2019) expected the Emoter to display a facial configuration associated with disgust rather than one associated with anger in a New Food event, we ran an additional analysis with the current data to explore potential age and order effects. A 2 (order) × 2 (age) × 2 (emotion) mixed-design ANOVA was conducted with infants’ looking time to the test trials for the New Food event only.

Significant main effects of order, F(1, 112) = 6.63, p = 0.011, ηp2 = 0.06, and age, F(1, 112) = 5.03, p = 0.027, ηp2 = 0.04, emerged. Overall, infants attended longer to the facial configurations when the New Food event was presented first (M = 30.40 s, SD = 17.17) compared to when it was presented second (M = 22.98 s, SD = 15.02). In addition, 14-month-olds attended longer to the facial configurations (M = 29.92 s, SD = 16.30) compared to the 10-month-olds (M = 23.46 s, SD = 16.17). These main effects were qualified by a significant age × order × emotion interaction, F(1, 112) = 4.21, p = 0.043, ηp2 = 0.04. There were no other significant main effects or interactions: emotion, F(1, 112) = 0.73, p > 0.25, ηp2 < 0.01; order × age, F(1, 112) = 0.05, p > 0.25, ηp2 < 0.01; age × emotion, F(1, 112) = 1.00, p > 0.25, ηp2 < 0.01; order × emotion, F(1, 112) = 0.26, p > 0.25, ηp2 < 0.01.
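The partial eta-squared values reported with each F ratio can be recovered from the F value and its degrees of freedom. The following minimal sketch uses the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error); the function name and the dictionary of effects are illustrative assumptions, with the F values copied from the New Food analysis above.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta-squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values and degrees of freedom as reported for the New Food analysis
reported_effects = {
    "order": (6.63, 1, 112),
    "age": (5.03, 1, 112),
    "age x order x emotion": (4.21, 1, 112),
}

for effect, (f_value, df_effect, df_error) in reported_effects.items():
    eta = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{effect}: partial eta-squared = {eta:.2f}")
```

Rounded to two decimals, these come to 0.06, 0.04, and 0.04, in line with the reported effect sizes.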

Follow-up analyses were conducted separately by order (Fig. 5). When the New Food event was presented first, there were no significant main effects or interactions: age, F(1, 56) = 1.72, p = 0.20, ηp2 = 0.03; emotion, F(1, 56) = 0.05, p > 0.25, ηp2 < 0.01; age × emotion, F(1, 56) = 0.46, p > 0.25, ηp2 < 0.01. When the New Food event was presented second, a significant age × emotion interaction emerged, F(1, 56) = 5.82, p = 0.019, ηp2 = 0.09. For the 10-month-olds, infants’ looking time to the facial configurations associated with anger and disgust did not differ, t(28) = 0.99, p > 0.25, d = 0.36. For the 14-month-olds, infants looked significantly longer to the facial configuration in the anger condition compared to the disgust condition, t(28) = 2.35, p = 0.026, d = 0.86. There were no significant main effects: age, F(1, 56) = 3.77, p = 0.057, ηp2 = 0.06; emotion, F(1, 56) = 1.17, p > 0.25, ηp2 = 0.02.
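For the follow-up comparisons, Cohen's d can be approximated directly from each t statistic. The sketch below assumes two independent groups of 15 infants each (120 infants divided across 2 ages × 2 emotions × 2 orders, which matches the reported 28 degrees of freedom) and uses the conversion d = t × sqrt(1/n1 + 1/n2); the function name is hypothetical.

```python
import math


def cohens_d_from_t(t_value, n1, n2):
    """Cohen's d for two independent groups, recovered from a pooled-variance t."""
    return t_value * math.sqrt(1 / n1 + 1 / n2)


N_PER_CELL = 15  # assumption: 120 infants / (2 ages x 2 emotions x 2 orders)

d_10_months = cohens_d_from_t(0.99, N_PER_CELL, N_PER_CELL)
d_14_months = cohens_d_from_t(2.35, N_PER_CELL, N_PER_CELL)

print(f"10-month-olds: d = {d_10_months:.2f}")
print(f"14-month-olds: d = {d_14_months:.2f}")
```

Under this assumption the conversion gives d = 0.36 for the 10-month-olds and d = 0.86 for the 14-month-olds.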

Fig. 5.

Experiment 2. Infants’ looking time during the test trials to the New Food Event, separated by age and order, *p < 0.05 for pairwise comparisons between emotions

Discussion

Infants looked longer at the facial configuration associated with disgust compared to the facial configuration associated with anger following the Toy Taken event. Together with Experiment 1, this suggests that infants expect a person to display a facial configuration associated with anger in response to this event, rather than facial configurations associated with positive emotion (i.e., happiness) or another negative emotion (i.e., disgust). Interestingly, and contrary to our hypotheses, there were no significant interactions with age. Consequently, this is the first study to demonstrate that, between 10 and 14 months of age, infants have expectations about the specific types of negative responses that follow certain events. To date, this ability has only been documented in infants 14 months and older (Ruba et al., 2019; Walle et al., 2017).

In contrast, 10-month-olds did not expect a person to display a facial configuration associated with disgust rather than one associated with anger following the New Food event. One possibility is that infants viewed both facial configurations as equally appropriate responses to this event. In other words, 10-month-olds expected that the act of tasting a new food would elicit a facial configuration associated with negative affect in general, rather than one associated with a specific negative emotion (i.e., disgust). It is also possible that infants viewed both facial configurations associated with disgust and anger as plausible responses, but would not view other facial configurations (e.g., sadness, fear) as likely outcomes.

These results also contrast with Ruba et al. (2019), which found that 14-month-olds expected the Emoter to display a facial configuration associated with disgust rather than anger following a New Food event. In the current study, an exploratory follow-up analysis revealed that 14-month-olds attended longer to the facial configuration associated with anger compared to the facial configuration associated with disgust, but only when the New Food event was presented second (after the Toy Taken event). This effect was not found when the New Food event was presented first. This finding replicates the order effects obtained in the New Food event of Experiment 3 in Ruba et al. (2019) (reported in the Supplementary Materials). The hypothesized condition effects may have been stronger in this order, because infants had more experience with the procedure by the time they saw the second event. Related to this, it is also possible that the familiarization period (i.e., two trials) was not long enough to sufficiently acclimate infants to the task. This could partially explain why infants had relatively long average looking times (20–30 s) to the test events. With a longer familiarization period (or a habituation design) in future studies, the expected condition effects may become more robust and reliable across all presentation orders. It is also worth noting that effects in Ruba et al. (2019) and the current study were small, which may have also contributed to the failed replication (see the “General Discussion” for further elaboration on this point).

Experiment 3

Although 10-month-olds expected a facial configuration associated with anger to follow the Toy Taken event, they did not seem to expect a facial configuration associated with disgust to follow the New Food event. This suggests that the ability to predict a specific emotional response to a situation may emerge in the first year of life, but only for some events and/or some emotions. Thus, the goals of Experiment 3 were (a) to present 10-month-olds with the exact same New Food event used in Ruba et al. (2019), since 14- and 18-month-olds had expectations about this event, and (b) to determine whether 10-month-olds link a facial configuration associated with anger to a different “anger-eliciting” event (the Unmet Goal event). Both events were identical to those used in Experiment 3 of Ruba et al. (2019). In the New Food event, an Actor fed the Emoter a bite of food. In the Unmet Goal event, the Emoter tried and failed to obtain an out-of-reach object.

In prior work with these specific events, 14- and 18-month-olds expected the Emoter to display (a) a facial configuration/vocalization associated with disgust (rather than one associated with anger) after the New Food event, and (b) a facial configuration/vocalization associated with anger (rather than one associated with disgust) after the Unmet Goal event (Ruba et al., 2019). Similar to Experiment 2, we hypothesized that 10-month-olds would not have specific expectations about the type of negative facial configuration to follow the New Food event. On the other hand, in line with Experiment 2, we predicted that infants would expect a facial configuration associated with anger to follow the Unmet Goal event.

Methods

Participants

The current sample consisted of 60 (31 female) 10-month-old infants (M = 10.07 months, SD = 0.19, range = 9.63–10.42). All infants were healthy, full-term, and of normal birth weight. Parents identified their infants as Caucasian (73%, n = 44), multiracial (18%, n = 11), Asian (5%, n = 3), or African American (3%, n = 2). Approximately 8% of infants (n = 5) were identified as Hispanic or Latino. Participants were recruited in the same manner as Experiment 1. Five additional infants were tested but excluded from the final sample due to inattention during the familiarization events (n = 1), procedural error (n = 2), or fussiness (n = 2). According to parental report, few infants were reported to “understand” or “say” the words “anger/mad” or “disgusted” (Table 1). Thus, infants at this age did not have these emotion words in their vocabularies.

Design

The design of the study was similar to Experiment 1. Equal numbers of male and female infants were randomly assigned to one of the two conditions (between-subjects): anger or disgust (n = 30 per condition). Each infant watched two different videotaped events of an Emoter interacting with another person and an object: the Unmet Goal event and the New Food event (order was counterbalanced).

Stimuli

Familiarization Trials

The stimuli were identical to those used in Experiment 3 of Ruba et al. (2019) (see Fig. 6). The familiarization trials involved two videotaped events of an “Emoter” interacting with objects. Both events started with the Emoter introducing herself to the infant by looking at the camera and saying “Hi baby” in a pleasant tone of voice.

Fig. 6.

Experiment 3 design. Test trials are depicted for each condition. a Anger. b Disgust

The New Food event was similar to Experiment 2, except the “Actor” was a different person (the “Emoter” was the same person). In the Unmet Goal event, the Emoter placed a bowl, three balls, and tongs on top of the table. She looked at the camera and said, pleasantly to the infant, “I’m going to put some balls in my bowl.” The Emoter picked up the tongs and used them to put two of the balls in the bowl. When she reached for the third ball, she “accidentally” knocked the ball out of reach toward the infant/camera. The Emoter leaned across the table and tried to reach the third ball, saying neutrally to the camera, “I can’t reach it”.

Test Trials

The test trials were videotapes of the Emoter’s response after each event. The onset of the Emoter’s facial configuration/vocalization occurred after she was fed the food (New Food) or after the Emoter unsuccessfully reached for the third ball (Unmet Goal). Facial configurations and vocalizations were displayed in the same manner described in Experiment 2. During the response, the Emoter maintained her gaze on the spoon and bowl (which was pulled away from her mouth) or the ball (which was on the table). These test videos were cropped so that only the Emoter and the object (i.e., spoon/bowl, ball) were present in the frame. Thus, the Actor was not present in the New Food test trial video. Full videos can be accessed here: https://osf.io/vsxm8/?view_only=2e149765de734f3bb7884369e01c9b9b. For validation information, see the Supplementary Materials.

Apparatus and Procedure

The apparatus and procedure were identical to Experiment 1, except that infants viewed the New Food and Unmet Goal events.

Scoring

A second trained research assistant recoded 100% of the tapes offline, without sound. The coder was kept fully blind as to the participant’s experimental condition and which emotion was presented to the infant. Agreement was excellent, r = 0.99, p < 0.001. Identical results are obtained using the online and offline coding (analyses with the offline reliability coding are reported below).

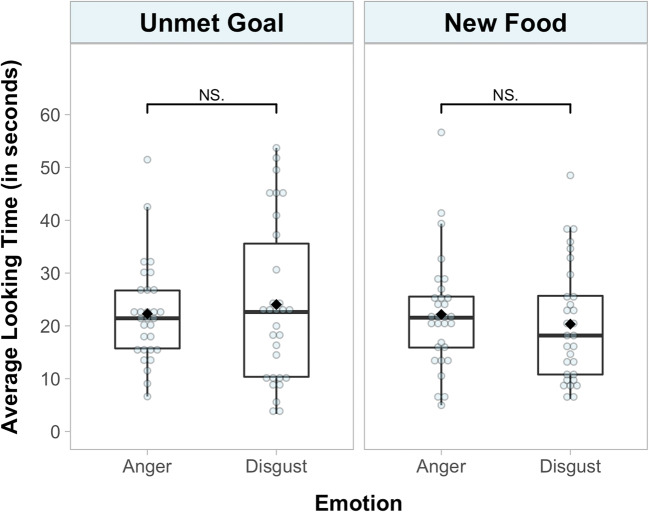

Results

All infants, across both emotion conditions, attended to the entirety of the familiarization trials. A 2 (emotion: anger/disgust) × 2 (order: new-food first/unmet-goal first) × 2 (event: new food/unmet goal) mixed-design ANOVA was conducted for infants’ looking times to the test trials. There were no significant main effects or interactions: order, F(1, 56) = 0.18, p > 0.25, ηp2 < 0.01; emotion, F(1, 56) < 0.01, p > 0.25, ηp2 < 0.01; order × emotion, F(1, 56) = 3.55, p = 0.065, ηp2 = 0.06; event, F(1, 56) = 1.11, p > 0.25, ηp2 = 0.02; order × event, F(1, 56) = 0.61, p > 0.25, ηp2 = 0.01; order × emotion × event, F(1, 56) = 0.65, p > 0.25, ηp2 = 0.01.

In particular, there was not a significant emotion × event interaction, F(1, 56) = 0.97, p > 0.25, ηp2 = 0.02 (Fig. 7). Following the Unmet Goal event, infants did not attend significantly longer to the facial configuration associated with disgust (M = 24.04 s, SD = 15.27) compared to the facial configuration associated with anger (M = 22.29 s, SD = 9.40). Further, following the New Food event, infants did not attend significantly longer to the facial configuration associated with anger (M = 22.16 s, SD = 10.80) compared to the facial configuration associated with disgust (M = 20.33 s, SD = 11.13).

Fig. 7.

Experiment 3. Infants’ looking time during the test trials

Discussion

As in Experiment 2, these results suggest that 10-month-olds did not have expectations about the types of facial configurations that follow the New Food event. In addition, 10-month-olds did not have expectations about the types of facial configurations that follow the Unmet Goal event. This is consistent with prior work in which 8- and 10-month-olds did not expect an agent to display a facial configuration/vocalization associated with sadness, rather than one associated with happiness, after failing to complete a goal (Skerry & Spelke, 2014). Both of these findings with 10-month-olds stand in contrast to research with older infants. With these identical events, infants between 14 and 18 months expected (a) a facial configuration associated with disgust to follow the New Food event, and (b) a facial configuration associated with anger to follow the Unmet Goal event (Ruba et al., 2019). These age-related changes will be discussed in further detail below.

General Discussion

The current studies explored the associations that infants have between specific negative facial configurations/vocalizations and events. Experiment 1 found that 10-month-old infants expected an Emoter to display a negative facial configuration (anger), rather than a positive facial configuration (happy), after a negative event (Toy Taken). This is the first study to demonstrate that infants in the first year of life expect negative facial configurations to follow negative events. Experiments 2 and 3 built on these findings to explore whether 10-month-olds had associations between specific negative facial configurations and negative events. Experiment 2 revealed that 10-month-olds and 14-month-olds expected a facial configuration associated with anger, rather than one associated with disgust, to follow an “anger-eliciting” event (Toy Taken). This is also the first study to show that infants as young as 10 months of age may differentiate negative facial configurations on a conceptual basis. Prior work has not provided evidence of this ability until 14 to 24 months of age (Ruba et al., 2019; Walle et al., 2017). Findings from Experiments 2 and 3 also suggest that expectations about the negative facial configurations associated with specific events are still developing at this age. Specifically, 10-month-olds did not expect (a) a facial configuration associated with disgust, rather than one associated with anger, to follow a “disgust-eliciting” event (New Food), or (b) a facial configuration associated with anger, rather than one associated with disgust, to follow an “anger-eliciting” event (Unmet Goal). These latter findings contrast with findings from Ruba et al. (2019), which found that 14- and 18-month-olds had associations between specific negative facial configurations and events. Taken together, these age-related changes provide new insights into theories of emotion understanding development.

These findings also add to emerging evidence that preverbal infants are not limited to valence-based interpretations of emotions (Ruba et al., 2017, 2019; Walle et al., 2017; Wu et al., 2017). These findings are inconsistent with predictions made by constructionist emotion theories, particularly, the theory of constructed emotion (Barrett, 2017; Hoemann, Xu, & Barrett, 2019; Lindquist & Gendron, 2013). This theory argues that infants cannot “perceive” or “interpret” specific emotions from facial configurations until much later in development, once children have gained experience with emotion labels (Barrett et al., 2007; Lindquist & Gendron, 2013). However, the current studies suggest that preverbal infants may begin to differentiate negative emotions on a conceptual basis as early as 10 to 14 months of age. Crucially, this narrowing appears to start before most infants acquire emotion language. However, these findings are also inconsistent with classical emotion theories, which argue that young infants interpret facial configurations in terms of discrete conceptual categories (Izard, 1994; Nelson, 1987). While 10-month-olds in the current studies had expectations about the specific negative facial configurations that follow some events (i.e., Toy Taken), they did not have these expectations for other negative events (i.e., New Food, Unmet Goal). Thus, infants around 10 to 14 months of age appear to be in a state of transition, as they refine broad concepts of affect into more precise emotion concepts. Currently, emotion theories have not adequately accounted for this type of change across the first 2 years of life. Future research is needed to document these developmental changes in emotion concept acquisition and the mechanisms of such change (e.g., learning, motor development; Campos et al., 2000). It is possible that the novelty of certain events (e.g., Unmet Goal) or self-experience with specific events and emotions (e.g., disgust after eating a novel food) may influence infants’ expectations about these pairings.

We have presented one of the first studies to explicitly test the nature of infants’ conceptual “understanding” of different negative facial configurations. However, this preliminary work is not without limitations. Mainly, the reported effects were small (similar to past research, see Ruba et al., 2019; Wu et al., 2017) and were influenced by various task features (i.e., presentation order, event). It is possible that the difficulty of the task contributed to these small effects. Specifically, it is likely that infants do not have a one-to-one mapping between each event and facial configuration. In other words, infants may view certain facial configurations (e.g., disgust) as an “unlikely” response, but not an “impossible” response, for a specific event (e.g., unmet goal). Future studies may consider alternative outcome measures (e.g., pupil dilation, anticipatory looking) to capture these subtle differences in infants’ expectations. Further, the design of our study, like many studies of emotion, was constrained by classic emotion theories. We used posed stereotypes of emotion (i.e., facial configurations), which fail to capture the diversity of children’s emotional environments. Future research may consider a wider range of emotions and more naturalistic paradigms to explore infants’ emotion concepts. Nevertheless, these findings raise the intriguing possibility that infants may not be limited to valence-based distinctions between emotions before acquiring emotion language.

The debate on the nature of emotion actively continues at a theoretical and empirical level (Barrett, Adolphs, Marsella, Martinez, & Pollak, 2019; Cowen, Sauter, Tracy, & Keltner, 2019). Unfortunately, research with young infants has been largely missing from this debate (Ruba & Repacholi, 2019). By adding new data gathered from a developmental perspective, researchers can draw closer to answering some of the most basic questions about the nature, scope, and development of emotion understanding.

Additional Information

Acknowledgements

The authors would like to thank Alexandra Ruba and Noah Fisher for their assistance with stimuli creation.

Funding Information

This research was supported by grants from the National Institute of Mental Health (T32MH018931) to ALR, the University of Washington Royalty Research Fund (65-4662) to BMR, and the University of Washington Institute for Learning & Brain Sciences Ready Mind Project to ANM.

Data Availability

The datasets generated and analyzed for these studies are available on OSF, https://osf.io/vsxm8/?view_only=2e149765de734f3bb7884369e01c9b9b.

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethical Approval

The study was conducted following APA ethical standards and with approval of the Institutional Review Board (IRB) at the University of Washington (Approval Number 50377, Protocol Title: “Emotion Categories Study”).

Informed Consent

Written informed consent was obtained from the parents.

Footnotes

Throughout, we use the term “facial configuration” instead of “facial expression,” since facial muscle movements may not “express” an internal emotional state (see Barrett et al., 2019).

Whether a facial configuration is “congruent” is determined by the researchers and cultural norms about whether an emotion is likely to follow a particular event. It is certainly possible to experience/express a “negative” emotion (e.g., sadness) in response to a “positive” event (e.g., receiving a gift).

References

- Aslin RN. What’s in a look? Developmental Science. 2007;10(1):48–53. doi: 10.1111/j.1467-7687.2007.00563.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baillargeon R, Spelke ES, Wasserman S. Object permanence in five-month-old infants. Cognition. 1985;20(3):191–208. doi: 10.1016/0010-0277(85)90008-3. [DOI] [PubMed] [Google Scholar]

- Barrett LF. How emotions are made: The secret life of the brain. Boston: Houghton Mifflin Harcourt; 2017. [Google Scholar]

- Barrett LF, Adolphs R, Marsella S, Martinez AM, Pollak SD. Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychological Science in the Public Interest. 2019;20(1):1–68. doi: 10.1177/1529100619832930. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barrett LF, Lindquist KA, Gendron M. Language as context for the perception of emotion. Trends in Cognitive Sciences. 2007;11(8):327–332. doi: 10.1016/j.tics.2007.06.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bornstein MH, Arterberry ME. Recognition, discrimination and categorization of smiling by 5-month-old infants. Developmental Science. 2003;6(5):585–599. doi: 10.1111/1467-7687.00314. [DOI] [Google Scholar]

- Campos JJ, Anderson DI, Barbu-Roth MA, Hubbard EM, Hertenstein MJ, Witherington DC. Travel broadens the mind. Infancy. 2000;1(2):149–219. doi: 10.1207/S15327078IN0102_1. [DOI] [PubMed] [Google Scholar]

- Caron RF, Caron AJ, Myers RS. Do infants see emotional expressions in static faces? Child Development. 1985;56(6):1552–1560. doi: 10.1111/j.1467-8624.1985.tb00220.x. [DOI] [PubMed] [Google Scholar]

- Chiarella SS, Poulin-Dubois D. Cry babies and pollyannas: Infants can detect unjustified emotional reactions. Infancy. 2013;18(Suppl 1):E81–E96. doi: 10.1111/infa.12028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cowen A, Sauter D, Tracy JL, Keltner D. Mapping the passions: Toward a high-dimensional taxonomy of emotional experience and expression. Psychological Science in the Public Interest. 2019;20(1):69–90. doi: 10.1177/1529100619850176. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eason AE, Doctor D, Chang E, Kushnir T, Sommerville JA. The choice is yours: Infants’ expectations about an agent’s future behavior based on taking and receiving actions. Developmental Psychology. 2018;54(5):829–841. doi: 10.1037/dev0000482. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ekman P. Strong evidence for universals in facial expressions: A reply to Russell’s mistaken critique. Psychological Bulletin. 1994;115(2):268–287. doi: 10.1037/0033-2909.115.2.268. [DOI] [PubMed] [Google Scholar]

- Ekman P, Friesen WV. Unmasking the face: A guide to recognizing emotions from facial clues. Oxford: Prentice-Hall; 1975. [Google Scholar]

- Fehr B, Russell JA. Concept of emotion viewed from a prototype perspective. Journal of Experimental Psychology: General. 1984;113(3):464–486. doi: 10.1037/0096-3445.113.3.464. [DOI] [Google Scholar]

- Geangu E, Ichikawa H, Lao J, Kanazawa S, Yamaguchi MK, Caldara R, Turati C. Culture shapes 7-month-olds’ perceptual strategies in discriminating facial expressions of emotion. Current Biology. 2016;26(14):R663–R664. doi: 10.1016/j.cub.2016.05.072. [DOI] [PubMed] [Google Scholar]

- Hamlin JK, Wynn K. Young infants prefer prosocial to antisocial others. Cognitive Development. 2011;26(1):30–39. doi: 10.1016/J.COGDEV.2010.09.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hamlin JK, Wynn K, Bloom P, Mahajan N. How infants and toddlers react to antisocial others. Proceedings of the National Academy of Sciences of the United States of America. 2011;108(50):19931–19936. doi: 10.1073/pnas.1110306108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hepach R, Westermann G. Infants’ sensitivity to the congruence of others’ emotions and actions. Journal of Experimental Child Psychology. 2013;115(1):16–29. doi: 10.1016/j.jecp.2012.12.013. [DOI] [PubMed] [Google Scholar]

- Hoemann K, Xu F, Barrett LF. Emotion words, emotion concepts, and emotional development in children: A constructionist hypothesis. Developmental Psychology. 2019;55(9):1830–1849. doi: 10.1037/dev0000686. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Izard CE. Innate and universal facial expressions: Evidence from developmental and cross-cultural research. Psychological Bulletin. 1994;115(2):288–299. doi: 10.1037/0033-2909.115.2.288. [DOI] [PubMed] [Google Scholar]

- Kestenbaum R, Nelson CA. The recognition and categorization of upright and inverted emotional expressions by 7-month-old infants. Infant Behavior and Development. 1990;13(4):497–511. doi: 10.1016/0163-6383(90)90019-5. [DOI] [Google Scholar]

- Krol KM, Monakhov M, Lai PS, Ebstein RP, Grossmann T. Genetic variation in CD38 and breastfeeding experience interact to impact infants’ attention to social eye cues. Proceedings of the National Academy of Sciences. 2015;112(39):E5434–E5442. doi: 10.1073/pnas.1506352112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lindquist KA, Gendron M. What’s in a word? Language constructs emotion perception. Emotion Review. 2013;5(1):66–71. doi: 10.1177/1754073912451351. [DOI] [Google Scholar]

- Ludemann PM. Generalized discrimination of positive facial expressions by seven- and ten-month-old infants. Child Development. 1991;62(1):55–67. doi: 10.1111/j.1467-8624.1991.tb01514.x. [DOI] [PubMed] [Google Scholar]

- Nelson CA. The recognition of facial expressions in the first two years of life: Mechanisms of development. Child Development. 1987;58(4):889. doi: 10.2307/1130530. [DOI] [PubMed] [Google Scholar]

- Oakes LM. Using habituation of looking time to assess mental processes in infancy. Journal of Cognition and Development. 2010;11(3):255–268. doi: 10.1080/15248371003699977. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Oakes LM, Sperka D, DeBolt MC, Cantrell LM. Habit2: A stand-alone software solution for presenting stimuli and recording infant looking times in order to study infant development. Behavior Research Methods. 2019;51:1943–1952. doi: 10.3758/s13428-019-01244-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- R Core Team. (2014). R: A language and environment for statistical computing. Retrieved from http://www.r-project.org/

- Reschke PJ, Walle EA, Flom R, Guenther D. Twelve-month-old infants’ sensitivity to others’ emotions following positive and negative events. Infancy. 2017;22(6):874–881. doi: 10.1111/infa.12193. [DOI] [Google Scholar]

- Ruba AL, Johnson KM, Harris LT, Wilbourn MP. Developmental changes in infants’ categorization of anger and disgust facial expressions. Developmental Psychology. 2017;53(10):1826–1832. doi: 10.1037/dev0000381. [DOI] [PubMed] [Google Scholar]

- Ruba AL, Meltzoff AN, Repacholi BM. How do you feel? Preverbal infants match negative emotions to events. Developmental Psychology. 2019;55(6):1138–1149. doi: 10.1037/dev0000711.

- Ruba AL, Meltzoff AN, Repacholi BM. Superordinate categorization of negative facial expressions in infancy: The influence of labels. Developmental Psychology. 2020. doi: 10.1037/dev0000892.

- Ruba AL, Repacholi BM. Do preverbal infants understand discrete facial expressions of emotion? Emotion Review. 2019. doi: 10.1177/1754073919871098.

- Russell JA. A circumplex model of affect. Journal of Personality and Social Psychology. 1980;39(6):1161. doi: 10.1037/h0077714.

- Safar K, Moulson MC. Recognizing facial expressions of emotion in infancy: A replication and extension. Developmental Psychobiology. 2017;59(4):507–514. doi: 10.1002/dev.21515.

- Skerry AE, Spelke ES. Preverbal infants identify emotional reactions that are incongruent with goal outcomes. Cognition. 2014;130(2):204–216. doi: 10.1016/j.cognition.2013.11.002.

- Walle EA, Campos JJ. Interpersonal responding to discrete emotions: A functionalist approach to the development of affect specificity. Emotion Review. 2012;4(4):413–422. doi: 10.1177/1754073912445812.

- Walle EA, Reschke PJ, Camras LA, Campos JJ. Infant differential behavioral responding to discrete emotions. Emotion. 2017;17(7):1078–1091. doi: 10.1037/emo0000307.

- White H, Chroust A, Heck A, Jubran R, Galati A, Bhatt RS. Categorical perception of facial emotions in infancy. Infancy. 2019;24(2):139–161. doi: 10.1111/infa.12275.

- Wickham H. ggplot2: Elegant graphics for data analysis. New York: Springer; 2009.

- Widen SC. Children’s interpretation of facial expressions: The long path from valence-based to specific discrete categories. Emotion Review. 2013;5(1):72–77. doi: 10.1177/1754073912451492.

- Widen SC, Russell JA. Children acquire emotion categories gradually. Cognitive Development. 2008;23(2):291–312. doi: 10.1016/j.cogdev.2008.01.002.

- Widen SC, Russell JA. In building a script for an emotion, do preschoolers add its cause before its behavior consequence? Social Development. 2011;20(3):471–485. doi: 10.1111/j.1467-9507.2010.00594.x.

- Wu Y, Muentener P, Schulz LE. One- to four-year-olds connect diverse positive emotional vocalizations to their probable causes. Proceedings of the National Academy of Sciences. 2017;114(45):11896–11901. doi: 10.1073/pnas.1707715114.

- Xie W, McCormick SA, Westerlund A, Bowman LC, Nelson CA. Neural correlates of facial emotion processing in infancy. Developmental Science. 2019;22(3):e12758. doi: 10.1111/desc.12758.

Associated Data

Supplementary Materials

(DOCX 132 kb)

Data Availability Statement

The datasets generated and analyzed for these studies are available on OSF, https://osf.io/vsxm8/?view_only=2e149765de734f3bb7884369e01c9b9b.