Abstract

Autism spectrum disorder (ASD) and schizophrenia (SZ) are separate clinical entities but share deficits in social–emotional processing and similar patterns of static functional connectivity. After first characterizing dynamic functional network connectivity (dFNC) in typically developed (TD) individuals during social–emotional processing, we compared dFNC state engagement in patients with that in TD individuals. Young adults diagnosed with ASD (n = 42), SZ (n = 41), or TD (n = 55) completed three functional MRI runs, viewing social–emotional videos with happy, sad, or neutral content. We examined dFNC among 51 spatially independent networks extracted using independent component analysis and applied k-means clustering to windowed dFNC matrices, identifying four distinct whole-brain dFNC states. TD individuals showed differential engagement (fractional time, mean dwell time) in three states as a function of emotion. During Happy videos, patients spent less time than TD in a happy-associated state and instead spent more time in the most weakly connected state. During Sad videos, only ASD spent more time than TD in a sad-associated state. Additionally, only ASD showed a significant relationship between dFNC measures and scores on alexithymia and social–emotional recognition tasks, potentially indicating different neural processing of emotions in ASD and SZ. Our results highlight the importance of examining the temporal whole-brain reconfiguration of FNC, which here revealed engagement in distinct, emotion-specific dFNC states.

Keywords: autism spectrum disorder, emotion processing, functional network connectivity, schizophrenia, social cognition

Introduction

Although typically categorized as separate clinical entities, the neurodevelopmental disorders autism spectrum disorder (ASD) and schizophrenia (SZ) share many clinical attributes, including deficits in social–emotional (SE) processing (Eack et al. 2013; Ciaramidaro et al. 2018). Such deficits in these two clinical populations are often a part of an overall impairment in social cognition (SC) (Fernandes et al. 2018; Barlati et al. 2020; Pinkham et al. 2020). It remains unclear, however, if these shared SE processing impairments are associated with deficits in the same or different neural substrates.

The neural underpinnings of SE perception impairments in both ASD and SZ are posited to involve, at least in part, abnormal functional connectivity between regions comprising the social brain (Eack et al. 2017; Barlati et al. 2020; Nair et al. 2020). Functional connectivity (FC) is defined as the temporal correlation of time courses derived from functional magnetic resonance imaging (fMRI) blood oxygenation level–dependent (BOLD) signals from spatially distinct pairs of brain regions. Abnormal connectivity, or dysconnectivity, is then defined as any significant deviation from the mean FC between brain regions occurring in typically developed (TD) individuals and can appear in ASD or SZ as either hypo- or hyperconnectivity (Damaraju et al. 2014; Padmanabhan et al. 2017; White and Calhoun 2019).

Evidence for functional dysconnectivity between social brain regions in ASD and SZ has been found using seed- or ROI-based methods (Anticevic et al. 2012; Lynch et al. 2013; Ciaramidaro et al. 2015; Eack et al. 2017) or by examining functional “network” connectivity (FNC) between intrinsic connectivity networks (ICNs) identified with spatial independent component analysis (sICA) (Assaf et al. 2010; Das et al. 2012). When FC between pairs of ICN time courses is computed over the entire time course, the analysis is termed a “static” functional network connectivity (sFNC) analysis.
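As a minimal illustration of the static case, assuming ICN time courses are stored as a NumPy array of shape (time points, ICNs), sFNC reduces to one Pearson correlation per ICN pair computed over the whole run. The function name `static_fnc` is hypothetical and not part of any toolbox.

```python
import numpy as np

def static_fnc(tcs: np.ndarray) -> np.ndarray:
    """Static FNC: Pearson correlation of every ICN pair over the full run.

    tcs: array of shape (n_timepoints, n_icns).
    Returns the upper triangle (diagonal excluded) as a 1-D vector.
    """
    r = np.corrcoef(tcs, rowvar=False)        # (n_icns, n_icns) correlation matrix
    iu = np.triu_indices(r.shape[0], k=1)     # each unordered pair once
    return r[iu]

rng = np.random.default_rng(0)
tcs = rng.standard_normal((386, 51))          # synthetic data, not study data
print(static_fnc(tcs).shape)                  # (1275,) = 51 * 50 / 2 pairs
```

The dynamic approaches discussed next apply the same pairwise correlation, but within short sliding windows rather than over the whole run.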

Recently, however, studies have increasingly focused on time-varying, or “dynamic,” changes in functional connectivity between independent networks. The motivation for this shift is the observation that functional connectivity changes continuously during an fMRI run, in both resting-state and task-based fMRI (Hutchison et al. 2013; Allen et al. 2014; Calhoun et al. 2014; Du, Fu, et al. 2018b; Taghia et al. 2018; Iraji et al. 2020). In contrast, traditional seed- or ROI-based connectivity and sFNC studies cannot capture such transient changes in whole-brain functional connectivity. Several studies have also demonstrated that dynamic FNC (dFNC) is superior to sFNC in predicting behaviors and symptoms. A recent study of schizophrenia and bipolar patients showed that dFNC outperformed sFNC in predictive accuracy (Rashid et al. 2016); furthermore, combining sFNC and dFNC did not significantly improve classification performance over dFNC alone. Also, in a comparison of dynamic and static FC in a large cohort of TD individuals, dynamic FC metrics explained more than twice as much variance as static FC metrics in 75 behaviors across domains including cognition and emotion (Jia et al. 2014).

Dynamic functional dysconnectivity has been found between pairs of ROIs or networks in both ASD (Falahpour et al. 2016) and SZ (Mennigen et al. 2019) when compared with TD individuals. Pairwise dysconnectivity in dynamic FC studies often lasts for only a portion of an fMRI run or occurs only when the brain enters a particular dynamic FC “state.” Studies of dynamic FC have shown that a relatively small number of such states, typically five or fewer, recur during an fMRI run, where each state represents a distinct pattern of functional network connectivity between all regions or ICN pairs across the entire brain (Calhoun et al. 2014; Du, Fu, et al. 2018b; Lurie et al. 2020). These states can be identified by applying clustering methods, such as the k-means algorithm, to overlapping windowed FNC matrices computed from ROI or ICN time courses (Allen et al. 2014), or by using machine learning methods based on Bayesian switching linear dynamical systems (BSDS; Taghia et al. 2018).

Once dynamic FC states are identified, it becomes possible to examine not only between-group differences in pairwise FC within a given state, but also group differences in dynamic FC state “engagement.” Groups might show no differences in pairwise FC (i.e., no dysconnectivity) yet differ on dynamic state (dS) measures, such as the number of transitions between states, transition probabilities, mean dwell time (DT), and the fraction of total time (FT) spent in a given state (also known as occupancy rate). In such cases, it is the brain’s ability to remain in, or switch between, dynamic FC states that is dysfunctional, rather than the static or dynamic FC between any two networks or regions. Resting-state studies that used dFNC to extract dS measures found abnormal state engagement in ASD (Rashid et al. 2018), in SZ (Du, Pearlson, et al. 2016b), and in a study including both groups (Rabany et al. 2019). All of these studies found that patient groups spent significantly more time (higher mean dwell time, fractional time, or both) than TD participants in the most weakly connected state, that is, the state with the smallest mean absolute correlation (averaging the magnitudes of both positive and negative values) across all ICN pairs.

In this study, our goal was to analyze task-based fMRI data from ASD, SZ, and TD participants using dFNC and a task in which videos required the processing of SE information. Participants passively viewed videos of actors describing happy, sad, or neutral situations designed to simulate SE interactions. We aimed to 1) determine whole-brain dFNC states unique to happy and sad SE interactions in TD; 2) assess similarities and differences between ASD and SZ in dS measures and compare both groups to TD; and 3) explore the relationship between dS measures and scores on social cognition (all groups) and clinical symptom (ASD and SZ only) standardized tests.

We hypothesized that happy and sad SE stimuli would be associated with different whole-brain dynamic functional connectivity states, consistent with the hypothesis that different emotions are associated with different overall organizations of brain functional systems (Wexler 1986). Because this is, to our knowledge, the first study to evaluate dFNC during an SE paradigm, we did not attempt to predict the exact emotion effects or the between-group differences in dS measures. Based on previous resting-state dFNC studies, however, we hypothesized that both SZ and ASD would spend more time (i.e., have a higher mean dwell time and/or fractional time) than TD in the most weakly connected state while viewing videos of any emotion type (happy, neutral, or sad). Because of known SE processing deficits in ASD and SZ, we further posited that differences between each patient group and TD in whole-brain dFNC would be greater during viewing of videos with emotional content (happy or sad) than with neutral content.

Materials and Methods

Participants

Table 1 provides participant characterization and demographics. Individuals were recruited from the Olin Neuropsychiatry Research Center (ONRC) and Yale University School of Medicine. Using the diagnostic interviews described below, participants (ages 22–38) were classified as ASD (n = 42), SZ (n = 41), or TD (n = 55). Exclusion criteria were intellectual disability (estimated full-scale IQ < 80), current substance use/abuse (assessed by clinical interview and urine screen prior to MRI), MRI contraindications (e.g., in-body metal), or clinical instability. Inclusion criteria for TD were no current DSM-IV Axis I diagnosis, as confirmed with the Structured Clinical Interview for DSM-IV (SCID), the Autism Diagnostic Observation Schedule (ADOS), and a detailed health questionnaire; no history of psychiatric hospitalization or pharmacological treatment; and no reported first-degree relative diagnosed with ASD, SZ, psychosis, or bipolar disorder.

Table 1.

Participant characterization and demographics

| Measure | ASD (N = 42), Mean (SD) | SZ (N = 41), Mean (SD) | TD (N = 55), Mean (SD) | Statistic | P-value | Post hoc |
|---|---|---|---|---|---|---|
| Age | 26.8 (3.6) | 30.9 (3.8) | 29.1 (3.6) | F(2,135) = 13.3 | P < 0.001 | TD > ASD*, SZ > ASD** |
| IQ (estimated) | 111 (15.9) | 98 (13.9) | 113 (14.6) | F(2,135) = 12.6 | P < 0.001 | TD > SZ**, ASD > SZ** |
| Gender (M/F)ᵃ | 34/8 | 29/12 | 28/27 | χ²(2) = 10.2 | P = 0.006 | TD/F+, ASD/M+ |
| Mean FDRMS (mm), Happy videos | 0.104 (0.033) | 0.105 (0.057) | 0.079 (0.022) | F(2,135) = 7.34 | P = 0.001 | ASD > TD*, SZ > TD* |
| Mean FDRMS (mm), Neutral videos | 0.101 (0.027) | 0.102 (0.051) | 0.080 (0.029) | F(2,135) = 5.47 | P = 0.005 | ASD > TD*, SZ > TD* |
| Mean FDRMS (mm), Sad videos | 0.103 (0.034) | 0.108 (0.058) | 0.081 (0.031) | F(2,135) = 5.99 | P = 0.003 | ASD > TD*, SZ > TD* |
| ADOS Communication | 3.52 (1.29) | 2.61 (1.86) | 1.28 (1.03) | F(2,134) = 31.2 | P < 0.001 | ASD > TD**, SZ > TD**, ASD > SZ* |
| ADOS Social Interaction | 6.86 (2.14) | 5.39 (3.51) | 0.83 (1.44) | F(2,134) = 81.7 | P < 0.001 | ASD > TD**, SZ > TD** |
| BVAQ Verbalizing | 21.9 (4.6) | 21.7 (5.5) | 19.2 (5.5) | F(2,133) = 4.16 | P = 0.018 | ASD > TD* |
| BVAQ Fantasizing | 18.0 (6.1) | 21.6 (5.3) | 20.0 (5.3) | F(2,133) = 4.54 | P = 0.012 | SZ > ASD* |
| BVAQ Identifying | 19.2 (6.1) | 19.2 (6.1) | 15.9 (4.7) | F(2,133) = 7.77 | P = 0.001 | ASD > TD*, SZ > TD* |
| BVAQ Emotionalizing | 21.9 (3.3) | 22.2 (3.9) | 22.3 (4.0) | F(2,132) = 0.17 | P = 0.844 | _ |
| BVAQ Analyzing | 18.8 (4.3) | 19.9 (4.6) | 18.0 (4.4) | F(2,133) = 2.11 | P = 0.125 | _ |
| RMET | 24.5 (4.2) | 23.3 (4.4) | 27.3 (3.3) | F(2,133) = 13.4 | P < 0.001 | TD > ASD*, TD > SZ** |
| BLERT | 17.0 (3.1) | 16.0 (3.8) | 17.7 (2.6) | F(2,134) = 3.47 | P = 0.034 | TD > SZ* |
| PANSS Positive | 11.9 (3.2) | 15.9 (4.6) | _ | t(71.6#) = −4.44 | P < 0.001 | SZ > ASD |
| PANSS Negative | 16.1 (5.4) | 19.1 (6.1) | _ | t(79) = −2.41 | P = 0.018 | SZ > ASD |
| PANSS General | 26.5 (5.8) | 31.5 (6.3) | _ | t(79) = −3.74 | P < 0.001 | SZ > ASD |
| Valence (Happy) | 6.80 (1.52) | 6.24 (1.58) | 6.67 (1.49) | F(2,134) = 1.54 | P = 0.218 | _ |
| Valence (Neutral) | 5.39 (1.41) | 5.28 (1.63) | 4.72 (1.28) | F(2,132) = 3.02 | P = 0.052 | _ |
| Valence (Sad) | 3.54 (1.38) | 3.68 (1.56) | 3.73 (1.42) | F(2,134) = 0.212 | P = 0.809 | _ |

Notes: ASD: autism spectrum disorder; SZ: schizophrenia; TD: typically developed; SD: standard deviation; IQ: intelligence quotient; M: male; F: female; FDRMS: framewise displacement (root mean square); ADOS: Autism Diagnostic Observation Schedule; BVAQ: Bermond-Vorst Alexithymia Questionnaire; RMET: Reading the Mind in the Eyes Task; BLERT: Bell Lysaker Emotion Recognition Task; PANSS: Positive and Negative Syndrome Scale.

ᵃ Chi-squared test.

* P ≤ 0.05.

** P ≤ 0.001.

Participants provided written informed consent after the study had been explained to them and were paid for their time. The authors assert that all procedures contributing to this work comply with the ethical standards of the relevant national and institutional committees on human experimentation and with the Helsinki Declaration of 1975, as revised in 2008. All procedures were approved by the Institutional Review Boards of Hartford Hospital and Yale University.

Psychiatric and Behavioral Assessments

The psychiatric assessment included the Structured Clinical Interview for DSM-IV Axis I Disorders (SCID; First et al. 2002) and the Autism Diagnostic Observation Schedule (ADOS), Module 4 (Lord et al. 2000). To quantify the severity of psychotic symptoms and social communication deficits, we administered the Positive and Negative Syndrome Scale (PANSS; Kay et al. 1987) to patients only (ASD and SZ) and the ADOS to all three groups; TD individuals were, however, excluded from clinical symptom analyses.

To assess social cognition, we administered 1) the Reading the Mind in the Eyes Task (RMET; Baron-Cohen et al. 2001), 2) the Bell Lysaker Emotion Recognition Task (BLERT; Bryson et al. 1997), and 3) the Bermond-Vorst Alexithymia Questionnaire (BVAQ; Vorst and Bermond 2001) (see Supplement 1 for details). We estimated full-scale IQ from the vocabulary and block design subtests of the Wechsler Adult Intelligence Scale (WAIS-III; Wechsler 1997).

Functional MRI Simulated Social–Emotional Task

The fMRI task consisted of naturalistic videos of actors telling stories about happy (positive), sad (negative), or neutral personal experiences, looking at and addressing the subjects directly and displaying emotion-related nonverbal behaviors, but without any specific task events (Prohovnik et al. 2004). The actors were seated facing and talking directly to the camera (i.e., the viewer). Eight actors made three videos each, one for each type of content, happy, sad, and neutral, for a total of 24 videos. The actors were from two ethnic groups (White and Black, 4 actors each) with two males and two females for each ethnicity.

In happy videos, actors smiled frequently and spoke in a cheerful tone of voice about a personal happy memory. In sad videos, actors spoke sadly, often while crying, about a personal memory usually involving a death in the family. Each run began with a 45 s (95 fMRI images) fixation screen consisting of a blank black background, followed by the video itself and then a second blank fixation screen, for a total fMRI run duration of 5 min 13 s (313 s). Videos ranged in length from 3 min 3 s to 3 min 34 s. Participants viewed three videos in randomized order, one each with happy, sad, and neutral content; the ethnicity and gender of the actors were also randomized, with each ethnicity and gender appearing in at least one of the three videos. Participants were instructed to pay attention to when they felt an emotion and when the emotion intensity changed.

After viewing each video, we gave participants a postscan debriefing with two content questions to determine if they had been attentive to the video and we asked them to quantify their emotional valence (scale 1–9, where 5 is neutral) during the video (see Supplementary Material, Supplement 3, for postscan questionnaire example).

Functional MRI Data Acquisition

We collected BOLD fMRI data with a T2*-weighted echo planar imaging (EPI) sequence (TR/TE = 475/30 ms, flip angle = 60°, FOV = 24 cm, acquisition matrix 80 × 80) on a Siemens Skyra 3 Tesla scanner (Siemens, Malvern, Pennsylvania) at the Olin Neuropsychiatry Research Center (ONRC; Hartford, CT). We acquired 48 contiguous axial functional slices of 3.0 mm thickness (interleaved slice order), resulting in 3.0 × 3.0 × 3.0 mm voxels. Participants completed the three emotion fMRI task runs on the same day. Each run consisted of 658 images (5 min 13 s run duration).

Image Preprocessing and Motion-Artifact Correction

We processed functional MRI datasets using SPM8 (http://www.fil.ion.ucl.ac.uk/spm) running under MATLAB 2018b (Natick, MA). We realigned each subject’s dataset to the first “nondummy” T2* image using the INRIAlign toolbox (http://www-sop.inria.fr/epidaure/software/INRIAlign, A. Roche, EPIDAURE Group) to compensate for any subject head movement. We screened each subject for excess head movement (>6 mm) and accordingly excluded from the study three ASD, six TD, and three SZ participants, resulting in the final participant count.

After realignment, we spatially normalized the images to the Montreal Neurological Institute (MNI) standard template (Friston et al. 1995). We then spatially smoothed the images with a 9 mm isotropic (FWHM) Gaussian kernel and applied a high-pass filter with a 128 s cutoff to remove low-frequency EPI signal drift. Because the multiband sequence had a very short TR (475 ms), we did not perform slice timing correction, as recommended by the Human Connectome Project guidelines (Glasser et al. 2013). As a final step to mitigate head motion artifact, we scrubbed the fMRI data using the ArtRepair toolbox (https://cibsr.stanford.edu/tools/human-brain-project/artrepair-software.html, RRID:SCR_005990; Mazaika et al. 2009). ArtRepair replaces fMRI time points (volumes) that exceed predetermined thresholds with values linearly interpolated from neighboring “good” time points; no time points are removed. Because patients often show greater head movement, we set a liberal ArtRepair movement threshold of 1.0 mm/TR (assuming a 65 mm head radius) and a maximum intensity variation of 1.3% of the mean global signal.
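The flag-and-interpolate idea can be sketched as below. This is a simplified illustration, not ArtRepair's implementation: it assumes a (volumes × voxels) array, a per-volume movement trace already expressed in mm/TR, and reads the 1.3% criterion as the deviation of each volume's global mean from the run's mean global signal. `repair_volumes` is a hypothetical helper.

```python
import numpy as np

def repair_volumes(data, motion_mm, max_motion=1.0, max_pct=1.3):
    """Flag volumes with excess motion or global-signal deviation and replace
    them by linear interpolation from unflagged neighbors (sketch only).

    data: (n_volumes, n_voxels) array; motion_mm: (n_volumes,) movement in mm/TR.
    Returns the repaired copy and the boolean mask of flagged volumes.
    No volumes are removed, so the time series length is unchanged.
    """
    data = np.asarray(data, dtype=float)
    gs = data.mean(axis=1)                                  # global mean per volume
    pct_dev = 100.0 * np.abs(gs - gs.mean()) / gs.mean()    # % deviation from run mean
    bad = (np.asarray(motion_mm) > max_motion) | (pct_dev > max_pct)
    good_idx = np.flatnonzero(~bad)
    bad_idx = np.flatnonzero(bad)
    repaired = data.copy()
    for v in range(data.shape[1]):
        # np.interp holds the edge value constant if the first/last volumes are flagged
        repaired[bad_idx, v] = np.interp(bad_idx, good_idx, data[good_idx, v])
    return repaired, bad
```

Interpolating rather than deleting volumes keeps every run at the same length, which the later windowing steps rely on.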

Among retained participants, the number with more than 5% of images (33 of 658) repaired in any one run was 6/42 (ASD), 5/41 (SZ), and 4/55 (TD). The maximum number of images repaired in any one run was 183 of 658 (27.8%, ASD), 55 (8.4%, SZ), and 69 (10.5%, TD); thus, no run for any participant had more than 30% repaired scans. Across runs (417 in total), the mean ± standard deviation number of images repaired was 10.4 ± 21.3 (ASD), 5.8 ± 10.1 (SZ), and 4.6 ± 7.4 (TD). A Kruskal-Wallis test indicated a significant group difference in the number of repaired scans (χ2 = 13.71, P = 0.001), with mean ranks of 236.8, 191.8, and 198.6 for ASD, TD, and SZ, respectively. Thus, ASD had more scans repaired than TD or SZ, but the overall mean number of repaired scans was small, even for ASD (10.4).
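The thresholds and percentages above are simple ratios (5% of 658 images is 32.9, hence the 33-image cutoff; 183/658 ≈ 27.8%). A sketch of such a per-run summary and the Kruskal-Wallis comparison, using SciPy's `scipy.stats.kruskal` and `scipy.stats.rankdata`, is below. It counts runs (not participants) above the cutoff, `repair_summary` is a hypothetical name, and the counts in the usage line are made up for illustration.

```python
import numpy as np
from scipy.stats import kruskal, rankdata

def repair_summary(counts_by_group, n_images=658, frac=0.05):
    """Summarize repaired-volume counts per run for each group.

    counts_by_group: dict mapping group name -> repaired counts (one per run).
    Returns per-group summaries, the Kruskal-Wallis H and P, and mean ranks.
    """
    summary = {}
    for name, counts in counts_by_group.items():
        c = np.asarray(counts)
        summary[name] = {
            "n_runs_over": int(np.sum(c > frac * n_images)),  # runs with >5% repaired
            "max_pct": 100.0 * c.max() / n_images,             # worst run, in percent
        }
    samples = [np.asarray(c) for c in counts_by_group.values()]
    h, p = kruskal(*samples)
    ranks = rankdata(np.concatenate(samples))                  # pooled ranks, ties averaged
    mean_ranks, start = {}, 0
    for name, c in zip(counts_by_group, samples):
        mean_ranks[name] = ranks[start:start + c.size].mean()
        start += c.size
    return summary, h, p, mean_ranks

# Illustrative counts only
summary, h, p, mean_ranks = repair_summary(
    {"ASD": [183, 0, 1, 2], "SZ": [55, 3, 4, 5], "TD": [69, 6, 7, 8]})
```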

We further reduced the effect of head motion by including each participant’s mean root-mean-square framewise displacement (mean FDRMS) as a second-level (group) covariate in all statistical analyses. For each of the three social–emotional video runs, we computed a single mean FDRMS value from the six realignment parameters of that run, assuming a head radius of 65 mm for all participants. We further note that the group information guided ICA (GIG-ICA) procedure we used (described below) has been shown to be highly effective in reducing head motion effects on the data, outperforming ICA-FIX (Du, Allen, et al. 2016a). In a separate study, ICA-based methods (e.g., ICA-AROMA, ICA-FIX) were among the most effective methods for reducing motion artifacts in resting-state fMRI data (Parkes et al. 2018).
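The exact FDRMS formula is not spelled out here, so the sketch below adopts one plausible reading as an assumption: convert the three rotations (radians) to millimeters of arc on a 65 mm sphere, take frame-to-frame differences of the six parameters, compute their root mean square per frame, and average over frames. The column order follows SPM's `rp_*.txt` convention (three translations in mm, then three rotations in radians); `mean_fd_rms` is a hypothetical name.

```python
import numpy as np

def mean_fd_rms(realign_params, head_radius_mm=65.0):
    """Mean framewise displacement (RMS over the six parameters) for one run.

    Assumed definition, for illustration only.
    realign_params: (n_volumes, 6) array; columns 0-2 translations (mm),
    columns 3-5 rotations (radians).
    """
    p = np.array(realign_params, dtype=float)   # copy so the input is untouched
    p[:, 3:] *= head_radius_mm                  # rotation -> arc length in mm
    d = np.diff(p, axis=0)                      # frame-to-frame change
    fd_rms = np.sqrt(np.mean(d ** 2, axis=1))   # RMS across the six parameters
    return fd_rms.mean()
```

Other published FD definitions (e.g., summed absolute differences) would give different absolute values, so values are only comparable within one definition.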

Group Independent Component Analysis

We performed group spatial ICA (sICA) using the Group ICA of fMRI Toolbox (GIFT, https://trendscenter.org/software/gift/, RRID:SCR_001953). Group sICA identifies independent component networks (ICNs), that is, temporally coherent networks, through estimation of maximally independent spatial sources from the linearly mixed fMRI signals. Each independent component has an associated time course (TC) and a spatial map (SM).

We chose an ICA model order of 100 components (Rashid et al. 2018). Using GIFT, we first performed a subject-specific data reduction step using principal component analysis (PCA) to reduce the fMRI data (658 time points) into 150 directions capturing maximal variability. The subject-reduced data were then concatenated across time and a second group data PCA step was performed to further reduce this matrix into 100 components along directions of maximal group variability. After obtaining an initial set of 100 independent components from the group PCA reduced matrix using the Infomax ICA algorithm (Bell and Sejnowski 1995), we then repeated the algorithm 15 times in ICASSO (http://www.cis.hut.fi/projects/ica/icasso) and selected the most representative run (Du et al. 2014) to generate a stable final set of 100 group-level components.
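The two-stage data reduction can be sketched with NumPy's SVD, assuming each subject's data form a (time points × voxels) matrix. This is a simplified illustration (GIFT offers several PCA variants and normalization options); `pca_reduce` and `group_reduce` are hypothetical, and the Infomax/ICASSO step is not shown.

```python
import numpy as np

def pca_reduce(X, n_comp, center=True):
    """Project X (rows = time points or stacked components, cols = voxels)
    onto its top n_comp principal directions along the row dimension.
    Returns an (n_comp, n_voxels) matrix."""
    X = np.asarray(X, dtype=float)
    if center:
        X = X - X.mean(axis=0)                 # remove each voxel's temporal mean
    U, S, Vt = np.linalg.svd(X, full_matrices=False)
    return S[:n_comp, None] * Vt[:n_comp]      # equals U[:, :n_comp].T @ X

def group_reduce(subject_data, n_subject=150, n_group=100):
    reduced = [pca_reduce(X, n_subject) for X in subject_data]   # stage 1: per subject
    stacked = np.vstack(reduced)                                 # concatenate subjects
    return pca_reduce(stacked, n_group, center=False)            # stage 2: group PCA
```

With the study's settings, each subject's 658 time points would be reduced to 150 rows before stacking, and the stacked matrix to 100 rows, which then serve as the input to group ICA.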

Next, using the group-level component maps as references, we applied GIG-ICA to back-reconstruct individual subject components using spatially constrained ICA (Du and Fan 2013; Du, Fryer, et al. 2018a). Back-reconstruction for each subject (n = 139) and run (n = 3) yielded 139 subjects × 3 runs × 100 components = 41 700 component SMs, each with a corresponding TC. Subject- and run-specific SMs and TCs were converted to z-scores. Compared with traditional single-subject ICA back-reconstruction methods, GIG-ICA optimizes the statistical independence of single-subject components (Du, Fu, et al. 2018b) and improves artifact suppression (Du, Allen, et al. 2016a).

We determined that 51 of the 100 extracted components were BOLD-related networks (i.e., not physiological artifacts). We identified BOLD-related ICNs using the fractional amplitude of low-frequency fluctuation (fALFF; Zou et al. 2008), because noise (nonsignal) components typically exhibit low fALFF values (Allen et al. 2011). The final decision to include or exclude each component was based on visual inspection of the mean component SMs: component SMs that matched known resting-state networks (e.g., default mode network, visual network) were included, and those that mostly overlapped white matter or cerebrospinal fluid were excluded. Supplementary Table 1 lists the ICNs with their locations, peak MNI coordinates, and functional domains. We determined functional domains based on ICN spatial overlap with seven established resting-state networks: default mode (DMN), salience (SAL, a subnetwork of the cognitive-control network), central executive (CEN, a subnetwork of the cognitive-control network), visual (VIS), sensorimotor (SM), auditory (AUD), and subcortical (SBC) networks (Du et al. 2020). We added the amygdala (AMYG) as an eighth functional domain, although it typically belongs to the SBC domain.
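fALFF is the ratio of spectral amplitude in a low-frequency band to the amplitude summed over the whole detectable frequency range. The sketch below assumes the 0.01–0.08 Hz band commonly used following Zou et al. (2008) and this study's TR of 0.475 s; `falff` is a hypothetical helper, not the GIFT implementation.

```python
import numpy as np

def falff(tc, tr, band=(0.01, 0.08)):
    """Fractional ALFF of one time course sampled every `tr` seconds."""
    x = np.asarray(tc, dtype=float)
    x = x - x.mean()                                 # drop the DC component
    amp = np.abs(np.fft.rfft(x))                     # one-sided amplitude spectrum
    freqs = np.fft.rfftfreq(x.size, d=tr)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return amp[in_band].sum() / amp[1:].sum()        # exclude the 0 Hz bin
```

A component dominated by slow BOLD-like fluctuations gives a value near 1, whereas one dominated by high-frequency (e.g., physiological) noise gives a value near 0.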

Dynamic Functional Network Connectivity (dFNC)

Dynamic FNC measures time-varying patterns of FNC over the course of an fMRI run. In the dFNC method, ICN time courses are partitioned into overlapping segments using a tapered sliding window (Allen et al. 2014). Before dFNC analysis, however, we isolated the portion of each ICN time course corresponding to video content. For all 21 267 (= 417 runs × 51 ICNs) subject-level time courses, we retained only time points from 45 to 228 s (386 TRs, or 183 s, the duration of the shortest video) for dFNC analysis. We analyzed only the video portion of each run because, in this study, we were interested only in dynamic FNC responses to emotion processing. The portions of the run before and after the video were resting-state periods (baseline fixation), which will be used in a separate study to examine differences in dFNC between resting-state and emotion-processing periods. Therefore, the portions of run time courses excluded from dFNC analysis were the baseline fixation periods preceding (45 s) and following (54–85 s) each video, and any portion of a video after its first 183 s (0–31 s of video remaining). After cropping, we further prepared subject-specific ICN time courses using the dFNC toolbox by detrending, despiking, and low-pass filtering (0.15 Hz cutoff). Despiking used AFNI’s 3dDespike algorithm, which replaces “spikes” with values obtained from a third-order spline fit to neighboring clean portions of the data.
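The cropping arithmetic and the detrend/low-pass steps can be sketched with SciPy, assuming (time points × ICNs) arrays. At TR = 0.475 s, 45 s corresponds to about 95 volumes and 386 volumes span about 183 s. The 5th-order Butterworth filter applied forward and backward with `scipy.signal.filtfilt` is an assumption, despiking (AFNI 3dDespike) is omitted, and `crop_video` and `detrend_lowpass` are hypothetical names.

```python
import numpy as np
from scipy.signal import butter, detrend, filtfilt

TR = 0.475  # seconds

def crop_video(tcs, start_s=45.0, n_tr=386, tr=TR):
    """Keep only the first n_tr volumes after the initial fixation period."""
    start = int(round(start_s / tr))   # 45 / 0.475 = 94.7 -> volume index 95
    return np.asarray(tcs)[start:start + n_tr]

def detrend_lowpass(tcs, cutoff_hz=0.15, order=5, tr=TR):
    """Remove linear trends, then zero-phase low-pass filter each column."""
    x = detrend(np.asarray(tcs, dtype=float), axis=0)
    nyquist = 0.5 / tr                 # about 1.05 Hz at TR = 0.475 s
    b, a = butter(order, cutoff_hz / nyquist, btype="low")
    return filtfilt(b, a, x, axis=0)
```

Zero-phase filtering avoids shifting the ICN time courses in time, which matters when correlations are later computed within short windows.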

After ICN time course preparation, we set the sliding window size to 70 TRs (33.25 s), with a step size of 1 TR and a Gaussian taper (σ = 10 TRs). This yielded 316 (= 386 − 70) windows for each subject and run. In each window, we computed FNC as the pairwise correlation between windowed ICN time courses, yielding 1275 [= 51 × (51 − 1)/2] unique ICN pairs per window, which can be rearranged into a symmetric FNC matrix. This resulted in 131 772 (= 417 subject-runs × 316 windows) windowed FNC matrices in total. Because each window contains relatively few time points, covariance matrices were estimated with the graphical LASSO (Friedman et al. 2008), which places an L1-norm penalty on the precision (inverse covariance) matrix to enforce sparsity. We Fisher-transformed all dFNC matrices to z-scores to stabilize variance before statistical analysis.
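A simplified sliding-window sketch follows. It builds a 70-TR rectangular window convolved with a Gaussian (σ = 10 TRs), computes a weighted Pearson correlation in each window, keeps the 1275 unique pairs, and applies the Fisher transform. Two assumptions to flag: the taper is confined to the 70 samples of each window, and a plain weighted correlation stands in for the graphical-LASSO estimate described above (scikit-learn's `sklearn.covariance.GraphicalLasso` is one way to add that regularization). Function names are hypothetical.

```python
import numpy as np

def tapered_window(win_len=70, sigma=10):
    """Rectangle of win_len samples convolved with a Gaussian of width sigma."""
    x = np.arange(-3 * sigma, 3 * sigma + 1)
    gauss = np.exp(-0.5 * (x / sigma) ** 2)
    # mode="same" keeps win_len samples because the kernel (6*sigma+1) is shorter
    w = np.convolve(np.ones(win_len), gauss / gauss.sum(), mode="same")
    return w / w.max()

def windowed_fnc(tcs, win_len=70, sigma=10):
    """Return an (n_windows, n_pairs) array of Fisher-z window correlations."""
    tcs = np.asarray(tcs, dtype=float)
    n_t, n_icn = tcs.shape
    w = tapered_window(win_len, sigma)[:, None]
    iu = np.triu_indices(n_icn, k=1)
    out = np.empty((n_t - win_len, iu[0].size))       # 386 - 70 = 316 windows
    for s in range(n_t - win_len):                     # step size of 1 TR
        seg = tcs[s:s + win_len]
        d = seg - (w * seg).sum(axis=0) / w.sum()      # remove weighted mean
        cov = (w * d).T @ d / w.sum()                  # weighted covariance
        sd = np.sqrt(np.diag(cov))
        r = cov / np.outer(sd, sd)
        out[s] = np.arctanh(np.clip(r[iu], -0.999999, 0.999999))  # Fisher z
    return out
```

For a (386 × 51) input this returns a (316 × 1275) array, matching the window and pair counts given above.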

Next, we applied k-means clustering (Lloyd 1982) with the L1 (Manhattan) distance to the windowed FNC matrices to identify recurring FNC states during each fMRI run. Applying this algorithm to all participants’ dFNC data, and using the elbow criterion on a cluster validity index (the ratio of within-cluster to between-cluster distances), we estimated the number of states to be k = 4. The four resulting dFNC states define four distinct connectivity patterns that participants can enter during a run.
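A compact k-means sketch with the L1 (city-block) distance follows. As with MATLAB's `kmeans` using the `'cityblock'` distance, centroids are updated as component-wise medians. The farthest-point initialization, the single run without replicates, and all function names are assumptions for illustration. The within/between ratio is one simple version of the validity index used for the elbow criterion, and `weakest_state` picks the centroid with the smallest mean absolute connectivity, i.e., the most weakly connected state.

```python
import numpy as np

def l1_dist(X, C):
    """City-block distance from each row of X to each row of C.
    Broadcasts fully; chunk X for data the size of this study's."""
    return np.abs(X[:, None, :] - C[None, :, :]).sum(axis=2)

def kmeans_l1(X, k, n_iter=100):
    X = np.asarray(X, dtype=float)
    centers = [X[0]]                               # farthest-point initialization
    for _ in range(1, k):
        d = l1_dist(X, np.array(centers)).min(axis=1)
        centers.append(X[d.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = l1_dist(X, centers).argmin(axis=1)
        new = np.array([np.median(X[labels == j], axis=0) if np.any(labels == j)
                        else centers[j] for j in range(k)])   # median update for L1
        if np.allclose(new, centers):
            break
        centers = new
    return labels, centers

def within_between_ratio(X, labels, centers):
    within = np.abs(X - centers[labels]).sum(axis=1).mean()
    between = l1_dist(centers, centers)[np.triu_indices(len(centers), k=1)].mean()
    return within / between

def weakest_state(centers):
    return int(np.abs(centers).mean(axis=1).argmin())
```

Computing `within_between_ratio` for a range of k values and looking for the bend in that curve is the elbow procedure described above.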

Several dS measures characterize the k = 4 dFNC states for a given fMRI run including 1) mean dwell time (DT), which is the average time that a participant spends in a given state (the elapsed time from entering to exiting that state); 2) fractional time (FT), which is the fraction (0.0–1.0) of the total time that a subject spends in a given state (equal to DT times the number of times a state is entered during a run, divided by the run duration); 3) number of transitions (NT), which is the number of transitions between states; and 4) number of states (NS), which is the total number of states entered (1–4).
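Given the sequence of state labels assigned to a run's sliding windows, the four dS measures can be computed as below. This is a sketch that assumes labels 1..k and measures time in windows (with the 1-TR step, multiplying DT by the 0.475 s TR expresses it in seconds); assigning DT = 0 to states never entered is a convention assumed here. `state_measures` is a hypothetical name.

```python
import numpy as np

def state_measures(labels, k=4):
    """Fractional time, mean dwell time, number of transitions, number of states.

    labels: sequence of state labels (1..k), one per sliding window.
    """
    labels = np.asarray(labels)
    change = np.flatnonzero(np.diff(labels) != 0) + 1   # where a new visit starts
    starts = np.r_[0, change]
    ends = np.r_[change, labels.size]
    visit_state = labels[starts]
    visit_len = ends - starts
    states = np.arange(1, k + 1)
    ft = np.array([np.mean(labels == s) for s in states])
    dt = np.array([visit_len[visit_state == s].mean() if np.any(visit_state == s)
                   else 0.0 for s in states])
    nt = int(change.size)
    ns = int(np.unique(labels).size)
    return ft, dt, nt, ns

# State 1 is visited twice (2 and 1 windows), state 2 once (3 windows):
# FT = 0.5, 0.5, 0, 0; DT = 1.5, 3, 0, 0; NT = 2; NS = 2
ft, dt, nt, ns = state_measures([1, 1, 2, 2, 2, 1])
```

The example also illustrates the identity in item 2: for state 1, DT × visits / total = 1.5 × 2 / 6 = 0.5 = FT.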

Statistical Analyses

We performed statistical analyses using either IBM SPSS v.21 (IBM Corp. 2020) or the R programming language (v.4.0.2; R Core Team 2020). We included age, estimated full-scale IQ, gender, and mean framewise head displacement (root mean square; FDRMS) as four covariates of no interest to control for group differences in these variables.

We first examined only the TD group to establish which of the four dynamic connectivity states was associated with which emotion (happy, sad, or neutral), using DT or FT as an “engagement” metric (i.e., time spent in a state indicates engagement). For this analysis, we used univariate general linear models with DT or FT for each of the four states as the dependent variable, “emotion” (three levels) as the independent variable, and the four covariates of no interest. In the TD group, we also tested the state-independent dS measures NT and NS for association with emotion.

For between-group statistics at a given “emotion” level (happy or sad), with mean dwell time (DT) or fractional time (FT) as the dependent variable, we performed a mixed model as follows (Eq. (1)):

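$$
\mathrm{dS}_{ij} = \beta_0 + \beta_1\,\mathrm{Group}_i + \beta_2\,\mathrm{State}_j + \beta_3\,(\mathrm{Group}_i \times \mathrm{State}_j) + \beta_4\,\mathrm{Age}_i + \beta_5\,\mathrm{IQ}_i + \beta_6\,\mathrm{Gender}_i + \beta_7\,\mathrm{FD}_{\mathrm{RMS},i} + u_i + \varepsilon_{ij} \qquad (1)
$$

where $\mathrm{dS}_{ij}$ is the DT or FT of participant $i$ in state $j$, $u_i$ is a participant-specific random intercept, and $\varepsilon_{ij}$ is the residual error,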

with “group” as a between-subject variable (three levels: ASD, SZ, TD) and “state” as a within-subject variable (at most two levels, e.g., States 3 and 4). We include at most two state levels because FT always sums to 1 across the four states in any single run; including all states would make the state levels linearly dependent, leading to statistical instability and inaccurate results. For between-group analyses at a single emotion and state, we performed an ANCOVA with group as the only independent variable, plus the four covariates of no interest.

Effect sizes were estimated using Cohen’s d (post hoc tests only). Because there is no universally accepted method for calculating effect sizes for mixed models, for both the standard ANCOVA and the mixed models we report Cohen’s d calculated both from the raw data and from the estimated marginal means.
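For reference, Cohen's d for two groups is the difference in means divided by the pooled standard deviation; a minimal sketch with a hypothetical helper follows. For the marginal-means variant, one option (assumed here, not confirmed by the text) is to substitute the estimated marginal means for the raw means in the numerator.

```python
import numpy as np

def cohens_d(a, b):
    """Cohen's d using the pooled (ddof=1) standard deviation."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    na, nb = a.size, b.size
    pooled_var = ((na - 1) * a.var(ddof=1) + (nb - 1) * b.var(ddof=1)) / (na + nb - 2)
    return (a.mean() - b.mean()) / np.sqrt(pooled_var)

print(cohens_d([1, 2, 3], [2, 3, 4]))  # -1.0
```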

Relationship of dS Measures with Symptoms

We examined the relationship of dS measures with BLERT, RMET, and BVAQ scores in all three groups, and with ADOS and PANSS symptom severity scores in ASD and SZ only. We used a mixed model with the dS measure as the dependent variable; emotion (three levels), score (continuous), and the emotion-by-score interaction as independent variables; and the four covariates of no interest. Post hoc comparisons for significant main or interaction effects used the least significant difference (LSD) method.

Network Modularity Analysis

Although we assigned ICNs to eight functional domains according to well-established resting-state network configurations (Shirer et al. 2012), dFNC analysis consistently shows that whole-brain FNC reconfigures substantially during an fMRI run, as reflected in the distinct states identified by k-means clustering. To assess the modularity of this reconfiguration in each dFNC state, we applied the Louvain algorithm (Blondel et al. 2008) to the mean dFNC state matrices (averaged over all 417 runs) to form “modules” (groups of ICNs with similar whole-brain dynamic FC configurations), which are rearrangements of the dFNC matrices originally sorted by functional domain (as shown in Fig. 2). We implemented the Louvain algorithm (community_louvain.m; http://www.brain-connectivity-toolbox.net) in MATLAB 2018b, using the default gamma (= 1) and asymmetric treatment of negative weights (“negative_asym”; Rubinov and Sporns 2011). This data-driven algorithm searches for the community structure that maximizes modularity, subdividing a dFNC matrix into nonoverlapping groups of nodes such that connection strength within groups is maximized and connection strength between groups is minimized. Because the output of the Louvain algorithm depends on initial conditions (e.g., the first derived modularity is used as an initial condition) and on the random order in which nodes are visited, we ran the algorithm 10 000 times on each of the four state dFNC matrices to find the most common (i.e., most stable) modular arrangement for each state. Supplementary Figure 2 (Supplementary Material, Supplement 1) shows the dFNC state matrices of Figure 2 rearranged (using the same data) according to the most stable modular configurations detected by the Louvain algorithm, with color coding indicating the original functional domain of each ICN.
Although the arrangements shown in Supplementary Figure 2 are the most stable, modularity stability differed across dFNC states. These final arrangements occurred in 67.8%, 100%, 95.1%, and 30.8% of the 10 000 runs for the dFNC matrices of State-1, -2, -3, and -4, respectively. Thus, State-2 and State-3 had very stable modularity arrangements, whereas State-4 (the most weakly connected state) had the least stable arrangement. However, the alternate arrangements did not differ qualitatively from the most stable ones.
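The stability percentages above correspond to how often the most common partition recurs across repeated Louvain runs. Because module labels are arbitrary (the same partition can be returned as [1, 1, 2] or [2, 2, 1]), partitions have to be made comparable before counting. A minimal sketch, assuming modules are relabeled in order of first appearance (the hypothetical helpers `canonical` and `most_stable_partition` are not from the original analysis code):

```python
from collections import Counter

def canonical(labels):
    """Relabel modules by order of first appearance so that equivalent
    partitions (e.g. [2, 2, 1] and [1, 1, 0]) become identical tuples."""
    mapping = {}
    out = []
    for lab in labels:
        if lab not in mapping:
            mapping[lab] = len(mapping)
        out.append(mapping[lab])
    return tuple(out)

def most_stable_partition(partitions):
    """Return the modal partition and the fraction of runs that produced it."""
    counts = Counter(canonical(p) for p in partitions)
    best, n = counts.most_common(1)[0]
    return best, n / len(partitions)
```

Applied to 10 000 label vectors for one state matrix, the returned fraction plays the role of the 67.8%, 100%, 95.1%, and 30.8% values reported for States 1 to 4.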

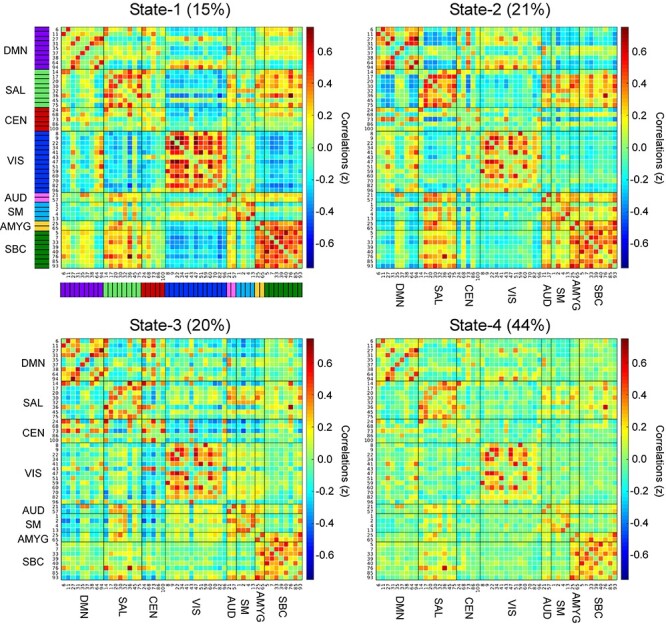

Figure 2.

Correlation matrices of the four dynamic FNC state clusters showing ICN pairwise correlation values (z-scores). The value at the top of each panel is the percentage of the total number of windowed FNC matrices assigned to that state over all participants and runs. Functional domains corresponding to each ICN are indicated by the color bar immediately to the left of ICN numbers in the upper left panel (the color coding is the same for all panels, and it is the same color coding used in Supplementary Fig. 2). Abbreviations: DMN, default mode network; SAL, salience network; CEN, central executive network; VIS, visual network; AUD, auditory network; SM, sensorimotor network; AMYG, amygdala; SBC, subcortical network.

Results

Intrinsic Connectivity Networks

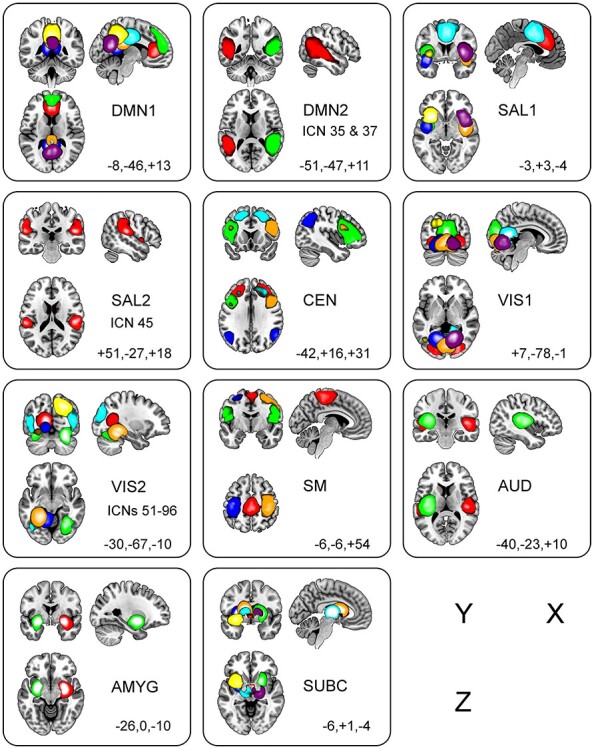

Figure 1 depicts the 51 ICNs derived from group ICA, overlaid on an MNI template brain and arranged by functional domain (see Materials and Methods). Supplementary Table 1 lists these 51 ICNs and their peak MNI coordinates.

Figure 1.

Depiction of the 51 ICNs, arranged by functional domain. Functional domains are DMN, default mode network; SAL, salience network; CEN, central executive network; VIS, visual network; AUD, auditory network; SM, sensorimotor network; AMYG, amygdala; SBC, subcortical network. The DMN, SAL, and VIS domains are split into two parts for clarity (suffixed with the numbers 1 and 2). Colors, ordered according to ascending ICN numbers shown in each panel, are red, green, blue, orange, cyan, yellow, and violet (t-value threshold is +50.0 for all panels). MNI coordinates of sagittal (X), coronal (Y), and axial (Z) slices are provided.

Participant Characteristics

Groups differed on age, estimated IQ, gender, and mean framewise displacement (Table 1). All analyses included these measures as covariates.

The three diagnostic groups differed on the ADOS, RMET, BLERT, and BVAQ (Verbalizing, Fantasizing, and Identifying subscores only) assessment and symptom severity scores (see Table 1 for a statistical summary). Brief descriptions of all diagnostic and social cognition tests are provided in Supplementary Material, Supplement 2. For all measures except the ADOS and BVAQ Fantasizing, post hoc analyses showed differences between TD and each patient group but not between ASD and SZ. For the ADOS, all three groups differed from each other (ASD > SZ > TD), and for BVAQ Fantasizing, the only significant difference was between SZ and ASD. Lastly, for all three PANSS subscores (ASD and SZ only), SZ had significantly higher scores than ASD. Importantly, we found no group differences in self-reported emotional valence scores for the three emotion runs, suggesting that all three groups engaged with the task and perceived its emotional content similarly.

Dynamic Functional Network Connectivity

The correlation matrices for the four dFNC states derived from k-means clustering are shown in Figure 2, with larger magnitude z-scores indicating greater correlation (both positive in warm colors and negative in cold colors) between ICN pairs. State-1 and State-4 are characterized by the largest and smallest mean absolute value z-scores (0.1887 ± 0.1374 and 0.1000 ± 0.0986), respectively, whereas State-2 and State-3 show z-scores of intermediate mean absolute value (0.1729 ± 0.1273 and 0.1564 ± 0.1264, respectively). All four values are significantly different from one another (State-1 vs. -2, -3, and -4, P = 0.007, <0.001 and <0.001; State-2 vs. -3 and -4, P = 0.004 and <0.001 and State-3 vs. -4, P < 0.001).
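The mean absolute z-scores above summarize each state matrix in a single number. A minimal NumPy sketch, assuming the summary is taken over the unique ICN pairs (upper triangle, diagonal excluded; 1275 pairs for 51 ICNs) and that ± denotes the sample standard deviation, neither of which the text specifies; `mean_abs_connectivity` is a hypothetical name:

```python
import numpy as np

def mean_abs_connectivity(state_matrix):
    """Mean and SD of |z| over unique ICN pairs of a symmetric state matrix."""
    z = np.asarray(state_matrix, dtype=float)
    iu = np.triu_indices_from(z, k=1)     # upper triangle, diagonal excluded
    vals = np.abs(z[iu])                  # magnitude: positive and negative alike
    return vals.mean(), vals.std(ddof=1)  # sample SD (assumption)
```

Taking the absolute value before averaging means that strong negative and strong positive correlations both count as strong connectivity, which is why State-4 can be "weakly connected" even if it contains some negative pairs.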

Across groups and emotion runs, participants spent most of their time (44%) in State-4 (the least connected state), 15% in State-1, 21% in State-2, and 20% in State-3. An analysis of state engagement over all three emotion videos showed that State-1 was entered by 41 ASD, 49 TD, and 38 SZ participants; State-2 by 42 ASD, 51 TD, and 41 SZ; State-3 by 39 ASD, 52 TD, and 41 SZ; and State-4 by 42 ASD, 55 TD, and 41 SZ (out of ASD n = 42, TD n = 55, and SZ n = 41). Chi-squared analysis of state engagement (by group and emotion) indicated that significantly greater proportions of ASD and SZ than TD participants entered State-2 during Sad videos (82.5% and 89.5%, respectively, vs. 63.6%; P < 0.05). There were no other significant group differences in state engagement. See Supplementary Material, Supplement 1, for further characterization of each dynamic state by correlation difference (∆z) maps (State-1, -2, and -3 minus State-4; Supplementary Fig. 1) and by Louvain modularity (Supplementary Fig. 2).
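As an illustration of how such a comparison of proportions can be computed, the sketch below assumes a pairwise 2 × 2 Pearson chi-squared test without continuity correction (the text does not state the exact configuration of the test). It uses only the standard library: for 1 degree of freedom, the upper-tail probability is erfc(sqrt(χ²/2)). The function name and the counts in the usage example are hypothetical.

```python
import math

def chi2_two_proportions(entered_a, total_a, entered_b, total_b):
    """Pearson chi-squared test (1 df, no continuity correction) comparing the
    proportion of participants who entered a state in two groups."""
    observed = [[entered_a, total_a - entered_a],
                [entered_b, total_b - entered_b]]
    row_totals = [total_a, total_b]
    col_totals = [entered_a + entered_b,
                  (total_a - entered_a) + (total_b - entered_b)]
    n = total_a + total_b
    if 0 in row_totals or 0 in col_totals:   # degenerate table: no test possible
        return 0.0, 1.0
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # Upper-tail probability of a chi-squared variable with 1 degree of freedom.
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p
```

For hypothetical counts of 30 of 40 versus 20 of 40 participants entering a state, this gives χ² ≈ 5.33 and P ≈ 0.021.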

Dynamic State Measure Statistics for Each Group Independently

To establish a baseline against which the patient groups can be compared, and to confirm that different emotion conditions increase the likelihood of different dFNC patterns, we first present statistics on the dS measures for TD only, focusing on the main effect of emotion as the within-subject factor in one-way repeated measures ANOVAs for each state/DV (three levels: Happy, Neutral, and Sad).

Table 2 shows the dS measure results for TD only, with mean dwell time (DT) and fractional time (FT) (see Materials and Methods) suffixed with the associated state (e.g., DT2 indicates DT for State-2). In summary, for TD in State-2, the main effect of emotion was significant for FT2 (see Table 2), with post hoc tests indicating both Happy > Sad and Neutral > Sad. In State-3, the main effect of emotion was significant for both DT3 and FT3 (Table 2), with post hoc tests for both indicating Happy > Neutral and Happy > Sad. Finally, in State-4, the main effect of emotion was significant for both DT4 and FT4 (Table 2), with post hoc tests for both indicating Sad > Happy and Neutral > Happy.
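For readers less familiar with these dS measures, the sketch below computes them from one run's sequence of window-wise state assignments (e.g., the k-means cluster of each window). It assumes the conventional definitions used in the dFNC literature (e.g., Allen et al. 2014): FT is the proportion of windows assigned to a state, DT is the mean length of consecutive runs of windows in that state, NS is the number of distinct states entered, and NT is the number of switches between states. Lengths are in windows, which equal TRs only if the window slides by one TR (an assumption). The name `state_measures` is hypothetical, and returning `None` for DT when a state is never entered (i.e., treating it as missing) is also an assumption.

```python
from itertools import groupby

def state_measures(seq, state):
    """dS measures for one run from its window-wise state labels."""
    # Collapse the sequence into consecutive visits: [(state, length), ...]
    visits = [(label, len(list(group))) for label, group in groupby(seq)]
    lengths = [n for label, n in visits if label == state]
    return {
        "FT": sum(lengths) / len(seq),                           # fractional time
        "DT": sum(lengths) / len(lengths) if lengths else None,  # mean dwell time
        "NS": len(set(seq)),                                     # number of states entered
        "NT": len(visits) - 1,                                   # number of transitions
    }
```

For example, `state_measures([1, 1, 3, 3, 3, 1, 4, 4], state=1)` returns FT = 0.375, DT = 1.5, NS = 3, and NT = 3.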

Table 2.

Clustering measure statistics for the TD group (n = 55) only

| Measure | Video emotion | Estimated mean (SE) | 95% CI | Main effect of emotion | Post hocᵃ [Cohen's d: raw/emm] |
|---|---|---|---|---|---|
| DT1 | Happy | 61.0 (13.3) | 33.5–88.5 | F(2,26) = 1.405 | NA |
| | Neutral | 45.1 (7.8) | 29.2–61.0 | P = 0.263 | |
| | Sad | 39.6 (3.7) | 32.0–47.1 | | |
| DT2 | Happy | 55.6 (8.5) | 38.6–72.7 | F(2,40) = 3.075 | NA |
| | Neutral | 54.2 (4.4) | 45.3–63.1 | P = 0.057 | |
| | Sad | 43.3 (3.3) | 36.5–50.0 | | |
| DT3 | Happy | 84.5 (11.6) | 61.1–108.0 | F(2,49) = 6.345 | Happy > Neutral (t(42) = 3.453; P = 0.001) [0.666/0.675] |
| | Neutral | 45.6 (4.9) | 35.7–55.4 | P = 0.004 | Happy > Sad (t(46) = 2.211; P = 0.032) [0.428/0.441] |
| | Sad | 57.8 (6.2) | 45.3–70.3 | | |
| DT4 | Happy | 55.4 (4.1) | 47.1–63.7 | F(2,53) = 6.701 | Sad > Happy (t(54) = 2.529; P = 0.014) [0.457/0.446] |
| | Neutral | 78.2 (9.3) | 59.6–96.9 | P = 0.003 | Neutral > Happy (t(51) = 2.428; P = 0.019) [0.441/0.444] |
| | Sad | 77.7 (8.7) | 60.1–95.2 | | |
| FT1 | Happy | 0.122 (0.032) | 0.059–0.185 | F(2,54) = 0.878 | NA |
| | Neutral | 0.157 (0.032) | 0.093–0.220 | P = 0.422 | |
| | Sad | 0.143 (0.024) | 0.094–0.191 | | |
| FT2 | Happy | 0.210 (0.030) | 0.151–0.270 | F(2,54) = 5.898 | Happy > Sad (t(54) = 2.617; P = 0.011) [0.410/0.401] |
| | Neutral | 0.225 (0.027) | 0.170–0.280 | P = 0.005 | Neutral > Sad (t(53) = 2.975; P = 0.004) [0.512/0.518] |
| | Sad | 0.134 (0.021) | 0.092–0.175 | | |
| FT3 | Happy | 0.291 (0.035) | 0.220–0.362 | F(2,54) = 5.331 | Happy > Neutral (t(54) = 3.317; P = 0.002) [0.583/0.598] |
| | Neutral | 0.164 (0.023) | 0.118–0.211 | P = 0.008 | Happy > Sad (t(54) = 2.146; P = 0.036) [0.330/0.328] |
| | Sad | 0.213 (0.028) | 0.156–0.270 | | |
| FT4 | Happy | 0.377 (0.033) | 0.310–0.443 | F(2,53) = 7.583 | Sad > Happy (t(54) = 3.883; P < 0.001) [0.535/0.518] |
| | Neutral | 0.454 (0.035) | 0.383–0.524 | P = 0.001 | Neutral > Happy (t(53) = 2.069; P = 0.043) [0.370/0.345] |
| | Sad | 0.511 (0.037) | 0.437–0.585 | | |
| NS | Happy | 3.0 (0.1) | 2.7–3.2 | F(2,53) = 0.846 | NA |
| | Neutral | 3.1 (0.1) | 2.9–3.3 | P = 0.435 | |
| | Sad | 3.0 (0.1) | 2.8–3.2 | | |
| NT | Happy | 4.4 (0.3) | 3.8–5.0 | F(2,54) = 0.334 | NA |
| | Neutral | 4.7 (0.3) | 4.1–5.3 | P = 0.717 | |
| | Sad | 4.7 (0.3) | 4.1–5.2 | | |

Notes: emm: effect size from estimated marginal means.

ᵃPost hoc correction used is least significant difference (LSD).

Next, we repeated the above analyses for ASD and SZ groups separately. For ASD, there was no significant main effect of emotion for any dS measure. For SZ, the main effect of emotion was significant only for FT3 (F(2,39) = 3.860; P = 0.030). Post hoc analyses for SZ showed that for FT3, Sad > Neutral (t(40) = 2.776, P = 0.008).

In summary, the analysis of TD shows that State-2 is less associated with the emotion "Sad" for FT (i.e., State-2 is a "Not-Sad" state), and that State-3 and State-4 are both associated with the emotion "Happy" for both DT and FT, with more time spent in State-3 and less time spent in State-4 during Happy videos. Conversely, ASD showed no main effect of emotion, and in SZ, the only finding was that State-3 was associated with the emotion "Sad."

Group and State Differences: Emotion Happy or Sad

Based on the TD-only results, we here examine more closely the differences between groups and states associated with the same emotion. Because the emotion "Sad" was associated with State-2, and "Happy" was associated with both State-3 and State-4, we examined between-group differences for each of these scenarios, restricting the analysis to one state (State-2 for Sad videos) or two states (State-3 and State-4 for Happy videos, with "state" as a within-subject factor) instead of all four states as described in Materials and Methods.

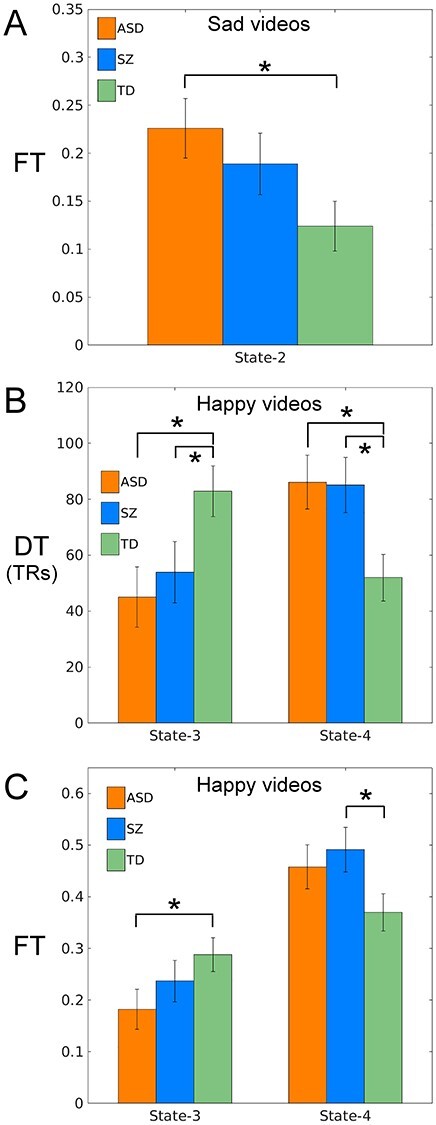

One-way ANCOVAs for DT and FT for the emotion Sad in State-2, with group as the between-subject effect, showed a significant main effect of group for FT only (F(2,126) = 3.290, P = 0.040) (see Fig. 3A and Table 3). Post hoc analyses showed higher FT for ASD than for TD (see Fig. 3A). SZ also showed higher FT than TD, but this difference did not reach significance (P = 0.131). Neither patient group responded to Sad videos by reducing the time spent in State-2.

Figure 3.

Bar plots showing dS measures for groups ASD (orange), TD (green), and SZ (blue) for (A) FT for the emotion Sad and State-2 and (B) both DT and FT for the emotion Happy and State-3 and State-4. Error bars denote standard error, and an asterisk denotes a significant post hoc test (P < 0.05, LSD).

Table 3.

Emotion- and state-specific clustering measure statistics for all three groups (with age, IQ, gender, and FDrms as covariates). For Sad videos, State-2 only (top) and for Happy videos, State-3 and State-4 (bottom)

| Measure | Group: State | Estimated mean (SE) | 95% CI | Main effect | Post hocᵃ [Cohen's d: raw/emm] |
|---|---|---|---|---|---|
| **Sad videos, State-2 only: one-way ANCOVA, main effect of group** | | | | | |
| DT (TRs) | ASD: State-2 | 48.8 (4.8) | 39.2–58.4 | F(2,95) = 1.432; P = 0.244 | NA |
| | TD: State-2 | 39.1 (4.4) | 30.3–47.9 | | |
| | SZ: State-2 | 37.8 (4.8) | 28.3–47.3 | | |
| FT (0–1) | ASD: State-2 | 0.226 (0.031) | 0.165–0.287 | F(2,126) = 3.290; P = 0.040 | ASD > TD, State-2: t(126) = 2.490; P = 0.014 [0.472/0.531] |
| | TD: State-2 | 0.124 (0.026) | 0.073–0.174 | | |
| | SZ: State-2 | 0.189 (0.032) | 0.125–0.253 | | |
| **Happy videos, State-3 and State-4: 3 × 2 mixed model ANOVA, Group-by-State interaction** | | | | | |
| DT (TRs) | ASD: State-3 | 45.1 (10.8) | 23.7–66.5 | F(2,101) = 7.409; P = 0.001 | TD > ASD, State-3: t(106) = 2.661; P = 0.009 [0.562/0.644] |
| | TD: State-3 | 82.9 (9.1) | 65.0–100.9 | | TD > SZ, State-3: t(110) = 1.984; P = 0.050 [0.535/0.488] |
| | SZ: State-3 | 54.0 (11.0) | 32.2–75.8 | | ASD > TD, State-4: t(137) = 2.580; P = 0.011 [0.555/0.563] |
| | ASD: State-4 | 86.1 (9.6) | 66.4–105.7 | | SZ > TD, State-4: t(137) = 2.530; P = 0.013 [0.529/0.548] |
| | TD: State-4 | 52.0 (8.3) | 35.6–68.4 | | *TD > ASD, State-3 > State-4: t(102) = 3.451; P = 0.001* |
| | SZ: State-4 | 85.1 (9.8) | 65.7–104.5 | | *TD > SZ, State-3 > State-4: t(99) = 2.979; P = 0.004* |
| FT (0–1) | ASD: State-3 | 0.182 (0.039) | 0.105–0.259 | F(2,131) = 3.093; P = 0.049 | TD > ASD, State-3: t(141) = 2.050; P = 0.042 [0.433/0.498] |
| | TD: State-3 | 0.288 (0.033) | 0.223–0.353 | | SZ > TD, State-4: t(142) = 2.122; P = 0.036 [0.410/0.455] |
| | SZ: State-3 | 0.237 (0.040) | 0.159–0.315 | | *TD > ASD, State-3 > State-4: t(131) = 2.213; P = 0.029* |
| | ASD: State-4 | 0.458 (0.043) | 0.373–0.542 | | |
| | TD: State-4 | 0.370 (0.036) | 0.299–0.442 | | |
| | SZ: State-4 | 0.491 (0.043) | 0.406–0.577 | | |

Notes: Effect size is Cohen's d, in brackets. emm: effect size from estimated marginal means. No effect sizes were calculated for the custom post hoc hypothesis tests (in italics).

ᵃPost hoc correction used is least significant difference (LSD).

For the emotion Happy in State-3 and State-4, we conducted 3 × 2 mixed model ANOVAs for DT and FT, with group as the between-subject factor and state as the within-subject factor. The group-by-state interaction was significant for both DT (F(2,101) = 7.409, P = 0.001) and FT (F(2,131) = 3.093, P = 0.049) (see Fig. 3B and C). For DT, post hoc analysis showed that during Happy videos, TD had greater DT than both ASD and SZ in State-3, and both ASD and SZ had greater DT than TD in State-4 (see Fig. 3B and Table 3). Additional custom post hoc tests showed that the DT contrast State-3 versus State-4 in Happy videos was greater for TD than for both ASD and SZ. For FT, post hoc analysis showed that during Happy videos, TD had greater FT than ASD in State-3, and SZ had greater FT than TD in State-4 (see Fig. 3C and Table 3). Additional custom post hoc tests showed that the FT contrast State-3 versus State-4 was greater for TD than for ASD. Thus, in the patient groups, viewing Happy videos did not increase the time spent in State-3 as it did in TD participants, and instead led to more time in State-4 than in TD.
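The custom contrasts (State-3 versus State-4, TD versus a patient group) amount to comparing each participant's State-3 minus State-4 difference between two groups. The simplified sketch below assumes a pooled-variance two-sample t-test on these difference scores with no covariates; the reported tests come from the covariate-adjusted mixed model, so their degrees of freedom and values will differ. `interaction_contrast` is a hypothetical name.

```python
import math
from statistics import mean, variance

def interaction_contrast(group_a, group_b):
    """Pooled-variance t statistic comparing (State-3 minus State-4) differences
    between two groups.

    group_a, group_b: lists of (measure_state3, measure_state4) pairs, one per
    participant.
    """
    da = [s3 - s4 for s3, s4 in group_a]      # within-subject differences, group A
    db = [s3 - s4 for s3, s4 in group_b]      # within-subject differences, group B
    na, nb = len(da), len(db)
    pooled = ((na - 1) * variance(da) + (nb - 1) * variance(db)) / (na + nb - 2)
    t = (mean(da) - mean(db)) / math.sqrt(pooled * (1 / na + 1 / nb))
    return t, na + nb - 2                     # t statistic and degrees of freedom
```

A positive t indicates that the first group shifts more toward State-3 (relative to State-4) than the second group, which is the direction reported for TD versus ASD and SZ in Happy videos.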

Relationship of dS Measures with Symptom Severity and Social Cognition Scores

Again, based on the TD-only results described above, we focus on the emotions Happy and Sad and their associated states: State-2 for Sad, and State-3 and State-4 for Happy.

The analysis of dS measures with BLERT scores showed a statistically significant two-way Group-by-BLERT interaction for DT4 in Happy videos only (F(2,118) = 3.836, P = 0.024). Post hoc analysis of this interaction showed that the slope of DT4 versus BLERT was 1) greater for ASD than TD in Happy videos (F(1,118) = 7.611; P = 0.007) and 2) significant overall for the ASD group in Happy videos (F(1,118) = 7.705; P = 0.006).

The analysis of dS measures with BVAQ scores revealed a statistically significant two-way Group-by-BVAQ-Identifying interaction for DT2 (but not FT2) in Sad videos (F(2,91) = 4.244, P = 0.017). Post hoc analysis of this interaction showed that the slope of DT2 versus BVAQ-Identifying was 1) greater for ASD than SZ in Sad videos (F(1,91) = 8.385; P = 0.005) and 2) significant overall for ASD in Sad videos (F(1,91) = 9.119; P = 0.003).
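The Group-by-score interactions above test whether the slope of a dS measure on a score differs between groups. A minimal two-group sketch, assuming ordinary least squares with no covariates or random effects (unlike the covariate-adjusted mixed model used here), shows how the interaction coefficient encodes the slope difference; `group_slopes` is a hypothetical name.

```python
import numpy as np

def group_slopes(y, score, is_asd):
    """Fit y = b0 + b1*score + b2*asd + b3*(score*asd) by ordinary least squares.

    b1 is the slope for the reference group, b1 + b3 is the slope for ASD, and
    b3 (the Group-by-score interaction) is the difference between the slopes.
    """
    y = np.asarray(y, dtype=float)
    score = np.asarray(score, dtype=float)
    asd = np.asarray(is_asd, dtype=float)     # 1 for ASD, 0 for the reference group
    X = np.column_stack([np.ones_like(score), score, asd, score * asd])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return {
        "reference_slope": beta[1],
        "asd_slope": beta[1] + beta[3],
        "slope_difference": beta[3],
    }
```

A significant b3 corresponds to the "slope greater for ASD than TD (or SZ)" finding, while testing b1 + b3 against zero corresponds to the "significant overall for ASD" finding.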

There were no other significant three-way interactions of dS measures with social cognition or symptom severity scores (including RMET, ADOS, and PANSS).

Sensitivity Analysis: Medication Intake and Comorbid Diagnoses

Lastly, we performed a sensitivity analysis to assess the effect of medication intake and comorbid diagnoses of depression and anxiety on our findings from all three group comparisons (per Table 3). Overall, we found no statistically significant effects of either medication intake or comorbid diagnoses on our results. We also examined the differences between ASD and SZ with comorbid diagnoses and found no significant effects. See Supplementary Material, Supplement 4, for details.

Discussion

We investigated time-varying functional network connectivity (FNC) in ASD, SZ, and TD participants while they watched videos of individuals speaking directly to them about happy or sad experiences with associated displays of emotion, with neutral content as a control condition. Applying k-means clustering to windowed FNC matrices, we identified four distinct states of connectivity between ICA-derived intrinsic connectivity networks (ICNs) during video viewing. Time spent in three of these four states (mean dwell time and/or fractional time) varied as a function of the emotional content of the task and differed significantly between diagnostic groups.

Dynamic functional connectivity (dFC) analysis, or its network analog (dFNC), is an emerging approach to delineating neural network architecture that fluctuates over time (Chang and Glover 2010; Sakoglu et al. 2010; Calhoun et al. 2014). Notably, we found group differences in specific dynamic connectivity state measures, demonstrating the value of dFNC analysis for studying complex changes in FC that traditional static FNC analysis cannot capture. While most studies to date have identified dynamic connectivity states during single-run rs-fMRI (Allen et al. 2014; Damaraju et al. 2014), our study examined dFNC states during an emotional task presented over three consecutive fMRI runs. In addition, we compared the control group with two clinical populations that differ clinically yet share similar social cognitive deficits, and we examined the relationship of dS measures with social cognition (symptom) scores. To establish normative neural responses to the emotion task, we first focused on the TD group and found that Happy and Sad videos modulated engagement in three of the four dFNC states (State-3 and State-4 for Happy, State-2 for Sad), consistent with the hypothesis of Wexler (1986) that different emotions are associated with different overall organizations of brain functional systems. Only State-1, the state with the greatest mean magnitude of network pair correlations, was not modulated by video emotional content in TD. The specific findings in State-2 through State-4 for the TD-only analysis, described in detail below, demonstrate that our emotion task successfully elicited emotion-specific neural connectivity patterns.
In the ASD-only analysis, we found no effect of emotion on any dS measure, and in the SZ-only analysis, we found only that time spent in State-3 was associated with negative (sad) emotion. Models directly comparing the three groups demonstrated abnormal dS measures in both ASD and SZ compared with TD, with no differences between the clinical groups. However, significant associations between dS measures and social–emotional cognitive measures were found in the ASD group only. Below we discuss the results for each emotional condition. We then discuss the broader implications of our work for studying whole-brain dynamic connectivity with tasks that probe social and/or cognitive processes, in order to delineate temporal and structural changes in functional circuit architecture in TD individuals as well as in psychiatric disorders.

Happy Videos: States 3 and 4

Our findings for the TD-only analysis suggest that during Happy videos TD participants shift time from State-4, the most weakly connected state, to State-3, a state of intermediate connectivity. Notably, State-3 had a unique matrix structure in which 16 independent networks, located mostly within the so-called triple network of central executive, salience, and default mode functional domains (Menon 2011), had positive functional network connectivity with each other, and negative connectivity between most other network pairs (see Supplementary Fig. 2, State-3 dFNC matrix, module MOD1). In TD, engagement in State-3 (both DT and FT) was greater, and engagement in State-4 (both DT and FT) was lower, during Happy videos than during both Neutral and Sad videos (Table 2).

In direct contrast with TD, neither patient group showed this shift toward State-3 when watching Happy videos. More specifically, ASD and SZ were both characterized by a failure to engage State-3 (compared with TD) during processing of happy emotions. Instead, both patient groups spent more time in State-4, the most weakly connected state.

These findings in State-3 and State-4 during Happy videos agree with most prior work using dynamic FNC to track whole-brain connectivity. Most dFNC studies conducted so far used resting-state fMRI (rs-fMRI) and found that patient groups spent significantly more time in the most weakly connected state and less time in more strongly connected states than controls. This pattern was found in both ASD (de Lacy et al. 2017; Rashid et al. 2018) and SZ (Damaraju et al. 2014; Du, Pearlson, et al. 2016b) compared with TD controls. We previously showed a similar effect during rest in a sample that substantially overlaps with the current one (Rabany et al. 2019; see below for a more detailed discussion). The current study extends these findings by suggesting that both patient groups are less likely to engage the State-3 configuration specifically while processing positive emotions.

Only two relationships between dS measures and social cognitive ability scores were significant, both in the ASD group. During Happy videos, ASD showed a significant positive slope between time spent in State-4 (DT) and BLERT scores. This finding is somewhat counterintuitive: because higher BLERT scores indicate better emotion recognition (i.e., closer to TD scores), we expected time spent in State-4, the most weakly connected state, to decrease as BLERT scores increased.

Sad Videos: State-2

When viewing Sad videos, TD participants spent less time in State-2, a state characterized by increased functional connectivity between the SAL domain and the AUD and SM domains and reduced functional connectivity between the DMN and VIS domains. We therefore consider State-2 associated with the emotion Sad as a "Not-Sad" state, in that TD participants tend to disengage from (i.e., spend significantly less time in) State-2 during Sad videos. This might be related to implicit emotion regulation processes; however, our study was not designed to test this hypothesis. We also cannot determine which of the other three dFNC states (1, 3, and/or 4) absorbed this time, because no other state showed a statistically significant increase in time spent (DT or FT) during Sad videos relative to both Happy and Neutral videos.

Comparing the two patient groups with TD in State-2 during Sad videos, we found that although both ASD and SZ had a higher proportion of participants entering State-2, only ASD spent more time there (FT only) than TD, while SZ did not differ significantly from either TD or ASD. These results suggest some degree of abnormal dynamic state engagement during negative stimuli in both ASD and SZ. However, only individuals with ASD showed a significant failure to disengage from State-2 during Sad videos. Although speculative, this interpretation is supported in part by a prior study of youth with autism in which the DMN failed to deactivate during a cognitive task (Spencer et al. 2012). In the context of negative emotional stimuli, this failure to disengage might be related to deficits in implicit emotion regulation processes, which are known to be impaired in ASD (Mazefsky et al. 2013; Samson et al. 2014). Again, our study was not designed to assess emotion regulation, and future studies will be needed to explore dFNC patterns in relation to emotion regulation measures.

We also found that during Sad videos, ASD had a significant positive slope between time spent in State-2 (DT) and BVAQ Identifying scores. Because low BVAQ Identifying scores indicate better recognition of one's own emotions, longer dwell times in State-2 during Sad videos, consistent with the failure to disengage observed in ASD (vs. TD), were associated with more pronounced alexithymia in ASD participants. The ability to identify one's own emotions has been shown to be a precursor to identifying emotions in others (Goerlich 2018) and is thus crucial to emotion regulation (Cai et al. 2018). Therefore, alexithymia in ASD might explain the abnormal engagement in State-2, a dynamic state from which TD individuals tend to disengage during negative stimuli.

Lastly, we found no effect of emotion on State-1 measures in the TD-only analysis. We can only speculate about why; one possible explanation is that State-1 represents a dynamic connectivity configuration associated with general passive processing of the video and audio material presented during the task. Future studies will be required to determine which brain processes this dFNC state represents.

Whole-Brain Dynamic Functional Architecture during SE Processes

Our study, as well as other studies examining dynamic functional connectivity changes, highlights the importance of examining temporal reconfiguration of functional network connectivity at the whole-brain level instead of between a small number of brain regions.

Findings from such studies lend support to the theory that during task processing the brain switches between distinct whole-brain states of functional connectivity. Underlying mechanisms for whole-brain state functional connectivity switching have been described by flexible hub theory (Cole et al. 2013; Cocuzza et al. 2020) and by Bayesian switching dynamical systems (BSDS) modeling (Taghia et al. 2018). In our study, dynamic state engagement differences between emotional states (in TD) and between patients and controls were also evident at the whole-brain level rather than between a few discrete brain regions and appeared as differences in time spent (mean dwell time, fractional time) in three of four whole-brain dynamic connectivity states. These dynamic states, especially State-2 and State-3, are potentially unique to our task and might reflect reconfigurations allowing for the recognition and processing of positive versus negative emotions. Underlying neural impairments in patient groups might result in either an inability to remain engaged in a particular dynamic state or, conversely, an inability to disengage from that dynamic state, according to specific task demands.

An important goal of our study was to examine similarities and differences between ASD and SZ in emotion processing in a social context. For Happy videos, both patient groups differed significantly from TD, especially in DT for State-3 and State-4, with no differences between the patient groups, suggesting similar impairments in dynamic FNC state reconfiguration. For Sad videos, only ASD differed significantly from TD (in FT) in State-2; however, the lack of significant differences between ASD and SZ and between SZ and TD (with SZ intermediate between ASD and TD, similar to the pattern seen for Happy videos) precludes a definite conclusion regarding negative (sad) emotions. Importantly, only ASD showed a significant association of clinical symptom scores with dS measures (DT), which might indicate distinct neural mechanisms underlying emotion processing deficits, both positive (happy) and negative (sad), in these two patient groups. We cannot rule out that our task stimuli are more sensitive to socio-emotional deficits specific to autism, which might drive this pattern of results. However, the similar valence ratings across groups, and the fact that some of the differential results involved the BLERT, which was validated in SZ populations and has been shown to capture this group's social–emotional deficits (Bryson et al. 1997; Pinkham et al. 2018), suggest that this is unlikely.

We further emphasize the importance of studying dynamic FC during tasks and not only during rs-fMRI, which is far more common in the field. We previously compared rs-fMRI dFNC patterns directly in ASD, SZ, and TD controls in a sample that largely overlaps with the current study's sample (Rabany et al. 2019). Although a direct comparison of rest and task data is beyond the scope of this study, we briefly highlight some specific differences in the results: 1) in the rs-fMRI study, TD entered a greater number of states (NS) than both ASD and SZ, and both TD and ASD had a greater number of transitions (NT) than SZ, whereas in the current study there were no group differences in NS or NT for any emotion video; 2) in the rs-fMRI study, patient groups spent more time (FT) in the most weakly connected state than TD, whereas in the current study patients spent more time in the most weakly connected state only during Happy videos, and only SZ had a greater FT than TD in that state (during Happy videos only); 3) only in the current study did a patient group (ASD) spend significantly more time than TD in a moderately or strongly connected state (our State-2), specifically during Sad videos; 4) in the rs-fMRI study, SZ spent more time than ASD in the most weakly connected state and less time (FT) than ASD in a state of intermediate connectivity, whereas in the current study there were no differences between ASD and SZ in either DT or FT; and 5) the rs-fMRI study found significant associations in the SZ group of ADOS (Total) scores with DT in State-3 (intermediate connectivity) and of PANSS (Total) scores with NT. These findings were not replicated in the current study.
These differences emphasize the need to study dynamic connectivity patterns during tasks probing specific cognitive processes rather than in resting state only to delineate overlaps and differences of brain mechanisms of specific phenotypic phenomena (e.g., cognitive processes and psychiatric symptoms) in different clinical disorders.

Study Limitations

Study limitations include a relatively small sample size. Additionally, groups were not matched on age, estimated full-scale IQ, gender, or mean framewise displacement, although all four measures were included as covariates in all analyses. Furthermore, participants watched different sets of three emotion videos, although these sets were randomized and balanced for order as well as for the actors' gender and ethnicity. We also note that the four dFNC states were determined using a TD cohort that was larger than either the ASD or the SZ cohort (n = 55 vs. 42 and 41, respectively) and that had a higher proportion of females. Regarding unequal group sizes, having more TD than ASD or SZ participants likely skewed the k-means clustering toward dFNC states characteristic of the TD group. This was, however, a desired result, in that it was important to have a normative standard against which to compare the two clinical groups. Regarding gender, although gender was included as a covariate in all analyses, we cannot rule out effects of the greater number of females in the TD group on our results.

In addition, patients were treated with medications, and some had comorbid diagnoses, either of which could potentially affect our results. Notably, sensitivity analyses showed no significant effects of medication (antipsychotics or antidepressants) or comorbid diagnoses (depression or anxiety) on our results, decreasing the likelihood of such confounds. Lastly, our videos portrayed only happy (positive) and sad (negative) emotions; videos portraying other negative emotions could have been presented and might be expected to yield different results.

Response to emotion-evoking stimuli is a complex, dynamic, multicomponent process, and patients with different psychiatric disorders are likely to show abnormalities in different aspects of emotion response. This study is one of the first to provide relevant information on this important topic, but, like most fMRI studies, it assessed brain activity under a limited range of conditions (i.e., only during happy and sad video viewing). Future studies should compare patient groups on a variety of tasks related to emotion response.

Conclusion

In conclusion, our study showed that dynamic changes in network connectivity states are responsive to emotional stimuli in TD controls and that dynamic connectivity measures were abnormal in both patient groups. Furthermore, we found a relationship between dynamic state measures (state mean dwell time and fractional time) and social cognitive measures in ASD only, potentially pointing to a specific underlying neural mechanism. Finally, our study shows the importance of applying dynamic connectivity state analyses to specific tasks rather than to resting state only, given the unique dynamic connectivity states that emerge only during tasks probing specific cognitive processes.

Funding

National Institutes of Health (R01 MH095888, R01 MH119069 to M.A.); the National Alliance for Research in Schizophrenia and Affective Disorders (Young Investigator Award 17525 to S.C.).

Notes

Conflict of Interest: The authors have declared that there are no competing interests in relation to the subject of this study.

Supplementary Material

Contributor Information

Christopher J Hyatt, Olin Neuropsychiatry Research Center, Institute of Living, Hartford, CT 06106, USA.

Bruce E Wexler, Department of Psychiatry, School of Medicine, Yale University, New Haven, CT 06510, USA.

Brian Pittman, Department of Psychiatry, School of Medicine, Yale University, New Haven, CT 06510, USA.

Alycia Nicholson, Olin Neuropsychiatry Research Center, Institute of Living, Hartford, CT 06106, USA.

Godfrey D Pearlson, Olin Neuropsychiatry Research Center, Institute of Living, Hartford, CT 06106, USA; Department of Psychiatry and Neuroscience, School of Medicine, Yale University, New Haven, CT 06510, USA.

Silvia Corbera, Department of Psychiatry, School of Medicine, Yale University, New Haven, CT 06510, USA; Department of Psychological Science, Central Connecticut State University, New Britain, CT 06050, USA.

Morris D Bell, Department of Psychiatry, School of Medicine, Yale University, New Haven, CT 06510, USA; Department of Psychiatry, VA Connecticut Healthcare System West Haven, West Haven, CT 06516, USA.

Kevin Pelphrey, Department of Neurology, University of Virginia, Charlottesville, VA 22903, USA.

Vince D Calhoun, Tri-institutional Center for Translational Research in Neuroimaging and Data Science (TReNDS), Georgia State University, Georgia Institute of Technology, Emory University, Atlanta, GA 30303, USA.

Michal Assaf, Olin Neuropsychiatry Research Center, Institute of Living, Hartford, CT 06106, USA; Department of Psychiatry, School of Medicine, Yale University, New Haven, CT 06510, USA.

References

- Allen EA, Damaraju E, Plis SM, Erhardt EB, Eichele T, Calhoun VD. 2014. Tracking whole-brain connectivity dynamics in the resting state. Cereb Cortex. 24:663–676.

- Allen EA, Erhardt EB, Damaraju E, Gruner W, Segall JM, Silva RF, Havlicek M, Rachakonda S, Fries J, Kalyanam R, et al. 2011. A baseline for the multivariate comparison of resting-state networks. Front Syst Neurosci. 5:2.

- Anticevic A, Repovs G, Barch DM. 2012. Emotion effects on attention, amygdala activation, and functional connectivity in schizophrenia. Schizophr Bull. 38:967–980.

- Assaf M, Jagannathan K, Calhoun VD, Miller L, Stevens MC, Sahl R, O'Boyle JG, Schultz RT, Pearlson GD. 2010. Abnormal functional connectivity of default mode sub-networks in autism spectrum disorder patients. Neuroimage. 53:247–256.

- Barlati S, Minelli A, Ceraso A, Nibbio G, Carvalho Silva R, Deste G, Turrina C, Vita A. 2020. Social cognition in a research domain criteria perspective: a bridge between schizophrenia and autism spectra disorders. Front Psychiatry. 11:806.

- Baron-Cohen S, Wheelwright S, Hill J, Raste Y, Plumb I. 2001. The "reading the mind in the eyes" test revised version: a study with normal adults, and adults with Asperger syndrome or high-functioning autism. J Child Psychol Psychiatry. 42:241–251.

- Bell AJ, Sejnowski TJ. 1995. An information-maximization approach to blind separation and blind deconvolution. Neural Comput. 7:1129–1159.

- Blondel V, Guillaume J-L, Lambiotte R, Lefebvre E. 2008. Fast unfolding of communities in large networks. J Stat Mech. 2008:P10008.

- Bryson G, Bell M, Lysaker P. 1997. Affect recognition in schizophrenia: a function of global impairment or a specific cognitive deficit. Psychiatry Res. 71:105–113.

- Cai RY, Richdale AL, Uljarevic M, Dissanayake C, Samson AC. 2018. Emotion regulation in autism spectrum disorder: where we are and where we need to go. Autism Res. 11:962–978.

- Calhoun VD, Miller R, Pearlson G, Adali T. 2014. The chronnectome: time-varying connectivity networks as the next frontier in fMRI data discovery. Neuron. 84:262–274.

- Chang C, Glover GH. 2010. Time-frequency dynamics of resting-state brain connectivity measured with fMRI. Neuroimage. 50:81–98.

- Ciaramidaro A, Bolte S, Schlitt S, Hainz D, Poustka F, Weber B, Bara BG, Freitag C, Walter H. 2015. Schizophrenia and autism as contrasting minds: neural evidence for the hypo-hyper-intentionality hypothesis. Schizophr Bull. 41:171–179.

- Ciaramidaro A, Bolte S, Schlitt S, Hainz D, Poustka F, Weber B, Freitag C, Walter H. 2018. Transdiagnostic deviant facial recognition for implicit negative emotion in autism and schizophrenia. Eur Neuropsychopharmacol. 28:264–275.

- Cocuzza CV, Ito T, Schultz D, Bassett DS, Cole MW. 2020. Flexible coordinator and switcher hubs for adaptive task control. J Neurosci. 40:6949–6968.

- Cole MW, Reynolds JR, Power JD, Repovs G, Anticevic A, Braver TS. 2013. Multi-task connectivity reveals flexible hubs for adaptive task control. Nat Neurosci. 16:1348–1355.

- Damaraju E, Allen EA, Belger A, Ford JM, McEwen S, Mathalon DH, Mueller BA, Pearlson GD, Potkin SG, Preda A, et al. 2014. Dynamic functional connectivity analysis reveals transient states of dysconnectivity in schizophrenia. Neuroimage Clin. 5:298–308.

- Das P, Calhoun V, Malhi GS. 2012. Mentalizing in male schizophrenia patients is compromised by virtue of dysfunctional connectivity between task-positive and task-negative networks. Schizophr Res. 140:51–58.

- de Lacy N, Doherty D, King BH, Rachakonda S, Calhoun VD. 2017. Disruption to control network function correlates with altered dynamic connectivity in the wider autism spectrum. Neuroimage Clin. 15:513–524.

- Du W, Ma S, Geng-Sheng F, Calhoun VD, Adali T, editors. 2014. A novel approach for assessing reliability of ICA for fMRI analysis. Florence, Italy: ICASSP.

- Du Y, Allen EA, He H, Sui J, Wu L, Calhoun VD. 2016a. Artifact removal in the context of group ICA: a comparison of single-subject and group approaches. Hum Brain Mapp. 37:1005–1025.

- Du Y, Fan Y. 2013. Group information guided ICA for fMRI data analysis. Neuroimage. 69:157–197.

- Du Y, Fryer SL, Lin D, Sui J, Yu Q, Chen J, Stuart B, Loewy RL, Calhoun VD, Mathalon DH. 2018a. Identifying functional network changing patterns in individuals at clinical high-risk for psychosis and patients with early illness schizophrenia: a group ICA study. Neuroimage Clin. 17:335–346.

- Du Y, Fu Z, Calhoun VD. 2018b. Classification and prediction of brain disorders using functional connectivity: promising but challenging. Front Neurosci. 12:525.

- Du Y, Fu Z, Sui J, Gao S, Xing Y, Lin D, Salman M, Abrol A, Rahaman MA, Chen J, et al. 2020. NeuroMark: an automated and adaptive ICA based pipeline to identify reproducible fMRI markers of brain disorders. Neuroimage Clin. 28:102375.

- Du Y, Pearlson GD, Yu Q, He H, Lin D, Sui J, Wu L, Calhoun VD. 2016b. Interaction among subsystems within default mode network diminished in schizophrenia patients: a dynamic connectivity approach. Schizophr Res. 170:55–65.

- Eack SM, Bahorik AL, McKnight SA, Hogarty SS, Greenwald DP, Newhill CE, Phillips ML, Keshavan MS, Minshew NJ. 2013. Commonalities in social and non-social cognitive impairments in adults with autism spectrum disorder and schizophrenia. Schizophr Res. 148:24–28.

- Eack SM, Wojtalik JA, Keshavan MS, Minshew NJ. 2017. Social-cognitive brain function and connectivity during visual perspective-taking in autism and schizophrenia. Schizophr Res. 183:102–109.

- Falahpour M, Thompson WK, Abbott AE, Jahedi A, Mulvey ME, Datko M, Liu TT, Muller RA. 2016. Underconnected, but not broken? Dynamic functional connectivity MRI shows underconnectivity in autism is linked to increased intra-individual variability across time. Brain Connect. 6:403–414.

- Fernandes JM, Cajao R, Lopes R, Jeronimo R, Barahona-Correa JB. 2018. Social cognition in schizophrenia and autism spectrum disorders: a systematic review and meta-analysis of direct comparisons. Front Psychiatry. 9:504.

- First MB, Williams JBW, Spitzer RL, Gibbon M. 2002. Structured clinical interview for DSM-IV-TR Axis I disorders, research version, patient edition with psychotic screen (SCID-I/P W/ PSY SCREEN). New York: Biometrics Research, New York State Psychiatric Institute.

- Friedman J, Hastie T, Tibshirani R. 2008. Sparse inverse covariance estimation with the graphical lasso. Biostatistics. 9:432–441.

- Friston KJ, Ashburner J, Frith CD, Poline J-B, Heather JD, Frackowiak RSJ. 1995. Spatial registration and normalization of images. Hum Brain Mapp. 3:165–189.

- Glasser MF, Sotiropoulos SN, Wilson JA, Coalson TS, Fischl B, Andersson JL, Xu J, Jbabdi S, Webster M, Polimeni JR, et al. 2013. The minimal preprocessing pipelines for the human connectome project. Neuroimage. 80:105–124.

- Goerlich KS. 2018. The multifaceted nature of alexithymia: a neuroscientific perspective. Front Psychol. 9:1614.

- Hutchison RM, Womelsdorf T, Allen EA, Bandettini PA, Calhoun VD, Corbetta M, Della Penna S, Duyn JH, Glover GH, Gonzalez-Castillo J, et al. 2013. Dynamic functional connectivity: promise, issues, and interpretations. Neuroimage. 80:360–378.

- IBM Corp. 2020. IBM SPSS Statistics for Windows, Version 27.0. Armonk, NY: IBM Corp.

- Iraji A, Faghiri A, Lewis N, Fu Z, Rachakonda S, Calhoun VD. 2020. Tools of the trade: estimating time-varying connectivity patterns from fMRI data. Soc Cogn Affect Neurosci. 8:849–874.

- Jia H, Hu X, Deshpande G. 2014. Behavioral relevance of the dynamics of the functional brain connectome. Brain Connect. 4:741–759.

- Kay SR, Fiszbein A, Opler LA. 1987. The positive and negative syndrome scale (PANSS) for schizophrenia. Schizophr Bull. 13:261–276.

- Lloyd S. 1982. Least squares quantization in PCM. IEEE Trans Inform Theory. 28:129–137.

- Lord C, Risi S, Lambrecht L, Cook EH Jr, Leventhal BL, DiLavore PC, Pickles A, Rutter M. 2000. The autism diagnostic observation schedule-generic: a standard measure of social and communication deficits associated with the spectrum of autism. J Autism Dev Disord. 30:205–223.

- Lurie DJ, Kessler D, Bassett DS, Betzel RF, Breakspear M, Keilholz S, Kucyi A, Liégeois R, Lindquist MA, McIntosh AR, et al. 2020. Questions and controversies in the study of time-varying functional connectivity in resting fMRI. Netw Neurosci. 4:30–69.

- Lynch CJ, Uddin LQ, Supekar K, Khouzam A, Phillips J, Menon V. 2013. Default mode network in childhood autism: posteromedial cortex heterogeneity and relationship with social deficits. Biol Psychiatry. 74:212–219.

- Mazaika P, Hoeft F, Glover GH, Reiss AL. 2009. Methods and software for fMRI analysis for clinical subjects. Hum Brain Mapp. 47:S58.