Abstract

Objective

Attitudes towards the use of artificial intelligence in healthcare are controversial. Compared with the perceptions of healthcare professionals, the attitudes of patients and their companions have so far received less attention. In this study, we aimed to investigate the perception of artificial intelligence in healthcare in this highly relevant group, along with the influence of digital affinity and sociodemographic factors.

Methods

We conducted a cross-sectional study using a paper-based questionnaire with patients and their companions at a German tertiary referral hospital from December 2019 to February 2020. The questionnaire consisted of three sections examining (a) the respondents’ technical affinity, (b) their perception of different aspects of artificial intelligence in healthcare and (c) sociodemographic characteristics.

Results

Of a total of 452 participants, more than 90% had already read or heard about artificial intelligence, but only 24% reported good or expert knowledge. Asked about their general perception, 53.18% of the respondents rated the use of artificial intelligence in medicine as positive or very positive, while only 4.77% rated it as negative or very negative. The respondents denied concerns about artificial intelligence but strongly agreed that artificial intelligence must be controlled by a physician. Older patients, women, persons with lower education and those with lower technical affinity were more cautious about the use of artificial intelligence in healthcare.

Conclusions

German patients and their companions are open towards the use of artificial intelligence in healthcare. Although they showed only moderate knowledge about artificial intelligence, a majority rated artificial intelligence in healthcare as positive. In particular, patients insist that a physician supervise the artificial intelligence and retain ultimate responsibility for diagnosis and therapy.

Keywords: Artificial intelligence, patients, digital technology, clinical decision support systems, algorithms, attitude, perception, surveys and questionnaires, digital divide

Introduction

Artificial intelligence (AI) has become increasingly relevant in public debate in recent years. Politicians, economists, scientists and lay people alike are debating this subject controversially. However, public knowledge about AI is frequently low, and its perception is not exclusively positive.1 While a majority holds optimistic opinions about AI’s capability to improve human life, there is also controversial discussion about ethical concerns, loss of control and undesired consequences of uncritical AI use.2 In the highly sensitive healthcare sector, these ambivalent feelings can be observed as if under a magnifying lens.

Background: AI

Although definitions of AI are as diverse as definitions of intelligence in general, an early definition given by the Dartmouth Research Project in 1955 is still valid today: ‘Making a machine behave in ways that would be called intelligent if a human were so behaving’. More specifically, AI can be defined as a system's ability to interpret external data correctly, to learn from such data and to use these learnings to achieve specific goals and tasks through flexible adaptation.3 This quality allows AI to find patterns and subtle, complex associations in large, high-dimensional data that often escape traditional analysis techniques.4 Thus, typical use cases for AI in medicine are image analysis tasks, not only in radiology but also in ophthalmology, dermatology and pathology.5 In clinical risk prediction, diagnostics and therapeutics, too, AI allows ever more data to be taken into account. For instance, AI allows the integration of genomic, proteomic and radiomic data to predict cancer outcomes, which can substantially increase prediction accuracy.6 Finally, the ability to process larger amounts of data in a shorter time makes it possible to supplement the limited processing capacity of the human brain and thus to reduce the workload of healthcare professionals.7

Despite the high potential of AI in medicine, stakeholders are concerned about the safety of AI and about data security. Patients fear that they might not be able to refuse the use of AI in their own treatment, and they worry about rising costs and problems with insurance coverage.8 From a more technical point of view, missing, erroneous or insufficiently annotated training data may impair the performance of AI models and prevent them from generalizing beyond their original population. In particular, biased data result in biased output and can lead to discrimination against underrepresented subgroups. Legal issues, uncertainty about privacy and liability, and the poor explainability resulting from the ‘black box’ nature of many AI models further cause mistrust and hamper clinical implementation.9–11

AI perception of healthcare professionals and patients

During the last few years, numerous researchers have focused on healthcare professionals when examining the perception of AI in medicine. For instance, in recent surveys conducted in Germany, France and the United Kingdom, healthcare professionals indicated that their attitude towards AI is generally positive and that they expect it to improve their daily work. Nevertheless, they are also aware of the issues described above.12–14 However, the reasonable use of AI in healthcare also requires the acceptance of patients and their families. Modern healthcare aims for the participation and cooperation of patients, often described under the term ‘patient empowerment’. Insufficient acceptance of therapeutic measures impairs patients’ compliance and can compromise an otherwise successful outcome;15 concerns about AI could therefore substantially impair the dissemination and use of these tools.

Current status of the literature

AI in healthcare is one of the fastest growing subjects in current medical research. Within the last few years, the number of publications has increased nearly exponentially. A comprehensive description of this highly dynamic and rapidly growing field is challenging and beyond the scope of this paper; the reader is therefore referred to corresponding publications.9,16–18 In brief, the vast majority of the work is still at the experimental stage, and implementation in clinical routine has so far occurred only in exceptional cases.19 Nevertheless, many publications report that their AI application achieves non-inferior or even superior performance compared with human physicians, although poor reporting and a high risk of bias are frequently observed in these publications.20

Despite this large number of publications, patients’ opinions and general perceptions of AI in healthcare have received much less attention. The results vary considerably depending on the surveyed group and the AI application in focus. Aggarwal and colleagues examined the perceptions of 408 participants from London regarding AI, along with questions about data collection and data sharing for medical AI research.21 They found that although patients generally have little prior knowledge about AI, the majority perceive its use as positive, trust it and believe that its benefits outweigh the risks. A common finding in many studies is that patients want physicians to supervise AI and prefer a physician in a direct comparison. For example, a majority of 229 German patients preferred physicians over AI for all clinical tasks; the only exception was treatment planning based on current scientific evidence, for which an AI with physician supervision was strongly preferred. However, nearly all patients favoured a human physician if AI and physician came to differing conclusions.22 Similarly, in a study from the UK involving 107 neurosurgery patients, between two-thirds and three-quarters accepted AI for clinical tasks if a neurosurgeon was continuously in control.23 A particularly striking example of the high respect for and trust in the competence of physicians compared with AI tools is the study by York and colleagues on radiographic fracture identification.24 On a 10-point confidence scale, the 216 respondents awarded their human radiologists a near-maximum score of 9.2 points, whereas the AI tool received only 7.0 points. Numerous additional studies showed that people are reluctant to trust AI technology and prefer human physicians, even if the AI system performs equally well or better.25–28

In contrast, in a study from the US including 804 parents of paediatric patients, the majority were open to AI-driven tools if their accuracy was proven and several other quality criteria were fulfilled.29 Conversely, among 1183 mostly female patients with chronic diseases living in France, only 20% expected AI to be beneficial and 35% completely declined the use of AI in their personal care.30 In a dermatological setting, 48 patients from the US saw AI mainly as a tool that provides second opinions for their treating physicians. These patients considered AI to have strengths (such as consistently high accuracy) and weaknesses (rare but serious misdiagnoses) at the same time.31 In a US online survey, respondents named impairment of the patient–physician relationship, untrustworthy AI and missing regulation as relevant risks.32 This perceived risk persisted even in an experimental setting among 634 US respondents when the physician used the AI tool only as an augmenting technology.33 A very patient-centred use of AI was examined in a qualitative study from the US, in which 13 patients tested an AI tool that helped them interpret and understand their written X-ray reports, thus aiming to meet their information needs adequately. They rated the use of AI in this setting as positive but, in addition to the risk factors described above, criticized the system’s lack of empathy.34 Another very patient-centred use case for AI is online symptom checkers. In an online survey among 329 US users of such a symptom checker, more than 90% perceived it as a useful diagnostic tool that provided helpful information, and in half of the cases its use led to positive health effects.35

Research gap and objectives

While many previous publications assessed the perception of AI with respect to a specific technical application, the general perception of the various aspects of AI remains largely unclear. We therefore aimed to capture respondents’ existing awareness of AI without supplying additional external information. Studies of consumers’ perceptions of AI in medicine are frequently carried out using online survey tools36,37 or are based on analyses of social media.38 These approaches can quickly and easily reach a large number of respondents, but they are prone to methodological problems,39 in particular a high risk of selection bias that excludes persons without internet access, whether because of technical limitations or missing skills. The situation in Germany is of special interest, since the digitization of its healthcare system has progressed particularly slowly. In 2018, Germany ranked second-to-last among 17 countries in a study examining the extent of this digitization.40 One possible reason is the strict interpretation of data protection legislation in Germany compared with other countries.41,42 Other obstacles include a fragmented healthcare system with a large number of stakeholders and, in part, a lack of willingness and of the necessary organizational structures.43 It is therefore of particular interest whether this situation is also reflected in the perceptions and attitudes of patients as laypersons in this field, regardless of whether these attitudes are considered a cause or a consequence of delayed digitization. Accordingly, the objective of this study was to investigate the perception of AI in healthcare using a paper-based questionnaire focusing on patients and their companions who were in direct contact with the healthcare system. Additionally, we aimed to examine whether the perception of AI in healthcare is affected by the digital affinity or sociodemographic factors of the respondents.

Materials and methods

We conducted a cross-sectional study using a paper-based questionnaire with patients and their companions at a tertiary referral hospital in Aachen, Germany. The reporting is carried out according to the Consensus-Based Checklist for Reporting of Survey Studies (CROSS). 44

Questionnaire development

To identify relevant topics and items for the survey, we carried out a selective literature review of systematic reviews and original articles.2,12,30,45–48 Additionally, open, exploratory interviews were conducted with six healthcare professionals. The structure of the resulting questionnaire is given in Table 1.

Table 1.

Structure of the final resulting questionnaire.

| Subsection | Number of questions |
|---|---|
| Evaluation of the respondent’s technical affinity | 9 questions |
| Among them: self-reported technical affinity score | - 3 questions |
| Perception of different aspects of AI in healthcare | 26 questions |
| Subsections: | |
| - ‘AI brings advantages for patients’ | - 6 questions |
| - ‘Patients fear AI’ | - 4 questions |
| - ‘Patients are worried about physicians’ low AI competence’ | - 5 questions |
| - ‘AI needs to be controlled’ | - 3 questions |
| Details on sociodemographic characteristics | 5 questions |

The questions in the section “Perception of different aspects of AI in healthcare” used both positive and negative wording. The perception and self-assessment questions were answered on a five-point Likert scale or as yes/no questions. Finally, respondents had the opportunity to give feedback in free-text form. Since the target population consisted of patients and their companions waiting for an appointment in a walk-in clinic, care was taken that the questionnaire could be completed within 15 min. The healthcare professionals checked the first draft of the questionnaire for clarity, comprehensibility and potentially misleading phrasing. Their feedback was incorporated immediately.

Pretesting

Following Perneger et al., a sample size of 30 respondents was chosen for pretesting the survey.49 The questionnaire was pretested in a group of volunteers assumed to be as similar as possible to the expected final sample. For the sociodemographic characteristics of the pretest population, please refer to the Appendix (Appendix 1, Table A1). The pretest results were analysed for reliability using Cronbach’s alpha, inter-item correlations and corrected item-total correlations. The Cronbach’s alpha values of the technical affinity rating in section 1 (“Technical affinity”) and of the four subsections in section 2 (“Perception of AI in healthcare”) are given in the Appendix (Appendix 1, Tables A2 and A3). Questions with a corrected item-total correlation of <0.3 or an inter-item correlation of >0.8 were removed from the subsections to allow a correct evaluation of the subsection scores.50 Owing to their relevance, however, these questions were retained in the questionnaire. Further improvements to clarity were made based on the feedback of the pretest participants. The pretest revealed that about 75% of the participants were able to complete the questionnaire within 15 min. The final questionnaire is provided as a translated English version in Appendix 2.
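The reliability analysis and item screening described above can be sketched in a few lines of standard-library Python. This is a minimal illustration, not the authors’ SAS code: it assumes complete (non-missing) pretest answers stored as one list per item, uses sample variances for Cronbach’s alpha and defines the corrected item-total correlation as the correlation of an item with the sum of the remaining items. All function names are hypothetical.

```python
from statistics import mean, variance


def pearson(x, y):
    """Pearson correlation of two equally long, non-constant sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant item")
    return sxy / (sxx * syy) ** 0.5


def cronbach_alpha(items):
    """items: k item columns, each holding the scores of the same n respondents."""
    k = len(items)
    totals = [sum(row) for row in zip(*items)]  # scale score per respondent
    item_variance_sum = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


def corrected_item_total(items, j):
    """Correlate item j with the sum of all other items (item j excluded)."""
    rest = [sum(row) - row[j] for row in zip(*items)]
    return pearson(items[j], rest)


def screen_items(items, min_item_total=0.3, max_inter_item=0.8):
    """Apply the two thresholds named in the text.

    Returns the indices of items with a low corrected item-total correlation
    and the index pairs whose inter-item correlation is too high; which item
    of a redundant pair to drop is left to the analyst.
    """
    k = len(items)
    low = [j for j in range(k) if corrected_item_total(items, j) < min_item_total]
    redundant = [
        (i, j)
        for i in range(k)
        for j in range(i + 1, k)
        if pearson(items[i], items[j]) > max_inter_item
    ]
    return low, redundant
```

Returning the redundant pairs instead of silently dropping one item keeps the decision explicit, which matches the text’s note that flagged questions were kept in the questionnaire for content reasons.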

Sample characteristics and sample size

The participants were opportunistically recruited from the waiting area of the premedication outpatient clinics of the Department of Anaesthesia of the University Hospital RWTH Aachen, Germany. Most participants were inpatients or outpatients, but accompanying relatives and friends were also invited to complete the questionnaire. The study was carried out from December 2019 to February 2020. The inclusion criteria were (a) the capability to read and understand the information given in the questionnaire and (b) the willingness to take part in the survey. There were no explicit exclusion criteria.

The results of our survey were intended to be transferable to the German population as a whole. Given a population of 83 million inhabitants, a confidence level of 95% and a margin of error of 5%, this resulted in an estimated sample size of 385 respondents.51 We added a safety margin of 20%, resulting in a target sample size of 462 participants.
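The sample size of 385 can be reproduced with the commonly used Cochran formula for estimating a proportion, n0 = z²p(1 − p)/e², followed by a finite-population correction. Because the exact formula behind reference 51 is not stated here, the sketch below is an assumption: it uses p = 0.5 (the most conservative choice) and hypothetical function names. Under these assumptions it yields 385 respondents and, after the 20% safety margin, 462.

```python
import math
from statistics import NormalDist


def cochran_sample_size(population, confidence=0.95, margin=0.05, p=0.5):
    """Sample size for estimating a proportion (assumed Cochran formula)."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    n0 = z ** 2 * p * (1 - p) / margin ** 2  # size for an infinite population
    n = n0 / (1 + (n0 - 1) / population)     # finite-population correction
    return math.ceil(n)


def with_safety_margin(n, margin_percent=20):
    """Inflate n by a percentage; integer arithmetic avoids float rounding."""
    return math.ceil(n * (100 + margin_percent) / 100)


n = cochran_sample_size(83_000_000)
print(n, with_safety_margin(n))
```

For a population of 83 million the correction is negligible; it only matters for small populations (for example, 1000 people would require 278 respondents under the same assumptions).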

The University Hospital Aachen is a supramaximal care provider covering the entire spectrum of medicine with 36 specialist clinics, 28 institutes and six interdisciplinary units. Owing to its geographical position and extensive infrastructure, the hospital serves not only the city of Aachen but also the surrounding mid-sized cities, which are still affected by structural change, as well as more rural areas. It covers all surgical procedures, from minor outpatient surgery to procedures that last several hours and require subsequent intensive care. The hospital therefore serves a highly diverse spectrum of patients and is particularly suitable for recruiting a broad survey population.

Survey administration

The present study was reviewed and approved by the local Ethics Committee of the RWTH Aachen University Faculty of Medicine (EK 307/19). Since the first page of the questionnaire announced that the results would be published scientifically in anonymized form, completion of the questionnaire was considered consent, and explicit written consent was waived. The survey was conducted in compliance with all relevant regulations, including data privacy legislation.

During their visit to the premedication outpatient clinics, patients were asked whether they were willing to complete the questionnaire while waiting for their appointment with the anaesthesiologist. A member of the research group handed out the paper-based questionnaire and answered any questions. After completion, participants placed the questionnaire in an opaque box to ensure privacy.

Statistical analysis

The completed questionnaires were digitized manually before analysis. Descriptive statistics were used to characterize the sample by age, gender, highest educational attainment, type of current occupation and occupation in the health sector. Insufficiently completed questionnaires (more than one-third of questions missing or sections not completed) were excluded from the analysis. Results were considered statistically significant at p < .05. Continuous variables were expressed as mean and standard deviation. Categorical variables and Likert scale ratings were reported both as mean and standard deviation and as absolute numbers and proportions. Missing data were not imputed. For the evaluation of a subsection, the answers to its questions were averaged into one score for the respective subsection. To analyse the influence of sociodemographic characteristics on self-reported technical affinity, we performed an analysis of covariance (ANCOVA) with self-reported technical affinity as the dependent variable. Age, gender, level of education (combined as low, medium and high) and an occupation in healthcare were used as independent variables. The null hypothesis that the expected value of self-reported technical affinity is identical across the classes studied (gender, education and healthcare profession), adjusted for age, was tested with an F-test at the 5% significance level.
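The exclusion rule and the subsection scoring described above can be expressed as follows. This is a hypothetical sketch, assuming each questionnaire is a list of Likert answers (1 to 5) with `None` marking a skipped question. It models only the one-third rule, not the criterion of uncompleted sections, and it does not reverse-code negatively worded items, since the text does not state whether this was done.

```python
from statistics import mean, stdev


def is_sufficiently_complete(answers):
    """True unless more than one-third of the answers are missing (None)."""
    missing = sum(1 for a in answers if a is None)
    return 3 * missing <= len(answers)  # integer comparison avoids float rounding


def subsection_score(answers):
    """Mean of the answered items of one subsection; missing items are not imputed."""
    present = [a for a in answers if a is not None]
    return mean(present) if present else None


def describe(values):
    """Mean and standard deviation of the non-missing values (e.g. subsection scores)."""
    present = [v for v in values if v is not None]
    return mean(present), stdev(present)
```

Averaging only the answered items, rather than filling gaps, mirrors the statement that missing data were not imputed; a respondent who skipped every item of a subsection simply has no score for it.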

Additionally, we performed a multivariable logistic regression analysis to evaluate the influence of the previously mentioned sociodemographic factors and the self-reported technical affinity on the general perception of AI in healthcare. The null hypothesis that the odds ratios of all influencing variables equal one was tested with a Wald test at a significance level of 5%. Linearity in the logit was assessed graphically by plotting the observed values of age and technical affinity against the predicted logits of the general perception of AI in healthcare. Possible multicollinearity was assessed using a correlation matrix. All statistical analyses were carried out using SAS Version 9.4 (SAS Institute, Cary, North Carolina, USA).

Results

Respondent characteristics

A total of 452 participants completed the questionnaire to the required extent and were included in the analysis; ten questionnaires were excluded. The demographic characteristics of the respondents are presented in Table 2. Age, gender and level of education showed a nearly balanced distribution. More than half of the respondents were employed. About 25% had a medical or other healthcare professional background.

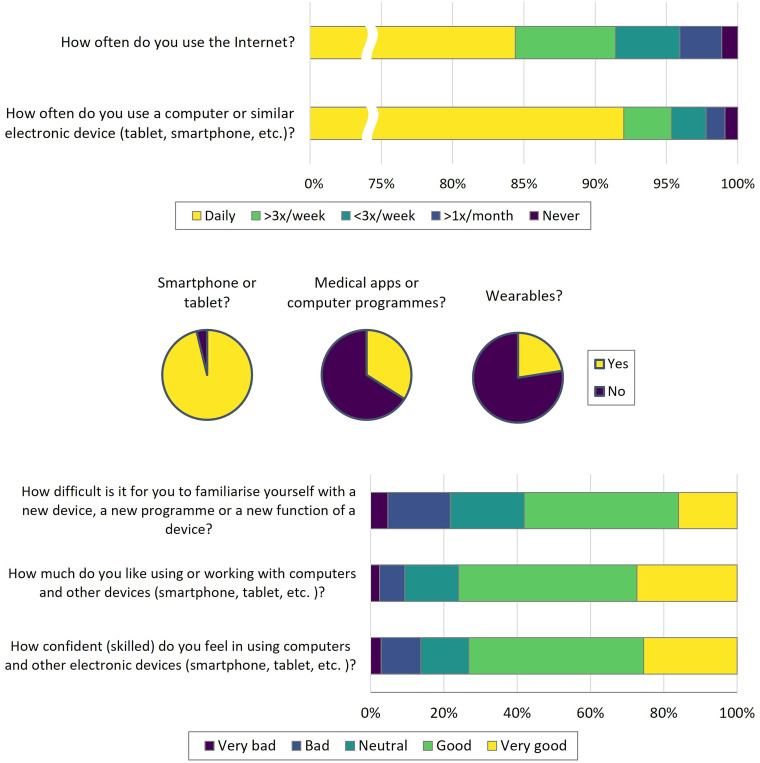

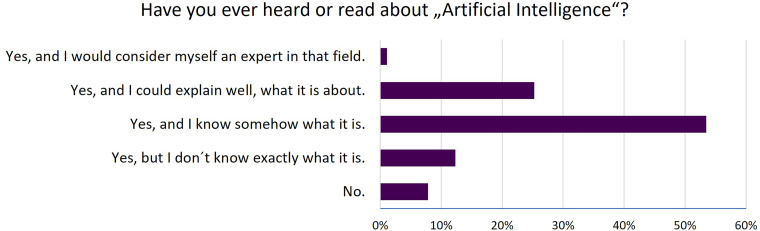

Usage of technical devices and self-estimated technical affinity

Usage of technical devices was high among the respondents (Figure 1). A total of 92.02% of the participants reported daily use of computers, smartphones and other technical devices, and 84.39% used the internet daily. While the vast majority (96.2%) reported owning a smartphone, the use of wearables for monitoring body functions (22.54%) or of medical apps (34%) was less common. In the self-rated technical affinity (Figure 1), the majority of respondents reported positive values for confidence in using technical devices, preference for working with technical devices and competence in using new devices. More than 90% of the respondents stated that they had already read or heard about AI, but only 24% reported good or expert knowledge. An absolute majority stated that they roughly knew what AI was (Figure 2).

Figure 1.

Usage of information technology and self-reported technical affinity.

Figure 2.

Self-assessment of previous knowledge in relation to artificial intelligence.

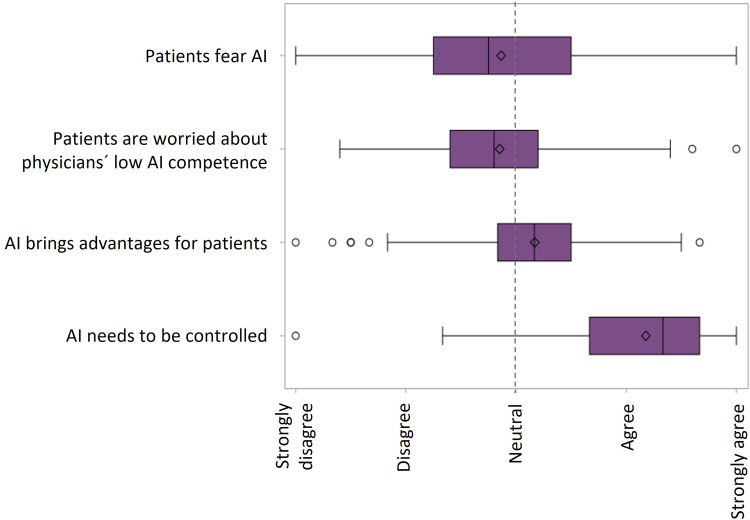

Perception on AI in healthcare

The survey results regarding the perception of AI usage in healthcare are shown in Figure 3. Asked about their general perception, a majority favoured the use of AI in medicine and healthcare: 53.18% of the respondents rated it as positive or very positive. By contrast, only 4.77% had a negative or very negative opinion. The remaining respondents gave a neutral rating or no rating.

Figure 3.

Perception of different aspects of AI in healthcare.

In contrast to this general rating, the subsection ‘AI brings advantages for patients’ received only slight agreement on average (2.17 ± 0.55) (see Figure 4). While a clear majority (55.73%) of respondents agreed with the statement that AI has benefits for patients, the proportion who would request the use of AI in their own treatment decreased to 41.2%. Nearly 20% would even refuse to be treated with AI-based applications. Furthermore, agreement and disagreement with the statements that AI would reduce physicians’ workload were nearly equally distributed, revealing more pessimistic estimations alongside the positive expectations.

Figure 4.

Boxplots of the scores of the four subsections. The box corresponds to the interquartile range (IQR) with the median (line inside the box); the whiskers extend to at most 1.5 times the IQR beyond the box. The mean is depicted by the diamond. Outliers are shown as single dots.
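The whisker and outlier convention used in Figure 4 (Tukey’s 1.5 × IQR rule) can be sketched as follows. Quartile definitions differ between software packages, so this sketch, which assumes Python’s `statistics.quantiles` with `method="inclusive"`, may place the quartiles slightly differently than the SAS plots; the function name is hypothetical.

```python
from statistics import fmean, quantiles


def boxplot_stats(values):
    """Quartiles, Tukey whiskers, mean and outliers of one subsection's scores."""
    q1, median, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    # Whiskers end at the most extreme observations still inside the fences.
    inside = [v for v in values if low_fence <= v <= high_fence]
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "mean": fmean(values),  # drawn as the diamond
        "whisker_low": min(inside),
        "whisker_high": max(inside),
        "outliers": sorted(v for v in values if v < low_fence or v > high_fence),
    }
```

Note that the mean is computed from all values, outliers included, which is why it can lie outside the box when the distribution is skewed.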

General fear of AI in healthcare as well as fear of low AI competence among healthcare providers tended to be less important to the respondents (see Figure 4). However, the extent of disagreement was in a similar range to that of the previously described subsection. Regarding fear of AI itself, the respondents denied fearing the influence of AI on medical treatment. Consistently, they would not call for stopping or preventing the use of AI in medicine in general. The risk of a wrong decision made by a physician was apparently seen as similar to that of a mistake made by AI. However, there appear to be relevant safety concerns about cyberattacks that could sabotage AI systems. Reactions regarding the role of physicians using AI were predominantly positive: a larger share of respondents rated physicians as sufficiently competent to handle the challenges of AI usage, such as an impairment of the relationship between physician and patient. Regarding AI potentially hampering the development of physicians’ clinical abilities, the ratings were nearly equally distributed. The generally high confidence in physicians is also reflected in the stronger disagreement with the statement that a poor prognostic value could affect a physician’s effort to save a patient, which 43.49% of the respondents rejected.

Very strong agreement was measured regarding the need for control when using AI in healthcare. The statement that a final decision on diagnosis or therapy should always be in the hands of a physician received the highest degree of agreement (96.02%) in the whole survey. Very high agreement was also measured for the need for a functional check of AI-based applications by an independent institution (76.46%) and for a scientifically proven benefit before use at the bedside (73.37%).

Other questions yielded somewhat unexpected answers. Although they belonged thematically to one of the subsections already described, they had been removed from the respective subsection based on the pretest analysis. Some of these questions suggest that patients and their relatives have a very high opinion of their physicians and their competences. For example, nearly two-thirds of the respondents do not think that physicians will become less important in future medicine. An even higher proportion (73.72%) would trust a physician more than an AI-based system, and 69.44% would want the physician to override an AI’s recommendation if they come to a different conclusion. The statement that physicians might not have enough knowledge about AI to use it at the bedside received more disagreement than agreement. A final issue, important for the further development of data-driven approaches in medicine, is patients’ willingness to make their health-related data available for non-commercial research purposes, which was affirmed by 74.78% of the respondents.

The participating patients and companions expressed their perceptions of AI in healthcare on a 5-point Likert scale that allowed them to take a neutral position. Strikingly, a very high proportion of respondents chose this neutral option in many questions, in two cases even more than 50%. In 13 questions, the neutral option was the most frequent answer.

Free text statements

Forty-two respondents provided free-text statements in the box offered at the end of the questionnaire. Many of them used this opportunity to explicitly emphasize their opinion on aspects already covered by the questionnaire. The statements of 10 respondents were very similar, covering a combination of three topics. They stated that human physicians must keep control over AI applications in healthcare and that these applications must be used as support tools but never make independent decisions. It was evidently important to these respondents to point out that AI must never replace a physician and that the ‘medical art’ must remain the basis for the treatment of patients. Another large group of respondents spoke positively about the use of AI in healthcare. However, it was not always clear from the written statements whether these respondents were in favour of this development or regarded it as an unstoppable fact that could not be influenced anyway. Although no explicitly negative statements were made, a fatalistic reading of these statements seems possible. A third group of respondents was sceptical that AI would help to reduce the workload of physicians and nurses. Individual respondents raised further aspects: one expressed concern about the unavailability of AI systems, for example in the event of massive blackouts, and the associated inability of physicians to act. Another warned that every AI algorithm can only be as good as its underlying data, raising the issue of data quality. Finally, another respondent expressed incomprehension that so much effort is being invested in AI and medical research while other, more important issues such as accelerating climate change receive insufficient attention.

Influence of sociodemographic factors on technical affinity

To evaluate the influence of sociodemographic factors on self-reported technical affinity, an ANCOVA was carried out. Higher age (F(1, 419) = 91.55, p < .0001), female gender (F(1, 419) = 6.11, p = .0138) and lower educational level (F(2, 419) = 7.88, p = .0004) were associated with lower technical affinity. Across the education classes, technical affinity increased with the level of education. A former or current occupation in healthcare had no significant influence on technical affinity.

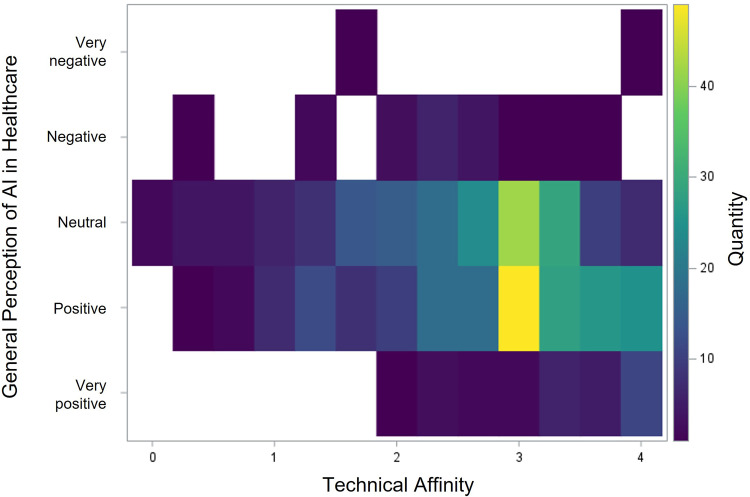

Influence of sociodemographic factors and technical affinity on the general perception of AI usage in healthcare

Using a multivariable logistic regression model, we assessed the influence of sociodemographic characteristics and self-reported technical affinity on the general perception of AI usage in healthcare. The null hypothesis that the odds ratios of all influencing variables equal 1 was rejected (Wald test statistic = 53.996, p < .0001). Gender, educational level and technical affinity had a significant influence on the general perception of AI usage in healthcare. The ORs and 95% confidence intervals (CIs) of the examined variables are given in Table 3. Self-reported technical affinity showed the strongest association with the general perception of AI (see Figure 5).

Table 2.

Sociodemographic characteristics of the respondents. Data are given as mean ± SD or n (%).

| Characteristic (N = 452) | Value |
|---|---|
| Age (years) | 46.69 ± 16.03 |
| – <20 | 12 (2.65) |
| – 20–29 | 70 (15.49) |
| – 30–39 | 102 (22.57) |
| – 40–49 | 55 (12.17) |
| – 50–59 | 102 (22.57) |
| – 60–69 | 73 (16.15) |
| – 70–79 | 33 (7.30) |
| – >80 | 5 (1.11) |
| Gender | |
| – Male | 206 (45.68) |
| – Female | 244 (54.10) |
| – Non-binary | 1 (0.22) |
| Level of education | |
| – ‘Low’ | |
| ○ No school leaving certificate | 2 (0.45) |
| ○ Primary school (Volksschule) | 24 (5.37) |
| – ‘Medium’ | |
| ○ Secondary school (Hauptschule) | 55 (12.30) |
| ○ Secondary school (Mittlere Reife) | 115 (25.73) |
| – ‘High’ | |
| ○ A-levels/technical baccalaureate | 113 (25.28) |
| ○ (Technical) college/university | 130 (29.08) |
| – Other | 8 (1.79) |
| Current occupation | |
| – Pupil | 4 (0.89) |
| – Apprentice | 10 (2.22) |
| – Student | 19 (4.22) |
| – Househusband/wife | 30 (6.67) |
| – Employee | 245 (54.44) |
| – Self-employed | 26 (5.78) |
| – Civil servant | 12 (2.67) |
| – Job seeking | 6 (1.33) |
| – Retirement | 87 (19.33) |
| – Other | 11 (2.44) |
| Healthcare professional | |
| – Yes | 112 (25.40) |
| – No | 329 (74.60) |

Table 3.

Multivariable logistic regression analysis; dependent variable: general perception of AI in healthcare. Wald chi-square and p-values from the type 3 analysis of effects.

| Variable | Wald chi-square | OR | 95% CI lower | 95% CI upper | p-value |
|---|---|---|---|---|---|
| Age | 0.635 | 0.994 | 0.980 | 1.009 | 0.4257 |
| Female gender | 16.061 | 2.240 | 1.510 | 3.324 | <0.0001 |
| Educational level | 11.053 | | | | 0.004 |
| – Medium vs low | | 1.039 | 0.461 | 2.339 | |
| – High vs low | | 0.514 | 0.224 | 1.177 | |
| Occupation in healthcare | 0.232 | 0.896 | 0.574 | 1.399 | 0.6299 |
| Technical affinity | 19.234 | 0.559 | 0.432 | 0.725 | <0.0001 |
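Table 3 reports, for each 1-df effect, an OR, its 95% CI and a Wald chi-square. Assuming the CIs are Wald intervals on the log-odds scale, exp(β ± 1.96·SE), which is standard for logistic regression but not stated in the text, the coefficient β and its SE can be recovered from the OR and CI, and the Wald statistic is (β/SE)². The Python sketch below uses this to cross-check the table; the function name `wald_from_or_ci` is hypothetical.

```python
import math

# Two-sided 95% quantile of the standard normal distribution.
Z_975 = 1.959963984540054


def wald_from_or_ci(odds_ratio, ci_lower, ci_upper, z=Z_975):
    """Back-calculate the log-odds coefficient, its standard error, the
    1-df Wald chi-square and its p-value from a reported OR and 95% CI.

    Assumes the CI is a Wald interval exp(beta +/- z * SE), i.e.
    symmetric on the log-odds scale.
    """
    beta = math.log(odds_ratio)
    se = (math.log(ci_upper) - math.log(ci_lower)) / (2 * z)
    wald = (beta / se) ** 2
    # Upper tail of a chi-square with 1 df: P(|Z| > sqrt(W)) = erfc(sqrt(W / 2)).
    p_value = math.erfc(math.sqrt(wald / 2))
    return beta, se, wald, p_value


# OR, lower and upper 95% CI limits as reported in Table 3 (1-df effects only).
TABLE_3 = {
    "Age": (0.994, 0.980, 1.009),
    "Female gender": (2.240, 1.510, 3.324),
    "Occupation in healthcare": (0.896, 0.574, 1.399),
    "Technical affinity": (0.559, 0.432, 0.725),
}

if __name__ == "__main__":
    for name, (or_, lo, hi) in TABLE_3.items():
        beta, se, wald, p = wald_from_or_ci(or_, lo, hi)
        print(f"{name:<26} beta={beta:+.3f}  SE={se:.3f}  Wald={wald:6.2f}  p={p:.4f}")
```

With the rounded values from Table 3, this yields about 16.05 for female gender (reported 16.061) and roughly 19.4 for technical affinity (reported 19.234); small deviations are expected because ORs and CI limits are rounded to three decimals. The educational-level effect is a joint 2-df test over two contrasts and cannot be recovered from its ORs in this way.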

Figure 5.

Heatmap depicting the correlation between self-reported technical affinity and the general perception of AI in healthcare.

Discussion

Although the number of newly developed AI-based applications for healthcare is steadily increasing and efforts to implement these models into clinical routine continue, the question of how patients perceive these developments has, surprisingly, only recently come into scientific focus. This question is nevertheless the subject of many discussions among medical professionals and computer scientists as well as the interested public. Depending on personal beliefs, the positions in these discussions range from completely positive to completely negative. Hence, our main objective was to evaluate where patients in general locate themselves within this spectrum. Our results show that the majority of patients and their companions in Germany have a positive or very positive attitude towards the use of AI in healthcare, although their knowledge of AI is only moderate. However, the general perception seems to differ considerably between certain groups of respondents. Elderly, female or less educated persons, as well as those with low technical affinity, have a more sceptical view of AI in healthcare. Common to all respondents are the desire for close monitoring of AI by physicians and the rejection of too much autonomy for AI systems. We consider these results relevant for all stakeholders working on the different aspects of AI in medicine, such as physicians, AI developers, the healthcare industry, insurers and regulatory bodies. Our results can help in planning strategies to guide the further development and implementation of AI in healthcare. In particular, actively involving patients in their treatment and collaborating with them is a critical step towards improving clinical outcomes. 52 This applies not only when patients use AI themselves but also when they are treated with the help of AI. Knowing the specific opinions and requirements of certain patient groups allows development and implementation measures to be tailored to them.

Study population

We aimed to examine a group of persons who are in direct contact with the healthcare system and who are representative of the German population. The demographic parameters of our study sample were similar to those of the total population. Compared with the German population, our respondents were on average 2.2 years older, and our cohort included more female than male respondents; this difference was about 3% larger in our group than in the total population. 53 With regard to age and gender ratio, it can therefore be assumed that our sample reflects the total German population with sufficient accuracy. The proportion of university graduates was nearly twice as high in our cohort as in the general population, which might be due to the high number of academic and research centres in the district served by the hospital. 54 The proportion of persons who work or have worked in the healthcare sector seems quite high (25.4%), given that the proportion of actively employed healthcare workers in Germany as a whole is reported as 12.2%. 55 Even though our percentage also includes retired healthcare workers, this high proportion remains remarkable, and its reasons remain unclear. Most respondents classified themselves as having a rather high affinity for technology. However, this affinity does not readily extend to personal health. For example, while more than 96% of respondents use a smartphone, which is even higher than the German average, 56 only about a third use health-related apps on it. Nevertheless, the proportion of health-app users in our sample is slightly higher than previously reported for Germany. 57 Other authors came to similar results.58–60

General perception of AI usage in healthcare

Our survey showed a general open-mindedness of patients and their relatives towards the use of AI in healthcare. More than 50% rated its use as positive or very positive, and a similar proportion expect benefits for patients from the use of AI. However, this seemingly very positive view of AI among patients should not tempt us into an uncritical interpretation. Several aspects need to be considered to put the results into the right context.

Firstly, a considerable proportion of respondents chose the neutral option of the Likert scale. They may be truly neutral, they may not have had enough information to make an informed choice, or they may have been trying to avoid socially undesirable responses. 61 Although we took care to make completing the questionnaire as anonymous as possible, it cannot be ruled out that some respondents chose the most innocuous option. A much more likely explanation is that respondents were insufficiently informed, since only one-third stated in the self-rating that they could explain the meaning of AI. The large majority knew only ‘somehow’ what it meant, or even less, which is in a similar range to that previously shown for the German and other populations.21,62

Our data show much more explicitly that, for the vast majority of patients, the application of AI at the bedside is acceptable only if they can be sure that the AI is under continuous control; more specifically, they expect this control to be exercised by a physician. Several respondents explicitly emphasized this point in their free-text notes. This finding has already been demonstrated in several previous studies.8,22,23 In our survey, however, agreement that AI needs to be controlled exceeded that reported in these studies and nearly reached unanimity (96.02%). Once a physician's and the AI's assessments diverge, the positive rating of AI ends and patients rely on their human physicians. This attitude also includes permitting a physician to override an AI recommendation. These points are consistent with the finding of numerous studies that patients trust human physicians much more than AI systems,22–28 which we were also able to reproduce in our study. In addition to supervision by a medical professional, patients request further preclinical measures such as scientific evaluation and independent certification of AI systems.8,10 Uncertainty among patients in this context could also explain why, despite a very positive general attitude, only a smaller proportion of patients in our study would agree to be treated using AI personally, and some patients would even refuse AI-based treatment, although this proportion was much smaller than in previous studies. 30 To reach this sceptical group, it is urgently necessary to combine the clinical implementation of AI methods with intensive information campaigns in order to overcome evident reservations as far as possible.

However, the pronounced mistrust of AI in healthcare expressed by several stakeholders in medicine and in public discussions 26 was not evident in our survey. The most likely explanation for this discrepancy is that patients expect a physician to act as the gatekeeper for AI decisions. This is reflected by the strong disagreement with the statements that physicians would play a less important role in future treatment. Thus, in agreement with experts from the field,63–65 patients seem to prefer a cooperation of AI and human physicians in which the physicians contribute their humanistic skills, such as empathy, communication and shared decision making, while the AI offers its comprehensive knowledge and its fast and precise analysis of patient data. Although patients disagreed that physicians lack the knowledge about AI needed to use it in their daily work, previous research has shown that physicians frequently lack sufficient knowledge of AI and its principles.14,63,64 In this context, and with the increasing introduction of AI into clinical practice, there should be a stronger focus on AI education, especially in the training of future physicians.65,66

Influencing factors on AI perception

Our survey showed that older patients, women and persons with lower education had a more cautious view on the healthcare-related use of AI. Less surprisingly, personal technical affinity had an even stronger effect on the perception of AI usage. Thus, the higher educational level of our survey population compared with the total German population could bias the general view towards a more positive perception. These influencing factors are not new and are consistent with several previous publications.58,67–69 Such divergent perceptions can lead to reduced use of novel technologies in certain groups, a phenomenon commonly described as the ‘digital divide’ or ‘digital gap’. While these terms are often associated with global phenomena such as limited internet access in developing countries, many authors emphasize that sociodemographic factors within a developed society can also influence access to novel digital technologies.58,67,70,71 For example, it is conceivable that older or less educated people may refuse treatment involving AI because of concerns that result primarily from a lack of information. Therefore, these underrepresented groups need special consideration when designing programmes that aim to provide knowledge about new digital technologies in healthcare. The European Union, for instance, has already addressed this issue and launched a ‘Digital Inclusion’ initiative within its ‘Shaping Europe's digital future’ programme. 72

Ethical dimensions

There is a central dilemma when applying AI in medical treatment: who is ethically accountable for a decision that arises from the cooperation of a physician and an AI tool? 73 Due to the ‘black box’ character of most AI algorithms, it is difficult for physicians to understand how these algorithms arrive at their recommendations. However, this understanding is necessary to hold a medical professional accountable. As in conventional (‘analogue’) medicine, a physician should always be able to justify decisions and name the factors that led to them. If a physician makes clinical decisions in cooperation with a tool whose outputs are unexplainable by design, it must be discussed whether such an opaque system should be used at all. A second open question is who should be responsible and legally liable if a patient is harmed as a result of a clinician's use of an AI tool. 74 Interestingly, the respondents in our survey who gave a non-neutral response strikingly reflect this dilemma, splitting half and half into a group who saw the physician as responsible and a group who disagreed.

Another relevant aspect receiving increasing public attention is algorithmic fairness, i.e. the absence of bias in AI models to the greatest possible extent. Such biases can derive from the data used to develop the algorithm or from the algorithm itself. Data-driven AI algorithms rely on large training datasets for their development. If the training population differs from the population on which the algorithm is intended to be used, or if a subpopulation is underrepresented in the training data (for example, due to its reduced contact with the health system), the ability of an AI model to make precise predictions for these patients is considerably reduced. This so-called representation bias can be a relevant source of discrimination based on age, gender, skin colour, ethnic origin, financial or occupational status, and other factors. 75 However, factors within the model and its use can also cause bias and subsequent harm: if a model is applied to an entire population although certain subgroups should be considered differently (aggregation bias), if two metrics of the algorithm (e.g. overall accuracy vs sensitivity) impair each other (learning bias), if the dataset used to test an AI model differs from the population on which it is intended to be used (evaluation bias), or if an AI model is used differently from the way in which it was intended (deployment bias). 76
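Several of the biases listed above only become visible when model performance is broken down by subgroup instead of being summarized by one overall figure. The minimal Python sketch below illustrates this with made-up toy labels (not data from this study); the function `subgroup_metrics` and the subgroup labels "A" and "B" are hypothetical. Here overall accuracy is about 83%, yet the model misses every positive case in the smaller subgroup B, the pattern that representation bias, or a trade-off between overall accuracy and sensitivity, can produce.

```python
from collections import defaultdict


def subgroup_metrics(y_true, y_pred, groups):
    """Overall accuracy plus per-subgroup accuracy and sensitivity.

    y_true, y_pred: sequences of 0/1 labels (1 = condition present).
    groups: subgroup key for each case (e.g. an age band or sex).
    Sensitivity is NaN for a subgroup without any positive cases.
    """
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "pos": 0, "tp": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        s = stats[g]
        s["n"] += 1
        s["correct"] += int(t == p)
        if t == 1:
            s["pos"] += 1
            s["tp"] += int(p == 1)
    overall_accuracy = sum(s["correct"] for s in stats.values()) / len(y_true)
    per_group = {
        g: {
            "n": s["n"],
            "accuracy": s["correct"] / s["n"],
            "sensitivity": s["tp"] / s["pos"] if s["pos"] else float("nan"),
        }
        for g, s in stats.items()
    }
    return overall_accuracy, per_group


# Hypothetical toy data: subgroup "B" is underrepresented (4 of 12 cases)
# and the model misses both of its positive cases.
y_true = [1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0]
groups = ["A"] * 8 + ["B"] * 4

if __name__ == "__main__":
    overall, per_group = subgroup_metrics(y_true, y_pred, groups)
    print(f"overall accuracy: {overall:.2f}")
    for g, m in per_group.items():
        print(g, m)
```

Such a breakdown is only informative if the test set matches the population on which the model is intended to be used; otherwise the evaluation itself is subject to evaluation bias.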

Patients are intended to benefit from the use of AI in healthcare. However, it must never be forgotten that patients bear the consequences first-hand if a newly developed AI tool is implemented faultily or unethically. Since physicians and scientists impose the potential risks of their developments on other persons, there is a strong obligation to minimize the technical, ethical and moral issues of AI as far as possible before its broad clinical implementation.8,77

Limitations

We conducted a paper-based, single-centre survey about the general perception of AI usage in healthcare. To influence the respondents' existing opinion about AI as little as possible, we provided very little additional information about AI. Participants were only told that one part of AI in medicine consists, for example, of extracting previously unknown correlations from large amounts of data in order to diagnose diseases much earlier, to predict the effect of a certain therapy or to predict the course of a disease. We are aware that this explanation restricts the huge field of AI, but we intended to prevent far-fetched or even unrealistic ideas among the respondents, especially among those with less knowledge of AI. It is questionable how meaningful a survey can be if the participants are not familiar with its subject. However, it reflects a kind of real-world data, and we have to acknowledge that this partially uninformed population is also confronted with AI and forms opinions about it. Public discussions are held not only among experts or informed participants; less informed persons contribute as well. Thus, our aim was to capture public opinion in order to be aware of possible reservations that could influence the common perception. The participating hospital is located in a region with a strong technological and research focus. Even though it serves a wide surrounding area, it is possible that the positive aspects of AI are perceived more strongly in this environment than in a less technological one. Data acquisition for our survey was completed before the world was struck by the COVID-19 pandemic, which caused a significant boost in the digitalization of the health system as well as of working and everyday life. 78 Further studies will be necessary to address the impact of the global pandemic on the perception of AI in healthcare.

Conclusion

In conclusion, patients and their companions in Germany are open towards the use of AI in healthcare. Although their knowledge about AI is generally only mediocre, a majority of respondents rate AI in healthcare as positive or very positive. Notably, patients are more reluctant when AI use concerns their own treatment. Older patients, women, persons with lower education and those with lower technical affinity had a more cautious view on the healthcare-related use of AI. Importantly, patients emphasize that it is essential for a physician to control the AI application and to bear ultimate responsibility for diagnosis and therapy.

Supplemental Material

Supplemental material, sj-docx-1-dhj-10.1177_20552076221116772 for Attitudes and perception of artificial intelligence in healthcare: A cross-sectional survey among patients by Sebastian J Fritsch, Andrea Blankenheim, Alina Wahl, Petra Hetfeld, Oliver Maassen, Saskia Deffge, Julian Kunze, Rolf Rossaint, Morris Riedel, Gernot Marx and Johannes Bickenbach in Digital Health

Supplemental material, sj-docx-2-dhj-10.1177_20552076221116772 for Attitudes and perception of artificial intelligence in healthcare: A cross-sectional survey among patients by Sebastian J Fritsch, Andrea Blankenheim, Alina Wahl, Petra Hetfeld, Oliver Maassen, Saskia Deffge, Julian Kunze, Rolf Rossaint, Morris Riedel, Gernot Marx and Johannes Bickenbach in Digital Health

Acknowledgements

We would like to express our sincere thanks to Ms. Adrijana Kolar and Ms. Büsra Aktas for their valuable support during the data acquisition.

Footnotes

Conflict of interests: GM is the coordinator of the S1 Guideline Telemedicine in Intensive Care Medicine and Chairman of the German Society for Telemedicine (DGTelemed). GM is cofounder of Clinomic GmbH. GM received restricted research grants and consultancy fees from BBraun Melsungen, Biotest, Adrenomed and Sphingotec GmbH outside of the submitted work. RR is cofounder of Docs-in-Cloud GmbH and received consultancy fees from Boehringer Ingelheim Company. The remaining authors declare that there is no conflict of interest.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship and/or publication of this article: This publication of the SMITH consortium was supported by the German Federal Ministry of Education and Research (Grant Nos. 01ZZ1803B and 01ZZ1803M).

Ethical approval: The present study was reviewed and approved by the local ethics committee at the RWTH Aachen University Faculty of Medicine (local EC number: EK 307/19).

Guarantor: SJF.

Author contributions: SJF, AB and OM designed the survey and set up the questionnaire. AW, SD, JK and JB critically revised the questionnaire and provided substantial improvements. SJF, AW, OM, SD and JK helped with the pre-test. AW and PH recruited participants and acquired the data. SJF, AB and JB analysed the data. SJF, AB, OM and JB wrote the manuscript. RR, MR, GM and JB helped with the interpretation of the results, critically reviewed and edited the manuscript. All authors read and approved the final manuscript.

ORCID iDs: Sebastian J Fritsch https://orcid.org/0000-0002-8350-8584

Oliver Maassen https://orcid.org/0000-0002-0437-9295

Saskia Deffge https://orcid.org/0000-0003-0937-4327

Supplemental material: Supplementary material for this article is available online.

References

1. McCradden MD, Sarker T, Paprica PA. Conditionally positive: a qualitative study of public perceptions about using health data for artificial intelligence research. BMJ Open 2020; 10: e039798.

2. Fast E, Horvitz E. Long-term trends in the public perception of artificial intelligence. Proc AAAI Conf Artif Intell 2017; 31: 963–969.

3. Kaplan A, Haenlein M. Siri, Siri, in my hand: who’s the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence. Bus Horiz 2019; 62: 15–25.

4. Maddox TM, Rumsfeld JS, Payne PRO. Questions for artificial intelligence in health care. JAMA 2019; 321: 31–32.

5. Kulkarni S, Seneviratne N, Baig MS, et al. Artificial intelligence in medicine: where are we now? Acad Radiol 2020; 27: 62–70.

6. Noorbakhsh-Sabet N, Zand R, Zhang Y, et al. Artificial intelligence transforms the future of health care. Am J Med 2019; 132: 795–801.

7. Lauritzen AD, Rodríguez-Ruiz A, von Euler-Chelpin MC, et al. An artificial intelligence-based mammography screening protocol for breast cancer: outcome and radiologist workload. Radiology 2022; 304: 41–49.

8. Richardson JP, Smith C, Curtis S, et al. Patient apprehensions about the use of artificial intelligence in healthcare. NPJ Digit Med 2021; 4: 40.

9. Mesko B, Gorog M. A short guide for medical professionals in the era of artificial intelligence. NPJ Digit Med 2020; 3: 20200924.

10. Scott IA, Carter SM, Coiera E. Exploring stakeholder attitudes towards AI in clinical practice. BMJ Health Care Inform 2021; 28: e100450.

11. Sunarti S, Fadzlul Rahman F, Naufal M, et al. Artificial intelligence in healthcare: opportunities and risk for future. Gac Sanit 2021; 35: S67–S70.

12. Maassen O, Fritsch S, Palm J, et al. Future medical artificial intelligence application requirements and expectations of physicians in German university hospitals: web-based survey. J Med Internet Res 2021; 23: e26646.

13. Laï MC, Brian M, Mamzer MF. Perceptions of artificial intelligence in healthcare: findings from a qualitative survey study among actors in France. J Transl Med 2020; 18: 14–14.

14. Castagno S, Khalifa M. Perceptions of artificial intelligence among healthcare staff: a qualitative survey study. Front Artif Intell 2020; 3: 578983.

15. Kleinsinger F. Understanding noncompliant behavior: definitions and causes. Perm J 2003; 7: 18–21.

16. Guo Y, Hao Z, Zhao S, et al. Artificial intelligence in health care: bibliometric analysis. J Med Internet Res 2020; 22: e18228.

17. Secinaro S, Calandra D, Secinaro A, et al. The role of artificial intelligence in healthcare: a structured literature review. BMC Med Inform Decis Mak 2021; 21: 25.

18. Sreedharan S, Mian M, Robertson RA, et al. The top 100 most cited articles in medical artificial intelligence: a bibliometric analysis. Journal of Medical Artificial Intelligence 2020; 3: 3.

19. Yin J, Ngiam KY, Teo HH. Role of artificial intelligence applications in real-life clinical practice: systematic review. J Med Internet Res 2021; 23: e25759.

20. Nagendran M, Chen Y, Lovejoy CA, et al. Artificial intelligence versus clinicians: systematic review of design, reporting standards, and claims of deep learning studies. Br Med J 2020; 368: m689.

21. Aggarwal R, Farag S, Martin G, et al. Patient perceptions on data sharing and applying artificial intelligence to health care data: cross-sectional survey. J Med Internet Res 2021; 23: e26162.

22. Lennartz S, Dratsch T, Zopfs D, et al. Use and control of artificial intelligence in patients across the medical workflow: single-center questionnaire study of patient perspectives. J Med Internet Res 2021; 23: e24221.

23. Palmisciano P, Jamjoom AAB, Taylor D, et al. Attitudes of patients and their relatives toward artificial intelligence in neurosurgery. World Neurosurg 2020; 138: e627–e633.

24. York T, Jenney H, Jones G. Clinician and computer: a study on patient perceptions of artificial intelligence in skeletal radiography. BMJ Health Care Inform 2020; 27: e100233.

25. Yokoi R, Eguchi Y, Fujita T, et al. Artificial intelligence is trusted less than a doctor in medical treatment decisions: influence of perceived care and value similarity. Inter J Human Comp Interact 2021; 37: 981–990.

26. Longoni C, Bonezzi A, Morewedge CK. Resistance to medical artificial intelligence. J Consum Res 2019; 46: 629–650.

27. Promberger M, Baron J. Do patients trust computers? J Behav Decis Mak 2006; 19: 455–468.

28. Bigman YE, Gray K. People are averse to machines making moral decisions. Cognition 2018; 181: 21–34.

29. Sisk BA, Antes AL, Burrous S, et al. Parental attitudes toward artificial intelligence-driven precision medicine technologies in pediatric healthcare. Children (Basel) 2020; 7: 20200920.

30. Tran VT, Riveros C, Ravaud P. Patients’ views of wearable devices and AI in healthcare: findings from the ComPaRe e-cohort. NPJ Digit Med 2019; 2: 53.

31. Nelson CA, Pérez-Chada LM, Creadore A, et al. Patient perspectives on the use of artificial intelligence for skin cancer screening: a qualitative study. JAMA Dermatol 2020; 156: 501–512.

32. Esmaeilzadeh P. Use of AI-based tools for healthcare purposes: a survey study from consumers’ perspectives. BMC Med Inform Decis Mak 2020; 20: 170.

33. Esmaeilzadeh P, Mirzaei T, Dharanikota S. Patients’ perceptions toward human–artificial intelligence interaction in health care: experimental study. J Med Internet Res 2021; 23: e25856.

34. Zhang Z, Citardi D, Wang D, et al. Patients’ perceptions of using artificial intelligence (AI)-based technology to comprehend radiology imaging data. Health Informat J 2021; 27: 14604582211011215.

35. Meyer AND, Giardina TD, Spitzmueller C, et al. Patient perspectives on the usefulness of an artificial intelligence–assisted symptom checker: cross-sectional survey study. J Med Internet Res 2020; 22: e14679.

36. Haggenmüller S, Krieghoff-Henning E, Jutzi T, et al. Digital natives’ preferences on mobile artificial intelligence apps for skin cancer diagnostics: survey study. JMIR Mhealth Uhealth 2021; 9: e22909.

37. Ploug T, Sundby A, Moeslund TB, et al. Population preferences for performance and explainability of artificial intelligence in health care: choice-based conjoint survey. J Med Internet Res 2021; 23: e26611.

38. Gao S, He L, Chen Y, et al. Public perception of artificial intelligence in medical care: content analysis of social media. J Med Internet Res 2020; 22: e16649.

39. Andrade C. The limitations of online surveys. Indian J Psychol Med 2020; 42: 575–576.

40. Thiel R, Deimel L, Schmidtmann D, et al. #SmartHealthSystems: international comparison of digital strategies. Gütersloh: Bertelsmann-Stiftung 2019. https://www.bertelsmann-stiftung.de/en/publications/publication/did/smarthealthsystems-1 (2019, accessed 30 July 2022).

41. Kelber U, Lerch MM. Pro & Kontra: Datenschutz als Risiko für die Gesundheit. Dtsch Arztebl International 2022; 119: A–960.

42. Kuhn AK. Grenzen der Digitalisierung der Medizin de lege lata und de lege ferenda. GesundheitsRecht 2016; 15: 748–751.

43. Hansen A, Herrmann M, Ehlers JP, et al. Perception of the progressing digitization and transformation of the German health care system among experts and the public: mixed methods study. JMIR Public Health Surveill 2019; 5: e14689.

44. Sharma A, Minh Duc NT, Luu Lam Thang T, et al. A consensus-based checklist for reporting of survey studies (CROSS). J Gen Intern Med 2021; 36: 3179–3187.

45. Pinto Dos Santos D, Giese D, Brodehl S, et al. Medical students’ attitude towards artificial intelligence: a multicentre survey. Eur Radiol 2019; 29: 1640–1646.

46. Haan M, Ongena YP, Hommes S, et al. A qualitative study to understand patient perspective on the use of artificial intelligence in radiology. J Am Coll Radiol 2019; 16: 1416–1419.

47. Liyanage H, Liaw S-T, Jonnagaddala J, et al. Artificial intelligence in primary health care: perceptions, issues, and challenges. Yearb Med Inform 2019; 28: 041–046.

48. Hengstler M, Enkel E, Duelli S. Applied artificial intelligence and trust—the case of autonomous vehicles and medical assistance devices. Technol Forecast Soc Change 2016; 105: 105–120.

49. Perneger TV, Courvoisier DS, Hudelson PM, et al. Sample size for pre-tests of questionnaires. Qual Life Res 2015; 24: 147–151.

50. Rattray J, Jones MC. Essential elements of questionnaire design and development. J Clin Nurs 2007; 16: 234–243.

51. Bartlett JE, Kotrlik JW, Higgins CC. Organizational research: determining appropriate sample size in survey research. Inf Technol Learn Perform J 2001; 19: 43.

52. Hibbard JH, Greene J. What the evidence shows about patient activation: better health outcomes and care experiences; fewer data on costs. Health Aff (Millwood) 2013; 32: 207–214.

53. Statistisches Bundesamt. Bevölkerung und Erwerbstätigkeit Bevölkerungsfortschreibung auf Grundlage des Zensus 2011, 2019. Fachserie 1 Reihe 1.3., https://www.statistischebibliothek.de/mir/receive/DEHeft_mods_00134556 (2021, accessed 30 July 2022).

54. Statistisches Bundesamt. Bildungsstand der Bevölkerung - Ergebnisse des Mikrozensus 2019, https://www.destatis.de/DE/Themen/Gesellschaft-Umwelt/Bildung-Forschung-Kultur/Bildungsstand/Publikationen/Downloads-Bildungsstand/bildungsstand-bevoelkerung-5210002197004.html (2020, accessed 30 July 2022).

55. Bundesministerium für Gesundheit. Gesundheitswirtschaft als Jobmotor. https://www.bundesgesundheitsministerium.de/themen/gesundheitswesen/gesundheitswirtschaft/gesundheitswirtschaft-als-jobmotor.html (2019, accessed 30 July 2022).

56. Tenzer F. Anzahl der Nutzer von Smartphones in Deutschland bis 2019. Statista. https://de.statista.com/statistik/daten/studie/198959/umfrage/anzahl-der-smartphonenutzer-in-deutschland-seit-2010/ (2019, accessed 20 July 2020).

57. Heidel A, Hagist C. Potential benefits and risks resulting from the introduction of health apps and wearables into the German statutory health care system: scoping review. JMIR Mhealth Uhealth 2020; 8: e16444.

58. De Santis KK, Jahnel T, Sina E, et al. Digitization and health in Germany: cross-sectional nationwide survey. JMIR Public Health Surveill 2021; 7: e32951.

59. Ernsting C, Dombrowski SU, Oedekoven M, et al. Using smartphones and health apps to change and manage health behaviors: a population-based survey. J Med Internet Res 2017; 19: e101.

60. Rasche P, Wille M, Brohl C, et al. Prevalence of health app use among older adults in Germany: national survey. JMIR Mhealth Uhealth 2018; 6: e26.

- 61.Cooper ID, Johnson TP. How to use survey results. J Med Libr Assoc 2016; 104: 174–177. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Stürz RA, Stumpf C, Mendel U. Künstliche Intelligenz verstehen und gestalten: Ergebnisse und Implikationen einer bidt-Kurzbefragung in Deutschland. Munich: bidt Bayerisches Forschungsinstitut für Digitale Transformation, 2020. http://publikationen.badw.de/de/046808919 (2020, accessed 30 July 2022).

- 63.Oh S, Kim JH, Choi SW, et al. Physician confidence in artificial intelligence: an online Mobile survey. J Med Internet Res 2019; 21: e12422. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Scheetz J, Rothschild P, McGuinness M, et al. A survey of clinicians on the use of artificial intelligence in ophthalmology, dermatology, radiology and radiation oncology. Sci Rep 2021; 11: 5193. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65. Grunhut J, Wyatt ATM, Marques O. Educating future physicians in artificial intelligence (AI): an integrative review and proposed changes. J Med Educ Curric Dev 2021; 8: 23821205211036836.

- 66. Kundu S. How will artificial intelligence change medical training? Commun Med 2021; 1: 8.

- 67. Cornejo Muller A, Wachtler B, Lampert T. [Digital divide-social inequalities in the utilisation of digital healthcare]. Bundesgesundheitsblatt Gesundheitsforschung Gesundheitsschutz 2020; 63: 185–191.

- 68. Safi S, Danzer G, Schmailzl KJ. Empirical research on acceptance of digital technologies in medicine among patients and healthy users: questionnaire study. JMIR Hum Factors 2019; 6: e13472.

- 69. Antes AL, Burrous S, Sisk BA, et al. Exploring perceptions of healthcare technologies enabled by artificial intelligence: an online, scenario-based survey. BMC Med Inform Decis Mak 2021; 21: 221.

- 70. Merkel S, Hess M. The use of internet-based health and care services by elderly people in Europe and the importance of the country context: multilevel study. JMIR Aging 2020; 3: e15491.

- 71. Grill E, Eitze S, De Bock F, et al. Sociodemographic characteristics determine download and use of a Corona contact tracing app in Germany-results of the COSMO surveys. PLoS One 2021; 16: e0256660.

- 72. European Commission. Digital inclusion, https://digital-strategy.ec.europa.eu/en/policies/digital-inclusion (2021, accessed 23 May 2022).

- 73. Bærøe K, Gundersen T, Henden E, et al. Can medical algorithms be fair? Three ethical quandaries and one dilemma. BMJ Health Care Inform 2022; 29: e100445.

- 74. Smith H. Clinical AI: opacity, accountability, responsibility and liability. AI Soc 2021; 36: 535–545.

- 75. Gerke S, Minssen T, Cohen G. Ethical and legal challenges of artificial intelligence-driven healthcare. In: Bohr A and Memarzadeh K (eds) Artificial intelligence in healthcare. San Diego: Academic Press Inc, 2020, pp. 295–336.

- 76. Suresh H, Guttag J. A framework for understanding sources of harm throughout the machine learning life cycle. In: EAAMO ’21: Equity and access in algorithms, mechanisms, and optimization. New York, NY: Association for Computing Machinery, 2021, pp. 1–9.

- 77. van de Sande D, Van Genderen ME, Smit JM, et al. Developing, implementing and governing artificial intelligence in medicine: a step-by-step approach to prevent an artificial intelligence winter. BMJ Health Care Inform 2022; 29: e100495.

- 78. Subramanian M, Shanmuga Vadivel K, Hatamleh WA, et al. The role of contemporary digital tools and technologies in COVID-19 crisis: an exploratory analysis. Expert Syst 2021; 39: e12834.

Supplementary Materials

Supplemental material, sj-docx-1-dhj-10.1177_20552076221116772 for Attitudes and perception of artificial intelligence in healthcare: A cross-sectional survey among patients by Sebastian J Fritsch, Andrea Blankenheim, Alina Wahl, Petra Hetfeld, Oliver Maassen, Saskia Deffge, Julian Kunze, Rolf Rossaint, Morris Riedel, Gernot Marx and Johannes Bickenbach in Digital Health

Supplemental material, sj-docx-2-dhj-10.1177_20552076221116772 for Attitudes and perception of artificial intelligence in healthcare: A cross-sectional survey among patients by Sebastian J Fritsch, Andrea Blankenheim, Alina Wahl, Petra Hetfeld, Oliver Maassen, Saskia Deffge, Julian Kunze, Rolf Rossaint, Morris Riedel, Gernot Marx and Johannes Bickenbach in Digital Health