Abstract

Objective

After a new electronic health record (EHR) was implemented at Mayo Clinic, a training program called reBoot Camp was created to enhance ongoing education in response to needs identified by physician leaders.

Materials and Methods

A reBoot camp focused on EHR topics pertinent to ambulatory care was offered from April 2018 through June 2020. There were 37 two-day sessions and 43 one-day sessions, with 673 unique participants. To evaluate outcomes of the reBoot camp, we used survey data to study baseline, immediate, and long-term perceptions of program satisfaction and self-assessed EHR skills. The study was conducted among practitioners at a large ambulatory practice network based in several states. Data were collected from April 2018 through January 2021. We also analyzed automatically collected metadata and scores that evaluated the amount of personalization and proficiency of use.

Results

Confidence in skills increased by 13.5 points for general EHR use and improved significantly in all 5 subdomains of use (10–18 point improvement). This degree of user confidence was maintained at the 6-month reassessment. Objective configuration and proficiency scores also improved significantly.

Discussion

Ongoing education regarding EHR tools is necessary to support continued use of technology. This study was novel because of the amount and breadth of data collected, diversity of user participation, and validation that improvements were maintained over time.

Conclusions

Participating in a reBoot camp significantly improved user confidence in each domain of the EHR and increased the use of best-practice tools. Users maintained these gains at the 6-month evaluation.

Keywords: continuing medical education, electronic health record, health workforce, medical informatics, staff development

INTRODUCTION

Mayo Clinic offers an electronic health record (EHR) training program called reBoot Camp, which is led by physicians and advanced practice practitioners (APPs). The program was developed in response to a need identified by physician leaders in informatics to enhance ongoing education after initial implementation of the EHR. This ongoing need is not unique to Mayo Clinic.1–4 To address this educational need across multiple sites, a reBoot camp was designed as an intensive and interactive refresher and optimization course, with the overall objective of sharing detailed practical information and enhancement recommendations about the EHR that are important to busy practitioners.

The reBoot camp is a 2-day program that teaches participants optimal use of the EHR system. The camp was initially targeted to superusers in primary care, but as word of the program spread, clinicians in other specialties asked to participate. Anticipating the need for iterative improvement, the program designers integrated multidimensional evaluations into the reBoot camps from the outset. These evaluations targeted longitudinal assessments of key program metrics: (1) participant satisfaction with the reBoot camp, (2) participant self-assessment of EHR skill, (3) participant efficiency, and (4) objective measures of EHR configuration and use by participants.

To our knowledge, no other study has measured the effectiveness and impact of EHR training for practitioners already using the system before, immediately after, and 6 months after the training. As health care technology development accelerates, training strategies for that technology must advance as well. Not all training processes are the same. This report adds to the growing body of literature on impact-oriented assessments that help leaders identify the best training strategies for providers and organizations.

MATERIALS AND METHODS

This project was reviewed by the Mayo Clinic Institutional Review Board and determined exempt under 45 CFR 46 (No. 18-007540). STROBE guidelines were followed in developing the study and writing the manuscript.

EHR environment

The Mayo Clinic Plummer Project consolidated data from all Mayo Clinic hospitals and clinics, which had previously used separate EHRs, into a single converged EHR (Epic), implemented with a single voice recognition system via 3M-M*Modal. The implementation of the new EHR began in July 2017 and was completed in October 2018.5

reBoot camp curriculum design

The reBoot camp was founded on 4 cornerstones: (1) intentional curriculum design, (2) peer instruction by qualified instructors, (3) ongoing evaluation of participant experience, and (4) measurements of participant performance in the EHR. Educational topics included clinical messaging (in-basket), chart review, documentation (including front-end speech recognition), order efficiency, problem-list management, schedule optimization, mobile tools, and reporting and analytics. The reBoot curriculum allowed for flexibility based on participant feedback, current practice hot topics, and recent software changes.

The reBoot camp curriculum and experience were informed by other educational programs reported in the literature,1,3,4,6,7 Epic’s Physician Builder and Power User programs, discussions with colleagues at health care technology learning collaborations,8 and user-group meetings. Topics were initially divided into modules led by senior instructors with specific expertise. Each reBoot camp was coordinated by a classroom host to maintain the instructional pace and positive environment. Instructors used set agendas, name tags on each workstation, and real-time learner feedback to ensure comprehension. Coinstructors circulated to troubleshoot and provide personalized attention. Each module was presented via a 3-step information exchange: didactic lectures, detailed dialogue, and active-learning laboratories. Active learning included personalization in the live-production environment and walkthroughs of workflows and techniques in the training environment.

The initial classroom instructors were primary care physicians who were also seasoned informatics leaders certified as Epic Physician Builders. As the reBoot camp offerings increased, engaged participants with instructor potential were invited to join the program. These additional instructors were onboarded through review sessions and coaching, following a “see one, do one, teach one” approach until the new instructors felt ready and the preceptors deemed them proficient.9 As the work moved from pilot to program, the need for logistical and organizational support grew, and that support ultimately transitioned to Clinical Systems Education.

Learner recruitment

The multispecialty participants were self-selected. Participation was encouraged through formal and informal marketing and incentivized with continuing medical education (CME) and quality improvement Maintenance of Certification credits within the participants’ specialty medical boards. The tagline “developed by practitioners, taught by practitioners, and focused on the needs of practitioners” was used consistently in marketing materials to emphasize practice ownership of the methods.

Measurements

Qualitative survey evaluations

Survey data were collected and managed using Research Electronic Data Capture (REDCap) tools hosted at Mayo Clinic (Supplementary Box).9,10 REDCap is a secure, web-based software platform that supports data capture, data integration with external sources, and audit trails for research studies.

We developed 3 surveys: preparticipation, immediate postparticipation, and 6-month postparticipation. Some questions were included in all 3 surveys, and others were relevant only to a specific survey period (before: “Are you ready?”; after: “How impactful was the reBoot camp?”). One set of questions presented in all surveys assessed participants’ confidence with general use of the EHR and with 5 subdomains: documentation, order entry, medications, in-basket, and reports. For these questions, participants self-assessed their skill on a 0–100 slider scale anchored by the rubric statements “NOT skilled” at 0, “average” at 50, and “rockstar” at 100. The preparticipation survey was sent 7–10 days before the reBoot camp, the immediate postparticipation survey within a week after the reBoot camp ended, and the final postparticipation survey at 6-month follow-up. Additional survey questions covered topics of interest, program satisfaction, likelihood to recommend, impact on work, and level of sharing. As the training program evolved, the question set was adjusted when changes occurred in the curriculum or EHR (Supplementary S1). Supplementary S2 and S3 show a typical agenda. The measures of user confidence were also evaluated in subgroups of participants by provider type.

Objective measures

We used 2 objective measures to evaluate the impact of the reBoot camp for participants. The first was the User Settings Achievement Level (USAL), which measures whether an individual has set up the recommended configurations and adopted those personalized settings. The USAL audit is a snapshot in time per provider, and the individual scores are provided to the education team as an extract from Epic. The USAL audit was first available to Mayo Clinic in March 2019 and allowed us to identify practitioners who had achieved a defined gold level: the provider had personalized at least 1 item from ordering and 1 from documentation tools. We analyzed the number of Mayo Clinic practitioners who achieved the gold level in March 2019, December 2019, and December 2020, and compared reBoot participants in timeframe cohorts to the general Mayo Clinic practitioner population. Practitioners were only included in the USAL data if they used ambulatory clinic tools in the EHR within 30 days of the export, which excluded some of the participants. The participants were grouped into 3 cohorts for evaluating USAL scores: cohort 1, participated in the reBoot camp before the first score was released through the EHR; cohort 2, participated between the first and second measurement; and cohort 3, participated between the second and third measurement.
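The gold-level definition, the 30-day inclusion rule, and the cohort rules above can be expressed as a small classification step. The sketch below is a hypothetical Python illustration, not the authors' code: because the paper reports only the month and year of each USAL extract, the first day of each month is assumed as the release date, "within 30 days of the export" is read as the 30 days before it, and all function names are invented for illustration.

```python
from datetime import date, timedelta
from typing import Optional

# ASSUMPTION: only month and year of each USAL extract are reported, so the
# first day of each month stands in for the release date.
USAL_RELEASES = (date(2019, 3, 1), date(2019, 12, 1), date(2020, 12, 1))


def is_gold(ordering_items: int, documentation_items: int) -> bool:
    """Gold level: at least 1 personalized ordering item and at least 1
    personalized documentation item."""
    return ordering_items >= 1 and documentation_items >= 1


def in_usal_extract(last_ambulatory_use: date, export_date: date) -> bool:
    """ASSUMPTION: a practitioner appears in an extract only if ambulatory
    clinic tools were used in the 30 days before the export."""
    return timedelta(0) <= export_date - last_ambulatory_use <= timedelta(days=30)


def assign_cohort(camp_date: date) -> Optional[int]:
    """Cohort 1: camp before the first release; cohort 2: between the first and
    second releases; cohort 3: between the second and third releases.
    A camp held exactly on a release date goes to the later cohort here,
    which is an arbitrary choice for this sketch."""
    for cohort, release in enumerate(USAL_RELEASES, start=1):
        if camp_date < release:
            return cohort
    return None  # after the final extract: no cohort in this scheme
```

Since camps ran only through June 2020, every camp held after the December 2019 extract falls into cohort 3 under these rules.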

The second objective measure was the proficiency score (PS), which evaluates whether an individual uses the recommended configurations; it is a composite measure of how frequently software tools are used. The PS, calculated by Epic, is available for individual practitioners on a rolling 4-week basis and ranges from 0 to 10, with 0 indicating no use of the efficiency configurations and 10 indicating very high use of the most efficient configurations available. Factors influencing the score include use of quick actions, preference lists, SmartTools, chart tools, and personalization of features such as default charge codes. The PS was analyzed retrospectively at 3 time points for each participant: baseline (1 month before the camp), 1 month after the camp, and 6 months after the camp.
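Because the PS is reported on a rolling 4-week basis, each of the 3 retrospective time points has to be matched to one window. The sketch below is a hypothetical illustration of that matching, not Epic's or the authors' extraction logic: it assumes the export is a list of (window end date, score) pairs, approximates "1 month" as 30 days and "6 months" as 182 days, and picks the window whose end date is nearest each target.

```python
from datetime import date, timedelta
from typing import Dict, List, Tuple

# ASSUMPTION: day offsets approximating "1 month before", "1 month after",
# and "6 months after" the camp.
TIMEPOINT_OFFSETS = {"baseline": -30, "1_month_after": 30, "6_months_after": 182}

PsPoint = Tuple[date, float]  # (rolling 4-week window end date, PS value)


def _nearest(series: List[PsPoint], target: date) -> PsPoint:
    """Window whose end date is closest to the target date."""
    return min(series, key=lambda item: abs((item[0] - target).days))


def ps_at_timepoints(camp_date: date, series: List[PsPoint]) -> Dict[str, float]:
    """Hypothetical helper: one PS value per analysis time point."""
    if not series:
        raise ValueError("no PS values for this participant")
    picked: Dict[str, float] = {}
    for label, offset in TIMEPOINT_OFFSETS.items():
        _, score = _nearest(series, camp_date + timedelta(days=offset))
        if not 0.0 <= score <= 10.0:
            raise ValueError(f"PS outside the 0-10 range: {score}")
        picked[label] = score
    return picked
```

A real pipeline would also decide how far a window may lie from its target before the value is treated as missing; this sketch always takes the nearest window.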

Analysis

Statistical analyses were performed using R 3.6.2 (The R Project) and SAS 9.4 (SAS Institute Inc). The mean PS was assessed for 6 unique performance metrics with mean values and 95% CI for the mean. Paired t tests were used to analyze the change in PS from baseline to 1 month after the camp and from baseline to 6 months after the camp. The USAL rate of change analysis was completed using a logistic regression model with group and time interaction.
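The paired comparisons described above (baseline vs 1 month after, and baseline vs 6 months after) reduce to a one-sample t test on each participant's within-person change. The dependency-free Python sketch below illustrates that computation; it is not the authors' R or SAS code, and the function names and example data are hypothetical. The t distribution is evaluated with the closed-form finite series that holds for integer degrees of freedom, so no external library is needed; in practice, `scipy.stats.ttest_rel` or R's `t.test(x, y, paired = TRUE)` would be the usual choice.

```python
import math
import statistics
from typing import List, Tuple


def t_coverage(t: float, df: int) -> float:
    """P(|T| < t) for Student's t with integer df >= 1 (exact finite series)."""
    theta = math.atan(t / math.sqrt(df))
    s, c2 = math.sin(theta), math.cos(theta) ** 2
    if df % 2 == 1:
        # odd df: (2/pi) * (theta + sin(theta) * [c + (2/3)c^3 + ... up to c^(df-2)])
        total, term = 0.0, math.cos(theta)
        for k in range(1, (df - 1) // 2 + 1):
            total += term
            term *= c2 * (2 * k) / (2 * k + 1)
        return 2.0 / math.pi * (theta + s * total)
    # even df: sin(theta) * [1 + (1/2)c^2 + (1*3)/(2*4)c^4 + ... up to c^(df-2)]
    total, term = 0.0, 1.0
    for k in range(1, df // 2 + 1):
        total += term
        term *= c2 * (2 * k - 1) / (2 * k)
    return s * total


def t_critical(conf: float, df: int) -> float:
    """Two-sided critical value t such that P(|T| < t) = conf, by bisection."""
    lo, hi = 0.0, 1.0
    while t_coverage(hi, df) < conf:
        hi *= 2.0
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if t_coverage(mid, df) < conf:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def paired_t_test(
    before: List[float], after: List[float], conf: float = 0.95
) -> Tuple[float, Tuple[float, float], float, int, float]:
    """Mean change, CI for the mean change, t statistic, df, two-sided P."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    mean_d = statistics.fmean(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # sample SD of the differences
    df = n - 1
    t_stat = mean_d / se  # undefined if every difference is identical (se == 0)
    p = 1.0 - t_coverage(abs(t_stat), df)
    half = t_critical(conf, df) * se
    return mean_d, (mean_d - half, mean_d + half), t_stat, df, p
```

Each participant contributes one difference, so anyone missing either time point has to be dropped before the test is run.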

RESULTS

Participation

From April 2018 through June 2020, 673 unique participants attended reBoot camps. Participants were from multiple disciplines and all locations at Mayo Clinic, with a slight majority from primary care (51%) and the rest divided between medical (34%) and surgical (13%) specialties (Table 1). Completing the full curriculum took 2 days, and it was offered 37 times in person in 2018 and 2019 (Table 1). In 2020, the curriculum was split into 2 separate, 1-day reBoot camps to allow for flexibility of scheduling by practitioners, with 31 offerings completed through June 30, 2020. Because of the COVID-19 pandemic, the 1-day classes in 2020 were transitioned in April to a Zoom virtual platform (Zoom Video Communications) (Table 1).

Table 1.

Characteristics of courses and participants

| Characteristic | Individual participants (N = 673)ᵃ |
|---|---|
| Course format | |
| 2018–2019: 16 h, 37 camps | 524 |
| 2020: 8 h (split), 31 camps | 149 |
| Specialty, No. (%) | |
| Primary care: physician | 210 (31) |
| Primary care: APP | 134 (20) |
| Surgical: physician | 44 (7) |
| Surgical: APP | 42 (6) |
| Medical: physician | 133 (20) |
| Medical: APP | 97 (14) |
| Other (therapist, audiologist, pharmacist, nurse) | 13 (2) |
| Survey response, No. (%) | |
| Presurvey | 604 (90) |
| Postsurvey (10–14 days) | 471 (70) |
| 6-month postsurvey | 239 (36) |

APP: advanced practice practitioner (physician assistant or nurse practitioner).

ᵃ Both courses were completed by 120 individuals in 2020, and 6 individuals took 2 courses in 2019. Survey data were included for the first course they took.

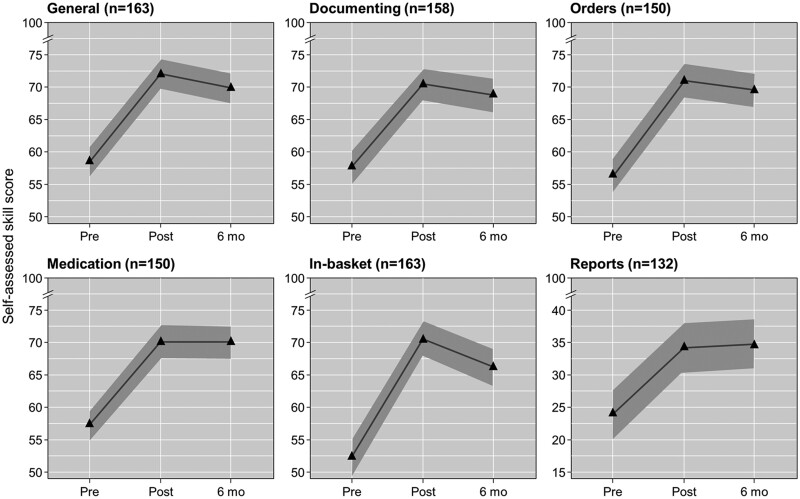

Survey results

A total of 1314 surveys were completed, with the last of the 6-month post-reBoot camp surveys recorded in January 2021. The survey response rate was 90% for the presurvey, 70% for the initial postsurvey, and 36% for the 6-month postsurvey. The most noteworthy survey results were in the participants’ self-assessment of skills, which was analyzed only for participants who completed all 3 surveys. Self-assessed proficiency in each of the main skills covered during the reBoot camps improved significantly immediately after the camp, and the gains were sustained 6 months later (Table 2 and Figure 1). This level of user confidence also remained significantly higher than baseline, without variation, in nearly every subgroup evaluated (Supplementary S4).
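The response rates and the 1314-survey total above follow directly from the Table 1 counts. The sketch below reproduces that arithmetic and shows the complete-case restriction (keeping only participants present in all 3 survey waves) as a set intersection. The participant IDs in the example are hypothetical, and this is not the authors' REDCap export logic. Because the Table 2 counts differ by skill, the restriction appears to have been applied per skill item, in which case the same intersection would be taken separately for each skill.

```python
from typing import Dict, Set

ENROLLED = 673
# Completed surveys per wave, from Table 1.
COMPLETED = {"pre": 604, "post": 471, "six_month": 239}


def response_rates(completed: Dict[str, int], enrolled: int) -> Dict[str, int]:
    """Whole-percent response rate for each survey wave."""
    return {wave: round(100 * n / enrolled) for wave, n in completed.items()}


def complete_cases(responders: Dict[str, Set[str]]) -> Set[str]:
    """IDs of participants who answered every wave (intersection of the sets)."""
    return set.intersection(*responders.values())


print(response_rates(COMPLETED, ENROLLED), sum(COMPLETED.values()))
```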

Table 2.

Change in self-assessed proficiency

| Skill | Participants, No.ᵇ | Proficiency change, before to after reBoot camp: mean change (mean before, mean after)ᵃ | 95% CI | P value | Proficiency change, before to 6 mo after reBoot camp: mean change (mean before, mean 6 mo after)ᵃ | 95% CI | P value |
|---|---|---|---|---|---|---|---|
| General navigation | 163 | 13.5 (58.5, 72.0) | 11.4–15.6 | <.001 | 11.3 (58.5, 69.8) | 9.1–13.6 | <.001 |
| Documentation | 158 | 12.7 (57.7, 70.4) | 10.5–14.9 | <.001 | 11.1 (57.7, 68.8) | 8.8–13.3 | <.001 |
| Order entry | 150 | 14.6 (56.4, 71.0) | 12.3–16.9 | <.001 | 13.1 (56.4, 69.5) | 10.6–15.6 | <.001 |
| Medications | 150 | 12.8 (57.4, 70.1) | 10.4–15.1 | <.001 | 12.7 (57.4, 70.0) | 10.2–15.1 | <.001 |
| In-basket | 163 | 18.3 (52.3, 70.5) | 15.7–20.9 | <.001 | 13.9 (52.3, 66.2) | 10.8–17.0 | <.001 |
| Reports | 132 | 10.1 (24.1, 34.2) | 6.1–14.2 | <.001 | 10.8 (24.1, 34.9) | 6.3–15.3 | <.001 |

ᵃ Skills self-assessed on a 0–100 scale.

ᵇ No. of participants who completed all 3 surveys.

Figure 1.

Mean results of self-assessed skill scores for 6 main skills. Skills were assessed on a 0–100 scale. The shaded region represents the 95% CI for the mean.

In addition to improving their skills, participants reported high overall satisfaction with the program and a positive effect on their practice. On the immediate postparticipation surveys, 97% (580/598) of respondents stated that they would recommend the reBoot camp to a colleague, and 92% (550/598) answered that the most important topic they wanted covered was addressed. Nearly 70% of responding participants (399/573) rated the impact as 70 or higher (0–100 scale) when asked to evaluate the “impact of this program on the ability to run a practice at Mayo Clinic.” In the 6-month postsurvey, participants were asked whether they had shared concepts, tips, tricks, and configurations with colleagues; 94% (249/264) of responding participants reported sharing at least 1 item they learned with others (as was encouraged during the event). Most shared informally, but 23% of those who shared (58/249) stated that they shared tips and tricks in a formal setting (scheduled mentoring time, departmental meetings, and similar dedicated venues) (Supplementary S5).

The skills participants were most interested in developing were identified in the preassessment survey and are reported in Supplementary S5. Although the program was approved for CME, only 39% of participants reported use of CME time allocation to attend. Another 10% did not know how their time was allocated. The other 51% reported that department time, vacation, or other methods were used to allocate time.

Objective measures

At baseline (March 2019), 58.7% of the total Mayo Clinic general population (2947/5070) achieved the gold level of USAL, increasing to 66.8% in December 2019 and 67.0% in December 2020 (Figure 2). A greater proportion of program participants than of the general population had achieved the gold level both before camp (76.9–89.2%) and after camp (96.7–99.2%). Compared with the rate of increase in the general Mayo Clinic population (+8.3% from March 2019 to December 2019 and +0.2% from December 2019 to December 2020), the proportion achieving the gold level increased significantly more in cohort 2 (March 2019 to December 2019: +19.8% [P<.001]) and cohort 3 (December 2019 to December 2020: +7.5% [P<.001]).
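The cohort-versus-population comparison above corresponds to the group × time interaction in the logistic regression described in the Analysis section. The sketch below is a simplified illustration under stated assumptions, not the authors' model: it handles one cohort and one pair of time points at a time, treats every snapshot as an independent observation (ignoring that the same practitioners are measured twice), and uses hypothetical counts because per-cell counts are not reported. With 2 binary predictors and their interaction, the model is saturated, so the maximum likelihood estimates have a closed form.

```python
import math
from statistics import NormalDist
from typing import Tuple

Cell = Tuple[int, int]  # (number at gold level, number not at gold level)


def log_odds(cell: Cell) -> float:
    gold, not_gold = cell
    return math.log(gold / not_gold)


def interaction_test(
    ref_before: Cell, ref_after: Cell, grp_before: Cell, grp_after: Cell
) -> Tuple[float, float, float]:
    """Group x time interaction from a saturated logistic model on a 2 x 2 design.

    The fitted log-odds in each cell equal the observed log-odds, so the
    interaction coefficient is the group's change in log-odds minus the
    reference population's change, and its Wald SE is the square root of the
    summed reciprocal cell counts. Returns (coefficient, odds ratio, two-sided P).
    """
    cells = (ref_before, ref_after, grp_before, grp_after)
    if any(count == 0 for cell in cells for count in cell):
        raise ValueError("zero cell: the saturated-model estimate does not exist")
    beta = (log_odds(grp_after) - log_odds(grp_before)) - (
        log_odds(ref_after) - log_odds(ref_before)
    )
    se = math.sqrt(sum(1.0 / count for cell in cells for count in cell))
    p = 2.0 * (1.0 - NormalDist().cdf(abs(beta / se)))
    return beta, math.exp(beta), p
```

An odds ratio above 1 means that the cohort's odds of reaching the gold level rose more than the reference population's. Because repeated snapshots of the same practitioners are correlated, a repeated-measures approach (for example, generalized estimating equations) would give more defensible standard errors than this independence assumption.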

Figure 2.

User settings achievement level. The graphed lines show the percentage of practitioners achieving gold level for system personalization as determined by user metadata analysis. The symbols indicate each cohort’s percent of members with gold scores at the time of measurement releases from Epic. Open symbols indicate values for that cohort before any reBoot camp intervention.

The mean of the participants’ PS data changed significantly when scores from before and after camp were compared. The mean increase was 0.38 (6.09–6.47; P<.001) from 1 month before to 1 month after reBoot camp, and this increase was sustained at 6 months (0.39 increase from 6.09 to 6.48; P<.001). As of January 31, 2021, 90.1% of participants (118/131) who completed courses in 2018 were still employed at Mayo Clinic, 96.9% of participants (381/393) who completed courses in 2019 were still employed, and 100% of participants (149/149) who completed courses in 2020 were still employed.

DISCUSSION

The reBoot camp program at Mayo Clinic successfully improved user confidence, personalization, and proficiency in the EHR. The study showed significant improvements in self-assessed skill scores for general use of the EHR and for each of the 5 skill domains surveyed (documentation, orders, medications, in-basket, and reports). These gains were largely maintained at 6 months and remained significantly higher than baseline, without variation in nearly every subgroup evaluated; the only exception was the improvement in self-assessed in-basket skill, which was greater for physicians than for other providers (Supplementary S4). A practitioner’s overall clinical confidence greatly influences patient experiences11 and allows clinicians to set an atmosphere that will help achieve the best patient care.12–14 Our objective measures showed that participants improved their personal configurations in the EHR. Our USAL target was achieved by 97% of participants, a greater proportion than among Mayo Clinic practitioners in general, and participants adopted the personalization measured by the USAL at an increased rate. The PS is a more robust measure, indicating actual use of the tools measured by the USAL. This score also increased significantly from before to after the reBoot camps and was sustained at 6-month follow-up, indicating ongoing optimization and customization by trained participants.

Mayo Clinic’s reBoot camp was specifically geared toward reiterating fundamental principles, efficiency tips, and best-practice configurations across multiple specialties and all Mayo Clinic sites. Participants’ clinical practices ranged widely, from small community critical-access hospitals to large academic destination medical centers, and across primary care, medical specialties, and surgical specialties. Having practitioners from various specialties in the reBoot camps allowed for identification of departmental-level build opportunities, alternative workflow considerations, appreciation of interdepartmental nuances for shared care models, and general maturation in cross-disciplinary camaraderie. A distinctive feature of the course design was the use of peer instructors, which helped ensure that the material applied in real clinical settings. In addition, peer instructors understood and could explain the importance of each EHR click, including clicks required for administrative and other reasons (clinical research reporting, payor, regulatory, clinical decision support). The instructors were encouraged to share their own struggles and concerns, which created a safe environment for others’ questions and crucial conversations. The program’s founders serve on various Mayo Clinic EHR governance committees, which allows real-world feedback to inform decisions and platform change requests that better meet the needs of end users.

The program had direct practice applicability, as shown by the 6-month postcamp survey results, in which nearly one-third of participants rated the “impact of the program on running my practice at Mayo Clinic” as greater than 90 on a 0–100 scale. This finding was consistent with free-text postsurvey comments that described the training experience as “revolutionary,” “mind blowing,” “best ever,” “practice transforming,” and “spectacular.”

Giving CME credit to participants is a small incentive for completing the training and an avenue to justify the time away from clinical duties to participate. The actual time allocations were not recorded for analysis, but participants were surveyed about how they allocated their time for the reBoot camp. Only 39% reported that they used their CME time allocation to participate, and nearly as many reported that the time used to improve their skills was directly supported by their department. Finding the time to participate in the reBoot camps remains a critical bottleneck for some practitioners, and ongoing evaluations are needed to find solutions.

A substantial limitation in generalizing our findings was self-selection of participants. Participation in reBoot camps was voluntary; thus, we enrolled clinicians who were motivated to improve their EHR use, to engage, and to spend the time needed to improve their skills. This limitation was reflected in the higher pretraining USAL scores of reBoot camp participants compared with the general Mayo Clinic practitioner group. However, given the ongoing demand and high enrollment rates for the reBoot camps, we have not yet reached saturation of the self-selected population of motivated learners, and word of mouth continues to engage potential participants who hear success stories from previous program participants. The project was also limited by the lack of a financial analysis of the program’s cost-effectiveness. In addition, the reBoot camp was a training program designed to meet the needs of Mayo Clinic at a specific time after EHR implementation, and transferring its design and implementation to other organizations may yield different results. The specific design will vary with scale, timing, resources, vendor, system maturity, and other organizational factors. A final limitation is that we purposefully did not include burnout and wellness results. Because of their complexity, these data were not directly evaluated or included in this report; a dedicated analysis of this complex and important issue is warranted.

The return on investment is a complex and multifaceted question, and attributing changes to training alone would be misleading. However, turnover among reBoot camp participants during the study period (as of January 30, 2021) was low (<1% for those at 1 year, <5% for those at 2 years, and <10% for those at 3 years), lower than Mayo Clinic’s average turnover rate of 6% per year during the same period and other clinician turnover rates reported in the literature.15 The EHR has been implicated as a contributor to physician burnout,16 and burnout has been associated with turnover.16,17 It is plausible that effective EHR training may also reduce turnover associated with burnout, although specific studies are needed to determine whether this is true.
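The turnover thresholds quoted above can be reproduced from the retention counts reported in the Results. The sketch below assumes that participants from the 2020, 2019, and 2018 courses correspond to roughly 1, 2, and 3 years of follow-up, which is our reading of the text rather than a stated definition. The values are cumulative proportions, not annualized rates, so comparing them with a per-year turnover rate requires further assumptions.

```python
# Retention counts from the Results: course year -> (still employed, completed course).
RETENTION = {2018: (118, 131), 2019: (381, 393), 2020: (149, 149)}
# ASSUMPTION: course year -> approximate years of follow-up at data extraction.
FOLLOW_UP_YEARS = {2020: 1, 2019: 2, 2018: 3}


def cumulative_turnover(retained: int, total: int) -> float:
    """Percentage of a course-year cohort no longer employed."""
    return 100.0 * (total - retained) / total


for year, (retained, total) in sorted(RETENTION.items()):
    pct = cumulative_turnover(retained, total)
    print(f"{year}: ~{FOLLOW_UP_YEARS[year]} y follow-up, {pct:.1f}% cumulative turnover")
```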

CONCLUSION

ReBoot camps are a well-received and effective solution for ongoing training needs. Our data showed significant improvement in EHR skill confidence and in objective measures of EHR personalization and proficiency. Our data also suggest that the reBoot camp model may help reduce practitioner turnover and improve physician interactions, although these associations require dedicated study. Key tenets of the reBoot camp were practitioner peer-to-peer instruction, blocked time, a specific curriculum, and time for live-environment build and configuration. Although cost-effectiveness is difficult to measure and requires formal future evaluation, we believe this highly effective training model could be applied at other health care organizations.

FUNDING

This project was, in part, supported by NIH/NCRR/NCATS CTSA (grant number UL1 TR002377). Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the NIH. The funding organization had no involvement in the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; or the decision to submit the manuscript for publication.

AUTHOR CONTRIBUTIONS

All authors approved the final version of the manuscript. No funding or administrative, technical, and material support were required. The authors made the following individual contributions to the manuscript. JEG: Study concept and design, acquisition of data, analysis and interpretation of data, drafting of the manuscript, critical revision of the manuscript for important intellectual content, and statistical analysis. SMB: Study concept and design, acquisition of data, analysis and interpretation of data, drafting of the manuscript, critical revision of the manuscript for important intellectual content, and statistical analysis. DLA: Acquisition of data and drafting of the manuscript. DB: Study concept and design, acquisition of data, analysis and interpretation of data, and drafting of the manuscript. RF: Acquisition of data and drafting of the manuscript. NMG: Acquisition of data and drafting of the manuscript. JH: Study concept and design, acquisition of data, analysis and interpretation of data, and drafting of the manuscript. SBL: Analysis and interpretation of data, drafting of the manuscript, and critical revision of the manuscript for important intellectual content. KAL: Acquisition of data and drafting of the manuscript. JKR: Analysis and interpretation of data, drafting of the manuscript, critical revision of the manuscript for important intellectual content, and statistical analysis. JCO: Study concept and design, acquisition of data, analysis and interpretation of data, drafting of the manuscript, critical revision of the manuscript for important intellectual content, and statistical analysis.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.


ACKNOWLEDGMENTS

Many persons contributed to the success of the reBoot camp program and helped with this paper. The authors would like to acknowledge the contributions of Mayo Clinic staff members Sarah H. Daniels, MS, BRMP, Michael W. Wright, and the entire team of Mayo Clinic reBoot camp instructors, informaticists, instructional designers, coordinators, and end-user support analysts. We also thank Thomas D. Thacher, MD, and the team at Mayo Clinic Department of Family Medicine for their time and support of this research. Marianne Mallia, ELS, MWC, senior scientific/medical editor, Mayo Clinic, substantively edited the manuscript. The Scientific Publications staff at Mayo Clinic provided proofreading, administrative, and clerical support. None of the authors, coauthors, or those mentioned in the acknowledgments received third-party direct or indirect compensation for their work on the study or assistance in the development, writing, or revisions of the manuscript.

CONFLICT OF INTEREST STATEMENT

JCO has received grants from Nference, Inc and the MITRE Corporation, as well as consulting fees from Elsevier, Inc and Bates College, none of which were related to the present work. None of the other authors have conflicts to declare or funding to disclose.

Contributor Information

Joel E Gordon, Chief Medical Information Officer, Mayo Clinic Health System Administration, Rochester, Minnesota, USA; Family Practice, Mayo Clinic Health System—Southwest Minnesota Region, Mankato, Minnesota, USA.

Sylvia M Belford, Clinical Systems Education, Mayo Clinic, Rochester, Minnesota, USA.

Dawn L Aranguren, Division of Medical Oncology, Mayo Clinic, Rochester, Minnesota, USA.

David Blair, Family Medicine, Mayo Clinic Health System—Northwest Wisconsin Region, Bloomer, Wisconsin, USA.

Richard Fleming, Family Practice, Mayo Clinic Health System—Southwest Minnesota Region, Mankato, Minnesota, USA.

Nikunj M Gajarawala, Department of Urology, Mayo Clinic, Jacksonville, Florida, USA.

Jon Heiderscheit, Chief Information Officer, Mayo Clinic Health System—Southwest Wisconsin Region, Onalaska, Wisconsin, USA; Family Medicine, Mayo Clinic Health System—Southwest Wisconsin Region, Onalaska, Wisconsin, USA.

Susan B Laabs, Family Practice, Mayo Clinic Health System—Southwest Minnesota Region, Mankato, Minnesota, USA.

Kathryn A Looft, Clinical Systems Education, Mayo Clinic Health System—Southwest Minnesota Region, Mankato, Minnesota, USA.

Jordan K Rosedahl, Division of Clinical Trials and Biostatistics, Mayo Clinic, Rochester, Minnesota, USA.

John C O’Horo, Division of Infectious Diseases, Mayo Clinic, Rochester, Minnesota, USA; Division of Pulmonary and Critical Care Medicine, Mayo Clinic, Rochester, Minnesota, USA.

Data Availability

JEG and SMB had full access to all data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis. They take responsibility for the integrity of the work as a whole, from inception to published article. All relevant data were included in the manuscript.

REFERENCES

- 1. Robinson KE, Kersey JA. Novel electronic health record (EHR) education intervention in large healthcare organization improves quality, efficiency, time, and impact on burnout. Medicine (Baltimore) 2018; 97 (38): e12319.

- 2. Brown PC, Roediger HL, McDaniel MA. Make It Stick: The Science of Successful Learning. Cambridge, MA: The Belknap Press of Harvard University Press; 2014.

- 3. Sieja A, Markley K, Pell J, et al. Optimization sprints: improving clinician satisfaction and teamwork by rapidly reducing electronic health record burden. Mayo Clin Proc 2019; 94 (5): 793–802.

- 4. Kirshner M, Salomon H, Chin H. One-on-one proficiency training: an evaluation of satisfaction and effectiveness using clinical information systems. AMIA Annu Symp Proc 2003; 2003: 366–70.

- 5. Madson R. Mayo Clinic completes installation of Epic electronic health record. 2018. https://newsnetwork.mayoclinic.org/discussion/mayo-clinic-completes-installation-of-epic-electronic-health-record/. Accessed February 2, 2021.

- 6. In the Loop. iNEED program turning providers into electronic record rock stars. 2015. https://intheloop.mayoclinic.org/2015/07/09/ineed-program-turning-providers-into-emr-rock-stars/. Accessed April 12, 2021.

- 7. Dastagir MT, Chin HL, McNamara M, Poteraj K, Battaglini S, Alstot L. Advanced proficiency EHR training: effect on physicians’ EHR efficiency, EHR satisfaction and job satisfaction. AMIA Annu Symp Proc 2012; 2012: 136–43.

- 8. KLAS Research. Arch Collaborative. 2021. https://klasresearch.com/arch-collaborative. Accessed July 23, 2020.

- 9. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap): a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009; 42 (2): 377–81.

- 10. Harris PA, Taylor R, Minor BL, REDCap Consortium, et al. The REDCap consortium: building an international community of software platform partners. J Biomed Inform 2019; 95: 103208.

- 11. Bendapudi NM, Berry LL, Frey KA, Parish JT, Rayburn WL. Patients’ perspectives on ideal physician behaviors. Mayo Clin Proc 2006; 81 (3): 338–44.

- 12. Driskell JE, Salas E. Stress and Human Performance. Mahwah, NJ: Lawrence Erlbaum Associates; 1996.

- 13. Estrada CA, Isen AM, Young MJ. Positive affect facilitates integration of information and decreases anchoring in reasoning among physicians. Organ Behav Hum Decis Process 1997; 72 (1): 117–35.

- 14. Curtin LL. The Yerkes-Dodson law. Nurs Manage 1984; 15 (5): 7–8.

- 15. Willard-Grace R, Knox M, Huang B, Hammer H, Kivlahan C, Grumbach K. Burnout and health care workforce turnover. Ann Fam Med 2019; 17 (1): 36–41.

- 16. Shanafelt TD, Dyrbye LN, Sinsky C, et al. Relationship between clerical burden and characteristics of the electronic environment with physician burnout and professional satisfaction. Mayo Clin Proc 2016; 91 (7): 836–48.

- 17. Shanafelt TD, Mungo M, Schmitgen J, et al. Longitudinal study evaluating the association between physician burnout and changes in professional work effort. Mayo Clin Proc 2016; 91 (4): 422–31.
