Abstract

Machine learning findings suggest Eurocentric (aka White/European) faces structurally resemble anger more than Afrocentric (aka Black/African) faces (e.g., Albohn, 2020; Zebrowitz et al., 2010); however, Afrocentric faces are typically associated with anger more so than Eurocentric faces (e.g., Hugenberg & Bodenhausen, 2003, 2004). Here, we further examine counter-stereotypic associations between Eurocentric faces and anger, and Afrocentric faces and fear. In Study 1, using a computer vision algorithm, we demonstrate that neutral European American faces structurally resemble anger more and fear less than do African American faces. In Study 2, we then found that anger- and fear-resembling facial appearance influences perceived racial prototypicality in this same counter-stereotypic manner. In Study 3, we likewise found that imagined European American versus African American faces were rated counter-stereotypically (i.e., more like anger than fear) on key emotion-related facial characteristics (i.e., size of eyes, size of mouth, overall angularity of features). Finally in Study 4, we again found counter-stereotypic differences, this time in processing fluency, such that angry Eurocentric versus Afrocentric faces and fearful Afrocentric versus Eurocentric faces were categorized more accurately and quickly. Only in Study 5, using race-ambiguous interior facial cues coupled with Afrocentric versus Eurocentric hairstyles and skin tone, did we find the stereotypical effects commonly reported in the literature. These findings are consistent with the conclusion that the “angry Black” association in face perception is socially constructed in that structural cues considered prototypical of African American appearance conflict with common race-emotion stereotypes.

Keywords: Emotion recognition, Face perception, Racial stereotypes

In a now well-known incident from 1999, four plain-clothed police officers gunned down an innocent African immigrant outside his apartment building in New York City. Amadou Diallo was unarmed, standing outside his apartment late at night, as a gang of four European American men approached him. Diallo likely thought he was about to be robbed. He reached for his wallet, likely to avoid conflict, and was promptly shot to death. In his book Blink (2005), Malcolm Gladwell made the point that at the moment right before being shot, Diallo’s expression was likely one of sheer terror. Yet, the police perceived him to be a direct and immediate threat. How did the officers misread this young man’s expression? This question has haunted science and society since. Over 20 years later, police continue to kill unarmed African Americans at alarming rates. African Americans make up only 13% of the US population, yet they account for nearly 24% of all fatal police shootings (Edwards et al., 2020). A recent analysis suggests that the probability of being fatally shot by police is higher for unarmed African Americans than armed European Americans, a bias that cannot be solely attributed to area-specific crime rates (Ross, 2015).

To date, it has been well documented that anger is perceived more in African American than European American faces. For instance, Hugenberg and Bodenhausen (2003) found that European American individuals who were higher in implicit racial bias perceived race-ambiguous anger expressions as emerging more quickly and lingering longer when presented on faces with darker skin tone and African versus European hairstyles. Conversely, European Americans who were higher in implicit bias were more likely to categorize racially ambiguous anger expressions as “Black” versus “White” (Hugenberg & Bodenhausen, 2004). Hutchings and Haddock (2008) later found that just labeling racially ambiguous faces as “Black” versus “White” led European Americans to perceive more anger in a face.

Despite evidence for this “angry Black” stereotype, advances in machine learning have led to some counterintuitive findings with regard to race and emotional expressions. For instance, using a connectionist model, Zebrowitz et al. (2010) examined structural cues in neutral faces that resemble emotional expression. In a set of 360 faces, they found that White (European and European American) neutral faces appeared angrier than Black (African and African American) and Korean faces, but Black faces appeared more surprised and happier than White faces. Further, when controlling for related appearance cues, the tendency for Black faces to be rated as relatively more hostile became even stronger, suggesting that Afrocentric features in the face actually attenuated stereotypic associations of hostility. To our knowledge, this represents the earliest evidence demonstrating that appearance cues associated with African American facial appearance can suppress the common race-emotion stereotypes.

That emotion-resembling cues in facial appearance influence the perceptual processing of overt emotional expressivity has also been well documented. For instance, emotion, gender, facial maturity, and age have all been found to give rise to similar perceptual impressions due to shared appearance cues. These social cues not only share similar social meaning, but they do so by physically resembling one another. Prototypical anger and fear expressions share perceptual resemblances to facial maturity and gender-related cues in the face (e.g., through eye size, mouth size, and facial angularity; Adams et al., 2012; Hess et al., 2004, 2005, 2009; Marsh et al., 2005). Critically, these cues also fundamentally share signal value as cues to affiliation and dominance: facial roundness, large eyes, and a large mouth are perceived as relatively warmer, whereas angularity, small eyes, and a small mouth are perceived as relatively more threatening (see Adams et al., 2015 for review). Similarly, age-related cues in the face (wrinkles, folds) have been found to impact emotion perception and impression formation (e.g., Adams et al., 2016; Freudenberg et al., 2015; Hess et al., 2012; Palumbo et al., 2017).

All of these prior findings offer growing evidence that appearance cues in the face associated with these different social categories directly influence the emotion inferences that are subsequently made. In the current work, we investigate how emotion-resembling facial cues associated with Afrocentric and Eurocentric appearance actually run counter to common stereotypes. Here we test the common cue hypothesis (see Adams et al., 2015), which holds that emotion-resembling facial cues are associated with perceptions of different social categories (i.e., gender, age, race). In this case, prior research suggests that Eurocentric features resemble anger more and fear less than do Afrocentric features. We test this hypothesis across four studies.

In Study 1, we replicate and extend Zebrowitz et al.’s (2010) prior machine learning findings using a more contemporary algorithm and training methodology to reduce potential bias. In Study 2, we extend these findings to examine ratings of racial prototypicality of Afrocentric versus Eurocentric faces that were both manipulated to resemble anger versus fear. In Study 3, we contrast and compare common appearance cues associated with imagined facial expressions as well as with imagined race-, gender-, and maturity-related facial appearance. In Study 4, we extend this further by examining how facial appearance influences the processing fluency of emotional expression in a speeded reaction time task. All of these studies offer converging evidence that Eurocentric facial appearance is associated with relatively more anger-resembling cues, whereas Afrocentric facial appearance is associated with relatively more fear-resembling cues, in direct contrast to the common hostile Black stereotype that has been reported in the prior literature. Only in Study 5, where we employed race-ambiguous interior faces expressing anger and fear presented within faces with darker versus lighter skin tone and Afrocentric versus Eurocentric hairstyles, do we replicate the stereotypical finding commonly found in the literature, showing relatively faster and more accurate responses to Afrocentric faces expressing anger and Eurocentric faces expressing fear. Critically, like our Study 5 here, the previously published research examining race-emotion associations has consistently utilized facial stimuli that are either race ambiguous or visually impoverished in some manner.

Study 1: Counter-Stereotypic Race-Based Emotion Resemblance Algorithmically Derived from Neutral Facial Appearance

Overview

The goal of Study 1 was to replicate and extend the work of Zebrowitz et al. (2010) by utilizing contemporary machine learning models, a more diverse set of training stimuli, and by applying the trained model to a diverse and robust set of test stimuli.

Method

Reducing Bias in Computer Vision

Computer vision and machine learning research has seen tremendous progress in the past decade. Computer vision models are becoming both increasingly complex and more readily available to researchers and practitioners outside of the fields typically associated with computer vision, such as computer science. However, issues related to perceptual bias and subjectivity have been raised alongside the field's increased popularity and accessibility. As such, it is paramount that precautions be taken to reduce any bias present in a model, as well as to prevent the misuse of models (e.g., using them for predictions beyond what they were trained for or disseminating inaccurate knowledge related to such models).

“Bias” in machine learning is often discussed with regard to models that are trained on data containing a “ground truth.” For example, a model trained to detect faces in images has the ground truth of a face being present or not in the image. This training label (face present/face not present) is largely indisputable and highly reliable across human labelers compared to more subjective labels (e.g., which emotion expression is present). Bias exists in these models when the model, trained on a specific group of face images, fails to generalize to other images. For example, an algorithm may fail to recognize faces of African or Asian descent as real faces because the training set consisted mostly of individuals of European descent. Clearly, such bias in machine learning models is highly undesirable and can lead to extreme errors such as mistaking a member of the U.S. Congress for a criminal (Snow, 2018) or mislabeling a person of African descent as a “gorilla” (Simonite, 2018).

With these issues surrounding bias in machine learning in mind, we have made explicit attempts to avoid the potential for similar biases in the current work. As there is no “ground truth” for emotion expression classification (i.e., it is subjective and depends on the individual perceiver), the goal of our model is to predict the overall consensus that a specific facial configuration represents a valid emotion expression. We acknowledge that this could allow room for biases, and have employed stringent measures to avoid these being systematically related to race. To do this, we decomposed facial expressions into the structure (i.e., shape) that each represents. Because the model was trained only on structure information associated with emotional expressions (across a wide range of different race, age, and gendered faces), and structure information is the only information that the model has at its disposal to make predictions, it cannot use any additional information when making a prediction, unlike a human. For instance, even if a human were instructed to take only face structure into account when making a judgment, it is unlikely that the individual could avoid utilizing other information present on the face (e.g., skin tone, wrinkles, gender), and they would most likely incorporate such information into their judgment. Thus, the current model (described below) was specifically trained to avoid race- and gender-emotion stereotypes when making predictions regarding emotion resemblance.

We believe that our model addresses this concern by including a relatively large image training set that consists of many individual faces that vary in gender, race, and age. For comparison, our model has 30 times more training stimuli and nearly four times as many predictors as Zebrowitz et al.’s (2010) prior connectionist model. This reflects our attempt to replicate and extend that work in a more robust model that accounts for concerns about potential biases that have been raised since Zebrowitz et al.’s original research was conducted.

Computer Vision Model and Training Stimuli

A generalized linear model (GLM) was trained with the H2O machine learning platform (LeDell et al., 2019) for R (R Core Team, 2019) on the facial structures of more than 1,600 expressive images (~300 per emotion) varying in race, age, and gender. Expressive faces of anger, disgust, fear, joy, sadness, and surprise were selected from standardized image sets of facial expressions to maintain high-quality training data. These image sets included FACES (Ebner et al., 2010), NIMSTIM (Tottenham et al., 2009), Chicago Face Database (Ma et al., 2015), RAFD (Langner et al., 2010), and Emotionet (Benitez-Quiroz et al., 2017). Sixty-eight facial landmarks used for training were automatically extracted from the cropped interior portion of the face using ensemble regression trees (Kazemi & Sullivan, 2014). The interior portion of the face was used so that extraneous features such as hair, background, and clothing were not incorporated into the learning phase, thus ensuring that the models were trained strictly on expressive cues in the face. Test accuracy, assessed on faces withheld from the training step, was 85.9% (see Albohn & Adams, 2021, for full model training methodology).
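As a rough illustration of what training "only on structure" can involve, the sketch below converts a set of (x, y) landmark coordinates into a feature vector that does not depend on where the face sits in the image or how large it is. The centering and scaling steps, the function name `normalize_landmarks`, and the toy coordinates are assumptions made for illustration; the metric extraction actually used is described in Albohn and Adams (2021) and may differ.

```python
import math

def normalize_landmarks(points):
    """Center landmarks on their centroid and scale them to unit RMS distance.

    `points` is a list of (x, y) tuples (68 per face in the study; any
    count >= 2 works here). Returns a flat list [x1, y1, x2, y2, ...].
    This normalization is an illustrative assumption, not the published pipeline.
    """
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centered = [(x - cx, y - cy) for x, y in points]
    rms = math.sqrt(sum(x * x + y * y for x, y in centered) / n)
    if rms == 0:
        raise ValueError("all landmarks coincide; shape is undefined")
    return [coord / rms for point in centered for coord in point]

# Hypothetical toy shape shown at two image positions and two sizes.
small = [(0, 0), (2, 0), (1, 1), (1, 3)]
large = [(10 + 3 * x, 20 + 3 * y) for x, y in small]

features_small = normalize_landmarks(small)
features_large = normalize_landmarks(large)
```

Under these assumptions the two feature vectors agree up to floating-point error, so a classifier fed such features sees only the relative arrangement of the landmarks.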

Testing Stimuli

Neutral faces varying in race (African American and European American) and gender (male and female) were taken from the Chicago Face Database (CFD; Ma et al., 2015). Each individual face was subjected to the face metric extraction procedure detailed by Albohn and Adams (2021). The unaltered CFD African American and European American neutral faces were input into the algorithm described above. The automated feature extraction procedure resulted in facial structure metrics for 197 African American neutral faces and 183 European American neutral faces.

Results

The output of the structure model was subjected to a 2 (stimulus race: African American, European American) × 2 (stimulus gender: male, female) × 2 (predicted emotion: anger, fear) repeated measures analysis of variance (ANOVA). The model output (confidence that the face being measured is the specific emotion) represented the dependent variable. Estimated marginal means were analyzed post hoc from the model using t tests that were adjusted for multiple comparisons (where appropriate).

There was a marginal main effect of race, F(1,376) = 3.88, p = 0.050, ηp² = 0.01. African American neutral faces (M = 0.26) were predicted by the model to be overall more expressive than European American neutral faces (M = 0.24). There was also a main effect of face gender, F(1,376) = 18.10, p < 0.001, ηp² = 0.05. Female neutral faces (M = 0.28) were predicted by the model to be overall more expressive than male neutral faces (M = 0.23). Finally, there was a main effect of emotion, with larger predictions for fear (M = 0.31) than for anger (M = 0.19), F(1,376) = 3.88, p = 0.050, ηp² = 0.01.

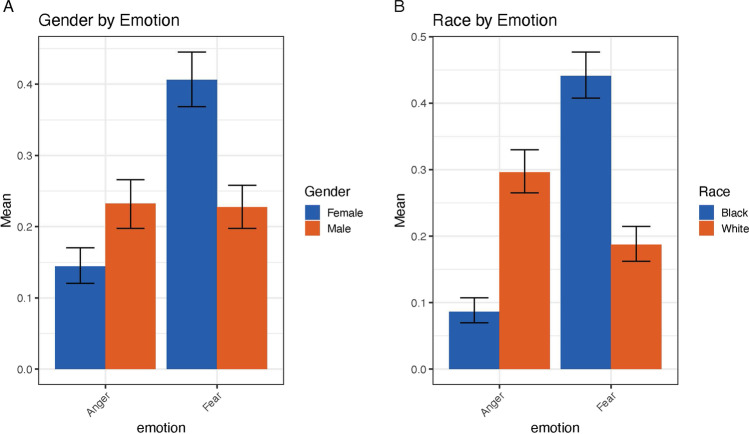

There was no race by gender interaction, F(1,376) = 0.16, p = 0.686, ηp² < 0.001. However, there was the predicted gender by emotion interaction, F(1,376) = 55.23, p < 0.001, ηp² = 0.13, as well as a race by emotion interaction, F(1,376) = 181.35, p < 0.001, ηp² = 0.33. Consistent with previous stereotypical findings, female neutral faces (M = 0.40) were found to structurally resemble fear expressions more than male neutral faces (M = 0.23; t(628) = 8.56, p < 0.001, d = 0.34), and male neutral faces (M = 0.23) structurally resembled anger expressions more than female neutral faces (M = 0.15; t(628) = 4.08, p < 0.001, d = 0.16). Likewise, and consistent with prior machine learning counter-stereotypical findings, European American neutral faces (M = 0.30) structurally resembled anger expressions more than African American neutral faces (M = 0.09; t(628) = −10.41, p < 0.001, d = 0.42), while African American neutral faces (M = 0.44) structurally resembled fear expressions more than European American neutral faces (M = 0.19; t(628) = 12.48, p < 0.001, d = 0.50). The two two-way interactions are presented in Fig. 1. Finally, there was no three-way interaction between face race, face gender, and emotion, F(1,376) = 0.17, p = 0.680, ηp² < 0.001.
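For readers who want to check the effect sizes that accompany the F tests above, the short sketch below computes partial eta squared from an F statistic and its degrees of freedom. Treating the reported effect sizes as partial eta squared is an inference from the reported numbers rather than something stated in the text, and the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# F values taken from the Study 1 results, each with df = (1, 376).
reported = {
    "race main effect": 3.88,
    "gender x emotion": 55.23,
    "race x emotion": 181.35,
}

effect_sizes = {
    label: round(partial_eta_squared(f_value, 1, 376), 2)
    for label, f_value in reported.items()
}
print(effect_sizes)
```

Under this assumption the three values round to 0.01, 0.13, and 0.33, matching the effect sizes reported for those tests.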

Fig. 1.

Computer vision predictions of emotion resemblance based on neutral facial images: Interaction between face gender (A), face race (B), and predicted emotion. y-axes represent model confidence that the neutral face is displaying the predicted emotion (x-axes). Error bars represent 95% confidence intervals. Panel A shows predicted stereotypical common cues associated with male faces and anger expressions and female faces and fear expressions, whereas panel B reveals predicted counter-stereotypical common cues associated with European American faces and anger and African American faces and fear

Summary

Zebrowitz et al. (2010) reported that White neutral faces structurally resembled anger expressions more than Black neutral faces, and Black neutral faces structurally resembled happy and surprise expressions more than White neutral faces. This observation stands in direct contrast to human-based impressions, including those found by Zebrowitz et al. (2010) using human raters. The current study replicated and extended these previous results by finding stereotypic gender-emotion and counter-stereotypic race-emotion results. First, male neutral faces structurally resembled anger expressions more than female neutral faces, whereas female neutral faces structurally resembled fear more than male neutral faces, replicating well-documented stereotypic associations between gender and emotion in the face (see also Adams et al., 2015; Becker et al., 2007; Hess et al., 2009). Critically, in line with the common cue hypothesis, the counter-stereotypic effect was also replicated here, such that European American neutral faces structurally resembled anger more than African American faces, whereas African American neutral faces structurally resembled fear expressions more than European American neutral faces.

Study 2: Counter-Stereotypic Effects of Emotion Resemblance on Perceived Race Prototypicality

Overview

Building on our computer vision findings, Study 2 introduced anger- and fear-resembling cues into otherwise neutral Afrocentric and Eurocentric faces to examine the influence of these emotion cues on judgments of race prototypicality. As a manipulation check, we also applied the machine learning algorithm described above, which additionally allowed us to examine whether the findings of Study 1 extend to the stimuli used here. Following the common cue hypothesis and the computer vision findings from Study 1, we predicted that anger-resembling cues would make Eurocentric faces appear more racially prototypical, whereas fear-resembling cues would make Afrocentric faces appear more racially prototypical.

Method

Preliminary Power Analysis

Based on a sample of 17 participants who participated in a preliminary study, we computed a power analysis using G*Power (Faul et al., 2009) to determine an adequate sample size. We focused the power analysis on the predicted race by emotion interaction, F(1,16) = 21.25, p < 0.001, ηp² = 0.53. To replicate this effect with power (1 − β) of 0.80 at α = 0.05, this analysis determined that a sample size of at least 10 participants would be necessary. Because the current study was run online due to the COVID pandemic, and the preliminary study was run in the lab, we oversampled to ensure sufficient power.
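G*Power's ANOVA procedures take Cohen's f as the effect size input, so a reported proportion-of-variance effect size has to be converted before a power analysis of this kind can be run. The sketch below shows the standard conversion, assuming the value of 0.53 reported for the preliminary interaction is a partial eta squared; the function name is hypothetical, and the sketch does not attempt to reproduce the sample size calculation itself.

```python
import math

def cohens_f_from_partial_eta_squared(eta_p2):
    """Convert partial eta squared to Cohen's f: f = sqrt(eta / (1 - eta))."""
    if not 0 <= eta_p2 < 1:
        raise ValueError("partial eta squared must lie in [0, 1)")
    return math.sqrt(eta_p2 / (1 - eta_p2))

# Effect size reported for the preliminary race by emotion interaction.
f_effect = cohens_f_from_partial_eta_squared(0.53)
print(round(f_effect, 2))
```

An f slightly above 1 is very large by conventional benchmarks, which is in keeping with the small minimum sample size the analysis returned.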

Participants

Eighty-seven undergraduates at The Pennsylvania State University participated in the study online via Qualtrics for partial course credit. One was dropped from the analyses for responding with “1” to all stimulus ratings. Two others were dropped for taking an excessive amount of time to complete the task (6 h/5 SD above the mean and 8 h/6.9 SD above the mean). Of the remaining 84 participants, 36 identified as male and 48 as female. Sixty-two participants identified as European American, four as African American, eight as Asian, ten as Latinx, two as Middle Eastern, and one as biracial (Arab/European). The average age of the participants was 18.9 years. In all the studies presented here, participants offered informed consent to participate.

Stimuli

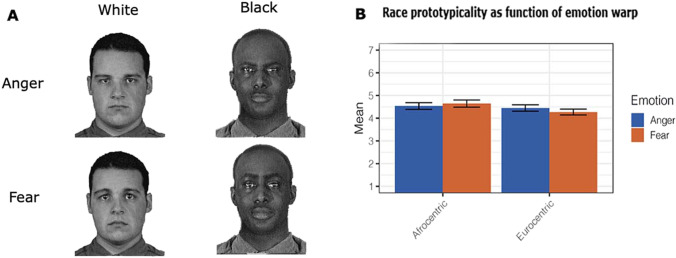

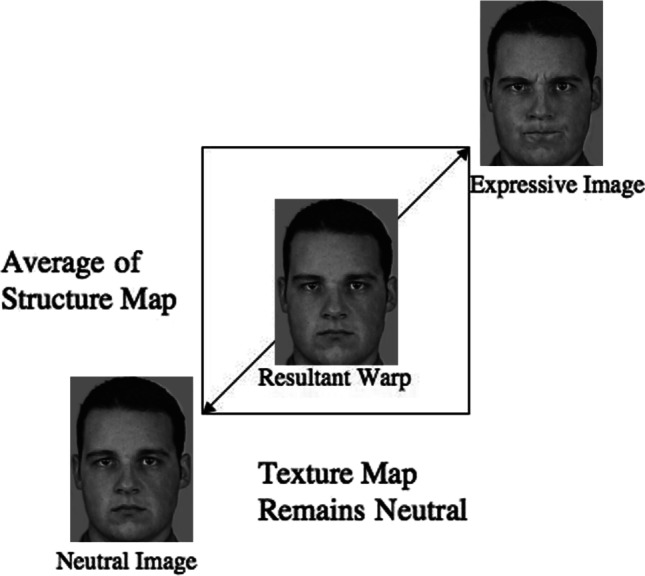

Stimuli consisted of 16 neutral faces from the Montreal Set of Facial Displays of Emotion (MSFDE), including four each of sub-Saharan African female and male models, and four each of French Canadian female and male models (Beaupré et al., 2000). Because these faces were not American, we refer to them here as Afrocentric and Eurocentric faces. We selected this stimulus set as the facial photographs are highly standardized for emotion expression using the Facial Action Coding System, as well as for lighting, size, and head orientation (same pitch, roll, and yaw), allowing our warping technique to be as precise as possible. To manipulate these faces to resemble emotion, we used the program Morph 2.5™. We applied a warping algorithm to generate a 50/50 average of the structural map of each neutral face and its corresponding anger and fear expressions, while keeping the original neutral texture map constant (see Fig. 2 for example of procedure and Fig. 3A for example stimuli; see also Adams et al., 2012 for use of similar procedure). This procedure yielded changes in the relative size and position of facial features without producing the bulging, furrows, wrinkles, and changes in contrast that are often apparent during overt displays of emotion. Warping each of the 16 neutral exemplar faces over their corresponding anger and fear expressions yielded a total of 32 stimuli. Each stimulus face was presented in grayscale on a white background and the average stimulus size was 4 × 5 in.
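The structural side of this manipulation can be pictured as a point-by-point average of two landmark sets. The sketch below is only an illustration of that idea: the internals of Morph 2.5 are not described here, and the function name `blend_shapes` and the toy brow coordinates are hypothetical. The texture of the neutral image would then be warped onto the blended shape, a step this sketch does not attempt.

```python
def blend_shapes(neutral, expressive, weight=0.5):
    """Linearly interpolate two landmark sets of equal length.

    weight=0.5 gives the 50/50 structural average described in the text;
    weight=0.0 returns the neutral shape, weight=1.0 the expressive shape.
    """
    if len(neutral) != len(expressive):
        raise ValueError("landmark sets must have the same number of points")
    return [
        ((1 - weight) * nx + weight * ex, (1 - weight) * ny + weight * ey)
        for (nx, ny), (ex, ey) in zip(neutral, expressive)
    ]

# Hypothetical inner-brow landmarks (pixels): anger lowers and draws the brows together.
neutral_brow = [(30.0, 40.0), (70.0, 40.0)]
angry_brow = [(34.0, 46.0), (66.0, 46.0)]

warped_brow = blend_shapes(neutral_brow, angry_brow)
print(warped_brow)  # [(32.0, 43.0), (68.0, 43.0)]
```

The blended points sit halfway between the neutral and expressive positions, which is why the resulting face resembles the expression structurally without displaying it overtly.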

Fig. 2.

Example of stimulus warping manipulation used in Study 2: In this example, warping is accomplished by structurally averaging a neutral image with its corresponding anger expression to generate a 50/50 average of its structural components, while holding the neutral image’s texture map constant. The resultant image shares a structural resemblance with the original anger expression, but is not overtly expressive. This figure has been reproduced, with permission, from the Montreal Set of Facial Displays, see Beaupré and Hess (2005)

Fig. 3.

Emotion-resembling facial cues and perceived race prototypicality: Panel A shows examples of emotion warped faces. Panel B shows race prototypicality ratings varying as a function of race of face and emotion-resembling cues in the face. Afrocentric faces were rated as more prototypical when warped over fear than anger expressions, and Eurocentric faces were rated as more prototypical when warped over anger than fear expressions

We chose to examine anger and fear (rather than happiness, which has often been used in prior work) for several reasons. First, they are both negative, high arousal, threat-related emotions. Second, using anger and fear avoids a possible conflation of positive and negative associations with happiness and anger. Third, prior work related to the current studies (e.g., Hugenberg & Bodenhausen, 2004) found a strong influence of race on perceptions of anger but not happiness. Fourth, anger and fear are antithetical in approach versus avoidance motivation, as well as in dominance versus affiliative orientations. They are also antithetical in certain physical expressive cues, such as squinted versus wide eyes and pursed versus widened mouth, respectively. Finally, we used a computer vision algorithm trained on expressive faces as a manipulation check for our emotion warping, as well as to test whether the race-related, emotion-resembling structural effects found in prior machine learning studies (i.e., Study 1 and Zebrowitz et al., 2010) are replicated in the current stimulus set.

Procedure

Participants were recruited from an online participant pool at The Pennsylvania State University. Once the participants enrolled in the study, they were redirected to Qualtrics™ where they were instructed to rate the racial prototypicality of the anger- and fear-warped faces on a 7-point Likert scale. Race prototypicality was defined as how Eurocentric or Afrocentric (i.e., White or Black) faces appeared, with “1” being the least and “7” being the most racially prototypical. Participants completed prototypicality ratings for all 32 faces across two counterbalanced blocks. Blocks were counterbalanced so that participants would not see the same face as both anger- and fear-warped in the same block. Within each block, faces were randomly presented in the center of the screen and remained until a response was made. After rating these faces, participants completed pilot ratings for another study before answering demographic questions.
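The block structure described above can be sketched as follows. The constraint taken from the procedure is that each face appears once per block, in its anger-warped version in one block and its fear-warped version in the other, with random presentation order within each block. The even split of anger and fear warps within a block, the function name `build_blocks`, and the seed are assumptions made for illustration.

```python
import random

def build_blocks(face_ids, seed=None):
    """Split anger- and fear-warped versions of each face across two blocks.

    Each face appears once per block: anger-warped in one block and
    fear-warped in the other. Half of the faces (an assumption) are
    anger-warped in the first block. Trial order is shuffled within blocks.
    """
    rng = random.Random(seed)
    faces = list(face_ids)
    rng.shuffle(faces)
    anger_first = set(faces[: len(faces) // 2])

    block_1 = [(face, "anger" if face in anger_first else "fear") for face in faces]
    block_2 = [(face, "fear" if face in anger_first else "anger") for face in faces]
    rng.shuffle(block_1)
    rng.shuffle(block_2)
    return block_1, block_2

# 16 exemplar faces, as in Study 2; the seed only makes the example repeatable.
block_1, block_2 = build_blocks(range(16), seed=1)
```

Swapping which block is presented first across participants would counterbalance block order; that step is left out of the sketch.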

Results

Computer Vision

To ensure that the manipulated face stimuli had structural characteristics that resembled the specific emotions with which they were warped, we subjected the fear and anger output from the machine learning model detailed in Study 1 to two separate independent t tests with warped emotion as the predictor variable. As predicted, anger warps were structurally more similar to anger expressions (M = 0.50) than to fear expressions (M = 0.07), t(15) = 4.14, p < 0.001, d = 1.11. Similarly, fear warps were structurally more similar to fear expressions (M = 0.39) than to anger expressions (M = 0.14), t(15) = −3.67, p < 0.001, d = −0.86. These findings are unsurprising given that the warping technique physically alters the structural aspects of neutral faces to map directly onto the structural aspects of the expressive faces. Thus, these findings confirm that the warping technique performed as intended. Next, we conducted two t tests to see whether anger and fear structural cues varied by race for the manipulated and non-manipulated neutral faces. As predicted, Eurocentric faces (M = 0.46) structurally resembled anger expressions more than Afrocentric faces (M = 0.18), t(46) = −2.7, p = 0.009, d = −0.78, CI [−1.36, −0.19]. Similarly, there was a trend for Afrocentric faces (M = 0.26) to structurally resemble fear expressions more than Eurocentric faces (M = 0.16), t(46) = 1.66, p = 0.052 (one-tailed), d = 0.48, CI [−0.1, 1.05]. These findings fit with those reported in Study 1, replicating them here on a smaller and different stimulus set.

Human Ratings

In order to test the central hypothesis, a 2 (stimulus race: Afrocentric, Eurocentric) × 2 (stimulus gender: female, male) × 2 (emotion: anger, fear) repeated measures ANOVA was conducted for the race prototypicality ratings. The initial analyses included participant race as a factor (European American participants versus participants of color), which resulted in no significant main effects or interactions (all ps > 0.3). Therefore, the analyses included below are collapsed across the race of the participants.

There was a significant main effect of gender such that male faces were rated as more racially prototypical (M = 4.62) than female faces (M = 4.33), F(1,82) = 20.83, p < 0.001, ηp² = 0.20. There was also a trend for Afrocentric faces (M = 4.59) to be rated as more prototypical than Eurocentric faces (M = 4.36), F(1,82) = 2.80, p = 0.098, ηp² = 0.03. No significant main effect of emotion was found, F(1,82) = 0.81, p = 0.372. These main effects were qualified by the predicted stimulus race by emotion interaction, F(1,82) = 12.80, p < 0.001, ηp² = 0.14 (see Fig. 3B). A post hoc power analysis indicated that the power to detect this effect at p < 0.05 is 0.95. No other interactions approached significance (all ps > 0.3).

To examine the nature of the stimulus race by emotion interaction, paired sample t tests were computed. As predicted, Eurocentric angry faces were rated as more prototypical looking (M = 4.44) than Eurocentric fearful faces (M = 4.28), t(83) = 3.06, p = 0.003, d = 0.34, whereas Afrocentric fearful faces (M = 4.65) were rated as more prototypical looking than Afrocentric angry faces (M = 4.54), t(83) = −2.12, p = 0.037, d = −0.23. Afrocentric fearful faces were also rated as more prototypical looking (M = 4.65) than Eurocentric fearful faces (M = 4.28), t(83) = 2.82, p = 0.006, d = 0.31. No differences were found between Eurocentric angry and Afrocentric fearful faces (p > 0.4).

Summary

The findings of Study 2 again reveal counter-stereotypic race by emotion interactions, with Eurocentric anger-resembling faces being rated as more prototypical than Eurocentric fear-resembling faces and Afrocentric fear-resembling faces being rated as more prototypical than Afrocentric anger-resembling faces.

Study 3: Race-Emotion Counter-Stereotypic Cues Perceived in Imagined Faces

Overview

Study 2 demonstrated that manipulating faces to resemble anger versus fear led to counter-stereotypic influences on perceived race prototypicality, with anger resemblance yielding greater perceived Eurocentric prototypicality and fear resemblance yielding greater perceived Afrocentric prototypicality. In Study 3, we aimed to extend these findings further by examining whether counter-stereotypic race-emotion associations are also present in people’s mental representations of faces. To examine this, we had participants imagine faces varying in race, gender, emotion, and facial maturity. Once participants had a face in mind, we asked them to rate the mental image on size of mouth, size of eyes, and overall roundness of the face, all of which are features previously found to be confounded in some gender-emotion and maturity-emotion interactions (Becker et al., 2007; Hess et al., 2009; Sacco & Hugenberg, 2009). We also had the participants rate their own mental images on perceived dominance and affiliation. Following the common cue hypothesis, we expected female versus male and youthful versus mature faces to be stereotypically rated as more similar to the pattern found for fear versus anger, respectively, whereas we predicted that imagined African American versus European American faces would again follow the counter-stereotypic pattern, i.e., imagined African American faces would be rated more like fear and less like anger than imagined European American faces.

Method

Participants

Thirty-one (20 females, 11 males; mean age = 19.03) European American undergraduate students at The Pennsylvania State University participated in the study for partial course credit.

Procedure

Between one and four participants entered the laboratory and filled out rating booklets instructing them to bring to mind images of different types of faces. Specifically, they were asked to imagine eight different prototypical faces, i.e., ones that display the features they most associate with each type of face described. These yielded ratings that allowed us to make four comparisons of interest: imagined prototypical male versus female faces, European American versus African American faces, youthful versus mature faces, and anger versus fear expressions. First, participants rated each imagined face on three facial appearance characteristics using 7-point scales: (1) roundness of the face, anchored by 1 = very angular and 7 = very round; (2) size of eyes, anchored by 1 = very small eyes and 7 = very large eyes; and (3) size of mouth, anchored by 1 = very small mouth and 7 = very large mouth. For imagined race, expression, and facial maturity faces, they were also asked to indicate the gender of each face they imagined (those results are not presented here). Finally, they were asked to imagine each face again and rate how submissive/dominant (1 = very submissive, 7 = very dominant) and how socially aloof/affiliative (1 = very socially aloof, 7 = very affiliative) it appeared.

Results

Each set of comparisons (emotion, gender, facial maturity, and race) was subjected to a MANOVA including five measures (dominance, affiliativeness, facial roundness, size of eyes, and size of mouth; see Table 1 for means, t values, and Cohen’s d associated with the univariate analyses).

Table 1.

Imagined face comparisons

| | Mean (SD) | Mean (SD) | t statistic | Cohen’s d |
|---|---|---|---|---|
| A | Fear | Anger | | |
| Dominance | 2.26 (1.15) | 6.39 (0.56) | − 17.549** | − 3.20 |
| Affiliation | 4.61 (1.80) | 2.71 (1.55) | 3.980** | 0.727 |
| Facial roundness | 4.97 (1.22) | 2.45 (1.43) | 6.685** | 2.22 |
| Mouth size | 5.26 (1.24) | 2.35 (1.43) | 8.058** | 1.47 |
| Eye size | 6.48 (.51) | 2.19 (1.22) | 17.424** | 3.18 |
| B | Female | Male | | |
| Dominance | 3.45 (1.09) | 5.58 (.81) | − 8.563** | − 1.56 |
| Affiliation | 5.58 (1.03) | 4.42 (1.31) | 3.771* | 0.68 |
| Facial roundness | 4.58 (1.06) | 3.35 (1.36) | 3.312* | 0.60 |
| Mouth size | 4.26 (1.25) | 3.87 (.85) | 1.975 | 0.36 |
| Eye size | 4.58 (1.26) | 3.71 (.90) | 2.182* | 0.40 |
| C | Babyish | Mature | | |
| Dominance | 2.32 (.83) | 5.45 (.85) | 17.574** | 3.21 |
| Affiliation | 5.29 (1.44) | 4.71 (1.16) | − 1.606 | − 0.29 |
| Facial roundness | 6.13 (.81) | 2.84 (1.24) | − 10.220** | − 1.87 |
| Mouth size | 3.74 (1.34) | 3.77 (.92) | .133 | 0.02 |
| Eye size | 5.45 (1.12) | 3.94 (1.03) | − 5.313** | − 0.97 |
| D | African American | Caucasian | | |
| Dominance | 5.29 (.94) | 4.77 (.80) | 3.737* | 0.68 |
| Affiliation | 5.00 (1.29) | 5.03 (1.08) | − .158 | − 0.03 |
| Facial roundness | 5.26 (1.00) | 3.81 (.95) | 6.281** | 1.15 |
| Mouth size | 5.77 (.76) | 3.71 (.86) | 9.971** | 1.82 |
| Eye size | 5.03 (.91) | 4.13 (.72) | 4.215** | 0.77 |

Note: df = 30

*p < .05, **p < .001, ***p < .001 (Bonferroni correction for multiple comparisons, p < .0025)

Bolded values highlight the higher rating for each comparison of interest
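
The Cohen’s d values in Table 1 appear to be consistent with dividing each paired t statistic by the square root of its degrees of freedom (df = 30). The text does not state the formula used, so the following Python sketch is an inference from the reported numbers rather than a documented procedure; the function name `d_from_t` is hypothetical.

```python
import math

def d_from_t(t: float, df: int) -> float:
    """Approximate Cohen's d for a paired comparison as t / sqrt(df).

    Assumption: this conversion is inferred from the values in Table 1;
    it is not stated in the text.
    """
    return t / math.sqrt(df)

# A few univariate t statistics taken from Table 1 (df = 30).
rows = {
    "fear vs. anger, dominance": -17.549,
    "female vs. male, dominance": -8.563,
    "African American vs. Caucasian, mouth size": 9.971,
}

for label, t in rows.items():
    print(f"{label}: d = {d_from_t(t, 30):.2f}")
```

Under this assumption, the three rows shown reproduce the tabled values of − 3.20, − 1.56, and 1.82 after rounding.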

Comparison 1: Imagined Fear Versus Anger

The overall MANOVA was significant, F(5,26) = 59.23, p < 0.0001, ηp2 = 0.919. In line with prior research, univariate results revealed that imagined fear expressions were rated as being less dominant and more affiliative, with larger eyes, larger mouth, and rounder features than imagined anger expressions.

Comparison 2: Imagined Facial Youthfulness/Babyishness Versus Maturity

The overall MANOVA was significant, F(5,26) = 104.78, p < 0.0001, ηp2 = 0.953. Univariate results revealed no differences in ratings of affiliation or mouth size. As predicted, however, and in line with the common cue hypothesis, imagined youthful faces were rated more like fear than anger, i.e., less dominant, with larger eyes and rounder features than imagined mature faces.

Comparison 3: Imagined Female Versus Male Faces

The overall MANOVA was significant, F(5,26) = 19.41, p < 0.0001, ηp2 = 0.789. Univariate results revealed that, as predicted, imagined female faces were rated more in line with fear than anger, i.e., less dominant and more affiliative, with larger eyes, larger mouth, and rounder features than imagined male faces.

Comparison 4: Imagined African American Versus European American Faces

The overall MANOVA was significant, F(5,26) = 20.1, p < 0.0001, ηp2 = 0.794. Univariate results revealed that imagined African American faces were rated as being more dominant than imagined European American faces, though this did not survive Bonferroni correction, and no difference was found for affiliativeness. As predicted, however, imagined African American faces were rated more like fear than anger, i.e., with larger eyes, larger mouth, and rounder features than imagined European American faces, in contrast to prevailing stereotypes but in line with the common cue hypothesis.
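
The effect size reported with each of the four MANOVAs above agrees with partial eta squared computed from the F statistic and its degrees of freedom. The Python sketch below illustrates that standard conversion; reading the effect size as partial eta squared is an assumption based on this numerical agreement and on the notation used in Study 5, and the function name `partial_eta_squared` is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic and its degrees of freedom."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)

# F(5, 26) values reported for the four Study 3 comparisons.
manovas = {
    "fear vs. anger": 59.23,
    "babyish vs. mature": 104.78,
    "female vs. male": 19.41,
    "African American vs. European American": 20.1,
}

for label, f_value in manovas.items():
    print(f"{label}: {partial_eta_squared(f_value, 5, 26):.3f}")
```

Rounded to three decimals, these come out to 0.919, 0.953, 0.789, and 0.794, matching the reported values.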

Summary

Again, counter to stereotypic associations, African American versus European American faces were imagined to share facial characteristics with fear more than anger, as were babyish versus mature, and female versus male faces (i.e., with rounder faces, and larger eyes and mouths). Prior work has shown that these common cues (roundness, eye size, and mouth size) are associated with both female and babyish faces being relatively more associated with fear as compared to male and mature faces, which are relatively more associated with anger expressions, consistent with our current findings.

Study 4: Influence of Race on Speeded Reactions to Unaltered Anger and Fear Expressions

Overview

Prior works demonstrating that Afrocentric looking faces are perceived as angrier than Eurocentric looking faces have tended to use faces that share identical race-ambiguous appearance coupled with race prototypical hairstyle and skin tone alone (e.g., Hugenberg & Bodenhausen, 2003, 2004). Social psychologists have long known that stereotypes exert their strongest influences under conditions of ambiguity, and these types of manipulations are aptly set up to allow stereotypical associations to exert their strongest influence. Study 1, using machine learning, showed that European American faces structurally resemble anger more than African American faces, a counter-stereotypical effect replicated in Study 2 using race prototypicality ratings of faces that were manipulated to appear angrier or fearful, and in Study 3 using facial appearance ratings of imagined faces. The next study aimed to replicate and extend these effects with a rapid-response paradigm using unaltered facial interior expressions (i.e., ovaled to show only expressive cues not facial frames). We predicted we would find evidence for counter-stereotypical associations, in this case faster and more accurate responses to angry versus fearful Eurocentric faces and to fearful versus angry Afrocentric faces.

Method

Preliminary Power Analysis

Based on a preliminary sample of 17 participants in this study, we computed a power analysis using G*Power (Faul et al., 2009) to determine an adequate sample size. We focused the power analysis on the predicted race by emotion interaction, F(1,16) = 6.29, p = 0.023, ηp2 = 0.28. In order to replicate this effect at a power of 0.80 (1 − β) with α = 0.05, this analysis determined that a sample size of at least twenty-two participants would be necessary.
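
G*Power takes Cohen’s f as the effect size input for F tests, so a partial eta squared from pilot data is typically converted before running the analysis. The Python sketch below shows only that conversion for the pilot interaction effect; it does not attempt to reproduce the sample size calculation itself, whose remaining settings are not reported here. The function name `cohens_f` is hypothetical.

```python
import math

def cohens_f(partial_eta_sq: float) -> float:
    """Convert partial eta squared to Cohen's f: sqrt(eta / (1 - eta))."""
    return math.sqrt(partial_eta_sq / (1.0 - partial_eta_sq))

# Pilot race-by-emotion interaction: F(1,16) = 6.29, effect size of 0.28.
pilot_f = cohens_f(0.28)
print(f"Cohen's f = {pilot_f:.3f}")
```

An effect size of 0.28 corresponds to f of roughly 0.62, which is large by conventional benchmarks.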

Participants

Thirty (17 females, 13 males; mean age = 18.9) European American undergraduates at The Pennsylvania State University participated in the study for partial course credit.

Stimuli

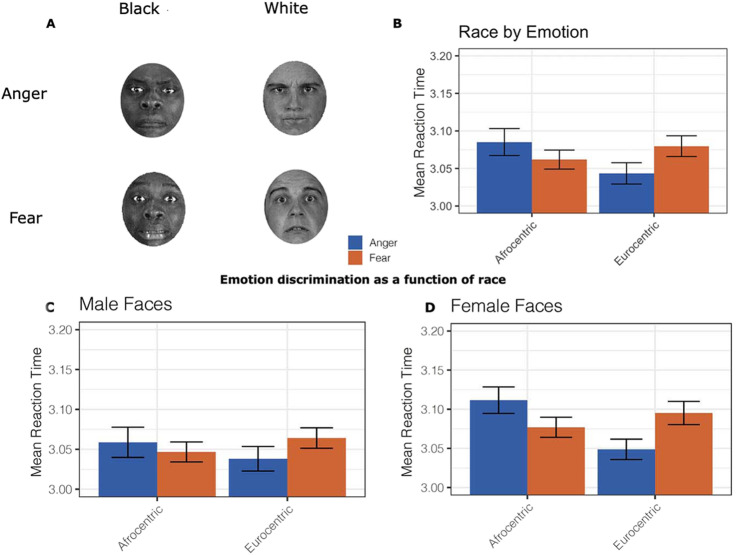

Stimuli consisted of the same 16 exemplar faces from MSFDE (Beaupré et al., 2000) used in Study 2, along with 16 African American and European American faces taken from the NimStim set (Tottenham et al., 2009). This time, faces were ovaled to show only interior facial features and were presented without the warping manipulation described in Study 2 (i.e., facial interiors were unaltered; see Fig. 4A).

Fig. 4.

Rapid responses to unaltered interior Afrocentric and Eurocentric expressive faces: Panel A shows examples of unaltered interior anger and fear expressions presented. Panel B highlights a counter-stereotypic pattern of reaction time effect, with faster responses made to Afrocentric fear than anger and to Eurocentric anger than fear expressions. Panels C and D highlight that counter-stereotypic race by emotion effects were more pronounced for female than male faces, though in both cases correct responses were made more quickly to Afrocentric fear than anger and to Eurocentric anger than fear expressions

Procedure

Between one and four participants entered the laboratory at a time and were seated at computers in individual cubicles approximately 24 in. in front of 15-in. monitors. They were instructed that they would see a series of faces displaying facial expressions and that their task was to indicate, as quickly and accurately as possible, whether each face was expressing anger or fear. Responses were made via an arrow key press (response keys were counterbalanced across participants) and accuracy and response latency were collected. The presentation of each facial stimulus was preceded by a centrally located fixation cross for 150 ms. Each face was centrally presented and remained until a response was made. Participants completed three blocks of trials (each containing 64 expressive stimuli) for a total of 192 trials.

Results

Computer Vision

We used the trained model to assess whether the unmanipulated neutral faces used in this study showed a similar gender-emotion stereotypic effect as well as a race-emotion counter-stereotypic effect. The model predictions were subjected to a 2 (stimulus race: Afrocentric, Eurocentric) × 2 (predicted emotion: anger, fear) × 2 (image set: MSFDE, NIMSTIM) mixed between-within ANOVA. There was no main effect of image set, F(1,28) = 0.23, p = 0.638, ηp2 = 0.008. There was, however, a main effect of stimulus race, F(1,28) = 5.69, p = 0.024, ηp2 = 0.17, and of predicted emotion, F(1,28) = 10.14, p = 0.004, ηp2 = 0.27. Overall, Eurocentric faces (M = 0.31) were predicted by the model to be more expressive than Afrocentric faces (M = 0.15), and predicted anger (M = 0.35) was larger than predicted fear (M = 0.11).

There were no interactions for race by set, F(1,28) = 0.10, p = 0.756, ηp2 = 0.004, set by emotion, F(1,28) = 0.16, p = 0.693, ηp2 = 0.006, or set by race by emotion, F(1,28) = 0.008, p = 0.930, ηp2 < 0.001. Critically, there was the predicted race by emotion interaction, F(1,28) = 16.48, p < 0.001, ηp2 = 0.37. Eurocentric neutral faces (M = 0.59) were predicted by the model to be more structurally similar to anger expressions than Afrocentric faces (M = 0.12), t(54) = − 4.65, p < 0.001. Afrocentric (M = 0.19) and Eurocentric faces (M = 0.03) did not differ significantly in predicted fear, t(54) = 1.62, p = 0.111. It is noteworthy that this latter effect was still in the predicted direction, as was found in Study 1 using a much larger sample.

Human Ratings

Two participants (one male, one female) were dropped for having accuracies more than three standard deviations below the mean. Accuracy and reaction time data for the remaining 30 were submitted to a 2 (stimulus race: Afrocentric, Eurocentric) × 2 (stimulus gender: female, male) × 2 (emotion: anger, fear) within-subject factorial ANOVA to test our predictions.
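
The exclusion rule described above can be sketched in a few lines of Python. The accuracy values below are hypothetical placeholders, not study data, and the use of the sample standard deviation is an assumption, since the text does not say which estimator was used. The function name `split_by_accuracy` is also hypothetical.

```python
import statistics

def split_by_accuracy(accuracy: dict[str, float], n_sd: float = 3.0):
    """Split participants into kept and dropped based on overall accuracy.

    A participant is dropped when accuracy falls more than n_sd sample
    standard deviations below the group mean (assumed estimator).
    """
    mean = statistics.mean(accuracy.values())
    sd = statistics.stdev(accuracy.values())
    cutoff = mean - n_sd * sd
    kept = {pid: acc for pid, acc in accuracy.items() if acc >= cutoff}
    dropped = {pid: acc for pid, acc in accuracy.items() if acc < cutoff}
    return kept, dropped

# Hypothetical proportions correct: 30 typical participants and one low scorer.
typical = [0.90, 0.88, 0.92, 0.91, 0.89] * 6
accuracy = {f"p{i:02d}": acc for i, acc in enumerate(typical, start=1)}
accuracy["p31"] = 0.45

kept, dropped = split_by_accuracy(accuracy)
print(len(kept), sorted(dropped))
```

With these placeholder values, only the low scorer falls below the cutoff, leaving 30 participants for analysis.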

Accuracy

A significant main effect of race emerged, such that Eurocentric faces were responded to more accurately (M = 90%) than Afrocentric faces (M = 88.5%), F(1,29) = 4.81, p = 0.037, ηp2 = 0.14. A significant main effect of gender was also found, such that male faces were responded to more accurately (M = 90.2%) than female faces (M = 88.3%), F(1,29) = 6.09, p = 0.02, ηp2 = 0.17. Critically, the predicted interaction between race and emotion was significant, F(1,29) = 7.40, p = 0.011, ηp2 = 0.08. A paired-samples t test revealed that Eurocentric angry faces were perceived more accurately (M = 91.2%) than Afrocentric angry faces (M = 87.3%), t(29) = 3.02, p = 0.005, d = 0.56. No other effects reached significance.

Reaction Time

Before analyzing the data, incorrect trials were removed. As is customary with reaction time measurements, data were log-transformed to correct for positive skew. A significant main effect of race emerged, such that Eurocentric faces were responded to more quickly (M = 3.06) than Afrocentric faces (M = 3.07), F(1,29) = 4.75, p = 0.038, ηp2 = 0.14. A significant main effect of gender also emerged, such that male faces were responded to more quickly (M = 3.03) than female faces (M = 3.08), F(1,29) = 29.31, p < 0.0001, ηp2 = 0.50. These main effects were qualified by the predicted interaction between race and emotion, F(1,29) = 27.02, p < 0.0001, ηp2 = 0.48. A post hoc power analysis indicated that the power to detect this effect at p < 0.05 is 0.99. Paired sample t tests revealed that this was due to Eurocentric angry faces being perceived more quickly (M = 3.04) than Afrocentric angry faces (M = 3.09), t(29) = − 4.49, p < 0.001, d = − 0.83, Afrocentric fearful faces (M = 3.06), t(29) = − 2.35, p = 0.026, d = − 0.44, and Eurocentric fearful faces (M = 3.08), t(29) = − 4.31, p < 0.001, d = − 0.80. Conversely, Afrocentric fear expressions were perceived more quickly than Eurocentric fearful faces, t(29) = 2.84, p = 0.008, d = 0.53, and Afrocentric anger expressions, t(29) = 2.06, p = 0.048, d = 0.38 (see Fig. 4B). This interaction was further qualified by a gender by race by emotion 3-way interaction, F(1,29) = 5.74, p = 0.023, ηp2 = 0.17. This was due to the counter-stereotypical race by emotion interaction being more pronounced for female faces, F(1,29) = 27.42, p < 0.0001, ηp2 = 0.49 (see Fig. 4C), than male faces, F(1,29) = 7.86, p = 0.009, ηp2 = 0.21 (see Fig. 4D). No other effects reached significance.
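
The preprocessing described above (drop incorrect trials, log-transform, then average per condition) can be sketched as follows. The trial records are hypothetical, and both the base-10 logarithm and the millisecond unit are assumptions inferred from the magnitude of the reported means (about 3.0); the text does not state either. The function name `mean_log_rt` is hypothetical.

```python
import math
from collections import defaultdict

def mean_log_rt(trials: list[dict]) -> dict[str, float]:
    """Mean log10 reaction time per condition, using correct trials only."""
    logs = defaultdict(list)
    for trial in trials:
        if trial["correct"]:  # incorrect trials are removed before averaging
            logs[trial["condition"]].append(math.log10(trial["rt_ms"]))
    return {cond: sum(vals) / len(vals) for cond, vals in logs.items()}

# Hypothetical trials for illustration only.
trials = [
    {"condition": "Eurocentric anger", "correct": True, "rt_ms": 1000.0},
    {"condition": "Eurocentric anger", "correct": True, "rt_ms": 1210.0},
    {"condition": "Eurocentric anger", "correct": False, "rt_ms": 650.0},
    {"condition": "Afrocentric anger", "correct": True, "rt_ms": 1250.0},
]
means = mean_log_rt(trials)

def to_ms(log_mean: float) -> float:
    """Back-transform a log10 mean to milliseconds (assumed scale)."""
    return 10 ** log_mean

print(means)
print(round(to_ms(3.04)), round(to_ms(3.09)))
```

If the base-10 and millisecond assumptions hold, the reported means of 3.04 and 3.09 would correspond to roughly 1096 ms and 1230 ms, which gives a sense of the size of the difference on the original scale.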

Summary

The unaltered Afrocentric and Eurocentric faces taken from the MSFDE and NIMSTIM sets of standardized anger and fear expressions revealed the same counter-stereotypic pattern of effects as found in Studies 1–3. Eurocentric anger expressions were perceived more quickly and accurately than Eurocentric fear expressions and Afrocentric fear expressions were perceived more quickly and accurately than Afrocentric anger expressions. Although counter to race-emotion stereotypes, these findings align with the findings in Study 1 using machine learning, and Studies 2 and 3, as well as with other behavioral findings showing lower accuracy and slower categorization of anger on Afrocentric versus Eurocentric faces in a prior Chinese sample (Li & Tse, 2016). Additionally, while reaction time differences are quite small, previous research has shown that small differences still help provide us with meaningful insight into the world and the decisions we make. For example, using a shooter paradigm, Correll and colleagues showed that for both laypeople (2002, 2007) and police (2007), a difference of 10–20 ms is significant in their decisions to shoot unarmed African Americans versus European Americans. Together, Studies 1–4 all offer support for the race-counter-stereotypic effects predicted by the common cue hypothesis. Also notable in the current study was that the race by emotion interaction was more pronounced for female versus male faces.

Study 5: Influence of Race on Speeded Reactions to Race-Ambiguous Anger and Fear Expressions

Overview

Study 4 established that unaltered Afrocentric and Eurocentric anger and fear expressions yielded the same counter-stereotypic effects as found in Studies 1–3 and in line with prior computer vision findings. In this final Study 5, we employed speeded responses to racially ambiguous interior faces, now using only the original facial exteriors and skin tone to establish perceived Afrocentric versus Eurocentric faces, much in the same way that Hugenberg and Bodenhausen (2003, 2004) did using computer-generated faces. In this way, the expressive cues used to categorize faces as angry and fearful remain identical with regard to Afrocentric and Eurocentric interior features. Therefore, any differences found can be directly attributed to top-down, race-based stereotypes. The aim of Study 5 was to show that under these conditions, we too replicate the race-emotion stereotypic findings commonly reported in the literature. Thus, in this case, unlike our prior studies, we predicted that anger expressions would be more accurately and quickly responded to in the context of Afrocentric hairstyles and skin tone, whereas fear expressions would be more accurately and quickly responded to in the context of Eurocentric hairstyles and skin tone, even though the structural expressive cues on the faces themselves were race-ambiguous and thus identical across conditions.

Method

Participants

Fifty-seven (45 females, 12 males; mean age = 18.1) undergraduates at The Pennsylvania State University participated in the study for partial course credit. Forty-four identified as European American, eight as Asian, and three as African American; two did not self-identify.

Stimuli

The same 8 MSFDE male faces used in Studies 2 and 4 were used here as well. Each face was morphed with every face of the other race to create a total of 16 new race-ambiguous interior faces (i.e., faces containing 50/50 Afrocentric and Eurocentric facial cues; see Fig. 5A for example stimuli). As in Study 2, Morph 2.5™ was employed to alter the images. In this case, morphing, as opposed to warping, employs an algorithm for averaging across both the structural and textural maps of images after applying carefully aligned landmarks around the eyes, mouth, etc. This procedure yielded changes in both the relative size and position of facial features as well as changes in skin tone, texture, folds, and bulges associated with expressive cues in the face. Each oval was then reinserted into each of its corresponding exterior facial frames using Adobe Photoshop™, which resulted in 64 racially ambiguous interior expressions made to appear racially prototypical through hairstyle and skin tone. Each stimulus face was presented in grayscale on a white background, and the average stimulus size was 4 × 5 in.

Fig. 5.

Rapid responses to race-ambiguous expressive faces made to appear Afrocentric versus Eurocentric with race prototypical hairstyles and skin tone: Panel A shows the same race-ambiguous expressions portrayed in Afrocentric and Eurocentric facial frames. Panel B shows the stereotypic pattern of accuracy effects, with more accurate responses made to Afrocentric anger than fear and to Eurocentric fear than anger expressions. Panel C likewise highlights quicker correct responses to Afrocentric anger than fear and to Eurocentric fear than anger expressions

Procedure

Participants were run in groups of between one and four at a time. They were seated at computers in individual cubicles approximately 24 in. in front of 15-in. monitors. Faces were randomly presented in two blocks. Participants were instructed that they would see a series of faces showing facial expressions and that their task would be to indicate, as quickly and accurately as possible, whether each face was expressing anger or fear. Responses were made via an arrow key press (response keys were counterbalanced across participants) and accuracy and response latency were collected. The presentation of each facial stimulus was preceded by a centrally located fixation cross for 150 ms. Each face was also centrally presented and remained until a response was made. Participants completed two blocks of trials (each containing all 64 emotional stimuli) for a total of 128 trials.

Results

Computer Vision

To ensure that the manipulated ambiguous race faces did not distort expressions, we performed a t test on the emotion output from the trained model. As expected, anger warps were structurally more similar to anger (M = 0.94) than fear (M = 0.10), t(31) = 14.54, p < 0.001, d = 2.57. Fear warps were structurally more similar to fear (M = 0.41) than anger (M = 0.02) expressions, t(31) = − 6.22, p < 0.001, d = − 1.10.

Human Responses

Accuracy data and reaction time data from correct trials were submitted to a 2 (stimulus race: Afrocentric, Eurocentric) × 2 (emotion: anger, fear) within-subject factorial ANOVA.

Accuracy

A significant main effect of race emerged such that expressive faces presented in Afrocentric facial frames were perceived more accurately (M = 92.8%) than when presented in Eurocentric facial frames (M = 90.4%), F(1,56) = 27.16, p < 0.001, ηp2 = 0.33. Critically, the race by emotion interaction was significant, F(1,56) = 66.53, p < 0.001, ηp2 = 0.54 (see Fig. 5B). Paired sample t tests were computed to examine the nature of this interaction. These revealed that angry expressions presented in Afrocentric facial frames were perceived more accurately (M = 94.1%) than the same expressions presented in Eurocentric facial frames (M = 87.3%), t(56) = 13.00, p < 0.001, d = 1.74, whereas fear expressions presented in Eurocentric facial frames were responded to more accurately (M = 93.4%) than were the same fear expressions when presented in Afrocentric facial frames (M = 91.4%), t(56) = − 2.35, p = 0.022, d = − 0.31. Likewise, anger was perceived more accurately than fear when presented in Afrocentric facial frames, t(56) = 2.08, p = 0.049, d = 0.28, whereas fear was perceived more accurately than anger expressions when presented in Eurocentric facial frames, t(56) = − 6.17, p < 0.001, d = − 0.82.

Reaction Time

There were no significant main effects for reaction times. However, the predicted race by emotion interaction was significant, F(1,56) = 33.44, p < 0.001, ηp2 = 0.37 (see Fig. 5C). A post hoc power analysis indicated that the power to detect this effect at p < 0.05 is 0.99. Paired sample t tests revealed that angry expressions presented in Afrocentric faces were responded to more quickly (M = 2.84) than the same expressions presented in Eurocentric faces (M = 2.86), t(56) = − 3.56, p < 0.001, d = − 0.48, whereas fear expressions presented in Eurocentric faces were responded to more quickly (M = 2.83) than were the same fear expressions presented in Afrocentric faces (M = 2.86), t(56) = 4.55, p < 0.001, d = 0.61. Anger expressions were responded to more quickly than fear expressions when presented in Afrocentric faces, t(56) = − 2.29, p = 0.026, d = − 0.31, and fear expressions were responded to more quickly than anger expressions when presented in Eurocentric faces, t(56) = 3.54, p < 0.001, d = 0.48.

Summary

Study 5 resulted in the expected and previously reported race stereotypic pattern of effects using the same speeded response categorization task used in Study 4. In this study, the same racially ambiguous anger expressions were more accurately and quickly responded to in the context of Afrocentric hairstyles and skin tone, whereas the same fear expressions were more accurately and quickly responded to in the context of Eurocentric hairstyles and skin tone. This effect replicates the pattern previously found for greater association of anger in Afrocentric than Eurocentric faces when examining faces otherwise identical in structural appearance and expression, but varying only in skin tone and hairstyle (Hugenberg & Bodenhausen, 2003, 2004).

General Discussion

Study 1 replicated and extended prior computer vision findings showing that European American versus African American faces structurally resemble anger more and fear less. Study 2 yielded similar findings when manipulating emotion-resembling cues into appearance, revealing that such cues influence race prototypicality ratings in the same counter-stereotypic manner (i.e., anger versus fear resemblance makes Eurocentric faces appear more racially prototypical and vice versa for Afrocentric faces). Study 3 extended these findings by showing that imagined European American versus African American facial features were rated as appearing more similar to anger than fear (i.e., more angular, with smaller mouth and eyes). In Study 4, using unaltered faces, angry Eurocentric and fearful Afrocentric faces were responded to more quickly and accurately than fearful Eurocentric and angry Afrocentric faces. All four studies, therefore, offer converging evidence for the race-counter-stereotypic common cue hypothesis: Eurocentric facial cues are more structurally similar to anger expressions than Afrocentric facial cues, and vice versa for fear. Finally in Study 5, using racially ambiguous interior facial expressions, presented with racially prototypical hairstyles and skin tone, we then replicated the more common findings that have led to prior conclusions supporting the “angry Black” stereotype. Together, these findings suggest that the phenotypic cues associated with race actually clash with common stereotypes, a point also previously made by Zebrowitz et al. (2010).

These findings have implications for future work in this domain. If other laboratories have encountered similar counter-stereotypic effects when using naturally varying facial images, the current work may help explain why this is the case. It also emphasizes that when interior facial structure is held constant, perceived race does exert a powerful stereotype-driven effect on perception. Notably, prior work examining gender-related facial appearance on the perception of facial emotion suggests that phenotypic and stereotypic influences are confounded and, thus, hard to tease apart (e.g., Adams et al., 2015; Becker et al., 2007). However, the current work suggests that phenotypic and stereotypic influences conflict with one another in terms of the “angry Black” stereotype. Thus, moving forward, teasing apart the mutual and interactive phenotypic and stereotypic influences should be feasible.

Given prior research supporting the “hostile Black” stereotype (Hugenberg & Bodenhausen, 2003, 2004; Devine, 1989; also see Kang & Chasteen, 2009), we refer to our findings here as a counter-stereotypical effect. We recognize, however, that it is important to account for historical considerations that highlight depictions of African Americans as infantilized and, thereby, not angry (Mahar, 1999). Such historical depictions, as contemporary research shows, continue to influence our modern-day perceptions of African American faces (Goff et al., 2008). In contrast, the current predominant stereotype associating Afrocentrism with threat/danger/criminality is based on a more recent cultural shift (i.e., the civil rights movement; Drummond, 1990; Welch, 2007). In short, modern-day depictions rooted in history alongside contrasting stereotypes propagated from recent cultural shifts both play a role in our impressions and perceptions of African Americans. Future work in this domain, therefore, would benefit from historical considerations and cross-cultural investigation to examine how culturally bound these effects may be.

Notably, we also replicated and extended previous work showcasing male-anger and female-happy stereotypic associations (e.g., Becker et al., 2007). Like this prior work, we found that male faces are more associated with anger, and extended this to show that female faces are likewise more associated with fear. Prior work focused on the confounded nature of gender appearance and emotion expression largely focused only on Eurocentric faces (see Adams et al., 2015 for review). Thus, our findings also extend this work by uniquely showcasing such gender-emotion effects in Afrocentric and Eurocentric faces and highlight the need for future intersectional work in this domain.

In terms of gender and race effects reported here, in Study 4, using unaltered faces, we found that counter-stereotypic race by emotion interaction was less pronounced for male than female faces, which could be due to the intersectional influence of the hostile male stereotype as well. While we did not find significant interactions between race × gender × emotion beyond this one finding, future research taking a more intersectional approach is clearly warranted. For example, African American women do not fit the archetype for common gender or racial stereotypes (Johnson et al., 2012) and therefore are subjected to different stereotypes than African American men or European American women (Wilkins, 2012). Our findings also open up a number of questions that warrant future research attention. For instance, under what conditions might stereotypes actually override what is physically conveyed by a face? Other variables such as context, stress, ambiguity, and racial bias have all been found to increase the extent to which stereotypes influence perception. Future work will benefit from examining these as moderating variables to determine the conditions under which stereotypes override the perception of the actual physical properties present in a face, such as when police officers misread overt fear as hostility.

Obviously, answering all these questions is beyond the scope of the current work. What the current work does indicate, however, is that Afrocentric features do not physically resemble anger expressions more than Eurocentric features. In fact, the opposite appears to be the case. Despite this, decades of research on racial stereotyping utilizing faces as a vehicle of study have established that people tend to view African American faces as more angry and threatening than European American faces. We believe this contradiction exposes just how insidious an effect structural racism has on visual perception, one that plausibly contributes to the deadly violence committed by police officers against African Americans.

Acknowledgements

We would like to thank Michael Stevenson and Robert Franklin for help with data collection. We would also like to thank Nalini Ambady posthumously, who offered input and guidance in the early stages of this work.

Additional Information

Funding

Not applicable.

Data Availability

Data associated with these studies are available on OSF: https://osf.io/rkt7y/.

Ethical Approval

These studies were approved by the ethics committee of the Pennsylvania State University.

Conflict of Interest

The authors declare no competing interests.

Informed Consent

Informed consent was obtained from the participants included in these studies.

Footnotes

Reginald B. Adams Jr. and Daniel N. Albohn share 1st authorship.

Handling editor: Lasana Harris

Contributor Information

Reginald B. Adams, Jr., Email: rba10@psu.edu

Daniel N. Albohn, Email: Daniel.Albohn@chicagobooth.edu

References

- Adams RB, Jr, Nelson AJ, Soto JA, Hess U, Kleck RE. Emotion in the neutral face: A mechanism for impression formation? Cognition and Emotion. 2012;26(3):431–441. doi: 10.1080/02699931.2012.666502. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Adams RB, Jr, Hess U, Kleck RE. The intersection of gender-related facial appearance and facial displays of emotion. Emotion Review. 2015;7(1):5–13. doi: 10.1177/1754073914544407. [DOI] [Google Scholar]

- Adams, R. B., Jr., Garrido, C. O., Albohn, D. N., Hess, U., & Kleck, R. E. (2016). What facial appearance reveals over time: When perceived expressions in neutral faces reveal stable emotion dispositions. Frontiers in Psychology, 7, 00986. 10.3389/fpsyg.2016.00986 [DOI] [PMC free article] [PubMed]

- Albohn, D. N (2020). Computationally predicting human impressions of neutral faces [Unpublished doctoral dissertation]. The Pennsylvania State University

- Albohn DN, Adams RB., Jr The expressive triad: Structure, color, and texture resemblance to emotion expressions predict impressions of neutral faces. Frontiers in Psychology. 2021;12:612923. doi: 10.3389/fpsyg.2021.612923. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beaupré, M. G., & Hess, U. (2005). Cross-cultural emotion recognition among Canadian ethnic groups. Journal of Cross-Cultural Psychology, 36, 355–370.

- Beaupré, M. G., Cheung, N., & Hess, U. (2000). The Montreal set of facial displays of emotion [Slides]. Montreal, Canada: Department of Psychology, University of Quebec.

- Becker DV, Kenrick DT, Neuberg SL, Blackwell KC, Smith DM. The confounded nature of angry men and happy women. Journal of Personality and Social Psychology. 2007;92(2):179–190. doi: 10.1037/0022-3514.92.2.179. [DOI] [PubMed] [Google Scholar]

- Benitez-Quiroz, C. F., Srinivasan, R., Feng, Q., Wang, Y., and Martinez, A. M. (2017). Emotionet challenge: recognition of facial expressions of emotion in the wild. arXiv preprint arXiv:1703.01210

- Correll J, Park B, Judd CM, Wittenbrink B. The police officer’s dilemma: Using ethnicity to disambiguate potentially threatening individuals. Journal of Personality and Social Psychology. 2002;83(6):1314–1329. doi: 10.1037/0022-3514.83.6.1314. [DOI] [PubMed] [Google Scholar]

- Correll J, Park B, Judd CM, Wittenbrink B, Sadler MS, Keesee T. Across the thin blue line: Police officers and racial bias in the decision to shoot. Journal of Personality and Social Psychology. 2007;92(6):1006–1023. doi: 10.1037/0022-3514.92.6.1006. [DOI] [PubMed] [Google Scholar]

- Devine PG. Stereotypes and prejudice: Their automatic and controlled components. Journal of Personality and Social Psychology. 1989;56(1):5–18. doi: 10.1037/0022-3514.56.1.5. [DOI] [Google Scholar]

- Drummond WJ. About face: From alliance to alienation. Blacks and the news media. The American Enterprise. 1990;1(4):22–29.

- Ebner NC, Riediger M, Lindenberger U. FACES—a database of facial expressions in young, middle-aged, and older women and men: Development and validation. Behavior Research Methods. 2010;42:351–362. doi: 10.3758/BRM.42.1.351.

- Edwards, E., Greytak, E., Takei, C., Fernandez, P., & Nix, J. (2020). The other epidemic: fatal police shootings in the time of COVID-19 [ACLU Research Report]. American Civil Liberties Union. Retrieved from American Civil Liberties Union website: https://www.aclu.org/sites/default/files/field_document/aclu_the_other_epidemic_fatal_police_shootings_2020.pdf

- Faul F, Erdfelder E, Buchner A, Lang A-G. Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods. 2009;41(4):1149–1160. doi: 10.3758/BRM.41.4.1149.

- Freudenberg, M., Adams, R. B., Jr., Kleck, R. E., & Hess, U. (2015). Through a glass darkly: Facial wrinkles affect our processing of emotion in the elderly. Frontiers in Psychology, 6, 01476. doi: 10.3389/fpsyg.2015.01476

- Gladwell M. Blink: The power of thinking without thinking. 1. Little, Brown and Company; 2005.

- Goff PA, Eberhardt JL, Williams MJ, Jackson MC. Not yet human: Implicit knowledge, historical dehumanization, and contemporary consequences. Journal of Personality and Social Psychology. 2008;94(2):292–306. doi: 10.1037/0022-3514.94.2.292.

- Hess U, Adams RB, Jr, Kleck RE. Facial appearance, gender, and emotion expression. Emotion. 2004;4(4):378–388. doi: 10.1037/1528-3542.4.4.378.

- Hess U, Adams RB, Jr, Kleck R. Who may frown and who should smile? Dominance, affiliation, and the display of happiness and anger. Cognition & Emotion. 2005;19(4):515–536. doi: 10.1080/02699930441000364.

- Hess U, Adams RB, Jr, Grammer K, Kleck RE. Face gender and emotion expression: Are angry women more like men? Journal of Vision. 2009;9(12):19–19. doi: 10.1167/9.12.19.

- Hess U, Adams RB, Jr, Simard A, Stevenson MT, Kleck RE. Smiling and sad wrinkles: Age-related changes in the face and the perception of emotions and intentions. Journal of Experimental Social Psychology. 2012;48(6):1377–1380. doi: 10.1016/j.jesp.2012.05.018.

- Hugenberg K, Bodenhausen GV. Facing prejudice: Implicit prejudice and the perception of facial threat. Psychological Science. 2003;14(6):640–643. doi: 10.1046/j.0956-7976.2003.psci_1478.x.

- Hugenberg K, Bodenhausen GV. Ambiguity in social categorization: The role of prejudice and facial affect in race categorization. Psychological Science. 2004;15(5):342–345. doi: 10.1111/j.0956-7976.2004.00680.x.

- Hutchings PB, Haddock G. Look Black in anger: The role of implicit prejudice in the categorization and perceived emotional intensity of racially ambiguous faces. Journal of Experimental Social Psychology. 2008;44(5):1418–1420. doi: 10.1016/j.jesp.2008.05.002.

- Johnson KL, Freeman JB, Pauker K. Race is gendered: How covarying phenotypes and stereotypes bias sex categorization. Journal of Personality and Social Psychology. 2012;102(1):116–131. doi: 10.1037/a0025335.

- Kang SK, Chasteen AL. The moderating role of age-group identification and perceived threat on stereotype threat among older adults. The International Journal of Aging and Human Development. 2009;69(3):201–220. doi: 10.2190/AG.69.3.c.

- Kazemi, V., & Sullivan, J. (2014). One millisecond face alignment with an ensemble of regression trees. 2014 IEEE Conference on Computer Vision and Pattern Recognition (pp. 1867–1874). IEEE. doi: 10.1109/CVPR.2014.241

- Langner O, Dotsch R, Bijlstra G, Wigboldus DH, Hawk ST, van Knippenberg A. Presentation and validation of the Radboud Faces Database. Cognition and Emotion. 2010;24:1377–1388. doi: 10.1080/02699930903485076.

- LeDell, E., Gill, N., Aiello, S., Fu, A., Candel, A., Click, C., Kraljevic, T., Nykodym, T., Aboyoun, P., Kurka, M., et al. (2019). H2o: R interface for ‘h2o’. Available at: https://CRAN.R-project.org/package=h2o

- Li, Y., & Tse, C.-S. (2016). Interference among the processing of facial emotion, face race, and face gender. Frontiers in Psychology, 7, 01700. doi: 10.3389/fpsyg.2016.01700

- Ma DS, Correll J, Wittenbrink B. The Chicago face database: A free stimulus set of faces and norming data. Behavior Research Methods. 2015;47(4):1122–1135. doi: 10.3758/s13428-014-0532-5.

- Mahar WJ. Behind the burnt cork mask: Early blackface minstrelsy and antebellum American popular culture. University of Illinois Press; 1999.

- Marsh AA, Adams RB, Jr, Kleck RE. Why do fear and anger look the way they do? Form and social function in facial expressions. Personality and Social Psychology Bulletin. 2005;31(1):73–86. doi: 10.1177/0146167204271306.

- Palumbo, R., Adams, R. B., Jr., Hess, U., Kleck, R. E., & Zebrowitz, L. (2017). Age and gender differences in facial attractiveness, but not emotion resemblance, contribute to age and gender stereotypes. Frontiers in Psychology, 8, 01704. doi: 10.3389/fpsyg.2017.01704

- R Core Team (2019). R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. Available at: https://www.R-project.org/

- Ross, C. T. (2015). A multi-level Bayesian analysis of racial bias in police shootings at the county-level in the United States, 2011–2014. PLoS One, 10, e0141854. doi: 10.1371/journal.pone.0141854

- Sacco DF, Hugenberg K. The look of fear and anger: Facial maturity modulates recognition of fearful and angry expressions. Emotion. 2009;9(1):39–49. doi: 10.1037/a0014081.

- Simonite, T. (2018, Nov. 01). When it comes to gorillas, Google photos remains blind. Wired. Retrieved May 19, 2021, from https://www.wired.com/story/when-it-comes-to-gorillas-google-photos-remains-blind/

- Snow, J. (2018, July 26). Amazon’s face recognition falsely matched 28 members of congress with mugshots [ACLU]. Retrieved May 19, 2021, from American Civil Liberties Union website: https://www.aclu.org/blog/privacy-technology/surveillance-technologies/amazons-face-recognition-falsely-matched-28

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, Marcus DJ, Westerlund A, Casey B, Nelson C. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research. 2009;168:242–249. doi: 10.1016/j.psychres.2008.05.006.

- Welch K. Black criminal stereotypes and racial profiling. Journal of Contemporary Criminal Justice. 2007;23(3):276–288. doi: 10.1177/1043986207306870.

- Wilkins AC. Becoming Black women: Intimate stories and intersectional identities. Social Psychology Quarterly. 2012;75(2):173–196. doi: 10.1177/0190272512440106.

- Zebrowitz LA, Kikuchi M, Fellous J-M. Facial resemblance to emotions: Group differences, impression effects, and race stereotypes. Journal of Personality and Social Psychology. 2010;98(2):175–189. doi: 10.1037/a0017990.

Data Availability Statement

Data associated with these studies are available on OSF: https://osf.io/rkt7y/.