Abstract

What role does language play in emotion? Behavioral research shows that emotion words such as “anger” and “fear” alter emotion experience, but questions remain about the mechanism. Here, we review the neuroscience literature to examine whether neural processes associated with semantics are also involved in emotion. Our review suggests that brain regions involved in the semantic processing of words (i) are engaged during experiences of emotion, (ii) coordinate with brain regions involved in affect to create emotions, (iii) hold representational content for emotion, and (iv) may be necessary for constructing emotional experience. We relate these findings to four theoretical relationships between language and emotion, which we refer to as “non-interactive,” “interactive,” “constitutive,” and “deterministic.” We conclude that the findings are most consistent with the interactive and constitutive views, with initial evidence suggestive of a constitutive view in particular. We close with several future directions that may help test hypotheses of the constitutive view.

Keywords: Emotion, Language, Neuroscience, Neuroimaging, Lesion

Common sense (and indeed, some theoretical models of emotion) suggests that the words “anger,” “disgust,” and “fear” have nothing to do with emotional experience beyond mere description. Yet growing behavioral, developmental, and cross-cultural findings show that these words contribute to emotion by altering the intensity and specificity of emotional experiences (for recent reviews, see Lindquist, MacCormack, & Shablack, 2015; Lindquist, Satpute, & Gendron, 2015; Satpute et al., 2020). Currently, the mechanisms by which language contributes to emotion remain unclear. Here, we review the neuroscience findings that inform the relationship between language and emotional experience. Specifically, we summarize findings from functional neuroimaging, neuropsychological, and electrical stimulation studies in human participants that bear on how language may influence emotion. We end by relating these findings to theories of emotion and outlining future studies that could further test different theoretical models of the role of language in emotion.

Brain Regions Implicated in Semantics and Emotion

Throughout this paper, we use the term “language” to refer to the capacity to represent concepts with words. We focus on the semantic, rather than the phonological or syntactic, aspects of language. Words and the concepts they name are not necessarily the same, but linguistic and semantic processes are tightly linked. Accessing words involves accessing concepts and vice versa (Barsalou, 2008). Concept formation, even for simple concrete concepts like shoes and oranges, benefits when conspecifics use the words “shoes” or “oranges” to describe them (Lupyan & Thompson-Schill, 2012; Xu, 2002). Similarly, we have proposed elsewhere that emotion words are important for acquiring and supporting emotion concepts, which in turn underlie experiences of emotion (Lindquist, Gendron, & Satpute, 2016; Lindquist, MacCormack, & Shablack, 2015; Lindquist, Satpute, & Gendron, 2015; Satpute et al., 2020).

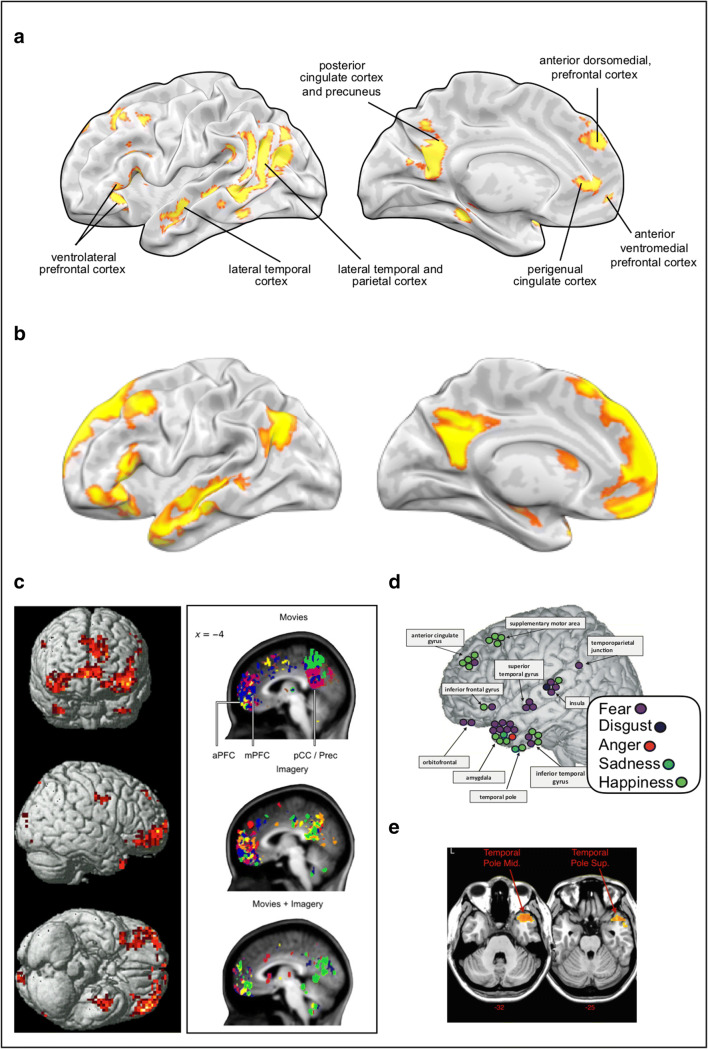

When focusing on the brain regions involved in language, we draw on a rich cognitive neuroscience literature that has identified a set of brain regions critically involved in semantic processing. The key regions are outlined in Fig. 1a and include the anterior medial prefrontal cortex, the ventrolateral prefrontal cortex, and the lateral temporal cortex, including the temporal pole. Several lines of evidence implicate these areas in semantic processing:

- structural studies of individuals with brain lesions or neurodegeneration (Brambati et al., 2009; Grossman et al., 2004);
- meta-analyses of functional neuroimaging studies of semantic processing (Binder et al., 2009);
- seed-based functional connectivity studies in neurotypical participants (Jackson et al., 2016; Ralph, 2014).

Fig. 1.

Brain regions associated with semantic processing and discrete emotional experience. (a) Reliable locations of functional activation from a neuroimaging meta-analysis of semantic processing tasks (Binder et al., 2009). (b) Brain regions involved in conceptual abstraction during social processing (Spunt & Adolphs, 2014). Emotion words, and the categories they refer to, are abstract social categories insofar as a given category (e.g., fear) may refer to a variety of situations with diverse features (Barrett, 2006; Satpute & Lindquist, 2019). Note the extensive overlap with areas involved in semantic processing. (c) Two MVPA studies showing locations of voxels informative for classifying discrete emotional experiences. These include the anterior medial prefrontal cortex, ventrolateral prefrontal cortex, and temporal pole (left, Kassam et al., 2013) and the anterior medial prefrontal cortex (right, Saarimäki et al., 2016). (d) Intracranial stimulation of areas implicated in semantic processing, such as the temporal pole/temporal cortex and ventrolateral prefrontal cortex, also elicits experiences of discrete emotions (Guillory & Bujarski, 2014). (e) Voxel-based lesion-symptom mapping implicates the temporal pole in both semantic processing and discrete emotion perception (Campanella et al., 2014).

Notably, more traditional theoretical accounts rarely consider these areas to be emotion processing regions. Indeed, it has long been supposed that discrete emotions are represented particularly in subcortical structures (e.g., the amygdala, hypothalamus, and periaqueductal gray) and perhaps in select cortical areas such as the insula (Dalgleish, 2004; MacLean, 1990; Panksepp, 1998; Wicker et al., 2003). However, more recent findings suggest that emotions involve a wide array of brain regions (and networks) spanning the cortical lobes (Damasio & Carvalho, 2013; Hamann, 2012; Kragel & LaBar, 2016; Lindquist et al., 2012; Pessoa, 2018; Smith & Lane, 2015; Vytal & Hamann, 2010; Wager et al., 2015). The focus of research has now shifted to understanding why so many brain regions are implicated in emotion and what particular roles they play (e.g., Barrett & Satpute, 2019; Pessoa, 2018; Satpute & Lindquist, 2019; Smith & Lane, 2015). Here, we address the role that brain regions known to be critical for semantic processing play in representing discrete emotions.

Prior work has implicated some of these brain regions, particularly prefrontal areas, in “cognitive” processes such as cognitive emotion regulation (for a meta-analysis, see Buhle et al., 2012). It has also implicated them in affective processing related to the representation of valence (for a meta-analysis, see Lindquist, Satpute, et al., 2016; also, Shinkareva et al., 2020) and arousal (Satpute et al., 2019; Satpute et al., 2012; Young et al., 2017). Specifically, we examine the ways in which these and other areas implicated in semantic processing (e.g., lateral temporal cortex and temporal poles) are also involved during experiences of discrete emotions such as fear, anger, and joy.

Relating Findings in Neuroscience to Research on Language and Emotion

In our review, we use the term “emotion” to refer to discrete states such as “anger,” “disgust,” and “fear” that people experience in daily life and perceive in the behaviors of those around them. We differentiate discrete emotions from “affect,” a term that describes feeling globally positive or negative and high or low in arousal (Barrett & Bliss-Moreau, 2009; Russell, 1980; Watson & Tellegen, 1985; Yik et al., 1999). Multiple models consider affect to be a central feature of emotion, but emotions are not reducible to affect (Clore & Ortony, 2013; Cunningham et al., 2013; Ellsworth & Scherer, 2003; Fehr & Russell, 1984).

We also differentiate the subjective, phenomenological aspects of experiencing an emotion from directly observable behaviors. These behaviors include physiological changes (i.e., visceromotor behavior), facial and bodily muscle movements, and overt behaviors (e.g., freezing), which have non-specific links with subjective experiences of discrete emotions (Barrett, Lindquist, Bliss-Moreau, et al., 2007; LeDoux, 2013; LeDoux & Pine, 2016; Russell et al., 2003; Shaham & Aviezer, 2020). Finally, we differentiate emotion experience (feeling an emotion in one’s own body) from emotion perception (seeing an emotion in another person’s face or body).

Emotion experience is our main focus, and we discuss studies of it whenever possible. However, such studies are fewer than studies of emotion perception. Indeed, many studies of language and emotion in the neuroscience literature focus exclusively on the perception of emotion in facial actions. This type of stimulus is easy to present in the fMRI environment or to patients with brain lesions or implanted electrodes; this reliance is a limitation of this literature.
Nevertheless, several models of emotion across theoretical traditions suggest that shared psychological (and neural) mechanisms underlie both emotion experience and emotion perception (Barrett & Satpute, 2013; Ekman & Cordaro, 2011; Lindquist & Barrett, 2012; Lindquist, MacCormack, & Shablack, 2015; Lindquist, Satpute, & Gendron, 2015; Niedenthal, 2007; Oosterwijk et al., 2017; Tomkins, 1962; Wager et al., 2015; Wicker et al., 2003). Thus, we draw on studies of emotion perception when relevant, since both literatures may inform shared mechanisms in the neural basis of language and emotion.

We include research using diverse neuroscience methods, including fMRI, lesion, and electrical stimulation studies. Each approach has its strengths and limitations, so conclusions are more strongly supported when the approaches are considered together.

fMRI studies enable researchers to examine the macrolevel functional architecture of the entire brain. This is useful for assessing the overall topological similarity between brain regions previously implicated in semantic processing and those involved in the neural representation of emotion. fMRI can also provide insight into how these brain regions communicate with each other during a discrete emotional experience. Given these strengths, our review of the fMRI literature focuses on the degree to which a broad set of functionally connected brain areas, previously described as participating in a “semantic processing network,” also participates in emotion.

However, fMRI is fundamentally a correlational technique, so care must be taken not to overinterpret the findings. The fMRI findings we review below support the conclusion that functional activity in certain brain regions previously implicated in semantic processing is also associated with emotional experience (i.e., a “forward inference”; Poldrack, 2006). But the common involvement of these areas in emotion and semantic processing may arise for many reasons. The stronger claim, that activation in these areas implies semantic processing is occurring during emotion construction, is therefore not necessarily supported (i.e., a “reverse inference”; Poldrack, 2006).
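Poldrack (2006) framed the reverse-inference problem in terms of Bayes’ rule. The worked example below uses purely hypothetical probabilities, not estimates from any study. It shows why a forward inference does not license a reverse inference. The probability that semantic processing is engaged given activation in a region (abbreviated here as “sem” and “act”) depends on how often that region activates when semantic processing is absent.

$$
P(\text{sem} \mid \text{act}) = \frac{P(\text{act} \mid \text{sem})\,P(\text{sem})}{P(\text{act} \mid \text{sem})\,P(\text{sem}) + P(\text{act} \mid \neg\text{sem})\,P(\neg\text{sem})}
$$

Assuming, for illustration only, that $P(\text{act} \mid \text{sem}) = 0.8$, $P(\text{sem}) = 0.5$, and $P(\text{act} \mid \neg\text{sem}) = 0.4$:

$$
P(\text{sem} \mid \text{act}) = \frac{0.8 \times 0.5}{0.8 \times 0.5 + 0.4 \times 0.5} = \frac{0.40}{0.60} \approx 0.67
$$

Under these assumed values, the forward inference is strong: the region is active on 80% of semantic tasks. Yet the reverse inference stays modest, because the region is also engaged by non-semantic processes. The larger $P(\text{act} \mid \neg\text{sem})$ becomes, the weaker the reverse inference.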

We also examine converging findings from lesion and invasive electrical stimulation studies. These allow more causal conclusions about the role of language in emotion when brain regions linked to language are permanently damaged or directly manipulated. Although these methods cannot examine functional activity throughout the whole brain in vivo, they can indicate whether specific regions well established as critical for semantic processing are also important for representing discrete emotions.

Our review of lesion studies necessarily focuses on individual brain areas that were damaged in humans through disease, stroke, or other injury and that are associated with subsequent semantic deficits. We highlight the importance of the temporal cortex and, in particular, the temporal pole. The temporal pole is considered a “semantic hub” because individuals with damage to this area exhibit major deficits in semantic processing (Brambati et al., 2009; Grossman et al., 2004; Mummery et al., 2000; Patterson et al., 2007). Several lines of work also suggest it plays a critical role in concept retrieval (for a special issue on this topic, see Hoffman et al., 2015; Ralph, 2014). Although these studies support more causal interpretations, they are limited in that participants are, by definition, not neurotypical.

Finally, for electrical stimulation studies, we rely on a comprehensive review summarizing many individual studies that report emotional outcomes following electrical stimulation of diverse brain regions (Guillory & Bujarski, 2014). These studies necessarily focus on individual brain areas in which electrodes were implanted as part of treatment for neurological conditions (e.g., seizures). Again, although these studies support more causal interpretations, they are limited in that brain regions are targeted one at a time and participants are not neurotypical.

Below, we organize our review around four families of analytical approaches:

- activation-based fMRI analyses;
- connectivity-based fMRI analyses;
- multivoxel pattern analysis (MVPA)-based fMRI analyses;
- neuropsychology and electrical stimulation studies.

Collectively, we argue that these findings support a strong link between language and emotion.

Brain Regions Involved in Semantic Processing Are Involved in Making Meaning of Sensory Inputs as Emotions

If semantic processing is important for creating discrete emotions, then brain regions previously implicated in semantic processing may also be engaged when an individual experiences a discrete emotion (v. an undifferentiated state). More traditional models of emotion do not posit that brain regions such as the ventrolateral prefrontal cortex, anterior medial prefrontal cortex, or lateral temporal cortex and temporal poles underlie discrete emotion representations. In contrast, a key prediction of our constructionist model is that emotion concepts help transform more elemental sensations into experiences and perceptions of discrete emotions. Such sensations include interoceptive feelings of pleasure or displeasure, high or low arousal, or even exteroceptive inputs (Barrett, Lindquist, & Gendron, 2007; Lindquist, MacCormack, & Shablack, 2015; Lindquist, Satpute, & Gendron, 2015; Satpute et al., 2020).

Just as visual inputs can be perceived as lines in a given orientation, as a chair, or more abstractly as furniture, affective sensations can be conceptualized and experienced at different levels. They can be experienced as “discomfort in the stomach” (i.e., somaticized; Stonnington et al., 2013) or more abstractly as “fear” (Barrett, 2006; Lane & Schwartz, 1987; MacCormack & Lindquist, 2017; Satpute et al., 2012; Stonnington et al., 2013). From our constructionist perspective, conceptualization plays an integral role in transforming more rudimentary sensory information into discrete experiences of emotion. It may rely on brain regions that have also been implicated in semantic memory.

Testing this hypothesis is not straightforward. The ideal study would influence conceptualization without varying other features of experience. However, most studies compare an emotion induction against neutral states or another emotion state, which makes it difficult to isolate the processes related to conceptualization. Two studies by Oosterwijk and colleagues tested this prediction more directly.

In one study, participants were presented with evocative scenarios (Oosterwijk et al., 2012). They were trained to focus either on creating a discrete emotion experience from the scenario or on a more elemental bodily sensation (e.g., their heartbeat). For example, participants read the following (truncated) scenario: “You’re driving home after staying out drinking all night. You close your eyes for a moment, and the car begins to skid.” They were then trained to generate either a discrete emotion (e.g., feeling afraid) or a somatovisceral sensation (e.g., feelings of tiredness in the eyes or the body lurching forward). Thus, the stimulus remained constant, and task instructions were used to influence how the stimulus was conceptualized. Constructing experiences of emotion from the scenario engaged the left temporal cortex, extending into the temporal pole, to a greater degree than did constructing experiences of body states.

In another study, participants who were primed to conceptualize and experience affective images as discrete emotions showed greater activity in the anterior medial prefrontal cortex and lateral inferior frontal gyrus (Oosterwijk et al., 2016).

Participants were first led to (falsely) believe that a computer program, trained on previously collected data from each participant, could predict their response to a subsequently presented evocative image. The program would indicate either which of three emotions they would feel (disgust, fear, or morbid fascination) or that their response “could not be determined” (the control condition). Participants then completed a set of trials. In each trial, they first saw the program’s prediction of what they would feel (the conceptual prime), followed by an evocative image. Participants believed the predictions were accurate even though they were in fact randomly determined.

fMRI analyses focused on activity during image viewing periods. Jittered timing and catch trials were used to separate this activity from activity related to the visual presentation of the primes. The anterior medial prefrontal cortex and lateral inferior frontal gyrus were more engaged when participants were primed to experience affective images as discrete emotions of “disgust,” “fear,” or even “morbid fascination,” compared with the control condition.

Although few other neuroimaging studies have examined the relationship between conceptualization and emotional experience directly, these studies relate to a broader literature on the neural basis of action identification (Spunt et al., 2016). Oosterwijk’s studies held the stimulus constant and influenced how it was conceptualized. Similarly, Vallacher and Wegner’s (1987) action identification theory suggests that the same social stimulus can be construed, and thus experienced, in fundamentally different ways.

For example, the same visual stimulus can be identified as a lower-level, concrete behavior (e.g., a picture of someone “squinting eyes,” “shaking a fist,” or “yelling”). It can also be identified as a higher-order mental inference that includes an emotional state (e.g., “angry”). Sensory inputs from the body, too, can be perceived more concretely (e.g., “heart thumping”) or at a more abstract level (e.g., “feeling fear”; Lane et al., 2015).

A robust finding in the fMRI literature on action identification is that higher- v. lower-level identifications of the same stimulus produce greater activity in the temporal poles, the anterior medial prefrontal cortex, and the ventrolateral prefrontal cortex (see Fig. 1b; Spunt & Adolphs, 2015; Spunt et al., 2016; Spunt et al., 2011). Thus, both literatures suggest that brain regions involved in semantic processing are also involved in making meaning of sensory inputs as higher-order instances of discrete emotions.

Brain Regions Involved in Semantic Processing Have Greater Functional Connectivity with Circuitry Involved in Affective Processing During Emotional Experience

fMRI can also be used to investigate how brain regions communicate with each other during emotion construction. Semantic processing may play an important role in carving undifferentiated affective states into experiences of discrete emotions. If so, brain regions previously implicated in semantic processing should communicate more with brain regions supporting interoceptive and visceromotor processing (including the amygdala and other limbic areas).

Two studies examined which networks exhibited greater functional connectivity (i.e., correlated activity over time) while participants watched lengthy movie clips (Raz et al., 2012, 2016). Each clip targeted one discrete emotion and elicited varying degrees of sadness, fear, or anger over time. Greater functional connectivity between limbic areas (i.e., the nucleus accumbens, amygdala, and hypothalamus) and both the anterior medial prefrontal cortex and the temporal poles predicted more intense experiences of specific discrete emotions (Raz et al., 2012, 2016).
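As a minimal illustration of what “functional connectivity” means operationally, the sketch below computes the Pearson correlation between two region-of-interest (ROI) time series, along with its Fisher z-transform. The ROI names, the synthetic data, and the function `functional_connectivity` are hypothetical. This is a generic static-correlation sketch, not the analysis pipeline of Raz et al. It also omits the preprocessing and statistical modeling that a real study would require.

```python
import numpy as np


def functional_connectivity(ts_a, ts_b):
    """Return the Pearson r between two 1-D ROI time series and its Fisher z."""
    a = np.asarray(ts_a, dtype=float)
    b = np.asarray(ts_b, dtype=float)
    if a.ndim != 1 or a.shape != b.shape:
        raise ValueError("expected two 1-D time series of equal length")
    r = float(np.corrcoef(a, b)[0, 1])
    z = float(np.arctanh(r))  # Fisher z, often used before averaging across people
    return r, z


# Hypothetical data: 200 fMRI volumes per region (arbitrary units).
rng = np.random.default_rng(0)
shared = rng.standard_normal(200)  # fluctuation common to the two coupled regions
temporal_pole = shared + 0.5 * rng.standard_normal(200)
amygdala = shared + 0.5 * rng.standard_normal(200)
unrelated_roi = rng.standard_normal(200)  # no shared fluctuation

r_coupled, z_coupled = functional_connectivity(temporal_pole, amygdala)
r_uncoupled, _ = functional_connectivity(temporal_pole, unrelated_roi)
print(f"coupled r = {r_coupled:.2f}, uncoupled r = {r_uncoupled:.2f}")
```

With these settings, the coupled pair should correlate at roughly 0.8, because the shared signal accounts for about 1/1.25 of each region's variance. The unrelated pair should hover near zero.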

In another study, we found that connectivity between the amygdala and anterior medial PFC is also associated with more categorical, discrete perceptions of emotion (Satpute et al., 2016). Participants were shown pictures of morphed facial expressions ranging in degrees from “calm” to “fear.” They were instructed either to categorize the faces with discrete emotion words (i.e., a forced choice between “fear” and “calm”) or to rate them on an analog scale ranging continuously from “fear” to “calm.”

To measure perception, we calculated the point of subjective equivalence (PSE) separately for each judgment scale condition. The PSE is the point at which a face is categorized as “fear” or “calm” with equal probability. The influence of discretizing stimuli on emotion perception was measured as the difference in PSEs between conditions.

The more that discretizing affective stimuli influenced a participant’s emotion perception, the greater the connectivity between the amygdala and portions of the anterior medial prefrontal cortex. Of note, neither the ventrolateral prefrontal cortex nor the temporal pole was included as a region of interest in that study. Together, these findings suggest that brain regions involved in semantic processing show greater functional connectivity with circuitry involved in affective processing during emotional experience and perception.
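The PSE logic described above can be sketched in a few lines. This is a simplified illustration under stated assumptions:

- Responses in each condition are summarized as a curve rising from 0 (“calm”) to 1 (“fear”) across morph levels. For the analog scale, this is assumed to be the mean rating rescaled to 0–1.
- The PSE is found by linear interpolation at 0.5 rather than by fitting a full psychometric function (e.g., a logistic curve).

The function name `pse` and all data values are hypothetical and are not taken from Satpute et al. (2016).

```python
import numpy as np


def pse(morph_levels, p_fear, criterion=0.5):
    """Morph level at which a rising 'fear' response curve crosses `criterion`.

    Uses linear interpolation between the two morph levels that bracket the
    criterion; assumes the curve starts below the criterion and rises.
    """
    x = np.asarray(morph_levels, dtype=float)
    y = np.asarray(p_fear, dtype=float)
    above = np.nonzero(y >= criterion)[0]
    if above.size == 0 or above[0] == 0:
        raise ValueError("curve does not cross the criterion inside the tested range")
    i = above[0]
    x0, x1, y0, y1 = x[i - 1], x[i], y[i - 1], y[i]
    return x0 + (criterion - y0) * (x1 - x0) / (y1 - y0)


# Hypothetical morph continuum: 0 = fully "calm", 100 = fully "fear".
morphs = [0, 20, 40, 60, 80, 100]
forced_choice = [0.02, 0.15, 0.55, 0.85, 0.97, 0.99]  # proportion of "fear" choices
analog_scale = [0.05, 0.20, 0.40, 0.60, 0.80, 0.95]   # mean rating rescaled to 0-1

pse_forced = pse(morphs, forced_choice)  # 37.5 for these data
pse_analog = pse(morphs, analog_scale)   # 50.0 for these data
shift = abs(pse_analog - pse_forced)     # size of the discretizing effect
print(f"PSE forced = {pse_forced:.1f}, PSE analog = {pse_analog:.1f}, shift = {shift:.1f}")
```

In this toy example, the forced-choice curve crosses 0.5 earlier on the continuum than the analog curve, so the PSE shift is 12.5 morph units. A per-participant shift of this kind is what would then be related to connectivity.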

Brain Regions Involved in Semantic Processing Are Also Involved in Emotion Differentiation

The prior two sections asked whether areas previously implicated in semantic processing are engaged during emotion construction and whether they communicate with limbic structures. Another analytic approach is to examine whether these brain regions contain “representational content” that differentiates between discrete emotions. MVPA (Kriegeskorte et al., 2006; Mur et al., 2009) refers to a family of analytical approaches that examine whether the pattern of functional activity distributed across many brain voxels carries information that diagnoses the presence of a discrete emotion during a task (for reviews, see Clark-Polner et al., 2016; Kragel & LaBar, 2016; Nummenmaa & Saarimäki, 2017). Voxels are volumetric pixels, typically 1–3 mm on each side. MVPA studies have shown that brain regions previously implicated in semantic processing are also involved in differentiating one experience of emotion from another (Table 1).

Table 1.

Recent pattern classification studies consistently show that brain regions involved in semantic processing are also involved in differentiating between emotion categories

| Study | Emotions included | Experience (E) or perception (P) | Brain regions | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Anger | Fear | Happy/Joy | Sad | Disgust | Surprise | Neutral | Others | aMPFC | vlPFC | PCC | STS | TP | ||

| Saarimäki et al., 2016 | × | × | × | × | × | × | × | E | × | × | × | × | × | |

| Saarimäki et al., 2016 | × | × | × | × | × | × | × | E | × | × | × | × | ||

| Wager et al., 2015 | × | × | × | × | × | E/P | × | × | × | × | × | |||

| Said et al., 2010 | × | × | × | × | × | × | × | P | n/a | n/a | n/a | × | n/a | |

| Ethofer et al., 2009 | × | × | × | × | × | P | n/a | n/a | n/a | × | n/a | |||

| Peelen et al., 2010 | × | × | × | × | × | P | × | × | ||||||

| Kassam et al., 2013 | × | × | × | × | × | × | E | × | × | × | × | |||

| Kragel & LaBar, 2015 | × | × | × | × | × | × | E | × | × | × | × | × | ||

Studies are denoted by first author and year. For each study, the table shows which emotion categories were included and whether the study involved emotional experience (E; e.g., imagery or movie inductions) or emotion perception (P; e.g., viewing pictures of affective facial expressions). “n/a” indicates that a region was not included in the analysis. The reliability of the TP may also be underestimated because of signal dropout that commonly occurs in that area in most fMRI studies. Brain areas: anterior medial prefrontal cortex (aMPFC), ventrolateral prefrontal cortex (vlPFC), precuneus/posterior cingulate cortex (PCC), superior temporal sulcus (STS), and temporal pole (TP). Emotional experience inductions were conducted in studies by Kragel and LaBar (2015), Kassam et al. (2013), and Saarimäki et al. (2016) (Experiments 1 and 2). Language and emotion words were used in some of these studies (Saarimäki et al., 2016; Kassam et al., 2013), but the influence of language was mitigated in others (Kragel & LaBar, 2015; see main text for details). Wager et al. (2015) included both emotional experience and emotion perception studies.

Kassam et al. (2013) was one of the earliest studies to use this approach. They used an idiographic method for inducing emotions in which method actors wrote out their own scenarios for nine discrete emotions. While undergoing fMRI, participants were shown emotion words to cue retrieval of those scenarios and the corresponding discrete emotional states. The authors obtained the brain activation pattern for each trial and found that a classifier could diagnose the emotional state from that pattern. Critically, their analysis was performed on all voxels throughout the brain, which gave every brain region an equal opportunity to contribute to classifying emotions. Next, they identified the voxels that contributed most to classification success. The informative voxels were often in brain regions that have also been implicated in semantic processing, including the temporal poles, the anterior dorsomedial prefrontal cortex, and the ventrolateral prefrontal cortex.
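To make the classification logic concrete, the sketch below works on synthetic trial-by-voxel patterns. It trains a simple correlation-based nearest-centroid classifier with leave-one-trial-out cross-validation. It then ranks voxels by a one-way ANOVA F statistic to show which ones carry emotion information. This is a swapped-in, generic technique for illustration, not the classifier or voxel-selection procedure used by Kassam et al. (2013). The emotion labels, voxel counts, and function names are hypothetical.

```python
import numpy as np


def nearest_centroid_loo(patterns, labels):
    """Leave-one-trial-out accuracy of a correlation-based nearest-centroid classifier.

    patterns: (n_trials, n_voxels) activation patterns; labels: (n_trials,) emotions.
    """
    X = np.asarray(patterns, dtype=float)
    y = np.asarray(labels)
    classes = np.unique(y)
    correct = 0
    for i in range(len(y)):
        train = np.arange(len(y)) != i  # hold out trial i
        centroids = [X[train & (y == c)].mean(axis=0) for c in classes]
        # Predict the emotion whose mean training pattern best correlates with trial i.
        r = [np.corrcoef(X[i], cen)[0, 1] for cen in centroids]
        correct += int(classes[int(np.argmax(r))] == y[i])
    return correct / len(y)


def voxel_f_scores(patterns, labels):
    """One-way ANOVA F per voxel: between-emotion vs. within-emotion variance."""
    X = np.asarray(patterns, dtype=float)
    y = np.asarray(labels)
    classes = np.unique(y)
    grand_mean = X.mean(axis=0)
    ss_between = sum((y == c).sum() * (X[y == c].mean(axis=0) - grand_mean) ** 2 for c in classes)
    ss_within = sum(((X[y == c] - X[y == c].mean(axis=0)) ** 2).sum(axis=0) for c in classes)
    df_between, df_within = len(classes) - 1, len(y) - len(classes)
    return (ss_between / df_between) / (ss_within / df_within)


# Hypothetical data: 3 emotions x 20 trials, 50 voxels; only voxels 0-5 carry signal.
rng = np.random.default_rng(1)
labels = np.repeat(["anger", "fear", "joy"], 20)
X = rng.standard_normal((60, 50))
informative = {"anger": slice(0, 2), "fear": slice(2, 4), "joy": slice(4, 6)}
for emotion, voxels in informative.items():
    X[labels == emotion, voxels] += 3.0

acc = nearest_centroid_loo(X, labels)
f = voxel_f_scores(X, labels)
print(f"LOO accuracy = {acc:.2f} (chance = 0.33)")
print("most informative voxels:", sorted(np.argsort(f)[-6:].tolist()))
```

Here the F scores are computed on all trials purely to show which voxels are informative. If voxels were instead selected to improve classification, the selection would need to happen inside each cross-validation fold to avoid inflated accuracy estimates.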

Other recent studies have reported similar results using diverse stimuli to evoke emotions in both emotion perception and emotional experience paradigms (Table 1; Ethofer et al., 2009; Kragel & LaBar, 2015; Peelen et al., 2010; Saarimäki et al., 2016; Said et al., 2010; Wager et al., 2015). These include several studies that did not explicitly present words during the task (Ethofer et al., 2009; Kragel & LaBar, 2015; Saarimäki et al., 2016; Said et al., 2010). One of the more consistent findings across these studies is that voxels in the temporal poles, the anterior prefrontal cortex, and the ventrolateral prefrontal cortex reliably carry information for classifying emotions (Table 1 and Fig. 1c; for a review, see Satpute & Lindquist, 2019). These findings further suggest that brain regions previously implicated in semantic processing carry representational content for emotions.

Brain Regions Involved in Semantic Processing Are Necessary for Emotion Differentiation

The research we have reviewed thus far stems mostly from correlational brain imaging techniques. But there is also growing evidence that brain regions involved in semantic processing are causally important for emotion representation. If language plays a critical role in emotion, then damage to or disruption of areas important for semantic processing should also produce deficits in representing discrete emotions (Lindquist et al., 2014). In humans, such evidence comes from two methodologies: brain damage studies and brain stimulation studies.

There is a broad literature examining emotion perception deficits in patients with brain damage (e.g., Borod et al., 1986; Schwartz et al., 1975). However, most of this work cannot address the relationship between language and emotion, for two reasons. First, affect and emotion were poorly distinguished in the experimental designs. Second, it was difficult to precisely localize sources of brain damage, so this earlier work instead compared broad damage to the right v. left hemisphere (Yuvaraj et al., 2013).

With the support of neuroimaging methods, more recent work has used voxel-based lesion-symptom mapping techniques to precisely localize damaged tissue. One study using this technique examined patients (N = 71) who underwent tumor removal. Emotion perception deficits correlated with greater damage in areas previously implicated in semantic processing, including bilateral anterior temporal cortex and temporal poles (Fig. 1e; Campanella et al., 2014). In another study, Grossi et al. (2014) used experience sampling techniques that assess emotional experience in daily life. Patients with lesions to the temporal pole reported experiencing fewer discrete emotions in daily life. Yet they showed no difference in their reports of other states, such as concrete physical sensations (e.g., pain and heartbeat).

These findings suggest that the lateral temporal cortex and temporal pole may be particularly important for representing discrete emotions. These regions are not necessarily critical for affective representations, which may instead involve other brain regions such as the insula and cingulate cortex (Craig, 2009; Critchley, 2009; MacCormack & Lindquist, 2017).
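The core of voxel-based lesion-symptom mapping can be sketched as a mass-univariate comparison. At each voxel, patients whose lesion includes that voxel are compared, on a behavioral score, with patients whose lesion spares it. The sketch below uses Welch's t statistic and a minimum-group-size threshold. The function name `vlsm_t_map`, the threshold of five patients, and the synthetic data are assumptions for illustration. Real analyses typically also add lesion-volume covariates and corrections for multiple comparisons.

```python
import numpy as np


def vlsm_t_map(lesion_masks, scores, min_patients=5):
    """Welch's t per voxel comparing spared vs. lesioned patients' behavioral scores.

    lesion_masks: (n_patients, n_voxels) booleans, True where the voxel is damaged.
    scores: (n_patients,) scores, higher = better emotion perception.
    Positive t means patients with damage at the voxel score lower. Voxels with
    fewer than `min_patients` lesioned or spared patients are left as NaN.
    """
    L = np.asarray(lesion_masks, dtype=bool)
    s = np.asarray(scores, dtype=float)
    t = np.full(L.shape[1], np.nan)
    for v in range(L.shape[1]):
        lesioned, spared = s[L[:, v]], s[~L[:, v]]
        if len(lesioned) < min_patients or len(spared) < min_patients:
            continue
        se = np.sqrt(lesioned.var(ddof=1) / len(lesioned) + spared.var(ddof=1) / len(spared))
        t[v] = (spared.mean() - lesioned.mean()) / se
    return t


# Hypothetical data: 60 patients, 20 voxels; damage at voxel 4 lowers scores.
rng = np.random.default_rng(2)
lesions = rng.random((60, 20)) < 0.3
scores = 10.0 - 3.0 * lesions[:, 4] + rng.standard_normal(60)

t_map = vlsm_t_map(lesions, scores)
print("voxel with largest t:", int(np.nanargmax(t_map)), "t =", round(float(t_map[4]), 1))
```

In this toy example, only damage at voxel 4 affects scores, so that voxel should stand out with a large positive t. The remaining voxels should show values consistent with noise.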

Similarly, patients with a variety of aphasias (including some patients with receptive aphasia) retain the experience of affect, in that they can report on their general positive and negative mood (Haley et al., 2015). These findings are consistent with the prediction that the temporal pole is necessary for making meaning of sensory inputs as moments of discrete emotion. Without it, these moments are represented more concretely, as affect or as more elemental physical sensations (MacCormack & Lindquist, 2017). To date, few other studies of semantic deficits have also examined discrete emotions.

In principle, the ideal test of this hypothesis would examine whether patients with semantic deficits also have deficits in experiences of discrete emotions. However, such studies are practically challenging. There are few, if any, objective measures (behavioral, psychophysiological, or neural) that reliably identify when a person is experiencing one discrete emotion over another (Barrett, 2006; Lindquist et al., 2012; Mauss & Robinson, 2009; Siegel et al., 2018). Studies have thus relied on emotion perception, which allows participants’ perceptual judgments of standardized emotional visual stimuli to be quantified.

A few studies have tested the hypothesis that individuals with lesions to areas involved in semantic processing also have deficits in emotion perception. An early case study examined a patient who had lost the ability to name objects following a stroke. When asked to sort pictures of facial expressions into piles, he produced disorganized piles that did not reflect discrete emotion categories (Roberson et al., 1999).

More recently, we designed a behavioral paradigm (Lindquist et al., 2014) to test whether patients with semantic dementia would also lose the ability to perceive discrete emotions in faces. Semantic dementia is a form of primary progressive aphasia that impairs the availability and use of concept knowledge, a.k.a. a receptive aphasia (Gorno-Tempini et al., 2011; Hodges & Patterson, 2007). Patients performed a card sort task. They sorted pictures of emotional facial expressions (anger, disgust, fear, happiness, sadness, and neutral) into as many piles as they saw fit. Unlike healthy controls, patients did not separate the faces into six piles reflecting discrete emotions. Rather, they separated the faces into categories of affect (e.g., pleasant, neutral, and unpleasant), suggesting that they perceived valence, but not discrete emotion, in the faces. Control tasks ruled out alternative hypotheses (e.g., that patients had difficulty understanding a sorting task, that patients had more general deficits in face processing, and that patients could understand emotion categories if cued with words).

Two more recent studies have conceptually replicated these findings. First, Jastorff et al. (2016) used behavioral deficits in emotion differentiation to predict brain-wide patterns of neurodegeneration in patients with dementia. Patients who had difficulty identifying specific emotions in dynamic body expressions were more likely to show degeneration in regions associated with semantics, such as the vlPFC and temporal pole. Second, Bertoux et al. (2020) demonstrated that semantic fluency and conceptual knowledge about emotion predicted deficits in facial emotion perception in patients with semantic dementia, an effect associated with neurodegeneration in ventral frontal and temporal regions and with changes to white matter tracts linking frontal and temporal cortices.

Whereas lesion studies assess causal relations by examining the consequences of permanent damage to brain regions, stimulation studies directly and transiently stimulate brain regions intracranially. These studies have limitations of their own: they typically occur in individuals undergoing brain surgery and thus also involve people with brain pathology. Individual studies rarely examine more than one area, but such studies have accumulated over the past several decades. Guillory and Bujarski (2014) comprehensively summarized 64 of them. Consistent with the lesion findings, stimulation of the temporal pole and lateral temporal cortex, regions also involved in semantics, appeared to elicit discrete emotional experiences (Fig. 1d; e.g., anxiety and sadness; Guillory & Bujarski, 2014). Together with the lesion studies, these findings provide some of the first causal evidence that brain regions implicated in semantic processing are also necessary for emotion differentiation.

Implications for Emotion Theory

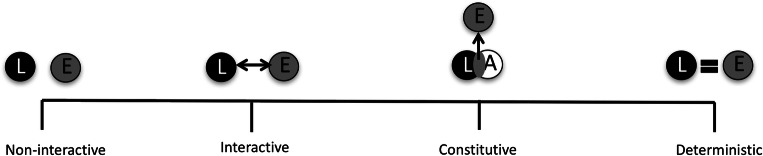

While each set of findings reviewed above has its strengths and weaknesses, the collective body of work suggests that brain regions known to be important for semantic processing are also important for representing discrete emotions. These findings can shed light on the possible mechanisms by which semantic processing is associated with emotion. Figure 2 represents a set of views on the relationship between language and emotion along a dimension ranging from non-interactive to deterministic. At the extremes, existing psychological models of emotion argue that emotions are either non-interactive with language (e.g., some variants of basic emotions models; Ekman & Cordaro, 2011) or entirely determined by language (e.g., the Linguistic Determinism Hypothesis, as outlined by Kay & Kempton, 1984). Given the neuroscience data we have reviewed here and the behavioral data we have reviewed elsewhere (Lindquist, Gendron, & Satpute, 2016; Lindquist, MacCormack, & Shablack, 2015; Lindquist, Satpute, & Gendron, 2015), it is more likely that language and emotion form either an interactive or a constitutive relationship. We explore the implications of the neuroscientific findings for these two approaches here and look to future studies that can weigh in on the precise relationship between language and emotion.

Fig. 2.

A spectrum of relationships between language and emotion. “L” denotes language, whereas “E” denotes emotion. “A” refers to affect, which, according to the constitutive approach, is another basic “ingredient” that combines with language to create emotions. The far left section depicts language and emotion in a non-interactive relationship, such that language has little to no influence upon emotional experience, and vice versa. Basic emotions models that view emotions as triggering physiomotor action programs (Ekman & Cordaro, 2011), or as performing specific behaviors that are conserved across species (Panksepp, 1998), view emotions as entities separate from the words used to describe them. Still other models argue that universal emotion experiences “sediment” out in language for the purpose of communication (Fontaine et al., 2013; Scherer & Moors, 2019). The middle left section depicts an interactive relationship in which language may modulate emotion, for instance by increasing or decreasing the strength of an emotion. Theories that emphasize an interaction between “cognition” and “emotion” may fall in this range (Ellsworth, 2013; Kirkland & Cunningham, 2012; Moors & Scherer, 2013; Schachter & Singer, 1962). The middle right section depicts a constitutive relationship, in which language (i.e., emotion words like “anger” and “calm”) serves as a key ingredient in the construction of emotion. The far right section depicts a deterministic relationship, in which emotions are wholly constructed by language (as in the Linguistic Determinism Hypothesis).

According to the “interactive view,” emotion words and the semantic concepts they refer to are separate from emotion but may interact with emotion after it has been formed (e.g., to communicate emotion or facilitate emotion regulation) or before it is generated (e.g., by directing attention to certain aspects of a stimulus, which may influence information processing leading up to an emotion). Models that propose that “cognition interacts with emotion” generally fall somewhere in this range (Clore & Ortony, 2008; Ellsworth, 2013; Kirkland & Cunningham, 2012; Moors & Scherer, 2013; Schachter & Singer, 1962). At the neural level, the interactive view implies that semantic processing and emotion processing involve distinct systems that may nonetheless exert influences upon one another. For example, some emotion models suggest that the representational content for discrete emotions involves brainstem and somatosensory cortical areas (Damasio & Carvalho, 2013), or brainstem circuits that underlie defensive behaviors (Panksepp, 2011). On these accounts, a semantic system, as a subset of a broader “cognitive” system, may take on a modulatory role over the emotional system by up- or down-regulating emotion, but does not itself help to represent or create these states.

As such, the interactive view may account for the first two sets of findings reviewed above: that brain regions previously shown to be involved in semantic processing are also active during emotional experience, and that these regions show greater connectivity with those supporting affective processing. However, it may be more difficult for this view to explain the latter two sets of findings. The interactive view assumes that the representational content for emotion (the patterns of neural activity that instantiate one emotion, as distinct from another, at a given moment; Mur et al., 2009) is supported by brain regions separate from those involved in semantic processing. On this view, damage to areas involved in semantic processing would not be expected to eliminate the ability to perceive and experience discrete emotions.

The constitutive view proposes a different relationship between semantic processing and emotion, in which emotion words and concepts play a constitutive role in creating emotion. On this view, the constructs are organized hierarchically: semantic processing is a necessary ingredient for creating the experience and perception of discrete emotions (though not the only required ingredient). This idea is proposed by the constructionist approach to emotion called the Theory of Constructed Emotion (Barrett, 2006, 2017; Lindquist, MacCormack, & Shablack, 2015; Lindquist, Satpute, & Gendron, 2015). The idea that language helps to organize perceptual information into categories is not unique to our constructionist model of emotion; it also appears in models from cognitive psychology more broadly (Barsalou, 2008; Borghi & Binkofski, 2014; Lupyan & Thompson-Schill, 2012; Steels & Belpaeme, 2005; Tenenbaum et al., 2011; Vigliocco et al., 2009; Xu & Tenenbaum, 2007). For instance, Lupyan and Clark (2015) provide a mechanistic account of how language plays an integral role in creating perceptual predictions. We have argued elsewhere (Lindquist, MacCormack, & Shablack, 2015; Lindquist, Satpute, & Gendron, 2015; Shablack & Lindquist, in press) that this role of language in perceptual predictions may also extend to emotional experiences and perceptions. Emotion words may be especially important for perceptual predictions related to emotions, because words help to represent abstract concepts that lack firm perceptual boundaries, such as emotions (Lindquist, MacCormack, & Shablack, 2015; Lindquist, Satpute, & Gendron, 2015; Niedenthal et al., 2005; Vigliocco et al., 2009; Wilson-Mendenhall et al., 2011).

In our constructionist view, emotion words such as “anger,” “sadness,” and “joy” name concepts that help transform the brain’s pleasant, unpleasant, and arousing affective predictions about the meaning of stimuli, whether in the world or inside the body, into perceptions and experiences of discrete emotions (a process also referred to as “conceptualization”; Barrett, 2017; Lindquist, MacCormack, & Shablack, 2015; Lindquist, Satpute, & Gendron, 2015; Lindquist, Gendron, & Satpute, 2016). Conceptualization transforms more elemental affective sensory inputs into experiences of discrete emotions (e.g., unpleasantness into fear or anger; Lindquist & Barrett, 2008). Words are therefore “constitutive” of emotions: they help a person acquire a representation of emotion that groups together instances with diverse and heterogeneous features, and they are intimately linked with the concept knowledge necessary to make meaning of affect as specific discrete emotions in context (Brooks et al., 2016; Lindquist, MacCormack, & Shablack, 2015; Lindquist, Satpute, & Gendron, 2015; Satpute et al., 2016).1

The constitutive view is not only consistent with all four sets of findings above; it also predicts that the representational content for emotion resides in brain regions that support semantic processing, and that these areas are necessary for representing discrete emotions. On this view, brain regions involved in semantic processing are critical to emotions because they support conceptualization, the process whereby concepts make meaning of affect to create discrete emotions (Barrett, 2017; Barrett, Mesquita, et al., 2007). These latter findings are not clearly predicted by the interactive view and are the hardest for it to explain without ad hoc assumptions.

An open question concerns how the above findings relate to the phenomenon of affect labeling. Several studies have now shown that matching affective stimuli (e.g., an affective facial expression) with emotion words (e.g., “fear”) is associated with reduced amygdala response (Hariri et al., 2000; Lieberman, 2011; Lieberman et al., 2007). This finding may seem at odds with our constitutive view and may instead suggest that emotion words and emotions interact competitively. However, as described by Torre and Lieberman (2018), explicitly labeling emotional experiences with emotion words influences judgments of affect (e.g., negative valence), but those studies did not test how emotion labeling influences the perception or experience of discrete emotions (for a more in-depth discussion of affect labeling, see Brooks et al., 2016; Lindquist, MacCormack, & Shablack, 2015; Lindquist, Satpute, & Gendron, 2015; Lindquist, Gendron, & Satpute, 2016). Labeling the meaning of an affective stimulus (e.g., a negative face) may reduce the ambiguity, novelty, or unpredictability of the sensory input by helping to refine it as a specific emotion (e.g., fear; Brooks et al., 2016; Nook et al., 2015). Because the amygdala is also responsive to uncertainty (Pessoa & Adolphs, 2010; Rosen & Donley, 2006), a decrease in amygdala activity may more likely reflect a reduction of uncertainty and associated negative affect than a reduction in discrete emotion per se. Indeed, it is possible that upon labeling a facial expression or an encounter with a spider as “fearful,” feelings of more general negative valence diminish while the experience of fear as a discrete emotion persists. Evidence reported in this issue (Nook et al., this issue) suggests that labeling one’s affective responses to images can “crystallize” the experience of a discrete emotion and make it more difficult to subsequently regulate. Future work could address these possibilities directly.

Conclusions

Understanding the role of language in emotion may benefit from research conducted at multiple levels of analysis. We and others have previously reviewed behavioral (see reviews by Lindquist & Gendron, 2013; Lindquist, MacCormack, & Shablack, 2015; Lindquist, Satpute, & Gendron, 2015; Lindquist, Gendron, & Satpute, 2016; Satpute et al., 2020; Shablack & Lindquist, in press), cross-cultural (Gendron et al., 2020), and linguistic (Jackson et al., 2019) findings that are consistent with a constitutive role for language in constructing emotional experience. Findings from this special issue also point to the important role of language in the development of emotion understanding (Grosse, Streubel, Gunzenhauser, & Saalbach, this issue), in emotion regulation (Nook, Satpute, & Ochsner, this issue), and in interaction with culture (Zhou, Dewaele, Ochs, & De Leersnyder, this issue). Whereas behavioral findings only allow researchers to examine the “inputs” and “outputs” of emotion, neuroscience can further examine the mechanisms at play in a person as they experience and perceive emotions in real time. Our review suggests that brain regions frequently implicated in semantic processing are engaged during experiences of discrete emotions, communicate with areas involved in sensory processing (including interoceptive and exteroceptive inputs) during emotion, carry representational content for emotion, and may even be necessary for emotion. Moreover, our view that language shapes emotional experience dovetails with the work of others who have argued that language plays a role in shaping other psychological phenomena (e.g., cognition, perceptual learning, and memory; Barsalou et al., 2008; Boroditsky, 2011; Lupyan & Clark, 2015; Satpute et al., 2020; Slobin, 1987).

However, the findings are not yet definitive, for a few reasons. Overall, few studies have examined the neural basis of emotion language in particular and how the associated brain regions contribute to emotional experience. Apart from the handful of studies discussed here, few fMRI experiments have tried to separate the differential contributions of emotion concepts and affective processing during task engagement. To do so, researchers may adopt paradigms that manipulate the demands placed on retrieving and labeling emotions (e.g., Satpute et al., 2012). Researchers may also draw on established behavioral paradigms that temporarily impair access to emotion words (e.g., Lindquist et al., 2006) or increase their accessibility (e.g., Lindquist & Barrett, 2008; Nook et al., 2015). Notably, we are aware of no fMRI experiments that simply require participants to freely label their emotions, even though free labeling is known to produce markedly different response patterns than forced-choice tasks (Russell, 1991; Russell & Widen, 2002) and, as we have recently found, may crystallize a discrete emotion and render it less malleable to change (Nook et al., this issue).

With regard to more causal analyses, as we acknowledge, most studies of patients with brain damage were not designed to weigh in on the role of language deficits in emotion versus affect processing. Future lesion studies in patients with and without semantic deficits would be of central interest for testing the hypotheses of the constitutive view. It would also be of interest to test whether deficits in emotion perception due to damage in other areas can be explained by more general affective processing deficits (e.g., as in Cicone et al., 1980) or uniquely affect the processing of discrete emotions. Finally, studies could use transcranial magnetic stimulation to create “temporary lesions” in brain regions associated with semantics in healthy individuals, to test whether impairing language-related brain regions disrupts emotion versus affect processing. We look forward to investigating these avenues in our own work and hope others will pursue them as well.

Acknowledgments

Research reported in this publication was supported by the Department of Graduate Education of the National Science Foundation (1835309), by the Division of Brain and Cognitive Sciences of the National Science Foundation (1947972), and by the Division of Brain and Cognitive Sciences of the National Science Foundation (1551688).

Additional Information

Conflict of Interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Footnotes

Notably, the distinction we draw between interactive and constitutive roles parallels Aristotelian efficient and material causality, respectively. This distinction is a common theme in emotion research. When considering the relationship between emotion and appraisal, Ellsworth (2013) points out that the question of whether appraisals “cause” emotion is vague. Appraisals can be viewed as causing emotion in the sense that they are separate from emotion and trigger emotions (e.g., as one pool ball causes another to move; an interactive view) or—as she proposes—in the sense that they are part of the emotion (i.e., appraisals are an ingredient of the emotion; a constitutive view). The same ambiguity arises when considering the relationship between emotion and cognition more broadly. For instance, Pessoa (2008) advocates for a constitutive relationship—that the same underlying and overlapping processes compose emotion and cognition and thus the same domain-general ingredients make emotions and cognitions (also see Barrett & Satpute, 2013; Wager et al., 2015). And yet a more commonplace assumption is that emotion “interacts with” cognition in the sense that one exerts an influence upon the other or vice versa. This distinction is usefully applied to areas outside of emotion as well. For instance, in cognitive psychology, ongoing debates examine whether concepts are represented apart from sensory-motor representations but may interact with them (i.e., an interactive view), or whether concepts are constituted from these sensory-motor representations themselves (i.e., a constitutive view, see Barsalou, 2008; Binder, 2016; Deacon, 1998; Fernandino et al., 2016; Leshinskaya & Caramazza, 2016). 
Parallel arguments have arisen in research on language and thought: many researchers may agree with the general claim that “language shapes thought,” but the disagreement, and the potential for theoretical advances, lies in whether language is constitutive of thought (indeed, is the vehicle for thought) or merely interacts with thought (Boroditsky, 2001; Kay & Kempton, 1984). In general, whether psychological constructs relate to one another interactively or constitutively is a difficult yet important question in psychology and neuroscience.

References

- Barrett LF. Solving the emotion paradox: Categorization and the experience of emotion. Personality and Social Psychology Review. 2006;10:20–46. doi: 10.1207/s15327957pspr1001_2.

- Barrett LF. The theory of constructed emotion: An active inference account of interoception and categorization. Social Cognitive and Affective Neuroscience. 2017;12:1–23. doi: 10.1093/scan/nsw154.

- Barrett LF, Bliss-Moreau E. Affect as a psychological primitive. Advances in Experimental Social Psychology. 2009;41:167–218. doi: 10.1016/S0065-2601(08)00404-8.

- Barrett LF, Lindquist KA, Bliss-Moreau E, Duncan S, Gendron M, Mize J, Brennan L. Of mice and men: Natural kinds of emotions in the mammalian brain? A response to Panksepp and Izard. Perspectives on Psychological Science. 2007;2:297–311. doi: 10.1111/j.1745-6916.2007.00046.x.

- Barrett LF, Lindquist KA, Gendron M. Language as context for the perception of emotion. Trends in Cognitive Sciences. 2007;11:327–332. doi: 10.1016/j.tics.2007.06.003.

- Barrett LF, Mesquita B, Ochsner KN, Gross JJ. The experience of emotion. Annual Review of Psychology. 2007;58:373–403. doi: 10.1146/annurev.psych.58.110405.085709.

- Barrett LF, Satpute AB. Large-scale brain networks in affective and social neuroscience: Towards an integrative functional architecture of the brain. Current Opinion in Neurobiology. 2013;23:361–372. doi: 10.1016/j.conb.2012.12.012.

- Barrett LF, Satpute AB. Historical pitfalls and new directions in the neuroscience of emotion. Neuroscience Letters. 2019;693:9–18. doi: 10.1016/j.neulet.2017.07.045.

- Barsalou LW. Grounded cognition. Annual Review of Psychology. 2008;59:617–645. doi: 10.1146/annurev.psych.59.103006.093639.

- Barsalou LW, Santos A, Simmons WK, Wilson CD. Language and simulation in conceptual processing. In: De Vega M, Glenberg AM, Graesser AC, editors. Symbols, embodiment, and meaning. Oxford: Oxford University Press; 2008. pp. 245–283.

- Bertoux M, Duclos H, Caillaud M, Segobin S, Merck C, de la Sayette V, Belliard S, Desgranges B, Eustache F, Laisney M. When affect overlaps with concept: Emotion recognition in semantic variant of primary progressive aphasia. Brain. 2020:awaa313.

- Binder JR. In defense of abstract conceptual representations. Psychonomic Bulletin & Review. 2016;23:1096–1108. doi: 10.3758/s13423-015-0909-1.

- Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cerebral Cortex. 2009;19:2767–2796. doi: 10.1093/cercor/bhp055.

- Borghi AM, Binkofski F. Words as social tools: An embodied view on abstract concepts. New York, NY: Springer; 2014.

- Borod JC, Koff E, Lorch MP, Nicholas M. The expression and perception of facial emotion in brain-damaged patients. Neuropsychologia. 1986;24:169–180. doi: 10.1016/0028-3932(86)90050-3.

- Boroditsky L. Does language shape thought?: Mandarin and English speakers' conceptions of time. Cognitive Psychology. 2001;43:1–22. doi: 10.1006/cogp.2001.0748.

- Boroditsky L. How language shapes thought. Scientific American. 2011;304:62–65. doi: 10.1038/scientificamerican0211-62.

- Brambati S, Rankin K, Narvid J, Seeley W, Dean D, Rosen H, Miller BL, Ashburner J, Gorno-Tempini M. Atrophy progression in semantic dementia with asymmetric temporal involvement: A tensor-based morphometry study. Neurobiology of Aging. 2009;30:103–111. doi: 10.1016/j.neurobiolaging.2007.05.014.

- Brooks JA, Shablack H, Gendron M, Satpute AB, Parrish MH, Lindquist KA. The role of language in the experience and perception of emotion: A neuroimaging meta-analysis. Social Cognitive and Affective Neuroscience. 2016;12(2):169–183. doi: 10.1093/scan/nsw121.

- Buhle JT, Kober H, Ochsner KN, Mende-Siedlecki P, Weber J, Hughes BL, Kross E, Atlas LY, McRae K, Wager TD. Common representation of pain and negative emotion in the midbrain periaqueductal gray. Social Cognitive and Affective Neuroscience. 2012;8:609–616. doi: 10.1093/scan/nss038.

- Campanella F, Shallice T, Ius T, Fabbro F, Skrap M. Impact of brain tumour location on emotion and personality: A voxel-based lesion–symptom mapping study on mentalization processes. Brain. 2014;137:2532–2545. doi: 10.1093/brain/awu183.

- Cicone M, Wapner W, Gardner H. Sensitivity to emotional expressions and situations in organic patients. Cortex. 1980;16:145–158. doi: 10.1016/s0010-9452(80)80029-3.

- Clark-Polner E, Wager TD, Satpute AB, Barrett LF. The brain basis of affect, emotion, and emotion regulation: Current issues. In: Lewis M, Haviland-Jones J, Barrett LF, editors. Handbook of emotions. 4th ed. New York, NY: The Guilford Press; 2016. pp. 146–165.

- Clore GL, Ortony A. Appraisal theories: How cognition shapes affect into emotion. In: Lewis M, Haviland-Jones JM, Barrett LF, editors. Handbook of emotions. New York, NY: The Guilford Press; 2008. pp. 628–642.

- Clore GL, Ortony A. Psychological construction in the OCC model of emotion. Emotion Review. 2013;5:335–343. doi: 10.1177/1754073913489751.

- Craig AD. Emotional moments across time: A possible neural basis for time perception in the anterior insula. Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences. 2009;364:1933–1942. doi: 10.1098/rstb.2009.0008.

- Critchley HD. Psychophysiology of neural, cognitive and affective integration: fMRI and autonomic indicants. International Journal of Psychophysiology. 2009;73:88–94. doi: 10.1016/j.ijpsycho.2009.01.012.

- Cunningham WA, Dunfield KA, Stillman PE. Emotional states from affective dynamics. Emotion Review. 2013;5:344–355.

- Dalgleish T. The emotional brain. Nature Reviews Neuroscience. 2004;5:583–589. doi: 10.1038/nrn1432.

- Damasio A, Carvalho GB. The nature of feelings: Evolutionary and neurobiological origins. Nature Reviews Neuroscience. 2013;14:143–152. doi: 10.1038/nrn3403.

- Deacon TW. The symbolic species: The co-evolution of language and the brain. New York, NY: W. W. Norton & Company; 1998.

- Ekman P, Cordaro D. What is meant by calling emotions basic. Emotion Review. 2011;3:364–370.

- Ellsworth PC. Appraisal theory: Old and new questions. Emotion Review. 2013;5:125–131.

- Ellsworth PC, Scherer KR. Appraisal processes in emotion. In: Davidson RJ, Scherer KR, Goldsmith HH, editors. Handbook of affective sciences. Oxford University Press; 2003. pp. 572–595.

- Ethofer T, Van De Ville D, Scherer K, Vuilleumier P. Decoding of emotional information in voice-sensitive cortices. Current Biology. 2009;19:1028–1033. doi: 10.1016/j.cub.2009.04.054.

- Fehr B, Russell JA. Concept of emotion viewed from a prototype perspective. Journal of Experimental Psychology: General. 1984;113:464–486.

- Fernandino L, Humphries CJ, Conant LL, Seidenberg MS, Binder JR. Heteromodal cortical areas encode sensory-motor features of word meaning. Journal of Neuroscience. 2016;36:9763–9769. doi: 10.1523/JNEUROSCI.4095-15.2016.

- Fontaine JJR, Scherer KR, Soriano C, editors. Components of emotional meaning: A sourcebook. Oxford University Press; 2013.

- Gendron M, Hoemann K, Crittenden AN, Mangola SM, Ruark GA, Barrett LF. Emotion perception in Hadza Hunter-Gatherers. Scientific Reports. 2020;10:3867. doi: 10.1038/s41598-020-60257-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gorno-Tempini ML, Hillis AE, Weintraub S, Kertesz A, Mendez M, Cappa SF, Ogar JM, Rohrer JD, Black S, Boeve BF, Manes F. Classification of primary progressive aphasia and its variants. Neurology. 2011;76:1006–1014. doi: 10.1212/WNL.0b013e31821103e6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grossi D, Di Vita A, Palermo L, Sabatini U, Trojano L, Guariglia C. The brain network for self-feeling: A symptom-lesion mapping study. Neuropsychologia. 2014;63:92–98. doi: 10.1016/j.neuropsychologia.2014.08.004. [DOI] [PubMed] [Google Scholar]

- Grossman M, McMillan C, Moore P, Ding L, Glosser G, Work M, Gee J. What’s in a name: Voxel-based morphometric analyses of MRI and naming difficulty in Alzheimer’s disease, frontotemporal dementia and corticobasal degeneration. Brain. 2004;127:628–649. doi: 10.1093/brain/awh075. [DOI] [PubMed] [Google Scholar]

- Guillory SA, Bujarski KA. Exploring emotions using invasive methods: Review of 60 years of human intracranial electrophysiology. Social Cognitive and Affective Neuroscience. 2014;9:1880–1889. doi: 10.1093/scan/nsu002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haley KL, Womack JL, Harmon TG, Williams SW. Visual analog rating of mood by people with aphasia. Topics in Stroke Rehabilitation. 2015;22:239–245. doi: 10.1179/1074935714Z.0000000009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hamann S. Mapping discrete and dimensional emotions onto the brain: Controversies and consensus. Trends in Cognitive Sciences. 2012;16:458–466. doi: 10.1016/j.tics.2012.07.006. [DOI] [PubMed] [Google Scholar]

- Hariri AR, Bookheimer SY, Mazziotta JC. Modulating emotional responses: Effects of a neocortical network on the limbic system. Neuroreport. 2000;11:43–48. doi: 10.1097/00001756-200001170-00009. [DOI] [PubMed] [Google Scholar]

- Hodges JR, Patterson K. Semantic dementia: A unique clinicopathological syndrome. The Lancet Neurology. 2007;6:1004–1014. doi: 10.1016/S1474-4422(07)70266-1. [DOI] [PubMed] [Google Scholar]

- Hoffman P, Jefferies B, Ralph ML. Special issue of Neuropsychologia: Semantic cognition. Neuropsychologia. 2015;76:1–3. doi: 10.1016/j.neuropsychologia.2015.09.011. [DOI] [PubMed] [Google Scholar]

- Jackson JC, Watts J, Henry TR, List J-M, Forkel R, Mucha PJ, Greenhill SJ, Gray RD, Lindquist KA. Emotion semantics show both cultural variation and universal structure. Science. 2019;366(6472):1517–1522. doi: 10.1126/science.aaw8160. [DOI] [PubMed] [Google Scholar]

- Jackson RL, Hoffman P, Pobric G, Ralph MAL. The semantic network at work and rest: Differential connectivity of anterior temporal lobe subregions. The Journal of Neuroscience. 2016;36:1490–1501. doi: 10.1523/JNEUROSCI.2999-15.2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jastorff J, De Winter FL, Van den Stock J, Vandenberghe R, Giese MA, Vandenbulcke M. Functional dissociation between anterior temporal lobe and inferior frontal gyrus in the processing of dynamic body expressions: Insights from behavioral variant frontotemporal dementia. Human Brain Mapping. 2016;37:4472–4486. doi: 10.1002/hbm.23322. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kassam KS, Markey AR, Cherkassky VL, Loewenstein G, Just MA. Identifying emotions on the basis of neural activation. PLoS One. 2013;8:e66032. doi: 10.1371/journal.pone.0066032. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kay P, Kempton W. What is the Sapir-Whorf hypothesis? American Anthropologist. 1984;86:65–79. [Google Scholar]

- Kirkland T, Cunningham WA. Mapping emotions through time: How affective trajectories inform the language of emotion. Emotion. 2012;12:268–282. doi: 10.1037/a0024218. [DOI] [PubMed] [Google Scholar]

- Kragel PA, LaBar KS. Multivariate neural biomarkers of emotional states are categorically distinct. Social Cognitive and Affective Neuroscience. 2015;10:1437–1448. doi: 10.1093/scan/nsv032. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kragel PA, LaBar KS. Decoding the nature of emotion in the brain. Trends in Cognitive Sciences. 2016;20:444–455. doi: 10.1016/j.tics.2016.03.011.

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proceedings of the National Academy of Sciences of the United States of America. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103.

- Lane RD, Schwartz GE. Levels of emotional awareness: A cognitive-developmental theory and its application to psychopathology. The American Journal of Psychiatry. 1987;144(2):133–143. doi: 10.1176/ajp.144.2.133.

- Lane RD, Weihs KL, Herring A, Hishaw A, Smith R. Affective agnosia: Expansion of the alexithymia construct and a new opportunity to integrate and extend Freud's legacy. Neuroscience and Biobehavioral Reviews. 2015;55:594–611. doi: 10.1016/j.neubiorev.2015.06.007.

- LeDoux JE. The slippery slope of fear. Trends in Cognitive Sciences. 2013;17:155–156. doi: 10.1016/j.tics.2013.02.004.

- LeDoux JE, Pine DS. Using neuroscience to help understand fear and anxiety: A two-system framework. American Journal of Psychiatry. 2016;173:1083–1093. doi: 10.1176/appi.ajp.2016.16030353.

- Leshinskaya A, Caramazza A. For a cognitive neuroscience of concepts: Moving beyond the grounding issue. Psychonomic Bulletin & Review. 2016;23:991–1001. doi: 10.3758/s13423-015-0870-z.

- Lieberman MD. Why symbolic processing of affect can disrupt negative affect: Social cognitive and affective neuroscience investigations. In: Todorov A, Fiske ST, Prentice DA, editors. Social neuroscience: Toward understanding the underpinnings of the social mind. Oxford series in social cognition and social neuroscience. Oxford University Press; 2011. pp. 188–209.

- Lieberman MD, Eisenberger NI, Crockett MJ, Tom SM, Pfeifer JH, Way BM. Putting feelings into words: Affect labeling disrupts amygdala activity in response to affective stimuli. Psychological Science. 2007;18:421–428. doi: 10.1111/j.1467-9280.2007.01916.x.

- Lindquist KA, Barrett LF. Constructing emotion: The experience of fear as a conceptual act. Psychological Science. 2008;19:898–903. doi: 10.1111/j.1467-9280.2008.02174.x.

- Lindquist KA, Barrett LF. A functional architecture of the human brain: Emerging insights from the science of emotion. Trends in Cognitive Sciences. 2012;16:533–540. doi: 10.1016/j.tics.2012.09.005.

- Lindquist KA, Barrett LF, Bliss-Moreau E, Russell JA. Language and the perception of emotion. Emotion. 2006;6:125–138. doi: 10.1037/1528-3542.6.1.125.

- Lindquist KA, Gendron M. What’s in a word? Language constructs emotion perception. Emotion Review. 2013;5:66–71.

- Lindquist KA, Gendron M, Barrett LF, Dickerson BC. Emotion perception, but not affect perception, is impaired with semantic memory loss. Emotion. 2014;14:375–387. doi: 10.1037/a0035293.

- Lindquist KA, Gendron M, Satpute AB. Language and emotion. In: Lewis M, Haviland-Jones J, Barrett LF, editors. Handbook of emotions. 4th ed. New York, NY: The Guilford Press; 2016. pp. 579–594.

- Lindquist KA, MacCormack JK, Shablack H. The role of language in emotion: Predictions from psychological constructionism. Frontiers in Psychology. 2015;6:444. doi: 10.3389/fpsyg.2015.00444.

- Lindquist KA, Satpute AB, Gendron M. Does language do more than communicate emotion? Current Directions in Psychological Science. 2015;24:99–108. doi: 10.1177/0963721414553440.

- Lindquist KA, Satpute AB, Wager TD, Weber J, Barrett LF. The brain basis of positive and negative affect: Evidence from a meta-analysis of the human neuroimaging literature. Cerebral Cortex. 2016;26:1910–1922. doi: 10.1093/cercor/bhv001.

- Lindquist KA, Wager TD, Kober H, Bliss-Moreau E, Barrett LF. The brain basis of emotion: A meta-analytic review. Behavioral and Brain Sciences. 2012;35(3):121–143. doi: 10.1017/S0140525X11000446.

- Lupyan G, Clark A. Words and the world: Predictive coding and the language-perception-cognition interface. Current Directions in Psychological Science. 2015;24:279–284.

- Lupyan G, Thompson-Schill SL. The evocative power of words: Activation of concepts by verbal and nonverbal means. Journal of Experimental Psychology: General. 2012;141(1):170–186. doi: 10.1037/a0024904.

- MacCormack JK, Lindquist KA. Bodily contributions to emotion: Schachter’s legacy for a psychological constructionist view on emotion. Emotion Review. 2017;9:36–45.

- MacLean PD. The triune brain in evolution: Role in paleocerebral functions. New York, NY: Plenum Press; 1990.

- Mauss IB, Robinson MD. Measures of emotion: A review. Cognition and Emotion. 2009;23(2):209–237. doi: 10.1080/02699930802204677.

- Moors A, Scherer KR. The role of appraisal in emotion. In: Robinson MD, Watkins E, Harmon-Jones E, editors. Handbook of cognition and emotion. The Guilford Press; 2013. pp. 135–155.

- Mummery CJ, Patterson K, Price C, Ashburner J, Frackowiak R, Hodges JR. A voxel-based morphometry study of semantic dementia: Relationship between temporal lobe atrophy and semantic memory. Annals of Neurology. 2000;47(1):36–45.

- Mur M, Bandettini PA, Kriegeskorte N. Revealing representational content with pattern-information fMRI: An introductory guide. Social Cognitive and Affective Neuroscience. 2009. doi: 10.1093/scan/nsn044.

- Niedenthal PM. Embodying emotion. Science. 2007;316(5827):1002–1005. doi: 10.1126/science.1136930.

- Niedenthal PM, Barsalou LW, Winkielman P, Krauth-Gruber S, Ric F. Embodiment in attitudes, social perception, and emotion. Personality and Social Psychology Review. 2005;9(3):184–211. doi: 10.1207/s15327957pspr0903_1.

- Nook EC, Lindquist KA, Zaki J. A new look at emotion perception: Concepts speed and shape facial emotion recognition. Emotion. 2015;15:569–578. doi: 10.1037/a0039166.

- Nook EC, Satpute AB, Ochsner KN. Emotion naming impedes emotion regulation. Affective Science. In press.

- Nummenmaa L, Saarimäki H. Emotions as discrete patterns of systemic activity. Neuroscience Letters. 2017;693:3–8. doi: 10.1016/j.neulet.2017.07.012.

- Oosterwijk S, Lindquist KA, Adebayo M, Barrett LF. The neural representation of typical and atypical experiences of negative images: Comparing fear, disgust and morbid fascination. Social Cognitive and Affective Neuroscience. 2016;11:11–22. doi: 10.1093/scan/nsv088.

- Oosterwijk S, Lindquist KA, Anderson E, Dautoff R, Moriguchi Y, Barrett LF. States of mind: Emotions, body feelings, and thoughts share distributed neural networks. Neuroimage. 2012;62:2110–2128. doi: 10.1016/j.neuroimage.2012.05.079.

- Oosterwijk S, Snoek L, Rotteveel M, Barrett LF, Scholte HS. Shared states: Using MVPA to test neural overlap between self-focused emotion imagery and other-focused emotion understanding. Social Cognitive and Affective Neuroscience. 2017;12(7):1025–1035. doi: 10.1093/scan/nsx037.

- Panksepp J. Affective neuroscience: The foundations of human and animal emotions. New York: Oxford University Press; 1998.

- Panksepp J. The basic emotional circuits of mammalian brains: Do animals have affective lives? Neuroscience and Biobehavioral Reviews. 2011;35(9):1791–1804. doi: 10.1016/j.neubiorev.2011.08.003.

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nature Reviews Neuroscience. 2007;8:976–987. doi: 10.1038/nrn2277.

- Peelen MV, Atkinson AP, Vuilleumier P. Supramodal representations of perceived emotions in the human brain. Journal of Neuroscience. 2010;30(30):10127–10134. doi: 10.1523/JNEUROSCI.2161-10.2010.

- Pessoa L. On the relationship between emotion and cognition. Nature Reviews Neuroscience. 2008;9(2):148–158. doi: 10.1038/nrn2317.

- Pessoa L. Understanding emotion with brain networks. Current Opinion in Behavioral Sciences. 2018;19:19–25. doi: 10.1016/j.cobeha.2017.09.005.

- Pessoa L, Adolphs R. Emotion processing and the amygdala: From a 'low road' to 'many roads' of evaluating biological significance. Nature Reviews Neuroscience. 2010;11:773–783. doi: 10.1038/nrn2920.

- Poldrack RA. Can cognitive processes be inferred from neuroimaging data? Trends in Cognitive Sciences. 2006;10:59–63. doi: 10.1016/j.tics.2005.12.004.

- Ralph MAL. Neurocognitive insights on conceptual knowledge and its breakdown. Philosophical Transactions of the Royal Society of London B: Biological Sciences. 2014;369(1634):20120392. doi: 10.1098/rstb.2012.0392.

- Raz G, Winetraub Y, Jacob Y, Kinreich S, Maron-Katz A, Shaham G, Podlipsky I, Gilam G, Soreq E, Hendler T. Portraying emotions at their unfolding: A multilayered approach for probing dynamics of neural networks. Neuroimage. 2012;60(2):1448–1461. doi: 10.1016/j.neuroimage.2011.12.084.

- Raz G, Touroutoglou A, Wilson-Mendenhall C, Gilam G, Lin T, Gonen T, Jacob Y, Atzil S, Admon R, Bleich-Cohen M, Maron-Katz A, Hendler T, Barrett LF. Functional connectivity dynamics during film viewing reveal common networks for different emotional experiences. Cognitive, Affective, and Behavioral Neuroscience. 2016;16:709–723.

- Roberson D, Davidoff J, Braisby N. Similarity and categorisation: Neuropsychological evidence for a dissociation in explicit categorisation tasks. Cognition. 1999;71:1–42. doi: 10.1016/s0010-0277(99)00013-x.

- Rosen JB, Donley MP. Animal studies of amygdala function in fear and uncertainty: Relevance to human research. Biological Psychology. 2006;73:49–60. doi: 10.1016/j.biopsycho.2006.01.007.

- Russell JA. A circumplex model of affect. Journal of Personality and Social Psychology. 1980;39:1161–1178.

- Russell JA. Culture and the categorization of emotions. Psychological Bulletin. 1991;110:426–450. doi: 10.1037/0033-2909.110.3.426.

- Russell JA, Bachorowski J-A, Fernández-Dols J-M. Facial and vocal expressions of emotion. Annual Review of Psychology. 2003;54:329–349. doi: 10.1146/annurev.psych.54.101601.145102.

- Russell JA, Widen SC. A label superiority effect in children's categorization of facial expressions. Social Development. 2002;11:30–52.

- Saarimäki H, Gotsopoulos A, Jääskeläinen IP, Lampinen J, Vuilleumier P, Hari R, Sams M, Nummenmaa L. Discrete neural signatures of basic emotions. Cerebral Cortex. 2016;26(6):2563–2573. doi: 10.1093/cercor/bhv086.

- Said CP, Moore CD, Engell AD, Todorov A, Haxby JV. Distributed representations of dynamic facial expressions in the superior temporal sulcus. Journal of Vision. 2010;10:11. doi: 10.1167/10.5.11.

- Satpute AB, Kragel PA, Barrett LF, Wager TD, Bianciardi M. Deconstructing arousal into wakeful, autonomic, and affective varieties. Neuroscience Letters. 2019;693:19–28. doi: 10.1016/j.neulet.2018.01.042.

- Satpute AB, Lindquist KA. The default mode network's role in emotion. Trends in Cognitive Sciences. 2019;23:851–864. doi: 10.1016/j.tics.2019.07.003.

- Satpute AB, Nook EC, Cakar ME. The role of language in the construction of emotion and memory: A predictive coding view. In: Lane RD, Nadel L, editors. Neuroscience of enduring change: Implications for psychotherapy. New York, NY: Oxford University Press, USA; 2020. pp. 56–88.

- Satpute AB, Nook EC, Narayanan S, Weber J, Shu J, Ochsner KN. Emotions in “black or white” or shades of gray? How we think about emotion shapes our perception and neural representation of emotion. Psychological Science. 2016;27:1428–1442. doi: 10.1177/0956797616661555.

- Satpute AB, Shu J, Weber J, Roy M, Ochsner KN. The functional neural architecture of self-reports of affective experience. Biological Psychiatry. 2012;73:631–638. doi: 10.1016/j.biopsych.2012.10.001.

- Schachter S, Singer JE. Cognitive, social, and physiological determinants of emotional state. Psychological Review. 1962;69:379–399. doi: 10.1037/h0046234.

- Scherer KR, Moors A. The emotion process: Event appraisal and component differentiation. Annual Review of Psychology. 2019;70:719–745. doi: 10.1146/annurev-psych-122216-011854.

- Schwartz GE, Davidson RJ, Maer F. Right hemisphere lateralization for emotion in the human brain: Interactions with cognition. Science. 1975;190(4211):286–288. doi: 10.1126/science.1179210.

- Shablack H, Lindquist KA. The role of language in the development of emotion. In: LoBue V, Perez-Edgar K, Buss K, editors. Handbook of emotion development. In press.