Abstract

Situational awareness (SA), at both the individual and team levels, plays a critical role in the operating room (OR). During the pre-incision time-out, the entire OR team comes together to deploy the surgical safety checklist (SSC). Worldwide, implementation of the SSC has been shown to reduce intraoperative complications and mortality among surgical patients. In this study, we investigated the feasibility of applying computer vision analysis to surgical videos to extract team motion metrics that could differentiate teams with good SA from those with poor SA during the pre-incision time-out. We used a validated observation-based tool to assess SA and computer vision software to measure body position and motion patterns in the OR. Our findings showed that it is feasible to extract surgical team motion metrics from video captured by off-the-shelf OR cameras. Entropy, as a measure of the level of team organization, was able to distinguish surgical teams with good SA from those with poor SA. These findings corroborate existing studies showing that computer vision-based motion metrics have the potential to be integrated with traditional observation-based performance assessments in the OR.

Keywords: situational awareness, computer vision, cardiac surgery, teams

I. INTRODUCTION

Non-technical skills (NTS), such as teamwork, situational awareness, leadership, and communication, play a critical role in the operating room (OR) [1]. An increasing body of literature has shown that NTS are as important as technical skills for ensuring patient safety and improving surgical performance in the OR [2]. Among the different social and cognitive skills, situational awareness (SA) is particularly important in the surgical environment, showing a significant association with medical errors and adverse events suffered by surgical patients [3].

As in other high-risk industries, surgery has adopted safety checklists and time-out procedures as safety measures to improve the quality of patient care and enhance the performance of the surgical team [3]. In fact, several studies across the globe have demonstrated the positive impact of implementing the time-out and surgical checklist on morbidity and mortality [4]. During the pre-incision time-out, the entire OR team comes together to deploy the surgical safety checklist (SSC), which requires all team members to maintain adequate SA while completing the SSC.

Measuring SA in the surgical setting is crucial for understanding the many factors influencing individual and team SA. It is also relevant to potential computer-based solutions that could augment surgical team cognition by enhancing SA during high-stress and complex situations [5,6]. To date, the vast majority of SA measures are either self-report tools or observation-based assessments, which do not allow for objective measurements in real time [7].

Computer vision is a branch of artificial intelligence that enables computer systems to extract meaningful information from digital images and videos. Computer vision has increasingly been used in surgery to measure individual and team performance, leveraging video recordings easily captured via off-the-shelf cameras [8,9].

The objective of this pilot study was to investigate the feasibility of applying computer vision analysis on surgical videos to extract team motion metrics that could differentiate teams with good SA from those with poor SA during the pre-incision time-out.

II. METHODS

A. Participants

This research was approved by the Institutional Review Board at VA Boston Healthcare System and Harvard Medical School (IRB#3047). Informed consent was obtained from all participants, which included patients and all OR staff involved with the procedures. Data were collected during 30 non-emergent cardiac surgery procedures.

B. Procedures

A human factors expert observed videos from 30 cardiac surgery operations and rated the surgical team’s NTS during the pre-incision time-out using the validated Non-Technical Skills for Surgeons (NOTSS) assessment tool [10]. The NOTSS tool has four categories: situational awareness, teamwork and communication, leadership, and decision-making. The SA category has three behavioral elements: gathering information, understanding information, and projecting and anticipating future state. The videos were recorded using a GoPro camera (HERO 4) capturing a wide view of the entire OR. Surgical teams were rated during the time-out using a 1–4 Likert scale. The SA rating was used to select the teams below the first quartile (“Poor SA” group) and the teams above the third quartile (“Good SA” group).

The open-source OpenPose software (version 1.4.0) [11] was used to extract 2D body keypoints from all OR team members at 30 frames per second. The architecture of this software uses a two-branch, multi-stage convolutional neural network (CNN) in which each stage in the first branch predicts 2D confidence maps of body part locations, and each stage in the second branch predicts Part Affinity Fields (PAFs), which encode the degree of association between parts. The OpenPose algorithms were evaluated on two benchmarks for multi-person pose estimation: the MPII human multi-person dataset and the COCO 2016 keypoints challenge dataset. Both datasets contain images collected from diverse real-life scenarios involving crowding, scale variation, occlusion, and contact. The OpenPose system exceeded previous state-of-the-art systems on these benchmarks [11].
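To illustrate how a neck keypoint might be read from pose-estimation output, the following Python sketch parses one frame of JSON. It assumes the per-frame layout documented for OpenPose, in which each detected person carries a flat `pose_keypoints_2d` list of (x, y, confidence) triplets and the neck is keypoint index 1. The function name `neck_positions`, the `min_confidence` threshold, and the example values are hypothetical and are not part of the study's pipeline.

```python
import json

NECK_INDEX = 1  # assumed neck index in OpenPose's BODY_25 and COCO layouts


def neck_positions(frame_json, min_confidence=0.1):
    """Return (x, y) neck coordinates for each person detected in one frame.

    min_confidence is a hypothetical filter, not a value from the study.
    """
    frame = json.loads(frame_json)
    positions = []
    for person in frame.get("people", []):
        keypoints = person.get("pose_keypoints_2d", [])
        start = 3 * NECK_INDEX
        if len(keypoints) < start + 3:
            continue  # this person has no neck entry
        x, y, confidence = keypoints[start:start + 3]
        if confidence >= min_confidence:
            positions.append((x, y))
    return positions


# Invented single-frame output with two detected people; only the first two
# keypoints (nose, neck) are listed to keep the example short.
example_frame = json.dumps({
    "people": [
        {"pose_keypoints_2d": [100.0, 50.0, 0.9, 110.0, 80.0, 0.8]},
        {"pose_keypoints_2d": [300.0, 60.0, 0.9, 305.0, 95.0, 0.05]},
    ]
})
print(neck_positions(example_frame))
```

In this invented frame the second person's neck falls below the confidence threshold and is skipped, so only one position is returned. Tracking the same person across frames is a separate problem that this sketch does not address.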

The x and y coordinates of the neck keypoint (a surrogate for the entire body) were used to calculate the Euclidean distance between each team member’s neck and a reference point (x = 0, y = 0). The displacement of the neck keypoint between the previous frame and the current frame was then calculated. The per-frame displacement (in pixels), averaged across all team members, was subsequently averaged over 1-second epochs. For each second, the team displacement was classified into one of four states (S1, S2, S3, S4) according to the quartile of the entire motion data distribution in which that value fell. The distribution of these states over time was quantified by calculating Shannon’s entropy (H) in bits, using a 30-second sliding window updated every second. The theoretical maximum entropy for four unique symbols randomly distributed is H = 2.0 bits. Restricted symbol expression corresponds to low entropy, which indicates a higher level of organization in the team motion [12]. Entropy was calculated with the ‘entropy’ package in the R programming language, using the RStudio software.
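The steps above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' R implementation. Displacement is read here as the absolute frame-to-frame change in each neck keypoint's distance from the reference point, which is one possible interpretation of the description. Entropy is the plug-in estimate H = -Σ p_i log2(p_i) over the states in each window; the estimator used with the R ‘entropy’ package is not specified in the text. All function names and the input layout are hypothetical.

```python
import math
from collections import Counter
from statistics import mean, quantiles

FPS = 30       # frames per second, as stated in the text
WINDOW_S = 30  # sliding-window length in seconds, as stated in the text


def team_displacement_per_second(neck_tracks, fps=FPS):
    """Average team displacement (pixels per frame) for each 1-second epoch.

    neck_tracks holds one equal-length list of per-frame (x, y) neck
    coordinates per team member (a hypothetical input layout).
    """
    # Distance of each neck keypoint from the reference point (0, 0).
    distances = [[math.hypot(x, y) for x, y in track] for track in neck_tracks]
    n_frames = len(distances[0])
    # Assumed reading: displacement is the absolute frame-to-frame change in
    # that distance, averaged across team members.
    per_frame = [
        mean(abs(d[t] - d[t - 1]) for d in distances)
        for t in range(1, n_frames)
    ]
    # Average over non-overlapping 1-second epochs; a trailing partial epoch
    # is dropped.
    return [
        mean(per_frame[i:i + fps])
        for i in range(0, len(per_frame) - fps + 1, fps)
    ]


def to_states(values, cuts=None):
    """Map each value to a state 1..4 (S1..S4) by quartile.

    cuts may supply (q1, q2, q3) from a pooled distribution; otherwise the
    quartiles of values itself are used.
    """
    q1, q2, q3 = cuts if cuts is not None else quantiles(values, n=4)
    return [1 if v <= q1 else 2 if v <= q2 else 3 if v <= q3 else 4
            for v in values]


def shannon_entropy(symbols):
    """Plug-in Shannon entropy of a symbol sequence, in bits."""
    n = len(symbols)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(symbols).values())


def sliding_entropy(states, window=WINDOW_S):
    """Entropy of each window-second span, advancing one second at a time."""
    return [shannon_entropy(states[i:i + window])
            for i in range(len(states) - window + 1)]


# Synthetic check: four equally frequent states reach the maximum entropy,
# and a constant state stream gives the minimum.
print(shannon_entropy([1, 2, 3, 4]))
print(shannon_entropy([1, 1, 1, 1]))
```

Passing pooled cut points through `cuts` reflects the statement that states were defined on the entire motion data distribution; computing quartiles separately for each team would make every team's state frequencies nearly uniform and mask differences between groups.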

C. Statistical Analysis

Data distribution was tested for normality using the Kolmogorov–Smirnov test. Because the data were not normally distributed, they are summarized as median (first – third quartile). The non-parametric Mann–Whitney U test was used to compare the two groups (poor SA vs. good SA).
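As a rough illustration of this kind of analysis, the Python sketch below summarizes a sample as median and quartiles and computes a two-sided Mann–Whitney U test with the normal approximation, without tie or continuity correction. It is not the authors' code, and statistical packages may report slightly different p-values because they apply corrections or exact methods. The function names and the sample values are invented for the example and are not study data.

```python
import math
from statistics import median, quantiles


def summarize(values):
    """Return (median, first quartile, third quartile)."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return median(values), q1, q3


def mann_whitney_u(a, b):
    """Two-sided Mann-Whitney U test via the normal approximation.

    No tie or continuity correction is applied, so this is only a sketch.
    """
    # U for sample a: count pairs where a's value exceeds b's (ties count 0.5).
    u_a = sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)
    n_a, n_b = len(a), len(b)
    u = min(u_a, n_a * n_b - u_a)
    mu = n_a * n_b / 2
    sigma = math.sqrt(n_a * n_b * (n_a + n_b + 1) / 12)
    z = (u - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return u, p


# Invented entropy-like values for two groups (not study data).
group_a = [1.2, 1.3, 1.4]
group_b = [1.6, 1.7, 1.8]
print(summarize(group_a))
print(mann_whitney_u(group_a, group_b))
```

With samples this small the normal approximation is crude; an exact test would be the more appropriate choice in practice.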

III. RESULTS

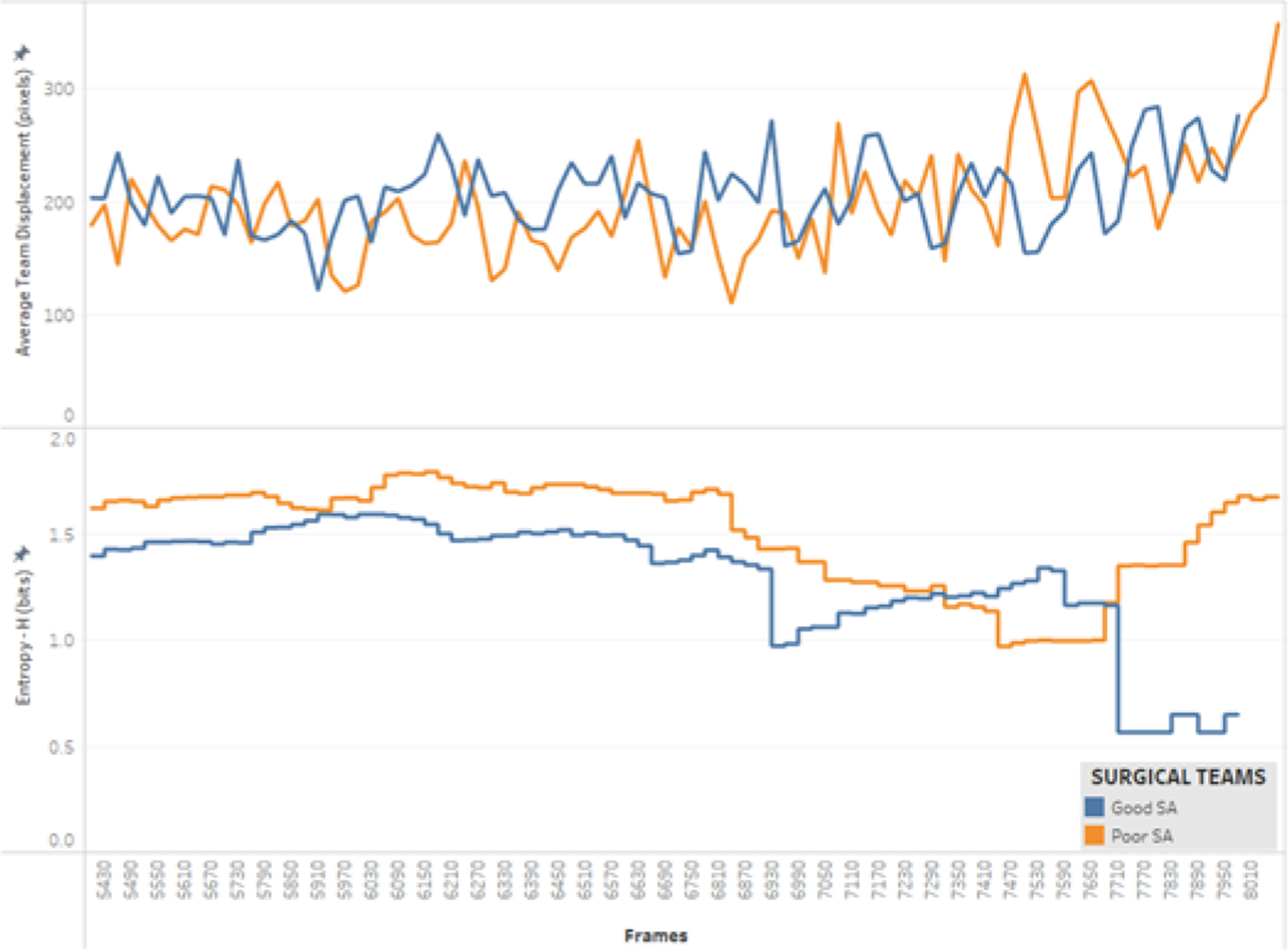

From a total of 30 cardiac procedures, 14 cardiac surgery teams (those in the 1st and 4th quartiles for SA scores) were included in this analysis. The median SA score was 2.0 in the ‘Poor SA’ teams (N = 7) and 4.0 in the ‘Good SA’ teams (N = 7). ‘Good SA’ teams presented team displacement of 180 (127 – 267) pixels and entropy of 1.6 (1.2 – 1.8) bits. ‘Poor SA’ teams presented team displacement of 190 (128 – 241) pixels and entropy of 1.7 (1.5 – 1.8) bits. The difference in team displacement between groups was not statistically significant (p = 0.444), whereas the difference in entropy was statistically significant (p < 0.001). Figure 1 compares the variation of the motion metrics over time between groups.

Fig. 1. Variation of average team displacement and entropy over time (30 frames = 1 second).

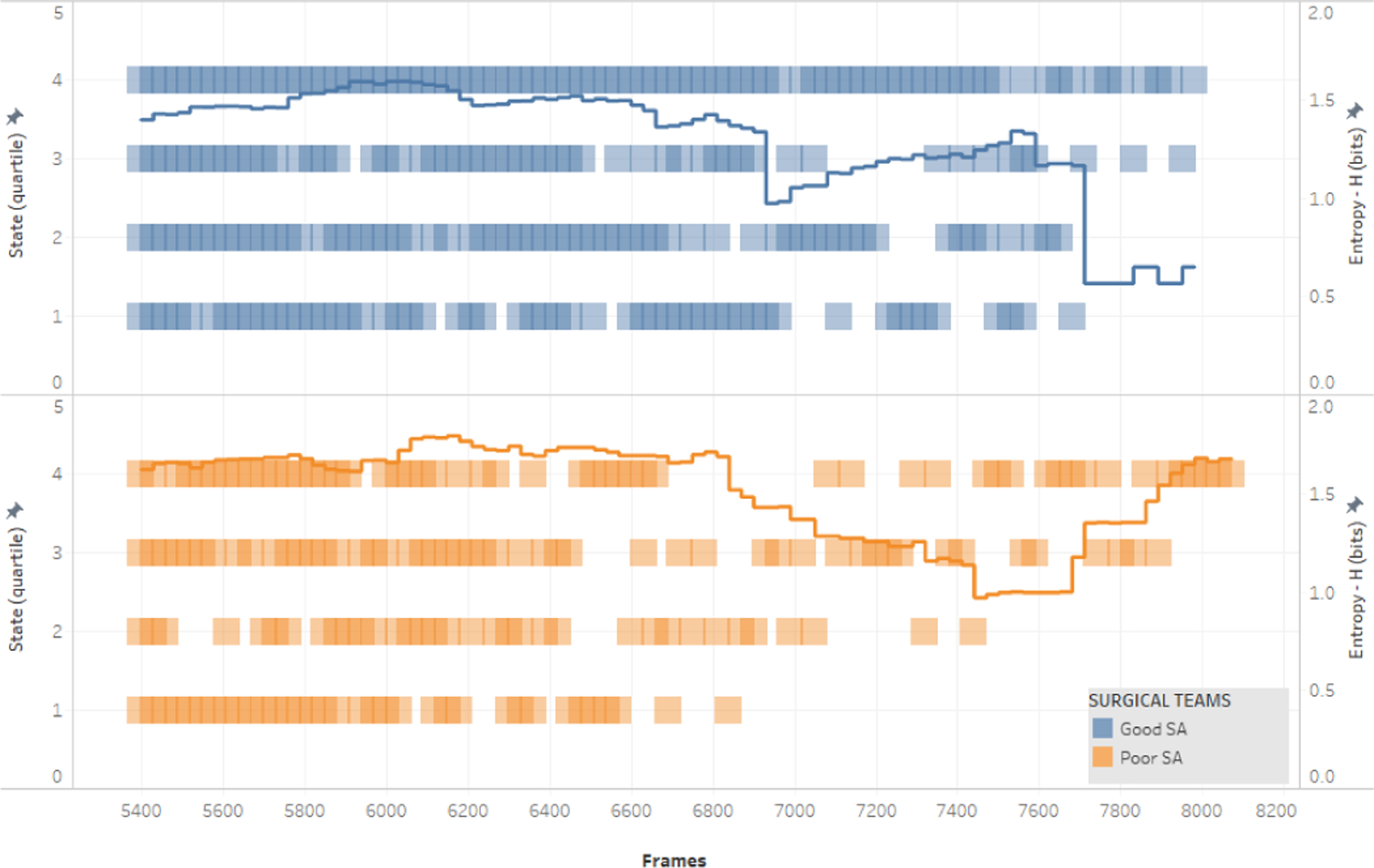

Figure 2 displays the difference in motion state patterns (S1, S2, S3, S4) between groups over time. ‘Good SA’ teams presented more restricted and more continuous state streams, leading to lower entropy compared with ‘Poor SA’ teams.

Fig. 2. Distribution of motion states (S1, S2, S3, S4) and entropy over time (30 frames = 1 second).

IV. DISCUSSION

Our findings showed that it is feasible to extract surgical team motion metrics from video captured by off-the-shelf OR cameras and processed by open-source computer vision software. Entropy, as a measure of the level of team motion organization, was able to distinguish the two performance groups with respect to SA as assessed by a validated observational tool (NOTSS). These findings corroborate existing studies [8,9] showing that computer vision-based motion metrics have the potential to be integrated with traditional observation-based human performance assessments in the OR, with the advantage of providing objective metrics that can be extracted in real time while the surgical operation is under way.

The use of entropy measures to infer team cognitive states in surgical teams has also been demonstrated with team members’ physiological data instead of motion data. Dias et al. [13] used heart rate variability parameters from surgeons, anesthesiologists, and perfusionists during cardiac surgery to measure cognitive load at the team level. In that study, the entropy of the stream of team cognitive states over time, based on physiological parameters, was sensitive enough to detect variations in team cognitive load and uncertainty. Furthermore, there is extensive research on the neurodynamic organization of teams in the submarine and healthcare industries using other physiological signals, such as electroencephalography [14–18]. The findings of our study using motion pattern metrics may provide additional insights for the field, suggesting that multiple types of human behavioral and cognitive data can be used to infer cognitive constructs such as SA at the individual and team levels.

SA metrics based on computer vision can also be used in computer-based cognitive systems that aim to augment the cognitive capabilities of the surgical team [6], particularly in situations prone to errors, such as emergencies and/or complex operations. Previous studies have shown the potential to integrate motion analysis metrics from surgical teams with psychophysiological parameters, such as heart rate variability as a proxy for cognitive load [13]. A multi-modal approach could be used to develop context- and cognitive-aware systems able to monitor the surgical team’s cognitive states and the surgical workflow, with the capability of providing real-time corrective feedback [19, 20]. This type of cognitive aid could enhance SA and allow courses of action to be corrected, which ultimately has the potential to improve patient safety and prevent medical errors and surgical adverse events [21, 22].

Acknowledgment

This work was supported by the National Heart, Lung, and Blood Institute of the National Institutes of Health [R01HL126896, R56HL157457]. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. We would like to thank the clinical staff from the Veterans Affairs (VA) Boston Healthcare System for their valuable insights and collaboration in this study.

Contributor Information

Roger D. Dias, Department of Emergency Medicine, Harvard Medical School, Brigham and Women’s Hospital, Boston, MA, USA

Lauren R. Kennedy-Metz, Department of Surgery, Harvard Medical School, VA Boston Healthcare System, West Roxbury, MA, USA

Steven J. Yule, Department of Clinical Surgery, University of Edinburgh, Edinburgh, Scotland

Matthew Gombolay, College of Computing, Georgia Institute of Technology, Atlanta, GA, USA

Marco A. Zenati, Department of Surgery, Harvard Medical School, VA Boston Healthcare System, West Roxbury, MA, USA

References

- [1]. Ebnali M, Lamb R, Fathi R, Hulme K. Virtual reality tour for first-time users of highly automated cars: Comparing the effects of virtual environments with different levels of interaction fidelity. Appl Ergon 2021;90: 103226.

- [2]. Paterson-Brown S, Tobin S, Yule S. Assessing non-technical skills in the operating room. Enhancing Surgical Performance 2015. pp. 169–184. doi: 10.1201/b18702-10

- [3]. Flin R, Youngson GG, Yule S. Enhancing Surgical Performance: A Primer in Non-technical Skills. CRC Press; 2015.

- [4]. A Surgical Safety Checklist to Reduce Morbidity and Mortality in a Global Population. Clinical Otolaryngology 2010. pp. 216–216. doi: 10.1111/j.1749-4486.2009.02137.x

- [5]. Graafland M, Schraagen JMC, Boermeester MA, Bemelman WA, Schijven MP. Training situational awareness to reduce surgical errors in the operating room. British Journal of Surgery 2014. pp. 16–23. doi: 10.1002/bjs.9643

- [6]. Dias RD, Yule SJ, Zenati MA. Augmented Cognition in the Operating Room. In: Atallah S, editor. Digital Surgery. Cham: Springer International Publishing; 2021. pp. 261–268.

- [7]. Wickens CD. Situation Awareness: Review of Mica Endsley’s 1995 Articles on Situation Awareness Theory and Measurement. Human Factors: The Journal of the Human Factors and Ergonomics Society 2008. pp. 397–403. doi: 10.1518/001872008x288420

- [8]. Dias RD, Shah JA, Zenati MA. Artificial intelligence in cardiothoracic surgery. Minerva Cardioangiologica 2020. doi: 10.23736/s0026-4725.20.05235-4

- [9]. Kennedy-Metz LR, Mascagni P, Torralba A, Dias RD, Perona P, Shah JA, et al. Computer Vision in the Operating Room: Opportunities and Caveats. IEEE Trans Med Robot Bionics 2021;3: 2–10.

- [10]. Yule S, Gupta A, Gazarian D, Geraghty A, Smink DS, Beard J, et al. Construct and criterion validity testing of the Non-Technical Skills for Surgeons (NOTSS) behaviour assessment tool using videos of simulated operations. Br J Surg 2018;105: 719–727.

- [11]. Cao Z, Simon T, Wei S-E, Sheikh Y. Realtime Multi-person 2D Pose Estimation Using Part Affinity Fields. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2017. doi: 10.1109/cvpr.2017.143

- [12]. Dias RD, Zenati MA, Stevens R, Gabany JM, Yule SJ. Physiological synchronization and entropy as measures of team cognitive load. Journal of Biomedical Informatics 2019. p. 103250. doi: 10.1016/j.jbi.2019.103250

- [13]. Dias RD, Yule SJ, Kennedy-Metz L, Zenati MA. Psychophysiological Data and Computer Vision to Assess Cognitive Load and Team Dynamics in Cardiac Surgery. Available: http://www.ipcai2019.org/pdf/Long_Abstracts/Diaz_etal_IPCAI_2019_Long_Abstract.pdf

- [14]. Stevens RH, Galloway TL. Toward a quantitative description of the neurodynamic organizations of teams. Soc Neurosci 2014;9: 160–173.

- [15]. Stevens R, Galloway T, Halpin D, Willemsen-Dunlap A. Healthcare Teams Neurodynamically Reorganize When Resolving Uncertainty. Entropy 2016;18: 427.

- [16]. Galloway T, Stevens R, Yule S, Gorman J, Willemsen-Dunlap A, Dias R. Moving our Understanding of Team Dynamics from the Simulation Room to the Operating Room. Proc Hum Fact Ergon Soc Annu Meet 2019;63: 227–229.

- [17]. Stevens RH, Galloway TL. Are Neurodynamic Organizations A Fundamental Property of Teamwork? Front Psychol 2017;8: 644.

- [18]. Stevens R, Galloway T, Wang P, Berka C, Tan V, Wohlgemuth T, et al. Modeling the neurodynamic complexity of submarine navigation teams. Comput Math Organ Theory 2013;19: 346–369.

- [19]. Dias RD, Conboy HM, Gabany JM, Clarke LA, Osterweil LJ, Avrunin GS, et al. Development of an Interactive Dashboard to Analyze Cognitive Workload of Surgical Teams During Complex Procedural Care. IEEE Int Interdiscip Conf Cogn Methods Situat Aware Decis Support 2018;2018: 77–82.

- [20]. Zenati MA, Kennedy-Metz L, Dias RD. Cognitive Engineering to Improve Patient Safety and Outcomes in Cardiothoracic Surgery. Semin Thorac Cardiovasc Surg 2019. doi: 10.1053/j.semtcvs.2019.10.011

- [21]. Avrunin GS, Clarke LA, Conboy HM, Osterweil LJ, Dias RD, Yule SJ, et al. Toward Improving Surgical Outcomes by Incorporating Cognitive Load Measurement into Process-Driven Guidance. Softw Eng Healthc Syst SEHS IEEE ACM Int Workshop 2018;2018: 2–9.

- [22]. Tarola CL, Hirji S, Yule SJ, Gabany JM, Zenati A, Dias RD, et al. Cognitive Support to Promote Shared Mental Models during Safety-Critical Situations in Cardiac Surgery (Late Breaking Report). 2018 IEEE Conf Cogn Comput Asp Situat Manag CogSIMA (2018) 2018;2018: 165–167.