Abstract

Mobile device proficiency is increasingly required to participate in society. Unfortunately, there still exists a digital divide between younger and older adults, especially with respect to mobile devices (i.e., tablet computers and smartphones). Training is an important goal to ensure that older adults can reap the benefits of these devices. However, efficient/effective training depends on the ability to gauge current proficiency levels. We developed a new scale to accurately assess the mobile device proficiency of older adults: the Mobile Device Proficiency Questionnaire (MDPQ). We present and validate the MDPQ and a short 16-question version of the MDPQ (MDPQ-16). The MDPQ, its subscales, and the MDPQ-16 were found to be highly reliable and valid measures of mobile device proficiency in a large sample. We conclude that the MDPQ and MDPQ-16 may serve as useful tools for facilitating mobile device training of older adults and measuring mobile device proficiency for research purposes.

Keywords: technology, computers, mobile devices, survey, questionnaire

Introduction

As many nations experience an increase in the number and proportion of older adults that make up their population (population aging), it is increasingly important to explore the extent to which technology can help older adults maintain their independence (Charness & Boot, 2009). For example, computers and mobile devices (i.e., smartphones and tablet computers) offer reminder and calendaring systems that can help support prospective memory and facilitate medication adherence. The Internet and various mobile applications can provide access to important information and resources related to health, help individuals track and understand their own health status, and provide encouragement for engaging in activities such as physical exercise and healthy eating. Furthermore, technology can support communication with family and friends (e.g., email, Facebook, Skype), finance management (e.g., mobile banking, online tax filing), learning opportunities (e.g., online courses offered by organizations such as Coursera and Khan Academy), information gathering (e.g., Internet search engines, Wikipedia, WebMD), transportation (e.g., airfare booking websites, Uber), and access to enrichment opportunities provided by games, music, and movies (for recent discussion, see Delello & McWhorter, 2015). The potential for technology to provide assistance and enrichment to older adults is large, but unfortunately, many older adults, especially those with lower socioeconomic status (SES), disabilities, heightened technology anxiety, and low self-efficacy, have adopted technologies such as the Internet, computers, and smartphones at much lower rates than the adult population overall (Czaja et al., 2006; Smith, 2014).

Low technology adoption rates have been linked to cognitive abilities (Czaja et al., 2006), attitudinal barriers (Melenhorst, Rogers, & Bouwhuis, 2006; Mitzner et al., 2010), and poor technology design that does not take into account the knowledge, perceptual, and cognitive abilities of older adults (Stronge, Rogers, & Fisk, 2006). However, Boot and colleagues (2015) also argue that adequate training is an additional factor that needs to be considered to promote technology adoption and use. To train learners effectively and efficiently to use mobile devices such as tablet computers and smartphones, one first needs to know where training should begin. Training someone who already knows how to perform basic tablet and smartphone functions such as how to turn the device on and off, and how to type using an onscreen keyboard, would be a waste of time and frustrating for the learner. However, training someone without basic knowledge of how to navigate onscreen menus to perform the intricacies of managing files and privacy settings would be a fruitless effort. Thus, the ability to assess initial proficiency is crucial for training success. In addition to one-on-one training, knowing a learner’s current levels of proficiency is important for group training settings as well (e.g., technology training classes offered by senior centers). A measure of initial levels of proficiency can be used to form separate classes for low and high proficient individuals to ensure that their training begins at the right level and learners are not frustrated due to training being too basic or too advanced.

Boot and colleagues (2015) developed the Computer Proficiency Questionnaire (CPQ) and a short form of the CPQ (CPQ-12) to be able to obtain a quick and accurate measure of computer proficiency in older adults for research and training purposes. The CPQ focused on traditional desktop computer proficiency and on areas of computer use predicted to be important to support well-being and independence in an older adult population. For example, some subscales focused on older adults’ proficiency with respect to using computers to communicate, accessing information through the Internet, and using calendaring and reminding software. Overall, this scale was found to be both reliable and valid.

Trends in computing have created the need for a new scale. More often than ever, access to the Internet, email, and software is done through mobile devices such as tablet computers and smartphones. This trend is reflected in device ownership data from the Pew Research Center (2015). In 2015, 45% of U.S. adults owned tablet computers, substantially up from 8% in 2011. From a high of 80% in 2012, desktop/laptop computer ownership has declined to 73% in 2015. Although desktop computer sales are down and are predicted to continue to decline, predictions of a stronger dominance of the tablet over the desktop PC may have been overestimated, partly due to the fact that another mobile device has been competing with tablet computers: the smartphone (IDC Worldwide, Quarterly Smart Connected Device Tracker, 2015). Among U.S. adults, smartphone ownership has increased from 35% in 2011 to 68% in 2015. The increased popularity of mobile computing devices suggests that older adults may experience an increased need or expectation to use them. Yet currently only 32% of older adults (65+) own a tablet (compare this with 57% of 30–45 year olds) and 30% own a smartphone (compare this with 83% of 30–45 year olds; Anderson, 2015). These data provide evidence that a large digital divide exists with respect to mobile computing.

In addition to their increased presence, another point to consider is that there are inherent advantages to mobile computing compared with desktop computing, including being able to access information and services from any room of the house or even outside of the home. For example, a prospective memory software application designed to remind someone of upcoming appointments, or of when to take a medication, would be much more useful if it were implemented on a mobile platform that could be carried with the user throughout the day (compared with a PC in a fixed location). Without mobile device proficiency, older adults might be locked out of the advantages of useful technologies. For these reasons, we believe that there is a compelling reason to be able to assess the mobile device proficiency of older adults, above and beyond computer proficiency. Unless appropriate training is provided, older adults may be limited in their ability to derive the full benefits of technology by being limited to non-portable computing options.

The present study aims to validate a new tool for measuring mobile device proficiency across the life span by assessing both basic and advanced proficiencies related to smartphone and tablet use across eight subscales: (a) Mobile Device Basics, (b) Communication, (c) Data and File Storage, (d) Internet, (e) Calendar, (f) Entertainment, (g) Privacy, and (h) Troubleshooting and Software Management. These devices feature unique input methods (touchscreen menus, virtual keyboards) and design issues (small screens and buttons) that likely influence device proficiency but are not captured by the CPQ. In the following sections, we describe evidence that speaks to the reliability of this new measure, as well as the validity of the measure as it relates to measures of technology experience and computer proficiency for both younger and older adults.

The following hypotheses were tested to assess the reliability and the validity of the Mobile Device Proficiency Questionnaire (MDPQ; 46 questions) and a short form of the MDPQ (MDPQ-16; 16 questions).

Hypothesis 1 (H1): If the two measures (MDPQ, MDPQ-16) are reliable, Cronbach’s α will be greater than .80 for each scale and associated subscales.

Hypothesis 2 (H2): If the measures are valid, they should be sensitive to age-related differences in technology proficiency (reflecting a digital divide), both with respect to group comparisons of younger and older adults, and with respect to increasing age even among an older adult sample.

Hypothesis 3 (H3): If the measures are valid, a significant relationship should exist between computer proficiency and mobile device proficiency under the assumption that different types of technology proficiency should vary together.

Hypothesis 4 (H4): If the MDPQ and its short form are assessing largely mobile device proficiency (rather than overall technology proficiency), divergent validity should be observed: Mobile device proficiency among older adults should be more related to the frequency of self-reported mobile device use, and less related to the frequency of computer use.

Hypothesis 5 (H5): If MDPQ subscales are distinguishing meaningful categories of use, the factor structure revealed by principal components analysis (PCA) should mirror the organization of the MDPQ.

Method

Participants

All participants were recruited from the Tallahassee, Florida, region. Older adult participants (ages 65+, n = 109) were recruited in a number of ways to ensure variability in technology proficiency. Some participants were contacted through mail using a mailing list of individuals 65 years of age or older, and some filled out surveys as part of a cognitive intervention study in which they participated. To target more technology proficient older adults, we also emailed participants who had provided an email address for our participant database. This database is housed within the Florida State University (FSU) site of the Center for Research and Education on Aging and Technology Enhancement (CREATE) and contains information on participants (of all ages) from previous studies who expressed interest in being contacted for future studies. These participants filled out identical online versions of all measures using the online survey software Qualtrics. Younger adult participants (n = 40) were FSU undergraduate students invited to participate in the current study after completing other studies within the lab. These participants filled out paper or digital (i.e., via Qualtrics) versions of the surveys and measures described below, in the lab or at home (postage was provided for materials to be returned to the lab). The number of younger adults in our sample was smaller by design, as it was expected that younger adults would score near ceiling across most measures due to their high general technology experience (Smith, 2014).

Measures

All participants completed four measures: (a) the CPQ-12 (Cronbach’s alpha = .95, Boot et al., 2015); (b) a short tech experience survey that asked how long participants had been using computers, how many hours per week participants used computers, and identical questions related to mobile devices; (c) a survey collecting basic demographic information; and (d) the MDPQ. This protocol and all measures were approved by FSU’s Human Subjects Committee (HSC No. 2015.15702).

The MDPQ (see Appendix A) was designed to assess overall mobile device proficiency, while also providing information about proficiencies across eight activity domains. The first page of the survey educated participants about the types of devices of interest: tablet computers, e-readers, and smartphones. Our surveys referred to these devices as “portable touchscreen devices” and provided a description of the function and purpose of these devices and photographs of examples. However, after data collection, we came to prefer the more concise term mobile device rather than the longer “portable touchscreen device,” and in this manuscript and in associated materials provided, we have replaced “portable touchscreen device” with mobile device.

Like the CPQ, MDPQ subscales were designed to assess functions we predicted would be related to technology’s ability to help older adults maintain their independence and improve their well-being (Czaja et al., 2015). For example, calendaring might be used to support prospective memory. The Internet might be used to search for health information and access local and national resources to support independent living. Communication functions might help older adults keep in touch with friends and family. This may be especially important for older adults who might live alone and be at risk for social isolation.

The MDPQ asks respondents to rate their ability to perform 46 operations on a smartphone or tablet device (e.g., Using a mobile device, I can: Find health information on the Internet) on a 5-point scale (1 = never tried, 2 = not at all, 3 = not very easily, 4 = somewhat easily, 5 = very easily). The first subscale (nine items) assessed proficiency with Mobile Device Basics, such as “Turn the device on and off.” The second subscale (nine items) assessed proficiency with Communication tasks, such as “Open emails.” The third subscale (three items) assessed proficiency with Data and File Storage tasks, such as “Transfer information (files such as music, pictures, documents) on my portable device to my computer.” The fourth subscale (eight items) assessed proficiency using the Internet, such as “Use search engines (e.g., Google and Bing).” The fifth subscale (three items) assessed proficiency with Calendar software, such as “Enter events and appointments into a calendar.” The sixth subscale (five items) assessed proficiency with Entertainment, such as “Watch movies and videos.” The seventh subscale (four items) assessed proficiency with Privacy, such as “Set up a password to lock/unlock the device.” The eighth subscale (five items) assessed proficiency with Troubleshooting and Software Management, such as “Restart the device when it is frozen, or not working right.”

Results

Participant Demographics

For the purposes of evaluating the questionnaire, our sample consisted of both younger (M age = 19.5, SD = 1.8) and older adults (M age = 72.9, SD = 5.2). A more detailed summary of the participant demographics can be seen in Table 1.

Table 1.

Participant Demographics.

| | Young adults | Older adults |
|---|---|---|
| n | 40 | 109 |
| M age (SD) | 19.5 (1.8) | 72.9 (5.2) |
| Age range | 18–29 | 65–93 |
| Proportion female | 0.78 | 0.52 |
| Proportion non-Caucasian | 0.25 | 0.07 |
| Proportion Hispanic/Latino | 0.33 | 0.03 |

Scoring and Creation of the MDPQ-16

We used the same scoring scheme as the CPQ (Boot et al., 2015). For each subscale, answers to all questions were averaged. These subscale averages were then summed across the eight subscales to yield a total proficiency score (possible range: 8 to 40). A summary of MDPQ total and subscale scores is provided in Table 2. Note that the number of participants fluctuates because participants occasionally failed to answer one or more questions.
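The scoring scheme described above can be sketched in a few lines of Python. This is an illustrative sketch rather than the authors' scoring script: the function name `score_mdpq` and the input layout are hypothetical, the item counts per subscale are taken from the Measures section, and the handling of skipped items (no subscale score if any item is missing, no total if any subscale is missing) is an assumption that is consistent with the fluctuating n values but is not spelled out in the text.

```python
from statistics import mean

# Item counts per subscale as listed in the Measures section (46 items total).
SUBSCALE_SIZES = {
    "Mobile Device Basics": 9,
    "Communication": 9,
    "Data and File Storage": 3,
    "Internet": 8,
    "Calendar": 3,
    "Entertainment": 5,
    "Privacy": 4,
    "Troubleshooting and Software Management": 5,
}


def score_mdpq(responses):
    """Score one respondent (hypothetical helper).

    responses maps each subscale name to a list of ratings (1-5),
    with None marking a skipped item.
    Returns (subscale_means, total).
    """
    subscale_means = {}
    for name, size in SUBSCALE_SIZES.items():
        ratings = responses.get(name, [])
        # Assumption: a subscale is left unscored if any of its items is missing.
        if len(ratings) != size or any(r is None for r in ratings):
            subscale_means[name] = None
        else:
            subscale_means[name] = mean(ratings)

    # Assumption: the total is only defined when every subscale was scored.
    if any(value is None for value in subscale_means.values()):
        total = None
    else:
        total = sum(subscale_means.values())
    return subscale_means, total
```

Because each subscale contributes its mean rather than its sum, every domain carries equal weight in the total regardless of how many items it contains.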

Table 2.

Summary of MDPQ Scores as a Function of Age.

| Scale | Young adults, n | Young adults, M | Young adults, SD | Older adults, n | Older adults, M | Older adults, SD |
|---|---|---|---|---|---|---|
| MDPQ | 39 | 37.7 | 2.3 | 97 | 19.2 | 10.5 |
| Mobile Device Basics | 40 | 4.9 | 0.2 | 104 | 3.1 | 1.5 |
| Communication | 40 | 4.7 | 0.4 | 107 | 2.5 | 1.4 |
| Data and File Storage | 40 | 4.3 | 0.9 | 109 | 1.7 | 1.2 |
| Internet | 40 | 4.8 | 0.4 | 106 | 2.7 | 1.7 |
| Calendar | 40 | 4.8 | 0.7 | 107 | 2.2 | 1.7 |
| Entertainment | 39 | 4.8 | 0.3 | 107 | 2.3 | 1.3 |
| Privacy | 40 | 4.6 | 0.6 | 108 | 2.3 | 1.5 |
| Troubleshooting and Software Management | 40 | 4.8 | 0.3 | 107 | 2.5 | 1.5 |

Note. MDPQ = Mobile Device Proficiency Questionnaire.

We replicated the analyses performed by Boot et al. (2015) to develop a short form of the MDPQ: the MDPQ-16 (see Appendix B). PCA was completed on each subscale, resulting in one factor per subscale. For the MDPQ-16, we retained the two questions from each subscale that loaded most highly onto each subscale factor. Proficiency scores were calculated in the same manner as the full MDPQ, averaging subscale questions and adding them to create one combined measure. A summary of MDPQ-16 scores and subscales is provided in Table 3. Note that fewer participants are missing in this table because some skipped questions were questions that were not retained in the MDPQ-16. Next, we turn to the reliability and validity of the MDPQ and the MDPQ-16.
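The item-selection step for the short form can be illustrated with a small standard-library sketch. It is not the authors' analysis code: the function names are hypothetical, the first principal component is extracted from the item correlation matrix (the text does not say whether correlations or covariances were used), and power iteration stands in for whatever statistical package was actually used. Input rows are complete responses to the items of a single subscale.

```python
from math import sqrt


def correlation_matrix(rows):
    """Pearson correlation matrix of the item columns in rows (no missing data)."""
    cols = list(zip(*rows))
    n = len(rows)
    means = [sum(col) / n for col in cols]
    devs = [[v - m for v in col] for col, m in zip(cols, means)]
    # Each item needs nonzero variance, otherwise the division below fails.
    norms = [sqrt(sum(d * d for d in dev)) for dev in devs]
    k = len(cols)
    return [
        [sum(a * b for a, b in zip(devs[i], devs[j])) / (norms[i] * norms[j])
         for j in range(k)]
        for i in range(k)
    ]


def first_component_loadings(rows, iterations=200):
    """Loadings of each item on the first principal component (power iteration)."""
    r = correlation_matrix(rows)
    k = len(r)
    v = [1.0 / sqrt(k)] * k
    for _ in range(iterations):
        w = [sum(r[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient gives the eigenvalue for the unit-length vector v.
    eigenvalue = sum(v[i] * sum(r[i][j] * v[j] for j in range(k)) for i in range(k))
    # Loading = eigenvector entry scaled by the square root of the eigenvalue.
    return [x * sqrt(eigenvalue) for x in v]


def top_two_items(rows, labels):
    """Return the labels of the two items that load most highly on the component."""
    loadings = first_component_loadings(rows)
    ranked = sorted(zip(labels, loadings), key=lambda p: abs(p[1]), reverse=True)
    return [label for label, _ in ranked[:2]]
```

Applying `top_two_items` to each of the eight subscales in turn would yield a 16-item short form in the manner described above.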

Table 3.

Summary of MDPQ-16 Scores as a Function of Age.

| Scale | Young adults, n | Young adults, M | Young adults, SD | Older adults, n | Older adults, M | Older adults, SD |
|---|---|---|---|---|---|---|
| MDPQ-16 | 39 | 38.4 | 1.7 | 105 | 20.0 | 11.0 |
| Mobile Device Basics | 40 | 4.9 | 0.2 | 107 | 3.4 | 1.7 |
| Communication | 40 | 4.9 | 0.2 | 108 | 2.8 | 1.7 |
| Data and File Storage | 40 | 4.4 | 0.7 | 109 | 1.7 | 1.3 |
| Internet | 40 | 4.9 | 0.2 | 108 | 2.9 | 1.9 |
| Calendar | 40 | 4.8 | 0.7 | 108 | 2.3 | 1.7 |
| Entertainment | 39 | 4.9 | 0.2 | 108 | 2.3 | 1.5 |
| Privacy | 40 | 4.7 | 0.5 | 108 | 2.4 | 1.7 |
| Troubleshooting and Software Management | 40 | 4.9 | 0.2 | 108 | 2.4 | 1.7 |

Note. MDPQ-16 = short version of MDPQ; MDPQ = Mobile Device Proficiency Questionnaire.

Reliability

H1: If the two measures (MDPQ, MDPQ-16) are reliable, Cronbach’s α will be greater than .80 for each scale and associated subscales.

Cronbach’s α (Cronbach, 1951) was used to assess the reliability of the MDPQ and each of its subscales. Overall, the reliability of this scale was excellent (Cronbach’s α = .99), with subscales ranging from .84 to .97 (Mobile Device Basics, α = .95; Communication, α = .94; Data and File Storage, α = .90; Internet, α = .97; Calendar, α = .96; Entertainment, α = .84; Privacy, α = .90; Troubleshooting and Software Management, α = .93). The reliability of the short form of the MDPQ, the MDPQ-16, was excellent in general (Cronbach’s α = .96), with the reliability of MDPQ-16 subscales ranging from .75 to .99 (Mobile Device Basics, α = .94; Communication, α = .83; Data and File Storage, α = .89; Internet, α = .99; Calendar, α = .98; Entertainment, α = .75; Privacy, α = .87; Troubleshooting and Software Management, α = .89). Not surprisingly, reliability was lower for factors with fewer questions, but was still acceptable or better in all cases. Overall, these results suggest that the MDPQ and MDPQ-16 are each measuring a single construct reliably. Our next hypotheses relate to whether this construct is mobile device proficiency.
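For readers who wish to reproduce this kind of reliability estimate on their own data, Cronbach's α for k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of respondents' total scores. The sketch below applies that standard formula; the function name `cronbach_alpha` and the input layout (one list of item ratings per respondent, with incomplete respondents already excluded) are assumptions made for illustration and do not describe the software used in this study.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for complete responses.

    rows: one list per respondent, containing one rating per item.
    Requires at least two items, at least two respondents, and
    nonzero variance in the respondents' total scores.
    """
    k = len(rows[0])                       # number of items
    items = list(zip(*rows))               # transpose to per-item columns
    item_variance_sum = sum(variance(col) for col in items)
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)
```

The same function could be applied to all 46 items for the full scale, or to the items of a single subscale for a subscale estimate.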

Validity

H2: If the measures are valid, they should be sensitive to age-related differences in technology proficiency (reflecting a digital divide), both with respect to group comparisons of younger and older adults, and with respect to increasing age even among an older adult sample.

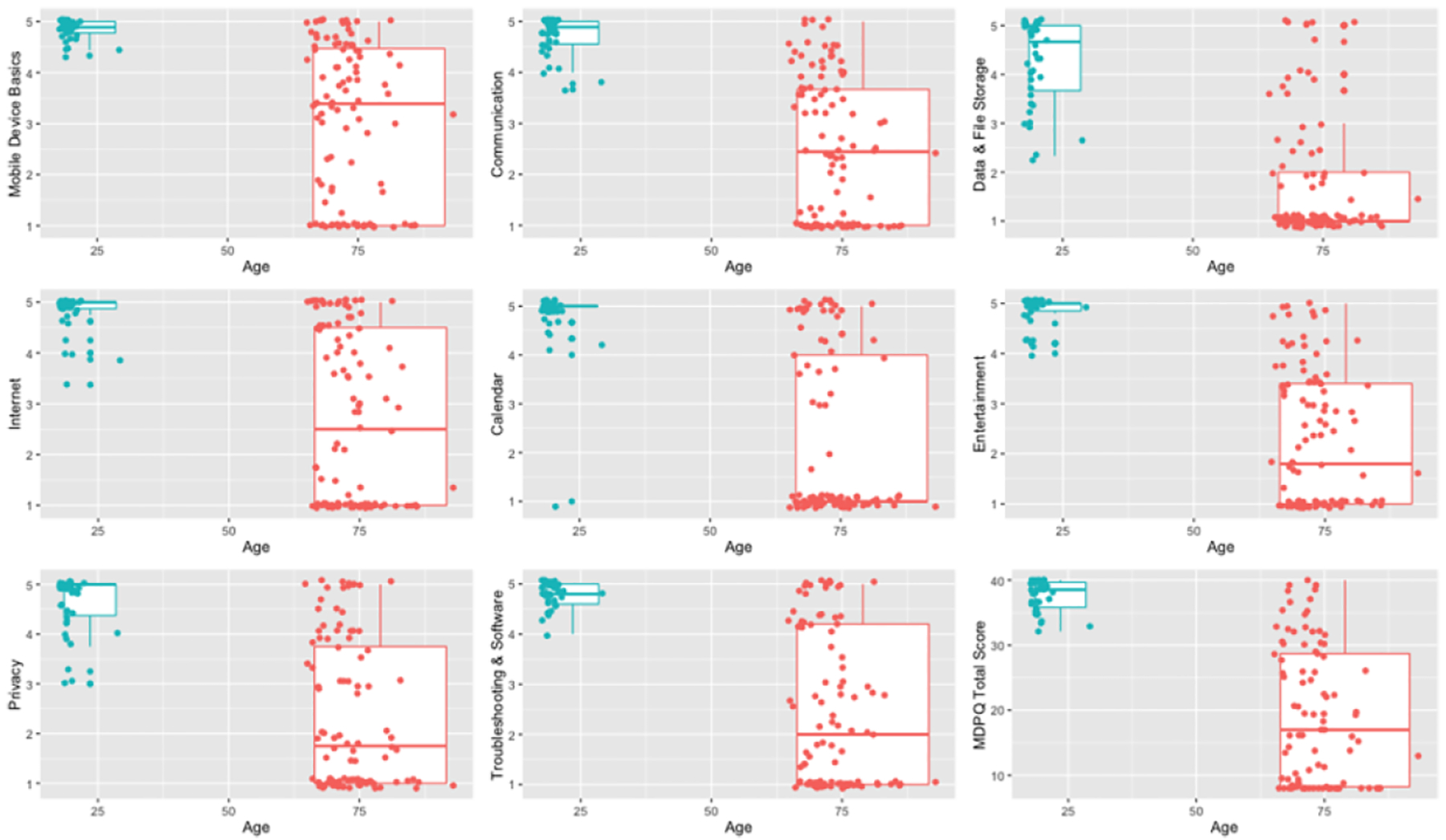

As can be seen from Tables 2 and 3, our younger adult sample (M age = 19.5) reported higher total proficiency compared with our older sample (M age = 72.9) and higher proficiency for each subscale for the MDPQ and MDPQ-16 (all differences p < .01). Even among only the older adult sample, age was reliably correlated with MDPQ, r(91) = −.29, p = .005, and MDPQ-16, r(99) = −.29, p = .003, scores.¹ Figure 1, depicting MDPQ scores and subscale scores, also reveals variability in proficiency within the younger adult and the older adult samples, and as one might expect, the variability in proficiency was greater for older adults. It should also be noted that even among younger adults, subscales related to more complex mobile device actions such as managing privacy settings and files demonstrated greater variability and lower scores compared with simpler actions (mobile device basics).

Figure 1.

Boxplots of scores for each MDPQ subscale, and total MDPQ score with scatterplots overlaid.

Note. MDPQ = Mobile Device Proficiency Questionnaire.

H3: If the measures are valid, a significant relationship should exist between computer proficiency and mobile device proficiency under the assumption that different types of technology proficiency should vary together.

Given the large digital divide that might overestimate relationships between computer proficiency and mobile device proficiency, we explored this question with only the older adult data set. We expected that high technology proficiency in the domain of computers would be related to high mobile device proficiency. This is exactly what was observed; there was a significant correlation between the MDPQ and the CPQ-12, r(87) = .64, p < .001, and a significant correlation between the MDPQ-16 and the CPQ-12, r(95) = .64, p < .001, among older adults.
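The correlations in this section are reported as r(df), where the degrees of freedom equal the number of complete pairs minus two; r(87), for example, corresponds to 89 older adults with both an MDPQ and a CPQ-12 score. A minimal sketch of that computation follows. The function name `pearson_r` is hypothetical, and dropping any pair with a missing score (marked `None`) is an assumption about how incomplete data were handled.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation over complete pairs; returns (r, degrees_of_freedom)."""
    # Assumption: pairs with a missing score on either measure are excluded.
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    n = len(pairs)
    mean_x = sum(a for a, _ in pairs) / n
    mean_y = sum(b for _, b in pairs) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in pairs)
    sxx = sum((a - mean_x) ** 2 for a, _ in pairs)
    syy = sum((b - mean_y) ** 2 for _, b in pairs)
    return sxy / sqrt(sxx * syy), n - 2
```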

H4: If the MDPQ and its short form are assessing largely mobile device proficiency (rather than overall technology proficiency), divergent validity should be observed: Mobile device proficiency among older adults should be more related to the frequency of self-reported mobile device use, and less related to the frequency of computer use.

We assessed how frequently older adults used computers and mobile devices, and also how long older adults had been using each device. We expected mobile device proficiency to be more highly correlated with mobile device use, and less correlated with computer use. Table 4 depicts these results. As predicted, the MDPQ and MDPQ-16 correlated much more strongly with self-reported mobile device experience than with computer experience. This provides evidence of divergent validity, further speaking to the ability of the MDPQ and MDPQ-16 to assess mobile device proficiency specifically rather than overall technology proficiency.

Table 4.

Summary of Correlations of the MDPQ and MDPQ-16 With Computer and Mobile Device Experience (Older Adult Data).

| Experience measure | MDPQ, n | MDPQ, r | MDPQ-16, n | MDPQ-16, r |
|---|---|---|---|---|
| Computers, length of use | 94 | .09 | 102 | .09 |
| Computers, frequency of use | 94 | .25* | 102 | .27** |
| Mobile device, length of use | 95 | .61** | 102 | .62** |
| Mobile device, frequency of use | 95 | .63** | 102 | .63** |

Note. MDPQ = Mobile Device Proficiency Questionnaire; MDPQ-16 = short version of MDPQ.

*p < .05.

**p < .01.

Factor Structure of the MDPQ

H5: If MDPQ subscales are picking up on meaningful categories of use, the factor structure revealed by PCA should mirror the organization of the MDPQ.

We developed the MDPQ to tap eight different domains of mobile device proficiency. To characterize the structure of the full MDPQ, and determine whether questions generally fell within our predicted domains, a factor analysis was conducted on older adult data using Varimax rotation. This revealed that six rather than eight factors accounted for a little over 75% of the variation in the data. However, in general, questions expected to assess the same domains of proficiency clustered together (Table 5). Questions assessing mobile device basics and email communication proficiency clustered together under Factor 1. Questions related to file storage clustered together under Factor 2. Questions related to Internet proficiency clustered under Factor 3. Questions related to more advanced proficiencies related to privacy and software management clustered under Factor 4. Questions related to calendar software all clustered under Factor 5. Finally, questions related to entertainment clustered together under Factor 6. Thus, the factor structure of the MDPQ was sensible and largely conformed to expectations.

Table 5.

Principal Components Analysis of the MDPQ (Older Adult Data).

| Question | Factor loading |
|---|---|
| 1a. Turn the device on and off | .853 |
| 1b. Charge the device when the battery is low | .835 |
| 1c. Navigate onscreen menus using the touchscreen | .834 |
| 1d. Use the onscreen keyboard to type | .824 |
| 1e. Copy and paste text using the touchscreen | .646 |
| 1f. Adjust the volume of the device | .787 |
| 1i. Connect to a WiFi network | .626 |
| 2a. Open emails | .739 |
| 2b. Send emails | .718 |
| 2e. View pictures sent by email | .636 |
| 2f. Send pictures by email | |
| 2h. Use instant-messaging (e.g., AIM, Yahoo Messenger, MSN Messenger) | .678 |
| 2i. Use video-messaging (e.g., Skype, Google Hangout, FaceTime) | .645 |
| 3a. Transfer information (files such as music, pictures, documents) on my portable device to my computer | .754 |
| 3b. Transfer information (files such as music, pictures, documents) on my computer to my portable device | .765 |
| 3c. Store information with a service that lets me view my files from anywhere (e.g., Dropbox, Google Drive, Microsoft Onedrive) | .635 |
| 4a. Use search engines (e.g., Google, Bing) | .662 |
| 4b. Find information about local community resources on the Internet | .625 |
| 4c. Find information about my hobbies and interests on the Internet | .668 |
| 4d. Find health information on the Internet | .696 |
| 4f. Make purchases on the Internet | .722 |
| 5a. Enter events and appointments into a calendar | .784 |
| 5b. Check the date and time of upcoming and prior appointments | .813 |
| 5c. Set up alerts to remind me of events and appointments | .821 |
| 6b. Watch movies and videos | .713 |
| 6c. Listen to music | .608 |
| 6e. Take pictures and video | .674 |
| 7a. Set up a password to lock/unlock the device | .633 |
| 7c. Erase all Internet browsing history and temporary files | .668 |
| 8d. Delete games and other applications | .618 |

Note. Only loadings >.60 are depicted. MDPQ = Mobile Device Proficiency Questionnaire.

Discussion

Currently, less than a third of older adults own a smartphone, and less than a third own a tablet computer (Anderson, 2015). As technology proficiency becomes increasingly important to perform activities such as paying bills, accessing public transportation, and accessing health information, and as computing shifts more toward mobile devices, it is increasingly important to ensure that older adults are not locked out of these activities as a function of low technology proficiency. Mobile device training can help ensure that older adults are able to reap the benefits of these forms of technology, but this training depends on accurately gauging current levels of knowledge.

We present the MDPQ and MDPQ-16 as reliable and valid measures of mobile device proficiency. Reliability analyses suggested that measures tapped a single construct, and validity analyses suggested this construct is strongly related to mobile device experience. Further evidence for the validity of the measure comes from expected relationships with age, computer proficiency, and computer and mobile device experience. In general, a PCA revealed a sensible factor structure that closely replicated a priori domains of mobile device function. The MDPQ-16 allows for the measurement of mobile device proficiency in a short amount of time. The matrix format of the survey and common question framing and response options should facilitate quick and accurate responses. The long version allows for a more detailed understanding of what older adults can and cannot do with mobile devices.

When training technology use, our colleagues noted that the older adults they worked with expressed a desire to train with individuals of similar skill, and frustration when classes contained learners of mixed ability (Czaja & Sharit, 2012). Classes would progress too quickly for individuals with initially low levels of proficiency and too slowly for individuals with high levels of proficiency. The MDPQ (and CPQ) allows learners to be grouped based on initial proficiency to accommodate the needs and preferences of older adults. For example, prior to beginning mobile device training, all learners could be assessed for their initial proficiency. They could then be assigned to separate classes based on their proficiency, or to training on the subset of skills that their responses indicate are not well understood. However, additional research may be necessary to understand appropriate cut points for classifying learners of similar proficiency. At the end of training, participants could be reassessed to observe whether the trained skills had changed. Researchers using mobile devices for intervention research (e.g., game-based brain training interventions or telehealth studies) may also benefit from understanding the extent to which proficiency (or lack thereof) influences intervention success and intervention adherence. Once trained, older adults may use these devices to support their prospective memory (calendaring), information gathering (Internet), and social interactions (communication features), potentially improving well-being and enhancing independence.

Limitations and Future Directions

As with all studies, our study is subject to limitations. First, the MDPQ is a self-report measure, and self-report measures may not perfectly capture objective proficiency. Second, every effort was made to ensure that the measures we developed were clear and understandable. However, additional validation may be needed to ensure the appropriateness of the MDPQ for participants from a diverse background with respect to education and SES. Only 12% of our older adult sample indicated having received only a high school education or less. We do not anticipate that the MDPQ and MDPQ-16 would be problematic for individuals with less education, but future work can confirm this. It is interesting to note the large degree of variability with respect to mobile device proficiency, even within this relatively advantaged older adult sample. Here, we validated the MDPQ and MDPQ-16 at one time point. Although results are promising regarding the validity and reliability of the scales, future directions might also assess changes in MDPQ over time to further validate the scales as individuals go from non-users to users of mobile devices, and compare self-reported proficiency with an objective measure of proficiency.

Best Practices for Training Older Adults to Use Technology

Creating more homogeneous classes with respect to ability is just one component of designing effective and efficient instructional and training programs for older adults. Social support during the learning process from friends and family members may play an important role (Tsai, Shillair, & Cotten, 2015). Other practices are encouraged, such as ensuring all training materials and guides use large font (at least 12 point) and a high contrast ratio to account for age-related changes in vision (Fisk, Rogers, Charness, Czaja, & Sharit, 2009). Czaja and Sharit (2012) provided a number of other best practice guidelines, including working within the framework of established instructional design models such as ADDIE (Analysis, Design, Development, Implementation, Evaluation), pilot testing training materials with representative samples, providing the learner with feedback throughout the learning process, and adjusting the pacing of training if necessary. The MDPQ may assist with the pacing of training as well in that it can be used to measure proficiency throughout training to ensure that learners have mastered basic mobile device proficiency before more advanced concepts are taught. We propose that the MDPQ, along with established guidelines of designing training and instruction for older adults, can help ensure that older adults are not locked out of useful technology that has the potential to support independence.

The authors appreciate input from Joseph Sharit, Wendy Rogers, and Neil Charness on an early version of the MDPQ.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: The authors gratefully acknowledge support from the National Institute on Aging, Project CREATE IV—Center for Research and Education on Aging and Technology Enhancement (www.create-center.org, NIA 2P01AG017211-16A1).

Biographies

Nelson Roque is a graduate student in the cognitive psychology program at Florida State University. His research spans a number of areas, including basic mechanisms of attention, aging and technology, and aging and transportation safety.

Walter R. Boot, PhD, is an associate professor of psychology at Florida State University. He is one of six principal investigators of the Center for Research and Education on Aging and Technology Enhancement (CREATE; http://www.create-center.org/). His research focuses on how technology can improve the lives of older adults.

Footnotes

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Note that degrees of freedom fluctuate here and elsewhere due to some participants failing to complete all questions. This included some participants in the older adult group who were not comfortable sharing their age. However, we can be confident that these participants were within the targeted age range because only participants within this range were invited to participate.

Contributor Information

Nelson A. Roque, Florida State University.

Walter R. Boot, Florida State University.

References

- Anderson M (2015). Technology device ownership: 2015. Pew Research Center. Retrieved from http://www.pewinternet.org/2015/10/29/technology-device-ownership-2015

- Boot WR, Charness N, Czaja SJ, Sharit J, Rogers WA, Fisk AD, & Nair S (2015). Computer Proficiency Questionnaire: Assessing low and high computer proficient seniors. The Gerontologist, 55, 404–411.

- Charness N, & Boot WR (2009). Aging and information technology use: Potential and barriers. Current Directions in Psychological Science, 18, 253–258.

- Cronbach LJ (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16, 297–334.

- Czaja SJ, Boot WR, Charness N, Rogers WA, Sharit J, Fisk AD, & Nair SN (2015). The personalized reminder information and social management system (PRISM) trial: Rationale, methods and baseline characteristics. Contemporary Clinical Trials, 40, 35–46.

- Czaja SJ, Charness N, Fisk AD, Hertzog C, Nair SN, Rogers WA, & Sharit J (2006). Factors predicting the use of technology: Findings from the Center for Research and Education on Aging and Technology Enhancement (CREATE). Psychology and Aging, 21, 333–352.

- Czaja SJ, & Sharit J (2012). Designing training and instructional programs for older adults. Boca Raton, FL: CRC Press.

- Delello JA, & McWhorter RR (2015). Reducing the digital divide: Connecting older adults to iPad technology. Journal of Applied Gerontology. Advance online publication. doi: 10.1177/0733464815589985

- Fisk AD, Rogers WA, Charness N, Czaja SJ, & Sharit J (2009). Designing for older adults: Principles and creative human factors approaches (2nd ed.). Boca Raton, FL: CRC Press.

- IDC Worldwide, Quarterly Smart Connected Device Tracker. (2015). As tablets slow and PCs face ongoing challenges, smartphones grab an ever-larger share of the smart connected device market through 2019, according to IDC [Press release]. Retrieved from http://www.idc.com/getdoc.jsp?containerId=prUS25500515

- Melenhorst AS, Rogers WA, & Bouwhuis DG (2006). Older adults’ motivated choice for technological innovation: Evidence for benefit-driven selectivity. Psychology and Aging, 21, 190–195.

- Mitzner TL, Boron JB, Fausset CB, Adams AE, Charness N, Czaja SJ, & Sharit J (2010). Older adults talk technology: Technology usage and attitudes. Computers in Human Behavior, 26, 1710–1721.

- Pew Research Center. (2015). Device ownership over time. Retrieved from http://www.pewinternet.org/data-trend/mobile/device-ownership

- Smith A (2014). Older adults and technology use: Adoption is increasing, but many seniors remain isolated from digital life. Pew Research Center. Retrieved from http://www.pewinternet.org/2014/04/03/older-adults-and-technology-use/

- Stronge AJ, Rogers WA, & Fisk AD (2006). Web-based information search and retrieval: Effects of strategy use and age on search success. Human Factors: The Journal of the Human Factors and Ergonomics Society, 48, 434–446.

- Tsai HYS, Shillair R, & Cotten S (2015). Social support and “playing around”: An examination of how older adults acquire digital literacy with tablet computers. Journal of Applied Gerontology. Advance online publication. doi: 10.1177/0733464815609440
