Abstract

Importance:

Developing more and better diagnostic and therapeutic tools for central nervous system disorders is an ethical imperative. Human research with neural devices is important to this effort and a critical focus of the NIH BRAIN Initiative. Despite regulations and standard practices for conducting ethical research, researchers and others seek more guidance on how to ethically conduct neural device studies. This paper draws on, reviews, specifies, and interprets existing ethical frameworks, literature, and subject matter expertise to address three specific ethical challenges in neural devices research: analysis of risk, informed consent, and post-trial responsibilities to research participants.

Observations:

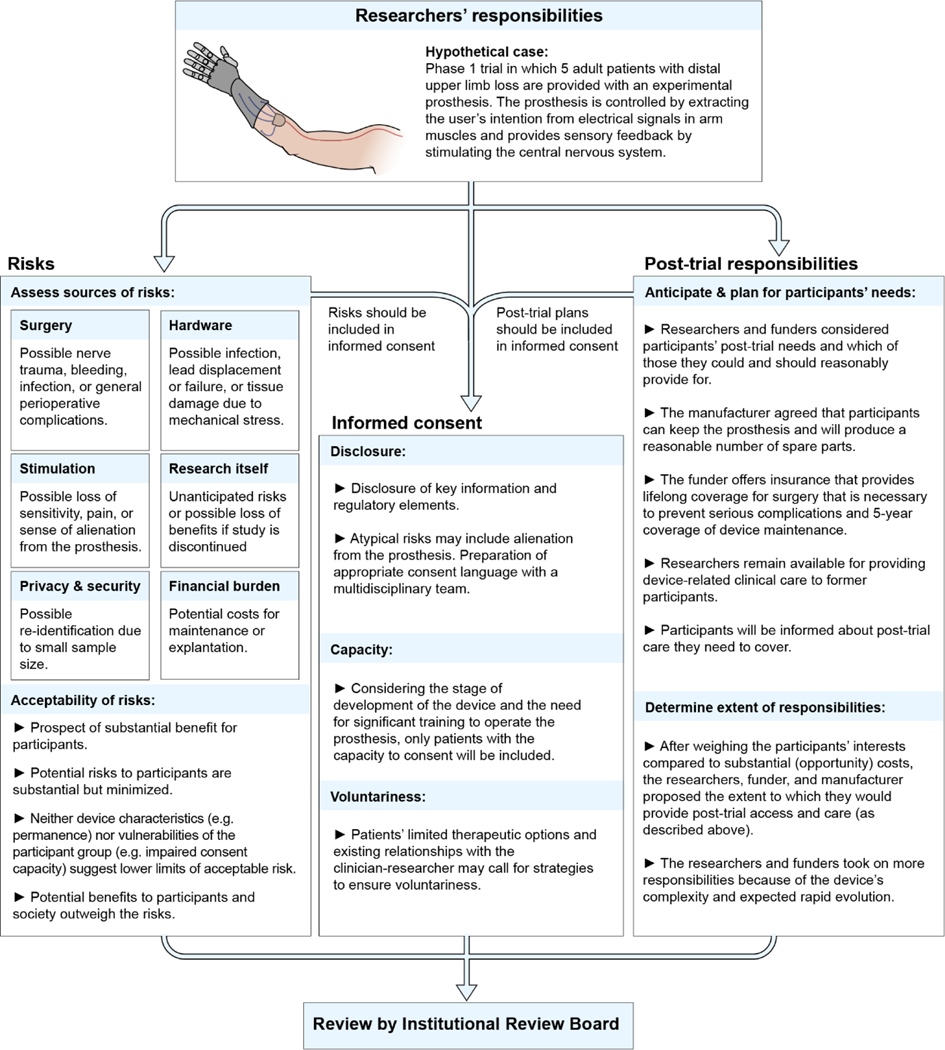

Research with humans proceeds after careful assessment of the risks and benefits. In assessing whether risks are justified by potential benefits in both invasive and non-invasive neural device research, the following categories of potential risks should be considered: those related to surgery, hardware, stimulation, research itself, privacy and security, and financial burdens.

All three of the standard pillars of informed consent (disclosure, capacity, and voluntariness) raise challenges in neural device research. Among these challenges are the need to plan for appropriate disclosure of information about atypical and emerging risks, a structured evaluation of capacity when that is in doubt, and preventing patients from feeling unduly pressured to participate.

Researchers and funders should anticipate participants’ post-trial needs linked to study participation and take reasonable steps to facilitate continued access to neural devices that benefit participants. Possible mechanisms for doing this are explored here. Depending on the study, researchers and funders may have further post-trial responsibilities.

Conclusions and Relevance:

This ethical analysis and points to consider may assist researchers, institutional review boards, funders, and others engaged in human neural device research.

Introduction

Developing tools to alleviate the considerable burden of neurological, neuropsychiatric, and substance use disorders (hereafter central nervous system (CNS) disorders)1–3 is an ethical imperative4–6. Human research is essential to the NIH Brain Research through Advancing Innovative Neurotechnologies (BRAIN) Initiative’s quest to advance diagnostic and therapeutic approaches to these devastating disorders7. This research frequently involves new or expanded use of invasive and non-invasive neural devices, raising important ethical challenges.

Many ethical issues in human neural device research are encountered in other clinical research, especially device research8. Even so, existing ethical frameworks often need to be applied to the specific context of neural device research and appropriately interpreted; additional guidance may be necessary5. Despite existing literature addressing the ethics of neural device research, especially deep brain stimulation (DBS)9–11, further discussion and guidance on various ethics challenges remain needed5. Considering these ethical challenges is also timely as human studies will likely increase with advances in neuroscience. The NIH BRAIN Initiative Neuroethics Working Group thus prioritized this area for consideration; this article grew out of a subsequent NIH workshop. Although many ethical challenges in human neural device research were recognized, analysis of risk, informed consent, and post-trial responsibilities to research participants were considered critical. This paper provides ethical analysis and key points to consider for researchers, Institutional Review Boards (IRBs), funders, and others engaged in human neural device research, particularly regarding neuromodulation devices.

The state of the science

Various invasive and non-invasive devices that record and/or modulate CNS function are under investigation. These devices may present an important adjunct or alternative treatment for CNS disorders, especially when pharmacotherapy has limited efficacy or intolerable side effects. Neural device research also can advance knowledge about the CNS.

Invasive neural devices require an incision or insertion to place or implant the device in a person, for example, under the skull, below the dura, or within the brain. The most established invasive modality is DBS, a programmable and adjustable implant of electrodes into specific deep brain structures that delivers electrical impulses to alter circuit function and overcome abnormal activity12. The Food and Drug Administration (FDA) has approved DBS for treating Parkinson’s disease, essential tremor, and medically refractory epilepsy, and granted humanitarian device exemptions for drug-refractory dystonia and obsessive-compulsive disorder. DBS is being investigated for other brain disorders not adequately controlled by pharmacological therapy, including major depression13, chronic pain14, Alzheimer’s disease15, obesity16, addiction17, and traumatic brain injury18.

Researchers are investigating closed-loop brain stimulation systems, in which additional recording strips are placed, usually over the cortical surface, and brain activity measures inform the stimulation parameters. Closed-loop systems incorporate feedback between input and output signals to effectively exert control over the targeted neural circuit19. Closed-loop systems ‘seamlessly’ adjust to symptoms, but raise ethical questions such as who has control over the device20. Responsive neurostimulation, a closed-loop intracranial stimulation system, has been approved for treatment-refractory epilepsy21.

Beyond DBS, brain-computer interfaces (BCIs) decode motor intentions from cortical signals in patients with tetraplegia, enabling user-driven control of assistive devices such as computers and robotic prostheses22. Electrical stimulation of the spinal cord and muscles is used in spinal cord injury to retrain motor circuits and improve residual capabilities22.

Non-invasive neuromodulation involves the external application of magnetic, electrical, or sonic stimulation to modulate CNS function. For example, the FDA has cleared electroconvulsive therapy23 and repetitive transcranial magnetic stimulation (TMS)24 for depression. Researchers are testing new indications for TMS, as well as transcranial direct current stimulation (tDCS), magnetic seizure therapy, and other modalities. Optimal dosing, spatial and temporal targeting, and mechanisms of action are being studied, even for approved indications. Techniques like TMS can be concurrently or consecutively combined with neuroimaging and electrophysiological techniques to assess effects and optimize subsequent stimulation25. Further research will improve insights into mechanisms of different non-invasive devices, dose/response relationships, and methods for ensuring safety and efficacy. Finally, along with regulatory and oversight frameworks, future research could help elucidate the safety and/or effectiveness of non-medical uses of non-invasive neuromodulation devices (e.g. attention enhancement). For example, tDCS is already sold directly to consumers for this purpose26.

Analysis of risk

Sources of risks

Most research with invasive or noninvasive27 neural devices entails some risk. Determining the type and extent of risk is fundamental to evaluating the ethics of neural device studies, to protect research participants from unnecessary harm, inform risk/benefit evaluations by IRBs, and enable informed consent28,29. Human neural device research poses risk from at least six sources, during and possibly after the trial. Although some risks are similar to those from devices implanted elsewhere in the body, others take on special meaning to research participants because of the brain’s centrality to, for example, mental states and identity.

First, surgery for implanting or replacing invasive devices poses risks, such as intracranial hemorrhage, stroke, infection, and seizures30,31. General perioperative complications are uncommon but can be severe, such as deep vein thrombosis, pulmonary embolism, or adverse effects of anesthesia.

Second, implanted device hardware poses risks, including infection, malfunction, erosion, and lead migration or fracture, which may require additional surgery or explantation30–32. Additionally, devices can fail, resulting in risks associated with sudden treatment termination and/or another surgery. Implanted devices may be contraindicated for some MRIs30 or cardiac pacemakers33.

Third, stimulation can cause adverse effects. For example, headaches and, rarely, seizures are associated with TMS34, and speech disturbances, paresthesias, and affective function disruptions with DBS35. Side-effects depend on the level and loci of stimulation and can often be alleviated by adjusting the settings (sometimes involving compromises between side-effects and benefits)27,32. Stimulation-induced adverse effects can occur if electrode placement in implanted devices or coil orientation in non-invasive devices is suboptimal32,36. Some studies report DBS effects on cognition (e.g. word-finding difficulties), and also atypical risks: effects on personality, mood, behavior, and perceptions of identity, authenticity, privacy, and agency20,32,37. In rare cases, these effects were long-term and possibly irreversible32. Such atypical risks are poorly understood, variable, and unpredictable. Effects on personality and behavior may be intended or unintended, and beneficial for some individuals and harmful to others20. Furthermore, patients may evaluate these effects differently than their family or caregivers. Further research should assess the likelihood of personality or behavior changes, characterize when changes are problematic, and weigh these risks against possible therapeutic benefits.

Fourth, research may involve incremental risks, including risks from procedures performed strictly for research purposes (e.g. extending clinically-indicated surgery to perform intracranial recordings for research), as well as emerging or unanticipated risks. Furthermore, research may entail an uncertain likelihood of benefit and possible loss of obtained or perceived benefits if the study is discontinued. Researchers should plan to monitor side effects during trials (including psychosocial side effects) and respond by taking appropriate measures. This is especially important for early device studies.

Fifth, neural device research often involves privacy and security risks. For example, analyzing aggregate brain data may disclose individual private information. Privacy risks may increase as more data is being recorded (especially continuous recording, possibly a future neural device feature) and technological capabilities for combining data increase, but exist even for post-hoc analyses of clinically acquired data (e.g. analyzing sleep physiology architecture from epilepsy implants). Investigators, IRBs, and funders should weigh the social and scientific value of data sharing against robust analyses of privacy risks. Furthermore, wireless devices and data transmission raise hacking concerns38. Third party hacking of a device may allow unauthorized data extraction or changing device settings, which could pose serious health risks38. Hacking could also occur with other implantable devices, and the FDA has guidance on device cybersecurity39.

Sixth, neural device research may pose financial risks for participants both during and after a study. After participants complete or discontinue a study, they may be left with costs for device maintenance, continued access, or explantation. This can significantly impact patients and their families and/or could lead to health risks.

Each research protocol should be evaluated for these six sources of risk. Invasiveness by itself is not a sufficient parameter of risk. Although risks of surgery and implanted hardware are specific to invasive devices, both invasive and non-invasive devices have risks from other sources. Rather, in evaluating risks, parameters such as the degree and type of harm, the likelihood of harm, and irreversibility40 should be assessed. In determining risk levels, IRBs should be as precise and consistent as possible. US federal regulations define risk levels, such as minimal risk or non-significant risks; however, these definitions may not correspond to common uses of these terms.

Acceptability of risks

For clinical research to be ethical, potential risks to research participants are minimized and potential benefits to participants and society are proportionate to, or outweigh, the risks28,41. These requirements are grounded in the ethical values of beneficence, nonmaleficence, and nonexploitation28. Acceptable levels of risk are generally higher for studies with possible therapeutic benefits for participants.

The limits of acceptable risk in research with a prospect of benefit to participants are context-dependent. More protection is appropriate for certain device characteristics (e.g. permanence) or for certain vulnerable groups (e.g. adults with impaired consent capacity). Protecting vulnerable groups by exclusion, however, may deprive them of the benefits of research and expose them to additional risks if interventions are later used without adequate data.

Further conceptual and empirical research could clarify acceptable levels of risk in studies without a prospect of therapeutic benefit. For example, because of surgical risks, intracranial recordings and/or stimulation for research without prospect of benefit are only performed on patients with clinical indications for neurosurgery42. However, little agreement exists on how much prolongation of surgery to collect brain activity data, or insertion of research components in addition to standard of care devices, is acceptable, nor on acceptable risks associated with sham surgery or devices as control interventions6,43. An example of research with no or unknown prospect of direct health benefits, but possible social value, is research on neural devices for non-medical purposes (e.g. attention enhancement). Addressing concerns about the safety and effectiveness of current do-it-yourself use is important because it may involve more frequent sessions than have been studied, a lack of screening to identify individuals at heightened risk of complications, and uncertainties about regulatory oversight26,44,45.

Regulations and oversight structures aim to protect research participants46. In neural device research, IRBs should consistently apply appropriate safeguards, such as additional protections for certain populations and limits on research without therapeutic benefit. The FDA regulates medical devices, but most class I devices (low risk) are exempt from needing an application47. Furthermore, FDA oversight is not required for devices used in basic physiological research when a future marketing submission or treating a disease is not intended47, although other oversight structures may apply.

Informed Consent

Informed consent is an important part of human subjects’ protections, grounded in the ethical value of respect for persons28,29. Yet, practical and theoretical challenges persist in obtaining informed consent for clinical research, and some challenges are exacerbated in neural device research. Because neural device research affects the brain in predictable and unpredictable ways, facilitating informed consent by considering participants’ values, interests, and preferences28 may be especially important. Referring physicians may help patients explore how trial participation might align with their values.

The informed consent process entails disclosure of relevant information to a decisionally capable person who makes a voluntary decision to enroll48,49. All three pillars of consent (disclosure, capacity, and voluntariness) raise challenges in neural device research.

Disclosure

Federal regulations require disclosure of research procedures and interventions, reasonably foreseeable risks and benefits, alternatives, that participation is voluntary, and more41. For neural device research, relevant risks from the six sources identified above should be disclosed. Participants should also be informed about procedures that are purely for research, whether the procedures or interventions are experimental, the incremental nature of science, and plans for post-trial care (e.g. device maintenance or explantation).

Decisions should be made about how to disclose any “reasonably foreseeable” emerging or atypical risks (e.g. changes in personality). Disclosing atypical risks is complicated by diverse individual preferences and value systems, which impact what information participants wish to receive and how participants or their families might perceive certain changes. For example, some participants may perceive neural stimulation as enhancing their sense of empowerment and authenticity, while others may perceive it as undermining their level of control or authenticity20,50. Furthermore, researchers may draw on experience from disclosing similar types of side effects from neuropharmacological therapies (e.g. dopamine agonists leading to impulsive behaviors such as pathological gambling51). Decisions about disclosing emerging risks (i.e. adverse events where the details or relevance are still unclear, for example, events reported in a single or small number of somewhat different cases), may also be challenging. A multidisciplinary team may be helpful in navigating these challenges.

Communicating information effectively to research participants may be difficult, as study information may be complex and some brain disorders impair cognition42. Furthermore, research participants may have difficulties distinguishing between the imperatives of clinical research and standard clinical care, or may not recognize purely research procedures (i.e. therapeutic misconception)52,53. This is of particular concern when research procedures are coupled with clinical procedures and/or when risk-benefit ratios are unfavorable. Depending on the patient and study profile, more elaborate consent procedures than a one-off and one-to-one model6, as well as testing comprehension of risks and benefits, may be appropriate11.

Capacity

Another informed consent challenge for neural device research arises from the link between various brain disorders and impairments in decision-making or decision-communicating6. Capacity to consent is usually presumed in adults, but when investigators or clinicians are unsure about a participant’s capacity, more formally assessing decisional capacity can be important. Capacity assessments are decision-specific and evaluate patients’ understanding, appreciation, reasoning, and choice about participation in a proposed research study54. Capacity assessment should use a systematic approach that corresponds to the legal and ethical concepts of informed consent and capacity. Some evidence-based capacity assessment tools have been developed, such as the MacArthur Competency Assessment Tool for Clinical Research54. In some studies, a legally authorized representative, as determined by US federal and state regulations as well as IRB guidance, may make research decisions for those without decision-making capacity55.

Patients with communication impairments (e.g. expressive aphasia, locked-in syndrome) may have decisional capacity but require special supportive measures to express their preferences. In these cases, researchers should optimize supports for consent or assent by using augmentative and alternative communication tools (e.g. written communication or using pictures). Experimental BCIs may allow artificial speech synthesis from continuous decoding of neural signals underlying covert (or imagined) speech; however, their reliability must be established before use in medical decision-making56,57.

Voluntariness

Researchers should ensure patients know that declining research participation will not jeopardize their clinical care. Effective treatment options are limited for many CNS disorders. Some disagreement remains about whether having no therapeutic options or offering certain incentives (e.g. secondary benefits) influences the voluntariness of research enrollment decisions58,59. Further conceptual and empirical research could elucidate constraints on voluntariness.

An ongoing debate in research ethics is who should obtain informed consent, as clinicians, researchers, or study coordinators each come with pros and cons60. The dual role of clinician-researcher is particularly complex for neurosurgeons in research with invasive devices42. Patients may feel unable to say no to neurosurgeons with whom they already have a clinical relationship, yet neurosurgeons may understand study details best42. In the absence of empirical data and specific guidance supporting who should obtain consent, IRBs and researchers trade off these considerations differently. Best practices from other fields may be helpful. For example, when clinician-researchers with dual roles obtain informed consent in pediatrics, offering parents the opportunity to discuss the study with another person is recommended60. A similar team approach has been suggested for invasive neural devices6,42.

Post-trial responsibilities

Researchers, device manufacturers, and funders have responsibilities to anticipate and plan for participants’ post-trial needs linked to trial participation10,61,62. The researcher-participant relationship creates a limited responsibility to provide care beyond what the study’s scientific validity and safety require63. Additionally, avoiding exploitation of research participants, promoting participant welfare and minimizing harm, and respecting participants as persons (not just as means) support post-trial responsibilities61,64,65. Many DBS patients expect researchers to provide post-trial medical care, expertise, and equipment (batteries)20. Engineers and basic scientists consider appropriate post-trial access important in BCI research66. However, invasive device trial budgets frequently do not cover the costs of, for example, device removal or replacing a depleted battery61. Some funders currently have no mechanisms for supporting post-trial care. Furthermore, health insurance plans deny coverage for investigational implants67, requiring participants who benefit from investigational devices to rely on personal funds and researchers’ advocacy for donations61. No definitive ethical or regulatory frameworks, or even standard practices, exist regarding post-trial responsibilities in neural device research61.

Ethical frameworks only recently have addressed researchers’ and funders’ post-trial responsibilities. The 2000 version of the Declaration of Helsinki first introduced a responsibility to assure post-trial access for participants to investigational agents68. Its subsequent revisions and other influential guidelines call for consideration and planning for post-trial access64,69–71. Beyond facilitating ongoing access to a drug or device, researcher and funder post-trial responsibilities may include sharing data, providing clinical care, device maintenance, and even long-term surveillance of risks and cost-effectiveness. These responsibilities are complex and not fully resolved65. For example, should participants in control groups receive access to the investigated therapy? Furthermore, most existing guidance focuses on drugs while acknowledging that devices pose additional, unresolved challenges70. The extent and locus of post-trial responsibilities are currently determined on a case-by-case basis. More guidance, including guidance specific to neural devices, is needed61. Most agree that post-trial responsibilities are limited, shared among stakeholders, and should be determined before the trial starts (if possible)64,70.

Determining the extent of post-trial responsibilities

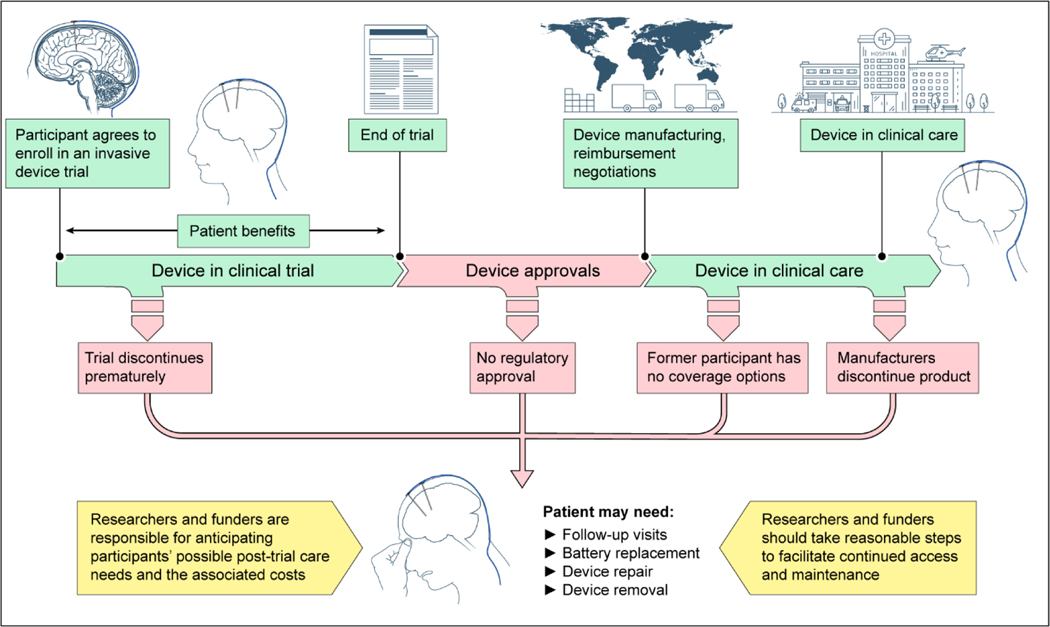

At a minimum, researchers and funders are responsible for anticipating possible post-trial care needs in neural device research, including its costs. Researchers and funders also should take reasonable steps to facilitate continued access to neural devices that are benefitting participants and may have further post-trial responsibilities as described above. Researchers and IRBs should explore available options for covering costs of continued access and device maintenance, such as inclusion in grant applications, planning ongoing studies, Medicare reimbursement for IDE, insurance company coverage, funder coverage for compassionate use, and others. Funders should consider options for insurance, financial contracts or other mechanisms to support post-trial follow-up and device maintenance. Researchers should delineate viable options for post-trial device access, maintenance, and explantation, in the research protocol and in consent forms. Options for various scenarios, such as device and trial failure or success, regulatory approval options, and decisions by device manufacturers to commercialize or discontinue a product, should be considered.

Post-trial responsibilities may be greater when participants would benefit substantially from care, discontinuing care would pose substantial risks, participants are particularly vulnerable, and the financial and opportunity costs of providing care are low61,63–65. More guidance is needed on weighing opportunity costs (which may represent collective interests) against research participants’ interests. Post-trial access and care may be important for non-invasive devices, but are especially important for implanted devices, which need long-term maintenance (e.g. follow-up visits, battery replacement, and device repair) or removal. Lacking access to care may expose patients with implanted devices to risks72.

Post-trial care responsibilities for neural devices are amplified as the brain holds special meaning to patients and atypical effects may occur (e.g. personality changes)32. Experience with other invasive devices suggests that neural devices’ complexity, limited knowledge about their long-term effects, and expected rapid evolution are also sources of vulnerability for participants that warrant consideration and long-term planning.

Continued access and device maintenance are especially challenging when medical devices are complex72 and participants need the expertise of the research team, rather than that of their local providers, to access care61,70. Neural devices often involve such complexity.

Ethicists have called for long-term safety follow-up of human tissue-based products, for example using a registry, because of their potential irreversibility and unclear long-term effects73. Similarly, neural device registries and standardized outcome metrics should be established6 to monitor and compare long-term adverse effects, rates of device maintenance and failure, costs, and other outcomes. Researchers, device manufacturers, and healthcare institutions should share responsibility for creating and maintaining registries.

Neural devices will likely be continually improved over time, with early trials containing prototypes that are refined into newer models. Furthermore, neural devices (like many other devices and drugs) are subject to commercial interests72. This may involve built-in obsolescence and proprietary hardware and software effectively locking patients and clinicians into ongoing relationships with a manufacturer72. As with pacemaker leads74,75, researchers, device manufacturers, and healthcare institutions should plan ahead to ensure that compatible replacement parts and software remain available for users with earlier models and that clinicians are trained to use them72.

Conclusions

Developing new diagnostic and therapeutic tools for CNS disorders is an ethical imperative that requires conducting human neural device research. Such studies are only possible because research participants generously contribute. Conducting such research ethically is vital. This paper provides points to consider for analysis of risk, informed consent, and post-trial responsibilities in human neural device research. We encourage researchers, IRBs, and funders to continue to reflect on these and other ethical challenges in neural device research and to embrace neuroethics as a way to enhance rigorous science.

Figure 1.

Potential Paths for Neural Devices and Implications for Posttrial Access and Maintenance

The green timeline shows the neural device developmental path; red indicates scenarios in which trial participants may have posttrial needs. The yellow boxes include recommendations for researchers and funders.

Figure 2.

Risk, Informed Consent, and Posttrial Responsibilities for a Hypothetical Case Involving a Hand Prosthesis

Box 1. Points to consider in the analysis of risk in neural device research.

Evaluating and minimizing risks in each proposed study is fundamental to the ethics of research.

Researchers should anticipate and describe the degree and types of expected risks for each study based on available evidence while recognizing uncertainties.

Clinical research with neural devices poses risks from at least six sources: risks related to surgery, hardware, stimulation, the research itself, privacy and security, and financial burdens.

Although research with invasive devices entails risks (e.g. surgical) that non-invasive devices do not, most sources of risk are relevant for both invasive and non-invasive devices. Invasiveness itself is not a sufficient parameter for determining risk.

Evaluating possible changes to personality or behavior may be challenging, as these could be experienced as harmful, beneficial, or be an explicit goal of treatment.

Research risks should be justified by the potential benefit to the participant and/or the importance of the knowledge expected to be gained.

Acceptable levels of research risk are generally higher for studies that offer possible therapeutic benefit for participants.

Box 2. Points to consider in obtaining informed consent for neural device research.

Informed consent is an important way to protect human subjects.

Obtaining informed consent entails disclosure of relevant information to a decisionally capable person who makes a voluntary decision to enroll in the study.

Participants should be informed about risks and benefits, alternatives, which interventions and add-on procedures are purely for research, which interventions are experimental, and plans for device failure or long-term support (e.g. device maintenance).

Neural device research may involve atypical (e.g. personality changes) and possible emerging risks, about which it may be challenging to decide what and how to disclose information.

Some patients will not have the capacity to consent. In case of doubt, researchers should plan for assessing consent capacity, which involves a structured evaluation of the patient’s understanding, appreciation, reasoning, and choice about participation.

Depending on the nature of the study, federal regulations, state regulations, and institutional policies may allow a legally authorized representative to make research decisions for those without decision-making capacity.

Other patients may have capacity but an impaired ability to communicate, for which researchers should optimize supports for consent or assent (e.g. using augmentative and alternative communication tools).

Participants should understand that participation is voluntary. Researchers should be sensitive to concerns about potential pressures on patients due to a lack of alternative therapies or prior relationships with clinician-investigators.

Box 3. Points to consider for post-trial responsibilities in neural device research.

Researchers and funders should anticipate and make plans for participants’ post-trial needs linked to study participation, including device access and maintenance.

In this process, researchers and funders should consider various post-trial scenarios, such as device and trial failure or success, regulatory approval options, and decisions by device manufacturers to commercialize or discontinue a product.

Further reasonable steps should be taken to facilitate continued access to neural devices when participants are benefitting.

The extent of the responsibility of researchers and funders to provide or arrange for post-trial access and care is determined on a case-by-case basis and is likely to be more extensive for invasive devices.

Researchers should inform IRBs and potential participants of the potential need for post-trial care, its risks, complexities, and costs, and whether and how maintenance and/or explantation will be provided.

Specific attention is warranted to safeguard the access of participants with complex devices to experts with the required skills and to compatible device parts and software, and to track long-term outcomes (through device registries).

Regulators, researchers, funders, and ethicists should continue efforts to clarify researcher and funder responsibilities for post-trial care in neural device research.

Acknowledgments

We thank participants of the October 2017 workshop “Ethical Issues in Research with Invasive and Non-Invasive Neural Devices in Humans” for their valuable input and Andre Machado, MD, PhD (Cleveland Clinic, no compensation received) for his contribution to the workshop. We thank Walter Koroshetz, MD (National Institute of Neurological Disorders and Stroke, no compensation received) for his helpful comments on this manuscript. Some authors were supported by the NIH for travel to the workshop. S.H., C.G., W.C., P.F., S.G., H.T.G., M.L.K., S.Y.H.K., E.K., F.G.M., K.M.R., K.R., and A.W. have nothing to disclose. J.J.F. is co-investigator on a BRAIN Initiative grant (DBS in severe to moderate TBI) and receives royalties for his book ‘Rights Come to Mind’ (Cambridge University Press). K.H. reported grants from the Australian Research Council. H.May. reported grants from NIH and from Hope for Depression Research Foundation; personal fees from Abbott Labs outside the submitted work; and a patent (US2005/0033379A1) issued and licensed. H.Mas. disclosed funding for the Horizon 2020 BrainCom project. S.A.S. is a consultant for Koh Young and Medtronic. S.H.L. disclosed a patent on TMS technology that has been issued to the institution (not licensed and no royalties) and a loan of TMS equipment from Magstim Company to the institution.

Funding

The workshop was funded by the NIH Clinical Center Department of Bioethics and the NIH BRAIN Initiative. This research was supported in part by the NIH Intramural Research Program. The funder played no role in the preparation, review, or approval of the manuscript, or in the decision to submit the manuscript for publication.

Footnotes

Disclaimer

The views expressed are the authors’ own and do not reflect those of the National Institutes of Health, the Department of Health and Human Services, or the United States government.
