Abstract

Prominent theories within the field of implementation science contend that organizational leaders can improve providers’ fidelity to evidence-based practices (EBPs) by using focused implementation leadership behaviors that create an organizational climate for EBP implementation. However, this work has been criticized for overreliance on nonspecific, self-report fidelity measures and poor articulation of the boundary conditions that may attenuate leadership and climate’s influence. This study tests the predictions of EBP implementation leadership and climate theory on observed fidelity to three school-based EBPs for autism that vary in complexity—pivotal response training (PRT), discrete trial training (DTT) and visual schedules (VS). Educators in kindergarten to third-grade autism support classrooms in 65 schools assessed their principals’ EBP implementation leadership and school EBP implementation climate prior to the school year. Mid-school year, trained observers rated educator fidelity to all three interventions. Expert raters confirmed PRT was significantly more complex than DTT or VS using the Intervention Complexity Assessment Tool for Systematic Reviews. Linear regression analyses at the school level indicated principals’ increased frequency of EBP implementation leadership predicted higher school EBP implementation climate, which in turn predicted higher educator fidelity to PRT; however, there was no evidence of a relationship between implementation climate and fidelity to DTT or VS. Comparing principals whose EBP implementation leadership was +/− one standard deviation from the mean, there was a significant indirect association of EBP implementation leadership with PRT fidelity through EBP implementation climate (d = 0.49, 95% CI = 0.04 to 0.93). Strategies that target EBP implementation leadership and climate may support fidelity to complex behavioral interventions.

Keywords: evidence-based practice, implementation leadership, implementation climate, intervention complexity, autism, schools, mental health

Introduction

Despite significant advances in the development of evidence-based practices (EBPs) to support autistic children’s skill acquisition in areas associated with primary symptoms (social communication, restricted and repetitive patterns of behavior, interests, and activities), these interventions are infrequently delivered in community settings such as public schools (Pellecchia et al., 2015). When they are, fidelity to their essential components often is poor (Locke, Lawson, et al., 2019; Mandell et al., 2013; Pellecchia et al., 2015; Stahmer, 1999). Diminished fidelity to EBPs reduces their effectiveness (Mandell et al., 2013; Marques et al., 2019). Consequently, identifying factors that influence providers’ fidelity to EBPs, and developing implementation strategies that target those factors, holds significant promise to improve the quality and outcomes of behavioral healthcare for autistic youth (Stirman et al., 2016; Wolk & Beidas, 2018).

Implementation Leadership and Climate Theory

Drawing on research from organizational psychology and management (Ehrhart, Aarons, & Farahnak, 2014; Hong et al., 2013), implementation researchers have proposed that leaders of behavioral health organizations may represent important agents for improving the high-fidelity delivery of EBPs (Aarons, Farahnak, & Ehrhart, 2014; Birken et al., 2012; Fixsen, 2005). While acknowledging the unique and essential roles of leaders at all organizational levels, as well as the importance of alignment across levels (Aarons, Ehrhart, Farahnak, & Sklar, 2014), implementation theories have emphasized the importance of first-level leaders who manage or supervise direct service providers. First-level leaders are likely important for supporting providers’ high-fidelity delivery of EBPs because they a) have frequent interpersonal contact with providers, b) often directly supervise or guide clinical care, c) represent the most proximal and salient manifestation of the organization’s priorities, and d) frequently have longer tenure in organizations and the ability to shape implementation over a long period (Aarons, Farahnak, & Ehrhart, 2014; Birken et al., 2012).

According to one prominent theory of EBP implementation leadership proposed by Aarons, Farahnak, and Ehrhart (2014), first-level leaders influence providers’ fidelity to EBPs through both general leadership behaviors—typically operationalized as transformational leadership from the full-range leadership model (Bass, 1999)—and focused leadership behaviors—referred to as EBP implementation leadership. Focused EBP implementation leadership complements general leadership behaviors by communicating a strong priority, expectation, and support for followers to enact behaviors aligned with a specific organizational strategic goal—in this case, the effective and skillful delivery of EBP as an integral part of clinical intervention (Aarons, Ehrhart, & Farahnak, 2014). In schools, EBP implementation leadership includes leaders establishing clear expectations for educators to use EBPs, being knowledgeable about EBPs, recognizing and rewarding educators’ EBP implementation efforts, and persevering through implementation challenges (Locke et al., 2015; Lyon et al., 2018).

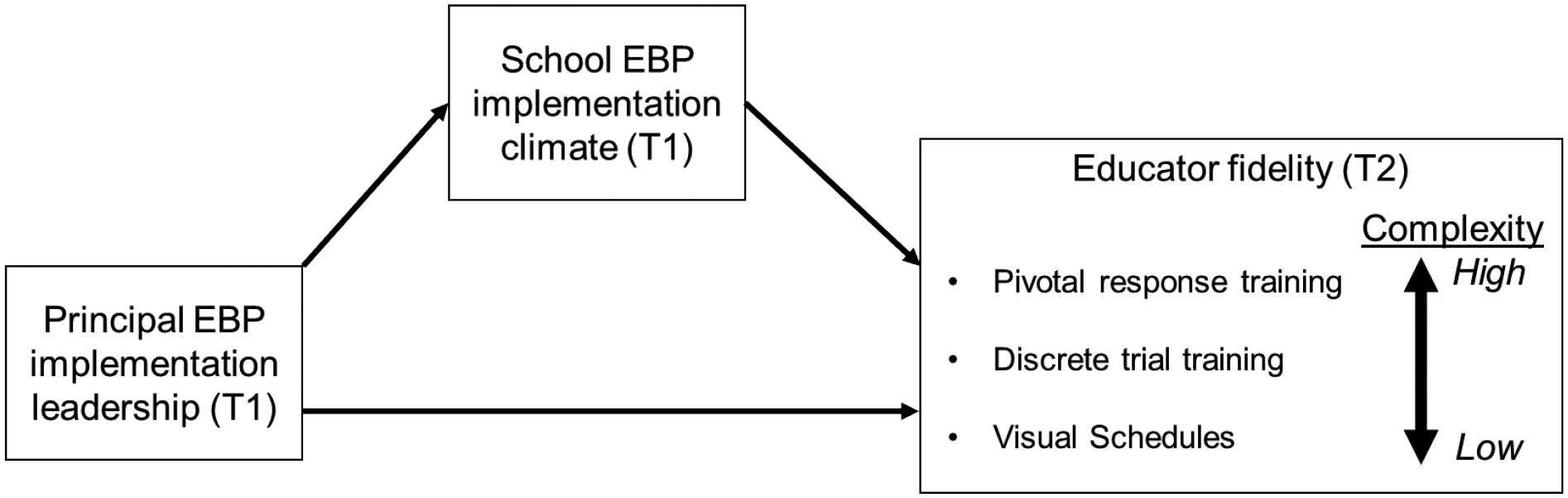

As shown in Figure 1, Aarons and colleagues’ theory (2014) posits that the use of focused EBP implementation leadership by first-level leaders influences provider fidelity to EBPs primarily by contributing to the development of a focused EBP implementation climate within the organization. EBP implementation climate is defined as employees’ shared perceptions of the extent to which the skillful use of EBPs is expected, supported, and rewarded within the organization (Ehrhart, Aarons, & Farahnak, 2014). First-level leaders are believed to have a strong influence on their subordinates’ perceptions of EBP implementation climate because their behaviors and communications are among the most salient and tangible representations of the organization’s policies, procedures, practices, and implicit logics of action (Kozlowski & Doherty, 1989; Zohar & Luria, 2004). Signals emitted by first-level leaders thus represent a key filter through which employees interpret their work environment and develop climate perceptions (Hong et al., 2013).

Figure 1.

Study theoretical model.

Note: EBP = evidence-based practice; T1 = time 1; T2 = time 2.

Emerging research offers preliminary support for Aarons and colleagues’ (2014) theory of EBP implementation leadership and climate. Psychometric studies have validated measures of these constructs and have shown that they vary meaningfully across settings that deliver behavioral healthcare (Beidas et al., 2019; Williams & Beidas, 2019). For example, both the Implementation Leadership Scale and the Implementation Climate Scale have been validated in outpatient behavioral health clinics (Aarons, Ehrhart, & Farahnak, 2014; Ehrhart, Aarons, & Farahnak, 2014), child welfare agencies (Ehrhart et al., 2016; Finn et al., 2016), substance use treatment settings (Aarons et al., 2016; Ehrhart et al., 2019), and schools (Lyon et al., 2018). Research also has linked these constructs to variation in providers’ attitudes towards EBP (Powell et al., 2017). One longitudinal study using a difference-in-differences design showed that improvement in clinic-level EBP implementation leadership over a 5-year period predicted improvement in EBP implementation climate and increases in providers’ self-reported use of EBP (Williams et al., 2020). However, a recent scoping review (Meza et al., 2021) noted methodological deficits in this line of research, including a lack of a) gold standard observational measures of implementation outcomes (e.g., observed fidelity), b) focus on specific clinical interventions, and c) attention to the boundary conditions that may attenuate the effects of leadership and climate on implementation outcomes. In this study, we address these issues by testing whether the relationships predicted by Aarons and colleagues’ (2014) theory of EBP implementation leadership and climate hold in a new setting (schools) and population (educators) with regard to observed fidelity to three specific autism behavioral interventions that vary in complexity—pivotal response training (PRT), discrete trial training (DTT), and visual schedules (VS).

Pivotal Response Training, Discrete Trial Training, and Visual Schedules

Pivotal response training (PRT), discrete trial training (DTT), and visual schedules (VS) are interventions that have strong empirical support for improving social communication, play, adaptive, academic, and cognitive skills among autistic youth (Steinbrenner et al., 2020). These interventions hold promise for school-based implementation to improve children’s outcomes, and researchers have devoted efforts to increasing educators’ use of these, despite implementation challenges (Mandell et al., 2013; Stahmer, 1999; Steinbrenner et al., 2020).

Though based in behavior principles, the process, context, and resources for implementing PRT, DTT, and VS differ. PRT is a naturalistic intervention that is child-directed. The educator follows the child’s lead and embeds learning opportunities into existing activities based on the child’s interest and focus. PRT is typically delivered in a one-on-one format (i.e., educator and student) to teach generalizable skills related to spontaneous language, social interactions, play, and emotion regulation (Stahmer, 1999). In contrast, DTT is a one-on-one instructional method that uses highly structured, adult-directed mass trials with a quick-paced repetition of adult cues to elicit specific responses, followed by brief reinforcement or error correction procedures (Arick et al., 2004; Schreibman, 2000; Smith, 2001). In the school context, DTT is typically used to teach receptive and expressive language and pre-academic concepts (e.g., identifying shapes, letters). VS is a structured physical schedule with representations of activities (e.g., cards, signage, props) used in schools as a class-wide intervention to facilitate transitions between activities (Dettmer et al., 2000). In classrooms, PRT and DTT sessions typically last about 10–15 minutes. VS is implemented classroom-wide throughout the entire day (Dettmer et al., 2000).

Intervention Complexity

Although there is no single agreed-upon definition of intervention complexity (Lewin et al., 2017), the definition offered by the Agency for Healthcare Research and Quality Evidence-Based Practice Center (Guise et al., 2017) captures core dimensions highlighted by numerous research groups, including: intervention complexity (i.e., degree to which an intervention has multiple components), pathway complexity (i.e., degree to which the intervention has multiple causal pathways, feedback loops, or mediators and moderators of effect), population complexity (i.e., extent to which the intervention targets multiple people, groups, or organizational levels), and contextual complexity (i.e., extent to which the intervention operates in a dynamic environment). These dimensions align well with reports from school-based providers of autism EBPs who indicate autism interventions vary in the number of active intervention components and steps in the causal pathway, the time and resources required to create a context conducive to intervention delivery, and the level of organizational support and coordination required for effective implementation (Brock et al., 2020; McNeill, 2019; Wilson & Landa, 2019).

Following others (Pellecchia et al., 2020; Rogers et al., 2005), we propose that variation in intervention complexity may act as a boundary condition that attenuates (or enhances) the effects of implementation antecedents such as EBP implementation leadership and climate (Amodeo et al., 2011). Specifically, whereas less complex interventions may not require a supportive organizational environment at multiple levels, more complex interventions—those that require higher interventionist training and skill, rely on specific contextual conditions to facilitate intervention delivery, require coordination among multiple persons, or target multiple organizational levels—may rely on a more supportive organizational environment and leadership (Wilson & Landa, 2019). Variation in intervention complexity may help explain why the association between EBP implementation leadership and climate and implementation outcomes has been variable (Beidas et al., 2017; Locke, Lee, et al., 2019; Meza et al., 2021). Because intervention complexity is relatively static, malleable factors such as leadership and climate represent attractive targets for facilitating implementation if and when complexity serves as a barrier (Amodeo et al., 2011).

Present Study - Implementation Leadership and Climate in Schools

This study tests Aarons and colleagues’ (2014) theory of EBP implementation leadership and climate in public schools that serve autistic youth, focusing on how its predictive validity for observed educator fidelity may vary depending on the level of intervention complexity. Schools are an important context for studying EBP implementation for autistic youth given that over 700,000 autistic students are served in U.S. public schools (Office of Special Education and Rehabilitative Services, 2019) and evidence that educators’ fidelity to EBPs for autism often is poor (Mandell et al., 2013; Pellecchia et al., 2015). In addition, qualitative and mixed methods research suggests principal leadership and school priorities represent important barriers to implementing EBPs for autistic youth in school settings (Ahlers et al., 2021; Locke et al., 2015; Wilson & Landa, 2019).

This study tests the following hypotheses (see Figure 1): H1: Increased use of EBP implementation leadership by principals will be associated with higher levels of school EBP implementation climate. H2: Higher levels of school EBP implementation climate will be associated with greater educator fidelity to PRT (a complex EBP) but there will be no evidence of an association with fidelity to DTT or VS (less complex EBPs). H3: Higher principal EBP implementation leadership will be indirectly associated with higher educator fidelity to PRT (a complex EBP) through school EBP implementation climate but there will be no evidence of an indirect association with fidelity to DTT or VS (less complex EBPs).

Method

Participants and Setting

Data for the present study come from a longitudinal, observational investigation into factors that supported or constrained fidelity to PRT, DTT, and VS in a large sample of schools in the northeastern and northwestern USA that elected to implement these practices (Locke et al., 2016).

In total, 92 schools were invited to participate in the study because they had a kindergarten through third-grade special education classroom with autistic students and planned to provide training and coaching to their staff to implement PRT, DTT, and VS as part of their standard operating procedures. Of these, 18 schools declined to participate, and nine had fewer than three staff working in their autism support classroom or did not provide complete data on measures for this study (i.e., > 30%), resulting in a final analytic sample of 65 schools (71%). Table 1 presents descriptive information on schools enrolled in the study. Study participants within these 65 schools included 88 autism support teachers and 137 classroom staff (e.g., one-on-one aides, assistants) who worked in kindergarten to third-grade autism support classrooms. The average age of participating educators was 40.5 years (SD = 12.4) with 5.1 years of experience (SD = 6.1) working in the special education setting and 4.1 years (SD = 4.9) in their current position. The sex distribution of the sample was 91% female (n = 208), 6% male (n = 13), and 3% unknown or not reported. With regard to ethnicity, participants were asked to indicate whether they identified as Hispanic, Latino, or Spanish (94% indicated “no”, n = 214) and, if so, whether they identified as Mexican, Mexican American, or Chicano (0%), Puerto Rican (2%, n = 5), or other (1%, n = 3); 3% (n = 6) did not respond. Participants self-identified their race using the categories of American Indian or Alaska Native (1%, n = 3), Asian (4%, n = 10), Black or African American (29%, n = 65), Native Hawaiian or Other Pacific Islander (<1%, n = 1), Other (1%, n = 2), or White (63%, n = 144); 4% (n = 8) of participants did not respond and 2% selected multiple race categories.

Table 1.

Descriptive statistics and bivariate correlations for all study variables (N = 65 schools).

| Variable | M | SD | PRT fidelity (r) | DTT fidelity (r) | VS fidelity (r) |
|---|---|---|---|---|---|
| Outcomes | | | | | |
| Fidelity to Pivotal Response Training (0–4) | 2.11 | 0.98 | | | |
| Fidelity to Discrete Trial Training (0–4) | 2.30 | 1.10 | 0.48 | | |
| Fidelity to Visual Schedules (0–4) | 1.62 | 0.96 | 0.43 | 0.44 | |
| Primary Antecedents | | | | | |
| School EBP Implementation Climate (0–4) | 1.96 | 0.62 | 0.32 | 0.16 | 0.00 |
| Principal EBP Implementation Leadership (0–4) | 2.50 | 0.72 | 0.27 | 0.03 | −0.06 |
| Control variables | | | | | |
| Principal Transformational Leadership (0–4) | 2.38 | 0.70 | 0.26 | 0.30 | 0.27 |
| Northwest Region (ref = Northeast Region) | 0.26 | 0.44 | −0.09 | 0.21 | 0.08 |
| School Size (N of students) | 585.29 | 198.89 | 0.01 | −0.02 | −0.15 |
| % of Students - African American | 40.67 | 33.13 | 0.03 | −0.23 | −0.10 |
| % of Students - Hispanic | 16.78 | 17.73 | 0.18 | 0.30 | 0.24 |
| % of Students - Asian | 8.31 | 10.74 | −0.13 | 0.02 | −0.10 |
| % of Students - Hawaiian or Other Pacific Islander | 0.17 | 0.31 | 0.13 | 0.24 | 0.08 |
| % of Students - American Indian or Alaskan Native | 0.22 | 0.24 | 0.23 | 0.13 | 0.01 |
| % of Students - Other Race | 9.13 | 3.36 | −0.07 | 0.05 | 0.04 |
| % of Students Receiving Free/Reduced Lunch | 78.27 | 31.61 | 0.05 | −0.10 | 0.02 |
| % of Students with Individualized Education Plan | 14.38 | 5.25 | −0.12 | 0.05 | 0.20 |

Note: Correlations greater than r = 0.24 and less than r = −0.24 are statistically significant at p < .05, two-tailed. EBP = evidence-based practice; DTT = discrete trial training; PRT = pivotal response training; VS = visual schedules.

Procedure

University institutional review boards and school districts provided ethics approval for the study. Recruitment entailed meeting with school district officials to describe the study and obtain a list of elementary schools with self-contained classrooms for autistic children where PRT, DTT, and VS training were used. Next, we contacted principals to obtain their consent for the school to participate. Then, researchers met with educators who worked in self-contained classrooms to obtain their consent to participate in the research. Educators could decline survey completion regardless of school participation.

Training and coaching in PRT, DTT, and VS were mandated and provided by the school districts for all educators working in self-contained classrooms as part of the districts’ standard operating procedures regardless of participation in the study. All training and coaching were provided by graduate-level clinicians working for a purveyor organization. The initial two-day training occurred prior to the start of the school year and incorporated didactic instruction, videotaped demonstrations, in vivo modeling, behavioral rehearsal, and discussions focused on applying the practices within participants’ classrooms. Educators were provided with intervention materials (treatment manuals, lesson plans, toys, flashcards, and data sheets). After the initial training, educators received in-classroom coaching for approximately two hours per month throughout the school year. Graduate-level clinicians from the purveyor organization served as coaches using didactics, modeling skills, and feedback based on observation.

Study participants completed measures of EBP implementation leadership and climate at the beginning of the school year following the training (November-December 2015). Participants were compensated $50 USD for their time (45–60 min measure). Beginning one month after administration of study measures (i.e., January 2016), research team members conducted the first classroom observations to assess educators’ fidelity to PRT, DTT, and VS in their classrooms. To avoid the beginning or end of the school year, when EBP implementation is less common, two observation sessions were conducted approximately two months apart from January to April 2016. Observations were conducted during regular school hours when educators reported they would be most likely to use the three interventions and had a duration typical of one-on-one sessions (15 minutes).

Measures

Fidelity to PRT, DTT, and VS.

Educators’ fidelity to PRT, DTT, and VS was rated by trained researchers during live in-classroom observations. During each observation, raters completed a 17-item PRT fidelity checklist (α = .97), an 11-item DTT checklist (α = .97), and a 10-item VS checklist (α = .93), all of which have demonstrated convergent validity with improved youth outcomes and self-reported teacher fidelity to these practices in prior research (Mandell et al., 2013; Pellecchia et al., 2016). Items on each checklist evaluated the degree to which teachers and staff implemented core components of the intervention using a Likert scale from 0 (“does not implement”) to 4 (“highly accurate implementation”). Coders demonstrated 90% reliability on the fidelity measure prior to conducting field observations (Pellecchia et al., 2016). A total score was computed for each intervention for each observation, and these were averaged across the two observations to yield one fidelity score for each intervention for each classroom. Most schools (72%, n = 47) had only one classroom. To align the fidelity outcome with our level of analysis, fidelity scores in schools with more than one classroom were aggregated to the school level.
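The scoring steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's scoring code: the item ratings and the school and classroom identifiers are invented, and each observation is scored as the item mean so that it stays on the 0–4 metric reported in Table 1 (the text refers to a "total score", so the exact scoring rule is an assumption).

```python
from collections import defaultdict
from statistics import mean

# Hypothetical checklist ratings (0-4) for one intervention:
# (school, classroom) -> two observations, each a list of item ratings.
observations = {
    ("school_A", "room_1"): [[3, 4, 2, 3], [4, 4, 3, 3]],
    ("school_A", "room_2"): [[1, 2, 2, 1], [2, 2, 3, 1]],
    ("school_B", "room_1"): [[0, 1, 1, 2], [1, 1, 2, 2]],
}


def classroom_fidelity(sessions):
    """Score each observation as its item mean (assumption), then average observations."""
    return mean(mean(items) for items in sessions)


def school_fidelity(obs):
    """Average the classroom-level scores within each school."""
    by_school = defaultdict(list)
    for (school, _room), sessions in obs.items():
        by_school[school].append(classroom_fidelity(sessions))
    return {school: mean(scores) for school, scores in by_school.items()}


school_scores = school_fidelity(observations)
```

Schools with a single classroom simply carry that classroom's score forward, which matches the situation of most schools in the sample.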

Complexity of PRT, DTT, and VS.

Three expert raters (MLH, DJC, JAW), each with advanced training and certification in the focal EBPs and over a decade of experience implementing and training practitioners on autism interventions, independently scored the complexity of PRT, DTT, and VS. Using the Intervention Complexity Assessment Tool for Systematic Reviews (iCATSR) (Lewin et al., 2017), each rater scored each intervention on 10 items that addressed different dimensions of complexity (e.g., number of components, the extent to which effects are modified by participant or interventionist factors). Items were scored on a scale from 1 (low complexity) to 3 (high complexity) and scores were summed to produce a total complexity score for each intervention. All three raters scored all three interventions independently and were blind to each other’s assessments. Consistency among raters on total complexity scores for all three interventions was tested using a two-way mixed, consistency, average-measures intraclass correlation coefficient (ICC) (Hallgren, 2012). Cicchetti (1994) proposed guidelines for the practical interpretation of the ICC as poor (< 0.40), fair (0.40–0.59), good (0.60–0.74), and excellent (≥ 0.75).

After confirming interrater reliability (see Results for details), we calculated an overall intervention complexity score for each intervention by taking the average of the three raters’ total scores. Possible values of these intervention complexity scores ranged from 10 (indicating all raters scored all 10 dimensions as ‘low’ = 1) to 30 (indicating all raters scored all 10 dimensions as ‘high’ = 3).
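For readers unfamiliar with the reliability index named above, the sketch below computes a two-way, consistency, average-measures ICC from mean squares and then averages the raters' totals into one complexity score per intervention. The ratings are invented for illustration and are not the study's data; the function name is hypothetical.

```python
from statistics import mean

# Hypothetical total complexity scores (possible range 10-30).
# Rows are interventions, columns are the three raters. Invented values.
ratings = {
    "PRT": [24, 24, 24],
    "DTT": [16, 20, 21],
    "VS": [16, 18, 19],
}


def icc_consistency_average(matrix):
    """Two-way, consistency, average-measures ICC: (MS_rows - MS_error) / MS_rows."""
    n = len(matrix)       # number of rated targets (interventions)
    k = len(matrix[0])    # number of raters
    grand = mean(x for row in matrix for x in row)
    row_means = [mean(row) for row in matrix]
    col_means = [mean(row[j] for row in matrix) for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in matrix for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / ms_rows


icc = icc_consistency_average(list(ratings.values()))

# Overall complexity score per intervention: mean of the raters' totals.
complexity = {name: mean(scores) for name, scores in ratings.items()}
```

Because the consistency form removes rater main effects (column differences), a rater who scores every intervention uniformly higher than the others does not lower the coefficient.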

Principal EBP implementation leadership.

Teachers and classroom staff rated the extent to which their school principal exhibited focused EBP implementation leadership behaviors using the 12-item Implementation Leadership Scale (ILS) (Aarons, Ehrhart, & Farahnak, 2014). Items were modified in collaboration with the scale developers to ensure they addressed the school setting. Only surface-level modifications were made (e.g., changing the referent from “name of supervisor” to “principal”). The ILS assesses the extent to which a leader is proactive, knowledgeable, supportive, and perseverant with regard to EBP implementation. Responses are made on a 0 (“not at all”) to 4 (“very great extent”) Likert scale and averaged to produce a total score. Scores on the ILS exhibited excellent internal consistency as well as convergent and discriminant validity in prior research (Aarons, Ehrhart, & Farahnak, 2014; Lyon et al., 2018). Coefficient alpha in this sample was α = .97. Following theory and prior research, teacher and classroom staff ratings of principal leadership were aggregated (averaged) to reflect the principal’s role in school-level leadership.
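Coefficient alpha, reported here and for the other scales, can be computed from item-level responses as sketched below. The responses are invented (four items rather than the ILS's 12) and the function name is hypothetical; this illustrates the standard formula only.

```python
from statistics import variance

# Hypothetical responses on a 0-4 scale: rows are respondents, columns are items.
responses = [
    [4, 3, 4, 4],
    [2, 2, 1, 2],
    [3, 3, 3, 2],
    [0, 1, 0, 1],
    [1, 1, 2, 1],
]


def cronbach_alpha(rows):
    """alpha = k / (k - 1) * (1 - sum of item variances / variance of summed scores)."""
    k = len(rows[0])
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


alpha = cronbach_alpha(responses)
```

Alpha approaches 1 when items rise and fall together across respondents, which is what values such as .97 indicate for a scale.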

School EBP implementation climate.

Teachers and classroom staff rated their school’s EBP implementation climate using the Implementation Climate Scale (ICS) (Ehrhart, Aarons, & Farahnak, 2014). Items were modified in collaboration with the scale developers to ensure they addressed the school setting. As with the ILS, only surface-level modifications were made (e.g., changing the referent from “team/agency” to “school”). The ICS includes 18 items rated on a Likert scale from 0 (“not at all”) to 4 (“very great extent”). Items are averaged to produce a total score. Item content covers six domains: focus on EBPs, educational support for EBPs, recognition for EBPs, rewards for EBPs, selection for EBPs, and selection for openness. The scale is psychometrically validated (Ehrhart, Aarons, & Farahnak, 2014; Locke, Lawson, et al., 2019; Lyon et al., 2018). Coefficient alpha in this sample was α = .93. Consistent with theory and prior research, as well as our conceptualization of climate as a school-level construct, educator ratings of school climate were aggregated to the school level.

Control variables.

To isolate the association of principals’ focused EBP implementation leadership with educator fidelity, analyses controlled for principals’ general leadership as rated using the 20-item, psychometrically validated transformational leadership scale of the Multifactor Leadership Questionnaire (Avolio & Bass, 2002; Bass, 1999). Teachers and staff completed items describing the frequency of their principal’s behaviors, ranging from 0 (“not at all”) to 4 (“frequently”), in the domains of idealized influence, inspirational motivation, individualized consideration, and intellectual stimulation. Items were averaged to produce a total score. Coefficient alpha in this sample was α = .96. Consistent with our theoretical model, educator ratings of their principals’ leadership were aggregated to the school level.

To control for differences across schools in student populations and site characteristics, all models included covariates indexing the school’s region (i.e., northwestern U.S. versus northeastern U.S.) and the proportion of youth of color who attended the school, received free or reduced-price lunch, and participated in an individualized education program.

Data Analysis

Hypotheses were tested using linear regression analyses within the counterfactual framework (Muthén, Muthén, & Asparouhov, 2017; VanderWeele et al., 2016). Following the study theoretical model, schools comprised the unit of analysis (N = 65). For each EBP, a set of simultaneous linear regression models was fit, estimating (a) the association of principal EBP implementation leadership with school EBP implementation climate, and (b) the association of school EBP implementation climate with educator fidelity to one of the three EBPs (i.e., PRT, DTT, or VS) controlling for EBP implementation leadership. All models controlled for all potential confounds described above. There were no missing data on outcomes, antecedents, or covariates. Analyses were implemented in Mplus Version 8.0 (Muthén & Muthén, 2017) using robust maximum likelihood estimation (ESTIMATOR=MLR). Following model estimation, the tenability of model assumptions was checked by examining residual plots and Cook’s distance values (Cook, 1977), which indicated no problems with influential outliers, heteroskedasticity, or non-linearity.

To describe the strength of the relationship between principal EBP implementation leadership and school EBP implementation climate, the ΔR² statistic (i.e., change in R²) was calculated, which represents the proportion of variance in school EBP implementation climate uniquely explained by variation in principals’ EBP implementation leadership. ΔR² also was used to characterize the strength of the association between school EBP implementation climate and educator fidelity to each of the three EBPs (i.e., PRT, DTT, VS) after controlling for all other variables in the model.
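The ΔR² logic can be illustrated with two nested ordinary least squares models: one with a covariate only, and one that adds the focal predictor. The sketch below is a simplified stand-in for the study's models, which were estimated in Mplus with robust maximum likelihood and a fuller covariate set. All data values are invented, and `solve` and `r_squared` are hypothetical helper names.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination with pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def r_squared(predictor_rows, y):
    """R-squared of an OLS model with an intercept, fit via the normal equations."""
    x = [[1.0] + list(row) for row in predictor_rows]
    k = len(x[0])
    xtx = [[sum(r[i] * r[j] for r in x) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(x, y)) for i in range(k)]
    beta = solve(xtx, xty)
    fitted = [sum(bj * v for bj, v in zip(beta, r)) for r in x]
    mean_y = sum(y) / len(y)
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    sst = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - sse / sst


# Hypothetical school-level scores (0-4 scales); not the study's data.
leadership = [1.2, 2.0, 2.5, 3.1, 3.6, 2.8, 1.9, 3.3]
transformational = [1.5, 2.2, 2.0, 3.0, 3.4, 2.1, 2.4, 2.9]
climate = [1.1, 1.7, 2.0, 2.6, 2.9, 2.3, 1.5, 2.7]

r2_reduced = r_squared([(t,) for t in transformational], climate)
r2_full = r_squared(list(zip(transformational, leadership)), climate)
delta_r2 = r2_full - r2_reduced  # variance uniquely explained by leadership
```

Because the models are nested, `delta_r2` cannot be negative apart from floating-point error; it equals the squared semipartial correlation of the added predictor with the outcome.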

The indirect association of principal EBP implementation leadership with educator fidelity to PRT was estimated using formulas presented by VanderWeele et al. (2016) as implemented in Mplus, Version 8 (Muthén, Muthén, & Asparouhov, 2017). For the analysis, values of principal EBP implementation leadership were set at +/− one standard deviation from the sample mean and all covariates were centered around their grand means. To facilitate interpretation of these results, a standardized effect size analogous to Cohen’s d was calculated. This value represents the standardized mean difference in fidelity associated with high (1 SD above the mean) versus low (1 SD below the mean) principal EBP implementation leadership through its association with school EBP implementation climate (Williams, 2016). Values of d are commonly interpreted as small (0.2), medium (0.5), or large (0.8) (Cohen, 1988).

Results

Intervention Complexity

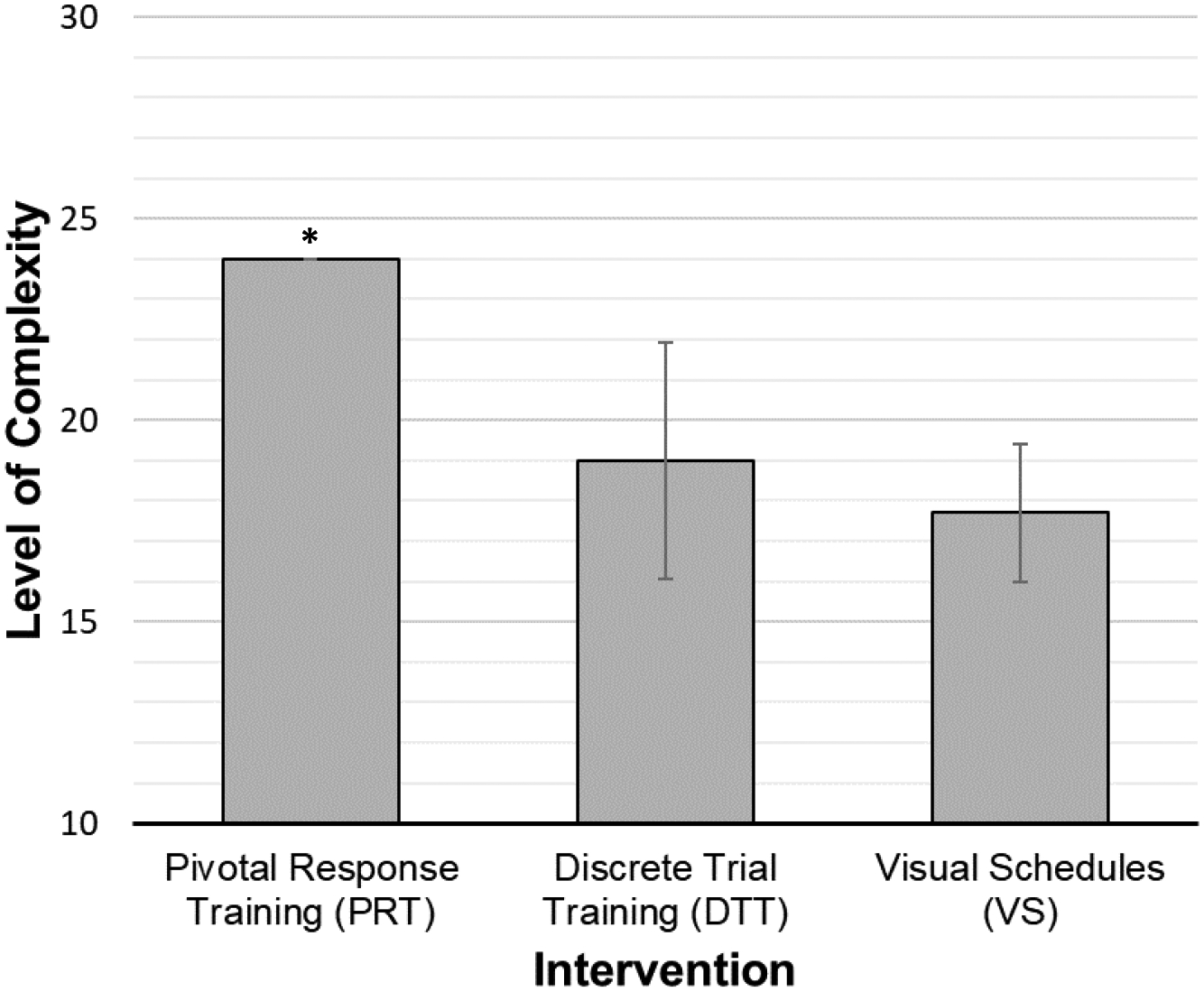

The ICC for experts’ ratings of intervention complexity was in the excellent range (ICC = 0.94), indicating intervention complexity was rated similarly across experts. As shown in Figure 2, mean ratings of complexity were highest for PRT (M = 24.0, SD = 0.0), followed by DTT (M = 19.0, SD = 2.6), and VS (M = 17.7, SD = 1.5). Results of a one-way ANOVA indicated there were significant mean differences in complexity across interventions, F(2, 6) = 10.75, p = 0.010. Planned contrasts confirmed PRT was rated significantly more complex than DTT and VS, t(3.2) = 6.43, p = 0.006, but failed to support a significant difference in complexity between DTT and VS, t(3.2) = 0.76, p = 0.501.

Figure 2.

Experts’ mean complexity ratings by intervention.

Note: N = 3 experts independently rated each intervention (possible scores = 10 – 30). Experts’ ratings were averaged to produce the total scores shown here. Planned contrasts indicated PRT was rated significantly more complex than DTT and VS, t(3.2) = 6.43, p = 0.006.

* For PRT, the standard error of the mean was zero resulting in upper and lower 95% confidence bounds equal to the mean.
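As a rough consistency check, the omnibus F test can be approximately recovered from the reported group means and standard deviations, assuming three ratings per intervention. The sketch below is not the authors' analysis. It uses the rounded summary statistics, so it yields an F of about 11 rather than exactly 10.75, and the p value relies on a closed form that holds only when the numerator degrees of freedom equal 2. Function names are hypothetical.

```python
def anova_from_summary(means, sds, n_per_group):
    """One-way ANOVA F statistic from group means and SDs (equal group sizes)."""
    k = len(means)
    grand = sum(means) / k
    ss_between = n_per_group * sum((m - grand) ** 2 for m in means)
    ss_within = (n_per_group - 1) * sum(sd ** 2 for sd in sds)
    df_between = k - 1
    df_within = k * (n_per_group - 1)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def upper_tail_p_two_numerator_df(f_stat, df_within):
    """Upper-tail probability of F(2, df_within); valid only for numerator df = 2."""
    return (1 + 2 * f_stat / df_within) ** (-df_within / 2)


# Rounded values reported in the text: PRT, DTT, VS.
f_stat, df1, df2 = anova_from_summary([24.0, 19.0, 17.7], [0.0, 2.6, 1.5], 3)
p_value = upper_tail_p_two_numerator_df(f_stat, df2)
```

The degrees of freedom come out as (2, 6) and the p value rounds to 0.010, in line with the reported test; the small gap in F reflects rounding of the inputs.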

Variation in Educator Fidelity

Table 1 presents descriptive statistics for all study variables and bivariate correlations between the three fidelity outcomes and each of the hypothesized antecedent variables. Means of the three fidelity outcomes were near the midpoint of the scales. The three outcomes were moderately correlated with one another (rs = 0.43–0.48, ps < .001). Consistent with hypotheses, there were statistically significant and positive bivariate correlations between principal EBP implementation leadership and PRT fidelity (r = 0.27, p = 0.030) and between school EBP implementation climate and PRT fidelity (r = 0.32, p = 0.009); however, there was no evidence of a statistically significant relationship between EBP implementation leadership or climate with DTT or VS fidelity (all ps > 0.05).
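The significance of the bivariate correlations above can be checked approximately from r and N = 65 alone. The sketch below uses the Fisher z transformation with a normal reference distribution, which is an approximation and not necessarily the test the authors used; the function name is hypothetical.

```python
from math import atanh, sqrt
from statistics import NormalDist


def approx_two_tailed_p(r, n):
    """Approximate two-tailed p value for a Pearson r via the Fisher z transform."""
    z = atanh(r) * sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))


n_schools = 65
p_climate_prt = approx_two_tailed_p(0.32, n_schools)     # climate with PRT fidelity
p_leadership_prt = approx_two_tailed_p(0.27, n_schools)  # leadership with PRT fidelity
p_climate_dtt = approx_two_tailed_p(0.16, n_schools)     # climate with DTT fidelity
```

Under this approximation the two PRT correlations fall below the .05 threshold and the climate-DTT correlation does not, matching the pattern described in the text.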

Association of Principal EBP Implementation Leadership with School EBP Implementation Climate

As shown in Table 2, results of the regression analyses indicated increased frequency of principal EBP implementation leadership predicted higher levels of school EBP implementation climate (b = 0.58, SE = 0.11, p < 0.001, ΔR² = 0.23), accounting for 23% of the variance. Controlling for all other variables in the model, a one standard deviation increase in principal EBP implementation leadership was associated with a 0.68 standard deviation increase in the level of school EBP implementation climate. These results supported H1.

Table 2.

Linear regression analyses linking principal EBP implementation leadership, school EBP implementation climate, and educator fidelity to three EBPs for autism

Outcome columns: Climate = School EBP Implementation Climate; PRT, DTT, and VS = Educator Fidelity to PRT, DTT, and VS, respectively.

| Antecedents | Climate b | Climate SE | Climate p | PRT b | PRT SE | PRT p | DTT b | DTT SE | DTT p | VS b | VS SE | VS p |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| School EBP Implementation Climate | | | | 0.57 | 0.24 | 0.018 | 0.28 | 0.33 | 0.398 | −0.04 | 0.24 | 0.885 |
| Principal EBP Implementation Leadership | 0.58 | 0.11 | < 0.001 | −0.19 | 0.24 | 0.434 | −0.49 | 0.36 | 0.175 | −0.47 | 0.23 | 0.037 |
| Model R² | 0.60 | | | 0.29 | | | 0.25 | | | 0.26 | | |

Note: N = 65 schools. EBP = evidence-based practice; DTT = discrete trial training; PRT = pivotal response training; VS = visual schedules. Coefficients were estimated using multiple linear regression analysis incorporating a robust maximum likelihood estimator. All models control for region of U.S. (northeast vs. northwest), school size (# of students), proportion of students of color (African American, American Indian or Alaska Native, Asian, Hawaiian or Other Pacific Islander, Other Race), proportion of students receiving free or reduced lunch, and proportion of students with an individualized education plan. Comparing principals with high (+1 SD) versus low (−1 SD) EBP implementation leadership, the indirect association of EBP implementation leadership with PRT fidelity through school EBP implementation climate was d = 0.49 (95% CI = 0.04 to 0.93).

Association of School EBP Implementation Climate with Educator Fidelity

Table 2 presents results of the linear regression analyses testing the relationships between school EBP implementation climate and educator fidelity to each of the three EBPs after controlling for principal EBP implementation leadership and all other covariates. As hypothesized (H2), increased school EBP implementation climate was related to increased fidelity to PRT (b = 0.57, SE = 0.24, p = 0.018, ΔR² = 0.05). Controlling for all other variables in the model, each one standard deviation increase in school EBP implementation climate was associated with a 0.36 standard deviation increase in educator fidelity to PRT. Also consistent with H2, there was no evidence of an association between school EBP implementation climate and fidelity to either DTT (b = 0.28, SE = 0.33, p = 0.398, ΔR² = 0.01) or VS (b = −0.04, SE = 0.24, p = 0.885, ΔR² = 0.00).

Indirect Association of Principal EBP Implementation Leadership with Educator Fidelity to PRT through School EBP Implementation Climate

Results of the regression analyses confirmed there was a significant indirect association of principal EBP implementation leadership with educator fidelity to PRT through school EBP implementation climate (indirect effect = 0.48, p = 0.038, 95% CI = 0.03 to 0.93). Comparing principals whose EBP implementation leadership was one standard deviation above the mean to those whose EBP implementation leadership was one standard deviation below the mean, the indirect association of leadership with PRT fidelity through school climate was d = 0.49 (95% CI = 0.04 to 0.93). This supports H3. Also consistent with H3, there was no evidence of indirect associations between EBP implementation leadership and educator fidelity to DTT or VS.

Discussion

Closing the research-practice gap is an essential step toward optimizing the effectiveness of behavioral health systems and improving the well-being of populations that experience behavioral disorders, including autistic youth (Stirman et al., 2016; Wolk & Beidas, 2018). This study highlights a promising point of intervention for improving the implementation of EBPs in community settings: implementation leadership and climate. Building on research from organizational psychology and management (Hong et al., 2013; Kuenzi & Schminke, 2009), this study confirms that a theory of implementation leadership and climate (Aarons, Farahnak, & Ehrhart, 2014) explained variation in observed fidelity to a complex EBP (PRT) in public schools. These results answer the call for research testing leadership and climate theory with specific EBPs and clinical populations using observed fidelity. Further, these findings extend these theories to a new population of settings (i.e., schools), leaders (i.e., principals), and providers (i.e., educators). Equally important, this study identified a potential boundary condition that may modify the association between EBP implementation leadership, climate, and fidelity, namely, the EBP’s level of complexity. Although this study was unable to directly test the extent to which intervention complexity moderated the relationship between EBP implementation climate and fidelity, these results highlight the value of fully powered studies that test this hypothesis directly. Prior implementation research has not given sufficient attention to intervention complexity, an essential consideration in the study of psychosocial EBPs, which may vary greatly along a continuum from simple to complex (Amodeo et al., 2011; Stirman et al., 2016). This is especially important for autism EBPs given the heterogeneity of the disorder and the number of evidence-based strategies to support autistic individuals. Understanding how boundary conditions such as intervention complexity interact with the effects of implementation antecedents such as leadership and climate is essential to designing and tailoring effective implementation strategies and applying them in the situations where they will be most beneficial.

Research has shown that EBPs for autistic youth have not been successfully adopted, implemented, and sustained in public schools (Brookman-Frazee et al., 2010). This is in part due to the complex nature of some of these EBPs, which require highly trained clinicians or providers, extensive data collection instruments, and significant time (Kucharczyk et al., 2015; Locke et al., 2015). School-based studies have shown that fidelity to PRT is poorer than fidelity to DTT and VS in public schools, perhaps because educators report PRT as a “harder to use” intervention in comparison to other EBPs (Locke, Lawson, et al., 2019; Mandell et al., 2013). Therefore, the results from this study are especially promising in that malleable organizational-level factors (EBP implementation leadership and climate) may support the use of complex EBPs such as PRT for autistic youth in schools. These results underscore the importance of effectiveness-implementation trials, currently underway, which test the differential effectiveness of organization- vs. provider-focused implementation strategies in schools and other healthcare settings (Brookman-Frazee & Stahmer, 2018; Lyon et al., 2019). To disentangle previously documented individual and organizational effects on EBP implementation (Locke, Lawson, et al., 2019), future trials should focus on comparing interventions of varying complexity. Doing so could prospectively evaluate the boundary conditions of complexity related to the delivery of tailored implementation supports by level (e.g., supports for complex practices include implementation leadership, whereas supports for simpler practices focus on individual-implementer factors such as beliefs and attitudes; Hugh et al., 2021). In practice, these findings also suggest educators may adopt and implement less complex EBPs without considerable school-level support. Conversely, the adoption and implementation of complex EBPs that require longer-term training (Odom et al., 2010) and higher interventionist skill may require a more conducive organizational implementation context.

Importantly, school leaders’ behaviors in this study were strongly associated with observed educator fidelity to PRT above and beyond resources or training opportunities for implementation. Specifically, strong EBP implementation leadership was associated with a positive implementation climate, and both constructs related to higher PRT fidelity. Evidence-based practice implementation leadership and climate have been documented as malleable determinants in the literature (Williams et al., 2020). For more complex EBPs, research-practice partnerships supporting scale-up may need to address implementation leadership and climate. In schools, EBP implementation for autistic youth may be improved by deploying the distributed leadership model (Locke, Lawson, et al., 2019) among leaders and educators, as special educators and principals often have discrepant but complementary knowledge, experience, priorities, and perspectives (Ahlers et al., 2021). Leadership structures like this can align both the characteristics of the interventions and the organizational implementation context to improve implementation in community settings.

Results of this study highlight the potential value of integrating information on intervention complexity into studies of implementation in behavioral health. While it must be emphasized that the absence of a statistically significant association between EBP implementation climate and fidelity to DTT and VS in this study cannot be construed as evidence that these variables are not related (or that intervention complexity modifies the association between these variables), these results are consistent with the idea that organizational leadership and climate may be more important for supporting the implementation of more complex EBPs. Further research is needed to directly test this hypothesis. In addition, studies are needed to better understand which dimensions of complexity are most salient for implementation success and under what conditions. For example, in this study the three interventions differed most on (a) skill level required of the interventionist (PRT highest, VS lowest), (b) degree of intended tailoring (PRT and VS higher, DTT lower), (c) degree to which participant or provider characteristics modified the effects of the intervention (PRT high, DTT and VS low), and (d) the number of behaviors targeted (PRT highest, VS lowest) (Lewin et al., 2017). Future trials could compare interventions with varying levels of these complexity dimensions to better understand how they relate to implementation success. It is also worth noting that some characteristics of these interventions, such as the one-on-one versus classroom-wide delivery format, did not influence complexity ratings because of how complexity was conceptualized by the iCAT_SR (i.e., even though the intervention formats differed, they all targeted only a single level of the organization and a single constituency within that level; Lewin et al., 2017). Given the ongoing development of the concept of intervention complexity (Guise et al., 2017), we encourage researchers to take an inclusive and exploratory approach in developing future implementation research on this topic.

Limitations and Future Directions

This study has limitations that lay the groundwork for future research. First, because this is not a randomized experiment, no causal interpretations can be given to the results. While the measurement of school EBP implementation leadership and climate preceded the assessment of educator fidelity, thus providing a potential basis for a cause-effect relationship, principal EBP implementation leadership and school EBP implementation climate were measured concurrently, thereby eliminating the ability to state which preceded the other, although theory (Aarons, Farahnak, & Ehrhart, 2014) and prior research (Williams et al., 2020) suggest leadership precedes the formation of climate. Future research should explore these relationships within and across time with attention to the timing of data collection to observe possible causal effects. Second, although we incorporated numerous control variables in our analyses, including an indicator of schools’ larger policy environments, it is not possible to address every potential confound. Longitudinal studies have established a link between change in EBP implementation leadership and climate (Williams et al., 2020); however, a definitive answer regarding the linkage between these factors and fidelity requires experimentation. Replication of these findings also is needed. Finally, although self-report measures have weaknesses for some variables, self-report is optimally suited to assess climate perceptions which consist of shared staff perceptions (Zohar & Luria, 2004).

Conclusion

This study supports the propositions of a theory of EBP implementation leadership and climate for complex psychosocial interventions in a new setting, population, and intervention while also highlighting potential boundary conditions related to intervention complexity. Results have important implications for the design and application of implementation strategies to support providers’ high-fidelity delivery of EBPs in community settings.

Highlights.

We tested a theory of improving use of autism evidence-based practices in schools

Higher implementation leadership by principals predicted improved school climate

Improved school implementation climate predicted enhanced use of a complex practice

There was no evidence school climate predicted use of less complex practices

Acknowledgements:

We are grateful for the collaboration and assistance of our school partners whose support made this work possible. We also wish to thank Dr. Steven Marcus for his consultation on this project.

Funding:

This study was supported by the following grants from the US National Institute of Mental Health: K01 MH100199 (PI: Locke) and R01MH106175 (PI: Mandell).

Footnotes

Declarations of interest: None.

To respect the diversity and preferences of the autistic community, we are using identity-first language (see Bottema-Beutel, K., Kapp, S., Lester, J., Sasson, N., & Hand, B. (2020). Avoiding ableist language: Suggestions for autism researchers. Autism in Adulthood, 3. http://doi.org/10.1089/aut.2020.0014).

References

- Aarons GA, Ehrhart MG, & Farahnak LR (2014). The implementation leadership scale (ILS): Development of a brief measure of unit level implementation leadership. Implementation Science, 9(1), 45. 10.1186/1748-5908-9-45

- Aarons GA, Ehrhart MG, Torres EM, Finn NK, & Roesch SC (2016). Validation of the implementation leadership scale (ILS) in substance use disorder treatment organizations. Journal of Substance Abuse Treatment, 68, 31–35. 10.1016/j.jsat.2016.05.004

- Aarons GA, Farahnak LR, & Ehrhart MG (2014). Leadership and strategic organizational climate to support evidence-based practice implementation. In Dissemination and implementation of evidence-based practices in child and adolescent mental health (pp. 82–97). Oxford University Press.

- Ahlers K, Hugh ML, Frederick L, & Locke J (2021). Two sides of the same coin: A qualitative study of multiple stakeholder perspectives on factors associated with implementation of evidence-based practices for children with autism in elementary schools. International Review of Research in Developmental Disabilities. Academic Press. 10.1016/bs.irrdd.2021.07.003

- Amodeo M, Lundgren L, Cohen A, Rose D, Chassler D, Beltrame C, & D’Ippolito M (2011). Barriers to implementing evidence-based practices in addiction treatment programs: Comparing staff reports on motivational interviewing, adolescent community reinforcement approach, assertive community treatment, and cognitive-behavioral therapy. Evaluation and Program Planning, 34(4), 382–389. 10.1016/j.evalprogplan.2011.02.005

- Arick JR, Krug DA, Loos L, & Falco R (2004). The star program: Strategies for teaching based on autism research, (Level III). Pro-Ed.

- Avolio BJ, & Bass BM (2002). Manual for the multifactor leadership questionnaire (Form 5X). Redwood City, CA: Mindgarden.

- Bass BM (1999). Two decades of research and development in transformational leadership. European Journal of Work and Organizational Psychology, 8(1), 9–32. 10.1080/135943299398410

- Beidas R, Skriner L, Adams D, Wolk CB, Stewart RE, Becker-Haimes E, … & Marcus SC (2017). The relationship between consumer, clinician, and organizational characteristics and use of evidence-based and non-evidence-based therapy strategies in a public mental health system. Behaviour Research and Therapy, 99, 1–10. 10.1016/j.brat.2017.08.011

- Beidas RS, Williams NJ, Becker-Haimes EM, Aarons GA, Barg FK, Evans AC, Jackson K, Jones D, Hadley T, Hoagwood K, Marcus SC, Neimark G, Rubin RM, Schoenwald SK, Adams DR, Walsh LM, Zentgraf K, & Mandell DS (2019). A repeated cross-sectional study of clinicians’ use of psychotherapy techniques during 5 years of a system-wide effort to implement evidence-based practices in Philadelphia. Implementation Science, 14(1). 10.1186/s13012-019-0912-4

- Birken SA, Lee S-YD, & Weiner BJ (2012). Uncovering middle managers’ role in healthcare innovation implementation. Implementation Science, 7(1), 28. 10.1186/1748-5908-7-28

- Bottema-Beutel K, Kapp S, Lester J, Sasson N, & Hand B (2020). Avoiding ableist language: Suggestions for autism researchers. Autism in Adulthood, 3(1). 10.1089/aut.2020.0014

- Brock ME, Dynia JM, Dueker SA, & Barczak MA (2020). Teacher-reported priorities and practices for students with autism: Characterizing the research-to-practice gap. Focus on Autism and Other Developmental Disabilities, 35(2), 67–78. 10.1177/1088357619881217

- Brookman-Frazee L, & Stahmer AC (2018). Effectiveness of a multi-level implementation strategy for ASD interventions: Study protocol for two linked cluster randomized trials. Implementation Science, 13(1). 10.1186/s13012-018-0757-2

- Brookman-Frazee LI, Taylor R, & Garland AF (2010). Characterizing community-based mental health services for children with autism spectrum disorders and disruptive behavior problems. Journal of Autism and Developmental Disorders, 40(10), 1188–1201. 10.1007/s10803-010-0976-0

- Cicchetti DV (1994). Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments in psychology. Psychological Assessment, 6(4), 284–290. 10.1037/1040-3590.6.4.284

- Cohen J (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Erlbaum.

- Cook RD (1977). Detection of influential observation in linear regression. Technometrics, 19(1), 15–18. 10.2307/1268249

- Dettmer S, Simpson RL, Myles BS, & Ganz JB (2000). The use of visual supports to facilitate transitions of students with autism. Focus on Autism and Other Developmental Disabilities, 15(3), 163–169. 10.1177/108835760001500307

- Ehrhart MG, Aarons GA, & Farahnak LR (2014). Assessing the organizational context for EBP implementation: The development and validity testing of the Implementation Climate Scale (ICS). Implementation Science, 9(1), 157. 10.1186/s13012-014-0157-1

- Ehrhart MG, Schneider B, & Macey WH (2014). Organizational climate and culture: An introduction to theory, research, and practice. Routledge/Taylor & Francis Group.

- Ehrhart MG, Torres EM, Hwang J, Sklar M, & Aarons GA (2019). Validation of the Implementation Climate Scale (ICS) in substance use disorder treatment organizations. Substance Abuse Treatment, Prevention, and Policy, 14(1), 1–10. 10.1186/s13011-019-0222-5

- Ehrhart MG, Torres EM, Wright LA, Martinez SY, & Aarons GA (2016). Validating the Implementation Climate Scale (ICS) in child welfare organizations. Child Abuse & Neglect, 53, 17–26. 10.1016/j.chiabu.2015.10.017

- Finn NK, Torres EM, Ehrhart MG, Roesch SC, & Aarons GA (2016). Cross-validation of the Implementation Leadership Scale (ILS) in child welfare service organizations. Child Maltreatment, 21(3), 250–255. 10.1177/1077559516638768

- Fixsen DL, Naoom SF, Blase KA, Friedman RM, & Wallace F (2005). Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, National Implementation Research Network.

- Guise JM, Chang C, Butler M, Viswanathan M, & Tugwell P (2017). AHRQ series on complex intervention systematic reviews-paper 1: An introduction to a series of articles that provide guidance and tools for reviews of complex interventions. Journal of Clinical Epidemiology, 90, 6–10. 10.1016/j.jclinepi.2017.06.011

- Hallgren KA (2012). Computing inter-rater reliability for observational data: An overview and tutorial. Tutorials in Quantitative Methods for Psychology, 8(1), 23–34. 10.20982/tqmp.08.1.p023

- Hong Y, Liao H, Hu J, & Jiang K (2013). Missing link in the service profit chain: A meta-analytic review of the antecedents, consequences, and moderators of service climate. Journal of Applied Psychology, 98(2), 237–267. 10.1037/a0031666

- Hugh ML, Johnson L, & Cook C (2021). Preschool teachers’ practice selections for children with autism: An application of the theory of planned behavior. Autism. 10.1177/13623613211024795

- Kozlowski SW, & Doherty ML (1989). Integration of climate and leadership: Examination of a neglected issue. Journal of Applied Psychology, 74(4), 546–553. 10.1037/0021-9010.74.4.546

- Kucharczyk S, Reutebuch CK, Carter EW, Hedges S, El Zein F, Fan H, & Gustafson JR (2015). Addressing the needs of adolescents with autism spectrum disorder: Considerations and complexities for high school interventions. Exceptional Children, 81(3), 329–349. 10.1177/0014402914563703

- Kuenzi M, & Schminke M (2009). Assembling fragments into a lens: A review, critique, and proposed research agenda for the organizational work climate literature. Journal of Management, 35(3), 634–717. 10.1177/0149206308330559

- Lewin S, Hendry M, Chandler J, Oxman AD, Michie S, Shepperd S, Reeves BC, Tugwell P, Hannes K, Rehfuess EA, Welch V, McKenzie JE, Burford B, Petkovic J, Anderson LM, Harris J, & Noyes J (2017). Assessing the complexity of interventions within systematic reviews: Development, content and use of a new tool (iCAT_SR). BMC Medical Research Methodology, 17(1). 10.1186/s12874-017-0349-x

- Locke J, Beidas RS, Marcus S, Stahmer A, Aarons GA, Lyon AR, Cannuscio C, Barg F, Dorsey S, & Mandell DS (2016). A mixed methods study of individual and organizational factors that affect implementation of interventions for children with autism in public schools. Implementation Science, 11(135). 10.1186/s13012-016-0501-8

- Locke J, Lawson GM, Beidas RS, Aarons GA, Xie M, Lyon AR, Stahmer A, Seidman M, Frederick L, Oh C, Spaulding C, Dorsey S, & Mandell DS (2019). Individual and organizational factors that affect implementation of evidence-based practices for children with autism in public schools: A cross-sectional observational study. Implementation Science, 14(29). 10.1186/s13012-019-0877-3

- Locke J, Lee K, Cook CR, Frederick L, Vázquez-Colón C, Ehrhart MG, Aarons GA, Davis C, & Lyon AR (2019). Understanding the organizational implementation context of schools: A qualitative study of school district administrators, principals, and teachers. School Mental Health, 11(3), 379–399. 10.1007/s12310-018-9292-1

- Locke J, Olsen A, Wideman R, Downey MM, Kretzmann M, Kasari C, & Mandell DS (2015). A tangled web: The challenges of implementing an evidence-based social engagement intervention for children with autism in urban public school settings. Behavior Therapy, 46(1), 54–67. 10.1016/j.beth.2014.05.001

- Lyon AR, Cook CR, Brown EC, Locke J, Davis C, Ehrhart M, & Aarons GA (2018). Assessing organizational implementation context in the education sector: Confirmatory factor analysis of measures of implementation leadership, climate, and citizenship. Implementation Science, 13(5). 10.1186/s13012-017-0705-6

- Lyon AR, Cook CR, Duong MT, Nicodimos S, Pullmann MD, Brewer SK, Gaias LM, & Cox S (2019). The influence of a blended, theoretically-informed pre-implementation strategy on school-based clinician implementation of an evidence-based trauma intervention. Implementation Science, 14(54). 10.1186/s13012-019-0905-3

- Mandell DS, Stahmer AC, Shin S, Xie M, Reisinger E, & Marcus SC (2013). The role of treatment fidelity on outcomes during a randomized field trial of an autism intervention. Autism, 17(3), 281–295. 10.1177/1362361312473666

- Marques L, Valentine SE, Kaysen D, Mackintosh M-A, Dixon De Silva LE, Ahles EM, Youn SJ, Shtasel DL, Simon NM, & Wiltsey-Stirman S (2019). Provider fidelity and modifications to cognitive processing therapy in a diverse community health clinic: Associations with clinical change. Journal of Consulting and Clinical Psychology, 87(4), 357–369. 10.1037/1040-3590.6.4.284

- McNeill J (2019). Social validity and teachers’ use of evidence-based practices for autism. Journal of Autism and Developmental Disorders, 49, 4585–4594. 10.1007/s10803-019-04190-y

- Meza RD, Triplett NS, Woodard GS, Martin P, Khairuzzaman AN, Jamora G, & Dorsey S (2021). The relationship between first-level leadership and inner-context and implementation outcomes in behavioral health: A scoping review. Implementation Science, 16(69). 10.1186/s13012-021-01104-4

- Muthén BO, Muthén LK, & Asparouhov T (2017). Regression and mediation analysis using Mplus (3rd ed.). Muthén & Muthén.

- Muthén LK, & Muthén BO (2017). Mplus user’s guide: Statistical analysis with latent variables (8th ed.). Muthén & Muthén.

- Odom SL, Boyd BA, Hall LJ, & Hume K (2010). Evaluation of comprehensive treatment models for individuals with autism spectrum disorders. Journal of Autism and Developmental Disorders, 40(4), 425–436. 10.1007/s10803-009-0825-1

- Office of Special Education and Rehabilitative Services. (2019). 41st Annual Report to Congress on the Implementation of the Individuals with Disabilities Education Act 2019. U.S. Department of Education. https://www2.ed.gov/about/reports/annual/osep/2019/parts-b-c/41st-arc-for-idea.pdf

- Pellecchia M, Beidas RS, Lawson G, Williams NJ, Seidman M, Kimberly JR, Cannuscio CC, & Mandell DS (2020). Does implementing a new intervention disrupt use of existing evidence-based autism interventions? Autism, 24(7), 1713–1725. 10.1177/1362361320919248

- Pellecchia M, Beidas RS, Marcus SC, Fishman J, Kimberly JR, Cannuscio CC, Reisinger EM, Rump K, & Mandell DS (2016). Study protocol: Implementation of a computer-assisted intervention for autism in schools: A hybrid type II cluster randomized effectiveness-implementation trial. Implementation Science, 11(1), 154. 10.1186/s13012-016-0513-4

- Pellecchia M, Connell JE, Beidas RS, Xie M, Marcus SC, & Mandell DS (2015). Dismantling the active ingredients of an intervention for children with autism. Journal of Autism and Developmental Disorders, 45(9), 2917–2927. 10.1007/s10803-015-2455-0

- Powell BJ, Mandell DS, Hadley TR, Rubin RM, Evans AC, Hurford MO, & Beidas RS (2017). Are general and strategic measures of organizational context and leadership associated with knowledge and attitudes toward evidence-based practices in public behavioral health settings? A cross-sectional observational study. Implementation Science, 12(1). 10.1186/s13012-017-0593-9

- Rogers EM, Medina UE, Rivera MA, & Wiley CJ (2005). Complex adaptive systems and the diffusion of innovations. The Innovation Journal: The Public Sector Innovation Journal, 10(3), 1–26.

- Schreibman L (2000). Intensive behavioral/psychoeducational treatments for autism: Research needs and future directions. Journal of Autism and Developmental Disorders, 30(5), 373–378. 10.1023/A:1005535120023

- Smith T (2001). Discrete trial training in the treatment of autism. Focus on Autism and Other Developmental Disabilities, 16(2), 86–92. 10.1177/108835760101600204

- Stahmer AC (1999). Using pivotal response training to facilitate appropriate play in children with autistic spectrum disorders. Child Language Teaching and Therapy, 15(1), 29–40. 10.1177/026565909901500104

- Steinbrenner JR, Hume K, Odom SL, Morin KL, Nowell SW, Tomaszewski B, Szendrey S, McIntyre NS, Yücesoy-Özkan S, & Savage MN (2020). Evidence-based practices for children, youth, and young adults with autism: Third generation review. Journal of Autism and Developmental Disorders, 51, 4013–4032. 10.1007/s10803-020-04844-2

- Stirman SW, Gutner CA, Langdon K, & Graham JR (2016). Bridging the gap between research and practice in mental health service settings: An overview of developments in implementation theory and research. Behavior Therapy, 47(6), 920–936. 10.1016/j.beth.2015.12.001

- VanderWeele TJ (2016). Explanation in causal inference: Developments in mediation and interaction. International Journal of Epidemiology, 45(6), 1904–1908. 10.1093/ije/dyw277

- Williams NJ (2016). Multilevel mechanisms of implementation strategies in mental health: Integrating theory, research, and practice. Administration and Policy in Mental Health and Mental Health Services Research, 43(5), 783–798. 10.1007/s10488-015-0693-2

- Williams NJ, & Beidas RS (2019). Annual research review: The state of implementation science in child psychology and psychiatry: A review and suggestions to advance the field. Journal of Child Psychology and Psychiatry, 60(4), 430–450. 10.1111/jcpp.12960

- Williams NJ, Wolk CB, Becker-Haimes EM, & Beidas RS (2020). Testing a theory of strategic implementation leadership, implementation climate, and clinicians’ use of evidence-based practice: A 5-year panel analysis. Implementation Science, 15(1). 10.1186/s13012-020-0970-7

- Wilson KP, & Landa RJ (2019). Barriers to educator implementation of a classroom-based intervention for preschoolers with autism spectrum disorder. Frontiers in Education, 4. 10.3389/feduc.2019.00027

- Wolk CB, & Beidas RS (2018). The intersection of implementation science and behavioral health: An introduction to the special issue. Behavior Therapy, 49(4), 477–480. 10.1016/j.beth.2018.03.004

- Zohar D, & Luria G (2004). Climate as a social-cognitive construction of supervisory safety practices: Scripts as proxy of behavior patterns. Journal of Applied Psychology, 89(2), 322–333. 10.1037/0021-9010.89.2.322