The recent release of the 2021 impact factors (IFs) showed a considerable increase in the scores of journals in the infectious diseases category and upended the ranking of these journals. Although the IF was originally developed as a tool to help librarians index scientific journals, it has become a widely used tool for ranking the scientific impact and quality of journals and, by extension, researchers [1]. In many countries, credits and grants are allocated to scientists publishing in journals with the highest IFs. Given the importance of the IF for researchers, it seems legitimate to question the causes of such an increase.

The journal impact factor (JIF) is calculated as the number of citations received each year by documents published in the previous 2 years, divided by the number of documents published in the previous 2 years. One of its curious features is that documents considered to have no ‘substantive’ research content (such as editorials or viewpoints), called ‘non-citable’ documents, are not included in the denominator but the citations of these same documents are included in the numerator [1].
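The definition above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used by the Web of Science: the `Document` class, its field names and the toy citation counts are hypothetical and chosen only to show the asymmetry between numerator and denominator.

```python
from dataclasses import dataclass


@dataclass
class Document:
    year: int        # publication year
    citable: bool    # True for research articles and reviews (hypothetical flag)
    citations: int   # citations received during the JIF year


def journal_impact_factor(documents, jif_year):
    """Two-year JIF: citations received in `jif_year` by documents from the
    two previous years, divided by the number of citable documents."""
    window = [d for d in documents if jif_year - 2 <= d.year <= jif_year - 1]
    # Numerator: citations to ALL documents, citable or not.
    numerator = sum(d.citations for d in window)
    # Denominator: only 'citable' documents are counted.
    denominator = sum(1 for d in window if d.citable)
    if denominator == 0:
        raise ValueError("no citable documents in the 2-year window")
    return numerator / denominator


# Toy journal (invented numbers).
toy_journal = [
    Document(year=2019, citable=True, citations=4),
    Document(year=2020, citable=True, citations=6),
    Document(year=2020, citable=False, citations=10),  # e.g. an editorial
    Document(year=2018, citable=True, citations=50),   # outside the window
]

jif_all = journal_impact_factor(toy_journal, 2021)
jif_citable_only = journal_impact_factor(
    [d for d in toy_journal if d.citable], 2021
)
print(jif_all)           # (4 + 6 + 10) / 2 = 10.0
print(jif_citable_only)  # (4 + 6) / 2 = 5.0
```

In this toy case, a single well-cited editorial doubles the JIF because its citations enter the numerator while the editorial itself is absent from the denominator.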

To investigate the determinants of this tremendous IF increase, we extracted citation reports from the Web of Science database for the 99 indexed infectious disease journals (NN category) as of 1 July 2022 (Supplementary Material). We selected the 47 journals that have published at least 100 ‘citable’ research articles or reviews per year since 2014, and we computed their JIF from 2016 to 2021 (Figs. S1, S2). Between 2014 and 2020, 131,128 documents were published, of which 93,184 were ‘citable’ (71.1%), with an increase of 22% in 2020 compared with 2019 (21,590 vs. 17,699).

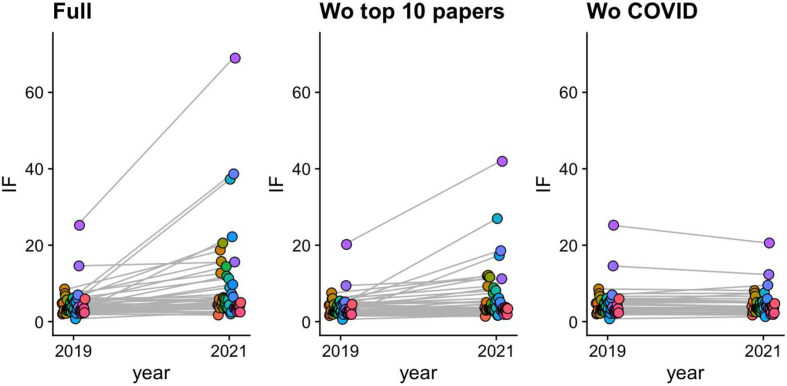

Although the mean JIF was stagnant from 2016 to 2019, it rose dramatically over the last 2 years of the COVID-19 pandemic, from 4.52 ± 3.83 in 2019 to 9.49 ± 12.00 in 2021. Based on their titles, about a sixth (3505 [16%]) of all documents published in 2020 referred to COVID-19 (i.e. included the terms ‘COVID’ or ‘SARS-CoV-2’). Journals with the highest relative increase in JIF were those that published a higher proportion of documents related to the pandemic (r² = 0.68, p < 0.001; Fig. S3). When articles referring to COVID-19 were removed from the IF computation (Fig. 1), the average JIF for 2021 remained similar to that of 2019 at 4.55 ± 3.18. Hence, the COVID-19 pandemic was the main cause of the increase in IF among infectious disease journals.
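The title-based filter described above can be sketched as follows. The record layout (dictionaries with `title`, `citable` and `citations` keys), the case-insensitive matching and the choice to drop COVID-19 documents from both numerator and denominator are assumptions made for illustration; the titles and counts are invented.

```python
import re

# Terms given in the text; case-insensitive matching is an assumption.
COVID_PATTERN = re.compile(r"covid|sars-cov-2", re.IGNORECASE)


def is_covid_related(title):
    """True if the title contains 'COVID' or 'SARS-CoV-2'."""
    return COVID_PATTERN.search(title) is not None


def jif(documents):
    """JIF over documents already restricted to the 2-year window."""
    n_citable = sum(1 for d in documents if d["citable"])
    if n_citable == 0:
        raise ValueError("no citable documents")
    return sum(d["citations"] for d in documents) / n_citable


def jif_without_covid(documents):
    """Recompute the JIF after dropping COVID-19-related documents entirely."""
    return jif([d for d in documents if not is_covid_related(d["title"])])


# Toy records (invented).
records = [
    {"title": "Clinical features of COVID-19 in 50 patients", "citable": True, "citations": 40},
    {"title": "Antibiotic stewardship in intensive care", "citable": True, "citations": 4},
    {"title": "Tuberculosis screening in migrants", "citable": True, "citations": 6},
    {"title": "SARS-CoV-2 and the editor's desk", "citable": False, "citations": 10},
]

print(jif(records))                # (40 + 4 + 6 + 10) / 3 = 20.0
print(jif_without_covid(records))  # (4 + 6) / 2 = 5.0
```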

Fig. 1.

Evolution of 2-year journal impact factors for infectious disease journals from 2019 to 2021. Two-year impact factors for the selected 47 infectious disease journals (see text) in 2019 and 2021. The journal impact factor (JIF) is computed for all articles or letters issued from the Web of Science database (left), without the 10 most cited publications in each journal (centre) or without publications related to COVID-19 (right). Coloured circles correspond to journals.

To assess whether this rise reflected scientific output of consistent quality and interest, we examined the citations of the 41 journals with an increase in JIF. Whereas the top-10 most cited articles accounted for a median of 13.9% of the 2019 JIF (interquartile range [IQR], 11.1–19.0%), this share increased to 25.6% in 2021 (IQR, 18.1–33.5.6%) (Fig. S4). Among the 28 journals with an increase in JIF of ≥1, the 2020 top-10 articles were responsible for 55.3% (IQR, 39.7–61.4%) of the increase in JIF (Fig. 1). To roughly assess the scientific quality of these ‘blockbuster’ publications, we analysed the abstracts of the 100 most cited articles published in 2020–2021 by infectious disease journals (Table S1). All articles concerned COVID-19, and nearly half (47%) had been published at the beginning of the pandemic, before July 2020. Only two were randomized controlled trials, 12 were prospective cohort studies, 24 were retrospective cohort studies, 10 were cross-sectional studies, 10 were modelling studies, 7 were case reports or case series and 14 were narrative reviews or opinion papers. Apart from randomized controlled trials, the median number of patients enrolled in longitudinal or cross-sectional studies was only 76 (IQR, 33–550). Albeit limited, this analysis suggests that these publications likely reflect the critical need for information in the early phase of the pandemic rather than a strong long-term scientific effort. Since then, most of these articles have probably become outdated. For example, two of the 10 most cited articles argued in favour of the use of hydroxychloroquine, a drug that was eventually shown to be ineffective for COVID-19 treatment.
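One plausible reading of the ‘weight’ of the top-10 articles is the share of a journal's JIF numerator contributed by its 10 most cited documents. The sketch below follows that reading, which is an assumption rather than the authors' stated method; the function name and citation counts are hypothetical.

```python
def top_n_weight(citations, n=10):
    """Share of all citations contributed by the n most cited documents.

    `citations` holds one citation count per document in the 2-year window.
    Because the JIF denominator is the same for both sums, this ratio is
    also the share of the JIF attributable to the top-n documents.
    """
    total = sum(citations)
    if total == 0:
        return 0.0
    top = sum(sorted(citations, reverse=True)[:n])
    return top / total


# Toy journal: two 'blockbusters' and 50 modestly cited documents (invented).
toy_citations = [60, 40] + [2] * 50

weight = top_n_weight(toy_citations)
print(round(weight, 2))  # (60 + 40 + 8 * 2) / 200 = 0.58
```

With such a skewed distribution, 10 of 52 documents account for more than half of all citations, which is the pattern the ‘blockbuster effect’ refers to.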

The computation of the JIF is based on all citations, whether ‘positive’ or ‘negative’: an article might be cited because its results are interesting and useful to the scientific community or, on the contrary, to criticize its quality, methodology or conclusions. Controversial papers might thus have a higher impact on the JIF than studies universally recognized as scientifically robust. Here again, the first papers supporting the use of hydroxychloroquine have been highly cited, notably to criticize their methodology or refute their conclusions [2]. As an illustration, we randomly selected 100 citations (of 2687) of the most cited articles on hydroxychloroquine and found that about a third (35) were criticizing the study or contradicting its conclusions (Table S2). Overall, it seems that the pandemic has exacerbated the ‘blockbuster effect’ inherent in the metric [3], which is poorly correlated with the intrinsic quality of the research.

Although widespread in infectious disease journals, this phenomenon has probably not spared other disciplines. Many of the top-ranked general medicine journals have more than doubled their IF. Even within other medical fields, leading journals have often seen an increase in their JIFs, likely because of the COVID-19 pandemic. For instance, the 2020–2021 most cited documents in the Web of Science categories ‘Neurology’, ‘Gastroenterology’ or ‘Dermatology’ were COVID-19–related papers published before July 2020.

The IF metric has several well-known limitations that have been exploited by journals to maximize their JIF. For example, the publication of a large proportion of documents that are not ‘citable’ research articles (e.g. editorials), the multiplication of self-citations or the maintenance of articles in ‘online first’ status for a long period mechanically lead to artificial inflation of the JIF [4]. Beyond these criticisms, it should be borne in mind that, within a journal, articles are very heterogeneously cited (as our analyses show) and their scientific quality may vary. Using the JIF to judge the quality of an article or a researcher is, therefore, meaningless. In a few years, as we move away from the onset of the pandemic, we can expect IFs to decrease for many journals, not because of a decline in the quality of research but most likely because the ‘blockbuster effect’ will fade.

One can argue that alternative metrics have been proposed, in particular, the h-index [5]. Like the IF, this simple metric does not exclude self-citations and cannot distinguish between positive and negative citations [6]. It is computed as the largest number h such that h publications in a journal have each been cited at least h times. Because the h-index does not rely on the average number of citations, it is less sensitive to the ‘blockbuster effect’ than the JIF. However, the h-index cannot decrease, and it is strongly influenced by the age of the journal, penalizing those that are recent. It also depends on the absolute number of citable articles in the journal, and even if we consider a limited time window (e.g. 2 years) to compute the h-index, it remains strongly correlated with the JIF (in our data, r² = 0.58, p < 0.001) [7]. Although many other bibliometric indicators exist [5,8], none has proven to be reliable in reflecting the scientific value of a researcher or a journal. Instead of a single metric, some authors have proposed using mixed approaches based on a combination of multiple indicators, quantitative to measure scientific output and citations (e.g. JIF) and qualitative to measure other dimensions of scientific work such as collaboration, fidelity or impact on health services [9]. However, given that most of these mixed indicators have not yet been developed, establishing a globally recognized combination will demand considerable collaborative academic work, if it is ever achievable. Furthermore, as mentioned above, it would be interesting to consider ‘negative’ citations, which is now possible thanks to advances in natural language processing [10].
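As an illustration, the h-index under its usual definition (the largest h such that h publications have each been cited at least h times) can be computed as below. The function name and the toy citation counts are hypothetical; the example only shows why a single blockbuster moves the mean far more than it moves the h-index.

```python
def h_index(citations):
    """Largest h such that h publications each have at least h citations."""
    h = 0
    for rank, count in enumerate(sorted(citations, reverse=True), start=1):
        if count >= rank:
            h = rank   # the `rank` most cited papers all have >= rank citations
        else:
            break      # counts only decrease from here, so h cannot grow
    return h


def mean_citations(citations):
    """Average citations per publication (the quantity a JIF-like metric tracks)."""
    return sum(citations) / len(citations)


ordinary = [10, 8, 5, 4, 3]
with_blockbuster = [1000, 8, 5, 4, 3]  # one article cited 1000 times (invented)

print(h_index(ordinary), mean_citations(ordinary))                  # 4 6.0
print(h_index(with_blockbuster), mean_citations(with_blockbuster))  # 4 204.0
```

In this toy case the blockbuster multiplies the mean by 34 while leaving the h-index unchanged.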

To conclude, we have shown that the tremendous increase in IF was caused by COVID-19 and driven by a ‘blockbuster effect’ poorly correlated with research quality. As the pandemic illustrates, the evaluation of journals and researchers and the allocation of research funds should not be determined by quantitative metrics alone, including the IF metric, at the risk of promoting sensationalism over true science. More generally, whichever metric we use to evaluate scientific production, we should be aware of its limits and apply it responsibly. It is time the scientific community became less addicted to IFs and recognized that a simple metric cannot capture the complexity of scientific value.

Author contributions

AM and TD conceptualized the commentary. AM extracted data and performed statistical analyses. AM and TD wrote the manuscript.

Transparency declaration

The authors declare that they have no conflict of interest.

Editor: L Leibovici

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.cmi.2022.08.011.

References

- 1. Larivière V., Sugimoto C.R. In: Springer handbook of science and technology indicators. Glänzel W., Moed H.F., Schmoch U., Thelwall M., editors. Springer International Publishing; Cham: 2019. The journal impact factor: a brief history, critique, and discussion of adverse effects; pp. 3–24.

- 2. Fiolet T., Guihur A., Rebeaud M.E., Mulot M., Peiffer-Smadja N., Mahamat-Saleh Y. Effect of hydroxychloroquine with or without azithromycin on the mortality of coronavirus disease 2019 (COVID-19) patients: a systematic review and meta-analysis. Clin Microbiol Infect. 2021;27:19–27. doi: 10.1016/j.cmi.2020.08.022.

- 3. Seglen P.O. Why the impact factor of journals should not be used for evaluating research. BMJ. 1997;314:498–502. doi: 10.1136/bmj.314.7079.497.

- 4. Martin B.R. Editors’ JIF-boosting stratagems – which are appropriate and which not? Res Policy. 2016;45:1–7. doi: 10.1016/j.respol.2015.09.001.

- 5. Thonon F., Boulkedid R., Delory T., Rousseau S., Saghatchian M., van Harten W., et al. Measuring the outcome of biomedical research: a systematic literature review. PLoS One. 2015;10 doi: 10.1371/journal.pone.0122239.

- 6. Hirsch J.E. An index to quantify an individual’s scientific research output. Proc Natl Acad Sci U S A. 2005;102:16569–16572. doi: 10.1073/pnas.0507655102.

- 7. Bornmann L., Marx W., Gasparyan A.Y., Kitas G.D. Diversity, value and limitations of the journal impact factor and alternative metrics. Rheumatol Int. 2012;32:1861–1867. doi: 10.1007/s00296-011-2276-1.

- 8. Alonso S., Cabrerizo F.J., Herrera-Viedma E., Herrera F. h-Index: a review focused in its variants, computation and standardization for different scientific fields. J Informetr. 2009;3:273–289. doi: 10.1016/j.joi.2009.04.001.

- 9. Martin B.R. The use of multiple indicators in the assessment of basic research. Scientometrics. 1996;36:343–362. doi: 10.1007/BF02129599.

- 10. Catalini C., Lacetera N., Oettl A. The incidence and role of negative citations in science. Proc Natl Acad Sci U S A. 2015;112:13823–13826. doi: 10.1073/pnas.1502280112.
