Abstract

Objectives

Verifying new reagent or calibrator lots is crucial for maintaining consistent test performance. The Institute for Quality Management in Healthcare (IQMH) conducted a patterns-of-practice survey and follow-up case study to collect information on lot verification practices in Ontario.

Methods

The survey had 17 multiple-choice questions and was distributed to 183 licensed laboratories. Participants provided information on materials used and approval/rejection criteria for their lot verification procedures for eight classes of testing systems. The case study provided a set of lot comparison data and was distributed to 132 laboratories. Responses were reviewed by IQMH scientific committees.

Results

Of the 175 laboratories that responded regarding reagent lot verifications, 74% verified all tests, 11% some, and 15% none. Of the 171 laboratories that responded regarding calibrator lot verifications, 39% verified all calibrators, 4% some, and 57% none. Reasons for not performing verifications ranged from difficulty performing parallel testing to high reagent cost. For automated chemistry assays and immunoassays, 23% of laboratories did not include patient-derived materials in reagent lot verifications and 42% included five to six patient materials; 58% of laboratories did not include patient-derived materials in calibrator lot verifications and 23% included five to six patient materials. Different combinations of test-specific rules were used for acceptance criteria. For a failed lot, 98% of laboratories would investigate further and take corrective actions. Forty-three percent of laboratories would accept the new reagent lot in the case study.

Conclusion

Responses to the survey and case study demonstrated variability in lot verification practices among laboratories.

Keywords: Reagent, Calibrator, Lot number, Verification

Abbreviations: CLSI, Clinical and Laboratory Standards Institute; ELISA, enzyme-linked immunosorbent assay; GC, gas chromatography; HPLC, high-performance liquid chromatography; IQMH, Institute for Quality Management in Healthcare; ISO, International Organization for Standardization; LC-MS/MS, liquid chromatography tandem mass spectrometry; PT, proficiency testing; QC, quality control; RIA, radioimmunoassay; TSH, thyroid-stimulating hormone

Highlights

- Surveyed laboratories verified new reagent lots more commonly than calibrator lots.
- Quality control material was used more than patient material for lot verifications.
- Quality control results with a new lot were compared most to 2 standard deviations.
- Percent difference was used most to assess patient material results between lots.
- Laboratories need feasible guidelines on reagent and calibrator lot verifications.

1. Introduction

Clinicians depend on accurate laboratory results to make correct diagnostic and therapeutic decisions. Approximately two-thirds of medical decisions are influenced by laboratory results; therefore, it is crucial that all preventative measures are taken by the laboratory to ensure results are accurate prior to release [1]. Ensuring lot-to-lot consistency is an important part of the ongoing monitoring of test performance. This is most important when serially monitoring results of laboratory tests, such as tumour markers, for which small changes in analyte concentration may trigger further clinical interventions [2].

Although the performance of reagent lots is validated by the manufacturer prior to release, laboratories are expected to verify that performance of new reagent lots is acceptable for clinical use. Laboratories may have different acceptance criteria compared to vendors. In addition, various situations can cause a change in performance between initial validation and laboratory implementation, including suboptimal transportation or storage conditions or instability of a component in a calibrator or reagent [3]. Good laboratory practice as well as accreditation standards (e.g. International Organization for Standardization [ISO] 15189) require laboratories to verify the performance of new reagent lots [4]. Clinical and Laboratory Standards Institute (CLSI) EP26-A User Evaluation of Between-Reagent Lot Variation is the first guideline to recommend processes for reagent lot verification [3].

The Institute for Quality Management in Healthcare (IQMH) is a not-for-profit organization that provides proficiency testing (PT) for medical laboratories. IQMH's PT programs are accredited to ISO/IEC 17043:2010 by the American Association for Laboratory Accreditation. For each laboratory medicine discipline, IQMH has a medical/scientific committee that provides technical and clinical advice on performance assessment, as well as educational comments and feedback to participants. In addition to PT programs, which primarily assess the analytical phase of laboratory workflow, IQMH also provides educational surveys and case studies that facilitate the assessment of pre- and post-analytical phases of laboratory workflow. One of the educational tools used is a patterns-of-practice survey, which consists of a questionnaire covering a specific area of laboratory practice. Here we report the results of a patterns-of-practice survey designed to gather information on current reagent and calibrator lot verification practices, and of a follow-up case study intended to show how laboratories would approach realistic lot verification data.

2. Materials and methods

2.1. Patterns-of-practice survey

A web-based patterns-of-practice survey was created and distributed in 2015 to 183 Ontario laboratories licensed to perform routine biochemistry, endocrinology, and/or immunology testing. A total of 178 laboratories responded. While IQMH did have some laboratories from outside of Ontario as PT survey participants at the time, a decision was made not to include them in the patterns-of-practice survey since they were not held to the same regulatory requirements as the Ontario laboratories and it would not be mandatory for them to submit a response. The survey consisted of 17 multiple-choice questions divided into four sections: process, materials used for lot verifications, data management, and approval or rejection process. A section was also included for participants to provide additional comments. A copy of the survey is provided as supplemental information. Results for each question were extracted and analyzed according to assay type and reagent or calibrator verification.

2.2. Follow-up case study

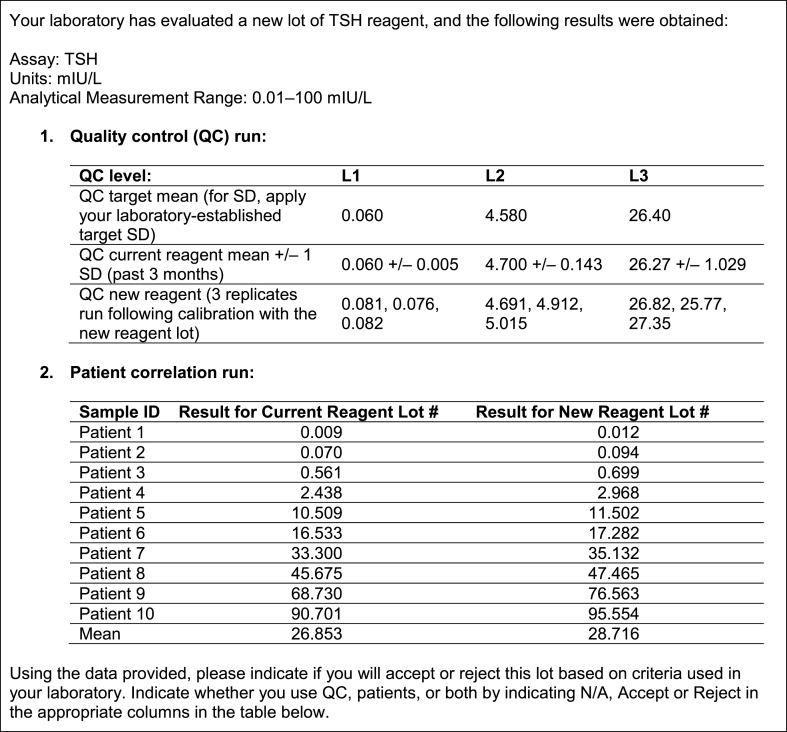

Three years later, in 2018, a follow-up case study about determining lot-to-lot variation of a thyroid-stimulating hormone (TSH) assay, and the acceptability of such variation, was distributed to 132 laboratories that perform immunoassays, most of which had participated in the original patterns-of-practice survey. One hundred twenty of the laboratories were in Ontario and 12 were voluntary out of province participants. The case that was distributed to laboratories is presented in Fig. 1. Participants were asked to select which method their laboratory would use to determine if the variation between the results obtained with the new reagent lot and the previous one was acceptable or not. Options for criteria used are presented in Fig. 2.

Fig. 1.

The case study.

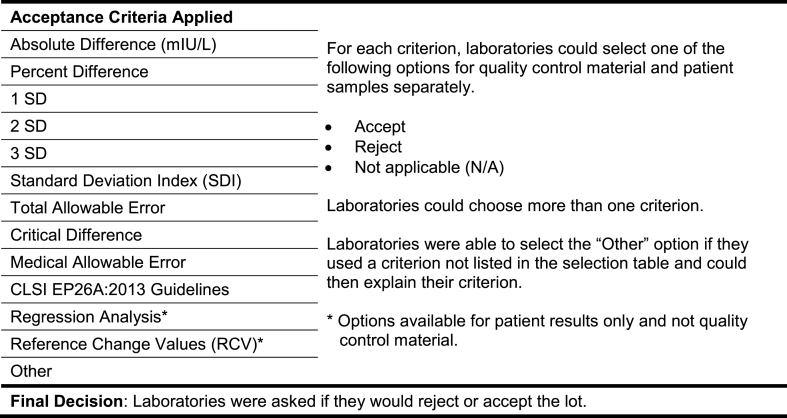

Fig. 2.

Acceptance criteria provided to laboratories as options.

Results were collected electronically using QView™ (IQMH's client web portal) and assessed using Microsoft Excel®.

3. Results

3.1. Responses to the patterns-of-practice survey

3.1.1. Process

Participants were asked to indicate if they performed new reagent or calibrator lot verification on all tests, some tests, or no tests within each of the categories of testing systems shown in Table 1. Participants could also indicate if they did not have the test system. Osmometers were specified by a number of laboratories as examples of other systems.

Table 1.

Test systems and examples provided to participants. Participants were asked to respond whether they performed verification of new lots of reagent or calibrator for all tests, some tests, or no tests within each test system category. RIA, radioimmunoassay; ELISA, enzyme-linked immunosorbent assay; HPLC, high-performance liquid chromatography; GC, gas chromatography; LC-MS/MS, liquid chromatography tandem mass spectrometry.

| Test Systems | Examples |
|---|---|
| Automated chemistry | Main analyzer |
| Automated immunoassay | |
| Semi-automated quantitative instruments | Blood gas analyzer, point-of-care testing devices |
| Semi-quantitative instruments | Electrophoresis, antibody titre, urine dipsticks |
| Qualitative or point-of-care testing assays | Drugs of abuse, pregnancy test |
| Commercial test kits | RIA, ELISA, western blot |
| In-house developed assays | HPLC, GC, LC-MS/MS |
| Other systems (to be specified in the section below this question on the questionnaire) | |

3.1.2. Frequency of performing reagent or calibrator lot verification

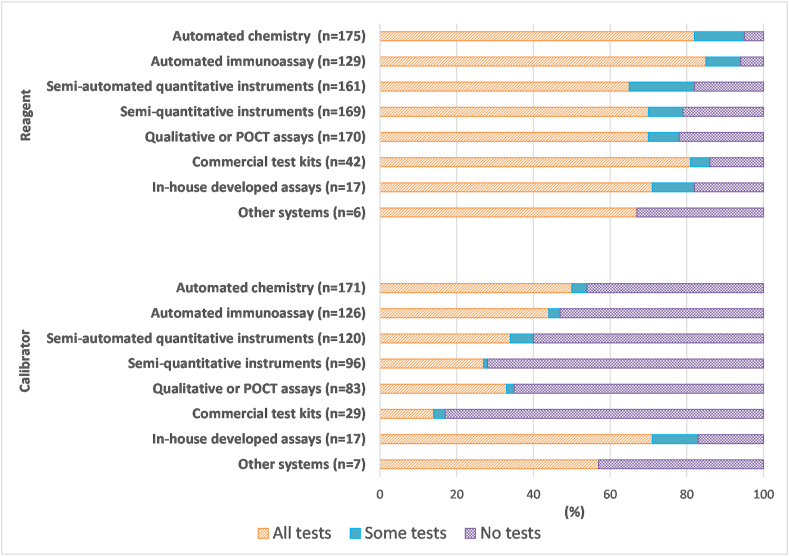

One hundred seventy-five laboratories submitted a total of 869 results regarding reagent lot verification for testing performed on-site. Responses from laboratories that did not have a given analytical system were excluded from the total and the calculations. On average, 74% of laboratories performed reagent lot verification for all tests, 11% for some tests, and 15% for none of the tests. One hundred seventy-one laboratories submitted 649 responses about calibrator lot verification for testing performed on-site. On average, 39% of laboratories performed calibrator lot verification for all tests, 4% for some tests, and 57% for none of the tests. Fig. 3 demonstrates the percentage of laboratories performing reagent or calibrator lot verification broken down by testing system.

Fig. 3.

Summary of responses from participating laboratories about reagent or calibrator lot verification practices according to test system. Results are expressed as a percentage of total responses (n-value shown in the figure) for each test system type from laboratories that performed such testing on-site.
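The percentages reported for each test system exclude laboratories that indicated they did not have that system. A minimal sketch of that tally is given here, assuming a simple list of answers per test system; the answer labels, the `verification_percentages` helper, and the example data are hypothetical and are not taken from the survey dataset.

```python
from collections import Counter

# Hypothetical answers for one test system. "n/a" stands for a laboratory
# that does not have the system; such answers are left out of the denominator.
responses = ["all", "all", "some", "none", "n/a", "all", "n/a", "none"]


def verification_percentages(answers):
    """Return rounded percentages of 'all', 'some', and 'none' answers,
    ignoring laboratories that reported not having the test system."""
    counted = [a for a in answers if a != "n/a"]
    total = len(counted)
    if total == 0:
        # No laboratory performs this testing on-site, so nothing to report.
        return {"all": 0, "some": 0, "none": 0}
    tally = Counter(counted)
    return {key: round(100 * tally[key] / total) for key in ("all", "some", "none")}


print(verification_percentages(responses))
```

Because each percentage is rounded separately, the three values for a test system may sum to 99 or 101 rather than exactly 100.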

Reagent lot verification was performed more frequently for automated immunoassays (85% of laboratories verify all tests, 9% verify some tests), automated chemistry tests (82% verify all tests, 13% verify some tests), and commercial test kits such as ELISA, RIA, or Western blot (81% verify all tests, 5% verify some tests) than for other test systems.

Calibrator lot verification was most frequently performed for in-house developed assays (71% of laboratories verify all tests, 12% verify some tests) such as HPLC, GC, or LC-MS/MS compared to other test systems. As can be seen from Fig. 3, there were fewer responses received (n-values are smaller) about calibrator lot verification than about reagent lot verification. From the responses that were received about calibrator lot verification, it was clear that it was generally performed less often than reagent lot verification for each test system except in-house developed assays. For instance, for automated chemistry tests, 50% of laboratories were verifying calibrator lots for all tests and 4% for some tests.

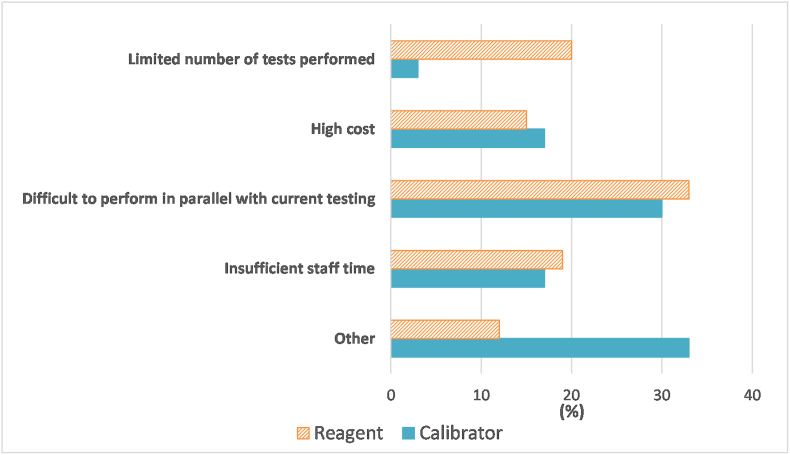

3.1.3. Reasons for not performing lot verification

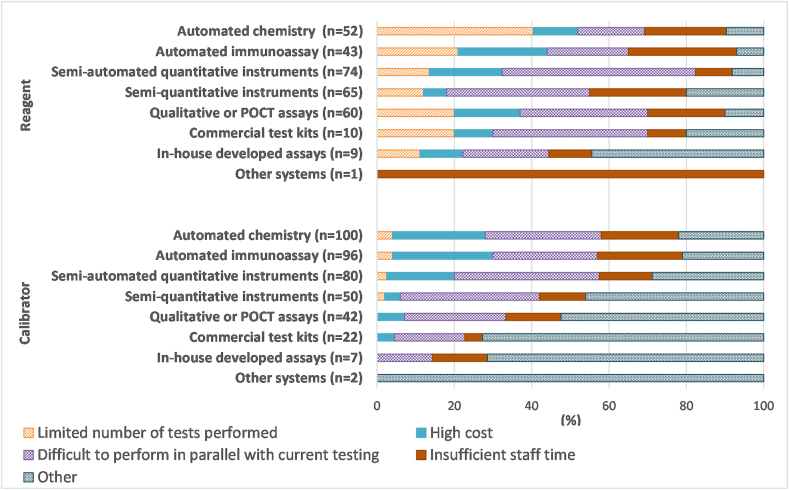

Laboratories not performing reagent and calibrator lot verifications for all tests performed by a given test system were asked to select the most common reason(s) from four options. A total of 125 participants responded to this question, with some only providing comments. Regarding reasons for not performing reagent lot verification, 74 laboratories submitted 314 responses. Across all testing platforms, the most common reason for not performing reagent lot verification was difficulty performing the verification of the new lot in parallel with use of their current lot (33%). Twenty percent of respondents listed a limited number of tests performed (i.e., low-volume tests or a limited number of samples), 19% indicated insufficient staff time, 15% selected high cost, and 12% indicated other as reasons for not performing verification of new reagent lots (Fig. 4). In the case of urinalysis, laboratories commented on reliance on manufacturer's QC data to ensure accuracy of urine dipsticks and correlation with microscopic analysis for lot verification.

Fig. 4.

Summary of the reasons provided for not conducting reagent or calibrator lot verifications. Responses were from 74 laboratories about reagents and 101 laboratories about calibrators and are expressed as a percentage of all responses within the reagent or calibrator category.

Regarding reasons for not performing calibrator lot verification, 101 laboratories provided a total of 399 responses. Receiving more reasons for not performing calibrator lot verification than reasons for not performing reagent lot verification is consistent with the fact that fewer laboratories appear to be performing calibrator lot verification compared to reagent lot verification (Fig. 3). Across all testing platforms, the most common reasons for not performing calibrator lot verification included difficulties in performing parallel testing (30%), high costs (17%), insufficient staff time (17%), or limited number of tests performed (3%) (Fig. 4). Thirty-three percent selected other and provided various reasons: no calibration is required with some kits (n = 34), not current laboratory policy (n = 9), calibrators are kit-specific (n = 4), no manufacturer's recommendation (n = 3), no regulatory requirement (n = 3), and insufficient calibrator (n = 1). Fig. 5 shows the reasons for not conducting reagent or calibrator lot verification for all tests, with responses broken down by test system.

Fig. 5.

Reasons for not performing reagent or calibrator lot verification for all tests within a methodology category. Results are expressed as a percentage of total responses (n-value shown in the figure) for each test system.

3.1.4. Verification of new shipment of previously-verified lot

When participants were asked whether they verify new shipments of previously-verified reagent and/or calibrator lots, 177 responses were received. Of those, 51% did not perform reagent and/or calibrator lot verifications on new shipments of a previously-verified lot, 33% performed verification of new shipments for all tests, 6% verified new shipments for most tests, and 11% verified new shipments for a few tests.

3.1.5. Lot verification in multi-site facilities

Participants from multi-site facilities were then asked to indicate how affiliated laboratories would handle verification of new reagent and/or calibrator lots if one of their sites had already completed verification. Ninety-three of 176 responses were from single site facilities. Of the remaining 83 responses, 58% performed full verification at each affiliated site, 35% carried out a modified protocol at affiliated sites such as relying on QC material alone for verification, and 7% did not conduct any additional reagent and/or calibrator lot verification at affiliated sites. Of the laboratories that provided responses indicating they were part of multi-site facilities, there may have been multiple responses received from each organization as we could not easily distinguish which laboratories belonged to the same organizations.

3.1.6. Time frame of lot verifications

Participants were asked to indicate when verification of new reagent and/or calibrator lots is performed. One hundred seventy-five responses were received. It was most common for laboratories to perform lot verifications immediately prior to implementation of the new lot (74%), while 13% performed the verification upon receipt of the new lot, and 17% performed the verification concurrent with the implementation of the new lot. The remaining laboratories selected other and the comments indicated a combination of the other three options since the same approach was not used for all tests within the laboratories.

3.1.7. Responsibility for lot verifications

One hundred seventy-six responses were received about who performs the testing required for lot verifications. For the majority (76%) of laboratories, the duty is assigned to bench technologists. In 18% of laboratories, the role is performed by a senior or charge technologist, a technical specialist, or a supervisor. Six percent of laboratories selected other and the comments were equally split between key operators and a combination of different positions.

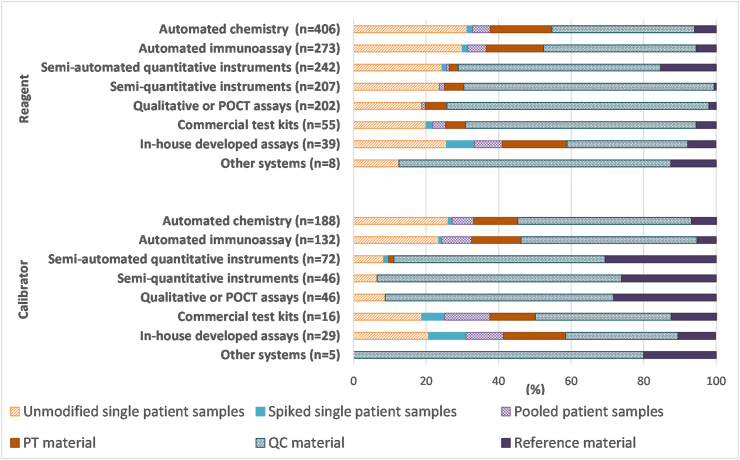

3.1.8. Materials used for lot verifications

Multiple questions were included about the materials used for reagent or calibrator lot verifications. In terms of the types of material used, laboratories were provided with options of unmodified single patient samples, spiked single patient samples, pooled patient samples, PT material, QC material, and reference material for each test system and were asked to select all applicable options. One hundred seventy-five laboratories responded, providing 1432 responses about reagent lot verification and 534 responses about calibrator lot verification. Considering all test systems together, for reagent lot verification, QC material represented the majority of responses received (53%), with single patient samples being the next most popular selection (26%). Similar results were obtained for calibrator lot verification, with 51% of responses across all test systems being for QC material and 19% being for single patient samples. In general, commercial material appeared to be more commonly used than patient material, particularly for calibrator lot verifications. Fig. 6 shows the breakdown of testing material responses according to test system.

Fig. 6.

Participant responses regarding types of material used in reagent or calibrator lot verifications for various analytical systems. Results are expressed as a percentage of total responses (n-value shown in the figure) for each test system.

3.1.9. Testing calibrators as patient samples

Laboratories were asked, specifically pertaining only to automated chemistry and/or automated immunoassay test systems, if they analyzed calibrators as patient samples during verification of new calibrator lots. Eighty-one percent of the 173 laboratories that responded indicated that they did not analyze calibrators as patient samples when verifying a new calibrator lot, while 3% of laboratories analyzed both current and new calibrators as patient samples, 5% analyzed only the current lot of calibrators as patient samples, and 12% analyzed only the new lot of calibrators as patient samples.

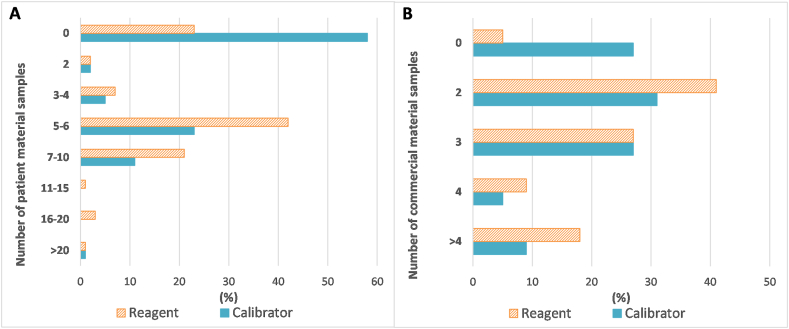

3.1.10. Number of patient or commercial material samples tested

Participants were asked to select the number of patient (unmodified single patient, spiked single patient, or pooled patient) material samples they would include on average when verifying a new reagent or calibrator lot for an automated chemistry or automated immunoassay test system. There were 169 responses obtained for reagent lot verification and 123 responses obtained for calibrator lot verification; they are summarized in Fig. 7A. For reagent lot verification, 42% of laboratories indicated they used five to six patient material samples on average, 23% were not using any patient materials, and 21% were using an average of seven to ten patient material specimens. Consistent with the results from Fig. 3, the majority of laboratories (58%) indicated they were not using patient materials for calibrator lot verification of these automated tests, while 23% of laboratories were using an average of five to six patient material samples.

Fig. 7.

Participant responses regarding number of patient or commercial material samples used in reagent or calibrator lot verifications for automated chemistry or immunoassay tests. A) average number of patient material samples used in verification of new reagent or calibrator lots. Results are expressed as a percentage of total responses (169 for reagent, 123 for calibrator). B) average number of commercial material samples used in verification of new reagent or calibrator lots. Results are expressed as a percentage of total responses (172 for reagent, 129 for calibrator).

Similarly, participants were asked to select the number of commercial (PT, QC, or reference) material samples they would include on average when verifying a new reagent or calibrator lot for an automated chemistry or automated immunoassay test system. There were 172 responses obtained for reagent lot verification and 129 responses obtained for calibrator lot verification; they are summarized in Fig. 7B. For reagent lot verification, 41% of laboratories indicated they used two commercial material samples on average, while 27% were using three. For calibrator lot verification of these automated tests, 31% of laboratories were using an average of two commercial material samples, 27% were using three, and 27% were using none.

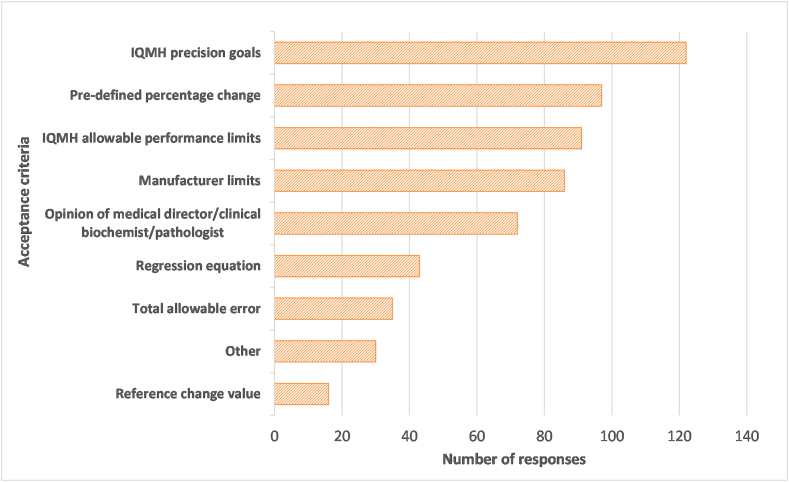

3.1.11. Acceptance criteria

Laboratories were asked to select all parameters used to determine the acceptability of lot verification data from a list of options presented in Fig. 8 and to specify any additional criteria used. There were 592 parameters provided by 174 laboratories. The most common criterion used was IQMH precision goals (21% of all responses), followed by a pre-defined percentage change (16%), and IQMH allowable performance limits (15%) or manufacturer's limits (15%). Many of the comments provided referred to Levey-Jennings charts or peer group data to determine acceptability of QC results with lot changes. There were also some comments referring to uncertainty of measurement or changes in patient averages as criteria used. In terms of the number of criteria used, 51% of participants used two to four criteria, 29% used five to seven criteria, and the remaining 21% used a single parameter.

Fig. 8.

Criteria used to determine acceptance or rejection of a new lot number. A total of 592 criteria were provided by 174 laboratories.

The individuals assessing the acceptability of results with new lots varied among respondents. In total, 373 responses were received from 176 laboratories to identify all positions responsible for these decisions. The most widely selected response (39%) was senior or charge technologist/technical specialist/supervisor, followed by bench technologist (24%), medical director/pathologist/clinical biochemist (18%), manager/director (13%), and other (6%). The key operator was indicated in many of the responses of other. In general, the decision appears to be made at a higher level than bench technologist in most laboratories.

Laboratories were asked to indicate how they would proceed when a reagent or calibrator lot number failed the acceptance criteria. They were asked to select all applicable options from three choices: (A) investigation and corrective action taken such as re-calibration of current and new lots and/or re-testing with fresh samples; (B) lot rejected and manufacturer contacted; and (C) lot accepted with restrictions. Of the responses provided by 175 laboratories, 98% included A alone or in combination with other responses; 84% included B, mostly in combination with A or both A and C; and 22% included C, but only in combination with other options. Therefore, additional investigation would nearly always be done before deciding whether to accept or reject a lot number that failed initial testing.

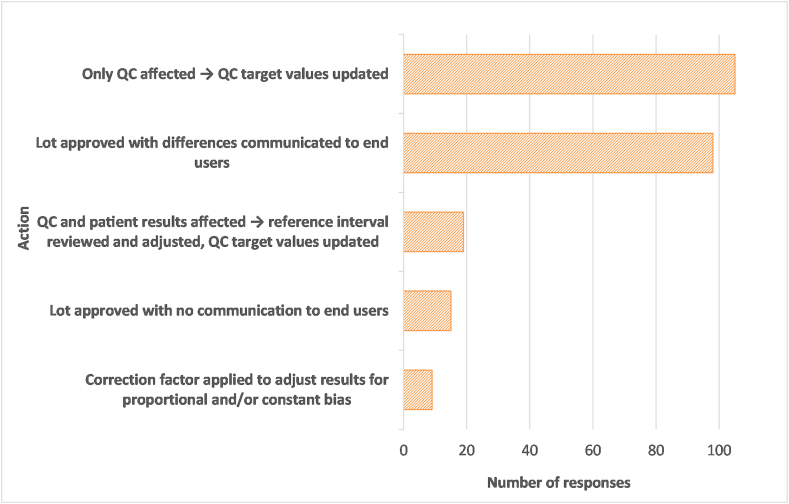

The next question asked laboratories to indicate what their restrictions or adjustments would be if a lot number were accepted with restrictions after violating the acceptance criteria. The breakdown of responses is shown in Fig. 9. There were 246 responses from 150 laboratories. Seventy percent of responding laboratories indicated that they would update QC target values if only QC were found to be affected by the lot change. A slightly smaller percentage (65%) indicated they would communicate differences to end users if they approved the lot, presumably if patient results were found to be affected and not only QC values. Laboratories were more reluctant to implement the new lot number without communicating with the end user (10%), to adjust results with correction factors (6%), or to adjust the reference interval (13%). Since only 39 laboratories had indicated that they would possibly implement a rejected lot number with restrictions, but 150 laboratories provided responses to this question, responses may be mostly theoretical or there may have been a difference in interpretation between the previous question and this one as to whether effects of a new lot number on QC material alone would constitute violation of acceptance criteria for the lot.

Fig. 9.

Actions that would be taken when accepting a new lot number that had failed previously-established acceptance criteria. A total of 246 actions were selected by 150 laboratories.

Finally, participants were asked if they monitored reagent and/or calibrator trends over several lot changes and, if so, by which mechanism(s). One hundred twenty-eight (86%) of the 148 laboratories that answered this question indicated that they do monitor lot changes over several lots. Only the laboratories that answered yes to this question were asked to select the parameters used to monitor such trends. However, 157 laboratories responded, including 29 laboratories that had left the prior question blank. The most commonly used parameters were QC mean (92%), QC standard deviations (SDs) (80%), and PT (71%) and 53% of laboratories indicated that they used a combination of these three parameters. In the other category, some factors mentioned were calibrator recovery values, calibration verifier results, cross-site testing, and patient comparison data (such as slopes and intercepts from regression data).

3.2. Responses to the follow-up case study

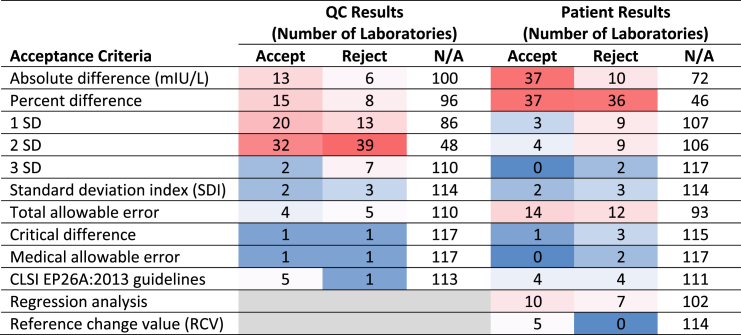

The follow-up case study was sent to a subset of the original laboratories, those performing immunoassays, and to 12 laboratories from outside Ontario that were IQMH PT survey participants, to gain insight on how they would apply their practices to data for a new lot of TSH reagent. Submissions were received from 132 laboratories, but responses from 13 laboratories were excluded from the data analysis because they only included a final decision on lot acceptability without any details on acceptance criteria applied; five of those laboratories were also not performing TSH testing on-site. Of the 119 responses included in the final analysis, 112 were from laboratories in Ontario and 7 (6%) were from out of province; in some instances we have referred to the data as being from Ontario for simplicity because the patterns-of-practice survey included only Ontario laboratories and the case study included only a small number of laboratories from outside Ontario. Of these 119 responses, 43% of laboratories indicated that they would accept the lot and 57% would reject it. Seventy-six percent of laboratories used both QC and patient results to make their decision, 15% used only QC results, and 9% used patient results only. Table 2 shows the criteria laboratories applied to decide on acceptability of the new lot number and their decisions based on those criteria. The most commonly used acceptance criteria for QC results were 2 SD (60% of laboratories), 1 SD (28%), percent difference (19%), and absolute difference (16%). For patient results, the most commonly used acceptance criteria were percent difference (61%), absolute difference (39%), total allowable error (22%), and regression analysis (14%). It is clear from Table 2 that laboratories applying the same criteria to the same set of data did not all arrive at the same decisions on lot acceptability based on those criteria.

Table 2.

The number of laboratories that applied the QC and/or patient result acceptance criteria included in the case study and the verdicts from those criteria. The total number of laboratories that selected at least one criterion used in their decision was 119. The data are presented as a heat map, with higher numbers of responses being red and fewer numbers of responses being blue.

Of the 119 laboratories that applied acceptance criteria, 101 (85%) used more than one acceptance criterion for QC and/or patient results and 18 (15%) applied only one criterion. In the 101 laboratories that applied more than one acceptance criterion, all criteria were unanimous in either accepting or rejecting the lot in 58 of the laboratories and, in the other 43 laboratories, the criteria yielded conflicting results on whether to accept or reject the lot. Thirty-three percent of the laboratories with conflicting criteria chose to accept the lot, while 67% opted to reject it. Oddly, two laboratories opted to reject the new lot despite the criteria they applied indicating the lot was acceptable for use.
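To illustrate how two of the most commonly selected criteria can disagree on the same data, the following sketch applies a 2 SD rule to a QC result and a mean percent difference rule to paired patient results. All values, the 10% limit, and the function names are hypothetical and chosen for illustration only; they are not the case study data or any laboratory's actual procedure.

```python
def within_2sd(new_qc, qc_mean, qc_sd):
    """QC criterion: new-lot QC result lies within 2 SD of the established mean."""
    return abs(new_qc - qc_mean) <= 2 * qc_sd


def mean_percent_difference(old_results, new_results):
    """Mean of the per-sample percent differences (new lot vs. current lot)."""
    diffs = [100 * (new - old) / old for old, new in zip(old_results, new_results)]
    return sum(diffs) / len(diffs)


def evaluate_lot(new_qc, qc_mean, qc_sd, old_results, new_results, max_pct_diff):
    """Apply both criteria and report whether they agree or conflict."""
    verdicts = {
        "qc_2sd": within_2sd(new_qc, qc_mean, qc_sd),
        "patient_pct_diff": abs(mean_percent_difference(old_results, new_results))
        <= max_pct_diff,
    }
    if all(verdicts.values()):
        decision = "accept"
    elif not any(verdicts.values()):
        decision = "reject"
    else:
        decision = "conflict"  # criteria disagree; further review is needed
    return verdicts, decision


# Hypothetical TSH-like data (mIU/L): the QC result passes the 2 SD rule,
# but the patient samples shift by more than the assumed 10% limit.
verdicts, decision = evaluate_lot(
    new_qc=5.30, qc_mean=5.00, qc_sd=0.20,
    old_results=[0.50, 2.00, 4.00, 8.00, 20.0],
    new_results=[0.56, 2.24, 4.40, 8.80, 22.4],
    max_pct_diff=10.0,
)
print(verdicts, decision)
```

In this sketch a "conflict" outcome is returned rather than forcing a verdict, which mirrors the situation of the 43 laboratories whose criteria did not agree and who then had to decide how to weigh them.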

4. Discussion

The results of our patterns-of-practice survey showed that a majority of laboratories performed some type of reagent lot verification. Calibrator lot verifications were not performed as frequently, with the exception of in-house developed tests. Our survey results identified a variety of reasons that laboratories did not perform lot verifications such as insufficient staff time and high cost, but the most commonly given reason was difficulty in performing verification of the new lot in parallel with use of the current lot. In laboratories that were completing lot verifications, most often it took place just prior to implementation of the new lot, with the testing completed by the bench technologists. If multiple shipments of the same lot number were received, about half of laboratories would verify only the first shipment of the lot. Most multi-site facilities were completing the full lot verification at each of their sites.

In terms of the material being used for lot verifications, commercial materials, in particular QC material, were used more commonly than material derived from patient samples. Specifically for automated chemistry or immunoassay analyzers, use of two commercial samples was most common for reagent or calibrator lot verifications. When patient materials were used for verification of new reagent lots for automated tests, most often it was five to six unmodified single patient samples rather than spiked or pooled samples. Most laboratories were not using patient samples for verification of new calibrator lots for automated tests.

In the majority of laboratories, it was a senior or charge technologist, a technical specialist, or a supervisor who reviewed the lot verification data, and the most commonly applied criterion for assessment of lot acceptability was IQMH precision goals. Most often two to four acceptance criteria were applied. The choice of IQMH precision goals over IQMH allowable performance limits as the criterion of preference may be related to the fact that QC material was used more commonly than patient samples for lot verifications. For lots that initially failed the acceptability criteria, a follow-up investigation would almost always occur before a final decision would be made on whether to accept or reject the lot. If only QC values but not patient results were found to be affected by a new lot number, the majority of laboratories would adjust their QC target values. If a decision were made to implement a lot that had failed the acceptance criteria, most laboratories would communicate the differences observed with the new lot to the end user.

The majority of surveyed laboratories monitored assay performance over multiple lots, most commonly by monitoring trends in QC means and SDs and/or PT results. Previous studies have indicated the importance of monitoring long-term cumulative changes in reagent performance as a tool for timely detection of performance drift over time, which cannot be detected easily in isolated comparisons of two lots [5,6]. However, this approach requires large sample numbers to achieve sufficient statistical power and may only be achievable in very large laboratories. Collaboration between laboratories and manufacturers in monitoring cumulative patient averages may provide avenues for timely monitoring of clinically significant long-term lot-to-lot changes [7].
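The difference between an isolated two-lot comparison and cumulative monitoring can be shown with a small arithmetic sketch. The lot means and the 5% limit used here are hypothetical, and the helper names are introduced only for illustration; the mean values could stand for a stable control material or a patient average tracked across successive lots.

```python
def percent_change(reference, value):
    """Percent change of value relative to reference."""
    return 100 * (value - reference) / reference


def lot_to_lot_changes(means):
    """Percent change of each lot relative to the lot immediately before it."""
    return [percent_change(prev, cur) for prev, cur in zip(means, means[1:])]


def cumulative_changes(means):
    """Percent change of each later lot relative to the first lot."""
    return [percent_change(means[0], cur) for cur in means[1:]]


def first_lot_exceeding(means, limit_pct):
    """Index of the first lot whose cumulative change exceeds the limit, or None."""
    for index, change in enumerate(cumulative_changes(means), start=1):
        if abs(change) > limit_pct:
            return index
    return None


# Hypothetical mean results across five successive reagent lots.
lot_means = [10.0, 10.4, 10.8, 11.2, 11.7]
LIMIT_PCT = 5.0  # hypothetical acceptance limit

print(lot_to_lot_changes(lot_means))   # each step stays under the limit
print(cumulative_changes(lot_means))   # drift relative to the first lot
print(first_lot_exceeding(lot_means, LIMIT_PCT))
```

Each consecutive comparison in this example would pass a 5% limit, yet the drift relative to the first lot exceeds that limit from the third lot onward, which is the kind of change that pairwise comparisons alone would miss.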

For the follow-up case study with verification data provided for a new lot of TSH reagent, most laboratories indicated they would use both QC and patient data and would apply more than one criterion in deciding on acceptability of the new lot, which appears consistent with the results of the initial survey. Most commonly, two SD would be applied as the acceptability criterion for QC results and percent difference would be used to assess patient results. There was considerable variability in the final decision on the new TSH reagent lot with 43% deciding to accept it and 57% deciding to reject it. It should be noted that the committee designed the case study to have results that might be considered borderline or intermediate in terms of their acceptability, rather than being obviously acceptable or rejectable. It was designed as such because that is often the real-world scenario that laboratories would encounter, and it was felt that it would provide more valuable insight on laboratory practices than a case study that most laboratories would agree about. Interestingly, even when laboratories applied the same criterion, they often did not arrive at the same decision on acceptability of the lot. There was not even consistency in the approach laboratories would take when they had conflicting results from multiple criteria, with two-thirds opting to reject the lot and one-third accepting it. The results of this case study highlight a lack of consistency among laboratories in their approaches to lot verifications. Unlike the patterns-of-practice survey, the follow-up case study did not capture who was responsible for the final decision on acceptability of the new lot when the lot verification has borderline results. Laboratories may have an escalation process in place in this situation, which may add to the complexity in new lot verification decision making.
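The two criteria most often cited by case study respondents can be sketched as follows. This is an illustrative sketch only: the function names, the example values, and the 10% limit are assumptions, and averaging the percent differences across patient samples is one possible choice rather than the method used by any surveyed laboratory.

```python
from statistics import mean


def qc_within_2sd(new_lot_value, established_mean, established_sd):
    """True if a QC result on the new lot is within 2 SD of the established mean."""
    return abs(new_lot_value - established_mean) <= 2 * established_sd


def percent_difference(current_lot_result, new_lot_result):
    """Percent difference of the new-lot result relative to the current-lot result."""
    return 100.0 * (new_lot_result - current_lot_result) / current_lot_result


def patient_comparison_passes(pairs, limit_percent):
    """True if the mean percent difference across paired patient results is within the limit."""
    differences = [percent_difference(current, new) for current, new in pairs]
    return abs(mean(differences)) <= limit_percent


# Hypothetical QC data: established mean 5.0, SD 0.2, new-lot result 5.3.
qc_ok = qc_within_2sd(new_lot_value=5.3, established_mean=5.0, established_sd=0.2)

# Hypothetical paired patient results as (current lot, new lot).
pairs = [(0.50, 0.54), (2.00, 2.10), (8.00, 8.40)]
patients_ok = patient_comparison_passes(pairs, limit_percent=10.0)

print(qc_ok, patients_ok)
```

When the two checks disagree, the sketch deliberately leaves the final decision open, since that is the situation in which respondents diverged.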

It is important for laboratories to establish and follow a procedure for evaluating new lots, not only to fulfill accreditation requirements, but also to ensure release of accurate and consistent patient results to assist clinicians in diagnosis and treatment decisions [8–10]. CLSI guideline EP26-A [3] provides thorough guidance for laboratories on how to handle reagent lot verifications. The detailed recommendations from EP26-A are not reproduced here and laboratories are referred to the document itself. The guideline clearly identifies the potential for effects on analyte results with a new reagent lot that may be due, for instance, to a change or instability of a reagent component or a difference in calibration of the new lot. Such issues may not always impact patient samples and QC material equally due to differences in their matrix. In some cases, QC material alone may show a difference in results with a new reagent lot and in other cases QC material may fail to identify changes that occur with patient results specifically. EP26-A thus recommends that patient specimens, at minimum three, be included in the verification process for a new reagent lot rather than relying solely on QC material. It provides calculations for the optimal number of patient specimens to be tested to catch clinically significant changes in reagent performance (true positives) while minimizing the possibility of falsely rejecting a reagent lot that does not have a clinically significant effect on patient results (false positives). The calculations are based on a hierarchy of preferred criteria for calculating a critical difference for each test and are influenced by the imprecision of the assay [3]. Such calculations are expected to be performed at a minimum of two clinically relevant concentrations. They are admittedly complicated calculations that are likely beyond the means of many laboratories to perform. In addition, the number of samples calculated may turn out to be unreasonably high in some situations. An alternative recommendation is to use ten patient samples: three low-range, three mid-range, and three high-range, plus one additional sample to cover the lower limit of detection or the upper limit of linearity [11]. It appears, though, that a significant number of surveyed laboratories are not even meeting EP26-A's recommended minimum of three patient specimens per reagent lot verification.

Including patient specimens in reagent lot verification depends on having enough appropriate samples that are sufficiently stable and have adequate volume for repeat testing. Meeting these requirements is challenging for laboratories, as is completing the verification while continuing to offer testing with the current reagent lot. EP26-A recommends completing reagent lot verification before depleting the current reagent, but it is not always feasible to analyze two reagent lots in parallel. This difficulty was the number one reason our survey respondents gave for not completing lot verifications. Most of our surveyed laboratories that do complete some form of verification appeared to do so immediately before the lot number is put into use, which creates stressful situations when results do not meet specifications and troubleshooting must be performed before the new lot number can be used. With the number of assays being performed in a given laboratory and the number of new lot numbers needing verification, a great deal of stress can be associated with lot changes. One laboratory included in our survey commented that they monitored new lot numbers for electrolyte tests on their automated chemistry system by quality control material only, since the electrolyte measurements used multiple reagents and two standard solutions, each of which could change lot numbers at different points in time; this comment highlights the complexity that can be involved with lot verifications.

While EP26-A provides recommendations for laboratories on how to evaluate new reagent lots, it does not discuss how laboratories should evaluate new calibrator lots. Given the complexity of the existing guidelines and their gaps, it is not very surprising that our surveys revealed such heterogeneity in laboratory practices regarding lot verifications. If anything is highlighted by our survey results, it may be the need for clear and feasible lot verification recommendations for laboratories.

Interestingly, our surveys revealed that in some cases laboratories are going beyond what is recommended by EP26-A. Forty-nine percent of our surveyed laboratories were performing at least partial verification of new shipments of previously verified reagent and/or calibrator lots. For laboratories with limited storage capacity that have reagent lots sequestered off-site and shipped regularly by the assay vendor, this could represent a significant number of verifications. Similarly, 58% of multi-site laboratories indicated they were performing full verification of each lot at each of their affiliated sites. As stated in EP26-A, once a new lot has been confirmed to be acceptable using patient samples, it is not necessary to perform the same full verification on new shipments or at affiliated sites using the same method, since any change in reagent performance that may have occurred during shipping or handling will be detected by testing of QC samples. As the matrix-related bias is expected to be consistent for the same reagent lot, it is acceptable to verify the same lot number in new shipments or at affiliated sites by use of QC samples alone, as long as sites are using the same assay material and instrumentation [3,12]. Laboratories should consider these modified verification procedures, which can save time and resources.
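The reduced verification described above can be summarized as a simple decision rule. The sketch below is a simplified reading of this paragraph, not a reproduction of EP26-A; the function and parameter names are hypothetical.

```python
from enum import Enum


class Scope(Enum):
    FULL = "full verification including patient samples"
    QC_ONLY = "verification with QC samples alone"


def verification_scope(lot_already_verified, same_assay_and_instrumentation):
    """Suggest a verification scope for an incoming shipment or an affiliated site.

    Assumption: QC-only verification applies only when this exact lot number has
    already passed a full verification with patient samples AND the site uses the
    same assay material and instrumentation as the site that verified it.
    """
    if lot_already_verified and same_assay_and_instrumentation:
        return Scope.QC_ONLY
    return Scope.FULL


# A new shipment of a lot that was already fully verified on the same method.
print(verification_scope(True, True).value)

# A lot number that has not been verified before.
print(verification_scope(False, True).value)
```

Any case that does not clearly meet both conditions falls back to full verification, which is the conservative choice.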

Overall, the results of our surveys on lot verification have revealed that the majority of laboratories confirm acceptability of new reagent and/or calibrator lots before they are put into clinical use. However, they also revealed deficiencies in the processes being followed. Even when laboratories were given the same lot verification data to interpret, there was a marked lack of consistency both in the approach laboratories would take in deciding whether to accept the new reagent lot and in their final verdicts. This highlights the need for easy-to-follow guidance for laboratories on this important topic.

Funding

This research did not receive any specific grant support.

CRediT authorship contribution statement

Angela C. Rutledge: Conceptualization, Methodology, Formal analysis, Writing – original draft. Anna Johnston: Conceptualization, Methodology, Formal analysis, Writing – original draft. Ronald A. Booth: Conceptualization, Methodology, Formal analysis, Writing – original draft. Kika Veljkovic: Conceptualization, Methodology, Formal analysis, Writing – review & editing. Dana Bailey: Conceptualization, Methodology, Formal analysis, Writing – review & editing. Hilde Vandenberghe: Conceptualization, Methodology, Formal analysis, Writing – review & editing. Gayle Waite: Conceptualization, Methodology, Formal analysis, Writing – review & editing. Lynn C. Allen: Conceptualization, Methodology, Formal analysis, Writing – review & editing. Andrew Don-Wauchope: Conceptualization, Methodology, Formal analysis, Writing – review & editing. Pak Cheung Chan: Conceptualization, Methodology, Formal analysis, Writing – review & editing. Julia Stemp: Conceptualization, Methodology, Formal analysis, Writing – review & editing. Pamela Edmond: Conceptualization, Methodology, Formal analysis, Writing – review & editing. Victor Leung: Conceptualization, Methodology, Formal analysis, Writing – review & editing. Berna Aslan: Conceptualization, Methodology, Formal analysis, Writing – original draft.

Declarations of competing interest

None.

Acknowledgements

The authors would like to thank the IQMH Information Technology and Communications staff for their support with preparing the online surveys, distributing them to participating laboratories, and formatting the committee comments sent to laboratories.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.plabm.2022.e00300.

Contributor Information

Angela C. Rutledge, Email: angela.rutledge@lhsc.on.ca.

Anna Johnston, Email: ajohnston@iqmh.org.

Ronald A. Booth, Email: rbooth@uottawa.ca.

Kika Veljkovic, Email: kika.veljkovic@lifelabs.com.

Dana Bailey, Email: baileyd@dynacare.ca.

Hilde Vandenberghe, Email: hvandenberghe@mtsinai.on.ca.

Gayle Waite, Email: gayleathome@gmail.com.

Lynn C. Allen, Email: lynn.allen@utoronto.ca.

Andrew Don-Wauchope, Email: andrew.don-wauchope@lifelabs.com.

Pak Cheung Chan, Email: pc.chan@sunnybrook.ca.

Julia Stemp, Email: jstemp@iqmh.org.

Pamela Edmond, Email: ronedmond@outlook.com.

Victor Leung, Email: vleung@josephbranthospital.ca.

Berna Aslan, Email: berna.aslan@easternhealth.ca.

References

1. Carlson R.O., Amirahmadi F., Hernandez J.S. A primer on the cost of quality for improvement of laboratory and pathology specimen processes. Am. J. Clin. Pathol. 2012;138(3):347–354. doi: 10.1309/AJCPSMQYAF6X1HUT.

2. Algeciras-Schimnich A. “Tackling reagent lot-to-lot verification in the clinical laboratory.” AACC.org. https://www.aacc.org/cln/articles/2014/july/bench-matters Accessed.

3. Clinical and Laboratory Standards Institute. User Evaluation of Between-Reagent Lot Variation; Approved Guideline. CLSI Document EP26-A; 2013.

4. International Organization for Standardization. ISO 15189 Medical Laboratories – Requirements for Quality and Competence. third ed. 2012.

5. Algeciras-Schimnich A., Bruns D.E., Boyd J.C., Bryant S.C., La Fortune K.A., Grebe S.K. Failure of current laboratory protocols to detect lot-to-lot reagent differences: findings and possible solutions. Clin. Chem. 2013;59(8):1187–1194. doi: 10.1373/clinchem.2013.205070.

6. Liu J., Tan C.H., Loh T.P., Badrick T. Detecting long-term drift in reagent lots. Clin. Chem. 2015;61(10):1292–1298. doi: 10.1373/clinchem.2015.242511.

7. Thompson S., Chesher D. Lot-to-lot variation. Clin. Biochem. Rev. 2018;39(2):51–60.

8. Don-Wauchope A.C. Lot change for reagents and calibrators. Clin. Biochem. 2016;49(16–17):1211–1212. doi: 10.1016/j.clinbiochem.2016.04.003.

9. Miller W.G., Erek A., Cunningham T.D., Oladipo O., Scott M.G. Commutability limitations influence quality control results with different reagent lots. Clin. Chem. 2011;57(1):76–83. doi: 10.1373/clinchem.2010.148106.

10. Bais R., Chesher D. More on lot-to-lot changes. Clin. Chem. 2014;60(2):413–414. doi: 10.1373/clinchem.2013.215111.

11. Martindale R.A., Cembrowski G.S., Journault L.J., Crawford J.L., Tran C., Hofer T.L., Rintoul B.J., Der J.S., Revers C.W., Vesso C.A., Shalapay C.E., Prosser C.I., LeGatt D.F. Validating new reagents: roadmaps through the wilderness. Lab. Med. 2006;37(6):347–351. doi: 10.1309/BRC6Y37NM3BU97WX.

12. Clinical and Laboratory Standards Institute. Verification of Comparability of Patient Results within One Health Care System; Approved Guideline (Interim Revision). CLSI Document EP31-A-IR; 2012.