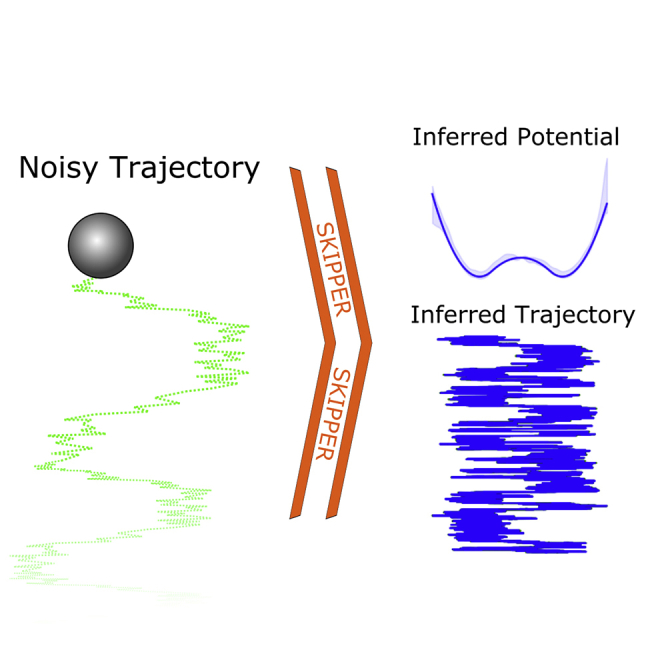

Summary

While particle trajectories encode information on their governing potentials, potentials can be challenging to robustly extract from trajectories. Measurement errors may corrupt a particle’s position, and sparse sampling of the potential limits data in higher energy regions such as barriers. We develop a Bayesian method to infer potentials from trajectories corrupted by Markovian measurement noise without assuming a prior functional form for the potentials. As an alternative to Gaussian process priors over potentials, we introduce structured kernel interpolation to the Natural Sciences, which allows us to extend our analysis to large datasets. Structured-Kernel-Interpolation Priors for Potential Energy Reconstruction (SKIPPER) is validated on 1D and 2D experimental trajectories for particles in a feedback trap.

Subject areas: Physics, Optics, Statistical physics

Graphical abstract

Highlights

- A feedback trap was used to generate noisy Langevin microbead trajectories
- The potential energy surface is recovered using a Bayesian formulation
- The formulation uses a structured-kernel-interpolation Gaussian process (SKI-GP) to tractably approximate Gaussian process regression for larger datasets
- Thanks to our adaptation of SKI-GP, we have broadened the use of Gaussian processes for natural science applications

Introduction

Determining potentials governing particle dynamics is of fundamental relevance to materials science (Deringer et al., 2019; Handle and Sciortino, 2018; Oh et al., 2022), biology (Makarov, 2015; Wang and Ferguson, 2016; Wang et al., 1997; Chu et al., 2013; Kang and Liu, 2021; Wu et al., 2021), and beyond (García et al., 2018; Dudko et al., 2006; Preisler et al., 2004; La Nave et al., 2002). For example, shapes of energy landscapes provide reduced dimensional descriptions of dynamics along reaction coordinates (Wang and Ferguson, 2016; Wang and Verkhivker, 2003; Chu et al., 2013) and key estimates of thermodynamic and kinetic quantities (Hänggi et al., 1990; Berezhkovskii et al., 2017; Bessarab et al., 2013). Shapes of energy landscapes also provide key insight into molecular function such as the periodic three-well potential of the F1-ATP synthase rotary motor (Wang and Oster, 1998; Toyabe et al., 2012) and the asymmetric, linearly periodic potentials responsible for kinesin’s processivity (Kolomeisky and Fisher, 2007). In a different class of applications, fundamental experimental tests of statistical physics (Proesmans et al., 2020; Wu et al., 2009) often employ potentials with deliberately complex shapes created from feedback traps based on electrical (Cohen, 2005; Gavrilov et al., 2013), optical (Kumar and Bechhoefer, 2018a; Albay et al., 2018), or thermal forces (Braun et al., 2015), or optically generated with phase masks (Hayashi et al., 2008) or spatial light modulators (Chupeau et al., 2020).

Inferring naturally occurring energy landscapes or verifying artificially created potentials demands a method free of a priori assumptions on the potential’s shape. This requirement rules out many commonly used methods devised for harmonic systems (Neuman and Block, 2004; Berg-Sørensen and Flyvbjerg, 2004; Jones et al., 2015; Gieseler et al., 2021) or alternative, otherwise-limited, methods to deduce potentials from data (Reif, 2009; Türkcan et al., 2012; García et al., 2018; Wang et al., 2019; Frishman and Ronceray, 2020; Yang et al., 2021; Stilgoe et al., 2021). For example, some methods (Reif, 2009; Türkcan et al., 2012) necessarily rely on binned data, relating potential energies to Boltzmann weights or average apparent force, thereby limiting the frequency of data in each bin and requiring that equilibrium be reached before data acquisition. Other methods assume stitched locally harmonic forms (García et al., 2018). Still others use neural networks (Wang et al., 2019) to deduce potentials; the uncertainty originating from measurement error and data sparsity is then not easily propagated to local uncertainty estimates over the inferred potential.

In previous work (Bryan IV et al., 2020), we introduced a method starting from noiseless one-dimensional time series data to infer effective potential landscapes without binning or assuming a potential form, even in the case of limited sampling, while admitting full posterior inference (and thus error bars or, equivalently, credible intervals) over any candidate potentials arising from sparse data. Our method was, however, fundamentally limited to one dimension (because of the poor scaling of the computation with respect to the dataset size) and to noiseless data. As such, the method could not distinguish the effects of inherent stochasticity in the dynamics (due to temperature, say) from noise introduced by the measurement apparatus. It would therefore be helpful to develop a method capable of discriminating between both noise sources and learning features of both, just as the perennial hidden Markov model achieves for discrete state spaces (Rabiner and Juang, 1986; Sgouralis and Pressé, 2017).

Here, we introduce a method to infer potentials from noisy, multidimensional data often sampled in a limited fashion. We take advantage of tools from Bayesian nonparametrics to place priors on potentials assuming no prior functional forms for these potentials. To do so, we introduce structured-kernel-interpolation Gaussian processes (SKI-GP) (Wilson and Nickisch, 2015) to the Natural Sciences in order to circumvent the otherwise-prohibitive computational scaling of widely used Gaussian processes. In our application, we utilize the power of SKI-GP to create Structured-Kernel-Interpolation Priors for Potential Energy Reconstruction (SKIPPER). With SKIPPER, potentials can be inferred from trajectories while meeting all the following criteria simultaneously: 1) no reliance on binning or pre-processing, 2) no assumed analytic potential form, 3) inferences drawn from posteriors, allowing for spatially nonuniform uncertainties to be informed by local density of available data in specific regions of the potential (e.g., fewer data points around barriers), 4) treatment of multidimensional trajectories, 5) rigorous incorporation of measurement noise through likelihoods, and 6) compatibility with lightly sampled trajectories. No other existing method meets all six criteria simultaneously.

This work serves the dual role of a method for inferring potentials with SKIPPER, as well as a demonstration of how to incorporate SKI-GPs into an inference framework. In particular, we demonstrate how to construct an effective kernel matrix for an unknown variable (the potential landscape) and how to directly sample the variable from a conditional posterior.

Results

We benchmark SKIPPER on experimental data on a double-well potential and show that we can accurately infer the shape of the potential. We then show that SKI-GP allows us to explore 2D time series data (previously infeasible due to large amounts of data using a naive GP). We finally apply SKIPPER to trajectories in a high-barrier landscape where traces are too short to reach equilibrium. A demonstration on data from a simple harmonic well, a complicated 2D potential, and robustness tests over parameters of interest can be found in the SI (Bryan IV et al., 2022).

For testing the accuracy and effectiveness of SKIPPER, we simultaneously collected two measurements of each trajectory, one using a detector with low measurement noise and one using a second detector with higher measurement noise, as described in the STAR Methods. We refer to the low-noise trajectory as the “ground truth” trajectory, although it itself is subject to a small amount of measurement noise. For each experiment, we impose a potential on the particle using our feedback trap. We refer to this applied potential as the “ground truth” potential, although it may differ from the actual potential the particle experiences due to errors in the feedback trap setup, as well as experimental limitations such as drift.

Demonstration on simulated data

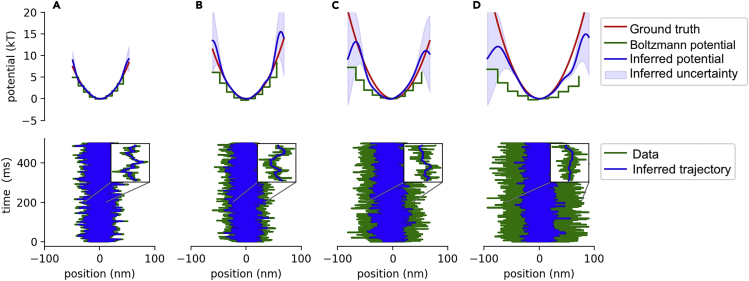

We started by analyzing simulated trajectories from a simple harmonic well. Results are shown in Figure 1. Each column shows the inferred potential (top row) and inferred trajectory (bottom row) for each dataset analyzed. We provide uncertainties and ground truth estimates for both the potential and trajectory. Additionally, for sake of comparison, we also show the potential estimated using the Boltzmann method (Bryan IV et al., 2020; Reif, 2009) (outlined in the SI). We highlight that the Boltzmann method does not provide trajectory estimates. By contrast, SKIPPER infers those positions obscured by noise.

Figure 1.

Demonstration on data from a harmonic potential

Here, we analyze four datasets with increasing measurement noise. For each dataset, we plot the inferred potential in the top row along with the ground truth and the results of the Boltzmann method. We plot the inferred trajectory with uncertainty against the ground truth in the bottom row. For clarity, we zoom into a region of the trajectory (200 ms–201 ms). Measurement noise is added by increasing the optical density of the ND filter. The optical densities of the sub-figures A, B, C, and D are 0, 0.3, 0.5, and 0.6, respectively. Each trace contains 50,000 data points.

Figure 1 shows that the ground truth potential and trajectory fall within our error bars (credible interval) for all datasets up to ND = 7. At ND = 7, the inferred potential develops bumps where it is unable to infer the trajectory accurately, resulting in a 2 nm shift of the potential well minimum. On the other hand, the Boltzmann method (see earlier discussion) does well in the low-noise case (Figure 1, left column) but fails as the noise introduced grows (Figure 1, right column). This is expected, as measurement noise broadens the position histogram and thereby the potential. Using SKIPPER, the estimate of the potential drops at the edges of the spatial region sampled by the particle, since the high-potential edges are rarely visited by the particle. We thus have insufficient information through the likelihood to inform those regions, and the inferred potential reverts back to the prior (set at 0, as described earlier).

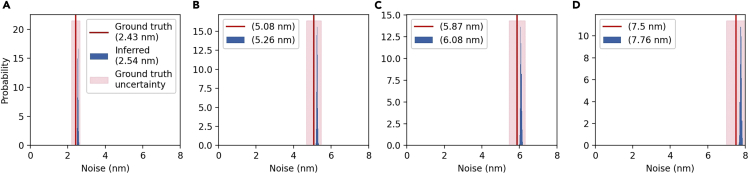

In addition to inferring estimates for the potential energy landscape and trajectory, we also estimate the magnitude of the measurement noise for each experiment. Figure 2 shows the inferred measurement noise magnitudes obtained from SKIPPER for each single-well experiment, with the mean of our PDFs within 5% of the “ground truth” measurement, for each dataset analyzed.

Figure 2.

Inferred noise levels from experiments on harmonic well

We analyze four datasets with increasing measurement noise. For each dataset, we plot the probability density function of the inferred magnitude of the noise against a vertical line representing the best estimate inferred using the calibration techniques outlined earlier, with a shaded pink region representing the uncertainty in the calibrations establishing “ground truth.” Measurement noise is added by increasing the optical density of the ND filter, thereby decreasing the light intensity incident on the QPD. The optical densities of sub-figures (A, B, C, and D) are 0, 0.3, 0.5, and 0.6, respectively. Each trace contains 50,000 data points.

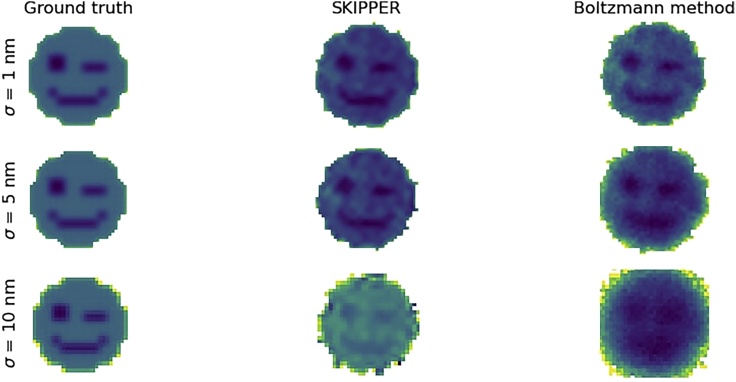

Next, to show the full utility of SKIPPER, we analyzed simulated data from a complicated 2D potential with wells in the shape of a winky face. The winky face was constructed from a grid of Gaussian wells with potential energy corresponding to the pixels of a winky face. The winky face is 13 × 13 pixels, where each pixel is 10 nm wide. The eyes and mouth are about 2 kT less than the rest of the face. The white space around the face has potential energy above 20 kT, to ensure that the particle stays inside the face. We simulated a 50,000 data point trace of a particle moving along the winky face.

Figure 3 shows results on this potential. Note that potential energies greater than 5 kT and potential energies in areas where the particle is not encountered are displayed as white background. In the top row of Figure 3, when measurement noise is small compared to the size of the face (1 vs 130 nm), both SKIPPER and the Boltzmann method are able to reconstruct a potential energy landscape that is recognizably a winky face. However, with moderate noise (5 nm), SKIPPER is able to pick up the fine detail in the face that is missed by the Boltzmann method (Figure 3 middle row). In the case of high noise (10 nm), SKIPPER’s advantage over the Boltzmann method is more dramatic, as SKIPPER’s reconstruction is still recognizably a winky face, whereas the Boltzmann method’s reconstruction not only fails to resemble a face but also overestimates the width of the face (Figure 3 bottom row).

Figure 3.

Demonstration on 2D winky face potential

Here, we show results from inference on a 2D potential with wells in the shape of a winky face. The left column shows the ground truth potential energy landscape. The middle column shows inference using SKIPPER. The right column shows inference using the Boltzmann histogram method. The top row analyzes data simulated with 1 nm measurement noise. The middle row analyzes data simulated with 5 nm measurement noise. The bottom row analyzes data simulated with 10 nm measurement noise. Note that in our display pixels with potential energy greater than 5 kT are white.

Robustness tests on simulated data

In order to probe SKIPPER’s robustness with respect to parameters of interest, we demonstrate SKIPPER on data simulated with a single-well potential analyzed under different circumstances.

First, we tested SKIPPER’s robustness with respect to the number of data points. Supplementary figure SI-1 shows the results on simulated data. Trivially, when the trajectory is so short that the particle does not travel across the well (as in Fig. SI-1, left panel), we cannot infer the shape of the potential. When the particle samples the entire well (as in Fig. SI-1, second panel), we infer the general shape of the potential, but the inference is strongly affected by small stochastic anomalies. See, for example, the far right of the potential, where a few higher- and lower-than-expected thermal kicks at the right side of the well caused SKIPPER to infer a second well. For reasonable inference, a few thousand points suffice (Fig. SI-1, third panel). When there are tens of thousands of data points (Fig. SI-1), our inferred potential almost exactly overlaps with the ground truth.

We then tested SKIPPER’s robustness with respect to measurement noise in order to probe the regime in which SKIPPER can be used. Supplementary figure SI-2 shows the results. SKIPPER was robust to measurement noise for the four values of measurement noise variance chosen, but could not reproduce the potential energy landscape when the magnitude of the noise was of the same order as the maximum range of the particle. Even so, when the magnitude of the noise was up to 10% of the maximum range of the particle ( nm), we nonetheless inferred the potential energy landscape accurately.

Double well

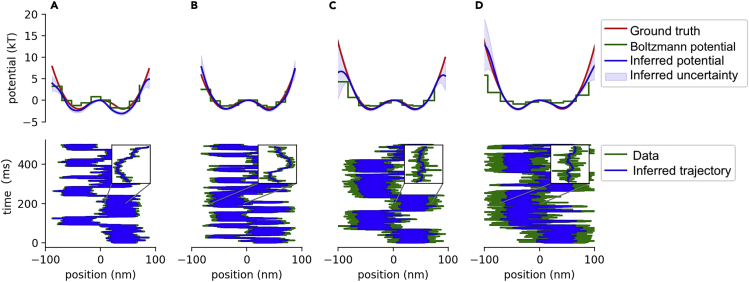

We analyzed data from a real particle in a double-well potential. Results are shown in Figure 4. Each column shows the inferred potential (top row) and inferred trajectory (bottom row) for each dataset analyzed. We provide uncertainties and ground truth estimates for both the potential and trajectory. Additionally, for sake of comparison, we also show the potential estimated using the Boltzmann method (Bryan IV et al., 2020; Reif, 2009). Briefly, the Boltzmann method, as outlined in the SI (Bryan IV et al., 2022), assumes that the bead localizations are sampled from the Boltzmann distribution and derives an estimate of the potential in discretized bins of space from the log of the relative frequency the bead is seen in each bin. We highlight that the Boltzmann method does not provide trajectory estimates. By contrast, SKIPPER infers those positions obscured by noise. Figure 4 shows that the ground truth potential and trajectory fall within the estimated range even when the measurement noise is so large that the particle is occasionally seen in the wrong well (Figure 4, top right panel). Both SKIPPER and the Boltzmann method slightly overestimate the potential of the left well at the lowest noise level, because the (short) trajectory spends too much time in the right well, leaving the left well undersampled.
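The Boltzmann method described above can be sketched in a few lines. This is a minimal illustration rather than the implementation used for the figures: the function name `boltzmann_potential`, the bin width, and the toy input are hypothetical, and the estimate is only defined up to an additive constant (fixed here by setting the most-visited bin to zero).

```python
import math
from collections import Counter


def boltzmann_potential(positions, bin_width, kT=1.0):
    """Histogram-based estimate of U at each visited bin center (same units as kT)."""
    # Assign every localization to a spatial bin.
    counts = Counter(math.floor(x / bin_width) for x in positions)
    total = sum(counts.values())
    # U = -kT * log(relative frequency), defined up to an additive constant.
    potential = {
        (b + 0.5) * bin_width: -kT * math.log(c / total)
        for b, c in sorted(counts.items())
    }
    # Fix the constant so the most-visited bin sits at zero.
    u_min = min(potential.values())
    return {center: u - u_min for center, u in potential.items()}


# Toy input: three localizations in the bin [0, 1) and one in [1, 2).
estimate = boltzmann_potential([0.1, 0.2, 0.3, 1.1], bin_width=1.0)
```

Because the estimate is built from the measured positions directly, any measurement noise broadens the histogram and therefore the estimated well, and bins that are never visited receive no estimate at all.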

Figure 4.

Demonstration on data from a double-well potential

We analyze four datasets with increasing measurement noise. For each dataset, we plot the inferred potential in the top row alongside the ground truth and results of the Boltzmann method. We plot the inferred trajectory against the ground truth in the bottom row. For clarity, we zoom into a region of the trajectory (200 ms–201 ms). Measurement noise is added by increasing the optical density of the ND filter. The optical densities of the sub-figures (A, B, C, and D) are 0, 0.3, 0.5, and 0.7, respectively. Each trace contains 50,000 data points.

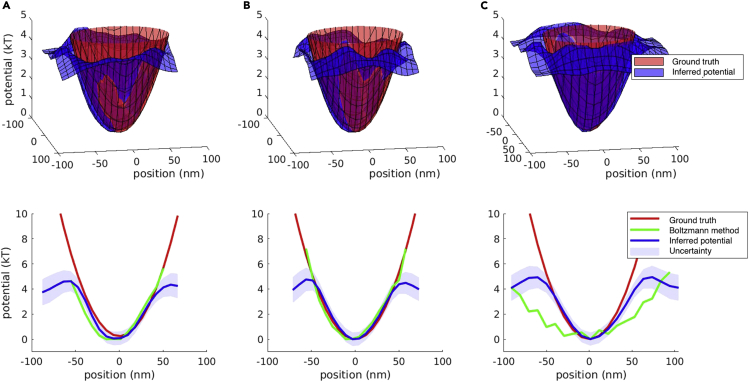

2D single well

Next, we analyzed data from a real particle in a 2D harmonic potential. Results are shown in Figure 5. For clarity, we do not show uncertainties, trajectories, or Boltzmann-method estimates for the 2D plot, but we do show them for a 1D potential slice. Despite the added complexity in inferring the potential in full 2D at once, our estimates fall within uncertainty in regions where data are appreciably sampled, even at high measurement noise.

Figure 5.

Demonstration on data from a 2D harmonic potential

Top row: three datasets with increasing measurement noise. Each column shows the inferred potential results along with the ground truth potential for a different dataset. At the top, we show the inferred potential and ground truth plotted in 2D. Bottom row: 1D slice taken through the middle of the potential. Measurement noise is added by increasing the optical density of the ND filter. The optical densities of the ND filter used in the sub-figures (A, B, and C) are 0.0, 0.3, and 0.7, respectively.

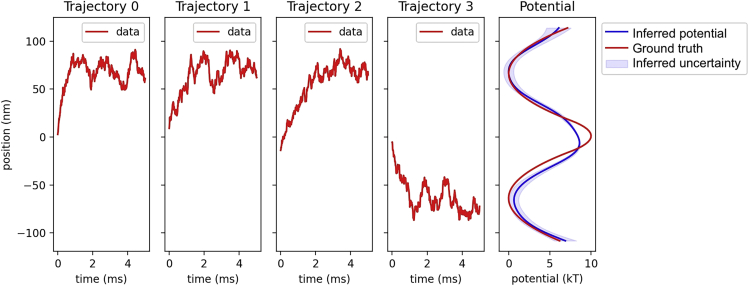

Trajectories with limited sampling

One advantage of SKIPPER is that it does not rely on equilibrium assumptions. As such, we can analyze trajectories initiated far from equilibrium and with limited sampling, such that the particle does not reach equilibrium in the duration of the sampling. To demonstrate this, we created real datasets where the particle starts at the top of the potential well and “rolls off” to either side. The trajectories are short (5 ms), so that the particle does not reach equilibrium during the time trace. By including the likelihoods from 100 such trajectories into our posterior, we gain information on either side of the well and can recreate the full potential, even though each individual trajectory is initiated from the top and samples only one well.

In the first four panels of Figure 6, we illustrate 4 of 100 trajectories used to reconstruct the potential. We note that all trajectories start from the top of the barrier (defined as ), and none of the trajectories fully sample both wells.

Figure 6.

Demonstration of data from experimental trajectories with limited sampling

We reconstruct a potential by analyzing many short (500 data points) trajectories with limited sampling. The left four panels show four of the 100 small data segments used to reconstruct the potential. Each trajectory starts at the top of the potential and rolls off to either side. The far right shows the inferred potential plotted with uncertainty overlaid on the ground truth potential and the potential inferred using the Boltzmann method. For comparison, the inferred and ground truth energy landscapes were shifted so that the lowest point is set to 0 kT.

As all trajectories start from the top of the well, we collect a disproportionately large amount of data at the top of the well. Indeed, for our 10 kT barrier with roughly 50,000 samples, we would not expect to see any samples at the top of the barrier in an experiment taken after the bead had reached equilibrium. Despite this extreme oversampling, SKIPPER is able to infer the height of the barrier to within 15% accuracy (SKIPPER predicts an 8.6 kT barrier; the barrier of the design potential is 10 kT). Our error bar at the top of the barrier in Figure 6 is artificially low because every trajectory initiates from the top.

Discussion

In conclusion, inferring potential landscapes is a key step toward providing a reduced dimensional description of complex systems (Wang et al., 2019; Chmiela et al., 2017; Espanol and Zuniga, 2011; Izvekov and Voth, 2005; Manzhos et al., 2015). Here, we go beyond existing methods by providing a means of obtaining potentials, among multiple other quantities, from time series data corrupted by measurement noise. We do so by efficiently learning the potential from the rawest form of data, point-by-point. That is, we achieve this without data pre-processing (e.g., binning), without assuming an analytical potential form, and without requiring equilibrium conditions. As SKIPPER is Bayesian, it allows for direct error propagation to the final estimate of the inferred potential shape. In other words, SKIPPER differs from other methods assuming analytic potential forms (Pérez-García et al., 2021) or projection onto basis functions (Frishman and Ronceray, 2020), as well as methods relying on neural nets (Manzhos et al., 2015) that cannot currently propagate experimental uncertainty or provide error bars reflecting the amount of data informing the potential at a particular location. Importantly, unlike the Boltzmann method (Reif, 2009), SKIPPER does not invoke any equilibrium assumption and can consider trajectories initiated from positions not sampled from an equilibrium distribution. This feature is especially relevant in studying landscapes with rarely sampled regions of space and, in particular, far from equilibrium.

SKIPPER analyzes time-independent potential energy landscapes. Realistic potentials may however vary with time (Sánchez-Sánchez et al., 2019; León-Montiel and Quinto-Su, 2017). For this reason, it may be advantageous to adapt our framework to handle time-varying potentials. This can be done by either treating the potential as a Markov process where the potential is allowed to vary slightly each frame (Williams and Rasmussen, 2006), or by extending the placement of inducing points into a time dimension, and adapting the kernel function accordingly.

In this work, we assume a Gaussian noise model, but we can, in principle, utilize different noise models by replacing Equation 4 with the desired model. As SKI-GP is general and the measurement noise model can be tuned, moving forward we could apply modified SKIPPER algorithms to map potential landscapes from force spectroscopy (Gupta et al., 2011) or even single molecule fluorescence energy transfer (Kilic et al., 2021; Sgouralis et al., 2018), with applications to inferring protein conformational dynamics or binding kinetics (Schuler and Eaton, 2008; Chung and Eaton, 2018; Sturzenegger et al., 2018; Pressé et al., 2013, 2014). In inferring smooth potentials, we would move beyond the need to require discrete states inherent to traditional analysis paradigms such as hidden Markov models (Rabiner and Juang, 1986; Sgouralis and Pressé, 2017).

Beyond inferring potentials, we believe that SKI-GPs may become a powerful tool within the Natural Sciences especially in cases, such as this one, where we deal with noisy and abundant data while learning continuous functions from data. The SKI-GP prior shifts the computational burden from inferring the potential at every data point, to inferring the potential at select inducing points allowing the computation time to scale cubically with the number of inducing points rather than cubically with the number of data points. As the number of inducing points required to create a detailed map of the potential is often far less than the number of data points, this allows for significant computational cost reduction. Furthermore, as the inducing points are static, they allow for pre-computation of the kernel matrix despite the fact that locations of the data points change at each iteration of the Gibbs sampler. As demonstrated in this work, SKI-GPs can be used to accurately and tractably map fields of variables. Such fields are ubiquitous in nature, including temperature maps (Aigouy et al., 2005), optical absorption coefficient maps (Yuan and Jiang, 2006), and diffusion coefficient maps (Taylor and Bushell, 1985; Weistuch and Pressé, 2017). We believe that this work can serve as a demonstration for how to incorporate SKI-GPs into inference frameworks that can be extended beyond potential learning.
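The role of the inducing points can be illustrated with a small sketch. It assumes a 1D regular grid of inducing points, a squared-exponential kernel, and linear interpolation weights (SKI as originally proposed uses higher-order local interpolation); the function names and all numerical values are hypothetical and chosen only for illustration. The kernel between any two data points is approximated from the fixed inducing-point kernel matrix, which is computed once.

```python
import math


def rbf_kernel(a, b, length_scale=1.0, amplitude=1.0):
    """Squared-exponential kernel between two scalar locations."""
    return amplitude ** 2 * math.exp(-0.5 * ((a - b) / length_scale) ** 2)


def interpolation_weights(x, grid):
    """Linear interpolation weights of point x on a regular, sorted grid."""
    step = grid[1] - grid[0]
    # Left neighbor index, clamped so points outside the grid
    # are extrapolated from the edge cell.
    i = min(max(int((x - grid[0]) // step), 0), len(grid) - 2)
    t = (x - grid[i]) / step
    w = [0.0] * len(grid)
    w[i], w[i + 1] = 1.0 - t, t
    return w


def ski_kernel(x1, x2, grid, K_uu):
    """Approximate k(x1, x2) as w(x1)^T K_uu w(x2)."""
    w1 = interpolation_weights(x1, grid)
    w2 = interpolation_weights(x2, grid)
    n = len(grid)
    return sum(w1[i] * K_uu[i][j] * w2[j] for i in range(n) for j in range(n))


grid = [0.1 * i for i in range(51)]  # 51 inducing points on [0, 5]
K_uu = [[rbf_kernel(a, b) for b in grid] for a in grid]  # precomputed once

exact = rbf_kernel(1.23, 2.71)
approx = ski_kernel(1.23, 2.71, grid, K_uu)
```

Only the interpolation weights depend on the data locations, so when the inferred positions change from one sampler iteration to the next, `K_uu` and any factorization of it can be reused.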

Limitations of the study

The model formulation requires that potentials are time independent and measurement noise is Gaussian distributed. The time scales and drag coefficient are assumed to be large enough to approximate motion with overdamped Langevin dynamics.

STAR★Methods

Key resources table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Software and algorithms | | |
| Igor Pro 9 | WaveMetrics | https://www.wavemetrics.com |
| Numba 0.55.2 | Numba | https://numba.pydata.org/ |
| PotentialLearner | Zenodo | https://doi.org/10.5281/zenodo.6680638 |
| Data | Zenodo | https://doi.org/10.5281/zenodo.6680673 |

Resource availability

Lead contact

Further information and requests for resources, algorithms, and methods should be directed to Steve Pressé (spresse@asu.edu).

Materials availability

This study did not generate new unique materials.

Method details

Experimental apparatus

The experiment is done on a modified version of the feedback optical tweezer used in various stochastic thermodynamics experiments involving virtual potentials (Kumar and Bechhoefer, 2018a, 2020). The schematics of the setup are provided in Fig. SI-3. We used a continuous-wave diode-pumped laser (HÜBNER Photonics, Cobolt Samba, 1.5 W, 532 nm) and a custom-built microscope to construct the optical trap on a vibration-isolation table (Melles Griot). We split the laser into a trapping-beam and a detection-beam using a 90:10 beam splitter. The trapping beam then passes through a pair of acousto-optic deflectors (DTSXY-250-532, AA Opto Electronic), which allows us to deflect the beam in the orthogonal XY plane. We can steer the angle of the beam and also its intensity using analog voltage-controlled oscillators (DFRA10Y-B-0-60.90, AA Opto Electronic). We increase the beam-diameter using a two-lens system in telescopic configuration to overfill the back aperture of the trapping microscope objective (MO1 in Figure S3). Then a 4f relay system images the steering point of the AOD on the back aperture of MO2. A water-immersion, high-numerical-aperture objective (MO2, Olympus 60X, UPlanSApo, NA = 1.2) is used for trapping 1.5 μm diameter spherical silica beads (Bangs Laboratories) in aqueous solution (SC).

The detection beam is passed through a half-wave plate (λ/2), so that its polarization is orthogonal to the trapping beam, to avoid unwanted interference. It is focused using a low-numerical-aperture microscope objective (MO1, 40X, NA = 0.4) antiparallel to the trapping objective MO2. The loosely focused detection beam (compared to the trapping beam) has a larger focal spot and offers a high linear range for the position detection. We can also adjust the detection plane using a 4f relay lens system. The forward scattered detection beam from the trapped bead is collected by the trapping objective MO2 and transmitted through the polarizing beam splitter (PBS). The PBS reflects the trapping beam and thus separates the detection beam. We also place another linear polarizer (P) after the PBS to minimize the amount of leaked trapping laser. The detection beam is then separated using a beam splitter on a pair of quadrant photodiodes (QPD, First Sensor, QP50-6-18u-SD2). We control the intensity of the detection beam on QPD1 by placing a neutral density filter (ND) of desired strength and thus control the signal-to-noise ratio (SNR) on QPD1.

A red LED (660 nm, Thorlabs, M660L4) illuminates the trapped particle for imaging onto a camera. The light from the LED enters the detection objective through a long-pass filter (LPF, cutoff wavelength 585 nm, Edmund Optics), which reflects the detection beam. The collected illumination light is separated by a short-pass filter (SPF, cutoff wavelength 600 nm, Edmund Optics) and reflected onto a camera (FLIR BFS-U3-04S2M-CS).

Using a LabVIEW program, we digitize the analog signals from the pair of QPDs with a field-programmable gate array (FPGA, National Instruments, NI PCIe-7857). The voltage signals are then calibrated as position signals using a QPD-AOD-camera method (Kumar and Bechhoefer, 2018b). The FPGA runs the control loop and uses a programmed feedback rule to send the appropriate control signal to the AODs.

Measurement noise

Here we discuss the estimation of measurement noise for our experimental setup. Briefly, we obtain an estimate of the noise by recording two simultaneous trajectories of a particle and finding the difference between the trajectories. As both measured trajectories should be equal in the limit of zero noise, we can interpret any deviation between the measured trajectories as coming from noise.

We start by applying a triangle wave voltage of frequency 1.6 Hz to the AOD, to move a bead linearly along the x axis. We record the bead’s motion at 100 kHz using two simultaneous detectors giving output and , respectively. We demonstrate this idea in Figure S4A. Figure S4B shows that the difference follows a Gaussian distribution. The mean of the Gaussian distribution shown in Figure S4B has a nonzero value of nm, which arises from nonlinear calibration errors in the two detectors. In Figure S4C, we verify that the autocorrelation is flat, indicating that each position measurement has independent measurement noise.

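To calculate the variance of the measurement noise, we denote the SDs of the measurement noise in the two detectors by $\sigma_1$ and $\sigma_2$, respectively. For Gaussian-distributed measurement noise, the difference between the two detector outputs is also Gaussian, with variance equal to $\sigma_1^2 + \sigma_2^2$.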
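The statement about the variance of the difference can be checked numerically. The sketch below assumes that the noise in the two detectors is independent, zero-mean, and Gaussian, in which case the true position cancels in the difference and the variances add; the function name, noise levels, and position range are hypothetical.

```python
import random
import statistics


def difference_variance(sigma_1, sigma_2, n_samples=100_000, seed=0):
    """Variance of the difference of two noisy readings of the same positions."""
    rng = random.Random(seed)
    # Arbitrary "true" positions; they cancel in the difference.
    true_position = [rng.uniform(-50.0, 50.0) for _ in range(n_samples)]
    reading_1 = [x + rng.gauss(0.0, sigma_1) for x in true_position]
    reading_2 = [x + rng.gauss(0.0, sigma_2) for x in true_position]
    diff = [a - b for a, b in zip(reading_1, reading_2)]
    return statistics.pvariance(diff)


# With SDs of 1 and 2 (arbitrary units), the variance should be close to 1 + 4.
empirical = difference_variance(1.0, 2.0)
```

A constant calibration offset between the detectors would shift the mean of the difference but leave this variance unchanged.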

We can fit the power spectral density of with the aliased Lorentzian expression for discretely sampled time series (Berg-Sørensen and Flyvbjerg, 2004). Because the measurement noise is Gaussian, we add a noise term to the fitting function. Thus, we can estimate the noise of each trajectory by integrating the noise term over the frequency domain. From this, we estimate standard deviations of noise in and as nm and nm, respectively.

Data acquisition

We performed experiments using a feedback optical tweezer, whose details are given in the Experimental apparatus section and have been described in previous work (Kumar and Bechhoefer, 2018a). Briefly, we trap a silica bead of 1.5 μm diameter using an optical tweezer, which creates a harmonic well without feedback. By applying feedback, we change the shape of the potential to a double well along one of the axes. We measure the position of a bead with two different quadrant photodiodes (QPDs) simultaneously to give us two trajectories with two different values of signal-to-noise ratio (SNR), as explained in the Measurement noise section. One detector has high SNR and is used for feedback to create the desired virtual potential (Jun and Bechhoefer, 2012); the other has an adjustable SNR and is used to explore inferences from measured signals with lower SNR. We reduce the SNR in the other detector by placing neutral density (ND) filters of increasing optical density (OD) in front of it. Thus, we can use SKIPPER on the same trajectory over two different experimental SNRs and compare performance. We estimate the measurement noise and SNR in each detector from the noise floor of the power spectrum (see the Measurement noise section).

Quantification and statistical analysis

Concretely, our goal is to use noisy positional measurements, $y_{1:N}$, to infer all unknowns: 1) the potential at each point in space, $U(\cdot)$ (with $U(x)$ denoting the potential evaluated at x); 2) the friction coefficient, ζ; 3) the magnitude of the measurement noise, $\sigma^2$ (under a Gaussian noise model); and 4) the actual position at each time, $x_{1:N}$. Toward achieving our goal, we construct a joint posterior probability distribution over all unknowns. As our posterior does not admit an analytic form, we devise an efficient Monte Carlo strategy to sample from it.

Dynamics

We describe the dynamics of the particle with an overdamped Langevin equation (Zwanzig, 2001),

| $\zeta\,\dot{x}(t) = f(x(t)) + r(t)$ | (Equation 1a) |

| $f(x) = -\nabla U(x)$ | (Equation 1b) |

where $x(t)$ is the possibly multidimensional position coordinate at time t; $\dot{x}(t)$ is the velocity; $f(x(t))$ is the force at position $x(t)$; and ζ is the friction coefficient. The forces acting on the particle include positional forces expressed as the (negative) gradient of a conservative potential, $U(x)$. The stochastic (thermal) force, $r(t)$, is defined as follows:

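| $\langle r(t) \rangle = 0$ | (Equation 2a) |

| $\langle r(t)\, r(t') \rangle = 2 \zeta k T\, \delta(t - t')$ | (Equation 2b) |

where $\langle \cdot \rangle$ denotes an ensemble average over realizations, T is the temperature of the bath, and k is Boltzmann’s constant. Under a forward Euler scheme (LeVeque, 2007) for Equation (1a) with time points given by $t_n = n\,\Delta t$, each position, $x_{n+1} = x(t_{n+1})$, given its past realization, $x_n$, is sampled from a normal distribution

| $x_{n+1} \mid x_n, U(\cdot), \zeta \sim \mathrm{Normal}\left(x_n + \frac{\Delta t}{\zeta} f(x_n),\ \frac{2\,\Delta t\, k T}{\zeta}\right)$ | (Equation 3) |

In words, “the position $x_{n+1}$ given quantities $x_n$, $U(\cdot)$, and ζ is sampled from a Normal distribution with mean $x_n + \frac{\Delta t}{\zeta} f(x_n)$ and variance $\frac{2\,\Delta t\, k T}{\zeta}$.”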
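As an illustration of the forward Euler update, the following sketch draws a one-dimensional trajectory in which each new position is normally distributed about the previous position plus the drift, with variance 2 Δt kT/ζ. The harmonic potential, the parameter values, and the function name `simulate_overdamped` are assumptions made for this example.

```python
import numpy as np

def simulate_overdamped(force, x0, n_steps, dt, zeta, kT, rng):
    """Draw a 1D trajectory using the forward-Euler update.

    Each step is Normal(x_n + dt * f(x_n) / zeta, 2 * dt * kT / zeta).
    """
    x = np.empty(n_steps)
    x[0] = x0
    # Pre-draw the thermal kicks; their variance is 2 * dt * kT / zeta.
    kicks = rng.normal(0.0, np.sqrt(2.0 * dt * kT / zeta), n_steps - 1)
    for n in range(n_steps - 1):
        x[n + 1] = x[n] + dt * force(x[n]) / zeta + kicks[n]
    return x

# Hypothetical harmonic well U(x) = 0.5 * kappa * x**2, so f(x) = -kappa * x.
kappa, zeta, kT, dt = 1.0, 1.0, 1.0, 0.01
rng = np.random.default_rng(1)
traj = simulate_overdamped(lambda x: -kappa * x, 0.0, 200_000, dt, zeta, kT, rng)

# At equilibrium the position variance should be close to kT / kappa.
print(traj[10_000:].var())
```

A harmonic well is convenient here because its equilibrium variance, kT/κ, gives a simple check on the simulated trajectory.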

As is typical for experimental setups, we use a Gaussian noise model and write

| $y_n \mid x_n, \sigma^2 \sim \mathrm{Normal}\left(x_n, \sigma^2\right)$ | (Equation 4) |

In words, the above reads “$y_n$ given quantities $x_n$ and $\sigma^2$ is drawn from a normal.” Here $\sigma^2$ is the measurement noise variance. In Equation (4), the measurement process is instantaneous, i.e., assumed to be faster than the dynamical time scales. Our choice of Gaussian measurement model here can be modified at minimal computational cost (e.g., Hirsch et al., 2013) if warranted by the data, provided the final measurement noise model is stationary and each measurement depends only on the position at that time level.
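Under the Gaussian measurement model, with each measurement depending only on the position at that time level, the log-likelihood of a whole trajectory is a sum of independent normal terms. A minimal sketch follows; the function name and the synthetic data are assumptions for illustration.

```python
import numpy as np

def measurement_log_likelihood(y, x, sigma2):
    """Sum of log Normal(y_n; x_n, sigma2) over all time levels."""
    resid = np.asarray(y) - np.asarray(x)
    n = resid.size
    return -0.5 * n * np.log(2.0 * np.pi * sigma2) - 0.5 * np.sum(resid**2) / sigma2

rng = np.random.default_rng(3)
x_true = np.linspace(0.0, 1.0, 1000)      # hypothetical true positions
sigma2_true = 0.04                        # hypothetical noise variance
y_obs = x_true + rng.normal(0.0, np.sqrt(sigma2_true), x_true.size)

# The likelihood should favor the true variance over a badly wrong one.
ll_true = measurement_log_likelihood(y_obs, x_true, sigma2_true)
ll_wrong = measurement_log_likelihood(y_obs, x_true, 10.0 * sigma2_true)
print(ll_true > ll_wrong)
```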

Probabilities

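Next, from the product of the likelihood ($P(y_{1:N} \mid x_{1:N}, U(\cdot), \zeta, \sigma^2)$) and the prior ($P(x_{1:N}, U(\cdot), \zeta, \sigma^2)$), we obtain the posterior over all unknowns

| $P\left(x_{1:N}, U(\cdot), \zeta, \sigma^2 \mid y_{1:N}\right) \propto P\left(y_{1:N} \mid x_{1:N}, U(\cdot), \zeta, \sigma^2\right) P\left(x_{1:N}, U(\cdot), \zeta, \sigma^2\right)$ | (Equation 5) |

The likelihood is derived from the noise model provided in Equation (4). By contrast, the prior is informed by the Langevin dynamics, as we see by decomposing it as follows:

| $P\left(x_{1:N}, U(\cdot), \zeta, \sigma^2\right) = \left[\prod_{n=2}^{N} P\left(x_n \mid x_{n-1}, U(\cdot), \zeta\right)\right] P(x_1)\, P(U(\cdot))\, P(\zeta)\, P(\sigma^2)$ | (Equation 6) |

The first term on the right-hand side of Equation (6) follows from Equations (1a) and (1b), while we are free to choose the remaining priors, $P(x_1)$, $P(U(\cdot))$, $P(\zeta)$, and $P(\sigma^2)$.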

Important considerations dictate the prior on the potential. First, the potential may assume any shape (and, as such, is modeled nonparametrically) although it should be smooth (i.e., spatially correlated). A Gaussian process (GP) prior (Williams and Rasmussen, 2006) allows us to sample continuous curves with covariance provided by a pre-specified kernel. However, naive GP prior implementations are computationally prohibitive, with time requirements scaling as the cube, and memory requirements as the square, of the number of data points (Bryan IV et al., 2020; Williams and Rasmussen, 2006).

These size-scaling issues can be resolved by adopting an SKI-GP (Wilson and Nickisch, 2015; Wilson et al., 2015; Titsias, 2009; Gal and van der Wilk, 2014) prior for the potential, $U(\cdot)$. The SKI-GP prior is a hierarchical structure, where the potential at all points is interpolated according to M chosen inducing points at fixed locations, and where the values of the potential at the inducing points are themselves drawn from a GP.

We note that under this model, we shift the focus from inferring $U(\cdot)$ to inferring the values of the potential at the M inducing points, from which we recover the potential and the force everywhere with a modified kernel matrix (see the Modified kernel matrix section).
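To make the hierarchical structure concrete, the sketch below draws potential values at a small set of inducing points from a GP and then interpolates the potential at arbitrary locations. It is not the authors' released implementation: the squared-exponential hyperparameters, the regular grid, the jitter term, and the use of the GP conditional mean as the interpolant are all assumptions made for illustration.

```python
import numpy as np

def sq_exp_kernel(a, b, amp=1.0, length=0.5):
    """Squared-exponential covariance between 1D point sets a and b."""
    diff = a[:, None] - b[None, :]
    return amp**2 * np.exp(-0.5 * (diff / length) ** 2)

rng = np.random.default_rng(2)

# M fixed inducing locations (hypothetical regular grid).
x_ind = np.linspace(-2.0, 2.0, 15)
K_uu = sq_exp_kernel(x_ind, x_ind) + 1e-8 * np.eye(x_ind.size)  # jitter for stability

# Potential values at the inducing points, drawn from the GP prior.
u = np.linalg.cholesky(K_uu) @ rng.standard_normal(x_ind.size)

# Interpolate the potential at arbitrary locations from the inducing values.
x_test = np.linspace(-2.0, 2.0, 400)
U_test = sq_exp_kernel(x_test, x_ind) @ np.linalg.solve(K_uu, u)
print(U_test.shape)
```

The only matrix that must be factorized is M by M, so the cost of this step is set by the number of inducing points rather than by the number of data points.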

Choices for priors on $x_1$, ζ and $\sigma^2$ are less critical and chosen for computational convenience alone. For $x_1$, we are free to choose any prior and select

| (Equation 7) |

For the friction, ζ, we select a gamma distribution for which support on the positive real axis is assured. That is,

| (Equation 8) |

Lastly, for the noise variance, $\sigma^2$, we place an inverse-gamma prior

| (Equation 9) |

The inverse-gamma prior is chosen because it is conjugate to the likelihood, meaning that we may directly sample from the posterior constructed by the prior multiplied by the likelihood (Bishop, 2006). The parameters of the priors in Equations (7), (8), and (9) are hyperparameters that we are free to choose, and whose impact on the ultimate shape of the posterior diminishes as more data are collected (Gelman et al., 2013; Sivia and Skilling, 2006).

Modified kernel matrix

Here we show how to calculate the force at an arbitrary test location using the structured kernel interpolation Gaussian process. We first derive the force for one-dimensional potentials exactly and then briefly explain how to generalize to higher dimensions. In order to calculate the force given the values of the potential at the inducing points, we write

| (Equation 10a) |

| (Equation 10b) |

| (Equation 10c) |

| (Equation 10d) |

| (Equation 10e) |

| (Equation 10f) |

where the modified kernel matrix is now the covariance between the potential evaluated at the inducing points and the force evaluated at a test location, and we have assumed the kernel follows the familiar squared exponential form (Williams and Rasmussen, 2006).
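For a squared exponential kernel, the covariance between the force at a test location and the potential at an inducing point is minus the derivative of the kernel with respect to the test location. The sketch below illustrates this in one dimension under assumed hyperparameters; the function names, the grid, and the harmonic inducing values are hypothetical and chosen only so that the result is easy to inspect.

```python
import numpy as np

def sq_exp_kernel(a, b, amp=1.0, length=0.5):
    """Squared-exponential covariance between 1D point sets a and b."""
    diff = a[:, None] - b[None, :]
    return amp**2 * np.exp(-0.5 * (diff / length) ** 2)

def force_kernel(a, b, amp=1.0, length=0.5):
    """Covariance between the force at a and the potential at b.

    Equals -d/da of the squared-exponential kernel, since f = -dU/dx.
    """
    diff = a[:, None] - b[None, :]
    return (diff / length**2) * sq_exp_kernel(a, b, amp, length)

x_ind = np.linspace(-2.0, 2.0, 15)        # hypothetical inducing grid
u = 0.5 * x_ind**2                        # hypothetical inducing values (harmonic well)
K_uu = sq_exp_kernel(x_ind, x_ind) + 1e-8 * np.eye(x_ind.size)
weights = np.linalg.solve(K_uu, u)

x_test = np.linspace(-1.0, 1.0, 5)
U_test = sq_exp_kernel(x_test, x_ind) @ weights   # interpolated potential
f_test = force_kernel(x_test, x_ind) @ weights    # interpolated force, -dU/dx
print(f_test)
```

Because the same weights multiply both kernels, the force is obtained analytically from the inducing values, with no numerical differentiation of the interpolated potential.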

To generalize to higher dimensions, we will need to calculate the force in each dimension separately. For example, to find the force in the k direction at a test point, ,

| (Equation 11) |

| (Equation 12) |

| (Equation 13) |

| (Equation 14) |

Inference

As our posterior does not admit an analytical form, we devise an overall Gibbs sampling scheme (Bishop, 2006) to draw samples from it. Within this scheme, we start with an initial set of values for all unknowns and then iteratively sample each variable while holding all others fixed (Geman and Geman, 1984). Here, we show the conditional probabilities used for our Gibbs sampling algorithm.

Positions

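The conditional distribution for the positions, $x_{1:N}$, is simple. If we sample each one at a time,

| $P(x_1 \mid x_2, y_1, U(\cdot), \zeta, \sigma^2) \propto P(y_1 \mid x_1, \sigma^2)\, P(x_2 \mid x_1, U(\cdot), \zeta)\, P(x_1)$ | (Equation 15) |

| $P(x_n \mid x_{n-1}, x_{n+1}, y_n, U(\cdot), \zeta, \sigma^2) \propto P(y_n \mid x_n, \sigma^2)\, P(x_{n+1} \mid x_n, U(\cdot), \zeta)\, P(x_n \mid x_{n-1}, U(\cdot), \zeta)$ | (Equation 16) |

| $P(x_N \mid x_{N-1}, y_N, U(\cdot), \zeta, \sigma^2) \propto P(y_N \mid x_N, \sigma^2)\, P(x_N \mid x_{N-1}, U(\cdot), \zeta)$ | (Equation 17) |

Although each factor in these equations is a normal density (Equations 3 and 4), the conditionals are not Gaussian in the sampled position (except the last one), since the force $f(x_n)$ that sets the mean of $x_{n+1}$ is itself a function of $x_n$. Thus, direct sampling is not possible in general, and we sample positions using a Metropolis-Hastings algorithm (Bishop, 2006).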
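A single Metropolis-Hastings update of one interior position can be sketched as follows. The target combines the measurement term with the two dynamical transitions that involve the position being updated. The random-walk proposal, its width, the function names, and the numerical values are assumptions; the force is set to zero in the usage example only because the target is then Gaussian and the chain can be checked against a known mean.

```python
import numpy as np

def log_normal(value, mean, var):
    """Log density of Normal(mean, var) evaluated at value."""
    return -0.5 * np.log(2.0 * np.pi * var) - 0.5 * (value - mean) ** 2 / var

def log_conditional(x_n, x_prev, x_next, y_n, force, dt, zeta, kT, sigma2):
    """Unnormalized log conditional of an interior position x_n."""
    var_dyn = 2.0 * dt * kT / zeta
    return (
        log_normal(y_n, x_n, sigma2)                                     # measurement
        + log_normal(x_n, x_prev + dt * force(x_prev) / zeta, var_dyn)   # step into x_n
        + log_normal(x_next, x_n + dt * force(x_n) / zeta, var_dyn)      # step out of x_n
    )

def mh_step(x_n, rng, prop_sd, **model):
    """One random-walk Metropolis-Hastings update of x_n."""
    proposal = x_n + rng.normal(0.0, prop_sd)
    log_ratio = log_conditional(proposal, **model) - log_conditional(x_n, **model)
    return proposal if np.log(rng.uniform()) < log_ratio else x_n

# Hypothetical values; with zero force the target is Gaussian with mean 1 and variance 1/3.
model = dict(x_prev=0.0, x_next=2.0, y_n=1.0, force=lambda x: 0.0,
             dt=1.0, zeta=1.0, kT=0.5, sigma2=1.0)
rng = np.random.default_rng(4)
chain = np.empty(20_000)
x_cur = 0.0
for i in range(chain.size):
    x_cur = mh_step(x_cur, rng, prop_sd=1.0, **model)
    chain[i] = x_cur
print(chain[1000:].mean(), chain[1000:].var())
```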

Potential

Following the logic outlined in our previous manuscript (Bryan IV et al., 2020), we can infer the potential. For now, we focus on one-dimensional data.

We fully write the prior on positions, as

| (Equation 18) |

| (Equation 19) |

where and . Substituting in Equation 10d, we get,

| (Equation 20) |

| (Equation 21) |

| (Equation 22) |

| (Equation 23) |

| (Equation 24) |

where we have completed the square in matrix form, with

| (Equation 25) |

| (Equation 26) |

Combining with the prior, we get

| (Equation 27) |

| (Equation 28) |

| (Equation 29) |

| (Equation 30) |

| (Equation 31) |

For multidimensional trajectories, we separate the likelihood into the forces experienced along each dimension,

| (Equation 32) |

where d is the dimension index and D is the number of dimensions. A convenient choice of indexing allows us to sample the potential directly, even with multidimensional trajectories. It consists of “flattening out” our multidimensional force, velocity and kernel arrays into one-dimensional vectors, and “flattening out” the multidimensional kernel matrices into a matrix.

The convenient choice is as follows: Let the first N indices of all variables be reserved for values concerning the N measurements in the first dimension, then the $(N+1)$th through $2N$th indices be reserved for values concerning the N measurements in the second dimension, and continue this pattern until all measurements have been accounted for. For example, define $f$ to be a vector such that $f_1$ is the force at the first time level along the first dimension, $f_2$ is the force at the second time level along the first dimension, …, $f_{N+1}$ is the force at the first time level along the second dimension, $f_{N+2}$ is the force at the second time level along the second dimension, and so forth. By utilizing this pattern for the force, velocity, and kernel arrays, we can sample the potential directly using Equation (29).
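The indexing convention described above amounts to a dimension-major flattening, in which all N time levels of the first dimension come first, followed by all N time levels of the second dimension, and so on. A small sketch with hypothetical array contents and a hypothetical helper `flat_index`:

```python
import numpy as np

N, D = 4, 2  # number of time levels and number of dimensions (illustrative sizes)

# forces[n, d]: force at time level n along dimension d (hypothetical values).
forces = np.arange(N * D, dtype=float).reshape(N, D)

# Dimension-major flattening: first N entries are dimension 0, next N are dimension 1.
f_flat = forces.T.reshape(-1)  # same result as forces.flatten(order="F")

def flat_index(n, d, n_levels):
    """Index in the flattened vector of time level n along dimension d (zero-based)."""
    return d * n_levels + n

print(f_flat)
print(f_flat[flat_index(2, 1, N)] == forces[2, 1])
```

The same mapping can be applied to the velocity vector and to the rows and columns of the kernel matrix so that all arrays stay aligned.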

Friction coefficient

The conditional probability for the friction coefficient is the product of Equations (3), (7) and (8)

| (Equation 33) |

which cannot be simplified into any known elementary distribution. We therefore sample ζ using a Metropolis-Hastings algorithm (Bishop, 2006).
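A random-walk Metropolis-Hastings sampler for the friction coefficient might look like the sketch below, which combines the forward Euler transition densities with a gamma prior. The proposal width, the gamma hyperparameters (`shape`, `scale`), the synthetic harmonic-well data, and the function name are all assumptions for illustration, and the positions and forces are treated as known for this one conditional update.

```python
import numpy as np

def log_posterior_zeta(zeta, x, force_vals, dt, kT, shape, scale):
    """Unnormalized log conditional of zeta: Euler transitions times a gamma prior."""
    if zeta <= 0.0:
        return -np.inf  # the gamma prior has no support here
    var = 2.0 * dt * kT / zeta
    resid = x[1:] - x[:-1] - dt * force_vals[:-1] / zeta
    log_lik = np.sum(-0.5 * np.log(2.0 * np.pi * var) - 0.5 * resid**2 / var)
    log_prior = (shape - 1.0) * np.log(zeta) - zeta / scale
    return log_lik + log_prior

rng = np.random.default_rng(5)
# Hypothetical synthetic data: harmonic well with known friction.
zeta_true, kappa, kT, dt, n = 2.0, 1.0, 1.0, 0.01, 5000
x = np.zeros(n)
kicks = rng.normal(0.0, np.sqrt(2.0 * dt * kT / zeta_true), n - 1)
for i in range(n - 1):
    x[i + 1] = x[i] - dt * kappa * x[i] / zeta_true + kicks[i]
force_vals = -kappa * x

zeta_cur, chain = 1.0, np.empty(5000)
logp_cur = log_posterior_zeta(zeta_cur, x, force_vals, dt, kT, shape=2.0, scale=10.0)
for i in range(chain.size):
    zeta_prop = zeta_cur + rng.normal(0.0, 0.05)       # symmetric proposal
    logp_prop = log_posterior_zeta(zeta_prop, x, force_vals, dt, kT, shape=2.0, scale=10.0)
    if np.log(rng.uniform()) < logp_prop - logp_cur:   # accept or reject
        zeta_cur, logp_cur = zeta_prop, logp_prop
    chain[i] = zeta_cur
print(chain[1000:].mean())
```

Returning minus infinity for non-positive proposals rejects them automatically, which keeps the chain on the positive real axis.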

Measurement noise

As our inverse-gamma prior for $\sigma^2$, Equation (9), is conjugate to our likelihood, our conditional probability for $\sigma^2$ can be simplified to an inverse-gamma distribution (Gelman et al., 2013; Sivia and Skilling, 2006)

| (Equation 34) |
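The conjugate update can be sketched in one dimension as follows. With an inverse-gamma prior and a Gaussian likelihood, the conditional is again inverse-gamma, with the shape increased by half the number of measurements and the scale increased by half the summed squared residuals. The hyperparameter names `alpha` and `beta`, their values, and the synthetic data are assumptions made for this example.

```python
import numpy as np

def sample_noise_variance(y, x, alpha, beta, rng):
    """Draw sigma^2 from its inverse-gamma conditional (1D positions).

    Prior InvGamma(alpha, beta) with a Gaussian likelihood gives
    InvGamma(alpha + N/2, beta + 0.5 * sum((y - x)**2)).
    """
    alpha_post = alpha + 0.5 * y.size
    beta_post = beta + 0.5 * np.sum((y - x) ** 2)
    # If g ~ Gamma(shape=a, scale=1/b), then 1/g ~ InvGamma(a, b).
    return 1.0 / rng.gamma(shape=alpha_post, scale=1.0 / beta_post)

rng = np.random.default_rng(6)
x_true = rng.normal(0.0, 1.0, 2000)       # hypothetical true positions
sigma2_true = 0.25                        # hypothetical noise variance
y_obs = x_true + rng.normal(0.0, np.sqrt(sigma2_true), x_true.size)
draws = np.array([sample_noise_variance(y_obs, x_true, 1.0, 1.0, rng)
                  for _ in range(2000)])
print(draws.mean())
```

Because this conditional can be sampled directly, no accept or reject step is needed for the noise variance.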

Boltzmann method

In the Results section, we compare SKIPPER to the Boltzmann method (Reif, 2009). The Boltzmann method, as opposed to the other existing methods, has the advantage that it is physically intuitive (it is derived from thermodynamics). It does not assume a potential shape a priori and therefore can be used for non-harmonic potentials. Its main limitation, not treating measurement noise, is shared by all other competing methods.

The Boltzmann method uses the Boltzmann distribution from thermodynamics, which relates the potential in a region of space to the fraction of time that the particle will be seen in that region,

| $p_i \propto \exp\left(-\frac{U_i}{kT}\right)$ | (Equation 35) |

where $U_i$ is the potential of region i and $p_i$ is the fraction of the time that the particle spent in region i.

A limitation of the Boltzmann method is that the presence of measurement noise implies that the fraction of time that a particle was seen in a region does not equal the fraction of time that the particle was in the region. As a consequence, measurement noise will smear the shape of the inferred potential over the range of the measurement noise (see Figure 5).
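The Boltzmann method and its sensitivity to measurement noise can be illustrated with a short sketch: the potential in each bin is estimated as minus kT times the logarithm of the bin occupancy, up to an additive constant. The bin edges, the harmonic test potential, the noise level, and the function name are assumptions made for this example.

```python
import numpy as np

def boltzmann_potential(positions, bin_edges, kT):
    """Estimate U_i = -kT * log(p_i) from the occupancy of each bin."""
    counts, edges = np.histogram(positions, bins=bin_edges)
    centers = 0.5 * (edges[:-1] + edges[1:])
    occupied = counts > 0                   # empty bins carry no estimate
    p = counts[occupied] / counts.sum()
    U = -kT * np.log(p)
    return centers[occupied], U - U.min()   # potential is defined up to a constant

rng = np.random.default_rng(7)
kT, kappa = 1.0, 1.0
bin_edges = np.linspace(-2.0, 2.0, 21)

# Hypothetical equilibrium samples from U(x) = 0.5 * kappa * x**2.
samples = rng.normal(0.0, np.sqrt(kT / kappa), 500_000)
centers, U_est = boltzmann_potential(samples, bin_edges, kT)

# Adding measurement noise broadens the histogram and flattens the inferred well.
noisy = samples + rng.normal(0.0, 1.0, samples.size)
centers_noisy, U_noisy = boltzmann_potential(noisy, bin_edges, kT)

curv_clean = np.polyfit(centers, U_est, 2)[0]          # near 0.5 * kappa
curv_noisy = np.polyfit(centers_noisy, U_noisy, 2)[0]  # smaller: well appears shallower
print(curv_clean, curv_noisy)
```

In this example the noise variance equals the thermal variance, so the apparent curvature of the well is roughly halved even though the underlying potential is unchanged.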

Acknowledgments

John Bechhoefer and Prithviraj Basak acknowledge support from the Foundational Questions Institute Fund, a donor-advised fund of the Silicon Valley Community Foundation (grant no. FQXi-IAF19-02). Steve Pressé and J. Shepard Bryan IV acknowledge support from the NIH (grant nos. R01GM134426 and R01GM130745) and NSF (award no. 1719537).

Author contributions

Prithviraj Basak carried out all experiments. J. Shepard Bryan IV conceptualized, derived, and coded all inference algorithms. Prithviraj Basak and J. Shepard Bryan IV wrote the manuscript. John Bechhoefer and Steve Pressé oversaw all aspects of the project.

Declaration of interests

The authors declare no competing interests.

Published: September 16, 2022

Footnotes

Supplemental information can be found online at https://doi.org/10.1016/j.isci.2022.104731.

Contributor Information

John Bechhoefer, Email: johnb@sfu.ca.

Steve Pressé, Email: spresse@asu.edu.

Supplemental information

Data and code availability

Data and code are available via Zenodo.

Data can be found at https://doi.org/10.5281/zenodo.6680673.

Code can be found at https://doi.org/10.5281/zenodo.6680638.

Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

References

- Aigouy L., Tessier G., Mortier M., Charlot B. Scanning thermal imaging of microelectronic circuits with a fluorescent nanoprobe. Appl. Phys. Lett. 2005;87:184105.

- Albay J.A.C., Paneru G., Pak H.K., Jun Y. Optical tweezers as a mathematically driven spatio-temporal potential generator. Opt Express. 2018;26(23):29906–29915. doi: 10.1364/OE.26.029906.

- Berezhkovskii A.M., Dagdug L., Bezrukov S.M. Mean direct-transit and looping times as functions of the potential shape. J. Phys. Chem. B. 2017;121:5455–5460. doi: 10.1021/acs.jpcb.7b04037.

- Berg-Sørensen K., Flyvbjerg H. Power spectrum analysis for optical tweezers. Rev. Sci. Instrum. 2004;75:594–612.

- Bessarab P.F., Uzdin V.M., Jónsson H. Potential energy surfaces and rates of spin transitions. Z. Phys. Chem. 2013;227:1543–1557.

- Bishop C.M. Springer; 2006. Pattern Recognition and Machine Learning.

- Braun M., Bregulla A.P., Günther K., Mertig M., Cichos F. Single molecules trapped by dynamic inhomogeneous temperature fields. Nano Lett. 2015;15:5499–5505. doi: 10.1021/acs.nanolett.5b01999.

- Bryan IV, J. S., Basak, P., Bechhoefer, J., and Pressé, S. (2022). Supplemental Material. The supplementary material contains detailed information regarding the experimental apparatus, data acquisition, and noise calibrations. It also shows the construction of the posterior including choices of priors, as well as derivations and computational algorithms used for sampling the posterior using MCMC. It contains a description of the Boltzmann method which we compare our method to. Lastly, it has a section devoted to robustness tests on simulated data that we use to benchmark our method.

- Bryan J.S., 4th, Sgouralis I., Pressé S. Inferring effective forces for Langevin dynamics using Gaussian processes. J. Chem. Phys. 2020;152:124106. doi: 10.1063/1.5144523.

- Chmiela S., Tkatchenko A., Sauceda H.E., Poltavsky I., Schütt K.T., Müller K.R. Machine learning of accurate energy-conserving molecular force fields. Sci. Adv. 2017;3:e1603015. doi: 10.1126/sciadv.1603015.

- Chu X., Gan L., Wang E., Wang J. Quantifying the topography of the intrinsic energy landscape of flexible biomolecular recognition. Proc. Natl. Acad. Sci. USA. 2013;110:E2342–E2351. doi: 10.1073/pnas.1220699110.

- Chung H.S., Eaton W.A. Protein folding transition path times from single molecule FRET. Curr. Opin. Struct. Biol. 2018;48:30–39. doi: 10.1016/j.sbi.2017.10.007.

- Chupeau M., Gladrow J., Chepelianskii A., Keyser U.F., Trizac E. Optimizing Brownian escape rates by potential shaping. Proc. Natl. Acad. Sci. USA. 2020;117:1383–1388. doi: 10.1073/pnas.1910677116.

- Cohen A.E. Control of nanoparticles with arbitrary two-dimensional force fields. Phys. Rev. Lett. 2005;94:118102. doi: 10.1103/PhysRevLett.94.118102.

- Deringer V.L., Caro M.A., Csányi G. Machine learning interatomic potentials as emerging tools for materials science. Adv. Mater. 2019;31:1902765. doi: 10.1002/adma.201902765.

- Dudko O.K., Hummer G., Szabo A. Intrinsic rates and activation free energies from single-molecule pulling experiments. Phys. Rev. Lett. 2006;96:108101. doi: 10.1103/PhysRevLett.96.108101.

- Español P., Zúñiga I. Obtaining fully dynamic coarse-grained models from MD. Phys. Chem. Chem. Phys. 2011;13:10538–10545. doi: 10.1039/c0cp02826f.

- Frishman A., Ronceray P. Learning force fields from stochastic trajectories. Phys. Rev. X. 2020;10:021009.

- Gal Y., van der Wilk M. Variational inference in sparse Gaussian process regression and latent variable models – a gentle tutorial. Preprint at arXiv. 2014. doi: 10.48550/arXiv.1402.1412.

- Pérez García L., Donlucas Pérez J., Volpe G., V Arzola A., Volpe G. High-performance reconstruction of microscopic force fields from Brownian trajectories. Nat. Commun. 2018;9:5166. doi: 10.1038/s41467-018-07437-x.

- Gavrilov M., Jun Y., Bechhoefer J. Optical Trapping and Optical Micromanipulation X. Vol. 8810. International Society for Optics and Photonics; 2013. Particle dynamics in a virtual harmonic potential; p. 881012.

- Gelman A., Carlin J.B., Stern H.S., Dunson D.B., Vehtari A., Rubin D.B. CRC Press; 2013. Bayesian Data Analysis.

- Geman S., Geman D. IEEE Transactions on Pattern Analysis and Machine Intelligence; 1984. Stochastic Relaxation, Gibbs Distributions, and the Bayesian Restoration of Images; pp. 721–741.

- Gieseler J., Gomez-Solano J.R., Magazzù A., Pérez Castillo I., Pérez García L., Gironella-Torrent M., Viader-Godoy X., Ritort F., Pesce G., Arzola A.V., et al. Optical tweezers—from calibration to applications: a tutorial. Adv. Opt. Photonics. 2021;13(1):74–241.

- Gupta A.N., Vincent A., Neupane K., Yu H., Wang F., Woodside M.T. Experimental validation of free-energy-landscape reconstruction from non-equilibrium single-molecule force spectroscopy measurements. Nat. Phys. 2011;7:631–634.

- Handle P.H., Sciortino F. Potential energy landscape of TIP4P/2005 water. J. Chem. Phys. 2018;148:134505. doi: 10.1063/1.5023894.

- Hänggi P., Talkner P., Borkovec M. Reaction-rate theory: fifty years after Kramers. Rev. Mod. Phys. 1990;62:251–341.

- Hayashi Y., Ashihara S., Shimura T., Kuroda K. Particle sorting using optically induced asymmetric double-well potential. Opt Commun. 2008;281(14):3792–3798.

- Hirsch M., Wareham R.J., Martin-Fernandez M.L., Hobson M.P., Rolfe D.J. A stochastic model for electron multiplication charge-coupled devices–from theory to practice. PLoS One. 2013;8:e53671. doi: 10.1371/journal.pone.0053671.

- Izvekov S., Voth G.A. A multiscale coarse-graining method for biomolecular systems. J. Phys. Chem. B. 2005;109:2469–2473. doi: 10.1021/jp044629q.

- Jones P., Maragó O., Volpe G. Cambridge University Press; 2015. Optical Tweezers.

- Jun Y., Bechhoefer J. Virtual potentials for feedback traps. Phys. Rev. E Stat. Nonlin. Soft Matter Phys. 2012;86:061106. doi: 10.1103/PhysRevE.86.061106.

- Kang P.-L., Liu Z.-P. Reaction prediction via atomistic simulation: from quantum mechanics to machine learning. iScience. 2021;24:102013. doi: 10.1016/j.isci.2020.102013.

- Kilic Z., Sgouralis I., Heo W., Ishii K., Tahara T., Pressé S. Extraction of rapid kinetics from smFRET measurements using integrative detectors. Cell Rep. Phys. Sci. 2021;2:100409. doi: 10.1016/j.xcrp.2021.100409.

- Kolomeisky A.B., Fisher M.E. Molecular motors: a theorist’s perspective. Annu. Rev. Phys. Chem. 2007;58:675–695. doi: 10.1146/annurev.physchem.58.032806.104532.

- Kumar A., Bechhoefer J. Nanoscale virtual potentials using optical tweezers. Appl. Phys. Lett. 2018;113:183702.

- Kumar A., Bechhoefer J. Optical Trapping and Optical Micromanipulation XV. Vol. 10723. International Society for Optics and Photonics; 2018. Optical feedback tweezers; p. 107232J.

- Kumar A., Bechhoefer J. Exponentially faster cooling in a colloidal system. Nature. 2020;584:64–68. doi: 10.1038/s41586-020-2560-x.

- La Nave E., Mossa S., Sciortino F. Potential energy landscape equation of state. Phys. Rev. Lett. 2002;88:225701. doi: 10.1103/PhysRevLett.88.225701.

- León-Montiel R.d.J., Quinto-Su P.A. Noise-enabled optical ratchets. Sci. Rep. 2017;7:1–6. doi: 10.1038/srep44287.

- LeVeque R.J. SIAM; 2007. Finite Difference Methods for Ordinary and Partial Differential Equations: Steady-State and Time-dependent Problems.

- Makarov D.E. Shapes of dominant transition paths from single-molecule force spectroscopy. J. Chem. Phys. 2015;143:194103. doi: 10.1063/1.4935706.

- Manzhos S., Dawes R., Carrington T. Neural network-based approaches for building high dimensional and quantum dynamics-friendly potential energy surfaces. Int. J. Quantum Chem. 2015;115:1012–1020.

- Neuman K.C., Block S.M. Optical trapping. Rev. Sci. Instrum. 2004;75:2787–2809. doi: 10.1063/1.1785844.

- Oh J., Orejon D., Park W., Cha H., Sett S., Yokoyama Y., Thoreton V., Takata Y., Miljkovic N. The apparent surface free energy of rare earth oxides is governed by hydrocarbon adsorption. iScience. 2022;25:103691. doi: 10.1016/j.isci.2021.103691.

- Pérez-García L., Selin M., Magazzù A., Volpe G., Arzola A.V., Castillo I.P., Volpe G. Complex Light and Optical Forces XV. Vol. 11701. International Society for Optics and Photonics; 2021. FORMA: expanding applications of optical tweezers; p. 1170111.

- Preisler H.K., Ager A.A., Johnson B.K., Kie J.G. Modeling animal movements using stochastic differential equations. Environmetrics. 2004;15:643–657.

- Pressé S., Lee J., Dill K.A. Extracting conformational memory from single-molecule kinetic data. J. Phys. Chem. B. 2013;117:495–502. doi: 10.1021/jp309420u.

- Pressé S., Peterson J., Lee J., Elms P., MacCallum J.L., Marqusee S., Bustamante C., Dill K. Single molecule conformational memory extraction: P5ab RNA hairpin. J. Phys. Chem. B. 2014;118:6597–6603. doi: 10.1021/jp500611f.

- Proesmans K., Ehrich J., Bechhoefer J. Finite-time Landauer principle. Phys. Rev. Lett. 2020;125:100602. doi: 10.1103/PhysRevLett.125.100602.

- Rabiner L., Juang B. An introduction to hidden Markov models. IEEE ASSP Mag. 1986;3:4–16.

- Reif F. Waveland Press; 2009. Fundamentals of Statistical and Thermal Physics.

- Sánchez-Sánchez M.G., León-Montiel R.d.J., Quinto-Su P.A. Phase dependent vectorial current control in symmetric noisy optical ratchets. Phys. Rev. Lett. 2019;123:170601. doi: 10.1103/PhysRevLett.123.170601.

- Schuler B., Eaton W.A. Protein folding studied by single-molecule FRET. Curr. Opin. Struct. Biol. 2008;18:16–26. doi: 10.1016/j.sbi.2007.12.003.

- Sgouralis I., Madaan S., Djutanta F., Kha R., Hariadi R.F., Pressé S. A Bayesian nonparametric approach to single molecule Förster resonance energy transfer. J. Phys. Chem. B. 2019;123:675–688. doi: 10.1021/acs.jpcb.8b09752.

- Sgouralis I., Pressé S. ICON: an adaptation of infinite HMMs for time traces with drift. Biophys. J. 2017;112:2117–2126. doi: 10.1016/j.bpj.2017.04.009.

- Sivia D., Skilling J. OUP; 2006. Data Analysis: A Bayesian Tutorial.

- Stilgoe A.B., Armstrong D.J., Rubinsztein-Dunlop H. Enhanced signal-to-noise and fast calibration of optical tweezers using single trapping events. Micromachines. 2021;12:570. doi: 10.3390/mi12050570.

- Sturzenegger F., Zosel F., Holmstrom E.D., Buholzer K.J., Makarov D.E., Nettels D., Schuler B. Transition path times of coupled folding and binding reveal the formation of an encounter complex. Nat. Commun. 2018;9:4708–4711. doi: 10.1038/s41467-018-07043-x.

- Taylor D.G., Bushell M.C. The spatial mapping of translational diffusion coefficients by the NMR imaging technique. Phys. Med. Biol. 1985;30:345–349. doi: 10.1088/0031-9155/30/4/009.

- Titsias M. Artificial Intelligence and Statistics. PMLR; 2009. Variational learning of inducing variables in sparse Gaussian processes; pp. 567–574.

- Toyabe S., Ueno H., Muneyuki E. Recovery of state-specific potential of molecular motor from single-molecule trajectory. Europhys. Lett. 2012;97:40004.

- Türkcan S., Alexandrou A., Masson J.-B. A Bayesian inference scheme to extract diffusivity and potential fields from confined single-molecule trajectories. Biophys. J. 2012;102:2288–2298. doi: 10.1016/j.bpj.2012.01.063.

- Wang H., Oster G. Energy transduction in the F1 motor of ATP synthase. Nature. 1998;396:279–282. doi: 10.1038/24409.

- Wang J., Ferguson A.L. Nonlinear reconstruction of single-molecule free-energy surfaces from univariate time series. Phys. Rev. E. 2016;93:032412. doi: 10.1103/PhysRevE.93.032412.

- Wang J., Olsson S., Wehmeyer C., Pérez A., Charron N.E., De Fabritiis G., Noé F., Clementi C. Machine learning of coarse-grained molecular dynamics force fields. ACS Cent. Sci. 2019;5:755–767. doi: 10.1021/acscentsci.8b00913.

- Wang J., Plotkin S.S., Wolynes P.G. Configurational diffusion on a locally connected correlated energy landscape; application to finite, random heteropolymers. J. Phys. I France. 1997;7:395–421.

- Wang J., Verkhivker G.M. Energy landscape theory, funnels, specificity, and optimal criterion of biomolecular binding. Phys. Rev. Lett. 2003;90:188101. doi: 10.1103/PhysRevLett.90.188101.

- Weistuch C., Pressé S. Spatiotemporal organization of catalysts driven by enhanced diffusion. J. Phys. Chem. B. 2018;122:5286–5290. doi: 10.1021/acs.jpcb.7b06868.

- Williams C.K.I., Rasmussen C.E. Vol. 2. MIT Press; 2006. Gaussian Processes for Machine Learning.

- Wilson A., Nickisch H. International Conference on Machine Learning. 2015. Kernel interpolation for scalable structured Gaussian processes (KISS-GP); pp. 1775–1784.

- Wilson A.G., Dann C., Nickisch H. Thoughts on massively scalable Gaussian processes. Preprint at arXiv. 2015. doi: 10.48550/arXiv.1511.01870.

- Wu D., Ghosh K., Inamdar M., Lee H.J., Fraser S., Dill K., Phillips R. Trajectory approach to two-state kinetics of single particles on sculpted energy landscapes. Phys. Rev. Lett. 2009;103:050603. doi: 10.1103/PhysRevLett.103.050603.

- Wu L., Guo T., Li T. Machine learning-accelerated prediction of overpotential of oxygen evolution reaction of single-atom catalysts. iScience. 2021;24:102398. doi: 10.1016/j.isci.2021.102398.

- Yang S., Wong S.W.K., Kou S.C. Inference of dynamic systems from noisy and sparse data via manifold-constrained Gaussian processes. Proc. Natl. Acad. Sci. USA. 2021;118:e2020397118. doi: 10.1073/pnas.2020397118.

- Yuan Z., Jiang H. Quantitative photoacoustic tomography: recovery of optical absorption coefficient maps of heterogeneous media. Appl. Phys. Lett. 2006;88:231101.

- Zwanzig R. Oxford University Press; 2001. Nonequilibrium Statistical Mechanics.
