Abstract

According to the most recent estimates from global cancer statistics for 2020, liver cancer is the ninth most common cancer in women. Segmenting the liver is difficult, and segmenting the tumor within the liver is harder still. After a sample of liver tissue is taken, imaging tests such as magnetic resonance imaging (MRI), computed tomography (CT), and ultrasound (US) are used to segment the liver and liver tumor. Because of overlapping intensities and variability in the position and shape of soft tissues, segmenting the liver and tumor from abdominal CT images based on gray level or shape alone gives unsatisfactory results. To address this gap, this study proposes a more efficient method for segmenting the liver and tumors from CT image volumes using a hybrid ResUNet model that combines the ResNet and UNet models. The combined models were primarily used to segment the liver and to assess the region of interest (ROI). The liver is segmented so that it can be examined within an abdominal CT image volume. The proposed model is based on CT volume slices of patients with liver tumors and is evaluated on the public 3D dataset IRCADB01. In the experimental analysis, the true value accuracy for liver segmentation was found to be approximately 99.55%, 97.85%, and 98.16%. The Dice coefficient also increased, suggesting that the model is suitable for detecting liver tumors.

Keywords: computed tomography, deep learning, liver segmentation, medical imaging, residual network, tumor segmentation

1. Introduction

The liver is the second largest organ in the body, located on the right side of the abdomen, and weighs about three pounds. It has two lobes, right and left, and is in contact with the gallbladder, pancreas, and intestines. Cancer in the liver may be primary (originating from the various cells that compose the liver) or secondary, that is, metastatic (caused by cancerous cells from other organs). Hepatocellular carcinoma (HCC) is the most common primary liver cancer.

Liver cancer is the second leading cause of cancer death worldwide. According to data from the World Health Organization (WHO), cancer accounted for 8.8 million deaths in 2015, of which 788,000 were caused by liver cancer [1]. The American Cancer Society (ACS) predicted that around 40,710 new cases would be diagnosed in the USA in 2017 (29,200 in men and 11,510 in women), and that 28,920 people (19,610 men and 9310 women) would die of primary liver cancer and intrahepatic bile duct cancer that year [2]. Liver cancer is more common in parts of Africa and accounts for more than 600,000 deaths every year [2].

To assess the shape and texture of the liver, radiologists and oncologists use computed tomography (CT) or magnetic resonance imaging (MRI). In both primary and secondary hepatic tumors, abnormalities in shape and texture are significant biomarkers for early diagnosis and for tracking disease progression [3]. CT volume scans of the liver are usually interpreted using manual or semi-manual techniques, but these techniques are costly, time-consuming, subjective, and prone to error. Several computational methods have been developed to address these issues and improve the diagnostic performance for liver cancer. However, these systems fell short in segmenting and detecting liver lesions because of several challenges: low contrast between the liver and neighboring organs, and between the liver and tumors with differing contrast values; variation in the number of tumors; very small tumors; tissue abnormalities; and irregular tumor growth [4]. A new approach is therefore needed to overcome these obstacles.

Earlier studies have had drawbacks, as they relied on images from enhanced magnetic resonance imaging and computed tomography. Furthermore, the CNN technique was only used to assess a few types of liver tumors. In recent years, research has centered on creating a fully automated system for accurate and timely liver tumor prediction while conserving time and energy. The advantage of automatic techniques is that they improve over time as a result of their performance and through the integration of various conditions and contributions. Several recent studies support this argument [5,6]. Convolutional neural networks (CNNs) have shown promising results in object recognition, image classification, obstacle avoidance, facial recognition, natural language processing, material inspection, and many other applications, and are therefore worth applying here. A deep network learns to recognize more complex features by aggregating and recombining features from previous layers. This property is known as feature hierarchy, and it allows deep learning networks to handle very large, high-dimensional data, with millions of inputs passing through nonlinear functions [7].

Most significantly, CNN models have proved to be very robust to variation in image appearance, which motivates us to apply them to fully automatic liver and tumor segmentation in CT volumes. This study aims to fill a gap in previous research by evaluating a deep learning model on a broad dataset of tumor volumes from 3D-IRCADb-1. Our goal is to create a powerful and robust deep learning model that can perform the task and locate the region of interest (ROI). Each layer of nodes in a deep network is trained on a different combination of features derived from the output of the previous layer. Deep learning frameworks can be used for feature classification as well as automated feature extraction.

In summary, this study makes the following main contributions:

We develop a fully automated system for segmenting liver and tumors from CT scan images in a single run.

Based on prior studies and their shortcomings, the researchers in this study attempt to achieve 95% mIOU on HCC tumors using VGG and Inception V4 based on the deep learning models. The research technique is intended to improve accuracy and fulfill expectations in the segmentation of liver tumors.

We propose a viable method for segmenting the liver and tumors after failing to achieve the desired results with the UNet model. To this end, we develop a model that combines ResNet and UNet, named ResUNet. This deep neural network uses residual blocks with skip connections rather than plain convolutions, resulting in faster training with less data.

We provide a high-level overview of this technology’s results.

We provide a general performance summary of this technique, with comparison to a few other fully automated techniques and define a scope for development based on new data and other features.

The rest of the paper is organized as follows. Section 2 presents the literature with regard to existing datasets. The proposed approach is described in Section 3. Section 4 provides an evaluation of ResUNet. Finally, the limitations of the proposed approach are given in Section 5, followed by the conclusion and future work in Section 6.

2. Literature Review

Liver segmentation from medical imaging has progressed significantly in medical practice. The objective is to extract knowledge about the human body that has a broad range of applications, including early disease detection and the selection of appropriate treatment [8]. There are many techniques for processing medical images, each with its advantages and disadvantages. X-ray, molecular imaging, ultrasound, MRI, positron emission tomography, computed tomography, and PET-CT are commonly used imaging modalities [9,10]. Hemangioma, focal nodular hyperplasia, adenoma, hepatocellular carcinoma, intrahepatic cholangiocarcinoma, and hepatic metastasis are all diagnosed with CT, US, and MRI [11]. Contrast-enhanced CT imaging is commonly used for survey examinations to rule out hepatic and extrahepatic metastases and to assess the level of local involvement, since it has a high sensitivity (93%) and specificity (100%). CT scans are widely used to detect liver cancer. Rex and Cantlie first proposed hemilivers and serge as features for manual liver segmentation [12].

However, automated segmentation of the liver and its lesions remains challenging because of the inconsistent variations between liver and lesion tissue caused by different acquisition methods, contrast agents, contrast enhancement levels, and scanner resolutions [13]. Compared with earlier approaches, convolutional neural networks (CNNs) can help in analyzing liver lesions with deep learning [7]. CNNs perform very well and, in some cases, have surpassed human radiologists. In the medical domain, CNNs have been widely used to detect various tumor forms over the last few years.

Researchers have used convolutional neural networks and other deep learning systems for liver tumor diagnosis. Both supervised and unsupervised classification are possible. In a supervised system, the feature sets are assigned to predefined classes, while in an unsupervised method they are allocated to classes that are not defined in advance. Training and evaluating a classifier always requires training and testing data [14]. The training data for medical images are normally collected from one or more experts who have assigned labels to a set of objects. One study distinguished between normal and abnormal liver cells in a dataset of 79 H&E-stained liver tissue WSIs of hepatocellular carcinoma (HCC), 48 of which were HCC tissue and 31 of which were normal tissue [15]. The authors suggest a mechanism for detecting differences between conventional neural networks and CNNs, using high and low magnification of the cell map and surface structure. The results showed a 91% probability of correct liver HCC tumor detection using CNN [15].

Deep learning (DL) techniques have excellent learning abilities [16]. Deep learning models such as convolutional neural networks (CNNs), stacked autoencoders (SAE), deep belief networks (DBN), and deep Boltzmann machines (DBM) have been implemented [3,17]. Deep learning models are superior in terms of accuracy. However, finding a suitable training dataset, which ought to be large and constructed by specialists, remains a significant challenge. The literature shows that DL-based models for liver tumor detection have attained 94% accuracy. The CNN model comes in a variety of architectures [18,19], including AlexNet, VGGNet, ResNet, and others. While [12,20] used the VGG16 architecture in their research, other studies [3,12,21,22,23] have employed the two-dimensional (2D) UNet, which is primarily used for segmenting medical images [24].

In another research report, benign and malignant tumors were classified. The authors used an enhanced contrast ultrasound (ECUS) dataset in video form, separated into two subsets: 20% test data and 80% training data. Another study [15] suggested using CNN to minimize the number of liver tomography images required while reducing the time and money spent on maintenance. The model was improved so that it could distinguish between benign and malignant tumors using a combination of deep learning and CNN. The researchers used a training dataset of 55,536 images from the 2013 database and 100 liver images from the 2016 data for testing [7]. The experiments were repeated five times, and the results showed an accuracy of 92%.

In a similar study [12], researchers used computed tomography images and a convolutional neural network to compare various types of liver tumors. They calculated the probability by segmenting each pixel using special segmentation algorithms and a deep convolutional neural network. They used layer wrap to minimize functions and eliminated three-dimensional variation by grouping layers into characteristics and classifying tumors based on a fully connected layer. Another study [13] segmented liver lesions in 2.5D computed tomography images using a deep convolutional neural network based on ResNet and virtual UNet. The authors used a working model design created in 2.5D instead of 3D. An NVIDIA (Santa Clara, CA, USA) Titan X GPU with 12 GB of memory and 3584 cores was used for training and testing for four days in a row.

Rectified linear units (ReLU) have become one of the most important activation functions in deep learning and machine learning. The rectified linear activation function is a piecewise linear function that outputs the input directly if it is positive and outputs zero otherwise. It has become the default activation function for many types of neural networks because a model that uses it is easier to train and often performs better.
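The rectified linear activation described above can be written in a single line. The following is a minimal NumPy sketch for illustration only; the function name `relu` and the sample values are assumptions and are not taken from the implementation used in this study.

```python
import numpy as np

def relu(x):
    """Rectified linear unit: pass positive inputs through, output 0 otherwise."""
    return np.maximum(x, 0)

# Hypothetical pre-activation values chosen only to illustrate the behavior.
activations = relu(np.array([-2.0, -0.5, 0.0, 1.5, 3.0]))
print(activations)
```

Negative inputs are mapped to zero, while positive inputs are returned unchanged, which is what makes the function piecewise linear.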

Much research has been performed on liver and liver tumor segmentation using semi-automatic, automatic, and manual techniques. Although manual segmentation varies in different segments or parts of the liver, hemi-liver, or vessels, automatic and semi-automatic methods focus on different algorithms to enable tumor or liver segmentation from medical images with more, less, or no user intervention [25]. This section provides a comprehensive literature review of liver and tumor segmentation based on state-of-the-art deep learning techniques.

3. Method

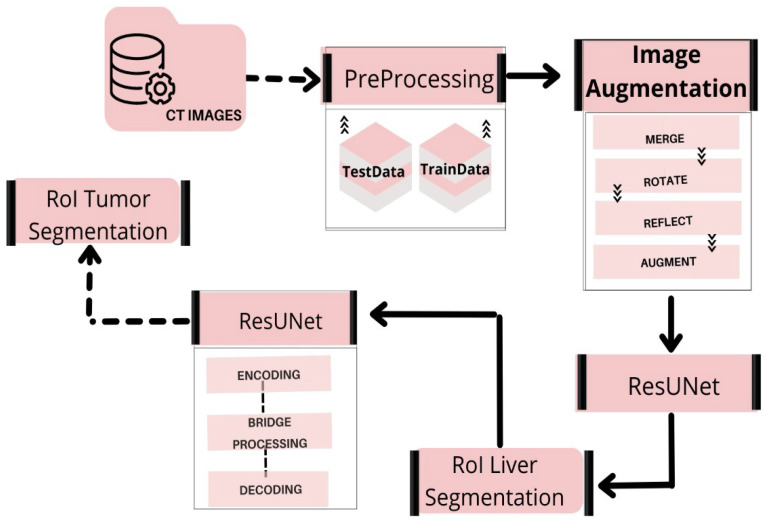

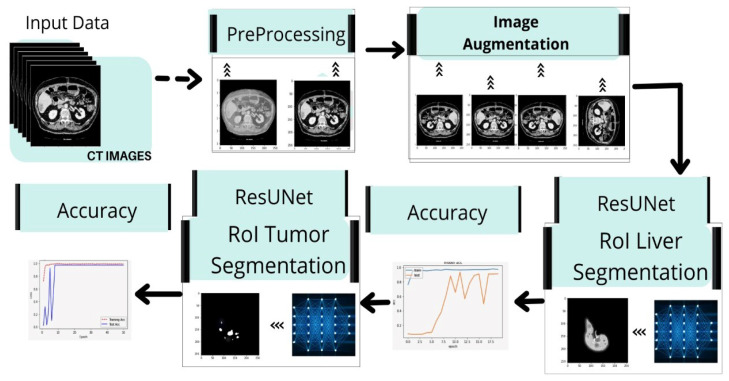

This section describes the steps used to implement liver and tumor segmentation with the hybrid deep learning network ResUNet. Preprocessing, feature extraction, classification, and segmentation are all stages of the proposed deep learning scheme. Figure 1 shows the overall framework of the proposed model for liver and tumor segmentation.

Figure 1.

Overview of our proposed workflow.

3.1. Dataset

The 3D-IRCADb-1 (http://www.ircad.fr/research/3dircadb, accessed on: 25 January 2022) dataset consists of three-dimensional (3D) CT images of patients, which are well ordered and made publicly available by IRCAD. Each image has a width and height of 512 × 512 pixels. The depth of each volume, that is, the number of slices per patient, varies between 74 and 260, for a total of 2800 slices. The dataset comprises 3D CT scans of ten men and ten women, with 75% positive cases. DICOM-formatted patient images, labeled images, and mask images are provided as data for the segmentation process described in the preprocessing section. Couinaud segmentation [26] reveals the location of tumor volumes, highlighting the key challenges of using software to segment the liver [27].

3.2. CT and MRI Images Preprocessing

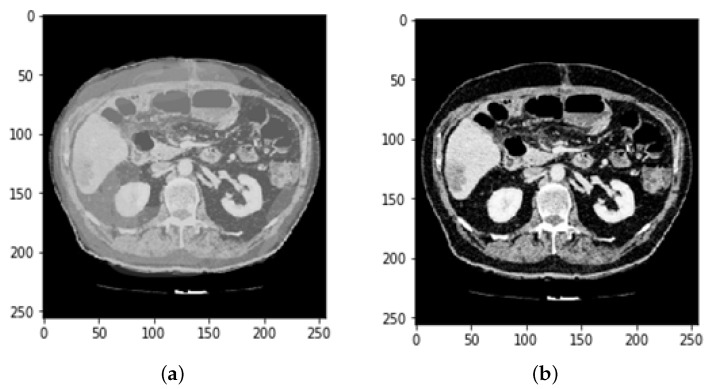

The proposed method is employed to extract useful segments from liver tumor images. Data augmentation, preprocessing, and a CNN are used to delineate the liver and separate tumors from the surrounding organs. In the preprocessing phase of the CT images, only Hounsfield unit (HU) values in the range of −100 to 400 are retained, which suppresses the adjacent organs. Then, histogram equalization is applied to the image to increase the contrast. Finally, data augmentation steps are used to enlarge the dataset and teach the network the desired invariance properties, using operations such as translation, rotation, elastic deformation, and the addition of Gaussian noise, as shown in Figure 2.

Figure 2.

Preprocessing state of CT image (a) before Hounsfield unit windowing and (b) after HU windowing.
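The HU windowing step can be sketched as follows. This is a minimal NumPy illustration, assuming the slice is already expressed in Hounsfield units; the function name `window_hu`, the rescaling of the window to [0, 1], and the toy values are assumptions made for the example and are not described in the text.

```python
import numpy as np

def window_hu(slice_hu, lower=-100.0, upper=400.0):
    """Clip a CT slice to the HU window [lower, upper].

    The result is rescaled to [0, 1]; this rescaling is an assumption
    of the sketch, not a step stated in the paper.
    """
    clipped = np.clip(slice_hu.astype(np.float32), lower, upper)
    return (clipped - lower) / (upper - lower)

# Toy 2 x 2 slice: air (-1000 HU), soft tissue (60 and 150 HU), bone (1200 HU).
toy_slice = np.array([[-1000, 60], [150, 1200]])
windowed = window_hu(toy_slice)
print(windowed)
```

Values below −100 HU collapse to the bottom of the window and values above 400 HU to the top, so the intensity range left for soft tissue is used more fully.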

Every medical image analysis system uses image preprocessing to enhance the quality of the raw input image. This entails noise reduction, enhancement, normalization, and standardization techniques, among others. Because block definition and feature extraction depend on image quality, preprocessing is essential for the subsequent steps. Normalization and standardization rescale the image's values and reduce their range, which makes the classifier easier to train. Noise reduction improves image quality and removes unwanted artifacts, making other processing tasks, including edge detection, segmentation, and compression, more effective.

Two families of approaches are used to remove noise from medical images: spatial domain approaches and spectral domain approaches. Examples of spatial domain approaches are mean filtering, adaptive mean filtering, order-statistic filtering, adaptive weighted median filtering, maximum a posteriori filtering, nonlinear diffusion, and geometric filtering [28]. In mean filtering, each pixel is replaced by the mean value of its neighbors, which smooths and blurs the image. Adaptive mean filtering uses local image statistics such as mean, variance, and correlation to detect and preserve edges and features [29]; noise is reduced by replacing the original value with a local mean. Because this filter adapts to the local properties of the image, it helps remove noise selectively from different areas of the image [25]. The median filter is an order-statistic filter that produces less blur than the mean filter and preserves edge sharpness. A maximum a posteriori filter estimates the unobserved signal by maximizing the posterior probability given by Bayes' theorem [30]. Curvelets may also be used to remove noise from medical images. The curvelet transform is a multi-scale transform whose frame elements are indexed by scale and position parameters [31].

The results obtained after HU windowing and ResUNet segmentation are illustrated in Figure 3.
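To make the difference between mean and median filtering concrete, the sketch below applies both with a 3 × 3 neighborhood to a toy image containing a single noisy pixel. It is an illustration under stated assumptions: the helper name `filter_3x3`, the edge-replicating padding, and the toy image are hypothetical, and the text does not state which filter, if any, was applied in this study.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def filter_3x3(image, reducer):
    """Apply `reducer` (e.g. np.mean or np.median) over each 3 x 3 neighborhood."""
    padded = np.pad(image, 1, mode="edge")           # replicate border pixels
    windows = sliding_window_view(padded, (3, 3))    # shape (H, W, 3, 3)
    return reducer(windows, axis=(-2, -1))

# Flat toy image with one impulse-noise pixel in the center.
image = np.full((5, 5), 10.0)
image[2, 2] = 100.0

mean_filtered = filter_3x3(image, np.mean)
median_filtered = filter_3x3(image, np.median)
print(mean_filtered[2, 2], median_filtered[2, 2])
```

The mean filter spreads the outlier over its neighbors, whereas the median filter removes it entirely, which illustrates why the median filter blurs less.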

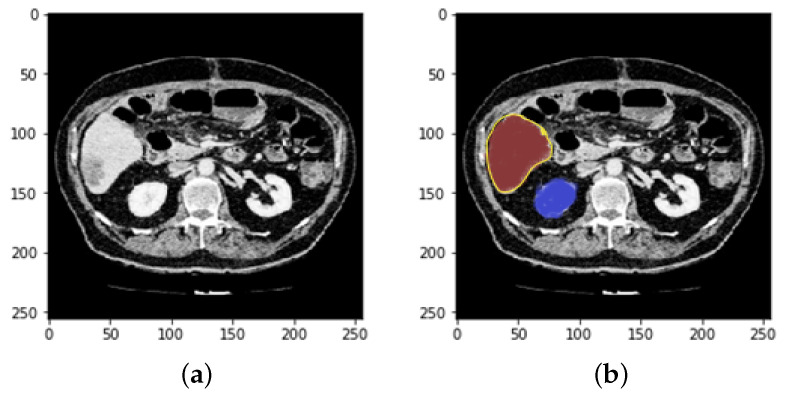

Figure 3.

Preprocessing state of CT image (a) after HU windowing and (b) using ResUNet segmentation.

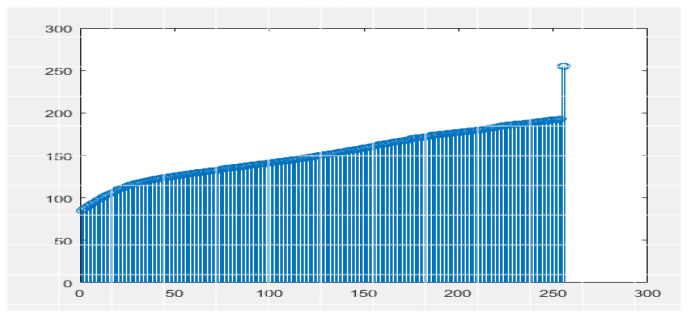

Histogram equalization is an image processing technique used to enhance the contrast between the liver and its neighboring organs so that they are easier to see and interpret. This makes it easier to delineate the liver tumor during segmentation. Histogram equalization is illustrated with before and after CT images in Figure 4. Each CT slice in the dataset has its own collection of tumor volumes and liver masks.

Figure 4.

Preprocessing state of CT image by applying histogram equalization.
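Histogram equalization can be sketched with a lookup table built from the cumulative histogram. The code below is a minimal illustration, assuming an 8-bit grayscale slice and global (not adaptive) equalization; the function name `equalize_histogram` and the toy patch are assumptions, and the exact variant used in this study is not specified in the text.

```python
import numpy as np

def equalize_histogram(image_u8):
    """Global histogram equalization of an 8-bit grayscale image."""
    hist = np.bincount(image_u8.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]        # cumulative count at the darkest used level
    total = image_u8.size
    if total == cdf_min:             # constant image: nothing to equalize
        return image_u8.copy()
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[image_u8]             # map every pixel through the lookup table

# Hypothetical low-contrast patch with gray levels between 100 and 140.
low_contrast = np.array([[100, 100, 110, 110],
                         [110, 120, 120, 120],
                         [120, 130, 130, 130],
                         [130, 130, 140, 140]], dtype=np.uint8)
equalized = equalize_histogram(low_contrast)
print(equalized)
```

After equalization the gray levels of the patch are stretched over the full 0 to 255 range, which is the contrast increase described above.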

3.3. Data Augmentation

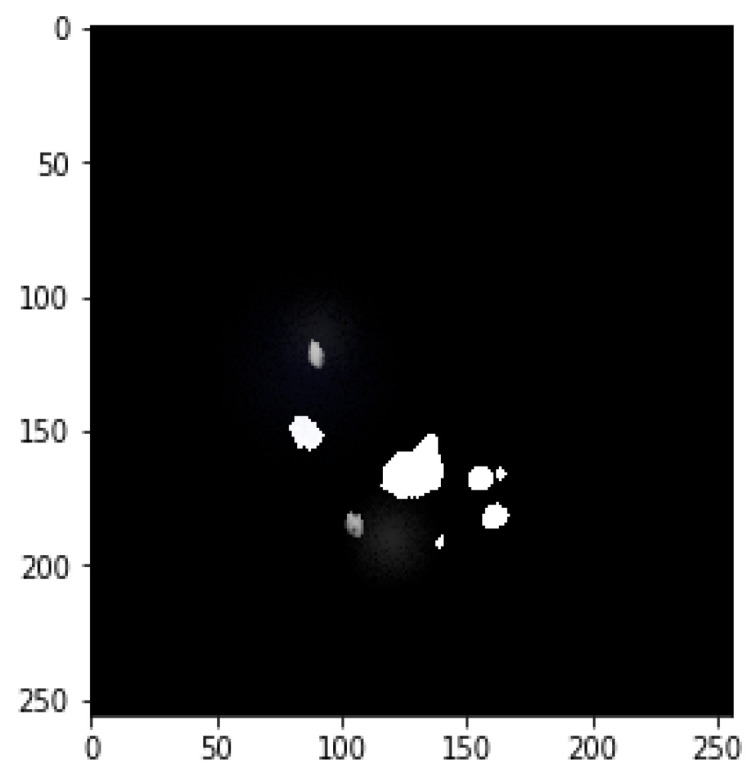

Data augmentation is mainly used to increase the size of the dataset by applying operations such as rotating, shifting, skewing, zooming, and merging. This study primarily focused on reflection and rotation of the images and their masks. Figure 5 depicts the mask image for tumor detection, while Figure 6 shows tumor detection from the mask. Figure 7 shows the final mask merged from both tumors.

Figure 5.

Mask image for tumor detection.

3.3.1. Feature Extraction and Selection

Several features can be derived from medical images, but texture-based features are used for training a classifier or automatic liver or tumor segmentation. Texture analysis provides a wealth of visual data and is an essential part of image analysis [32]. One of the most commonly used statistics is the gray level co-occurrence matrix (GLCM), which is based on second-order statistics of grayscale image histograms [33].
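As an illustration of the second-order statistics mentioned above, the sketch below builds a GLCM for one pixel offset and derives the contrast feature from it. It is a minimal NumPy example; the function name `glcm`, the choice of a horizontal offset, and the 4-level toy image are assumptions, and the text does not state which GLCM features were used.

```python
import numpy as np

def glcm(image, levels, dx=1, dy=0):
    """Count how often gray level i has gray level j at offset (dy, dx)."""
    h, w = image.shape
    matrix = np.zeros((levels, levels), dtype=np.int64)
    # Reference pixels and their neighbors at the requested offset.
    src = image[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    dst = image[max(0, dy):h - max(0, -dy), max(0, dx):w - max(0, -dx)]
    np.add.at(matrix, (src.ravel(), dst.ravel()), 1)
    return matrix

# Toy image quantized to 4 gray levels (0..3).
toy = np.array([[0, 0, 1, 1],
                [0, 0, 1, 1],
                [0, 2, 2, 2],
                [2, 2, 3, 3]])
counts = glcm(toy, levels=4)             # horizontal neighbor, distance 1
probs = counts / counts.sum()            # normalize to joint probabilities
i, j = np.indices(probs.shape)
contrast = float(np.sum(probs * (i - j) ** 2))
print(counts)
print(contrast)
```

Other texture descriptors such as energy or homogeneity can be computed from the same normalized matrix.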

3.3.2. Feature Selection and Merging

All the different masks were combined to improve training and data augmentation. Since the IRCADB01 3D dataset comprises tumor masks for each tumor alone, we had to combine all the masks into one mask to make it easier to train and expand the data.
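Merging the per-tumor masks of a slice amounts to a pixel-wise logical OR. The following is a minimal sketch, assuming each tumor mask is a binary array with the same shape as the slice; the function name `merge_masks` and the toy masks are hypothetical.

```python
import numpy as np

def merge_masks(masks):
    """Combine several binary masks into one mask with a pixel-wise OR."""
    merged = np.zeros_like(masks[0], dtype=bool)
    for mask in masks:
        merged |= mask.astype(bool)
    return merged.astype(np.uint8)

# Two hypothetical tumor masks for the same 3 x 3 slice.
tumor_a = np.array([[1, 0, 0], [0, 0, 0], [0, 0, 0]])
tumor_b = np.array([[0, 0, 0], [0, 1, 1], [0, 0, 0]])
final_mask = merge_masks([tumor_a, tumor_b])
print(final_mask)
```

The merged mask marks a pixel as tumor if it belongs to any of the individual tumor masks.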

3.3.3. Reflection Image and Mask

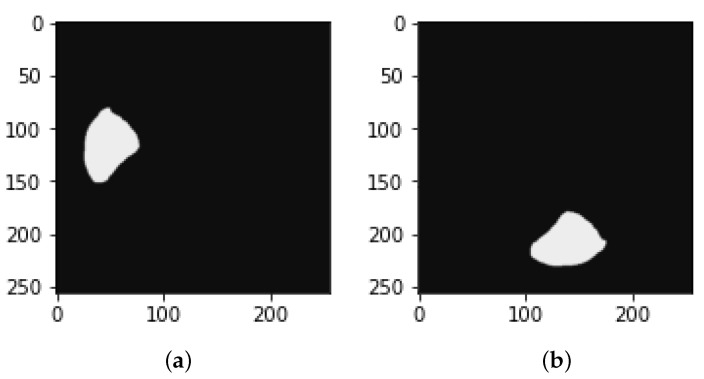

Each slice was reflected about the Y-axis together with its liver mask and tumor mask to improve the dataset's training efficiency. Figure 8 shows a slice before and after reflection, while Figure 9 depicts the mask before and after reflection.

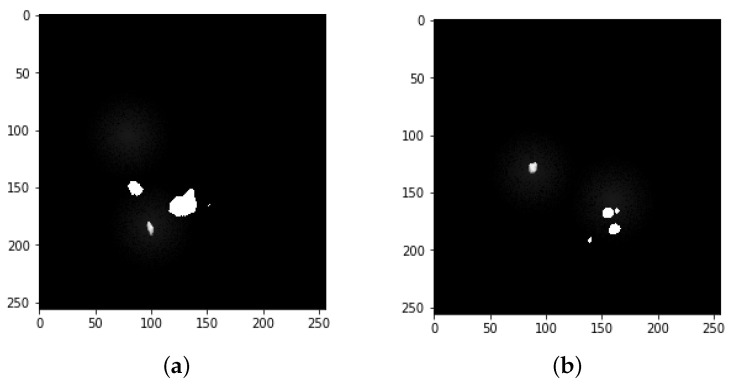

Figure 6.

Image mask samples: both (a,b) show the detected tumors from the mask.

Figure 7.

Final mask merged from both tumors.

3.3.4. Rotation image and mask

We rotate each slice containing a tumor, along with its tumor mask and liver mask, to increase the number of slices, as shown in Figure 10 and Figure 11.

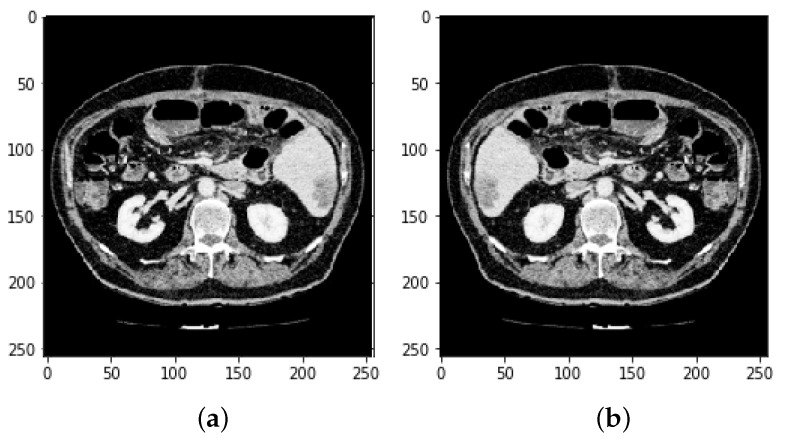

Figure 8.

Image slices (a) before reflection and (b) after reflection.

Figure 9.

Image of mask (a) before reflection and (b) after reflection.
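The reflection and rotation augmentations can be sketched as paired operations, so that every slice and its mask stay aligned. This is a minimal NumPy illustration; the function names are hypothetical, reflection about the Y-axis is interpreted here as a left-right flip, and rotation is restricted to multiples of 90 degrees, both of which are assumptions of the sketch.

```python
import numpy as np

def reflect_pair(image, mask):
    """Flip a slice and its mask left-right (reflection about the vertical axis)."""
    return np.fliplr(image), np.fliplr(mask)

def rotate_pair(image, mask, quarter_turns=1):
    """Rotate a slice and its mask by the same number of 90-degree turns."""
    return np.rot90(image, k=quarter_turns), np.rot90(mask, k=quarter_turns)

# Toy slice and a mask derived from it, so alignment can be checked afterwards.
image = np.arange(6).reshape(2, 3)
mask = image > 3

image_ref, mask_ref = reflect_pair(image, mask)
image_rot, mask_rot = rotate_pair(image, mask)
print(image_ref)
print(image_rot)
```

Applying the identical transform to the image and both masks is what keeps the labels valid after augmentation.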

3.4. Defining Region of Interest (ROI)

Defining the region of interest (ROI) means separating the target area or object of interest from the rest of the image in preparation for feature extraction. Manual, semi-automatic, and automatic processes can be used to define the ROI in medical images, and simple image segmentation is usually used for this purpose. Thresholding, region growing and boundary tracking, classifier methods, deformable models, and atlas-guided methods are some popular approaches. Thresholding normally divides pixels into two groups, one for pixels within a specific intensity range and the other for pixels outside that range. While it is an easy and effective method, it has drawbacks, including the inability to account for spatial image characteristics and sensitivity to noise and intensity inhomogeneity [34,35].
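Thresholding, the simplest of the ROI methods listed above, can be sketched as an intensity-range test followed by a bounding-box crop. The example is a minimal NumPy illustration; the function names, the intensity range, and the toy slice are assumptions and do not reproduce the ROI step of this study, which relies on a trained network.

```python
import numpy as np

def threshold_roi(image, low, high):
    """Return a boolean mask of pixels whose intensity lies in [low, high]."""
    return (image >= low) & (image <= high)

def bounding_box(mask):
    """Return (row_min, row_max, col_min, col_max) enclosing all True pixels."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    return int(rows[0]), int(rows[-1]), int(cols[0]), int(cols[-1])

# Toy slice with a brighter 2 x 2 region standing in for the organ of interest.
toy = np.zeros((5, 5))
toy[1:3, 2:4] = 120.0

roi_mask = threshold_roi(toy, low=100, high=150)
r0, r1, c0, c1 = bounding_box(roi_mask)
roi = toy[r0:r1 + 1, c0:c1 + 1]
print(roi)
```

Because the test looks at each pixel in isolation, it ignores spatial context, which is the limitation noted in the paragraph above.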

Classifier methods use labels to divide the feature space into classes based on tissue type or anatomical region. Supervised classifiers use manual segmentation data as training data, which then guide the automated segmentation of new data. Unsupervised classifiers use clustering methods that serve the same purpose without requiring training data. Deformable models extract region boundaries using a closed parametric curve or surface that deforms in response to the model's internal forces and the image's external forces [36]. There are two types of deformable models: parametric deformable models and geometric deformable models. Atlas-guided methods segment organs using an anatomical atlas that encodes knowledge about the anatomy of interest. This approach seems sufficient if the structures are consistent across slices [37].

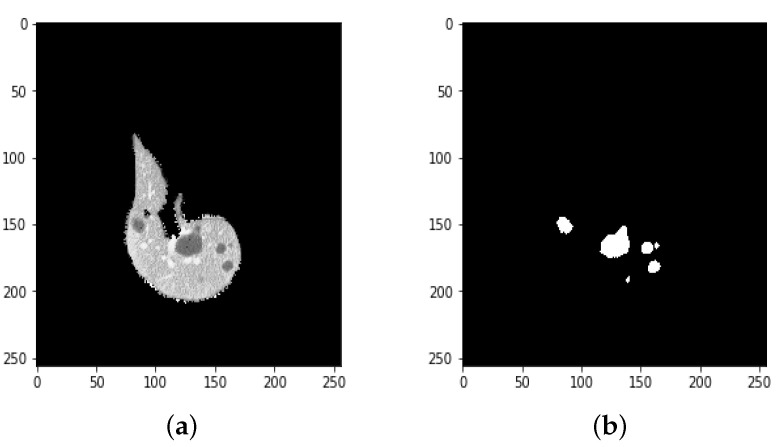

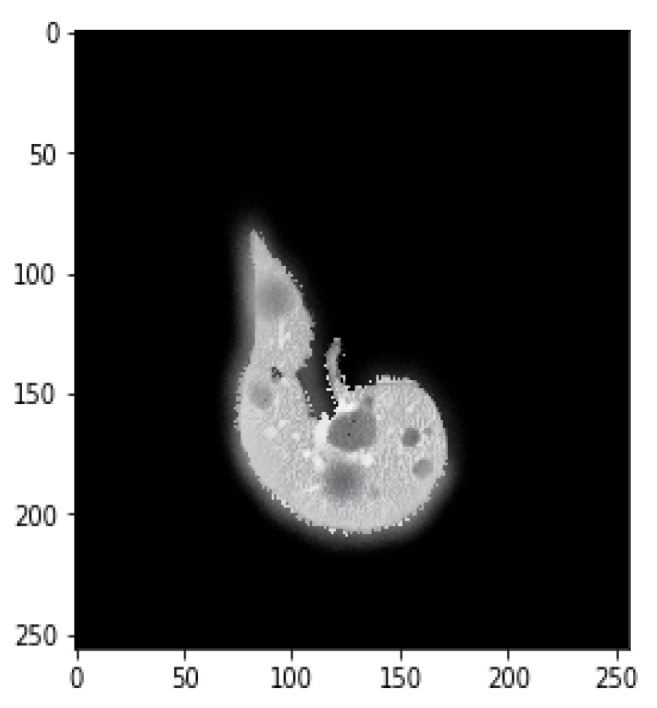

We first used a UNet to segment tumors in the liver, but it gave poor results, so we segmented the tumors with ResUNet instead. The ResUNet was trained on CT scans of the liver, after the ROI had been extracted by the first CNN, together with the tumor masks. Examples are shown in Figure 12 and Figure 13.

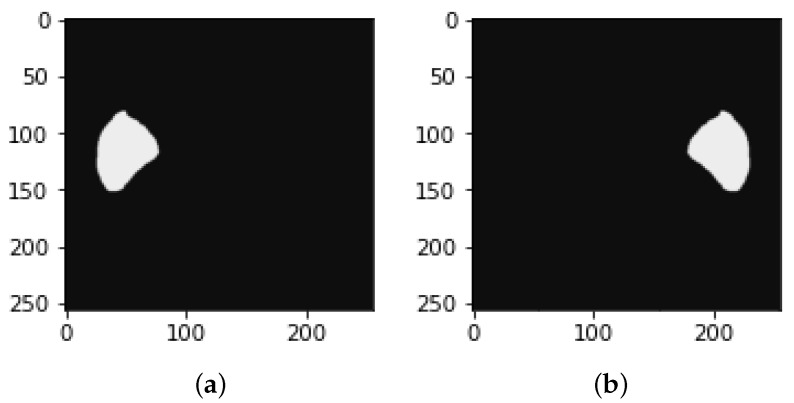

Figure 10.

Image slices (a) before rotation and (b) after rotation by 90°.

Figure 11.

Image of mask (a) before rotation by 90° and (b) after rotation by 90°.

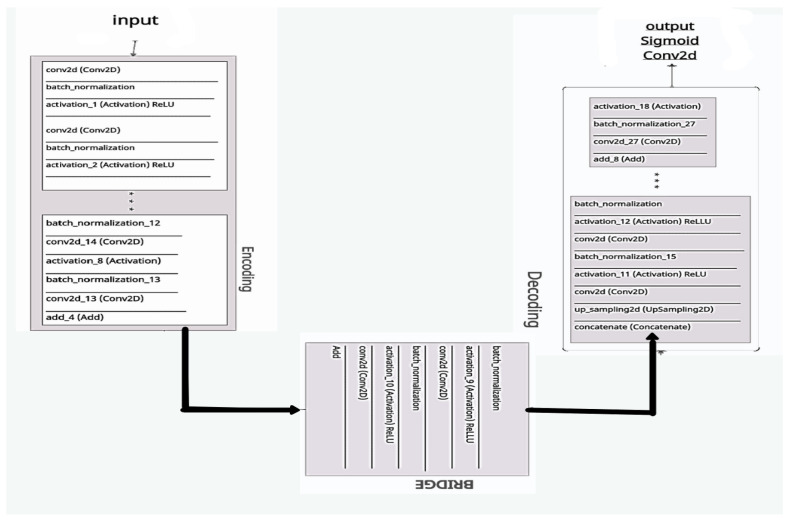

4. Evaluation with ResUNet

ResUNet was trained to locate the region of interest (ROI) and separate it from the nearby organs using CT scans and liver masks. After separating the ROI and training on CT scans of the liver, the ResUNet was used to segment tumors in the liver. ResUNet replaces plain convolutional blocks with residual blocks, combining the advantages of both models. The residual units in each block make the deep network easier to train, and the skip connections between the low and high levels of the network let information propagate without degradation. This also keeps the number of trainable parameters in each residual unit small. Figure 14 shows the ResUNet architecture.

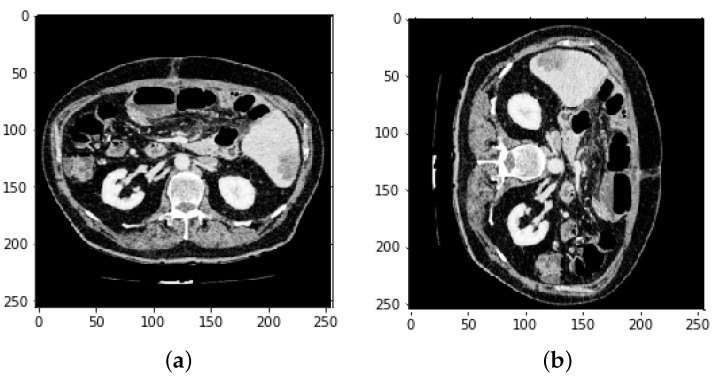

Figure 12.

(a) A ground truth tumor image and (b) the resulting tumor segmentation.

A CNN is a feature extractor that performs well compared with other texture feature extractors. Unlike other complex textural approaches, the features are learned by the convolutional network itself, although CNNs can take a long time to train. CNNs have been shown to be successful in classification tasks [16]. First, the original data are read and augmented, and then both are combined to train the network. As discussed earlier, deep learning models encode and decode the CT images for better segmentation, so the ResUNet comprises three different routes:

Encoding route: converts the input into a compact representation.

Decoding route: reverses the encoding and categorizes the representation pixel by pixel.

Bridge processing: joins the two routes.

ResNet, on the other hand, is an artificial neural network built from residual blocks [13]. In residual blocks, the skip connection principle simplifies and accelerates training in deep, complex networks [38]. The hybrid ResUNet, in turn, allows the convolutional blocks to be replaced entirely by residual blocks [15].
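The skip connection principle can be illustrated with a single residual unit, in which the input is added back to the output of two convolutions. The sketch below is a deliberately simplified NumPy example: it uses one channel, omits batch normalization, and uses a ReLU-then-convolution ordering, all of which are assumptions of the illustration rather than details of the ResUNet trained in this study.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv3x3(x, kernel):
    """Zero-padded 3 x 3 convolution (cross-correlation) of a 2D feature map."""
    padded = np.pad(x, 1)                           # constant zero padding
    windows = sliding_window_view(padded, (3, 3))   # shape (H, W, 3, 3)
    return np.einsum("hwij,ij->hw", windows, kernel)

def residual_unit(x, kernel_1, kernel_2):
    """Two ReLU + convolution stages plus an identity skip connection."""
    out = conv3x3(np.maximum(x, 0), kernel_1)
    out = conv3x3(np.maximum(out, 0), kernel_2)
    return out + x                                  # skip connection

rng = np.random.default_rng(0)
feature_map = rng.normal(size=(8, 8))
k1 = rng.normal(scale=0.1, size=(3, 3))
k2 = rng.normal(scale=0.1, size=(3, 3))
output = residual_unit(feature_map, k1, k2)
print(output.shape)
```

If both kernels are zero, the unit returns its input unchanged, which shows that the convolutions only need to learn a correction to the identity mapping.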

4.1. Segmentation Process of Liver and Liver Tumor

Liver segmentation is the process of dividing a medical image (CT, MRI, or US) into liver parenchyma and non-liver regions. Statistical shape models, graph cuts, clustering, deformable models, region growing, level sets, thresholding, active contours, support vector machines (SVM), neural networks (NN), and other methods are used to segment the liver. Many fully connected networks have recently been developed, and they appear promising. Still, they require a large amount of training data and a high-speed processor, making them computationally costly. By studying the homogeneity function of the region, Pohle et al. proposed an adaptive region growing method to segment the liver automatically [39]. Since this approach is based on homogeneity parameters of the tissue, it under-segments when the target is non-uniform.

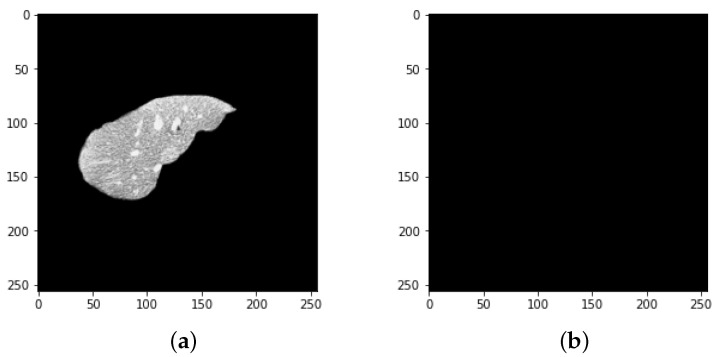

Suzuki et al. used fast-marching level sets and geodesic active contours to create a completely automatic system for calculating liver volume [40,41,42,43], using the segmentation outcome of one slice as the initial segmentation for the next slice. Fast marching and mathematical morphology, statistical adaptive threshold initialization, and k-means clustering were used to create a fully automated system for liver segmentation with graph cut [3,44,45]. Erdt et al. proposed a new SSM based on local shape priors combined with constraints directly derived from the model's current curvature for fully automatic CT liver segmentation [37]. Figure 15 presents the implementation framework of the deep learning neural network for liver and liver tumor segmentation. It involves image preprocessing steps such as noise reduction, standardization, and normalization to enhance the image quality. Data augmentation operations (i.e., reflecting and rotating the image and mask) are performed to augment the training samples. ResUNet was trained to find the region of interest (ROI) and separate it from the nearby organs using CT scans. After separating the ROI and training on CT scans of the liver, the ResUNet was used to segment tumors in the liver.

Figure 13.

Results of the proposed model (a) liver segmentation and (b) tumor segmentation (without tumor).

Furthermore, it is important to segment the tumor before any surgical procedure. At the various stages of liver cancer, the accurate and precise location and shape of a tumor are needed for a better treatment plan. Accurate segmentation also allows the therapy's progress to be monitored over time. Based on deep learning classifiers and models, various semi-automatic and automatic techniques for liver tumor segmentation have been suggested. In one approach, extracted characteristics were used to train a Hopfield neural network to classify organs. Another segmentation algorithm for liver images combined a multi-layer perceptron NN with fuzzy k-means, but only one picture was used for the process, and the result was very disappointing [46]. A few implementations of fully connected networks to segment the liver and liver tumors have been published recently [47,48,49]. A detect-before-extract system was proposed by Chen et al. to locate the liver boundary automatically [50]. Deep neural networks (DNNs) are a form of neural network (NN) with more than one hidden layer, that is, more than three layers in total including the input and output layers [27].

Each layer of nodes in deep learning is trained on a different set of features from the previous layer's output [51,52]. As the network goes deeper, it learns to recognize more complex features by aggregating and recombining features from previous layers [53]. This property is known as feature hierarchy, and it allows deep learning networks to handle very large, high-dimensional data with billions of parameters passing through nonlinear functions [7,53]. DNNs can be used for feature classification as well as automated feature extraction. The DNN classifier is trained on labeled data before being applied to unlabeled, unstructured data, allowing it to process much larger datasets. Aside from that, the approaches of other researchers addressed here have only used a single-scale, high-magnification patch with a cell-level, information-based pattern. The tumor cells could not be detected completely. Identifying normal and abnormal cells in the liver was a challenging task that required a thorough examination of the layers of 3D images.

These published studies support several conclusions. First, compared with semi-automatic methods, automatic systems do not degrade segmentation performance. Second, intensity alone does not seem adequate for segmenting lesions other than metastases. Third, a liver envelope appears necessary for segmenting liver tumors, particularly for automatic approaches: previous research has shown that segmentation is best achieved when it is restricted to the liver. Finally, deep learning and machine learning methods work well for the liver. Deep learning is important in segmenting liver tumors, particularly when texture changes differentiate the lesions and prior knowledge about the types of liver lesions is available.

Moreover, deep learning techniques are frequently employed to segment liver tumors. When using texture features, deep learning is particularly useful because the choice and combination of these features are challenging in supervised data schemes. All methods that rely on texture features use deep learning techniques but are limited to an ROI based on the lesion that the user indicates, as this is the best option.

This article attempts to fill the gaps left by previous research. The convolutional network architecture has been used in previous studies to facilitate the use of multiple magnifications while providing information about the cellular structure for low-magnification patches. Based on earlier research, the authors of this study aim to achieve 91% mIOU on HCC tumors by using the convolutional-network-based VGG and Inception V4. The ResNet architecture is arranged as a sequence of encoder and decoder layers, forming a deep convolutional encoder-decoder. The proposed architecture was evaluated on a typical liver computed tomography dataset with tumor volumes during the training process. The research technique aims to improve accuracy and meet expectations in diagnosing liver tumors.

To get the best results, the UNet model was combined with another model: the authors created ResUNet, which uses skip connections instead of the plain convolutions used by traditional networks, allowing faster training with less data.

4.2. Final Results

All patients were examined with CT images. Diagnostic results are analyzed in terms of sensitivity, accuracy, error rate, and specificity obtained using the values of true positive (TP), true negative (TN), false positive (FP), and false negative (FN). The formulation of these evaluation parameters is shown in the following equations:

| (1) |

Probability that the test will correctly recognize a patient who has the disease:

| (2) |

Probability that the test will correctly recognize a patient who does not have the disease:

| (3) |

Accuracy provides general information about how many samples are correctly classified:

| (4) |
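As a hedged illustration of the quantities above, the following sketch computes them from TP, TN, FP, and FN using their standard textbook definitions, which are assumed here to correspond to the equations referenced in this section. The function names and the toy labels (1 = lesion, 0 = background) are hypothetical and are not taken from the study.

```python
from typing import Dict, Sequence


def confusion_counts(truth: Sequence[int], pred: Sequence[int]) -> Dict[str, int]:
    """Count TP, TN, FP, and FN for two equal-length binary (0/1) label sequences."""
    if len(truth) != len(pred):
        raise ValueError("truth and pred must have the same length")
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for t, p in zip(truth, pred):
        if t == 1 and p == 1:
            counts["TP"] += 1
        elif t == 0 and p == 0:
            counts["TN"] += 1
        elif t == 0 and p == 1:
            counts["FP"] += 1
        else:  # t == 1 and p == 0
            counts["FN"] += 1
    return counts


def diagnostic_metrics(counts: Dict[str, int]) -> Dict[str, float]:
    """Standard definitions; a ratio with an empty denominator is reported as 0.0."""
    tp, tn, fp, fn = counts["TP"], counts["TN"], counts["FP"], counts["FN"]
    total = tp + tn + fp + fn

    def ratio(numerator: int, denominator: int) -> float:
        return numerator / denominator if denominator else 0.0

    return {
        "sensitivity": ratio(tp, tp + fn),   # TP / (TP + FN)
        "specificity": ratio(tn, tn + fp),   # TN / (TN + FP)
        "accuracy": ratio(tp + tn, total),   # (TP + TN) / all samples
        "error_rate": ratio(fp + fn, total), # (FP + FN) / all samples
    }


# Toy example with invented labels.
truth = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
pred = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
counts = confusion_counts(truth, pred)
metrics = diagnostic_metrics(counts)
```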

Intersection over union (IoU) is the number of correctly classified pixels relative to the union of the predicted pixels and the ground-truth pixels of the same class. The mIoU is the average of the IoU of the segmented objects and of the rest of the image over the samples of the test dataset. It can be written as follows:

| (5) |
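A minimal sketch of how IoU and mIoU can be computed from label maps is given below. It assumes the usual definition, IoU = TP / (TP + FP + FN) per class, and takes mIoU as the unweighted mean over classes. The helper names and the toy labels (0 = background, 1 = liver, 2 = tumor) are assumptions made for illustration only.

```python
from typing import Sequence


def class_iou(truth: Sequence[int], pred: Sequence[int], label: int) -> float:
    """IoU of one class: |truth AND pred| / |truth OR pred| for that label."""
    intersection = sum(1 for t, p in zip(truth, pred) if t == label and p == label)
    union = sum(1 for t, p in zip(truth, pred) if t == label or p == label)
    # A class absent from both maps has no defined IoU.
    return intersection / union if union else float("nan")


def mean_iou(truth: Sequence[int], pred: Sequence[int], labels: Sequence[int]) -> float:
    """Unweighted mean of the per-class IoU values, skipping undefined classes."""
    scores = [class_iou(truth, pred, label) for label in labels]
    valid = [s for s in scores if s == s]  # NaN is the only value not equal to itself
    return sum(valid) / len(valid) if valid else float("nan")


# Toy flattened label maps with invented values.
truth = [0, 0, 0, 0, 1, 1, 1, 2, 2, 0]
pred = [0, 0, 0, 1, 1, 1, 1, 2, 0, 0]

iou_background = class_iou(truth, pred, 0)
iou_liver = class_iou(truth, pred, 1)
iou_tumor = class_iou(truth, pred, 2)
miou = mean_iou(truth, pred, labels=[0, 1, 2])
```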

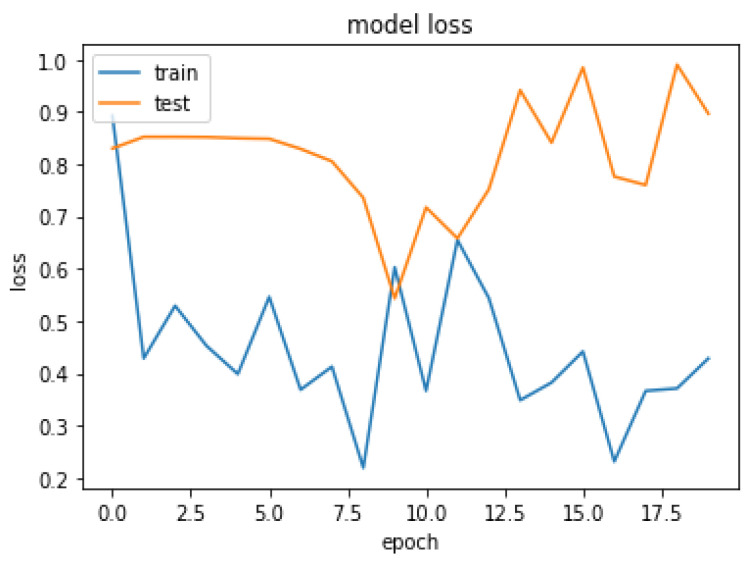

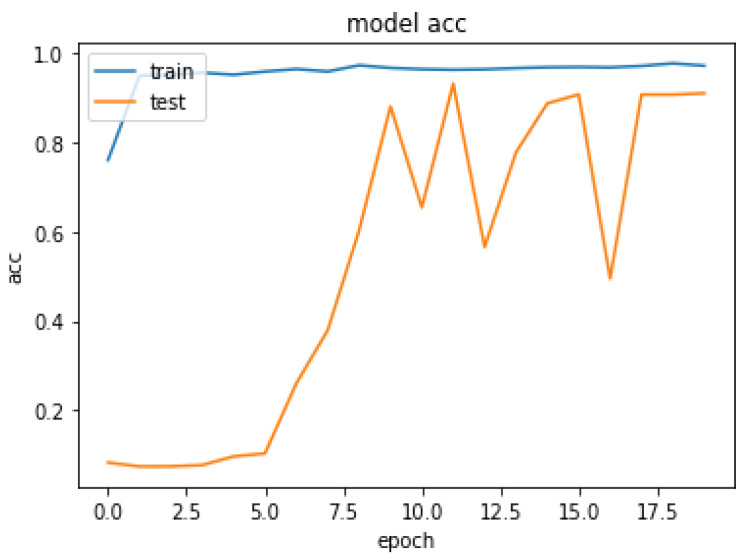

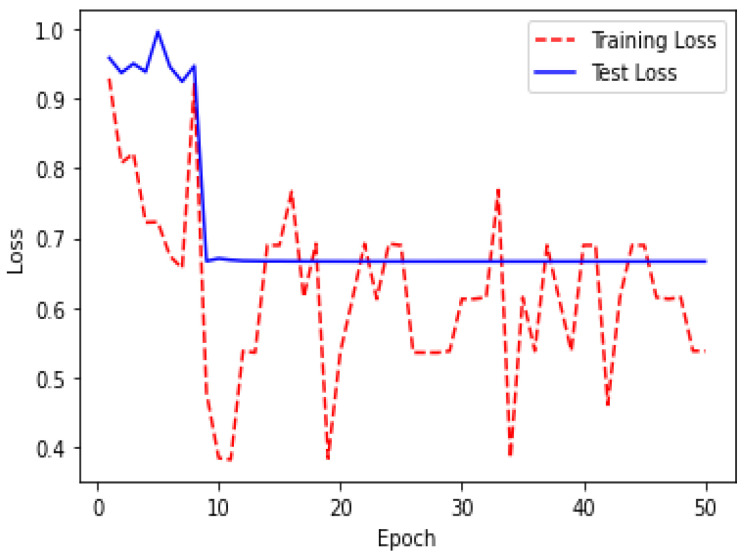

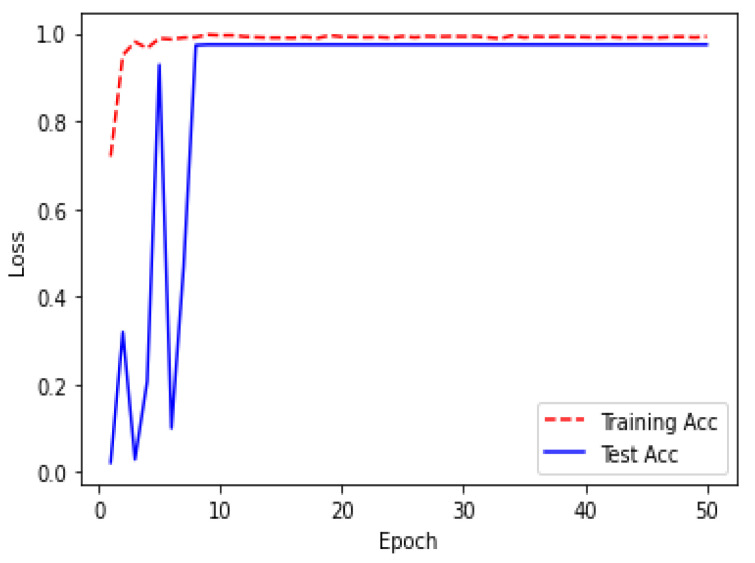

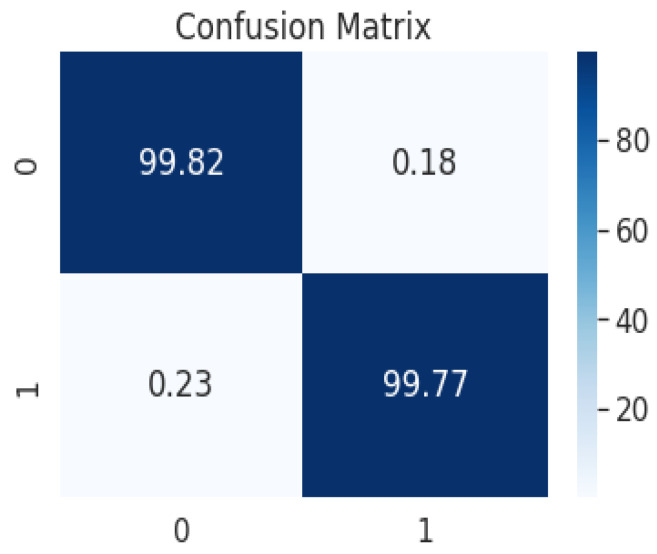

Because the model overfits the data, the validation loss is high after five epochs and the validation Dice coefficient decreases. The results of segmenting the liver with the ResUNet model are shown in Figures 14 to 19. Finally, Figure 20 shows the confusion matrix of the predicted values after tumor segmentation.
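One common way to act on this kind of overfitting signal is early stopping: keep the weights from the epoch with the lowest validation loss and stop once the loss has not improved for a fixed number of epochs. The sketch below assumes a hypothetical `best_epoch` helper and made-up loss values. It is not the training procedure used in this study.

```python
from typing import Sequence, Tuple


def best_epoch(val_losses: Sequence[float], patience: int = 3) -> Tuple[int, int]:
    """Return (best_epoch, stop_epoch), both 1-indexed.

    Training is treated as stopped once `patience` epochs have passed without
    improving on the best validation loss seen so far. If that never happens,
    stop_epoch is simply the last epoch.
    """
    if not val_losses:
        raise ValueError("val_losses must not be empty")
    best_idx = 0
    for idx, loss in enumerate(val_losses):
        if loss < val_losses[best_idx]:
            best_idx = idx
        elif idx - best_idx >= patience:
            return best_idx + 1, idx + 1
    return best_idx + 1, len(val_losses)


# Invented validation losses that improve for five epochs and then rise.
illustrative_losses = [0.60, 0.45, 0.38, 0.33, 0.31, 0.36, 0.40, 0.47, 0.52]
best, stopped = best_epoch(illustrative_losses, patience=3)
```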

Figure 14.

The proposed ResUNet architecture.

Figure 15.

Implementation framework of deep learning neural network on liver and liver tumor segmentation.

Figure 16.

Loss over 20 epochs of ResUNet training for liver segmentation.

Figure 17.

Acc over 20 epochs of ResUNet training for liver segmentation.

Figure 18.

Loss over 50 epochs of ResUNet training for tumor segmentation.

Figure 19.

Acc over 50 epochs of ResUNet training for tumor segmentation.

Figure 20.

Confusion matrix from the predicted value after tumor segmentation, achieving an Acc of 99.6% and a Dice coefficient of 99.2%.

Table 1 shows the training progress of the ResUNet model for liver segmentation over 20 epochs, and Table 2 shows the results of tumor segmentation and the training progress over 50 epochs. Other assessment measures, namely accuracy and SVD, were also determined. The SVD shows the difference between the real and predicted masks. The proposed model achieved an accuracy of 99% with an SVD score of 0.22, the lowest difference between the actual and predicted masks, as displayed in Table 3. The reason for the higher accuracy is class imbalance. In CT images, most pixels belong to the background class, where the probability of a tumor being present is very low. Consequently, the accuracy value is biased toward the background class, since accuracy counts the TP, FP, TN, and FN of all classes together.
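The effect of class imbalance on accuracy can be seen in a small worked example. The sketch below assumes a hypothetical slice of 1000 pixels in which only 10 belong to the tumor, and compares accuracy with the Dice coefficient for two invented predictions. The numbers are made up purely to illustrate the bias and are not results from this study.

```python
from typing import Sequence


def accuracy(truth: Sequence[int], pred: Sequence[int]) -> float:
    """Fraction of pixels whose predicted label equals the true label."""
    return sum(1 for t, p in zip(truth, pred) if t == p) / len(truth)


def dice(truth: Sequence[int], pred: Sequence[int]) -> float:
    """Dice coefficient for binary masks: 2 * |A AND B| / (|A| + |B|)."""
    intersection = sum(1 for t, p in zip(truth, pred) if t == 1 and p == 1)
    denominator = sum(truth) + sum(pred)
    # Two empty masks agree perfectly by convention.
    return 2 * intersection / denominator if denominator else 1.0


# Hypothetical slice: 10 tumor pixels among 1000.
truth = [1] * 10 + [0] * 990
all_background = [0] * 1000        # degenerate model that never predicts tumor
half_found = [1] * 5 + [0] * 995   # model that finds half of the tumor pixels

acc_background = accuracy(truth, all_background)
dice_background = dice(truth, all_background)
acc_half = accuracy(truth, half_found)
dice_half = dice(truth, half_found)
```

In this toy case the degenerate model reaches an accuracy of 0.99 with a Dice coefficient of 0, which is why overlap-based measures are usually read alongside accuracy for small structures.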

Table 1.

Loss and Acc values on the training and validation data for the ResUNet model for liver segmentation at different epochs.

| Epoch | Loss | Acc |
|---|---|---|
| 1 | 0.3927 | 0.8608 |
| 2 | 0.4286 | 0.9696 |
| 4 | 0.4525 | 0.9867 |
| 6 | 0.5462 | 0.9593 |
| 8 | 0.4127 | 0.9794 |
| 10 | 0.2027 | 0.9673 |
| 12 | 0.6548 | 0.9632 |
| 19 | 0.3710 | 0.9776 |
| 20 | 0.4284 | 0.9923 |

Table 2.

Loss and Acc values on the training and validation data for the ResUNet model for tumor segmentation at different epochs.

| Epoch | Loss | Acc |
|---|---|---|
| 1 | 0.2288 | 0.9196 |
| 2 | 0.5079 | 0.9504 |
| 4 | 0.7225 | 0.9660 |
| 6 | 0.6742 | 0.9864 |
| 8 | 0.8204 | 0.9913 |
| 10 | 0.3850 | 0.9953 |
| 12 | 0.4382 | 0.9924 |
| 49 | 0.5383 | 0.9906 |
| 50 | 0.2382 | 0.9927 |

Table 3.

Segmentation results of the proposed framework, reported as mean ± standard deviation.

5. Limitations of the Proposed Approach

We acknowledge that the sample dataset size of the present study is an obvious limitation that prevents us from generalizing the results. Although the ResUNet produced very promising results, a few limitations remain. These could be addressed by training for more epochs, using more data, using different datasets, or using different preprocessing strategies. The findings are summarized first through some examples and then through a discussion of the general case. A collection of images with various types of tumors was subjected to direct segmentation using a classification function. Three slices were chosen to demonstrate segmentation accuracy by contrasting the automatic segmentation with the ground truth.

In addition, training a neural network on a limited sample size is considered a risk. Therefore, the results presented should be interpreted with caution, and future research should increase the sample size to confirm that the results are sufficiently supported. Deep learning techniques are often used to segment liver tumors and are particularly useful with texture features, because selecting and combining these features is a challenge for supervised schemes. All of the methods are based on texture characteristics and use deep learning, but they are limited to an ROI defined by the lesion the user indicates, as this is the best option.

6. Conclusions and Future Work

This paper presents the use of a deep learning model for tumor and liver segmentation in CT images. The hybrid ResUNet is significantly more effective than baseline methods in terms of training time, memory usage, and accuracy. The binary segmentation-by-classification layout was created to make processing medical images easier. The 3D-IRCADb-01 dataset was used to train and evaluate the proposed model. The proposed technique correctly identifies most tumor areas, with a tumor classification accuracy of over 98%. A review of the results revealed a small number of false positives, which could be reduced with false-positive filters and by training the model on a larger dataset.
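One simple form such a false-positive filter could take is to discard predicted tumor regions that are smaller than a minimum size. The sketch below is an assumption about what a filter of this kind might look like, not the authors' method: the `remove_small_components` helper, the 4-connectivity rule, and the `min_size` threshold are all hypothetical choices.

```python
from collections import deque
from typing import List

Mask = List[List[int]]


def remove_small_components(mask: Mask, min_size: int) -> Mask:
    """Keep only 4-connected foreground regions with at least `min_size` pixels."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    cleaned = [[0] * cols for _ in range(rows)]
    seen = [[False] * cols for _ in range(rows)]

    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first search collects one connected region.
            component = []
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Regions below the threshold are treated as likely false positives.
            if len(component) >= min_size:
                for y, x in component:
                    cleaned[y][x] = 1
    return cleaned


# Invented prediction: one 2x2 region and two isolated pixels.
predicted = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
]
filtered = remove_small_components(predicted, min_size=3)
```

A size threshold also removes genuinely small lesions, so in practice it would have to be tuned against the FN rate.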

ResUNet delivered excellent results in terms of diagnosing quickly and efficiently. As the results show, deep learning neural networks helped us achieve our goals and are possibly the best tool for segmenting liver tumors. Because the ResUNet showed promising results, it can also be tried on tumors other than liver tumors. Its performance can be improved by using more datasets and different preprocessing techniques. It can aid in the diagnosis of liver tumors, with 99.9% precision. The Dice coefficient (DC) also increased, suggesting that the experiment went well and that the model is ready for use in detecting liver tumors. In future studies, we plan to explore a new deep learning model to further improve tumor localization accuracy, lower the FN rate, and increase the IoU metric.

Author Contributions

Conceptualization, H.R. and T.F.N.B.; methodology, T.F.N.B.; investigation, A.I.; resources S.T., A.A.; data curation, J.T.; writing—original draft preparation, T.F.N.B.; writing—review and editing, A.I., A.A.; visualization, S.T.; supervision, H.R. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not Applicable.

Informed Consent Statement

Not Applicable.

Data Availability Statement

The dataset is available at http://www.ircad.fr/research/3dircadb.

Conflicts of Interest

The authors declare that there is no conflict of interest.

Funding Statement

This work is supported in part by the Beijing Natural Science Foundation (No. 4212015) and China Ministry of Education—China Mobile Scientific Research Foundation (No. MCM20200102).

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

