Abstract

The application of artificial intelligence (AI) algorithms in medicine could support diagnostic and prognostic analyses and decision making. In the field of dermatopathology, there have been various papers that have trained algorithms for the recognition of different types of skin lesions, such as basal cell carcinoma (BCC), seborrheic keratosis (SK) and dermal nevus. Furthermore, the difficulty in diagnosing particular melanocytic lesions, such as Spitz nevi and melanoma, considering the grade of interobserver variability among dermatopathologists, has led to an objective difficulty in training machine learning (ML) algorithms to a totally reliable, reportable and repeatable level. In this work we tried to train a fast random forest (FRF) algorithm, typically used for the classification of clusters of pixels in images, to highlight anomalous areas classified as melanoma “defects” following the Allen–Spitz criteria. The adopted image vision diagnostic protocol was structured in the following steps: image acquisition by selecting the best zoom level of the microscope; preliminary selection of an image with a good resolution; preliminary identification of macro-areas of defect in each preselected image; identification of a class of a defect in the selected macro-area; training of the supervised machine learning FRF algorithm by selecting the micro-defect in the macro-area; execution of the FRF algorithm to find an image vision performance indicator; and analysis of the output images by enhancing lesion defects. The precision achieved by the FRF algorithm proved to be appropriate with a discordance of 17% with respect to the dermatopathologist, allowing this type of supervised algorithm to be nominated as a help to the dermatopathologist in the challenging diagnosis of malignant melanoma.

Keywords: artificial intelligence, AI, malignant melanoma, skin, software, algorithms, fast random forest (FRF)

1. Introduction

After the foundations of artificial intelligence (AI) were established, as postulated by the mathematician Alan Mathison Turing [1], the study and the analysis of AI underwent an important halt under the weight of expectations until the end of the last century; from these years, indeed, a new wave of technological development allowed the functional enhancement of computers, which became more and more powerful and complex [2]. Even today it is not clear whether a machine (computer) could be able to think, but certainly the development of increasingly complex systems, such as convolutional neural networks (CNNs) [3], has allowed a rapid increase in performance not only in fields of computer science, but also in different fields, such as medical, pathological and even subclassified dermatopathological fields [4,5].

The first attempts based on convolutional neural networks (CNNs) have already proven to be up to expectations, but the objective difficulty in reproducibility of the criteria for labeling a lesion as malignant or benign has also been reflected in the training of AI applied to differential diagnostics of atypical pigmented lesions. [3,4]. Indeed, it is not always easy to diagnose melanoma by analyzing the images with a “naked eye” approach. The problem has been known for some time and is classically defined as the “gray zone” of dermatopathology: in fact, beyond the extremes of the spectrum of benign and malignant, there is a fairly large number of atypical pigmented lesions that it is not easy to define “tout-court“ as having benign or malignant biological behavior. This uncertainty is the consequence of the fact that the criteria normally used to define a lesion as malignant melanoma become conflicting and blurred in certain situations; for example, nevi with severe dysplasia, Spitz nevi with dysplastic aspects (so-called SPARK nevus) or melanocytic lesions with an uncertain potential for malignancy such as MELTUMP or SAMPUS. The loss of criteria defined and recognized by all, as well as the interpretative subjectivity, has repercussions on the reduction or, sometimes, loss of agreement between dermatopathologists, and also (as some studies have highlighted) even on intraobserver agreement over time [2,3,4].

In this work we tried to train the proposed fast random forest (FRF) algorithm to be able to support the specialist to highlight automatically the “anomalous pixel regions” and to estimate a possible risk by quantifying the percentage of these regions with atypical morphological features starting from routine histopathological images (digital pathology).

2. Materials and Methods

2.1. Data Acquisition

For training, validation and testing, we used a dataset of 125 photomicrographs of 63 patients suffering from malignant melanoma, originally taken at 1280 × 1080 pixels (SONY® Sensor IMX185, Tokyo, Japan) at 10× magnification, obtained from blocks of material fixed in formaldehyde and embedded in paraffin (FFPE) from 1 January 2020 to 1 January 2021. For each patient, demographic and clinical characteristics, including the Breslow thickness and other histopathological features, were recorded for routine clinical practice. Informed consent was obtained from all patients involved and the study was approved by the local ethical committee. In total, 63 specimens (mean length ± SD 1.64 ± 0.93 cm) were processed. For all specimens, hematoxylin and eosin (H&E) staining was used for histopathological analysis and, in some cases, ancillary immunohistochemical techniques were performed for a final correct diagnosis. For each of them, each individual case was analyzed and a more representative slide was chosen by two dermatopathologists with a lot of experience in skin histopathology (G.C. and A.C.). After collecting all the slides, we proceeded to identify at least 5 potential defects (hotspots) normally used in routine dermatopathological diagnostics to differentiate a dysplastic nevus from a malignant melanoma. The defects analyzed were divided into architectural (disposition of the single and aggregates of melanocytes, symmetry/asymmetry of the lesion in question) and cytological (nuclear atypia, pagetoid spreading of the melanocyte, possible necrosis) groups following the Spitz–Allen criteria [6] (Table 1).

Table 1.

Summary of these criteria.

| Dysplastic Nevus | Malignant Melanoma |

|---|---|

| Architectural criteria (mandatory, major) | |

| Lentiginous or contiguous melanocytic hyperplasia | Poor circumscription of the intraepidermal melanocytic component of the lesion |

| Focal melanocytic atypia | Increased number of melanocytes, solitary and in nests, within and above the epidermal basal cell layer and within adnexal epithelia (pagetoid spreading) |

| Marked variation in size and shape of the melanocytic nests | |

| Absence of maturation of melanocytes with descent into the dermis | |

| Melanocytes in mitosis | |

| Architectural criteria (minor, at least 2) | |

| “Shoulder phenomenon” | Melanocytes with nuclear atypia |

| Fusion of epithelial cones | Necrosis or degeneration of melanocytes |

| Subepidermal concentric lamellar fibrosis |

2.2. Fast Random Forest (FRF) Image Classification

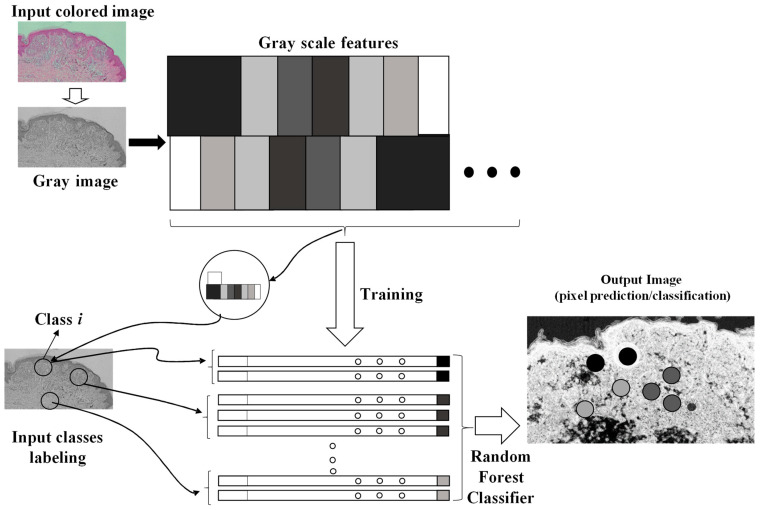

The artificial intelligence image processing algorithm used to classify and to enhance anomalies contained in the microscope image is the fast random forest (FRF) algorithm. The algorithm was designed by using a Java-based script framework. The learning process of the algorithm is based on a preliminary classification of clusters of pixels of the same image [5] including possible melanoma areas: the preliminary identification of melanoma morphological features represents the labeling approach typical of machine learning-supervised algorithms. The FRF testing provides as output the processed image with color-enhanced melanoma pixel clusters (each class selected in the learning step is represented by a color), probabilistic maps (high probability highlighted with white to identify an anomaly in a specified image region) and algorithm performance indicators (precision, recall, and receiver operating characteristic (ROC) curves [5]). The FRF algorithm executes an ensemble of decision trees able to classify clusters of pixels constituting an image with good accuracy, low computational cost and performing a multiclass segmentation [7,8,9,10,11,12]. The classification process is addressed by labeling clusters of pixels defined by the same features (clusters of gray pixel intensities). At the beginning, decision trees randomly select clusters of pixels by splitting the features at each node. The final classification of image areas are a result of the average classification of all the trees constructed during the algorithm iterations. The method is sketched in Figure 1, where it is possible to distinguish the following phases:

Different clusters of pixels (features) are used for the training model;

A marked region is distinguished as the features to find in the same image to process;

The RF is executed by finding similar features in the same image (similar features of similar clusters having a similar gray pixel intensity distribution).

Figure 1.

Image processing procedure based on pixel feature training and random forest classifier.

The training model can be constructed by setting the following tools or procedures (algorithm parameters):

Gaussian blur filtering, obtaining a blurred image to process;

Sobel filtering, which is able to approximate the image by a gradient of the intensity;

Hessian filtering, defined as:

| (1) |

where h1, h2, h3 and h4 are the elements of the Hessian matrix formed by the second-order partial derivatives stating the variation of the intensity of each pixel (derivative at the pixel point) in the x, y and xy direction (plane directions of the 2D image).

Defined by a Trace, a determinant (Det), a first eigenvalue (Fe), a second eigenvalue (Se), an orientation (Or; angle of the maximal second derivative), a Gamma, and a Square Gamma:

| (2) |

| (3) |

| (4) |

| (5) |

| (6) |

| (7) |

| (8) |

Difference of Gaussian functions;

Membrane projections due to the rotation of the original image kernel;

Main pixel parameters (mean, minimum, maximum, etc.);

Anisotropic diffusion filtering preserving sharp edges;

Bilateral filtering preserving edges;

Lipschitz filtering (preserving edges and decreasing noise);

Gabor filtering (mainly adopted for texture analysis);

Derivative filtering estimating high order derivatives;

Structured filtering estimating the eigenvalues;

Shifting of the image in different directions.

The optimized hyperparameters and filter properties applied for the image FRF processing (feature training) are: the Gaussian blur filter, Hessian matrix filter, membrane projections, membrane thickness equal to 1, membrane patch size equal to 19, minimum sigma equal to 1, and maximum sigma equal to 16.

In the proposed approach, the same image is adopted both for the training and for the testing: each image is converted into a grayscale image, and in the same image [12] the classes training the model are identified (classes of similar features including those of the Spitz nevi).

Different algorithms can be applied to calculate automatically the different areas [13]. In order to enhance classified clusters in the probabilistic image, a threshold filtering adjusting the intensities of the output grayscale image was adopted (see example of Appendix A): this setting is important to estimate with good precision the areas enclosing dermatological defects. The testing images were preliminary selected, taking into account images with certain defects in order to train the FRF algorithm efficiently.

The adopted method is summarized by the following steps:

-

-

A preliminary selection of images focusing the attention on the characteristics of dysplastic nevi and malignant melanoma (validation of the training model focusing on this classification);

-

-

Performing of a training of the selected images (FRF training model based on the classification of anomalous image areas embedding features of dysplastic nevi and MM, and the identification of other no-risk areas as structures of clusters of grayscale pixels);

-

-

Setting the optimization of the FRF algorithm parameters for the best identification of classes of the testing dataset;

-

-

Testing the execution of the FRF algorithm’s detection and estimation of anomalous areas by applying, after the analysis, image threshold filters (for the calculus, all the images have the same dimension of 1000 pixels × 2000 pixels);

-

-

Verification of the algorithm performance by estimating its precision.

3. Results

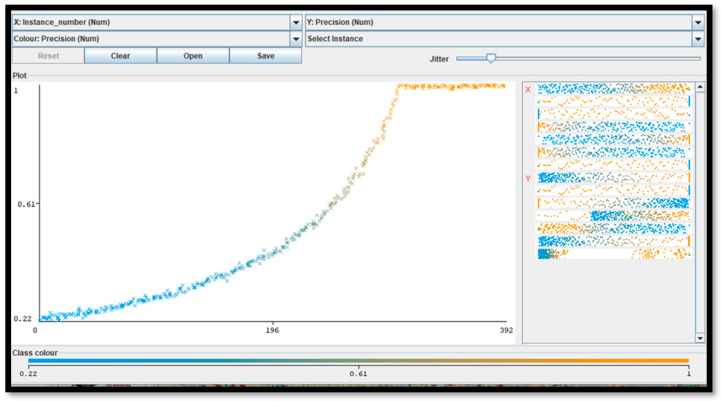

For five pixel clusters of the same dimensions (closest to the particular anomaly), a number of about 300 instances (computational cycles) occurs to achieve the maximum precision (equal to 1), with a computational cost of about 2 min using a processor, the Intel(R) Core(TM) i5-7200U CPU, 2.71 GHz. The minimum recall performance parameter (near to 0) is achieved in about 392 instances. The ROC curve (representing the true positive rate versus the false positive rate in the plane) is matched with the ideal curve of a perfect classifier (Figure 2). The performance indicators confirm the correct setting of the FRF hyperparameters.

Figure 2.

This figure illustrates the error metric (precision parameter [5]) of the adopted FRF algorithm versus iteration number (instance number) by proving that the final results are characterized by the maximum precision.

Appendix B illustrates the FRF probabilistic images of some of the classified areas. The algorithm performance is estimated by the precision parameter representing the metric of the FRF score (probability of algorithm error). A maximum precision of 1 is achieved after about 250 iteration steps (see Figure 2).

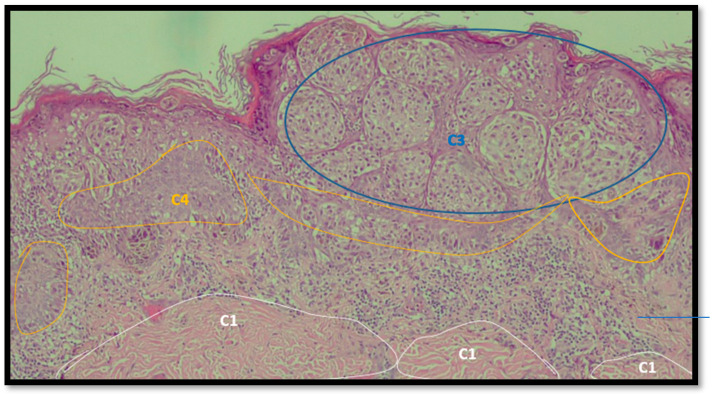

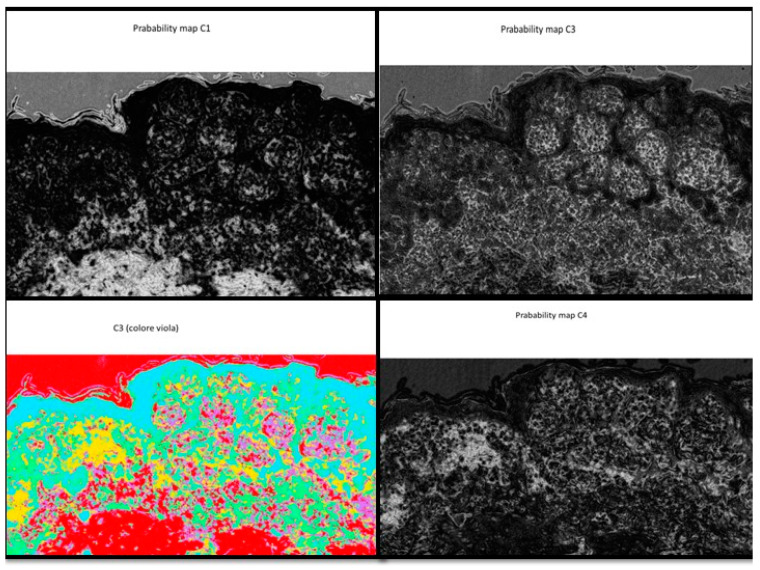

An example of image classification is illustrated in Figure 3 where C1, C3 and C4 are the four classes used to construct the learning model (areas having similar characteristics).

Figure 3.

Example of analysis and selection of defects (such as architectural and cytological atypia, pagetoid spreading, possible ulceration) in the macro-area of an image of malignant melanoma. Note the different colors of the circles/ellipses used to subclassify the anomalies (defects).

In Table 2 and Table 3 the FRF enhancement of possible classified defects are shown by estimating the percentage coverage in 1 mm × 2 mm images.

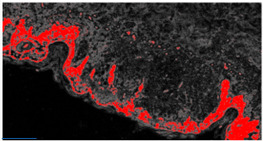

Table 2.

Example of analysis of two micrographs of malignant melanoma in which some constituent elements of the Allen–Spitz criteria have been analyzed, such as: symmetrical or asymmetrical lesion, clustering of melanocytes in nests or presence of single melanocyte, and eventual pagetoid spreading.

| Original Image | Defect Type (Name) | Defect Cluster (Enhanced Probability Image) | Percentage Presence on the Whole Image | Extension [mm2] |

|---|---|---|---|---|

| IMG00131 EE | // |

|

5.3% | 0.106 |

| IMG00132 EE | // |

|

4.1% | 0.082 |

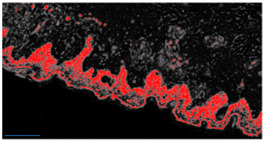

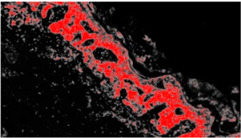

Table 3.

Example of two other images whose cytological characteristics were studied, including: cellular atypia, eventual pagetoid spreading, mitosis and nuclear pleomorphism.

| Original Image | Defect Type (Name) | Defect Cluster (Enhanced Probability Image) | Percentage Presence on the Whole Image | Extension [mm2] |

|---|---|---|---|---|

| IMG00150 | // |

|

6.6% | 0.132 |

| IMG00151 | // |

|

8.8% | 0.176 |

Analyzing the discrepancy between the performance of the FRF algorithm and the diagnosis by the dermatopathologist, a value of 17% was found, which is slightly lower than that described in the interobserver variability (about 25–26%).

4. Discussion

Historically, the histopathological diagnosis of malignant melanoma has always fluctuated from rather simple and easy to classify cases up to very complex and difficult to interpret cases [14], considering that, in the context of human pathology, MM is defined as the “great mime” [15]. For example, in a recent 2017 paper, Elmore G.J. et al. [15] analyzed the diagnostic results of 187 practicing pathologists in 10 states by comparing them with each other and with a consensus diagnosis reached by a group of three experienced dermatopathologists. The authors asked the 187 pathologists to interpret the same skin lesions after a certain time range (8 months) in order to have an estimate of the intraobserver readability. The degree of accuracy was very high (about 92%) in the case of diagnosing slightly atypical pigmented lesions and reached about 72% in the case of invasive melanomas. Conversely, diagnostic accuracy became much lower in the case of lesions in the spectrum between these two extremes. For example, fewer than half of diagnoses agreed with expert consensus for cases classified as severely atypical lesions, melanoma in situ, or early stage invasive melanoma. Similarly, over time, the diagnoses of the so-called “gray zone” of dermatopathology lost intraobserver reproducibility [16].

In this context, the development of artificial intelligence methods applied to pathological anatomy [17] had the merit of offering a possibility to understand if the evaluation of a melanocytic lesion that represented a malignant melanoma could be made more “objective” compared to lesions not endowed with the potential for malignancy “tout-court”.

This work is based on the definition of the steps to follow to classify malignant melanoma defects. A metric estimating the precision of the applied FRF algorithm is applied to estimate the error of the classification. The low computational cost related the image processing and the use of the same image for the algorithm training allow us to apply the FRF algorithm to new images without constructing a training model based on historical images. In this way, we used a set of images of lesions previously diagnosed by two board-certified dermatopathologists to understand how much the FRF algorithm was able to assist the pathologist in the decision-making process.

The precision achieved by the FRF algorithm proved to be appropriate, allowing this type of supervised algorithm to be nominated as a help in the dermatopathological diagnosis of MM. In particular, analyzing the discrepancy between the performance of the FRF algorithm and the diagnosis by the dermatopathologist, a value of 17% was found, which is slightly lower than that described in the interobserver variability (about 25–26%). These data are similar to that reported by Hekler et al. [18] who, in their paper, trained a CNN according to a binary model of classification of nevi and melanoma, and reported a discrepancy value between the trained CNN and dermatopathologist equal to 18% for melanoma, 20% for nevi and 19% overall. On the other hand, it is important to underline that any AI algorithm is trained on diagnostic criteria chosen “a priori” by the pathologist and, therefore, there can similarly be false-positive misdiagnoses: this is the case with Spitz nevi. Indeed, Hart S.N. et al. [19] trained a CNN for binary classification between conventional nevi and Spitz nevi. Their algorithm was tested on WSIs of Spitz nevi and conventional nevi, producing a classification accuracy of 92% overall, based on a sensitivity of 85% and specificity of 99%. In a second phase of their study, the authors demonstrated how important it was to improve the diagnostic performance values of the AI algorithm to allow a dermatopathologist to preselect the areas of interest of the entire WSI, as in the absence of this, the validation accuracy was unacceptable at only 52%.

All these data agree in allowing us to consider the inclusion of the FRF algorithm in the normal workflow of histopathological diagnostics. The framework of the FRF algorithm used in this work is based on Weka [20] libraries implemented in the Java language.

The main motivation is in the novel approach adopted for the algorithm training. Other studies use a traditional approach based on the discrimination of the training model and of the testing model, which are performed by adopting different images [21]. In the proposed approach, it adopts the same image both for the training and for the testing: each image is converted into grayscale images, and in the same image [12] the classes training the model are identified (classes of similar features, including those of the Spitz nevi). In this way the doctor can circle the areas (classes) without having a historical dataset of images. The algorithm versatility gives an idea of the anomalous distribution (enhancing anomalous groups of pixels) of malignant areas quickly by estimating the covering percentage.

Furthermore, from a clinical point of view, there have already been numerous papers published in the literature that have attempted to correlate, specifically, dermoscopic characteristics with clinical diagnosis. A very interesting contribution by Argenziano et al. [22] in 2003 evaluated the sensitivity, specificity and, therefore, diagnostic accuracy of criteria commonly used for the diagnosis of MM. In the paper, 108 melanocytic lesions were evaluated by 40 experienced dermoscopists in an attempt to evaluate the interobserver and intraobserver agreement by using four algorithms such as the pattern analysis, ABCD rule, Menzies method and 7-point checklist. Of all these, there was a good agreement except for with the dermoscopic criteria, demonstrating how important it is also in dermoscopy to adopt more “accurate” parameters.

Limitations

It is important to note that in our paper we used exclusively histopathological (morphological) criteria and, therefore, were subject to limitations including the different sizes of cancer cells, a concept intrinsic to tumor heterogeneity [23].

5. Conclusions

The FRF images were processed by following a specific image diagnostic protocol, oriented on reading and algorithm error minimization. An important tool for melanoma diagnosis is the probability image estimated by the processed FRF output image. The probability image is useful to better discriminate information about ambiguous lesions. A single probability image refers to a particular class of “defect” and enhances, by the white color, the defect distribution in the whole analyzed image. By knowing the dimension of the acquired microscope image, it is also possible to estimate the defect distribution percentage. All the adopted approaches are suitable to create a specific image vision platform for telemedicine digital pathology.

Acknowledgments

In memory of Antonietta Cimmino (A.C.).

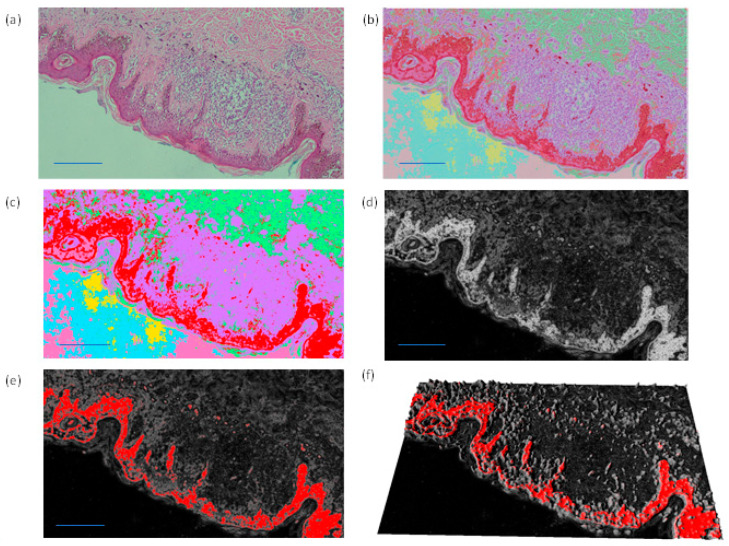

Appendix A

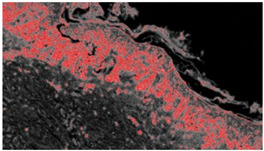

The enhancement of the classified areas by the FRF algorithm can be performed by adopting different image processing filters. In Figure A1 different image filtering approaches enhancing classes and the probabilistic image are shown. The probabilistic image refers to a particular class related to a defect.

Figure A1.

(a) Original image. (b,c) Images with all detected classes defined by different colors. (d,e) Probabilistic image and (f) related 3D reconstruction.

Figure A1 indicates possible alternative color schemes to highlight dangerous areas: the red color of the classified output image (b, c), the white color of the probabilistic images (d) and the red color of the probabilistic image in a 2D (f) and 3D scheme (g).

Appendix B

The probabilistic images are the output of the FRF algorithm. In Figure A2 some pixel cluster classifications of the original image of Figure 3 are shown.

Figure A2.

Example of distribution of the probability classes from C1 (low probability of recognition of the anomaly, black shade) to C4 (high probability of recognition of the anomaly, white shade).

Figure A2 is an example that represents different classes, where each one is a probabilistic image with the white color representing the higher probability value to find specific class features. Alternatively, all classes are identified in the same image by different colors.

Author Contributions

Conceptualization, G.C., A.C., T.L., P.P., S.C., G.I., L.R., E.M. and A.V.; methodology, G.C., L.L., E.C., E.N. and A.M.; software, A.M.; validation, T.L., S.C., G.I., L.R. and E.M.; formal analysis, G.C.; investigation, G.C., A.C., T.L., S.C., G.I., L.R., E.M. and A.V.; resources, G.C.; data curation, G.C., A.C., T.L., S.C., G.I., L.R. and E.M.; writing—original draft preparation, G.C. and A.M.; writing—review and editing, G.C., A.C., T.L., S.C., G.G., G.I., L.R., E.M. and A.V.; visualization, G.C.; supervision, A.V. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Approved by CITEL (Dipartimento di Scienze Biomediche e Oncologia Umana—Dimo U.O.C. Medicina Interna “G. Baccelli” Policlinico di Bari, number 5732).

Informed Consent Statement

Informed consent was recorded by all patients involved in the study.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Turing A.M. I.—Computing Machinery and Intelligence. Mind. 1950;LIX:433–460. doi: 10.1093/mind/LIX.236.433. [DOI] [Google Scholar]

- 2.Bjola C. AI for development: Implications for theory and practice. Oxf. Dev. Stud. 2022;50:78–90. doi: 10.1080/13600818.2021.1960960. [DOI] [Google Scholar]

- 3.Venerito V., Angelini O., Cazzato G., Lopalco G., Maiorano E., Cimmino A., Iannone F. A convolutional neural network with transfer learning for automatic discrimination between low and high-grade synovitis: A pilot study. Intern. Emerg. Med. 2021;16:1457–1465. doi: 10.1007/s11739-020-02583-x. [DOI] [PubMed] [Google Scholar]

- 4.Onega T., Reisch L.M., Frederick P.D., Geller B.M., Nelson H.D., Lott J.P., Radick A.C., Elder D.E., Barnhill R.L., Piepkorn M.W., et al. Use of digital whole slide imaging in dermatopathology. J. Digit. Imaging. 2016;29:243–253. doi: 10.1007/s10278-015-9836-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Massaro A. Electronic in Advanced Research Industry: From Industry 4.0 to Industry 5.0 Advances. Wiley; Hoboken, NJ, USA: IEEE; New York, NY, USA: 2021. [Google Scholar]

- 6.Allen A.C., Spitz S. Malignant melanoma; a clinicopathological analysis of the criteria for diagnosis and prognosis. Cancer. 1953;6:1–45. doi: 10.1002/1097-0142(195301)6:1<1::AID-CNCR2820060102>3.0.CO;2-C. [DOI] [PubMed] [Google Scholar]

- 7.Breiman L. Random forests. Mach. Learn. 2001;45:5–32. doi: 10.1023/A:1010933404324. [DOI] [Google Scholar]

- 8.Mahapatra D. Analyzing training information from random forests for Improved image segmentation. IEEE Trans. Image Process. 2014;23:1504–1512. doi: 10.1109/TIP.2014.2305073. [DOI] [PubMed] [Google Scholar]

- 9.Belgiu M., Drăguţ L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016;114:24–31. doi: 10.1016/j.isprsjprs.2016.01.011. [DOI] [Google Scholar]

- 10.Arganda-Carreras I., Kaynig V., Rueden C., Eliceiri K.W., Schindelin J., Cardona A., Seung H.S. Trainable Weka segmentation: A machine learning tool for microscopy pixel classification. Bioinformatics. 2017;33:2424–2426. doi: 10.1093/bioinformatics/btx180. [DOI] [PubMed] [Google Scholar]

- 11.Lormand C., Zellmer G.F., Németh K., Kilgour G., Measd S., Palmer A.S., Sakamoto N., Yurimoto H., Moebis A. Weka trainable segmentation plugin in ImageJ: A semi-automatic tool applied to crystal size distributions of microlites in volcanic rocks. Microsc. Microanal. 2018;24:667–675. doi: 10.1017/S1431927618015428. [DOI] [PubMed] [Google Scholar]

- 12.Alessandro M. Detection of Anomalies in Images by Machine Learning Fast Random Forest—FRF-Algorithm. Zenodo; Geneva, Switzerland: 2022. [DOI] [Google Scholar]

- 13.Lay-Ekuakille A., Spano F., Mvemba P.K., Massaro A., Galiano A., Casciaro S., Conversano F. Conductivity image characterization of gold nanoparticles based-device through atomic force microscopy; Proceedings of the IEEE 2018 Nanotechnology for Instrumentation and Measurement (NANOfIM); Mexico City, Mexico. 7–8 November 2018; Piscataway, NJ, USA: IEEE; 2019. [Google Scholar]

- 14.Roush G.C., Kirkwood J.M., Ernstoff M., Somma S.J., Duray P.H., Klaus S.N., Stenn K.S., Titus L.J., Lerner A.B. Reproducibility and validity in the clinical diagnosis of the nonfamilial dysplastic nevus: Work in progress. Recent Results Cancer Res. 1986;102:154–158. doi: 10.1007/978-3-642-82641-2_13. [DOI] [PubMed] [Google Scholar]

- 15.Cazzato G., Colagrande A., Cimmino A., Demarco A., Lospalluti L., Arezzo F., Resta L., Ingravallo G. The Great Mime: Three Cases of Melanoma with Carcinoid-Like and Paraganglioma-Like Pattern with Emphasis on Differential Diagnosis. Dermatopathology. 2021;8:130–134. doi: 10.3390/dermatopathology8020019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Elmore J.G., Barnhill R.L., Elder D.E., Longton G.M., Pepe M.S., Reisch L.M., Carney P.A., Titus L.J., Nelson H.D., Onega T., et al. Pathologists’ diagnosis of invasive melanoma and melanocytic proliferations: Observer accuracy and reproducibility study. BMJ. 2017;357:j2813. doi: 10.1136/bmj.j2813. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Harrison J.H., Gilbertson J.R., Hanna M.G., Olson N.H., Seheult J.N., Sorace J.M., Stram M.N. Introduction to Artificial Intelligence and Machine Learning for Pathology. Arch. Pathol. Lab. Med. 2021;145:1228–1254. doi: 10.5858/arpa.2020-0541-CP. [DOI] [PubMed] [Google Scholar]

- 18.Hekler A., Utikal J.S., Enk A.H., Berking C., Klode J., Schadendorf D., Jansen P., Franklin C., Holland-Letz T., Krahl D., et al. Pathologist-level classification of histopathological melanoma images with deep neural networks. Eur. J. Cancer. 2019;115:79–83. doi: 10.1016/j.ejca.2019.04.021. [DOI] [PubMed] [Google Scholar]

- 19.Hart S.N., Flotte W., Norgan A.P., Shah K.K., Buchan Z.R., Mounajjed T., Flotte T.J. Classification of Melanocytic Lesions in Selected and Whole-Slide Images via Convolutional Neural Networks. J. Pathol. Inform. 2019;10:5. doi: 10.4103/jpi.jpi_32_18. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Witten I.H., Frank E., Hall M.A., Pal C.J. Data Mining. 4th ed. Morgan Kaufmann; Burlington, MA, USA: 2017. [DOI] [Google Scholar]

- 21.Raza R., Zulfiqar F., Tariq S., Anwar G.B., Sargano A.B., Habib Z. Melanoma Classification from Dermoscopy Images Using Ensemble of Convolutional Neural Networks. Mathematics. 2022;10:26. doi: 10.3390/math10010026. [DOI] [Google Scholar]

- 22.Argenziano G., Soyer H.P., Chimenti S., Talamini R., Corona R., Sera F., Binder M., Cerroni L., De Rosa G., Ferrara G., et al. Dermoscopy of pigmented skin lesions: Results of a consensus meeting via the Internet. J. Am. Acad. Dermatol. 2003;48:679–693. doi: 10.1067/mjd.2003.281. [DOI] [PubMed] [Google Scholar]

- 23.Kłóś A., Płonka P.M. Growth of mixed cancer cell population—In silico the size matters. Acta Biochim. Pol. 2020;67:453–463. doi: 10.18388/abp.2020_5500. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

Not applicable.