Abstract

Knee osteoarthritis (KOA) is one of the most debilitating forms of arthritis. If not treated at an early stage, it may lead to knee replacement, which is why early diagnosis of KOA is necessary for better treatment. Manual KOA detection is a time-consuming and error-prone task, and computerized methods play a vital role in accurate and speedy detection. Therefore, a method for the classification and localization of KOA using radiographic images is proposed in this work. The two-dimensional radiographs are converted into three-channel images, and LBP features of dimension N × 59 are extracted, from which the best N × 55 features are selected using PCA. Deep features are also extracted using AlexNet and Darknet-53 with dimensions of N × 4096 and N × 1024, respectively, where N represents the number of images. Then, N × 1000 features are selected individually from both models using PCA. Finally, the selected features are fused serially into a vector of dimension N × 2055 and passed to the classifiers with 10-fold cross-validation, which provides an accuracy of 90.6% for the classification of KOA grades. The localization model combines an Open Neural Network Exchange (ONNX) model with YOLOv2 and is trained on the selected hyper-parameters. The proposed model provides 0.98 mAP for the localization of classified images. The experimental analysis indicates that the presented framework provides better results than existing works.

Keywords: knee osteoarthritis (KOA), handcrafted features, KL grading, features fusion, classification, localization

1. Introduction

Worldwide, around 30% of people over the age of 60 have OA, which is the main cause of impairment in the elderly. Over 250 million patients suffer from this disease globally [1]. The primary KOA symptoms are pain, stiffness, decreased range of joint motion, and malfunctioning gait, which ultimately increase the progression rate of the disease [2]. These symptoms affect the individuals' functional independence and degrade their quality of life. The Kellgren–Lawrence (KL) grading system is used as the gold standard for the assessment of KOA radiographs. It classifies KOA into grades 0–4, where grade 0 represents a healthy knee with no symptoms of KOA and grade 4 represents a severe stage [3]. KL grading is commonly used clinically for KOA diagnosis, but it is time consuming and needs skilled experts. For an accurate KL grading evaluation, two skilled experts are required to assess the radiographs independently, without considering other input data [4]. A computerized system was developed for the automated labeling of KOA severity using a deep siamese convolutional neural network. This method was trained on the MOST dataset, in which 3000 testing subjects were selected randomly out of 5960, and provided an average accuracy of 66.7% and a kappa coefficient of 0.83 [5]. A Sobel horizontal gradient with an SVM classifier was used for the diagnosis of knee abnormality in X-ray radiographs [6]. An automated KOA method was presented and tested on 94 radiographs, providing a 72.61% precision rate. Due to the poor contrast and variable locations of knee gaps, detecting KOA is a difficult process [7].

A method for the classification and localization of knee OA is proposed here to address these issues. The core contributions are as follows:

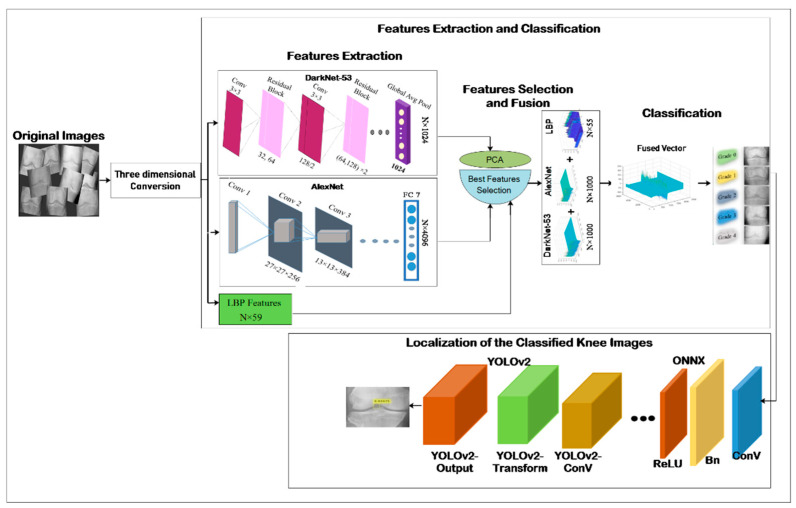

For accurate classification, KOA images are converted into three channels. After conversion, LBP features and deep features from Darknet-53 and AlexNet are extracted, the best features are selected by PCA, and the selected features are fused serially and input to the classifiers to predict KOA grades. The classified images are supplied to the proposed localization model, which extracts features with the ONNX model and feeds them into the YOLOv2 detector. The optimal hyper-parameters are used for model training to accurately localize the affected knee region.

The remainder of this article is organized as follows: Section 2 reviews related work, Section 3 explains the proposed model, Section 4 presents the results and discussion, and Section 5 gives the conclusion.

2. Related Work

KOA is a complex peripheral joint disease with many risk factors that contribute to significant loss of control, weakness, and rigidity [8]. Its severity level is assessed manually through the KL grading system, which takes time and can lead to misclassification. A considerable body of work in KOA imaging addresses the identification and classification of knee diseases. In image processing, feature extraction is an effective step for image representation [9–48], and it is very helpful to machine learning (ML) algorithms for the recognition of diseases. Many researchers have used handcrafted features for KOA classification [49]. A computer-based approach was proposed for segmenting knee menisci in MR images with the help of the handcrafted features HOG and LBP, using the HOG-UoCTTI histogram variant. The ratio of overlap area was calculated by the Dice similarity formula to select 31 and 58 features of HOG and LBP, respectively. In knee MR, 45 slices were under evaluation, so after random sub-sampling, the size of the feature matrix was 7000 × 837 for each image. These features were selected using PCA and achieved 82% Dice similarity [50]. Saygili et al. presented automated detection of knee menisci from MR images. The images were obtained from the OAI dataset, with 75% taken for training and 25% for testing. Features were extracted with the HOG method for both the training and testing processes, and a regression approach was used in training to find the correlation between different patches [51]. Mahrukh et al. used an automated HOG-based template matching technique to extract the required tibiofemoral region in knee radiographs [52]. Their methodology achieved an accuracy of 96.10% with an average mean rate of 88.26%, which exceeds current state-of-the-art approaches such as fuzzy c-means and deep models [53]. A three-dimensional deformation technique for homogeneity in the knee was developed and evaluated. D. Kim et al. demonstrated that the problem could be addressed using histogram information; the reported results showed 95% Dice similarity, 93% sensitivity, and 99% specificity [54]. An adaptive segmentation method was presented for selecting the ROI using different handcrafted methods to improve the classification process. After pre-processing the raw images from the OAI and MOST databases, the authors chose an ROI to calculate texture descriptors, using both rectangular and adaptive ROI techniques [55]. Fractal dimension (FD) [56], local binary pattern (LBP), Haralick features [57], Shannon entropy [57], and HOG were analyzed and compared. The proposed method achieved an improvement of 9% in AUC compared to the commonly used ROI, and LBP provided the best performance among all features [55].

In the area of ML, deep learning (DL) has gained more interest in recent years [55]. DL methods are more precise than approaches based on handcrafted features. In medical imaging, several models such as AlexNet, VGG19 [58], and Darknet [59] have been used for the extraction of features. Kevin et al. developed a model for OA diagnosis and total knee replacement (TKR) prediction using the 34-layer DL model ResNet-34 [60]. They trained their model on OAI and WOMAC + OA outcome scores [61], jointly predicted KL grade and TKR with the same model, and achieved a higher AUC of 87% than previous work [62]. B. Zhang et al. developed a model to automatically diagnose KOA. They applied a modified residual neural network, changing the kernel size of the average pooling layer, for the detection of the knee joint and then combined it with the convolutional block attention module (CBAM) to achieve state-of-the-art performance relative to previous methods [63]. For the assessment of tumors in knee bones, H.J. Yang et al. provided an effective DL model. A combination of supervised and unsupervised techniques was used to recognize significant patterns in the identification of prevalent and anomalous bones and also to identify bone tumors. The results indicated that the model performs better than existing models [64]. Vishwanath et al. developed a knee cartilage segmentation method for high-resolution MR images using a fully 3D CNN and a multi-class loss function, and achieved better performance on the publicly available MICCAI SKI10 dataset. They also applied their methodology to similar MR data and improved the segmentation accuracy [65]. In another work, the researchers developed a technique for automatic classification of knee radiograph severity, using a 169-layer DenseNet CNN model to predict the KL grade [66].

3. Proposed Methodology

This section describes the proposed KOA classification and localization method, which is designed to tackle the limitations and challenges mentioned above. In this method, deep and LBP features are extracted, after which the best features are selected using PCA for classifying the different grades of KOA. The classified images are then localized using the YOLOv2-ONNX model. The overall scenario is presented in Figure 1.

Figure 1.

The architecture of the proposed methodology.

3.1. Local Binary Pattern (LBP)

LBP [67] is based on the gray-level structure of an image and extracts texture features from it. It operates as a 3 × 3 window that slides over the image. The center pixel of the window serves as the threshold for its neighboring pixels. The center pixel is compared with its eight neighbors, so that 2^8 = 256 different patterns can be obtained for the selected region of an image.

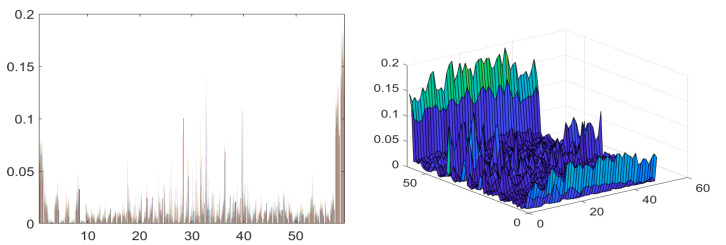

Figure 2 shows LBP features of dimension N × 59. The LBP operator is given by Equation (1).

$$LBP_{P,R} = \sum_{p=0}^{P-1} s\left(g_p - g_c\right)\, 2^{p} \tag{1}$$

where $s$ denotes the operator that retains the sign of differences, defined by:

$$s(x) = \begin{cases} 1, & x \geq 0 \\ 0, & x < 0 \end{cases}$$

where $g_c$ denotes the center pixel value, $P$ symbolizes the neighboring pixels of $g_c$, $R$ represents the radius of the window, and $g_p$ denotes the pixel intensity values in the neighborhood. The size of the feature vector is N × 59. Once these features are extracted, the N × 55 best features are selected by using PCA.
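The thresholding step described above can be illustrated with a minimal Python sketch (the paper's own implementation is in MATLAB). It assumes a clockwise neighbor order starting at the top-left pixel, and it assumes that the 59 features correspond to the common uniform-pattern histogram (58 uniform codes plus one shared bin for all other codes); neither choice is stated in the text, and the function names are hypothetical.

```python
def lbp_code(patch):
    """Return the 8-bit LBP code of a 3x3 patch (list of three rows)."""
    center = patch[1][1]
    # Assumed order: neighbors visited clockwise, starting at the top-left pixel.
    neighbors = [patch[0][0], patch[0][1], patch[0][2], patch[1][2],
                 patch[2][2], patch[2][1], patch[2][0], patch[1][0]]
    # s(x) = 1 when neighbor >= center, else 0; bit p is weighted by 2**p.
    return sum((1 if g >= center else 0) << p for p, g in enumerate(neighbors))


def transitions(code):
    """Count circular 0/1 transitions in an 8-bit pattern."""
    bits = [(code >> p) & 1 for p in range(8)]
    return sum(bits[p] != bits[(p + 1) % 8] for p in range(8))


# Uniform patterns have at most two transitions: 58 of the 256 codes.
UNIFORM = [c for c in range(256) if transitions(c) <= 2]
BIN_OF = {c: i for i, c in enumerate(UNIFORM)}


def lbp_histogram(image):
    """59-bin histogram: 58 uniform bins plus one bin for all other codes."""
    hist = [0] * 59
    rows, cols = len(image), len(image[0])
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            patch = [row[c - 1:c + 2] for row in image[r - 1:r + 2]]
            hist[BIN_OF.get(lbp_code(patch), 58)] += 1
    return hist


example_patch = [[6, 5, 2], [7, 6, 1], [9, 8, 7]]
print(lbp_code(example_patch))  # 241
```

Stacking one such histogram per image would give an N × 59 matrix, matching the dimension quoted in the text under the stated assumption.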

Figure 2.

Graphical representation of LBP features.

3.2. Deep Feature Extraction

A CNN is a DL algorithm that extracts important image information and can differentiate various objects from one another. It is built from layers such as convolution, pooling, and ReLU. Our dataset is large; hence, a CNN is very helpful for feature extraction in image classification. Therefore, features are derived from the AlexNet and Darknet-53 models. The AlexNet [68] model consists of 25 layers including five convolutional and three fully-connected (FC6, FC7, and FC8), ReLU (6), drop (5), pooling (5), softmax, and classification. The features are derived from the FC7 layer of the AlexNet model with the dimension of N × 4096. The pre-trained Darknet-53 [69] is a 53-layer deep model that uses 1 × 1 and 3 × 3 convolution kernels. The network contains 184 layers in total: 1 input, 53 Conv, 52 batch-norm, 52 leaky-ReLU, 23 addition, 1 softmax, 1 classification, and 1 global average pooling. Features are taken from the activations of the global average pooling layer, named avg1, to obtain a feature matrix of size N × 1024.

3.3. Feature Fusion

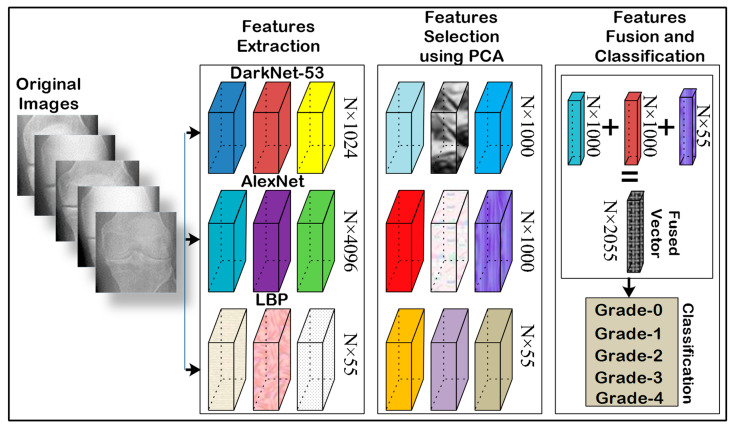

This step fuses handcrafted and deep features into a vector of dimension N × 2055 for the classification of KOA: 1000 features are selected from AlexNet, 1000 features from Darknet-53, and 55 features from LBP by using PCA [70]. PCA reduces the dimension of larger vectors into smaller ones while retaining most of their information. Figure 3 shows the fusion process of handcrafted and CNN features.

Figure 3.

Overview of feature extraction, selection, fusion, and classification.

In Figure 3, the feature matrices have dimensions N × 4096 (AlexNet), N × 1024 (Darknet-53), and N × 59 (LBP descriptor), from which N × 1000 deep features per network and N × 55 LBP features are selected using PCA. Finally, these selected features are fused with the dimension of N × 2055.
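The dimension arithmetic of serial fusion (55 + 1000 + 1000 = 2055 columns per image) can be checked with a small Python sketch. The function name `fuse_serial` and the zero-filled placeholder matrices are illustrative assumptions, not part of the original implementation.

```python
def fuse_serial(lbp, alexnet, darknet):
    """Concatenate the per-image feature rows of the three sources."""
    if not (len(lbp) == len(alexnet) == len(darknet)):
        raise ValueError("all feature matrices need the same number of images")
    return [a + b + c for a, b, c in zip(lbp, alexnet, darknet)]


n_images = 3  # placeholder value for N
lbp_selected = [[0.0] * 55 for _ in range(n_images)]        # N x 55 after PCA
alexnet_selected = [[0.0] * 1000 for _ in range(n_images)]  # N x 1000 after PCA
darknet_selected = [[0.0] * 1000 for _ in range(n_images)]  # N x 1000 after PCA

fused = fuse_serial(lbp_selected, alexnet_selected, darknet_selected)
fused_shape = (len(fused), len(fused[0]))
print(fused_shape)  # (3, 2055)
```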

ICA is used to optimize the statistics of high order like kurtosis. PCA is used to optimize the covariance matrix that denotes second-order statistics [68,71]. ICA searches independent components, while PCA searches un-correlated components. The final vector of the fusion process is mathematically defined in Equation (2).

$$f_{fused}(i) = \left[\, f_{LBP},\; f_{Alex}(i),\; f_{Dark}(i) \,\right] \tag{2}$$

In the above equation, $f_{LBP}$ denotes the LBP feature vector, $f_{fused}(i)$ is the final vector after the fusion process, $f_{Alex}(i)$ and $f_{Dark}(i)$ are the feature vectors of AlexNet and Darknet-53, respectively, while M × N represents the dimensions of these vectors. The SVM [72], KNN [73], and Ensemble classifiers with different kernels are used for classification. To choose the best feature selection method, an experiment is conducted using ICA and PCA, as reported in Table 1.

Table 1.

Experiment for features selection method.

| Features Selection Methods | Accuracy |
|---|---|
| ICA | 0.87 |
| PCA | 0.90 |

In this experiment, PCA achieved higher accuracy than ICA. Therefore, PCA is selected for further experimentation.
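To make the PCA step concrete, the following Python sketch finds the leading principal component of a small data set from its covariance matrix (second-order statistics) using power iteration. It is a didactic simplification: a full PCA keeps many components (for example 1000 per network in this work), and the helper names and the toy data are assumptions made only for illustration.

```python
import math


def center_columns(rows):
    """Subtract the column mean from every feature."""
    n = len(rows)
    means = [sum(col) / n for col in zip(*rows)]
    return [[v - m for v, m in zip(row, means)] for row in rows]


def covariance_matrix(centered):
    """Sample covariance matrix of already centered data."""
    n, d = len(centered), len(centered[0])
    return [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def leading_component(cov, iterations=100):
    """Approximate the top eigenvector of cov by power iteration."""
    d = len(cov)
    v = [1.0 / (i + 1) for i in range(d)]  # arbitrary non-zero start vector
    start_norm = math.sqrt(sum(x * x for x in v))
    v = [x / start_norm for x in v]
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # start vector orthogonal to every component
            break
        v = [x / norm for x in w]
    return v


def project(centered, component):
    """Score of each sample along the given component."""
    return [sum(a * b for a, b in zip(row, component)) for row in centered]


# Toy data lying close to the line y = x, so the first component
# should point roughly along (0.707, 0.707).
points = [[2.0, 1.9], [4.0, 4.1], [6.0, 6.0], [8.0, 7.9], [10.0, 10.1]]
centered_points = center_columns(points)
component = leading_component(covariance_matrix(centered_points))
scores = project(centered_points, component)
```

Projecting onto the first few such components is what reduces, for instance, an N × 4096 matrix to N × 1000 while keeping the directions of largest variance.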

3.4. Localization of Knee Osteoarthritis by Using YOLOv2 with the ONNX Model

YOLO-v2 delivers high efficiency for object detection in terms of accuracy and speed [51]. It performs feature extraction and localization in a single unit. The proposed YOLO-v2ONNX model has 31 layers and is designed by combining YOLO-v2 with the pre-trained architecture of the ONNX [52,53,54] model for the detection of KOA. The ONNX model is a multiple-output network with 35 layers, of which this work uses only 24 to build the proposed model: (i) an input layer, (ii) 2 element-wise affine layers, (iii) 4 convolutional layers, (iv) 4 BN layers, (v) 3 max-pooling layers, and (vi) 4 activation layers. These layers are passed to YOLO-v2, which has 3 convolutional layers, 2 BN layers, and 2 ReLU layers that are serially linked and followed by the YOLO-v2 transform and YOLO-v2 output layers, to accurately detect the location of the affected regions in an input image together with their class labels.

The YOLO-v2ONNX model detects class labels by using anchor boxes. Three major attributes are defined for the prediction of anchor boxes: (a) IoU, (b) offset, and (c) class probability. IoU predicts the objectness score of each anchor box, the offset defines the position of the anchor box, and the class probability is used to determine the class label assigned to the corresponding anchor box.
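As an illustration of the IoU attribute, the sketch below computes the intersection over union of two axis-aligned boxes. The `(x_min, y_min, width, height)` box layout is an assumption chosen for the example; the text does not specify a box format.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, width, height) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # Width and height of the overlap rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    intersection = inter_w * inter_h
    union = aw * ah + bw * bh - intersection
    return intersection / union if union > 0 else 0.0


# Two 4 x 4 boxes offset by 2 pixels overlap in a 2 x 2 square: 4 / 28.
overlap_score = iou((0, 0, 4, 4), (2, 2, 4, 4))
```

An IoU of 1 means a predicted box coincides with the ground truth, and 0 means no overlap.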

The YOLO-v2 object detector minimizes the mean squared error (MSE) loss between predicted and ground-truth bounding boxes. The proposed model is trained on three types of losses to reduce the MSE. The first is (a) the localization loss, in which the error between the predicted bounding box and the ground truth is measured; the parameters for measuring the localization loss are as follows.

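$$L_{loc} = W_1 \sum_{k=0}^{g^2} \sum_{l=0}^{d} \mathbb{1}_{kl}^{obj} \left[ (x_k - \hat{x}_k)^2 + (y_k - \hat{y}_k)^2 \right] + W_1 \sum_{k=0}^{g^2} \sum_{l=0}^{d} \mathbb{1}_{kl}^{obj} \left[ \left(\sqrt{w_k} - \sqrt{\hat{w}_k}\right)^2 + \left(\sqrt{h_k} - \sqrt{\hat{h}_k}\right)^2 \right]$$

Here $g$ denotes grid cells, $d$ shows bounding boxes size, $\mathbb{1}_{kl}^{obj} = 1$ if the $l$ bounding box is responsible for detecting the object in grid cell $k$, otherwise it is considered 0, $\mathbb{1}_{kl}^{noobj} = 1$ if there is no object detected in the $l$ bounding box, and $\mathbb{1}_{k}^{obj} = 1$ if the object is located in grid cell $k$, otherwise it is considered 0. $(x_k, y_k)$ and $(\hat{x}_k, \hat{y}_k)$ represent the center point of the $l$ bounding box and ground truth in grid cell $k$, while $(w_k, h_k)$ and $(\hat{w}_k, \hat{h}_k)$ denote width and height, and the weight of the localization loss is denoted by $W_1$.

The second step is confidence loss. The error of the confidence score is measured when the object is detected, and also when there is no object detected in the $l$ bounding box of grid cell $k$:

$$L_{conf} = W_2 \sum_{k=0}^{g^2} \sum_{l=0}^{d} \mathbb{1}_{kl}^{obj} \left( C_k - \hat{C}_k \right)^2 + W_3 \sum_{k=0}^{g^2} \sum_{l=0}^{d} \mathbb{1}_{kl}^{noobj} \left( C_k - \hat{C}_k \right)^2$$

The parameters for measuring the confidence loss are $(C_k, \hat{C}_k)$, representing the confidence score of the $l$ bounding box and ground truth in grid cell $k$, whereas $(W_2, W_3)$ are the weights of the confidence score error if the object is detected or not.

The last step of the loss function of YOLOv2, called classification loss, is used to compute the squared error between the probabilities of each class from which the object is detected in grid cell $k$ of the $l$ bounding box:

$$L_{cls} = W_4 \sum_{k=0}^{g^2} \mathbb{1}_{k}^{obj} \sum_{c \in classes} \left( p_k(c) - \hat{p}_k(c) \right)^2$$

Here $p_k(c)$ and $\hat{p}_k(c)$ are the estimated and actual probabilities of the conditional class for object class $c$ in grid cell $k$, and $W_4$ represents the classification error weight. With the increase in the value of $W_4$, the weightage of classification loss also increases.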
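The three loss terms described above can be sketched in Python as a weighted sum of squared errors over (grid cell, bounding box) slots. This is a simplified illustration rather than the trained model's loss: the dictionary layout is hypothetical, the square roots on width and height follow the common YOLO formulation (an assumption here), and the default weights are placeholder values, not the ones used in the paper.

```python
import math


def yolo_v2_loss(predictions, targets,
                 w_loc=5.0, w_obj=1.0, w_noobj=0.5, w_cls=1.0):
    """Weighted sum-of-squares loss over (grid cell, bounding box) slots.

    Each prediction is a dict with keys x, y, w, h, conf, probs.
    A target of None marks a slot that contains no object.
    The weights are illustrative placeholders.
    """
    loc = conf_obj = conf_noobj = cls = 0.0
    for pred, true in zip(predictions, targets):
        if true is None:
            # No object: only the confidence is pushed towards zero.
            conf_noobj += pred["conf"] ** 2
            continue
        # Localization: box center plus square-rooted width and height.
        loc += (pred["x"] - true["x"]) ** 2 + (pred["y"] - true["y"]) ** 2
        loc += (math.sqrt(pred["w"]) - math.sqrt(true["w"])) ** 2
        loc += (math.sqrt(pred["h"]) - math.sqrt(true["h"])) ** 2
        # Confidence error for a slot that is responsible for an object.
        conf_obj += (pred["conf"] - true["conf"]) ** 2
        # Classification: squared error over the class probabilities.
        cls += sum((p - t) ** 2 for p, t in zip(pred["probs"], true["probs"]))
    return w_loc * loc + w_obj * conf_obj + w_noobj * conf_noobj + w_cls * cls


truth = {"x": 0.5, "y": 0.5, "w": 0.25, "h": 0.25, "conf": 1.0, "probs": [0.0, 1.0]}
shifted = dict(truth, x=0.6)  # prediction whose center is off by 0.1 in x
loss_shifted = yolo_v2_loss([shifted], [truth])  # about 5.0 * 0.1**2 = 0.05
```

Raising a weight increases the contribution of the corresponding term, which is the effect the text describes for the classification weight.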

4. Results and Discussion

In this work, the publicly accessible knee joint dataset [74] is used, which includes 2139 training images and 1656 testing images. The images are stored as two-dimensional (grayscale) arrays, so they are converted into 3 channels (RGB) because the deep models accept 3-channel images. This work is implemented in MATLAB R2020a on a Windows operating system with an RTX 2070 GPU.
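A minimal Python sketch of the channel conversion is given below. It assumes that the conversion simply replicates the single intensity plane three times, which is the usual way of feeding grayscale radiographs to RGB-pretrained networks; the text does not state the exact procedure, and the function name is hypothetical.

```python
def gray_to_three_channels(gray):
    """Replicate each grayscale pixel into three identical channel values."""
    return [[[pixel, pixel, pixel] for pixel in row] for row in gray]


gray_image = [[0, 128],
              [255, 64]]  # toy 2 x 2 radiograph
rgb_image = gray_to_three_channels(gray_image)
rgb_shape = (len(rgb_image), len(rgb_image[0]), len(rgb_image[0][0]))
print(rgb_shape)  # (2, 2, 3)
```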

4.1. Experiment #1 (Grades of KOA Classification)

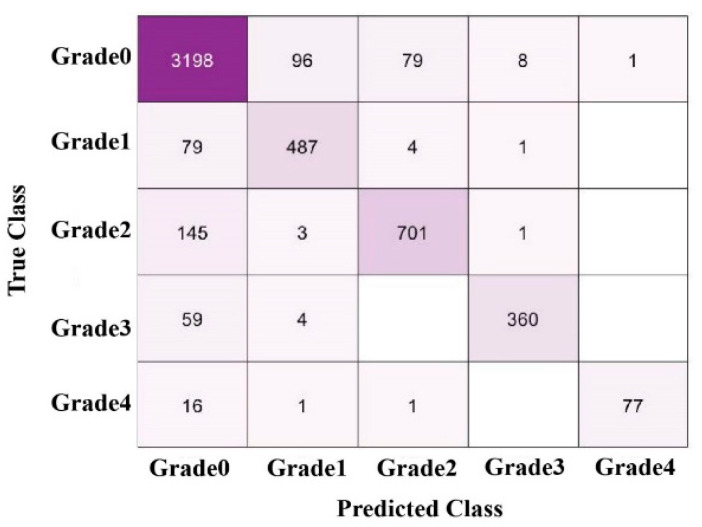

In this experiment, SVM, KNN, and Ensemble classifiers are used to classify KOA grades into Grade-0 to Grade-4 as manifested in Figure 4.

Figure 4.

Multi-class confusion matrix.

The sensitivity of 85% is achieved in Grade-I in which 487 true positive and 4179 false negative values are included. As shown in Table 2, a 10-fold cross-validation is used for classification.
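The 10-fold protocol mentioned here can be sketched in Python as follows. The sketch only produces the index splits; the shuffling seed, the interleaved fold assignment, and the function name are assumptions for illustration and need not match the MATLAB routine used in the experiments.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train, test) index lists for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Interleaved assignment keeps the fold sizes within one sample of each other.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


splits = list(k_fold_indices(25, k=10))
```

Each sample is used for testing exactly once and for training in the other nine folds, and the reported metrics are aggregated over the ten test folds.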

Table 2.

Classification outcomes utilizing 10-fold cross-validation.

| Classifiers | Grade | Accuracy% (ACC) | Precision (Pre) | Sensitivity (SE) | F1 Score (F1) |
|---|---|---|---|---|---|
| SVM | G0 | 77.9 | 0.97 | 0.89 | 0.93 |
| | G1 | | 0.73 | 0.87 | 0.80 |
| | G2 | | 0.75 | 0.90 | 0.82 |
| | G3 | | 0.81 | 0.96 | 0.88 |
| | G4 | | 0.82 | 0.93 | 0.87 |
| Fine KNN | G0 | 90.6 | 0.97 | 0.89 | 0.93 |
| | G1 | | 0.73 | 0.85 | 0.79 |
| | G2 | | 0.75 | 0.90 | 0.82 |
| | G3 | | 0.81 | 0.96 | 0.88 |
| | G4 | | 0.83 | 0.92 | 0.87 |
| Ensemble KNN | G0 | 89.4 | 0.95 | 0.91 | 0.93 |
| | G1 | | 0.85 | 0.82 | 0.84 |
| | G2 | | 0.82 | 0.89 | 0.86 |
| | G3 | | 0.85 | 0.97 | 0.91 |
| | G4 | | 0.81 | 0.99 | 0.89 |

Table 2 presents the overall accuracy across all KOA grades together with the per-grade results: 90.6% with Fine KNN, 77.9% with SVM, and 89.4% with Ensemble KNN. The maximum precision is 0.97 on Grade 0 (SVM and Fine KNN), 0.85 on Grades 1 and 3 (Ensemble KNN), 0.82 on Grade 2 (Ensemble KNN), and 0.83 on Grade 4 (Fine KNN). The comparison of classification results is given in Table 3.
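For reference, the per-grade measures in Table 2 follow the standard definitions, which can be sketched in Python as below. The 3 × 3 confusion matrix is a made-up toy example, not data from this study, and the function names are hypothetical.

```python
def f1_score(precision, sensitivity):
    """Harmonic mean of precision and sensitivity."""
    total = precision + sensitivity
    return 2 * precision * sensitivity / total if total else 0.0


def per_class_metrics(confusion):
    """confusion[i][j] counts samples of true class i predicted as class j."""
    n = len(confusion)
    results = []
    for c in range(n):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp                        # missed samples of class c
        fp = sum(confusion[r][c] for r in range(n)) - tp   # wrongly assigned to class c
        precision = tp / (tp + fp) if tp + fp else 0.0
        sensitivity = tp / (tp + fn) if tp + fn else 0.0
        results.append({"precision": precision,
                        "sensitivity": sensitivity,
                        "f1": f1_score(precision, sensitivity)})
    return results


def accuracy(confusion):
    """Fraction of samples on the diagonal of the confusion matrix."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / sum(sum(row) for row in confusion)


toy_confusion = [[8, 1, 1],
                 [2, 7, 1],
                 [0, 2, 8]]  # toy values for illustration only
toy_metrics = per_class_metrics(toy_confusion)
```

As a consistency check on Table 2, a precision of 0.97 and a sensitivity of 0.89 give `round(f1_score(0.97, 0.89), 2) == 0.93`, matching the Grade 0 row.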

Table 3.

Comparison of classifications results.

In Table 3, the deep siamese CNN provided 66.7% accuracy; this method needs improvement to increase the detection accuracy [14]. Chen et al. developed a model to automatically measure KOA severity from knee radiographs and provided an accuracy of 69.7% [10]. B. Zhang et al. presented a technique to automatically diagnose KOA. They applied a modified residual neural network, changing the kernel size for the detection of the knee joint, and then combined it with the convolutional block attention module (CBAM) to achieve a multi-class accuracy of 74.8%; this method also needs to improve the classification accuracy [43]. Kondal et al. [55] used two datasets: one from OAI, which has 4447 DICOM format images with their KL grades for training, and a second from an Indian private hospital with 1043 knee radiographs. However, they did not obtain high-performance results on this target dataset. They reported average precision, recall, and F1-score when their model was evaluated on the OAI dataset. An ensemble fuzzy feature selection method based on the embedded, wrapper, and filter methods was used with a random forest classifier for the classification of knee grades; this method provides 73.35% accuracy [74]. ResNet-18 and ResNet-34 were used with convolutional attention blocks for the prediction of KL grades; this method achieved 74.81% accuracy [77].

After experimentation, we achieved maximum accuracy of 90.6% while the previous maximum accuracy was 84%. Still, there is a gap in this domain due to the complex structure of knee radiographs. Therefore, more novel methods are required to fill this research gap.

4.2. Experiment #2 (Localization of Knee Osteoarthritis)

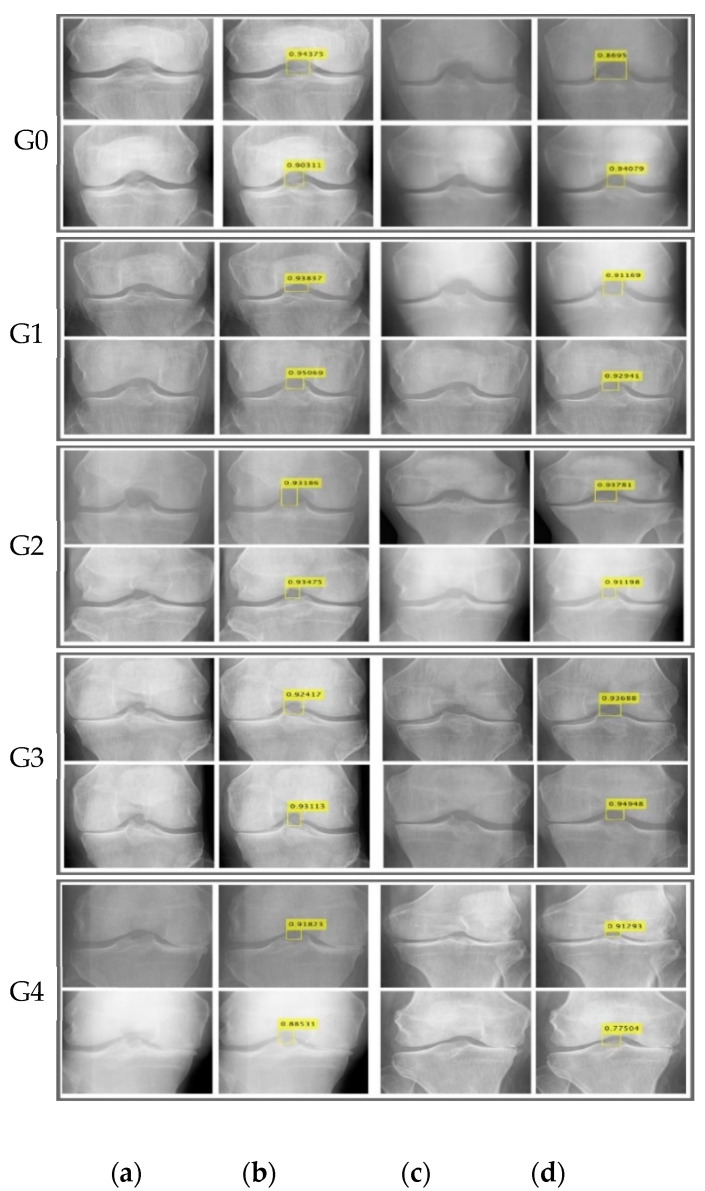

This experiment localized classified images using the proposed localization model into different grades (Grade 0 to Grade 4) of KOA with maximum confidence scores as shown in Figure 5.

Figure 5.

KOA localization results (a,c) original KOA slices (b,d) predicted scores (where G denotes grades).

Table 4 presents YOLOv2-ONNX model configuration parameters chosen after rigorous testing.

Table 4.

Configuration parameters of YOLOv2-ONNX model.

| Parameter | Value |
|---|---|
| Classes | 5 |
| Anchors | 13,17,18,21,43,49,73,108 |
| Mini-batch size | 64 |
| Max epochs | 100 |
| Verbose frequency | 30 |
| Learning rate | 0.001 |
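The settings in Table 4 can be collected into a single configuration object, sketched here in Python. The dictionary keys are hypothetical names, and reading the eight anchor values as four consecutive pairs is an assumption; the table does not say how the values are grouped or which value in a pair is the height or the width.

```python
# Values copied from Table 4; key names are illustrative only.
flat_anchors = [13, 17, 18, 21, 43, 49, 73, 108]

# Assumption: consecutive values form one anchor box each.
anchor_boxes = [tuple(flat_anchors[i:i + 2]) for i in range(0, len(flat_anchors), 2)]

training_config = {
    "num_classes": 5,          # KL grades 0 to 4
    "anchor_boxes": anchor_boxes,
    "mini_batch_size": 64,
    "max_epochs": 100,
    "verbose_frequency": 30,
    "learning_rate": 0.001,
}
```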

Table 5 shows the outcomes of the proposed localization model in terms of mIoU and mAP.

Table 5.

Localization results comparison.

| Ref# | Year | Results |
|---|---|---|
| [78] | 2022 | 0.95 IoU |
| Proposed Method | | 0.96 IoU, 0.98 mAP |

In Table 5, the existing method [78] provided an IoU of 0.95, whereas the proposed method achieves an IoU of 0.96 and an mAP of 0.98. Apart from [78], no method was found in the literature for the localization of KOA images.

5. Conclusions

Precise and accurate identification and classification of KOA is a challenging task, and the similarity between different KL grades makes it more complex. The severity level is assessed manually through the KL grading system, which takes time and can lead to misclassification. Automated grading of KOA severity can provide reliable results in a short period. However, the various forms of KOA must be handled carefully, and robust features and efficient classifiers have a large effect on the efficiency of the diagnosis method. In this study, a new technique is developed for OA detection using radiographic images. The proposed model includes (a) pre-processing of the original dataset, (b) extraction of handcrafted features, (c) extraction of deep features from pre-trained CNN models, (d) PCA for selecting the best features, (e) feature fusion, (f) classification, and (g) localization of classified images using the YOLO-v2ONNX model. The proposed technique achieved a precision of 0.95 on Grade-0, 0.85 on Grade-1, 0.82 on Grade-2, 0.85 on Grade-3, and 0.81 on Grade-4 with the Ensemble KNN classifier. For the localization of KOA, the YOLO-v2ONNX model was developed by using the ONNX model as the backbone of YOLO-v2 and achieved 0.96 IoU and 0.98 mAP on classified images.

Nomenclature

| Symbol | Description |
|---|---|
| $g$ | Grid cells |
| $d$ | Size of a bounding box |
| $(x_k, y_k)$, $(\hat{x}_k, \hat{y}_k)$ | Center points of predicted and ground truth bounding box, respectively |
| $(w_k, h_k)$, $(\hat{w}_k, \hat{h}_k)$ | Width, height |
| $W$ | Weight |
| $p$ | Probability |
| $c$ | Conditional class |
| $(C_k, \hat{C}_k)$ | The confidence score of predicted and ground truth bounding box |
| $f_{LBP}$ | LBP feature vector |
| $f_{fused}(i)$ | Fused feature vector |
| $f_{Alex}(i)$ | Feature vector of AlexNet |
| $f_{Dark}(i)$ | The feature vector of Darknet-53 |
| $s$ | The operator that retains sign of differences |
| $g_c$ | Center pixel value |
| $g_p$ | Intensity values |
| $P$ | Neighboring pixels |

Author Contributions

U.Y., Investigation, edited—original draft; J.A., performed writing draft, conceptualization, and implementation; M.S., Part of result validation team and writing conclusion of the paper; M.Y., Conversion of paper as per journal formatting and putting data into pictorial form; S.K. (Seifedine Kadry), Data curation, Investigation, Literature reviews, Resources, Project administration; S.K. (Sujatha Krishnamoorthy), Data curation, Investigation, Literature reviews, Resources, Fund acquisition. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data were downloaded from P. Chen, “Knee osteoarthritis severity grading dataset,” Mendeley Data, v1, 2018, http://dx.doi.org/10.17632/56rmx5bjcr, and from https://radiopaedia.org/articles/osteoarthritis-of-the-knee (accessed on 5 July 2022).

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Felson D.T., Naimark A., Anderson J., Kazis L., Castelli W., Meenan R.F. The prevalence of knee osteoarthritis in the elderly. The Framingham Osteoarthritis Study. Arthritis Rheum. Off. J. Am. Coll. Rheumatol. 1987;30:914–918. doi: 10.1002/art.1780300811.

- 2. Global Burden of Disease Study 2013 Collaborators. Global, regional, and national incidence, prevalence, and years lived with disability for 301 acute and chronic diseases and injuries in 188 countries, 1990–2013: A systematic analysis for the Global Burden of Disease Study 2013. Lancet. 2015;386:743–800. doi: 10.1016/S0140-6736(15)60692-4.

- 3. Murphy L., Helmick C.G. The impact of osteoarthritis in the United States: A population-health perspective: A population-based review of the fourth most common cause of hospitalization in US adults. Orthop. Nurs. 2012;31:85–91. doi: 10.1097/NOR.0b013e31824fcd42.

- 4. Kurtz S., Ong K., Lau E., Mowat F., Halpern M. Projections of primary and revision hip and knee arthroplasty in the United States from 2005 to 2030. JBJS. 2007;89:780. doi: 10.2106/00004623-200704000-00012.

- 5. Kaufman K.R., Hughes C., Morrey B.F., Morrey M., An K.-N. Gait characteristics of patients with knee osteoarthritis. J. Biomech. 2001;34:907–915. doi: 10.1016/S0021-9290(01)00036-7.

- 6. Sharifrazi D., Alizadehsani R., Roshanzamir M., Joloudari J.H., Shoeibi A., Jafari M., Hussain S., Sani Z.A., Hasanzadeh F., Khozeimeh F. Fusion of convolution neural network, support vector machine and Sobel filter for accurate detection of COVID-19 patients using X-ray images. Biomed. Signal Process. Control. 2021;68:102622. doi: 10.1016/j.bspc.2021.102622.

- 7. Kotti M., Duffell L.D., Faisal A.A., McGregor A.H. Detecting knee osteoarthritis and its discriminating parameters using random forests. Med. Eng. Phys. 2017;43:19–29. doi: 10.1016/j.medengphy.2017.02.004.

- 8. Lawrence R.C., Helmick C.G., Arnett F.C., Deyo R.A., Felson D.T., Giannini E.H., Heyse S.P., Hirsch R., Hochberg M.C., Hunder G.G. Estimates of the prevalence of arthritis and selected musculoskeletal disorders in the United States. Arthritis Rheum. Off. J. Am. Coll. Rheumatol. 1998;41:778–799. doi: 10.1002/1529-0131(199805)41:5<778::AID-ART4>3.0.CO;2-V.

- 9. Amin J., Anjum M.A., Sharif A., Sharif M.I. A modified classical-quantum model for diabetic foot ulcer classification. Intell. Decis. Technol. 2022;16:23–28. doi: 10.3233/IDT-210017.

- 10. Sadaf D., Amin J., Sharif M., Yasmin M. Advances in Deep Learning for Medical Image Analysis. CRC Press; Boca Raton, FL, USA: 2000. Detection of Diabetic Foot Ulcer Using Machine/Deep Learning; pp. 101–123.

- 11. Amin J. Segmentation and Classification of Diabetic Retinopathy. Univ. Wah J. Comput. Sci. 2019;2:1–10.

- 12. Amin J., Sharif M., Anjum M.A., Siddiqa A., Kadry S., Nam Y., Raza M. 3d semantic deep learning networks for leukemia detection. CMC. 2021;69:785–799. doi: 10.32604/cmc.2021.015249.

- 13. Amin J., Sharif M., Anjum M.A., Nam Y., Kadry S., Taniar D. Diagnosis of COVID-19 infection using three-dimensional semantic segmentation and classification of computed tomography images. Comput. Mater. Contin. 2021;68:2451–2467. doi: 10.32604/cmc.2021.014199.

- 14. Amin J., Anjum M.A., Sharif A., Raza M., Kadry S., Nam Y. Malaria Parasite Detection Using a Quantum-Convolutional Network. CMC. 2022;70:6023–6039. doi: 10.32604/cmc.2022.019115.

- 15. Amin J., Anjum M.A., Sharif M., Kadry S., Nadeem A., Ahmad S.F. Liver Tumor Localization Based on YOLOv3 and 3D-Semantic Segmentation Using Deep Neural Networks. Diagnostics. 2022;12:823. doi: 10.3390/diagnostics12040823.

- 16. Amin J., Sharif M., Fernandes S.L., Wang S.H., Saba T., Khan A.R. Breast microscopic cancer segmentation and classification using unique 4qubitquantum model. Microsc. Res. Technol. 2022;85:1926–1936. doi: 10.1002/jemt.24054.

- 17. Amin J., Anjum M.A., Gul N., Sharif M. A secure two-qubit quantum model for segmentation and classification of brain tumor using MRI images based on blockchain. Neural Comput. Appl. 2022:1–14. doi: 10.1007/s00521-022-07388-x.

- 18. Amin J., Anjum M.A., Malik M. Fused information of DeepLabv3+ and transfer learning model for semantic segmentation and rich features selection using equilibrium optimizer (EO) for classification of NPDR lesions. Knowl. Based Syst. 2022;249:108881. doi: 10.1016/j.knosys.2022.108881.

- 19. Amin J., Sharif M., Yasmin M., Fernandes S.L. A distinctive approach in brain tumor detection and classification using MRI. Pattern Recognit. Lett. 2020;139:118–127. doi: 10.1016/j.patrec.2017.10.036.

- 20. Amin J., Sharif M., Yasmin M., Ali H., Fernandes S.L. A method for the detection and classification of diabetic retinopathy using structural predictors of bright lesions. J. Comput. Sci. 2017;19:153–164. doi: 10.1016/j.jocs.2017.01.002.

- 21. Sharif M.I., Li J.P., Amin J., Sharif A. An improved framework for brain tumor analysis using MRI based on YOLOv2 and convolutional neural network. Complex Intell. Syst. 2021;7:2023–2036. doi: 10.1007/s40747-021-00310-3.

- 22. Saba T., Mohamed A.S., El-Affendi M., Amin J., Sharif M. Brain tumor detection using fusion of hand crafted and deep learning features. Cogn. Syst. Res. 2020;59:221–230. doi: 10.1016/j.cogsys.2019.09.007.

- 23. Amin J., Sharif M., Raza M., Saba T., Anjum M.A. Brain tumor detection using statistical and machine learning method. Comput. Methods Programs Biomed. 2019;177:69–79. doi: 10.1016/j.cmpb.2019.05.015.

- 24. Amin J., Sharif M., Raza M., Yasmin M. Detection of brain tumor based on features fusion and machine learning. J. Ambient. Intell. Humaniz. Comput. 2018:1–17. doi: 10.1007/s12652-018-1092-9.

- 25. Amin J., Sharif M., Gul N., Yasmin M., Shad S.A. Brain tumor classification based on DWT fusion of MRI sequences using convolutional neural network. Pattern Recognit. Lett. 2020;129:115–122. doi: 10.1016/j.patrec.2019.11.016.

- 26. Sharif M., Amin J., Raza M., Yasmin M., Satapathy S.C. An integrated design of particle swarm optimization (PSO) with fusion of features for detection of brain tumor. Pattern Recognit. Lett. 2020;129:150–157. doi: 10.1016/j.patrec.2019.11.017.

- 27. Amin J., Sharif M., Yasmin M., Saba T., Anjum M.A., Fernandes S.L. A new approach for brain tumor segmentation and classification based on score level fusion using transfer learning. J. Med. Syst. 2019;43:1–16. doi: 10.1007/s10916-019-1453-8.

- 28. Amin J., Sharif M., Raza M., Saba T., Sial R., Shad S.A. Brain tumor detection: A long short-term memory (LSTM)-based learning model. Neural Comput. Appl. 2020;32:15965–15973. doi: 10.1007/s00521-019-04650-7.

- 29. Amin J., Sharif M., Raza M., Saba T., Rehman A. Brain tumor classification: Feature fusion; Proceedings of the 2019 International Conference on Computer and Information Sciences (ICCIS); Sakaka, Saudi Arabia. 3–4 April 2019; pp. 1–6.

- 30. Amin J., Sharif M., Yasmin M., Saba T., Raza M. Use of machine intelligence to conduct analysis of human brain data for detection of abnormalities in its cognitive functions. Multimed. Tools Appl. 2020;79:10955–10973. doi: 10.1007/s11042-019-7324-y.

- 31. Amin J., Sharif A., Gul N., Anjum M.A., Nisar M.W., Azam F., Bukhari S.A.C. Integrated design of deep features fusion for localization and classification of skin cancer. Pattern Recognit. Lett. 2020;131:63–70. doi: 10.1016/j.patrec.2019.11.042.

- 32. Amin J., Sharif M., Gul N., Raza M., Anjum M.A., Nisar M.W., Bukhari S.A.C. Brain tumor detection by using stacked autoencoders in deep learning. J. Med. Syst. 2020;44:1–12. doi: 10.1007/s10916-019-1483-2.

- 33. Sharif M., Amin J., Raza M., Anjum M.A., Afzal H., Shad S.A. Brain tumor detection based on extreme learning. Neural Comput. Appl. 2020;32:15975–15987. doi: 10.1007/s00521-019-04679-8.

- 34. Amin J., Sharif M., Rehman A., Raza M., Mufti M.R. Diabetic retinopathy detection and classification using hybrid feature set. Microsc. Res. Technol. 2018;81:990–996. doi: 10.1002/jemt.23063.

- 35. Amin J., Sharif M., Anjum M.A., Raza M., Bukhari S.A.C. Convolutional neural network with batch normalization for glioma and stroke lesion detection using MRI. Cogn. Syst. Res. 2020;59:304–311. doi: 10.1016/j.cogsys.2019.10.002.

- 36. Muhammad N., Sharif M., Amin J., Mehboob R., Gilani S.A., Bibi N., Javed H., Ahmed N. Neurochemical Alterations in Sudden Unexplained Perinatal Deaths—A Review. Front. Pediatr. 2018;6:6. doi: 10.3389/fped.2018.00006.

- 37. Sharif M., Amin J., Nisar M.W., Anjum M.A., Muhammad N., Shad S.A. A unified patch based method for brain tumor detection using features fusion. Cogn. Syst. Res. 2020;59:273–286. doi: 10.1016/j.cogsys.2019.10.001.

- 38.Sharif M., Amin J., Siddiqa A., Khan H.U., Malik M.S.A., Anjum M.A., Kadry S. Recognition of different types of leukocytes using YOLOv2 and optimized bag-of-features. IEEE Access. 2020;8:167448–167459. doi: 10.1109/ACCESS.2020.3021660. [DOI] [Google Scholar]

- 39.Anjum M.A., Amin J., Sharif M., Khan H.U., Malik M.S.A., Kadry S. Deep semantic segmentation and multi-class skin lesion classification based on convolutional neural network. IEEE Access. 2020;8:129668–129678. doi: 10.1109/ACCESS.2020.3009276. [DOI] [Google Scholar]

- 40.Sharif M., Amin J., Yasmin M., Rehman A. Efficient hybrid approach to segment and classify exudates for DR prediction. Multimed. Tools Appl. 2020;79:11107–11123. doi: 10.1007/s11042-018-6901-9. [DOI] [Google Scholar]

- 41.Amin J., Sharif M., Anjum M.A., Khan H.U., Malik M.S.A., Kadry S. An Integrated Design for Classification and Localization of Diabetic Foot Ulcer Based on CNN and YOLOv2-DFU Models. IEEE Access. 2020;8:228586–228597. doi: 10.1109/ACCESS.2020.3045732. [DOI] [Google Scholar]

- 42.Amin J., Sharif M., Yasmin M. Segmentation and classification of lung cancer: A review. Immunol. Endocr. Metab. Agents Med. Chem. (Formerly Curr. Med. Chem. Immunol. Endocr. Metab. Agents) 2016;16:82–99. doi: 10.2174/187152221602161221215304. [DOI] [Google Scholar]

- 43.Amin J., Sharif M., Gul E., Nayak R.S. 3D-semantic segmentation and classification of stomach infections using uncertainty aware deep neural networks. Complex Intell. Syst. 2021:1–17. doi: 10.1007/s40747-021-00328-7. [DOI] [Google Scholar]

- 44.Amin J., Anjum M.A., Sharif M., Saba T., Tariq U. An intelligence design for detection and classification of COVID19 using fusion of classical and convolutional neural network and improved microscopic features selection approach. Microsc. Res. Technol. 2021;84:2254–2267. doi: 10.1002/jemt.23779. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Amin J., Anjum M.A., Sharif M., Rehman A., Saba T., Zahra R. Microscopic segmentation and classification of COVID-19 infection with ensemble convolutional neural network. Microsc. Res. Technol. 2021;85:385–397. doi: 10.1002/jemt.23913. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Saleem S., Amin J., Sharif M., Anjum M.A., Iqbal M., Wang S.-H. A deep network designed for segmentation and classification of leukemia using fusion of the transfer learning models. Complex Intell. Syst. 2021:1–16. doi: 10.1007/s40747-021-00473-z. [DOI] [Google Scholar]

- 47.Umer M.J., Amin J., Sharif M., Anjum M.A., Azam F., Shah J.H. An integrated framework for COVID-19 classification based on classical and quantum transfer learning from a chest radiograph. Concurr. Comput. Pract. Exp. 2021:e6434. doi: 10.1002/cpe.6434. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Amin J., Almas Anjum M., Sharif M., Kadry S., Nam Y. Fruits and vegetable diseases recognition using convolutional neural networks. Comput. Mater. Contin. 2021;70:619–635. doi: 10.32604/cmc.2022.018562. [DOI] [Google Scholar]

- 49.Ahmed S.M., Mstafa R.J. A Comprehensive Survey on Bone Segmentation Techniques in Knee Osteoarthritis Research: From Conventional Methods to Deep Learning. Diagnostics. 2022;12:611. doi: 10.3390/diagnostics12030611. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Saygılı A., Albayrak S. A new computer-based approach for fully automated segmentation of knee meniscus from magnetic resonance images. Biocybern. Biomed. Eng. 2017;37:432–442. doi: 10.1016/j.bbe.2017.04.008. [DOI] [Google Scholar]

- 51.Saygili A., Kaya H., Albayrak S. Automatic detection of meniscal area in the knee MR images; Proceedings of the 2016 24th Signal Processing and Communication Application Conference (SIU); Zonguldak, Turkey. 16–19 May 2016; pp. 1337–1340. [Google Scholar]

- 52.Saleem M., Farid M.S., Saleem S., Khan M.H. X-ray image analysis for automated knee osteoarthritis detection. Signal Image Video Processing. 2020;14:1079–1087. doi: 10.1007/s11760-020-01645-z. [DOI] [Google Scholar]

- 53.Mun J., Jang Y., Son S.H., Yoon H.J., Kim J. A SSLBP-based feature extraction framework to detect bones from knee MRI scans; Proceedings of the 2018 Conference on Research in Adaptive and Convergent Systems; Honolulu, HI, USA. 9–12 October 2018; pp. 23–28. [Google Scholar]

- 54.Kim D., Lee J., Yoon J.S., Lee K.J., Won K. Development of automated 3D knee bone segmentation with inhomogeneity correction for deformable approach in magnetic resonance imaging; Proceedings of the 2018 Conference on Research in Adaptive and Convergent Systems; Honolulu, HI, USA. 9–12 October 2018; pp. 285–290. [Google Scholar]

- 55.Bayramoglu N., Tiulpin A., Hirvasniemi J., Nieminen M.T., Saarakkala S. Adaptive segmentation of knee radiographs for selecting the optimal ROI in texture analysis. Osteoarthr. Cartil. 2020;28:941–952. doi: 10.1016/j.joca.2020.03.006. [DOI] [PubMed] [Google Scholar]

- 56.Lynch J., Hawkes D., Buckland-Wright J. Analysis of texture in macroradiographs of osteoarthritic knees, using the fractal signature. Phys. Med. Biol. 1991;36:709. doi: 10.1088/0031-9155/36/6/001. [DOI] [PubMed] [Google Scholar]

- 57.Shamir L., Ling S.M., Scott W.W., Bos A., Orlov N., Macura T.J., Eckley D.M., Ferrucci L., Goldberg I.G. Knee x-ray image analysis method for automated detection of osteoarthritis. IEEE Trans. Biomed. Eng. 2008;56:407–415. doi: 10.1109/TBME.2008.2006025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Üreten K., Erbay H., Maraş H.H. Detection of hand osteoarthritis from hand radiographs using convolutionalneural networks with transfer learning. Turk. J. Electr. Eng. Comput. Sci. 2020;28:2968–2978. doi: 10.3906/elk-1912-23. [DOI] [Google Scholar]

- 59.Vasavi S., Priyadarshini N.K., Harshavaradhan K. Invariant feature-based darknet architecture for moving object classification. IEEE Sens. J. 2020;21:11417–11426. doi: 10.1109/JSEN.2020.3007883. [DOI] [Google Scholar]

- 60.He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778. [Google Scholar]

- 61.Hochberg M., Favors K., Sorkin J. Quality of life and radiographic severity of knee osteoarthritis predict total knee arthroplasty: Data from the osteoarthritis initiative. Osteoarthr. Cartil. 2013;21:S11. doi: 10.1016/j.joca.2013.02.044. [DOI] [Google Scholar]

- 62.Leung K., Zhang B., Tan J., Shen Y., Geras K.J., Babb J.S., Cho K., Chang G., Deniz C.M. Prediction of total knee replacement and diagnosis of osteoarthritis by using deep learning on knee radiographs: Data from the osteoarthritis initiative. Radiology. 2020;296:584. doi: 10.1148/radiol.2020192091. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Zhang B., Tan J., Cho K., Chang G., Deniz C.M. Attention-based cnn for kl grade classification: Data from the osteoarthritis initiative; Proceedings of the 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI); Iowa City, IA, USA. 3–7 April 2020; pp. 731–735. [Google Scholar]

- 64.Chan L., Li H., Chan P., Wen C. A machine learning-based approach to decipher multi-etiology of knee osteoarthritis onset and deterioration. Osteoarthr. Cartil. Open. 2021;3:100135. doi: 10.1016/j.ocarto.2020.100135. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Raj A., Vishwanathan S., Ajani B., Krishnan K., Agarwal H. Automatic knee cartilage segmentation using fully volumetric convolutional neural networks for evaluation of osteoarthritis; Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018); Washington, DC, USA. 4–7 April 2018; pp. 851–854. [Google Scholar]

- 66.Huang G., Liu Z., Van Der Maaten L., Weinberger K.Q. Densely connected convolutional networks; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Honolulu, HI, USA. 21–26 July 2017; pp. 4700–4708. [Google Scholar]

- 67.Ojala T., Pietikainen M., Maenpaa T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002;24:971–987. doi: 10.1109/TPAMI.2002.1017623. [DOI] [Google Scholar]

- 68.Krizhevsky A., Sutskever I., Hinton G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Processing Syst. 2012;25:1–9. doi: 10.1145/3065386. [DOI] [Google Scholar]

- 69.Imran M., Zaidi F.S. Errors of Indices in Household Surveys of Punjab Urban through Principal Components. Pak. J. Humanit. Soc. Sci. 2021;9:51–58. doi: 10.52131/pjhss.2021.0901.0112. [DOI] [Google Scholar]

- 70.Jolliffe I.T., Cadima J. Principal component analysis: A review and recent developments. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2016;374:20150202. doi: 10.1098/rsta.2015.0202. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Garg I., Panda P., Roy K. A low effort approach to structured CNN design using PCA. IEEE Access. 2019;8:1347–1360. doi: 10.1109/ACCESS.2019.2961960. [DOI] [Google Scholar]

- 72.Boser B.E., Guyon I.M., Vapnik V.N. A training algorithm for optimal margin classifiers; Proceedings of the Fifth Annual Workshop on Computational Learning Theory; Pittsburgh, PA, USA. 27–29 July 1992; pp. 144–152. [Google Scholar]

- 73.Friedman J.H., Bentley J.L., Finkel R.A. An algorithm for finding best matches in logarithmic expected time. ACM Trans. Math. Softw. 1977;3:209–226. doi: 10.1145/355744.355745. [DOI] [Google Scholar]

- 74.Chen P. Knee osteoarthritis severity grading dataset. Mendeley Data. 2018;1 doi: 10.17632/56rmx5bjcr. [DOI] [Google Scholar]

- 75.Tiulpin A., Thevenot J., Rahtu E., Lehenkari P., Saarakkala S. Automatic knee osteoarthritis diagnosis from plain radiographs: A deep learning-based approach. Sci. Rep. 2018;8:1727. doi: 10.1038/s41598-018-20132-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Kondal S., Kulkarni V., Gaikwad A., Kharat A., Pant A.J.A.P.A. Automatic Grading of Knee Osteoarthritis on the Kellgren-Lawrence Scale from Radiographs Using Convolutional Neural Networks. Springer; Berlin/Heidelberg, Germany: 2020. [Google Scholar]

- 77.Brejnebøl M.W., Hansen P., Nybing J.U., Bachmann R., Ratjen U., Hansen I.V., Lenskjold A., Axelsen M., Lundemann M., Boesen M. External validation of an artificial intelligence tool for radiographic knee osteoarthritis severity classification. Eur. J. Radiol. 2022;150:110249. doi: 10.1016/j.ejrad.2022.110249. [DOI] [PubMed] [Google Scholar]

- 78.Gu H., Li K., Colglazier R.J., Yang J., Lebhar M., O’Donnell J., Jiranek W.A., Mather R.C., French R.J., Said N. Automated Grading of Radiographic Knee Osteoarthritis Severity Combined with Joint Space Narrowing. arXiv. 20222203.08914 [Google Scholar]

Data Availability Statement

The data were downloaded from P. Chen, "Knee osteoarthritis severity grading dataset," Mendeley Data, v1, vol. 1, 2018, http://dx.doi.org/10.17632/56rmx5bjcr, and https://radiopaedia.org/articles/osteoarthritis-of-the-knee (accessed on 5 July 2022).